Abstract

Research involving human participants continues to grow dramatically, fueled by advances in medical technology, globalization of research, and financial and professional incentives. This creates increasing opportunities for ethical errors with devastating effects. The typical professional and policy response to calamities involving human participants in research is to layer on more ethical guidelines or strictures.

We used a recent case, the Johns Hopkins University/Kennedy Krieger Institute Lead Paint Study, to examine lessons learned since the Tuskegee Syphilis Study about the role of institutionalized science ethics in the protection of human participants in research. We address the role of the institutional review board as the focal point for policy attention.

The history of efforts to institutionalize ethical health science is one of disaster response. The script changes little from one episode to the next, a situation that is troubling given increasing layers of regulation and bureaucracy specifically designed to address the problem. Scientists or medical professionals, through callousness, ignorance, or misguided good intentions, perpetrate a human calamity and, in its aftermath, professional associations or policymakers develop additional organizational ethical guidelines or strictures.

We do not suggest that these ethical code responses are disingenuous. In most instances of science-induced disaster, the vast majority of scientists are as horrified as other outraged citizens. Nor do we feel that the incidence of insensitive and arrogant individuals in the health sciences is any higher than in business, the legal profession, education, the military, or most other fields. When scientists and medical professionals err, no matter how infrequently, no matter how pure or impure the motives, the results can be devastating, and their effects can resonate far beyond the immediate circumstances.

So for medical scientists there is little or no tolerance for lapses in judgment or faulty response to confounding bureaucracy. For example, one of the many results of occasional instances of science-induced catastrophe is a decline in societies' and individuals' trust in institutions of science and in practicing scientists. Studies have shown that distrust is one of the reasons that the poor are less likely to take advantage of available medical care and of economically and socially advantageous technologies.1,2 Distrust is also one of many factors in the low proportions of poor and disadvantaged persons seeking careers in science and research.3,4

It is the predictability of this cycle that is especially troubling. We used the term “institutionalized science ethics” to refer to the statutory, professional, and institution-based ethical standards that guide and constrain scientists' research work, and we focused on the primary institution responsible for implementing institutionalized science ethics in medical centers, the institutional review board (IRB).5 We considered how far institutionalized science ethics has progressed toward the purpose for which it is designed: preventing catastrophic outcomes in science and medical research. We reviewed two examples, one recent and one that occurred before the contemporary regime of institutionalized science ethics, and explored the fundamental question, “Why can't we build a better IRB?”

The first case we examined is the familiar Tuskegee Syphilis Study, the landmark exploitative research project that ultimately gave rise to many of the human participant protections now in force, including IRBs. The second case, a relatively recent one, is the Johns Hopkins University/Kennedy Krieger Institute study (hereafter designated as the KKI study). The latter study, designed to assess cost-effective methods of household lead paint abatement, was terminated by the Maryland Court of Appeals in a decision whose opinion described the study as “a new Tuskegee.”6 We believe the comparison of the two cases sheds light on some of the prospects for institutionalized science ethics7 and provides food for thought about the adequacy of the organization and operation of IRBs to protect both researchers and the public from research calamities.

THE PREDICTABILITY OF ETHICS BREACH IN THE KKI LEAD PAINT STUDY

To address this issue we posed the question, was the KKI study really a “new Tuskegee?” If so, how did this occur in light of the many specific protections that have been put in place since the Tuskegee case came to light? That the KKI study occurred is not surprising given the limitations of research bureaucracies and of institutionalized science ethics. The fact that science ethics are institutionalized in the form of more than 6000 differentiated IRBs—dispersed throughout disparate institutions (universities, hospitals, foundations, and federal agencies)—that are accountable for federally funded research on human participants5,8 does not bode well for preventing ethical breaches. The challenge has not gone unnoticed and it is the subject of a growing body of organizational behavior theory that addresses how people (such as well-meaning scientists) adapt and strategize to deal with the issues and challenges that face them.9

Institutional review boards often—indeed, almost always—succeed in protecting human participants and in protecting researchers from themselves. Decisions by IRBs have provided judicious assessments for tens of thousands of research studies that have produced beneficial results in the form of effective treatments, drugs, and biologics. That these IRB organizations sometimes fail should be no surprise. Considering IRBs' excessive workloads, mounting duties, and regulatory directives, and sometimes problematic group expectations and dynamics,9 the greater surprise is that IRBs almost always prove an effective second-line (beyond the investigator) guarantor of research ethics. The success rate is a good thing inasmuch as, along with other sorts of zero-tolerance, high-reliability institutions, failure of an IRB can be catastrophic.10–12 But a high success rate does not mean that there is not room for improvement.

We demonstrate a need for further improvement of the IRB as a science ethics institution by showing that, even though Tuskegee history has not been repeated in most respects, the more recent KKI study experience suggests that current science ethics institutions need more work. We believe improvements are best addressed through accepted theory of organizational behavior, specifically as it applies to the bureaucracy of IRBs overseeing institutionalized science ethics. The American public has high expectations for science institutes in terms of improvement of personal and public health and betterment of society in general. But we often underestimate the impacts of the organizational aspects of institutionalized science ethics on science researchers' monumental tasks. The messages here are that organization and organizational behavior matter and must be well understood in building better science institutions that prevent harm while helping people.

Understanding why institutionalized science ethics need more work requires a brief overview of some salient aspects of the Tuskegee Syphilis Study that precipitated many of the current IRB rules and regulations for vulnerable populations. In 1932, 399 African American sharecroppers living in Macon County, Alabama, were told by medical researchers at the Tuskegee Institute that they suffered from “bad blood,” a term that African Americans of the time and in that region used to describe a host of ailments including syphilis, anemia, and fatigue. The men had in fact been diagnosed with syphilis, but they were not informed of the diagnosis. Instead, they were invited to take part in a research study, funded in large part by the Public Health Service, of the effects of untreated syphilis on the African American man. As incentive they were offered free meals, free medical exams, and burial insurance.

Although the men had agreed to be examined and “treated,” they were not informed of the real purpose of the study. When the study began, there was no reliable treatment of syphilis. But even after penicillin came to be considered an effective treatment of syphilis in the 1940s, decisions were made purposely to withhold treatment from the research participants so as not to interfere with the disease's natural progression. If the men in the study left Macon County or joined the military, penicillin was withheld from them via coordination between the Public Health Service and local health departments. Amazingly, the study continued without any significant changes in the methodology or protocol until 1972, when numerous news articles condemning the study were published.13

The Tuskegee study was not devoid of ethical intent and interests and institutional impact. It was ahead of its time in terms of including members of minority populations in all aspects of the study's design: as members of the funding institution, as researchers, and as research participants.14 Further, this was one of the first large-scale, highly organized medical studies that paid any attention to problems differentially affecting African Americans, in stark contrast to the bulk of clinical studies both in the past and today.15,16

Despite the proposed benefits to minorities, in the final analysis, the Tuskegee study is rarely lauded for its inclusion of African American patients and professionals and its focus on a problem affecting the poor, and most often it is condemned for its exploitation of them. Tuskegee surely motivated the complex regulatory system for research involving human participants from vulnerable groups and it continues to fuel public and regulatory scrutiny of research institutions. However, no amount of outrage or intolerance for historically unethical science behavior is enough to overcome contemporary institutional organizational inadequacies and, therefore, it should be no surprise that exploitation of vulnerable populations still occurs.

For example, take the 1992 KKI lead paint study where minority children were purposely exposed to lead to study its effects. Although different in many respects from the purposeful neglect of the Tuskegee research participants, it is still considered a major ethical calamity in human participant research. In this study, launched 60 years after the initiation of the Tuskegee study and 20 years after its termination and the initial proliferation of ethical guidelines and laws aimed at protecting human participants, a group of researchers from the Kennedy Krieger Institute, an affiliate of Baltimore's Johns Hopkins University, conducted a study designed to assess the effectiveness of low-cost techniques for reducing the amount of lead children are exposed to in their homes.17–19 In 1992, there was general recognition of the deleterious impacts of lead on children, and despite attempts to remove lead from their environment (e.g., through elimination of lead in gasoline and reduction of lead in diets), lead remained common in household paint.17 (A later study by Leighton et al. showed that about 1 in 5 children exhibit dangerous levels of lead and nearly 900 000 have sufficient concentrations of lead as to pose a serious threat of mental retardation and other brain disorders.20) The problem was well known and its treatment a high priority in 1991, when the US Department of Health and Human Services called for a society-wide effort to eliminate childhood lead poisoning in 20 years.

The KKI study research methodology, which included measuring the blood lead levels of children living in houses that had received one of three lead abatement measures,19 was reviewed and approved by a federal agency that provided significant funding (the Environmental Protection Agency) and with enthusiastic support by Johns Hopkins University's IRB.21 Despite the fact that all of the houses in the study received some form of lead abatement (i.e., there was no placebo), and that the majority of participants experienced reductions in blood lead levels,19 the study engendered a lawsuit by two mothers whose sons' blood lead levels became elevated during their participation in the study.17 Public outrage followed, fueled by the revelation that the researchers encouraged the landlords of the lead-abated houses to rent to families with young children.17 The case went to Maryland's highest court, the Court of Appeals, which denounced the study and directly compared the research protocol and its societal outcomes to the Tuskegee studies.21

INADEQUACIES OF INSTITUTIONALIZED SCIENCE ETHICS

In an era of heavily regulated science ethics and lingering effects of outrage against the Tuskegee study, how did exploitation of the child participants of the KKI study occur? We believe the answer rests on challenges facing the organizations that manage protection of human participants of current health science research. Evidence of these challenges comes in the form of the contretemps surrounding the KKI study that played out in the court system from 1992 to 2001, where both judicial officials and public opinion excoriated the KKI study researchers and the organization that supported their work, and the researchers called on the supposed security of institutionalized science ethics standards to defend their actions. The KKI study dispute has entertaining theatrical elements, but in most respects it is a classic organizational tragedy theoretically resulting from shortcomings, contradictions, inconsistencies, and excessive expectations of the institution and the rules that bind its protection of especially vulnerable human research participants.

The KKI study sought to address needs of the poor (middle-class children also suffer from lead poisoning, but at much lower rates). Critics of health care policy22 and public policy makers23,24 decry the paucity of research addressing the needs of the poor. The KKI lead paint study seemed to be exactly the sort of research that is in line with government, especially National Institutes of Health, objectives focusing research on the needs of underserved, poor, and minority populations. The legal, political, organizational, and scientific aspects of the KKI study have been carefully examined by a variety of scholars,25,26 resulting in embarrassment and a stain on the reputation of the institute and the researchers involved in the case.

Yet, surprisingly, little attention is paid to the organizations with arguably as much responsibility as the researchers themselves, specifically the two IRBs that vetted the research. There is no public transcript of their deliberations; however, we do know that the IRBs in question were duly formed, were duly authorized, and followed prescribed behaviors that resulted in the travesty of exposure of children to lead paint for research purposes.7 Clearly something in the composition, formation, management, or deliberation of the IRBs (and IRBs in general) is problematic to say the least and potentially disastrous at its worst. This is not a new concept or concern.27 However, viewing IRBs in terms of their organizational vulnerabilities seems to be far less popular than questioning the character and intentions of the researchers themselves or engaging in scrutiny of superficial procedural problems in IRB meetings.28

Evidence that the system for protection of human participants of research worked in some respects in this case is clearly not enough to give a clean bill of health to the institution itself. For years we have witnessed excessive confidence in the rules and regulations of institutionalized science ethics, with too little understanding or appreciation of the limitations of rules and of the hazards of implementing rules. Some have suggested rules reforms to improve IRB performance (see, for example, Kafelides,29 Larson et al.,30 and Shaul31). However, the few extant studies of the impacts of rules on organizational performance32–34 converge in their opinion that having “good rules” is never more than a partial solution to organizational and institutional performance, and that adding layers of rules, even ones that are effective when taken one at a time, can produce aggregate negative consequences.

INSTITUTIONAL REVIEW BOARDS AND THEIR RULES

Thanks in part to the outrage and policy response to the Tuskegee case, there was in 1992, as today, no dearth of laws, institutional guidelines, or formal professional ethics guiding research. Does the KKI study result imply that these laws and guidelines are inadequate? If so, how could this have happened given the labyrinth of standards and regulations developed in the wake of Tuskegee? Could the debacle have been prevented by better rules, procedures, and institutional guarantees?

One interpretation is that the KKI study represents a specific failure in one instance of institutionalized science ethics implementation. Our interpretation is that the case suggests systemic problems with overreliance on institutionalized science ethics as prevention of research catastrophe when the organizational aspects of the institution itself are too poorly understood. With greater transparency in the IRB review process, we might focus on specific problematic rules or forecasts of rule failure. Therein lies an alternative for building a better IRB. Transparency in IRB deliberation, to the extent that it does not compromise privacy of participants, can produce better understanding of IRB members and whether they are meeting the implicit or explicit assumptions and forecasts of their behavior.9

A crucial component of the Federal Policy for the Protection of Human Subjects, which was passed into law in 1974 with the National Research Act, is to require that all research protocols involving human participants be reviewed and approved by an IRB. Although there is much variance in the organization, structure, composition, and norms of IRBs, their functions and charters are quite similar. Two of the primary operating criteria and rules for IRBs are especially relevant. The first rule is that IRBs must ensure that research participants willingly and voluntarily provide their informed consent.35 In the case of the KKI study, the study was reviewed and passed by the Johns Hopkins University IRB, an arm of a university that has vast experience with research on human participants and that is considered by many to be the leading medical research university in the nation. This group clearly had significant experience reviewing and approving participant consent forms. This is not to suggest that the researchers were blameless for ethical lapses or that their communication with the IRB could not have contributed to poor IRB decision making, but the research could not be conducted without IRB approval and, thus, the lapse in judgment represents a focal point in the comparative analysis between Tuskegee and the KKI study. Despite all of the legislation and regulation that was implemented as a result of Tuskegee, ultimately, the last vestige of consideration of potential harm to research participants in the KKI study rested in the hands of a group of diverse Johns Hopkins University IRB members.

Indeed, based on the submissions of the Johns Hopkins legal counsel, the KKI researchers were well informed, well meaning, and properly intentioned about consent for the vulnerable population they served.36 Yet little is known about the deliberations of the Johns Hopkins IRB or how they arrived at the decision to approve the lead paint study protocol. We do know that layers of ethical guidelines, laws, and regulations did not deter the IRB from granting permission to the KKI group to pursue the lead paint study. What we do not know is whether the Johns Hopkins IRB was used as, essentially, a device for legitimation, moving the ethical onus away from the researcher. This is a worrisome possibility not only for the KKI case but also for research writ large because it suggests not only a continuing possibility but also increasing probability of bad outcomes and ethical lapses.

The second of the major rules for IRBs is that they ensure that safeguards are provided for vulnerable populations.35 Vulnerable populations include children, prisoners, pregnant women, handicapped, mentally disabled, or economically or educationally disadvantaged persons. It is important to note that today's IRBs are asked to make discrete decisions but that some policies and legislative requirements are imprecise and thereby not only permit but also necessitate considerable interpretation and exercise of discretion. Because of the inexact meaning of “vulnerable,” “informed consent,” and, especially, “risk” and “benefit,” the KKI study researchers and IRB members arguably may have had little more clarity concerning science ethics for vulnerable populations than their Tuskegee researcher counterparts had decades ago.

One of many problems of institutionalized science ethics is that its range of application seems to have been stretched to the breaking point. For today's IRB, the line between human participation in experiments and human interaction in discussing social issues (e.g., 2 people talking) has been blurred, with increasing demands for informed consent for study groups at little or no risk as a result of the research.37 Most researchers know that the answer to nearly all questions about science ethics, whether the study involves drugs injected by poor youth or interviews conducted about innocuous topics with highly sophisticated participants, is “submit it to the IRB.”37

The vulnerability of certain minority groups seems to have lost force and attention. Now “vulnerability” is associated more with capacity to provide consent than with justice in selection of participants for the research.38 In some instances, institutionalized science ethics seems to be synonymous with the institutionalization of condescension. For example, a poor person may agree to participate in a research study, and be knowledgeable and able to understand the risk, but is vulnerable because of his or her attraction to the few benefits of study participation (such as free health care and clinical assessments, lodging, and meal stipends). Research centers have experienced dramatic shutdowns by regulatory agencies (such as the Food and Drug Administration and the Office for Human Research Protections) with responsive contortions in their subsequent research on vulnerable populations.39

OFFERING A NEW THEORETICAL LENS

We need not dwell further on the specific limitations of IRBs as others have preceded us (see, for example, Gray,5 Emanuel et al.,28 and Goldman and Katz40). Nor do we need to focus on remedies that might be appropriate to the KKI case because it is well known that IRBs vary from institution to institution. Rather, we focus on the theoretical limitations of IRBs as bureaucratic organizations. Formal organizations of all sorts are rooted in fundamental rules and procedures. The IRB is not an exception. One enduring challenge for effective management is to determine which behaviors should be formally prescribed by the organization and which should be based on discretion. Organizations based more on rules are said to be more “formalized.” With respect to rules-based organizational activities (such as the production of IRB decision outcomes), the organization is prone to many well-known pathologies (see Bozeman9 and Zhou33), including problems with the implementation of rules. Even in instances where the rules and procedures themselves are effective and well accepted, persons in bureaucratic organizations often have difficulty with their implementation. The circumstances where formalized rule implementations are especially problematic are well known to students of organizations.

We suggest that if one thinks of IRBs as organizational systems for rule implementation, then their inherent organizational vulnerabilities can be explained. In most organizational settings, problems with rule implementation are largely a function of not only the quality of the rules, but also:

the number of relevant rules,

the number of persons enacting the rules,

the frequency with which rules are enacted,

the mastery of rules by those enacting them,

the relevant heterogeneity of the rule enactors,

the degree of concentration or dispersion among rule enactors, and

the degree and type of linkage among rule enactors.

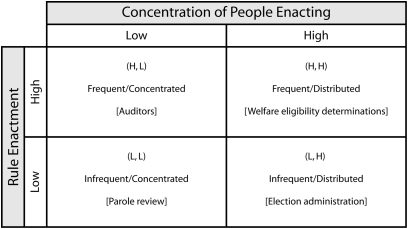

Bozeman, in his study of formal rules,9 developed a model of “rule enactment.” Figure 1 provides the rule enactment model where rule enactment ranges from frequent to infrequent (vertical axis) based on the concentration of participants (concentrated to distributed on the horizontal axis).

FIGURE 1.

Rule enactment model.

As we see from the figure, we can expect systems to work best when (ceteris paribus) the rules are enacted frequently by a concentrated and homogeneous set of enactors. One example is auditors. The rules are fixed and relatively precise, they are exercised often, and the enactors typically are homogeneous in the sense that they have similar understandings and technical mastery of the rules. This is not to say that problems are unknown in the highly concentrated or frequently enacted context, only that problems are less common here than in other contexts.

In contrast, we can expect frequent problems when we have relatively infrequent decisions by dispersed decision makers, especially in those cases where the rules are not precise, the decision makers are dissimilar, and technical mastery of the rules is either missing or, in the case of the IRB, has little meaning because of inconsistencies and complexity.28 If we assume that a very significant percentage of IRB members’ rule-based discussion is about relatively trivial cases (e.g., semistructured interviews with knowledgeable participants, survey questionnaires on innocuous topics) and that only a small percentage of decisions have great import (i.e., significant and palpable threat to subjects’ well-being), then IRB decisions would, except for the dross of largely extraneous decisions, exist in the “infrequent/distributed” quadrant, in which rule enactment disasters are most likely to occur.

The primary implication of this modest theory of IRB rule enactment is that the current institutional design of IRBs is likely to fail at an unacceptable rate. This failure is most likely to take place when the decisions faced are not routine and have strong elements of idiosyncrasy and novelty that require more judgment than straightforward enactment of rules. In other words, IRBs should work reasonably well for unimportant decisions, but have built-in design flaws for high-stakes decisions.

If one accepts the vulnerability of these institutions that are set up to protect the vulnerable, then what is the necessary response? Is organizational reform the answer? Others have made arguments for (1) reducing the IRB's attention to relative trivia, (2) stabilizing and rationalizing requirements for IRB members (i.e., “technical mastery of the rules”), and (3) standardizing IRBs, which now vary greatly in their design and norms (see, for example, Barnes and Florencio41). Is greater participation the answer? Elsewhere,7 we have argued that “representativeness” occurs best when IRB representatives are individuals who are candidates for the participant pool. Indeed, as we have asserted in other work, there is no good substitute for identification and empathy with the people who will be recruited for and exposed to the research. But representativeness, especially in group decision making, presents its own problems (see Surowiecki42 for a discussion of the challenges of representativeness in group decision making that create problems of power and influence), similar to the problems of defining “vulnerable.”

A NEW AGENDA FOR INSTITUTIONALIZED SCIENCE ETHICS

There are no easy answers in science ethics. The KKI study example is only one of many that demonstrate that institutionalized science ethics are insufficient. The reason KKI is instructive is not that the associated IRB processes were extraordinary; it is quite the reverse. There is no evidence that IRB procedures differed significantly in this case from hundreds of other instances, ones that drew less attention and escaped the wrath of the press and the public.

Perhaps we can do better with a set of interrelated changes: more realistic policies and procedures more easily enacted, organizational and institutional reform, greater participation, and better representation of potential research participants and their interests. It is perhaps also useful that we do not rush to vilification. Lest we too eagerly tar all with the same brush, let us recall that literally tens of thousands of IRB processes unfold each year, affecting the vast majority of scientific and medical researchers. In only a very small percentage of these cases does either the IRB process or the subsequent research receive any negative attention. Although this does not imply that the IRB process is working optimally, it at least seems to suggest that institutional reform and continued vigilance are needed, not a full-scale overhaul or eradication of the system or addition of new layers of edicts and rules.

The reform agenda carries with it an implicit research design. If local IRB controllers will consent to intrusive study of their own operations, then it should be possible to conduct a number of natural field experiments on the reforms that will show the impact of such factors as committee composition, group interaction dynamics, procedures, red tape, and decision processes on outcomes. In our view, developing research useful for improving IRBs and institutionalized ethics processes should involve multiple methods. Each of three popular research methods seems to have considerable potential for the study of IRBs, and each has very different strengths and weaknesses. Methods warranting further use in connection with IRB research include: (1) systematic case studies and ethnographic work, (2) survey research and questionnaires, and (3) experiments and simulations.

In those instances where it is possible to engage in what is considered ethnographic work, the “wide-angle lens” of such studies is likely to be quite useful in developing hypotheses for broader studies. When researchers have sufficient access for ethnography, there is great potential for developing deep and quite specific insights into IRB operations and outcomes. When access is somewhat more limited, case studies provide many of the same advantages. Although case analysis presents known problems related to generalization,43 the method nonetheless provides an excellent means of mounting an empirically based argument and of developing initial research propositions.44 With respect to research on IRBs, the case study would likely prove especially useful for understanding exceptional outcomes. A great advantage of case studies is that they enable one to examine a wide range of influences on science ethics decision making. Whereas many quantitative approaches are not well adapted to focusing simultaneously on different levels of analysis (e.g., individual, group, organizational, and environmental), case studies, which require no commensurate measurement among analytical levels, easily finesse this problem.

Whereas case studies prove useful for a more holistic examination of science ethics implementation, survey questionnaires have somewhat less analytical reach but provide data that can be aggregated and applied to multiple contexts. Surprisingly few surveys and questionnaire-based studies have been conducted focusing on IRBs and their operations. The few studies that have been conducted with surveys seem to be based on convenience samples and are plagued by low response rates, generally with 75% or so refusals.45 Even some of the more prominent published questionnaire studies46 are not easily evaluated according to traditional social science survey research criteria. Often studies are quite focused on specific questions with little attention to sample selection dynamics, response bias artifacts, or developing valid and reliable attitudinal scales.

Moreover, the study of IRBs seems not yet to have drawn much attention from persons trained in either organizational analysis or survey research techniques. The door is open for more rigorous, technically grounded studies seeking to generalize about the behavior of IRB members and stakeholders. Survey research would likely prove extremely useful for understanding the variations in structures and processes among the many and diverse IRBs and associated organizations.

Field and laboratory experiments could play a useful role in studies of IRBs. A frequent problem in analysis of IRBs is that data are inaccessible or unavailable, either because of legal protections (privacy, confidentiality) or simply because of the reticence of participants. But to the extent that IRB behaviors can be simulated in artificial settings, experiments can provide useful insights. To our knowledge, experiments simulating IRB behavior are quite rare. B. B. developed an experiment to examine the effects of racial match on simulated IRB groups' decisions, focusing on the race of the “IRB members,” the “researcher” whose research is vetted, and the “chair of the IRB committee.”47 Race seemed unimportant per se, but racial homophily had some impact on the willingness to accept the researchers’ arguments, all else equal. Because so many IRB outcomes depend on group dynamics and decision making, laboratory experiments seem to hold promise as an economical and unobtrusive means of shedding light on existing processes and, perhaps more important, of trying new ones.

SUMMARY

We know that group processes in general are subject to improvement by observation, evaluation, and planned change. There is no reason to assume that IRB processes should prove a more intractable learning environment than, say, corporate board rooms, air traffic control towers, space centers, or war rooms. Finally, even if we move to solution-by-experimentation, it is important to remember that perfecting institutions is only part of the answer. As Dunn and Chadwick note in their handbook on human subjects research:

Sometimes, with the best of intentions, scientists, public officials, and others working for the benefit of all of us, forget that people are people. They concentrate so totally on plans and programs, experiments, statistics—on abstractions—that people become objects, symbols on paper, figures in a mathematical formula or impersonal ‘subjects’ in a scientific study.35

Effective institutions and processes remain vulnerable to those who work within them, and when researchers become too detached from their research participants, the results sometimes defy the ability of institutions to prevent harm.

Human Participant Protection

No human participants were involved in this study.

References

- 1. Gamble VN. Under the shadow of Tuskegee: African Americans and health care. Am J Public Health 1997;87:1773–1778

- 2. Moore RD, Stanton D, Gopalan R, Chaisson RE. Racial differences in the use of drug therapy for HIV disease in an urban community. N Engl J Med 1994;330:763–768

- 3. Mervis J. Minority faculty. New data in chemistry show ‘zero’ diversity. Science 2001;292:1291–1292

- 4. Lewin ME, Rice B. Balancing the Scales of Opportunity: Ensuring Racial and Ethnic Diversity in the Health Professions. Washington, DC: National Academy Press; 1994

- 5. Gray BH. Institutional review boards as an instrument of assessment: research involving human subjects in the U.S. Sci Technol Human Values 1978;Fall(25)

- 6. Grimes v Kennedy Krieger Institute Inc, 782 A2d (MD 2001)

- 7. Bozeman B, Hirsch P. Science ethics as a bureaucratic problem: IRBs, rules, and failures of control. Policy Sci 2006;38:269–291

- 8. Lane EON. Decision-making in the Human Subjects Review System [doctoral dissertation]. Atlanta: Georgia Institute of Technology; 2005

- 9. Bozeman B. Bureaucracy and Red Tape. Upper Saddle River, NJ: Prentice Hall; 2000

- 10. La Porte T, Consolini P. Theoretical and operational challenges of “high-reliability organizations”: air-traffic control and aircraft carriers. Int J Public Adm 1998;21:847–852

- 11. Romzek BS, Dubnick MJ. Accountability in the public sector: lessons from the Challenger tragedy. Public Adm Rev 1987;47:227–238

- 12. Perrow C. Normal Accidents: Living With High-Risk Technologies. New York, NY: Basic Books; 1984

- 13. Jones JH, Tuskegee Institute. Bad Blood: The Tuskegee Syphilis Experiment. New York, NY: Free Press; 1993

- 14. Timeline—The Tuskegee Syphilis Study: A Hard Lesson Learned. Atlanta, GA: Centers for Disease Control and Prevention; 2004. Available at: http://www.cdc.gov/tuskegee/timeline.htm. Accessed March 4, 2009

- 15. Rosser SV. An overview of women's health in the US since the mid-1960s. Hist Technol 2002;18:355–369

- 16. Corbie-Smith G, Miller WC, Ransohoff DF. Interpretations of ‘appropriate’ minority inclusion in clinical research. Am J Med 2004;116:249–252

- 17. Kaiser J. Human subjects. Court rebukes Hopkins for lead paint study. Science 2001;293:1567–1569

- 18. Kaiser J. Lead paint trial castigated. Science Now 2001;4

- 19. Lewin T. U.S. investigating Johns Hopkins study of lead paint hazard. New York Times. August 24, 2001;A:11

- 20. Leighton J, Klitzman S, Sedlar S, Matte T, Cohen NL. The effect of lead-based paint hazard remediation on blood lead levels of lead poisoned children in New York City. Environ Res 2003;92:182–190

- 21. Grimes v Kennedy Krieger Institute Inc and Higgins v Kennedy Krieger Institute Inc, No. 128 and 129 (MD Sept. term 2000), Appellee's motion for partial reconsideration and modification of opinion (filed September 17, 2000)

- 22. Farmer P. Infections and Inequalities: The Modern Plagues. Berkeley, CA: University of California Press; 1999

- 23. Brown GE. The mother of necessity: technology policy and social equity. Sci Public Policy 1993;20:411–416

- 24. Johnson EB, Jackson L, Hooley D, Lee B. Dissenting opinion. Unlocking the Future: Toward a New National Science Policy. Washington, DC: US House of Representatives Committee on Science; 1998

- 25. Ross LF. In defense of the Hopkins lead abatement studies. J Law Med Ethics 2002;30:50–57

- 26. Spriggs M. Canaries in the mines: children, risk, non-therapeutic research, and justice. J Med Ethics 2004;30:176–181

- 27. Candilis PJ, Lidz CW, Arnold RM. The need to understand IRB deliberations. IRB 2006;28:1–5

- 28. Emanuel EJ, Wood A, Fleischman A, et al. Oversight of human participants research: identifying problems to evaluate reform proposals. Ann Intern Med 2004;141:282–291

- 29. Kefalides PT. Research on humans faces scrutiny; new policies adopted. Ann Intern Med 2000;132:513

- 30. Larson E, Bratts T, Zwanziger J, Stone P. A survey of IRB process in 68 U.S. hospitals. J Nurs Scholarsh 2004;36:260–264

- 31. Shaul R. Reviewing the reviewers: the vague accountability of research ethics committees. Crit Care 2002;6:121–122

- 32. Rushing WA. Organizational rules and surveillance: propositions in comparative organizational analysis. Adm Sci Q 1966;10:423–443

- 33. Zhou X. The dynamics of organizational rules. Am J Sociol 1993;98:1134–1166

- 34. March JG, Schulz M, Zhou X. The Dynamics of Rules Change in Written Organizational Codes. Palo Alto, CA: Stanford University Press; 2000

- 35. Dunn CM, Chadwick G. Protecting Study Volunteers in Research—a Manual for Investigative Sites. Boston, MA: CenterWatch Inc; 1999

- 36. Pollak J. The lead-based paint abatement repair and maintenance study in Baltimore: historic framework and study design. J Health Care Law Policy 2002;6:89–108

- 37. Gunsalus CK. The nanny state meets the inner lawyer: overregulating while underprotecting human participants in research. Ethics Behav 2004;14:369–382

- 38. Levine C, Faden R, Grady C, Hammerschmidt D, Eckenwiler L, Sugarman J. The limitations of “vulnerability” as a protection for human research participants. Am J Bioeth 2004;4:44–49

- 39. Putney SB, Gruskin S. Time, place, and consciousness: three dimensions of meaning for US institutional review boards. Am J Public Health 2002;92:1067–1070

- 40. Goldman J, Katz MD. Inconsistency and institutional review boards. JAMA 1982;248:197–202

- 41. Barnes M, Florencio PS. Financial conflicts of interest in human subjects research: the problem of institutional conflicts. J Law Med Ethics 2002;30:390–402

- 42. Surowiecki J. The Wisdom of Crowds: Why the Many Are Smarter Than the Few and How Collective Wisdom Shapes Business, Economies, Societies and Nations. 1st ed. New York, NY: Doubleday; 2004

- 43. Kennedy M. Generalizing from single case studies. Eval Rev 1979;3:661–678

- 44. Siggelkow N. Persuasion with case studies. Acad Manage J 2007;50:20–24

- 45. White MT, Gamm JL. Informed consent for research on stored blood and tissue samples: a survey of institutional review board practices. Account Res 2002;9:1–16

- 46. Shah S, Whittle A, Wilfond B, Gensler G, Wendler D. How do institutional review boards apply the federal risk and benefit standards for pediatric research? JAMA 2004;291:476–482

- 47. Bozeman B, Sarewitz D, Feeney M. Science and Inequality: Final Report to the Kellogg Foundation. Tempe, AZ: Consortium for Science, Policy and Outcomes, Arizona State University; 2007