Abstract

Health behavior interventions delivered at the point of service include those that yoke an intervention protocol to existing systems of care (e.g., clinical care, social work, or case management). Though beneficial in a number of ways, such “hosted” intervention studies may be unable to retain participants who discontinue their use of the hosting service.

Because some recent practices treat percent total attrition as indicative of methodological flaws, hosted interventions targeting hard-to-reach populations may be excluded from compendiums of effective interventions or from research syntheses because of high attrition rates that may in fact be secondary to the natural flow of service use rather than to differential attrition or internal design flaws. Better methods to characterize rigor are needed.

Examination of methodological rigor in behavioral intervention research is essential for the systematic identification of interventions that are empirically demonstrated to be effective and deemed by advisory groups and funding agencies to be most appropriate for widespread dissemination and adoption. The establishment and implementation of formulas for defining rigor, though essential in this process, must nonetheless demonstrate sensitivity to a wide diversity of implementation and evaluation strategies. The manner in which attrition and retention factor into these formulas is an important, though understudied, consideration, which can exert influence on policy development. Oversimplified applications of attrition as exclusion criteria can produce public policy that is biased toward certain types of research and away from others.

Hosted interventions, where an intervention is offered within or in conjunction with existing community, health, or private services, are promising for capacity building and rapid deployment but also are affected by multiple causes of attrition, making total rates of attrition an oversimplified metric of study rigor. Similarly, interventions for hard-to-reach or transient populations, though a promising avenue for reaching national public health goals of reducing health disparities, also require a complex conceptualization of attrition. Thus, how attrition is used in evaluating rigor is of consequence to public health policy and the progression of scientific inquiry.

I review the role of attrition in the validity of outcomes and describe current practices in evaluating methodological rigor, along with problems that can arise from those practices. I explore cases in which total percent attrition appears particularly inappropriate as a metric for rigor and discuss more viable alternatives. Finally, I offer methods that researchers and reviewers could adopt for more careful evaluations that are sensitive to a wider range of designs and methods.

ATTRITION-RELATED THREATS TO VALIDITY

Attrition is the loss of randomly assigned participants or participants’ data. It can compromise a randomized controlled trial's external validity by producing a final sample that is not representative of the population sampled or, if it differs between study arms, its internal validity, because some characteristic of the retained sample rather than the intervention may produce an observed intervention effect (see, for example, Alexander,1 Cook and Campbell,2 Goodman and Blum,3 Little,4 Miller and Wright,5 Shadish et al.,6,7 and Valentine and McHugh8). Attrition in some form is a nearly universal reality in longitudinal research with human participants.

Though common, attrition-related threats to validity in randomized controlled trials have historically been underreported or largely ignored.6–8 Increased attention to this issue over the past decade has improved reporting of the total number of randomized participants who do and do not complete a study's protocol, although the manner in which attrition is reported continues to be inconsistent across studies8 and arguably remains oversimplified. Most frequently, attrition is reported as a simple descriptive statistic (e.g., percent total attrition), with the presumed understanding that lower rates imply better science. However, percent attrition, per se, fails to provide sufficiently detailed information (e.g., see recommendations from the Consolidated Standards of Reporting Trials [CONSORT] statement9,10) and does not address whether or how that rate may affect the validity of outcomes.

Attrition is a highly complex phenomenon and the subject of a well-developed area of inquiry and theory building.1,2,10–13 Despite an impressive literature across research domains delineating the need to adopt refined approaches to attrition assessment, taxonomies for characterizing type of attrition, and procedures to evaluate potential effects of attrition on internal and external validity,8,13–15 longitudinal research in the social and health sciences has generally not adopted such practices (see, for example, Ahern and Le Brocque14). Amico et al.16 found that almost half of the published antiretroviral adherence intervention trials they reviewed failed to provide sufficient data on differential attrition, categories of attrition, or characteristics of those retained or attrited. Similarly, Ahern and Le Brocque's14 review of the longitudinal social sciences literature found that fewer than one quarter of studies provided sufficient detail describing patterns of attrition.

The primary concern in evaluating threats caused by attrition is the extent to which participants or their data are missing at random or not at random.11 Missing data have multiple causes,17 and each cause has an extensive literature recommending analytic procedures for evaluating the potential impact of attrition on the outcomes obtained and, even in the presence of differential attrition, possibly minimizing identified threats.14 A given rate of attrition does not in and of itself equate to bias, nor does it confer methodological flaws or, conversely, integrity.15 Thus, an overreliance on total percent attrition or retention risks ignoring the wealth of information that careful analysis of attrition provides and underusing sophisticated methods of establishing scientific rigor in complex research designs.
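The point that an attrition rate does not by itself imply bias can be made concrete with a small simulation. The sketch below is illustrative only: the outcome distribution (standard normal), the 30% loss, and the two loss mechanisms are assumptions, not data from any study. Both scenarios lose exactly the same share of participants; only the mechanism differs (missing completely at random versus missing not at random).

```python
import random
import statistics

def simulate_attrition_bias(n=10_000, attrition=0.30, seed=1):
    """Bias in the retained-sample mean (relative to the full-sample mean)
    under two loss mechanisms with identical attrition rates.

    Returns (bias_mcar, bias_mnar). All inputs are hypothetical.
    """
    rng = random.Random(seed)
    outcomes = [rng.gauss(0.0, 1.0) for _ in range(n)]
    full_mean = statistics.fmean(outcomes)
    n_lost = int(n * attrition)

    # Missing completely at random: a random subset drops out.
    kept_mcar = rng.sample(outcomes, n - n_lost)
    # Missing not at random: those with the lowest outcomes drop out.
    kept_mnar = sorted(outcomes)[n_lost:]

    bias_mcar = statistics.fmean(kept_mcar) - full_mean
    bias_mnar = statistics.fmean(kept_mnar) - full_mean
    return bias_mcar, bias_mnar

bias_mcar, bias_mnar = simulate_attrition_bias()
print(f"bias with random loss: {bias_mcar:+.3f}")
print(f"bias with outcome-related loss: {bias_mnar:+.3f}")
```

With the same 30% attrition, the random-loss scenario leaves the mean essentially unchanged, while outcome-related loss shifts it by roughly half a standard deviation; the rate alone cannot distinguish the two.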

RATES OF RETENTION AS INCLUSION CRITERIA

Of concern is a growing trend for national registries of effective interventions, funding agencies, and research synthesis studies to adopt policies and inclusion criteria that support an oversimplified use of attrition. Despite the vast complexity of attrition, many registries and reviewers alike have moved toward adopting cut-offs for acceptable and unacceptable rates of total attrition, applied to an entire sample or within each study arm but not to the difference between arms (differential attrition). These cut-offs are interpreted as necessary criteria for scientific rigor and are applied across study designs and contexts.

For example, the inclusion criteria for best-evidence behavioral interventions required retention rates of 70% or greater in each study arm for an intervention with positive outcomes to be considered.18 This criterion was recently reaffirmed in the Centers for Disease Control and Prevention's strategic plan through 2010,19 with an emphasis on increasing the number of “effective” interventions that receive funding for implementation. To qualify for Tier I (effective interventions), an intervention must meet all criteria, including 70% or greater retention in each study arm at follow-up; Tier II (promising interventions) requires 60% or greater retention. Thus, attrition rates greater than 30% or 40% in either study arm are considered indicative of “fatal” flaws in the study, in effect negating intervention outcome results regardless of other qualifications and, importantly, steering funding away from such interventions.
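The per-arm retention rule described above can be sketched as a small function. This is a hypothetical illustration that models only the retention criterion (the actual review criteria include others); the function name and tier labels are ours. Because the rule looks only at the weakest arm, it never examines the gap between arms.

```python
def classify_tier(retention_by_arm):
    """Classify a study using only the per-arm retention cut-offs.

    retention_by_arm maps arm name -> proportion retained at follow-up.
    Every arm must meet the threshold, so the weakest arm decides.
    """
    lowest = min(retention_by_arm.values())
    if lowest >= 0.70:
        return "Tier I (effective)"
    if lowest >= 0.60:
        return "Tier II (promising)"
    return "excluded"

print(classify_tier({"treatment": 0.99, "control": 0.61}))  # large gap between arms
print(classify_tier({"treatment": 0.59, "control": 0.59}))  # no gap at all
```

Under this rule, arms retaining 99% and 61% qualify as Tier II despite a 38-point difference, while two arms each retaining 59%, with no differential attrition at all, are excluded.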

Valentine and McHugh8 also note that the study quality assessment scale of the National Registry of Effective Prevention Programs gives a study's handling of attrition its most favorable rating when attrition is less than 20%. Work synthesizing the intervention literature has also adopted this 20% mark (see, for example, Whitlock et al.20). However, adopting cut-off points for total attrition and retention, or even the view that attrition is in all cases a threat, arguably decontextualizes an extremely context-dependent phenomenon and inappropriately substitutes total attrition for the main threat, differential attrition.
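The difference between total and differential attrition is easy to see with two hypothetical trials that have identical total attrition. In this sketch (hypothetical counts; "differential" defined simply as the treatment-arm rate minus the control-arm rate), a total-attrition cut-off treats the two trials identically, yet only the second gives reason to worry that the retained groups are no longer equivalent.

```python
def attrition_summary(lost_t, n_t, lost_c, n_c):
    """Return (total attrition, differential attrition) as proportions.

    Total attrition pools both arms; differential attrition is the
    treatment-arm rate minus the control-arm rate.
    """
    total = (lost_t + lost_c) / (n_t + n_c)
    differential = lost_t / n_t - lost_c / n_c
    return total, differential

# Two hypothetical trials with 100 participants per arm.
balanced = attrition_summary(25, 100, 25, 100)  # same loss in both arms
lopsided = attrition_summary(40, 100, 10, 100)  # loss concentrated in treatment
print(balanced, lopsided)
```

Both trials show 25% total attrition and would fare the same under a 20% total cut-off; only the second shows a 30-point differential.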

Assuming a causal, linear, positive relation between retention and validity of outcomes largely ignores the fact that bias from attrition depends on a delicate interplay among the type of attrition in question, the context in which the intervention is delivered, and the statistical analyses employed. Establishing metrics for study quality is certainly important for the progression of research on effective interventions.18,21 Yet given the many other possible criteria (including effect size, power, longevity of results, cost benefits, feasibility of implementation, and acceptability of the intervention), percent retention or attrition seems a weak inclusion or exclusion criterion.

ATTRITION IN HOSTED INTERVENTION DESIGNS

Health promotion intervention research that uses existing systems of care as vehicles for, or partners in, intervention deployment and measurement collection has the added complexity of needing to consider 2 patterns of attrition and retention: patterns for participants in the study and patterns for service users. I define hosted intervention designs as intervention protocols that are developed and designed for implementation in conjunction with events occurring in an existing service, with intervention exposure or dose yoked to attendance at that existing service.

For example, risk reduction, prevention screening, or health promotion interventions may be mapped directly onto the provision of clinical care.22–24 Nutrition promotion, abuse screening or prevention, or drug or alcohol risk-reduction interventions may be mapped onto the delivery of education or case management services for various at-risk populations.25–29 Mental health screening and services may be mapped onto systems providing transient housing, walk-in clinic services, and homeless shelter services.30,31 Many areas of health behavior research and intervention are adopting these mapping strategies because they emphasize capacity building in existing services and are more hybrid in nature (merging efficacy with effectiveness), using systems of care that are already available to participants and will remain so after the study ends.

Loss of participants within a hosted intervention design carries the same potential threats to external and internal validity noted previously. Attrition is the net result of those who complete some but not all of the intended intervention exposure, those who complete the intervention but have missing measures, and those who do not engage in the intervention at all (e.g., never-takers32). Within a hosted intervention design, any of these outcomes may arise because missingness or protocol noncompliance is secondary to discontinuing use of the host service. For example, recent research on reasons for attrition from a study hosted by an HIV clinic found that approximately one quarter of attrited participants had moved or relocated and thus were no longer active clinic patients.33 They had discontinued service use; in effect, they had attrited from the service itself.

It could be highly informative to distinguish between loss that occurs because of discontinuation of service use and loss because of requested withdrawal, noncompliance with treatment, or failure to secure assessments despite continued service use. For most randomized controlled trials, assessments of differential attrition should focus on loss of participants in the treatment arm relative to loss in the control arm. However, grouping active service users who are lost from a study and deactivated users also lost from a study into a single “lost at follow-up” category can be misleading. It is important to establish who was retained within the hosting service and who discontinued use of it, and the extent to which attrition within either stratum was differential. Differential attrition within the active service user stratum would suggest that attrition may have created nonequivalent groups and would require further investigation. Differential attrition within the discontinued service user stratum may suggest that the groups no longer eligible to receive the treatment are nonequivalent; investigating this potential nonequivalence can inform decisions about positioning a given intervention within a given service.
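One way to operationalize this is to compute attrition by arm separately within each service-use stratum and test the difference. The sketch below uses a pooled two-proportion z-test, which is our choice of test for illustration (with small counts an exact test would be more appropriate); the function names and the counts in the usage example are hypothetical.

```python
import math

def two_proportion_z(lost_a, n_a, lost_b, n_b):
    """Pooled two-proportion z-test.

    Returns (difference in attrition rates, z statistic, two-sided p-value).
    """
    rate_a, rate_b = lost_a / n_a, lost_b / n_b
    pooled = (lost_a + lost_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_a - rate_b) / se if se > 0 else 0.0
    # Two-sided p-value from the standard normal: P(|Z| > |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_a - rate_b, z, p_value

def differential_by_stratum(counts):
    """Compare treatment vs control attrition separately in each service-use stratum.

    counts maps stratum -> {"treatment": (n_lost, n), "control": (n_lost, n)}.
    """
    return {
        stratum: two_proportion_z(*arms["treatment"], *arms["control"])
        for stratum, arms in counts.items()
    }

# Hypothetical counts: (participants lost from the study, participants in stratum).
counts = {
    "active service users": {"treatment": (6, 60), "control": (7, 65)},
    "discontinued service": {"treatment": (30, 40), "control": (15, 35)},
}
for stratum, (diff, z, p) in differential_by_stratum(counts).items():
    print(f"{stratum}: difference {diff:+.3f}, z = {z:.2f}, p = {p:.3f}")
```

In this hypothetical, attrition is balanced among active service users but clearly differential among those who left the service, which would point to questions about how the intervention fits the host service rather than to nonequivalent groups among active users.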

A PROFILE OF RESULTS APPROACH

Analytic methods for understanding and handling missing values11,12,34–37, such as intent-to-treat, as-treated, per-protocol, efficacy subset, and Complier Average Causal Effect analyses, among others, specifically target outcomes relative to various inclusion criteria for a sample. Each carries underlying assumptions about the random or nonrandom nature of the observed missingness that require empirical and conceptual evaluation (compare Valentine and Cooper21). Despite substantial debate over which postrandomization sample strata should be of greatest interest in outcome evaluations, many areas of inquiry continue to be dominated by intent-to-treat approaches.38 However, adopting intent-to-treat as the sole strategy can ignore valuable information available in the other strata of participants. A profile approach to outcomes of hosted intervention randomized controlled trials could be adopted that attends to all strata of participants, providing information about the overall impact of offering an intervention program within a given existing service (intent-to-treat on the randomized sample), the impact of the intervention with active service users (intent-to-treat within active service users), and the impact of the intervention when received (as-treated, per-protocol, efficacy subset, or Complier Average Causal Effect analyses).

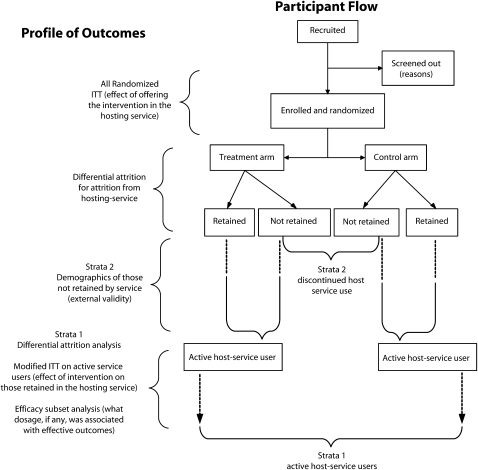

A “profile of outcomes” approach, developed within the specific context of the population and intervention, supplements intent-to-treat analyses and supports a comprehensive, dynamic understanding of the potential role of attrition in qualifying results. Specifically, with a profile approach, study flow could be characterized by attending to flow both in service use and in the study. A CONSORT-type diagram for hosted designs, like that depicted in Figure 1, further divides missingness in the enrolled sample into that which is secondary to discontinuation of service use (strata 2) and attrition among those who maintained service use over the course of the study (strata 1). Each stratum is of interest, and transparent reporting of the characteristics of each stratum facilitates exploration of the extent to which strata 1 and 2 differ on the outcome variables of interest.

FIGURE 1.

Participant flow in hosted intervention designs with suggested profile of outcomes.

Note. ITT = intent-to-treat analysis. With excellent measurement retention or intensive retention strategies, some of strata 2 would be populated by those who had no missing data but had nonetheless discontinued their use of the hosting service. If sufficient in number, this group could be compared with other participants to determine the extent to which aspects of study methods or the intervention may have promoted study engagement. Note as well that appropriate methods for representing missing data in each analysis are assumed.

The profile of results approach could address the impact of a policy of instituting a given intervention within an existing service (intent-to-treat on the full sample of randomly assigned participants), the impact of the intervention on active service users (modified intent-to-treat, with analyses conducted on strata 1, those randomly assigned to condition who also remained active in the hosting service over the course of the study), and the impact on those who completed the study protocol in full (efficacy sample or per-protocol analyses). Comparing the characteristics of those who remained active in the service with those who did not (strata 2) provides valuable information about how well suited a service may be for hosting a given intervention. Only comprehensive analyses of attrition across and within each stratum can make full use of the wealth of information readily available in most randomized controlled trials. Such analyses should emphasize differential attrition, to evaluate possible threats to internal validity, and fully characterize those who were and were not reached by an intervention, to evaluate external validity.
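The three analysis sets named above can be expressed as simple filters on a participant roster. This is a minimal sketch under stated assumptions: the record fields, function names, and roster are hypothetical, and the sketch only defines who enters each analysis. Handling missing outcomes within each set (e.g., multiple imputation) is assumed to happen downstream, as the note to Figure 1 specifies.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass
class Participant:
    arm: str                  # "treatment" or "control"
    active_in_service: bool   # still using the hosting service at study end
    completed_protocol: bool  # completed the study protocol in full

def profile_samples(participants):
    """Return the analysis sets used in a profile of results."""
    return {
        # ITT: every randomly assigned participant.
        "itt_full_sample": list(participants),
        # Modified ITT: strata 1, participants who stayed active in the hosting service.
        "mitt_active_users": [p for p in participants if p.active_in_service],
        # Efficacy / per-protocol: participants who completed the protocol in full.
        "per_protocol": [p for p in participants if p.completed_protocol],
    }

def arm_sizes(sample):
    """Count participants per arm in an analysis set."""
    return Counter(p.arm for p in sample)

# Hypothetical participants for illustration.
roster = [
    Participant("treatment", True, True),
    Participant("treatment", False, False),
    Participant("control", True, False),
    Participant("control", True, True),
]
for name, sample in profile_samples(roster).items():
    print(name, dict(arm_sizes(sample)))
```

Keeping the sets as explicit filters makes it straightforward to report arm sizes for each analysis alongside its results, which is the transparency the profile approach relies on.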

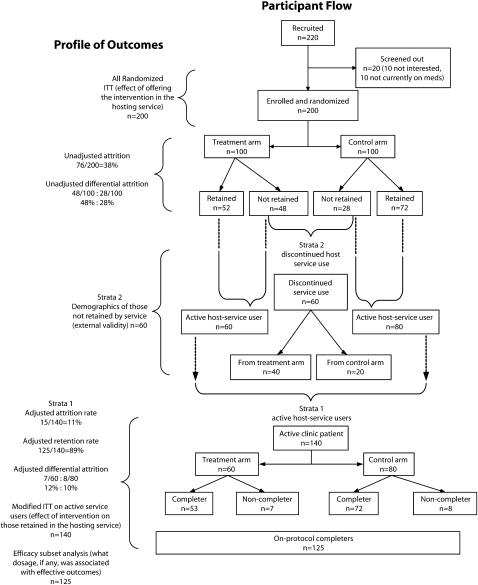

For example, consider a hypothetical study, profiled in Figure 2, that yokes an HIV medication adherence intervention to regularly occurring HIV care visits. Patients were screened (N = 220) and randomized (N = 200; 100 in each arm), and intervention-arm participants were offered 12 months of an intervention delivered at their HIV care visits (control-arm participants completed surveys only). At study end, 125 participants were retained (62.5%) and 75 had attrited (37.5%). On this basis, even highly supportive study results would not be considered in several compendiums and research syntheses, because such attrition rates are assumed to indicate a lack of rigor.

FIGURE 2.

Example of profile approach for a hypothetical study of an adherence intervention delivered at point of HIV clinical care.

Note. ITT = intent-to-treat analysis.

However, 60 of the 75 attrited participants were lost secondary to discontinuing use of clinic services, for reasons unassociated with medication adherence, including relocation, imprisonment, and changes in insurance that changed where they received care. On inspection, the differential attrition between arms is largely driven by differential attrition in strata 2, where 40 treatment-arm versus 20 control-arm participants discontinued service use. Group equivalence or nonequivalence should be evaluated to determine whether hosting the intervention in the given service differentially reaches some participants and not others by virtue of their service use status, and whether there are measured or hypothesized reasons for the observed differential discontinuation of service use.

For the stratum of active service users at study end (strata 1), retention was 88% (110 of the 125 active service users), attrition was 12% (15 of 125), and attrition appeared nondifferential (7 from the treatment arm and 8 from the control arm). Specific demographics and characteristics of those retained must still be provided to help assess generalizability, but confidence in the validity of outcomes specifically for active service users is enhanced. As depicted in Figure 2, analyses within targeted strata for this study provide a complete profile of results that informs decisions about intervention reach, cost effectiveness, and utility. Critically, attrition qualifies results but does not disqualify them.
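The arithmetic in this hypothetical example can be reproduced from the counts given in the text. The sketch below uses only those counts; because the text does not give the per-arm size of strata 1, per-arm retention within strata 1 is not computed. It also makes visible a consequence of the per-arm cut-offs described earlier: the treatment arm retains 53 of 100 participants, below even the 60% Tier II threshold, although 40 of its 47 losses reflect departure from the clinic.

```python
# Counts from the hypothetical HIV-clinic study (Figure 2), 100 per arm.
N_PER_ARM = 100
lost_discontinued = {"treatment": 40, "control": 20}  # strata 2: left clinic services
lost_active = {"treatment": 7, "control": 8}          # strata 1: still clinic patients
ACTIVE_USERS = 125                                    # size of strata 1 at study end

retained = {
    arm: N_PER_ARM - lost_discontinued[arm] - lost_active[arm]
    for arm in ("treatment", "control")
}
total_lost = sum(lost_discontinued.values()) + sum(lost_active.values())

overall_retention = 1 - total_lost / (2 * N_PER_ARM)
arm_retention = {arm: n / N_PER_ARM for arm, n in retained.items()}
active_retention = 1 - sum(lost_active.values()) / ACTIVE_USERS

print(f"overall retention: {overall_retention:.1%}")             # 62.5%
print(f"retention by arm: {arm_retention}")                      # treatment 0.53, control 0.72
print(f"retention among active users: {active_retention:.0%}")   # 88%
```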

Consider as well an intervention study that seeks to promote or reduce a behavior within marginalized, transient, or hard-to-reach populations. To make use of established resources, and potentially to promote capacity building through collaborative research, an intervention targeting increased self-care among homeless persons with serious mental illness is yoked to an organization that provides meal services. Methodological and procedural steps are taken to promote retention (which can be quite successful),39 but the meal-service organization has a documented 12-month retention rate for the average service user of about 45%, a rate that also appears to be seasonal. Open enrollment at study start is expected to map onto the naturally occurring flow of service users, so study attrition would, by the nature of service use, be expected to approach the service's own turnover of roughly 55% (the complement of its 45% retention rate). Even with a prudent study design that maintains adequate power for the on-protocol final sample, end-point attrition values will likely exceed most of the cut-offs currently used for rigor.
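A prudent design of this kind would size enrollment from the service's natural retention, in the spirit of the first design recommendation in Table 1. The sketch below rests on assumptions we state loudly: the 45% figure is read as the service's 12-month retention rate; the effect size (d = 0.5), alpha, and power are hypothetical placeholders; and the normal-approximation formula stands in for a full power analysis. It assumes nondifferential loss, so each arm's expected attrition equals the service's turnover.

```python
import math
from statistics import NormalDist

def n_per_arm(effect_size, alpha=0.05, power=0.80):
    """Per-arm n for a two-sided, two-sample comparison of means
    (normal approximation, standardized effect size d)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_beta) ** 2 / effect_size ** 2)

def enrollment_per_arm(analyzable_n, expected_retention):
    """Inflate the analyzable n by the share expected to remain service users."""
    return math.ceil(analyzable_n / expected_retention)

SERVICE_RETENTION = 0.45            # assumed 12-month service retention
EXPECTED_ATTRITION = 1 - SERVICE_RETENTION

needed = n_per_arm(0.5)             # hypothetical effect size d = 0.5
enroll = enrollment_per_arm(needed, SERVICE_RETENTION)

# Attrition limits implied by the cut-offs discussed earlier in this article.
CUTOFFS = {"20% quality mark": 0.20, "Tier I limit (30%)": 0.30, "Tier II limit (40%)": 0.40}
exceeded = [name for name, cut in CUTOFFS.items() if EXPECTED_ATTRITION > cut]

print(f"analyzable per arm: {needed}, enroll per arm: {enroll}")
print(f"expected attrition {EXPECTED_ATTRITION:.0%} exceeds: {exceeded}")
```

The study is adequately powered by design, yet its expected attrition exceeds every cut-off listed, which is the mismatch this example is meant to show.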

Although there are many appropriate ways to work with the data in these examples, defining the sample of interest to include service-use status (1) allows examination of attrition-related threats to outcomes that focuses primarily on differential attrition in the active service user groups; (2) promotes careful consideration of potential group nonequivalence, which is essential to exploring and informing subsequent decisions about how missing data should be handled and outcomes assessed; and (3) positions attrition and retention as important aspects of intervention delivery that should be specifically explored to provide context for understanding and interpreting intervention outcomes.

BALANCING THREATS

The bubble hypothesis40 likens design challenges to working an air bubble out from under a newly applied bumper sticker. The sticker is applied behavioral research, and the air bubble is the likelihood of some recognized threat to the validity of outcomes2,6,41 when working in environments that the experimenter does not tightly control. The bubble can be pushed to different areas, but the only way to eliminate it completely is to remove the entire sticker. The premise is that every behavioral study will have flaws; the question is where they lie and how large they are.

With increasing reliance on percent total attrition as a disqualifying criterion, research and research designs are likely to respond to these policy and funding requirements. Largely, such responsiveness is an important way to promote efficient use of funding on research that is rigorous and can make unique contributions. But when policy promotes smoothing one area of the sticker, where the bubble is likely to move or reappear is an important consideration. In response to recent policy- and funding-level emphasis on total retention rates, strategies that promote retention in the absolute may be widely adopted, including shorter follow-up intervals, extensive inclusion and exclusion criteria, run-in periods to ensure assignment of motivated participants, and aggressive retention strategies that would be difficult to sustain outside well-funded research. Attrition may be traded for selective representation, with the added complication of poor translation potential when extensive retention strategies are required.

For hosted intervention designs, low retention rates in the absolute may pose no threat to internal or external validity when they are well in line with the natural flow of service use for the targeted population. When a study is appropriately powered, a lower, nondifferential retention rate that matches the natural flow of service use would arguably be preferable in many ways to a high retention rate achieved through aggressive, study-supported retention strategies that are at odds with what the service typically achieves or can sustain after the funded project closes. Reporting the total number retained without contextualizing it within the natural retention or attrition flow of the hosting service can overestimate the potential reach of an intervention and its outcomes. For hosted randomized controlled trials, it is essential to position results, and attrition or retention, within the ecological context of the natural flow of service users.

RECOMMENDATIONS AND CONCLUSIONS

On the basis of recommendations for longitudinal outcome trials and the unique aspects of hosted intervention designs reviewed here, several recommendations can be considered. Table 1 lists steps and strategies for research design, implementation, analyses, and interpretation. The most basic and pressing recommendation, however, is to move away from using percent attrition, or percent retention, as an indicator of scientific rigor. Given the wealth of other relevant information available to evaluate diverse outcomes across diverse designs, interventions, and populations, attrition rate is simply a poor, and at times misleading, inclusion or exclusion criterion.

TABLE 1.

Suggestions and Recommendations for Hosted Intervention Design Trials

| Area | Recommendation |
|---|---|
| Design | In the preparation stage, the natural flow of service users for the time period of interest should be estimated and used in power analyses to determine an appropriate sample size for analyses that focus on the active service user stratum. |
| Design | Because randomized controlled trials are not always possible or preferable in certain applied environments, alternative analytic strategies should be explored, considered, adopted, and better received by funding and review boards.42 Power and sample size should be estimated in relation to the selected primary analytic strategy. |
| Design | Plan to assess all enrolled participants at least once, if possible, on variables known to influence attrition in the population of interest. This can help contextualize missing data and further inform intervention design and development. |
| Design | Randomly assign participants to conditions as late as possible to minimize dropout between random assignment and the first intervention session or assessment.38 |
| Design | Carefully consider retention strategies as potentially part of the overall environment in which intervention outcomes occur. Of particular importance is whether intervention outcomes would be expected without the retention strategies. If retention strategies are integral to keeping participants engaged in the intervention, consider including them in the intervention protocol when moving toward dissemination. In that case, however, every effort should be made to ensure that the strategies employed are realistically sustainable outside the context of well-funded research. |
| Design and methods | Consider methods for retaining participants for measurement or survey completion as separate from participation in the intervention itself. |
| Design and analyses | The handling of missing data should be specified a priori and reported transparently. In profiling outcomes, any intent-to-treat analysis that includes cases with missing data must clearly state how these data are represented in the data set. Sophisticated strategies that borrow strength from existing data through multiple imputation or estimation are preferred to less informative approaches, such as last observation carried forward. |
| Analyses | Assumptions about missing data must be assessed. Differential attrition analyses should report the relative rates of attrition between study arms and address the potential equivalence or nonequivalence of groups who (1) were retained versus attrited, (2) remained active service users over the course of the study versus those who did not, and (3) among active service users, completed versus did not complete some level of the intended study protocol. In each case, the underlying assumption about missingness (e.g., missing completely at random, missing at random, or missing not at random) should be stated clearly. |
| Analyses and interpretation | Adopt a strategy of profiling outcomes, in which several different kinds of analyses are used to address outcomes. An intent-to-treat analysis using the full sample, with appropriate representation of missing data, can address the overall impact of instituting an intervention in the hosting service. After the full sample is divided into 2 strata, one of those who discontinued their use of the hosting service during the study and one of those who remained active, a modified intent-to-treat analysis can be performed on the stratum of active service users. This modified intent-to-treat analysis, again with appropriate handling of missing observations, can address the overall impact of the intervention on active service users. Finally, an efficacy subset analysis, in which a minimum dose is identified and outcomes are assessed for those who actually received intervention exposure, is also very important in making decisions about minimum requirements for intervention efficacy (see, for example, Fraser et al.43). |
| Presentation | Increase transparency in reporting retention strategies, the assumptions underlying strategies to address missing data, the types of attrition and their relation to use of the hosting service, and the expected or natural attrition generally experienced by the hosting service and how it maps onto study results. |
| Evaluation | Identifying “effective behavioral interventions” must take into consideration the context of the intervention, in terms of the targeted population, the intervention assessed, and the full context of the study. Comprehensive and context-specific rating systems have been proposed21 and should be considered as potential alternatives. Numerous designs, including hosted intervention trials, are not likely to be well characterized by metrics such as percent attrition, and in some cases randomized controlled trials may not be appropriate.43 The vast array of sophisticated statistical modeling strategies currently available for both missing data and alternative outcome trial designs strongly suggests that rigid criteria will not keep pace with rapid developments in these areas. |

Entire texts are available for guidance on methods for evaluating attrition and addressing missing data in longitudinal research.44–46 It behooves both researchers and the agencies that manage the funding and dissemination of research to recognize that there are multiple appropriate and rigorous strategies for assessing outcomes of behavioral interventions. Allowing for flexibility in design and approach requires the systematic adoption of greater transparency in reporting results. A detailed flow of participants, along the lines recommended in the CONSORT statement9,10 or a modified version such as that presented in Figure 1, is essential. Characterizing the strata not reached is as important an area of inquiry as characterizing those who were reached by a given intervention. For hosted intervention designs, distinguishing active from nonactive service users in this characterization is similarly critical.

Policies stemming from practices that oversimplify attrition or the nuances of rigor in diverse study designs are of considerable concern. Their impact on the future direction of intervention development and deployment, by systematically favoring highly controlled and well-resourced intervention studies with reachable samples, can be substantial. Though not currently common practice, there would be tremendous value in empirically evaluating the extent to which policies and criteria presently in use produce pools of supported and recommended interventions that differ systematically from the pool of interventions not represented (e.g., in populations targeted, location of intervention delivery, length of follow-up, geographic regions where the research took place, study design, and so on).

Concerns stemming from definitions of rigor are of clear relevance in those evaluations, and the potential effect of adopting alternative strategies for inclusion could be modeled within a given content area through the examination of differential characteristics of interventions included or excluded on the basis of the new criteria. In addition to quantifying and monitoring potential bias, more complex methods for defining rigor and evaluating outcomes, such as adopting a profile of outcomes approach or other comprehensive rating systems (see, for example, Valentine and Cooper21), should be investigated and considered in the process of evaluation, dissemination, and implementation of effective behavioral interventions.
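A minimal sketch of such a check, under loudly stated assumptions: the study records below are invented placeholders, the population labels are illustrative, and the 5-point differential-attrition rule is a hypothetical alternative chosen only to contrast with a 30% total-attrition cut-off, not a recommended threshold. The function reports the composition of the included and excluded pools under each rule, which is the kind of comparison that could reveal systematic differences between them.

```python
from collections import Counter

def pool_composition(studies, include):
    """Share of each population label among studies included vs excluded by `include`."""
    pools = {"included": Counter(), "excluded": Counter()}
    for study in studies:
        pool = "included" if include(study) else "excluded"
        pools[pool][study["population"]] += 1
    return {
        pool: {label: n / sum(counts.values()) for label, n in counts.items()}
        for pool, counts in pools.items()
    }

# Hypothetical study records for illustration only.
studies = [
    {"population": "hard-to-reach", "attrition": 0.45, "differential": 0.02},
    {"population": "hard-to-reach", "attrition": 0.35, "differential": 0.03},
    {"population": "clinic-based", "attrition": 0.15, "differential": 0.12},
    {"population": "clinic-based", "attrition": 0.25, "differential": 0.01},
]

total_cutoff = pool_composition(studies, lambda s: s["attrition"] <= 0.30)
differential_rule = pool_composition(studies, lambda s: abs(s["differential"]) <= 0.05)
print("total-attrition cut-off:", total_cutoff)
print("differential-attrition rule:", differential_rule)
```

In this toy pool, the total-attrition cut-off excludes every study of the hard-to-reach population, whereas the differential rule retains them and instead excludes the study with lopsided loss between arms.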

Acknowledgements

Sincere thanks to Ann O'Connell and Anne Black for their review of early versions of this article.

References

- 1. Alexander P. The differential effects of abuse characteristics and attachment in the prediction of long-term effects of sexual abuse. J Interpers Violence 1993;8:346–362.

- 2. Cook TD, Campbell DT. Quasi-experimentation: Design and Analysis Issues for Field Settings. New York, NY: Houghton-Mifflin; 1979.

- 3. Goodman J, Blum T. Assessing the non-random sampling effects of subject attrition in longitudinal research. J Manage 1996;22:627–652.

- 4. Little RJA. Modeling the drop-out mechanism in repeated-measures studies. J Am Stat Assoc 1995;90:1112–1121.

- 5. Miller R, Wright D. Detecting and correcting attrition bias in longitudinal family research. J Marriage Fam 1995;57:921–946.

- 6. Shadish WR, Cook TD, Campbell DT. Experimental and Quasi-experimental Designs for Generalized Causal Inference. New York, NY: Houghton-Mifflin; 2002.

- 7. Shadish WR, Hu X, Glaser RR, Kownacki R, Wong S. A method for exploring the effects of attrition in randomized experiments with dichotomous outcomes. Psychol Methods 1998;3:3–22.

- 8. Valentine JC, McHugh CM. The effects of attrition on baseline comparability in randomized experiments in education: a meta-analysis. Psychol Methods 2007;12:268–282.

- 9. Altman DG, Schulz KF, Moher D, et al. The revised CONSORT statement for reporting randomized trials: explanation and elaboration. Ann Intern Med 2001;134:663–694.

- 10. Moher D, Schulz KF, Altman DG. The CONSORT statement: revised recommendations for improving the quality of reports of parallel-group randomised trials. Lancet 2001;357:1191–1194.

- 11. Little RJA, Rubin DB. Causal effects in clinical and epidemiological studies via potential outcomes: concepts and analytical approaches. Annu Rev Public Health 2000;21:121–145.

- 12. Little R, Yau L. Intent-to-treat analysis for longitudinal studies with drop-outs. Biometrics 1996;52:1324–1333.

- 13. Marcellus L. Are we missing anything? Pursuing research on attrition. Can J Nurs Res 2004;36:82–98.

- 14. Ahern K, Le Brocque R. Methodological issues in the effects of attrition: simple solutions for social scientists. Field Methods 2005;17:53–69.

- 15. Nigg JT, Quamma JP, Greenberg MT, Kusche CA. A two-year longitudinal study of neuropsychological and cognitive performance in relation to behavioral problems and competencies in elementary school children. J Abnorm Child Psychol 1999;27:51–57.

- 16. Amico KR, Harman JJ, O'Grady MA. Attrition and related trends in scientific rigor: a score card for ART adherence intervention research and recommendations for future directions. Curr HIV/AIDS Rep 2008;5:172–185.

- 17. Siddiqui O, Flay B, Hu F. Factors affecting attrition in a longitudinal smoking prevention study. Prev Med 1996;25:554–560.

- 18. Lyles CM, Kay LS, Crepaz N, et al. Best-evidence interventions: findings from a systematic review of HIV behavioral interventions for US populations at high risk, 2000–2004. Am J Public Health 2007;97:133–143.

- 19. Centers for Disease Control and Prevention. HIV Prevention Strategic Plan: Extended Through 2010. 2008. Available at: http://www.cdc.gov/hiv/resources/reports/psp/index.htm. Accessed January 1, 2008.

- 20. Whitlock EP, Polen MR, Green CA, Orleans CT, Klein J. Behavioral counseling interventions in primary care to reduce risky/harmful alcohol use by adults: a summary of the evidence for the US Preventive Services Task Force. Ann Intern Med 2004;140:557–568.

- 21. Valentine JC, Cooper H. A systematic and transparent approach for assessing the methodological quality of intervention effectiveness research: the Study Design and Implementation Assessment Device (Study DIAD). Psychol Methods 2008;13:130–149.

- 22. Fisher JD, Fisher WA, Cornman DH, Amico KR, Bryan A, Friedland GH. Clinician-delivered intervention during routine clinical care reduces unprotected sexual behavior among HIV-infected patients. J Acquir Immune Defic Syndr 2006;41:44–52.

- 23. Gucciardi E, DeMelo M, Offenheim A, Stewart DE. Factors contributing to attrition behavior in diabetes self-management programs: a mixed method approach. BioMed Central Health Serv Res 2005;8:33. Available at: http://www.biomedcentral.com/bmchealthservres/8?page=2. Accessed March 1, 2008.

- 24. Lock CA, Kaner EFS. Implementation of brief alcohol interventions by nurses in primary care: do non-clinical factors influence practice? Fam Pract 2004;21:270–275.

- 25. Chamberlain P, Leve LD, DeGarmo DS. Multi-dimensional treatment foster care for girls in the juvenile justice system: 2-year follow-up of a randomized clinical trial. J Consult Clin Psychol 2007;75:187–193.

- 26. D'Amico JB, Nelson J. Nursing care management at a shelter-based clinic: an innovative model for care. Prof Case Manag 2008;13:26–36.

- 27. Kubik MY, Story M, Davey C. Obesity prevention in schools: current role and future practice of school nurses. Prev Med 2007;44:504–507.

- 28. Oldroyd J, Burns C, Lucas P, Haikerwal A, Waters E. The effectiveness of nutrition interventions on dietary outcomes by relative social disadvantage: a systematic review. J Epidemiol Community Health 2008;62:573–579.

- 29. Ornelas LA, Silverstein DN, Tan S. Effectively addressing mental health issues in permanency-focused child welfare practice. Child Welfare 2007;86:93–112.

- 30. Savage CL, Lindsell CJ, Gillespie GL, Lee RJ, Corbin A. Improving health status of homeless patients at a nurse-managed clinic in the Midwest USA. Health Soc Care Community 2008;16:469–475.

- 31. Slesnick N, Kang MJ. The impact of an integrated treatment on HIV risk behavior among homeless youth: a randomized controlled trial. J Behav Med 2008;31:45–59.

- 32. Angrist JD, Imbens GW, Rubin DB. Identification of causal effects using instrumental variables. J Am Stat Assoc 1996;91:444–455.

- 33. Dalal RP, Macphail C, Mqhayi M, et al. Characteristics and outcomes of adult patients lost to follow-up at an antiretroviral treatment clinic in Johannesburg, South Africa. J Acquir Immune Defic Syndr 2008;47:101–107.

- 34. Jo B. Statistical power in randomized intervention studies with noncompliance. Psychol Methods 2002;7:178–193.

- 35. Delucchi K, Bostrom A. Small sample longitudinal clinical trials with missing data: a comparison of analytic methods. Psychol Methods 1999;4:158–172.

- 36. Ellenberg JH. Intent-to-treat analysis versus as-treated analysis. Drug Inf J 1996;30:535–544.

- 37. Unnebrink K, Windeler J. Intention-to-treat: methods for dealing with missing values in clinical trials of progressively deteriorating diseases. Stat Med 2001;20:3931–3946.

- 38. McMahon AD. Study control. Violators, inclusion criteria and defining explanatory and pragmatic trials. Stat Med 2002;21:1365–1376.

- 39. McKenzie M, Tulsky JP, Long HI, Chesney M, Moss A. Tracking and follow-up of marginalized populations: a review. J Health Care Poor Underserved 1999;10:409–429.

- 40. Gelso CJ. Research in counseling: methodological and professional issues. Couns Psychol 1979;8:7–35.

- 41. Campbell DT, Stanley JC. Experimental and quasi-experimental designs for research on teaching. In: Gage NL, ed. Handbook of Research on Teaching. Chicago, IL: Rand McNally; 1963.

- 42. West SG, Duan N, Pequegnat W, et al. Alternatives to the randomized controlled trial. Am J Public Health 2008;98:1359–1366.

- 43. Fraser MW, Terzian MA, Rose RA, Day SH. Efficacy versus intent to treat analyses: how much intervention is necessary for a “desirable” outcome? Paper presented at: Society for Social Work and Research; January 13–16, 2005; Miami, FL. Available at: http://sswr.confex.com/sswr/2005/techprogram/P2579.HTM. Accessed May 1, 2008.

- 44. Allison P. Missing Data (Quantitative Applications in the Social Sciences). Thousand Oaks, CA: Sage Publications; 2002.

- 45. Daniels MJ, Hogan JW. Missing Data in Longitudinal Studies: Strategies for Bayesian Modeling and Sensitivity Analysis (Monographs on Statistics and Applied Probability). Boca Raton, FL: Taylor & Francis; 2008.

- 46. Little RJA, Rubin DB. Statistical Analysis With Missing Data. 2nd ed. Hoboken, NJ: John Wiley & Sons; 2002.