Abstract

The value of a reinforcer decreases as the time until its receipt increases, a phenomenon referred to as delay discounting. Although delay discounting of non-drug reinforcers has been studied extensively in a number of species, our knowledge of discounting in non-human primates is limited. In the present study, rhesus monkeys were allowed to choose in discrete trials between 0.05% saccharin delivered in different amounts and with different delays. Indifference points were calculated and discounting functions established. Discounting functions for saccharin were well described by a hyperbolic function. Moreover, the discounting rates for saccharin in all six monkeys were comparable to those of other non-human animals responding for non-drug reinforcers. Also consistent with other studies of non-human animals, changing the amount of a saccharin reinforcer available after a 10-s delay did not affect its relative subjective value. Discounting functions for saccharin were steeper than we found in a previous study with cocaine, raising the possibility that drugs such as cocaine may be discounted less steeply than non-drug reinforcers.

Keywords: delay discounting, choice, cocaine, saccharin, rhesus monkey

Introduction

Delay discounting refers to the decrease in the value of a reinforcer as the time until its receipt increases. Delay discounting is often studied by holding the amount of a delayed reinforcer constant while adjusting the amount of a smaller, more immediate reinforcer until choice between the two is approximately equal (Green, Myerson, Holt, Slevin, and Estle, 2004; Richards, Mitchell, de Wit, and Seiden, 1997). The amount of the immediate reinforcer at this indifference point is thought to reflect the subjective value of the delayed reinforcer. When the subjective value of the reinforcer is plotted as a function of the delay until its receipt, the resulting relation is well described by a hyperbolic discounting function (Green and Myerson, 2004; Mazur, 1987).
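As a rough illustration of the hyperbolic relation described above, the following sketch computes the subjective value of a delayed reinforcer under the simple hyperbola V = A/(1 + kD). The function name and the discounting rate used here are hypothetical and chosen only for illustration; they are not estimates from any study.

```python
def hyperbolic_value(amount, k, delay):
    """Subjective value of `amount` received after `delay`.

    `k` is the discounting rate, in units of 1 / (unit of delay).
    """
    return amount / (1.0 + k * delay)


# Hypothetical discounting rate (per second), for illustration only.
k_example = 0.1

for delay_s in (0, 10, 30, 60):
    value = hyperbolic_value(4.0, k_example, delay_s)
    print(f"delay = {delay_s:2d} s -> subjective value = {value:.3f}")
```

With this assumed rate, the value of a 4.0-unit reinforcer is halved at a delay of 1/k = 10 s, which is the defining property of k in the simple hyperbola.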

Delay discounting has been observed in both human and non-human animals using a variety of reinforcers (e.g., Bickel and Marsch, 2001; Green et al., 2004; Raineri and Rachlin, 1993; for review, see Green and Myerson, 2004). It should be noted, however, that nearly all previous studies in non-humans have used rodents or birds. Recently, Woolverton, Myerson, and Green (2007) studied delay discounting in rhesus monkeys using cocaine as a reinforcer. Discounting of delayed cocaine was well described by a hyperbolic function, although it was noted that cocaine was discounted at lower rates than those reported in the literature for non-primate animals and delayed non-drug reinforcers (Green et al., 2004; Mazur, 2000; Richards et al., 1997).

The present study was designed to assess discounting of a non-drug reinforcer, saccharin, in monkeys. Six rhesus monkeys, four of which had participated in the Woolverton et al. (2007) study, were allowed to choose in discrete trials between different amounts of 0.05% saccharin delivered with different delays. The magnitude of the reinforcer was adjusted by varying the volume of the saccharin solution. In addition, we compared the degree to which the monkeys discounted 2.0 and 4.0 ml of saccharin solution delayed by 10 s. At issue in this portion of the study was whether the monkeys, like humans, would discount a larger reinforcer less steeply than a smaller reinforcer.

Materials and methods

Subjects

Six male rhesus monkeys (Macaca mulatta) weighing between 9.3 and 11.5 kg served as subjects. Four of the monkeys (RO105, AV22, M439, and DCJ) had a history of cocaine self-administration under choice conditions with and without delays (Woolverton et al., 2007). The other two monkeys (BDH and X4F) had histories of drug self-administration under progressive-ratio schedules with cocaine, desipramine, and JZ-III-84 as reinforcers (Wee et al., 2006). Approximately 4 h before an experimental session, each monkey was fed a sufficient amount of monkey chow (Teklad 25% Monkey Diet; Harlan/Teklad, Madison, WI) to maintain stable body weight. Fresh fruit (daily) and a chewable vitamin tablet (3 days per week) were also given at this time. Water was available continuously. Lighting was cycled to maintain 16 h of light and 8 h of darkness, with lights on at 06:00 a.m.

Apparatus

Each monkey was fitted with either a stainless-steel restraint harness (E&H Engineering, Chicago, IL) or a mesh jacket (Lomir Biomedical, Malone, NY) and tether that attached to the rear of a 1.0-m³ experimental cubicle (Plas-Labs, Lansing, MI) in which the monkey lived throughout the experiment. The apparatus has been described in detail previously (Woolverton et al., 2007). Two response levers (PRL-001; BRS/LVE, Beltsville, MD) with associated stimulus lights were mounted in steel boxes on the inside of the transparent front of each cubicle. A sipper tube through which a saccharin solution was delivered was mounted 10 cm above each steel lever-box. Saccharin (Sigma) was prepared as a 0.05% solution in tap water for all monkeys except M439, who required a higher concentration (0.10%) to maintain responding. The saccharin solution was delivered over 10 s through plastic tubing by variable-speed injection pumps (7540X; Cole-Parmer Co., Chicago, IL) located outside the cubicle. Volumes were varied by changing pump speed. Programming and recording of experimental events were accomplished using a Macintosh computer and associated interfaces.

Procedure

The procedure was similar to the one used by Woolverton et al. (2007) to study cocaine. Briefly, daily sessions began at 11:00 a.m. and were signaled by illumination of white lever lights. Each session consisted of 24 trials. The first 4 trials (2 for each lever) were forced-choice (sampling) trials in which white lights over one lever were illuminated, followed by 20 free-choice trials in which white lights over both levers were illuminated. For both types of trials, a single lever press extinguished the white lights, illuminated the red lights, and initiated a 10-min inter-trial interval (ITI) to the beginning of the next trial. If the response was on the immediate lever, saccharin was immediately delivered and the red lights were extinguished at the end of saccharin delivery. If the response was on the delay lever, there was a delay to saccharin delivery during which the red lights over that lever flashed once per second until saccharin delivery. The delay and the ITI ran simultaneously, ensuring that reinforcement rate did not covary with delay to reinforcement. The delayed reinforcer was a 4.0 ml bolus of saccharin solution, presented after a delay that ranged from 0 (no delay) to 60 s across conditions. The immediate reinforcer was a saccharin solution whose volume ranged from 0.25 to 5.6 ml across conditions. Each experimental condition was in effect for at least three consecutive sessions and until choice was stable. Stability was defined as (1) the number of choices of the immediate reinforcer on free-choice trials falling within ±2 of the running three-session mean for three consecutive sessions, and (2) no upward or downward trend. Once stability was achieved, the lever-reinforcer pairing was reversed, and stable choice was re-determined. Delay and immediate amount conditions were studied in an irregular order both within and across monkeys.
After studying 4.0 ml of delayed saccharin, the volume was decreased to 2.0 ml with a 10-s delay and the effects of varying the immediate volume were determined in the same manner in 5 monkeys (responding was not maintained by this volume in M439). On average, conditions lasted 12.4 sessions (range 6–35).
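The two-part stability criterion can be sketched as a simple check on per-session counts. This is only one possible reading of the criterion: it assumes that the "running three-session mean" is the mean of the three most recent sessions and that a "trend" means three strictly rising or strictly falling counts. The function name and the example counts are hypothetical.

```python
def is_stable(immediate_choices, tolerance=2):
    """Check the three most recent sessions against the stability criterion.

    immediate_choices: per-session counts of immediate-lever choices on the
    20 free-choice trials, oldest session first.
    """
    if len(immediate_choices) < 3:
        return False  # at least three consecutive sessions are required

    last3 = immediate_choices[-3:]
    mean3 = sum(last3) / 3.0

    # Part 1: every count lies within +/- tolerance of the three-session mean.
    within_band = all(abs(count - mean3) <= tolerance for count in last3)

    # Part 2 (assumed definition): no strictly monotonic run across the three.
    rising = last3[0] < last3[1] < last3[2]
    falling = last3[0] > last3[1] > last3[2]

    return within_band and not (rising or falling)


# Hypothetical session counts, for illustration only.
print(is_stable([3, 12, 11, 12]))  # last three sessions are close and trendless
print(is_stable([10, 11, 12]))     # within the band but steadily rising
```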

Mean data from the last three sessions of the original and reversal conditions were used in the data analysis. For each monkey, a volume-response function was determined at each delay, and then the indifference point (i.e., the volume of the immediate reinforcer predicted to result in 50% choice) was calculated based on the best-fitting logistic function. Indifference points for the 4.0 ml saccharin reinforcer at the different delays were fit by the hyperbolic discounting function $V = A/(1 + kD)^{s}$ (Equation 1), where V represents the subjective value of the delayed reinforcer, A is the amount of the delayed reinforcer, k is a parameter that reflects the rate of discounting, and D is the amount of time until receipt of the delayed reinforcer. The parameter s reflects nonlinear scaling of amount and time, and when it is equal to 1.0 (as set in the current analysis), the equation reduces to a simple hyperbola. To assess the effect of reinforcer magnitude, the group mean indifference points for the delayed 2.0 and 4.0 ml saccharin reinforcers delivered with a 10-s delay were normalized as relative subjective value, i.e., the amount of immediate reinforcer equal in value to the delayed reinforcer as a proportion of the amount of the delayed reinforcer.
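The analysis steps described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the fitting procedure actually used: the logistic function is fitted here by ordinary least squares on logit-transformed proportions in log volume (a swapped-in technique), and k is estimated by a golden-section search that assumes the squared error has a single minimum on the search interval. All function names, the search bounds, and the data are hypothetical.

```python
import math


def indifference_point(volumes, pct_immediate, eps=0.025):
    """Volume predicted to give 50% choice, from a logistic in log volume.

    Assumption: least squares on logits, with proportions clipped to
    [eps, 1 - eps] so that 0% and 100% choice remain finite.
    """
    xs = [math.log(v) for v in volumes]
    ys = []
    for pct in pct_immediate:
        p = min(max(pct / 100.0, eps), 1.0 - eps)
        ys.append(math.log(p / (1.0 - p)))
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = my - slope * mx
    return math.exp(-intercept / slope)  # the logit is 0 at 50% choice


def sse(k, delays, values, amount):
    """Sum of squared errors for V = A / (1 + k D), i.e., Equation 1 with s = 1."""
    return sum((v - amount / (1.0 + k * d)) ** 2 for d, v in zip(delays, values))


def fit_k(delays, values, amount=4.0, lo=0.0, hi=5.0, iters=200):
    """Least-squares k via golden-section search on [lo, hi] (assumed bounds)."""
    g = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    for _ in range(iters):
        c = b - g * (b - a)
        d = a + g * (b - a)
        if sse(c, delays, values, amount) < sse(d, delays, values, amount):
            b = d  # the minimum lies in [a, d]
        else:
            a = c  # the minimum lies in [c, b]
    return (a + b) / 2.0


def r_squared(k, delays, values, amount=4.0):
    """Proportion of variance accounted for, 1 - SS_res / SS_tot."""
    mean_v = sum(values) / len(values)
    ss_tot = sum((v - mean_v) ** 2 for v in values)
    return 1.0 - sse(k, delays, values, amount) / ss_tot


# Hypothetical choice data at one delay (not values from the study).
ip = indifference_point([0.5, 1.0, 2.0], [20.0, 50.0, 80.0])

# Hypothetical indifference points generated from k = 0.1 per second.
delays = [0.0, 10.0, 30.0, 60.0]
values = [4.0, 2.0, 1.0, 4.0 / 7.0]
k_hat = fit_k(delays, values)
print(round(ip, 3), round(k_hat, 3), round(r_squared(k_hat, delays, values), 3))
```

Because A is fixed at the delayed amount rather than estimated, the fitted curve is forced through A at zero delay, so R² computed this way can be zero or even negative when observed values lie well away from that constrained curve.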

Results

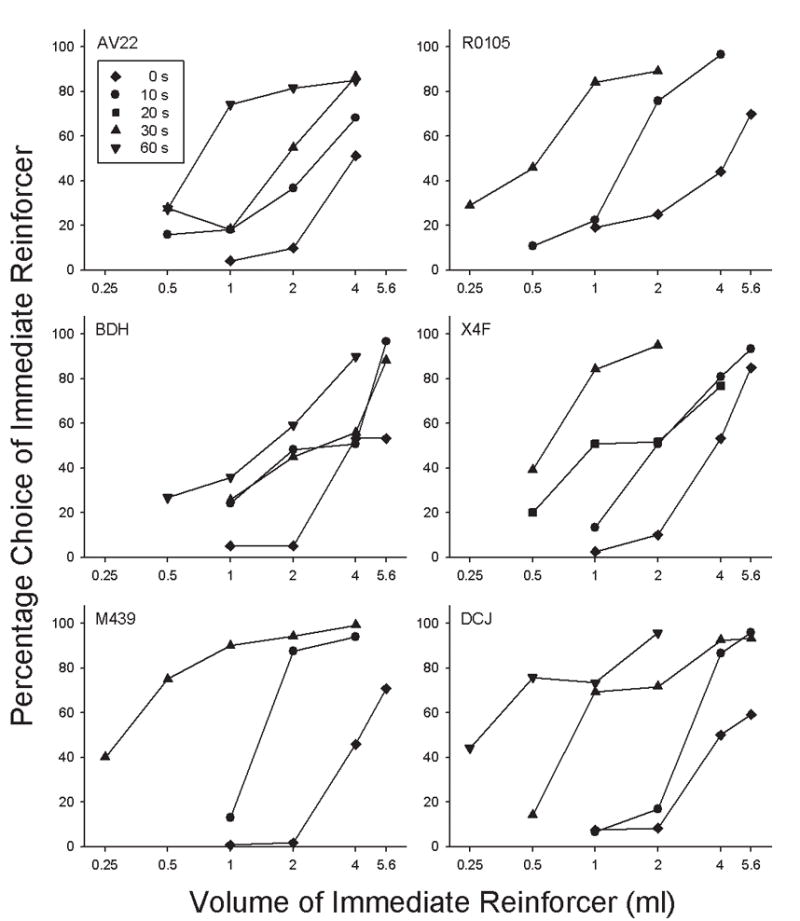

Choice of the immediate reinforcer increased as a function of its volume in all monkeys at every delay (Figure 1). Moreover, the volume-response relation for saccharin (i.e., percentage choice of the immediate reinforcer as a function of its volume) shifted systematically to the left as the delay to the 4.0 ml reinforcer increased. Points that approximated 50% choice often reflected a position preference that was maintained with a reversal.

Figure 1.

Percentage choice of an immediate saccharin reinforcer as a function of its amount. The volume of the alternative was always 4.0 ml, delivered after the delay indicated by the symbol (see legend). Note that volume is logarithmically scaled.

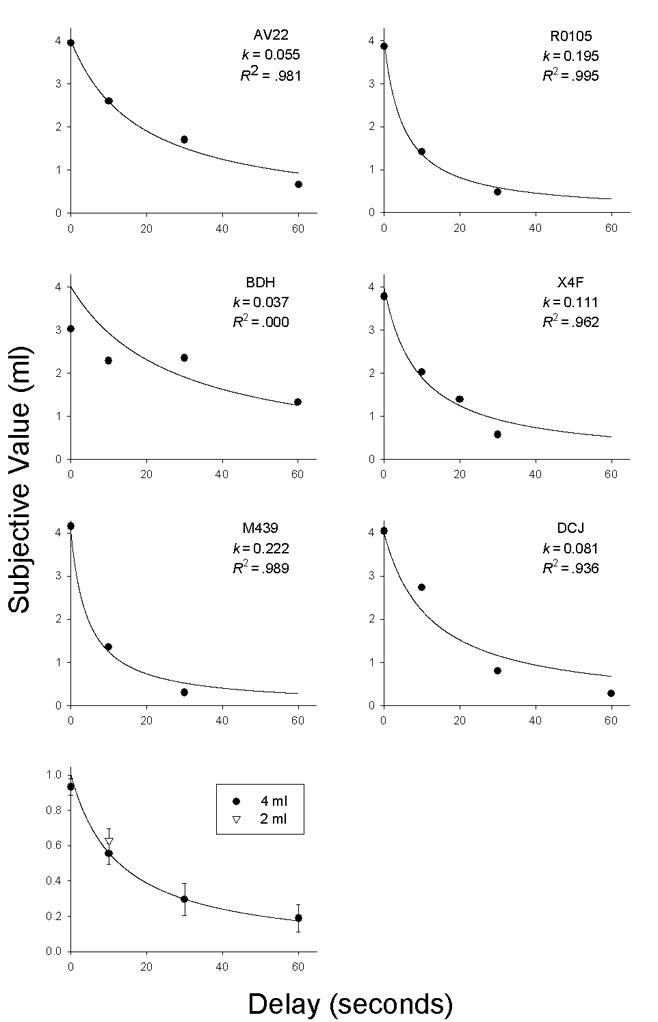

For all of the monkeys except BDH¹, the hyperbolic function provided a good fit to the data from the 4.0 ml condition (Figure 2). The median R² value for these five animals was 0.981 (range 0.936–0.995) and the median k value was 0.111 (range 0.055–0.222). The change in reinforcer magnitude from 4.0 to 2.0 ml had no systematic effect on its relative subjective value (Figure 2, bottom). In fact, only two of five monkeys (BDH and DCJ) showed behavior consistent with the magnitude effect observed in humans.

Discussion

In all six of the monkeys in the current study, increasing the delay to a saccharin reinforcer systematically decreased its value, and (with one exception) these changes in the subjective value of saccharin were well described by a hyperbolic discounting function. These results are consistent with previous findings regarding delay discounting in non-humans and extend the generality of the phenomenon to rhesus monkeys with responding maintained by saccharin. When the relative subjective values of delayed 2.0 and 4.0 ml saccharin reinforcers were compared at one delay (10 s), there was little evidence of a difference in value, raising the possibility that monkeys, like other non-humans (Green et al., 2004; Richards et al., 1997), may not show a magnitude effect similar to that observed in humans (e.g., Green, Myerson, and McFadden, 1997; Kirby, 1997; Raineri and Rachlin, 1993). However, additional research is clearly needed before conclusions can be drawn about the effect of changing reinforcer magnitude on discounting rates in non-human primates.

Figure 2.

Subjective value of a saccharin reinforcer as a function of delay to its delivery. In the six upper panels, the solid curves represent a hyperbolic discounting function (i.e., Equation 1 with A = 4.0 and s = 1.0) fit to the data from each monkey, and the corresponding estimates of the k parameter and the proportion of variance accounted for (R²) are shown. The bottom panel shows the mean relative subjective values (i.e., subjective value as a proportion of actual amount) for delayed 2.0- and 4.0-ml saccharin reinforcers for all monkeys with data at the indicated points. Vertical lines are s.e.m.

In a previous study with monkeys, Woolverton et al. (2007) reported that discounting rates for cocaine were low relative to those in previous studies with non-drug reinforcers in non-humans. Four of the monkeys in the current study also were in the Woolverton et al. study, and in each case, discounting of saccharin was steeper than discounting of cocaine, as indicated by the k values. Because these monkeys were tested with cocaine before saccharin, the possibility of an order effect precludes firm conclusions. Nevertheless, these findings raise the possibility that the differences in discounting were due to the type of reinforcer. In contrast, human drug abusers have been shown to discount hypothetical drug rewards more steeply than hypothetical monetary rewards (e.g., Coffey, Gudleski, Saladin, and Brady, 2003; Madden, Petry, Badger, and Bickel, 1997), but humans also discount directly consumable rewards more steeply than monetary rewards (Estle, Green, Myerson, and Holt, 2007; Odum and Rainaud, 2003). These apparent differences between reinforcer types clearly merit additional research. This comparison also raises the issue of whether the steeper discounting of saccharin, relative to cocaine, was due to a difference in the value of these reinforcers. As already noted, humans discount reinforcers of different value at different rates. However, the absence of magnitude effects in previous research with non-humans (Green et al., 2004; Richards et al., 1997), as well as the lack of a magnitude effect in the present study, suggests that the issue may be moot here. Even so, data from the present study were limited to a single magnitude manipulation and must be considered preliminary. Firm conclusions on this issue also await further study.

It is often said that the tendency to choose immediate, smaller rewards over larger, delayed ones represents a form of impulsive behavior (for a critical discussion of this issue, see Green and Myerson, in press), and drug abuse is usually assumed to be impulsive in nature. However, under some circumstances drug taking may be seen as having characteristics of self-control rather than impulsivity in that it often involves planning and complex, organized behavior directed toward future drug consumption (Herrnstein and Prelec, 1992; Heyman, 1996). The present results, taken together with those of Woolverton et al. (2007), raise the possibility that monkeys show greater self-control when choices involve delayed drug reinforcers than when they involve non-drug reinforcers. It will be interesting to address this question more directly in future experiments.

Acknowledgments

This research was supported partially by National Institutes of Health Grant DA08731 to William L. Woolverton, the Drug Abuse Research and Development Fund at the University of Mississippi Medical Center and by the Robert M. Hearin Foundation. Preparation of this article also was supported by National Institutes of Health Grant MH055308 to Leonard Green and Joel Myerson. We gratefully acknowledge the technical support of Lindsey Halley.

Footnotes

¹ The R² for the fit of Equation 1 to the data from Monkey BDH was zero, indicating that the mean of BDH’s subjective values, averaged across delay conditions, accounted for as much of the variance in his choice behavior as did Equation 1, even though subjective value decreased systematically as a function of delay (r = −.93).


References

- Bickel WK, Marsch LA. Toward a behavioral economic understanding of drug dependence: Delay discounting processes. Addiction. 2001;96:73–86. doi: 10.1046/j.1360-0443.2001.961736.x.

- Coffey SF, Gudleski GD, Saladin ME, Brady KT. Impulsivity and rapid discounting of delayed hypothetical rewards in cocaine-dependent individuals. Exp Clin Psychopharm. 2003;11:18–25. doi: 10.1037//1064-1297.11.1.18.

- Estle SJ, Green L, Myerson J, Holt DD. Discounting of monetary and directly consumable rewards. Psychol Sci. 2007;18:58–63. doi: 10.1111/j.1467-9280.2007.01849.x.

- Green L, Myerson J. A discounting framework for choice with delayed and probabilistic rewards. Psychol Bull. 2004;130:769–792. doi: 10.1037/0033-2909.130.5.769.

- Green L, Myerson J. Experimental and correlational analyses of delay and probability discounting. In: Madden GJ, Bickel WK, editors. Impulsivity: Theory, science, and neuroscience of discounting. American Psychological Association; Washington, DC: in press.

- Green L, Myerson J, Holt DD, Slevin JR, Estle SJ. Discounting of delayed food rewards in pigeons and rats: Is there a magnitude effect? J Exp Anal Behav. 2004;81:39–50. doi: 10.1901/jeab.2004.81-39.

- Green L, Myerson J, McFadden E. Rate of temporal discounting decreases with amount of reward. Mem Cog. 1997;25:715–723. doi: 10.3758/bf03211314.

- Herrnstein RJ, Prelec D. A theory of addiction. In: Loewenstein G, Elster J, editors. Choice over time. Russell Sage Press; New York: 1992.

- Heyman GM. Resolving the contradictions of addiction. Behav Brain Sci. 1996;19:561–574.

- Kirby KN. Bidding on the future: Evidence against normative discounting of delayed rewards. J Exp Psychol: Gen. 1997;126:54–70.

- Madden GJ, Petry NM, Badger GJ, Bickel WK. Impulsive and self-control choices in opioid-dependent patients and non-drug-using control participants: Drug and monetary rewards. Exp Clin Psychopharm. 1997;5:256–262. doi: 10.1037//1064-1297.5.3.256.

- Mazur JE. An adjusting procedure for studying delayed reinforcement. In: Commons ML, Mazur JE, Nevin JA, Rachlin H, editors. Quantitative analyses of behavior: Vol. 5. The effect of delay and of intervening events on reinforcement value. Erlbaum; Hillsdale, NJ: 1987. pp. 55–73.

- Mazur JE. Tradeoffs among delay, rate, and amount of reinforcement. Behav Process. 2000;49:1–10. doi: 10.1016/s0376-6357(00)00070-x.

- Odum AL, Rainaud CP. Discounting of delayed hypothetical money, alcohol, and food. Behav Process. 2003;64:305–313. doi: 10.1016/s0376-6357(03)00145-1.

- Raineri A, Rachlin H. The effect of temporal constraints on the value of money and other commodities. J Behav Dec Making. 1993;6:77–94.

- Richards JB, Mitchell SH, de Wit H, Seiden LS. Determination of discount functions in rats with an adjusting-amount procedure. J Exp Anal Behav. 1997;67:353–366. doi: 10.1901/jeab.1997.67-353.

- Wee S, Wang Z, He R, Zhou J, Kozikowski AP, Woolverton WL. Role of the increased noradrenergic neurotransmission in drug self-administration. Drug Alc Depend. 2006;82:151–157. doi: 10.1016/j.drugalcdep.2005.09.002.

- Woolverton WL, Myerson J, Green L. Delay discounting of cocaine by rhesus monkeys. Exp Clin Psychopharm. 2007;15:238–244. doi: 10.1037/1064-1297.15.3.238.