Abstract

Not all of what is experienced is remembered later. Behavioral evidence suggests that the manner in which an event is processed influences which aspects of the event will later be remembered. The present experiment investigated the neural correlates of “selective encoding,” or the mechanisms that support the encoding of some elements of an event in preference to others. Event-related fMRI data were acquired while volunteers selectively attended to one of two different contextual features of study items (color or location). A surprise memory test for the items and both contextual features was subsequently administered to determine the influence of selective attention on the neural correlates of contextual encoding. Activity in several cortical regions indexed later memory success selectively for color or location information, and this encoding-related activity was enhanced by selective attention to the relevant feature. Critically, a region in the hippocampus responded selectively to attended source information (whether color or location), demonstrating encoding-related activity for attended but not for nonattended source features. Together, the findings suggest that selective attention modulates the magnitude of activity in cortical regions engaged by different aspects of an event, and hippocampal encoding mechanisms seem to be sensitive to this modulation. Thus, the information that is encoded into a memory representation is biased by selective attention, and this bias is mediated by cortical–hippocampal interactions.

Introduction

Much of what we experience as we interact with the world cannot be explicitly remembered later. To date, the processes determining which aspects of an event will later be remembered remain to be elucidated. In the present study we ask what determines the subset of information contained in an event that is successfully encoded into memory.

One possibility is that everything that is experienced is encoded. According to one proposal, for example, the hippocampus obligatorily encodes all consciously experienced information (Moscovitch, 1992). Because only a subset of this information can usually be retrieved later, this proposal implies that processes determining what is remembered operate after encoding. For instance, encoded information may degrade over time (storage failure) or may be incompletely accessed (retrieval failure).

The information that is later remembered may also be determined by processes occurring as an event is experienced, that is, at encoding. Considerable evidence indicates that different aspects of an event are processed in parallel by distinct information processing streams (Livingstone and Hubel, 1988; Felleman and Van Essen, 1991), and it has been proposed that these different classes of information are incorporated into an episodic memory when the hippocampus and adjacent medial temporal lobe (MTL) cortex encode the patterns of cortical activity in the corresponding processing stream (Eichenbaum, 1992). This proposal raises the question of whether there are any mechanisms that control which processing streams gain access to MTL. In particular, if cortical processing is biased toward certain aspects of an event rather than others, does this result in the MTL preferentially encoding the activity representing this subset of information? The aim of the present study was to search for evidence of such a mechanism.

Insight into potential mechanisms by which information could be selected or “biased” for encoding comes from the attention literature. When particular aspects of an event are selectively attended, activity in cortical regions engaged to process the attended information is enhanced (Corbetta et al., 1990). Behavioral studies demonstrate that attention influences the strength and content of memory (Chun and Turk-Browne, 2007), but the neural mechanisms underlying such effects remain unclear. One candidate mechanism is that attentionally enhanced cortical activity is more likely to be encoded. Indirect support for this idea is provided by a recent demonstration that brain regions previously associated with online processing of different features (color, location, or their conjoint processing) showed greater activity when those features were later remembered rather than forgotten (“subsequent memory effects”) (Uncapher et al., 2006). Without an explicit manipulation of attention, however, these findings do not directly link attentional and mnemonic processing.

To investigate the intersection between attention and memory formation, here we explicitly manipulate attention to item features (color and location) in a subsequent memory design. We predicted that feature-specific cortical subsequent memory effects would be selectively enhanced by feature-specific attention. Critically, to the extent that MTL encoding mechanisms are sensitive to the magnitude of cortical response elicited by these features, MTL subsequent memory effects should be enhanced for whichever feature received more attention.

Materials and Methods

Participants.

Nineteen volunteers [nine female; age range: 18–26 years, mean = 21.26 (SD = 3.6)] consented to participate in the study. All volunteers reported themselves to be in good general health, to be right handed, to have no history of neurological disease or other contraindications for MRI, and to have learned English as their first language. Volunteers self-reported no history of color blindness and were tested for color discrimination before participating in the experiment. Volunteers were recruited from the University of California at Irvine (UCI) community and remunerated for their participation, in accordance with the human subjects procedures approved by the Institutional Review Board of UCI. One volunteer's data were excluded because of inadequate study performance (>2 SDs below the sample mean); two volunteers' data because of inadequate memory performance (>2 SDs below the sample mean for recognition accuracy for either attention condition), and one volunteer's data because of excessive movement (>4° rotational movement).

Stimulus materials.

Critical stimuli were 440 colored pictures of common objects. Stimuli were drawn from the set used by Smith et al. (2004), supplemented with stimuli drawn from the Amsterdam Library of Object Images (Geusebroek et al., 2005). Items were classified according to real-world size (whether the object would fit inside a shoebox) and animacy, such that four lists were created (objects that were smaller than a shoebox and animate, smaller than a shoebox and inanimate, larger than a shoebox and animate, or larger than a shoebox and inanimate). Two study lists of 148 items each were created from these lists, with two additional items serving as buffers. Stimuli were pseudorandomized such that each item type was equivalently distributed among each spatial position and color (see below, Procedure). From the remaining set, 116 items were used as new items and 2 as buffers for the source memory test list. Stimulus ordering was rerandomized across study and test lists for each subject. A separate pool of 26 items was used to create two sets of practice study lists (one for the attend-color condition and another for the attend-location condition; see below, Procedure) and one practice test list (with 24 items used as practice study items and the remaining 2 as practice new items).

Procedure.

The experiment consisted of two scanned incidental study sessions and one nonscanned test session. Instructions and practice for the study tasks were given outside the scanner. During the prescanning practice session, volunteers practiced to criterion each of the two study conditions (see below) separately, followed by a concatenated version in the same order as the study phase proper. During each of two encoding phases, volunteers viewed a black screen with a white central fixation cross. Every 3 s (excluding null events; see below), a box (subtending 10° vertical and horizontal visual angles) appeared on the screen. Centered inside the box was a study picture (subtending 5° vertical and horizontal visual angles). On each trial (Fig. 1), the box and study picture appeared for 1 s in one of five locations (either “off-center,” in one of four quadrants of the screen, the nearest corner of which subtended 1° vertical and horizontal visual angles from the central fixation cross, or “on-center,” in the center of the screen). The box itself appeared in one of five colors (either “colored,” in red, yellow, green, or blue, or “noncolored,” in gray).

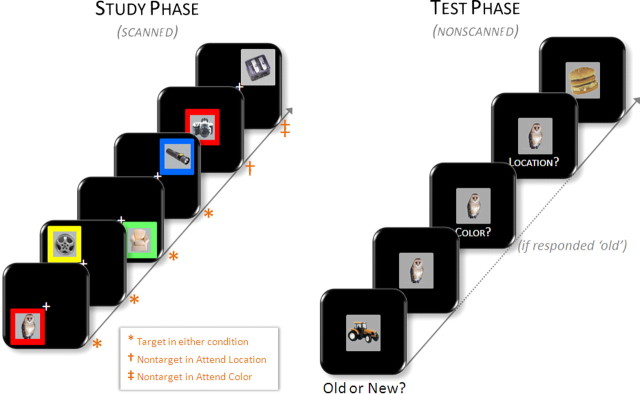

Figure 1.

Experimental design. Left, During the study phase, volunteers were scanned while incidentally encoding pictures in one of two alternating conditions. In the attend-location condition, volunteers made size judgments (see Materials and Methods) to pictures that appeared in one of the four onscreen quadrants (“targets”) and animacy judgments to those that appeared in the center of the screen (“nontargets”), regardless of the color of the background frame. In the attend-color condition, picture location was task irrelevant, in that volunteers were to make a size judgment to pictures that appeared in one of four colored frames (“targets”) and an animacy judgment when the frame was gray (“nontargets”). Right, Scanning was followed by a surprise memory test wherein volunteers made old/new judgments on studied targets and unstudied new items. On responding “old,” volunteers made source memory judgments for the color and location in which the picture was studied.

On each trial, volunteers were to make either a size judgment or an animacy judgment on the study picture, with the type of judgment to be made cued by either the location of the box (in the “attend-location” condition) or the color of the box (in the “attend-color” condition). Specifically, in attend-location blocks, volunteers were to make size decisions for pictures appearing off-center, and animacy decisions for those appearing on-center, regardless of the color of the box. Likewise, in attend-color blocks, size decisions were required for those items appearing in a colored box and animacy decisions for those in a gray box, regardless of the location of the box. Thus, the use of the term “selective attention” here describes the competitive nature of the task, in that in each condition, volunteers were to attend to one feature dimension at the expense of the other. Therefore, “selective” is used to refer to selecting one dimension over another (color vs location) rather than selecting within a dimension (e.g., red vs blue). Items for which a size judgment was required are henceforth referred to as “target” items, whereas those subjected to animacy judgments are designated “nontarget” items. For target items, volunteers were instructed to decide whether the picture depicted an item that was larger or smaller than a shoebox and to indicate their decision by depressing a button with the index (larger than a shoebox) or middle (smaller than a shoebox) finger of one hand. For nontarget items, volunteers were to use the opposite hand to indicate whether the picture depicted a living or a nonliving item (index and middle fingers, respectively). Target (relative to nontarget) items were presented with p = 0.90 and were equally distributed among the four target positions and four target colors. Thus, across the two study phases, 132 target pictures were presented in each condition (attend location and attend color). 
Of these target pictures, 120 in each condition appeared both off-center and in a colored box; these comprised the critical study items for which memory would be subsequently tested. [The rationale for not testing later memory for all target items is that 12 of the targets in each condition would qualify as nontargets in the other condition and could thus serve to introduce a confound for this subset of items. For example, an item presented in an off-centered, noncolored box would be given a size judgment in the attend-location condition (because it was off-centered) but would be given an animacy judgment if presented in the attend-color condition (because it was noncolored). Such items may be susceptible to interference from conflicting between-condition “targetness.” Thus, to constrain subsequent memory analyses to those items for which study processing was as similar as possible, we tested later memory for only those study items that would qualify as targets in both conditions.]

Each encoding phase lasted ∼10 min and consisted of one block each of the two study conditions (attend location and attend color). Block order was maintained across scanning sessions and counterbalanced across volunteers. Study item stimulus onset asynchrony (SOA) was stochastically distributed with a minimum SOA of 3 s modulated by the addition of approximately one-third (64) randomly intermixed null trials (Josephs and Henson, 1999). A central fixation cross was present throughout the interitem interval. Pictures were presented in pseudorandom order, with no more than three trials of one item type occurring consecutively. The hand used to respond to target items was counterbalanced across volunteers. Speed and accuracy of responding were given equal emphasis, as was performance on both the size and the animacy tasks. Pictures were back-projected onto a screen and viewed via a mirror mounted on the sensitivity-encoding (SENSE) head coil.

Immediately after the completion of the second study phase, volunteers were removed from the scanner and taken to a neighboring testing room. They were then informed of the surprise source memory test and given instructions and a short practice test. Approximately 10 min elapsed between the completion of the second study phase and the beginning of the memory test proper. The memory test consisted of the 240 target pictures (120 for each of the two study conditions), presented one at a time, interspersed among 118 unstudied (new) pictures. Instructions were to judge whether each picture was old or new and to indicate the decision with the index (old) or middle (new) finger of their right hand. When they were uncertain whether an item was old or new, volunteers were instructed to indicate “new” so as to maximize the likelihood that subsequent source memory judgments (see below) would be confined to confidently recognized items. Volunteers were required to indicate their old/new decision within 3 s of the onset of the test picture. Each test picture remained onscreen until an old or a new response was made or 3 s elapsed (if no response was made). If a picture was judged to be new, it disappeared, and the test advanced to the next trial (with a 1 s intertrial interval, during which a fixation cross was presented). However, if the picture was judged as old, it remained onscreen and volunteers were prompted to signal the color and the location in which the picture had been studied. This was accomplished by first presenting, below the test picture, the question “Color?” followed by the question “Location?” Below each cue, the relevant response mapping was presented. Volunteers indicated their source judgment with the index (red/upper left), middle (yellow/bottom left), ring (green/upper right), or little (blue/bottom right) finger of their right hand. Both source memory judgments were self-paced, and their order was counterbalanced across subjects. Volunteers were instructed to make their best guess when uncertain.

The source memory test was presented in two consecutive blocks, separated by a short rest period. Old and new items were presented pseudorandomly with no more than three trials of one item type occurring consecutively. One additional new buffer item was added to the beginning of each test block. A gray box was presented in the center of the screen (subtending 9° vertical and horizontal visual angles) continuously throughout the test phase. Each picture was centrally presented in the gray box, with all other display parameters the same as at study. A white fixation cross was presented in the center of the gray box during the intertrial interval.

fMRI scanning.

A Philips Achieva 3T MR scanner (Philips Medical Systems) was used to acquire both T1-weighted anatomical volume images [256 × 200 matrix, 1 mm³ voxels, 150 slices, axial acquisition, three-dimensional MP-RAGE (magnetization-prepared rapid-acquisition gradient echo) sequence] and T2*-weighted echoplanar images (EPIs) [80 × 80 matrix; 3 mm³ voxels; transverse acquisition; flip angle, 70°; echo time, 30 ms] with blood-oxygenation level-dependent (BOLD) contrast, using a SENSE reduction factor of 2 on an eight-channel parallel imaging head coil. Each EPI volume comprised 30 3-mm-thick axial slices separated by 1 mm, positioned to give full coverage of the cerebrum and most of the cerebellum. Data were acquired in two sessions comprising 305 volumes each, with a repetition time of 2.2 s/volume. Volumes within sessions were acquired continuously in a descending sequential order. The first four volumes were discarded to allow tissue magnetization to achieve a steady state.

fMRI data analysis.

Data preprocessing and statistical analyses were performed with Statistical Parametric Mapping (SPM5; Wellcome Department of Cognitive Neurology, London, UK: http://www.fil.ion.ucl.ac.uk/spm/software/spm5) (Friston et al., 1995) implemented in MATLAB R2006a (The MathWorks). All volumes were realigned spatially to the first volume of the first time series. Inspection of movement parameters generated during spatial realignment indicated that one volunteer moved >4° in pitch; as noted above, this volunteer's data were excluded from the analyses. All other volunteers moved no more than 3 mm or 2° in any direction. Realigned images were spatially normalized using a standard EPI template based on the Montreal Neurological Institute (MNI) reference brain (Cocosco et al., 1997) and resampled into 3 mm³ voxels by use of nonlinear basis functions (Ashburner and Friston, 1999). Image volumes were concatenated across sessions. Normalized images were smoothed with an isotropic 8 mm FWHM (full-width half-maximum) Gaussian kernel. Each volunteer's T1 anatomical volume was coregistered to their mean EPI volume and normalized to a standard T1 template of the MNI brain.

Statistical analyses were performed in two stages of a mixed-effects model. In the first stage, neural activity was modeled by a δ function (impulse event) at stimulus onset. These functions were then convolved with a canonical hemodynamic response function (HRF) and its temporal and dispersion derivatives (Friston et al., 1998) to yield regressors in a general linear model (GLM) that modeled the BOLD response to each event type. The two derivatives model variances in latency and duration, respectively. Analyses of the parameter estimates pertaining to these derivatives added no theoretically meaningful information to that contributed by the HRF and are not reported (results are available from the corresponding author on request).
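The construction of a single HRF regressor described above can be illustrated with a minimal sketch. It is not the SPM5 implementation: the double-gamma shape parameters (6 and 16, with an undershoot ratio of 6) are commonly used defaults assumed here rather than taken from the text, the HRF is sampled once per scan rather than at a finer time resolution, and the temporal and dispersion derivatives are omitted. Only the repetition time of 2.2 s comes from the scanning parameters; the function names and onset times are hypothetical.

```python
import math


def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density at time t (in seconds); zero for t <= 0."""
    if t <= 0:
        return 0.0
    log_density = (shape - 1) * math.log(t / scale) - t / scale - math.lgamma(shape)
    return math.exp(log_density) / scale


def double_gamma_hrf(t, peak_shape=6.0, undershoot_shape=16.0, ratio=6.0):
    """Double-gamma HRF: positive response minus a scaled late undershoot.

    The parameter values are assumed defaults, not values stated in the paper.
    """
    return gamma_pdf(t, peak_shape) - gamma_pdf(t, undershoot_shape) / ratio


def build_regressor(onsets_s, n_scans, tr_s=2.2, hrf_length_s=32.0):
    """Convolve unit impulses at the event onsets with the HRF, one sample per scan."""
    n_hrf = int(hrf_length_s / tr_s) + 1
    hrf = [double_gamma_hrf(i * tr_s) for i in range(n_hrf)]

    # Delta (stick) function: one impulse in the scan nearest to each onset.
    sticks = [0.0] * n_scans
    for onset in onsets_s:
        idx = int(round(onset / tr_s))
        if 0 <= idx < n_scans:
            sticks[idx] += 1.0

    # Discrete convolution, truncated to the length of the session.
    regressor = [0.0] * n_scans
    for i, amplitude in enumerate(sticks):
        if amplitude == 0.0:
            continue
        for j, h in enumerate(hrf):
            if i + j < n_scans:
                regressor[i + j] += amplitude * h
    return regressor


# Hypothetical onsets (in seconds) for one event type in a short run.
regressor = build_regressor(onsets_s=[0.0, 6.6, 15.4], n_scans=30)
```

In a full design matrix, one such column per event type would sit alongside the derivative, movement, and session-constant regressors.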

Two GLMs were estimated. The first modeled the main effects of subsequent memory, collapsed across attention conditions. Accordingly, five event types of interest were defined for this “main subsequent memory model,” namely, studied pictures later attracting correct source judgments for both features (both correct), for color only, or for location only, studied pictures attracting a correct recognition judgment but for which neither source feature was correctly judged (item only), and studied pictures that were later incorrectly judged to be new (miss), each collapsed across attention conditions. The second GLM was geared toward identifying the influence of attention on subsequent memory effects (the “attention model”), and thus the same five event types were modeled separately for each attention condition (attend location and attend color). In both GLMs, pictures for which memory was not later tested were modeled as events of no interest, as were buffer items, condition and rest cues, and pictures for which a response was omitted at test. Six regressors modeling movement-related variance (three rigid-body translations and three rotations determined from the realignment stage) and session-specific constant terms modeling the mean over scans in each session were also used in each design matrix.
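The sorting of study trials into the five event types of interest can be sketched as follows. This is an illustrative reading of the definitions above, not the authors' analysis code; the function names, label strings, and condition labels are hypothetical.

```python
def classify_study_trial(recognized, color_correct=False, location_correct=False):
    """Assign a tested study item to one of the five event types of interest.

    recognized       -- True if the item was later judged "old"
    color_correct    -- True if the later color judgment was correct
    location_correct -- True if the later location judgment was correct
    """
    if not recognized:
        return "miss"  # studied item later judged to be new
    if color_correct and location_correct:
        return "both_correct"
    if color_correct:
        return "color_only"
    if location_correct:
        return "location_only"
    return "item_only"  # recognized, but neither source feature correct


def attention_model_label(attention_condition, event_type):
    """In the attention model, each event type is split by attention condition."""
    return f"{attention_condition}:{event_type}"


# Hypothetical example: a recognized item with only the location judged correctly.
label = classify_study_trial(True, color_correct=False, location_correct=True)
```

Under this scheme the main subsequent memory model has five regressors of interest and the attention model has ten, one per combination of attention condition and event type.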

The time series in each voxel were high-pass filtered to 1/128 Hz to remove low-frequency noise and scaled within session to a grand mean of 100 across both voxels and scans. Parameter estimates for events of interest were obtained using the GLM. Nonsphericity of the error covariance was accommodated by an AR(1) (first-order autoregressive) model, in which the temporal autocorrelation was estimated by pooling over suprathreshold voxels (Friston et al., 2002). The parameters for each covariate and the hyperparameters governing the error covariance were estimated using ReML (restricted maximum likelihood). Effects of interest were tested using linear contrasts of the parameter estimates. These contrasts were carried forward to a second stage in which subjects were treated as a random effect. Unless otherwise specified, whole-brain analyses were used when no strong regional a priori hypothesis was possible; in these cases, only effects surviving an uncorrected threshold of p < 0.001 and including five or more contiguous voxels were interpreted. When we held an a priori hypothesis about the localization of a predicted effect, we corrected for multiple comparisons by applying a small-volume correction (SVC) with a familywise error rate based on the theory of random Gaussian fields (Worsley et al., 1996). The peak voxels of clusters exhibiting reliable effects are reported in MNI coordinates.

Regions of overlap between the outcomes of two contrasts were identified by inclusively masking the relevant statistical parametric maps (SPMs). When the two contrasts were independent, the statistical significance of the resulting SPM was computed using Fisher's method of estimating the conjoint significance of independent tests (Fisher, 1950; Lazar et al., 2002). Contrasts to be masked were maintained at p < 0.001, whereas the inclusive mask was thresholded at p < 0.05, resulting in a conjoint probability of p < 0.0005. Exclusive masking was used to identify voxels for which effects were not shared between two contrasts. The SPM constituting the exclusive mask was thresholded at p < 0.05, whereas the contrast to be masked was thresholded at p < 0.001. Note that the more liberal the threshold of an exclusive mask, the more conservative the masking procedure. The resultant threshold of an exclusive masking procedure is that of the image to be masked (p < 0.001).
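The conjoint probability for inclusive masking can be checked with a short worked example of Fisher's method. For k independent tests, the statistic −2 Σ ln p follows a chi-square distribution with 2k degrees of freedom, whose upper-tail probability has a closed form for even degrees of freedom. The function name is hypothetical; the two thresholds are those given above.

```python
import math


def fisher_conjoint_p(p_values):
    """Combine independent p-values using Fisher's method.

    The statistic x = -2 * sum(ln p) is chi-square distributed with 2k degrees
    of freedom under the null. For even degrees of freedom the upper-tail
    probability is exp(-x/2) * sum_{i<k} (x/2)^i / i!.
    """
    k = len(p_values)
    half_x = -sum(math.log(p) for p in p_values)
    return math.exp(-half_x) * sum(half_x ** i / math.factorial(i) for i in range(k))


# Thresholds used for the masked contrast and the inclusive mask.
conjoint = fisher_conjoint_p([0.001, 0.05])
print(f"{conjoint:.6f}")
```

Evaluated exactly at the two thresholds, the combined value is about 0.00055, which is of the same order as the conjoint probability stated above; voxels surviving both thresholds have smaller individual p-values and therefore a smaller conjoint probability.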

Results

Behavioral performance

The degrees of freedom for any ANOVA described below involving factors with more than two levels were adjusted for nonsphericity using the Greenhouse–Geisser correction (Greenhouse and Geisser, 1959).

Study tasks

Prior subsequent memory studies that suggest attention is a mechanism by which information is selected for encoding (including our own) (Uncapher et al., 2006) have drawn such an inference by identifying neural effects in regions previously associated with attentional processing. Here we included a selective attention manipulation in a subsequent memory paradigm to obtain direct measures of the degree to which volunteers were attending to specific information (the contextual features of color and location). That is, by measuring performance in the different attention conditions at study, we could assess whether volunteers were attending to the appropriate information, regardless of their performance on the subsequent memory test.

We found that volunteers were able to selectively attend to the relevant information in both attention conditions, as accuracy for making a size judgment to target items was at ceiling in both conditions (mean ± SD; attend location: 1.00 ± 0.01, attend color: 1.00 ± 0.003). Accuracy for making an animacy judgment to nontarget items was also high (attend location: 0.89 ± 0.14, attend color: 0.93 ± 0.05) but was significantly worse than for size judgments (F(1,14) = 16.29, p < 0.001). Poorer performance on the animacy task was accompanied by significantly longer reaction times in both attention conditions [attend location: 1288 ± 221 (size) vs 1422 ± 266 (animacy), t(14) = 3.38, p < 0.005; attend color: 1305 ± 250 (size) vs 1469 ± 272 (animacy), t(14) = 5.66, p < 0.001]. No differences in performance between attention conditions were found for either task (both t(14) < 1.5).

Also relevant to the question of whether volunteers were able to select one contextual feature over the other is the performance on trials in which the two features were directly at odds. On these trials (targets in one condition that would be nontargets in the other; see Materials and Methods), a correct study judgment could be made only when the correct feature was attended. Critically, volunteers were just as accurate on these trials as on trials for which the features were not in conflict (attend location: 0.97 ± 0.07, p > 0.1; attend color: 0.98 ± 0.03, p > 0.1), indicating that they were able to select relevant over irrelevant features. Together, these findings demonstrate that attention was biased during the incidental encoding of study events. Importantly, the findings supporting this conclusion are independent of any effects that attention may have exerted on memory performance or the localization of neural effects.

Source memory task

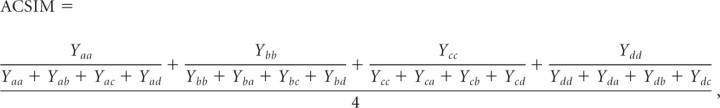

Pictures studied under each attention condition were later recognized with equivalent overall accuracy (hit rate: 74 ± 11% in both conditions; this yielded an average of 89 hits and 31 misses in each attention condition, thus allowing sufficient power to detect reliable neural effects). New (unstudied) pictures were correctly rejected at a rate of 94 ± 2%. Table 1 lists source memory performance for pictures studied under each condition, calculated using the average conditional source identification measure (ACSIM) (Bayen et al., 1996). For each source type (location and color), ACSIM was calculated as follows:

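$$
\mathrm{ACSIM} = \frac{1}{4}\left(\frac{n_{aa}}{n_{aa}+n_{ab}+n_{ac}+n_{ad}} + \frac{n_{bb}}{n_{ba}+n_{bb}+n_{bc}+n_{bd}} + \frac{n_{cc}}{n_{ca}+n_{cb}+n_{cc}+n_{cd}} + \frac{n_{dd}}{n_{da}+n_{db}+n_{dc}+n_{dd}}\right)
$$

where a–d represent each of the four spatial positions or colors, the first character in the subscript denotes the studied position/color, and the second character the given response, so that n<sub>ab</sub> is the number of recognized items studied in position/color a and attributed to b. This measure provides an estimate of source memory for location and color independently for each subject, accommodating the possibility that accuracy on individual positions or colors may differ.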
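As an illustration, ACSIM can be computed from a table of response counts, assuming the standard definition of the measure: for each studied source, the proportion of recognized items attributed to the correct source, averaged over sources. The function name and the counts below are hypothetical, and skipping sources with no recognized items is an assumption of this sketch.

```python
def acsim(confusion):
    """Average conditional source identification measure.

    confusion[studied][response] is the number of recognized items studied
    in source `studied` and attributed to source `response`.
    """
    rates = []
    for studied, responses in confusion.items():
        row_total = sum(responses.values())
        if row_total > 0:  # assumption: sources with no recognized items are skipped
            rates.append(responses[studied] / row_total)
    if not rates:
        raise ValueError("no recognized items in any source")
    return sum(rates) / len(rates)


# Hypothetical counts for one volunteer's color judgments.
color_counts = {
    "red":    {"red": 10, "yellow": 5, "green": 5, "blue": 0},
    "yellow": {"red": 4, "yellow": 8, "green": 4, "blue": 4},
    "green":  {"red": 6, "yellow": 6, "green": 6, "blue": 6},
    "blue":   {"red": 5, "yellow": 5, "green": 5, "blue": 5},
}
score = acsim(color_counts)
```

With four response options, random guessing yields an expected ACSIM of 0.25, which is the baseline against which the values in Table 1 can be read.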

Table 1.

Source memory performance for pictures studied in the two attention conditions

| | Attend location | Attend color |
|---|---|---|
| Location memory | 0.45 (0.05) | 0.40 (0.05) |
| Color memory | 0.25 (0.01) | 0.28 (0.02) |

Data are mean and SE (in parentheses) as estimated by ACSIM.

To address the question of whether memory performance for a source feature was modulated by attention to that feature during study, a 2 × 2 ANOVA with factors of source memory performance (location ACSIM vs color ACSIM) and attention condition (attend location vs attend color) was performed. The ANOVA revealed a significant effect of source feature, indicating that location judgments were more accurate than color judgments (F(1,14) = 9.67, p < 0.008). More relevant to the question at issue was the finding of a significant interaction between source accuracy and attention condition (F(1,14) = 9.45, p < 0.008). Directional t tests revealed that both location and color judgments were more accurate when the relevant feature was attended rather than nonattended (location: t(14) = 2.54, p < 0.013; color: t(14) = 1.97, p < 0.039).

In accordance with the behavioral literature using multidimensional source memory accuracy as an index of contextual binding (Meiser and Broder, 2002; Starns and Hicks, 2005; Meiser and Sattler, 2007; Meiser et al., 2008), we performed a final analysis to assess the degree to which memory for the two contextual features was stochastically dependent. To accomplish this, we determined whether the likelihood of retrieving one feature was influenced by whether or not the other feature was also retrieved. For the retrieval of location information, for example, we compared the frequencies with which correct location judgments were associated with correct versus incorrect color judgments. An analogous procedure was used for the retrieval of color information. These frequencies were calculated independently for each attention condition and are listed in Table 2. To directly compare the results of these procedures with those in the study by Uncapher et al. (2006), in which attention was not experimentally manipulated between the two source features, we performed 2 × 2 ANOVAs for each attention condition separately, with factors of source feature (color vs location) and conditional accuracy of the other feature (correct vs incorrect). In the case of the attend-color condition [the condition analogous to that in the study by Uncapher et al. (2006)], the main effect of “other feature accuracy” approached significance (F(1,14) = 4.46, p < 0.053), indicating that feature memory for items studied under the attend-color condition tended to be higher when the alternate feature was also retrieved. Follow-up directional t tests revealed that this effect was significant for both source features (location: t(14) = 2.17, p < 0.025; color: t(14) = 1.88, p < 0.04). No such stochastic dependence was identified in the ANOVA performed on the data from the attend-location condition.

Table 2.

Source memory performance conditionalized on the accuracy of the other source

| | Attend location | Attend color |
|---|---|---|
| Location correct if color correct | 0.46 (0.06) | 0.44 (0.06) |
| Location correct if color incorrect | 0.44 (0.06) | 0.39 (0.05) |
| Color correct if location correct | 0.24 (0.01) | 0.29 (0.02) |
| Color correct if location incorrect | 0.24 (0.02) | 0.26 (0.02) |

Data are mean and SE (in parentheses).
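The conditionalization used in the stochastic dependence analysis can be sketched as follows. The sketch assumes simple proportions over recognized items; whether the values in Table 2 were computed in exactly this way is not stated in the text. The function name and the trial records are hypothetical.

```python
def conditional_accuracy(trials, feature, other):
    """Accuracy on `feature`, split by whether `other` was judged correctly.

    trials -- list of dicts with boolean entries for each source feature
    Returns (P(feature correct | other correct),
             P(feature correct | other incorrect)).
    """
    def rate(subset):
        if not subset:
            return float("nan")
        return sum(1 for t in subset if t[feature]) / len(subset)

    other_correct = [t for t in trials if t[other]]
    other_incorrect = [t for t in trials if not t[other]]
    return rate(other_correct), rate(other_incorrect)


# Hypothetical source judgments for six recognized items.
trials = [
    {"location": True, "color": True},
    {"location": True, "color": False},
    {"location": False, "color": True},
    {"location": False, "color": False},
    {"location": True, "color": True},
    {"location": False, "color": False},
]
loc_if_color_correct, loc_if_color_incorrect = conditional_accuracy(
    trials, feature="location", other="color"
)
```

A positive difference between the two returned values indicates that retrieval of one feature was more likely when the other was also retrieved, which is the sense of stochastic dependence used here.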

Finally, we compared the results of this stochastic dependence analysis to those of our previous study of multifeatural source memory (Uncapher et al., 2006). That study reported that volunteers were 8% more likely to retrieve each feature when the other was also retrieved, whereas here the analogous increases were only 3% (for color) and 5% (for location). Thus, the biasing of attention toward a single feature in the present study, relative to both features in the previous experiment, appears to have reduced the stochastic dependence between memory for the features, which is thought to index the degree to which features were conjoined in memory. The present findings extend previous behavioral studies (Meiser and Broder, 2002; Starns and Hicks, 2005; Meiser and Sattler, 2007; Meiser et al., 2008) by suggesting that the degree to which retrieval of different contextual elements is stochastically dependent is modulated by whether attention is directed to one or both contextual features during encoding.

fMRI findings

We first identified regions that demonstrated main effects of subsequent memory (collapsed across attention condition) associated with successful recognition or successful source memory. In addition, because our previous study of multifeatural encoding (Uncapher et al., 2006) identified distinct regions that appeared to support the encoding of color, location, or the two features together, a second set of analyses in the present study was targeted to identify analogous effects. Thus, we searched for regions that, regardless of attention condition, exhibited color- and location-specific subsequent memory effects, as well as regions demonstrating effects for conjoint feature memory. A final set of analyses sought to address the question of how attention and encoding processes interact by identifying regions for which subsequent memory effects were modulated according to attention.

Main subsequent memory effects

Recognition.

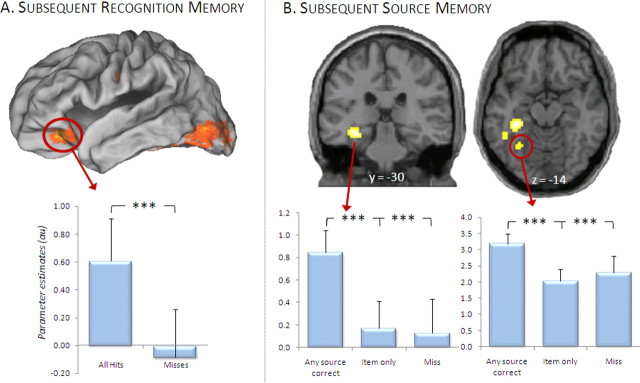

Regions demonstrating a main effect of subsequent item memory were identified by contrasting activity elicited by all pictures later recognized (regardless of accuracy on the color or location source dimensions) with that elicited by pictures later forgotten. Consistent with previous studies, this contrast revealed subsequent memory effects in multiple regions, including left ventral prefrontal and bilateral fusiform cortex (Fig. 2A, Table 3).

Figure 2.

Subsequent memory effects collapsed across attention condition. A, Regions in which activity elicited by study items was greater for those items that were later recognized (“all hits”) relative to those that were later forgotten (“misses”), regardless of source accuracy or study condition. Mean parameter estimates (and SEs) for the cluster in inferior frontal gyrus are plotted below the surface-rendered SPM. B, Items for which one or both source features were later remembered (“any source correct”) elicited greater activity than those for which both source features (“item only”) or the items themselves were forgotten (“misses”) in several MTL regions, including a cluster centered in posterior hippocampus, and another in parahippocampal cortex. Effects are displayed on a standardized brain [in A: PALS-B12 atlas using Caret5 software (http://brainvis.wustl.edu/wiki/index.php/Caret:About); in B: single-subject canonical T1 in SPM5 (http://www.fil.ion.ucl.ac.uk/spm/software/spm5)], below which are plotted mean parameter estimates of the indicated clusters. ***p < 0.001.

Table 3.

Regions showing main effects of subsequent recognition or source memory

| Coordinates (x, y, z) | Peak z (no. of voxels) | Region | ∼BA |
|---|---|---|---|
| Recognition memory | | | |
| −27, 33, −18 | 4.45 (35) | L inferior frontal gyrus | 47/11 |
| 30, 33, −21 | 3.60 (6) | R inferior frontal gyrus | 47/11 |
| 48, 3, 24 | 3.54 (19) | R inferior frontal gyrus | 9 |
| −51, −12, 30 | 3.42 (6) | L precentral gyrus | 6 |
| 45, −63, −6 | 3.89 (62) | R fusiform gyrus/middle temporal gyrus | 37 |
| −42, −45, −21 | 3.77 (94) | L fusiform gyrus | 37 |
| 51, −48, −21 | 3.47 (46) | R fusiform gyrus | 37 |
| −33, −93, −3 | 3.60 (26) | L middle occipital gyrus | 18 |
| 36, −81, 15 | 3.54 (44) | R middle occipital gyrus | 19 |
| −51, −78, −9 | 3.53 (45) | L middle occipital gyrus | 19 |
| Source memory | | | |
| −48, −12, 51 | 3.51 (5) | L precentral gyrus | 4 |
| −33, −33, −12 | 4.06 (135) | L hippocampus/parahippocampal gyrus | |
| 39, −15, −24 | 3.45 (6) | R hippocampus | |
| −30, −60, −15 | 3.36 (14) | L parahippocampal gyrus | 19 |
| 48, −72, −21 | 3.53 (11) | R fusiform gyrus | 19 |
| −42, −84, 24 | 3.46 (12) | L middle temporal gyrus | 19 |
| −18, −87, 9 | 3.89 (60) | L middle occipital gyrus | 18 |
| −36, −96, −3 | 3.65 (21) | L middle occipital gyrus | 18 |
| −24, −102, 12 | 3.64 (15) | L middle occipital gyrus | 19 |
| −27, −84, −3 | 3.20 (5) | L middle occipital gyrus | 18 |
| −6, −48, −6 | 3.28 (10) | L cerebellum | |

Coordinates (x, y, z) are given in the first column. z values refer to the peak of the cluster. L, Left; R, right.

Source memory.

We next identified regions that showed enhanced activity associated with later correct relative to incorrect source judgments, collapsed across attention conditions. Figure 2B illustrates and Table 3 lists the regions identified in this contrast, which included left hippocampus and left parahippocampal cortex.

Feature-specific and multifeatural effects

Color-specific subsequent memory effects.

We next identified subsequent memory effects uniquely associated with the successful recovery of color information. This was accomplished by first identifying all regions demonstrating a subsequent memory effect for color (by contrasting the activity elicited by recognized pictures for which a successful vs an unsuccessful color judgment was given, collapsed across attention condition; i.e., color only > item only). Because a subset of these voxels may have also exhibited a subsequent memory effect for location, the resultant SPM was then exclusively masked by the analogous subsequent memory contrast for location. Regions identified in this analysis are illustrated in Figure 3A and listed in Table 4 and include right parahippocampal gyrus and several medial occipital regions.
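The exclusive masking step described above can be sketched as a simple voxelwise rule. This is an illustrative sketch rather than the SPM implementation: it assumes each contrast is available as a list of voxelwise p-values, uses the thresholds reported in the text (p < 0.001 for the contrast of interest and p < 0.05 for the mask), and the function name and example values are hypothetical.

```python
def exclusive_mask(target_p, mask_p, target_alpha=0.001, mask_alpha=0.05):
    """Flag voxels that pass the target contrast but show no effect in the mask contrast."""
    return [
        (p_target < target_alpha) and not (p_mask < mask_alpha)
        for p_target, p_mask in zip(target_p, mask_p)
    ]


# Hypothetical voxelwise p-values for four voxels (illustration only).
color_effect_p = [0.0004, 0.0002, 0.0200, 0.0009]
location_effect_p = [0.3000, 0.0100, 0.5000, 0.0490]

color_specific = exclusive_mask(color_effect_p, location_effect_p)
print(color_specific)  # [True, False, False, False]
```

Only the first voxel survives: the second and fourth also show a location effect at the mask threshold, and the third does not reach the threshold for the color contrast. Using a liberal threshold for the mask makes the exclusion more conservative, because more voxels are removed.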

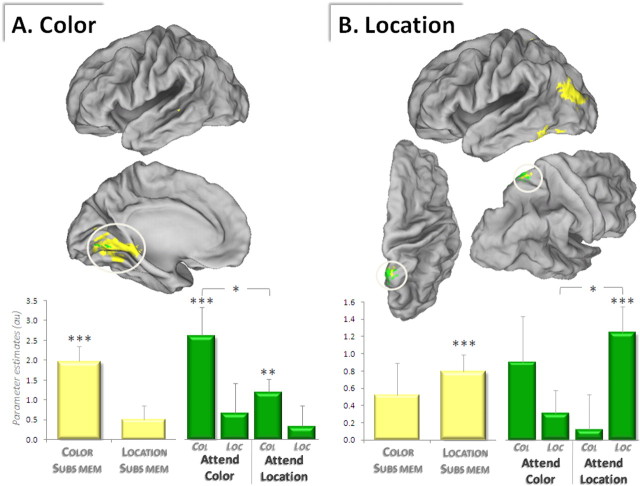

Figure 3.

Feature-specific subsequent memory effects. A, Subsequent memory effects selective for color (displayed in yellow) are surface rendered on a standardized brain. A subset of these color-selective subsequent memory effects was additionally modulated by attention to color (overlaid in green). B, Subsequent memory effects selective for location (yellow) also show a subset of voxels that are modulated by attention to location (green). Graphs depict mean parameter estimates (and SEs) of the encircled clusters (graph and cluster coloring correspond). Subs Mem, Subsequent memory effects; Col, color-subsequent memory effects; Loc, location-subsequent memory effects. *p < 0.05, **p < 0.01, ***p < 0.001.

Table 4.

Regions showing feature-specific subsequent memory effects

| Coordinates (x, y, z) | Peak z (no. of voxels) | Region | ∼BA |
|---|---|---|---|
| Color | | | |
| 42, 6, −6 | 3.63 (11) | R insula | 13 |
| 42, −21, −21 | 3.60 (10) | R parahippocampal gyrus | 36 |
| 18, −39, −18 | 3.16 (8) | R parahippocampal gyrus | 36 |
| −30, −60, −6 | 3.16 (5) | L parahippocampal gyrus | 19 |
| −45, −33, −9 | 3.31 (5) | L middle temporal gyrus | 21 |
| 51, −42, 0 | 3.54 (15) | R middle temporal gyrus | 22 |
| 24, −18, −3 | 3.34 (7) | R globus pallidus | |
| −18, −51, −15 | 3.81 (144) | L peristriate | 19 |
| 12, −75, 0 | 3.71 (142) | R medial occipital lobe | 19 |
| Location | | | |
| 9, −54, 69 | 3.29 (8) | R superior parietal lobe | 7 |
| −12, −45, 60 | 3.27 (5) | L superior parietal lobe | 7 |
| −54, −54, −27 | 4.31 (26) | L fusiform gyrus | 37 |
| −42, −87, 18 | 3.82 (72) | L superior occipital gyrus | 19 |

Coordinates (x, y, z) are given in the first column. z values refer to the peak of the cluster. L, Left; R, right. Boldface indicates those effects that are modulated by feature-specific attention.

Because source memory for color in the condition for which volunteers were instructed to ignore color (attend-location condition) was near chance, we performed additional analyses to determine whether color-subsequent memory effects in that condition replicated those identified in our previous study (Uncapher et al., 2006), for which color memory was significantly above chance. The only subsequent memory effect for color in the previous study was found in left posterior temporal cortex [x, y, z: −57, −36, −9], almost exactly overlapping a color effect identified here (−51, −30, −12) at a whole-brain threshold of p < 0.001. Indeed, the color effect in the present study survived an SVC analysis (centered on the coordinates of the region in the previous study, with radius of 6 mm) at p < 0.013. We also sought to determine whether other color-subsequent memory effects were evident in functionally plausible regions, notably in the visual area most consistently associated with color processing: V4. We therefore conducted a second SVC centered on coordinates reported in previous studies of color processing identifying V4. For example, Zeki and Marini (1998) reported left V4 Talairach coordinates of −22, −60, −14. Our color memory effects survived an SVC centered on these coordinates at p < 0.005.

The twin findings that the present color-subsequent memory effects not only robustly replicate previously reported effects but also were evident in V4 lead us to conclude that whereas color memory was weak, there was nonetheless some segregation of study trials at retrieval according to whether items were successfully encoded or not. Thus, whereas veridical source judgments were interspersed among a high proportion of guesses, these veridical judgments carried sufficient neural signal to overcome the weak signal contributed by the guesses, leading to meaningful and robust subsequent memory effects.

Location-specific subsequent memory effects.

Subsequent memory effects specific to location were identified with a procedure analogous to that described for color-specific subsequent memory effects. As shown in Table 4, several regions showed location-specific effects, including left fusiform gyrus and superior parietal cortex (Fig. 3B).

Multifeatural subsequent memory effects.

Regions that exhibited subsequent memory effects associated with the conjoint retrieval of both source features were identified by contrasting activity elicited by recognized pictures for which both features were successfully retrieved (both correct) with the activity elicited by pictures for which neither feature was retrieved (item only), collapsed across attention condition. At the pre-experimentally defined threshold of p < 0.001, no voxels exhibited multifeatural subsequent memory effects. At a reduced threshold of p < 0.005, however, several regions in medial occipital cortex and parahippocampal gyrus showed multifeatural effects (supplemental material, available at www.jneurosci.org). To determine whether these clusters were distinct from those showing feature-specific effects [as shown in the study by Uncapher et al. (2006)], this SPM was exclusively masked with the two feature-specific subsequent memory contrasts (each p < 0.05). Note that this exclusive mask used an “OR” operator to exclude those voxels that showed either a location-specific or a color-specific effect (Uncapher et al., 2006). No clusters survived this masking procedure. Thus, we were unable to identify any regions in the present study in which subsequent memory effects were uniquely selective for multifeatural source memory.

Subsequent memory effects modulated by attention

As outlined in Introduction, a key question in the present study involves the influence of attention on encoding-related processing. Specifically, we aimed to identify the extent to which subsequent memory effects for different features of an event are modulated by attention to the feature. We addressed this question with two further analyses. First, we identified where feature-specific subsequent memory effects were enhanced when the relevant feature was attended, compared with when the other feature was attended. We then searched for regions that showed generic effects of attention on encoding-related activity, that is, where subsequent memory effects were influenced by attention regardless of which feature was being attended.

Attention to color.

We first identified regions that showed color-specific subsequent memory effects that were enhanced when color was attended. To accomplish this, we inclusively masked the color-specific subsequent memory contrast described above (p < 0.001) with the relevant subsequent memory × attention interaction contrast [(color only > item only) × (attend color > attend location), thresholded at p < 0.05, giving a conjoint threshold of p < 0.0005 (see Materials and Methods)]. Note that the interaction contrast on its own merely identifies regions that show differential effects as a function of attention but does not guarantee that the subsequent memory effects are themselves significant. The outcome of this procedure identified two clusters in which color-specific subsequent memory effects were modulated by attention to color, one in right parahippocampal gyrus and the other in left peristriate cortex (in the vicinity of the color-processing region V4) (Fig. 3A; Table 4, boldface clusters).
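The conjoint threshold quoted above can be checked with a short calculation. The sketch below assumes that the two contrasts are independent and that their thresholds were combined with Fisher's method for pooling p-values; this is an assumption made for illustration, since the Materials and Methods section is not reproduced here. The function name is hypothetical.

```python
import math


def fisher_combined_p(p_values):
    """Combine independent p-values with Fisher's method.

    The statistic X = -2 * sum(ln p_i) follows a chi-square distribution with
    2k degrees of freedom under the null hypothesis. For even degrees of
    freedom the upper-tail probability has the closed form
    exp(-X/2) * sum_{j=0}^{k-1} (X/2)**j / j!.
    """
    k = len(p_values)
    half_x = -sum(math.log(p) for p in p_values)
    return math.exp(-half_x) * sum(half_x**j / math.factorial(j) for j in range(k))


# Thresholds reported in the text: p < 0.001 (main contrast) and p < 0.05 (mask).
conjoint = fisher_combined_p([0.001, 0.05])
print(round(conjoint, 4))  # 0.0005
```

Under these assumptions the combined value is approximately 0.00055, which matches the reported conjoint threshold of p < 0.0005 to the precision given in the text.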

Attention to location.

The analogous procedure was used to identify location-specific subsequent memory effects that were greater when location was attended [i.e., the location-specific subsequent memory contrast, inclusively masked with the interaction (location only > item only) × (attend location > attend color)]. This procedure revealed one cluster, in right superior parietal cortex [approximate Brodmann area (BA) 7] (Fig. 3B, Table 4, asterisked cluster), in which subsequent memory effects for location were enhanced when location was attended. It should be noted that whereas the numerical magnitude of the parameter estimate for color memory in the attend-color condition appears large in this cluster, the associated variance renders it unreliable. In fact, by definition none of the voxels in the cluster can exhibit any significant color-subsequent memory effects, as the cluster was defined by an exclusive mask that removes any voxels showing a color effect below p < 0.05. Indeed, analysis of the parameter estimates confirms that the main color effect in the cluster is not significant (p > 0.09), nor are the individual color effects (attend color: p > 0.08, attend location: p > 0.1). Evidence of an interaction between attention to color and memory for color is not present either (p > 0.2). Thus, the region reliably exhibits a location-selective subsequent memory effect (and does not show any color-subsequent memory effects).

Generic mechanisms of encoding attended features.

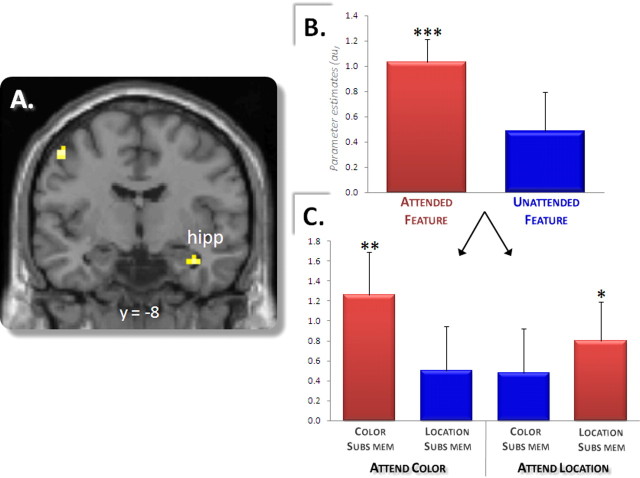

The previous analyses identified feature-specific encoding-related activity that was modulated by feature-specific attention. These analyses do not address the question, however, of whether regions can be found that, although sensitive to attention and encoding success, are not feature specific. In other words, are there regions that act more generically to encode attended information, regardless of whether the information is color or location? To investigate this question, we searched for regions in which subsequent memory effects were selective for the attended feature regardless of which feature was attended. To accomplish this, we computed the main subsequent memory effect for the attended versus the nonattended feature; in other words, the subsequent memory effect for color when color was attended [(color only > item only) in the attend-color condition] collapsed with the analogous contrast for location [(location only > item only) in the attend-location condition]. Subsequent memory effects for attended versus nonattended features were obtained in several regions, the most robust of which was identified in right hippocampus (Fig. 4A, Table 5). Analysis of the peak parameter estimates for the hippocampal cluster (Fig. 4B,C) revealed that whereas this region demonstrated robust subsequent memory effects for color or location when attention was directed toward each feature (p < 0.001), it did not do so when attention was directed toward the alternative feature (p > 0.07). In addition, the difference between the magnitudes of subsequent memory effects for attended versus nonattended features was itself significant (p < 0.05). Thus, this hippocampal region showed significantly greater activity when attended information was successfully rather than unsuccessfully encoded, indicating that the region indexes successful encoding of attended but not of nonattended information.
To further test the reliability of this hippocampal effect, we assessed whether the effect survived SVC using an anatomical mask of the bilateral MTL (Johnson et al., 2008). The effect survived this correction (p < 0.05).
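The logic of the attended versus nonattended comparison can be sketched as follows. This is a minimal illustration, assuming that "collapsing" corresponds to averaging the two feature-specific effects and that one parameter estimate is available per trial type in each attention condition. The function name and all numerical values are hypothetical and are not taken from the study.

```python
def attended_vs_nonattended(betas):
    """Return (attended, nonattended) subsequent memory effects.

    betas[attend][trial_type] holds one parameter estimate, where attend is
    "color" or "location" and trial_type is "color_only", "location_only",
    or "item_only".
    """
    def effect(attend, feature):
        # Subsequent memory effect: feature remembered minus item only.
        return betas[attend][feature + "_only"] - betas[attend]["item_only"]

    attended = (effect("color", "color") + effect("location", "location")) / 2
    nonattended = (effect("color", "location") + effect("location", "color")) / 2
    return attended, nonattended


# Hypothetical parameter estimates for a single cluster (illustration only).
betas = {
    "color": {"color_only": 1.2, "location_only": 0.5, "item_only": 0.4},
    "location": {"color_only": 0.3, "location_only": 1.0, "item_only": 0.2},
}

attended, nonattended = attended_vs_nonattended(betas)
print(f"attended effect:    {attended:.2f}")
print(f"nonattended effect: {nonattended:.2f}")
```

A region of the kind described above would show a reliable attended effect, no reliable nonattended effect, and a reliable difference between the two when these quantities are tested across volunteers.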

Figure 4.

Encoding attended contextual information. A, A right hippocampal (hipp) region shows subsequent memory effects for contextual information that is selectively attended (regardless of which feature is attended) and does not show significant effects for information that is not attended. B, Mean parameter estimates (and SEs) of subsequent memory effects for attended and nonattended contextual (source) information in the peak of the right hippocampal cluster. C, Parameter estimates for right hippocampal subsequent memory effects broken down by feature and attention condition. *p < 0.05, **p < 0.005, ***p < 0.0001.

Table 5.

Regions showing subsequent memory effects for attended features, regardless of whether the feature was color or location

| Coordinates (x, y, z) | Peak z (no. of voxels) | Region | ∼BA |
|---|---|---|---|
| 39, −12, −24 | 4.01 (19) | R hippocampus | |
| −48, −9, 51 | 3.76 (10) | L precentral gyrus | 4 |
| −51, −63, 42 | 3.25 (7) | L ventral posterior parietal cortex (∼supramarginal gyrus) | 40/39 |
| 66, −15, −12 | 3.34 (6) | R middle temporal gyrus | 22 |
| −45, −57, 3 | 3.21 (6) | L middle temporal gyrus | 22 |
| −12, −93, 9 | 3.56 (28) | L middle occipital gyrus | 18 |

z values refer to the peak of the cluster. L, Left; R, right.

Another notable region identified in this contrast was left ventral posterior parietal cortex (PPC) (Table 5, ∼BA 40/39). Given the prominence of this region in attention theories (Corbetta et al., 2008), this finding may offer a potential clue as to how encoding-related hippocampal activity could be modulated by attention. In fact, analysis of peak parameter estimates for this ventral PPC cluster revealed a pattern of effects similar to that found for right hippocampus; specifically, the region not only demonstrated the effect of interest (subsequent memory effect for attended features, p < 0.001) but also showed no hint of an effect for nonattended features (p > 0.3), and this difference between attended versus nonattended subsequent memory effects was significant (p < 0.005). Thus, as with the hippocampus, this ventral PPC region appeared to respond vigorously to contextual features of study items only when those features were explicitly attended and successfully encoded.

Discussion

The aim of the present study was to elucidate the neural mechanisms that determine the subset of contextual information encoded into an episodic memory. Consistent with the findings of previous behavioral studies (Meiser and Sattler, 2007; Meiser et al., 2007), the behavioral results indicated that successful encoding of a contextual feature is modulated by whether attention is directed toward or away from the feature. The fMRI findings suggest that this attentional modulation of memory encoding is mediated by interactions between the cortex and MTL, especially the hippocampus. A double dissociation was identified between attentionally sensitive cortical subsequent memory effects for color or location information. In contrast, a right hippocampal region demonstrated more general effects of attention on encoding, showing a subsequent memory effect for whichever feature was attended. As discussed below, these findings suggest that attentional enhancement of cortical activity biases the subset of contextual information that is bound into memory by the hippocampus.

The finding that attention to a specific feature increased the probability of later remembering that feature had a neural counterpart in the pattern of subsequent memory effects in right hippocampus. This region (peak: 39, −12, −24) (Fig. 4) robustly predicted later memory for features that were selectively attended, but did not do so for “nonattended” features. Significantly, these attentionally sensitive hippocampal subsequent memory effects were found for study trials that were later associated with memory for a single contextual feature but not for trials for which both features were later remembered. This pattern stands in marked contrast to the one demonstrated by a nearby hippocampal region identified in our previous study (peak: 27, −15, −15), which showed subsequent memory effects exclusively for trials on which both contextual features were successfully encoded. Unlike the previous result, the present finding is seemingly at odds with the idea that encoding-related hippocampal activity increases with the amount of contextual information encoded (Staresina and Davachi, 2008). This raises the question of why, in the present study, the right hippocampus showed a greater response when fewer (single vs both) contextual associations were encoded.

The answer to this question may lie in the different study tasks used in the two experiments. The present experiment used an incidental task that explicitly directed attention to one feature at a time. In contrast, volunteers in our previous experiment were aware that their memory was to be tested for both contextual features and that their performance would benefit if they attended to both features. Thus, task-relevant information in the present study was each feature in isolation, whereas in the previous study it was the conjunction of the two features. Attending to task-relevant features has been shown to modulate the magnitude of the cortical activity engaged to process those features (Corbetta et al., 1990, 1991). Therefore, to the extent that enhanced cortical activity is reflected in enhanced hippocampal activity, the hippocampus should be associated with single-feature encoding in the present study and with feature conjunctions in the previous study. This was indeed the pattern of findings observed across the two studies in the right hippocampus. Thus, a single variable—task relevance of the contextual feature or features—can account for the disparate patterns of hippocampal activity across the two studies.

Based on this pattern of findings, we propose that this right hippocampal region is biased to encode the contextual features that are most relevant to current behavioral goals and to which, therefore, top-down attentional resources are directed. Extending the perspective of “biased competition” models of attention (Wolfe, 1994; Desimone and Duncan, 1995) (see also Bundesen, 1990) to encoding mechanisms, the hippocampus may be encoding the outcome of competition between feature-specific cortical processing streams, the “winner” being the stream in which processing was enhanced by top-down signals conveying behavioral relevance. By this argument, the hippocampus does not simply encode the totality of the available contextual information, but rather the subset of information that is most behaviorally relevant.

The foregoing model suggests that the neural mechanism implementing this hippocampal bias may not be a direct modulation of hippocampal activity by attention but rather a modulation of the cortical input to the hippocampus. In other words, because feature-specific cortical activity can be enhanced in firing rate or magnitude by selective attention, the effects of attention on encoding seem to be mediated through enhanced cortical activity being more effectively processed by the hippocampus.

The present findings provide strong support for this proposal, in that feature-specific cortical subsequent memory effects were indeed enhanced by feature-specific attention. The loci of these effects are consistent with a large literature on the dissociation of object-directed and spatially directed processing (Mishkin and Ungerleider, 1983; for review, see Goodale et al., 2004), in that subsequent memory effects were found primarily within the “dorsal” processing stream for location and in the “ventral” stream for color. Crucially, a subset of these regions showed enhanced subsequent memory effects when attention was directed toward the relevant feature. For instance, a region in the vicinity of left V4—a color-processing region previously shown to be modulated by attention to color (Pardo et al., 1990; Bundesen et al., 2002)—showed a color-selective subsequent memory effect that was larger in the attend-color condition. Similarly, an attentionally sensitive subsequent memory effect for location was found in a superior parietal region sensitive to visuospatial attention (for review, see Corbetta et al., 2008). The study therefore succeeded in its aim of identifying cortical regions in which activity was both sensitive to whether a specific contextual feature was attended and predictive of later memory for that feature. Together with the hippocampal findings, the present data provide mechanistic support for the idea that what is bound into memory by the hippocampus is the pattern of cortical activity exhibiting a relatively strong neural signal, with this cortical signal being enhanced by selective attention. Note that whereas the features of the study episode manipulated here were perceptual, the proposed mechanism is predicted to apply to any feature of an episode that can be the object of selective attention, including conceptual information.

It should be noted that whereas the aim of the present study was to investigate whether the “selective encoding” of a subset of information constituting an event is mediated in part by selective attention to that information, we remain agnostic as to whether such information is consciously experienced or not. Our model describes selective encoding at the level of neural mechanisms, rather than conscious experience or perception. In other words, because selective attention to specific informational content serves to enhance the cortical processing of that information, we propose that this enhanced processing is more effectively encoded by hippocampal mechanisms, thus engendering a mechanism by which certain information is remembered, whereas other information is not.

Finally, it should be noted that these attention-sensitive subsequent memory effects occurred against a backdrop of attention-“insensitive” effects for both item and source information (Fig. 2). The regions exhibiting an apparent insensitivity to the present attentional manipulation (including a left hippocampal region, posterior to the right hippocampal region that was sensitive to attention) are in the vicinity of those identified in numerous previous studies of episodic encoding (Davachi, 2006). The seeming insensitivity of these effects to the attention manipulation does not, however, force the conclusion that encoding mechanisms supported by these regions operate independently of attention. The attentional manipulation used here modulated “top-down” or goal-directed attention. The manipulation directed volunteers to volitionally attend to one contextual feature at a time, thus biasing the allocation of attentional resources to specific featural information. Consequently, regions in which activity did not differ according to attention condition, but nonetheless predicted later source or item memory, may have been influenced by other attentional processes operating independently of our experimental manipulation. In the case of source encoding, for instance, the activity might reflect encoding of feature information captured through “bottom-up” attentional processes (Corbetta et al., 2008). This idea is consistent with the conclusions of a recent review of the subsequent memory literature that stresses the importance of specifying the contributions of both top-down and bottom-up attention to encoding (Uncapher and Wagner, 2009).

In conclusion, the present findings suggest that the information incorporated into an episodic memory representation is selected, at least in part, by the manner in which attention is allocated at encoding. Here we show that goal-directed attention to a particular feature of an event enhances the cortical activity responsible for processing that feature. Crucially, this “gain modulation” is reflected in the pattern of encoding-related activity in the hippocampus, in that an anterior hippocampal region demonstrated subsequent memory effects only for attended features. Together, the present and previous findings (Uncapher et al., 2006) suggest that episodic encoding in general, and hippocampally mediated encoding in particular, are biased in favor of those features of an episode that are most relevant for current behavioral goals [for similar proposals based on research with rodents, see Morris and Frey (1997) and Kentros et al. (2004)]. In other words, the hippocampus may not indiscriminately bind all available contextual information into memory, but rather binds that information which is most behaviorally relevant.

Footnotes

M.R.U. was supported by National Institute of Mental Health (NIMH) National Research Service Award MH14599-26A1 and NIMH Grant MH074528. We thank the members of the UCI Research Imaging Center for their assistance with fMRI data acquisition.

References

- Ashburner J, Friston KJ. Nonlinear spatial normalization using basis functions. Hum Brain Mapp. 1999;7:254–266. doi: 10.1002/(SICI)1097-0193(1999)7:4<254::AID-HBM4>3.0.CO;2-G. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bayen UJ, Murnane K, Erdfelder E. Source discrimination, item detection, and multinomial models of source monitoring. J Exp Psychol Learn Mem Cogn. 1996;22:197–215. [Google Scholar]

- Bundesen C. A theory of visual attention. Psychol Rev. 1990;97:523–547. doi: 10.1037/0033-295x.97.4.523. [DOI] [PubMed] [Google Scholar]

- Bundesen C, Larsen A, Kyllingsbaek S, Paulson OB, Law I. Attentional effects in the visual pathways: a whole-brain PET study. Exp Brain Res. 2002;147:394–406. doi: 10.1007/s00221-002-1243-1. [DOI] [PubMed] [Google Scholar]

- Chun MM, Turk-Browne NB. Interactions between attention and memory. Curr Opin Neurobiol. 2007;17:177–184. doi: 10.1016/j.conb.2007.03.005. [DOI] [PubMed] [Google Scholar]

- Cocosco C, Kollokian V, Kwan RS, Evans A. Brainweb: online interface to a 3D MRI simulated brain database. Neuroimage. 1997;5:425. [Google Scholar]

- Corbetta M, Miezin FM, Dobmeyer S, Shulman GL, Petersen SE. Attentional modulation of neural processing of shape, color, and velocity in humans. Science. 1990;248:1556–1559. doi: 10.1126/science.2360050. [DOI] [PubMed] [Google Scholar]

- Corbetta M, Miezin FM, Dobmeyer S, Shulman GL, Petersen SE. Selective and divided attention during visual discriminations of shape, color, and speed: functional anatomy by positron emission tomography. J Neurosci. 1991;11:2383–2402. doi: 10.1523/JNEUROSCI.11-08-02383.1991. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Corbetta M, Patel G, Shulman GL. The reorienting system of the human brain: from environment to theory of mind. Neuron. 2008;58:306–324. doi: 10.1016/j.neuron.2008.04.017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Davachi L. Item, context and relational episodic encoding in humans. Curr Opin Neurobiol. 2006;16:693–700. doi: 10.1016/j.conb.2006.10.012. [DOI] [PubMed] [Google Scholar]

- Desimone R, Duncan J. Neural mechanisms of selective visual attention. Annu Rev Neurosci. 1995;18:193–222. doi: 10.1146/annurev.ne.18.030195.001205. [DOI] [PubMed] [Google Scholar]

- Eichenbaum H. The hippocampal system and declarative memory in animals. J Cogn Neurosci. 1992;4:217–231. doi: 10.1162/jocn.1992.4.3.217. [DOI] [PubMed] [Google Scholar]

- Felleman DJ, Van Essen DC. Distributed hierarchical processing in the primate visual cortex. Cereb Cortex. 1991;1:1–47. doi: 10.1093/cercor/1.1.1-a. [DOI] [PubMed] [Google Scholar]

- Fisher RA. Statistical methods for research workers. London: Oliver and Boyd; 1950. [Google Scholar]

- Friston KJ, Holmes AP, Worsley KJ, Poline J, Frith CD, Frackowiak RSJ. Statistical parametric maps in functional imaging: a general linear approach. Hum Brain Mapp. 1995;2:189–210. [Google Scholar]

- Friston KJ, Fletcher P, Josephs O, Holmes A, Rugg MD, Turner R. Event-related fMRI: characterizing differential responses. Neuroimage. 1998;7:30–40. doi: 10.1006/nimg.1997.0306. [DOI] [PubMed] [Google Scholar]

- Friston KJ, Penny W, Phillips C, Kiebel S, Hinton G, Ashburner J. Classical and Bayesian inference in neuroimaging: theory. Neuroimage. 2002;16:465–483. doi: 10.1006/nimg.2002.1090. [DOI] [PubMed] [Google Scholar]

- Geusebroek JM, Burghouts GJ, Smeulders AWM. The Amsterdam library of object images. Int J Comput Vis. 2005;61:101–112. [Google Scholar]

- Goodale MA, Westwood DA, Milner AD. Two distinct modes of control for object-directed action. Prog Brain Res. 2004;144:131–144. doi: 10.1016/s0079-6123(03)14409-3. [DOI] [PubMed] [Google Scholar]

- Greenhouse GW, Geisser S. On methods in the analysis of repeated measures designs. Psychometrika. 1959;49:95–112. [Google Scholar]

- Johnson JD, Muftuler LT, Rugg MD. Multiple repetitions reveal functionally and anatomically distinct patterns of hippocampal activity during continuous recognition memory. Hippocampus. 2008;18:975–980. doi: 10.1002/hipo.20456.

- Josephs O, Henson RN. Event-related functional magnetic resonance imaging: modelling, inference and optimization. Philos Trans R Soc Lond B Biol Sci. 1999;354:1215–1228. doi: 10.1098/rstb.1999.0475.

- Kentros CG, Agnihotri NT, Streater S, Hawkins RD, Kandel ER. Increased attention to spatial context increases both place field stability and spatial memory. Neuron. 2004;42:283–295. doi: 10.1016/s0896-6273(04)00192-8.

- Lazar NA, Luna B, Sweeney JA, Eddy WF. Combining brains: a survey of methods for statistical pooling of information. Neuroimage. 2002;16:538–550. doi: 10.1006/nimg.2002.1107.

- Livingstone M, Hubel D. Segregation of form, color, movement and depth: anatomy, physiology and perception. Science. 1988;240:740–749. doi: 10.1126/science.3283936.

- Meiser T, Bröder A. Memory for multidimensional source information. J Exp Psychol Learn Mem Cogn. 2002;28:116–137. doi: 10.1037/0278-7393.28.1.116.

- Meiser T, Sattler C. Boundaries of the relation between conscious recollection and source memory for perceptual details. Conscious Cogn. 2007;16:189–210. doi: 10.1016/j.concog.2006.04.003.

- Meiser T, Sattler C, von Hecker U. Metacognitive inferences in memory judgments: The role of perceived differences in item recognition. Q J Exp Psychol. 2007;60:1015–1040. doi: 10.1080/17470210600875215.

- Meiser T, Sattler C, Weisser K. Binding of multidimensional context information as a distinctive characteristic of remember judgments. J Exp Psychol Learn Mem Cogn. 2008;34:32–49. doi: 10.1037/0278-7393.34.1.32.

- Mishkin M, Ungerleider LG. Object vision and spatial vision: two cortical pathways. Trends Neurosci. 1983;6:414–417.

- Morris RG, Frey U. Hippocampal synaptic plasticity: role in spatial learning or the automatic recording of attended experience? Philos Trans R Soc Lond B Biol Sci. 1997;352:1489–1503. doi: 10.1098/rstb.1997.0136.

- Moscovitch M. Memory and working with memory: a component process model based on modules and central systems. J Cogn Neurosci. 1992;4:257–267. doi: 10.1162/jocn.1992.4.3.257.

- Pardo JV, Pardo PJ, Janer KW, Raichle ME. The anterior cingulate cortex mediates processing selection in the Stroop attentional conflict paradigm. Proc Natl Acad Sci U S A. 1990;87:256–259. doi: 10.1073/pnas.87.1.256.

- Smith AP, Henson RN, Dolan RJ, Rugg MD. fMRI correlates of the episodic retrieval of emotional contexts. Neuroimage. 2004;22:868–878. doi: 10.1016/j.neuroimage.2004.01.049.

- Staresina BP, Davachi L. Selective and shared contributions of the hippocampus and perirhinal cortex to episodic item and associative encoding. J Cogn Neurosci. 2008;20:1478–1489. doi: 10.1162/jocn.2008.20104.

- Starns JJ, Hicks JL. Source dimensions are retrieved independently in multidimensional monitoring tasks. J Exp Psychol Learn Mem Cogn. 2005;31:1213–1220. doi: 10.1037/0278-7393.31.6.1213.

- Uncapher MR, Wagner AD. Posterior parietal cortex and episodic encoding: insights from fMRI subsequent memory effects and dual attention theory. Neurobiol Learn Mem. 2009;91:139–154. doi: 10.1016/j.nlm.2008.10.011.

- Uncapher MR, Otten LJ, Rugg MD. Episodic encoding is more than the sum of its parts: an fMRI investigation of multifeatural contextual encoding. Neuron. 2006;52:547–556. doi: 10.1016/j.neuron.2006.08.011.

- Wolfe JM. Guided search 2.0: a revised model of visual search. Psychon Bull Rev. 1994;1:202–238. doi: 10.3758/BF03200774.

- Worsley KJ, Marrett S, Neelin P, Vandal AC, Friston KJ, Evans AC. A unified statistical approach to determining statistically significant signals in images of cerebral activation. Hum Brain Mapp. 1996;4:58–73. doi: 10.1002/(SICI)1097-0193(1996)4:1<58::AID-HBM4>3.0.CO;2-O.

- Zeki S, Marini L. Three cortical stages of colour processing in the human brain. Brain. 1998;121:1669–1685. doi: 10.1093/brain/121.9.1669.