Abstract

The control exerted on observing responses by a stimulus associated with an extinction component (S−) was determined as a function of the stimulus's temporal relation to the onset of the reinforcement component. Lever pressing by rats was reinforced on a mixed random-interval extinction schedule. Each press on a second lever produced the stimuli associated with the component of the schedule in effect. Experiment 1 used a response-dependent clock procedure in which different stimuli were associated with successive portions of an extinction component of variable duration. When a single S− was presented throughout the extinction component, the rate of observing remained relatively constant across this component. With the response-dependent clock procedure, observing responses increased from the beginning to the end of the extinction component. This result was replicated in Experiment 2, which used a similar clock procedure but kept the number of stimuli per extinction component constant. We conclude that the S− can function as a conditioned reinforcer, a neutral stimulus, or an aversive stimulus, depending on its temporal location within the extinction component.

Keywords: observing responses, conditioned reinforcement, clock stimuli, lever pressing, rats

An observing response is an operant that exposes an organism to discriminative stimuli without affecting the availability of primary reinforcement. Wyckoff (1952, 1969) described a procedure to study observing behavior in which pigeons were exposed to a mixed schedule of reinforcement whose fixed-interval (FI) 30-s and extinction (EXT) components alternated randomly. He used an experimental chamber containing a food tray, a response key, and a pedal located on the floor of the chamber below the key. The response key was illuminated with a white light during both FI and EXT unless the pigeons stepped on the pedal (the observing response), which changed the keylight to red during FI and to green during EXT. Key pecks were reinforced according to the FI schedule independently of presses on the pedal. Wyckoff also exposed another group of pigeons to a control condition in which stepping on the pedal changed the color of the key, but the color was not correlated with the schedule in effect. He reported that significantly more observing responses were emitted when the stimuli were correlated with the component of the mixed schedule in effect than when they were not. Therefore, Wyckoff demonstrated that the establishment of observing responses depends on the production of discriminative stimuli.

Given that observing responses result in the presentation of originally neutral stimuli without affecting reinforcer delivery, the observing procedure has become important for the study of conditioned reinforcement (Lieving, Reilly, & Lattal, 2006; Shahan, 2002; Shahan, Podlesnik, & Jimenez-Gomez, 2006). Unlike previous procedures for studying conditioned reinforcement, such as chained and second-order schedules (Kelleher, 1966; Kelleher & Gollub, 1962), the observing-response procedure separates the food-producing response from the response that produces the conditioned reinforcer (cf. Dinsmoor, 1983). However, the fact that observing responses produce both stimuli associated with reinforcement delivery (S+) and stimuli associated with omission of the reinforcer (S−) raises several issues of interpretation.

A common point of agreement among explanations of conditioned reinforcement is that the conditions responsible for establishing a stimulus as a conditioned stimulus in Pavlovian conditioning parallel the conditions responsible for establishing a stimulus as a conditioned reinforcer (Fantino, 1977; Kelleher & Gollub, 1962). In line with this explanation, in the observing procedure the stimulus associated with reinforcement delivery (S+) should function as a conditioned reinforcer because of its association with reinforcement. In contrast, the stimulus associated with EXT, by being associated with the omission of reinforcement, should function as a conditioned aversive stimulus or conditioned punisher (e.g., Mulvaney, Dinsmoor, Jwaideh, & Hughes, 1974). Although several studies of observing have supported the notion that the S+ functions as a conditioned reinforcer and the S− as an aversive stimulus (see Dinsmoor, 1983, and Fantino, 1977, for reviews), some studies have reported incongruent findings regarding the function of the S−.

Perone and Baron (1980) exposed human participants to a mixed variable-interval (VI) EXT schedule in which pulling a plunger resulted in monetary reinforcement. The participants could press two concurrently available keys to produce the discriminative stimuli. Pressing one key produced the S+ or the S− depending on the ongoing component of the mixed schedule. Pressing the other key only produced the S+ during the VI component and had no consequences during the EXT component. Perone and Baron found that the participants preferred the key that produced both stimuli over the key that produced only the S+. This finding, suggesting that the S− functioned as a conditioned reinforcer, was supported by a subsequent manipulation in which Perone and Baron reported that presentations of S− alone sustained observing behavior.

Attempts have been made to reconcile Perone and Baron's (1980) findings with an associative account of conditioned reinforcement by identifying an artifact in their procedure. Fantino and Case (1983) and Case, Fantino, and Wixted (1985) conducted a series of experiments showing that the S− reinforces observing only when the response producing the primary reinforcer involves considerable effort, for example, pulling a plunger (see also Dinsmoor, 1983). However, Perone and Kaminski (1992) noted an important difference between Perone and Baron's procedure and those used by Fantino and Case and by Case et al. Whereas Perone and Baron gave minimal instructions about the observing procedure, Fantino and Case and Case et al. explicitly described the contingencies in effect during the EXT component (i.e., that the reinforcer could not be produced while the S− was presented).

Perone and Kaminski (1992) demonstrated that when the S− was presented without explicit instructions that an EXT component was in effect, the participants produced the S− more often than a stimulus uncorrelated with the availability of reinforcement. In contrast, when the S− was presented with instructions stating that the reinforcer was unavailable during EXT, the uncorrelated stimulus was preferred over the S−. Perone and Kaminski suggested that this finding poses a challenge for Pavlovian accounts of conditioned reinforcement. However, their results are consistent with an information account of conditioned reinforcement (see Hendry, 1969). According to this view, both S+ and S− have a reinforcing effect because both reduce uncertainty about the presence or absence of reinforcement. Nevertheless, an explanation in terms of information is unconvincing, given that extensive research with the observing procedure has shown that the information account cannot explain the findings in the conditioned reinforcement literature (see Dinsmoor, 1983; Fantino, 1977). Therefore, rather than supporting an information account of conditioned reinforcement, Perone and Kaminski's findings suggest that the function of the S− in observing procedures is not yet fully understood. Furthermore, the fact that the S− reinforced observing only when the contingencies operating in the observing procedure were not described to the subjects suggests that an accidental relation between the S− and the reinforcer may have been established.

Previous experiments have shown that the function of the S− may be related to the events occurring during the reinforcement component. In one study, Allen and Lattal (1989) exposed pigeons to a mixed VI EXT schedule on one key. Pecks on a second key produced the S+ or the S− depending on the ongoing component. Allen and Lattal found that observing could be maintained by the S− only if food-producing responses during the EXT component reduced the reinforcement frequency during the reinforcement component. They concluded that the S− acquired reinforcing properties because of this remote contingency on reinforcement during the reinforcement component.

In another study, Escobar and Bruner (2008) exposed two groups of rats to a mixed random-interval (RI) 20-s EXT schedule that reinforced presses on one lever. Pressing a second lever produced the S− or the S+ depending on the ongoing component. For one group of rats, adding an unsignaled period, during which presses on either lever had no consequences, between the end of the EXT component and the beginning of the reinforcement component produced a slight decrease in observing-response rate during the EXT component. For the other group, the same unsignaled period added between the end of the RI component and the beginning of the EXT component had no systematic effect on observing rate during the EXT component. This experiment suggests that the effects of the S− on observing are linked to its temporal relation with the reinforcement component.

One problem with the procedure for studying observing responses is that the definition of a stimulus as S+ or S− relies solely on the correlation of the stimulus with the ongoing component of a mixed schedule. This definition ignores the obtained temporal relation between the S− and the reinforcer delivery. The importance of the temporal relation between the stimulus and the reinforcer in the establishment of a previously neutral stimulus as a conditioned reinforcer has been explored extensively using different procedures (e.g., Bersh, 1951; Fantino, 1977, 2001; Jenkins, 1950). One related finding is that the stimulus may acquire multiple functions as aversive, neutral or reinforcing as the stimulus–reinforcer interval is varied (Dinsmoor, Lee, & Brown, 1986; Palya, 1993; Palya & Bevins, 1990; Segal, 1962; Shull, 1979).

In observing procedures, however, the interval between the S− and either the S+ or reinforcer delivery varies unsystematically between a few seconds and the duration of the EXT component. Such variation in the temporal relation between the S− and the reinforcer may be responsible for the apparent contradiction regarding the effects of S− on observing responses in the studies in which mixed schedules of reinforcement were used. For example, an S− occurring repeatedly at the end of the EXT component may occur in temporal contiguity with reinforcement delivery, and thus may acquire reinforcing properties. On the other hand, if the S− occurs consistently at the beginning of the EXT component it would signal long periods without reinforcement and consequently could function as an aversive stimulus.

The present experiments determined the function of the S− in observing procedures by varying the temporal relation between the S− and the onset of the reinforcement component. We report two experiments in which the observing procedure was modified to include different stimuli during the EXT component, each with a different temporal relation with the reinforcement component.

EXPERIMENT 1

In the present experiment, a variation of the observing procedure that Wyckoff (1969) described was used to control the temporal location of the S− across the EXT component. The variation consisted of adding different stimuli (S−s), presented sequentially during successive subintervals of the EXT component; that is, observing responses produced an added clock (Ferster & Skinner, 1957). This procedure allowed us to control the minimum and maximum intervals between each S− and the reinforcement component. In addition, the effects of different intervals between the S− and the reinforcement component could be assessed within subjects. Therefore, the purpose of the present experiment was to use an observing procedure with an added clock during the EXT component to determine the effects of the temporal relation between the S− and the reinforcement component on the frequency of observing responses.

Although procedures with an added clock similar to the one used in the present experiment have been reported in the observing literature, in these studies the typical observing procedure consisting of a mixed schedule of food reinforcement was not used. In one study, Hendry and Dillow (1966) reinforced key pecking by pigeons on an FI 6-min reinforcement schedule. Pecks on a second key produced one of three different stimuli, each stimulus associated with a successive 2-min subinterval of the FI (i.e., an optional clock). Hendry and Dillow found that while the number of key pecks for food within the interreinforcer interval (IRI) increased from the preceding to the subsequent reinforcer delivery, observing responses increased from the first to the second subinterval of the IRI and decreased in the third subinterval.

According to Kendall (1972), observing responses in Hendry and Dillow's (1966) experiment could have been sustained exclusively by the stimulus closest in time to the reinforcer delivery. Therefore, Kendall systematically replicated their study by reinforcing key pecking with food on an FI 3-min schedule and adding an optional clock that produced three stimuli associated with successive 1-min subintervals of the IRI. In successive conditions Kendall removed either the two stimuli at the beginning of the IRI or the stimulus at the end of the IRI. Kendall found that the number of food-producing responses increased from the preceding reinforcer delivery to the subsequent reinforcer in all conditions. When the observing responses produced the three stimuli of the optional clock or only the stimulus closest to the reinforcer delivery, the number of observing responses increased from the first to the second subinterval of the IRI and decreased in the third subinterval, thus replicating Hendry and Dillow's results. In contrast, when the stimulus closest to the subsequent reinforcer was removed, the number of observing responses was close to zero. Kendall concluded that only the stimulus occurring in temporal contiguity with the reinforcer delivery functioned as a conditioned reinforcer (see also Gollub, 1977). However, it is not possible to determine whether the occurrence of observing responses was determined by the clock stimuli within the IRI or by the temporal discrimination produced by the FI schedule of reinforcement.

Palya (1993) exposed pigeons to a fixed-time (FT) 60-s schedule of food reinforcement. Pecks on the right key produced the clock stimuli on the center key, and pecks on the left key ended stimulus presentation. To ensure that key pecking occurred, pecks on the center key occasionally produced food on a VI 10-s schedule arranged conjointly with the FT schedule. Palya found that the number of observing responses was low from the preceding reinforcer until halfway through the IRI and then increased gradually until the subsequent reinforcer delivery. For most subjects, observing responses decreased slightly during the last subinterval of the IRI. Palya concluded that stimuli occurring in proximity to the preceding reinforcer delivery functioned as aversive stimuli and that stimuli occurring in proximity to the subsequent reinforcer functioned as conditioned reinforcers. As in Hendry and Dillow's (1966) experiment, Palya did not expose the pigeons to conditions in which only the stimulus closest to the reinforcer delivery was presented. Therefore, it is not clear whether observing responses were sustained by the stimuli occurring across the IRI or only by the stimulus occurring in temporal contiguity with the reinforcer delivery.

In the present experiment the optional clock procedure was systematically replicated during the EXT component of a mixed schedule of reinforcement, which is the most common free-operant procedure used to study observing responses. To overcome the limitations of the previous studies with optional clocks, the EXT component was of variable duration. In order to determine whether the temporal distribution of observing responses during the EXT component was controlled by the different stimuli presented during the EXT component or only by S+ presentations, a control group of rats was exposed to a procedure in which a single S− was programmed throughout the EXT component.

Method

Subjects

Six experimentally naive male Wistar rats served as subjects. The rats were 3 months old at the beginning of the experiment and were housed individually with free access to water. The access to food was restricted throughout the experiment to keep the rats at 80% of their ad libitum weights.

Apparatus

Three experimental chambers (Med Associates Inc. model ENV-001) were used, each equipped with a food tray located at the center of the front panel and two levers, one on each side of the food tray. A minimum force of 0.15 N was required to operate the lever switches. The chambers were also equipped with a house light located on the rear panel, a sonalert (Mallory SC 628) that produced a 2900-Hz 70-dB tone, and a bulb with a plastic cover above each lever that produced a diffuse light. A pellet dispenser (Med Associates Inc. model ENV-203) delivered 25-mg food pellets, made by remolding pulverized rat food, into the food tray. Each chamber was placed within a sound-attenuating cubicle equipped with a white-noise generator and a fan. The experiment was controlled and data were recorded from an adjacent room with an IBM-compatible computer connected through an interface (Med Associates Inc. model SG-503), using Med-PC 2.0 software.

Procedure

The right lever was removed from the chamber, and for five sessions each press on the left lever was reinforced with a food pellet. During the next 20 sessions the schedule value was gradually increased to RI 20 s. Each session ended after 1 hr or when 30 food pellets had been delivered, whichever occurred first. On the basis of response rates during these 20 sessions, the rats were assigned to two groups of 3 rats each, so that each group included one rat with a high, one with an intermediate, and one with a low response rate.

Discrimination training

One group of rats was exposed to a multiple schedule of reinforcement in which reinforcement and EXT components alternated. The reinforcement component lasted 20 s and consisted of a modified RI schedule in which only one food delivery was scheduled per component, at a random temporal location. RI schedules consist of repetitive time cycles in which a fixed probability of reinforcement is assigned to the first response within each cycle. In the present procedure the reinforcement component consisted of four repetitive 5-s cycles. Instead of a fixed probability being assigned to the first response in each cycle, one of the four cycles was selected at random, and a probability of 1.0 was assigned to the first response within that cycle, which was reinforced with one food pellet. No other responses in that cycle or in the other three cycles were reinforced. The reinforcement component was signaled with a blinking light (S+). Each EXT-component duration was selected without replacement from a list that included durations of 20, 40, 60, 80, and 100 s, six times each; thus, the EXT component averaged 60 s. Each EXT component was divided into 20-s subintervals, so that one subinterval occurred when the EXT component lasted 20 s, two when it lasted 40 s, and three, four, and five when it lasted 60, 80, and 100 s, respectively. The subintervals of the EXT component are numbered 5 to 1 from the beginning to the end of EXT, counting back from the reinforcement component, so that Subinterval 1 always immediately preceded it. The subintervals were signaled with tones of increasing intermittency. During Subinterval 5 a constant tone was presented (S−5), and during Subinterval 4 the on–off intermittency of the tone was 2-1 s (S−4). During Subintervals 3, 2, and 1, the on–off intermittencies were 1-1, 0.5-1, and 0.1-1 s, respectively (S−3, S−2, and S−1).
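The scheduling rules just described can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' Med-PC program: the function names, the use of `random.Random`, and the ASCII labels `S-5` to `S-1` (standing for S−5 to S−1) are our own. Drawing durations without replacement from a list that contains each value six times is treated as shuffling that list.

```python
import random

# EXT durations (s); each appears six times among the 30 EXT components of a session.
EXT_DURATIONS = [20, 40, 60, 80, 100]
SUBINTERVAL_S = 20
CYCLE_S = 5      # four 5-s cycles make up the 20-s reinforcement component
N_CYCLES = 4

# Tone (on, off) in seconds for each clock stimulus; None marks the constant tone.
CLOCK_TONES = {"S-5": None, "S-4": (2, 1), "S-3": (1, 1), "S-2": (0.5, 1), "S-1": (0.1, 1)}


def ext_sequence(rng):
    """Order of the 30 EXT durations in one session (sampling without replacement)."""
    durations = EXT_DURATIONS * 6
    rng.shuffle(durations)
    return durations


def subinterval_labels(duration):
    """Clock labels from the start to the end of one EXT component.

    Subintervals are counted back from the reinforcement component, so S-1
    always comes last and a 40-s component contains only S-2 and S-1.
    """
    n = duration // SUBINTERVAL_S
    return [f"S-{k}" for k in range(n, 0, -1)]


def reinforced_press(press_times, selected_cycle):
    """Time of the single reinforced left-lever press, or None.

    selected_cycle (0-3) would be drawn once per component, e.g. with
    rng.randrange(N_CYCLES). Only the first press inside that 5-s cycle is
    reinforced; every other press in the component goes unreinforced.
    """
    start = selected_cycle * CYCLE_S
    in_cycle = [t for t in sorted(press_times) if start <= t < start + CYCLE_S]
    return in_cycle[0] if in_cycle else None


rng = random.Random(1)
session = ext_sequence(rng)
print(sum(session) / len(session))                           # 60.0
print(subinterval_labels(60))                                # ['S-3', 'S-2', 'S-1']
print(reinforced_press([1.0, 6.2, 7.0, 12.0], selected_cycle=1))  # 6.2
```

If no press falls within the selected cycle, the sketch returns None, which mirrors the description that no other response in the component was reinforced.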

This condition was in effect for 30 sessions, each consisting of 30 reinforcement and 30 EXT components. The other group of rats (control group) was exposed to a procedure that differed from the clock-procedure group only in that the same S− (a constant tone) was presented throughout the subintervals of the EXT component.

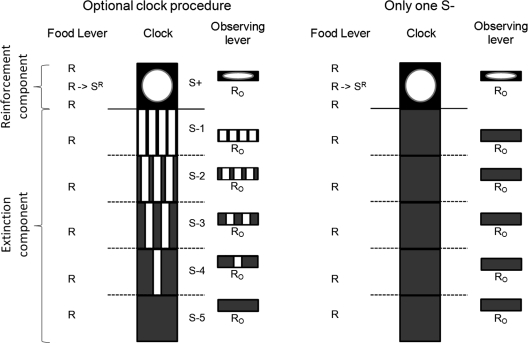

Observing procedure

For both groups of rats, the right lever was installed in the chamber and the multiple schedule of reinforcement was replaced with a mixed schedule. Each press on the right lever during the reinforcement component produced the S+ (blinking light) for 5 s. For the 3 rats that had been exposed to discrimination training with different S−s during the EXT component, each press on the right lever during the EXT component produced the stimulus associated with the current subinterval (S−5 to S−1 from the beginning to the end of the EXT component); that is, these rats were exposed to an optional clock. For the 3 rats in the control group, each press on the right lever during the EXT component produced only the constant tone for 5 s. For all subjects, stimuli were interrupted whenever a change in subinterval or component occurred. In both components of the mixed schedule, responses on the observing lever during stimulus presentations had no programmed consequences. All other variables were kept as in the discrimination-training condition. The basic elements of the optional-clock procedure and the control-group procedure are shown in Figure 1, in which time runs from bottom to top. The middle column shows the clock in each subinterval of the EXT component and in the reinforcement component. The clock stimuli were not presented unless an observing response occurred on the right lever. Responses on the left lever produced one food delivery at a random temporal location during the reinforcement component. The dotted horizontal lines indicate that the clock could begin at any subinterval of the EXT component, and the solid horizontal line shows the change from the EXT to the reinforcement component. For the control group, the clock consisted of only one stimulus during the EXT component and one during the reinforcement component.
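The consequences of a right-lever press under these rules can be sketched as follows. This is a hypothetical illustration, assuming press times are measured in seconds from component onset; the function names and the `clock` flag (True for the optional-clock rats, False for the control group) are ours, and the ASCII labels `S+`, `S-`, and `S-5` to `S-1` stand for the stimuli in the text. Presses during an ongoing presentation are ignored, and each 5-s presentation is cut short at the next subinterval or component boundary.

```python
STIM_S = 5.0   # nominal stimulus duration after an observing press
SUB_S = 20.0   # EXT subinterval length
RI_S = 20.0    # reinforcement-component length


def stimulus_for(component, t, ext_duration=None, clock=True):
    """Label and hard end time of the stimulus produced by a press at time t."""
    if component == "RI":
        return "S+", RI_S                      # blinking light, ends with the component
    n = int(ext_duration // SUB_S)             # number of subintervals in this EXT component
    idx = int(t // SUB_S)                      # 0 = first subinterval of the component
    label = f"S-{n - idx}" if clock else "S-"  # control rats always get the constant tone
    return label, (idx + 1) * SUB_S            # interrupted at the subinterval boundary


def observing_presentations(component, press_times, ext_duration=None, clock=True):
    """List of (label, onset, offset) for the stimuli actually presented."""
    shown = []
    busy_until = float("-inf")
    for t in sorted(press_times):
        if t < busy_until:
            continue                           # press during a stimulus: no consequence
        label, boundary = stimulus_for(component, t, ext_duration, clock)
        offset = min(t + STIM_S, boundary)
        shown.append((label, t, offset))
        busy_until = offset
    return shown


# A 60-s EXT component for an optional-clock rat:
print(observing_presentations("EXT", [1.0, 3.0, 18.0, 41.0], ext_duration=60))
# [('S-3', 1.0, 6.0), ('S-3', 18.0, 20.0), ('S-1', 41.0, 46.0)]
```

The press at 3.0 s produces nothing because S−3 is still on, and the presentation started at 18.0 s ends at 20.0 s when Subinterval 3 gives way to Subinterval 2.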

Fig 1.

A diagram of the core elements of the procedure. The squares in the left diagram exemplify the optional clock scheduled during the five subintervals of the EXT component and during the reinforcement component. The different intermittencies of the tone are illustrated with vertical bars within each subinterval; the reinforcement-component stimulus is illustrated with an unfilled circle to distinguish it. Responses on the left lever produced reinforcement during the reinforcement component and had no consequences during the EXT component. The clock was not available unless an observing response (RO) occurred on the right lever, in which case the corresponding stimulus was presented for 5 s. The dotted horizontal lines mark the points at which the clock could start. The right diagram illustrates the procedure with only one S− during the subintervals of the EXT component.

This condition was in effect for 30 sessions, each consisting of 30 reinforcement and 30 EXT components. Throughout the experiment, sessions were conducted daily, 7 days a week, always at the same hour.

Results

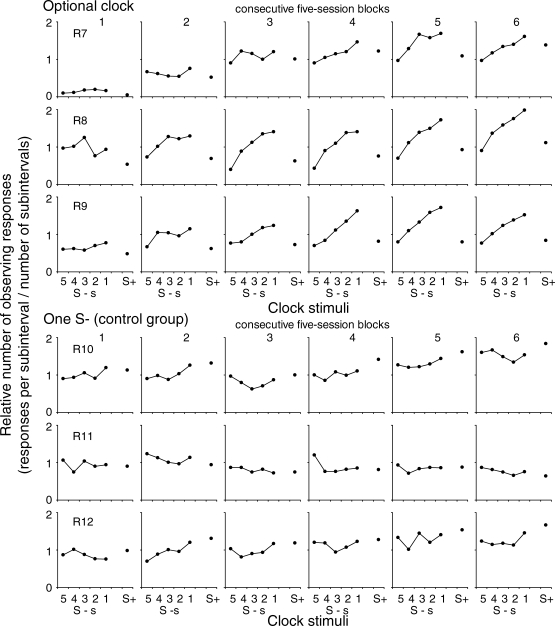

Figure 2 shows the mean relative number of observing responses within each subinterval of the EXT component and during the reinforcement component for each subject across six successive blocks of five sessions. Because the EXT component varied in duration, subintervals farther from the reinforcement component occurred less often. Therefore, the number of observing responses within each subinterval of the EXT component was corrected by dividing it by the number of occurrences of that subinterval (30, 24, 18, 12, and 6 occurrences for Subintervals 1, 2, 3, 4, and 5, respectively). Likewise, the number of observing responses during the reinforcement component was divided by the 30 presentations of that component. The upper panels show these data for the subjects exposed to the optional clock, and the lower panels show the data for the control group with only one S−. For the subjects exposed to the optional clock, the number of observing responses did not vary systematically across the EXT component during the first blocks of sessions. During the last blocks of sessions, the number of observing responses increased across the EXT component, from the preceding to the subsequent reinforcement component, and then decreased during the reinforcement component. For the rats in the control group, the number of observing responses did not vary systematically across the subintervals of the EXT component and the reinforcement component.
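The correction described above amounts to dividing each count by how often its subinterval occurred. A minimal sketch, assuming the session's list of EXT durations is available; the function names and the raw counts in the example are hypothetical and serve only to illustrate the arithmetic.

```python
from collections import Counter

SUB_S = 20  # EXT subinterval length (s)


def subinterval_occurrences(ext_durations):
    """How many times each subinterval (1 = closest to reinforcement) occurred."""
    occ = Counter()
    for d in ext_durations:
        for k in range(1, d // SUB_S + 1):
            occ[k] += 1
    return occ


def relative_observing(responses_by_sub, responses_in_ri, ext_durations, n_ri=30):
    """Observing responses per occurrence of each subinterval and of the RI component."""
    occ = subinterval_occurrences(ext_durations)
    rel = {k: responses_by_sub.get(k, 0) / occ[k] for k in sorted(occ)}
    rel["RI"] = responses_in_ri / n_ri
    return rel


session = [20, 40, 60, 80, 100] * 6
print(dict(sorted(subinterval_occurrences(session).items())))
# {1: 30, 2: 24, 3: 18, 4: 12, 5: 6}

# Hypothetical raw counts, for illustration only:
print(relative_observing({1: 60, 2: 48, 3: 36, 4: 24, 5: 12}, 15, session))
```

With these made-up counts every subinterval yields 2.0 responses per occurrence, showing how raw totals that fall from Subinterval 1 to Subinterval 5 can correspond to a flat relative distribution.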

Fig 2.

Mean relative number of observing responses during successive subintervals of the EXT component and during the reinforcement component across six successive five-session blocks. The upper panels show the data for the subjects that were exposed to the optional clock procedure and the lower panels show the data for the subjects in the control group.

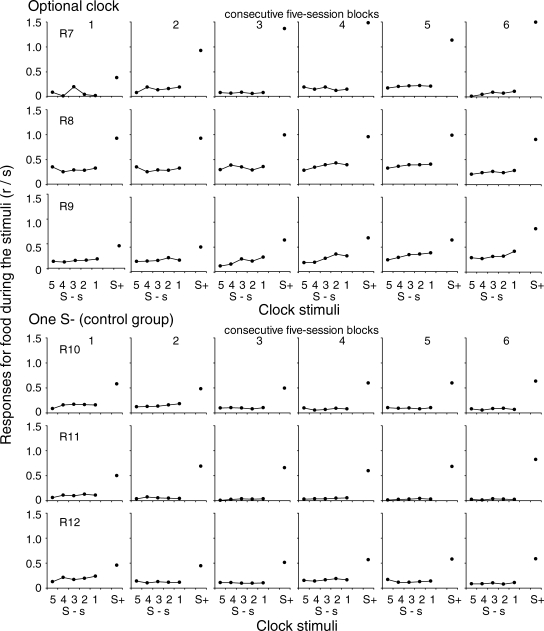

Figure 3 shows the mean rate of responses for food during each subinterval of the EXT component and during the reinforcement component across six successive five-session blocks, in the same format as Figure 2. For the rats exposed to the optional-clock procedure, the rate of responses for food did not vary systematically across the EXT component during the first blocks of sessions. During the last blocks of sessions, the rate of responses for food increased slightly from Subinterval 5 to Subinterval 1 and increased abruptly during the reinforcement component. For the rats in the control group, across all six blocks of sessions, the rate of responses for food did not vary systematically across the subintervals of the EXT component and was substantially higher during the reinforcement component.

Fig 3.

Mean rate of food responses during the stimuli for each successive subinterval of the EXT component and during the reinforcement component across six successive five-session blocks. See description of Figure 2.

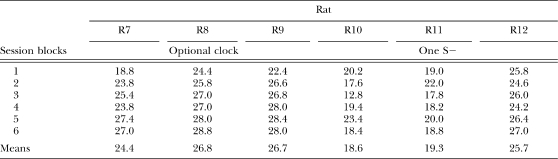

Table 1 shows, for each rat, the mean number of reinforcers obtained per session across blocks of five sessions. Descriptively, the number of obtained reinforcers was lower for the rats exposed to the single-S− procedure than for those exposed to the optional-clock procedure. However, a one-way ANOVA showed that the effect of procedure on the number of reinforcers obtained per session was not significant, F(1, 4) = 3.97, p > .05.

Table 1.

Mean number of reinforcers per session during each consecutive five-session block for the rats exposed to the optional-clock procedure and to the single-S− procedure in Experiment 1.

| Session block | Optional clock: R7 | Optional clock: R8 | Optional clock: R9 | One S−: R10 | One S−: R11 | One S−: R12 |
|---|---|---|---|---|---|---|
| 1 | 18.8 | 24.4 | 22.4 | 20.2 | 19.0 | 25.8 |
| 2 | 23.8 | 25.8 | 26.6 | 17.6 | 22.0 | 24.6 |
| 3 | 25.4 | 27.0 | 26.8 | 12.8 | 17.8 | 26.0 |
| 4 | 23.8 | 27.0 | 28.0 | 19.4 | 18.2 | 24.2 |
| 5 | 27.4 | 28.0 | 28.4 | 23.4 | 20.0 | 26.4 |
| 6 | 27.0 | 28.8 | 28.0 | 18.4 | 18.8 | 27.0 |
| Means | 24.4 | 26.8 | 26.7 | 18.6 | 19.3 | 25.7 |
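Assuming the ANOVA was computed on each rat's overall mean (the Means row of Table 1), the reported statistic can be reproduced with the standard library. This is our reconstruction of the analysis, not a description of the software the authors used; the function name is illustrative.

```python
from statistics import mean

# Per-rat session means from the "Means" row of Table 1.
optional_clock = [24.4, 26.8, 26.7]   # R7, R8, R9
single_s_minus = [18.6, 19.3, 25.7]   # R10, R11, R12


def one_way_anova(*groups):
    """Return (F, df_between, df_within) for a one-way between-subjects ANOVA."""
    scores = [x for g in groups for x in g]
    grand = mean(scores)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(scores) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within), df_between, df_within


F, df_b, df_w = one_way_anova(optional_clock, single_s_minus)
print(f"F({df_b}, {df_w}) = {F:.2f}")   # F(1, 4) = 3.97
# The .05 critical value of F(1, 4) is about 7.71, so p > .05.
```

That this input reproduces F(1, 4) = 3.97 suggests, but does not confirm, that the per-rat means were the unit of analysis. With only 3 rats per group the test has little power, which is worth keeping in mind when reading the descriptive difference between groups.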

Discussion

The present experiment replicated with rats the results obtained with pigeons by Hendry and Dillow (1966) and by Kendall (1972), who used an FI schedule of reinforcement with an optional clock, and by Palya (1993), who used an FT schedule. These authors reported that observing responses that produced the clock stimuli increased during the IRI and decreased prior to reinforcer delivery. Hendry and Dillow and Kendall also reported that food-producing responses increased gradually across the IRI. In the present study, the number of observing responses increased from the beginning to the end of the EXT component, reached a maximum when S−1 was produced, and decreased during the reinforcement component, when the S+ was produced. Food-producing responses increased only slightly from the beginning to the end of the EXT component and increased substantially during the reinforcement component. As in the previous studies, the decrease in observing responses during the reinforcement component could be attributed to the S+ functioning as a strong discriminative stimulus for food-producing responses. The present experiment extends the previous findings to observing procedures involving mixed schedules of reinforcement.

Kendall (1972) suggested that the stimuli associated with the subintervals of the FI closest to the preceding reinforcer had no systematic effect on observing responses because, when he removed those stimuli from the procedure, leaving only the stimuli closest to the subsequent reinforcer, the temporal distribution of observing within the IRI did not vary. Unlike Kendall, we found that when only one S− was presented during the EXT component and the S+ was presented during the reinforcement component, the number of observing responses remained constant throughout the EXT component. Therefore, the gradual increase in the number of observing responses across the EXT component when an optional clock was implemented was due to the clock stimuli during the EXT component and not only to the S+ presentation. Given the procedural differences between Kendall's study and the present experiment, the origin of this difference in results is not clear. One possibility is that the temporal discrimination produced by the FI schedule in Kendall's study may have controlled the increasing pattern of observing responses during the IRI in the absence of the clock stimuli.

A notable aspect of the present results is the difference in the number of observing responses between the optional-clock procedure and the single-S− procedure. The number of observing responses during the last subintervals of the EXT component was higher with the clock procedure than with the single-S− procedure, whereas during the first subintervals it was lower with the optional-clock procedure than with the single-S− procedure. During the middle subintervals, the number of observing responses was similar with both procedures. Thus, relative to a baseline in which one stimulus occupies the entire EXT component, a stimulus in a relatively fixed temporal location may increase, decrease, or have no effect on the number of observing responses. These findings provide evidence of the multiple functions of the S− as reinforcing, neutral, and aversive.

EXPERIMENT 2

A methodological problem associated with using serial stimuli during an IRI is that if the duration of the IRI is kept constant, the temporal discrimination generated by food delivery hampers the interpretation of the effects of the serial stimuli. On the other hand, if an IRI of variable duration is used, the number of stimuli in the sequence varies. For example, in Experiment 1 the stimuli closest in time to the onset of the reinforcement component occurred more frequently than those farther from it. Therefore, rather than resulting from differences in the temporal location of the stimuli, the increase in observing responses across the subintervals of the EXT component in Experiment 1 could have resulted from the different numbers of times each stimulus was paired with the reinforcement component (cf. Bersh, 1951, Experiment 2). Experiment 2 was conducted to rule out the number of pairings as an explanation for the results of the previous experiment. The observing procedure with an added optional clock used in Experiment 1 was used again, but all five clock stimuli were scheduled in every EXT component, so the number of programmed stimulus/reinforcement-component pairings was held constant. To diminish the temporal discrimination produced by food delivery, an interval of variable duration without programmed consequences was added before the serial stimuli became available.

Method

Subjects and Apparatus

The subjects were 6 male Wistar rats maintained at 80% of their free-feeding weights. The rats had previous experience with an observing procedure involving a mixed RI EXT schedule of reinforcement but no experience with serial-stimuli procedures. The rats were housed in individual home cages with free access to water. The experimental chambers were those used in Experiment 1.

Procedure

Because the rats had previous experience with observing procedures, they were exposed without further training to a mixed schedule of reinforcement on the left lever in which a 20-s reinforcement component alternated with an EXT component averaging 140 s. During the reinforcement component, the same modified RI schedule used in Experiment 1 was in effect; thus, a single food pellet was scheduled at a varying temporal location within each reinforcement component. During the reinforcement component, each press on the right lever produced a blinking light for 5 s.

The duration of each EXT component was randomly selected without replacement from a list containing the durations 100, 120, 140, 160, and 180 s, six times each. The last 100 s of each EXT component were divided into five 20-s subintervals. For 3 rats, each press on the right lever during these subintervals produced a 5-s stimulus correlated with the current subinterval; the stimuli were tones with different intermittencies (see Experiment 1). For the other 3 rats (control group), each press on the right lever throughout the last 100 s of the EXT component produced only the 5-s constant tone. For all rats, presses on either lever had no programmed consequences during the initial portion of the EXT component (0, 20, 40, 60, or 80 s) that preceded the availability of the stimuli. For all subjects, stimuli were interrupted whenever the subinterval or the component changed. This procedure was in effect for 20 sessions, each consisting of 30 reinforcement and 30 EXT components. Sessions were conducted daily, 7 days a week, always at the same hour.
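The timing rules of the EXT component can be summarized as a small function. This is a minimal sketch under stated assumptions, not the authors' control program: the function names and the stimulus labels ("clock tone k") are hypothetical, the mapping of particular intermittencies to subintervals is not modeled, and a produced stimulus is assumed to last 5 s unless cut off by a subinterval or component change, as described above.

```python
import random

EXT_DURATIONS_S = (100, 120, 140, 160, 180)  # listed EXT durations (s)
REPEATS = 6              # each duration appears six times per session
STIMULUS_PERIOD_S = 100  # stimuli are available only in the last 100 s
SUBINTERVAL_S = 20       # five 20-s subintervals
STIMULUS_S = 5           # nominal duration of a produced stimulus (s)


def session_ext_durations(rng):
    """Order the 30 EXT-component durations (sampling without replacement)."""
    pool = list(EXT_DURATIONS_S) * REPEATS
    rng.shuffle(pool)
    return pool


def observing_consequence(ext_duration_s, t_s, clock_group):
    """Consequence of a right-lever press at t_s seconds after EXT onset.

    Returns None during the initial period without programmed consequences;
    otherwise (subinterval, stimulus_label, stimulus_offset_s).
    """
    if not 0 <= t_s < ext_duration_s:
        raise ValueError("t_s must fall within the EXT component")
    onset = ext_duration_s - STIMULUS_PERIOD_S  # 0, 20, 40, 60, or 80 s
    if t_s < onset:
        return None
    subinterval = int((t_s - onset) // SUBINTERVAL_S) + 1  # 1..5
    subinterval_end = onset + subinterval * SUBINTERVAL_S
    # A stimulus is cut off when the subinterval (or the component) changes.
    offset = min(t_s + STIMULUS_S, subinterval_end)
    # Hypothetical labels; which intermittency goes with which subinterval is not modeled.
    label = f"clock tone {subinterval}" if clock_group else "constant tone"
    return subinterval, label, offset


durations = session_ext_durations(random.Random(0))
print(observing_consequence(140, 118, clock_group=True))  # (4, 'clock tone 4', 120)
print(observing_consequence(180, 50, clock_group=False))  # None
```

Because every component ends with the same 100-s stimulus period, each session contains exactly 30 presentations of every subinterval regardless of the sampled order, while the variable initial period keeps the onset of the stimuli from being fixed relative to the preceding food delivery.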

Results

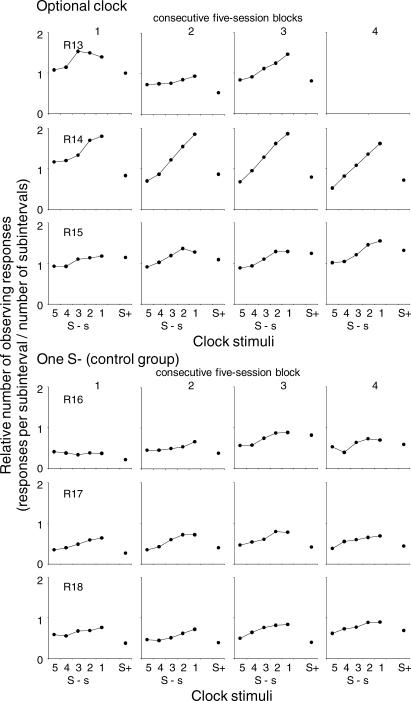

Figure 4 shows, for each rat, the number of observing responses during each subinterval of the EXT component and during the reinforcement component, averaged over each successive five-session block. The total number of observing responses in each subinterval of the EXT component and in the reinforcement component was divided by the 30 presentations of that period. The upper panels show the data for the rats exposed to the optional-clock procedure, and the lower panels show the data for the rats in the control group, for which a single S− was available across the EXT component. Because Rat 13 died before the experiment was completed, its data are shown only for Sessions 1 through 15.
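The normalization and block averaging described above can be sketched as follows. The function names and period keys ("EXT1" to "EXT5", "RF") are hypothetical, and dropping an incomplete final block is an assumption of this sketch; it simply divides each session total by the 30 presentations of a period and then averages over successive five-session blocks.

```python
from statistics import mean

PRESENTATIONS_PER_SESSION = 30  # each period occurs 30 times per session
BLOCK_SIZE = 5                  # sessions per block


def relative_observing(session_totals):
    """Per-presentation observing counts for one session.

    session_totals maps a period name (hypothetical keys such as "EXT1".."EXT5"
    and "RF" for the reinforcement component) to the session's total count.
    """
    return {period: n / PRESENTATIONS_PER_SESSION for period, n in session_totals.items()}


def block_means(sessions):
    """Mean per-presentation counts over successive five-session blocks.

    An incomplete final block is dropped (an assumption of this sketch),
    so 15 sessions give three blocks and 20 sessions give four.
    """
    per_session = [relative_observing(s) for s in sessions]
    blocks = []
    for start in range(0, len(per_session) - BLOCK_SIZE + 1, BLOCK_SIZE):
        block = per_session[start:start + BLOCK_SIZE]
        blocks.append({period: mean(s[period] for s in block) for period in block[0]})
    return blocks
```

For a rat with 15 completed sessions, such as Rat 13, this yields three block means per period.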

Fig 4.

Mean relative number of observing responses during successive subintervals of the EXT component and during the reinforcement component across the four successive five-session blocks. The upper panels show the data for the subjects exposed to the optional-clock procedure and the lower panels show the data for the subjects in the control group.

During the first blocks of sessions, the number of observing responses did not vary systematically across the subintervals of the EXT component for most rats; for Rats 14 and 17, however, it increased from the beginning to the end of the EXT component. The pattern of observing changed gradually across blocks of sessions. During the last block, the number of observing responses increased from the beginning to the end of the EXT component for all rats, and the increase was notably steeper for the rats exposed to the serial stimuli than for the rats exposed to a single S− throughout the EXT component. For all subjects, the number of observing responses remained relatively constant during the reinforcement component.

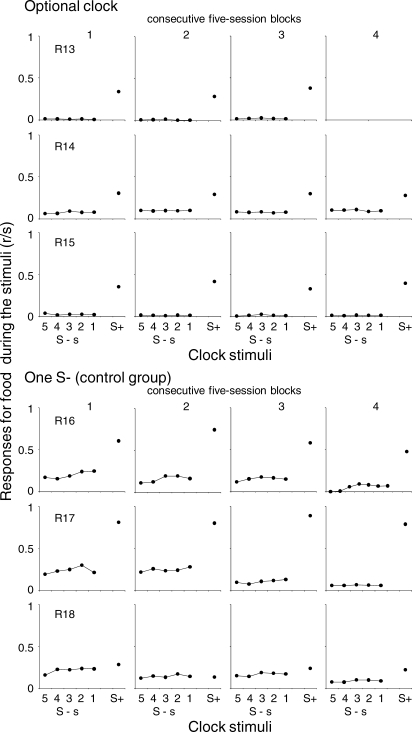

Figure 5 shows individual mean rates of food responses during the stimuli in the EXT component and in the reinforcement component of the mixed schedule for each successive five-session block. For all rats, the food-response rate was higher during the reinforcement component than during the EXT component. During the EXT component, the food-response rate was lower when the serial stimuli were available than when a single S− was programmed. For all rats, the food-response rate varied systematically neither across the subintervals of the EXT component nor across successive blocks of sessions.

Fig 5.

Mean rate of food responses during the stimuli for each successive subinterval of the EXT component and during the reinforcement component across the four successive five-session blocks. See description of Figure 4.

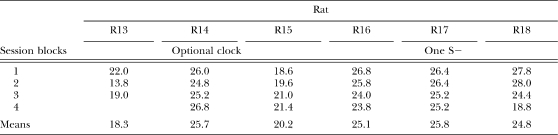

Table 2 shows the individual mean number of reinforcers obtained per session during each five-session block. A one-way ANOVA showed that the number of reinforcers obtained per session did not differ significantly between the rats exposed to the optional-clock procedure and those exposed to the procedure with a single S− throughout the EXT component, F(1, 4) = 2.93, p > .05.

Table 2.

Mean number of reinforcers per session during each consecutive five-session block for the rats exposed to the optional-clock procedure and to the single-S− procedure in Experiment 2.

| Session block | R13 (optional clock) | R14 (optional clock) | R15 (optional clock) | R16 (one S−) | R17 (one S−) | R18 (one S−) |
|---|---|---|---|---|---|---|
| 1 | 22.0 | 26.0 | 18.6 | 26.8 | 26.4 | 27.8 |
| 2 | 13.8 | 24.8 | 19.6 | 25.8 | 26.4 | 28.0 |
| 3 | 19.0 | 25.2 | 21.0 | 24.0 | 25.2 | 24.4 |
| 4 | – | 26.8 | 21.4 | 23.8 | 25.2 | 18.8 |
| Mean | 18.3 | 25.7 | 20.2 | 25.1 | 25.8 | 24.8 |
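As a check on the analysis reported above, a plain-Python one-way ANOVA on each rat's overall mean (the "Mean" row of Table 2) reproduces the reported F to two decimals. That the ANOVA was computed on these per-rat means is our inference, not something the text states; the group assignment follows the column order of Table 2, and 7.71 is the standard tabled critical value of F(1, 4) at α = .05.

```python
from statistics import mean

# Per-rat mean reinforcers per session, from the "Mean" row of Table 2.
OPTIONAL_CLOCK = [18.3, 25.7, 20.2]  # R13, R14, R15
ONE_S_MINUS = [25.1, 25.8, 24.8]     # R16, R17, R18

F_CRIT_1_4 = 7.71  # tabled critical F(1, 4) at alpha = .05


def one_way_anova(*groups):
    """Return (F, df_between, df_within) for a one-way between-groups ANOVA."""
    scores = [x for g in groups for x in g]
    grand = mean(scores)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(scores) - len(groups)
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


f, df_b, df_w = one_way_anova(OPTIONAL_CLOCK, ONE_S_MINUS)
print(f"F({df_b}, {df_w}) = {f:.2f}; significant at .05: {f > F_CRIT_1_4}")
# F(1, 4) = 2.93; significant at .05: False
```

With only three rats per group, the within-group variability (driven mostly by Rat 13) is large relative to the difference between group means, which is why an F near 3 falls well short of significance.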

Discussion

Although the number of stimulus–reinforcement-component pairings did not vary across the subintervals of the EXT component, the pattern of observing responses, which for most rats showed no systematic variation during the first block of sessions, gradually became an increasing function of the temporal location of the serial stimuli. This finding suggests that the number of stimulus–reinforcement-component pairings did not determine the increasing rate of observing across the subintervals of the EXT component. Moreover, because the increase in observing across the EXT component was flatter with a single S− than with serial stimuli, the present findings were apparently not due to the temporal discrimination produced by food delivery alone but depended on the serial position of the stimuli relative to the reinforcement component.

Several studies have examined the effects of adding optional clocks to an IRI (e.g., Hendry & Dillow, 1966; Kendall, 1972; Palya, 1993). However, these studies left unclear whether observing rate was controlled by the serial stimuli, by food delivery, or by both. The procedure used in the present experiment has an advantage over previous procedures: a control group was exposed to a single S− presented across the EXT component. Thus, comparisons between subintervals with and without stimuli were unnecessary. Such comparisons are problematic because the stimulus duration has to be subtracted from the time base used to calculate the rate of observing (see Dinsmoor, Browne, & Lawrence, 1972, for a similar argument). The control group with a single S− also permitted the conclusion that temporal discrimination was not responsible for the pattern of observing during the EXT component.
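The time-base problem noted above can be illustrated with a small helper. The function name and the example numbers (two presses in a 20-s subinterval, each producing a full 5-s stimulus) are hypothetical; the point is only that the same response count yields different rates depending on how much of the period is occupied by stimuli, which is what makes subintervals with and without stimuli hard to compare.

```python
def observing_rate_per_min(n_responses, period_s, stimulus_time_s):
    """Observing responses per minute of stimulus-free time in a period.

    Time spent in the presence of produced stimuli is removed from the
    time base; whether presses during a stimulus also count is a
    procedural detail this sketch does not model.
    """
    free_time_s = period_s - stimulus_time_s
    if free_time_s <= 0:
        raise ValueError("stimuli occupy the whole period")
    return 60.0 * n_responses / free_time_s


# Two presses in a 20-s subinterval, each producing a full 5-s stimulus:
naive = 60.0 * 2 / 20                              # 6.0 per min over the whole subinterval
adjusted = observing_rate_per_min(2, 20, 2 * 5.0)  # 12.0 per min of stimulus-free time
```

Because the correction depends on how many stimuli each subinterval happened to contain, rates from subintervals with and without stimuli are computed on different time bases; a control group that always receives a stimulus sidesteps that comparison.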

GENERAL DISCUSSION

A large body of research has sought to show that, in observing procedures, the S− functions as an aversive stimulus, in support of an associative account of conditioned reinforcement (see Dinsmoor, 1983, and Fantino, 1977, for reviews). In contrast, other studies have examined whether the S− can reinforce observing behavior, in support of an information account of conditioned reinforcement (e.g., Lieberman, 1972; Schaub, 1969; Schrier, Thompson, & Spector, 1980). The results of these studies have been found to be unreliable or open to alternative explanations (e.g., Dinsmoor, 1983; Dinsmoor et al., 1972; Fantino, 1977; Mueller & Dinsmoor, 1984). Although most studies have found that the S− is not reinforcing, Perone and Kaminski (1992) and Perone and Baron (1980) provide evidence suggesting that the S− can indeed reinforce observing.

Based on the results of the present experiments, we conclude that the S− can function as an aversive, neutral, or reinforcing stimulus depending on its location within the EXT component. Previous attempts to relate the findings of observing-response studies to an associative account of conditioned reinforcement assumed that the S+ and the S− are qualitatively different, because the S+ is associated with the reinforcement component and the S− with the EXT component. The problem with this approach is that, in the usual observing procedures, the temporal relations between stimuli and reinforcers vary unpredictably; hence, the control exerted by the S− on observing responses is also unpredictable. The different effects of the S− on observing responses that result from this unsystematic variation in the temporal relations between stimuli have apparently supported both the information and the associative accounts of conditioned reinforcement.

In studies using simple schedules of reinforcement (FI, fixed time, variable time), stimuli have been reported to acquire different functions depending on their temporal relation to reinforcer delivery (Dinsmoor et al., 1986; Palya, 1993; Palya & Bevins, 1990; Segal, 1962; Shull, 1979). Therefore, once the effects of temporal variables are isolated within the mixed schedule used in an observing procedure, the results parallel those obtained with simple schedules of reinforcement, in which no qualitative differences between stimuli are assumed.

Previous attempts to relate findings obtained with the observing procedure to an associative account of conditioned reinforcement have noted the importance of the temporal relation between stimulus and reinforcer in endowing a stimulus with reinforcing properties (e.g., Auge, 1974; Dinsmoor, 1983; Fantino, 1977). Dinsmoor favored a relative-time explanation (cf. Gibbon & Balsam, 1981) for the reinforcing properties of the S+ and the aversive properties of the S−. Similarly, Fantino argued that the S+ functions as a conditioned reinforcer because it signals a reduction in the time to reinforcement relative to the IRI (i.e., the delay-reduction hypothesis). By contrast, the S− is not reinforcing because it signals a long time to reinforcement relative to the IRI (see also Auge, 1974). The present results are consistent with these notions: they suggest that the S− functioned as a more effective conditioned reinforcer when it could occur in temporal proximity to the reinforcement component, and that its reinforcing properties decreased as it was moved farther from that component. Further increases in the stimulus–reinforcer interval resulted in the S− functioning as an aversive stimulus, or conditioned punisher. One contribution of the present experiments is to suggest that the simple dichotomy between S− and S+ is inadequate when applied to observing-response procedures. Additionally, the present results suggest that a nominal S− can signal a reduction in the delay to reinforcement relative to the IRI.
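The delay-reduction idea can be illustrated with the Experiment 2 schedule. In the spirit of Fantino's formulation, a stimulus is more reinforcing the more it reduces the expected time to reinforcement, T − t; here that reduction is expressed as the proportion (T − t)/T, a normalization chosen for this sketch. The remaining choices are also assumptions, not an analysis reported in these experiments: t is the time from the midpoint of each 20-s subinterval to the onset of the reinforcement component, and T is the expected time to the reinforcement component from a randomly chosen moment of the EXT component, which for length-weighted sampling equals E[D²]/(2E[D]).

```python
from statistics import mean

EXT_DURATIONS_S = [100, 120, 140, 160, 180]  # equiprobable EXT durations (s)
SUBINTERVAL_S = 20
N_SUBINTERVALS = 5  # clock stimuli cover the last 100 s


def expected_wait_from_random_moment(durations):
    """Mean time left in the EXT component at a randomly sampled moment.

    Longer components are proportionally more likely to be sampled,
    so the mean is E[D^2] / (2 E[D]) rather than E[D] / 2.
    """
    return mean(d * d for d in durations) / (2 * mean(durations))


def delay_reduction(t, big_t):
    """Proportional delay reduction (T - t) / T signaled by a stimulus."""
    return (big_t - t) / big_t


T = expected_wait_from_random_moment(EXT_DURATIONS_S)  # about 72.9 s
for k in range(1, N_SUBINTERVALS + 1):
    # Time from the midpoint of subinterval k to the reinforcement component.
    t_k = (N_SUBINTERVALS - k) * SUBINTERVAL_S + SUBINTERVAL_S / 2
    print(f"subinterval {k}: t = {t_k:.0f} s, (T - t)/T = {delay_reduction(t_k, T):+.2f}")
```

Under these assumptions the values run from about −0.24 in the first subinterval through roughly zero in the second to about +0.86 in the last, so the sign of the predicted effect changes within the EXT component. This is an illustration of how the hypothesis could order the stimuli, not a fit to the data.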

This interpretation is consistent with several findings from observing studies. For example, studies by Dinsmoor and colleagues used relatively long (30-s) stimuli (e.g., Dinsmoor et al., 1972; Mueller & Dinsmoor, 1984; Mulvaney et al., 1974). This procedural detail may have caused the S− to occupy almost the entire EXT component. Therefore, the failure of the S− to reinforce observing reported in those studies may have resulted from the long delays imposed between S− onset and the reinforcement component.

The temporal relations between the stimuli and the reinforcer are complex when relatively brief stimuli are used. Specifically, the temporal distance between the S− and the reinforcement component depends on the temporal distribution of observing responses. In Gaynor and Shull's (2002) study, which used an observing procedure with the typical mixed schedule of reinforcement, observing responses produced the S+ or the S− for 5 s. Gaynor and Shull found that the interstimulus interval was considerably longer during the EXT component than during the reinforcement component. However, this finding is not universal. Brief stimulus durations also allow the S− to occur, at least occasionally, in temporal contiguity with the reinforcement component. For example, Escobar and Bruner (2002) and Kelleher, Riddle, and Cook (1962) reported that observing responses occurred in repetitive patterns consisting of successive observing responses producing S−s. Once an S+ was presented, food responses replaced observing responses until several responses went unreinforced, after which successive observing responses occurred again. Such a distribution of observing allowed the S− to occur in temporal proximity to the reinforcement component and produced results apparently consistent with an information account of observing; that is, the S− was presented more often than the S+.

Although the present results showed that the S− functioned as a conditioned reinforcer when it occurred at the end of the EXT component, this finding is not consistent with an information account of conditioned reinforcement. Specifically, according to Egger and Miller (1962, 1963), the stimuli occurring early in the sequence should be more informative about reinforcer delivery than the last stimuli of the clock.

Because tones with gradually increasing intermittencies were used as clock stimuli during the EXT component, an alternative explanation based on the inherent effects of the tones on behavior could be offered. Reed and Yoshino (2001, 2008) showed that a loud tone can function as a punishing stimulus for rats. It could be argued that the constant tone used as S−5 was aversive and that increasing the intermittency as the EXT component elapsed decreased the aversive properties of the tone. On this argument, observing rate would be expected to increase from the beginning to the end of the EXT component, as found in the present experiments. Two facts make this explanation unlikely. First, the tones used as aversive stimuli in Reed and Yoshino's studies ranged from 100 to 125 dB, whereas the tones used in the present study were considerably quieter (70 dB) and presumably much less aversive. Second, in Experiment 1 the control group, which received a constant tone (single S−) across the EXT component, showed rates of observing during the EXT component that were intermediate between those found with the clock stimuli. Had the constant tone functioned as an aversive stimulus, the observing rates in the control group would have been the lowest throughout the EXT component.

Acknowledgments

This research was part of a doctoral dissertation by the first author supported by fellowship 176012 from CONACYT. This manuscript was prepared at West Virginia University during a postdoctoral visit of the first author supported by a fellowship from the PROFIP of DGAPA-UNAM. The first author is indebted to Richard Shull for his many contributions to this work and to Florente López for his helpful comments and suggestions. Thanks also to Alicia Roca for her comments on previous versions of this paper.

REFERENCES

- Allen K.D, Lattal K.A. On conditioned reinforcing effects of negative discriminative stimuli. Journal of the Experimental Analysis of Behavior. 1989;52:335–339. doi: 10.1901/jeab.1989.52-335.

- Auge R.J. Context, observing behavior, and conditioned reinforcement. Journal of the Experimental Analysis of Behavior. 1974;22:525–533. doi: 10.1901/jeab.1974.22-525.

- Bersh P.J. The influence of two variables upon the establishment of a secondary reinforcer for operant responses. Journal of Experimental Psychology. 1951;41:62–73. doi: 10.1037/h0059386.

- Case D.A, Fantino E, Wixted J. Human observing: Maintained by negative informative stimuli only if correlated with improvement in response efficiency. Journal of the Experimental Analysis of Behavior. 1985;43:289–300. doi: 10.1901/jeab.1985.43-289.

- Dinsmoor J.A. Observing and conditioned reinforcement. Behavioral and Brain Sciences. 1983;6:693–728 (includes commentary).

- Dinsmoor J.A, Browne M.P, Lawrence C.E. A test of the negative discriminative stimulus as a reinforcer of observing. Journal of the Experimental Analysis of Behavior. 1972;18:79–85. doi: 10.1901/jeab.1972.18-79.

- Dinsmoor J.A, Lee D.M, Brown M.M. Escape from serial stimuli leading to food. Journal of the Experimental Analysis of Behavior. 1986;46:259–279. doi: 10.1901/jeab.1986.46-259.

- Egger M.D, Miller N.E. Secondary reinforcement in rats as a function of information value and reliability of the stimulus. Journal of Experimental Psychology. 1962;64:97–104. doi: 10.1037/h0040364.

- Egger M.D, Miller N.E. When is a reward reinforcing? An experimental study of the information hypothesis. Journal of Comparative and Physiological Psychology. 1963;56:132–137.

- Escobar R, Bruner C.A. Effects of reinforcement frequency and extinction-component duration within a mixed schedule of reinforcement on observing responses in rats. Mexican Journal of Behavior Analysis. 2002;28:41–46.

- Escobar R, Bruner C.A. Effects of the contiguity between the extinction and the reinforcement components in observing-response procedures. Mexican Journal of Behavior Analysis. 2008;34:333–347.

- Fantino E. Conditioned reinforcement: Choice and information. In: Honig W.K, Staddon J.E.R, editors. Handbook of operant behavior. Englewood Cliffs, NJ: Prentice Hall; 1977.

- Fantino E. Context: A central concept. Behavioural Processes. 2001;54:95–110. doi: 10.1016/s0376-6357(01)00152-8.

- Fantino E, Case D.A. Human observing: Maintained by stimuli correlated with reinforcement but not extinction. Journal of the Experimental Analysis of Behavior. 1983;40:193–210. doi: 10.1901/jeab.1983.40-193.

- Ferster C.B, Skinner B.F. Schedules of reinforcement. New York: Appleton-Century-Crofts; 1957.

- Gaynor S.T, Shull R.L. The generality of selective observing. Journal of the Experimental Analysis of Behavior. 2002;77:171–187. doi: 10.1901/jeab.2002.77-171.

- Gibbon J, Balsam P. Spreading association in time. In: Locurto C.M, Terrace H.S, Gibbon J, editors. Autoshaping and conditioning theory. New York: Academic Press; 1981. pp. 219–253.

- Gollub L.R. Conditioned reinforcement: Schedule effects. In: Honig W.K, Staddon J.E.R, editors. Handbook of operant behavior. Englewood Cliffs, NJ: Prentice Hall; 1977. pp. 288–312.

- Hendry D.P. Introduction. In: Hendry D.P, editor. Conditioned reinforcement. Homewood, IL: Dorsey Press; 1969. pp. 1–35.

- Hendry D.P, Dillow P.V. Observing behavior during interval schedules. Journal of the Experimental Analysis of Behavior. 1966;9:337–349. doi: 10.1901/jeab.1966.9-337.

- Jenkins W.O. A temporal gradient of derived reinforcement. American Journal of Psychology. 1950;63:237–243.

- Kelleher R.T. Chaining and conditioned reinforcement. In: Honig W.K, editor. Operant behavior: Areas of research and application. New York: Appleton-Century-Crofts; 1966. pp. 160–212.

- Kelleher R.T, Gollub L.R. A review of positive conditioned reinforcement. Journal of the Experimental Analysis of Behavior. 1962;5:543–597. doi: 10.1901/jeab.1962.5-s543.

- Kelleher R.T, Riddle W.C, Cook L. Observing responses in pigeons. Journal of the Experimental Analysis of Behavior. 1962;5:3–13. doi: 10.1901/jeab.1962.5-3.

- Kendall S.B. Some effects of response-dependent clock stimuli in a fixed-interval schedule. Journal of the Experimental Analysis of Behavior. 1972;17:161–168. doi: 10.1901/jeab.1972.17-161.

- Lieberman D.A. Secondary reinforcement and information as determinants of observing behavior in monkeys (Macaca mulatta). Learning and Motivation. 1972;3:341–358.

- Lieving G.A, Reilly M.P, Lattal K.A. Disruption of responding maintained by conditioned reinforcement: Alterations in response–conditioned-reinforcer relations. Journal of the Experimental Analysis of Behavior. 2006;86:197–209. doi: 10.1901/jeab.2006.12-05.

- Mueller K.L, Dinsmoor J.A. Testing the reinforcing properties of S−: A replication of Lieberman's procedure. Journal of the Experimental Analysis of Behavior. 1984;41:17–25. doi: 10.1901/jeab.1984.41-17.

- Mulvaney D.E, Dinsmoor J.A, Jwaideh A.R, Hughes L.H. Punishment of observing by the negative discriminative stimulus. Journal of the Experimental Analysis of Behavior. 1974;21:37–44. doi: 10.1901/jeab.1974.21-37.

- Palya W.L. Bipolar control in fixed interfood intervals. Journal of the Experimental Analysis of Behavior. 1993;60:345–359. doi: 10.1901/jeab.1993.60-345.

- Palya W.L, Bevins R.A. Serial conditioning as a function of stimulus, response, and temporal dependencies. Journal of the Experimental Analysis of Behavior. 1990;53:65–85. doi: 10.1901/jeab.1990.53-65.

- Perone M, Baron A. Reinforcement of human observing behavior by a stimulus correlated with extinction or increased effort. Journal of the Experimental Analysis of Behavior. 1980;34:239–261. doi: 10.1901/jeab.1980.34-239.

- Perone M, Kaminski B.J. Conditioned reinforcement of human observing behavior by descriptive and arbitrary verbal stimuli. Journal of the Experimental Analysis of Behavior. 1992;58:557–575. doi: 10.1901/jeab.1992.58-557.

- Reed P, Yoshino T. The effect of response-dependent tones on the acquisition of concurrent behavior in rats. Learning and Motivation. 2001;32:255–273.

- Reed P, Yoshino T. Effect of contingent auditory stimuli on concurrent schedule performance: An alternative punisher to electric shock. Behavioural Processes. 2008;78:421–428. doi: 10.1016/j.beproc.2008.02.013.

- Schaub R.E. Response-cue contingency and cue effectiveness. In: Hendry D.P, editor. Conditioned reinforcement. Homewood, IL: Dorsey Press; 1969. pp. 342–356.

- Schrier A.M, Thompson C.R, Spector N.R. Observing behavior in monkeys (Macaca arctoides): Support for the information hypothesis. Learning and Motivation. 1980;11:355–365.

- Segal E.F. Exteroceptive control of fixed-interval responding. Journal of the Experimental Analysis of Behavior. 1962;5:49–57. doi: 10.1901/jeab.1962.5-49.

- Shahan T.A. Observing behavior: Effects of rate and magnitude of primary reinforcement. Journal of the Experimental Analysis of Behavior. 2002;78:161–178. doi: 10.1901/jeab.2002.78-161.

- Shahan T.A, Podlesnik C.A, Jimenez-Gomez C. Matching and conditioned reinforcement rate. Journal of the Experimental Analysis of Behavior. 2006;85:167–180. doi: 10.1901/jeab.2006.34-05.

- Shull R.L. The postreinforcement pause: Some implications for the correlational law of effect. In: Zeiler M.D, Harzem P, editors. Advances in analysis of behaviour: Vol. 1. Reinforcement and the organization of behaviour. Chichester, England: Wiley; 1979. pp. 193–221.

- Wyckoff L.B., Jr. The role of observing responses in discrimination learning. Part I. Psychological Review. 1952;59:431–442. doi: 10.1037/h0053932.

- Wyckoff L.B., Jr. The role of observing responses in discrimination learning. Part II. In: Hendry D.P, editor. Conditioned reinforcement. Homewood, IL: Dorsey Press; 1969. pp. 237–260.