Introduction

The Health Behaviour in School-aged Children (HBSC) study was among the first international surveys on adolescent health. Despite fieldwork being limited to three countries in 1983, the challenges to producing valid and reliable data were apparent, including a range of structural and practical factors such as variation in the school systems in which fieldwork was conducted, compliance with a common research protocol, issues around language and translation, and the differing research capabilities within countries.1–2

Some 25 years on, the study has grown to more than 40 countries and regions and its profile has increased dramatically. HBSC data are increasingly in demand, both to inform publications from a range of national and international agencies and from academics seeking access to raw data for secondary analysis.3–5 The increased focus on the HBSC study has resulted in greater methodological scrutiny and the need for a sharper focus on continuous improvement.

This paper provides a brief historical overview of the development of the study and an overview of the methods employed as the 8th wave of fieldwork approaches, with a focus on the systematic approach to collecting data across a growing number of countries. Background is provided in the areas of questionnaire content, sampling, data collection, and file preparation. Details of the most recent survey undertaken in 2005/06 are given as background to the papers that follow. Having outlined current practice, the paper moves on to discuss a range of key methodological tensions that remain in taking the study forward, including (a) maintaining quality standards against a background of rapid growth, (b) continuous improvement with limited financial resources, (c) accommodating analysis of trends with the need to improve and adapt questionnaire content, and (d) meeting the differing requirements of scientific and policy audiences.

Historical development

From its very origin, the HBSC study has had a double mission: to establish a monitoring tool for policy development and to develop adolescent health research. Further, the study has, primarily through its close collaboration with the WHO Regional Office for Europe, aimed to grow steadily so as to collect nationally representative adolescent health data in Europe as well as in North America.

The HBSC study started out as a research program aimed at understanding smoking behaviours in a limited number of countries (England, Finland, and Norway). Immediately following its initiation, contact was established with the World Health Organization (WHO), and emphasis was given to addressing more health topic areas and including more countries, in particular countries from Eastern Europe that had few, if any, surveys collecting data on adolescent health and health behaviours. In the early phase of the study, countries were approached, primarily by WHO, to become members, whereas the increase in the last 15 years has primarily been a consequence of countries seeking membership. Throughout the development of the HBSC network, emphasis has been placed on providing training sessions for new country teams on how to collect nationally representative survey data. Before a country is accepted as a member, it has to run a pilot of a national survey to demonstrate its capacity to collect data and establish funding for the national survey.

Until 1994, each survey also had a common focus area that was explored, for instance the relevance of experiences in the school setting for adolescent health and health behaviours. To accommodate different research interests, the 1998 survey was opened up so that countries could choose their own focus area(s). They could either use the space solely for national purposes or choose topic area(s) from a selection of optional packages developed through the study, thus allowing comparison between countries using the same package(s). This structure has allowed a diversity of research questions to be explored across the member countries and provided room for exploratory research. It does, however, also present challenges in terms of securing quality standards for a substantial number of optional variables.

HBSC survey methods

For all surveys, a standardised research protocol providing a theoretical framework for the research topics and data collection and analysis procedures is developed.6 The protocol aims at securing comparable data. The HBSC Research Network members collaborate on the production of this international Research Protocol for each four-yearly survey. The Research Protocol includes detailed information and instructions covering the following: conceptual framework for the study; scientific rationales for each of the survey topic areas; international standard version of questionnaires and instructions for use (e.g., recommended layout, question ordering, and translation guidelines); comprehensive guidance on survey methodology, including sampling, data collection procedures, and instructions for preparing national datasets for export to the International Data Bank; and rules related to use of HBSC data and international publishing. While the Research Protocol is currently being reviewed for the forthcoming 2009/10 study, significant change is not anticipated.

Questionnaire content

As HBSC is a school-based survey, data are collected through self-completion questionnaires administered in the classroom. The international standard questionnaire for each survey consists of three levels of questions which are used to create national survey instruments: core questions that each country is required to include to create the international dataset; optional packages of questions on specific topic areas from which countries can choose; and country-specific questions related to issues of national importance.

Survey questions cover a range of health indicators and health-related behaviours as well as the life circumstances of young people. Questions are subject to validation studies and piloting at national and international levels, with the outcomes of these studies often being published.7–12 The core questions provide information on: demographic factors (e.g., age and state of maturation); social background (e.g., family structure and socio-economic status); social context (e.g., family, peer culture, school environment); health outcomes (e.g., self-rated health, injuries, overweight and obesity); health behaviours (e.g., eating and dieting, physical activity and weight reduction behaviour); and risk behaviours (e.g., smoking, alcohol use, cannabis use, sexual behaviour, bullying).13 Analysis of trends is possible as a number of these core items have remained the same since the study’s inception.

Sampling

The specific population selected for sampling is young people attending school aged 11, 13, and 15. When the study was established, these age groups were chosen to represent the onset of adolescence, the challenge of physical and emotional changes, and the middle years when important life and career decisions are beginning to be made. The desired mean age for the three age groups is 11.5, 13.5, and 15.5 years. In some countries, each age group corresponds to a single school grade, while in others a proportion of each age group may be found across grades due to students being advanced or held back. These differences in grades’ equivalency to age cohorts have implications for the sampling strategy chosen by each participating country. A minimum of 95 percent of the eligible target population should be within the sample frame. Countries may choose to stratify their samples to ensure representation by, for example, geography, ethnic group, and school type.

Cluster sampling is used, the primary sampling unit being school class (or school in the absence of a sampling frame of classes).14 The recommended sample size for each of the three age groups is set at approximately 1,500 students, the calculation assuming a 95 per cent confidence interval of +/− 3 per cent around a proportion of 50 per cent and a design factor of 1.2, based on analyses of existing HBSC data. The international data file for the 2005/06 study contains data from more than 200,000 young people across the 41 participating countries or regions.
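The recommended sample size can be reproduced with a short calculation. The sketch below is an illustration, not the study's own procedure. It assumes that the design factor of 1.2 is a ratio of standard errors (often written deft), so that the simple-random-sample size is multiplied by 1.2 squared. That reading is an assumption, chosen because it yields a figure close to the 1,500 quoted above; the function name and its parameters are hypothetical.

```python
from math import ceil
from statistics import NormalDist


def required_sample_size(p=0.5, margin=0.03, confidence=0.95, design_factor=1.2):
    """Sample size for estimating a proportion under a clustered design.

    Assumption: design_factor is a ratio of standard errors (deft), so the
    simple-random-sample size is inflated by design_factor ** 2.
    """
    # Two-sided critical value of the standard normal distribution.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    # Size needed under simple random sampling.
    n_srs = z ** 2 * p * (1 - p) / margin ** 2
    # Inflate for the loss of precision caused by sampling whole classes.
    n_cluster = n_srs * design_factor ** 2
    return ceil(n_srs), ceil(n_cluster)


n_srs, n_cluster = required_sample_size()
print(n_srs, n_cluster)
```

Under these assumptions the clustered figure comes out a little above 1,500 per age group, in line with the recommendation.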

Over the years, it has been observed that some of the protocol standards, for instance using selected grade levels as the basis for sampling 11, 13, and 15 year olds, were established on the basis of the few countries included in the survey in its early years. As further surveys were conducted, it became apparent that there were cross-national variations in, for instance, whether school systems had students repeat a grade and how a grade is defined (whether it is stable across subjects or varies by subject). As the emphasis of the HBSC is to collect representative data for certain age groups, these issues had to be taken into account both in sampling and in cleaning, without compromising opportunities for trend analyses for countries that have been in the survey for a long time.

Thus, given the differences in school systems, imposing a uniform approach is impractical. To deal with this complexity, age has been a priority for sampling, with students of the relevant age being selected across school years. Further complications arise when the target population is split across different levels of schooling, such as primary and secondary. Where the number of classes eligible for sampling is unknown, probability proportionate to size (PPS) sampling is used, making use of actual or estimated school size.14 In some countries, to minimise fieldwork costs, classes from one age group are randomly selected and classes for the other age groups are then drawn from the same schools, minimising the number of schools required. The survey is administered at different times as appropriate to the national school system in order to produce samples with mean ages of 11.5, 13.5, and 15.5. In countries where there is significant holding back and/or advancement of students, this technique may involve sampling more than three grades.
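To illustrate the idea behind PPS sampling, the following sketch draws schools systematically with probability proportionate to their enrolment. It is a generic textbook version, not the procedure prescribed in the Research Protocol; the school names, the enrolment figures, and the function `pps_systematic` are hypothetical.

```python
import random
from bisect import bisect_right
from itertools import accumulate


def pps_systematic(sizes, n, rng):
    """Draw n units systematically with probability proportionate to size.

    sizes maps each unit (here, a school) to its actual or estimated size.
    A unit larger than the sampling interval can be drawn more than once.
    """
    names = list(sizes)
    # Upper bound of each unit's slice of the cumulative size scale.
    bounds = list(accumulate(sizes[name] for name in names))
    interval = bounds[-1] / n
    start = rng.random() * interval  # random start within the first interval
    picks = []
    for k in range(n):
        point = start + k * interval
        # Find the unit whose slice contains the selection point.
        idx = min(bisect_right(bounds, point), len(names) - 1)
        picks.append(names[idx])
    return picks


# Hypothetical enrolment figures for three schools.
schools = {"School A": 100, "School B": 300, "School C": 600}
sample = pps_systematic(schools, n=2, rng=random.Random(2006))
print(sample)
```

Because larger schools occupy a larger share of the cumulative scale, they are more likely to be hit by a selection point. In this toy example School C exceeds the sampling interval and is therefore always drawn.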

In the vast majority of countries, a nationally representative sample is drawn. Where national representativeness is not possible, a regional sample is drawn (Germany and the Russian Federation in 2005/06). It should also be noted that a census among the relevant age groups is taken in those countries where the population is sufficiently small (Greenland, Iceland, and Malta in 2005/06).

Countries are provided with sampling guidance notes and required to complete a sampling questionnaire, covering issues such as: the proportions of students held back or advanced; how students will be sampled; whether a sampling frame of classes or schools is available; whether or not PPS sampling will be possible where school is the primary sampling unit; stratification to be used; dealing with likely non-response; and whether or not any boosts will be built in (e.g., to accommodate language groups or geographic regions).

Data collection and file preparation

In most countries, questionnaires are delivered to schools for teacher administration. Where financial resources are available, researchers may be used in an attempt to minimise teacher burden. For the 2005/06 survey, fieldwork took place between October 2005 and May 2006 in the vast majority of cases, usually lasting between one and two months. Files from each country are prepared and exported to the HBSC International Data Bank at the University of Bergen, where they are cleaned and compiled into an international data set with support from the Norwegian Social Science Data Services (NSD), under the guidance of the study’s Data Bank Manager. Data on young people outside the target age groups are removed and deviations from the Research Protocol are documented, typically to make users of the data aware of changes to the wording of questions and/or response categories in a country. Depending on the magnitude of the deviation, the user can then choose to include or exclude items from subsequent analyses. Despite some variation, the desired mean ages and sample sizes were achieved in the vast majority of countries in 2005/06 (see Tables 1 and 2).

Table 1.

HBSC survey, 2005/06: mean ages of respondents, by country/region and age group.

| Country | 11-year-olds | 13-year-olds | 15-year-olds |

|---|---|---|---|

| Austria | 11.2 | 13.2 | 15.2 |

| Belgium (Flemish-speaking) | 11.5 | 13.5 | 15.4 |

| Belgium (French-speaking) | 11.6 | 13.5 | 15.5 |

| Bulgaria | 11.6 | 13.6 | 15.6 |

| Canada | 11.7 | 13.6 | 15.5 |

| Croatia | 11.6 | 13.5 | 15.6 |

| Czech Republic | 11.5 | 13.4 | 15.4 |

| Denmark | 11.7 | 13.6 | 15.6 |

| England | 11.7 | 13.7 | 15.7 |

| Estonia | 11.8 | 13.8 | 15.8 |

| Finland | 11.8 | 13.8 | 15.8 |

| France | 11.6 | 13.6 | 15.6 |

| Germany | 11.3 | 13.3 | 15.4 |

| Greece | 11.7 | 13.7 | 15.6 |

| Greenland | 11.7 | 13.5 | 15.4 |

| Hungary | 11.5 | 13.5 | 15.5 |

| Iceland | 11.6 | 13.6 | 15.6 |

| Ireland | 11.6 | 13.5 | 15.5 |

| Israel | 12.0 | 13.9 | 15.9 |

| Italy | 11.9 | 13.8 | 15.8 |

| Latvia | 11.9 | 13.8 | 15.8 |

| Lithuania | 11.6 | 13.6 | 15.7 |

| Luxembourg | 11.6 | 13.5 | 15.5 |

| Malta | 12.0 | 13.8 | 15.8 |

| Netherlands | 11.6 | 13.5 | 15.4 |

| Norway | 11.5 | 13.5 | 15.5 |

| Poland | 11.7 | 13.7 | 15.7 |

| Portugal | 11.6 | 13.6 | 15.6 |

| Romania | 11.6 | 13.6 | 15.5 |

| Russia | 11.4 | 13.5 | 15.6 |

| Scotland | 11.5 | 13.5 | 15.5 |

| Slovakia | 11.4 | 13.4 | 15.3 |

| Slovenia | 11.6 | 13.6 | 15.6 |

| Spain | 11.5 | 13.5 | 15.6 |

| Sweden | 11.5 | 13.5 | 15.5 |

| Switzerland | 11.4 | 13.5 | 15.4 |

| TFYR Macedonia | 11.5 | 13.5 | 15.5 |

| Turkey | 11.9 | 13.9 | 15.9 |

| Ukraine | 11.8 | 13.6 | 15.7 |

| United States | 11.8 | 13.4 | 15.5 |

| Wales | 12.0 | 14.0 | 16.0 |

| TOTAL | 11.6 | 13.6 | 15.6 |

Table 2.

HBSC survey, 2005/06: number of respondents, by country/region, gender and age group.

| Country | Boys | Girls | Age 11 | Age 13 | Age 15 | Total |

|---|---|---|---|---|---|---|

| Austria | 2340 | 2435 | 1694 | 1587 | 1494 | 4775 |

| Belgium (Flemish-speaking) | 2198 | 2113 | 1291 | 1404 | 1616 | 4311 |

| Belgium (French-speaking) | 2313 | 2163 | 1459 | 1603 | 1414 | 4476 |

| Bulgaria | 2405 | 2449 | 1586 | 1580 | 1688 | 4854 |

| Canada | 2732 | 3055 | 1466 | 2032 | 2289 | 5787 |

| Croatia | 2439 | 2526 | 1666 | 1669 | 1630 | 4965 |

| Czech Republic | 2411 | 2364 | 1509 | 1601 | 1665 | 4775 |

| Denmark | 2727 | 2955 | 2093 | 2037 | 1552 | 5682 |

| England | 2308 | 2460 | 1655 | 1662 | 1451 | 4768 |

| Estonia | 2217 | 2260 | 1421 | 1469 | 1587 | 4477 |

| Finland | 2474 | 2719 | 1783 | 1725 | 1685 | 5193 |

| France | 3551 | 3590 | 2493 | 2426 | 2222 | 7141 |

| Germany | 3632 | 3592 | 2231 | 2441 | 2552 | 7224 |

| Greece | 1746 | 1944 | 1087 | 1187 | 1416 | 3690 |

| Greenland | 665 | 693 | 458 | 483 | 417 | 1358 |

| Hungary | 1677 | 1821 | 1096 | 1215 | 1187 | 3498 |

| Iceland | 4792 | 4684 | 3814 | 3779 | 1883 | 9476 |

| Ireland | 2451 | 2389 | 1370 | 1785 | 1685 | 4840 |

| Israel | 2248 | 3102 | 1619 | 1734 | 1997 | 5350 |

| Italy | 1974 | 1946 | 1242 | 1343 | 1335 | 3920 |

| Latvia | 2034 | 2187 | 1425 | 1466 | 1330 | 4221 |

| Lithuania | 2904 | 2728 | 1864 | 1907 | 1861 | 5632 |

| Luxembourg | 2162 | 2138 | 1262 | 1531 | 1507 | 4300 |

| Malta | 686 | 703 | 509 | 526 | 354 | 1389 |

| Netherlands | 2114 | 2114 | 1350 | 1515 | 1363 | 4228 |

| Norway | 2428 | 2269 | 1578 | 1585 | 1534 | 4697 |

| Poland | 2649 | 2840 | 1550 | 1652 | 2287 | 5489 |

| Portugal | 1884 | 2035 | 1201 | 1335 | 1383 | 3919 |

| Romania | 2139 | 2545 | 1639 | 1440 | 1605 | 4684 |

| Russia | 3892 | 4340 | 2759 | 2718 | 2755 | 8232 |

| Scotland | 3032 | 3113 | 1691 | 2256 | 2198 | 6145 |

| Slovakia | 1794 | 2083 | 1298 | 1327 | 1252 | 3877 |

| Slovenia | 2549 | 2570 | 1716 | 1842 | 1561 | 5119 |

| Spain | 4368 | 4523 | 2985 | 2841 | 3065 | 8891 |

| Sweden | 2179 | 2213 | 1513 | 1353 | 1526 | 4392 |

| Switzerland | 2233 | 2346 | 1506 | 1573 | 1500 | 4579 |

| TFYR Macedonia | 2625 | 2646 | 1666 | 1709 | 1896 | 5271 |

| Turkey | 2847 | 2705 | 2072 | 1812 | 1668 | 5552 |

| Ukraine | 2388 | 2681 | 1491 | 1749 | 1829 | 5069 |

| United States | 1857 | 2035 | 1094 | 1514 | 1284 | 3892 |

| Wales | 2169 | 2227 | 1505 | 1541 | 1350 | 4396 |

| TOTAL | 100233 | 104301 | 66707 | 69954 | 67873 | 204534 |

When all national data have been received and accepted according to the Research Protocol by the Data Bank Manager, the files are merged and the combined dataset is made available to the Principal Investigators in each participating country. The aim is to produce an agreed international data file within four or five months of the deadline for submission of national data sets. From the time it is finalised the international data file is restricted for the use of member country teams for a period of three years, after which time the data are available for external use by agreement with Principal Investigators across the study.

With the increased demand for published HBSC data and requests for data for secondary analysis, it is necessary to ensure the quality of the data. All data processing, including consistency checks, age cleaning, derivation of variables, and imputation is therefore handled centrally. Data sets are accepted on receipt of completed data documentation record, which provides information on fieldwork dates, sampling procedures (to check compliance with reviewed sampling plan), data collection procedures, response rates, languages used, funding, and deviations from the Research Protocol. Primary sampling units and stratification variables are clearly identified, enabling the precision of survey estimates to be correctly adjusted for in subsequent analyses15 and recognising the increasing use of hierarchical modelling methods.16
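The reason for identifying primary sampling units can be shown with a small numerical sketch. It compares the standard error of a proportion computed as if students were sampled independently with one that treats each class as a cluster. This is a simplified, assumed illustration using a between-cluster (linearisation) variance estimator; it ignores stratification and weights, the data are invented, and the function name is hypothetical.

```python
from collections import defaultdict
from math import sqrt


def proportion_standard_errors(records):
    """Return (p, naive_se, cluster_se) for (cluster_id, y) pairs, y in {0, 1}."""
    records = list(records)
    n = len(records)
    p = sum(y for _, y in records) / n
    # Naive standard error, assuming independent observations.
    naive_se = sqrt(p * (1 - p) / n)
    # Sum the residuals (y - p) within each primary sampling unit.
    residual_totals = defaultdict(float)
    for cluster_id, y in records:
        residual_totals[cluster_id] += y - p
    m = len(residual_totals)
    # Between-cluster variance estimator for the estimated proportion.
    variance = (m / (m - 1)) * sum(e * e for e in residual_totals.values()) / n ** 2
    return p, naive_se, sqrt(variance)


# Invented data: four classes of five students whose answers agree within class.
data = [(c, 1) for c in ("c1", "c2") for _ in range(5)]
data += [(c, 0) for c in ("c3", "c4") for _ in range(5)]
p, naive_se, cluster_se = proportion_standard_errors(data)
print(p, round(naive_se, 3), round(cluster_se, 3))
```

When answers are similar within classes, as in this deliberately extreme example, the clustered standard error is much larger than the naive one, which is why analyses that ignore the sampling design overstate precision.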

Tensions

The assiduous and continuing attention to detail in questionnaire content, sampling procedures, data collection, and file preparation results in a high quality and comprehensive cross-national data file that describes adolescents’ health and health behaviours in a manner no other study currently does. However, there are inevitable tensions for the HBSC, as for any other large, ongoing survey.

Tension 1: Maintaining quality standards against a background of rapid growth

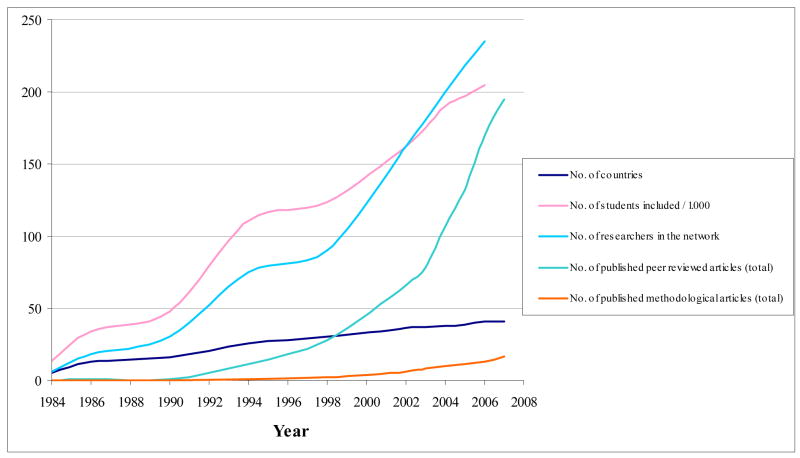

Figure 1 illustrates the development of the HBSC study since 1983 in terms of participating countries, study participants, researchers, and scientific articles on both subject-matter and methodological issues. The growth from 3 to 41 countries within HBSC (and the corresponding growth in the number of researchers and scientific publications) provides exciting opportunities for cross-national analyses and learning. It also presents challenges: adapting to new social, economic, political, and educational contexts; improving organisational infrastructures; and dealing with linguistic differences across countries.

Figure 1.

Development within HBSC from 1984 to 2007

The HBSC questionnaire content and sampling methods were originally developed to meet the needs of a small number of European countries.17 Content and sampling have been adapted to other national contexts through a process of learning and negotiation. The challenge is to agree on a questionnaire that is both relevant to national needs and preserves the original purpose of HBSC. To maintain comparability and respect national needs, a set of core questions is established, and these are combined at a country level with optional and country-specific sets of items. Only in extreme circumstances are core questions not included by individual countries, for example, where answering a question might be perceived to incriminate a student (e.g., substance use or sexual behaviour) or jeopardise the entire survey (e.g., national sensitivities over issues such as sexual behaviour). Cross-national differences in school systems, the heterogeneity of age groups within school grades, and other characteristics of the school system must also be taken into account, although such information is not always readily available.

International structures have been implemented to maximise the scientific and organisational capacity of the network. An important initiative has been the addition of the Methodology Development Group (MDG). The MDG comprises subgroups charged with addressing specific methodological issues, organises workshops at international meetings, and provides specific guidance and advice to members, most importantly assistance with, and formal review of, sampling plans. In addition, country zones provide organised opportunities for geographically and culturally proximate countries to share concerns and develop in accordance with their common needs.

Study expansion has also focused attention on the threat of inappropriate or inaccurate questionnaire translation. The challenge is to be both culturally relevant and comprehensible.18 Translated questions are translated back into the source language (English) and compared against the original. This process is not immune to errors,6 but has been strengthened by a rigorous system in which back-translations are checked at the ICC, followed by further review with the researchers and translators. In addition, a preamble to a question or set of questions is provided for students where thought necessary, such as when describing bullying or physical activity. In this process, the aim is not only to provide a linguistically sound translation, but also to ensure that questions are relevant to and understood by participating students.

Improved electronic communication and the establishment of working groups support relationships and understanding within the multi-disciplinary network of researchers that comprises HBSC. Such collaborations lead to the exchange of crucial formal and informal support and the networking that enables the methodological challenges of expansion to be avoided, uncovered, understood, and addressed.

Tension 2: Continuous improvement with limited financial resources

Lynn proposes how an international study can set cross-national quality standards, distinguishing different kinds of quality.19 The primary distinction is between two approaches. Under the maximum quality approach, each country aims for its national maximum standard, which can come at the expense of consistency and comparability. Under the consistent quality approach, all countries must have the same level of quality, which maximises consistency and comparability at the expense of quality. An intermediate possibility is constrained maximum quality, where certain elements are expected at the international maximum quality level, while other elements are at the national maximum standard.

If nations (and teams within nations) possessed the same financial resources, there would be no need to worry about maximum versus consistent quality and to fear that consistent quality would involve lowering all countries to the lowest common denominator. Unfortunately, countries do have different financial resources with attendant consequences for their ability to educate and employ top-level researchers to conduct the study, their fiscal capacity to send researchers to international meetings, and their capability of paying the necessary costs to administer a survey across sometimes vast geographical regions.

The most apparent solution to this tension is some measure of transfer of funds. To a certain extent, a transfer of funds takes place in the centralisation of key functions within HBSC, such as study coordination and data management. Furthermore, a subscription system is in place whereby those countries with sufficient funds provide some support for study coordination. However, more drastic measures, such as countries with greater financial resources contributing directly to countries with lesser financial resources, remain unlikely in the current global economic and political situation.

Member countries recognize the importance of mutual methodological issues19 but understand the necessity for methodological compromises with respect to quality to achieve functional equivalence in international surveys.20 Each member country of the HBSC therefore endeavours to perform its survey in accordance with the guidelines from the network. However, where there is doubt, or where a more financially feasible solution is available, that solution will sometimes need to be used, and deviations from the international study design will occur.

To lessen the need for deviations, decisions about functional equivalence have been reached within HBSC by inviting Principal Investigators (PIs) and members to debate and reach a common conclusion on the matter, since decisions about survey quality, whether in a national or cross-national context, are context dependent.21 For this process to be successful, good will, enthusiasm, and transparency are needed from all members, and joint ownership is helpful.

Tension 3: Accommodating analysis of trends with the need to improve and adapt questionnaire content

In many countries, HBSC is the key source of statistics on adolescent health and health behaviours and, with each survey conducted, the study of both national and international trends becomes increasingly relevant and important. From a policy perspective, trends are highly interesting as they can be used to study the effect of national-level adolescent health policies and interventions.

A tension arises between 1) monitoring trends requiring that measurements stay the same over time and that a substantial part of the survey each time is devoted to outcome measures of health and health behaviours, and 2) explanatory and exploratory research aiming at continuously studying and understanding correlates of adolescent health and health behaviours as well as exploring new aspects of adolescent health and health behaviours. Typically the health behaviour and health perception measures have been part of the mandatory items that are repeated each survey for all countries, whereas explanatory variables are part of a section that changes for each survey by the choice of the individual country.

Over the years, the number of trend issues and variables has increased, implying that less space is available for exploring the relevance of individual, social, and setting-specific experiences in understanding adolescent health and health behaviours. Thus HBSC has to some extent grown into a monitoring survey that meets the interests of policymakers across countries in Europe and North America, but constrains researchers in the HBSC network who primarily want to explore new areas of adolescent health.

Monitoring trends also presents challenges to the study. To monitor trends, indicators measuring health behaviours and health perceptions from the early phase of the study have been kept unchanged as long as they provide moderate reliability and validity. High standards have in some cases been traded for long-term trends. To ensure that any new mandatory items meet high reliability standards, items now have to demonstrate strong measurement properties in a minimum of 10 countries across two to three surveys, i.e., there will be a minimum of 8 years before an item is used as a mandatory item to collect data across all countries. This quality requirement might be considered counterproductive in providing interesting and relevant monitoring information on, for instance, new technology behaviours that develop quickly. Other issues that require a balance between monitoring trends and developing the study are changes to sampling, data collection, and data cleaning procedures aimed at increasing the accuracy of data. Such changes can limit the opportunities for trend analysis, as the basis of comparison over time is no longer the same.

Thus an international survey like the HBSC study needs to find a way of balancing different foci within the study as well as external expectations taking into account the role the study has as a powerful data collection tool for both monitoring as well as for exploratory research.

Tension 4: Meeting the differing requirements of scientific and policy audiences

In recent years, there has been a call for increasing linkages between researchers and policymakers within the health policy field.22–24 It is argued that policymakers can benefit from researchers in crafting evidence-based policies that have more possibility of providing desired outcomes, while researchers can benefit from having their research used in a timely and constructive fashion. In this argument, both parties make substantial gains.

However, not all observers agree with this assessment. Hammersley25, in particular, makes a compelling case that the needs of researchers and policymakers are too diverse to suggest that policy can be based largely on the results of research. The fundamental problem lies in standards of acceptability. Researchers tend to be wary of putting forth claims about which they have some reservations (e.g., low threshold values for statistical significance). In contrast, policymakers need to make decisions in a timely fashion with or without conclusive evidence.

Other observers, while noting tensions between research and policy, are more sanguine about the prospects that these tensions can be resolved.23, 26–27 While they are acutely aware of the cultural divide between researchers and policymakers,26 caused by a focus on scientific publications by researchers and an emphasis on politics and public opinion by policymakers,27 these observers feel the divide can be bridged through collaboration.27–29 This collaboration needs to be a genuine undertaking by all parties and not contrived collaboration,28 done primarily to satisfy funding agencies.

HBSC has built collaboration and strengthened linkages between research and policy in two primary respects. First, it has established two governance structures concerned with scientific and policy issues: the Scientific Development Group (SDG) and the Policy Development Group (PDG), each with representatives from across the study. While working separately to ensure that both researcher and policymaker concerns are raised in the design and utilization of the survey, joint discussions are also held to debate issues of mutual interest.

Second, HBSC network researchers have built explicit connections with policymakers. In a formal sense, these connections come through regular attendance of WHO representatives at HBSC meetings, the presence of policymakers from the hosting nation at meetings, and the widespread dissemination of HBSC national and international data in a variety of formats including reports, fact sheets, and websites. Most recently, a series of jointly organised HBSC/WHO Forums has been established, bringing researchers and high-level policymakers together to discuss issues of topical importance, such as socioeconomic determinants of eating habits and physical activity in 2006.30

In an informal sense, these connections are established when funding is requested. Negotiations involve current policy initiatives and emerging/ongoing research concerns, each with attendant data needs. These negotiations can bring underlying tensions to the surface, especially insofar as funding is secured and data are collected nationally, while questionnaire design is undertaken on a cross-national basis.

Two research directions, each already undertaken by HBSC in a limited fashion, might be broadened to further engage policymakers with researchers. One possibility is the creation of systematic reviews providing a lens into research for policymakers.31 When written in an accessible style, these reviews show promise. Another possibility is the greater use of mixed-method designs.32 Providing policymakers with compelling narrative to complement compelling statistics could result in greater use of research in forming policy.

Discussion

At the time of the 25th anniversary of the HBSC study, it can be concluded that the study has managed to adapt to developments in the research areas within and around public health and policy, relying on the expertise of current and past members. This has been a constructive and fruitful approach, but one that must continually seek innovative ways to resolve the inherent tensions in a cross-national study. In this paper, we have explained the structure of the HBSC survey, the ongoing tensions that arise from conducting a large-scale survey across diverse nations, and some current initiatives and interesting possibilities to address these tensions.

What does the future hold for HBSC and other similar cross-national studies? On the one hand, we can lament the downsides of the situation: work within the cross-national survey competes with other demands on the researchers and policymakers involved, important areas risk being neglected, and the needs of differing stakeholders across culturally divergent countries can never be completely met. On the other hand, we can celebrate what we have accomplished: the bringing together of over 250 researchers in 41 countries (and growing!) to address one of the most critical issues currently facing adolescents: their health and health behaviours.

References

- 1. Aaro LE, Wold B, Kannas L, Rimpela M. Health behaviour in school-children. A WHO cross-national survey. Health Promotion. 1986;1:17–33.

- 2. Smith C, Wold B, Moore L. Health behaviour research with adolescents: a perspective from the WHO cross-national Health Behaviour in School-aged Children study. Health Promotion Journal of Australia. 1992;2:41–44.

- 3. UNICEF. Innocenti Research Centre Report Card 7. Florence: UNICEF, Innocenti Research Centre; 2007. Child poverty in perspective: An overview of child well-being in rich countries. A comprehensive assessment of the lives and well-being of children and adolescents in the economically advanced nations.

- 4. Bradshaw J, Hoelscher P, Richardson D. An index of child well-being in the European Union. Social Indicators Research. 2007;80:133–177.

- 5. Association of Public Health Observatories. Indications of Public Health in the English Regions. 5: Child Health. Association of Public Health Observatories; 2006.

- 6. Roberts C, Currie CE, Samdal O, Currie D, Smith R, Maes L. Measuring the health and health behaviours of adolescents through cross-national survey research: recent developments in the Health Behaviour in School-aged Children study. Journal of Public Health. 2007;15:179–186.

- 7. Boyce W, Torsheim T, Currie C, Zambon A. The Family Affluence Scale as a Measure of National Wealth: Validation of an Adolescent Self-reported Measure. Social Indicators Research. 2006;78:473–487.

- 8. Elgar F, Moore L, Roberts C, Tudor-Smith C. Validity of self-reported height and weight and predictors of bias in adolescents. Journal of Adolescent Health. 2005;37:371–375. doi: 10.1016/j.jadohealth.2004.07.014.

- 9. Ravens-Sieberer U, Erhart M, Torsheim T, Hetland J, Freeman J, Danielson M, Thomas C and the HBSC Positive Health Group. An international scoring system for self-reported health complaints in adolescents. European Journal of Public Health. 2008;18:294–299. doi: 10.1093/eurpub/ckn001.

- 10. Vereecken C, Maes LA. A Belgian study on the reliability and relative validity of the Health Behaviour in School-Aged Children food frequency questionnaire. Public Health Nutrition. 2003;6:581–588. doi: 10.1079/phn2003466.

- 11. Haugland S, Wold B. Subjective health in adolescence - Reliability and validity of the HBSC symptom check list. Journal of Adolescence. 2001;24:611–624. doi: 10.1006/jado.2000.0393.

- 12. Torsheim T, Wold B, Samdal O. The Teacher and Classmate Support Scale: Factor Structure, test-retest reliability and validity in samples of 13- and 15-year-old adolescents. School Psychology International. 2000;21:195–212.

- 13. Currie C, Nic Gabhainn S, Godeau E, Roberts C, Smith R, Currie D, Pickett W, Richter M, Morgan A, Barnekow V. Health Policy for Children and Adolescents, No. 5. Copenhagen: WHO Regional Office for Europe; 2008. Inequalities in young people’s health: HBSC international report from the 2005/2006 Survey.

- 14. Groves RM, Fowler FJ, Couper MP, Lepkowski JM, Singer E, Tourangeau R. Survey Methodology. New York: Wiley; 2004.

- 15. Lee ES, Forthofer RN. Analysing complex survey data. 2. Beverly Hills: SAGE; 2005.

- 16. Leyland A, Goldstein H, editors. Multilevel modelling of health statistics. New York: Wiley; 2001.

- 17. Aaro LE, Wold B. Health behaviour in school-aged children. A WHO cross-national survey: Research protocol for the 1989/90 study. Bergen, Norway: University of Bergen, Department of Social Psychology; 1989.

- 18. Sperber AM, Devellis RF, Boehlecke B. Cross-cultural translation: Methodology and validation. Journal of Cross-Cultural Psychology. 1994;25:501–524.

- 19. Lynn P. Developing quality standards for cross-national survey research: five approaches. International Journal of Social Research Methodology. 2003;6:323–36.

- 20. Hantrais L. Combining methods: A key to understanding complexity in European societies? European Societies. 2005;7:399–421.

- 21. Harkness J. In pursuit of quality: issues for cross-national survey research. International Journal of Social Research Methodology. 1999;2:125–40.

- 22. Franklin GM, Wickizer TM, Fulton-Kehoe D, Turner JA. Policy-relevant research: When does it matter? NeuroRx. 2004;1:356–362. doi: 10.1602/neurorx.1.3.356.

- 23. Heinemann AW. State of the science on postacute rehabilitation: setting a research agenda and developing an evidence base for practice and public policy: an introduction. Journal of NeuroEngineering and Rehabilitation. 2007;4:43–48. doi: 10.1186/1743-0003-4-43.

- 24. Hennink M, Stephenson R. Using research to inform health policy: Barriers and strategies in developing countries. Journal of Health Communication. 2005;10:163–180. doi: 10.1080/10810730590915128.

- 25. Hammersley M. The myth of research-based practice: The critical case of educational inquiry. International Journal of Social Research Methodology. 2005;8:317–330.

- 26. Berman J. Connecting with industry: bridging the divide. Journal of Higher Education Policy and Management. 2008;30:165–174.

- 27. Leadbetter B, Marshall A, Banister E. Building strengths through practice-research-policy collaborations. Child and Adolescent Psychiatric Clinics of North America. 2007;16:515–532. doi: 10.1016/j.chc.2006.11.004.

- 28. Lawson HA. The logic of collaboration in education and the human services. 2004;18:225–237. doi: 10.1080/13561820410001731278.

- 29. Hargreaves A. Transforming knowledge: Blurring the boundaries between research, policy, and practice. Educational Evaluation and Policy Analysis. 1996;18:105–122.

- 30. WHO/HBSC. Addressing the socioeconomic determinants of healthy eating habits and physical activity levels among adolescents. Copenhagen: WHO Regional Office for Europe; 2006.

- 31. Oakley A. The researcher’s agenda for evidence. Evaluation and Research in Education. 2004;8:12–27.

- 32. Siraj-Blatchford I, Sammons P, Taggart B, Sylva K, Melhuish E. Educational research and evidence-based policy. The mixed-method approach of the EPPE project. 2006;19:63–82.