Abstract

Short-term memory (STM), or the ability to hold verbal information in mind for a few seconds, is known to rely on the integrity of a frontoparietal network of areas. Here, we used functional magnetic resonance imaging to ask whether a similar network is engaged when verbal information is conveyed through a visuospatial language, American Sign Language, rather than speech. Deaf native signers and hearing native English speakers performed a verbal recall task, where they had to first encode a list of letters in memory, maintain it for a few seconds, and finally recall it in the order presented. The frontoparietal network described to mediate STM in speakers was also observed in signers, with its recruitment appearing independent of the modality of the language. This finding supports the view that signed and spoken STM rely on similar mechanisms. However, deaf signers and hearing speakers differentially engaged key structures of the frontoparietal network as the stages of STM unfold. In particular, deaf signers relied to a greater extent than hearing speakers on passive memory storage areas during encoding and maintenance, but on executive process areas during recall. This work opens new avenues for understanding similarities and differences in STM performance in signers and speakers.

Keywords: American Sign Language, deafness, fMRI, short-term memory

Introduction

Short-term memory (STM) is the set of cognitive functions that allow individuals to actively maintain and manipulate information in the service of cognition. It is widely accepted that STM is not a unitary concept but rather can be subdivided into different component processes, including buffers for the temporary maintenance of linguistic and/or visuospatial information and a “central executive” system (Norman and Shallice 1980; Baddeley 1986; Miyake and Shah 1999). Based on this model, the basic processing stages involved during a serial recall task (in which participants are asked to recall lists in the order of presentation) can be described as consisting of “encoding,” or the processes that mediate the analysis of the stimulus and the initial establishment of its memory trace, memory “maintenance” including the rehearsal process by which the memory trace for the stimulus is refreshed while in memory, and “response” selection or retrieval of the stimulus from memory for recall. Here, we ask how the use of a visuospatial language such as American Sign Language (ASL) affects the brain systems known to mediate these different stages of processing. To the extent that the network of areas for STM is invariant across different types of language inputs (auditory, written, and pictorial), we may expect the same brain systems to be recruited in signers and in speakers. Yet, differences in STM performance have been reported between deaf signers and hearing speakers, and these differences may have their source in the use of different neural mechanisms across populations.

Neuroimaging studies of the neural systems underlying performance during each of the 3 main stages of STM and converging evidence from brain-damaged patients (Warrington and Shallice 1969; Shallice and Warrington 1974a, 1974b; Shallice and Butterworth 1977; Shallice 1988; Vallar et al. 1997) reveal distinct patterns within an overlapping frontoparietal network (Rypma and D'Esposito 1999; Henson et al. 2000; Chein and Fiez 2001; Manoach et al. 2003). Whereas brain activation during encoding reflects in large part the sensory characteristics of the information to be laid down in STM and recall the motor requirements of executing the response, all 3 processing stages have been found to rely on the integrity of a wide frontoparietal circuit (for a meta-analysis see Wager and Smith 2003). This network includes prefrontal regions ventrally (inferior frontal gyrus [IFG], Brodmann’s area [BA] 44/45) and dorsally (dorsolateral prefrontal cortex [DLpFC] and superior frontal cortex, BA 10/9/46), the inferior parietal cortex (especially BA 7/40), sensory and motor regions (superior temporal cortex, BA 21/42, premotor and supplementary motor areas [SMA], BA 4/6), and the cingulate gyrus (BA 32) (Smith and Jonides 1997; Cabeza and Nyberg 2000; Hickok and Poeppel 2000). Although the exact contributions of each of these areas to STM are a topic of ongoing debate, there is evidence that dorsal and ventral areas within the prefrontal and parietal cortex exhibit some degree of functional specialization. In particular, it has been proposed that the ventral prefrontal cortex mediates passive storage of information under low load conditions and the dorsal prefrontal cortex is recruited as the capacity of the maintenance buffer is exceeded and the task requires not only maintenance but also active manipulation of information and other executive processes like response selection and information retrieval (Rypma and D'Esposito 1999, 2003; Braver and Bongiolatti 2002; Sakai et al. 2002; Narayanan et al. 2005). Similarly, functional distinctions have been proposed for the inferior parietal cortex. The dorsal aspect of the inferior parietal cortex (DIPC, with coordinates centered on −34, −51, 42 in the left hemisphere according to Wager and Smith [2003] and Ravizza et al. [2004]) appears to act with the dorsal prefrontal areas as part of the executive system, playing a role in processes such as retaining temporal order information, allocating attentional resources to items in memory as they get rehearsed, or preparing for action (Wager and Smith 2003; Ravizza et al. 2004). In contrast, the ventral inferior parietal cortex has been proposed to mediate the establishment of articulatory representations when processing speech or recoding written material into speech (Hickok and Poeppel 2000; Ravizza et al. 2004). Accordingly, its participation is observed in phonological discrimination and identification tasks as well as reading.

The present study investigates the participation of this network of areas during a STM span task in native users of ASL. ASL is a natural visuogestural language used by the Deaf community in North America, with comparable grammatical complexity and ability to convey abstract meanings as natural spoken languages (Klima and Bellugi 1979; Emmorey and Lane 2000). Thus signing is visuomotor in nature but nonetheless linguistic. Furthermore, encoding and rehearsal of spoken and signed information in STM appears to rely on similar cognitive mechanisms. Just as the encoding and rehearsal of spoken information is based on the phonemes forming the target words, the encoding and rehearsal of signs is based on the formational parameters (hand shape, location, and movement) of the target signs (Wilson and Emmorey 2000). The similarity in mechanisms across STM in speakers and signers is best exemplified by the findings of similar key STM effects in signers as in speakers. For example, serial recall in signers is affected by the same factors as in speakers, including phonological similarity (Conrad 1970, 1972; Wilson and Emmorey 1997), sign length (Wilson and Emmorey 1998), manual articulatory suppression (MacSweeney et al. 1996; Wilson and Emmorey 1997; Losiewicz 2000), and irrelevant signed input (Wilson and Emmorey 2003). These results indicate that, like speakers, native ASL signers rely on phonological encoding in signed STM and use a subarticulatory mechanism to rehearse signs in STM (for a review, see Wilson and Emmorey 2000).

Thus, the available behavioral evidence indicates that spoken and signed STM rely on similar cognitive mechanisms. Yet, signed and spoken verbal STM differ in rather striking ways. First, the reliance of sign language on the visual modality and the role of spatial information in sign language raise the possibility that signed STM may engage brain systems associated with visuospatial STM to a greater extent than those involved in verbal STM (for review of verbal vs. visuospatial STM, see Smith et al. 1996; Wager and Smith 2003; Walter et al. 2003; Jennings et al. 2006). Second, the ability to accurately recall a short list of items in the order it is presented differs between users of ASL and English speakers. Using a standard STM task where subjects are asked to recall a list of digits in the exact order presented, it has been shown that, whereas speakers can recall accurately a mean of 7 ± 2 items, signers reach their capacity limit at 5 ± 1 items (Bellugi and Fisher 1972; Bellugi et al. 1974–1975; Hanson 1982; Krakow and Hanson 1985; Boutla et al. 2004; for a different view, see Wilson and Emmorey 2006a). This population difference was found to be limited to serial STM span tasks, as speakers and signers perform similarly on other working memory tasks such as free recall or the speaking span (Hanson 1982; Boutla et al. 2004; Bavelier et al. 2007). Here, we compared the similarities and differences in brain networks underlying STM of speech and sign to further our understanding of the impact of language modality on the brain organization of STM processes.

The few neuroimaging studies of STM in signers indicate similar brain systems in signers and speakers, with possible differences in the recruitment of parietal areas. Deaf subjects asked to rehearse a short list of pseudosigns exhibited activation in a frontoparietal network with a marked recruitment of the DIPC, especially on the left. In accord with this finding, the same study reports deficits in signed STM in a deaf signer with a frontoparietal lesion on the left (Buchsbaum et al. 2005). Greater participation of the DIPC in signers than in speakers has also been reported by Ronnberg et al. (2004). Bilinguals in Swedish Sign Language and spoken Swedish were asked to perform immediate serial recall of lexical items in signs versus speech. Brain areas that were more recruited for sign than speech included the DIPC on the left, as well as right and left middle temporal areas and right postcentral gyrus. These 2 previous studies of signed STM, therefore, indicate a common frontoparietal network across signers and speakers, with some differences across modality in the recruitment of the DIPC.

In the present study, we make use of advances in our understanding of the neural bases of encoding, maintenance, and serial recall during a STM task to gain a better understanding of the commonalities as well as the differences between signed and spoken STM processes. This study builds on recent work that has characterized the role of specific brain regions in STM tasks using event-related functional magnetic resonance imaging (fMRI) (Rypma and D'Esposito 1999; Chein and Fiez 2001; Manoach et al. 2003). In these studies, participants are asked to perform a verbal recall task composed of 3 phases. First, during the encoding phase, the to-be-recalled letters are presented in a natural fashion (in the present study, an audio–visual movie for English speakers and an ASL movie for deaf signers) and participants are asked to encode the target set in STM. Second, during the maintenance phase, participants are required to maintain the target set in STM over a short period of time (in the present study, 13.2 s). Finally, during the recall phase, participants are asked to overtly recall the items in the original target set.

This event-related paradigm has 2 important features. First, parametric changes in information load can be implemented by systematic variations of the number of to-be-remembered items on a trial-to-trial basis. This feature allowed us to contrast the impact of a low versus high load on the brain systems supporting STM in signers and speakers. Based on previous studies showing different maximum spans in signers than in speakers, the number of items in the high load condition corresponded to the maximum span of each population (7 for speakers and 5 for signers) and the low load condition consisted of approximately 40% of the maximum span (3 for speakers and 2 for signers; this number was chosen so as to engage STM resources without overtaxing them). These choices ensured comparable performance across populations for each load condition. The second important feature of the event-related design is that brain responses during the individual trial phases can be examined independently, allowing us to identify the contribution of specific brain areas in encoding, maintenance, and recall during a STM task. This design was therefore instrumental in identifying and comparing the brain regions mediating the different stages of STM as a function of load in signers and speakers.
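The load levels described above can be reproduced with a few lines of arithmetic. The sketch below is illustrative only: the function name `load_levels` is hypothetical, and rounding to the nearest whole item is our assumption about how 40% of a 7-item span becomes 3 items.

```python
# Maximum serial spans used for the high-load condition (values from the text).
MAX_SPAN = {"speakers": 7, "signers": 5}
LOW_LOAD_FRACTION = 0.40  # low load is described as 40% of the maximum span


def load_levels(max_span, fraction=LOW_LOAD_FRACTION):
    """Return (low, high) list lengths.

    Rounding to the nearest whole item is an assumption, not stated in the text.
    """
    low = round(max_span * fraction)
    return low, max_span


for group, span in MAX_SPAN.items():
    low, high = load_levels(span)
    print(f"{group}: low load = {low} items, high load = {high} items")
```

Under this assumption the low-load list is 3 of 7 items for speakers (about 43%) and 2 of 5 items for signers (exactly 40%).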

Materials and Methods

Subjects

The present experiment included 2 groups. A group of 12 native English speakers was recruited from the Rochester, NY, area. A second group of 12 adult Deaf native ASL signers were recruited from the campus of Gallaudet University (Washington, DC). Two subjects in each group had to be discarded (see Data Preprocessing) leaving 10 subjects per group (6 females, mean age: 23 years old per group).

All signers were born profoundly Deaf to Deaf parents (hearing loss equal to or greater than 90 dB in each ear, except for 1 subject who had a hearing loss of 70 dB in the right ear and 75 dB in the left ear). All signers were exposed to ASL from birth through their parents and considered ASL as their primary language. At the time of the study, all signers were enrolled in programs taught in ASL. We recognize that these selection criteria limit this study to Deaf native signers, who represent only 5–10% of the Deaf population. However, Deaf native signers are the one subgroup in the Deaf community for which ASL is by far the preferred mode of communication, ensuring its use at all stages of the STM task. None of the hearing native English speakers were familiar with ASL. All signers and speakers were right handed. Informed consent was obtained from each participant according to the guidelines of the ethics committees of the University of Rochester, Gallaudet University, and the National Institutes of Health (NIH). All participants were paid for their participation.

Equipment

Stimuli were presented on a Macintosh G3 computer (Apple Computer, Inc., Cupertino, CA) using the Psychophysics Toolbox (Brainard 1997; Pelli 1997) extensions to Matlab (The MathWorks Inc., Natick, MA). The stimuli were projected from an LCD projector and participants viewed the screen via a mirror attached to the head coil. For deaf signers, the visual stimuli were projected on a screen placed at the back of the magnet bore that was viewable through the head coil mirror. For hearing speakers, the screen was mounted on the head coil a short distance from the mirror. The field of view (FOV) was similar but not identical across setups. The FOV for each setup was chosen so that the stimuli would look natural to native users of the language given the apparatus used. Although we acknowledge this is not ideal, the lack of activation differences in early visual cortical areas across populations in the results suggests that low-level stimulus differences did not affect the results of this experiment.

Stimuli

English and finger-spelled letters were selected for each population. Although the serial span is typically tested using digits, digits are problematic when performing ASL–English comparisons as they are more formationally similar in ASL than in English, which could be a factor in the smaller span reported in signers. In addition, it has been proposed that digits may differ from other materials when it comes to STM performance (Wilson and Emmorey 2006a, 2006b). Therefore, letters were used; the letters were chosen in each language so as to minimize phonological similarity and thus varied slightly across languages (see Bavelier et al. [2006] for a discussion of this point).

ASL Material

Sixty sequences of ASL finger-spelled letters were constructed based on a set of phonologically dissimilar letters from the finger-spelled ASL alphabet (B, C, D, F, G, K, L, N, and S). The sequences were signed by a Deaf native ASL signer at a rate of about 3 items per second and videotaped. The load manipulation was implemented by having half of the sequences be of the average span length in signers (high load: 5 letters) and the other half be of 40% of that length (low load: 2 letters) (Mayberry and Eichen 1991; Mayberry 1993; Boutla et al. 2004). All sequences were edited into 3-s movies using iMovie (Apple Computer Inc.).

English Material

The 60 English sequences were constructed from the following set (B, F, K, L, M, Q, R, S, and X). The sequences were spoken by a native English speaker (A.J.N.) at a rate of about 3 items per second and recorded to digital videotape with audio recorded using a lapel microphone (Sony Electronics Inc.). The load manipulation was implemented by varying the number of letters per sequence between the average span in speakers (high load: 7 letters) and 40% of this value (low load: 3 letters). All sequences were edited into 3-s movies using iMovie (Apple Computer Inc.).

Design and Procedure

Each trial included 3 phases. During the encoding phase, the target letter sequence was presented to the participant via a 3-s movie (in ASL for signers and audio–visual English for speakers) followed by a still picture of the same individual who was either signing or speaking for 1.4 s. Thus, the encoding phase lasted a total of 4.4 s or 2 repetition times (TRs). All participants were instructed to memorize the letter sequence presented in the movie. During the maintenance phase (13.2 s or 6 TRs), a still picture of the model remained on the screen. The participants were instructed to covertly rehearse the letter sequence in the language it had been presented in.

At the beginning of the recall interval, a cue was presented on the screen, instructing participants to recall the target letter sequence in the same order as presented. The participants were explicitly told to perform their recall only during the interval during which the cue remained on the screen (4.4 s). For signers, the cue was an arrow pointing toward either the right or left, with the direction of the arrow indicating which hand to use for the response on a given trial. Response hand was counterbalanced across runs. The signed responses were recorded on paper by an experimenter fluent in ASL who was present in the room. For speakers, the cue was a 2-headed arrow pointing left and right superimposed on a still picture of the model, instructing the speaker to verbally recall the target letter sequence. The responses were recorded by an experimenter sitting in the scanner room, via a sound tube with 1 end placed near the mouth of the participant and the other near the ear of the experimenter. After the recall phase, there was an intertrial interval (11 s) during which a still picture of the model was presented.

The experiment consisted of 6 runs per subject. Each run included 10 trials (5 trials of each load level). Within each run, the order of trials was pseudorandomized with the constraint that no more than 3 trials of the same load condition would follow one another. Moreover, across runs the same number of low- and high-load trials was present as 1st and last trials of a run. The order of runs was counterbalanced across participants. Prior to scanning, detailed instructions were given in English to each speaker and in ASL to each signer. Each signer was additionally trained to recall with the hand corresponding to the arrow cue prior to scanning.

MRI Data Acquisition

Deaf Signers

MRI data were acquired on a 1.5-Tesla GE (Milwaukee, WI) scanner at the NIH, Bethesda, MD. Signers’ heads, shoulders, and elbows were stabilized using pillows and foam. Functional images sensitive to blood oxygenation level–dependent (BOLD) contrasts were acquired by T2*-weighted echo planar imaging (TR = 2.2 s, echo time [TE] = 40 ms, flip angle [FA] = 90 degrees, 64 × 64 matrix, FOV = 24 cm, in-plane voxel resolution = 3.75 × 3.75 mm, 22 slices, slice thickness = 5 mm, interslice gap = 1 mm). High-resolution structural T1-weighted images (TE = minimum, FA = 15 degrees, FOV = 24 cm, 256 × 192 matrix, voxel size 1 × 1 × 1.2 mm, 124 sagittal slices) were also acquired from all signers. Each run consisted of 155 time points. The first 5 time points of each run were discarded to allow stabilization of longitudinal magnetization. Each participant performed 6 runs, leading to a total of 900 volumes per participant.
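The trial timing and volume counts reported above are mutually consistent, which can be checked with a short calculation. The sketch below uses only the durations given in the text; the variable and function names are hypothetical.

```python
TR = 2.2  # repetition time in seconds

# Phase durations in seconds, as given in Design and Procedure.
PHASES_S = {"encoding": 4.4, "maintenance": 13.2, "recall": 4.4, "intertrial": 11.0}

TRIALS_PER_RUN = 10
RUNS = 6
DISCARDED_PER_RUN = 5  # initial time points dropped from each run


def to_trs(seconds, tr=TR):
    """Convert a duration to a whole number of TRs; fail if it is not a multiple."""
    n = round(seconds / tr)
    if abs(n * tr - seconds) > 1e-6:
        raise ValueError(f"{seconds} s is not a whole number of TRs")
    return n


trs_per_trial = sum(to_trs(s) for s in PHASES_S.values())  # 2 + 6 + 2 + 5
analysed_per_run = trs_per_trial * TRIALS_PER_RUN
acquired_per_run = analysed_per_run + DISCARDED_PER_RUN
analysed_total = analysed_per_run * RUNS

print(trs_per_trial, acquired_per_run, analysed_total)
```

Each trial spans 15 TRs (33 s), so a 10-trial run yields 150 analysed volumes plus 5 discarded ones, matching the 155 time points per run and 900 volumes per participant.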

Hearing Speakers

MRI data were acquired on a 1.5-Tesla GE (Milwaukee, WI) scanner located at the University of Rochester Medical Center. This scanner was similar in specifications to that used for the Deaf participants. The pulse sequence and parameters used for data acquisition were identical to those used for the deaf signers.

Because this study required between-group comparisons with data from each group collected on different MRI systems, special provisions were taken. First, we ensured comparable stability on the 2 MRI systems by installing the same BOLD sequence and, importantly, using the same criteria to assess field stability on the Rochester magnet as at NIH. Second, to further assess the comparability of the data from the 2 MRI scanners, we reviewed the preprocessed data for each of the deaf and hearing subjects (i.e., the input to single-subject statistical analysis). We visually examined all of the images and did not observe any apparent systematic differences in susceptibility artifacts or other distortions. As in any other study, we did find sizeable intersubject variability in this regard, likely owing to the fact that such distortions are affected by individual differences including anatomy and dental work, as well as head position in the scanner. But importantly, there were no clear-cut differences across groups. Third, it is unlikely that the 2 MRI systems differed in important ways as the main effects in each population are of comparable size in terms of Z scores (see Supplementary Tables 1–3 online). Finally, our results show that it is not always the case that 1 population exhibits greater activation than the other as would be predicted by a difference in sensitivity between MR systems. Rather, population differences are seen to vary depending on the phase of the STM task and the part of the brain considered. This could not be the case if the population differences documented were due to instrument differences. Thus, our study is similar to other longitudinal or multisite studies in suggesting that fMRI data are quite robust across magnets (Casey et al. 1998; Zou et al. 2005; Aron et al. 2006; Friedman et al. 2006).

Data Preprocessing and Analysis

Data Preprocessing

The fMRI data were preprocessed using SPM99 software (http://www.fil.ion.ucl.ac.uk) running under Matlab 5.3 (The MathWorks Inc.). Preprocessing consisted of slice timing correction (shifting the signal measured in each slice to align with the time of acquisition of the middle slice, using sinc interpolation), realignment (motion correction, using the 1st volume of the 1st run, after discarding “dummy” scans, as a reference and reslicing using sinc interpolation), spatial normalization (using nonlinear basis functions; Ashburner and Friston 1999) of each subject's structural image to the MNI152 template and of each fMRI time series to the SPM EPI template, and spatial smoothing with an isotropic Gaussian kernel (7-mm full width at half maximum). The amount of head motion detected by the motion correction algorithm was examined for each run. Three subjects (2 deaf signers and 1 hearing speaker) were rejected on the basis of excessive motion (multiple rapid translations of >1 mm and/or rotations of >1 degree). Moreover, the data of 1 additional hearing speaker were removed as he was unable to perform the high-load task in English. All analyses are, therefore, based on a sample of 10 Deaf native ASL signers and 10 hearing native English speakers (6 females/group).

Individual Subject Analyses

Statistical analyses were performed using the AFNI (http://afni.nimh.nih.gov) software package (Cox 1996). Statistical analysis began by applying multiple regression to the concatenated time series of all 6 fMRI runs for each subject using AFNI's 3dDeconvolve (Ward, 2002—http://afni.nimh.nih.gov/pub/dist/doc/manuals/3dDeconvolve.pdf). The vectors modeling each parameter of interest were generated by convolving impulse response functions modeling the “on” periods for each stimulus type with a model of the hemodynamic response implemented in the AFNI program waver. This “WAV” model is very similar in appearance to the gamma function (Cohen 1997); it is a continuously varying function that rises from 0 beginning 2 s after event onset, peaks at 6 s, crosses 0 again at 12 s, and has an undershoot that lasts for 2 additional seconds and is 20% of the amplitude of the peak of the function (for more details see http://afni.nimh.nih.gov/). The regression model included 5 parameters of interest—1 for encoding, 3 for maintenance, and 1 for recall. The 6 TR maintenance period was subdivided into 3 equal parts, each 2 TR long. Only the middle period was considered as the maintenance period in the analyses presented, to avoid contamination from the encoding phase and motor preparation. This also ensured that each phase corresponded to a 2-TR interval. Only correct trials were included in these regressors; the encoding, maintenance, and recall periods of incorrect trials were modeled as 5 additional parameters and were ignored in group-level analyses. The model also included second-order polynomial covariates of no interest for each run, to account for linear drift and low frequency artifacts (e.g., respiration, cardiac aliasing). The estimated regression coefficients for each condition, for each subject, were normalized to percent change units (by dividing by the mean baseline coefficient across all runs) prior to group-level analyses.
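To make the structure of the regression model concrete, the sketch below lays out the 5 periods of interest as unconvolved boxcar indicators in TR units. It is a simplified illustration, not the AFNI pipeline: the period names and function names are hypothetical, trials are assumed to be contiguous and TR-aligned, the convolution with the WAV hemodynamic model and the separate regressors for incorrect trials are omitted, and the factor of 100 in the percent-change step is our assumption.

```python
TRS_PER_TRIAL = 15  # 2 encoding + 6 maintenance + 2 recall + 5 intertrial TRs
PERIOD_LENGTH = 2   # every period of interest is 2 TRs long

# Offset of each period from trial onset, in TRs (hypothetical labels).
PERIOD_OFFSETS = {
    "encoding": 0,
    "maintenance_early": 2,
    "maintenance_middle": 4,  # the only maintenance period analysed
    "maintenance_late": 6,
    "recall": 8,
}


def boxcar_regressors(n_trials):
    """Return one 0/1 indicator vector per period, before HRF convolution."""
    n_trs = n_trials * TRS_PER_TRIAL
    regressors = {name: [0] * n_trs for name in PERIOD_OFFSETS}
    for trial in range(n_trials):
        onset = trial * TRS_PER_TRIAL
        for name, offset in PERIOD_OFFSETS.items():
            for k in range(PERIOD_LENGTH):
                regressors[name][onset + offset + k] = 1
    return regressors


def percent_change(beta, baseline):
    """Express a regression coefficient relative to the mean baseline coefficient."""
    return 100.0 * beta / baseline


regs = boxcar_regressors(n_trials=10)
print({name: sum(vec) for name, vec in regs.items()})
```

With 10 trials per run, each indicator covers 20 of the 150 analysed TRs, and no TR belongs to more than one period; the 5 intertrial TRs of each trial are left unmodeled.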

Group-Level Analyses

Within-Group Analyses

We first computed the pattern of activation within each group. These analyses fulfilled several needs. First, they allowed us to check that the pattern of activation in the hearing population matched the existing literature. Second, they were necessary as inputs to the conjunction analysis done to identify areas of commonality between hearing speakers and deaf signers. Finally, areas identified as active in each population by these within-group analyses were used as a mask in the between-subject analysis. This approach ensures that population differences are only found in areas known to be active in each population and thus rules out possible artifacts due to deactivation.

For each phase (encoding, maintenance, and response), a 2-way mixed-effects ANOVA was performed on the normalized coefficients obtained from the individual subject regression analysis, with subjects as a random-effect factor and group (Deaf/hearing) as a fixed effect. For each group, the statistical map showing the main effect was converted to z scores and thresholded at z = 3.251 (P ≤ 0.001) for the encoding and recall phases and z = 2.798 (P ≤ 0.005) for the maintenance phase. A more lenient threshold was used for the maintenance phase because the existing literature predicts a smaller effect size in the maintenance phase, when neither visual stimuli nor motor responses occur, than in the encoding and recall phases. Because the number of participants was necessarily identical for all 3 phases, the choice of a more lenient threshold for the maintenance phase is a compromise means of achieving more comparable power across conditions. Still, to ensure comparable levels of false positives across conditions, Monte Carlo simulations (using AFNI's AlphaSim program) were used to determine the minimum cluster size necessary to obtain a brainwise alpha value of 0.05 in each condition at the voxelwise thresholds given above. Using a cluster connection radius of 10 mm, the minimum cluster size was estimated at 12 voxels for encoding/recall and 26 voxels for maintenance.

Conjunction Analyses

To identify regions of common activation across groups, a conjunction analysis was performed on the within-group maps described in the previous section. Areas of overlap between the thresholded statistical parametric maps of each of the 2 groups were identified separately for each phase. That is, “active” voxels in the conjunction map are those that were significantly activated (at the threshold specified in the previous section) in both groups independently.
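The conjunction logic amounts to a voxelwise logical AND of the two thresholded maps. The sketch below illustrates this on a toy 4-voxel example; the z values and function names are invented for illustration, thresholding on positive z only is our assumption, and the cluster-size criterion is omitted.

```python
Z_CRIT_ENCODING = 3.251  # voxelwise threshold reported for encoding and recall


def threshold(z_map, z_crit):
    """Mark voxels whose z score reaches the activation threshold."""
    return [z >= z_crit for z in z_map]


def conjunction(active_a, active_b):
    """A voxel is in the conjunction only if it is active in both groups."""
    return [a and b for a, b in zip(active_a, active_b)]


# Toy z maps for 4 voxels (illustrative values, not data from the study).
deaf_z = [4.1, 3.0, 3.5, 0.2]
hearing_z = [3.9, 3.6, 1.0, 0.1]

deaf_active = threshold(deaf_z, Z_CRIT_ENCODING)
hearing_active = threshold(hearing_z, Z_CRIT_ENCODING)
common = conjunction(deaf_active, hearing_active)
print(common)
```

Only the first toy voxel exceeds the threshold in both groups, so it is the only one retained in the conjunction map.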

Between-Group Analyses

Differences between groups were computed by performing 2-way mixed-effects ANOVAs with subjects considered as a random-effects variable and group as a fixed-effect variable. This analysis was limited to voxels that were shown to be active in at least one of the 2 groups, at the corrected thresholds given above (P ≤ 0.001, 0.005, and 0.001 for encoding, maintenance, and recall, respectively). This ensured that any between-group differences found would not be attributable to deactivations and further helped to control for Type I error by restricting the number of voxels considered in the between-group ANOVAs. Voxelwise cluster analyses were then performed (using AFNI's 3dclust program) on the ANOVA results, using a minimum cluster size of 10 voxels (maximum intracluster connectivity distance of 6 mm) to further control for multiple comparisons. Voxelwise significance thresholds for these between-group comparisons were z = 2.578 (P ≤ 0.01) for the encoding and response phases and z = 1.962 (P ≤ 0.05) for the maintenance phase. In a few cases, the cluster analysis revealed a large cluster (about 100 voxels or more in size) spanning multiple anatomical areas. In those cases, a second level of clustering was performed by manually subdividing the large cluster into smaller regions based on anatomical landmarks and known functional subdivisions for STM (Manoach et al. 2003; Ravizza et al. 2004). For example, 1 large cluster spanned the SMA, precentral sulcus, and anterior cingulate regions. The activation map within each of these smaller regions was subjected to the clustering algorithm with the same significance threshold and clustering parameters as described above to obtain cluster statistics for each anatomical region within the large cluster.
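The masking step can be sketched as follows: a voxel enters the between-group test only if it was active in at least one group, and within that mask a group difference in either direction is retained if it passes the more lenient voxelwise threshold. The values and names below are invented for illustration, treating the between-group z as two-sided is our assumption, and the 10-voxel cluster criterion is omitted.

```python
Z_CRIT_BETWEEN = 2.578  # voxelwise threshold reported for encoding and response


def union_mask(active_a, active_b):
    """Keep voxels that were active in at least one of the two groups."""
    return [a or b for a, b in zip(active_a, active_b)]


def masked_differences(diff_z, mask, z_crit):
    """Flag voxels inside the mask whose group difference passes the threshold."""
    return [m and abs(z) >= z_crit for z, m in zip(diff_z, mask)]


# Toy within-group activation maps and between-group z scores for 4 voxels.
deaf_active = [True, False, True, False]
hearing_active = [True, True, False, False]
group_diff_z = [2.9, -2.7, 1.0, 3.5]  # positive: Deaf > hearing (illustrative)

mask = union_mask(deaf_active, hearing_active)
significant = masked_differences(group_diff_z, mask, Z_CRIT_BETWEEN)
print(significant)
```

The last toy voxel has the largest difference score but is excluded because it was not active in either group, which is how the mask prevents differences driven by deactivation from being reported.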

Load Analyses

The effect of STM load on brain activation was analyzed following the same general approach as described above, except that the individual subject regression model included 10 parameters of interest—1 for encoding, 3 for maintenance, and 1 for recall, at each of the 2 load levels (high and low). The regression coefficients for each load condition were entered in a 2-way mixed-effects ANOVA (with load as a fixed effect and subjects as a random effect) to compute the effects of load manipulation per phase within each group. To test the effects of the load manipulation between groups, differences in activation between high and low load for each phase were computed as linear contrasts in the individual subject regression analyses, and the resulting difference coefficients were used in a 2-way mixed-effects ANOVA with group as a fixed effect and subjects as a random effect. No contrast showed higher activation for low than high load; thus all reported effects below correspond to greater activation for high than low load.

Results

Behavioral

Participants were probed to recall the target list on each trial. Recall was considered correct only if participants reported all of the items in the target list in the exact order the items were presented. Performance for each group at each load level is shown in Table 1. A 2 × 2 ANOVA with group (Deaf/hearing) and load (high/low) was carried out on percent correct performance. A main effect of load was observed (F(1,18) = 65.3, P < 0.001) revealing, as expected, better performance for low than high load. Importantly, there was no effect of group (P > 0.9) and no interaction between group and load (P > 0.7) indicating comparable performance across groups at each load. This indicates that difficulty levels were well matched between groups, so group differences in brain activation are not expected to be confounded with differing levels of task difficulty and/or list length.

Table 1.

Percent correct performance on the recall task for each population by load (standard deviation is given in parentheses)

| Load | Deaf (N = 10) | Hearing (N = 10) |
| --- | --- | --- |
| Low load | 95.3 (0.04) | 96.0 (0.05) |
| High load | 57.0 (0.27) | 55.0 (0.22) |

Brain Imaging

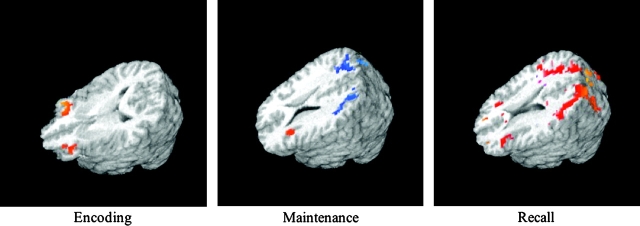

The analyses were constrained by a first set of analyses identifying the network of areas recruited during the 3 STM stages in each population separately. The goal of these first within-group analyses was to define areas of interest for all further analyses and to confirm the expected network of areas recruited in the hearing population. As can be seen in Figure 1, for each of the 3 STM stages hearing participants displayed the expected frontoparietal network of areas previously described in the literature. In addition, the general pattern of activation was quite similar in the Deaf population (summary tables of the regions activated in each population are reported in Supplementary Tables 1–3 online). Below, we discuss separately for each phase the areas of common activation across groups (identified by the conjunction analyses) as well as differences between groups (identified by between-group ANOVAs) and differences in load effect across groups (identified by between-group ANOVAs with load as a fixed effect).

Figure 1.

Overall pattern of activation for each group and each STM stage (see within-group analyses for more details). Speakers and signers recruited a similar network of areas. Crucially, deaf signers did not recruit areas beyond those normally associated with STM.

Encoding

Commonalities and differences across populations.

The conjunction analysis revealed that most of the standard frontoparietal network for encoding was shared between Deaf native signers and hearing speakers. In the frontal lobes, bilateral activations were observed in inferior frontal regions and the insula, as well as the premotor cortex, the precentral gyrus, the anterior cingulate, and the SMA. In the parietal lobes, activations were observed in the superior parietal lobules (SPL) bilaterally. In subcortical structures, robust activation was observed in the head of the caudate nucleus and cerebellum bilaterally (Table 2). Within this network, however, between-group analyses revealed group-specific differences (Table 3). Deaf signers exhibited greater recruitment than hearing speakers in the right inferior frontal and insular regions, the left cingulate gyrus, and the left thalamus, suggesting enhanced recruitment of the frontal network during encoding in signers. Note that although the ventral prefrontal difference was strongest in the right hemisphere, the same population difference was present in the left hemisphere when using a less stringent threshold (P < 0.05; not reported in Table 3), suggesting that population difference was not strongly lateralized.

Table 2.

Conjunction analysis—encoding (P < 0.001)

| Activated regions | Side | BA | Coordinate x | Coordinate y | Coordinate z | Number of voxels |
| --- | --- | --- | --- | --- | --- | --- |
| Frontal | | | | | | |
| IFG/insula | L | 13/47 | −37 | 23 | 8 | 68 |
| IFG/insula | R | 13/47 | 33 | 23 | 8 | 35 |
| Anterior cingulate | R | 32/8 | 10 | 26 | 39 | 15 |
| Lateral premotor cortex | R | 4 | 44 | −9 | 53 | 23 |
| Precentral | L | 9 | −44 | 5 | 37 | 53 |
| Precentral | R | 9 | 48 | 9 | 33 | 14 |
| SMA | L and R | 6 | 1 | 4 | 58 | 76 |
| Parietal | | | | | | |
| SPL | L | 7/19 | −32 | −67 | 43 | 21 |
| SPL | R | 7/19 | 30 | −61 | 44 | 35 |
| Temporal | | | | | | |
| STG (mid) | L | 22 | −55 | −16 | −1 | 85 |
| STG (mid) | R | 22 | 56 | −3 | −5 | 23 |
| STG (post) | L | 22 | −56 | −36 | 6 | 149 |
| STG (post) | R | 22 | 54 | −35 | 9 | 65 |
| Occipital | | | | | | |
| Superior occipital gyrus | R | 18 | 27 | −90 | −5 | 24 |
| MT/MST | L | 19/37 | −41 | −77 | −6 | 110 |
| MT/MST | R | 19/37 | 41 | −63 | −12 | 139 |
| Subcortical | | | | | | |
| Caudate | L | | −19 | 14 | 6 | 100 |
| Caudate | R | | 17 | 15 | 8 | 72 |
| Cerebellum | L | | −36 | −64 | −28 | 18 |
| Cerebellum | R | | 40 | −47 | −28 | 12 |

Note: Brodmann's areas, Talairach coordinates, peak z scores, and sizes for activated clusters are given. L, left; R, right; STG, superior temporal gyrus; and post, posterior.

Table 3.

Differences between deaf signers and hearing speakers during encoding (P < 0.01)

| Activated regions | Side | BA | Coordinate x | Coordinate y | Coordinate z | Deaf > Hearing: Z peak (z average) | Deaf > Hearing: number of voxels | Hearing > Deaf: Z peak (z average) | Hearing > Deaf: number of voxels |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Frontal | | | | | | | | | |
| IFG/insula | R | 47 | 35 | 33 | −2 | −3.56 (−3.0) | 28 | | |
| Anterior cingulate | L | 32 | −9 | 35 | 25 | −3.5 (−2.98) | 10 | | |
| Temporal | | | | | | | | | |
| STG (mid) | L | 22 | −54 | −16 | 3 | | | 3.67 (2.84) | 34 |
| STG (mid) | R | 22/21 | 58 | −9 | −1 | | | 3.42 (2.93) | 35 |
| Subcortical | | | | | | | | | |
| Thalamus | L | | −21 | −24 | −5 | −2.79 (−3.2) | 18 | | |

Note: L, left; R, right; STG, superior temporal gyrus.

In auditory and visual sensory cortices, the conjunction analysis revealed activation that spanned the medial and posterior extent of the superior temporal regions bilaterally, the superior occipital gyrus in the right hemisphere, and visual association cortices centered over the visual motion area, MT/MST, bilaterally. As expected, between-group analyses showed that hearing speakers displayed more activation than deaf signers in superior temporal regions associated with auditory processing, and deaf signers showed a tendency for more activation in MT/MST than hearing speakers during encoding. This effect was only marginally significant (P < 0.05; not reported in Table 3), probably owing to the fact that MT/MST was also activated in hearing speakers upon seeing the audio–visual stimuli, which included lip movements, eye blinks, and slight head/body motion.

Load effects during encoding.

In both groups, encoding of an increased number of items into STM led to increased activation of frontoparietal areas previously reported to be sensitive to load (Supplementary Tables 4A,B online), in particular, the DLpFC and the DIPC. Load effects were also observed in the insula and SMA bilaterally. As expected, each group also showed modality-specific effects. In hearing speakers, load effects were present in superior temporal regions (BAs 21/22, 41/42) bilaterally as well as the right lateral premotor cortex in the vicinity of the mouth representation. Deaf signers displayed a significant load effect in higher level visual areas (MT/MST bilaterally and right fusiform gyrus), the thalamus and caudate bilaterally, as well as the right medial premotor cortex in the vicinity of the hand representation (medial and dorsal to the premotor activation observed in speakers). As shown in Table 4, these modality-specific regions were the only areas to show significant group differences in load effect.

Table 4.

Group differences in load effect during encoding (P < 0.01)

| Activated regions | Side | BA | Coordinate x | Coordinate y | Coordinate z | Deaf > Hearing: peak (z average) | Deaf > Hearing: number of voxels | Hearing > Deaf: peak (z average) | Hearing > Deaf: number of voxels |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Temporal | | | | | | | | | |
| STG (mid) | Left | 22 | −57 | −16 | 1 | | | 3.5 (2.88) | 24 |
| Occipital | | | | | | | | | |
| MT/MST | Left | 19/37 | −52 | −75 | −4 | −3.94 (−3.17) | 14 | | |

Note: STG, superior temporal gyrus.

Maintenance

Commonalities and differences across populations.

As expected, activation levels were overall lower in the maintenance phase compared with the encoding and recall phases; this decrease in activation was especially marked in deaf signers. The only common activation across deaf signers and hearing speakers revealed by the conjunction analysis was in parietal areas that are typical of the STM frontoparietal network (i.e., mainly the DIPC—Table 5; note that widespread bilateral DIPC/SPL activation was observed in hearing subjects but was restricted to the right hemisphere in deaf signers; see Supplementary Tables 2A,B online). Between-group analyses confirmed the weak activation in deaf signers. All areas showed more activation for hearing speakers than deaf signers, except for the left IFG/insula, which was more activated in deaf signers (Table 6). Areas of greater activation for the hearing included the lateral premotor area (near the mouth representation), the DIPC, the cerebellum, and a left middle temporal gyrus area.

Table 5.

Conjunction analysis—maintenance (P < 0.005)

| Activated regions | Side | BA | Coordinate x | Coordinate y | Coordinate z | Number of voxels |
| --- | --- | --- | --- | --- | --- | --- |
| Parietal | | | | | | |
| Dorsal inferior parietal cortex | Right | 40 | 35 | −54 | 39 | 26 |
| Dorsal inferior parietal cortex | Right | 40 | 50 | −48 | 48 | 12 |

Table 6.

Differences between deaf signers and hearing speakers during maintenance (P < 0.05)

| Activated regions | Side | BA | Coordinate x | Coordinate y | Coordinate z | Deaf > Hearing: peak (z average) | Deaf > Hearing: number of voxels | Hearing > Deaf: peak (z average) | Hearing > Deaf: number of voxels |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Frontal | | | | | | | | | |
| Lateral premotor cortex | R | 4 | 47 | −9 | 43 | | | 3.00 (2.35) | 22 |
| IFG/Insula | L | 13 | −31 | 26 | 16 | −2.88 (−2.26) | 11 | | |
| Parietal | | | | | | | | | |
| Dorsal inferior parietal cortex | L | 40 | −35 | −49 | 53 | | | 3.20 (2.51) | 85 |
| Dorsal inferior parietal cortex | R | 40 | 30 | −55 | 57 | | | 3.19 (2.38) | 73 |
| Temporal | | | | | | | | | |
| MTG (post) | L | 21/37 | −62 | −48 | −5 | | | 3.35 (2.78) | 10 |
| Cerebellar | | | | | | | | | |
| Dentate | L | | −14 | −55 | −30 | | | 3.53 (2.78) | 15 |
| Dentate | R | | 13 | −50 | −30 | | | 3.85 (2.93) | 14 |

Note: L, left; R, right; post, posterior; and MTG, middle temporal gyrus.

Load effects during maintenance.

During maintenance of spoken information, increased STM load was associated with greater activations in the DLpFC and the SMA bilaterally, as well as the lateral premotor cortex in the right hemisphere. An effect of load was also observed in the DIPC and the superior parietal lobule bilaterally. A very similar pattern of activation was found during maintenance of signed information, including activations in the DLpFC, the SMA, and the DIPC bilaterally as well as the medial premotor area on the right (Supplementary Tables 5A,B online).

Group difference analyses confirmed that the effect of load was highly similar across groups. Only 2 regions showed differential load effects between the 2 populations (Table 7). Both of these regions—right lateral premotor cortex and left DIPC—showed greater load-related increases for hearing speakers than for deaf signers.

Table 7.

Group differences in load effect during maintenance (P < 0.05)

| Activated regions | Side | BA | Coordinate x | Coordinate y | Coordinate z | Deaf > Hearing: peak (z average) | Deaf > Hearing: number of voxels | Hearing > Deaf: peak (z average) | Hearing > Deaf: number of voxels |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Frontal | | | | | | | | | |
| Lateral premotor cortex | Right | 4/3 | 54 | −13 | 50 | | | 3.93 (2.7) | 20 |
| Parietal | | | | | | | | | |
| Dorsal inferior parietal cortex | Left | 40 | −37 | −41 | 52 | | | 2.85 (2.38) | 15 |

Recall

Commonalities and differences across populations.

The conjunction analysis revealed that most of the standard frontoparietal network was shared between Deaf native signers and hearing speakers during recall (Table 8). In the frontal lobes, bilateral activations were observed in inferior frontal regions and the insula, the DLpFC on the left, the anterior cingulate, and the SMA. In the parietal lobes, common activation was less extensive, with only the postcentral gyrus and superior parietal lobule on the right showing common recruitment. Activation was also noted in the posterior part of the superior temporal gyrus bilaterally, as well as in MT/MST on the right, and the cuneus bilaterally. Finally, significant common activation was also noted in subcortical structures including the cerebellum, the caudate, the thalamus, and the brain stem.

Table 8.

Conjunction analysis—recall (P < 0.001)

| Activated regions | Side | BA | Coordinate x | Coordinate y | Coordinate z | Number of voxels |
| --- | --- | --- | --- | --- | --- | --- |
| Frontal | | | | | | |
| IFG/insula | L | 13 | −35 | 6 | 7 | 212 |
| IFG/insula | R | 13 | 35 | 6 | 10 | 276 |
| DLpFC | L | 9 | −36 | 37 | 37 | 47 |
| Anterior cingulate | L and R | 32/6 | 2 | 15 | 44 | 120 |
| SMA | L and R | 6 | 5 | −2 | 59 | 88 |
| Parietal | | | | | | |
| Postcentral | R | 3 | 37 | −24 | 51 | 21 |
| SPL | R | 7 | 25 | −65 | 61 | 26 |
| Temporal | | | | | | |
| STG (post) | L | 22 | −56 | −28 | 4 | 90 |
| STG (post) | R | 22 | 50 | −34 | 12 | 32 |
| Occipital | | | | | | |
| MT/MST | R | 19/37 | 41 | −61 | −5 | 101 |
| Cuneus | L | 18 | −12 | −76 | 14 | 192 |
| Cuneus | R | 18 | 12 | −82 | 18 | 191 |
| Subcortical | | | | | | |
| Brain stem | L and R | | −2 | −25 | −38 | 28 |
| Caudate | L | | −13 | 15 | 11 | 68 |
| Caudate | R | | 8 | 16 | 7 | 44 |
| Cerebellum | L | | −18 | −56 | −22 | 153 |
| Cerebellum | R | | 20 | −57 | −17 | 219 |
| Thalamus | L | | −13 | −20 | 4 | 52 |
| Thalamus | R | | 12 | −10 | 10 | 84 |

Note: L, left; R, right; STG, superior temporal gyrus; and post, posterior.

Yet, significant group differences were noted with mostly greater recruitment in deaf signers than in hearing speakers (Table 9). Signers exhibited greater activation not only in sensory and motor areas as expected (motion sensitive MT/MST area, medial premotor area, SMA, and cerebellum bilaterally) but also in areas of the frontoparietal brain system subserving STM, including the left DLpFC, anterior cingulate, the DIPC, and the superior parietal lobe. The only areas that displayed greater activation for speakers than for signers were the expected motor and sensory areas (lateral premotor area bilaterally, and superior temporal gyrus around the auditory cortex on the right).

Table 9.

Differences between deaf signers and hearing speakers during recall (P < 0.01)

| Activated regions | Side | BA | Coordinate x | Coordinate y | Coordinate z | Deaf > Hearing: peak (z average) | Deaf > Hearing: number of voxels | Hearing > Deaf: peak (z average) | Hearing > Deaf: number of voxels |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Frontal | | | | | | | | | |
| Lateral premotor cortex | L | 4/6 | −56 | −9 | 37 | | | 3.88 (3.08) | 105 |
| Lateral premotor cortex | R | 4/6 | 56 | −10 | 36 | | | 3.86 (3.08) | 82 |
| DLpFC | L | 9 | −36 | 50 | 43 | −4.34 (−3.25) | 13 | | |
| Anterior cingulate | L | 32 | −5 | 27 | 32 | −3.46 (−2.86) | 19 | | |
| SMA | L and R | 6 | −3 | −3 | 55 | −3.55 (−2.96) | 37 | | |
| Medial premotor cortex | L | 6 | −28 | −10 | 59 | −3.39 (−2.95) | 21 | | |
| Medial premotor cortex | R | 6 | 28 | −10 | 67 | −4.32 (−3.04) | 79 | | |
| Parietal | | | | | | | | | |
| Dorsal inferior parietal cortex | L | 40 | −42 | −36 | 53 | −3.31 (−2.87) | 73 | | |
| Dorsal inferior parietal cortex | R | 40 | 42 | −36 | 54 | −3.66 (−2.92) | 89 | | |
| SPL | L | 7 | −19 | −50 | 67 | −3.94 (−2.95) | 22 | | |
| SPL | R | 7 | 22 | −68 | 56 | −3.73 (−2.86) | 25 | | |
| Temporal | | | | | | | | | |
| STG (mid) | R | 22 | 52 | −11 | 4 | | | 3.01 (2.76) | 22 |
| Occipital | | | | | | | | | |
| MT/MST | L | 19/37 | −47 | −70 | −10 | −3.51 (−2.88) | 24 | | |
| MT/MST | R | 19/37 | 44 | −71 | 4 | −4.47 (−3.06) | 108 | | |
| Superior occipital gyrus | L | 19 | −34 | −80 | 29 | −3.75 (−2.97) | 69 | | |
| Fusiform | R | 37 | 36 | −61 | −17 | −4.02 (−2.95) | 95 | | |
| Subcortical | | | | | | | | | |
| Brain stem | | | −11 | −8 | −11 | | | 3.34 (2.86) | 13 |
| Cerebellum | | | | | | | | | |
| Inferior surface | L | | −23 | −45 | −51 | −4.91 (−3.06) | 38 | | |
| Inferior surface | R | | 17 | −43 | −50 | −3.67 (−3.01) | 22 | | |

Note: L, left; R, right; STG, superior temporal gyrus.

Load effects during recall.

Load increase during recall in speakers was associated with greater activations in premotor and sensory areas including the lateral premotor cortex, superior temporal areas associated with auditory processing, and the thalamus bilaterally (see Supplementary Table 6A online). A parallel effect of load increase on sensory and motor areas recruitment was found in signers (Supplementary Table 6B online), with greater recruitment of the medial premotor cortex, visual areas associated with visual motion processing (in particular MT/MST), and the thalamus bilaterally. Notably, however, effects of load in signers were observed bilaterally not only in the dorsal part of the prefrontal cortex but also in the ventral part of the prefrontal cortex (IFG) as well as in subcortical (caudate nucleus and thalamus) and cerebellar regions.

A few significant differences in the effect of load were observed across populations, all reflecting greater activity in signers than in speakers (Table 10). These differences appeared to be associated exclusively with modality differences, including greater recruitment of medial premotor and visual areas, such as MT/MST, in signers.

Table 10.

Group differences in load effect during recall (P < 0.01)

| Activated regions | Side | BA | Coordinate x | Coordinate y | Coordinate z | Deaf > Hearing: peak (z average) | Deaf > Hearing: number of voxels | Hearing > Deaf: peak (z average) | Hearing > Deaf: number of voxels |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Frontal | | | | | | | | | |
| Medial premotor cortex | L | 6 | −31 | −11 | 63 | −3.64 (−3.04) | 18 | | |
| Medial premotor cortex | R | 6 | 29 | −10 | 58 | −3.34 (−2.91) | 19 | | |
| Parietal | | | | | | | | | |
| Postcentral | R | 3 | 40 | −32 | 51 | −3.80 (−2.93) | 33 | | |
| Occipital | | | | | | | | | |
| MT/MST | L | 19/37 | −52 | −69 | 1 | −3.63 (−3.12) | 26 | | |
| MT/MST | R | 19/37 | 42 | −70 | 7 | −4.64 (−3.25) | 28 | | |
| Cuneus | L | 18 | −15 | −93 | 19 | −3.11 (−2.78) | 11 | | |
| Cuneus | R | 18 | 14 | −77 | 6 | −3.11 (−2.8) | 14 | | |

Note: L, left; R, right.

General Discussion

A Shared STM Network across Language Modalities

This study establishes that signed STM recruits the same network of areas as spoken STM. Encoding, maintenance, and recall of signed information during an STM serial span task were associated with significant activations in a remarkably similar network of areas to that previously described for speakers (Wager and Smith 2003; Habeck et al. 2005). In particular, the same frontoparietal areas were recruited in Deaf signers as in hearing speakers, including DLpFC, IFG, DIPC, insula, cingulate cortex, and the SMA, as well as subcortical structures such as the head of the caudate nucleus, the thalamus, and the cerebellum. This network of regions appears to operate in a similar fashion for signed and spoken material under increased STM load as well. Both groups in the present study showed greater activation as load was increased in key structures of the frontoparietal network, including the inferior and middle prefrontal, medial frontal, and premotor regions, cingulate cortex, and the dorsal inferior parietal lobules.

Overall, these results indicate that the frontoparietal network of areas described in previous literature for STM in speakers is largely shared by signers. The fact that no unexpected brain areas were found to be active in Deaf signers indicates that signed STM does not recruit areas outside the network employed by spoken STM. This suggests that this network of areas is sensitive to linguistic information, irrespective of language modality, providing further support for a shared verbal STM system across a variety of linguistic inputs (Schumacher et al. 1996; Crottaz-Herbette et al. 2004).

Sensorimotor Coding

The differences between the input and output systems across signed and spoken modalities were directly reflected in the shift in recruitment of sensory and motor cortices across populations. This pattern of results, which was expected, provides a direct validation of the robustness of the paradigm used. As predicted, these differences were particularly marked during the encoding and recall phases. Whereas hearing speakers recruited superior temporal areas associated with auditory processing, deaf signers exhibited greater recruitment of visual areas, particularly, the motion sensitive area MT/MST. Similarly in motor cortices, a ventral–lateral region (corresponding most probably to the mouth area) was activated in hearing speakers, whereas a dorsal–medial region (most likely corresponding to the hand area) was activated in deaf signers. These population differences map well on the known sensory and motor cortex involvement that would be expected from an oro-aural versus a visuomanual language. The same pattern of results was observed during the load analyses as the effect of load in deaf signers was highly comparable with that in hearing speakers, with the exception that again activations in sensory and motor regions were shifted in accordance with the language modality. These sensorimotor activations were most marked during encoding and recall, and in fact were the main areas showing group differences in load effects during these stages of STM. Overall, differences in sensory and motor areas at all stages of STM closely reflected the different sensorimotor demands of using speech versus sign.

Group Differences within the Shared Network of Brain Areas

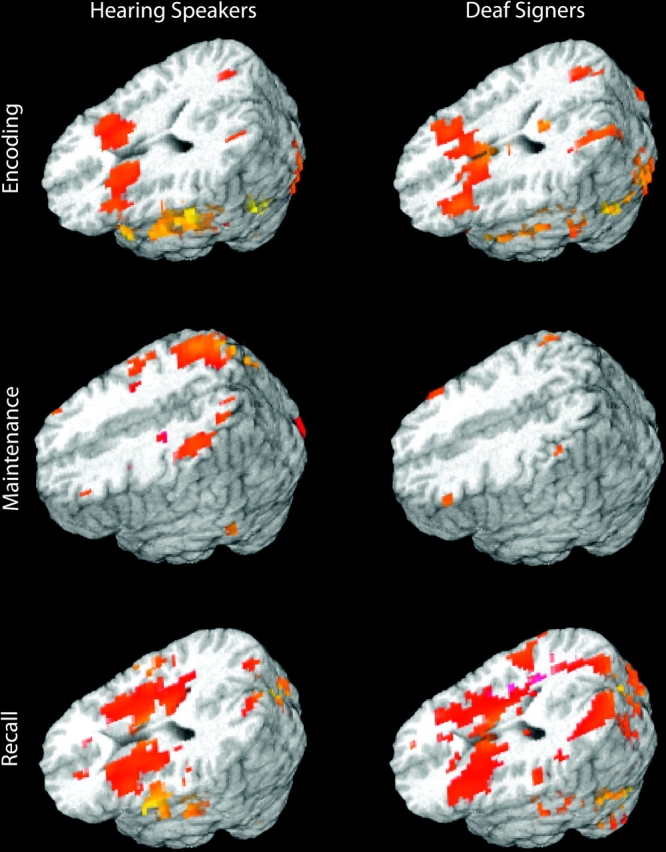

Regions of group differences were not limited to the sensory and motor areas described above. Indeed, as STM stages varied, reliable differences were found between speakers and signers within part of the frontoparietal network (Figure 2). During encoding, signers showed greater activation than speakers in frontal areas, including IFG/insula and the anterior cingulate, as well as the thalamus. During maintenance, greater activation of the IFG/insula region in signers was maintained; however, this was the only region to show more activation in signers during this phase. Speakers displayed greater activation than signers during maintenance in a number of regions including the DIPC and the cerebellum bilaterally, as well as the left middle temporal gyrus. Finally, during recall, signers exhibited roughly twice as many voxels activated above threshold as did speakers, distributed across the frontoparietal STM network.

Figure 2.

Areas within the frontoparietal network were differentially recruited as the stages of STM unfolded in deaf signers and hearing speakers. Areas activated more in Deaf than hearing are shown in red; Hearing greater than Deaf in blue. In particular, deaf signers appear less likely than hearing speakers to call upon parietal areas associated with chunking and manipulation of information during encoding and rehearsal, but more likely to do so during recall. For illustration purposes, results are shown only in the frontoparietal network (from the conjunction analyses thresholded at P < 0.1).

This pattern of population differences indicates differing reliance on components of the STM network from one phase of the task to the other. This makes it unlikely that these population differences can be entirely attributed to the use of stimuli of different lengths in each population (list length of 2 and 5 items in signers vs. 3 and 7 items in speakers). First, the behavioral data confirmed that task difficulty was well matched across speakers and signers. Second, if anything, the use of longer sequences in hearing speakers would predict greater activation in this population during encoding and recall, in contrast to what is observed during these phases. It remains possible that the greater parietal recruitment in speakers during maintenance reflects the higher loads used in speakers. However, this would leave unexplained why the effect of item number is only detected in the maintenance phase, even though it would be driven by physical characteristics known to affect primarily encoding and recall. Another consideration is that because recall had to be performed within the 4-s interval following cue presentation, but was not timed, we cannot exclude the possibility that the greater recruitment in signers during recall may be due to longer response time in sign than in speech. This seems unlikely for 2 reasons, however. First, because list lengths were matched for memory performance across languages, signers were actually given shorter lists than speakers (2 and 5 items for sign vs. 3 and 7 items for speech). Second, we have independent data showing equivalent articulation time for letters, whether finger-spelled or spoken, by native users of ASL and of English, respectively (Boutla et al. 2004; Bavelier et al. 2006; 2007). Given these facts, it is unlikely that the greater activation in deaf signers is due to longer recall time in signers; if anything, the expected difference should be in the other direction. 

Finally, the greater activation during recall in deaf signers may be attributable to differences in motoric outputs between signers and speakers rather than differences in recruitment of the frontoparietal network for STM. In particular, Emmorey et al. (2007) have shown greater recruitment of the superior parietal lobule and the supramarginal gyrus during recall in signers as compared with speakers. Accordingly, greater activation during recall of regions such as the premotor cortex, SMA, and the superior parietal lobe in the Deaf is likely to reflect such different motoric outputs. However, for the 3 regions of interest within the STM network (IFG/insula, DLpFC, and DIPC), this explanation seems unlikely as these areas are not seen to differ between signers and speakers during production (Emmorey et al. 2007; the DIPC region defined here and in other STM studies is medial, posterior, and superior to the supramarginal gyrus).

Examining the data in a more regionally specific fashion, differential recruitment of dorsal and ventral areas within the frontoparietal network points to functional differences across populations. During encoding and maintenance, the greater recruitment of the ventral prefrontal cortex (IFG/insula) in signers than in speakers is suggestive of a greater reliance by signers on the rather automatic and passive memory buffer associated with this area. Indeed, previous work has suggested that the ventral prefrontal cortex is involved in subcapacity STM load (Rypma and D'Esposito 1999; Rypma et al. 2002; Wager and Smith 2003; although see Narayanan et al. 2005 for a contrasting view). This is not to say that signers do not engage frontal areas associated with more executive functions. For example, dorsal prefrontal areas (DLpFC and middle frontal gyrus) associated with encoding and maintenance of high (supracapacity) loads (Rypma and D'Esposito 1999; Rypma et al. 2002; Wager and Smith 2003) were observed to be sensitive to load manipulation in signers. This shows that signers, like speakers, engage dorsal prefrontal areas associated with supracapacity loads. However, the results of this study show that the extent to which ventral versus dorsal inferior frontal areas are recruited differs in signers and speakers as a function of the phase of STM considered. Signers exhibited greater recruitment than speakers of ventral prefrontal areas during encoding and maintenance but of dorsal prefrontal areas during recall. This pattern of results is suggestive of a greater engagement of the subcapacity, passive memory storage in the early phases of the STM task, followed by an enhanced contribution of supracapacity, flexible storage processes at the time of recall in signers.

A similar functional dissociation between dorsal and ventral regions within the inferior parietal cortex has been made. Ravizza et al. (2004) have proposed that ventral parietal activation reflects material type (in particular, phonological encoding), whereas dorsal areas support domain-general attentional/executive strategies engaged during the processing of higher loads. In accord with this view, the present study revealed effects of load in DIPC during encoding and maintenance in both speakers and signers. However, the relative reliance of each group on this region varied across phases of the STM task. Hearing speakers activated the left DIPC more strongly than signers during maintenance (both overall and as a function of load), whereas only signers activated this region bilaterally during recall, again both across loads and to a greater degree with increased STM load. Together, this work indicates that during encoding and maintenance, signers may not rely to the same extent as speakers on the executive strategies associated with dorsal regions (DLpFC and DIPC), such as flexible reactivation of information, maintenance of temporal order, and rapid switching of attention (Ravizza et al. 2004). On the other hand, signers may have to call upon the domain-general executive processes associated with these dorsal parietal regions more heavily during retrieval and response selection.

The proposal of shared STM cognitive strategies that are differentially engaged as a function of interindividual performance is not new. Rypma et al. (2002; 2005) have documented how differences in brain activation and cognitive strategy can be used to understand individual differences in STM performance. The extent to which the differences in activation and cognitive strategies across STM stages noted in signers and speakers truly account for the differences in span capacity noted behaviorally remains to be established. But the present study suggests that it may be fruitful in future behavioral research to dissociate encoding, maintenance, and recall in order to gain a better understanding of the sources of the difference in behavior between signers and speakers.

Verbal versus Visuospatial STM

To the extent that the network of areas mediating verbal STM can be dissociated from that mediating visuospatial STM, the pattern of results presented here provides little evidence that STM for signing may be more akin to visuospatial STM than verbal STM. For example, although some evidence has suggested greater left lateralization for verbal STM and right-lateralization for nonverbal STM, this study finds no consistent hemispheric lateralization differences between signers and speakers. Most population effects were found to be bilateral or at least marginally so. The idea of differential lateralization between verbal and spatial STM is highly debated (Wager and Smith 2003; but see Walter et al. 2003; Jennings et al. 2006); some have argued that the verbal/spatial difference is better captured by an anterior/posterior distinction (Wager and Smith 2003). The finding of greater DIPC recruitment during recall in signers is certainly in line with this proposal. However, this result only held for the recall stage and was reversed in the maintenance stage, casting doubt on a simple explanation in terms of modality difference.

This is not to downplay the greater DIPC recruitment in signers during recall; indeed, 2 previous studies document greater DIPC recruitment during STM processing in signers (Ronnberg et al. 2004; Buchsbaum et al. 2005). The present design further qualifies these previous reports by showing that the recruitment of DIPC in deaf signers is stage-specific, with a decrease during maintenance and a marked increase during recall. Because activations for each stage of processing could not be distinguished in previous studies, the pattern of activation is likely to have been dominated by the recall stage, which exhibits the most robust activation. The present study therefore expands on prior work by characterizing how the network of areas that mediates STM is differentially recruited across the 3 main stages of STM in signers and in speakers.

Overall, this study indicates a highly similar network of brain regions for STM in signers and speakers, supporting the view of an amodal verbal STM system. The degree of reliance on particular functional components of this network during the different stages of the STM task, however, appears to vary in the signed and spoken modalities. Thus, although sign and speech appear to share a common brain system for STM, they appear to weigh differently some of the strategies that mediate STM as the different stages of STM unfold. In particular, deaf signers appear less likely than hearing speakers to call upon areas that mediate information chunking and manipulation during encoding and rehearsal but more likely to engage these areas during recall. Future work will be required to address the functional implications of this, including the possible relationship between differential reliance on components of the frontoparietal STM network and differing STM spans for spoken and signed material.

Supplementary Material

Supplementary tables can be found at: http://www.cercor.oxfordjournals.org/.

Funding

This research was supported by the National Institutes of Health (DC04418 to D.B.), the James S. McDonnell Foundation (to D.B.), and the Canadian Institutes of Health Research (to A.N.).

Acknowledgments

We wish to thank the students and the staff of Gallaudet University, Washington, DC. We are also grateful to P. Clark for her support in stimulus development.

Conflict of Interest: None declared.

References

- Aron AR, Gluck MA, Poldrack RA. Long-term test-retest reliability of functional MRI in a classification learning task. Neuroimage. 2006;29(3):1000–1006. doi: 10.1016/j.neuroimage.2005.08.010.

- Ashburner J, Friston K. Nonlinear spatial normalization using basis function. Hum Brain Mapp. 1999;7:254–266. doi: 10.1002/(SICI)1097-0193(1999)7:4<254::AID-HBM4>3.0.CO;2-G.

- Baddeley A. Working memory. Oxford: Clarendon Press; 1986.

- Bavelier D, Newport EL, Hall ML, Supalla T, Boutla M. Persistent difference in short-term memory span between sign and speech: implications for cross-linguistic comparisons. Psychol Sci. 2006;17:1090–1092. doi: 10.1111/j.1467-9280.2006.01831.x.

- Bavelier D, Newport EL, Hall M, Supalla T, Boutla M. Ordered short-term memory differs in signers and speakers: implications for models of short-term memory. Cognition. Forthcoming. 2008 doi: 10.1016/j.cognition.2007.10.012.

- Bellugi U, Fisher S. A comparison of sign language and spoken language. Cognition. 1972;1:173–200.

- Bellugi U, Klima E, Siple P. Remembering in signs. Cognition. 1974–1975;3:93–125.

- Boutla M, Supalla T, Newport L, Bavelier D. Short-term memory span: insights from sign language. Nat Neurosci. 2004;7:997–1002. doi: 10.1038/nn1298.

- Brainard DH. The psychophysics toolbox. Spat Vis. 1997;10:433–436.

- Braver T, Bongiolatti S. The role of frontopolar cortex in subgoal processing during working memory. Neuroimage. 2002;15:523–536. doi: 10.1006/nimg.2001.1019.

- Buchsbaum B, Pickell B, Love T, Hatrack M, Bellugi U, Hickok G. Neural substrates for verbal working memory in deaf signers: fMRI study and lesion case report. Brain Lang. 2005;95:265–272. doi: 10.1016/j.bandl.2005.01.009.

- Cabeza R, Nyberg L. Imaging cognition II: an empirical review of 275 PET and fMRI studies. J Cogn Neurosci. 2000;12:1–47. doi: 10.1162/08989290051137585.

- Casey BJ, Cohen JD, O'Craven K, Davidson RJ, Irwin W, Nelson CA, Noll DC, Hu X, Lowe MJ, Rosen BR, et al. Reproducibility of fMRI results across four institutions using a spatial working memory task. Neuroimage. 1998;8:249–261. doi: 10.1006/nimg.1998.0360.

- Chein JM, Fiez JA. Dissociation of verbal working memory system components using a delayed serial recall task. Cereb Cortex. 2001;11:1003–1014. doi: 10.1093/cercor/11.11.1003.

- Cohen M. Parametric analysis of fMRI data using linear systems methods. Neuroimage. 1997;6:93–103. doi: 10.1006/nimg.1997.0278.

- Conrad R. Short-term memory processes in the deaf. Br J Psychol. 1970;61:179–195. doi: 10.1111/j.2044-8295.1970.tb01236.x.

- Conrad R. Short-term memory in the deaf: a test for speech coding. Br J Psychol. 1972;63:173–180. doi: 10.1111/j.2044-8295.1972.tb02097.x.

- Cox RW. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014.

- Crottaz-Herbette S, Anagnoson R, Menon V. Modality effects in verbal working memory: differential prefrontal and parietal responses to auditory and visual stimuli. Neuroimage. 2004;21:340–351. doi: 10.1016/j.neuroimage.2003.09.019.

- Emmorey K, Lane H. The signs of language revisited. An anthology to honor Ursula Bellugi and Edward Klima. Mahwah (NJ): Lawrence Erlbaum Associates; 2000.

- Emmorey K, Mehta S, Grabowski T. The neural correlates of sign versus word production. Neuroimage. 2007;36:202–208. doi: 10.1016/j.neuroimage.2007.02.040.

- Friedman L, Glover GH, Krenz D, Magnotta V. Reducing inter-scanner variability of activation in a multicenter fMRI study: role of smoothness equalization. Neuroimage. 2006;32:1656–1668. doi: 10.1016/j.neuroimage.2006.03.062.

- Habeck C, Rakitin B, Moeller J, Scarmeas N, Zarahn E, Brown T, Stern Y. An event-related fMRI study of the neural networks underlying the encoding, maintenance, and retrieval phase in a delayed-match-to-sample task. Brain Res Cogn Brain Res. 2005;23:207–220. doi: 10.1016/j.cogbrainres.2004.10.010.

- Hanson VL. Short-term recall by deaf signers of American Sign Language: implications of encoding strategy for order recall. J Exp Psychol Learn Mem Cogn. 1982;8:572–583. doi: 10.1037//0278-7393.8.6.572.

- Henson RNA, Burgess N, Frith CD. Recoding, storage, rehearsal and grouping in verbal short-term memory: an fMRI study. Neuropsychologia. 2000;38:426–440. doi: 10.1016/s0028-3932(99)00098-6.

- Hickok G, Poeppel D. Towards a functional neuroanatomy of speech perception. Trends Cogn Sci. 2000;4:131–138. doi: 10.1016/s1364-6613(00)01463-7.

- Jennings J, van der Veen F, Meltzer C. Verbal and spatial working memory in older individuals: a positron emission tomography study. Brain Res. 2006;1092(1):177–189. doi: 10.1016/j.brainres.2006.03.077.

- Klima E, Bellugi U. The signs of language. Cambridge (MA): Harvard University Press; 1979.

- Krakow RA, Hanson VL. Deaf signers and serial recall in the visual modality: memory for signs, fingerspelling, and print. Mem Cogn. 1985;13:265–272. doi: 10.3758/bf03197689.

- Losiewicz BL. A specialized language system in working memory: evidence from American Sign Language. Southwest J Linguist. 2000;19:63–75.

- MacSweeney M, Campbell R, Donlan C. Varieties of short-term memory coding in deaf teenagers. J Deaf Stud Deaf Educ. 1996;1:249–262. doi: 10.1093/oxfordjournals.deafed.a014300.

- Manoach DS, Greve DN, Lindgren KA, Dale AM. Identifying regional activity associated with temporally separated components of working memory using event-related functional MRI. Neuroimage. 2003;20:1670–1684. doi: 10.1016/j.neuroimage.2003.08.002.

- Mayberry R. First-language acquisition after childhood differs from second-language acquisition: the case of American Sign Language. J Speech Hear Res. 1993;36:1258–1270. doi: 10.1044/jshr.3606.1258.

- Mayberry R, Eichen E. The long-lasting advantage of learning sign language in childhood: another look at the critical period for language learning. J Mem Lang. 1991;30:486–512.

- Miyake A, Shah P. Toward unified theories of working memory: emerging general consensus, unresolved theoretical issues, and future research directions. In: Miyake A, Shah P, editors. Models of working memory: mechanisms of active maintenance and executive control. New York: Cambridge University Press; 1999.

- Narayanan N, Prabhakaran V, Bunge S, Christoff K, Fine E, Gabrieli J. The role of the prefrontal cortex in the maintenance of verbal working memory: an event-related FMRI analysis. Neuropsychology. 2005;19:223–232. doi: 10.1037/0894-4105.19.2.223.

- Norman D, Shallice T. Attention to action: willed and automatic control of behaviour. San Diego (CA): Center for Human Information Processing, University of California; 1980.

- Pelli DG. The VideoToolbox software for visual psychophysics: transforming numbers into movies. Spat Vis. 1997;10:437–442.

- Ravizza SM, Delgado MR, Chein JM, Becker JT, Fiez JA. Functional dissociations within the inferior parietal cortex in verbal working memory. Neuroimage. 2004;22:562–573. doi: 10.1016/j.neuroimage.2004.01.039.

- Ronnberg J, Rudner M, Ingvar M. Neural correlates of working memory for sign language. Brain Res Cogn Brain Res. 2004;20:165–182. doi: 10.1016/j.cogbrainres.2004.03.002.

- Rypma B, Berger J, D'Esposito M. The influence of working memory-demand and subject performance on prefrontal cortical activity. J Cogn Neurosci. 2002;14:721–731. doi: 10.1162/08989290260138627.

- Rypma B, Berger J, Genova H, Rebbechi D, D'Esposito M. Dissociating age-related changes in cognitive strategy and neural efficiency using event-related fMRI. Cortex. 2005;41:582–594. doi: 10.1016/s0010-9452(08)70198-9.

- Rypma B, D'Esposito M. The roles of prefrontal brain regions in components of working memory: effect of memory load and individual differences. Proc Natl Acad Sci USA. 1999;96:6558–6563. doi: 10.1073/pnas.96.11.6558.

- Rypma B, D'Esposito M. A subsequent-memory effect in dorsolateral prefrontal cortex. Brain Res Cogn Brain Res. 2003;16:162–166. doi: 10.1016/s0926-6410(02)00247-1.

- Sakai K, Rowe J, Passingham R. Active maintenance in prefrontal cortex area 46 creates distractor-resistant memory. Nat Neurosci. 2002;5:479–484. doi: 10.1038/nn846.

- Schumacher E, Lauber E, Awh E, Jonides J, Smith E, Koeppe R. PET evidence for an amodal verbal working memory system. Neuroimage. 1996;3:79–88. doi: 10.1006/nimg.1996.0009.

- Shallice T. The short-term memory syndrome. Neuropsychology to mental structure. New York: Cambridge University Press; 1988. pp. 41–67.

- Shallice T, Butterworth B. Short-term memory impairment and spontaneous speech. Neuropsychologia. 1977;15:729–735. doi: 10.1016/0028-3932(77)90002-1.

- Shallice T, Warrington EK. Independent functioning of verbal memory stores: a neuropsychological study. Q J Exp Psychol. 1974a;22:261–273. doi: 10.1080/00335557043000203.

- Shallice T, Warrington EK. The dissociation between short term retention of meaningful sounds and verbal material. Neuropsychologia. 1974b;12:553–555. doi: 10.1016/0028-3932(74)90087-6.

- Smith E, Jonides J. Working memory: a view from neuroimaging. Cogn Psychol. 1997;33:5–42. doi: 10.1006/cogp.1997.0658.

- Smith E, Jonides J, Koeppe R. Dissociating verbal and spatial working memory using PET. Cereb Cortex. 1996;6:11–20. doi: 10.1093/cercor/6.1.11.

- Vallar G, Di Betta AM, Silveri MC. The phonological short-term store-rehearsal system: patterns of impairment and neural correlates. Neuropsychologia. 1997;35:795–812. doi: 10.1016/s0028-3932(96)00127-3.

- Wager TD, Smith EE. Neuroimaging studies of working memory: a meta-analysis. Cogn Affect Behav Neurosci. 2003;3:255–274. doi: 10.3758/cabn.3.4.255.

- Walter H, Bretschneider V, Gron G, Zurowski B, Wunderlich A, Tomczak R, Spitzer M. Evidence for quantitative domain dominance for verbal and spatial working memory in frontal and parietal cortex. Cortex. 2003;39:897–911. doi: 10.1016/s0010-9452(08)70869-4.

- Warrington EK, Shallice T. The selective impairment of auditory verbal short-term memory. Brain. 1969;92:885–896. doi: 10.1093/brain/92.4.885.

- Wilson M, Emmorey K. A visuospatial “phonological loop” in working memory: evidence from American Sign Language. Mem Cognit. 1997;25:313–320. doi: 10.3758/bf03211287.

- Wilson M, Emmorey K. A “word length effect” for sign language: further evidence for the role of language in structuring working memory. Mem Cognit. 1998;26:584–590. doi: 10.3758/bf03201164.

- Wilson M, Emmorey K. When does modality matter? Evidence from ASL on the nature of working memory. In: Emmorey K, Lane H, editors. The signs of language revisited. An anthology to honor Ursula Bellugi and Edward Klima. Mahwah (NJ): Lawrence Erlbaum Associates; 2000. pp. 135–142.

- Wilson M, Emmorey K. Comparing sign language and speech reveals a universal limit on short-term memory capacity. Psychol Sci. 2006a;17:682–683. doi: 10.1111/j.1467-9280.2006.01766.x.

- Wilson M, Emmorey K. No difference in short-term memory span between sign and speech. Psychol Sci. 2006b;17:1093. doi: 10.1111/j.1467-9280.2006.01835.x.

- Wilson M, Emmorey KD. The effect of irrelevant visual input on working memory for sign language. J Deaf Stud Deaf Educ. 2003;8:97–103. doi: 10.1093/deafed/eng010.