Abstract

Background

The results of many quality improvement (QI) projects are gaining widespread attention. Policy-makers, hospital leaders and clinicians make important decisions based on the assumption that QI project results are accurate. However, compared with clinical research, QI projects are typically conducted with substantially fewer resources, potentially impacting data quality. Our objective was to provide a primer on basic data quality control methods appropriate for QI efforts.

Methods

Data quality control methods should be applied throughout all phases of a QI project. In the design phase, project aims should guide data collection decisions, emphasizing quality (rather than quantity) of data and considering resource limitations. In the data collection phase, standardized data collection forms, comprehensive staff training and a well-designed database can help maximize the quality of the data. Clearly defined data elements, quality assurance reviews of both collection and entry and system-based controls reduce the likelihood of error. In the data management phase, missing data should be identified and corrected quickly, and system-based controls should be used to minimize further missing data. Finally, in the data analysis phase, appropriate statistical methods and sensitivity analyses aid in managing and understanding the effects of missing data and outliers, in addressing potential confounders and in conveying the precision of results.

Conclusion

Data quality control is essential to ensure the integrity of results from QI projects. Feasible methods are available and important to help ensure that stakeholders' decisions are based on accurate data.

Keywords: data quality, research design, data reporting, quality controls

Increasingly, the results of quality improvement (QI) projects are being widely publicized [1–3] and having an important impact on health policy [4]. In addition, hospitals face pressure to report on the quality of care they provide. Underlying this public reporting is the assumption that the data are accurate. To date, there is relatively little assurance to support this assumption and consumers of QI data could be misinformed [5–7].

Quality control methods are critical to help ensure the accuracy of any effort to collect, analyze and report data. Published literature describing valid and feasible data quality control methods within the field of QI is sparse. Furthermore, while data quality control methods are well accepted in clinical research, their applicability to QI is uncertain, given the differences in resources available for research versus QI projects.

While data quality control methods are required, they must be feasible within QI projects, in which data are often collected as part of routine patient care and without additional human or financial resources. As such, QI projects must strike a balance between rigor and feasibility when adopting data quality control methods. The primary objective of this report is to provide recommendations to help assure data quality within QI projects.

A case study: improving patient safety in Michigan

To exemplify data quality control methods, we use a case study throughout this report. This case study is a state-wide QI project aimed at reducing the rate of central line associated blood stream infections by improving compliance with evidence-based strategies for catheter insertion. This QI project was performed in 103 intensive care units (ICUs) in hospitals in Michigan and the surrounding area [8–10]. This project demonstrated that the participating ICUs experienced an immediate, significant and growing reduction in infection rates during the 18-month study period. By the last 3-month period in this project (16–18 months after implementation of the QI intervention), there was an overall 66% reduction in infection rates [8].

Data quality control methods

Principles of data quality control apply to all phases of the project: design, data collection, data management and data analysis (Table 1).

Table 1.

Data quality control methods for QI projects

| Project phase | Challenge question |
|---|---|
| Project design | Are the aims of the project clearly stated? |
| | Is a valid definition and measurement system available for the required data? |
| | Is there a clear focus on quality, rather than quantity, of data? |
| Data collection | Is a standardized data collection form created? |
| | Are data items clearly defined and written instructions provided for collecting each data item? |
| | Are staff adequately trained to collect data? |
| | Are quality assurance (QA) reviews completed? |
| | Is an electronic database used for data management? |
| | Are sufficient database controls in place to identify errors? |
| | Is there a back-up routine for the electronic database? |
| Data management | Have data been evaluated using basic statistics? |
| | Has there been a comprehensive review for missing data, and are methods in place to minimize missing data? |
| Data analysis | Are missing data reported and appropriate methods used to account for them? |
| | Have potential outliers been identified and evaluated? |
| | Have appropriate methods been used to provide summary measures of the project results? |
| | Have measures of precision been presented with the study results? |
| | Have appropriate methods been used to evaluate the impact of factors that may confound the results? |

Project design phase

During the design phase, the QI project's aims should be explicitly stated to clarify the required data collection. Prior to data collection, explicit definitions for each data item, and methods for collecting them, should be chosen. Project leaders must decide which data are feasible to collect with the resources available, focusing on quality, not quantity, of data. Consideration should be given to collecting data on any potential unintended consequences (e.g. adverse events) of the intervention. Given limited resources, data collection on secondary outcomes must often be minimized or eliminated.

In the case study [8], the primary focus was on collecting the total number of catheter line days and associated blood stream infections in order to calculate the monthly infection rates for each participating ICU. During the project design phase, we recognized that, given the lack of funding for data collection, it was not feasible to collect data regarding patient characteristics, adherence to the multifaceted QI intervention or organisms causing infections. Instead, the project focused exclusively on the data required to fulfill its primary aim: evaluating whether infection rates would be reduced by the QI intervention. Thus, in the project design phase, we made an explicit decision to focus our data collection narrowly to maximize the accuracy and completeness of the essential data elements [11].

Data collection phase

Basic methods in planning and conducting data collection can help assure data quality as described below.

Create standardized data collection forms

Each data item collected should have a written definition (using widely accepted definitions whenever possible) and specific instructions regarding how it should be collected. Compiling these instructions into an operations manual is critical for training staff and for use as a reference guide throughout the project. Lack of definitions may result in measurement error from variability in data collection methods. In our experience, it is helpful to include data definitions directly on the collection forms for easy reference.

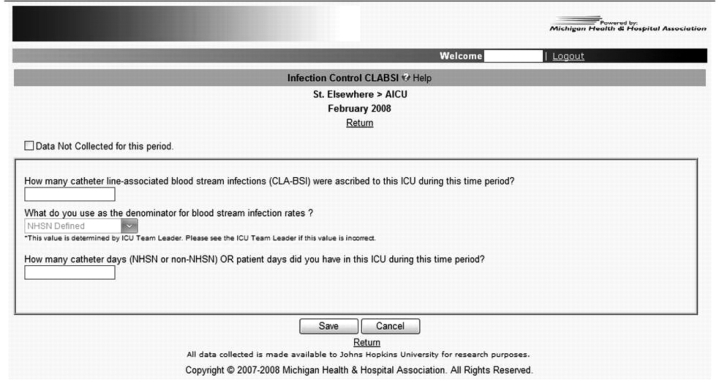

In the QI case study, we developed, pilot-tested and revised standardized data collection forms. Operations manuals were subsequently created and given to each ICU team. As the project matured, we converted the data form into an electronic format to help facilitate data entry, management and analysis of infection rates (Fig. 1). We used standardized definitions for central line associated blood stream infections provided by the Centers for Disease Control and Prevention [12].

Figure 1.

Sample online data entry form.

Ensure adequate training of staff

Staff training includes two major components: (i) didactic training and orientation to the data collection forms and operations manual and (ii) quality assurance (QA) review, in which an independent expert from the QI project reviews a sample of the data collected by each staff member. This QA review should be performed before starting ‘live’ data collection to prevent errors that may otherwise recur throughout the project. In addition, random QA reviews should be conducted throughout the project. Given staff turnover, we recommend that each new staff member undergo the same training and QA review.

In the QI project, training of ICU staff was crucial to data quality, as some had not participated previously in data collection or QI projects. In addition to involving ICU staff, we used trained, hospital-based, infection-control practitioners to provide data as part of their standard hospital-based infection-control systems.
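The QA review step described above, in which an independent reviewer re-checks a sample of each staff member's data, could be supported by a small script. The sketch below is a hypothetical illustration, not the procedure used in the case study: it assumes each record is a dictionary with `collector` and `record_id` keys plus the collected data items, and the function names, sample size and simple agreement proportion are our own choices.

```python
import random
from collections import defaultdict


def draw_qa_sample(records, per_collector, seed=None):
    """Randomly select up to `per_collector` records from each staff member
    for independent re-abstraction by a QA reviewer (hypothetical helper)."""
    rng = random.Random(seed)
    by_collector = defaultdict(list)
    for rec in records:
        by_collector[rec["collector"]].append(rec)
    return {
        collector: rng.sample(recs, min(per_collector, len(recs)))
        for collector, recs in by_collector.items()
    }


def agreement_rate(collected, reviewed, fields):
    """Proportion of checked fields where the collector's value matches the
    QA reviewer's value. `reviewed` maps record_id -> the reviewer's record."""
    checked = matched = 0
    for rec in collected:
        review = reviewed[rec["record_id"]]
        for field in fields:
            checked += 1
            if rec[field] == review[field]:
                matched += 1
    return matched / checked if checked else float("nan")
```

A low agreement rate for a particular staff member might prompt retraining before live data collection continues; what counts as "low" would be a project-level decision.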

Create a database

Data on the standardized collection forms should be entered into an electronic database. A well-designed database can provide important controls against data quality problems arising from errors in data collection or entry. For example, database controls can prevent entry of clearly erroneous values (e.g. values outside a range of plausible values defined individually for each data item) or prompt users to double-check outlier values that could be erroneous. In addition, rather than relying on manual calculations, staff can enter the requisite data elements into the database and use automated processes (via database, spreadsheet or statistical software) to perform calculations, saving time and reducing human error.

As a result of the QI project, a comprehensive database was created for sustaining the project and implementing it in other states. Review of the data revealed repeated values for the total number of catheter line days for a specific ICU over consecutive months. As a result, a quality control check was created for the database whereby a warning was provided when the number of catheter line days entered was identical to the value from the immediately preceding month. Another error detected was repeat entry of data for the same time period and ICU; consequently, the database included a check to ensure that there was no duplicate entry for each time period. Moreover, we prevented errors in manual calculation of infection rates by using spreadsheet and statistical software to calculate these rates.
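The database controls described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the case study database: the field names (`icu`, `month`, `line_days`, `infections`), the integer month index, the plausible ranges and the function names are all hypothetical, and the rate is expressed per 1,000 catheter-days, a common convention for central line associated infection rates.

```python
# Hypothetical plausible ranges; each project would set its own.
PLAUSIBLE_RANGES = {"line_days": (0, 1000), "infections": (0, 50)}


class EntryError(ValueError):
    """Raised when an entry must be rejected rather than stored."""


def validate_entry(entry, existing):
    """Check one monthly ICU entry against entries already stored.

    `month` is assumed to be an integer index (1, 2, 3, ...).
    Raises EntryError for implausible values or a duplicate ICU-month and
    returns a list of warnings that should prompt the user to double-check.
    """
    # Range check: block clearly erroneous values.
    for field, (low, high) in PLAUSIBLE_RANGES.items():
        if not low <= entry[field] <= high:
            raise EntryError(f"{field}={entry[field]} outside [{low}, {high}]")
    # Duplicate check: only one entry per ICU per time period.
    same_icu = [e for e in existing if e["icu"] == entry["icu"]]
    if any(e["month"] == entry["month"] for e in same_icu):
        raise EntryError(f"duplicate entry for ICU {entry['icu']}, month {entry['month']}")
    # Repeated-value check: warn if line days equal the preceding month's value.
    warnings = []
    previous = [e for e in same_icu if e["month"] == entry["month"] - 1]
    if previous and previous[0]["line_days"] == entry["line_days"]:
        warnings.append("line_days identical to preceding month; please verify")
    return warnings


def infection_rate(infections, line_days, per=1000):
    """Infections per `per` catheter-days, calculated rather than hand-computed."""
    if line_days <= 0:
        raise ValueError("line_days must be positive")
    return infections / line_days * per
```

Rejecting some entries outright while only warning on others mirrors the distinction drawn above between clearly erroneous values and values that merely deserve a second look.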

Data management phase

Quality control processes during the data management phase seek to identify and/or help mitigate errors not prevented in earlier phases.

Use statistics to scrutinize data

Simple statistical methods to review data, performed intermittently during collection, are useful for finding errors and preventing them from recurring throughout the project; consequently, these methods should be introduced very early. For example, if certain variables are collected once daily, the count for each of these variables should be identical if there are no missing data. In addition, evaluating the minimum and maximum values (and a small number of the highest and lowest values) for each data item may help identify potentially erroneous data that could have a large effect on the average values used to summarize the results. Finally, calculating a median or average for each data item may help confirm that the data are consistent with the project leaders' expectations. Questionable or missing data identified by these simple statistics should result in a written query to the relevant project staff or team leader to investigate and verify the value.

Before starting data analysis in the QI project, we reviewed the data within an electronic spreadsheet, which calculated simple statistics and allowed us to directly and efficiently scan through all the data to identify questionable values. These values were then highlighted within the spreadsheet and sent to the project staff for investigation.
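The simple screening statistics described above can be computed automatically for each data item. In this sketch, which uses only Python's standard `statistics` module, missing entries are assumed to be stored as `None`; the function names and the default of showing the three highest and lowest values are illustrative assumptions.

```python
import statistics


def screen_item(name, values, k=3):
    """Summary statistics for one data item; None marks a missing entry."""
    present = sorted(v for v in values if v is not None)
    summary = {"item": name, "n": len(present), "missing": len(values) - len(present)}
    if present:
        summary.update(
            min=present[0],
            max=present[-1],
            lowest=present[:k],           # k smallest values, ascending
            highest=present[::-1][:k],    # k largest values, descending
            median=statistics.median(present),
            mean=statistics.mean(present),
        )
    return summary


def unequal_counts(items):
    """For items expected once per day, return the non-missing count per item
    if the counts disagree (suggesting missing data), else an empty dict."""
    counts = {name: sum(v is not None for v in vals) for name, vals in items.items()}
    return counts if len(set(counts.values())) > 1 else {}
```

For example, `screen_item("line_days", [300, 310, None, 2900, 295])` reports one missing entry and lists 2900 first among the highest values, which would be a candidate for a written query.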

Minimize missing data

Missing data are an important and common threat to the validity of QI projects. Since some missing data may be unrecoverable (e.g. real-time patient assessments not available in the medical record), addressing this issue proactively is vital. Statistical analyses cannot remove the bias created by missing data; hence, the quality control methods described previously are critical. In addition, simple processes, such as immediate review of the collection form, allow for timely identification of missing data. Such review may be performed by the primary data collection staff member or an independent reviewer. Finally, collection forms should be reviewed at the time of data entry, with immediate reporting of problems to the data collection staff and project leaders.

During the early phases of the QI project, we encountered substantial missing data [9]. However, because our primary outcome data were part of routine infection control at many hospitals, we were able to recover much of the missing data. Moreover, we introduced additional data review to minimize future missing data, and the electronic database included visual cues and reminders when data were missing. When missing data were identified, QI project leaders contacted the ICU team to investigate. As a result of these efforts, missing data were reduced to only 5% of the total data [11].
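The timely identification of missing data described above amounts to comparing the submissions received against the submissions expected. The sketch below assumes that every participating ICU is expected to report every month and that blank fields are stored as `None`; the function names and record layout are hypothetical. Its output could serve as the list of queries sent to ICU teams.

```python
def find_missing_periods(icus, months, submitted):
    """Return the (icu, month) periods with no submission, and the percentage
    of expected periods that are missing. `submitted` holds (icu, month) pairs."""
    received = set(submitted)
    expected = [(icu, month) for icu in icus for month in months]
    missing = [period for period in expected if period not in received]
    percent = 100 * len(missing) / len(expected) if expected else 0.0
    return missing, percent


def blank_fields(records, required):
    """Return (icu, month, field) for each required field left blank (None)."""
    return [
        (r["icu"], r["month"], field)
        for r in records
        for field in required
        if r.get(field) is None
    ]
```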

Data analysis phase

There are a number of important quality control issues to consider in the data analysis phase.

Accounting for missing data

Missing data that cannot be recovered must be clearly addressed during data analysis. Furthermore, missing data should be explicitly reported and considered in the data analysis plan. Missing data can produce substantial bias since the characteristics of those clinicians or hospitals who submit data are likely to be different from those who do not. For example, if ICUs experiencing an unusually high number of infections during a particular month fail to report data for that month, the results of an analysis of the existing data would understate the true infection rate and may lead to false conclusions about the success of a QI intervention.

There is no commonly accepted threshold below which missing data are considered acceptable. However, if the potential for bias from missing data is considered very low within the context of a particular QI project, the missing data need not be explicitly addressed in the analyses, but simply disclosed when describing the results and their limitations. In circumstances when the extent of missing data may be higher, imputation of missing data may be conducted. Imputation uses methods ranging from simple approaches (e.g. substituting missing data with the average value from existing data or a value that would portray the worst case scenario) to much more sophisticated methods (e.g. fully Bayesian multiple imputation [13]). Consultation with a statistician is necessary if sophisticated methods of imputation are required.
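The two simple single-imputation approaches mentioned above (substituting the mean of the observed values, or a worst-case value) can be sketched as follows. Treating the maximum observed value as the worst case is an assumption that suits outcomes such as infection rates, where higher is worse. Single imputation of this kind treats filled-in values as if they had been observed and therefore understates uncertainty; multiple imputation [13] addresses this but requires statistical expertise.

```python
import statistics


def impute(values, method="mean"):
    """Fill None entries in a list of values using a simple single imputation.

    method='mean'  : substitute the average of the observed values.
    method='worst' : substitute the least favorable observed value, assumed
                     here to be the maximum (e.g. for infection rates).
    """
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("no observed values to impute from")
    if method == "mean":
        fill = statistics.mean(observed)
    elif method == "worst":
        fill = max(observed)
    else:
        raise ValueError(f"unknown method: {method}")
    return [fill if v is None else v for v in values]
```

Running an analysis once with each method and comparing the results is one simple form of the sensitivity analysis recommended in the next paragraph.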

Regardless of the method of accounting for missing data, sensitivity analyses should be considered to determine the potential impact of these alternative methods. Sensitivity analyses evaluate the results of treating missing data in a variety of ways. If results of the QI project do not substantially change with the sensitivity analysis, stakeholders should have greater confidence in inferences drawn from the data.

During the QI project, we performed a sensitivity analysis comparing the effect of the QI intervention on infection rates in the primary analysis (which ignored the low rate of missing data) with an analysis restricted to ICUs that had no missing data. The results were similar, providing greater confidence that any bias from missing data was unlikely to meaningfully affect our conclusions regarding the benefit of the QI intervention.
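A sensitivity analysis of the kind described above can be sketched as a comparison of the same effect estimate in two analysis sets: all available data, and only ICUs with no missing months. The sketch below uses a crude pooled rate ratio (post-intervention rate divided by baseline rate) as a simplified stand-in for the project's actual analysis; the record layout (`icu`, `period`, `infections`, `line_days`, with `None` marking a missing month) is hypothetical. It assumes each analysis set contains both baseline and post-intervention data, with at least one baseline infection.

```python
def is_missing(record):
    """A month is treated as missing if either count was not reported."""
    return record["infections"] is None or record["line_days"] is None


def pooled_rate_ratio(records):
    """Crude pooled post-intervention rate divided by pooled baseline rate."""
    def rate(period):
        rows = [r for r in records if r["period"] == period]
        return sum(r["infections"] for r in rows) / sum(r["line_days"] for r in rows)
    return rate("post") / rate("baseline")


def missing_data_sensitivity(records):
    """Estimate the rate ratio on all available data and on ICUs with no
    missing months; similar values suggest missing data matter little."""
    available = [r for r in records if not is_missing(r)]
    icus_with_gaps = {r["icu"] for r in records if is_missing(r)}
    complete_icus = [r for r in available if r["icu"] not in icus_with_gaps]
    return {
        "all available data": pooled_rate_ratio(available),
        "complete-data ICUs only": pooled_rate_ratio(complete_icus),
    }
```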

Manage outlier data

Data values that are extremely different from all other values can substantially influence the results of statistical analyses. During the data collection and management phases, errors in collection or entry should have been excluded as a reason for outlying data. Thereafter, there are four basic methods for addressing outlier data values: (i) remove outliers from the analysis, (ii) truncate the outlier and assign another value that is less extreme (e.g. a value that is two or three standard deviations from the mean), (iii) neither adjust nor remove the outlier and allow it to fully influence the overall results and (iv) choose a statistical analysis plan that is not substantially altered by outlier values (e.g. use of medians, rather than means, to summarize the data). There is no single, universal best approach; consequently, sensitivity analyses are essential for understanding whether the approach substantially changes inferences made from the data.

In the QI project, there were very few outlier values remaining after our data quality control steps. These outliers were retained in the QI project. Their potential impact was minimized because we used a median as our primary summary statistic for the infection rate.
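The four approaches to outliers listed above can be compared side by side, which is itself a simple sensitivity analysis. In this sketch, the outlier threshold is assumed to be k standard deviations from the mean (k = 3 by default), with the mean and standard deviation computed from all values; other thresholds are equally defensible. Note that in small samples a single extreme value inflates the standard deviation and may escape a 3-SD rule entirely.

```python
import statistics


def outlier_approaches(values, k=3.0):
    """Summarize `values` under the four approaches to outliers:
    (i) remove values beyond mean +/- k SD, (ii) truncate them to that bound,
    (iii) retain every value unchanged, (iv) use the median instead of the mean.
    """
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    low, high = mean - k * sd, mean + k * sd
    removed = [v for v in values if low <= v <= high]
    truncated = [min(max(v, low), high) for v in values]
    return {
        "remove (mean)": statistics.mean(removed),
        "truncate (mean)": statistics.mean(truncated),
        "retain (mean)": mean,
        "median": statistics.median(values),
    }
```

If the four summaries lead to the same conclusion, the choice of approach matters little; if they diverge, that divergence should be reported.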

Convey precision of the results

QI projects should provide an estimate of the precision of the results so that readers can evaluate whether the results are robust to random variation. Such precision is usually communicated with P-values and/or confidence intervals. A P-value reports the probability of obtaining a result at least as extreme as the observed result by statistical chance alone if the intervention truly had no effect [14]. Results are typically considered ‘statistically significant’ when the P-value is <0.05. In contrast, a confidence interval describes a range of plausible values for the true effect around the observed result, allowing for the play of statistical chance. Confidence intervals are generally more informative because they convey both the size of the effect and its precision. Usually, a 95% confidence interval is presented; a narrower confidence interval implies greater precision. Even if results are statistically significant, stakeholders must determine whether the results are important from their own perspective.

In the QI project, both P-values and 95% confidence intervals were provided to address issues of precision and statistical significance. With the overall result demonstrating a 66% (95% confidence interval, 50–77%) reduction in infection rates, these results were considered large and important from the perspective of relevant stakeholders [15].
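As a simple illustration of how a P-value and 95% confidence interval can accompany a rate comparison, the sketch below computes an unadjusted incidence rate ratio (post-intervention vs baseline) from pooled infection counts and catheter-days, using the standard log-scale Wald approximation, and converts it to a percent reduction. It does not reproduce the case study analysis, which used hierarchical Poisson regression; it assumes Poisson-distributed counts, independent observations and at least one infection in each period. The function names are hypothetical.

```python
import math


def rate_ratio_ci(events_post, days_post, events_pre, days_pre, z=1.96):
    """Unadjusted incidence rate ratio (post/pre) with a Wald confidence
    interval and two-sided P-value, both computed on the log scale.

    SE(log IRR) = sqrt(1/events_post + 1/events_pre) under the Poisson assumption.
    """
    if events_post == 0 or events_pre == 0:
        raise ValueError("need at least one event in each period")
    irr = (events_post / days_post) / (events_pre / days_pre)
    log_irr = math.log(irr)
    se = math.sqrt(1 / events_post + 1 / events_pre)
    lower, upper = math.exp(log_irr - z * se), math.exp(log_irr + z * se)
    # Two-sided P-value from the standard normal: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(log_irr / se) / math.sqrt(2))
    return irr, (lower, upper), p_value


def as_percent_reduction(irr, ci):
    """Convert a rate ratio and its CI into a percent reduction; bounds swap."""
    lower, upper = ci
    return 100 * (1 - irr), (100 * (1 - upper), 100 * (1 - lower))
```

Because a reduction is 1 minus the rate ratio, the upper bound of the ratio's interval becomes the lower bound of the reduction's interval.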

Choose an appropriate statistical model for analysis

Most QI projects are analyzed as time series data using a simple run or control chart, which can graphically display a project's results on an ongoing basis [16–18]. Two additional types of graphical display are noteworthy. First, a g-type control chart is valuable for monitoring infrequently occurring events. This chart assesses the time between events, and has the potential to expose small changes in the timing between events [19]. Secondly, a chart of the cumulative sum (CUSUM) graphically displays the sum of differences between actual performance and a target value, and can allow case-mix adjustment [20].
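The two charts described above rest on simple calculations. The sketch below computes a CUSUM path (the running sum of observed minus target) and the center line and upper control limit of a g-type chart, using one common form of the g-chart limits that assumes the counts between events follow a geometric distribution starting at 0, whose variance is g_bar × (g_bar + 1). It omits the case-mix adjustment that a risk-adjusted CUSUM allows, and plotting is left to whatever charting tool a project already uses. For a g chart, points above the upper limit indicate unusually long gaps between events, that is, possible improvement.

```python
import math


def cusum(values, target):
    """Running sum of (observed - target). For outcomes where lower is better,
    such as infection rates, a steadily falling path means performance is
    consistently better than the target."""
    total, path = 0.0, []
    for value in values:
        total += value - target
        path.append(total)
    return path


def g_chart_limits(gaps):
    """Center line and 3-sigma upper control limit for a g-type chart.

    `gaps` are counts (e.g. catheter-days) between successive events, assumed
    geometric with minimum 0, so variance = g_bar * (g_bar + 1). The 3-sigma
    lower limit is then always negative, so it is reported as 0.
    """
    g_bar = sum(gaps) / len(gaps)
    sigma = math.sqrt(g_bar * (g_bar + 1))
    return {"center": g_bar, "ucl": g_bar + 3 * sigma, "lcl": 0.0}
```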

While various types of control charts are helpful for a single organization to visually evaluate performance over time, simple run charts have substantial limitations. First, it is difficult to provide a point estimate of improved performance as a simple overall summary measure. This limits the ability to communicate the overall effectiveness of a program. Secondly, assessing trends in time series data may not consider factors other than the intervention that could influence the results, such as temporal trends (e.g. an improvement in the outcome over time that is unrelated to the QI intervention) or variation in case-mix over time. Thirdly, simple run charts cannot consider the effect of potential confounders (i.e. factors associated with both the exposure and the outcome of the QI project, which may complicate understanding the true effect of the intervention on the outcome). Fourthly, when comparing data across a number of hospital units or facilities, a fundamental assumption regarding the statistical independence of the outcome data may be violated. Standard statistical methods, particularly the use of regression analysis adjusted for non-independence of data (e.g. using random effects or generalized estimating equations methodologies), can be used to account for all of these issues, although they require the appropriate statistical expertise [21].

During the QI project, we were concerned that infection rates could have been independently associated with the teaching status or bed size of participating hospitals. In addition, infection rates may not have been statistically independent within ICUs, hospitals and geographic regions. To address these issues, we presented our results stratified by teaching status and bed size, and performed a hierarchical, multivariable Poisson regression analysis which demonstrated that the QI intervention had a beneficial effect on reducing infection rates independent of these issues.
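The stratified presentation described above can be complemented by a Mantel-Haenszel summary rate ratio, which pools stratum-specific comparisons into a single estimate adjusted for one categorical factor such as teaching status. This is a simpler, swapped-in technique, not the hierarchical multivariable Poisson regression used in the case study: it adjusts for one stratifying factor at a time and does not account for clustering of observations within ICUs, hospitals or regions. The stratum tuple layout and function names are hypothetical.

```python
def stratum_rate_ratios(strata):
    """Crude post/baseline rate ratio within each named stratum.

    `strata` maps a stratum name (e.g. 'teaching') to a tuple
    (events_post, days_post, events_baseline, days_baseline).
    """
    return {
        name: (a / t1) / (b / t0)
        for name, (a, t1, b, t0) in strata.items()
    }


def mantel_haenszel_rate_ratio(strata):
    """Mantel-Haenszel summary rate ratio (post vs baseline) across strata:
    sum(a * t0 / T) / sum(b * t1 / T), where T = t1 + t0 within each stratum.
    """
    numerator = denominator = 0.0
    for a, t1, b, t0 in strata.values():
        total = t1 + t0
        numerator += a * t0 / total
        denominator += b * t1 / total
    if denominator == 0:
        raise ValueError("no baseline events in any stratum")
    return numerator / denominator
```

Reporting the stratum-specific ratios alongside the summary makes it visible whether the intervention effect is similar across strata, which the summary alone would hide.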

Conclusion

As data from QI projects are becoming increasingly public, data quality control is essential to ensure the integrity of project results. Basic processes, suitable for QI projects, can be implemented to help ensure data quality during all phases of the project. Such data quality control mechanisms are vital to appropriate decision-making based on the results of QI projects.

Funding

The first author is supported by a Clinician–Scientist Award from the Canadian Institutes of Health Research. This project was supported by the Agency for Healthcare Research and Quality (grant #1UC1HS14246).

Acknowledgments

We thank the Michigan Health and Hospital Association Keystone Center for Patient Safety and Quality, and all the ICU teams in Michigan for their tremendous efforts, leadership, courage and dedication to improving the quality of care and safety of their patients.

References

- Werner RM, Bradlow ET, Asch DA. Hospital performance measures and quality of care. LDI Issue Brief. 2008;13:1–4.

- Lindenauer PK, Remus D, Roman S, et al. Public reporting and pay for performance in hospital quality improvement. N Engl J Med. 2007;356:486–96. doi: 10.1056/NEJMsa064964.

- Ferrer R, Artigas A, Levy MM, et al. Improvement in process of care and outcome after a multicenter severe sepsis educational program in Spain. J Am Med Assoc. 2008;299:2294–303. doi: 10.1001/jama.299.19.2294.

- Werner RM, Asch DA. The unintended consequences of publicly reporting quality information. J Am Med Assoc. 2005;293:1239–44. doi: 10.1001/jama.293.10.1239.

- Pronovost PJ, Berenholtz SM, Goeschel CA. Improving the quality of measurement and evaluation in quality improvement efforts. Am J Med Qual. 2008;23:143–6. doi: 10.1177/1062860607313146.

- Terris DD, Litaker DG. Data quality bias: an underrecognized source of misclassification in pay-for-performance reporting? Qual Manag Health Care. 2008;17:19–26. doi: 10.1097/01.QMH.0000308634.59108.60.

- Lindenauer PK. Effects of quality improvement collaboratives. Br Med J. 2008;336:1448–9. doi: 10.1136/bmj.a216.

- Pronovost P, Needham D, Berenholtz S, et al. An intervention to decrease catheter-related bloodstream infections in the ICU. N Engl J Med. 2006;355:2725–32. doi: 10.1056/NEJMoa061115.

- Pronovost PJ, Berenholtz SM, Goeschel CA, et al. Creating high reliability in healthcare organizations. Health Serv Res. 2006;41:1599–617. doi: 10.1111/j.1475-6773.2006.00567.x.

- Pronovost PJ, Berenholtz SM, Goeschel C, et al. Improving patient safety in intensive care units in Michigan. J Crit Care. 2008;23:207–21. doi: 10.1016/j.jcrc.2007.09.002.

- Pronovost P, Needham D, Berenholtz S. Authors reply to: catheter-related bloodstream infections (Jenny-Avital, E.R.) N Engl J Med. 2007;356:1267; author reply 1268. doi: 10.1056/NEJMc070179.

- National Center for Infectious Diseases. The National Healthcare Safety Network (NHSN) Manual. Atlanta: NCID; 2008.

- Rubin DB. Multiple Imputation for Nonresponse in Surveys. New York: John Wiley & Sons, Inc; 1987.

- Dawson B, Trapp RG. Basic & Clinical Biostatistics. 3rd edn. New York: McGraw-Hill Medical; 2001.

- Wenzel RP, Edmond MB. Team-based prevention of catheter-related infections. N Engl J Med. 2006;355:2781–3. doi: 10.1056/NEJMe068230.

- Pronovost PJ, Nolan T, Zeger S, et al. How can clinicians measure safety and quality in acute care? Lancet. 2004;363:1061–7. doi: 10.1016/S0140-6736(04)15843-1.

- Kilo CM. A framework for collaborative improvement: lessons from the Institute for Healthcare Improvement's Breakthrough Series. Qual Manag Health Care. 1998;6:1–13. doi: 10.1097/00019514-199806040-00001.

- Berenholtz SM, Pronovost PJ, Lipsett PA, et al. Eliminating catheter-related bloodstream infections in the intensive care unit. Crit Care Med. 2004;32:2014–20. doi: 10.1097/01.ccm.0000142399.70913.2f.

- Benneyan JC. Number-between g-type statistical quality control charts for monitoring adverse events. Health Care Manag Sci. 2001;4:305–18. doi: 10.1023/a:1011846412909.

- William SM, Parry BR, Schlup MMT. Quality control: an application of the CUSUM. Br Med J. 1992;304:1359–61. doi: 10.1136/bmj.304.6838.1359.

- Diggle PJ, Heagerty PJ, Liang KY, et al. Analysis of Longitudinal Data. New York: Oxford Press Inc; 2002.