Abstract

Objectives

To develop a methodology for evaluating the impact of research on health care, and to characterise the papers cited in clinical guidelines.

Design

The bibliographic details of the papers cited in 15 clinical guidelines, developed in and for the United Kingdom, were collated and analysed with applied bibliometric techniques.

Results

The median age of papers cited in clinical guidelines was eight years. Most papers were published by authors living in either the United States (36%) or the United Kingdom (25%); the UK share is two and a half times higher than expected, as about 10% of all biomedical outputs are published in the United Kingdom. Clinical guidelines cite almost no basic research papers.

Conclusion

Analysis of the evidence base of clinical guidelines may be one way of tracking the flow of knowledge from the laboratory to the clinic. Moreover, such analysis provides a useful, clinically relevant method for evaluating research outcomes and different strategies in research and development.

Introduction

The United Kingdom spends over £1600 million a year on non-commercial biomedical and health services research.1 This research is funded either from the public purse, through bodies such as the NHS and the Medical Research Council, or by medical research charities such as the Wellcome Trust. The tacit understanding is that the biomedical research these bodies support will eventually lead to an improvement in health. The system is highly complex, however, and funding agencies support a wide spectrum of activity, from basic biomedical research through to health services research and technology assessment. Notwithstanding this complexity, there is a need to understand how research funding affects health care. Such understanding will allow funding agencies to demonstrate accountability and good research governance to their stakeholders; enhance public perception and understanding of biomedical science and the scientific process; and develop more effective research and development strategies that increase the likelihood of “successful” research outcomes.2

Traditionally, the contribution of scientific research to knowledge has been measured by the number and impact of scientific papers in the peer reviewed literature. A broader approach has suggested disaggregating the research process and assessing the “payback” at each stage.3 A pilot study has shown that it is possible to use applied bibliometric techniques to “link” research funding organisations with both primary and secondary outputs.2 Primary outputs are defined as publications in the serial peer reviewed literature, while secondary outputs are taken to be evidence based clinical guidelines. We expanded on the pilot study by increasing the sample size so that we could characterise the papers cited in clinical guidelines.

Data sources

Fifteen sets of guidelines on disease management were selected as data sources for the study (table 1).4–18 The guidelines covered a range of conditions seen in general (family) practice or in hospital care, and all had been produced in the United Kingdom, either by the royal colleges or by the North of England Evidence Based Guidelines Development Project. The guidelines were selected because they had been assessed by the NHS Appraisal Centre for Clinical Guidelines. The role of the centre is to advise the NHS Executive about the quality of clinical guidelines that have been funded through the national guideline programme.19 Its appraisal consists of a structured peer review based on a validated appraisal instrument that assesses key elements for the development and reporting of clinical guidelines.20

Table 1.

Code, title, and reference of 15 “appraised” guidelines

| Code | Guideline title | Reference |
|---|---|---|
| ACE | ACE inhibitors in the primary care management of adults with symptomatic heart failure | 4 |
| ANG | The primary care management of stable angina | 5 |
| ASP | Aspirin for the secondary prophylaxis of vascular disease in primary care | 6 |
| BAC | Clinical guidelines for the management of acute low back pain | 7 |
| DEM | The primary care management of dementia | 8 |
| DEP | The choice of antidepressants for depression in primary care | 9 |
| EPI | Adults with poorly controlled epilepsy | 10 |
| GLI | Improving care for patients with malignant cerebral glioma | 11 |
| INF | The management of infertility in secondary care | 12 |
| MEN | The initial management of menorrhagia | 13 |
| NSA | Non-steroidal anti-inflammatory drugs (NSAIDs) versus basic analgesia in the treatment of pain believed to be due to degenerative arthritis | 14 |
| OST | Clinical guidelines for strategies to prevent and treat osteoporosis | 15 |
| SPE | Clinical guidelines by consensus for speech and language therapists | 16 |
| VIO | The management of imminent violence | 17 |
| WHE | The primary care management of asthma in adults | 18 |

Methods

One criterion on which the guidelines are appraised is the “identification and interpretation of evidence.” Accordingly, a well developed guideline would include a comprehensive bibliography of the publications it cites. We scanned these bibliographic details into a bespoke database. After standardising the bibliographic data, we looked up all papers in the Science Citation Index and in libraries to add the authors' addresses and any missing information, such as paper titles and volume numbers.
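The standardisation step can be illustrated with a minimal sketch. It assumes, hypothetically, that each reference has already been parsed into first author, year, journal, volume, and first page; the `normalise_key` and `papers_in_multiple_guidelines` helpers and the sample records are illustrative and are not the authors' actual database. Building a normalised key from these fields makes it possible to count how many guidelines cite the same paper, which is how papers cited in more than one guideline (and therefore “double counted”) could be flagged.

```python
import re
from collections import defaultdict

def normalise_key(author, year, journal, volume, first_page):
    """Build a hypothetical matching key for one cited paper.

    Lower-cases text fields and strips punctuation and spaces, so that
    'N Engl J Med' and 'N. Engl. J. Med.' map to the same key.
    """
    def clean(text):
        return re.sub(r"[^a-z0-9]", "", str(text).lower())
    return (clean(author), int(year), clean(journal), clean(volume), clean(first_page))

def papers_in_multiple_guidelines(citations):
    """citations: iterable of (guideline_code, author, year, journal, volume, first_page).

    Returns {key: sorted guideline codes} for papers cited by two or more guidelines.
    """
    guidelines_by_paper = defaultdict(set)
    for code, *fields in citations:
        guidelines_by_paper[normalise_key(*fields)].add(code)
    return {key: sorted(codes) for key, codes in guidelines_by_paper.items() if len(codes) > 1}

# Illustrative records only (not data from the study).
sample = [
    ("ACE", "Smith J", 1990, "N Engl J Med", "323", "100"),
    ("ANG", "Smith J.", 1990, "N. Engl. J. Med.", "323", "100"),
    ("ANG", "Jones K", 1985, "BMJ", "290", "50"),
]
print(papers_in_multiple_guidelines(sample))
# {('smithj', 1990, 'nengljmed', '323', '100'): ['ACE', 'ANG']}
```

Exact-key matching is deliberately strict; real reference lists would also need handling of typos in author names or page numbers, which this sketch does not attempt.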

When possible, we made comparisons with all UK biomedical publications between 1988 and 1995 using the Wellcome Trust's research output database (ROD).1 Analyses were based on either paper or journal details and examined the “knowledge cycle time” (the time between a paper's publication and its citation in a clinical guideline); the country of authorship, based on analysis of the address fields; and the type of research cited (that is, the extent to which basic or clinical research was cited in guidelines).

This last analysis used a journal classification system developed and updated by CHI Research (a private research consultancy based in the United States). The system is based on expert opinion and journal to journal citations and has become a standard tool in bibliometric analyses.21 Journals are allocated to four hierarchical levels in which each level is more likely to cite papers in journals at the same level or the level below it. Hence, only 4% of papers in level 1 “clinical observation” journals (for example, BMJ) will cite papers in level 4 “basic” journals (for example, Nature) compared with 8% for level 2 “clinical mix” journals (for example, New England Journal of Medicine) and 21% for level 3 “clinical investigation” journals (for example, Immunology). By looking at the journals in which papers cited in clinical guidelines are published, it is possible to characterise the research and estimate how long it takes for basic research to feed into clinical practice. This analysis, however, is rather crude as it allocates all papers within a journal to one level, despite a strong likelihood that there is variation in the type of research published in a given journal.
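A minimal sketch of the journal-level analysis follows, assuming a lookup table from journal title to research level. Only the four example journals named in the text are mapped here, at the levels the text gives them; `JOURNAL_LEVEL` and `level_distribution` are hypothetical names, and the full CHI Research classification is not reproduced. Papers in journals without a level are counted separately and excluded from the percentages, and every paper inherits its journal's level, which is exactly the crudeness the text acknowledges.

```python
from collections import Counter

# Hypothetical excerpt of a journal-to-level lookup; levels follow the examples in the text.
JOURNAL_LEVEL = {
    "BMJ": 1,                              # clinical observation
    "New England Journal of Medicine": 2,  # clinical mix
    "Immunology": 3,                       # clinical investigation
    "Nature": 4,                           # basic research
}

LEVEL_NAMES = {1: "clinical observation", 2: "clinical mix",
               3: "clinical investigation", 4: "basic research"}

def level_distribution(journals):
    """Return (percentage of classified papers at each level, number unclassified).

    Each paper is assigned the level of the journal it appeared in.
    """
    levels = [JOURNAL_LEVEL.get(journal) for journal in journals]
    unclassified = sum(level is None for level in levels)
    counts = Counter(level for level in levels if level is not None)
    classified = sum(counts.values())
    shares = {LEVEL_NAMES[lvl]: (100 * counts[lvl] / classified if classified else 0.0)
              for lvl in sorted(LEVEL_NAMES)}
    return shares, unclassified

cited = ["BMJ", "BMJ", "New England Journal of Medicine", "Nature", "Some Unlisted Journal"]
shares, missing = level_distribution(cited)
print(shares)   # {'clinical observation': 50.0, 'clinical mix': 25.0, 'clinical investigation': 0.0, 'basic research': 25.0}
print(missing)  # 1
```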

Results

Table 2 shows the characteristics of the papers cited in the 15 guidelines. In total, 2501 papers were referenced in the bibliographies of the guidelines, of which 2043 (82%; range 50-98%) were papers in research journals. A small proportion of papers (55; 3%) were referenced in more than one guideline but were still included in the following analyses and were therefore “double counted.” Of the 2043 papers looked up in libraries, 1761 (86%; range 63-100%) were found and their missing information (for example, addresses) recorded.

Table 2.

Number of references, papers, and found papers, by guideline

| Code | No of references | No (%) of papers*† | No (%) of found papers‡ |
|---|---|---|---|
| ACE | 86 | 60 (70) | 54 (90) |
| ANG | 123 | 116 (94) | 107 (92) |
| ASP | 132 | 130 (98) | 93 (72) |
| BAC | 68 | 53 (78) | 46 (87) |
| DEM | 153 | 143 (93) | 119 (83) |
| DEP | 258 | 236 (91) | 183 (78) |
| EPI | 107 | 85 (79) | 67 (79) |
| GLI | 112 | 82 (73) | 71 (87) |
| INF | 588 | 466 (79) | 434 (93) |
| MEN | 127 | 100 (79) | 85 (85) |
| NSA | 48 | 33 (69) | 33 (100) |
| OST | 422 | 337 (80) | 314 (93) |
| SPE | 122 | 61 (50) | 47 (77) |
| VIO | 89 | 87 (98) | 55 (63) |
| WHE | 66 | 54 (82) | 53 (98) |
| Total | 2501 | 2043§ (82) | 1761¶ (86) |

*Papers are defined as scientific publications in the peer reviewed literature, that is, not reports, book chapters, etc.

†Denominator is number of references.

‡Denominator is number of papers.

§Includes 55 papers cited in more than one guideline.

¶Includes 54 papers cited in more than one guideline.
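The percentages in table 2 follow directly from the counts. This short check recomputes, for each guideline, the share of references that were journal papers and the share of papers that were found, together with the overall totals; the `TABLE_2` dictionary simply transcribes the table, and `pct` is an illustrative helper that rounds to whole percentages. The totals work out at 82% of references being papers, 86% of papers being found, and found papers making up 70% of all references.

```python
# Counts transcribed from table 2: code -> (references, papers, found papers)
TABLE_2 = {
    "ACE": (86, 60, 54), "ANG": (123, 116, 107), "ASP": (132, 130, 93),
    "BAC": (68, 53, 46), "DEM": (153, 143, 119), "DEP": (258, 236, 183),
    "EPI": (107, 85, 67), "GLI": (112, 82, 71), "INF": (588, 466, 434),
    "MEN": (127, 100, 85), "NSA": (48, 33, 33), "OST": (422, 337, 314),
    "SPE": (122, 61, 47), "VIO": (89, 87, 55), "WHE": (66, 54, 53),
}

def pct(numerator, denominator):
    """Percentage rounded to a whole number, as reported in the table."""
    return round(100 * numerator / denominator)

# Per-guideline (% of references that are papers, % of papers found)
ROW_PCTS = {code: (pct(p, r), pct(f, p)) for code, (r, p, f) in TABLE_2.items()}

total_refs = sum(r for r, _, _ in TABLE_2.values())
total_papers = sum(p for _, p, _ in TABLE_2.values())
total_found = sum(f for _, _, f in TABLE_2.values())
print(total_refs, total_papers, total_found)  # 2501 2043 1761
print(pct(total_papers, total_refs), pct(total_found, total_papers), pct(total_found, total_refs))  # 82 86 70
```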

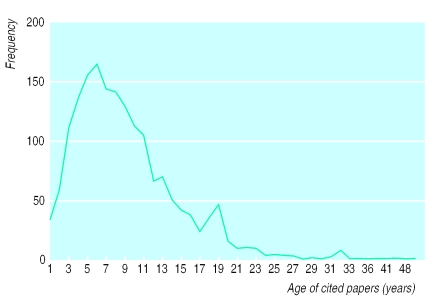

The “knowledge cycle time”

In patent analysis, an important characteristic is the “prior art cycle time,” which is defined as the median age of the patents referenced on the front page of a patent.22 With guidelines, an analogous indicator would be the “knowledge cycle time”—that is, the age of papers cited in clinical guidelines, as illustrated in figure 1. The median knowledge cycle time for all 15 guidelines was eight years; 25% of the papers, however, were more than 10 years old and 4% more than 25 years old.
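A minimal sketch of how the knowledge cycle time could be computed, assuming each citation is reduced to the publication year of the cited paper and the publication year of the guideline. The `knowledge_cycle_time` function and the example pairs are illustrative, not the study's data; `statistics.median` gives the median age, and the other two values correspond to the tail proportions quoted in the text (papers more than 10 and more than 25 years old).

```python
import statistics

def knowledge_cycle_time(citations):
    """citations: iterable of (paper_year, guideline_year) pairs.

    Returns the median age in years plus the percentage of papers more
    than 10 and more than 25 years old when cited in the guideline.
    """
    ages = [guideline_year - paper_year for paper_year, guideline_year in citations]
    n = len(ages)
    return {
        "median_age": statistics.median(ages),
        "pct_over_10": 100 * sum(age > 10 for age in ages) / n,
        "pct_over_25": 100 * sum(age > 25 for age in ages) / n,
    }

# Illustrative pairs for a guideline published in 1998 (not study data).
example = [(1990, 1998), (1995, 1998), (1985, 1998), (1970, 1998), (1991, 1998)]
print(knowledge_cycle_time(example))
# {'median_age': 8, 'pct_over_10': 40.0, 'pct_over_25': 20.0}
```

Ages here are whole-year differences, so a paper published late in one year and cited early in the next counts as one year old; finer dating would need publication months.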

Figure 1.

Age of papers cited in clinical guidelines

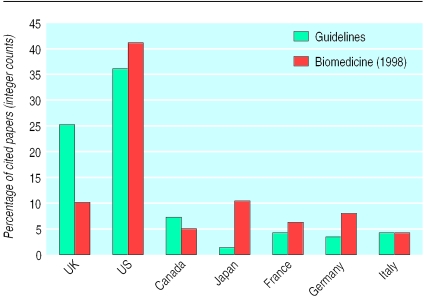

Country of authorship

Figure 2 shows the distribution of the addresses of authors cited in the clinical guidelines for the Group of Seven (G7) largest economies. Comparison data for outputs in 1998 were taken from the Science Citation Index with a title keyword and specialist journal search strategy developed for biomedicine and described elsewhere.1 In total 9007 authors were cited in the 1761 papers, which is an average of 5.1 authors per paper. This distribution is heavily skewed as five papers had more than 100 authors. Most papers were published by authors living in either the United States (36%) or the United Kingdom (25%), with the five remaining countries accounting for 19% of addresses. Non-G7 countries accounted for 26% of the papers. Note that for a number of the guidelines the total percentages add up to more than 100% because of collaboration between the G7 countries. The most salient observation to be made from figure 2 is the higher proportion of UK papers cited in guidelines compared with biomedicine as a whole.
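Because the analysis is based on address fields, a paper with authors in two countries counts towards both. A minimal sketch of this whole counting, assuming each paper is reduced to the set of countries in its addresses (the sample papers and the function names are illustrative), shows why the country percentages can sum to more than 100%. The last line compares the UK share of cited papers with its share of all biomedical output, using the 25% and roughly 10% figures given in the abstract.

```python
from collections import Counter

def country_shares(papers):
    """papers: list of collections of author countries, one per paper.

    Whole counting: each country on a paper is credited once, so the
    shares across countries can add up to more than 100%.
    """
    counts = Counter(country for countries in papers for country in set(countries))
    return {country: 100 * k / len(papers) for country, k in counts.items()}

def observed_to_expected(observed_share, expected_share):
    """Ratio of a country's share of cited papers to its share of all output."""
    return observed_share / expected_share

# Illustrative papers only, not study data.
papers = [{"US"}, {"UK"}, {"US", "UK"}, {"Canada"}]
shares = country_shares(papers)
print(sorted(shares.items()))        # [('Canada', 25.0), ('UK', 50.0), ('US', 50.0)]
print(sum(shares.values()))          # 125.0
print(observed_to_expected(25, 10))  # 2.5
```

Fractional counting (splitting each paper's credit equally among its countries) would force the totals to 100%, but it answers a different question from the one posed by figure 2.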

Figure 2.

Nationality of authors of papers cited in clinical guidelines

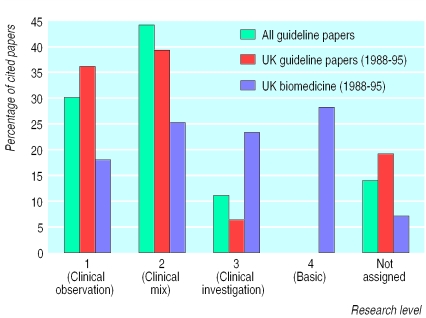

Type of cited research

The third analysis was based on the journals in which the cited papers appeared and was used to determine the extent to which basic or clinical research is cited in clinical guidelines. Figure 3 shows the distribution of cited papers across four different journal levels. Of the 1761 papers, 260 (15%) did not have a research level. Of the remaining papers, nearly three quarters appeared in level 1 (clinical observation) or level 2 (clinical mix) research journals compared with only four papers (or 0.2%) in level 4 (basic) journals.

Figure 3.

Research level of journals cited in clinical guidelines

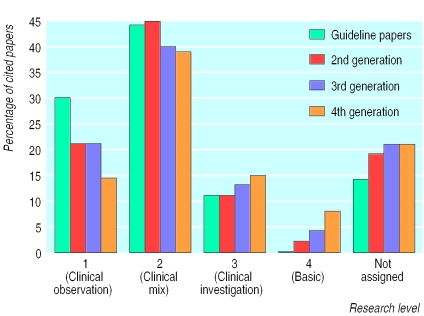

Comparison data were taken from the research output database for UK biomedical publications between 1988 and 1995 (the right hand column for each research level in figure 3). To make this comparison valid, however, the guideline papers have been restricted to the 291 UK publications between 1988 and 1995 (the middle column for each research level). As is apparent from figure 3, clinical guidelines cite clinical papers. This observation is not surprising in itself, but there is an underlying assumption in biomedical science policy that basic research feeds into clinical practice. To see if this assumption holds, figure 4 examines the type of research cited in subsequent “generations” of papers. Second generation papers are those cited in a 5% random sample of the guideline papers, third generation papers are those cited in a 5% random sample of the second generation papers, and so on. In this analysis the proportion of clinical observation (level 1) papers fell from 30% to 14% over the four generations analysed, while the proportion of basic (level 4) papers increased from 0.2% to 8%.
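The generational analysis can be sketched as repeated sampling over a citation graph. This assumes, hypothetically, a dictionary mapping each paper identifier to the identifiers it cites; at each step a 5% random sample (at least one paper) of the current generation is drawn, and the papers they cite form the next generation. The function names, the toy graph, and the fixed random seed are illustrative only.

```python
import random

def next_generation(current, references, fraction=0.05, rng=random):
    """Sample `fraction` of the current generation (at least one paper) and
    return the sorted, de-duplicated papers cited by the sampled papers."""
    population = sorted(set(current))
    if not population:
        return []
    k = max(1, round(fraction * len(population)))
    sampled = rng.sample(population, k)
    return sorted({cited for paper in sampled for cited in references.get(paper, [])})

def generations(first_generation, references, depth=4, fraction=0.05, seed=0):
    """List of generations, starting with the papers cited in the guidelines."""
    rng = random.Random(seed)
    result = [sorted(set(first_generation))]
    for _ in range(depth - 1):
        result.append(next_generation(result[-1], references, fraction, rng))
    return result

# Toy citation graph (hypothetical identifiers): paper -> papers it cites.
toy_refs = {"g1": ["a", "b"], "g2": ["b", "c"], "a": ["x"], "b": ["y"], "c": ["x", "z"]}

# fraction=1.0 keeps every paper, so this toy output is deterministic.
for number, generation in enumerate(generations(["g1", "g2"], toy_refs, depth=3, fraction=1.0), start=1):
    print(number, generation)
# 1 ['g1', 'g2']
# 2 ['a', 'b', 'c']
# 3 ['x', 'y', 'z']
```

Passing each generation through a journal-level lookup would then give the per-generation proportions of clinical and basic papers plotted in figure 4.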

Figure 4.

Research level of journals cited in subsequent generations of papers

Discussion

Policy implications

This study raises a number of important policy issues. Potentially the most important observation is in figure 2, which shows that authors of UK guidelines cite UK papers more often than the UK's share of world biomedical output would predict. This may suggest that the guidelines are not citing all the internationally available evidence and that there is a publication bias. This explanation is unlikely, however, because the appraisal criteria specifically assess whether the identification, evaluation, and selection of evidence have been properly conducted and are unbiased. That said, it would be interesting to see whether US guidelines cite US papers, or whether a disproportionate volume of papers relevant to guidelines is published in the United Kingdom. Furthermore, guidelines informed by local (UK) research might not be a bad thing per se. Indeed, the preferential citing of UK papers may provide good evidence for supporting a local science base. If so, then the central policy question is: does a strong science base lead to better clinical practice? As far as we are aware, this assumption has never been fully tested. One way to test it would be to link data on research evaluation (such as the number and quality of peer reviewed publications) with data on clinical audit (such as standardised mortality adjusted for case mix).

A second observation is the length of time it takes for basic research to flow into clinical practice. It has been shown that on average it takes three years for a paper to be cited,23 and figure 1 shows a median lag of eight years between the publication of a paper and its citation in a guideline. In other words, it would seem to take about 17 years (that is, 8 + (3 × 3)) for fourth generation research papers to feed into clinical practice. From a policy viewpoint, this raises the questions of what the optimum time is for research to be fully evaluated and put into practice, and whether this process needs to be, and can be, speeded up.
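The 17 year figure is a simple sum. Writing $t_n$ for the lag between a generation $n$ paper and the guideline, $t_1$ for the eight year median lag of the guideline papers themselves, and $\Delta$ for the roughly three years it takes a paper to be cited (this notation is introduced here for illustration), each earlier generation adds one citation delay:

```latex
\[
t_n = t_1 + (n - 1)\,\Delta, \qquad
t_4 = 8 + (4 - 1) \times 3 = 17 \text{ years}.
\]
```

The estimate treats the three year average as if it applied unchanged at every generation, so it is an order-of-magnitude figure rather than a measured lag.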

A related issue is how basic research supports clinical research. Figure 4 shows that basic research is hardly cited in clinical guidelines, and although the proportion increases with each generation, basic research accounts for only 8% of the papers cited at the fourth generation. It is hard to estimate how much basic research one would expect to be cited at the fourth generation, but this is well below the 25% for all UK biomedicine. Does this imply that there is little interaction between the clinical and basic research communities? Or is the information flowing through other routes, such as tacit knowledge or medical education? Interestingly, these findings are at odds with those of past studies, which have concluded that basic research is an essential bedrock of clinical medicine.24 These studies and the current analysis, however, are retrospective: they identify a success (such as a citation in a clinical guideline or a medical advance) and look back at the supporting evidence. An alternative approach would be to identify a body of basic research published some time ago and follow its subsequent knowledge flow. This would further our understanding of how research feeds into medical (or non-medical) advances.

Methodological limitations

This study has shown how applied bibliometric techniques can be used to generate and inform several interesting policy issues in research and development, and how they provide a way to evaluate the impact of research on clinical practice. There are, however, several limitations to our approach. Firstly, our analysis is based on “found papers.” These account for 70% of all references cited in clinical guidelines, so our results could be biased if the papers we failed to find had different characteristics from those analysed. Secondly, a citation in a clinical guideline does not guarantee an impact on health and therefore a payback on the research investment. Nevertheless, a citation in a clinical guideline could be considered an indicator of research utility and thus an intermediate outcome, or “secondary output” in the language of the payback model. Thirdly, bibliometric analysis does not give any indication of the importance of a cited paper. This problem could be addressed by weighting papers on the basis of quality, sample size, or similar attributes. Finally, the analysis was undertaken in the absence of a denominator. Only the “successful” papers were examined, and we do not know how many papers have been published that are potentially relevant to the topics covered by the guidelines but were not cited in them.
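One way such weighting might work, sketched under stated assumptions: each cited paper is given a quality score between 0 and 1 and a sample size (both hypothetical inputs; the paper does not specify a weighting scheme), and per-paper weights are summed instead of simply counting papers. The weight used here, quality multiplied by the base 10 logarithm of one plus the sample size, is an illustrative choice that dampens the influence of very large trials.

```python
import math

def weighted_citation_score(papers):
    """papers: iterable of dicts with hypothetical 'quality' (0-1) and 'sample_size' keys.

    Each paper contributes quality * log10(1 + sample_size) instead of 1,
    so a large, high quality study counts for more than a small, weak one.
    """
    return sum(p["quality"] * math.log10(1 + p["sample_size"]) for p in papers)

cited = [
    {"quality": 1.0, "sample_size": 99},  # contributes 1.0 * log10(100) = 2.0
    {"quality": 0.5, "sample_size": 9},   # contributes 0.5 * log10(10) = 0.5
]
print(weighted_citation_score(cited))  # 2.5, compared with an unweighted count of 2
```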

Towards an evidence based research policy

Despite these limitations, we would strongly defend this type of analysis and encourage other investigators to spend some time thinking about the way research is managed. In one sense the lack of solid conclusions reflects the lack of evidence in this area. This is despite recognition in the BMJ in 1987 that “we need to research research”25 and the strong methodological synergy between epidemiology, especially the analysis of observational datasets, and the quantitative evaluation of research presented in this paper. Indeed, at a time when researchers are demanding that clinicians practise evidence based medicine, it is only appropriate that researchers audit and evaluate the outputs and outcomes of their own work.

What is already known on this topic

Research evaluations have traditionally assessed contributions to knowledge through citation analysis

The objective of many agencies that fund medical research is to support research that improves health, and therefore conventional bibliometric analysis, which assesses contributions to knowledge, may not be an appropriate method of evaluation

What this study adds

The use of clinical guidelines as an intermediate outcome measure in the research process is a novel method of applying bibliometric techniques to assess the impact of research on health care

Footnotes

Funding: JG, RC, and GF work at, and are therefore intramurally supported by, the Wellcome Trust. FC works at the Health Care Evaluation Unit, which is supported by the research and development offices of the South East and London NHS regional offices.

Competing interests: None declared.

References

- 1. Dawson G, Lucocq B, Cottrell R, Lewison G. Mapping the landscape. National biomedical outputs 1988-95. London: Wellcome Trust; 1998.

- 2. Grant J. Evaluating the outcomes of biomedical research on healthcare. Res Eval. 1999;8:33–38.

- 3. Buxton M, Hanney S. How can payback from health services research be assessed? J Health Services Res Policy. 1996;1:35–43.

- 4. North of England Evidence Based Guideline Development Project. ACE inhibitors in the primary care management of adults with symptomatic heart failure. Newcastle upon Tyne: Centre for Health Services Research, University of Newcastle upon Tyne; 1998.

- 5. North of England Evidence Based Guideline Development Project. The primary care management of stable angina. Newcastle upon Tyne: Centre for Health Services Research, University of Newcastle upon Tyne; 1996.

- 6. North of England Evidence Based Guideline Development Project. Aspirin for the secondary prophylaxis of vascular disease in primary care. Newcastle upon Tyne: Centre for Health Services Research, University of Newcastle upon Tyne; 1998.

- 7. Waddell G, Feder G, McIntosh A, Lewis M, Hutchinson A. Low back pain evidence review. London: Royal College of General Practitioners; 1996.

- 8. North of England Evidence Based Guideline Development Project. The primary care management of dementia. Newcastle upon Tyne: Centre for Health Services Research, University of Newcastle upon Tyne; 1998.

- 9. North of England Evidence Based Guideline Development Project. The choice of antidepressants for depression in primary care. Newcastle upon Tyne: Centre for Health Services Research, University of Newcastle upon Tyne; 1998.

- 10. Wallace H, Shorvon SD, Hopkins A, O'Donoghue M. Adults with poorly controlled epilepsy. London: Royal College of Physicians; 1997.

- 11. Davies E, Hopkins A. Improving care for patients with malignant cerebral glioma. London: Royal College of Physicians; 1997.

- 12. Royal College of Obstetricians and Gynaecologists. The management of infertility in secondary care. London: RCOG; 1998.

- 13. Royal College of Obstetricians and Gynaecologists. The initial management of menorrhagia. London: RCOG; 1998.

- 14. North of England Evidence Based Guideline Development Project. Non-steroidal anti-inflammatory drugs (NSAIDs) versus basic analgesia in the treatment of pain believed to be due to degenerative arthritis. Newcastle upon Tyne: Centre for Health Services Research, University of Newcastle upon Tyne; 1998.

- 15. Royal College of Physicians. Clinical guidelines for strategies to prevent and treat osteoporosis. London: Royal College of Physicians; 1998.

- 16. Enderby P, Reid D, Van der Gaag A. Clinical guidelines by consensus for speech and language therapists. Glasgow: M and M Press; 1998.

- 17. Royal College of Psychiatrists. The management of imminent violence. London: Royal College of Psychiatrists; 1998.

- 18. North of England Evidence Based Guideline Development Project. The primary care management of asthma in adults. Newcastle upon Tyne: Centre for Health Services Research, University of Newcastle upon Tyne; 1996.

- 19. Cluzeau F, Littlejohns P. Appraising clinical practice guidelines in England and Wales: the development of a methodologic framework and its application to policy. Joint Commission J Qual Improve. 1999;25:514–521. doi: 10.1016/s1070-3241(16)30465-5.

- 20. Cluzeau F, Littlejohns P, Grimshaw J, Feder G, Moran S. Development and application of a generic methodology to assess the quality of clinical guidelines. Int J Qual Health Care. 1999;11:21–28. doi: 10.1093/intqhc/11.1.21.

- 21. Narin F, Pinski G, Gee H. Structure of the biomedical literature. J Am Soc Inform Sci. 1976;27:25–45.

- 22. Anderson J, Williams N, Seemungal D, Narin F, Olivastro D. Human genetic technology: exploring the links between science and innovation. Technol Anal Strategic Manage. 1996;8:135–156.

- 23. Grant J, Lewison G. Government funding of research and development. Science. 1997;278:878–880.

- 24. Comroe J, Dripps R. Scientific basis for the support of biomedical science. Science. 1976;192:105–111. doi: 10.1126/science.769161.

- 25. Smith R. Comroe and Dripps revisited. BMJ. 1987;295:1404–1407. doi: 10.1136/bmj.295.6610.1404.