Abstract

The speech understanding of persons with sloping high-frequency (HF) hearing impairment (HI) was compared to that of normal-hearing (NH) controls and to previous research on persons with “flat” losses to examine how hearing loss configuration affects the contribution of speech information in various frequency regions. Speech understanding was assessed at multiple low- and high-pass filter cutoff frequencies. Crossover frequencies, defined as the cutoff frequencies at which low- and high-pass filtering yielded equivalent performance, were significantly lower for the sloping HI group than for the NH group, suggesting that HF HI limits the utility of HF speech information. Speech intelligibility index calculations suggest that this limited utility was not due simply to reduced audibility. It also reflected the negative effects of high presentation levels and a poorer-than-normal use of speech information in the frequency region with the greatest hearing loss (the HF regions). This deficit was comparable, however, to that seen in low-frequency regions of persons with similar HF thresholds and “flat” hearing losses. This suggests that sensorineural HI results in a “uniform,” rather than frequency-specific, deficit in speech understanding, at least for persons with HF thresholds up to 60-80 dB HL.

I. INTRODUCTION

Although the negative effect of sensorineural hearing loss (SNHL) on speech understanding is well documented, the benefits and limitations of restoring audibility via amplification continue to be debated. One area that has received recent attention is how the presence and degree of hearing loss, as a function of frequency, affect our ability to make use of amplified speech information. For example, recent research has suggested that, for persons with moderate-to-severe flat SNHL, restoring audibility to low-frequency speech information is more beneficial than restoring audibility to high-frequency speech information, even given a comparable hearing loss in the high frequencies (Ching, Dillon, and Byrne, 1998; Ching et al., 2001; Hogan and Turner, 1998; Turner and Cummings, 1999; Vickers, Moore, and Baer, 2001). In the studies cited here, speech understanding in quiet was assessed as the frequency range of speech information was systematically varied. The results generally revealed little or no improvement in speech understanding when high-frequency (i.e., above 3000 Hz) speech information was made audible to individuals with SNHL greater than 55-80 dB HL in the high frequencies. The evidence regarding the effects of hearing loss on low-frequency information is less clear. However, these and other studies have suggested that speech understanding will improve, regardless of degree of hearing loss, as lower-frequency information is made available (Turner and Brus, 2001). Taken together, these studies suggest that hearing loss results in a “frequency-specific” deficit in speech understanding, with the high-frequency regions (>3000 Hz) being most affected.

Factors other than degree of high-frequency hearing loss may also affect our ability to make use of amplified high-frequency speech information. Turner and Henry (2002) reported that background noise may also affect the utility of amplified high-frequency speech information. They measured speech understanding in noise for subjects with varying degrees of high-frequency hearing loss as the amount of high-frequency speech information was progressively increased. The relative improvements in intelligibility were small when speech information above 3000 Hz was added. Even so, persons with hearing loss listening in noise, regardless of their degree of high-frequency hearing loss, used amplified high-frequency information with efficiency comparable to that of persons without hearing loss. This contrasts with results from an earlier study that used the same paradigm but was completed in quiet (Hogan and Turner, 1998). The authors suggested that the difference between results obtained in quiet and in noise is due to differences in the relative access to “easy” (i.e., voicing and manner cues) and “more difficult” (i.e., place of articulation) speech cues when speech is presented in quiet versus noise backgrounds.

The absence of functioning inner hair cells in a specific frequency region, recently referred to as a “cochlear dead region,” may also affect our ability to make use of amplified speech information (Baer, Moore, and Kluk, 2002; Moore et al., 2000; Summers et al., 2003; Vickers, Moore, and Baer, 2001). It is assumed that information in the acoustic signal cannot be accurately transmitted to higher auditory centers if there is a lack of functioning inner hair cells (a dead region). Thus restoring audibility to speech information in a dead region is not expected to improve speech understanding, and it may even hinder it. Baer, Moore, and Kluk (2002) tested listeners in noise. Persons with hearing loss and high-frequency dead regions were less able to make use of amplified high-frequency speech information than persons with hearing loss but without dead regions. Vickers, Moore, and Baer (2001) reported similar findings for persons with and without dead regions tested in quiet. In the two studies cited above, the subjects with dead regions tended to have more high-frequency hearing loss than the subjects without dead regions. Thus differences in audibility between groups may also have played a role in their findings (Rankovic, 2002). Despite this confound, a lack of surviving inner hair cells in a specific region could clearly affect the utility of speech information in that region.

Finally, a recent study by Hornsby and Ricketts (2003) suggested that the configuration of hearing loss may also play a role in determining the utility of amplified speech information. In that study we examined the utility of speech information, as a function of frequency, in individuals with essentially “flat” SNHL. This avoided the confound of variation in degree of hearing loss across frequency. Speech understanding in noise was measured at a variety of low- and high-pass filter cutoff frequencies. These data were used to estimate crossover frequencies for individuals with flat SNHL and for a control group of persons without hearing loss. The crossover frequency is defined as the cutoff frequency at which low-pass and high-pass filtering yield equivalent performance. It provides an estimate of the relative contribution of acoustic information in the low- and high-frequency regions to speech understanding (e.g., French and Steinberg, 1947). No significant difference in crossover frequencies was observed between the control group and persons with flat hearing losses.

In addition, speech intelligibility index (SII; ANSI S3.5, 1997) calculations were made to determine whether the performance of persons with flat hearing loss could be explained primarily by audibility of the speech. The SII was not specifically designed for persons with hearing loss. Nevertheless, multiple researchers have used it, and its precursor the articulation index, to explore factors other than audibility (e.g., frequency and/or temporal processing abilities, age, cognitive function) that may affect the speech understanding of persons with hearing loss (e.g., Dubno, Dirks, and Schaefer, 1989; Humes, 2002). Results from Hornsby and Ricketts (2003) show that the absolute performance of the flat HI participants was poorer than predicted (see their Fig. 10). However, the relative improvements in performance with changes in filter cutoff frequency were well predicted. This suggests that these listeners utilized amplified speech information, regardless of frequency, in a fashion comparable to persons without hearing loss. The findings of that study suggest that hearing loss results in a “uniform” rather than frequency-specific deficit in speech understanding. That is, a flat hearing loss would limit the contribution of speech information across all affected frequency regions, resulting in poorer absolute performance but crossover frequencies comparable to those of persons with normal hearing. In contrast, a high-frequency hearing loss would limit the contribution of speech information primarily in the high-frequency regions, reducing absolute performance for high-frequency speech information and shifting the crossover frequency lower.

The results of Hornsby and Ricketts (2003) contrast with some earlier studies of the utility of high-frequency speech information. These studies presented speech in quiet (Ching, Dillon, and Byrne, 1998; Ching et al., 2001; Hogan and Turner, 1998; Turner and Cummings, 1999; Turner and Brus, 2001) and in noise (Amos and Humes, 2001). One potential reason for this difference is that the effect of SNHL on speech understanding may differ fundamentally between persons with flat and sloping hearing losses. Persons with high-frequency hearing losses make relatively good use of low-frequency information, and speech information is highly redundant across frequencies. As a result, persons with high-frequency SNHL may not need to rely heavily on the more degraded high-frequency speech cues. This would be especially true when speech materials are presented in quiet, as in several previous experiments (e.g., Hogan and Turner, 1998; Ching, Dillon, and Byrne, 1998). In contrast, persons with flat losses have some degradation at all frequencies and may require a broader range of cues regardless of frequency region.

Given the substantial methodological differences between the studies discussed above, it remains unclear whether configuration of hearing loss plays a role in our ability to utilize speech information in various frequency regions, particularly high-frequency information. The primary purpose of this study was to further examine the effect of configuration of hearing loss on the utility of amplified high-frequency speech information in noise. To do this we compare results from subjects with sloping high-frequency hearing losses to participants with normal hearing and to past work using essentially the same methodology in persons with flat losses (Hornsby and Ricketts, 2003).

II. METHODS

A. Participants

A total of 20 participants, 10 with normal hearing (NH) and 10 with hearing loss, participated in this study. All participants with NH passed a pure-tone air conduction screening at 20 dB HL (250-8000 Hz; ANSI S3.6, 1996) and had no history of otologic pathology. Individuals in the NH group (one male, nine female) ranged in age from 22 to 47 years (mean 28.5).

Ten persons with sloping, high-frequency SNHL (HI group) also participated in this experiment. The average thresholds at 500 and 3000 Hz were 25.5 and 68.0 dB HL, respectively. HI participants ranged in age from 28 to 82 years old (mean 62.9 years). Specific demographic details of the HI participants are provided in Table I.

TABLE I.

Demographic characteristics of the participants with sloping hearing loss^a

| Subject | Sex | Age (years) | Length of HL (years) | HA use (years) | Binaural aids | Education | Cause of HL |
|---|---|---|---|---|---|---|---|
| DD | F | 68 | 20 | None | N | 15 | Presbycusis |
| JS | M | 68 | 50 | None | N | 12 | Unknown |
| ET | M | 82 | 12 | <1 | Y | 16 | Noise/Presbycusis |
| JT | F | 28 | 22 | 16 | Y | 19 | Congenital |
| BW | M | 58 | 25 | 1 | Y | 20 | Noise/Presbycusis |
| AC | F | 70 | 13 | 9 | Y | 18 | Presbycusis |
| LC | M | 67 | 20 | 15 | Y | 12 | Noise/Presbycusis |
| RC | M | 67 | 40 | 4 | Y | 16 | Unknown |
| MC | M | 65 | 14 | 2 | Y | 12 | Noise/Presbycusis |
| MH | M | 56 | 33 | 14 | Y | 16 | Noise/Unknown |
| Average | | 62.9 | 24.9 | 6.13 | | 15.6 | |

^aHL, hearing loss; HA, hearing aids.

Auditory thresholds were assessed at octave frequencies from 250 to 8000 Hz, as well as at the interoctave frequencies of 1500, 3000, and 6000 Hz. HI participants exhibited essentially symmetrical hearing loss (interaural difference ≤15 dB) and air-bone gaps ≤10 dB at all frequencies. Other than hearing loss, they reported no history or complaints of otologic pathology, surgery, or unilateral tinnitus. All testing following the initial threshold assessment was performed monaurally. For the HI group, the test ear was chosen by (1) limiting the loss at 500 and 4000 Hz to no more than 40 and 80 dB HL, respectively, and (2) choosing the ear with the steepest drop in thresholds between 500 and 4000 Hz. In contrast, the test ear was counterbalanced for the NH group. Table II lists the auditory thresholds of the test ear for each HI participant. Additional information provided in Table II is described later in the text.

TABLE II.

Auditory thresholds (in dB HL) and measured crossover frequencies (in Hz) for participants with sloping hearing loss. In addition, bolded thresholds mark potential dead regions, identified by a positive TEN test result using the less conservative criterion described by Moore et al. (2000). That criterion requires a masked threshold at least 10 dB above the quiet threshold and at least 10 dB above the level of the masking noise.

| Subject | Ear | Crossover frequency (Hz) | 250 Hz | 500 Hz | 1000 Hz | 1500 Hz | 2000 Hz | 3000 Hz | 4000 Hz | 6000 Hz | 8000 Hz |
|---|---|---|---|---|---|---|---|---|---|---|---|
| DD | L | 1223 | 15 | 25 | 25 | 45 | 60 | 60 | 65 | 60 | 60 |
| ET | R | 1451 | 30 | 25 | 35 | 60 | 70 | 65 | 65 | 70 | 65 |
| JS | L | 1033 | 20 | 15 | 25 | 30 | 60 | 70 | 75 | 85 | 90+ |
| JT | L | 1377 | 25 | 30 | 45 | 50 | 55 | 65 | 60 | 60 | 65 |
| RC | R | 1364 | 30 | 35 | 45 | 55 | 60 | 70 | 70 | 75 | 80 |
| MC | L | 1128 | 15 | 20 | 30 | 30 | 55 | 70 | 60 | 55 | 70 |
| AC | R | 1198 | 35 | 40 | 55 | 60 | 70 | 70 | 75 | 90 | 95 |
| LC | L | 1301 | 30 | 20 | 40 | 60 | 65 | 70 | 70 | 70 | 75 |
| BW | L | 866 | 20 | 20 | 40 | 45 | 50 | 65 | 80 | 90 | 90 |
| MH | L | 1131 | 15 | 25 | 55 | 65 | 65 | 75 | 80 | 90 | 90 |

B. Procedures

The procedures followed in this study essentially mirror those of our earlier study (Hornsby and Ricketts, 2003). Sentence recognition in noise, at various filter cutoff frequencies, was assessed for both the NH and HI groups. In addition, both groups completed threshold testing in a speech-shaped background noise and in the broadband “threshold equalizing noise” that accompanies the TEN test (Moore et al., 2000). Participants were compensated for their time on a per session basis.

1. Sentence recognition testing

Sentence recognition was assessed using the connected speech test (CST; Cox, Alexander, and Gilmore, 1987; Cox et al., 1988). The CST uses everyday connected speech as the test material and consists of 28 pairs of passages (24 test and four practice pairs). Two passage pairs were completed for each condition, and the score for each condition was the average of these two passage pairs (i.e., based on 100 key words). Sentence recognition was assessed at multiple low- and high-pass filter cutoff frequencies (12 filter conditions in total) to obtain performance versus filter cutoff frequency functions. These functions were used to derive the crossover frequency for each subject. As in our previous study, crossover frequencies were calculated. These are the filter cutoff frequencies at which the scores for low- and high-pass filtered speech are the same. They allow a comparison of the relative importance of low- and high-frequency information between groups. This provides a direct test of whether hearing loss results in a frequency-specific deficit in the contribution of speech information.

In all low-pass filter conditions, the high-pass filter cutoff frequency was fixed at 178 Hz. Likewise, in all high-pass filter conditions, the low-pass filter cutoff frequency was fixed at 7069 Hz. Both groups completed the following 10 filter conditions: low-pass 800, 1200, 1600, 2000, and 3150 Hz; high-pass 1600, 1200, and 800 Hz; wideband (178-7069 Hz); and band-pass (800-3150 Hz). Each subject was also tested in two additional filter conditions to better define that subject's performance versus filter cutoff frequency function. All testing was completed in two sessions, with at least one CST passage completed in each filter condition during each session.

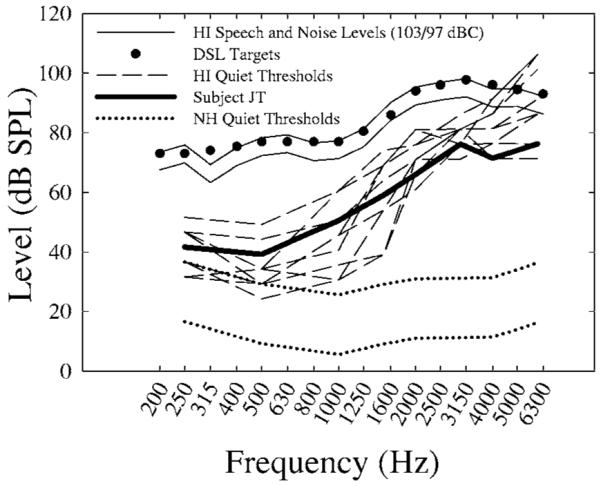

As in our previous study, the masking noise was a steady-state noise filtered to match the long-term spectral shape of the CST materials. The speech and noise were digitally mixed at a +6 dB SNR. This was intended to limit ceiling effects among the participants with normal hearing without causing floor effects for the participants with hearing loss. To improve audibility for the participants with hearing loss, the mixed speech and noise stimuli were spectrally shaped. Subjects in the NH group also listened to this same shaped speech (although generally at a lower level). The spectral shaping was designed to approximate desired sensation level targets (DSL v4.1 software, 1996) for conversational speech. The targets assumed linear amplification for a subject with a sloping high-frequency hearing loss that approximated the average loss of the subjects in this study. Shaping was verified by measuring the 1/3-octave rms levels of the masking noise (which spectrally matched the speech) in a Zwislocki coupler using a Larson-Davis 814 sound level meter (slow averaging, flat weighting). The rms difference between coupler outputs and DSL targets (250-6000 Hz) was 2.0 dB, with a maximum difference of -4.7 dB. Figure 1 shows the 1/3-octave rms levels (200-6300 Hz) of the CST talker after spectral shaping was applied, based on the entire corpus of CST test passages (tracks 32-55). It also shows the DSL targets (interpolated to 1/3-octave values) used in this study. Quiet thresholds for the HI subjects are also shown. These data show that, for a few HI participants, quiet thresholds partially limited audibility of high-frequency information (>4000 Hz). This is discussed in more detail below.
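The text does not say exactly how the 2.0 dB rms figure was computed. One common reading is the root-mean-square of the band-by-band differences between the measured coupler levels and the DSL targets. The "maximum difference" would then be the signed difference with the largest magnitude. The sketch below implements that reading. The function name, the measured-minus-target sign convention, and the example levels are all hypothetical.

```python
import math

def rms_and_max_deviation(measured_db, target_db):
    """Return (rms difference, signed difference of largest magnitude), in dB.

    measured_db and target_db are 1/3-octave levels at the same center
    frequencies (e.g., coupler output vs. DSL target, 250-6000 Hz).
    Assumed sign convention: difference = measured minus target.
    """
    if len(measured_db) != len(target_db) or not measured_db:
        raise ValueError("need two non-empty level lists of equal length")
    diffs = [m - t for m, t in zip(measured_db, target_db)]
    rms = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    largest = max(diffs, key=abs)  # keeps the sign, so a shortfall stays negative
    return rms, largest

# Hypothetical band levels (dB SPL); these are not data from this study.
measured = [70.0, 72.0, 75.0, 73.0]
target = [71.0, 72.0, 73.0, 76.0]
rms, largest = rms_and_max_deviation(measured, target)
```

With these made-up levels, the differences are -1, 0, 2, and -3 dB. The rms difference is therefore the square root of 3.5 (about 1.87 dB), and the largest deviation is -3 dB.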

FIG. 1.

1/3 octave band levels (200-6300 Hz) of CST stimuli and DSL targets (filled circles) used in this study. The thin solid lines represent the 1/3 octave band levels of the speech and noise (presented at overall levels of 103 and 97 dBC measured in a Zwislocki coupler). Dotted lines represent a range of thresholds (in dB SPL in a Zwislocki coupler) for our NH control group. These correspond to quiet thresholds of 0 (normal) and 20 dB HL (our screening level for the NH control group). Dashed lines represent quiet thresholds for HI subjects listening to the speech at 103 dBC. The dark solid line represents threshold for HI subject JT, who listened to the speech and noise at a level of 94 and 88 dBC, respectively.

The filtered speech and noise were low-pass filtered (10 kHz), amplified, and routed to an ER3 insert earphone (Etymotic Research). Levels were calibrated in the wideband condition (178-7069 Hz) in a Zwislocki coupler, and no level corrections were applied in the various filter conditions. Output levels were measured in a Zwislocki coupler using a Larson-Davis 814 sound level meter (C-weighting, slow averaging).

As in our previous experiment, output levels for the wideband speech were 95 dB SPL for the NH participants. This level was selected in an attempt to minimize differences in presentation levels between the NH and HI participants without exceeding loudness discomfort levels of the NH participants. All NH participants in this experiment reported that the 95 dB SPL presentation level was loud, but not uncomfortable. Wideband levels for HI participants varied depending on individual loudness comfort levels. Nine participants preferred an overall speech level of 103 dB SPL while one participant preferred a lower speech level of 94 dB SPL.

2. Threshold assessment in noise

Monaural masked thresholds, in the same ear used for speech testing, were determined at the octave frequencies of 250-4000 Hz, as well as at the interoctave frequencies of 1500, 3000, and 6000 Hz. A clinical method of threshold assessment (ASHA, 1978) was used. However, step sizes were reduced (4 dB down, 2 dB up) to improve threshold estimation accuracy. The background noise used during threshold testing was the same noise used during speech testing and was on continuously during threshold assessment. An attempt was made to measure masked thresholds at the same overall masker level as was used during speech testing. Levels, however, varied slightly depending on the loudness tolerance of individual participants. Overall levels of the masking noise were measured in a Zwislocki coupler using a Larson-Davis 814 sound level meter (C-weighting, slow averaging). They ranged from 79 to 89 dB SPL for the NH participants and from 86 to 97 dB SPL for the HI participants.

3. Diagnosis of dead regions

Cochlear integrity was assessed only for the HI group, using the CD version of the “TEN test” (Moore et al., 2000). Pure-tone thresholds for each subject with hearing loss were measured monaurally (same ear as speech testing), both in quiet and in the presence of the “threshold equalizing noise.” Thresholds were measured at octave frequencies of 250-4000 Hz and at interoctave frequencies of 1500, 3000, and 6000 Hz. TEN levels varied from 75 to 85 dB/ERB, depending on loudness discomfort (overall levels of 91.5-101.5 dB SPL in a Zwislocki coupler). Moore (2001) suggested the following criterion to define the presence and extent of a dead region. A dead region is identified if (1) the masked threshold is at least 10 dB above the absolute threshold in quiet and (2) the masked threshold is at least 10 dB above the noise level per ERB.

Recent work by Summers et al. (2003), however, questions the specificity of the TEN test, at least when Moore's original criterion is used to identify dead regions. Summers and colleagues examined the identification of dead regions in persons with sloping high-frequency hearing losses using both the TEN test and psychophysical tuning curves (PTCs). They reported relatively poor agreement between TEN test and PTC results when using the criterion proposed by Moore (2001). Agreement improved substantially, however, when the criterion was raised. The original criterion required a masked threshold at least 10 dB above the level of the masking noise; the revised one required at least 14 dB. Therefore, a more conservative criterion (masked threshold at least 15 dB above the level of the masking noise) was used to identify suspected dead regions among subjects in this study. A level of 15 dB, rather than 14 dB, was chosen because TEN thresholds were measured with a 5 dB step size, as recommended by Moore (2001).
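The two-part criterion above, with an adjustable margin above the noise, can be written as a small predicate. This is a minimal sketch. The function and constant names are hypothetical, and it assumes that the quiet threshold, masked threshold, and TEN level per ERB are all expressed on the same level scale as the TEN test. A margin of 10 dB reproduces Moore's (2001) original criterion, and 15 dB gives the conservative criterion used in this study.

```python
def suspected_dead_region(quiet_db, masked_db, ten_db_per_erb, noise_margin_db=10.0):
    """Return True if one test frequency meets the TEN-test dead-region criterion.

    quiet_db       -- absolute threshold in quiet
    masked_db      -- threshold measured in the TEN
    ten_db_per_erb -- TEN level per ERB
    All three are assumed to be on the same level scale.
    """
    above_quiet = masked_db - quiet_db >= 10.0                   # condition (1)
    above_noise = masked_db - ten_db_per_erb >= noise_margin_db  # condition (2)
    return above_quiet and above_noise

MOORE_2001_MARGIN_DB = 10.0    # original criterion (Moore, 2001)
CONSERVATIVE_MARGIN_DB = 15.0  # stricter criterion adopted in this study

# Hypothetical example: quiet threshold 60, masked threshold 82, TEN at 70 dB/ERB.
liberal = suspected_dead_region(60, 82, 70, MOORE_2001_MARGIN_DB)
conservative = suspected_dead_region(60, 82, 70, CONSERVATIVE_MARGIN_DB)
```

In this made-up case, the masked threshold is 22 dB above quiet but only 12 dB above the TEN level. The frequency therefore counts as a suspected dead region under the original criterion but not under the conservative one. This mirrors how the two criteria can disagree in the results below.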

III. RESULTS AND ANALYSIS

A. Thresholds in speech noise

As seen in Fig. 1, HI quiet thresholds may have limited high-frequency audibility in some cases. Masked thresholds were therefore compared to thresholds in quiet. This comparison confirmed that, for the most part, the masking noise rather than quiet thresholds determined audibility differences between groups. Results showed that the masking noise shifted quiet thresholds for the NH and HI groups by at least 4 dB at all frequencies tested except 6000 Hz (five subjects showed shifts ≥12 dB). At 6000 Hz, however, the masking noise was not intense enough to shift quiet thresholds by 4 dB for five of the 10 HI participants. For these HI participants, the auditory threshold at 6000 Hz, rather than the masking noise, dictated audibility in noise.

In addition, differences in the “effective masking” of the background noise between the NH and HI groups were evaluated. The noise level (rms level in a 1/3-octave band) was subtracted from the level of the pure tone at threshold. All measures were made in a Zwislocki coupler. This method gives a between-group comparison of the SNR required for detection in noise, similar to the critical ratio measure. It is included here to allow comparison with data reported in the same fashion in our previous study (Hornsby and Ricketts, 2003). Described in this fashion, a more negative SNR is better, because it indicates that a lower signal level is required to detect the signal in noise (i.e., hearing in noise is better). A large positive value has two possible meanings. Either the listener needed a relatively intense tone to detect it in the noise, or the masking noise was not intense enough to shift the quiet threshold. Table III shows the average results for the NH and HI groups, as well as individual results for the HI participants, for the frequencies of 250-6000 Hz. At 6000 Hz, some HI subjects had quiet thresholds so poor that the masking noise did not shift them. These subjects are shown bolded and with asterisks.

TABLE III.

Average SNRs at threshold as a function of frequency for the NH and HI groups, as well as individual SNRs for participants with sloping hearing loss. Bolded values with asterisks at 6000 Hz represent HI subjects whose quiet thresholds were not shifted by the presence of the masking noise.

| Group/subject | 250 Hz | 500 Hz | 1000 Hz | 1500 Hz | 2000 Hz | 3000 Hz | 4000 Hz | 6000 Hz | Average |
|---|---|---|---|---|---|---|---|---|---|
| NH | -2.6 | -2.8 | -3.7 | -9.3 | -5.4 | -4.3 | -3.4 | 0.5 | -3.9 |
| HI participants | | | | | | | | | |
| DD | 5.2 | 3.7 | 5.5 | -1.8 | 2.0 | 3.7 | 5.8 | 5.6 | 3.4 |
| JS | 3.2 | 3.7 | -0.5 | -5.8 | 0.0 | -0.3 | 3.8 | 21.6 | 0.6 |
| ET | 0.2 | 0.7 | 0.5 | 1.2 | 1.0 | 0.7 | 2.8 | 2.6* | 1.0 |
| JT | 4.2 | 0.7 | 4.5 | 1.2 | 1.0 | -1.3 | -3.2 | -1.4* | 1.0 |
| BW | 10.2 | 0.7 | 0.5 | -4.8 | 1.0 | 0.7 | 16.8 | 30.6 | 3.6 |
| MC | 9.2 | 3.7 | 5.5 | -5.8 | 0.0 | -0.3 | 3.8 | 1.6 | 2.3 |
| RC | 1.2 | -0.3 | -2.5 | -5.8 | -6.0 | -4.3 | -2.2 | 1.6* | -2.8 |
| LC | 13.2 | 7.7 | 5.5 | 2.2 | 2.0 | 1.7 | 5.8 | 5.6 | 5.4 |
| AC | 5.2 | 3.7 | 3.5 | -3.8 | 2.0 | -2.3 | 5.8 | 21.6* | 2.0 |
| MH | 1.2 | -0.3 | 3.5 | -1.8 | 0.0 | 1.7 | 7.8 | 21.6* | 1.7 |
| HI average | 5.3 | 2.4 | 2.6 | -2.5 | 0.3 | 0.0 | 4.7 | | 1.8 |

The data reveal that the average SNRs for the HI group were substantially elevated compared to those of the NH group. When averaged across frequency (250-4000 Hz), the SNR at threshold for the NH participants (-4.5 dB) was 6.3 dB better than that of the HI participants (1.8 dB). Results from 6000 Hz were not included in these averages because, for several HI subjects, quiet thresholds rather than the masking noise determined the SNR. This difference is comparable to that seen in our previous study (a 5.4 dB difference between groups). In addition, SNRs for the HI group were elevated relative to the NH group even in the 250-500 Hz region. In this region, quiet thresholds for most HI participants were near normal (≤25 dB HL) or no poorer than those associated with a mild hearing loss (≤40 dB HL).
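The averages quoted above can be checked directly against the group rows of Table III. The sketch below averages the 250-4000 Hz values and leaves out 6000 Hz, as the text does. Because every HI subject has data at every frequency, averaging the HI group means across frequency is equivalent to averaging the subjects' individual averages. The variable names are illustrative only. The final line also shows that adding the NH 6000 Hz value (0.5 dB) gives -3.9 dB, the value that appears in the NH row's Average column.

```python
# Frequency-specific SNRs at threshold (dB), 250-4000 Hz, from Table III.
NH_SNR = [-2.6, -2.8, -3.7, -9.3, -5.4, -4.3, -3.4]
HI_MEAN_SNR = [5.3, 2.4, 2.6, -2.5, 0.3, 0.0, 4.7]
NH_SNR_6000 = 0.5  # excluded from the 250-4000 Hz averages in the text

def mean(values):
    """Arithmetic mean of a non-empty list."""
    return sum(values) / len(values)

nh_avg = mean(NH_SNR)               # about -4.5 dB
hi_avg = mean(HI_MEAN_SNR)          # about 1.8 dB
group_difference = hi_avg - nh_avg  # about 6.3 dB, in favor of the NH group
nh_avg_with_6000 = mean(NH_SNR + [NH_SNR_6000])  # about -3.9 dB
```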

B. Thresholds in TEN

Any broadband background noise (e.g., the speech noise described above) may be used to identify suspected dead regions. However, the TEN test (Moore et al., 2000; Moore, 2001) was specifically designed to allow relatively quick identification and quantification of suspected dead regions, so cochlear integrity was assessed using the TEN. The more conservative criterion required a masked threshold at least 10 dB above the quiet threshold and at least 15 dB above the level of the masker. Under this criterion, none of our HI subjects showed a positive result at any test frequency. The less conservative criterion proposed by Moore requires a masked threshold only 10 dB above the level of the masking noise. Under this criterion, six of the 10 HI subjects had a positive result at one or more test frequencies. Threshold frequencies showing a positive result on the TEN test (using the less conservative criterion) are shown in bold in Table II. Of the six HI participants showing positive TEN results, only four showed a positive result at more than one test frequency. In addition, several positive results were observed in frequency regions with good hearing. These findings suggest that large-scale dead regions were not present in our study subjects.

C. Derivation and examination of crossover frequencies

The method for deriving crossover frequencies is described in detail in our previous paper (Hornsby and Ricketts, 2003). Briefly, two functions were generated for each participant. Each function consisted of average sentence recognition scores (in proportion correct) plotted as a function of the log of the low-pass or high-pass filter cutoff frequency. Nonlinear regression with a three-parameter sigmoid function (SigmaPlot v. 5.0; SPSS, Inc.) was then used to fit each data set. These fitted functions were used to find the filter cutoff frequency at which the predicted low-pass and high-pass scores were the same (i.e., the crossover frequency). For the NH participants, the sigmoid functions fit the measured data well, with r² values ranging from 0.91 to 0.99. The average score at the crossover frequency for the NH group was 50%. The sigmoid function also provided a good fit to the HI data, with r² values again ranging from 0.91 to 0.99. The average score at the crossover frequency, however, was lower for the HI group (28%) than for the NH group.
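The crossover step can be sketched once the two sigmoids have been fitted. The sketch assumes that SigmaPlot's three-parameter sigmoid has the form y = a / (1 + exp(-(x - x0)/b)), with x = log10(cutoff in Hz). It finds the point where the fitted low-pass and high-pass curves are equal by bisection over the 178-7069 Hz range used in this study. The fitting itself is not shown; a routine such as SciPy's `scipy.optimize.curve_fit` could supply the parameters. The parameter values below are hypothetical, not fits from this study.

```python
import math

def sigmoid3(x, a, x0, b):
    """Three-parameter sigmoid, y = a / (1 + exp(-(x - x0) / b))."""
    return a / (1.0 + math.exp(-(x - x0) / b))

def crossover_frequency(lp, hp, f_lo=178.0, f_hi=7069.0, tol=1e-9):
    """Return the cutoff (Hz) at which the fitted LP and HP curves predict equal scores.

    lp and hp are (a, x0, b) tuples fitted against log10(cutoff in Hz).
    b > 0 gives the rising low-pass curve; b < 0 gives the falling high-pass curve.
    """
    def diff(x):
        return sigmoid3(x, *lp) - sigmoid3(x, *hp)

    lo, hi = math.log10(f_lo), math.log10(f_hi)
    if diff(lo) * diff(hi) > 0:
        raise ValueError("curves do not cross within the search range")
    while hi - lo > tol:  # bisection on log frequency
        mid = 0.5 * (lo + hi)
        if diff(lo) * diff(mid) <= 0:
            hi = mid
        else:
            lo = mid
    return 10 ** (0.5 * (lo + hi))

# Hypothetical fitted parameters (proportion correct vs. log10 Hz).
lp_fit = (0.95, math.log10(1300.0), 0.12)
hp_fit = (0.95, math.log10(1500.0), -0.12)
fc = crossover_frequency(lp_fit, hp_fit)
score_at_fc = sigmoid3(math.log10(fc), *lp_fit)
```

With these symmetric made-up fits, the curves cross at the geometric mean of the two midpoints, sqrt(1300 × 1500) ≈ 1396 Hz. The predicted score there is about 0.54. In real data, the asymptotes and slopes of the two curves generally differ, which is why a numerical search is used.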

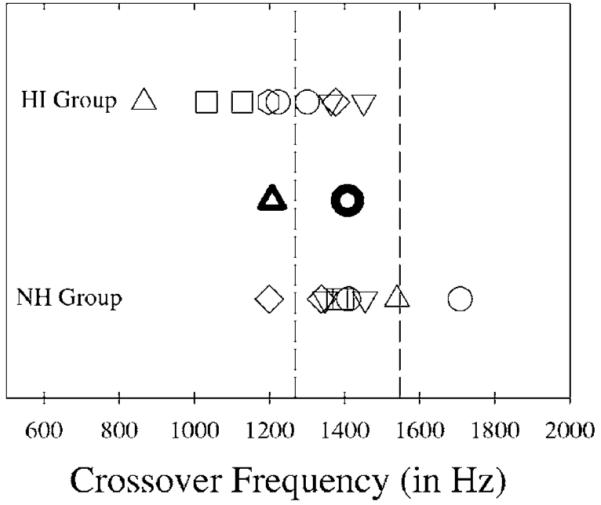

A single-factor between-subjects ANOVA was used to examine differences in crossover frequencies between groups. Significance was defined as p < 0.05 for this and all other statistical analyses reported in this paper. The independent and dependent variables were subject group and crossover frequency (in Hz), respectively. Figure 2 shows the mean crossover frequencies for the NH and HI groups as well as individual crossover frequencies for each HI and NH participant.

FIG. 2.

Crossover frequency data for NH and HI subjects. The dark circle and triangle represent the average crossover frequency for the NH and HI groups, respectively. The remaining symbols represent individual crossover frequencies for the NH (lower) and HI (upper) groups. Dashed lines show plus or minus 1 standard deviation around the NH mean crossover frequency.

In contrast to our previous study of subjects with flat losses (Hornsby and Ricketts, 2003), the ANOVA showed that crossover frequencies were significantly lower for the HI (1207 Hz) than for the NH (1408 Hz) participants (F(1,18) = 7.96, p < 0.05). Although the average crossover frequencies differed significantly between the NH and HI groups, there was considerable overlap between groups. Five of the 10 HI participants had crossover frequencies within the range of the NH subjects. It would be inappropriate to draw conclusions about the utility of high-frequency information for persons with hearing loss from these ANOVA results alone. It is not clear whether the lower crossover frequency of the HI participants is due simply to reduced audibility. These analyses do, however, provide a starting point for examining that question.
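For two groups of 10 subjects, a single-factor between-subjects ANOVA has 1 and 18 degrees of freedom. At alpha = 0.05, the critical F for these degrees of freedom is about 4.41, so the reported F of 7.96 exceeds it. The individual NH crossover frequencies appear only in Fig. 2, so the reported F cannot be recomputed here. The sketch below shows the F computation itself and checks it on made-up numbers. The HI crossover frequencies from Table II are used only to confirm the 1207 Hz group mean. The function name is hypothetical.

```python
def one_way_anova_f(*groups):
    """Return (F, df_between, df_within) for a single-factor between-subjects ANOVA."""
    means = [sum(g) / len(g) for g in groups]
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    # Between-groups sum of squares: group size times squared deviation of the group mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-groups sum of squares: deviation of each score from its own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# HI crossover frequencies (Hz), copied from Table II.
hi_crossovers = [1223, 1451, 1033, 1377, 1364, 1128, 1198, 1301, 866, 1131]
hi_mean = sum(hi_crossovers) / len(hi_crossovers)  # 1207.2 Hz

# Toy check with made-up numbers (not study data).
f_toy, df_b, df_w = one_way_anova_f([1.0, 2.0, 3.0], [4.0, 5.0, 6.0])
```

With only two groups, this F equals the square of the pooled-variance independent-samples t statistic. Either test therefore leads to the same conclusion here.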

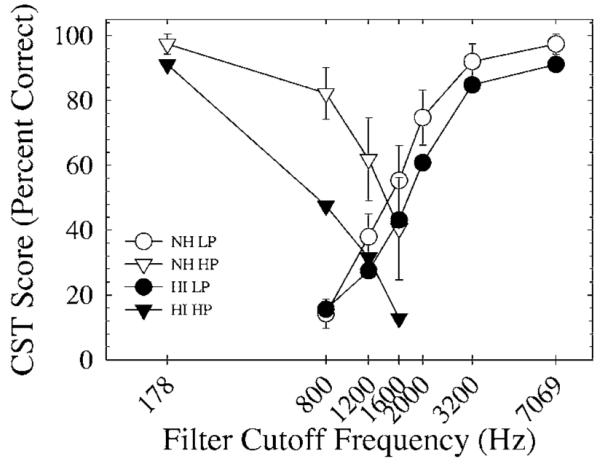

Figure 3 shows the average speech understanding data for the NH and HI subjects as a function of LP and HP filter condition. These data help clarify why the HI subjects had a lower crossover frequency. Relative to the NH control group, the speech understanding of the HI subjects was reduced substantially more in the HP than in the LP conditions. Thus the lower-than-normal average crossover frequency seen in the sloping HI group appears to be due primarily to the reduced performance of the HI subjects in the HP conditions.

FIG. 3.

Average CST scores for NH and HI participants as a function of low- and high-pass filter cutoff frequency. The filled and unfilled symbols show scores for the HI and NH participants, respectively. The circles and triangles represent scores for low- and high-pass filtering, respectively. Error bars show 1 standard deviation around the NH means.

D. Speech intelligibility index (SII) calculations

Results from the crossover frequency data suggest that the NH and HI groups differed in their ability to make use of amplified speech information and that this difference varied with frequency. However, quiet thresholds limited high-frequency audibility for some HI subjects, and thresholds in noise varied substantially between the NH and HI participants (see Fig. 1 and Table III). It therefore remains unclear whether the observed differences in speech understanding were due to residual differences in audibility or to other factors (such as the presence or configuration of hearing loss) that affect the ability to use amplified speech information. Given that our subjects had high-frequency hearing loss, reduced audibility of high-frequency speech sounds could provide a simple explanation for the lower-than-normal crossover frequencies observed in the HI group. To examine this question, 1/3-octave-band SII calculations (ANSI S3.5, 1997) were performed to generate SII values and predicted scores in each filter condition for each subject. Quiet thresholds were interpolated or extrapolated to match the 1/3-octave center frequencies used in the SII calculations. These thresholds, along with the levels of the speech and noise stimuli, were used to determine the proportion of audible speech information in each 1/3-octave band. The frequency importance function derived specifically for the CST materials was used (Sherbecoe and Studebaker, 2002). In addition, the effective speech peaks for the CST proposed by Sherbecoe and Studebaker (2002) were used, rather than the 15 dB peaks assumed in the ANSI standard. The corrections for high presentation levels and spread of masking proposed in the ANSI standard were also included in the calculations. Given our interest in potential differences in performance between the HI and NH groups, however, no correction for hearing loss desensitization was applied.
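The per-band audibility logic described above can be sketched as follows. This is a simplified version of the ANSI S3.5-1997 procedure that omits spread of masking and assumes the standard's default 15 dB speech peaks. The study instead used CST-specific peaks and the CST importance function, neither of which is reproduced here. All function names, band counts, and levels are hypothetical.

```python
def level_distortion_factor(speech_db, standard_speech_db):
    """ANSI S3.5-style level distortion factor L_i = 1 - (E'_i - U_i - 10) / 160, capped at 1."""
    return min(1.0, 1.0 - (speech_db - standard_speech_db - 10.0) / 160.0)


def band_audibility(speech_db, disturbance_db, level_distortion=1.0):
    """Band audibility A_i = L_i * K_i, with K_i = (E'_i - D'_i + 15) / 30 clipped to [0, 1].

    Assumes the default 15 dB speech peaks, not the CST-specific peaks.
    """
    k = (speech_db - disturbance_db + 15.0) / 30.0
    return level_distortion * min(1.0, max(0.0, k))


def sii(importance, speech_db, noise_db, internal_noise_db, standard_speech_db=None):
    """Importance-weighted sum of band audibilities (spread of masking omitted).

    internal_noise_db is the equivalent internal noise per band, i.e., the
    reference internal noise level plus the listener's hearing threshold level.
    The disturbance in each band is the larger of the external and internal noise.
    """
    total = 0.0
    for i, weight in enumerate(importance):
        disturbance = max(noise_db[i], internal_noise_db[i])
        ldf = 1.0
        if standard_speech_db is not None:
            ldf = level_distortion_factor(speech_db[i], standard_speech_db[i])
        total += weight * band_audibility(speech_db[i], disturbance, ldf)
    return total


# Hypothetical 3-band example: +6 dB SNR per band, with elevated internal noise
# (hearing loss) in the highest band, which then limits that band's audibility.
print(sii([0.3, 0.4, 0.3], [50.0, 45.0, 40.0], [44.0, 39.0, 34.0], [10.0, 20.0, 40.0]))
```

In the highest band of the example, the internal (threshold-based) noise rather than the external noise sets the disturbance level. This is how high-frequency hearing loss lowers audibility, and therefore the SII, even at a fixed SNR.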

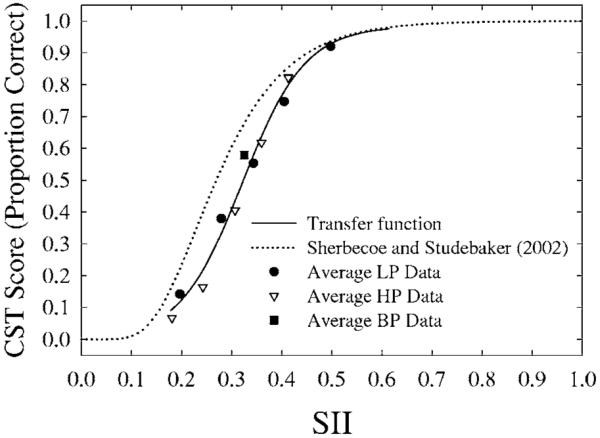

1. SII results for the NH group

Average CST scores for the NH group as a function of SII are shown in Fig. 4. Each symbol represents the average score of the NH subjects in one of the low-, high-, or band-pass filter conditions previously described. The solid line in Fig. 4 shows the transfer function relating the SII to CST performance. As in our earlier study, the transfer function was derived using nonlinear regression with a three-parameter sigmoid function (SPSS, Inc.: SigmaPlot V. 5.0) to provide a best fit to the data. For comparison, we have also included the transfer function derived by Sherbecoe and Studebaker (2002) for the CST materials (dotted line). In general, our transfer function required a higher SII for a fixed level of performance (except at high SII values). Given the procedural differences between the two studies, and the fact that our own transfer function provided a better fit to our data, we chose to use our own function.
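The transfer-function fit can be illustrated with a short sketch. It assumes the common three-parameter logistic form f(x) = a / (1 + exp(-(x - x0) / b)). The exact SigmaPlot parameterization and fitting options used in the study are not stated here, so treat this form as an assumption. The fit uses a hand-written damped Gauss-Newton (Levenberg-Marquardt style) loop so that it needs only the standard library. `scipy.optimize.curve_fit` would be a typical alternative. All names and data are hypothetical.

```python
import math


def _logistic(z):
    """Numerically stable 1 / (1 + exp(-z))."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def sigmoid3(x, a, x0, b):
    """Three-parameter sigmoid a / (1 + exp(-(x - x0) / b))."""
    return a * _logistic((x - x0) / b)


def _solve3(m, v):
    """Solve the 3x3 system m @ d = v by Gaussian elimination with partial pivoting."""
    aug = [list(row) + [v[i]] for i, row in enumerate(m)]
    for col in range(3):
        piv = max(range(col, 3), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, 3):
            f = aug[r][col] / aug[col][col]
            for c in range(col, 4):
                aug[r][c] -= f * aug[col][c]
    d = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):
        d[r] = (aug[r][3] - sum(aug[r][c] * d[c] for c in range(r + 1, 3))) / aug[r][r]
    return d


def fit_sigmoid3(xs, ys, p0, max_iter=200):
    """Least-squares fit of sigmoid3 by damped Gauss-Newton; p0 = (a, x0, b), b != 0."""
    p = [float(q) for q in p0]
    lam = 1e-3

    def sse(q):
        if q[2] == 0:
            return math.inf
        return sum((y - sigmoid3(x, *q)) ** 2 for x, y in zip(xs, ys))

    cur = sse(p)
    for _ in range(max_iter):
        a, x0, b = p
        jtj = [[0.0] * 3 for _ in range(3)]
        jtr = [0.0] * 3
        for x, y in zip(xs, ys):
            s = _logistic((x - x0) / b)
            ds = s * (1.0 - s)
            # Partial derivatives of the model with respect to a, x0, and b.
            grad = (s, -a * ds / b, -a * ds * (x - x0) / b ** 2)
            r = y - a * s
            for i in range(3):
                jtr[i] += grad[i] * r
                for j in range(3):
                    jtj[i][j] += grad[i] * grad[j]
        for i in range(3):
            jtj[i][i] *= 1.0 + lam  # Marquardt damping on the diagonal
        trial = [pi + di for pi, di in zip(p, _solve3(jtj, jtr))]
        new = sse(trial)
        if new < cur:
            p, cur, lam = trial, new, lam * 0.3  # accept step, trust the model more
        else:
            lam *= 10.0  # reject step, move toward gradient descent
        if cur < 1e-12 or lam > 1e12:
            break
    return tuple(p)


# Hypothetical usage: recover known parameters from noise-free synthetic data.
xs = [i / 20 for i in range(1, 20)]
ys = [sigmoid3(x, 95.0, 0.3, 0.07) for x in xs]
print(fit_sigmoid3(xs, ys, p0=(90.0, 0.35, 0.1)))
```

In this form, a is the upper asymptote (maximum score), x0 is the SII at which the score reaches half of a, and b controls the slope.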

FIG. 4.

Transfer function relating SII values to CST performance. This function was derived using data from our NH participants. Average CST scores as a function of SII for our NH group for low-, high-, and band-pass conditions are also shown. For comparative purposes we have also included the transfer function derived by Sherbecoe and Studebaker (2002) for the CST materials (shown by the dotted line).

2. SII predictions of HI crossover frequency data

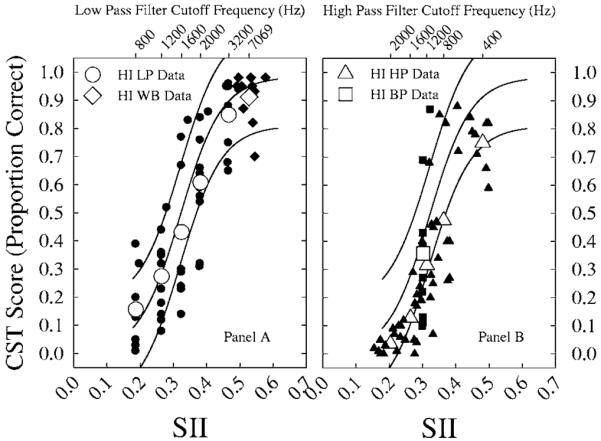

Utilizing the transfer function derived from the NH data, predictions of individual HI performance based on each person’s SII were obtained. These “SII predicted scores” were then compared to actual performance across the various filter conditions. Figure 5 shows the average and individual HI scores as a function of SII and filter cutoff frequency for the low-pass, high-pass, band-pass and wideband conditions.
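Once an NH-derived transfer function is available, obtaining "SII predicted scores" reduces to evaluating that function at each listener's SII. The result can then be compared with measured scores. The sketch assumes the same three-parameter logistic form as above. The parameter values and scores in the example are hypothetical and are not the fitted values from this study.

```python
import math


def predicted_score(sii_value, a, x0, b):
    """Predicted score (percent) from an SII value via a 3-parameter sigmoid transfer function."""
    return a / (1.0 + math.exp(-(sii_value - x0) / b))


def prediction_errors(measured, sii_values, params):
    """Measured minus SII-predicted score per condition (negative values = overprediction)."""
    return [m - predicted_score(s, *params) for m, s in zip(measured, sii_values)]


# Hypothetical transfer-function parameters (a, x0, b) and HI data, for illustration only.
params = (100.0, 0.35, 0.08)
print(prediction_errors([40.0, 85.0], [0.35, 0.60], params))
```

A consistent pattern of negative errors in conditions that include the frequency region of greatest hearing loss would correspond to the overprediction described in the text.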

FIG. 5.

Average and individual HI CST performance as a function of SII and filter condition. Performance in the low-pass (circles) and wideband (diamonds) conditions is shown in panel A. Band-pass (800-3150 Hz; squares) and high-pass (triangles) performance is shown in panel B. Average and individual performance are shown by the open and filled symbols, respectively.

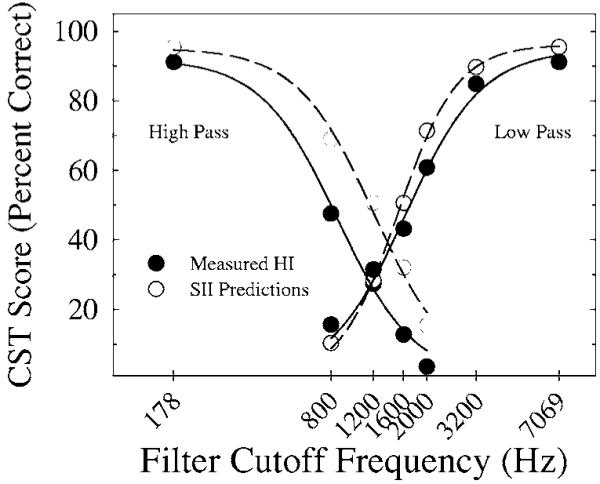

In addition, SII predicted scores for each HI person were used to derive an “SII predicted crossover frequency” for each subject (as described in Sec. III C above). Figure 6 shows the average predicted and measured results for the HI subjects in several LP and HP conditions and the best fit regression functions to the average data.

FIG. 6.

Average SII predicted and measured CST performance for the HI group in several low- and high-pass filter conditions and the best fit regression functions to the average data. SII predicted and measured performance is shown by the open and filled circles, respectively.

The data in Figs. 5 and 6 show that, in general, the SII overpredicted the performance of our HI participants, except in conditions where speech information was limited to frequencies below ∼1200 Hz (where hearing loss, if present, was less severe). This discrepancy was largest for data obtained in the HP conditions, resulting in a lower-than-predicted crossover frequency (Fig. 6). An analysis of the individual crossover frequencies derived from the SII predicted scores confirmed this. A paired-sample t-test showed that the average crossover frequency based on SII predicted scores for the HI participants (1334.5 Hz) was significantly higher (t = -2.897, p < 0.05) than the average crossover frequency based on actual HI scores (1207 Hz). It was not significantly different (t = 1.699, p = 0.124) from the average measured crossover frequency of the participants without hearing loss (1407 Hz).
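A paired-sample t-test operates on within-subject differences, here measured minus SII predicted crossover frequency for each HI listener. The sketch below computes only the t statistic and degrees of freedom with the standard library. A p-value would additionally require the t distribution (e.g., `scipy.stats.ttest_rel`). The example values are hypothetical.

```python
import math
from statistics import mean, stdev


def paired_t(x, y):
    """Paired-sample t statistic and degrees of freedom for the differences x - y."""
    d = [a - b for a, b in zip(x, y)]
    n = len(d)
    t = mean(d) / (stdev(d) / math.sqrt(n))  # stdev uses the n - 1 denominator
    return t, n - 1


# Hypothetical measured vs. predicted crossover frequencies (Hz) for four listeners.
measured = [1150.0, 1250.0, 1180.0, 1240.0]
predicted = [1300.0, 1340.0, 1320.0, 1350.0]
print(paired_t(measured, predicted))
```

With differences ordered as measured minus predicted, a negative t indicates that predicted crossover frequencies exceed measured ones.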

This finding suggests that the lower crossover frequencies observed in the measured data from our HI participants were not due solely to reduced audibility. Consistent with the earlier comparison to the NH measured data, the lower crossover frequencies of our HI participants appear to be due, at least in part, to poorer-than-normal use of amplified HF information. A primary question of interest, however, is whether this deficit is substantially larger than the deficit resulting from a comparable low-frequency hearing loss.

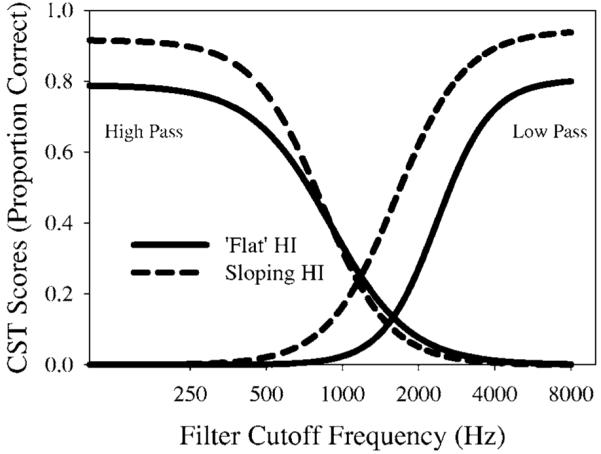

To explore this question, we compared the average regression function for our sloping HI subjects to that derived in our previous work (Hornsby and Ricketts, 2003), which used subjects with a flat hearing loss and essentially equivalent high-frequency thresholds. Figure 7 shows the average regression functions based on actual measured performance in low- and high-pass filter conditions (i.e., not based on SII predicted scores) for the sloping and flat HI groups. Except in the widest bandwidth conditions, the regression functions for the high-pass data essentially overlap for the flat and sloping groups. In contrast, the regression function for the low-pass data shows consistently poorer performance for the flat HI group. As a result, the crossover frequency is lower for the sloping HI group than for the flat group. This difference in crossover frequencies, however, is not due to differences in the ability to use high-frequency information. Rather, the higher crossover frequency observed for the flat hearing loss group results from poorer use of low-frequency information. This finding is consistent with the suggestion that hearing loss results in a “uniform” rather than frequency-specific deficit in speech understanding.

FIG. 7.

Average regression functions based on actual measured performance in low- and high-pass filter conditions (i.e., not based on SII predicted scores) of the sloping and flat HI groups. Regression functions for the sloping and flat groups are shown by the dashed and solid lines, respectively.

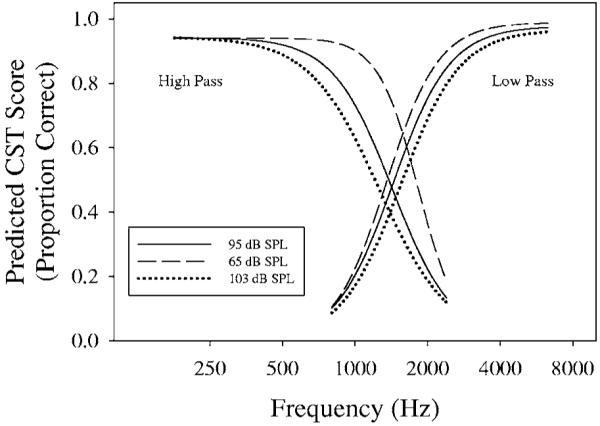

3. Effect of presentation level on crossover frequencies

One factor that is well known to negatively affect speech recognition performance is high presentation levels (e.g., French and Steinberg, 1947; Studebaker et al., 1999; Dubno, Horwitz, and Ahlstrom, 2005a, 2005b; Hornsby, Trine, and Ohde, 2005). Given that the speech and noise materials were presented with a high-frequency emphasis in this study (i.e., at a higher-than-normal level) it is possible that the lower-than-predicted crossover frequency observed in our HI participants was due, at least in part, to the higher-than-normal presentation levels of the high-frequency speech. Although we attempted to minimize differences in presentation levels to our NH and HI participants, to limit loudness discomfort our NH participants generally listened at a lower level than our HI participants (95 versus 103 dB SPL).

The SII includes a correction for the negative effects of high presentation levels and thus allows us to estimate the effect of high presentation levels on crossover frequency. To do so, we compared predicted crossover frequencies for our NH participants assuming presentation levels of 65, 95, and 103 dB SPL. The regression functions derived from the predicted scores at each presentation level are shown in Fig. 8.

FIG. 8.

Effect of presentation level on crossover frequency. The regression functions shown were derived from fits to SII predicted scores for the CST in multiple low- and high-pass filter conditions. Predictions assume the speech and noise were shaped as in the current study for sloping high-frequency hearing loss and presented at the same SNR as used in this study (+6 dB SNR). The functions were derived assuming speech presentation levels of 65, 95, and 103 dB SPL (measured in a Zwislocki coupler), shown by the dotted, dashed, and solid lines, respectively.

The data in Fig. 8 show that predicted crossover frequencies shifted systematically lower with each increase in presentation level. The lowering was due to the more rapid decline in performance in the high-pass than in the low-pass conditions. The largest shift occurred when the level increased from 65 to 95 dB SPL, with the predicted crossover frequency falling from 1578 Hz to 1407 Hz. Increasing the level to 103 dB SPL resulted in only a slight additional lowering of the crossover frequency, to 1396 Hz. Consistent with past research (e.g., Hogan and Turner, 1998), these findings suggest that spectral shaping appropriate for persons with high-frequency hearing loss produces high presentation levels that limit the utility of amplified high-frequency speech information. To confirm that the effect was due to the level distortion factor within the ANSI standard, and not to other factors also included in the calculation such as spread of masking, we also derived predicted crossover frequencies without the level distortion factor. Without it, predicted crossover frequencies shifted only minimally lower (from 1578 Hz to 1559 Hz) as levels increased from 65 to 103 dB SPL. Likewise, when shaping appropriate for a “flat” hearing loss, as in our previous study, was applied, crossover frequencies were only minimally affected (i.e., lowered from 1593 Hz to 1536 Hz).
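The size of the ANSI level distortion factor at the three presentation levels can be illustrated with a small calculation. This sketch rests on two assumptions. First, the speech spectrum keeps the standard normal-vocal-effort shape, so every band is raised by the same amount. The study's speech was instead shaped with a high-frequency emphasis, so real per-band values differ. Second, 62.35 dB SPL is taken as the overall level of the standard normal-effort spectrum. The constant and function names are hypothetical.

```python
# Assumed overall level (dB SPL) of the standard normal-vocal-effort speech spectrum.
NORMAL_EFFORT_OVERALL_DB_SPL = 62.35


def level_distortion(presentation_db_spl, reference_db_spl=NORMAL_EFFORT_OVERALL_DB_SPL):
    """L = 1 - (E' - U - 10) / 160, capped at 1.

    Assumes a uniform gain across bands, so E' - U equals the overall level difference.
    """
    excess = presentation_db_spl - reference_db_spl
    return min(1.0, 1.0 - (excess - 10.0) / 160.0)


for level in (65, 95, 103):
    print(level, round(level_distortion(level), 3))
```

Under these assumptions, no penalty applies at 65 dB SPL. Band audibility is scaled down by roughly 14% at 95 dB SPL and by about 19% at 103 dB SPL. This pattern parallels the larger shift between 65 and 95 dB SPL than between 95 and 103 dB SPL described above.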

4. Analysis of band-pass data

The analysis of crossover frequencies provides information about the relative utility of high- and low-frequency information. It is also of interest, however, to examine the effect of hearing loss on the utility of speech information in specific frequency regions, and the data in Figs. 3 and 6 can be used for this purpose as well. Of particular importance in this study, performance improved for both the NH and HI subjects as high-frequency information above 3000 Hz was made available (i.e., as the low-pass filter cutoff frequency increased from 3150 Hz to 7069 Hz). Figure 6 shows that although absolute performance was slightly poorer than predicted, the SII predicted the improvement in performance with the addition of high-frequency information well.

It should be noted that the improvement in performance, both measured and predicted, when high-frequency information was added to existing low-frequency information was small (approximately 5%-6%) for both the NH and HI participants. However, ceiling effects could clearly have limited performance improvements in this condition. By comparing performance in multiple band-widening conditions (i.e., BP800-3150, LP3150, and HP800), the contributions of low- and high-frequency information can be assessed while limiting the impact of ceiling effects. These results are shown in Fig. 9.

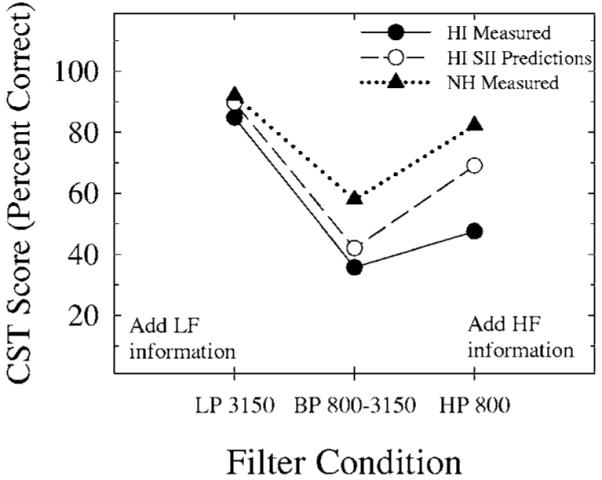

FIG. 9.

Average measured and predicted CST scores for the HI group in the band-widening conditions, shown by the filled and open symbols, respectively. Measured performance for the NH group (filled triangles) is also shown for comparison.

Absolute performance is only slightly overpredicted in the LP3150 and BP800-3150 Hz conditions. In addition, the SII predicts well the performance improvement as additional low-frequency information is made available. In contrast, when additional high-frequency information is provided (compare BP800-3150 Hz to HP800 Hz), the performance improvement is somewhat smaller than predicted (∼12% versus 27%). However, this limited improvement with the addition of high-frequency information occurs in the frequency regions where the hearing loss is most severe. In other words, reduced use of high-frequency information is expected given the presence of hearing loss in this frequency region. It is not, however, evidence that the high-frequency regions are affected any more than low-frequency regions given a comparable degree of hearing loss. In fact, the data in Fig. 7 show comparable performance for the flat and sloping HI groups in the high-pass conditions. Viewed together with those data, this finding is consistent with the idea that hearing loss limits the utility of speech information in a uniform fashion across the frequency region of the loss.

IV. DISCUSSION

One purpose of this study was to examine the relative utility of amplified high-frequency speech information for persons with sloping high-frequency hearing loss. To do this, we examined the speech understanding of persons with sloping high-frequency hearing loss under various filtering conditions. We compared their performance to that of a control group with normal hearing and to previous work with persons with flat hearing losses. Importantly, on average, persons with sloping hearing loss were able to use amplified high-frequency information to improve their speech understanding, although the magnitude of the improvement was small. The sloping HI subjects' performance improved by an average of 6% (compared to an NH improvement of 5%) as the LP filter cutoff frequency increased from 3150 Hz to 7069 Hz. Eight of the 10 HI subjects showed improvements of 7% or less. This was due in large part to ceiling effects, as average performance in the 3150 Hz low-pass condition was ∼92%. Two of the 10 sloping HI subjects, AC and ET, showed larger improvements of 10% and 27%, respectively. These data show that some individuals with sloping high-frequency hearing loss obtain substantial benefit from speech information above 3000 Hz. These results are consistent with the findings of Turner and Henry (2002), whose HI participants were also tested in noise. Together, these findings suggest that persons with sloping high-frequency hearing loss (up to 60-80 dB HL) are able to make use of amplified high-frequency speech information, but that in most cases the improvements will be small.

The average improvement in scores as LP filter cutoff increased above 3150 Hz was larger for the subjects with flat hearing loss in our previous study than for those with sloping losses in the current study, even though high-frequency thresholds were comparable between groups. Subjects with flat losses showed an average improvement of ∼16% as low-pass filter cutoff frequency increased from 3150 Hz to the wideband condition (Hornsby and Ricketts, 2003). The range of improvement was again variable, although five of nine subjects showed improvements of 17% or more (maximum of 33% improvement). This larger improvement was likely due, at least in part, to reduced ceiling effects. Average performance in the low-pass 3150 Hz condition was only ∼62% for the flat hearing loss group. In addition, and in contrast to some reports in the literature (e.g., Hogan and Turner, 1998; Vickers, Moore, and Baer, 2001), none of our subjects in either study showed any decrement in performance as additional high-frequency information was added.

These cases highlight the utility of amplified high-frequency information when it is provided in conjunction with existing low-frequency information, and they are most applicable to hearing aid fittings. These data argue for caution when considering a reduction in high-frequency audibility (at least for speech in noise), as the majority of our subjects in both studies showed improvements in speech understanding in noise when the maximum bandwidth of speech information was made available. At the same time, both clinical experience and research suggest that, when determining optimal amplification characteristics, particularly for adults, we must consider not only speech intelligibility but also an individual's perception of loudness comfort and sound quality. Both may be negatively affected by substantial high-frequency amplification.

A primary purpose of this study was to examine the role hearing loss configuration plays in the relative utility of amplified high-frequency speech information. Several findings from this study suggest that persons with sloping high-frequency SNHL do not utilize amplified speech information in the same fashion as persons with normal hearing. For example, crossover frequencies, derived from measured speech scores in low- and high-pass filter conditions, were significantly lower for the persons with sloping SNHL than for the control group of persons without hearing loss. The average measured crossover frequency, for the sloping HI group, was also lower than the average SII predicted crossover frequency for these same subjects. This suggests that these lower-than-normal crossover frequencies were not due simply to reduced audibility. In addition, when ceiling effects were limited, as in the band widening conditions, our sloping HI group showed smaller improvements in speech understanding with the addition of high-frequency information than the NH participants (12% vs 24% improvement) and smaller improvements than predicted based on SII calculations (12% vs 27%).

It is not clear, however, what factors may be responsible for this limited utility of amplified high-frequency information. Specifically, factors other than degree of hearing loss also differentiate our NH and sloping hearing loss groups and may have affected our results. In addition to hearing loss, there is a substantial difference in age between our NH and HI groups (∼29 and 63 years, respectively). Other researchers have reported that factors besides hearing loss that are associated with aging (e.g., age-related declines in processing efficiency, memory, and cognitive processing) can influence speech understanding in elderly listeners (CHABA, 1988; Wingfield, 1996; Amos and Humes, 2001; Pichora-Fuller, 2003). Specifically, Amos and Humes (2001) reported that some measures of cognitive function were correlated with the ability of elderly HI persons, with varying degrees of high-frequency hearing loss, to make use of high-frequency speech information, although this contribution was secondary to audibility. Our HI participants showed substantially higher thresholds in noise than the NH participants, even in the lower frequency regions (250-500 Hz) where their thresholds were best. This strongly suggests that factors other than hearing loss affected our results.

At the same time, a relationship between speech understanding and age-related processing deficits is not always present or clear (e.g., Humes and Floyd, 2005). In the current study, comparing the accuracy of SII predictions across frequency regions with and without hearing loss provides some estimate of the impact of age on our results. Note in Fig. 6 that performance in the low-pass conditions (<1200 Hz), where hearing thresholds are better, is well predicted. In contrast, whenever speech information is presented to frequency regions with more hearing loss (e.g., >1200 Hz), performance is generally poorer than predicted. If age differences were a substantial factor in our findings, we would expect predictive accuracy to be affected across all frequency regions. Finally, the presence or absence of age effects in the current study does not detract from the importance of the primary finding that hearing loss (up to ∼80 dB HL) has a “uniform” rather than “frequency-specific” effect on speech understanding.

In contrast to some previous studies (e.g., Vickers, Moore, and Baer, 2001; Baer, Moore, and Kluk, 2002), our results suggest that cochlear dead regions were not a significant factor in limiting the utility of high-frequency speech information. In addition, we used SII calculations to assess the role audibility played in the observed differences between the groups. These calculations suggest that, although slight audibility differences existed between the NH and HI groups in the high frequencies, these differences had only a minimal impact on the lower crossover frequencies observed for our sloping HI participants.

Likewise, differences in absolute presentation levels between groups (the HI listened at slightly higher levels) appeared to play only a small role in the poorer use of amplified high-frequency information relative to our NH control group. It should be noted, however, that compared with predicted performance at conversational levels (e.g., 65 dB SPL), the high presentation levels required by persons with high-frequency hearing loss to ensure audibility appear to substantially reduce the utility of high-frequency speech information for listeners both with and without hearing loss. This is evidenced by the lowering of predicted crossover frequencies from 1578 to 1396 Hz as presentation levels increase from 65 to 103 dB SPL. It is also consistent with the difference between the measured crossover frequency obtained by Sherbecoe and Studebaker (2002) for the CST materials presented at 65 dB SPL and a +6 dB SNR (1562 Hz) and that of the NH participants in the current study (1407 Hz). Even when these factors were accounted for in the SII calculations (i.e., when comparing predicted and measured HI crossover frequencies), however, the utility of high-frequency information remained poorer than normal for these persons with sloping high-frequency hearing loss.

We also examined the effect of hearing loss configuration by comparing our results from persons with sloping hearing loss to previous data from persons with a flat configuration. The comparison of crossover frequencies (see Fig. 7) between the sloping HI group from the current study and the flat hearing loss group from our previous study (Hornsby and Ricketts, 2003) suggests that the configuration of hearing loss does play a role in determining the relative utility of speech information in different frequency regions. The data in Fig. 7, however, suggest that hearing loss results in a uniform rather than frequency-specific deficit in speech understanding. Specifically, the difference in crossover frequencies between the sloping HI and flat HI groups was due to poorer performance by the flat HI group in the low-pass conditions (i.e., in the region where thresholds were poorer for the flat than for the sloping group). In addition, crossover frequencies for our flat group and a control group of persons without hearing loss were not significantly different. This suggests that the presence of a flat hearing loss decreased the utility of speech information across frequencies in a “uniform” fashion. The sloping configuration merely resulted in improved utility of low-frequency information (where thresholds were better) and continued, comparably poor use of high-frequency information (where thresholds remained poor).

V. CONCLUSIONS

A primary finding of this study is that persons with sloping high-frequency SNHL are limited in their ability to make use of audible high-frequency speech information to improve speech understanding in noise. This deficit appears to be due in part to the relatively high presentation levels at which these individuals must listen. Audibility measures (i.e., SII calculations) suggest that the limited utility of high-frequency information for persons with sloping hearing loss in this study is not due simply to reduced audibility. Rather, SII measures suggest that the presence of sensorineural hearing loss, regardless of frequency, results in a poorer-than-normal ability to use amplified speech information in the frequency region of the loss. This deficit appears comparable to that seen in persons with a flat hearing loss configuration and comparable high-frequency thresholds. This suggests that hearing loss configuration does not create additional reductions in the utility of amplified high-frequency information. Taken together, these findings further support the idea that sensorineural hearing loss reduces the contribution of amplified speech information in a uniform, rather than frequency-specific, fashion, at least for high-frequency (3000-4000 Hz) hearing losses up to approximately 60-80 dB HL.

ACKNOWLEDGMENTS

This work was supported, in part, by Grant R03 DC006576 from the National Institute on Deafness and Other Communication Disorders (National Institutes of Health) and the Dan Maddox Hearing Aid Research Foundation. Thanks to the associate editor Ken Grant, Larry Humes, and two anonymous reviewers for their helpful comments on an earlier version of this paper. Thanks also to Gerald Studebaker and Bob Sherbecoe for their patient discussions regarding the SII procedure and to Earl Johnson for help with data collection. This research was supported, in part, by the Dan Maddox Hearing Aid Research Endowment.

Footnotes

PACS number(s): 43.71.Ky, 43.66.Ts, 43.66.Sr, 43.71.An [KWG]

References

- Amos N, Humes L. The contribution of high frequencies to speech recognition in sensorineural hearing loss. Paper presented at the 12th International Symposium on Hearing; Mierlo, The Netherlands; 2001.

- ANSI. American National Standard Specification for Audiometers (ANSI S3.6-1996). New York; 1996.

- ANSI. American National Standard Methods for the Calculation of the Speech Intelligibility Index (ANSI S3.5-1997). New York; 1997.

- ASHA. Manual pure-tone threshold audiometry. 1978;20:297–301.

- Baer T, Moore BC, Kluk K. Effects of low pass filtering on the intelligibility of speech in noise for people with and without dead regions at high frequencies. J. Acoust. Soc. Am. 2002;112:1133–1144. doi: 10.1121/1.1498853.

- CHABA. Working group on speech understanding and aging. Committee on Hearing, Bioacoustics, and Biomechanics. Commission on Behavioral and Social Sciences and Education. National Research Council. Speech understanding and aging. J. Acoust. Soc. Am. 1988;83:859–895.

- Ching T, Dillon H, Byrne D. Speech recognition of hearing-impaired listeners: Predictions from audibility and the limited role of high-frequency amplification. J. Acoust. Soc. Am. 1998;103:1128–1140. doi: 10.1121/1.421224.

- Ching TY, Dillon H, Katsch R, Byrne D. Maximizing effective audibility in hearing aid fitting. Ear Hear. 2001;22:212–224. doi: 10.1097/00003446-200106000-00005.

- Cox RM, Alexander GC, Gilmore C. Development of the connected speech test (CST). Ear Hear. 1987;8:119S–126S. doi: 10.1097/00003446-198710001-00010.

- Cox RM, Alexander GC, Gilmore C, Pusakulich KM. Use of the Connected Speech Test (CST) with hearing-impaired listeners. Ear Hear. 1988;9:198–207. doi: 10.1097/00003446-198808000-00005.

- Dubno JR, Dirks DD, Schaefer AB. Stop-consonant recognition for normal-hearing listeners and listeners with high-frequency hearing loss. II. Articulation index predictions. J. Acoust. Soc. Am. 1989;85:355–364. doi: 10.1121/1.397687.

- Dubno J, Horwitz A, Ahlstrom J. Word recognition in noise at higher-than-normal levels: Decreases in scores and increases in masking. J. Acoust. Soc. Am. 2005a;118:914–922. doi: 10.1121/1.1953107.

- Dubno J, Horwitz A, Ahlstrom J. Recognition of filtered words in noise at higher-than-normal levels: Decreases in scores with and without increases in masking. J. Acoust. Soc. Am. 2005b;118:923–933. doi: 10.1121/1.1953127.

- French NR, Steinberg JC. Factors governing the intelligibility of speech sounds. J. Acoust. Soc. Am. 1947;19:90–119.

- Hogan CA, Turner CW. High-frequency audibility: Benefits for hearing-impaired listeners. J. Acoust. Soc. Am. 1998;104:432–441. doi: 10.1121/1.423247.

- Hornsby BW, Ricketts TA. The effects of hearing loss on the contribution of high- and low-frequency speech information to speech understanding. J. Acoust. Soc. Am. 2003;113:1706–1717. doi: 10.1121/1.1553458.

- Hornsby BW, Trine T, Ohde R. The effects of high presentation levels on consonant feature transmission. J. Acoust. Soc. Am. 2005;118:1719–1729. doi: 10.1121/1.1993128.

- Humes LE. Factors underlying the speech-recognition performance of elderly hearing-aid wearers. J. Acoust. Soc. Am. 2002;112:1112–1132. doi: 10.1121/1.1499132.

- Humes LE, Floyd SS. Measures of working memory, sequence learning, and speech recognition in the elderly. J. Speech Lang. Hear. Res. 2005;48:224–235. doi: 10.1044/1092-4388(2005/016).

- Moore BC, Huss M, Vickers DA, Glasberg BR, Alcantara JI. A test for the diagnosis of dead regions in the cochlea. Br. J. Audiol. 2000;34:205–224. doi: 10.3109/03005364000000131.

- Moore BCJ. Dead regions in the cochlea: Diagnosis, perceptual consequences, and implications for the fitting of hearing aids. Trends Amplif. 2001;5:1–34. doi: 10.1177/108471380100500102.

- Pichora-Fuller MK. Processing speed and timing in aging adults: Psychoacoustics, speech perception, and comprehension. Int. J. Audiol. 2003;42:S59–S67. doi: 10.3109/14992020309074625.

- Rankovic CM. Articulation index predictions for hearing-impaired listeners with and without cochlear dead regions. J. Acoust. Soc. Am. 2002;111:2545–2548. doi: 10.1121/1.1476922. Author reply: 111, 2549–2550 (2002).

- Sherbecoe RL, Studebaker GA. Audibility-index functions for the connected speech test. Ear Hear. 2002;23:385–398. doi: 10.1097/00003446-200210000-00001.

- Studebaker G, Sherbecoe R, McDaniel D, Gwaltney C. Monosyllabic word recognition at higher-than-normal speech and noise levels. J. Acoust. Soc. Am. 1999;105:2431–2444. doi: 10.1121/1.426848.

- Summers V, Molis MR, Musch H, Walden BE, Surr RK, Cord MT. Identifying dead regions in the cochlea: Psychophysical tuning curves and tone detection in threshold-equalizing noise. Ear Hear. 2003;24:133–142. doi: 10.1097/01.AUD.0000058148.27540.D9.

- Turner CW, Brus SL. Providing low- and mid-frequency speech information to listeners with sensorineural hearing loss. J. Acoust. Soc. Am. 2001;109:2999–3006. doi: 10.1121/1.1371757.

- Turner CW, Cummings KJ. Speech audibility for listeners with high-frequency hearing loss. Am. J. Audiol. 1999;8:47–56. doi: 10.1044/1059-0889(1999/002).

- Turner CW, Henry BA. Benefits of amplification for speech recognition in background noise. J. Acoust. Soc. Am. 2002;112:1675–1680. doi: 10.1121/1.1506158.

- Vickers DA, Moore BC, Baer T. Effects of low-pass filtering on the intelligibility of speech in quiet for people with and without dead regions at high frequencies. J. Acoust. Soc. Am. 2001;110:1164–1175. doi: 10.1121/1.1381534.

- Wingfield A. Cognitive factors in auditory performance: Context, speed of processing, and constraints of memory. J. Am. Acad. Audiol. 1996;7:175–182.