Abstract

One of vision’s most important functions is specification of the layout of objects in the 3D world. While the static optical geometry of retinal disparity explains the perception of depth from binocular stereopsis, we propose a new formula to link the pertinent dynamic geometry to the computation of depth from motion parallax. Mathematically, the ratio of retinal image motion (motion) and smooth pursuit of the eye (pursuit) provides the necessary information for the computation of relative depth from motion parallax. We show that this could have been obtained with the approaches of Nakayama and Loomis (1974) or Longuet-Higgins and Prazdny (1980) by adding pursuit to their treatments. Results of a psychophysical experiment show that changes in the motion/pursuit ratio have a much better relationship to changes in the perception of depth from motion parallax than do changes in motion or pursuit alone. The theoretical framework provided by the motion/pursuit law provides the quantitative foundation necessary to study this fundamental visual depth perception ability.

Keywords: visual system, eye movements, psychophysics

1. Introduction

To perceive a three-dimensional world the human visual system must rely on the information falling upon a two-dimensional retina. Therefore, geometry provides a crucial starting point for understanding how the visual system produces a neural representation of relative depth. Historically, knowledge of the underlying static geometry was central to understanding how the lateral separation of the two eyes creates retinal images with objects in different positions (Wheatstone, 1838; Wade, 1998). This retinal disparity is an important cue for the perception of relative depth from binocular stereopsis. Another important monocular depth perception cue is motion parallax, the relative movement of objects in a scene created by the translation of an observer (Gibson, 1950). Here we use the dynamic geometry of motion parallax to show that the visual system relies on a ratio of retinal image motion and pursuit eye movement to disambiguate near and far depth from motion parallax, and to show that this ratio provides a reliable metric for relative depth of objects in a scene. This motion/pursuit law finally provides a quantitative and unambiguous measure of depth from motion parallax, a crucial step in understanding the brain mechanisms for depth perception.

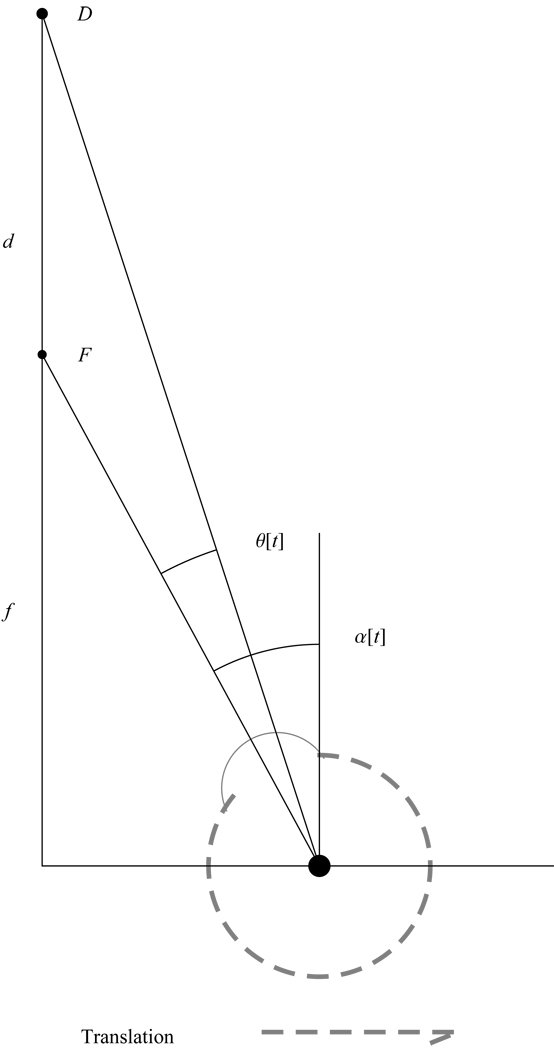

Recent psychophysical studies (Nawrot, 2003; Naji & Freeman, 2004; Nawrot & Joyce, 2006) have shown that the pursuit eye movement system provides a necessary extra-retinal signal for the unambiguous perception of depth from motion parallax. That extra-retinal signal does not appear to come from other potential sources (Nawrot & Joyce, 2006). Neurophysiological studies in cortical area MT show that these neurons appear to use an extra-retinal signal in order to code unambiguous depth from motion parallax (Nadler et al., 2008), and that the smooth eye movement system is the source of the required signal (Nadler et al., 2006; Nadler et al., submitted). As the observer translates laterally (Figure 1), the observer’s eyes rotate to maintain stable fixation on a particular point in the scene (the fixate, F) at the viewing distance f. Because gaze is maintained on the fixate during translation (Miles & Busettini, 1992), distractors (D), at nearer (−) or farther (+) distance d than the fixate, generate image motion in opposite directions on the observer’s retina. The visual system’s apparent task is to use this retinal image motion along with the extra-retinal information to recover the relative depth (d/f) of D in the scene.

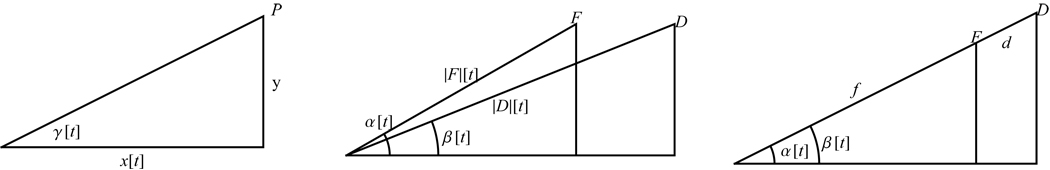

Figure 1.

Schematic overhead representation of motion parallax. The observer is depicted as translating rightward while maintaining gaze on the fixate (F) at a specific viewing distance (f). A distractor (D) is shown here more distant (d) than the fixate. Observer fixation on F produces the gaze angle α that changes over time at the rate dα/dt as the observer translates and pursuit causes the eye to counter-rotate. This observer translation also causes the retinal image of D, displaced from the retinal image of F by the angle θ, to change at the rate dθ/dt.

In Figure 1, the pursuit eye movement corresponds to change in angle α, which measures the rotation of the eye. Retinal image motion corresponds to change in angle θ between the fixate and the distractor. In conditions that produce motion parallax, both of these angles are continuously changing, mathematically giving nonzero time derivatives, dθ/dt and dα/dt. The dynamic geometry of motion parallax shows that the ratio of these rates determines the relative depth d/f (itself a ratio). The ratio of the angular rates is denoted dθ/dα = (dθ/dt)/(dα/dt). We propose that this ratio of rates is the key mathematical and neurophysiological quantity for the perception of depth from motion parallax. Both rates have clear and independent perceptual counterparts, but neither rate alone is sufficient to determine depth; their combination is needed. The precise relationship between relative depth d/f and the ratio of rates dθ/dα is quite simple, and the angle β = α − θ makes the mathematical derivations easier. The motion/pursuit law (M/PL) is:

$$\frac{d}{f} = \frac{d\theta}{d\beta} = \frac{d\theta/d\alpha}{1 - d\theta/d\alpha} \tag{1}$$

Proof of this basic mathematical formula involves only an elementary analysis of change in position at the time when the eye crosses the line containing the fixate and the distractor. This formula works in all cases of f > 0 and d + f > 0 (d is negative for near distractors), and is independent of units as the distance units cancel on division.
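The law can be sketched numerically. The following is a minimal illustration, not a model of the visual system: because β = α − θ, the ratio dθ/dβ equals (dθ/dα)/(1 − dθ/dα), so relative depth follows from the two rates alone. The function name `relative_depth` and the sample rates are assumptions chosen for illustration.

```python
import math

def relative_depth(motion, pursuit):
    """Relative depth d/f from retinal motion dθ/dt and pursuit dα/dt.

    Both rates must share the same angular units. A distractor beyond the
    fixate gives motion with the same sign as pursuit; a nearer one gives
    the opposite sign.
    """
    if pursuit == 0:
        raise ValueError("pursuit rate must be nonzero")
    ratio = motion / pursuit            # dθ/dα
    if ratio >= 1:
        raise ValueError("dθ/dα must be below 1, i.e. d + f > 0")
    return ratio / (1 - ratio)          # dθ/dβ, because dβ = dα − dθ

far = relative_depth(1.0, 6.0)      # dθ/dα = 1/6, distractor beyond the fixate
near = relative_depth(-1.0, 4.0)    # dθ/dα = −1/4, distractor nearer than the fixate
```

Only the ratio enters the result, so the distance units and the angular units both cancel, and the sign of the result gives the depth sign.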

Moreover, the M/PL formula (1) constrains the perception of depth-from-motion and accounts for depth sign (i.e., near vs. far). It is this unambiguous depth sign that makes motion parallax such an important depth cue, unlike other types of depth-from-motion that lack a pursuit signal and generate an ambiguous depth percept (Farber & McConkie, 1979). That is, retinal image motion is the basis for all depth-from-motion, but a concomitant pursuit signal guides or constrains the orderly conversion of motion-to-depth as described by formula (1). In the absence of a pursuit signal, an observer may still perceive depth-from-motion due to the retinal image motion, but the motion-to-depth process is unconstrained, and perceived depth sign and depth scaling are ambiguous and may rely on probabilistic analyses such as that suggested by Domini and Caudek (2003).

The M/PL also provides a mechanism to explain depth scaling similar to binocular stereopsis: changes in f, while the M/PL ratio dθ/dβ remains constant, result in a proportional change in depth d, because the ratio fixes the relative depth d/f. This means that perceived depth (d) scales with the viewing distance (f), if the retinal image velocity and pursuit velocity remain constant. Similarly, a change in the retinal image velocity produces a change in perceived depth if viewing distance and pursuit remain constant.

The M/PL also explains depth constancy with motion parallax: a fixed M/PL ratio dθ/dβ at a fixed viewing distance f produces a fixed relative depth percept d/f, regardless of the absolute changes in speed of observer translation, retinal image motion, and pursuit velocity (Ono & Ujike, 2005). That is, perceived depth does not change when observer translation speed changes. When observer translation speed is changed, dθ/dt and dα/dt are multiplied by a factor, but the two factors cancel in the ratio. Of course, the M/PL applies to depth-from-motion parallax conditions in which the observer has pursuit eye movements. Depth constancy in conditions lacking pursuit eye movements may again rely on probabilistic analyses (e.g., Domini & Caudek, 2003).

The M/PL also provides a “speed multiplier” effect for motion parallax, making it useful for long viewing distances when the observer is traveling at a higher speed (e.g., driving). Increased observer translation speed makes the constituent elements dθ/dt and dα/dt larger so that they are more likely to be supra-threshold, and therefore detected and registered by the visual system. This speed multiplier effect is not available for the perception of depth from binocular stereopsis. For example, the binocular disparity of an object 20 m beyond a fixate at 100 m is B.D. ≈ 0.372 min of arc, which is below normal human visual threshold (Tyler, 2004). However, for an observer moving at 100 km/hr, dθ/dt ≈ 159 min/sec. The pursuit velocity dα/dt would also be proportionally increased, so both the motion and pursuit signals would be greater. However, the ratio dθ/dβ remains unchanged by the change in observer speed, so the result is the correct relative depth percept. Therefore, increased observer translation speed makes motion parallax useful over much greater viewing distances than binocular disparity.
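The numbers in this example can be checked with a short calculation. This sketch assumes the small-angle forms B.D. ≈ i·d/(f(d + f)) and dθ/dt = s·d/(f(d + f)) with an interocular distance i = 6.5 cm; the variable names are illustrative.

```python
import math

ARCMIN_PER_RAD = 60 * 180 / math.pi

i = 0.065                    # interocular distance in m (6.5 cm)
f, d = 100.0, 20.0           # fixate distance and depth beyond it, in m
s = 100 * 1000 / 3600        # 100 km/hr expressed in m/s

disparity = i * d / (f * (f + d))    # small-angle binocular disparity, rad
motion = s * d / (f * (f + d))       # retinal image motion dθ/dt, rad/s
pursuit = s / f                      # pursuit rate dα/dt, rad/s

disparity_arcmin = disparity * ARCMIN_PER_RAD   # about 0.372 min of arc
motion_arcmin = motion * ARCMIN_PER_RAD         # about 159 min of arc per second

# Doubling the speed doubles both rates but leaves the ratio unchanged.
ratio = motion / pursuit
ratio_doubled = (2 * motion) / (2 * pursuit)
```

The motion signal exceeds the disparity by the factor s/i, while the motion/pursuit ratio stays at d/(d + f) = 1/6 regardless of speed.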

Although the explanation above describes a single ratio, providing depth information for a complex scene requires that the M/PL have a neurophysiologically plausible parallel implementation at a multitude of points across the visual field. Consider that the visual system is capable of simultaneously detecting a different retinal image motion dθ/dt at countless points in the scene. To recover relative depth for each D across the entire visual panorama, all of the different point estimates of dθ/dt are compared to the one, singular, pursuit signal dα/dt and to one singular viewing distance f. The result is a multitude of different relative depth estimates d/f across the visual field. When the observer’s eye moves to a different point in space, this change in the fixate causes a change in f and pursuit dα/dt, and possibly a change in the retinal image motion dθ/dt. These new values are then used by the visual system for a new set of depth calculations.
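As a rough sketch of this parallel scheme, many local motion estimates can be combined with a single pursuit rate and a single viewing distance. The function name `depth_field`, the sample motions, and the 57 cm viewing distance are hypothetical values chosen for illustration; no claim is made about the neural implementation.

```python
def depth_field(motions, pursuit, f):
    """Depth offsets d for many retinal motions sharing one pursuit rate and one f."""
    depths = []
    for motion in motions:
        ratio = motion / pursuit               # local dθ/dα
        depths.append(f * ratio / (1 - ratio)) # d = f · dθ/dβ
    return depths

# Hypothetical retinal motions (deg/s) at four image locations.
motions = [-0.5, 0.0, 0.5, 1.0]
depths = depth_field(motions, pursuit=5.0, f=57.0)   # depths in cm
```

A new fixation changes `pursuit` and `f`, after which the same computation yields a new set of depth estimates.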

Longuet-Higgins and Prazdny (1980) wrote, “There have been two somewhat different approaches to the interpretation of visual motion. One is based on an analogy with stereopsis, and the other appeals to the existence of receptors that respond to visual stimuli moving across the retina with specific velocities…(p.385).” Our approach is based on direct use of elementary geometry, but there are noteworthy connections with the two older approaches given below.

2. Mathematical Derivations

We prove four new mathematical results and compare them to previous mathematical work. First, we prove formula (1) using only the law of sines and a fundamental approximation, Sin[ι] ~ ι, for ι ≈ 0. Second, we show that the M/PL holds for non-perpendicular translation provided the translation is not directly toward the fixate, again using only elementary geometry to emphasize the importance of the two basic angular derivatives. Third, we justify Figure 5 that illustrates why retinal image motion alone does not determine depth. Fourth, we show that retinal motion is asymptotic to a multiple of binocular disparity at long distances.

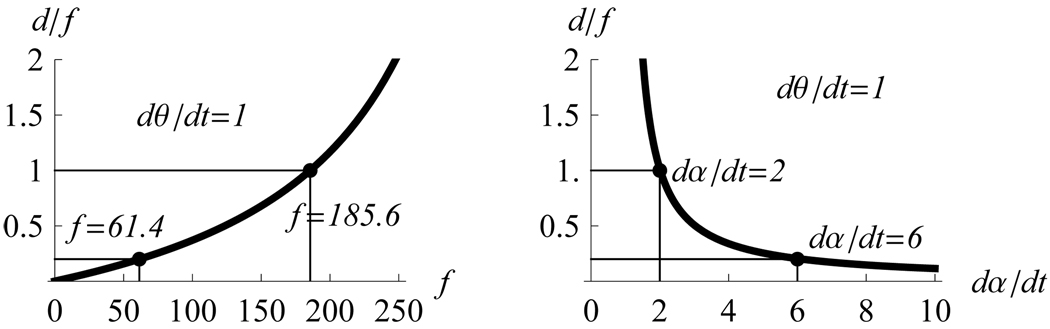

Figure 5.

Curves of constant retinal image motion. Shown are curves of constant retinal motion equal to one degree per second for observer translation speed of 6.5 cm/sec. On the left, the viewing distance and relative depth pairs on this curve are shown. It shows that the same retinal image motion produces different relative depth (d/f) estimates depending on the viewing distance. On the right, the pursuit rate and relative depth pairs are shown. It shows that the same retinal image motion produces relative depth estimates scaled with the pursuit rate dα/dt. Retinal image motion alone does not determine relative depth, but the motion/pursuit law does.

We also show how our depth formula can be derived by adding pursuit to the “velocity approach” of Nakayama and Loomis (1974) or Longuet-Higgins and Prazdny (1980).

2.1. Geometric Proof of Motion/Pursuit Law -Formula (1)

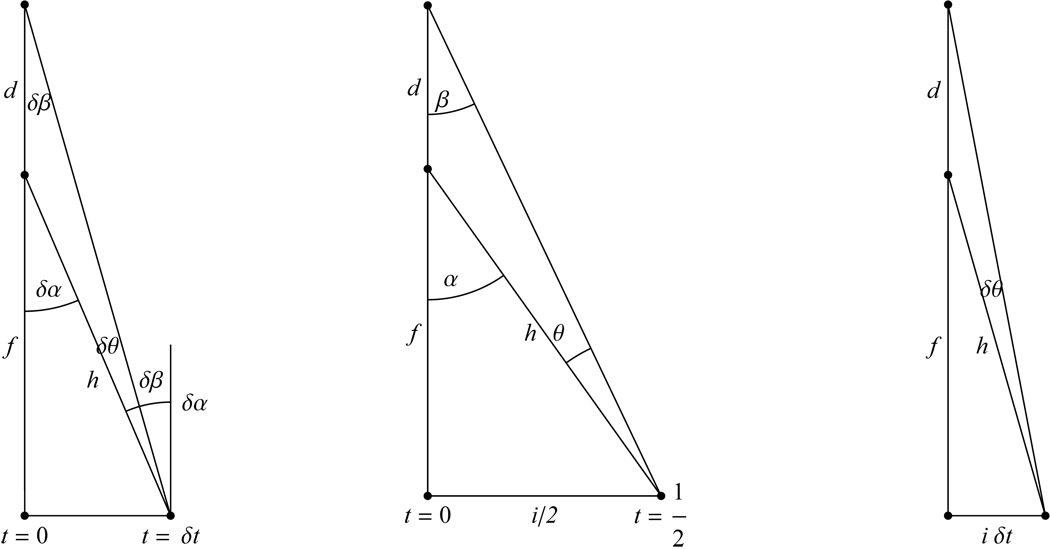

In Figure 2 A the positions of the right eye node point at time t = 0 and a later infinitesimal time t = δt ≈ 0 are shown with the fixate and distractor on the y-axis. By parallels, the angles marked δα from the vertical to the line from the eye to the fixate are the same in the two positions shown. By definition of β, δβ + δθ = δα, for the δβ shown at the right. Also by parallels the angle δβ shown at the top is the same δβ.

Figure 2.

Changes in the separation, θ, and tracking, α, angles for small perpendicular rightward translation. The fixate (F) and distractor (D) are shown on the vertical axis. A) Shown are the angles for infinitesimal time t = δt ≈ 0. B) Shown are the angles at t = 1/2 at a translation speed of i. C) Shown are the angles for a small change in spatial separation, θ.

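By the Law of Sines, Sin[δθ]/d = Sin[δβ]/h, where h is the distance from the displaced eye to the fixate, or equivalently,

$$\frac{d}{h} = \frac{\mathrm{Sin}[\delta\theta]}{\mathrm{Sin}[\delta\beta]} = \frac{\delta\theta\,(1+\varepsilon)}{\delta\beta\,(1+\varsigma)} \tag{2}$$

with ε ≈ 0 and ς ≈ 0, since Sin[ι] = ι + η · ι, with η ≈ 0 when ι ≈ 0. When f > 0 is real and δt ≈ 0, h = f + κ, for κ ≈ 0, so d/f ≈ d/h ≈ δθ/δβ ≈ θ′[0]/β′[0]. The outside formulas are real (have no infinitesimals) so they are equal (see Stroyan (1998) for example), proving a version of the “motion pursuit” law (written in differential notation, dθ[0] = θ′[0]dt, dβ[0] = β′[0]dt). This is formula (1) written in one form: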

$$\frac{d}{f} = \left.\frac{d\theta}{d\beta}\right|_{t=0} \tag{3}$$

Since β = α − θ, we may also write this as given in formula (1) above.

Also, Tan[δβ] = δt/(d + f) and Tan[δα] = δt / f, so we obtain a formula for the motion/pursuit ratio itself:

$$\frac{d\theta}{d\alpha} = 1 - \frac{d\beta}{d\alpha} = 1 - \frac{f}{d+f} = \frac{d}{d+f} \tag{4}$$

Notice that this equation can be solved for the ratio d/f to give formula (1), so formulas (1) and (4) are mathematically equivalent, but measure different ratios.
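The relation between the two ratios can be checked numerically with a small simulation of the geometry. This is a sketch under stated assumptions: the eye translates along the x-axis at unit speed, the fixate and distractor sit on the y-axis at heights f and d + f, and the derivatives are estimated by central differences about t = 0. The function names and the step size `h` are illustrative.

```python
import math

def angles(t, f, d, s=1.0):
    """Tracking angle α and separation angle θ = α − β at time t."""
    x = s * t                      # eye position along the x-axis
    alpha = math.atan2(x, f)       # angle to the fixate, measured from the vertical
    beta = math.atan2(x, f + d)    # angle to the distractor, measured from the vertical
    return alpha, alpha - beta

def motion_pursuit_ratio(f, d, s=1.0, h=1e-4):
    """Central-difference estimate of dθ/dα at t = 0."""
    a_plus, th_plus = angles(h, f, d, s)
    a_minus, th_minus = angles(-h, f, d, s)
    return (th_plus - th_minus) / (a_plus - a_minus)

ratio = motion_pursuit_ratio(f=60.0, d=12.0)   # close to d/(d + f) = 1/6
depth = ratio / (1 - ratio)                    # close to d/f = 1/5
```

Changing `s` rescales both differences equally, so the estimated ratio, and hence the recovered d/f, does not depend on translation speed.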

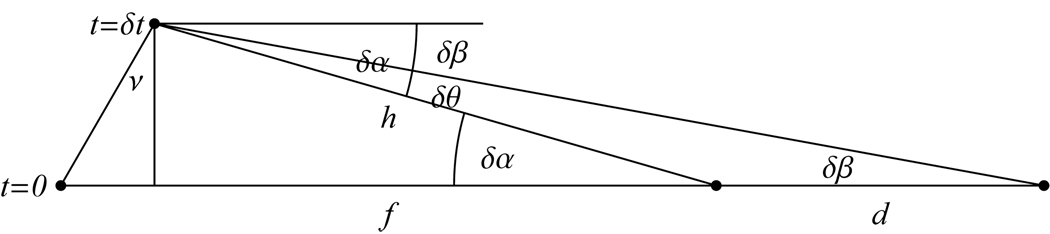

Non-perpendicular translation may be useful for angles other than ν = 0 studied in our experiments. Following is a derivation of the depth formula for non-perpendicular translation. Let the fixate and distractor lie on the x-axis and translate along the slanted line inclined at an angle ν shown in Figure 3.

Figure 3.

Shown are changes in θ and α for the case of transverse translation.

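By the Law of Sines, Sin[δθ]/d = Sin[δβ]/h, or equivalently,

$$\frac{d}{h} = \frac{\mathrm{Sin}[\delta\theta]}{\mathrm{Sin}[\delta\beta]} = \frac{\delta\theta\,(1+\varepsilon)}{\delta\beta\,(1+\varsigma)} \tag{5}$$

with ε ≈ 0 and ς ≈ 0. By the definition of cosine, Cos[δα] = (f − s · δt · Sin[ν])/h, so f = h · Cos[δα] + s · δt · Sin[ν] where the translation is along the line inclined at angle ν shown in Figure 3 at speed s. Again, h = f + κ with κ ≈ 0 so d/f ≈ d/h. Putting the outside terms together

$$\frac{d}{f} = \left.\frac{d\theta}{d\beta}\right|_{t=0} \tag{6}$$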

Little is known experimentally in the case where ν ≈ π/2, where the motion/pursuit ratio is undefined and our derivation does not apply. Hanes, Keller and McCollum (2008) have an interesting formula (9 in their paper) for the relative distance between two distractors in the case ν = π/2. We conjecture that people will fail to accurately perceive this in experiments.
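The non-perpendicular case can also be checked numerically. In this sketch the fixate and distractor lie on the x-axis at distances f and d + f, and the eye moves from the origin along a line whose x-component is s·t·Sin[ν], matching the cosine relation in the derivation. The function name, the guard threshold, and the step size are assumptions for illustration.

```python
import math

def depth_from_slanted_translation(f, d, nu, s=1.0, h=1e-4):
    """Central-difference estimate of dθ/dβ at t = 0 for translation angle ν."""
    if abs(math.cos(nu)) < 1e-9:
        raise ValueError("translation directly toward the fixate is excluded")

    def alpha_beta(t):
        ex = s * t * math.sin(nu)            # eye displacement toward the fixate
        ey = s * t * math.cos(nu)            # eye displacement across the line of sight
        alpha = math.atan2(ey, f - ex)       # direction to the fixate
        beta = math.atan2(ey, f + d - ex)    # direction to the distractor
        return alpha, beta

    a_plus, b_plus = alpha_beta(h)
    a_minus, b_minus = alpha_beta(-h)
    d_alpha = a_plus - a_minus
    d_beta = b_plus - b_minus
    d_theta = d_alpha - d_beta               # θ = α − β
    return d_theta / d_beta

estimates = [depth_from_slanted_translation(60.0, 12.0, nu)
             for nu in (0.0, math.pi / 6, math.pi / 3)]
```

Each estimate is close to d/f = 1/5: the slant scales dα/dt and dβ/dt by the same factor Cos[ν], which cancels in the ratio as long as Cos[ν] is not zero.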

2.2. Comparison to previous “velocity” approaches to motion parallax

If one adds measurement of pursuit to the approach of Nakayama and Loomis (1974) it is easy to derive a formula for the separation derivative or “motion”. The Nakayama and Loomis approach relies on the following situation: Consider an observer moving along the x-axis at speed s with a fixed object at P a fixed distance y above the x-axis, as shown in Figure 4A (no fixation or eye orientation of any kind is assumed).

Figure 4.

Illustrations of the geometry outlined in Nakayama and Loomis (1974). A) Shown is the general case of motion along x, and the relative position of point P. B) Shown is the case of a fixate (F) and distractor (D) and the corresponding angles α and β. C) Shown is the case of the fixate and distractor in line.

Nakayama and Loomis differentiate the basic trig relation to obtain their fundamental formula

| (7) |

Now consider the case of a fixate point F and a distractor D as shown in Figure 4 B with corresponding angles α and β. From formula (7) we obtain

| (8) |

The retinal image motion is the time rate of change of the angle θ = α − β, so writing in terms of the tracking angle and retinal image motion,

| (9) |

At the time when the points are in line with the eye shown in Figure 4 C this is equivalent to the expression:

$$\frac{d\theta}{dt} = \frac{s}{f} - \frac{s}{d+f} = \frac{s\,d}{f\,(d+f)} \tag{10}$$

The ratio of motion to pursuit, (dθ/dt)/(dα/dt), with pursuit dα/dt = s/f, yields our formula (4) above from this approach.

It is important to notice that the angle γ and its rate of change in formula (7) are not retinal cues in the case of motion with fixation. (They use coordinates on a fixed retinal circle.) For example, we use the general formula for the pursuit derivative above, but when there is fixation, the retinal image of the fixate point does not move, so the derivative of the retinal position would be zero. This is why we need to add formula (9) to the Nakayama and Loomis approach before we take the motion/pursuit ratio. Neither of these things is done in that line of published work.

The approach of Longuet-Higgins and Prazdny (1980) is easier to connect with our approach because fixation is built into their homogeneous coordinates. We consider the symmetric case (where their Y=0, W=0), and use their formula (2.14) specializing to

| (11) |

for two points with the same (x,y)-projected coordinates, (0,0), at time zero and having different depths, their Z₁ = f and Z₂ = f + d and translational speed, their U = s along the axis of Figure 1. The derivatives ẋᵢ = uᵢ in their paper are retinal velocities in homogeneous coordinates. At zero, these are the same as our angular derivatives. The quantity on the left is the negative of the retinal image motion rate. The pursuit is ẋ₁ = s / f, so the motion/pursuit ratio becomes:

| (12) |

which is equivalent to our formula (4) above.

While the M/PL can be derived using either coordinate system for the “velocity approach,” this depth formula relying specifically on pursuit does not appear in those works. The M/PL does not need observer translation speed to determine depth; observer speed cancels in the ratio. The mathematical algorithms such as in Perrone and Stone (1994) deserve another look, adding the M/PL (formula (12) is closest to their approach) to the underlying theory so that use of observer velocity is replaced with the extra-retinal pursuit as they suggest might be possible on p. 2933, “Our model does not preclude the possibility that information other than the flow field are used… or eye-movement motor corollary could all contribute…”. It is also important to notice that retinal image motion alone does not determine relative depth without knowledge of observer translation speed as shown next.

2.3. Retinal Motion alone does not determine relative depth

A simple, but erroneous, explanation for the perception of depth from motion parallax is that the retinal image motion of objects in the scene is sufficient to perceive unique, veridical, and unambiguous depth (Gibson, 1950; Gibson et al., 1959; Braunstein & Tittle, 1988). But, retinal image motion dθ/dt alone is insufficient for this purpose. The retinal image motion dθ/dt can have the same value for a whole range of relative depths (Figure 5). Specifically, consider an observer moving at translation speed of interocular distance (6.5 cm) per second, maintaining gaze on the fixate, with a retinal image motion dθ/dt = 1 deg/sec. While an infinite number of {f, d} depth pairs could generate that retinal image motion, in this example we illustrate two: {f, d}= {60,12} cm and {f, d} = {181.3,181.3} cm. These pairs have the different relative depths 12/60 = 1/5 and 181.3/181.3 = 1 respectively. The binocular disparities at these points are respectively 1.028 and 1.009 deg, a small 70 sec difference, but binocular disparity is a sensitive measure for the visual system. The motion/pursuit ratios at these points are respectively dθ/dα = 1/6 and 1/2, giving the correct relative depth with the motion/pursuit law. In other words, pursuit and binocular disparity change as we move along the constant retinal image motion curve in Figure 5.

Now we show mathematically why retinal image motion alone cannot provide a unique, correct, depth percept. Referring to Figure 4A above, we have Sin[δα] = δt · i / f, for translation at speed equal to the interocular distance per second, i, so using an approximation for sine and formula (4), we have the time zero derivatives,

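$$\left.\frac{d\alpha}{dt}\right|_{t=0} = \frac{i}{f}, \qquad \left.\frac{d\theta}{dt}\right|_{t=0} = \frac{i}{f}\cdot\frac{d}{d+f} \tag{13}$$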

We can solve the equation dθ/dt = c for the relative distance:

$$\frac{d}{f} = \frac{c\,f}{i - c\,f} \tag{14}$$

The left graph of Figure 5 plots this for c = π/180, or 1 degree per second. Substituting f = i/(dα/dt) into this expression gives the relative depth as a function of the pursuit as shown on the right of Figure 5.
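The two parameterizations of the constant-motion curve can be sketched as follows. The sketch assumes the time-zero relations dα/dt = i/f and dθ/dt = (i/f)·d/(d + f), solved for d/f with dθ/dt = c; the function names and the sampled viewing distances are illustrative.

```python
import math

I = 6.5                   # translation speed in cm/s (one interocular distance per second)
C = math.radians(1.0)     # fixed retinal image motion: 1 deg/s expressed in rad/s

def depth_vs_distance(f, c=C, speed=I):
    """Relative depth d/f on the constant-motion curve at viewing distance f (cm)."""
    if c * f >= speed:
        raise ValueError("requires c < speed / f, i.e. motion below the pursuit rate")
    return c * f / (speed - c * f)

def depth_vs_pursuit(pursuit, c=C):
    """The same curve, parameterized by the pursuit rate dα/dt = speed / f (rad/s)."""
    return c / (pursuit - c)

def motion(f, rel_depth, speed=I):
    """Retinal motion dθ/dt (rad/s) for viewing distance f and relative depth d/f."""
    return (speed / f) * rel_depth / (1 + rel_depth)

# Every point on the curve has the same retinal motion but a different relative depth.
curve = [(f, depth_vs_distance(f)) for f in (30.0, 60.0, 120.0, 240.0)]
```

Along this curve the relative depth grows with viewing distance while `motion(f, rel_depth)` stays equal to `C`, which illustrates why retinal motion by itself cannot fix d/f.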

For a general speed of observer translation s, motion is given by

$$\frac{d\theta}{dt} = \frac{s}{f}\cdot\frac{d}{d+f} \tag{15}$$

so if motion is known, dθ/dt = c, we may solve for the relative depth,

$$\frac{d}{f} = \frac{c\,f}{s - c\,f} \tag{16}$$

If observer translation speed s were a retinal cue, then relative depth could be determined by retinal motion and translation speed using this formula. The M/PL does not require information about observer translation speed, but relies on retinal motion and extra-retinal eye movement signal. If observer translation speed is known, the M/PL determines absolute depth. Since dα/dt = s / f,

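$$f = \frac{s}{d\alpha/dt}, \qquad d = f\,\frac{d\theta}{d\beta} = \frac{s}{d\alpha/dt}\cdot\frac{d\theta}{d\beta} \tag{17}$$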

We have published interactive programs Stroyan (2008.12) and (2008.13), so our reader can compute other specific numerical examples. The interactive programs available at Stroyan (2008.15) and Stroyan (2008.16) illustrate formula (17).
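The step from relative to absolute depth can be sketched in a few lines. This assumes only dα/dt = s/f and d/f = dθ/dβ with dβ = dα − dθ; the function name `absolute_depth` and the round-trip example are illustrative and are not taken from the interactive programs cited above.

```python
import math

def absolute_depth(motion, pursuit, speed):
    """Viewing distance f and depth offset d from motion, pursuit, and speed.

    motion and pursuit are in rad/s; speed and the returned distances share
    the same length unit.
    """
    f = speed / pursuit                    # from dα/dt = s / f
    d = f * motion / (pursuit - motion)    # d = f · dθ/dβ
    return f, d

# Round trip: build the rates for f = 60 cm, d = 12 cm at 6.5 cm/s, then recover them.
speed = 6.5
pursuit = speed / 60.0
motion = pursuit * 12.0 / (12.0 + 60.0)
f, d = absolute_depth(motion, pursuit, speed)
```

Without `speed`, the same two rates still give d/f, but not f or d separately.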

2.4. Retinal Motion is Asymptotic to Binocular Disparity, The One Dimensional Case

There are also striking connections between our simple geometry for motion parallax and the “stereopsis approach” mentioned by Longuet-Higgins and Prazdny (1980). This approach to quantifying motion parallax relied on static variables and a rough equivalence between retinal image motion and binocular disparity termed “disparity equivalence” (Rogers & Graham, 1982). This can be viewed as the coarse approximation using Δt = 1/2,

| (18) |

Consider that motion parallax is dynamic, and these dynamic variables do not make sense for a stationary observer and scene, even one measured, moved, stopped, and measured again, as is the case for disparity equivalence. Indeed, since Exner (1875) we have known that the perception of motion is a fundamental sensation (Nakayama, 1985) and does not depend on the independent perception of a spatial or temporal displacement. Because the M/PL uses derivatives, it captures the essential dynamic nature of motion parallax. (Moreover, there is more to investigate concerning the dynamic nature of motion parallax, especially in 2 dimensions; see Stroyan and Nawrot (in preparation). A moving observer does not remain in a symmetric configuration and the maximal response to the motion/pursuit ratio can occur at a non-symmetric time. See the interactive programs at Stroyan (2008.3) and Stroyan (2008.4) for numerical illustrations.)

While “disparity equivalence” provides a coarse approximation to dθ/dt, there is a precise relation. Below we show that as f gets larger and larger, the ratio motion/disparity tends to 1, a strong “asymptotic” approximation.

The well-known “non-trig law assuming symmetric fixation” (formula (11) of Cormack and Fox (1985), the same as formula (4) of Davis and Hodges (1995)) rewritten in our notation for distances

$$\mathrm{B.D.} \approx \frac{i\,d}{f^{2} + f\,d} = \frac{i\,d}{f\,(d+f)} \tag{19}$$

is a basic case of the asymptotic approximation B.D.~“Motion” given below. The connection with retinal motion was not observed in earlier work. Now we prove the mathematical asymptotic relation.

We restrict our attention to the case where the fixate and distractor are on the y-axis and the right eye crosses the y-axis at t = 0, translating at constant velocity to the right with speed equal to the interocular distance i per unit time. (We set the node percent n = 0 as well.) This section proves that B.D. ~ dθ/dt at t = 0 when d ≠ 0, i/f ≈ 0, d + f > 0 is not infinitesimal, and both F and D lie on the axis perpendicular to the head aim. The eye crosses this line when t = 0 (Figure 1). (We use the rigorous technical theory of Stroyan (1998), but a vision reader can read a ≈ b as ‘a is very close to b’ and a ~ b as ‘a/b is nearly 1’. The mathematical results could be re-stated as limits.)

Binocular disparity is twice the value of the angle θ shown in Figure 2 B that shows the difference in angle between the center of the interocular segment and the right eye. This is also the difference in the position of the right eye at time t = 0 and time t = 1/2 under perpendicular translation at speed equal to i, the interocular distance, so θ shown is half the binocular disparity. The addition formula for tangent gives

| (20) |

so

| (21) |

Now consider the difference in the angle in one eye from the time that eye crosses the line thru F and D and a short time δt later shown in Figure 2 C. Reasoning as above in the large scale picture, we obtain

| (22) |

Removing infinitesimals gives the real formula,

$$\left.\frac{d\theta}{dt}\right|_{t=0} = \frac{i\,d}{f\,(d+f)} \tag{23}$$

Now suppose f is large compared with the interocular distance and the distractor is not near zero. Technically, suppose i/f ≈ 0, and 0 < d + f is not infinitesimal (i is fixed, nominally 6.5 cm), then

| (24) |

so we may replace ArcTan[ς] with ς + ε · ς2, specifically, , where ε ≈ 0. As long as d ≠ 0, this makes

| (25) |

| (26) |

This proves that retinal motion and binocular disparity are asymptotic approximations of each other as f → ∞.

If the observer translation speed is changed, the general asymptotic approximation is

$$\left.\frac{d\theta}{dt}\right|_{t=0} \sim \frac{s}{i}\,\mathrm{B.D.} \tag{27}$$

when d ≠ 0, i / f ≈ 0, and d + f > 0 is not infinitesimal, with the approximation good as a percentage of binocular disparity, or the ratio tending toward 1. The speed multiplier effect means that at high speed, the θ-derivative is much larger than binocular disparity, so could be detected by an observer when B.D. could not as in the numerical example above.
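The asymptotic relation can be illustrated numerically. This sketch assumes symmetric fixation with nodes at the eye centers, so that binocular disparity is twice the difference of the half-angles ArcTan[i/(2f)] and ArcTan[i/(2(d + f))], and it uses the time-zero motion i·d/(f(d + f)). The function names and the sampled distances are illustrative.

```python
import math

I = 6.5   # interocular distance in cm

def binocular_disparity(f, d, i=I):
    """Disparity (rad) of a distractor at d + f under symmetric fixation at f."""
    return 2 * (math.atan(i / (2 * f)) - math.atan(i / (2 * (f + d))))

def retinal_motion(f, d, speed=I):
    """Time-zero retinal motion dθ/dt (rad per unit time) at the given speed."""
    return speed * d / (f * (f + d))

def motion_to_disparity(f, rel_depth=0.2):
    """Ratio of motion (at speed i) to disparity for a fixed relative depth d/f."""
    d = rel_depth * f
    return retinal_motion(f, d) / binocular_disparity(f, d)

# The ratio approaches 1 as the viewing distance grows relative to i.
ratios = {f: motion_to_disparity(f) for f in (10.0, 100.0, 1000.0, 10000.0)}
errors = [abs(r - 1) for r in ratios.values()]

# At a higher speed the motion is larger by the factor speed / i.
boost = retinal_motion(100.0, 20.0, speed=10 * I) / retinal_motion(100.0, 20.0)
```

The shrinking `errors` reflect the asymptotic approximation, and `boost` shows the speed multiplier that disparity lacks.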

This proof is only in the symmetric case of fixate and distractor on the y-axis with nodes at the center of the eye. All the derivatives are evaluated at t = 0 when the right eye crosses the y-axis. Stroyan and Nawrot (in preparation) extends this result to two distractor dimensions and variable time, but it is a much more complicated derivation. Our interactive program Stroyan (2008.14) allows a user to compare these quantities and see that motion and binocular disparity are quite close at “normal” viewing distances. In other words, formula (27) is a “practical” approximation.

2.5. The Motion/Pursuit Ratio as a Depth Approximation

While the M/PL provides the exact mathematical solution for relative depth, it is far too accurate to describe the human visual system. For instance, the perception of depth from motion parallax is often grossly foreshortened (Ono, Rivest & Ono, 1986; Domini & Caudek, 2003; Nawrot, 2003) with distractors (D) appearing nearer to the observer than veridical. The simple motion/pursuit ratio provides a neurobiologically plausible approximation to M/PL formula (1):

$$\frac{d}{f} \approx \frac{d\theta}{d\alpha} \tag{28}$$

The error in relative depth in using dθ/dα in place of dθ/dβ, for all ratios in our experiments, |dθ/dα| ≤ 0.167, is no worse than 0.0335 (Figure 6). Another way to say this is that the relative error in replacing dθ/dβ with dθ/dα is dθ/dα itself. This means that the M/PL formula (1) and formula (4) are indistinguishable when the ratios are small. In other words, approximation (28) is accurate for small ratios, but produces greater foreshortening when the motion/pursuit ratio grows larger. (Interactive comparisons are possible with the programs Stroyan (2008.17) and Stroyan (2008.18).) This approximation to the M/PL provides a plausible explanation of the foreshortening seen with the perception of depth from motion parallax (Nawrot, 2003). It will be important to determine if the precise magnitude of perceptual errors follows the approximation to the M/PL, and even more importantly, we will need to determine the range of visual stimulus conditions for which the motion/pursuit ratio provides a reasonable description of human visual function.

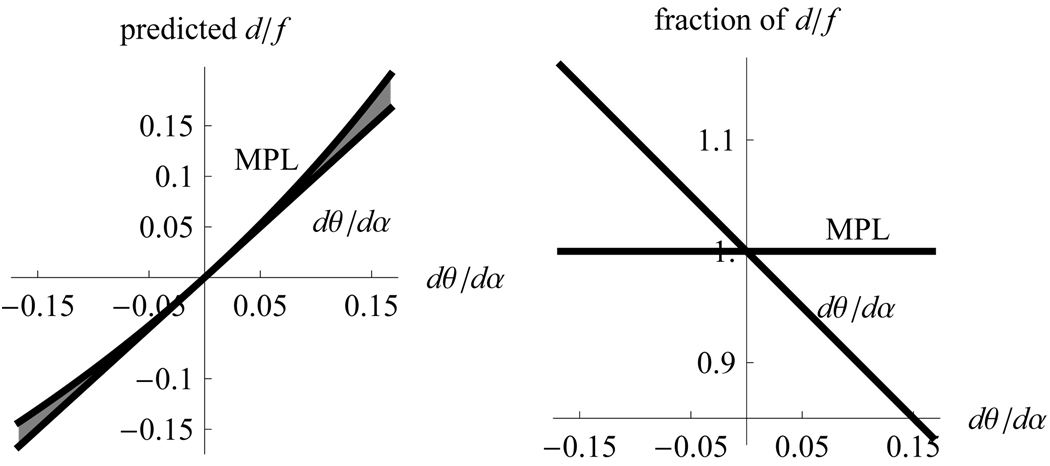

Figure 6.

Graphical comparison of formulas (1) and (28). The motion/pursuit law (1) is approximately equal to just the motion/pursuit ratio (28) when the ratio is small. The graph on the left shows the relative depth (vertical axis) given that ratio (horizontal axis). The shaded area shows the difference in the two formulas. The graph on the right shows the fraction of the relative depth each formula predicts. The motion/pursuit law corresponds to the horizontal line running through 1, meaning the relative depth estimate is exact. The motion/pursuit ratio corresponds to the oblique line showing that it is within 10% if |dθ/dα| < 0.1.
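The size of the approximation error can be computed directly. This sketch compares the exact law d/f = r/(1 − r), with r = dθ/dα, against the bare ratio r; the function names are illustrative.

```python
import math

def mpl_depth(ratio):
    """Exact relative depth d/f from the motion/pursuit ratio r = dθ/dα."""
    return ratio / (1 - ratio)

def ratio_error(ratio):
    """Absolute error in d/f when the bare ratio replaces the exact law: r²/(1 − r)."""
    return mpl_depth(ratio) - ratio

def fraction_recovered(ratio):
    """Fraction of the exact relative depth given by the bare ratio: 1 − r."""
    return ratio / mpl_depth(ratio)

worst = ratio_error(0.167)          # largest ratio quoted for the experiments
within = fraction_recovered(0.1)    # ratio of 0.1 recovers 90% of the exact depth
```

Because the recovered fraction is 1 − r, the shortfall grows with the ratio, which is the foreshortening pattern described above.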

We examined, with a psychophysical study, whether the M/PL or retinal image motion provides the best explanation for perceived depth from motion parallax. That is, while a change in retinal image velocity can produce a change in perceived depth, we contend that the perceptual change occurs only as part of a change in the M/PL. The goal of the experiment is to dissociate the roles of M/PL, retinal image motion, and pursuit eye movement in the perception of depth from motion parallax. Observers compared the perceived depth of two motion parallax stimuli that differed in: dθ/dt (retinal image velocity), dα/dt (pursuit velocity), and/or dθ/dβ (M/PL). The goal was to determine how systematic changes in the perceived magnitude of depth from motion parallax were produced independently by changes in any of these three variables.

3. Methods

The goal of this study is to determine whether two independent stimulus parameters, retinal image velocity and pursuit velocity, or the interdependence of these two parameters as defined by the M/PL, has a determinative role in the perception of depth from motion parallax. To do this, the study uses a depth comparison paradigm where the participants report which of two stimuli appears to have more depth, or whether the stimuli appear to have the same depth magnitude. The experiment was constructed so that the two stimuli could differ in each of the three variables independently. For instance, a proportional change in both dθ/dt and dα/dt leaves dθ/dβ (M/PL) unchanged. Moreover, because both dθ/dt and dα/dt are independent, the interrelation between these two parameters could be coded as either dθ/dβ (the M/PL) or as dθ/dα (the approximation to the M/PL) to see which relationship provided the best explanation for the variance of the dependent variable.

The study employed two conditions. In the first condition the observers were instructed to maintain fixation on the fixation spot for the duration of each trial. In the second condition eye position was monitored in real time with an eye tracker.

3.1. Participants

Ten healthy participants (9 female, 1 male, age 18 to 28), naïve to the hypotheses under consideration, gave informed consent to participate in the study. All participants had normal vision and were free from a history of ocular or neurological disorders. The study had the approval of the university IRB. Five participants served in the first condition, and six participants served in the second condition, with one observer serving in both conditions.

3.2. Tasks and stimuli

The experiment used a common random-dot motion parallax stimulus that depicts a corrugated surface undulating sinusoidally in depth in the vertical dimension (Rogers & Graham, 1979; see Nawrot & Joyce, 2006 for a detailed explanation of how the stimuli were created). The stimulus depicted a total of 1.5 cycles, 0.75 cycles above and below a horizontal centerline. This stimulus window was 400 × 400 pixels in size comprising 7500, 1×1 pixel black dots upon a white background. A small fixation spot appeared at the center of the stimulus. In the second (eye tracking) condition the stimulus contrast was reversed, to white dots on a black background, to reduce both illuminance and infrared and aid in eye tracking.

In this experiment the stimulus window was translated horizontally across the screen. The observer generated a pursuit eye movement to maintain fixation on the spot at the center of this stimulus window. The observer did not make head movements. Stimulus dots moving in the same direction as the stimulus window were perceived nearer than the fixation point in depth, while dots moving in the opposite direction were perceived farther in depth. This movement of the stimulus dots relative to the stimulus window and fixation point produced the relative depth, or depth-phase, of the corrugation. The perceived depth-phase of the motion parallax stimulus could be reversed by reversing the relationship between the direction of pursuit and the direction of the stimulus dot motion in the corrugation. The two stimuli presented in each trial were always presented in opposite depth phases.

The experiment used a three-alternative forced-choice procedure, wherein two different stimuli were presented in succession and the observer was required to indicate whether greater depth magnitude was perceived in either of the two stimuli, or if depth magnitude was the same in both stimuli.

Nine different stimulus versions were created. These nine stimuli were paired with one another in both the left and right positions for a total of 81 trials per block. In the first condition, five naïve observers, with limited experience in psychophysical studies, each completed 10 blocks of trials. In the second condition, the six observers completed 7 blocks of trials. The nine stimuli used one of three stimulus window translation velocities, leading to three different pursuit eye movement velocities (5, 7.5, and 10 deg/sec). Within the translating stimulus window, the peak velocity of dot translation took one of five values: 0.42, 0.625, 0.833, 1.25, or 2.5 deg/sec. The combinations of stimulus window velocity and peak dot velocity were restricted to produce one of four different M/PL ratios: 0.333, 0.200, 0.143, and 0.091. Pilot testing demonstrated that each level of every stimulus parameter could be distinguished from the other levels (e.g., the window or dots appeared to move at different speeds).
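The restriction to four M/PL values can be checked numerically. The sketch below is an illustration, not the stimulus-generation code used in the study. It assumes that the M/PL of formula 1 reduces to (dθ/dt)/(dα/dt − dθ/dt), with the window velocity standing in for pursuit velocity dα/dt and the peak dot velocity standing in for retinal motion dθ/dt. The function names and the matching tolerance are hypothetical.

```python
# Velocities reported in the text (deg/sec).
WINDOW_VELOCITIES = (5.0, 7.5, 10.0)               # pursuit, d(alpha)/dt
DOT_VELOCITIES = (0.42, 0.625, 0.833, 1.25, 2.5)   # peak retinal motion, d(theta)/dt
LISTED_MPL = (0.333, 0.200, 0.143, 0.091)          # M/PL values reported in the text


def mpl(motion: float, pursuit: float) -> float:
    """Assumed form of the motion/pursuit law: d(theta) / (d(alpha) - d(theta))."""
    return motion / (pursuit - motion)


def mp_ratio(motion: float, pursuit: float) -> float:
    """Simple motion/pursuit ratio d(theta)/d(alpha), the approximation to the law."""
    return motion / pursuit


def matching_stimuli(tol: float = 0.002):
    """Return (pursuit, motion, listed M/PL) for combinations that hit a listed value."""
    matches = []
    for pursuit in WINDOW_VELOCITIES:
        for motion in DOT_VELOCITIES:
            value = mpl(motion, pursuit)
            for listed in LISTED_MPL:
                if abs(value - listed) <= tol:
                    matches.append((pursuit, motion, listed))
    return matches


if __name__ == "__main__":
    for pursuit, motion, listed in matching_stimuli():
        print(f"pursuit={pursuit:5.2f}  motion={motion:5.3f}  "
              f"M/PL={mpl(motion, pursuit):.3f} (listed {listed:.3f})  "
              f"ratio={mp_ratio(motion, pursuit):.3f}")
```

Under these assumptions, nine of the fifteen possible velocity combinations fall on one of the four listed M/PL values, which agrees with the nine stimulus versions described above. This correspondence is an inference from the reported numbers, not a list given by the authors.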

The goal of the experiment was to determine which stimulus variable (stimulus window velocity, peak dot velocity, or the M/PL) causes a change in perceived depth magnitude. It is already well known that the sign of perceived depth (i.e., near vs. far) is determined by the mathematical sign of the M/PL ratio. That is, reversing the direction of either dot motion or pursuit reverses the sign of perceived depth, but reversing both renders perceived depth sign unchanged. The goal here was to determine if differences in perceived depth magnitude are determined by a change in the ratio, or by a change in one of the constituent variables.

3.3. Apparatus

Stimuli were presented on a 19” Trinitron CRT monitor with a refresh rate of 75 Hz. The dot pitch of the monitor was adjusted so that at the viewing distance of 46.6 cm each stimulus dot subtended 2 min of visual angle. The experiment was controlled by a Macintosh computer running software developed in the Metrowerks CodeWarrior development environment. Observers initiated trials and entered responses through the computer keyboard. In keeping with the 3AFC procedure, the observer indicated at the end of each trial whether the left or right stimulus appeared to have greater depth (left or right key), or whether the depth magnitude perceived in the two stimuli was the same (middle key).
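The display geometry stated above fixes the conversion between visual angle and screen pixels. The following sketch works through that arithmetic. It assumes that one stimulus dot corresponds to one screen pixel (as the 1 × 1 pixel dots suggest) and treats 2 arcmin per pixel as constant across the screen, which is a small-angle simplification. The constant and function names are illustrative.

```python
import math

VIEWING_DISTANCE_CM = 46.6   # from the text
ARCMIN_PER_PIXEL = 2.0       # each dot subtends 2 min of visual angle
REFRESH_HZ = 75.0            # monitor refresh rate


def pixel_pitch_mm() -> float:
    """Physical pixel size implied by 2 arcmin at the stated viewing distance."""
    angle_rad = math.radians(ARCMIN_PER_PIXEL / 60.0)
    return 10.0 * VIEWING_DISTANCE_CM * math.tan(angle_rad)


def deg_to_pixels(degrees: float) -> float:
    """Convert visual angle in degrees to pixels (small-angle assumption)."""
    return degrees * 60.0 / ARCMIN_PER_PIXEL


def pixels_to_deg(pixels: float) -> float:
    """Convert pixels to visual angle in degrees (small-angle assumption)."""
    return pixels * ARCMIN_PER_PIXEL / 60.0


def pixels_per_frame(deg_per_sec: float) -> float:
    """Displacement per video frame for a given angular velocity."""
    return deg_to_pixels(deg_per_sec) / REFRESH_HZ


if __name__ == "__main__":
    print(f"pixel pitch: {pixel_pitch_mm():.3f} mm")
    print(f"400-pixel window: {pixels_to_deg(400):.2f} deg")
    for velocity in (5.0, 7.5, 10.0):
        print(f"{velocity} deg/sec -> {pixels_per_frame(velocity):.1f} pixels/frame")
```

With these assumptions the three window velocities correspond to whole-pixel steps per frame (2, 3, and 4 pixels), and the 400-pixel stimulus window spans roughly 13.3 degrees.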

In the second condition eye position was monitored with a video-based, remote-optic eye-tracking system (Model 5000, Applied Science Laboratories; Bedford, MA). The eye tracking system was connected to the experimental computer through a 16-bit ADC (National Instruments; Austin, TX) that sampled, in real time at 75 Hz, two analog signals corresponding to horizontal and vertical eye position. Observers were required to maintain a measured eye position within 2 degrees of the fixation point during the central 8 degrees. An eye position outside this window caused blanking of the stimulus and an auditory signal to the observer that the trial was to be repeated.

Observers viewed the display from within a head restraint so that their heads neither translated nor rotated. In the eye-tracking condition the head restraint was replaced with a bite-bar using high viscosity silicone dental putty (Exaflex, GC America; Chicago, IL). This helped to further stabilize the observer’s head and reduced the inherent jitter in the eye tracking system.

3.4. Psychophysical Procedure

Each trial used a single direction of pursuit (leftward or rightward) that alternated between trials. Each trial began with a stationary fixation point presented 300 pixels from either the left or right edge (alternately) of the display monitor. Observers initiated the stimulus presentation with a key press. The first stimulus window immediately appeared, centered on the fixation spot, and began moving across the screen at one of the three window translation speeds given above. When the stimulus window reached the center of the display screen, presentation of the first stimulus ended. Following a 200 msec blank inter-stimulus interval, presentation of the second stimulus began at the point where the first stimulus ended. The second stimulus continued across the screen at its particular translation velocity until the fixation point was 300 pixels from the edge of the display monitor (Figure 7). The second stimulus always depicted the opposite (reversed) depth phase from the first stimulus (i.e., stimulus regions with “hills” in the first stimulus became “valleys” in the second stimulus).

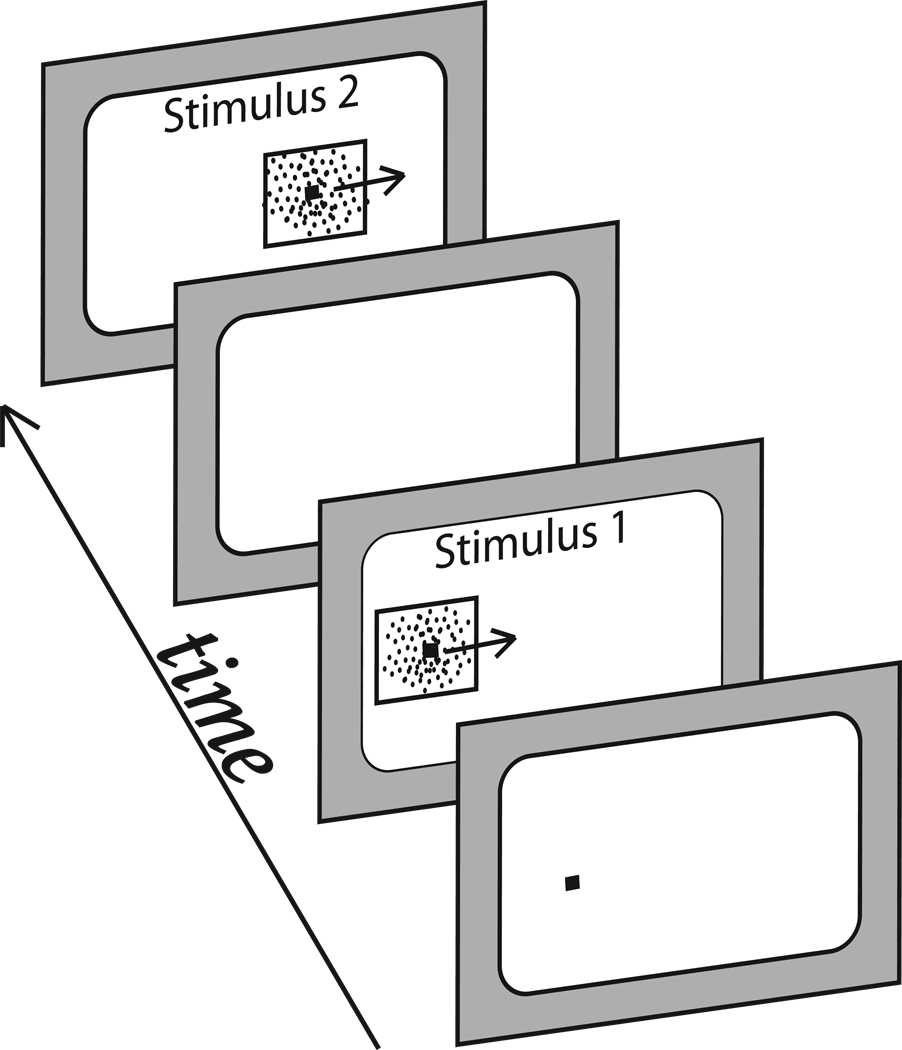

Figure 7.

Depiction of a single experimental trial with rightward pursuit. Each trial begins with a static fixation point. Two stimuli are presented in succession as the observer maintains fixation on the point moving across the screen. The observer indicated which of the two stimuli depicted a larger magnitude corrugation in depth.

The observer’s tasks were: 1) maintain fixation on the fixation point throughout the stimulus presentation, and 2) report, via a keypress, which of the two stimuli had more depth (left or right) or if they were the same. When the observer entered the response, the fixation point was presented on the monitor signaling that the next trial was ready.

3.5. Analysis

For each trial, representing a specific stimulus-position pairing, the observer’s judgment of greater relative depth magnitude was coded as 1 (left stimulus), 0 (same), or −1 (right stimulus). For each pairing, these values were summed across blocks and observers to generate a perceived relative depth magnitude score, expressed as a proportion. A proportional score of 1.0 meant that the left stimulus in the pairing was judged by every observer, in every block, to have greater depth; a score of −1.0 meant the same for the right stimulus. A score of 0 meant that the pairing drew either “same” responses or equal numbers of left and right responses.
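The coding scheme can be sketched in a few lines. This is an illustration only: it assumes the proportional score is the summed codes divided by the number of judgments collected for that pairing, and the trial tuple layout, stimulus labels, and function name are hypothetical.

```python
from collections import defaultdict

# Response coding described in the text.
RESPONSE_CODE = {"left": 1, "same": 0, "right": -1}


def proportional_scores(trials):
    """Aggregate coded responses per (left stimulus, right stimulus) pairing.

    `trials` is an iterable of (left_stimulus, right_stimulus, response)
    tuples pooled across blocks and observers. The returned score is the
    sum of the coded responses divided by the number of judgments
    (an assumed normalization), so it lies between -1.0 and 1.0.
    """
    totals = defaultdict(int)
    counts = defaultdict(int)
    for left, right, response in trials:
        pairing = (left, right)
        totals[pairing] += RESPONSE_CODE[response]
        counts[pairing] += 1
    return {pairing: totals[pairing] / counts[pairing] for pairing in totals}


if __name__ == "__main__":
    # Made-up responses for two pairings, for illustration only.
    example_trials = [
        ("A", "B", "left"), ("A", "B", "left"), ("A", "B", "same"), ("A", "B", "left"),
        ("B", "A", "right"), ("B", "A", "left"),
    ]
    print(proportional_scores(example_trials))
```

In this toy input the pairing ("A", "B") scores 0.75, and the pairing ("B", "A") scores 0.0 because its left and right responses cancel.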

A step-wise multiple regression was performed to characterize the relationship between the three independent variables (pursuit velocity, retinal motion velocity, and the motion/pursuit law) and the dependent variable (perceived relative depth magnitude). That is, the multiple regression reveals which independent variables are closely related to changes in the dependent variable while also accounting for the simultaneous effect of the other independent variables. The step-wise regression was performed independently for each of the two conditions. In a subsequent analysis, the motion/pursuit law (formula 1) independent variable was re-coded as an approximation using the motion/pursuit ratio (formula 28). This change was used to assess whether the variability of the relative depth magnitude judgments was better explained by the motion/pursuit law, or by the simple approximation to the law given by the motion/pursuit ratio.
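The logic of a forward step-wise selection can be sketched as follows. This is a simplified illustration, not the statistical procedure or software used in the study: it enters at most two predictors, picks each one by the size of R alone, and omits the F-to-enter criterion a full step-wise routine would apply. The predictor names and the toy numbers are made up and are not experimental data.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def multiple_r(r_y1, r_y2, r_12):
    """Multiple R for two predictors, computed from pairwise correlations."""
    r_squared = (r_y1**2 + r_y2**2 - 2 * r_y1 * r_y2 * r_12) / (1 - r_12**2)
    return math.sqrt(max(r_squared, 0.0))


def forward_two_steps(predictors, y):
    """Return (first predictor, R after step 1, second predictor, R after step 2)."""
    r_y = {name: pearson(x, y) for name, x in predictors.items()}
    # Step 1: enter the predictor most strongly correlated with y.
    first = max(r_y, key=lambda name: abs(r_y[name]))
    first_r = abs(r_y[first])
    # Step 2: enter the remaining predictor that raises R the most.
    second, second_r = None, first_r
    for name, x in predictors.items():
        if name == first:
            continue
        candidate = multiple_r(r_y[first], r_y[name], pearson(predictors[first], x))
        if candidate > second_r:
            second, second_r = name, candidate
    return first, first_r, second, second_r


if __name__ == "__main__":
    # Toy differences between paired stimuli (illustrative values only).
    toy = {
        "mpl_diff": [-0.2, -0.1, 0.0, 0.1, 0.2, 0.05, -0.15, 0.12],
        "pursuit_diff": [2.5, -5.0, 0.0, 5.0, -2.5, 2.5, 0.0, -5.0],
        "motion_diff": [0.4, -0.8, 0.2, -0.4, 0.8, 0.0, -0.2, 0.6],
    }
    # Toy response built mostly from mpl_diff plus a small pursuit term.
    response = [4 * m + 0.02 * p for m, p in zip(toy["mpl_diff"], toy["pursuit_diff"])]
    print(forward_two_steps(toy, response))
```

Because the toy response is constructed from the first two predictors, the sketch enters `mpl_diff` first and `pursuit_diff` second. That outcome reflects how the toy data were built and says nothing about the experimental results.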

4. Results

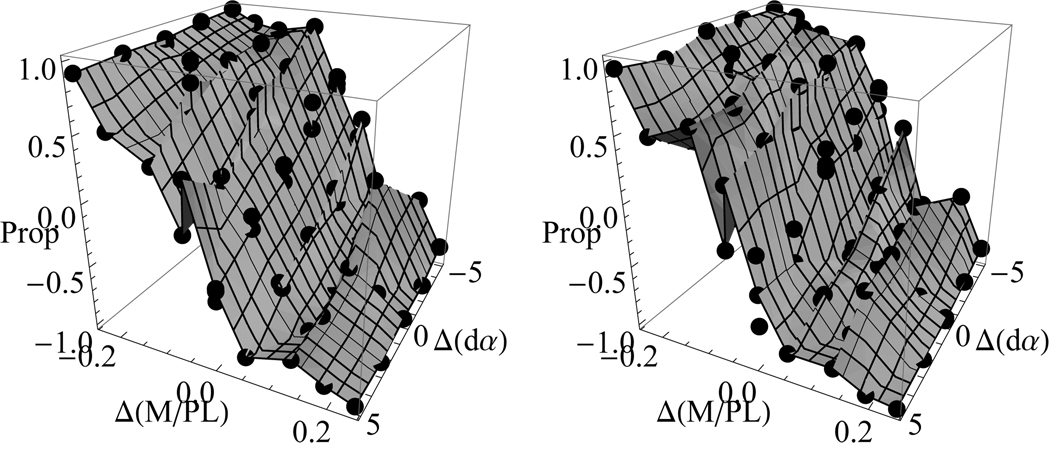

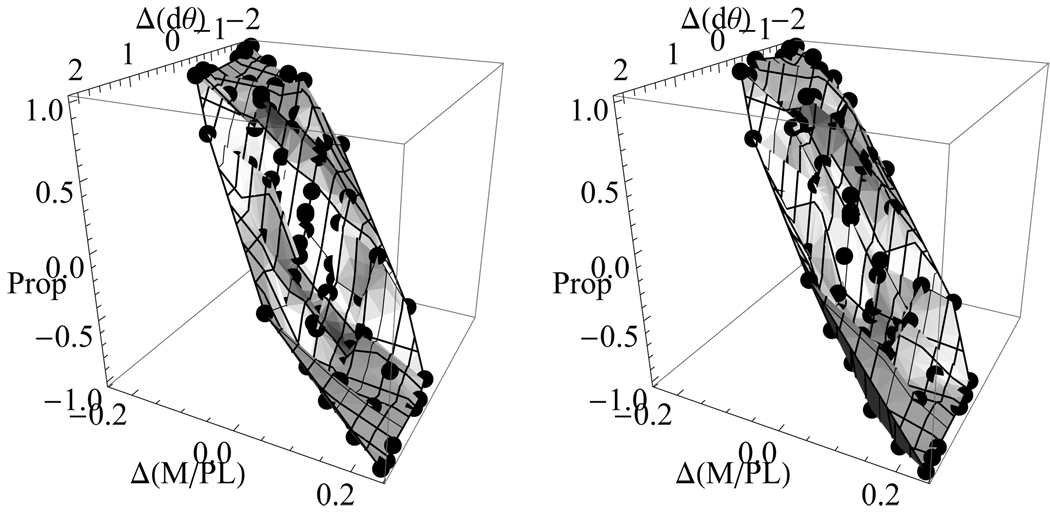

The aggregate data for the two conditions are shown in Figure 8 and Figure 9. Left and right panels in each figure show data from the first and second conditions respectively. Since the experiment had three independent variables, the two figures show the same data points using different variables on the z-axis. Individual data points (black spots) represent the aggregate response from all observers for each of the 81 different trial types. The shaded surface in both figures represents the simple connection of the data points. The vertical axes in both figures denote the proportion of relative depth magnitude responses: values approaching 1 or −1 mean that observers consistently judged one of the stimuli to have greater depth magnitude, while values near 0 mean that judgments of relative depth magnitude were equivocal. The x-axes represent the change in dθ/dβ (M/PL) between the two stimuli being compared in that trial. In Figure 8 the z-axes represent the change in dα/dt between the two stimuli being compared, while in Figure 9 the z-axes represent the change in dθ/dt between the two stimuli.

Figure 8.

Graphs show results from the first (left panel) and second (right panel) conditions. The black dots represent the aggregate data from all observers in that particular trial. Vertical axis represents the proportion of responses for left (positive numbers) or right (negative numbers) stimulus appearing to have greater depth magnitude. The x-axis shows the difference in the M/PL between the two stimuli, the z-axis shows the difference in dα/dt between the two stimuli being compared in each trial.

Figure 9.

Graphs show the same data points from Figure 8 with a change in the z-axis. The z-axis now shows the difference in dθ/dt between the two stimuli being compared in each trial. The x-axis and y-axis are unchanged from those shown in Figure 8.

Results of the two conditions are very highly correlated (r(79) = 0.976). This similarity in observer performance can be seen in the similarity of the two panels in each of the results figures. For both conditions, a stepwise multiple regression reveals that the best predictor of perceived change in depth magnitude was a change in dθ/dβ (M/PL) (condition 1: F(1,79) = 352.2, p < 0.001, R = 0.904; condition 2: F(1,79) = 335.6, p < 0.001, R = 0.900). This means that changes in perceived depth are most closely related to changes in dθ/dβ (M/PL), not dα/dt (pursuit velocity) or dθ/dt (retinal image velocity). As expected, a change in retinal image velocity dθ/dt alone did not contribute to a change in perceived depth. A change in retinal image velocity only produces a change in perceived depth through a change in the dθ/dβ ratio. This can be seen in Figure 8 and Figure 9 as the slope of the surface changing most closely with the change in dθ/dβ.

The stepwise multiple regression also showed that a change in pursuit velocity did contribute to explaining a change in perceived depth (two factors: condition 1: F(2,78) = 312.1, p < 0.001, R = 0.943; condition 2: F(2,78) = 247.0, p < 0.001, R = 0.929). Adding pursuit velocity to the multiple regression increased the value of R by a small amount (condition 1: 0.039; condition 2: 0.029), indicating that a change in pursuit velocity dα/dt did influence observers’ judgments of perceived depth magnitude, independent of changes in dθ/dβ. The direction of the effect is that higher stimulus window translation speeds contributed to greater perceived depth magnitudes. This could be due to small non-linearities in pursuit gain (gain = pursuit velocity/target velocity) as the target velocity increases. Then, as target velocity increases and pursuit gain decreases, the M/PL ratio increases, thereby indicating greater perceived depth (d). Alternatively, perhaps the visual system’s internal pursuit velocity signal is not linear with velocity, and this internal signal fails to increase linearly as actual pursuit velocity increases. This factor requires further study.

While the dθ/dβ (M/PL) provides the exact mathematical solution for relative depth, it appears to be more exact than the computation the human visual system actually uses to perceive depth from motion. Indeed, the simple motion/pursuit ratio dθ/dα (formula 28) provides a slightly better fit than does the M/PL for the psychophysical data collected here. When the dθ/dβ M/PL independent variable values are replaced with simple motion/pursuit ratio dθ/dα values, the stepwise multiple regression returns larger R and F values in both conditions (condition 1: F(1,79) = 392.6, p < 0.001, R = 0.912; condition 2: F(1,79) = 383.7, p < 0.001, R = 0.911). Therefore, we conclude that the approximate motion/pursuit ratio provides a reasonable description of the visual process used for the perception of depth from motion parallax.

5. Discussion

The motion/pursuit law provides a novel quantitative model for the perception of depth from motion parallax. It provides a simple formula to predict perceived depth from the stimulus variables required for the perception of depth from motion parallax. This is important if motion parallax is to be compared to binocular stereopsis, and if the neural mechanisms serving the combination of these depth cues are to be understood (Bradley et al., 1995; Bradshaw et al., 1998, 2002).

Moreover, current studies are seeking to determine whether the M/PL generalizes from side-to-side observer translations to forward observer translations that produce optic flow. That is, does the M/PL generate an accurate relative depth estimate independent of the direction and velocity of observer translation? While most optic flow research has addressed perceived heading (e.g., Warren & Hannon, 1988; Li & Warren, 2000; Royden, Banks & Crowell, 1992), optic flow is an important source of relative depth information during walking and driving. One important limitation for understanding optic flow is that the M/PL fails, for all positions of D, when the fixate is within a few degrees of the direction of translation. For example, if the fixate is at the focus of expansion in an optic flow field, the value of dα/dt is 0 (pursuit is not required to maintain fixation), causing the motion/pursuit ratio to be undefined. Little is known about the perception of depth from motion in such cases, although formula 9 in Hanes et al. (2008) presents an interesting relation between two distractors. We do not know if their formula has been experimentally verified as useful in human depth perception.

In an important application, the M/PL provides a novel explanation for the underestimation of distance in virtual reality systems (Witmer & Kline, 1998; Knapp & Loomis, 2004), wherein the perceived depth in a virtual environment may be orders of magnitude less than in the real environment. The problem is that virtual environments have short viewing distances (f). The M/PL demonstrates that d is calculated in proportion to f. Even if the virtual environment offers a perfect reproduction of the visual scene generating dθ/dβ, the M/PL is scaled to the actual viewing distance (f) to generate perceived relative depth (d/f) of objects represented in the virtual scene. Therefore, perceived d will be smaller than the simulated d in the virtual environment. However, the perception of depth in movie theatres may be immune to this problem due to a large actual viewing distance (f). Perhaps this is a factor in television viewing, where the trend is towards larger television screens facilitating longer viewing distances.
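The scaling argument can be made concrete with a toy calculation. The sketch assumes, as the paragraph above states, that depth d equals the M/PL value multiplied by the viewing distance f. The distances and the M/PL value are hypothetical numbers chosen only for illustration, and the function name is not from the source.

```python
def depth_from_mpl(mpl: float, viewing_distance_m: float) -> float:
    """Depth d implied by an M/PL value at viewing distance f, using d = f * M/PL."""
    return mpl * viewing_distance_m


if __name__ == "__main__":
    mpl_value = 0.2            # hypothetical M/PL reproduced by the display
    simulated_distance = 10.0  # hypothetical simulated fixation distance (m)
    display_distance = 0.5     # hypothetical actual distance to the display (m)

    simulated_d = depth_from_mpl(mpl_value, simulated_distance)
    perceived_d = depth_from_mpl(mpl_value, display_distance)
    print(f"simulated depth: {simulated_d:.2f} m")
    print(f"depth scaled to the display distance: {perceived_d:.2f} m")
```

With these example numbers the same M/PL value corresponds to 2 m of depth at the simulated distance but only 0.1 m when scaled to the actual display distance.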

In conclusion, the motion/pursuit law, derived from simple dynamic geometry, suggests how the visual system is able to perceive relative depth from motion parallax. Moreover, the motion/pursuit law provides a dynamic metric to convert the distal geometry of the scene into the proximal visual signals used by the visual system, retinal image motion dθ/dt and pursuit rate dα/dt. The visual system appears to use a simple ratio of these proximal visual signals (formula 28) for the perception of depth from motion parallax. This new quantification of the important parameters for motion parallax will allow orderly laboratory study of depth from motion parallax.

ACKNOWLEDGEMENTS

We thank R. Blake, C. Tyler, G. DeAngelis, M. McCourt, E. Nawrot, and two anonymous referees for helpful comments on previous versions of the manuscript. M.N. was supported by NIH RR02015.

Footnotes

Here we use the term fixate to refer to the observer’s point of gaze in the environment and make no implicit assumptions about the underlying neural mechanisms.

References

- Bradley DC, Chang GC, Andersen RA. Encoding of three-dimensional structure-from-motion by primate area MT neurons. Nature. 1998;392:714–717. doi: 10.1038/33688.

- Bradley DC, Qian N, Andersen RA. Integration of motion and stereopsis in middle temporal cortical area of macaques. Nature. 1995;373:609–611. doi: 10.1038/373609a0.

- Bradshaw MF, Parton AD, Eagle RA. Interaction of binocular disparity and motion parallax in determining perceived depth and perceived size. Perception. 1998;27:1317–1331. doi: 10.1068/p271317.

- Bradshaw MF, Parton AD, Glennerster A. Task dependent use of binocular disparity and motion parallax information. Vision Research. 2002;40:3725–3734. doi: 10.1016/s0042-6989(00)00214-5.

- Braunstein ML, Tittle JS. The observer-relative velocity field as the basis for effective motion parallax. J. Exp. Psychol. Hum. Percept. Perform. 1988;14:582–590. doi: 10.1037//0096-1523.14.4.582.

- Davis ET, Hodges LF. Human stereopsis, fusion and stereoscopic virtual environments (pp. 145–174). In: Barfield W, Furness TA, editors. Virtual environments and advanced interface design. New York, NY: Oxford University Press; 1995.

- Domini F, Caudek C. 3-D structure perceived from dynamic information: a new theory. Trends Cogn. Sci. 2003;7:444–449. doi: 10.1016/j.tics.2003.08.007.

- Exner S. Ueber das sehen von bewegungen und die theorie des zusammengesetzen auges. Sitzungsberichte Akademie Wissenschaft Wien. 1875;72:156–190.

- Farber JM, McConkie AB. Optical motions as information for unsigned depth. J. Exp. Psychol. Hum. Percept. Perform. 1979;3:494–500.

- Gibson JJ. The perception of the visual world. Boston: Houghton Mifflin Co.; 1950.

- Gibson EJ, Gibson JJ, Smith OW, Flock H. Motion parallax as a determinant of perceived depth. J. Exp. Psychol. 1959;58:40–51. doi: 10.1037/h0043883.

- Hanes DA, Keller J, McCollum G. Motion parallax contribution to perception of self-motion and depth. Biol. Cybern. 2008;98:273–293. doi: 10.1007/s00422-008-0224-2.

- Knapp JM, Loomis JM. Limited field of view of head-mounted displays is not the cause of distance underestimation in virtual environments. Presence: Teleoperators & Virtual Environments. 2004;13:572–577.

- Li L, Warren WH. Perception of heading during rotation: sufficiency of dense motion parallax and reference objects. Vision Res. 2000;40:3873–3894. doi: 10.1016/s0042-6989(00)00196-6.

- Longuet-Higgins HC, Prazdny K. The interpretation of a moving retinal image. Proc. R. Soc. Lond. B. 1980;208:385–397. doi: 10.1098/rspb.1980.0057.

- Miles FA, Busettini C. Ocular compensation for self-motion. Visual mechanisms. Ann. N.Y. Acad. Sci. 1992;656:220–232. doi: 10.1111/j.1749-6632.1992.tb25211.x.

- Nadler JW, Angelaki DE, DeAngelis GC. A smooth eye movement signal provides the extra-retinal input used by MT neurons to code depth sign from motion parallax. Soc. Neurosci. Abstr. 2006:407.8. doi: 10.1016/j.neuron.2009.07.029.

- Nadler JW, Angelaki DE, DeAngelis GC. A neural representation of depth from motion parallax in macaque visual cortex. Nature. 2008;452:642–645. doi: 10.1038/nature06814.

- Nadler JW, Nawrot M, Angelaki DE, DeAngelis GC. MT neurons combine visual motion with a smooth eye movement signal to code depth sign from motion parallax. doi: 10.1016/j.neuron.2009.07.029. (submitted).

- Naji JJ, Freeman TCA. Perceiving depth order during pursuit eye movements. Vision Res. 2004;44:3025–3034. doi: 10.1016/j.visres.2004.07.007.

- Nakayama K. Biological image motion processing. Vision Res. 1985;25:625–660. doi: 10.1016/0042-6989(85)90171-3.

- Nakayama K, Loomis JM. Optical velocity patterns, velocity-sensitive neurons, and space perception: a hypothesis. Perception. 1974;3:63–80. doi: 10.1068/p030063.

- Nawrot M. Eye movements provide the extra-retinal signal required for the perception of depth from motion parallax. Vision Res. 2003;43:1553–1562. doi: 10.1016/s0042-6989(03)00144-5.

- Nawrot M. Depth from motion parallax scales with eye movement gain. J. of Vision. 2003;3:841–851. doi: 10.1167/3.11.17.

- Nawrot M, Joyce L. The pursuit theory of motion parallax. Vision Res. 2006;46:4709–4725. doi: 10.1016/j.visres.2006.07.006.

- Ono H, Ujike H. Motion parallax driven by head movements: conditions for visual stability, perceived depth, and perceived concomitant motion. Perception. 2005;34:477–490. doi: 10.1068/p5221.

- Ono ME, Rivest J, Ono H. Depth perception as a function of motion parallax and absolute distance information. J. Exp. Psychol. Hum. Percept. Perform. 1986;12:331–337. doi: 10.1037//0096-1523.12.3.331.

- Perrone JA, Stone LS. A model of self-motion estimation within primate extrastriate visual cortex. Vision Res. 1994;34:2917–2938. doi: 10.1016/0042-6989(94)90060-4.

- Rogers B, Graham M. Motion parallax as an independent cue for depth perception. Perception. 1979;8:125–134. doi: 10.1068/p080125.

- Rogers BJ, Graham M. Similarities between motion parallax and stereopsis in human depth perception. Vision Res. 1982;22:261–270. doi: 10.1016/0042-6989(82)90126-2.

- Royden CS, Banks MS, Crowell JA. The perception of heading during eye movements. Nature. 1992;360:583–585. doi: 10.1038/360583a0.

- Stroyan KD. Foundations of Infinitesimal Calculus. Academic Press; 1998. available at: http://wwwmath.uiowa.edu/~stroyan/InfsmlCalculus/InfsmlCalc.htm.

- Stroyan KD. Interactive computation of Geometric Inputs to Visual Depth Perception. 2008 index at http://www.math.uiowa.edu/~stroyan/VisionWebsite/PlayOutline.html.

- Motion Pursuit Law In 1D Visual Depth Perception 1. 2008 (1) http://demonstrations.wolfram.com/MotionPursuitLawIn1DVisualDepthPerception1/

- Motion Pursuit Fixate And Distraction: Visual Depth Perception 2. 2008 (2) http://demonstrations.wolfram.com/MotionPursuitFixateAndDistractorVisualDepthPerception2/

- Motion Pursuit Law In 2D: Visual Depth Perception 3. 2008 (3) http://demonstrations.wolfram.com/MotionPursuitLawIn2DVisualDepthPerception3/

- Motion Pursuit Law On Invariant Circles: Visual Depth Perception 4. 2008 (4) http://demonstrations.wolfram.com/MotionPursuitLawOnInvariantCirclesVisualDepthPerception4/

- Fixation And Distraction: Visual Depth Perception 5. 2008 (5) http://demonstrations.wolfram.com/FixationAndDistractionVisualDepthPerception5/

- Eye Parameters: Visual Depth Perception 6. 2008 (6) http://demonstrations.wolfram.com/EyeParametersVisualDepthPerception6/

- Binocular Disparity: Visual Depth Perception 7. 2008 (7) http://demonstrations.wolfram.com/BinocularDisparityVisualDepthPerception7/

- Vieth Meuller Circles: Visual Depth Perception 8. 2008 (8) http://demonstrations.wolfram.com/ViethMullerCirclesVisualDepthPerception8/

- Binocular Disparity Vs Depth: Visual Depth Perception 9. 2008 (9) http://demonstrations.wolfram.com/BinocularDisparityVersusDepthVisualDepthPerception9/

- Disparity Convergence And Depth: Visual Depth Perception 10. 2008 (10) http://demonstrations.wolfram.com/DisparityConvergenceAndDepthVisualDepthPerception10/

- Tracking And Separation: Visual Depth Perception 11. 2008 (11) http://demonstrations.wolfram.com/TrackingAndSeparationVisualDepthPerception11/

- Motion Vs Depth: 2D Visual Depth Perception 12. 2008 (12) http://demonstrations.wolfram.com/MotionParallaxVersusDepth2DVisualDepthPerception12/

- Motion Vs Depth: 3D Visual Depth Perception 13. 2008 (13) http://demonstrations.wolfram.com/MotionParallaxVersusDepth3DVisualDepthPerception13/

- Dynamic Approximation Of Static Quantities: Visual Depth Perception 14. 2008 (14) http://demonstrations.wolfram.com/DynamicApproximationOfStaticQuantitiesVisualDepthPerception1/

- Speed Motion Pursuit And Depth: Visual Depth Perception 15. 2008 (15) http://demonstrations.wolfram.com/SpeedMotionPursuitAndDepthVisualDepthPerception15/

- Speed Pursuit MP Ratio And Depth: Visual Depth Perception 16. 2008 (16) http://demonstrations.wolfram.com/SpeedPursuitMPRatioAndDepthVisualDepthPerception16/

- Motion Pursuit Ratio And Depth In 1D: Visual Depth Perception 17. 2008 (17) http://demonstrations.wolfram.com/MotionPursuitRatioAndDepthIn1DVisualDepthPerception17/

- Motion Pursuit Ratio And Depth In 2D: Visual Depth Perception 18. 2008 (18) http://demonstrations.wolfram.com/MotionPursuitRatioAndDepthIn2DVisualDepthPerception18/

- Tyler CW. Binocular vision. In: Tasman W, Jaeger EA, editors. Duane’s foundations of clinical ophthalmology. Vol. 2. Philadelphia: J.B. Lippincott; 2004.

- Wade NJ. A natural history of vision. Cambridge: MIT Press; 1998.

- Warren WH, Hannon DJ. Direction of self-motion is perceived from optical flow. Nature. 1988;336:162–163.

- Wheatstone C. Contributions to the physiology of vision: Part I. On some remarkable and hitherto unobserved phenomena of binocular vision. Philos. Trans. R. Soc. 1838;128:371–394.

- Witmer BG, Kline PB. Judging perceived and traversed distance in virtual environments. Presence: Teleoperators & Virtual Environments. 1998;7:144–167.