Abstract

Decrements in cognitive function are common in cancer patients and other clinical populations. As direct neuropsychological testing is often not feasible or affordable, there is potential utility in screening for deficits that may warrant a more comprehensive neuropsychological assessment. Furthermore, some evidence suggests that perceived cognitive function (PCF) is independently associated with structural and functional changes on neuroimaging, and may precede more overt deficits. To appropriately measure PCF, one must understand its components and the underlying dimensional structure. The purpose of this study was to examine the dimensionality of PCF in people with cancer. The sample included 393 cancer patients from four clinical trials who completed a questionnaire covering the prioritized areas of concern identified by patients and clinicians: self-reported mental acuity, concentration, memory, verbal fluency, and functional interference. Each area contained both negatively-worded (i.e., deficit) and positively-worded (i.e., capability) items. Data were analyzed using Cronbach’s alpha, item-total correlations, one-factor confirmatory factor analysis (CFA), and a bi-factor analysis model. Results indicated that Cognitive Deficiency items are distinct from Cognitive Capability items, supporting a two-factor structure of PCF. Scoring of PCF based on these two factors should lead to improved assessment of PCF for people with cancer.

Keywords: Perceived cognitive function, bi-factor analysis, dimensionality

Introduction

Changes in cognitive function occur in normal aging (1,2), but can also be associated with a number of chronic illnesses, such as epilepsy (3,4), multiple sclerosis (5), Alzheimer’s disease (6), and cancer (7,8). Traditionally, cognitive function has been described and measured by its component processes, such as memory, attention, and executive functioning. Consequently, cognitive function has often been assessed using flexible or fixed batteries of neuropsychological tests, which provide objective measures targeting either specific cognitive components, specific neurological disorders, or cognitive functioning more globally (9,10). Despite well-known advantages, direct neuropsychological testing is often not feasible or affordable. As a result, patients are typically referred for neuropsychological testing only after significant decrements are noticed. Furthermore, when such batteries are administered repeatedly, their reliability and validity are likely to be compromised by practice effects. These limitations have prompted interest in the use of screening tools to identify individuals who may benefit from a full neuropsychological battery. A psychometrically sound instrument measuring self-reported cognitive function, i.e., perceived cognitive function (PCF), may fill this need.

The concept of PCF has been discussed in the research literature using different terms such as cognitive complaints (11), cognitive difficulties (12), cognitive distress (13) or subjective cognitive dysfunction (14); however, the validity of PCF measures has often been questioned (15,16). Examples of such criticisms are that patients with declining cognitive function are unlikely to report reliably on their cognitive function (17); and that PCF may reflect patients’ psychological states (e.g., depression) rather than cognitive function (18,19). Although the association between PCF and performance-based neuropsychological testing results has been inconsistent (3,20–22), recent evidence suggests that perceived cognitive function (PCF) is independently predictive of structural or functional brain changes, and may precede more overt deficits (23–31). For example, Saykin et al. (26) compared structural brain MRI scans across three groups: individuals with cognitive complaints but with normal neurological test performance (CC), patients with amnestic mild cognitive impairment (MCI), and individuals without significant cognitive complaint or deficits. They found that CC and MCI groups showed a similar pattern of reduced gray matter density in the bilateral medial temporal, frontal, and other distributed brain regions. This study highlights the importance of perceived cognitive function in the clinical evaluation of older adults, suggesting that those who report cognitive decrements may warrant evaluation and/or close monitoring over time. As new treatment and preventive strategies for MCI and Alzheimer’s disease are developed and refined, the earliest possible accurate detection of patients at increased risk of developing diseases will take on critical importance. This further highlights the importance of having a reliable yet user-friendly PCF instrument. 
The purpose of such a tool would not be to replace a comprehensive neuropsychological test battery; instead, it may serve as a useful screening tool that could be used in clinics to identify individuals who may benefit from a more thorough neuropsychological evaluation or more frequent monitoring for cognitive changes.

Recognizing the potential clinical utility of the construct, the cancer supplement to the NIH Patient-Reported Outcomes Measurement Information System (PROMIS: http://www.nihpromis.org) has included PCF as one of its measurement development areas. The current version of the PROMIS Cancer Supplement PCF measure comprises 78 items, including items from the Functional Assessment of Cancer Therapy - Cognition (FACT-Cog, version 2), the scale we focus on in the current analysis. An appropriate measure requires a full understanding of the underlying latent trait that a PCF instrument is intended to measure. As a first approximation to address this issue, we examined the dimensionality of PROMIS PCF items by using bi-factor analysis, a recently developed technique for examining unidimensionality of item sets. Given the documented effects of cancer and chemotherapy on cognitive function (7,8), we felt it would be appropriate to first address this issue in a cancer population. We started with a cancer sample in order to reduce some of the variability in the association of PCF with neuropsychological testing across patient groups.

Methods

Measure

The development process of the FACT-Cog has been reported elsewhere (32,33). Items for the scale were written to reflect themes identified by experts and patients and by a literature review. To minimize self-report bias due to distress unrelated to cognition, items were written to include behavioral examples of cognitive dysfunction. As a result, FACT-Cog Version 1 consisted of 35 items that were rated on a 5-point Likert scale (32). We then administered this measure to 206 general oncology patients. The majority of patients in that sample were white (93.2%) and female (59.2%), with a mean age of 59.6 ± 13.0 years. Approximately 26% of patients had breast cancer, 15% had colorectal cancer, and 14% had non-Hodgkin’s lymphoma; the majority of patients were currently receiving chemotherapy (89.8%). Reliability was excellent (alpha=0.96). Psychometric properties of individual items were examined by using a one-parameter item response theory (Rasch) model (34). Items were determined to fit if the ratio between expected and observed item variance was less than 1.4 (i.e., MnSq < 1.4 in Rasch software output). Results showed that one item did not fit and three items provided information redundant with other items, as demonstrated by high item-total correlations and further discussions with a group of experts. Consequently, one item was revised and three items were removed from the scale. Additionally, ten new, positively-worded items were developed to reduce an observed ceiling effect. As a result, the FACT-Cog (v2) consists of 42 items (32 negatively-worded and 10 positively-worded).

To reflect patients’ experience, the FACT-Cog (v2) items were originally grouped into two categories: cognitive capacity and cognitive performance. Cognitive capacity, which includes both capability and deficit items, consists of the following four areas of concern: mental acuity (4 items), concentration (4 items), verbal and non-verbal memory (7 items), and verbal fluency (7 items). Cognitive performance, defined as actual performance or consequences of a given capability or deficit item, consists of the following three areas of concern: functional interference due to cognitive deficits (7 items), noticeability (4 items), and changes in cognitive function (9 items). A frequency-type rating scale is used (0=Never; 4=Several times a day). FACT-Cog (v2) items are listed in the Appendix.

Samples

This is a secondary analysis, making use of data from 393 cancer patients who participated in studies at Evanston Northwestern Healthcare, University of Toronto-Princess Margaret Hospital, University of Pennsylvania-Abramson Cancer Center, and Moffitt Cancer Center and Research Institute. The Institutional Review Board (IRB) at each site approved the study before patients were approached, and all participants provided written informed consent. Patient demographic and clinical information is summarized in Table 1. Across the entire sample, 65.1% were female, average age was 53.8 years (standard deviation = 12.5), and 57.2% had a college degree or higher. A majority of the sample had breast cancer (54.5%), followed by multiple myeloma (16.0%), prostate cancer (9.4%), testicular cancer (5.9%) and colorectal cancer (5.6%). None of the patients had a CNS-related tumor/cancer. Patients were at different stages of the disease continuum, ranging from on-treatment to long-term survival. Because research goals differed across the study sites, the available treatment information is variable. Nearly half of the sample (n = 149; 45.6%) were known to be receiving chemotherapy or to have completed chemotherapy.

Table 1.

Sample Demographic and Clinical Information

| Characteristic | Category | Total, N (%)ᵃ | Source 1 (n=62), n | Source 2 (n=101), n | Source 3 (n=188), n | Source 4 (n=42), n |
|---|---|---|---|---|---|---|
| Age | Mean (SD) | 53.8 (12.5) | 50 (9) | 53 (12) | 55 (14) | 60 (7)ᵇ |
| Gender | Male | 137 (34.9%) | 2 | 57 | 60 | 18 |
| | Female | 256 (65.1%) | 60 | 44 | 128 | 24 |
| Education | HS or less | 84 (24.1%) | 5 | 47 | 32 | 0 |
| | Some college | 65 (18.7%) | 13 | 25 | 27 | 0 |
| | College degree or higher | 199 (57.2%) | 44 | 29 | 126 | 0 |
| | Unknown | 45 (--) | 0 | 0 | 3 | 42 |
| Diagnosis | Breast | 214 (54.5%) | 62 | 4 | 128 | 20 |
| | Colorectal | 22 (5.6%) | 0 | 0 | 0 | 22 |
| | Multiple myeloma | 63 (16.0%) | 0 | 63 | 0 | 0 |
| | Non-Hodgkin | 12 (3.1%) | 0 | 12 | 0 | 0 |
| | Prostate | 37 (9.4%) | 0 | 0 | 37 | 0 |
| | Testicular | 23 (5.9%) | 0 | 0 | 23 | 0 |
| | Other | 22 (5.6%) | 0 | 22 | 0 | 0 |
| Ethnicity | Hispanic origin | 8 (2.3%) | 0 | 6 | 2 | 0 |
| | White | 282 (82.5%) | 47 | 87 | 148 | 0 |
| | African American | 33 (9.6%) | 5 | 6 | 22 | 0 |
| | Other | 19 (5.6%) | 10 | 2 | 7 | 0 |
| | Unknown | 51 (--) | 0 | 0 | 9 | 42 |
| Treatment | Chemotherapy | 149 (45.6%) | 62 | 0 | 87 | 0 |
| | Surgery/radiation with no chemotherapy | 51 (15.6%) | 0 | 0 | 51 | 0 |
| | BMTᶜ | 101 (30.8%) | 0 | 101 | 0 | |
| | ADTᵈ | 26 (8.0%) | 0 | 0 | 26 | 0 |
| | Information not available | 66 (--) | 0 | 0 | 24 | 42 |
| Assessment timing | | | On Cycle 4, Day 1 of treatment | 6 or 12 months post BMT | Varies by diseaseᵉ | Received cancer treatment within 6 months |
| FSIQ | Mean (SD) | 112.2 (7.4) | 110 (6) | 114 (8)ᶠ | | |
| Quality of life, mean (SD) | PWBᵍ | 22.29 (5.5) | 18.06 (6.3) | - | 23.34 (4.7) | 25.05 (2.7) |
| | EWB | 19.06 (4.3) | 18.95 (4.3) | - | 18.91 (4.4) | 20.67 (3.2) |
| | SWB | 22.10 (5.2) | 23.42 (4.3) | - | 21.56 (5.3) | 23.19 (5.8) |
| | FWB | 19.99 (5.6) | 18.12 (5.6) | - | 20.29 (5.5) | 22.57 (6.2) |
| | SF36-Mental | 52.33 (10.7) | - | 52.33 (10.7) | - | - |
| | SF36-Physical | 38.90 (10.3) | - | 38.90 (10.3) | - | - |

NOTE:

ᵃ % was calculated by excluding data that are unavailable.

ᵇ n=20

ᶜ BMT: Bone marrow transplant

ᵈ ADT: Androgen deprivation therapy

ᵉ All testicular cancer patients had survived for at least 2.5 years (mean=7.4 years) at the time of surveying. None of the prostate cancer patients had chemotherapy, and their treatment dates are not available. Breast cancer patients were undergoing chemotherapy at the time of surveying.

ᶠ n=60

ᵍ PWB: FACT-G Physical Well-Being (norm=22.7, SD=5.5); EWB: Emotional Well-Being (norm=19.9, SD=4.8); SWB: Social/Family Well-Being (norm=19.1, SD=6.8); FWB: Functional Well-Being (norm=18.5, SD=6.8)

One hundred and one (30.8%) patients completed the FACT-Cog at either 6 or 12 months post bone marrow transplant; 51 (15.6%) patients underwent surgery or radiation without chemotherapy; and 26 (8.0%) prostate cancer patients received androgen deprivation therapy only (either an LHRH agonist or complete androgen blockade). In addition to the perceived cognitive function items, patients also completed various performance-based cognitive instruments. While cognitive instruments were not consistent across the samples, three subsets of the sample (n=122) had estimates of Full Scale Intelligence Quotient (FSIQ) available (mean FSIQ = 112.2; SD = 7.4). Compared to the population norm (mean = 100; SD = 15), 74 patients (60.6%) were within 1 SD of the mean (6 below and 68 above the mean), 47 (38.5%) were more than 1 SD above, and only one (0.8%) was more than 1 SD below. Sample information by study is detailed in Table 1. Sixty-two patients completed the Hospital Anxiety and Depression Scale (HADS); the average depression score was 4.44 (SD=3.97) and the average anxiety score was 5.24 (SD=3.90). Quality of life was measured using either the Functional Assessment of Cancer Therapy – General (FACT-G) (35) or the SF-36 (36). Average quality of life scores are reported in Table 1. The sample had physical composite scores (as measured by the SF-36) at least one standard deviation lower than national norms (36). However, the sample had physical well-being (PWB; part of FACT-G), emotional well-being (EWB; part of FACT-G), and SF-36 mental composite scores similar to national norms (36, 37). The sample reported higher social well-being (SWB; part of FACT-G) and functional well-being (FWB; part of FACT-G) than the norms, although both were within one standard deviation of the normative means.

Analysis

We first evaluated the coherence of items within each area of concern (e.g., memory, concentration) using Cronbach’s alpha (criterion: ≥0.7) and item-total correlations (criterion: > 0.3). Next, we used one-factor confirmatory factor analysis (CFA) and related techniques to evaluate dimensionality using the original FACT-Cog item categories (i.e., cognitive capacity and cognitive performance). Unidimensionality (38) at the sub-domain level was considered confirmed when the model fit the data well (i.e., comparative fit index, CFI, > 0.90; Tucker-Lewis index, TLI, > 0.90) and the loadings of all items were sufficiently large (loading > 0.3).
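
The two item-coherence statistics named above can be sketched as follows. This is a minimal illustration rather than the analysis code used in the study: the function names and the toy responses are hypothetical, and the item-total correlation is assumed to be the corrected form, in which each item is correlated with the sum of the remaining items.

```python
from statistics import mean, variance


def cronbach_alpha(items):
    """Cronbach's alpha for `items`, a list of per-item score lists
    (one score per respondent, same respondent order in every list)."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]
    item_variance_sum = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    ss_x = sum((a - mx) ** 2 for a in x)
    ss_y = sum((b - my) ** 2 for b in y)
    return cov / (ss_x * ss_y) ** 0.5


def corrected_item_total(items):
    """Correlate each item with the total of all *other* items."""
    totals = [sum(scores) for scores in zip(*items)]
    return [
        pearson_r(scores, [t - s for t, s in zip(totals, scores)])
        for scores in items
    ]


# Hypothetical responses from five people to three items rated 0-4.
toy_items = [
    [0, 1, 2, 3, 4],
    [1, 1, 2, 3, 4],
    [0, 2, 2, 4, 4],
]
alpha = cronbach_alpha(toy_items)
item_total = corrected_item_total(toy_items)
# Apply the criteria stated in the text: alpha >= 0.7, item-total r > 0.3.
meets_criteria = alpha >= 0.7 and all(r > 0.3 for r in item_total)
```

Subtracting each item from the total before correlating avoids inflating the coefficient by correlating an item with itself, which matters most for short item sets such as the three- and four-item areas of concern.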

Finally, bi-factor analysis was used to examine sufficient (or “essential”) unidimensionality at the domain level (i.e., overall PCF) (39, 40). We have used this approach in the past to demonstrate that, while cancer-related fatigue manifests itself in a number of different ways (e.g., physical fatigue, mental fatigue), it is essentially unidimensional and can therefore be described using a single score (39). Bi-factor analysis includes two classes of factors: a general factor, defined by loadings from all of the items in the scale, and local factors, each defined by loadings from a pre-specified group of items related to that sub-domain (40–43). The general and local factors are orthogonal, as the local factors capture the contribution that is over and above the general factor. This approach permits each parameter in the model to be uniquely estimable, so that theoretically there should not be problems with identification. Items are considered sufficiently unidimensional when the standardized loadings of all items on the general factor are salient (i.e., >0.3). Similarly, if the loadings of all the items on a local factor are salient, this indicates that the local factor is well defined even in the presence of the general factor, in which case it is more appropriate to report scores of local factors separately (39,40). The bi-factor analysis was conducted using Mplus version 3 (42), with a polychoric correlation matrix and weighted least squares estimation with mean and variance adjustment, which is appropriate for the evaluation of ordered categorical data.
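
The structure described in this paragraph can be written compactly. The following is a notational sketch only: the symbols are introduced here for illustration and are not taken from the cited sources.

```latex
x_i^{*} = \lambda_{iG}\, G + \lambda_{ik}\, F_{k(i)} + \varepsilon_i,
\qquad \operatorname{Cov}(G, F_k) = 0 \ \text{for every local factor } k
```

Here $x_i^{*}$ is the continuous latent response assumed to underlie ordinal item $i$, $G$ is the general factor (overall PCF), $F_{k(i)}$ is the local factor for the sub-domain to which item $i$ was assigned a priori, $\lambda_{iG}$ and $\lambda_{ik}$ are the corresponding standardized loadings, and $\varepsilon_i$ is the item-specific residual. Under the criterion stated above, an item is salient on a factor when its standardized loading on that factor exceeds 0.3.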

Additionally, to better understand the measurement properties of cognitive capability (i.e., positively framed) and cognitive deficiency (i.e., negatively framed) items, we examined the sociodemographic correlates of both classes of item. SAS 9.1 (44) was used for these analyses.

Results

All negatively-worded items were reverse-scored; that is, higher scores on the FACT-Cog items always represent better function. Descriptive statistics showed that all response categories (i.e., 0=never; 4=several times a day) were used for each item, with means ranging from 2.2 (SD=1.5) for the item “My thinking is fast as always” to 3.9 (SD=0.4) for the item “accidentally missed medical appointments”.
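
The reverse-scoring rule can be illustrated with a short sketch. The function name is hypothetical, and the rule (subtracting the raw rating from the scale maximum) is an assumption consistent with the 0 to 4 frequency scale described earlier, not a transcription of the official scoring instructions.

```python
MAX_RATING = 4  # frequency scale: 0 = Never ... 4 = Several times a day


def score_item(raw_rating, negatively_worded, max_rating=MAX_RATING):
    """Return the scored value so that higher always means better function."""
    if not 0 <= raw_rating <= max_rating:
        raise ValueError(f"rating must be between 0 and {max_rating}")
    # Deficit items: frequent problems (high raw rating) map to a low score.
    return max_rating - raw_rating if negatively_worded else raw_rating


# A deficit item answered "Never" (0) receives the best possible score.
best_deficit_score = score_item(0, negatively_worded=True)
# A capability item keeps its raw rating.
capability_score = score_item(3, negatively_worded=False)
```

Under this convention, an item mean close to 4 on a deficit item, such as the missed-appointments item above, indicates that the problem was rarely reported.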

Results for Seven “Areas of Concern”

Table 2 shows analysis results for each area of concern. Cronbach’s alphas ranged from 0.49 (concentration) to 0.89 (changes in cognitive function). However, positively-framed items measuring cognitive capability had low item-total correlations (range: 0–0.17) in all but one area of concern, and low Spearman rho (0–0.25) with negatively-framed items measuring cognitive deficit. The one exception to this pattern was changes in cognitive function, which includes three capability items with item-total correlations of either 0.47 or 0.51. However, the Spearman rho of these three capability items with the remaining deficit items of the same area of concern ranged from 0.13 to 0.25, whereas the rho among these three items ranged from 0.86 to 0.90. Therefore, we concluded that the moderate item-total correlations were the result of including these three highly correlated capability items in the same area of concern, not of their correlation with other items measuring cognitive deficits. As shown in Table 2, alpha values increased when items measuring cognitive capability were excluded.

Table 2.

Analysis results: Area of concern

| Area of Concern | Item n (Negative) | Item n (Positive) | Alpha of all items | Alpha (negatively-worded deficiency items only) | Item-total correlation (negatively-worded deficiency items only) |
|---|---|---|---|---|---|
| Mental acuity | 3 | 1 | 0.72 | 0.92 | 0.81–0.84 |
| Concentration | 3 | 1 | 0.49 | 0.69 | 0.48–0.51 |
| Memory | 5 | 2 | 0.70 | 0.81 | 0.43–0.72 |
| Verbal fluency | 6 | 1 | 0.83 | 0.91 | 0.65–0.81 |
| Functional interference | 6 | 1 | 0.77 | 0.81 | 0.32–0.66 |
| Noticeability | 3 | 1 | 0.74 | 0.87 | 0.71–0.81 |
| Changes in cognitive functions | 6 | 3 | 0.89 | 0.93 | 0.63–0.87 |

Because alpha generally increases with the number of items in a scale, the fact that higher alpha values were obtained with fewer items indicated that capability items and deficit items should not be scaled together. Consequently, we regrouped items into three sub-domains. Positively-framed cognitive capability items, originally grouped with negatively-framed deficit items under the cognitive capacity item category, were used to form a distinct sub-domain: cognitive capabilities. The internal consistency of these 10 capability items was high (α = 0.91), with item-total correlations ranging from 0.48 to 0.74. The cognitive deficit items that remained under the original cognitive capacity category were grouped into a separate, renamed sub-domain: cognitive deficits. To reflect the specific nature of the original cognitive performance item category, the remaining item grouping was renamed consequences of cognitive deficits.
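
The reasoning about alpha and scale length can be made concrete with the standardized form of alpha, which depends only on the number of items and their mean inter-item correlation. The sketch below uses hypothetical correlations chosen for illustration; they are not estimates from the study data.

```python
def standardized_alpha(n_items, mean_r):
    """Standardized Cronbach's alpha from the item count and the
    mean inter-item correlation."""
    return n_items * mean_r / (1 + (n_items - 1) * mean_r)


# Three deficit items that correlate 0.8 with one another (hypothetical).
deficit_pairs = [0.8, 0.8, 0.8]
alpha_deficit_only = standardized_alpha(3, sum(deficit_pairs) / len(deficit_pairs))

# Add one capability item that correlates only 0.1 with each deficit item.
all_pairs = deficit_pairs + [0.1, 0.1, 0.1]
alpha_with_capability = standardized_alpha(4, sum(all_pairs) / len(all_pairs))

# Adding an item lowers alpha here because the mean correlation drops sharply.
alpha_dropped = alpha_with_capability < alpha_deficit_only
```

With a fixed mean inter-item correlation, a longer scale always yields a higher alpha, so a drop after adding an item can only come from that item correlating weakly with the rest.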

Results for Three Sub-Domains

We then tested the unidimensionality of the three sub-domains: cognitive deficits, consequences of cognitive deficits, and cognitive capabilities. CFA results (shown in Table 3) supported the unidimensionality of each sub-domain: CFI = 0.90, 0.92, and 0.92 and TLI = 0.97, 0.98, and 0.95 for cognitive deficits, consequences of cognitive deficits, and cognitive capabilities, respectively. Although RMSEA ranged from 0.16 to 0.38, given the acceptable TLI and CFI values, we still considered each sub-domain to be unidimensional.
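
For readers less familiar with these fit indices, the sketch below gives their textbook definitions in terms of the model and baseline chi-square statistics. It is illustrative only: the chi-square values are hypothetical, and software that uses mean- and variance-adjusted weighted least squares computes the indices from adjusted statistics, so the values in Table 3 cannot be reproduced from these formulas alone.

```python
import math


def cfi(chi2_model, df_model, chi2_base, df_base):
    """Comparative fit index from model and baseline chi-square."""
    d_model = max(chi2_model - df_model, 0.0)
    d_base = max(chi2_base - df_base, 0.0)
    denom = max(d_model, d_base)
    return 1.0 if denom == 0 else 1.0 - d_model / denom


def tli(chi2_model, df_model, chi2_base, df_base):
    """Tucker-Lewis index (non-normed fit index)."""
    ratio_base = chi2_base / df_base
    ratio_model = chi2_model / df_model
    return (ratio_base - ratio_model) / (ratio_base - 1.0)


def rmsea(chi2_model, df_model, n):
    """Root mean square error of approximation for sample size n."""
    return math.sqrt(max(chi2_model - df_model, 0.0) / (df_model * (n - 1)))


# Hypothetical chi-square values; only n = 393 comes from the study.
example = dict(chi2_model=500.0, df_model=100, chi2_base=5000.0, df_base=120)
example_cfi = cfi(**example)
example_tli = tli(**example)
example_rmsea = rmsea(example["chi2_model"], example["df_model"], n=393)
```

In this hypothetical case CFI and TLI exceed 0.90 while RMSEA is about 0.10. CFI and TLI are relative to a baseline model, whereas RMSEA is an absolute measure of misfit per degree of freedom, which is why the two kinds of index can disagree.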

Table 3.

Dimensionality testing results: Local factors (grouped sub-domains)

| Local Factor | Item n | Alpha | Item-total correlation | CFI | TLI | RMSEA |
|---|---|---|---|---|---|---|
| Cognitive Deficits¹ | 17 | 0.94 | 0.43–0.79 | 0.90 | 0.97 | 0.167 |
| Consequences of cognitive deficits² | 15 | 0.92 | 0.29–0.86 | 0.92 | 0.98 | 0.155 |
| Cognitive Capabilities³ | 10 | 0.91 | 0.48–0.74 | 0.92 | 0.95 | 0.380 |

¹ Cognitive Deficits consists of items measuring Mental acuity, Concentration, Memory and Verbal fluency.

² Consequences of cognitive deficits consists of items measuring Functional interference, Other people notice, and Changes in cognitive functions.

³ Cognitive Capabilities consists of all positively-worded items.

Results for Entire Domain (Bi-Factor Model)

We then examined the general PCF domain for sufficient unidimensionality by using bi-factor analysis. We conceptualized the general factor as “overall perceived cognitive function” and local factors as the previously described sub-domains: cognitive deficits; consequences of cognitive deficits; and cognitive capabilities. All items were loaded on both the general factor and their own local factor.

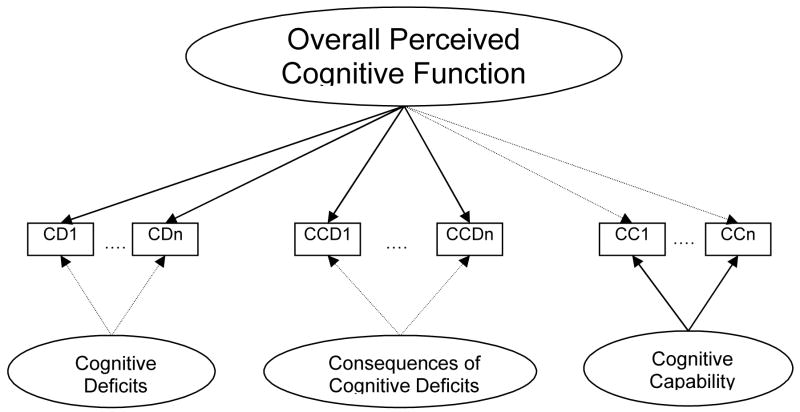

Table 4 shows results of the bi-factor analysis, which compares factor loadings of all items on the general factor and on the sub-domain factors. Acceptable CFI (0.92) and TLI (0.98) values were obtained in this analysis. The RMSEA of 0.120, lower than when local factors were considered individually, indicated that the general factor model fit the data better. Figure 1 depicts the relationship between local factors (i.e., sub-domains) and the general factor.

Table 4.

Factor loadings of each item to the general factor and to its associated local factor.

| Item | Local Factor/Sub-Domain | Area of Concern | Loading on Overall PCF¹ | Loading on Local Factor¹ |
|---|---|---|---|---|
| COGA1 | Cognitive Deficits | Mental Acuity | 0.84 | 0.26 |
| COGA3 | | | 0.89 | 0.30 |
| COGA4 | | | 0.86 | 0.37 |
| COGC5 | | Concentration | 0.65 | 0.07 |
| COGC6 | | | 0.69 | −0.18 |
| COGC7 | | | 0.76 | 0.30 |
| COGM8 | | Memory | 0.68 | −0.19 |
| COGM9 | | | 0.59 | −0.23 |
| COGM10 | | | 0.62 | −0.15 |
| COGM11 | | | 0.78 | −0.21 |
| COGM12 | | | 0.83 | −0.13 |
| COGV13 | | Verbal Fluency | 0.83 | −0.28 |
| COGV14 | | | 0.85 | −0.25 |
| COGV15 | | | 0.89 | −0.21 |
| COGV16 | | | 0.70 | −0.25 |
| COGV17A | | | 0.85 | −0.10 |
| COGV17B | | | 0.85 | −0.09 |
| COGF19 | Consequences of Cognitive Deficits | Functional Interference | 0.76 | −0.09 |
| COGF20 | | | 0.66 | −0.13 |
| COGF21 | | | 0.50 | −0.15 |
| COGF23 | | | 0.80 | 0.28 |
| COGF24 | | | 0.73 | 0.03 |
| COGF25 | | | 0.76 | 0.28 |
| COGO26 | | Noticeability | 0.75 | 0.32 |
| COGO27 | | | 0.74 | 0.34 |
| COGO28 | | | 0.78 | 0.41 |
| COGC29 | | Changes in cognitive function | 0.86 | 0.31 |
| COGC31 | | | 0.84 | 0.44 |
| COGC32 | | | 0.86 | 0.40 |
| COGC33A | | | 0.89 | 0.31 |
| COGC33B | | | 0.85 | 0.27 |
| COGC33C | | | 0.69 | 0.11 |
| COGPA1 | Cognitive Capabilities | | 0.10 | 0.85 |
| COGPC1 | | | 0.04 | 0.85 |
| COGPM1 | | | 0.02 | 0.80 |
| COGPM2 | | | −0.01 | 0.84 |
| COGPV1 | | | −0.10 | 0.61 |
| COGPF1 | | | 0.23 | 0.75 |
| COGPO1 | | | 0.22 | 0.73 |
| COGPCH1 | | | 0.34 | 0.89 |
| COGPCH2 | | | 0.33 | 0.90 |
| COGPCH3 | | | 0.30 | 0.88 |

¹ Standard errors of each loading are between 0.01 and 0.06.

Figure 1.

Relationship between the general factor (overall perceived cognitive function) and local factors (cognitive deficits, consequences of cognitive deficits, and cognitive capabilities)

Note: 1. Bi-factor analysis results show that all Cognitive deficits and Consequences of cognitive deficits items have higher loadings on the general factor, Overall perceived cognitive function (solid lines), than on their own local factors (dashed lines), whereas all Cognitive capabilities items have higher loadings on their local factor (solid lines) than on the general factor (dashed lines). Comparisons of item loadings are shown in Table 4.

2. Model fit: CFI=0.92 and TLI=0.98; RMSEA=0.120

Cognitive deficits and consequences of cognitive deficits items had higher loadings on the general factor (from 0.50 to 0.89; shown as solid lines in Figure 1) than on the local factors (from −0.28 to 0.44; shown as dashed lines in Figure 1). Although some items had loadings ≥0.3 on both the general and local factor (three measuring cognitive deficits and seven measuring consequences of cognitive deficits), their loadings on the general factor were much higher than those on the local factors (loading discrepancy ranged from 0.37 to 0.59). On the other hand, items of the perceived cognitive capabilities sub-domain had higher loadings on the local factor (from 0.61 to 0.90; shown as solid lines in Figure 1) than on the general factor (from −0.10 to 0.34; shown as dashed lines in Figure 1). For cognitive deficits and consequences of cognitive deficits, the negative loadings of some items on the local factors indicated that our a priori theoretical model of how the local factors might relate to the items was not compatible with the data. However, this does not have any bearing on the validity of treating items in the cognitive deficits and consequences of cognitive deficits sub-domains together as sufficiently unidimensional for later applications requiring unidimensionality, such as Item Response Theory (IRT) models.

Distinct from items measuring cognitive deficiency, perceived cognitive capability items loaded higher on the local factor than on the general factor (loading discrepancy range: −0.50 to −0.85). In other words, while perceived cognitive capability items perform well together, they do not measure the same construct as perceived cognitive deficiency. From a psychometric perspective, cognitive deficiency and cognitive capability are separate constructs under the umbrella of Perceived Cognitive Function.
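
The comparison of general and local loadings described above can be expressed as a simple decision rule. The sketch below applies it to five rows copied from Table 4; the function name and the tie-breaking logic are hypothetical, and the 0.3 threshold is the salience criterion given in the Analysis section (treated here as inclusive, following the "≥0.3" wording used in the Results).

```python
SALIENT = 0.3  # salience criterion for standardized loadings


def dominant_factor(general, local, threshold=SALIENT):
    """Name the factor on which an item loads saliently and more strongly."""
    if general >= threshold and general > local:
        return "general"
    if local >= threshold and local > general:
        return "local"
    return "neither"


# (general loading, local loading) for selected items from Table 4.
loadings = {
    "COGA3": (0.89, 0.30),
    "COGM8": (0.68, -0.19),
    "COGO28": (0.78, 0.41),
    "COGPM2": (-0.01, 0.84),
    "COGPCH1": (0.34, 0.89),
}

classification = {
    item: dominant_factor(g, l) for item, (g, l) in loadings.items()
}
# Loading discrepancy as reported in the text: general minus local.
discrepancy = {item: round(g - l, 2) for item, (g, l) in loadings.items()}
```

For these rows, the three deficiency items are classified under the general factor and the two capability items under their local factor, mirroring the pattern summarized in Figure 1.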

Other Related Analyses

The magnitude of the correlation between cognitive capability and cognitive deficiency was negligible: Pearson r=0.106, P=0.035; Spearman’s rho=0.158, P=0.002. Cognitive capability and deficiency scores were not significantly correlated with age, r=0.059 (P=0.261) and −0.014 (P=0.790), respectively. There was no significant difference in cognitive deficiency scores by gender (t=1.65, P=0.10) or education (college degree or higher compared to less than a college degree; t=−0.32, P=0.75). However, females (vs. males) and patients who had at least a college degree (compared to those who did not) had better cognitive capability scores, t=3.28 (P=0.001) and t=5.03 (P<0.001), respectively. Patients who had FSIQ scores available were divided into four groups (2 SDs below norm, 1 SD below norm, 1 SD above norm, 2 SDs above norm). There was no statistically significant difference among groups in scores on items measuring cognitive deficiency, F(3,118)=0.91, P=0.44, or capability, F(3,118)=1.41, P=0.24. The above results suggest that patients perceived their cognitive deficiency and cognitive capability independently, regardless of FSIQ. These results strengthened our conclusion that cognitive deficiency and capability are two distinct concepts.

Patients with better scores on the Emotional Well-Being (EWB) scale of the FACT-G (35) (available n=268) tended to report less cognitive deficiency and better capability, with Spearman’s rho = 0.41 and 0.24, respectively (both P<0.001). Similar results were found for the relationship between the SF-36 mental component score, MCS (available n=99), and cognitive deficiency, rho=0.35, P<0.001, but not cognitive capability, rho=−0.25, P=0.014. Patients (n=62) with less cognitive deficiency and better cognitive capability reported less depression and anxiety as measured by the HADS: rho = −0.69 and −0.49, respectively, for deficiency, and rho = −0.37 and −0.32, respectively, for capability.

Discussion

Cancer and cancer treatment can have a deleterious impact on cognition. Only in the past 10–15 years have clinical researchers examined and documented this phenomenon in any rigorous way (8,45,46). However, chemotherapy-associated cognitive decline and the mechanisms underlying this phenomenon are not yet well understood. A valid PCF measurement tool can assist clinicians in communicating with their patients about their cognitive concerns and can serve as a useful screening tool to identify patients who may benefit from a referral for a more comprehensive neuropsychological evaluation. Towards this end, it is crucial to understand the dimensionality of PCF in order to determine whether it is appropriate to report a single summary score or multiple scores tapping relevant content areas separately (39). Based on evidence from internal consistency statistics, confirmatory factor analytic techniques (including bi-factor analysis) and a negligible correlation between cognitive capability and cognitive deficiency items, we conclude that these sets of items are perceived by cancer patients as distinct factors and their scores should be reported separately.

Results of this study were somewhat unexpected. Positively-worded (i.e., cognitive capability) items were initially added to an earlier version of the FACT-Cog to minimize a ceiling effect, a common practice in test/scale construction. Our experience with other health-related quality of life measures has shown that such a strategy is valid at times. For example, we have shown that vitality or energy items (i.e., positively-worded “fatigue” items) tap the same construct as fatigue items; the added energy items appeared to cover the higher end (i.e., less fatigue) of the symptom continuum (47). On the other hand, negatively-worded illness impact items did not seem to measure the same construct as positively-worded illness impact items. In fact, similar to our present findings, the relationship between positive and negative illness impact items was found to be orthogonal (48). We reasoned that our findings in perceived cognitive function and illness impact, unlike cancer-related fatigue, may share similarities with the measurement of affect, where positive and negative aspects are essentially independent (49–51). We therefore conclude that there are two relatively unrelated concepts that comprise perceived cognitive function: deficiency, defined as perceived cognitive deficits and the consequences of those difficulties, and capability, including items that tap self-efficacy and confidence. At this time, we cannot completely rule out the possibility that method variance captured by the local factors defines the distinction we have made between cognitive capability and cognitive deficiency items, and so results from the present analyses require replication.

Previous research has suggested that depression and anxiety may have strong associations with subjective memory difficulties. Neuropsychological test performance may not be associated with patient-reported cognition after controlling for the impact of emotional distress (28). In this study, for those with available data, we found an association between the cognitive deficiency scale and mood measures. Yet, similar correlations with cognitive capability were inconsistent. It is somewhat difficult to know whether the different pattern of results for cognitive deficiency and cognitive capability is a result of true differences in the subscales or an issue related to the different instruments used to assess emotional health symptoms (e.g., EWB subscale of FACT-G vs. MCS of SF-36). The implementation of initiatives such as NIH PROMIS may help to standardize such assessments, making such comparisons more straightforward. Nonetheless, it is possible that PCF may reflect emotional distress more than cognitive dysfunction, as measured by performance-based measures.

Even so, we believe that PCF is an important patient-reported outcome in its own right. Of note, even when mood symptoms were associated with the PCF subscale, the shared variance between the two concepts was not substantial. Our PCF measure appears to assess something above and beyond symptom distress and taps concerns of importance to cancer patients. Some evidence suggests that PCF instruments may be associated with brain changes detectable using structural or functional neuroimagery (23–31). In addition to the study conducted by Saykin and colleagues (26) as mentioned earlier, de Groot et al. (25) found that cognitive complaints (i.e., cognitive deficiency) preceded measurable cognitive dysfunction or even dementia. A dose-dependent pattern was suggested: at the low end of the white matter lesions (WML) severity distribution are subjects with no reported cognitive deficiency and good cognitive performance, followed by those with reported cognitive deficiency but without cognitive dysfunction on neuropsychological testing, and finally those with reported progression of cognitive deficiency during the last five years and measurable cognitive dysfunction. Cognitive deficiency might be an early warning sign related to progression of WML and imminent cognitive decline. While the results from Saykin et al. and de Groot et al. are compelling, we do not claim that PCF is a better measure of cognition than neuropsychological tests; rather, PCF may hold promise, in specific circumstances, as a marker of structural or functional changes in the brain.

A psychometrically sound PCF scale will assist in our understanding of how patients’ self-reported cognition relates to objective performance and to other important correlates, such as emotional distress. For the present samples, we did not have information on patients’ objective neuropsychological test performance to compare with PCF scores. However, to help elucidate this important issue, we plan to apply a multi-trait, multi-method approach (52) to explore the construct validity of PCF with longitudinal data currently being collected. Such a systematic approach will aid in our understanding of what we are measuring when we ask patients about their cognitive functioning.

A few other questions remain unanswered. Patients did not differ with respect to their scores on the cognitive deficiency items based on sex, education, or IQ. However, females and college-educated patients had better cognitive capability scores than the comparison groups. Interestingly, for those patients with IQ estimates, there were no differences between groups on cognitive capability items. The underlying reason for these group differences is not yet clear. To our knowledge, there are no published reports documenting gender differences or education effects on perceived cognitive capability. Future studies should be conducted to further understand potential mediating or moderating factors influencing perceived cognitive function (both deficiency and capability).

Although additional research is necessary to better understand what is being measured by cognitive capability items, there are some interesting potential applications for this sub-domain of PCF items. For example, it may be the case that cognitive capability items are more responsive to cognitive improvement (e.g., post-chemotherapy), compared to deficiency items, which may be more responsive to cognitive injuries. If so, capability and deficiency items could serve as complementary, but distinguishable indexes of change. Divergent and convergent validity studies using both classes of PCF items may help gauge the degree to which these items tap distinguishable concepts.

The current sample was well-educated, with nearly 60% having at least a college degree. Participants with more educational attainment scored better on cognitive capability items, while no significant differences were found between patients with different levels of FSIQ scores. It is unclear what it is about educational attainment that influences patients’ perceptions. Future studies that recruit individuals with a greater range in education level are needed to better address such issues. We also note that perceived cognitive function scores are not normally distributed; however, we do not expect that this affected the resulting factor pattern. Skewed responses on Likert-type scale items do not mean that the resulting factors must be skewed. The observed non-normality may simply be due to extremeness of the item wording, which is a central concept of Item Response Theory models. In Item Response Theory, we prefer to include items with different degrees of endorsement in order to calibrate them on the construct being measured (in this study, perceived cognitive function) (47,53).
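The point about item extremeness can be illustrated with a minimal sketch. It assumes a dichotomous Rasch model, which is a simplification because the FACT-Cog items use a 5-point rating scale, and a hypothetical symmetric grid of latent trait values. The function names and difficulty values are illustrative and are not taken from the study data.

```python
import math


def rasch_probability(theta, difficulty):
    """Probability of endorsing an item under a dichotomous Rasch model."""
    return 1.0 / (1.0 + math.exp(-(theta - difficulty)))


def expected_endorsement(thetas, difficulty):
    """Average endorsement probability across a set of latent trait values."""
    return sum(rasch_probability(t, difficulty) for t in thetas) / len(thetas)


# Hypothetical latent trait values (in logits), symmetric around 0.
thetas = [x / 10.0 for x in range(-30, 31)]

# Illustrative item difficulties (assumed, not estimated from the study).
for label, difficulty in [("moderate item", 0.0), ("extreme item", 2.5)]:
    rate = expected_endorsement(thetas, difficulty)
    print(label, round(rate, 3))
```

Under these assumptions, the symmetric trait distribution yields a balanced endorsement rate for the moderately worded item and a lopsided rate for the extremely worded item, so skewed item responses need not imply a skewed underlying trait.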

Furthermore, the samples for the present analysis were restricted to patients with cancer, as there is a growing interest in cognitive decrements due to either the disease itself or its treatment, such as chemotherapy (i.e., chemo-brain). However, the actual item content does not reflect symptoms unique or specific to the cancer experience. Nonetheless, additional studies are needed in order to cross-validate the factor structure of PCF in other populations. In addition, while the tested items were developed via individual interviews and focus groups, these items do not yet fully cover all relevant constructs within cognition; for example, executive function and multitasking are not queried, and the number of deficiency and capability items is not balanced. Using results of this study, our team is currently working on revising the PCF item bank under the Cancer PROMIS supplement (CaPS) as mentioned earlier. We hope that a valid and clinically meaningful PCF measure can serve as a foundation for computerized adaptive testing (CAT), which can provide brief yet precise assessments in busy clinics. Routine CAT-based PCF assessment holds promise as an efficient screening tool for patients at risk for developing cognitive dysfunction.

In conclusion, this paper examined the dimensionality of perceived cognitive function in cancer patients. Based on the convergence of several analyses, we concluded that perceived cognitive deficiency and capability are two distinct concepts and should be scored separately. The establishment of sufficient dimensionality is an initial step towards further understanding PCF. Such an understanding holds promise for the development of better screening tools.

Acknowledgments

This study was supported by grants from the National Cancer Institute (CA60068, PI: D. Cella; CA125671, PI: J.-S. Lai), and the National Institutes of Health (U01 AR 052177-01; PI: D. Cella). Dr. Shapiro’s efforts were supported by a grant from the Department of Defense (DAMD 17-03-1-0138; PI: P.J. Shapiro) when she was on fellowship at the University of Pennsylvania School of Medicine.

Appendix. Functional Assessment of Cancer Therapy – Cognition (version 2)

A 5-point rating scale with an “in the past 7 days” time frame is used: 0=Never; 1=About once a week; 2=Two to three times a week; 3=Nearly every day; 4=Several times a day

| Item code | Item |
| --- | --- |
| CogA1 | I have had trouble forming thoughts |
| CogA3 | My thinking has been slow |
| CogA4 | My thinking has been foggy |
| CogPA1 | I have been able to think clearly |
| CogC5 | I have had trouble adding or subtracting numbers in my head |
| CogPC1 | I have been able to concentrate |
| CogC6 | I have made mistakes when writing down phone numbers |
| CogC7 | I have had trouble concentrating |
| CogM8 | I have had trouble remembering the name of a familiar person |
| CogM9 | I have had trouble finding my way to a familiar place |
| CogM10 | I have had trouble remembering where I put things, like my keys or my wallet |
| CogM11 | I have had trouble remembering whether I did things I was supposed to do, like taking a medicine or buying something I needed |
| CogM12 | I have had trouble remembering new information, like phone numbers or simple instructions |
| CogV13 | I have had trouble recalling the name of an object while talking to someone |
| CogV14 | Words I wanted to use have seemed to be on the “tip of my tongue” |
| CogV15 | I have had trouble finding the right word(s) to express myself |
| CogV16 | I have used the wrong word when I referred to an object |
| CogV17a | I have had trouble speaking fluently |
| CogV17b | I have had trouble saying what I mean in conversations with others |
| CogPV1 | I have been able to bring to mind words that I wanted to use while talking to someone |
| CogF19 | I have walked into a room and forgotten what I meant to get or do there |
| CogF20 | I have needed medical instructions repeated because I could not keep them straight |
| CogF21 | I have forgotten or accidentally missed medical appointments |
| CogPM1 | I have been able to remember things, like where I left my keys or my wallet |
| CogF23 | I have had to work really hard to pay attention or I would make a mistake |
| CogF24 | I have forgotten names of people soon after being introduced |
| CogPM2 | I have been able to remember to do things, like take medicine or buy something I needed |
| CogF25 | My reactions in everyday situations have been slow |
| CogPF1 | I am able to pay attention and keep track of what I am doing without extra effort |
| CogO26 | Other people have noticed that I had problems remembering information |
| CogO27 | Other people have noticed that I had problems speaking clearly |
| CogO28 | Other people have noticed that I had problems thinking clearly |
| CogPO1 | People think my mind is really sharp |
| CogC29 | It has seemed like my brain was not working as well as usual |
| CogC31 | I have had to work harder than usual to keep track of what I was doing |
| CogC32 | My thinking has been slower than usual |
| CogC33a | I have had to work harder than usual to express myself clearly |
| CogC33b | I have had more problems conversing with others |
| CogC33c | I have had to use written lists more often than usual so I would not forget things |
| CogPCH1 | My mind is as sharp as it has always been |
| CogPCH2 | My memory is as good as it has always been |
| CogPCH3 | My thinking is as fast as it has always been |
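Scoring the deficiency and capability items separately could be sketched as follows. This is a minimal illustration under stated assumptions and not the official FACT-Cog scoring procedure: it assumes that capability items are the ones whose code begins with “CogP” (as the positively-worded items listed above do), that a subscale score is a simple sum of the 0 to 4 ratings, and that no reverse-scoring is applied. The function names are hypothetical.

```python
def is_capability_item(item_code):
    """Assumed rule: capability item codes start with 'CogP'."""
    return item_code.startswith("CogP")


def score_pcf(responses):
    """Return separate summed scores for deficiency and capability items.

    `responses` maps item codes to ratings on the 0-4 scale.
    Unanswered items are simply left out of the mapping.
    """
    scores = {"deficiency": 0, "capability": 0}
    for item_code, rating in responses.items():
        if not 0 <= rating <= 4:
            raise ValueError(f"rating out of range for {item_code}: {rating}")
        key = "capability" if is_capability_item(item_code) else "deficiency"
        scores[key] += rating
    return scores


# Hypothetical responses for four of the items listed in this appendix.
example = {"CogA1": 1, "CogA3": 2, "CogPA1": 3, "CogPC1": 4}
print(score_pcf(example))
```

Keeping the two totals in separate fields, instead of reverse-scoring the capability items into one total, mirrors the two-factor interpretation proposed in the paper.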

References

1. Crook TH, Bartus RT, Ferris SH, et al. Age-associated memory impairment: proposed diagnostic criteria and measures of clinical change -- report of a National Institute of Mental Health work group. Dev Neuropsychol. 1986;2:261–276.
2. Levy R. Aging-associated cognitive decline. Working Party of the International Psychogeriatric Association in collaboration with the World Health Organization. Int Psychogeriatr. 1994;6(1):63–68.
3. Banos JH, LaGory J, Sawrie S, et al. Self-report of cognitive abilities in temporal lobe epilepsy: cognitive, psychosocial, and emotional factors. Epilepsy Behav. 2004;5(4):575–579. doi: 10.1016/j.yebeh.2004.04.010.
4. Allen CC, Ruff RM. Self-rating versus neuropsychological performance of moderate versus severe head-injured patients. Brain Inj. 1990;4(1):7–17. doi: 10.3109/02699059009026143.
5. Christodoulou C, Melville P, Scherl WF, et al. Perceived cognitive dysfunction and observed neuropsychological performance: longitudinal relation in persons with multiple sclerosis. J Int Neuropsychol Soc. 2005;11(5):614–619. doi: 10.1017/S1355617705050733.
6. American Psychiatric Association. Diagnostic and statistical manual of mental disorders. 4. Washington, DC: Author; 1994.
7. Anderson-Hanley C, Sherman ML, Riggs R, Agocha VB, Compas BE. Neuropsychological effects of treatments for adults with cancer: a meta-analysis and review of the literature. J Int Neuropsychol Soc. 2003;9(7):967–982. doi: 10.1017/S1355617703970019.
8. Jansen CE, Miaskowski C, Dodd M, Dowling G, Kramer J. A metaanalysis of studies of the effects of cancer chemotherapy on various domains of cognitive function. Cancer. 2005;104(10):2222–2233. doi: 10.1002/cncr.21469.
9. Lezak MD, Howieson DB, Loring DW, Hannay HJ, Fischer JS. Neuropsychological assessment. 4. New York: Oxford University Press; 2004.
10. Spreen O, Strauss E. A compendium of neuropsychological tests: Administration, norms, and commentary. New York: Oxford University Press; 2006.
11. Martinez-Aran A, Vieta E, Colom F, et al. Do cognitive complaints in euthymic bipolar patients reflect objective cognitive impairment? Psychother Psychosom. 2005;74(5):295–302. doi: 10.1159/000086320.
12. Burdick KE, Endick CJ, Goldberg JF. Assessing cognitive deficits in bipolar disorder: are self-reports valid? Psychiatry Res. 2005;136(1):43–50. doi: 10.1016/j.psychres.2004.12.009.
13. Hiller W, Goebel G, Rief W. Reliability of self-rated tinnitus distress and association with psychological symptom patterns. Br J Clin Psychol. 1994;33(Pt 2):231–239. doi: 10.1111/j.2044-8260.1994.tb01117.x.
14. Moritz S, Woodward TS, Krausz M, Naber D Persist Study Group. Relationship between neuroleptic dosage and subjective cognitive dysfunction in schizophrenic patients treated with either conventional or atypical neuroleptic medication. Int Clin Psychopharmacol. 2002;17(1):41–44. doi: 10.1097/00004850-200201000-00007.
15. Hermann DJ. Know thy memory: the use of questionnaires to assess and study memory. Psychol Bull. 1982;92:434–452.
16. Jorm AF, Christensen H, Henderson AS, et al. Complaints of cognitive decline in the elderly: A comparison of reports by subjects and informants in a community survey. Psychol Med. 1994;24:365–374. doi: 10.1017/s0033291700027343.
17. American Psychological Association. Guidelines for the evaluation of dementia and age-related cognitive decline. Am Psychol. 1998;53(12):1298–1303.
18. Bolla KI, Lindgren KN, Bonaccorsy C, Bleecker ML. Memory complaints in older adults. Arch Neurol. 1991;48:61–64. doi: 10.1001/archneur.1991.00530130069022.
19. Kahn RL, Zarit SH, Hilbert NM, Niederehe G. Memory complaints and impairment in the aged. Arch Gen Psychiatry. 1975;32:35–39. doi: 10.1001/archpsyc.1975.01760300107009.
20. Zimprich D, Martin M, Kliegel M. Subjective cognitive complaints, memory performance, and depressive affect in old age: a change-oriented approach. Int J Aging Hum Dev. 2003;57(4):339–366. doi: 10.2190/G0ER-ARNM-BQVU-YKJN.
21. Blazer DG, Hays JC, Fillenbaum GG, Gold DT. Memory complaint as a predictor of cognitive decline: a comparison of African American and White elders. J Aging Health. 1997;9(2):171–184. doi: 10.1177/089826439700900202.
22. Jorm AF, Christensen H, Korten AE, et al. Do cognitive complaints either predict future cognitive decline or reflect past cognitive decline? A longitudinal study of an elderly community sample. Psychol Med. 1997;27(1):91–98. doi: 10.1017/s0033291796003923.
23. Lange G, Steffener J, Cook DB, et al. Objective evidence of cognitive complaints in chronic fatigue syndrome: a BOLD fMRI study of verbal working memory. Neuroimage. 2005;26(2):513–524. doi: 10.1016/j.neuroimage.2005.02.011.
24. Dufouil C, Fuhrer R, Alperovitch A. Subjective cognitive complaints and cognitive decline: consequence or predictor? The epidemiology of vascular aging study. J Am Geriatr Soc. 2005;53(4):616–621. doi: 10.1111/j.1532-5415.2005.53209.x.
25. de Groot JC, de Leeuw FE, Oudkerk M, et al. Cerebral white matter lesions and subjective cognitive dysfunction: The Rotterdam Scan Study. Neurology. 2001;56(11):1539–1545. doi: 10.1212/wnl.56.11.1539.
26. Saykin AJ, Wishart HA, Rabin LA, et al. Older adults with cognitive complaints show brain atrophy similar to that of amnestic MCI. Neurology. 2006;67(5):834–842. doi: 10.1212/01.wnl.0000234032.77541.a2.
27. Salinsky MC, Binder LM, Oken BS, et al. Effects of gabapentin and carbamazepine on the EEG and cognition in healthy volunteers. Epilepsia. 2002;43(5):482–490. doi: 10.1046/j.1528-1157.2002.22501.x.
28. Minett TS, Dean JL, Firbank M, English P, O’Brien JT. Subjective memory complaints, white-matter lesions, depressive symptoms, and cognition in elderly patients. Am J Geriatr Psychiatry. 2005;13(8):665–671. doi: 10.1176/appi.ajgp.13.8.665.
29. Ferguson RJ, McDonald BC, Saykin AJ, Ahles TA. Brain structure and function differences in monozygotic twins: possible effects of breast cancer chemotherapy. J Clin Oncol. 2007;25(25):3866–3870. doi: 10.1200/JCO.2007.10.8639.
30. Prichep LS, John ER, Ferris SH, et al. Prediction of longitudinal cognitive decline in normal elderly with subjective complaints using electrophysiological imaging. Neurobiol Aging. 2006;27(3):471–481. doi: 10.1016/j.neurobiolaging.2005.07.021.
31. McAllister TW, Sparling MB, Flashman LA, et al. Differential working memory load effects after mild traumatic brain injury. Neuroimage. 2001;14(5):1004–1012. doi: 10.1006/nimg.2001.0899.
32. Wagner L, Lai J-S, Cella D, Sweet J, Forrestal S. Chemotherapy-related cognitive deficits: Development of the FACT-Cog instrument. Ann Behav Med. 2004;27:S010.
33. Wagner LI, Sabatino T, Cella D, et al. Robert J, Lurie H. Cognitive function during cancer treatment: The FACT-Cog Study. Comprehensive Cancer Center of Northwestern University. 2005;X:10–15.
34. Linacre JM. A user’s guide to WINSTEPS/MINISTEP: Rasch-Model computer programs. Chicago, IL: Winsteps; 2005.
35. Cella D. The Functional Assessment of Cancer Therapy-Anemia (FACT-An) Scale: a new tool for the assessment of outcomes in cancer anemia and fatigue. Semin Hematol. 1997;34(3 Suppl 2):13–19.
36. Ware JE, Snow KK, Kosinski M. SF-36 Health Survey: Manual and interpretation guide. Lincoln, RI: QualityMetric Incorporated; 2000.
37. Brucker PS, Yost K, Cashy J, Webster K, Cella D. General population and cancer patient norms for the Functional Assessment of Cancer Therapy-General (FACT-G). Eval Health Prof. 2005;28(2):192–211. doi: 10.1177/0163278705275341.
38. Hu L-T, Bentler PM. Cut-off criteria for fit indexes in covariance structure analysis: conventional criteria versus new alternatives. Structural Equation Modeling. 1999;6(1):1–55.
39. Lai JS, Crane PK, Cella D. Factor analysis techniques for assessing sufficient unidimensionality of cancer related fatigue. Qual Life Res. 2006;15(7):1179–1190. doi: 10.1007/s11136-006-0060-6.
40. McDonald RP. Test theory: A unified treatment. Mahwah, NJ: Lawrence Erlbaum; 1999.
41. Gibbons R, Hedeker D. Full-information item bi-factor analysis. Psychometrika. 1992;57(3):423–436.
42. Muthen LK, Muthen BO. MPlus: Statistical analysis with latent variables. Los Angeles: Muthen & Muthen; 2004.
43. Chen FF, West SG, Sousa KH. A comparison of bifactor and second-order models of quality of life. Multivariate Behav Res. 2006;41(2):189–225. doi: 10.1207/s15327906mbr4102_5.
44. SAS Institute Inc. SAS/STAT, Version 8.2. Cary, NC: SAS Institute, Inc; 2002.
45. Meyers CA, Hess KR, Yung WK, Levin VA. Cognitive function as a predictor of survival in patients with recurrent malignant glioma. J Clin Oncol. 2000;18(3):646–650. doi: 10.1200/JCO.2000.18.3.646.
46. Wefel JS, Kayl AE, Meyers CA. Neuropsychological dysfunction associated with cancer and cancer therapies: a conceptual review of an emerging target. Br J Cancer. 2004;90(9):1691–1696. doi: 10.1038/sj.bjc.6601772.
47. Lai J-S, Cella D, Dineen K, Von Roenn J, Gershon R. An item bank was created to improve the measurement of cancer-related fatigue. J Clin Epidemiol. 2005;58(2):190–197. doi: 10.1016/j.jclinepi.2003.07.016.
48. Lai J-S, Rosenbloom S, Cella D, Wagner L. Building core HRQL measures: positive and negative psychological impact of cancer. Qual Life Res. 2004;13(9):1503.
49. Watson D, Tellegen A. Toward a consensual structure of mood. Psychol Bull. 1985;98(2):219–235. doi: 10.1037//0033-2909.98.2.219.
50. Watson D, Clark LA, Weber K, et al. Testing a tripartite model: II. Exploring the symptom structure of anxiety and depression in student, adult, and patient samples. J Abnorm Psychol. 1995;104(1):15–25. doi: 10.1037//0021-843x.104.1.15.
51. Tellegen A, Watson D, Clark LA. On the dimensional and hierarchical structure of affect. Psychol Sci. 1999;10(4):297–303.
52. Campbell DT, Fiske DW. Convergent and discriminant validation by the multitrait-multimethod matrix. Psychol Bull. 1959;56(2):81–105.
53. Lai J-S, Dineen K, Reeve B, et al. An item response theory based pain item bank can enhance measurement precision. J Pain Symptom Manage. 2005;30(3):278–288. doi: 10.1016/j.jpainsymman.2005.03.009.