Abstract

Objective

Describe the planning, implementation, and faculty perceptions of a classroom peer-review process, including an evaluation tool.

Design

A process for peer evaluation of classroom teaching and its evaluation tool were developed and implemented by a volunteer faculty committee within our department. At the end of the year, all faculty members were asked to complete an online anonymous survey to evaluate the experience.

Assessment

The majority of faculty members either agreed or strongly agreed that the overall evaluation process was beneficial for both evaluators and those being evaluated. Some areas for improvement related to the process and its evaluation tool also were identified.

Summary

Developing and implementing a peer-evaluation process for classroom teaching was found to be beneficial for faculty members, and the survey results affirmed the need for such a process and its continuation.

Keywords: peer evaluation, classroom, teaching, survey, faculty, assessment

INTRODUCTION

Student evaluation is the most common method used to assess teaching performance during classroom instruction.1 This method is reliable and valid for assessing teaching effectiveness;2-3 however, the potential for evaluation bias has also been noted in the literature.4-8 In one study, faculty members from the Bernard J. Dunn School of Pharmacy at Shenandoah University evaluated the relationship between students' grade expectations, their actual grades, and their evaluation of 138 courses taught over 4 academic years at their institution. The 5,399 students included in the study represented first- through third-year pharmacy students. Researchers found a strong positive correlation between the mean course evaluation scores and the students' actual and expected grades. This suggests a potential for students to positively evaluate faculty members who award them higher grades.8

The faculty perception and utilization of the information provided by student evaluations was examined by faculty members from Mercer University. A questionnaire consisting of 19 favorable and unfavorable statements about student evaluations and 22 changes in instructional activity resulting from student feedback was created. The faculty member was asked to evaluate the 19 statements and to describe if any of the 22 instructional activities “decreased,” “increased,” or did “not change” based on student evaluations. A validated questionnaire was mailed to 1,600 faculty members. Approximately 43% of questionnaires were included in the final analysis. Forty-six percent of respondents were pharmacy practice faculty members. Other faculty members were evenly distributed among the disciplines of medicinal chemistry, pharmaceutics, pharmacology, and social and administrative sciences. Respondents also were evenly distributed by rank (30% professor; 34% associate professor; 35% assistant professor), and 55% of the faculty members were tenured. The mean attitude score was 3.1 (using a 5-point Likert scale) representing a neutral attitude toward student evaluations. In particular, pharmacy faculty members disagreed (mean response <2.5) with statements suggesting that student evaluations make it easy to distinguish between good and poor teaching and that student ratings were the best procedure to evaluate classroom teaching. Out of the 22 changes in instructional activity, faculty members indicated that they increased activity in 17 of them. Overall, these results suggest that although pharmacy faculty members had a neutral or noncommittal attitude towards student evaluations, they are using the information provided by them to make instructional changes.9

To evaluate faculty perception on their promotion and tenure process, a questionnaire consisting of 29 commonly utilized promotion and tenure criteria was mailed to 300 randomly selected full-time faculty members at US colleges and schools of pharmacy. The faculty members were asked to indicate how much each criterion was currently emphasized for promotion and tenure and how much importance they think each criterion should receive. The distribution of rank was evenly divided among the respondents, with approximately half tenured. The majority of participants were in the pharmacy practice department (37%), and the other disciplines included 11% pharmacology, 15% pharmaceutics, 16% chemistry, 13% social and administrative sciences, and 8% other. “Peer evaluation either through a structured peer review committee or by input from peers within your institution with relevant backgrounds” was in the top 5 criteria the faculty members would like to see emphasized. Peer evaluation also had the largest discrepancy between what is currently valued and what faculty members would like to see emphasized.10 This information suggested that pharmacy faculty members may want to implement a peer-evaluation process to enhance their teaching performance and documentation. Peer evaluation also is supported by the Accreditation Council for Pharmacy Education (ACPE), with guidelines signifying the importance of appropriate input from peers in annual faculty evaluations.11 Finally, the American Association of Health Education (AAHE) supports peer evaluation with 4 main arguments: (1) student evaluations are not enough; (2) teaching entails learning from experience, and collaboration among faculty members is essential; (3) the regard of one's peers is highly valued; and (4) peer review puts faculty members in charge of the quality of their work.12

In response, some colleges of pharmacy are developing peer-evaluation processes, and at least 2 have been described in the literature.13,14 The first publication described the peer-evaluation process developed at the Shenandoah University Bernard J. Dunn School of Pharmacy.13 An instructional design faculty member created a peer review program based on faculty input collected during retreats and meetings. The faculty members preferred to keep their results from their department chairs, making the process more formative as opposed to a requirement for annual evaluation or promotion and tenure. The faculty volunteered to evaluate others or to be evaluated, and this could be done on an annual basis. In contrast, the University of Colorado Denver School of Pharmacy used an evidence-based approach to create its tool and process.14 Its process was required for all faculty members during prepromotion years 1, 3, and 6 and every 5 years following promotion, with results sent to the department chair. Assessment could be completed more often if the instructor or department chair desired. Assessors were asked to participate in a training program and were appointed to a 1-year term by the associate dean of academic affairs.

Upon review, there are distinct differences in the 2 programs. Each school evaluated the faculty's perception of their process and published these results. The faculty feedback, which highlights the programs' strengths and weaknesses, gives other colleges an opportunity to decide which procedures would best support their institution. We believe this is an important factor for successful development and implementation as the requirements of a peer-evaluation process should differ depending upon the purpose of its creation. In a 1996 survey of colleges of pharmacy, peer-evaluation procedural characteristics differed by whether they were mandatory (55.6% were mandatory), the frequency of reviews (25% were every year and 22% during the year of promotion and tenure review), the evaluation instruments (61% created their own), and number of reviews in a year (33.3% did one review per year).1

At the South Carolina College of Pharmacy-MUSC campus, student evaluations were the only method used to evaluate and document classroom teaching performance. Within the Department of Clinical Pharmacy and Outcome Sciences, student evaluations were not favored for many of the reasons described in the above paragraphs, such as the potential for evaluation bias and the difficulty in distinguishing between good and poor teaching. In addition, completion of student evaluations was not enforced; therefore, some students did not complete them, and those who did often scored their professor or courses on a Likert scale without including comments to explain their evaluation. Faculty members desired more constructive feedback to improve their teaching performance, and they wanted better documentation for their annual reviews, promotion, and tenure. In addition, the department chair felt he needed more information to distinguish among his faculty members' teaching performance. Prompted by a request from the department chair and faculty desire, the department created a formal, streamlined process for peer evaluation of classroom teaching with differences we highlight and propose as an alternative method. This description of the process and the evaluation tool, along with objective data from faculty members, adds to the limited published literature in this area and may serve as a resource for other colleges when developing their own processes.

DESIGN

A committee consisting of faculty members in our department with an interest in teaching evaluation created a process and tool for peer evaluation of classroom instruction with the ultimate goals of improving faculty teaching and documentation of teaching performance. First, a “Best Practices in Teaching” document that established desired teaching characteristics was written, with positions adapted from previously published information.15,16 This was necessary in order to streamline the faculty's vision and limit individual interpretations. An evaluation tool was then created to assess these “Best Practices in Teaching” characteristics. Specifically, the tool focused on 7 different domains: objectives, organization, lecture content, presentation style, interaction and rapport with students, handout and reading assignments, and examination. A Likert scale (ranging from 1 = does not meet expectations to 3 = meets expectations) was used to evaluate each skill, with additional space available for constructive comments. A guest lecturer from the College of Medicine with expertise in healthcare professional instruction gave a presentation to our department on peer evaluation. With his permission, the faculty members used the tool to evaluate the lecture in an effort to validate it. In addition, this experience allowed faculty members to provide feedback to the committee on the tool's practicality. The final versions of the tool were voted upon and approved by the department.

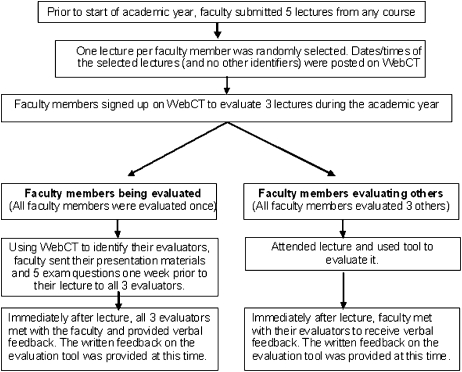

The process for evaluation was created by the committee with faculty feedback and ultimately voted upon and approved by the department (Figure 1). During its first year, the peer-evaluation process was coordinated by 2 faculty members from the committee. The implementation was as follows: each faculty member within the department (N = 17) submitted 5 lectures from any course prior to the 2006-2007 academic year and was evaluated during 1 of these randomly selected lectures by 3 of his or her peers. Each faculty member also was asked to serve as an evaluator 3 times during the year. An online calendar within a university-approved electronic database (WebCT, Blackboard Inc, Washington, DC) was used to organize the lecture dates and times (with no other identifiers) in a central location, and faculty members were asked to randomly sign up to evaluate 3 lectures in this database. The exclusion of names, lecture topics, and lecture locations made the sign-up process anonymous. Faculty members also were asked to use this database to identify their evaluators and send presentation materials (including slides and handouts) and 5 sample examination questions to them at least a week prior to their lecture. At the conclusion of each lecture, immediate written and verbal feedback was provided to the evaluated faculty member, and time was allowed for discussion of potential opportunities or methods for improvement. Faculty members were encouraged but not required to include these evaluations, along with their self-evaluations, in their annual performance review packet. The process was emphasized at monthly department meetings, with the support of the chair, to remind faculty members of requirements and completion timelines.

Figure 1. Peer Evaluation Process

To evaluate faculty member perceptions of the peer-evaluation process and tool, we designed an online anonymous survey instrument that included both open-ended questions and Likert scale items (responses ranged from 1 = strongly disagree to 5 = strongly agree). The survey instrument was distributed electronically to faculty members in September 2007 and results were compiled in October 2007. This study was approved by the Institutional Review Board for Research with Human Subjects at the Medical University of South Carolina (MUSC).

EVALUATION AND ASSESSMENT

Fifteen faculty members completed the survey instrument (88%). The faculty members participating were of various rank (27% were assistant professors, 53% associate professors, and 20% full professors) and had an average of 16 years of teaching experience (range 3-39 years). Six (40%) faculty members were nontenure track and 9 were tenure track (of these, 6 had achieved tenure). The majority of lectures evaluated were in the therapeutics course. Although all participating faculty members should have completed 3 evaluations of their peers, 1 faculty member did not complete any evaluations. The average number of evaluations completed was 2 (range 1-3). Nine (60%) of the faculty members had at least 1 evaluation completed during their lecture.
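The proportions reported in this paragraph can be re-derived from the stated counts. The following is a minimal sketch, not part of the original analysis: the helper name `pct` and the variable names are hypothetical, and only counts that appear explicitly in the text (17 department members, 15 respondents, 6 nontenure-track respondents, 9 respondents evaluated) are used.

```python
def pct(count, total):
    """Return count/total as a whole-number percentage."""
    return round(100 * count / total)

# Counts taken directly from the text; the names are illustrative only.
DEPARTMENT_SIZE = 17  # faculty members in the department
RESPONDENTS = 15      # faculty members who completed the survey

response_rate = pct(RESPONDENTS, DEPARTMENT_SIZE)  # survey response rate
nontenure_share = pct(6, RESPONDENTS)              # nontenure-track respondents
evaluated_share = pct(9, RESPONDENTS)              # respondents with >= 1 evaluation

print(f"Response rate: {response_rate}%")
print(f"Nontenure track: {nontenure_share}%")
print(f"Evaluated at least once: {evaluated_share}%")
```

With these counts, the sketch reproduces the 88%, 40%, and 60% figures given above.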

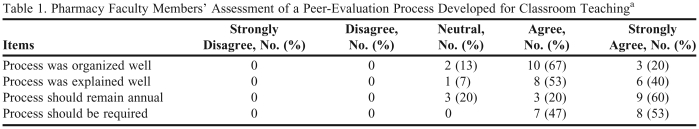

The majority of faculty members agreed or strongly agreed that the process was organized, well explained, should remain an annual process, and should be required for everyone. No faculty member disagreed or strongly disagreed with these comments (Table 1). The open-ended questions provided positive feedback as well as constructive criticism. Most faculty members appreciated the feedback provided by their evaluators. One faculty member commented on the learning experience gained by simply attending their peers' lectures. Constructive comments included decreasing the number of evaluators and requiring the process every 2 to 3 years instead of annually. Other comments suggested adding a new faculty orientation to the process and assuring that materials, especially examination questions, were provided to all evaluators a week in advance as instructed.

Table 1. Pharmacy Faculty Members' Assessment of a Peer-Evaluation Process Developed for Classroom Teaching (a)

(a) Measured on a 5-point Likert scale: 1 = strongly disagree, 2 = disagree, 3 = neutral, 4 = agree, 5 = strongly agree

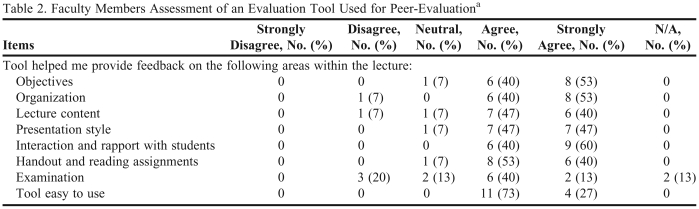

Of the faculty members who evaluated the tool, the majority agreed or strongly agreed that each item within the evaluation tool was appropriate (Table 2). Only 47% of individuals were able to evaluate all 3 lectures during the academic year. Most indicated that other responsibilities and lack of time were the major barriers. Sixty-seven percent of individuals were able to meet with the lecturer immediately after their presentation to provide constructive feedback. Within the open-ended comments, there was another mention of examination questions not being readily available, making it difficult to evaluate them. Another faculty member found it difficult to evaluate the “treats class members equitably” statement in the tool.

Table 2. Faculty Members' Assessment of an Evaluation Tool Used for Peer Evaluation (a)

(a) Measured on a 5-point Likert scale: 1 = strongly disagree, 2 = disagree, 3 = neutral, 4 = agree, 5 = strongly agree

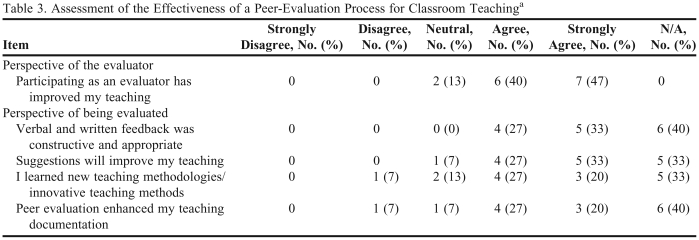

Of the 15 faculty members completing the survey instrument, 6 (40%) were not evaluated. This is because only 47% of faculty members completed the 3 evaluations required by the process described above. Of the 9 individuals who had been evaluated, the majority agreed or strongly agreed that feedback was constructive and appropriate and enhanced teaching documentation (Table 3). Overall, the majority of faculty members who were evaluated agreed or strongly agreed that the process improved teaching and introduced innovative teaching methods (Table 3). Of note, 1 of the 6 faculty members who were not evaluated contributed to the evaluation of “Suggestions will improve my teaching” and “I learned new teaching methodologies/innovative teaching methods” (Table 3). This faculty member commented on the ability of the process to improve their teaching just by participating as an evaluator. Fifty-three percent of individuals provided the evaluators with lecture objectives, other relevant educational materials, and examination questions a week prior to the lecture. Although general comments were positive, the post-observation meeting was identified as critical and as something that should be a higher priority for faculty members (67% of faculty members were able to fulfill this requirement). In fact, lack of or limited feedback immediately after the lecture was deemed the largest barrier to the overall process.

Table 3. Assessment of the Effectiveness of a Peer-Evaluation Process for Classroom Teaching (a)

(a) Measured on a 5-point Likert scale: 1 = strongly disagree, 2 = disagree, 3 = neutral, 4 = agree, 5 = strongly agree

Due to the limited number of classroom teaching evaluations, we could not conduct representative statistical testing. Further statistical analyses could only be conducted in a group of faculty members who had the same exposure within the process. Likewise, conducting subanalyses to evaluate the different perceptions between academic ranks, or correlating student evaluations and perceptions, should be considered in the future as we achieve more homogeneous participation and more evaluations.

DISCUSSION

The major strength of this peer-evaluation process was the involvement of the faculty in each step of its creation, development, and implementation. Having the faculty choose the “Best Practices in Teaching,” the process for peer evaluation, and the tool that was used for evaluation likely made the process better accepted, with 100% of evaluated faculty members commenting that the feedback was appropriate and constructive. This part of our process is similar to that of Shenandoah University, which also had immediate acceptance of its peer-evaluation process and tool.13 In addition, we required all faculty members to participate as evaluators.

This is different from the other published peer-evaluation processes.13,14 At the University of Colorado Denver School of Pharmacy, evaluators were assigned by the Associate Dean of Academic Affairs in conjunction with department chairs. This was a mandatory responsibility included in their annual evaluation. The evaluators were required to participate in a training course and were asked to supervise and coach the faculty member through the entire process. They reviewed the faculty member's teaching materials, coached him or her through a pre-observation conference, attended and evaluated the lectures, provided constructive feedback, and documented it all in a report to the department chair. In contrast, the evaluators' responsibility during the Shenandoah University Bernard J. Dunn School of Pharmacy peer-evaluation process was just to evaluate the lecture. Because there were no mentor responsibilities, all faculty members could contribute; however, participation was not required, resulting in only 1 faculty member participating as an evaluator.

We created an evaluator role more consistent with Shenandoah University's. Each new faculty member at the South Carolina College of Pharmacy-MUSC campus was assigned a mentor who coached him or her through teaching performance. Our peer-evaluation process was created to provide both junior and senior faculty members with constructive feedback. Like Shenandoah, we agreed that evaluators can learn from their peers by attending and reviewing their lectures and participating in feedback discussions. To improve participation, our process required all faculty members to contribute as evaluators. The survey results suggest that this assumption was justified, with the majority of faculty members agreeing that participating as an evaluator would improve their teaching. In addition, 1 faculty member who was not evaluated still commented on the program's ability to improve teaching skills simply by participating as an evaluator.

One limitation of including all faculty members in the evaluation process was the potential for inconsistency in their evaluation and documentation skills, which is why our process encouraged but did not require faculty members to submit their evaluations as part of their annual performance review or promotion and tenure review. Interestingly, 78% of the faculty members who were evaluated agreed or strongly agreed that the process enhanced the documentation of their teaching included in their annual performance reviews. In addition, our department chair noted that 100% of faculty members who were evaluated included the peer evaluation in their annual review packet. He commented on the additional information provided by the peer evaluations, which helped guide him more than student evaluations. He also noted an increase in faculty members trying to implement active-learning and critical-thinking skills into their lectures. He thought this, in part, was related to the peer-evaluation process, which stimulated an open discussion after each lecture during the feedback sessions. Finally, he mentioned that 2 faculty members included peer evaluations in their promotion/tenure packets (1 was promoted, and the other was tenured). We acknowledge the limitations of this subjective information and plan to objectively evaluate the impact of the peer evaluation process after a few years of implementation.

The decision of who should be an evaluator depends on the academic purpose of the peer evaluation process. If the goal is to improve the evaluation of teaching in order to have better documentation for annual performance review and promotion and tenure, a more summative process, similar to that of the University of Colorado Denver School of Pharmacy, may be favored. Our department chose to focus on the goal of improving teaching performance. The faculty members thought the learning opportunity created by evaluating peers outweighed its potential limitations. Therefore, our peer evaluation process will remain more formative. For that reason, faculty members will continue to have the option of including their peer evaluations in their annual performance reviews, but it will not be required.

A noted limitation of the process was the necessary time commitment. Although 88% of faculty members participated in the process, only 47% completed the required 3 evaluations. Time was indicated as the largest barrier. Similarly, time was a concern for the peer evaluation processes previously described in the literature.13,14 Shenandoah University's peer evaluation process was voluntary, achieving a participation rate of 23%. Lack of time and lack of reminders were 2 barriers reported by faculty members. Our participation rate likely was higher because we made it a requirement, and we believe the monthly reminders at department meetings, the universal online calendar, the encouragement and support provided by the department chair, and possibly a lesser time commitment also contributed. Unlike Shenandoah University's process, ours did not require a pre-observation meeting, and our tool may have improved the efficiency of the actual peer evaluation. Shenandoah University faculty members were only provided a guide for evaluation. However, the more formative nature of the processes chosen by our program and Shenandoah University, whose results are not required to be included in annual performance reviews, does make them more challenging to enforce. In contrast, the University of Colorado Denver School of Pharmacy's process was required of all faculty members during prepromotion years 1, 3, and 6 and every 5 years following promotion. Evaluations were sent to the department chairs, and the evaluators' responsibilities were added to their annual evaluations, making the process easier to enforce. However, “perceived excessive time involved in the process” was still expressed as a concern. For each assessment, the evaluated faculty member contributed approximately 3 hours and the evaluator contributed approximately 4 to 8 hours.14 Our process did not involve mentoring and therefore required fewer meetings than that of the University of Colorado Denver School of Pharmacy, which possibly meant less time, but we did not document this. Clearly, for a peer-evaluation program to exist, at least some faculty members must contribute their time. However, the extent of this time commitment and the number of faculty members affected could vary and should be strongly considered when designing a peer evaluation process. In addition, tools should be utilized to make the process as efficient as possible (such as evaluation tools and online calendars with reminders). Ultimately, a peer evaluation program can be successful only if the peers involved in the process believe in its outcomes and are committed to fulfilling their responsibilities.

The survey results were reviewed and discussed at a department faculty meeting. Our department chose to continue the process with only minor changes. In an attempt to make the time commitment more manageable, our department chose to decrease the number of evaluators to 2 and increase the interval to every other year. Because 80% of faculty members surveyed thought the process of peer review should remain annual, we included the option of annual review if the faculty member desired. Because 100% of the faculty members surveyed thought the process should be required, we did not change this. However, we acknowledge that the weakness of this approach is the potential for faculty members to not fulfill their peer evaluation requirements. The basic requirements include: (1) attending 2 lectures; (2) staying after each session to provide feedback; and (3) providing materials a week ahead of time when being evaluated. While the changes described above should decrease faculty time commitment, having the department chair evaluate attendance at lectures and commitment to the peer evaluation process also may help. Continual evaluation will occur to determine whether these interventions improve the requirement fulfillment rate.

SUMMARY

The process of developing and implementing a peer-evaluation process was a beneficial experience for our department and added to the limited information in the literature. Our process allowed all faculty members involved in our department to review their teaching practices while learning additional skills from others and exchanging teaching philosophies. In order for faculty members to improve their teaching skills in the classroom, this exercise must be given as much importance as student evaluations. However, the peer evaluation process must be supported and valued by the academic leaders within individual teaching institutions. We encourage other groups to develop their own unique systems and report their experiences, as there is a lack of evidence in the primary literature of the academic value of this particular process.

ACKNOWLEDGEMENTS

We thank Dr. John Bosso, the SCCP – MUSC Campus Clinical Pharmacy and Outcome Sciences Department Chair, for supporting this academic improvement project; and Dr. Jefferey Wong, from the College of Medicine, for sharing his teaching expertise during the initial training and validation faculty session.

REFERENCES

- 1. Barnett CW, Matthews HW. Current procedures used to evaluate teaching in schools of pharmacy. Am J Pharm Educ. 1998;62:388–391.

- 2. McKeachie WJ. Student ratings: the validity of use. Am Psych. 1997;52:1218–1225.

- 3. Arreola RA. Developing a Comprehensive Faculty Evaluation System: A Handbook for College Faculty and Administrators on Designing and Operating a Comprehensive Faculty Evaluation System. Boston, Mass: Anker Publishing Co; 1995.

- 4. Holmes DS. Effects of grades and disconfirmed grade expectancies on students' evaluations of their instructor. J Educ Psychol. 1972;63:130–133.

- 5. Powell RW. Grades, learning and student evaluation of instruction. Res High Educ. 1977;7:193–205.

- 6. Vasta R, Sarmiento RF. Liberal grading improves evaluations but not performance. J Educ Psychol. 1979;71:207–211.

- 7. Worthington AG, Wong PTP. Effects of earned and assigned grades on student evaluations of an instructor. J Educ Psychol. 1979;71:764–775.

- 8. Kidd RS, Latif DA. Student evaluations: are they valid measure of course effectiveness? Am J Pharm Educ. 2004;68 Article 61.

- 9. Barnett CW, Matthews HW. Student evaluation of classroom teaching: A study of pharmacy faculty attitudes and effects on instructional practices. Am J Pharm Educ. 1997;61:345–350.

- 10. Wolfgang AP, Gupchup GV, Plake KS. Relative importance of performance criteria in promotion and tenure decisions: Perceptions of pharmacy faculty members. Am J Pharm Educ. 1995;59:342–347.

- 11. Accreditation Council for Pharmacy Education. Accreditation standards and guidelines for the professional program in pharmacy leading to the doctor of pharmacy degree. http://www.acpe-accredit.org/pdf/ACPE_Revised_PharmD_Standards_Adopted_Jan152006.pdf. Accessed April 17, 2009.

- 12. Hutchings P. Peer review of teaching. American Association for Health Education bulletin. November 1994; pp. 3–8.

- 13. Schultz KK, Latif D. The planning and implementation of a faculty peer review teaching project. Am J Pharm Educ. 2006;70 Article 32. doi: 10.5688/aj700232.

- 14. Hansen LB, McCollum M, Paulsen SM, Cyr T, Jarvis CL, Tate G, et al. Evaluation of an evidence-based peer teaching assessment program. Am J Pharm Educ. 2007;71 Article 45. doi: 10.5688/aj710345.

- 15. Lubawy WC. Evaluating teaching using the best practices model. Am J Pharm Educ. 2003;67 Article 87.

- 16. Hammer DP, Sauer KA, Fielding DW, Skau KA. White Paper on Best Evidence Pharmacy Education (BEPE). Am J Pharm Educ. 2004;68 Article 24.