Abstract

Considerable evidence suggests that qualitatively different processes are involved in the perception of faces and objects. According to a central hypothesis, the extraction of information about the spacing among face parts (e.g., eyes and mouth) is a primary function of face processing mechanisms that is dissociated from the extraction of information about the shape of these parts. Here, we used an individual-differences approach to test whether the shape of face parts and the spacing among them are indeed processed by dissociated mechanisms. To determine whether the pattern of findings that we reveal is unique for upright faces, we also presented similarly manipulated nonface stimuli. Subjects discriminated upright or inverted faces or houses that differed in parts or spacing. Only upright faces yielded a large positive correlation across subjects between performance on the spacing and part discrimination tasks. We found no such correlation for inverted faces or houses. Our findings suggest that face parts and spacing are processed by associated mechanisms, whereas the parts and spacing of nonface objects are processed by distinct mechanisms. These results may be consistent with the idea that faces are special, in that they are processed as nondecomposable wholes.

Many lines of evidence indicate that face recognition engages cognitive and neural mechanisms distinct from those involved in object recognition (Kanwisher, 2000; Moscovitch, Winocur, & Behrmann, 1997). But how exactly does the perceptual processing of faces differ from the perceptual processing of objects? Several studies have shown that we are highly sensitive to the spacing among face parts (e.g., distance between the eyes) in upright but not in inverted faces (Haig, 1984; Kemp, McManus, & Pigott, 1990). Furthermore, it has been suggested that mechanisms that extract information about the spacing among face parts are distinct from those involved in extracting the identity of the individual parts—that is, the shape, color, and texture (Maurer, Le Grand, & Mondloch, 2002). In the present study, we applied an individual-differences approach to examine whether information about spacing and parts in faces is processed by dissociated mechanisms. Furthermore, to determine whether the pattern of correlation that we find is unique for upright faces, we also presented inverted faces and similarly manipulated nonface stimuli.

The hypothesis that spacing information and part-based information are extracted by distinct mechanisms has been tested with a variety of methods, including studies of the face-inversion effect (i.e., a drop in performance for inverted relative to upright faces), studies with individuals who suffer from face recognition impairments, and neuroimaging studies. Studies that have measured the magnitude of the face inversion effect for discrimination of faces that differed only in the spacing among parts relative to faces that differed only in the identity of the parts (see Figure 1) have reported mixed results. Many studies have reported a much larger inversion effect for face stimuli that differ in spacing than for face stimuli that differ in the identity of the parts (e.g., Freire, Lee, & Symons, 2000; Goffaux & Rossion, 2007; Le Grand, Mondloch, Maurer, & Brent, 2001), which suggests that upright face processing mechanisms primarily extract spacing information, whereas part-based face information may be extracted by nonface mechanisms. Other studies have reported similar inversion effects for spacing and parts (Rhodes, Brake, & Atkinson, 1993; Riesenhuber, Jarudi, Gilad, & Sinha, 2004; Yovel & Duchaine, 2006; Yovel & Kanwisher, 2004), which suggests that face-specific processing mechanisms extract information about both spacing and part-based information.

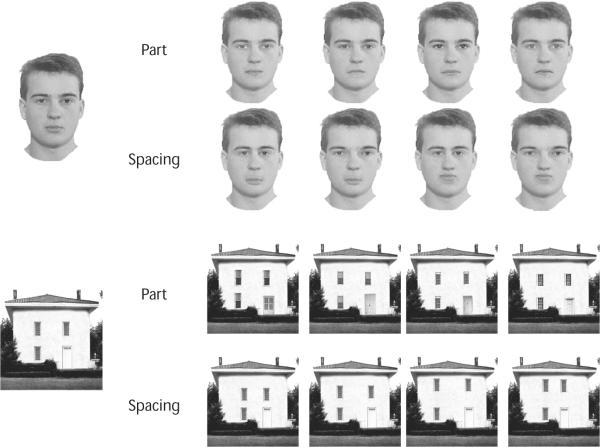

Figure 1.

The face and house stimuli differed in either the parts (eyes and mouth for faces and windows and doors for houses) or the distance among these parts (spacing). Stimuli were constructed such that performance for the upright conditions was below ceiling and matched for the configuration and part tasks in faces and houses (see Table 1).

Studies of individuals who suffer from face recognition difficulties have also reported mixed findings. Le Grand et al. (2001) reported better discrimination for upright faces that differ in parts than for faces that differ in spacing in individuals who suffered from infantile cataracts and show face recognition difficulties in adulthood. These findings suggest dissociation between the processing of spacing and parts (see also Barton, Press, Keenan, & O'Connor, 2002, and Joubert et al., 2003, for acquired prosopagnosia cases). Yovel and Duchaine (2006), who examined individuals with developmental prosopagnosia, found a similar deficit for discrimination of spacing and parts, but only when face parts differed primarily in shape. When face parts differed also in contrast/color information (as in Barton et al., 2002; Joubert et al., 2003; Le Grand et al., 2001), they found that prosopagnosic individuals showed normal discrimination abilities. These findings suggest that information about the spacing among face parts and the shape of parts may be processed by the impaired face-processing mechanisms of prosopagnosic individuals, whereas differences in contrast and brightness of face parts can also be extracted by their intact nonface mechanisms.

Finally, neuroimaging studies allow us to directly examine how faces are processed by face-selective brain regions (regions that show significantly higher response to faces than to objects), as compared with object-general brain regions (regions that show similar responses to faces and non-face objects). According to fMRI studies, the processing of spacing and parts in faces may be dissociated in various temporal and frontal regions, but not in the face-selective fusiform face area, which is similarly sensitive to both types of face manipulations (Maurer et al., 2007; Rotshtein, Geng, Driver, & Dolan, 2007; Yovel & Kanwisher, 2004). These findings suggest that face-selective processing mechanisms extract both spacing and part-based information. In contrast, outside the face-selective regions, distinct mechanisms extract spacing and part-based information.

In the present article, we applied an individual-differences approach to test the hypothesis that spacing and part-based information are processed by dissociated mechanisms for nonfaces, but associated mechanisms for upright faces. Specifically, to assess the relationship between the processing of spacing and parts for faces and nonfaces, we computed the correlations between performance on a sequential matching task with faces and non-faces that differed in parts or spacing (Yovel & Duchaine, 2006; Yovel & Kanwisher, 2004). If mechanisms used to discriminate spacing were dissociated from those used to discriminate parts, we would have expected low correlations across subjects between performance on these two tasks. On the basis of the imaging data that show dissociation between the processing of spacing and parts in object processing regions but not in face-selective brain regions, we predicted low correlations between discrimination of spacing and parts for nonfaces but a high correlation for upright faces.

METHOD

Subjects

Seventy-seven subjects participated in the experiment for $10/h. Three subjects did not complete all tasks and were omitted from further analyses, leaving 74 subjects.

Stimuli and Apparatus

Stimuli were presented using SuperLab 1.2 on a 17-in. Macintosh monitor (1,024 × 768, 75 Hz). Adobe Photoshop was used to create the spacing and part sets for the face and house exemplars. Stimulus resolution was 300 × 300 pixels.

Face stimuli

The generation of the face stimuli followed the method used by Le Grand et al. (2001), except for one key difference, which is described in the next paragraph. As in Le Grand et al., two sets of four face stimuli were generated from a photograph of a male face. For the spacing set, four faces were constructed in which the eyes were either close together or far apart from each other and the mouth was either close to or far from the nose. For the part set, the two eyes and the mouth were replaced in each of four faces by eyes and mouths of a similar shape from different original face photos. Figure 1 shows a face stimulus generated by the same procedure, which yielded behavioral findings (see below) similar to those of the face stimulus used in the experiment (which is not presented in Figure 1 because we did not obtain permission to publish it). The face stimuli measured 3.5 cm in width and 5 cm in length.

Our stimuli differ from Le Grand et al.'s (2001; see also Erratum in Nature, 412, p. 786), in that the spacing and part stimuli were constructed on the basis of performance levels in a pilot study. In particular, we manipulated the stimuli until they yielded an averaged discrimination level of about 80% in both the spacing and the part tasks for both upright faces and houses (see Table 1). Thus, in our study, the two tasks did not differ in level of difficulty, and none of the conditions suffered from ceiling performance. The spacing manipulation to faces that yielded a performance level around 80% included moving the eye position by 4-5 pixels inward or outward and the mouth position 4-5 pixels upward or downward. For the part manipulation, we minimized the difference in contrast/brightness among the eyes and mouth that were used in the face set.

Table 1.

Mean Accuracy, Standard Errors, and the Spearman-Brown Corrected Split-Half Reliability for the Eight Tasks

| Stimulus and Task | Partial Correlation | Correlation Corrected for Attenuation | Upper Bound Correlation | Correlation | Split-Half Reliability | M | SE |
|---|---|---|---|---|---|---|---|
| Face upright | | | | | | | |
| Spacing | .42 | .69 | .79 | .55 | .75 | .79 | .013 |
| Part | | | | | .84 | .77 | .013 |
| Face inverted | | | | | | | |
| Spacing | -.06 | .22 | .75 | .16 | .78 | .71 | .015 |
| Part | | | | | .74 | .62 | .014 |
| House upright | | | | | | | |
| Spacing | -.12 | .06 | .82 | .05 | .88 | .79 | .010 |
| Part | | | | | .77 | .81 | .010 |
| House inverted | | | | | | | |
| Spacing | .02 | .25 | .87 | .22 | .86 | .79 | .010 |
| Part | | | | | .88 | .83 | .013 |

Note-The upper bound of the correlation (the square root of the product of the reliability scores) between the part and spacing tasks for each condition is similar and high for all stimuli. Zero-order correlation and the correlation corrected for attenuation between the spacing and part tasks were higher for upright faces than for nonfaces. The partial correlations are the correlation between the spacing and part discrimination tasks for each stimulus, while holding performance on the six other tasks constant.

House stimuli

The house stimuli were designed to be as similar as possible to the face stimuli in discriminability and in the nature of the spacing and part differences among stimuli. House stimuli were created using a method similar to that used for the face stimuli. For the spacing set, four variants of one house were constructed in which the windows and door were closer together or farther apart, or the upper windows were closer to or farther from the roof. For the part set, the windows and door were replaced by windows and a door of similar overall shape but a different texture (see Figure 1). The house stimuli subtended 5 cm in width and 5 cm in length. To obtain 80% performance level for the house spacing stimuli, we moved the location of the windows inward or outward as well as upward or downward by 15 pixels on average.

Procedure

Subjects completed the face- and house-matching tasks, in addition to seven other perception tasks designed to test different hypotheses. Here, we report results from only the face and house upright and inverted matching tasks. Subjects were presented with a sequential same-different matching task. The distance between the subject and the screen was 45 cm. Each trial started with a 500-msec fixation dot at the center of the screen. A first stimulus was presented for 250 msec, followed by a 1,000-msec interstimulus interval, during which the fixation dot was on the screen. The second stimulus was presented for 250 msec. The first stimulus on a part trial could be the original face or one of four stimuli from the part set (first row in Figure 1). The first stimulus on a spacing trial could be the original stimulus or one of the four stimuli from the spacing set (second row in Figure 1). The second stimulus either was identical or was a different stimulus from the spacing set or the part set on the spacing or part trials, respectively. On each trial, both stimuli were presented upright or upside down; the upright task was run before the inverted task. Each task (upright face, inverted face, upright house, and inverted house) included a total of 80 trials: 20 pairs of different stimuli and 20 pairs of identical stimuli for each of the part and spacing trial types. Subjects pressed one key for same responses and another key for different responses. The part and spacing trials were presented in an interleaved manner. Subjects were not informed that the stimuli would differ in spacing or parts.
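The physical stimulus sizes given in the Method can be converted to visual angle using the 45-cm viewing distance. The following is a minimal sketch of that arithmetic; the function name `visual_angle_deg` is ours and is not part of the original procedure, and the calculation assumes that the stimulus is centered on the line of sight.

```python
import math

def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Visual angle (in degrees) of an object of a given size,
    assuming it is centered on the line of sight."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))

VIEWING_DISTANCE_CM = 45.0  # viewing distance reported in the Procedure

# Stimulus sizes reported in the Method (width, length) in cm.
face_size_cm = (3.5, 5.0)
house_size_cm = (5.0, 5.0)

for name, (w, h) in (("face", face_size_cm), ("house", house_size_cm)):
    print(name,
          round(visual_angle_deg(w, VIEWING_DISTANCE_CM), 2),
          round(visual_angle_deg(h, VIEWING_DISTANCE_CM), 2))
```

Under these assumptions, the faces span roughly 4.5° × 6.4° and the houses roughly 6.4° × 6.4° of visual angle.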

Data Analysis

Accuracy was used as our main dependent measure. Seven subjects who had z scores larger than ±2.5 on any of the eight tasks were excluded from the correlational analyses (note that analyses that included these 7 subjects revealed a similar pattern of findings). We computed Pearson correlations between the part and spacing tasks for each stimulus (face/house) and orientation (upright/inverted) for the 68 subjects who had scores on all eight tasks. The arcsine transformation, which normalizes the distribution, yielded findings similar to those obtained with the raw scores. We therefore present the results of the raw scores.
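The steps of this analysis can be sketched as follows. This is an illustration under stated assumptions rather than the analysis code used in the study: the function names and the demonstration scores are hypothetical, the z scores are computed with the sample standard deviation, and the arcsine transformation is assumed to be the common arcsine-square-root form for proportions.

```python
import math
from statistics import mean, stdev

def outlier_subjects(scores, cutoff=2.5):
    """Return indices of subjects whose |z| exceeds the cutoff on any task.
    `scores` maps a task name to a list of accuracies in a fixed subject order."""
    flagged = set()
    for task_scores in scores.values():
        m, sd = mean(task_scores), stdev(task_scores)  # sample SD (assumption)
        for i, s in enumerate(task_scores):
            if abs((s - m) / sd) > cutoff:
                flagged.add(i)
    return sorted(flagged)

def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def arcsine_transform(p):
    """Arcsine-square-root transform of a proportion (assumed form)."""
    return math.asin(math.sqrt(p))

# Hypothetical accuracies for 12 subjects on two tasks (not data from the study).
demo = {
    "spacing": [0.8] * 11 + [0.3],
    "part": [0.70 + 0.01 * i for i in range(12)],
}
excluded = outlier_subjects(demo)
kept = [i for i in range(12) if i not in excluded]
r_raw = pearson_r([demo["spacing"][i] for i in kept],
                  [demo["part"][i] for i in kept]) if len(set(demo["spacing"][i] for i in kept)) > 1 else None
print(excluded, r_raw)
```

In the demonstration data, only the last subject is flagged, because that subject's spacing score lies more than 2.5 standard deviations below the group mean.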

RESULTS

Mean Analysis

Table 1 presents the averaged accuracy scores on the face and house tasks. Note that performance for the upright faces and houses was matched for the two tasks. Thus, differences in performance level for the upright stimuli per se cannot account for the correlational findings reported below.

Correlation Analyses

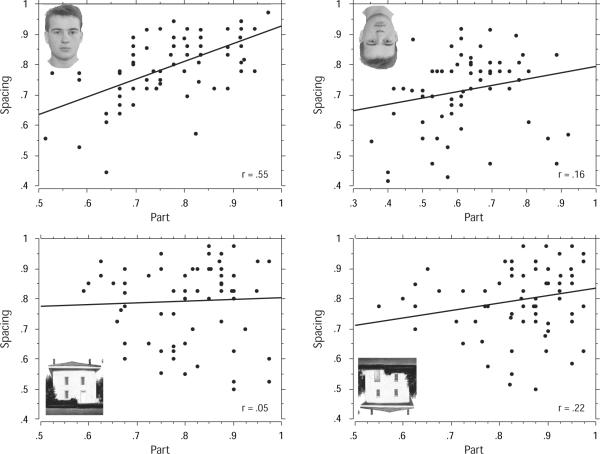

Only upright faces yielded a large correlation (Cohen, 1988) across subjects between performance on the spacing and part tasks [r(66) = .55, p < .0001]. The correlations between the two tasks were small and not significant for inverted faces [r(66) = .16, p = .18], upright houses [r(66) = .05, p = .68], and inverted houses [r(66) = .22, p = .08]; the differences between correlations (z transformation) for upright faces versus inverted faces, upright houses, and inverted houses were all significant (ps < .05). The significantly lower correlation between the inverted face tasks (similar to upright faces in all respects but orientation) relative to the upright face tasks indicates that the high correlation between spacing and part processing is specific for upright face processing (see Figure 2).
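A comparison of two correlations by means of the z transformation can be sketched as follows. The text specifies only that a z transformation was used, so this example assumes the standard Fisher test for two independent correlations; because the correlations here were obtained from the same subjects, a test for dependent correlations may be closer to what was actually computed. The function name is hypothetical.

```python
import math

def fisher_z_difference(r1, n1, r2, n2):
    """Two-tailed test of the difference between two correlations,
    treating them as coming from independent samples (assumption)."""
    z1, z2 = math.atanh(r1), math.atanh(r2)      # Fisher r-to-z transformation
    se = math.sqrt(1 / (n1 - 3) + 1 / (n2 - 3))  # standard error of z1 - z2
    z = (z1 - z2) / se
    p = math.erfc(abs(z) / math.sqrt(2))         # two-tailed normal p value
    return z, p

# Upright faces (r = .55) versus inverted faces (r = .16), N = 68 in each case.
z, p = fisher_z_difference(0.55, 68, 0.16, 68)
print(round(z, 2), round(p, 4))
```

Under the independence assumption, the difference between the upright and inverted face correlations gives a z of about 2.6, which is significant at the .05 level.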

Figure 2.

Scatterplots of performance on the spacing task (y-axis) and the part task (x-axis) for upright faces, inverted faces, upright houses, and inverted houses. Only upright faces showed a large positive correlation between performance on spacing discrimination and performance on part discrimination.

Reliability Analyses

Low correlations between two tasks could indicate either that the tasks are mediated by independent mechanisms or that one or both tasks have a low reliability score. Table 1 shows that the Spearman-Brown corrected split-half reliability scores and the upper bound of the correlation (the square root of the product of the reliability scores) between spacing and parts were high and similar for all stimulus types (upright faces, inverted faces, upright houses, and inverted houses); low reliability, therefore, cannot account for the low correlations for nonfaces.
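The reliability quantities reported in Table 1 are related by standard formulas, which can be sketched as follows. The function names are ours, and the half-test correlation of .60 in the first call is a hypothetical value chosen for illustration; the remaining inputs are the upright face values from Table 1.

```python
import math

def spearman_brown(r_half):
    """Spearman-Brown corrected reliability from a split-half correlation."""
    return 2 * r_half / (1 + r_half)

def upper_bound(rel_x, rel_y):
    """Upper bound of the correlation between two tasks,
    given the reliability of each task."""
    return math.sqrt(rel_x * rel_y)

def correct_for_attenuation(r_xy, rel_x, rel_y):
    """Observed correlation divided by its upper bound."""
    return r_xy / upper_bound(rel_x, rel_y)

# Hypothetical half-test correlation, for illustration only.
print(round(spearman_brown(0.60), 2))

# Upright faces in Table 1: reliabilities of .75 (spacing) and .84 (part),
# observed correlation of .55 between the two tasks.
print(round(upper_bound(0.75, 0.84), 2))
print(round(correct_for_attenuation(0.55, 0.75, 0.84), 2))
```

With the upright face values, the upper bound is about .79 and the correlation corrected for attenuation is about .69, which agrees with the corresponding entries in Table 1.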

Elimination of the Possible Effect of a General Factor

To assess the extent to which a general factor (e.g., general visual discrimination abilities, motivation, fatigue) underlies the observed zero-order correlations, we performed a partial correlation between spacing and part for each of the stimuli (face/house × upright/inverted), while holding the other variables constant. The partial correlation between the spacing and part tasks for upright faces, when performance for spacing and parts of inverted faces and upright and inverted houses are partialed out, was still positive and reliable [r(60) = .42, p < .001], whereas the analogous partial correlations between the spacing and part tasks were not significantly different from 0 for inverted faces [r(60) = -.06, p = .66], upright houses [r(60) = -.12, p = .35], or inverted houses [r(60) = .02, p = .9]. These findings suggest that the higher correlations between spacing and parts for upright faces are not mediated by a general perceptual factor but instead reflect the unique way in which faces are represented.
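One way to obtain a partial correlation between two tasks while holding all remaining tasks constant is to invert the full correlation matrix. The sketch below illustrates that approach in plain Python; it is not necessarily the procedure used in the study, the function names are ours, and the 3 × 3 matrix is hypothetical.

```python
import math

def invert(matrix):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    aug = [list(map(float, row)) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * p for v, p in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def partial_corr(corr, i, j):
    """Partial correlation between variables i and j, controlling for
    every other variable in the correlation matrix `corr`."""
    prec = invert(corr)  # inverse of the correlation matrix
    return -prec[i][j] / math.sqrt(prec[i][i] * prec[j][j])

# Hypothetical correlations among two tasks (0, 1) and one covariate (2).
corr = [
    [1.0, 0.5, 0.3],
    [0.5, 1.0, 0.4],
    [0.3, 0.4, 1.0],
]
print(round(partial_corr(corr, 0, 1), 3))
```

Passing the full 8 × 8 matrix of zero-order correlations (Table 2) together with the indices of the two upright face tasks would partial out the six remaining tasks in the same way.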

Examination of a Possible Effect of Task Order

Subjects in our study performed the upright task before the inverted task. To assess whether the pattern of results we obtained merely reflected a task order effect, 18 new subjects were presented with a similar task in which the upright and inverted faces or houses were randomly mixed in the same block. As in the original task, the spacing and part trials were interleaved, and subjects were not informed that the stimuli differed by parts or spacing. The data show remarkably similar findings. The correlation between the part and spacing tasks was high only for upright faces [r(16) = .67, p < .005], not for inverted faces [r(16) = .30, p = .22], upright houses [r(16) = -.05, p = .84], or inverted houses [r(16) = -.20, p = .42].

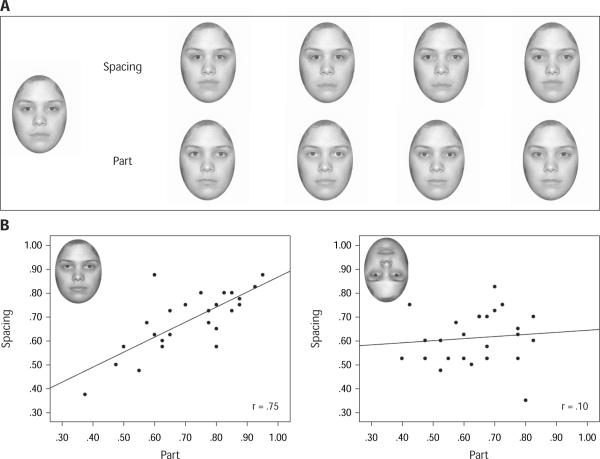

Replication with Two Other Face Exemplars

To test whether the correlation between parts and spacing for upright but not inverted faces was specific to the face exemplar that we used, we generated two new face exemplars, a male face (Figure 1) and a female face (Figure 3A), that were presented in two new experiments. We first adjusted the stimuli to match performance for the spacing and part trials; then, with a procedure similar to the one described above, we presented the faces, either upright or inverted (Yovel & Duchaine, 2006), in a sequential discrimination task. The order of the upright and inverted blocks was counterbalanced across subjects. Results from 16 subjects who performed a discrimination task with the male face replicated our findings of a high correlation between performance on the spacing and part tasks for upright faces [r(14) = .64, p < .01] but not for inverted faces [r(14) = .05, p = .86]. Results from 24 additional subjects who performed a discrimination task with the female face revealed a high positive correlation between performance on the spacing and part tasks for upright faces [r(22) = .75, p < .01] but no correlation for inverted faces [r(22) = .10, p > .05] (see Figure 3).

Figure 3.

(A) Replication of the correlational findings with a female face exemplar in which we manipulated the parts and the spacing among them so that they yielded similar performance levels. (B) Scatterplots show high positive correlations between performance on the spacing task and performance on the part task for upright faces but not for inverted faces.

Evidence for Two Domain-General Mechanisms for Processing Spacing and Part-Based Information

Table 2 shows the zero-order correlations across the eight different conditions (face/house × upright/inverted × spacing/part). Our findings show large positive correlations among the part conditions and among the spacing conditions. In contrast, correlations between the spacing and part conditions were small for all stimulus types, except for upright faces. To assess the overall pattern of correlations among the eight tasks, we performed a principal component analysis. This analysis revealed two components with an eigenvalue larger than 1, which we rotated using Varimax rotation. The rotated solution showed that the spacing but not the part tasks loaded highly on the first component, which explained 30.2% of the total variance, whereas the part but not the spacing tasks loaded highly on the second component, which explained 28.5% of the total variance (see Table 3). Thus, the high correlation between spacing and parts that we found for upright faces, but for no other stimuli, and the results of the principal component analysis suggest that in addition to the upright-face-specific mechanism for processing both parts and spacing, two additional process-specific mechanisms may exist, one for spacing and one for parts, that can be applied to any stimulus type.

Table 2.

Zero-Order Correlations Across the Eight Different Conditions

| | Face Upright Spacing | Face Upright Part | Face Inverted Spacing | Face Inverted Part | House Upright Spacing | House Upright Part | House Inverted Spacing | House Inverted Part |
|---|---|---|---|---|---|---|---|---|
| Face upright | | | | | | | | |
| Spacing | | .554** | .444** | .357* | .455** | .248 | .483** | .258 |
| Part | | | .256 | .440** | .145 | .273 | .355* | .438** |
| Face inverted | | | | | | | | |
| Spacing | | | | .164 | .270 | .248 | .356* | .157 |
| Part | | | | | .165 | .355* | .294 | .303 |
| House upright | | | | | | | | |
| Spacing | | | | | | .05 | .652** | .059 |
| Part | | | | | | | .229 | .565** |
| House inverted | | | | | | | | |
| Spacing | | | | | | | | .217 |

Note-A correlation matrix displays the zero-order correlations among the eight discrimination tasks (spacing/part × upright/inverted × face/house).

*p < .005.

**p < .0001.

Table 3.

Principal Component Analysis and Explanation of Variance

| Condition | Component 1 | Component 2 |
|---|---|---|
| Face upright spacing | .71 | .37 |
| Face inverted spacing | .56 | .22 |
| House upright spacing | .85 | -.10 |
| House inverted spacing | .82 | .17 |
| Face upright part | .37 | .64 |
| Face inverted part | .26 | .61 |
| House upright part | .04 | .78 |
| House inverted part | .03 | .82 |
| Explained variance | 30.2% | 28.5% |

Note-Principal component analysis revealed two domain-general, process-specific factors. The spacing tasks but not the part tasks had high loading on the first component, and the part tasks but not spacing tasks had high loadings on the second component.
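The percentages of explained variance in Table 3 can be related to the loadings themselves. Assuming that the analysis was run on standardized scores (i.e., on the correlation matrix), the variance explained by an orthogonally rotated component equals the sum of its squared loadings divided by the number of variables. The sketch below applies this to the loadings in Table 3; the function name is ours.

```python
# Rotated loadings from Table 3: (component 1, component 2).
loadings = {
    "Face upright spacing": (0.71, 0.37),
    "Face inverted spacing": (0.56, 0.22),
    "House upright spacing": (0.85, -0.10),
    "House inverted spacing": (0.82, 0.17),
    "Face upright part": (0.37, 0.64),
    "Face inverted part": (0.26, 0.61),
    "House upright part": (0.04, 0.78),
    "House inverted part": (0.03, 0.82),
}

def explained_variance_pct(loadings, component):
    """Percentage of total variance explained by one component:
    sum of squared loadings divided by the number of variables."""
    squared = sum(values[component] ** 2 for values in loadings.values())
    return 100 * squared / len(loadings)

for component in (0, 1):
    print(component + 1, round(explained_variance_pct(loadings, component), 1))
```

The values obtained in this way approximate the reported 30.2% and 28.5%; small discrepancies are expected because the loadings in the table are rounded to two decimal places.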

DISCUSSION

The central goal of research on face perception is to characterize the nature of the processes carried out on face stimuli and to understand whether and how they differ from the processes that are carried out on nonface objects. Here, we found a high correlation in subjects' performance on part and spacing discrimination tasks only for upright faces, not for inverted faces or houses, a result that we replicated in four different studies using three different face stimulus sets. These findings support our hypothesis that spacing and parts are processed by dissociated mechanisms for nonfaces. In contrast, the extraction of information about spacing and the extraction of information about parts are associated for upright faces.

What kind of mechanism may underlie the positive correlation between the processing of spacing and parts that we observed only for upright faces? Our findings are consistent with the idea that faces are processed as nondecomposable wholes by specialized holistic face mechanisms (Tanaka & Farah, 1993). In particular, Tanaka and Sengco (1997) showed that the improved recognition of face parts within the context of the whole face, rather than in isolation, deteriorates when the spacing among the parts of the original whole face is modified. These findings are in line with our finding that spacing and parts are processed interactively. Furthermore, the finding that spacing and parts are associated only for upright faces is consistent with findings that show that holistic mechanisms do not operate on inverted faces (Young, Hellawell, & Hay, 1987; Yovel, Paller, & Levy, 2005) or on nonface objects (e.g., Robbins & McKone, 2007). Finally, our findings also may be consistent with those of neuroimaging studies, which have reported similar responses to spacing and part faces in the fusiform face area, which generates a holistic representation for upright faces (Schiltz & Rossion, 2006), but dissociated responses to spacing and parts outside face-selective regions (Maurer et al., 2007).

A logically possible, if unparsimonious, alternative hypothesis—one that is also consistent with our data—is that, for upright faces only, part and spacing information is extracted by distinct mechanisms that nonetheless interact strongly with each other (perhaps via a third mechanism correlated with both). This alternative hypothesis resembles the hypothesis of a single holistic mechanism, in that part and spacing processing interact, presumably producing—for upright faces only—the holistic effects observed behaviorally. Here, we refer to face part and spacing processing as “associated,” to leave open the question of whether this association reflects the operation of a single mechanism or two or more interacting mechanisms.

Our findings appear at first glance to be inconsistent with a large body of literature suggesting that face parts and the spacing among them are processed by distinct mechanisms (for a review, see Maurer et al., 2002). Specifically, many studies have reported a larger inversion effect for the spacing than for the part task. However, in a recent comprehensive review of 17 studies that examined the magnitude of the inversion effect for discrimination of spacing and parts, McKone and Yovel (2008) showed that inversion effects are reduced only when face parts differ in contrast/color information. When face parts differ primarily in shape, they generate effects similar to those of faces that differ only in spacing (Yovel & Duchaine, 2006). The dissociation between the processing of spacing and the color/contrast of parts is probably due to the usage of non-face mechanisms that are sensitive to brightness/contrast information in any visual stimulus and can be easily applied also to faces in sequential matching tasks (but see Russell, Sinha, Biederman, & Nederhouser, 2006).

In summary, our finding of a high correlation between performance on spacing and parts challenges a widespread view in the face-perception literature that spacing information and part information about faces are processed by dissociated mechanisms. Instead, our findings support the idea that faces are processed by specialized holistic mechanisms, which extract any facial information, including the shape of parts and the spacing among them. This unique ability to holistically represent both parts and spacing may underlie the rich and integrated representation that we generate for faces, which allows for efficient discrimination of such visually similar stimuli.

Acknowledgments

This research was supported by NEI Grant EY13455 to N.K. from the National Center for Research Resources (P41-RR14075, R01 RR16594-01A1, and the NCRR BIRN Morphometric Project BIRN002) and the Mental Illness and Neuroscience Discovery (MIND) Institute. We thank Jerre Levy, Elinor McKone, Hans Op de Beeck, Rachel Robbins, Jeremy Wilmer, and Iftah Yovel for their comments on the manuscript. We also thank Nao Gamo, Kevin Der, Arielle Tambini, and Michael Ogrydziak for data collection, and Stephanie Chow and Kathleen Cui for stimulus preparation.

REFERENCES

- Barton JJS, Press DZ, Keenan JP, O'Connor M. Lesions of the fusiform face area impair perception of facial configuration in prosopagnosia. Neurology. 2002;58:71–78. doi: 10.1212/wnl.58.1.71.

- Cohen J. Statistical power analysis for the behavioral sciences. 2nd ed. Erlbaum; Hillsdale, NJ: 1988.

- Freire A, Lee K, Symons LA. The face-inversion effect as a deficit in the encoding of configural information: Direct evidence. Perception. 2000;29:159–170. doi: 10.1068/p3012.

- Goffaux V, Rossion B. Face inversion disproportionately impairs the perception of vertical but not horizontal relations between features. Journal of Experimental Psychology: Human Perception & Performance. 2007;33:995–1002. doi: 10.1037/0096-1523.33.4.995.

- Haig ND. The effect of feature displacement on face recognition. Perception. 1984;13:505–512. doi: 10.1068/p130505.

- Joubert S, Felician O, Barbeau E, Sontheimer A, Barton JJ[S], Ceccaldi M, Poncet M. Impaired configurational processing in a case of progressive prosopagnosia associated with predominant right temporal lobe atrophy. Brain. 2003;126:2537–2550. doi: 10.1093/brain/awg259.

- Kanwisher N. Domain specificity in face perception. Nature Neuroscience. 2000;3:759–763. doi: 10.1038/77664.

- Kemp R, McManus C, Pigott T. Sensitivity to the displacement of facial features in negative and inverted images. Perception. 1990;19:531–543. doi: 10.1068/p190531.

- Le Grand R, Mondloch CJ, Maurer D, Brent HP. Neuroperception: Early visual experience and face processing. Nature. 2001;410:890. doi: 10.1038/35073749.

- Maurer D, Le Grand R, Mondloch CJ. The many faces of configural processing. Trends in Cognitive Sciences. 2002;6:255–260. doi: 10.1016/s1364-6613(02)01903-4.

- Maurer D, O'Craven KM, Le Grand R, Mondloch CJ, Springer MV, Lewis TL, Grady CL. Neural correlates of processing facial identity based on features versus their spacing. Neuropsychologia. 2007;45:1438–1451. doi: 10.1016/j.neuropsychologia.2006.11.016.

- McKone E, Yovel G. A single holistic representation of spacing and feature shape in faces. Poster presented at the 8th Annual Meeting of the Vision Sciences Society; Naples, FL: May, 2008.

- Moscovitch M, Winocur G, Behrmann M. What is special about face recognition? Nineteen experiments on a person with visual object agnosia and dyslexia but normal face recognition. Journal of Cognitive Neuroscience. 1997;9:555–604. doi: 10.1162/jocn.1997.9.5.555.

- Rhodes G, Brake S, Atkinson AP. What's lost in inverted faces? Cognition. 1993;47:25–57. doi: 10.1016/0010-0277(93)90061-y.

- Riesenhuber M, Jarudi I, Gilad S, Sinha P. Face processing in humans is compatible with a simple shape-based model of vision. Proceedings of the Royal Society B. 2004;271:S448–S450. doi: 10.1098/rsbl.2004.0216.

- Robbins R, McKone E. No face-like processing for objects-of-expertise in three behavioural tasks. Cognition. 2007;103:34–79. doi: 10.1016/j.cognition.2006.02.008.

- Rotshtein P, Geng JJ, Driver J, Dolan RJ. Role of features and second-order spatial relations in face discrimination, face recognition, and individual face skills: Behavioral and functional magnetic resonance imaging data. Journal of Cognitive Neuroscience. 2007;19:1435–1452. doi: 10.1162/jocn.2007.19.9.1435.

- Russell R, Sinha P, Biederman I, Nederhouser M. Is pigmentation important for face recognition? Evidence from contrast negation. Perception. 2006;35:749–759. doi: 10.1068/p5490.

- Schiltz C, Rossion B. Faces are represented holistically in the human occipito-temporal cortex. NeuroImage. 2006;32:1385–1394. doi: 10.1016/j.neuroimage.2006.05.037.

- Tanaka JW, Farah MJ. Parts and wholes in face recognition. Quarterly Journal of Experimental Psychology. 1993;46A:225–245. doi: 10.1080/14640749308401045.

- Tanaka JW, Sengco JA. Features and their configuration in face recognition. Memory & Cognition. 1997;25:583–592. doi: 10.3758/bf03211301.

- Young AW, Hellawell D, Hay DC. Configurational information in face perception. Perception. 1987;16:747–759. doi: 10.1068/p160747.

- Yovel G, Duchaine B. Specialized face perception mechanisms extract both part and spacing information: Evidence from developmental prosopagnosia. Journal of Cognitive Neuroscience. 2006;18:580–593. doi: 10.1162/jocn.2006.18.4.580.

- Yovel G, Kanwisher N. Face perception: Domain specific, not process specific. Neuron. 2004;44:889–898. doi: 10.1016/j.neuron.2004.11.018.

- Yovel G, Paller KA, Levy J. A whole face is more than the sum of its halves: Interactive processing in face perception. Visual Cognition. 2005;12:337–352.