Abstract

Objective

To assess the effect of publication bias on the results and conclusions of systematic reviews and meta-analyses.

Design

Analysis of published meta-analyses by trim and fill method.

Studies

48 reviews in Cochrane Database of Systematic Reviews that considered a binary endpoint and contained 10 or more individual studies.

Main outcome measures

Number of reviews with missing studies and effect on conclusions of meta-analyses.

Results

The trim and fill method with a fixed effects model estimated that 26 (54%) of reviews had missing studies, and in 10 the number missing was significant. The corresponding figures with a random effects model were 23 (48%) and eight. In four cases, statistical inferences regarding the effect of the intervention changed after the overall estimate was adjusted for publication bias.

Conclusions

Publication or related biases were common within the sample of meta-analyses assessed. In most cases these biases did not affect the conclusions. Nevertheless, researchers should check routinely whether conclusions of systematic reviews are robust to possible non-random selection mechanisms.

Introduction

Selection bias is known to occur in meta-analyses because studies that report significant or interesting results, that are large and well funded, or that are of higher quality are more likely to be submitted, published, or published more rapidly than work without such characteristics.1 A meta-analysis based on a literature search will thus include such studies differentially, and the resulting bias may invalidate the conclusions.

The best way to deal with these problems, which we shall collectively label “publication bias,” is to avoid them. Recently, for example, a trial amnesty was announced that encouraged researchers to submit for publication reports of previously unpublished trials.2 Additionally, steps are being taken to encourage the prospective registration of trials through trial registries.3 Although these steps may reduce the problem of publication bias in the future, it will remain a serious problem, and one that meta-analysts need to address for some time to come.

The simplest and most commonly used method to detect publication bias is an informal examination of a funnel plot.4 Formal tests for publication bias, such as those developed by Begg and Mazumdar5 and Egger et al,6 exist, but in practice few meta-analyses have assessed or adjusted for the presence of publication bias. A recent assessment of the quality of systematic reviews reported that only 6.5% and 3.2% of studies in high impact general and specialist journals respectively reported that a funnel plot had been examined.7 The uptake of any formal methods is lower still, although there are notable exceptions.8

The main aim of this paper is to assess what effect publication bias could have on the results and conclusions of meta-analyses of randomised controlled trials in general. We applied the trim and fill method to a set of meta-analyses contained within the Cochrane Database of Systematic Reviews9 and estimated the numbers of missing trials and their effects on the inferences in these meta-analyses. This method both tests for the presence of publication bias and adjusts for it. It is simpler to implement than previously described methods,10 and simulation studies suggest that it may outperform more sophisticated methods in many situations.

Methods

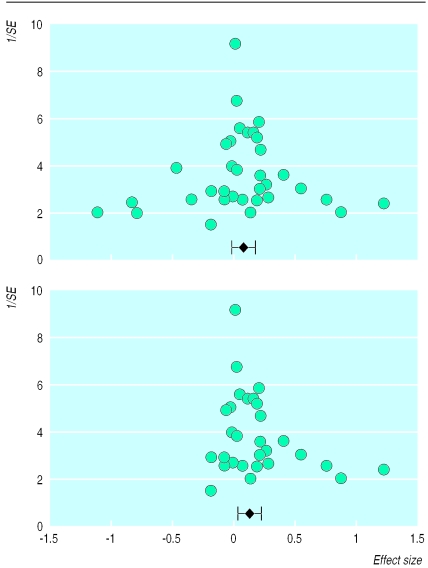

A funnel plot is a plot of each trial's effect size against some measure of its size, such as the precision (used here), the overall sample size, or the standard error (fig 1, top).4 These plots are referred to as funnel plots because they should be shaped like a funnel if no publication bias is present. This shape is expected because trials of smaller size (which are more numerous) have increasingly large variation in the estimates of their effect size as random variation becomes increasingly influential. However, since smaller or non-significant studies are less likely to be published, trials in the bottom left hand corner (when a desirable outcome is being considered) of the plot are often omitted, creating a degree of asymmetry in the funnel (fig 1, bottom).

Figure 1.

Typical funnel plot generated from 35 simulated studies (top) and same data with five missing studies showing a typical manifestation of publication bias (bottom)
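
The pattern described above can be illustrated with a small simulation. The sketch below is not the procedure used to draw figure 1; the true effect, the range of standard errors, the seed, and the function names are all assumptions chosen for illustration. It generates 35 trials whose scatter grows as precision falls, then suppresses the five least favourable results among the less precise half.

```python
import random

def simulate_funnel(n_studies=35, true_effect=0.5, seed=1):
    """Return (effect, precision) pairs for simulated trials (illustrative values)."""
    rng = random.Random(seed)
    points = []
    for _ in range(n_studies):
        se = rng.uniform(0.1, 1.0)           # assumed range of standard errors
        effect = rng.gauss(true_effect, se)  # less precise trials scatter more widely
        points.append((effect, 1.0 / se))    # precision = 1 / standard error
    return points

def suppress_left_corner(points, n_missing=5):
    """Drop the n_missing least favourable results among the less precise half."""
    by_precision = sorted(points, key=lambda p: p[1])
    small = by_precision[: len(points) // 2]
    dropped = sorted(small, key=lambda p: p[0])[:n_missing]
    return [p for p in points if p not in dropped]

def mean_effect(points):
    """Unweighted mean of the effect sizes."""
    return sum(e for e, _ in points) / len(points)

full = simulate_funnel()
biased = suppress_left_corner(full)
print(len(full), len(biased))
print(mean_effect(full), mean_effect(biased))
```

Plotting `biased` with effect on the horizontal axis and precision on the vertical axis would show the gap in the bottom left hand corner that the funnel plot is meant to reveal.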

We used the “trim and fill” method to evaluate bias in funnel plots.10–12 Firstly, the number of “asymmetric” trials on the right side of the funnel is estimated: these can broadly be thought of as trials which have no left side counterpart. These trials are then removed, or “trimmed,” from the funnel, leaving a symmetric remainder from which the true centre of the funnel is estimated by standard meta-analysis procedures. The trimmed trials are then replaced and their missing counterparts imputed or “filled”: these are mirror images of the trimmed trials with the mirror axis placed at the pooled estimate (see BMJ's website for figure). This then allows an adjusted overall confidence interval to be calculated. A test for the presence of publication bias has also been derived from this method, based on the estimated number of missing trials.11
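
The trim, re-estimate, and fill steps can be sketched as follows. This is a minimal illustration, not the implementation used in this study. It assumes inverse variance (fixed effect) pooling and the rank based estimator usually written L0 in the trim and fill literature, L0 = [4T − n(n+1)]/(2n − 1), where T is the sum of the ranks of the absolute deviations from the centre for trials lying to the right of it. The function names and the iteration cap are hypothetical.

```python
def pooled_fixed(effects, variances):
    """Inverse-variance (fixed effect) pooled estimate."""
    weights = [1.0 / v for v in variances]
    return sum(w * y for w, y in zip(weights, effects)) / sum(weights)

def estimate_missing_l0(effects, variances, max_iter=50):
    """Iteratively estimate k, the number of unmatched right-most trials."""
    order = sorted(range(len(effects)), key=lambda i: effects[i])
    y = [effects[i] for i in order]
    v = [variances[i] for i in order]
    n = len(y)
    k = 0
    for _ in range(max_iter):
        # Centre of the funnel after trimming the k right-most trials.
        centre = pooled_fixed(y[: n - k], v[: n - k])
        dev = [yi - centre for yi in y]
        # Rank all trials by absolute deviation (ties broken by position).
        by_abs = sorted(range(n), key=lambda i: abs(dev[i]))
        rank = {idx: r + 1 for r, idx in enumerate(by_abs)}
        t = sum(rank[i] for i in range(n) if dev[i] > 0)
        new_k = max(0, round((4 * t - n * (n + 1)) / (2 * n - 1)))
        new_k = min(new_k, n - 1)  # safety guard (an addition of this sketch)
        if new_k == k:
            break
        k = new_k
    return k, y, v

def trim_and_fill(effects, variances):
    """Return (k, trimmed centre, adjusted pooled estimate)."""
    k, y, v = estimate_missing_l0(effects, variances)
    n = len(y)
    centre = pooled_fixed(y[: n - k], v[: n - k])
    # Fill: mirror the trimmed trials about the centre, keeping their variances.
    filled_y = [2 * centre - yi for yi in y[n - k:]]
    filled_v = v[n - k:]
    adjusted = pooled_fixed(y + filled_y, v + filled_v)
    return k, centre, adjusted

# Three precise trials near zero plus three imprecise trials on the right only.
k, centre, adjusted = trim_and_fill(
    [-0.1, 0.0, 0.1, 1.0, 2.0, 3.0],
    [0.01, 0.01, 0.01, 1.0, 1.0, 1.0],
)
print(k, centre, adjusted)
```

In this toy dataset the imprecise trials on the right have no left side counterparts, so the adjusted estimate is pulled back towards the precise trials. The sketch assumes the suppressed trials lie on the left; data with the opposite orientation would need to be reflected first.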

Selection and analysis of studies

We examined all reviews contained in the Cochrane Database of Systematic Reviews (1998, issue 3).9 Reviews including 10 or more trials reporting a binary outcome measure were included in the assessment. At most, one meta-analysis from each review was included, and when more than one met the inclusion criteria, the one containing the most studies was selected. If two or more contained the same number of studies, the one listed first in the review was chosen. The (log) odds ratio measure was used for analysis, and where data were sparse, a continuity correction of 0.5 was used.13 For consistency, funnel plots were reflected about zero for meta-analyses in which the reported outcome measure was “undesirable” so that the left hand side of the funnel plot was scrutinised for publication bias in every case.
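
As a hedged illustration of how each trial's coordinates on the funnel plot can be obtained from a 2×2 table, the sketch below computes the log odds ratio, its standard error, and the precision. The rule for when data count as sparse is an assumption here (0.5 is added to every cell only when at least one cell is zero), and the function and parameter names are hypothetical.

```python
import math

def log_odds_ratio(events_t, total_t, events_c, total_c,
                   correction=0.5, undesirable=False):
    """Return (log odds ratio, standard error, precision) for one trial."""
    a, b = events_t, total_t - events_t   # treatment arm: events, non-events
    c, d = events_c, total_c - events_c   # control arm: events, non-events
    if 0 in (a, b, c, d):
        # Assumed sparse-data rule: correct all cells when any cell is empty.
        a, b, c, d = (x + correction for x in (a, b, c, d))
    log_or = math.log((a * d) / (b * c))
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    if undesirable:
        log_or = -log_or                  # reflect the funnel about zero
    return log_or, se, 1 / se

lor, se, precision = log_odds_ratio(10, 20, 5, 20)
print(lor, se, precision)
```

Reflecting the sign for undesirable outcomes leaves the standard error unchanged, so only the horizontal position of each trial on the funnel plot is affected.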

For each meta-analysis, we trimmed the trials considered to be symmetrically unmatched (assumed to be the k right-most trials in each funnel). The number k was estimated by an iterative procedure (details given elsewhere10–12) using the estimator denoted L0.11,12 We used fixed and random effects models to estimate the overall effect in order to assess the impact of choice of model on publication bias. The estimated effect of the missing trials provides an indication of whether the imputed missing studies affect the overall result of the meta-analysis.
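
The difference between the two pooling models can be sketched as below. The text does not state which random effects estimator was used; the DerSimonian-Laird moment estimator of the between trial variance is assumed here purely for illustration, and the function names are hypothetical.

```python
import math

def pool(effects, variances, tau2=0.0):
    """Inverse-variance pooled estimate and 95% CI; tau2=0 gives the fixed effect model."""
    weights = [1.0 / (v + tau2) for v in variances]
    est = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    se = math.sqrt(1.0 / sum(weights))
    return est, (est - 1.96 * se, est + 1.96 * se)

def dersimonian_laird_tau2(effects, variances):
    """Moment estimate of the between-trial variance (assumed estimator)."""
    w = [1.0 / v for v in variances]
    fixed, _ = pool(effects, variances)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    return max(0.0, (q - (len(effects) - 1)) / c)

effects, variances = [0.0, 4.0], [1.0, 1.0]
tau2 = dersimonian_laird_tau2(effects, variances)
fixed_est, fixed_ci = pool(effects, variances)
random_est, random_ci = pool(effects, variances, tau2)
print(tau2, fixed_ci, random_ci)
```

With heterogeneous trials the random effects interval is wider, so a result that is significant under the fixed effects model may be inconclusive under the random effects model. Either pooling function could supply the centre of the funnel in the trimming step.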

Results

Included studies

At the time of the investigation, the Cochrane Library contained 397 reviews that included a meta-analysis. Of these, 49 included 10 or more trials with at least one dichotomous outcome. However, one of these did not assess a comparative effect and was excluded, leaving 48 meta-analyses for assessment. The number of trials included in each dataset ranged from 10 to 47 (median 13), with only four analyses including more than 20 trials.

Random effects meta-analyses of these datasets, ignoring possible publication bias, resulted in 28 estimates that reached significance at the 5% level, with the remaining 20 being inconclusive. The corresponding figures were 30 and 18 with a fixed effects model.

The funnel plots for all 48 meta-analyses are available on the BMJ's website. Each trial's log odds ratio is plotted against the reciprocal of its standard error. The distribution of individual trial effect sizes is often highly irregular. Only a few of the plots closely conform to the classic funnel shape.

Estimated numbers of missing studies

Details of the estimates of the number of trials missed because of publication bias for each review are available on the BMJ's website. In all, 23 meta-analyses were estimated to have some degree of publication bias (L0 >0) with the random effects model; this increased to 26 with the fixed effects model. The number of missing trials was significant if L0 >3 for the range of trials included in the meta-analyses evaluated here.12 Eight meta-analyses reached this critical level under the random effects model and 10 under the fixed effects model. These estimates suggest that about half of meta-analyses may be subject to some level of publication bias and about a fifth have a strong indication of missing trials.

Changes in significance and magnitude of the overall pooled estimates

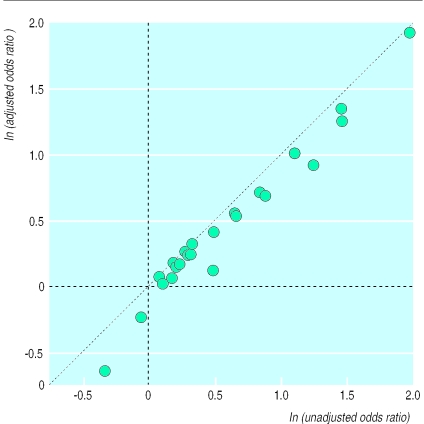

Imputing missing trials changed the estimates of the overall effect for all meta-analyses in which one or more trials was estimated as missing. Use of the random effects model indicated that in four reviews this would lead to significant changes in the conclusions. Three meta-analyses that were considered significant at the 5% level in the original analysis became non-significant (studies 5, 10, 13), and one that was considered non-significant became significantly negative (study 30). These results seem plausible in the light of the trial distribution in figure 2, and thus we deduce that around 5-10% of meta-analyses may be interpreted incorrectly because of publication bias.

Figure 2.

Scatter plot of unadjusted versus adjusted odds ratios after filling with trim and fill method under random effects model. Dotted line is line of equality, and vertical distance away from this line indicates magnitude by which original pooled estimates have been reduced by adjusting for publication bias

Figure 2 shows the effect of adjustment by the trim and fill method on the odds ratios for the 23 meta-analyses estimated to have one or more missing trials. Although only modest changes in outcome are observed in most instances, in six studies with a positive effect (studies 1, 3, 5, 10, 24, and 47) the reduction is greater than 30%. In two cases (studies 30 and 48) with a negative effect, adding missing studies had an even stronger effect (see table on BMJ's website for details).

Discussion

We found a clear indication of publication bias within this sample of studies from the Cochrane Database of Systematic Reviews. About half had some indication of publication bias, with a fifth having a strong indication. The potential effect of such bias varies from dataset to dataset. In some instances (for example, study 3) the number of studies estimated to be missing was relatively large but the adjusted estimate of effect size was only slightly lower than the original estimate. This can largely be explained by the presence of one or more studies that are much larger than those filled. These large studies have sufficient influence over the overall pooled estimate that asymmetry in the lower part of the funnel makes little impression on the overall pooled result. In other cases (for example, study 5), the estimated number of missing studies is smaller but the review's conclusions are altered.

The only other assessment of the impact of funnel plot asymmetry on a general collection of meta-analyses which is known to us is that of Egger et al,6 who applied their test to an earlier edition of the Cochrane Database of Systematic Reviews. Their inclusion criteria were less stringent than ours, including meta-analyses of categorical outcomes with five or more trials (compared with our minimum requirement of 10). They found significant indication of bias in five meta-analyses out of 38 examined (P<0.1). Our decision to include only meta-analyses with a minimum of 10 trials was largely arbitrary; however, five studies are usually too few to detect an asymmetric funnel.

Use of bias assessment

Although the development of methods for assessing publication bias has a reasonably long history, these methods are rarely used in practice.7 We searched the 48 original review reports from which the 48 meta-analyses were taken for references to publication bias and descriptions of any steps taken to deal with it. Thirty (63%) made no reference to publication bias. Five reviews mentioned examining a funnel plot, and three used the test of Egger et al. Since many of these reviews were written before that test was published, the test appears to have had a relatively high impact among reviewers since its publication. Generally, it was the more recently conducted reviews that had considered or tested for funnel plot asymmetry.

One possible reason for the lack of uptake of methods to deal with publication bias is that previous approaches have involved modelling methods that are difficult to implement and require lengthy calculations. The trim and fill method is theoretically simple and is quick and easy to implement. It may therefore be appropriate for routine use in evaluating meta-analyses. Our results seem plausible, with the four studies whose conclusions changed on adjustment all having visually skewed funnel plots.

Validity of results

Some cautionary remarks are needed in assessing our results. Since the method is based on the lack of symmetry in the funnel plot, and asymmetry might be due to factors other than publication bias,14 the results produced by trim and fill may not always reflect correction for publication bias. Moreover, the odds ratio outcome was used exclusively in this investigation. The appearance of a funnel plot can depend on the outcome measure used, and different results might be obtained in some instances if the risk difference or relative risk scale is used. The sensitivity of assessments of publication bias to the outcome measure used requires further investigation.

What is already known on this topic

Meta-analyses are subject to bias because smaller or non-significant studies are less likely to be published

Most meta-analyses do not consider the effect of publication bias on their results

What this study adds

A simple trim and fill adjustment method on studies in the Cochrane database suggests that publication bias may be present to some degree in about 50% of meta-analyses and strongly indicated in about 20%

Publication bias affected the results in less than 10% of meta-analyses

Researchers should always check for the presence of publication bias and perform a sensitivity analysis to assess the potential impact of missing studies

The idea of adjusting the results of meta-analyses for publication bias and imputing “fictional” studies into a meta-analysis is controversial.15 We certainly would not rely on results of imputed studies in forming a final conclusion, partly because asymmetry in a funnel plot may be due to factors other than publication bias. Any adjustment method should be used primarily as a form of sensitivity analysis, to assess the potential effect of missing studies on the meta-analysis, rather than as a means of adjusting results themselves.

If, as our study indicates, missing studies change the conclusions in less than 10% of meta-analyses, publication bias, although widespread, may not be a major practical problem. On the other hand, the fact that almost half the funnel plots examined seemed to exhibit some asymmetry leads us to conclude that routine evaluation for this bias should be an important step in any systematic review.

Footnotes

Funding: None.

Competing interests: None declared.

Figures illustrating the method and funnel plots of all trials and a full table of results are available on the BMJ's website

References

- 1. Song F, Easterwood A, Gilbody S, Duley L, Sutton AJ. Publication bias. In: Stevens A, Abrams K, Brazier J, Fitzpatrick R, Lilford R, eds. Handbook of research methods for evidence-based health care—insights from the NHS HTA programme. London: Sage Publications (in press).

- 2. Horton R. Medical editors trial amnesty. Lancet. 1997;350:756. doi: 10.1016/s0140-6736(05)62564-0.

- 3. Easterbrook PJ. Directory of registries of clinical trials. Stat Med. 1992;11:345–423.

- 4. Begg CB. Publication bias. In: Cooper H, Hedges LV, editors. The handbook of research synthesis. New York: Russell Sage Foundation; 1994. pp. 399–409.

- 5. Begg CB, Mazumdar M. Operating characteristics of a rank correlation test for publication bias. Biometrics. 1994;50:1088–1101.

- 6. Egger M, Smith GD, Schneider M, Minder C. Bias in meta-analysis detected by a simple, graphical test. BMJ. 1997;315:629–634. doi: 10.1136/bmj.315.7109.629.

- 7. Tallon D, Schneider M, Egger M. Quality of systematic reviews published in high impact general and specialist journals. Proceedings of 2nd symposium on systematic reviews: beyond the basics, Oxford, 1999. (www.ihs.ox.ac.uk/csm/talks.html#p8; accessed 1 June 2000.)

- 8. Linde K, Clausius N, Ramirez G, Melchart D, Eitel F, Hedges LV, et al. Are the clinical effects of homoeopathy placebo effects? A meta-analysis of placebo-controlled trials. Lancet. 1997;350:834–843. doi: 10.1016/s0140-6736(97)02293-9.

- 9. Cochrane Collaboration, editors. Cochrane Library. Issue 3. Oxford: Update Software; 1998. Cochrane database of systematic reviews.

- 10. Duval S, Tweedie R. Practical estimates of the effect of publication bias in meta-analysis. Australasian Epidemiologist. 1998;5:14–17.

- 11. Duval S, Tweedie R. A non-parametric “trim and fill” method of assessing publication bias in meta-analysis. J Am Stat Ass (in press).

- 12. Duval S, Tweedie R. Trim and fill: a simple funnel plot based method of testing and adjusting for publication bias in meta-analysis. Biometrics (in press).

- 13. Sankey SS, Weissfeld LA, Fine MJ, Kapoor W. An assessment of the use of the continuity correction for sparse data in meta-analysis. Communications In Statistics—Simulation and Computation. 1996;25:1031–1056.

- 14. Petticrew M, Gilbody S, Sheldon TA. Relation between hostility and coronary heart disease: evidence does not support link. BMJ. 1999;319:917. doi: 10.1136/bmj.319.7214.917.

- 15. Begg CB. Comment on Givens GH, Smith DD, Tweedie RL. Publication bias in meta-analysis: a Bayesian data-augmentation approach to account for issues exemplified in the passive smoking debate. Stat Sci. 1997;12:221–250.
