Abstract

We describe a functional architecture for word recognition that focuses on how orthographic and phonological information cooperates in initial form-based processing of printed word stimuli prior to accessing semantic information. Component processes of orthographic processing and orthography-to-phonology translation are described, and the behavioral evidence in favor of such mechanisms is briefly summarized. Our theoretical framework is then used to interpret the results of a large number of recent experiments that have combined the masked priming paradigm with electrophysiological recordings. These experiments revealed a series of components in the event-related potential (ERP), thought to reflect the cascade of underlying processes involved in the transition from visual feature extraction to semantic activation. We provide a tentative mapping of ERP components onto component processes in the model, hence specifying the relative time-course of these processes and their functional significance.

The neural/mental operations involved in the comprehension of written words are fundamental to the process of reading. Providing an understanding of how individual words can be identified from around 50,000 or so possibilities in less than half a second has been a continuing challenge for theorists in the field of Cognitive Science for the past four decades. Finding the answer to this question would represent a major step forward in understanding the most complex of human abilities – language-based communication. In this paper we start with a blueprint for a processing model of word comprehension and then present event-related potential (ERP) data in an attempt to specify the relative time-course of the component processes that are specified in the model.

The General Framework

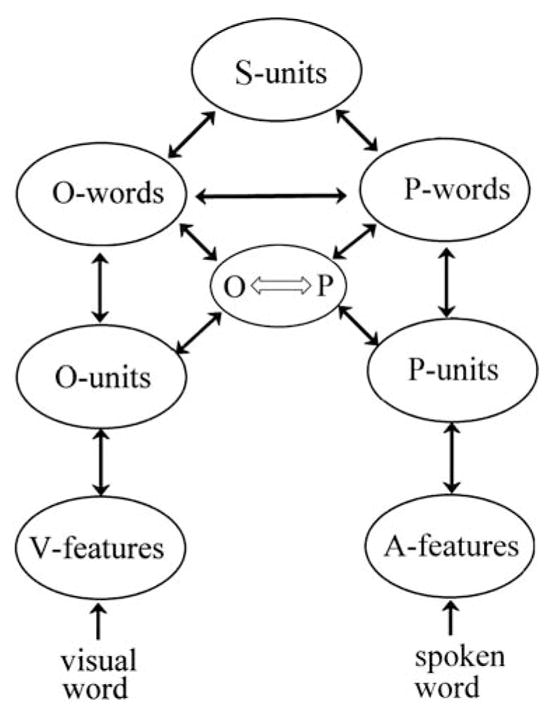

The architecture to be described is a hierarchical system that distinguishes separate featural, sublexical (i.e., smaller than words), and lexical (whole-word) levels of processing within each of the orthographic and phonological pathways. Among other things, this hierarchy permits connections between orthography and phonology to be established at different points in each pathway. An additional feature of this architecture is the inclusion of a final a-modal semantic system which is fed by both the orthographic and the phonological whole-word levels. The architecture can be implemented using the general computational principles of interactive-activation (McClelland and Rumelhart 1981), and as such is referred to as the bi-modal interactive-activation model (BIAM).

Variants of the architecture presented in Figure 1 have been described in previous work (e.g., Grainger and Ferrand 1994; Jacobs et al. 1998; Grainger et al. 2003, 2005; Grainger and Ziegler 2007). A printed word stimulus, like all visual stimuli, first activates a set of visual features (V-features) that we assume are largely outside the word recognition system per se. Features in turn activate a sublexical orthographic code (O-units) that in turn sends activation onto the central interface between orthography and phonology (O ⇔ P) that allows sublexical orthographic representations to be mapped onto their corresponding phonological representations, and vice versa. Thus a printed word stimulus rapidly activates a set of sublexical phonological representations that can influence the course of visual word recognition principally via the activation of whole-word phonological representations. These whole-word phonological representations also receive activation from whole-word orthographic representations, thus establishing connections between orthography and phonology at both sublexical and lexical levels of representation. In the following section we discuss possible mechanisms involved in processing information in the orthographic and phonological pathways of the BIAM, and describe how they interact during word reading. We then turn to examine the evidence from recent electrophysiological research that provides timing estimates for these different component processes.

Fig. 1.

Architecture of a bi-modal interactive activation model (BIAM) of word recognition in which a feature/sublexical/lexical division is imposed on orthographic (O) and phonological (P) representations. In this architecture, orthography and phonology communicate directly at the level of whole-word representations (O-words, P-words), and also via a sublexical interface (O ⇔ P). Semantic representations (S-units) receive activation from whole-word orthographic and phonological representations (the details of inhibitory within-level and between-level connections are not shown).

Component Processes in Visual Word Recognition

On presentation of a printed word stimulus, an elementary analysis of its visual features is rapidly succeeded by the first stage of processing specific to letter string stimuli. Orthographic processing involves the computation of letter identity and letter position. There is strong evidence suggesting that each individual letter identity in a string of letters can be processed simultaneously, in parallel. The typical function linking letter-in-string identification accuracy and position-in-string is W-shaped (for central fixations), with highest accuracy for the first and last letters, and for the letter on fixation (Stevens and Grainger 2003; Tydgat and Grainger 2009). A combination of two factors accurately describes this function: the decrease in visual acuity with distance from fixation, and the decreased crowding (interference from neighboring letters) for the outer letters in the string (i.e., the first and last letter of a word). There is evidence for a small sequential component to this function (with superior identification for letters at the beginning of the string), but as argued by Tydgat and Grainger (2009), this might simply reflect an initial letter advantage rather than a graded beginning-to-end processing bias.
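As a rough illustration of how these two factors could combine into a W-shaped function, the minimal sketch below multiplies an acuity term that falls off with distance from fixation by a crowding term that depends on the number of flanking letters. The multiplicative combination, the name `letter_accuracy`, and both parameter values are assumptions made purely for illustration; they are not estimates taken from the studies cited above.

```python
def letter_accuracy(n_letters, fixation, acuity_drop=0.08, crowding_cost=0.15):
    """Toy letter-in-string accuracy by position (0-based).

    acuity_drop and crowding_cost are arbitrary illustrative values,
    not fitted parameters.
    """
    accuracy = []
    for pos in range(n_letters):
        # Acuity decreases linearly with distance from the fixated letter.
        acuity = 1.0 - acuity_drop * abs(pos - fixation)
        # Outer letters have one flanker, inner letters have two.
        flankers = (pos > 0) + (pos < n_letters - 1)
        crowding = 1.0 - crowding_cost * flankers
        accuracy.append(acuity * crowding)
    return accuracy


# Five-letter string fixated on its central letter.
profile = letter_accuracy(n_letters=5, fixation=2)
print([round(a, 3) for a in profile])
```

With these placeholder values the profile is highest at the first, last, and fixated positions and dips in between, which is the qualitative W shape described above.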

This parallel independent processing of letter identities is the starting point for a description of printed word recognition. Grainger and van Heuven (2003) used the term ‘alphabetic array’ to describe a bank of letter detectors that would perform such processing (see Figure 2, and Grainger 2008, for a recent review). These letter detectors are thought to be location-specific, with location referring to position relative to eye fixation position along the horizontal meridian. Letter detectors signal the presence of a particular configuration of visual features at a given location. Stimuli that are not aligned with the horizontal meridian require a transformation of retinotopic coordinates onto this special coordinate system for letter strings. This specialized mechanism for processing strings of letters is hypothesized to develop through exposure to print, and its specific organization will depend on the characteristics of the language of exposure (e.g., McCandliss et al. 2003; Dehaene 2007; Tydgat and Grainger 2009). The alphabetic array codes for the presence of a given letter at a given location relative to eye fixation along the horizontal meridian. It does not say where a given letter is relative to the other letters in the stimulus, since each letter is processed independently of the others. Thus, processing at the level of the alphabetic array is insensitive to the orthographic regularity of letter strings. However, for the purposes of location-invariant word recognition, this location-specific map must be transformed into a ‘word-centered’ code such that letter identity is tied to within-string position independently of spatial location (cf. Caramazza and Hillis 1990). 
This is achieved in two different ways in our model, along the orthographic and phonological processing routes shown in Figure 2 (note that Figure 2 elaborates on certain aspects of the more general model depicted in Figure 1 by specifying more detail at the level of sublexical orthographic and phonological processes).
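The distinction between a location-specific and a word-centered code can be made concrete with a small sketch. It represents the alphabetic array as a mapping from retinal slots (relative to fixation) to letters, and derives a within-string position code from it. The function names and the slot numbering are hypothetical, and the sketch does not implement either of the two routes of the model; it only shows why a one-slot shift changes the location-specific code while leaving the word-centered code intact.

```python
def alphabetic_array(word, first_slot):
    """Location-specific code: {retinal slot: letter}, with slot 0 at fixation."""
    return {first_slot + i: ch for i, ch in enumerate(word.lower())}


def word_centered(array):
    """Location-invariant code: letters indexed by within-string position."""
    return [(pos, array[slot]) for pos, slot in enumerate(sorted(array))]


def slot_match(a, b):
    """Number of retinal slots that hold the same letter in both arrays."""
    return sum(1 for slot, ch in a.items() if b.get(slot) == ch)


centered = alphabetic_array("table", first_slot=-2)  # fixated on the middle letter
shifted = alphabetic_array("table", first_slot=-1)   # same word, one slot to the right

print(slot_match(centered, shifted))                     # location-specific overlap
print(word_centered(centered) == word_centered(shifted)) # word-centered codes compared
```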

Fig. 2.

Details of the orthographic and phonological pathways of the BIAM. Visual features extracted from a printed word feed activation into a bank of retinotopic letter detectors (1). Information from different processing slots in the alphabetic array provides input to a relative position code for letter identities (2) and a graphemic code (3). The relative-position coded letter identities control activation at the level of whole-word orthographic representations (5). The graphemic code enables activation of the corresponding phonemes (4), which in turn activate compatible whole-word phonological representations (6).

The orthographic and phonological processing routes of our model provide two pathways from visual features to word forms (whole-word orthographic and phonological representations), and from there onto semantics. The orthographic route (left-hand side of Figure 2) uses letters and letter combinations to home in on a unique whole-word orthographic representation. The phonological route (right-hand side of Figure 2) uses phonological information (phonemes and phoneme combinations) to home in on a unique whole-word phonological representation. One key motivation for this particular dual-route approach to visual word recognition is the consideration that visual word recognition is a mixture of two worlds – one whose main dimension is space – the world of visual objects, and the other whose main dimension is time – the world of spoken language. Skilled readers might therefore have learned to capitalize on this particularity, using structure in space in order to optimize the mapping of an orthographic form onto semantics, and using structure in time in order to optimize the mapping of an orthographic form onto phonology. Here we describe one specific mechanism for capturing spatial relations between letter identities in terms of their relative positions in the string. This is combined with a mechanism that uses the precise ordering of letter identities, hence mimicking the sequential structure of spoken language.

In the orthographic processing route, a coarse-grained orthographic code is computed in order to rapidly home in on a unique word identity, and the corresponding semantic representations (the fast track to semantics). In the phonological processing route, a fine-grained code is required to compute grapheme identities (the letters and letter combinations that correspond to phonemes in a given language) and their precise ordering in the string. These graphemes then activate the corresponding phonemes, which in turn lead to activation of the appropriate whole-word phonological representation and the corresponding semantic representations (see Perry et al. 2007, for a specific implementation of a graphemic parser). At this level of processing, both types of orthographic code are location-invariant, word-centered representations, such that letter identities are coded for their position in the word, not their location in space. The graphemic code uses a standard beginning-to-end position coding as previously applied in dual-route models of reading aloud (Coltheart et al. 2001; Perry et al. 2007), whereas the coarse-grained code uses ordered pairs of contiguous and non-contiguous letters (open-bigrams: Grainger and van Heuven 2003; Grainger and Whitney 2004). Evidence in favor of this type of coarse orthographic coding has been obtained using the masked priming paradigm in the form of robust priming effects with transposed-letter primes (e.g., gadren-GARDEN: Perea and Lupker 2004; Schoonbaert and Grainger 2004), and subset and superset primes (e.g., grdn-GARDEN, gamrdsen-GARDEN: Peressotti and Grainger 1999; Grainger et al. 2006b; Van Assche and Grainger 2006; Welvaert, Farioli and Grainger 2008).
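The following minimal sketch illustrates why a code based on ordered letter pairs tolerates transpositions, deletions, and insertions. It enumerates every ordered pair of letters in a string and measures how many of the target's pairs also occur in the prime. The function names, the overlap measure, and the optional `max_gap` limit on intervening letters are assumptions introduced for illustration; this is not the implementation used in the cited models.

```python
from itertools import combinations


def open_bigrams(string, max_gap=None):
    """Set of ordered letter pairs, contiguous and non-contiguous.

    max_gap optionally limits the number of intervening letters
    (hypothetical parameter; None means no limit).
    """
    s = string.lower()
    pairs = set()
    for i, j in combinations(range(len(s)), 2):
        if max_gap is None or j - i - 1 <= max_gap:
            pairs.add(s[i] + s[j])
    return pairs


def bigram_overlap(prime, target, max_gap=None):
    """Proportion of the target's open bigrams that are present in the prime."""
    target_pairs = open_bigrams(target, max_gap)
    prime_pairs = open_bigrams(prime, max_gap)
    return len(target_pairs & prime_pairs) / len(target_pairs)


for prime in ("gadren", "grdn", "gamrdsen"):
    print(prime, round(bigram_overlap(prime, "garden"), 3))
```

Under this measure the transposed-letter prime loses only the single pair whose order was reversed, the superset prime preserves every pair of the target, and the subset prime preserves all of the pairs it is able to form.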

Finally, one key behavioral result supports this specific form of dual-route architecture and disqualifies the traditional dual-route architecture (Coltheart et al. 2001): fast masked phonological priming (Perfetti and Bell 1991; Ferrand and Grainger 1992; 1993; 1994; Lukatela and Turvey 1994; Ziegler et al. 2000; Frost et al. 2003; see Rastle and Brysbaert 2006, for review and meta-analysis). According to the time-course analyses provided by Ferrand and Grainger (1993) and Ziegler et al. (2000), phonological code activation lags about 20–30 ms behind orthographic code activation. This is too fast for traditional dual-route models in which sublexical phonological influences on visual word recognition are mediated by output phonology (i.e., phoneme representations that are dedicated for the process of reading aloud as opposed to comprehension). Rastle and Brysbaert (2006) showed that Coltheart et al.’s (2001) dual-route computational (DRC) model could only simulate fast phonological priming by speeding up processing in the sublexical phonological route. However, this inevitably came at the cost of increasing the number of regularization errors to exception words (e.g., reading ‘pint’ as /pInt/), hence leading to an abnormally high level of errors when processing such words.

In the BIAM, sublexical phonological influences on visual word recognition are mediated by input phonology (i.e., phonological representations involved in spoken word recognition). In Figure 2 we can see that such phonological representations are rapidly activated following presentation of a printed word, but this activation requires an extra step compared to the orthographic pathway – that is the mapping of graphemes onto phonemes. The model therefore correctly predicts fast phonological priming effects that lag only slightly behind orthographic effects (see Diependaele et al. 2009 for a simulation of fast phonological priming in the BIAM). In the following section we will present findings from a new line of research that combines masked priming with electrophysiological recordings in an attempt to plot the time-course of the component processes described in Figures 1 and 2.
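The "extra step" argument can be sketched by writing the feedforward connections listed in the Figure 2 caption as a small graph and counting the number of links needed to reach each level. Treating each link as one processing step of equal duration is a simplifying assumption, as are the node labels and the function name; feedback and inhibitory connections are omitted.

```python
from collections import deque

# Feedforward links, following the Figure 2 caption (labels are illustrative).
CONNECTIONS = {
    "visual features": ["alphabetic array"],
    "alphabetic array": ["relative-position code", "graphemes"],
    "relative-position code": ["O-words"],
    "graphemes": ["phonemes"],
    "phonemes": ["P-words"],
    "O-words": ["P-words", "S-units"],
    "P-words": ["O-words", "S-units"],
}


def steps_from(start, graph):
    """Breadth-first search: fewest links from start to every reachable node."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in dist:
                dist[nxt] = dist[node] + 1
                queue.append(nxt)
    return dist


STEPS = steps_from("visual features", CONNECTIONS)
print(STEPS["O-words"], STEPS["P-words"])
```

In this simplified graph, whole-word phonological representations are reached one link later than whole-word orthographic representations, which is the qualitative lag described above.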

Electrophysiological Markers of Visual Word Recognition

What could be the time-course of the processing described in Figures 1 and 2? Event-related potentials (ERPs) would appear to provide the ideal tool for answering that question. Unlike traditional behavioral measures (such as reaction time) that have been used extensively to study visual word recognition, ERPs have been shown to provide reliable measures of continuous online brain activity reflecting sensory, perceptual and cognitive processing (see Luck 2005 for a tutorial on the ERP technique). Moreover, differences in the distribution of ERP components (the positive and the negative deflections in ERPs that reflect the underlying neural and cognitive processes) across the scalp can be used to make gross approximations about the location of physiological sources and therefore can be used to help bridge the gap between overt behavior and the underlying neural events (although the strength of the ERP technique is clearly its temporal and not its spatial resolution – see Luck 2005).

Our research has combined electrophysiological recordings with the masked priming technique. Masked priming involves the rapid presentation of a prime stimulus immediately prior to a clearly visible target stimulus, typically accompanied by forward and backward masking stimuli in order to reduce prime visibility to a minimum. In behavioral research, this technique has been found to be sensitive to the earliest processes of visual word recognition while avoiding the contamination from strategic factors that arises with visible prime stimuli (Forster 1998). Information extracted from briefly presented masked prime stimuli is thought to be integrated with the information extracted from the target stimulus (when this follows the prime in close temporal proximity), such that primes are not processed as a separate perceptual event. Our own research has established a cascade of ERP components that are sensitive to masked priming (Holcomb and Grainger 2006). These ERP components are the N/P150, N250, P325, and N400, and in this section we will summarize the research that has highlighted each of these components and begun to flesh out their functional significance.

The N/P150

The first component is a very focal bipolar ERP effect that we have labeled the N/P150 (see Figure 3). The ‘P’ (positive polarity) portion of this response has a posterior scalp distribution focused over occipital scalp sites (larger on the right side of the head – see Figure 3c) and is more positive to target words that are repetitions of the prior prime word compared to targets that are unrelated to their primes (see Figure 3a). In this same time-window, a comparable but opposite polarity (i.e., the ‘N’ for negative polarity) effect is visible at anterior sites. Although further experimentation might reveal non-identical generators for these two potentials, at present the available data suggest that they respond in a very similar manner, hence our tentative classification as a single ERP component. We first observed the N/P150 in two studies, one conducted in English (Figure 3a) and the other in French (Figure 3b). Moreover, as suggested by the ERPs plotted in Figure 3a, the N/P150 is sensitive to the degree of overlap between the prime and target items, with the greatest effects for targets that completely overlap their primes in every letter position and intermediate effects for targets that overlap in most but not all positions.

Fig. 3.

Grand average ERPs at the right frontal and occipital electrode sites for English words (a), French words (b) and a scalp voltage map centered on the 150 ms epoch (c). Note that in this and all subsequent ERP figures negative voltages are plotted in the upward direction. Stimulus onset (target) is indicated by the vertical calibration bar transecting the x-axis and 100 millisecond increments in time are indicated by tic marks. Voltage maps are differences between two conditions and reflect interpolated voltages across the scalp at a particular point in time.

Several other masked priming studies using other types of stimuli suggest that this earliest of repetition effects is likely due to differential processing at the level of visual features (V-features in Figure 1). In one study (Petit et al. 2006) using the same masked repetition priming parameters as the above word studies, but using single letters as primes and targets, we found that the N/P150 was significantly larger to mismatches between prime and target letter case, but more so when the features of the lower and upper case versions of the letters were physically different compared to when they were physically similar (e.g., A-A compared to a-A vs. C-C compared to c-C). In another line of research using pictures as primes and targets (Eddy et al. 2006) we have been able to further test the domain specificity of the N/P150 effect for letters and words in masked priming. In this picture priming study, we found an early posterior positivity and frontal negativity to pictures of objects that were physically different in the prime and target positions compared to repeated pictures. This effect had a similar scalp distribution (posterior right for the positivity and bilateral frontal for the negativity) and time-course to the N/P150 that we found for word and letter targets. However, while the effect for letters and words was on the order of 0.5 to 1.0 μV in amplitude, the effect for pictures was greater than 2 μV. Moreover, the picture effect peaked somewhat later (~190 ms). The larger and later picture effect could be due to the fact that the different prime and target pictures match or mismatch on a larger number of visual features and have more features than do single letters and five-letter words. Together, the results from these studies suggest that these early N/P effects are sensitive to processing at the level of visual features (V-features in the model in Figure 1) and that this process occurs in the time frame between 90 and 200 ms.

More recently, we have obtained additional evidence for the hypothesis that the N/P150 is driven by featural overlap across prime and target stimuli. Chauncey et al. (2008) manipulated changes in size (large vs. small) and font (same vs. different) between prime and target words in a masked repetition priming paradigm. A change versus no-change in font from the prime to target word was found to differentially affect repetition priming in the N/P150 component, while a change versus no-change in letter size from prime to target produced only main effects of size and repetition (see Figure 4). More specifically, while N/P150 repetition priming was not affected by a change in size across prime and target, it was significantly diminished when there was a change in font. This suggests that the N/P150 reflects processing at the level of size-invariant visual features.

Fig. 4.

Scalp map of ERPs from Chauncey et al. (2008) showing the effect of stimulus font on the N/P150 component. This map was formed from difference waves centered at 150 ms post-target onset. The difference waves were calculated by subtracting target ERPs with a font change from prime to target from target ERPs where prime and target font were the same.
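The subtraction behind such a map can be sketched for a single electrode as a point-by-point difference between two condition averages, followed by a mean over a time window. The function names, the sample times, and the voltage values below are arbitrary placeholders chosen for illustration; they are not data from Chauncey et al. (2008).

```python
def difference_wave(erp_a, erp_b):
    """Point-by-point difference (erp_a minus erp_b) between two averaged ERPs."""
    if len(erp_a) != len(erp_b):
        raise ValueError("ERPs must have the same number of samples")
    return [a - b for a, b in zip(erp_a, erp_b)]


def mean_amplitude(wave, times_ms, start_ms, end_ms):
    """Mean voltage of the samples falling inside [start_ms, end_ms]."""
    window = [v for v, t in zip(wave, times_ms) if start_ms <= t <= end_ms]
    return sum(window) / len(window)


# Placeholder values (microvolts) at one electrode, not real recordings.
times_ms = [0, 100, 150, 200]
same_font = [0.0, 1.0, 2.0, 1.0]
font_change = [0.0, 0.5, 1.0, 1.0]

# Same-font ERP minus font-change ERP, as in the caption above.
diff = difference_wave(same_font, font_change)
print(diff, mean_amplitude(diff, times_ms, 100, 200))
```

A scalp map would repeat this computation at every electrode for the chosen time point and interpolate between sites.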

Finally, Dufau, Grainger and Holcomb (2008) found evidence that the N/P150 generated by letter strings is sensitive to small changes in position of prime and target stimuli. A robust N/P150 repetition priming effect was found in the standard situation with primes in the same position as targets, but this effect disappeared when primes were shifted by one letter space to the left or to the right of targets (see Figure 5). This was taken as evidence that the N/P150 reflects the retinotopic mapping of visual features onto location-specific letter detectors. Since the ERP components following the N/P150 were not affected by displacement of the prime, this suggests that the shift from a location-specific to a location-invariant orthographic code is rapidly achieved after the N/P150 component. These results all converge to suggest that the N/P150 is modulated by the level of feature overlap between prime and target stimuli and, in the case of printed words, likely reflects the mapping of visual features onto location-specific letter representations (i.e., the first component process shown in Figures 1 and 2).

Fig. 5.

N/P150 repetition effect when primes are aligned with targets (b) or displaced by one letter space to the left (a) or to the right (c – adapted from Dufau et al. 2008).

The N250

Following the N/P150, a subsequent negative-going wave that starts as early as 110 ms and peaks around 250 ms is also sensitive to masked repetition priming of words, being more negative to targets that are unrelated to the previous masked prime word than those that are repeats of the prime (see Figure 6a). We referred to this as the N250 effect (by ‘effect’ we mean the difference between repeated and unrelated target ERPs). Unlike the earlier N/P150, the N250 has a more widespread scalp distribution being largest over midline and slightly anterior left hemisphere sites (see Figure 6c). Also, unlike the N/P150, N250-like effects were not observed in our picture or letter studies, but they were apparent when targets were pseudowords (Figure 6b). Finally, while the N250 was modulated when both primes and targets were visual letter strings, no such modulation was present when primes were visual and targets were auditory words (Figure 6d).

Fig. 6.

ERPs to words in a masked word priming study (Kiyonaga et al., 2007) for visual word targets show an N250 effect (a), visual nonword targets show a similar effect (b), scalp maps of the N250 effect (c), no N250 was observed to auditory targets in cross-modal masked priming (d), and N250 effects were observed in a second study (Holcomb and Grainger 2006) to both fully repeated words and partial word repetitions (e).

The N250 is sensitive to the degree of prime-target orthographic overlap, being somewhat larger for targets that overlap their primes by all but one letter (e.g., teble-TABLE) compared to targets that completely overlap with their primes (Holcomb and Grainger 2006 – see Figure 6e), and is not sensitive to small shifts of prime location (Dufau et al. 2008). This suggests that the N250 reflects processing in the orthographic and phonological pathways of our model, situated after the initial phase of retinotopic feature-letter processing. The likely locus would be the sublexical orthographic and phonological representations involved in mapping letters onto whole-word form representations.

According to the above interpretation of the N250 effect, phonological overlap across primes and targets should also cause amplitude changes in this component. Furthermore, the BIAM predicts that such phonological influences should only slightly lag behind orthographic effects. Grainger et al. (2006a) found evidence for phonological priming on the N250. In this study, target words were primed by briefly presented (50 ms) pattern-masked pseudowords that varied in terms of their phonological overlap with target words but were matched for orthographic overlap (e.g., bakon-BACON vs. bafon-BACON). In this example, the pseudoword ‘bakon’ would typically be pronounced by a native speaker of English as the real word ‘bacon’, and is referred to as a pseudohomophone of that word. As shown in Figure 7, phonological priming had its earliest influence starting around 225 ms post-target onset, and caused a significant reduction in N250 (and N400) amplitude compared to the orthographic controls. Grainger et al. (2006a) compared this phonological priming with the effects of transposed-letter primes (e.g., barin-BRAIN vs. bosin-BRAIN). Prior behavioral research suggests that transposed-letter primes reflect processing in relative-position coded letter representations (see Grainger 2008, for review). We therefore expected to see an effect of these primes on the N250, and arising earlier than our phonological priming effect. As can be seen in Figure 7, this was indeed what we observed. Our transposed-letter priming manipulation had most of its influence in the early phase of the N250, starting at around 200 ms post-target onset, and with a definite posterior distribution compared with the more anterior and later phonological priming effect.

Fig. 7.

(a) ERPs generated by target words following masked presentation of pseudohomophone primes (e.g., bakon-BACON) versus orthographic control primes (e.g., bafon-BACON). (b) and (c) Voltage maps calculated by subtracting target ERPs from the pseudohomophone condition from their control ERPs (b) and the Transposed Letter ERPs from their controls (c). Adapted from Grainger et al. (2006a).

Finally, it is important to note that in the Grainger et al. (2006a) study there was no effect of either phonological priming or transposed-letter priming on the N/P150 component. This is in line with our interpretation of this component as reflecting retinotopic feature-to-letter processing that is performed prior to the sublexical translation of orthography onto phonology. Furthermore, the fact that our phonological priming manipulation did not affect the N/P150 component is evidence that our orthographic controls were well matched to the pseudohomophone primes in terms of visual-orthographic overlap. Our transposed-letter manipulation did not affect this component since precise location is important at this retinotopic level of processing.

The P325

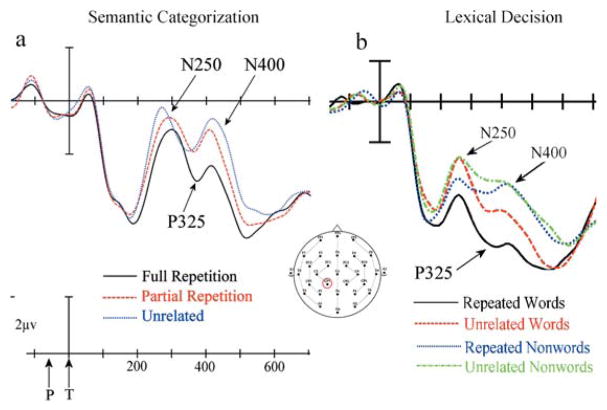

In the Holcomb and Grainger (2006) study, we also, for the first time, reported evidence for a second component that was sensitive to our masked priming manipulation. We referred to this component as the P325 and as can be seen in Figure 8a, it intervened between the N250 and the later N400, peaking near 325 ms. Evidence that this is actually a component independent from the surrounding negativities can be seen in Figure 8a. While the N250 and N400 produced similar graded responses to the three levels of priming (most negative to Unrelated, intermediate to Partial Repetition and least negative to Full Repetition), the P325 was more positive only in the Full Repetition condition (the Partial Repetition and Unrelated did not differ). This pattern of results is consistent with the hypothesis that the P325 is sensitive to processing within whole-word representations, being modulated only when prime and target words completely overlap. Further support for this hypothesis can also be seen in Figure 8b, where we have re-plotted the ERPs from Figure 6c contrasting repetition effects for words and pseudowords on a longer time scale (through 700 ms). In this figure the positivity we are calling the P325 can be clearly seen intervening between the N250 and N400. Moreover, while it is larger for repeated compared to unrelated words, it does not differentiate repeated compared to unrelated pseudowords. This makes sense given that pseudowords are not represented lexically and therefore should not produce repetition effects within whole-word representations themselves. Furthermore, the fact that Grainger et al. (2006a) found a significant effect of pseudohomophone priming on the P325 component is evidence that this component reflects processing at the level of whole-word phonological representations as well as whole-word orthographic representations (O-words and P-words in the BIAM). 
This interpretation of the P325 component fits with the results of other ERP and MEG (magneto-encephalography) studies, where effects thought to reflect lexical identification were found in approximately the same time-window (e.g., Friedrich et al. 2004; Pylkkänen et al. 2002). However, the use of different paradigms (with or without priming, and with different levels of prime visibility) complicates any direct comparison of ERP components across studies. What is critical, for the present purposes, is that there is a consensus with respect to the functional significance that is assigned to a given component tied to a specific time-window.

Fig. 8.

ERPs to visual targets in a masked priming paradigm when the task was semantic categorization (a – from Holcomb and Grainger 2006) and lexical decision (b – from Kiyonaga et al. 2007).

The N400

Following the N250 and P325, we have also seen evidence for the modulation of the N400 component in our masked repetition priming experiments. In the above two studies (Holcomb and Grainger 2006; Kiyonaga et al. 2007) N400 amplitudes were larger to words that were unrelated to their primes (Figure 8a,b), although this was not the case for pseudoword targets (Figure 8b). As in our previous work using single-word paradigms and supraliminal priming (e.g., Holcomb et al. 2002), we interpret this pattern of findings as being consistent with N400 repetition priming reflecting interactions between levels of representation for whole-words and semantics (the so-called ‘form-meaning interface’). In the BIAM, this interface arises between whole-word orthographic and phonological representations (O-words, P-words) and higher-level semantic representations (S-units) shown in Figure 1. In our view the N400 reflects the amount of effort involved in forming links between these levels, with larger N400 amplitudes indicating that more effort was involved.

Evidence for at least a partial independence of the above array of components comes from their different patterns of peak latencies and scalp distribution. As seen in the voltage maps in Figure 9 (derived from subtracting Full Repetition ERPs from Unrelated ERPs), the ERPs in the time periods of all three components (N250, P325, and N400) have relatively widespread distributions across the scalp. While the N250 tended to have a more frontal focus, the N400 had a more posterior focus. The P325, on the other hand, had an even more posterior (occipital) midline focus.

Fig. 9.

Scalp voltage maps for visual masked priming repetition effects in three different time epochs surrounding the three ERP components reported in Holcomb and Grainger (2006).
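The subtraction behind maps such as those in Figure 9 can be illustrated with a minimal sketch. The function names, the sample values, and the 400–500 ms measurement window below are hypothetical and chosen only for illustration; they are not taken from the studies reviewed here.

```python
def difference_wave(unrelated, repeated):
    """Point-by-point subtraction: unrelated minus repeated, in microvolts."""
    if len(unrelated) != len(repeated):
        raise ValueError("ERPs must have the same number of samples")
    return [u - r for u, r in zip(unrelated, repeated)]


def mean_amplitude(wave, times_ms, start_ms, end_ms):
    """Mean of the samples whose time stamp falls in [start_ms, end_ms]."""
    window = [v for v, t in zip(wave, times_ms) if start_ms <= t <= end_ms]
    if not window:
        raise ValueError("no samples in the requested window")
    return sum(window) / len(window)


# Made-up values for a single electrode, one sample every 50 ms (illustration only).
times_ms = [200, 250, 300, 350, 400, 450, 500]
unrelated = [-1.0, -2.0, -1.5, 0.5, -3.0, -3.5, -2.0]
repeated = [-0.5, -1.0, -1.0, 1.5, -1.5, -2.0, -1.5]

diff = difference_wave(unrelated, repeated)
# Hypothetical measurement window, used here only to show the call.
late_effect = mean_amplitude(diff, times_ms, 400, 500)
```

A negative value in the difference wave means that the unrelated condition was more negative-going than the repeated condition in that window. Repeating the computation at every electrode would give the values that a scalp map interpolates.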

ERPs and Prime–Target SOA

One question that emerges from the above review is why the numerous earlier studies manipulating word level variables have not reported similar N/P150, N250, and P325 effects. In almost all of these earlier studies, the first lexically sensitive ERP component is the N400 (see Barber and Kutas 2007 for a recent review; also see Holcomb 1993; Bentin et al. 1999; Holcomb et al. 2002). At one level the obvious answer is that none of these studies used the masked priming technique and that the earlier components may depend on some aspect of masking to be detected. However, several studies prior to Holcomb and Grainger (2006) that also used ERPs in the masked repetition priming paradigm did not find evidence for repetition effects earlier than the N400 (Misra and Holcomb 2003; Holcomb et al. 2005b). The biggest difference between these earlier studies and the Holcomb and Grainger experiment was the duration of the interval between the prime and target events. In Holcomb and Grainger (2006), and most of the work done by behavioral researchers interested in word recognition, the prime–target interval (so-called stimulus-onset-asynchrony or SOA) has been relatively short. In Holcomb and Grainger (2006), it was 70 ms (50 ms duration of the prime and a 20 ms backward mask with the target immediately replacing the backward mask). In earlier ERP masking studies, typical prime–target SOAs have been between 500 and 1000 ms. These longer SOAs have been used to avoid problems with the overlap of ERPs that occurs when multiple events are presented in a short period of time. So, one possibility is that many of the early ERP effects discussed above might be refractory at the longer SOAs used prior to the Holcomb and Grainger (2006) report.
This seems reasonable given that at least one behavioral study has found that the size of repetition priming effects in the masked priming paradigm diminishes with increasing SOA (for a fixed 29 ms prime duration), and is absent with SOAs of 500 ms or greater (Ferrand 1996b). To examine this possibility more systematically, Holcomb and Grainger (2007) manipulated the SOA between prime and target words in the masked repetition priming paradigm. They chose prime–target SOAs near the two extremes used in the earlier long-SOA and short-SOA studies (480 ms, cf. Holcomb et al. 2005b; 60 ms, cf. Holcomb and Grainger 2006), as well as two intermediate values (180 ms and 300 ms). Plotted in Figure 10 are ERPs from the CP1 electrode site for each of the four SOAs. As can be seen, the two shortest SOAs (60 and 180 ms) produced both the later N400 and the earlier N250. However, the two longer SOAs (300 and 480 ms) only produced the later N400.

Fig. 10.

Target ERPs from the SOA experiment of Holcomb and Grainger (2007).

In their second experiment Holcomb and Grainger (2007) held the SOA constant at 60 ms and instead manipulated the duration of the prime between 10 and 40 ms, filling in the balance of the SOA with the backward mask. Here the goal was to extend the study of the temporal dynamics of visual word recognition by determining the minimal amount of prime processing necessary to reveal ERP priming effects. Plotted in Figure 11 are the ERPs from this experiment. Note that while the N250 and N400 were clearly apparent at 30 and 40 ms prime durations, only the N250 was visible at 20 ms, and neither component was detectable at 10 ms.

Fig. 11.

Target ERPs from the prime duration experiment of Holcomb and Grainger (2007).
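The timing arithmetic of these designs can be written out as a minimal sketch. It assumes that the backward mask immediately follows the prime and that the target immediately replaces the mask, so that SOA equals prime duration plus mask duration. The function name `mask_duration` is hypothetical, and the four prime durations are those for which results are reported above.

```python
def mask_duration(soa_ms, prime_ms):
    """Backward-mask duration that fills the rest of the prime-target SOA."""
    if not 0 < prime_ms <= soa_ms:
        raise ValueError("prime duration must be positive and no longer than the SOA")
    return soa_ms - prime_ms


# Holcomb and Grainger (2006): 50 ms prime followed by a 20 ms backward mask.
soa_2006 = 50 + 20

# Second experiment of Holcomb and Grainger (2007): SOA held constant at 60 ms
# while the prime duration varies; the mask fills the balance.
schedule = {prime: mask_duration(60, prime) for prime in (10, 20, 30, 40)}
```

Under these assumptions, shortening the prime lengthens the mask by the same amount, so the target always appears 60 ms after prime onset.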

Connecting ERP Components to Component Processes

Figure 12 illustrates one possible scheme for how these different ERP components might map onto the printed word side of the BIAM. Above a diagram of the BIAM is plotted an ERP difference wave that contrasts unrelated and repeated words. The time frame of each component is indicated by the dashed lines connecting the ERP wave to hypothesized underlying processes in the model. The architecture is identical to Figure 1, but turned on its side to facilitate the alignment of component processes with ERP components. Furthermore, we indicate the breakdown of sublexical orthographic representations (O-units) into a location-specific and location-invariant code as described in Figure 2.

Fig. 12.

ERP masked repetition priming effects mapped onto the Bi-modal Interactive Activation Model (note that we have turned the model on its side to better accommodate the temporal correspondence between the model and the ERP effects). This version of the BIAM incorporates the breakdown of sublexical orthographic representations (O-units) into a location-specific, retinotopic (R) code and a location-invariant, word-centered (W) code, as described in Figure 2.

Starting with the N/P150, data presented above are consistent with this component reflecting some aspect of the process whereby visual features are mapped onto abstract representations relevant to a specific domain of processing – in this case letters and groups of letters. That the N/P150 reflects a domain non-specific process or family of processes is supported by our having found N/P150 effects for letters, letter strings, and pictures of objects. Following the N/P150 is the N250, which we have proposed reflects the output of a visual word-specific process – one whereby orthographic information is mapped onto whole-word representations, either directly or via phonology. Evidence for the domain-specificity of the N250 comes from the fact that we did not see evidence of such a component for spoken words or for pictures in our masked priming studies described above. After the N250 comes the P325, which we have tentatively suggested reflects processing within the lexical system itself (O-words). Finally, following the P325 come what we are identifying as two subcomponents of the N400. The N400w (‘w’ for word), we suggest, reflects interactions between word and concept level processes (O-word to S-unit). However, because there is ample evidence that N400s are sensitive to semantic processing beyond the word level (e.g., Van Berkum et al. 2003), we also tentatively suggest that concept to concept processing (which would support higher level sentence and discourse processing) is reflected in a later phase of the N400 (N400c, ‘c’ for concept).

We now turn to examine two sets of ERP findings – one that provides a critical test of the type of cross-modality interactions implemented in the BIAM – and the other that highlights the cascaded, interactive nature of processing.

Cross-modal Interactions

One critical aspect of the architecture described in Figures 1, 2, and 12 is the predicted occurrence of strong cross-modal interactions during word recognition. According to this highly interactive architecture, information related to modalities other than that used for stimulus presentation (i.e., the auditory modality for a printed word) should rapidly influence stimulus processing. In the preceding sections, we presented evidence for the involvement of phonological variables during visual word recognition. This finding is perhaps not that surprising given the fact that the process of learning to read requires the deliberate use of phonological information (letters and letter clusters must be associated with their corresponding sounds). However, the BIAM also makes the less obvious prediction that spoken word recognition should be influenced by the orthographic characteristics of word stimuli. Is there any electrophysiological evidence for this, and if yes, what does this tell us about the time-course of cross-modal transfer?

One key behavioral demonstration of cross-modal interactions in word recognition has been obtained using masked cross-modal priming. In this paradigm a briefly presented, pattern-masked visual prime stimulus is immediately followed by an auditory target word. Subjects typically perform a lexical decision on target stimuli (Kouider and Dupoux 2001; Grainger et al. 2003). Grainger et al. (2003) found significant effects of visual primes on auditory target processing at prime exposure durations of only 50 ms. In the Grainger et al. study, cross-modal effects of pseudohomophone primes (e.g., ‘brane’ as a prime for ‘brain’) emerged with longer exposure durations (67 ms), equivalent to the duration necessary for obtaining within-modality (visual-visual) pseudohomophone priming. This is evidence that the phonological route of the BIAM (e.g., the right-hand pathway of Figure 2) is slower than the orthographic route, such that information from a visual stimulus arrives at whole-word phonological representations more rapidly via whole-word orthographic representations than via the sublexical interface between orthography and phonology.

Kiyonaga et al. (2007) examined masked cross-modal priming using ERP recordings to visual and auditory target words and 50 and 67 ms prime exposures. As predicted by the BIAM, we found that at the shorter (50 ms) prime exposures, repetition priming effects on ERPs arose earlier and were greater in amplitude when targets were in the same (visual) modality as primes. However, with slightly longer prime durations (67 ms), the effect of repetition priming on N400 amplitude was just as strong for auditory (across-modality) as for visual (within-modality) targets. The within-modality repetition effect had apparently already asymptoted at the shorter prime duration, while the cross-modal effect was evidently lagging behind the within-modality effect by a small amount of time. Figure 13 shows the change in cross-modal priming from 50 ms to 67 ms prime durations in the Kiyonaga et al. study. This is exactly what one would predict on the basis of the time-course of information in the BIAM. With sufficiently short prime durations (i.e., 50 ms in the testing condition of the Kiyonaga et al. study), feedforward information flow from the prime stimulus has most of its influence in the orthographic pathway, and it is only with a longer prime duration that feedforward activation from a visual prime stimulus can significantly influence activation in whole-word phonological representations. Furthermore, our results suggest that cross-modal transfer is dominated by lexical-level pathways, since we found very little evidence for any early (pre-N400) effects of cross-modal repetition even at the 67 ms prime duration. More precisely, our visual masked prime stimuli would appear to be mostly affecting activity in whole-word phonological representations, either via whole-word orthographic representations or via the sublexical grapheme–phoneme interface (O–P) of the BIAM (see Figure 12). 
This raises the issue of the nature of the phoneme representations involved in translating an orthographic code into phonology, and their role in spoken-word recognition. The specific architecture of the BIAM shown in Figure 1 maintains a distinction between the phoneme representations involved in grapheme–phoneme translation (O⇔P) and the sublexical representations involved in spoken-word recognition (P-units). Whether or not this distinction should be maintained is clearly an important issue for future research.

Fig. 13.

Auditory Target ERPs in a cross-modal masked priming paradigm from two prime durations (adapted from Kiyonaga et al. 2007).

Not surprisingly, very strong modulations of ERP amplitude are observed in cross-modal priming with supraliminal prime exposures (Anderson and Holcomb 1995; Holcomb et al. 2005a). More important, however, is the fact that we observed a strong asymmetry in the size of cross-modal priming effects as a function of the direction of the switch. Visual primes combined with auditory targets were much more effective in reducing N400 amplitude during target word processing than were auditory primes combined with visual targets. This observed asymmetry in cross-modal priming effects might well reflect an asymmetry in the strength of connections linking orthography to phonology on the one hand, and phonology to orthography on the other. The process of learning to read requires the explicit association of newly learned orthographic representations with previously learned phonological representations, thus generating strong connections from orthography to phonology that could be the source of the strong influence of visual primes on auditory targets shown in Figure 14. On the other hand, the association between phonology and orthography is learned explicitly for the purpose of producing written language, and these associations need not directly influence the perception of visually presented words. This can explain why the auditory–visual cross-modal priming effect shown in Figure 14 is much smaller than the corresponding visual–auditory effect. Within the framework of the BIAM, this would suggest that the bi-directional connections between orthographic and phonological representations differ in strength as a function of direction, with stronger connections in the direction of orthography to phonology. Asymmetric strength of connections at the sublexical interface between orthography and phonology (O ⇔ P in Figure 1) would explain why visual words are better primes for auditory targets than vice versa.

Fig. 14.

Supraliminal cross-modal repetition priming results of Holcomb et al. (2005a; 200 ms SOA) showing asymmetry of effects as a function of modality (visual primes – auditory targets on the left, auditory primes – visual targets on the right).

Semantic Influences on Word Recognition

Semantic priming (e.g., car-TRUCK), like repetition priming, produces characteristic differences in ERPs. The most frequently reported effect is that semantically primed target words produce an attenuated N400 component compared to non-primed targets (e.g., Bentin et al. 1985; Holcomb 1988). Such N400 ‘effects’ are believed by many to be sensitive to the lexical and/or semantic properties of the stimulus and its context (e.g., Holcomb 1993). According to this view, words that are easily integrated with their contextual framework produce an attenuated N400, while those that are impossible or difficult to integrate with the surrounding context generate larger N400s. It is controversial as to whether such N400 effects might require conscious processing of the prime and target events. A handful of studies (e.g., Deacon et al. 2000; Kiefer and Spitzer 2000; Kiefer 2002; Grossi 2006) have challenged the notion of a conscious requirement based on having found significant masked semantic priming effects on the N400. These studies have generally concluded that N400 semantic priming reflects a primarily automatic process that does not require conscious processing. We recently have presented two studies challenging this view. Holcomb et al. (2005b) demonstrated that while N400 masked repetition priming effects were unrelated to the detectability of primes, masked semantic priming effects were predicted by how readily participants detected the primes. This suggests that the above-mentioned demonstrations of masked semantic priming might be due to what Holcomb et al. referred to as ‘conscious leakage’ of semantic information from the masked primes. In a more powerful follow-up study directly comparing repetition and semantic priming, Holcomb and Grainger (2007) again showed that at short prime durations, only repetition priming produced any traces of a priming effect. They could find no evidence of masked semantic priming (see Figure 15). 
They concluded that semantically related pairs did not provide sufficient overlap in processing at the word and/or semantic level to support the rapid interactivity necessary to produce priming effects when primes are masked and presented only a short time before the target. Given the hierarchical nature of processing, as illustrated in the BIAM, semantic priming logically requires a greater amount of processing in order to become detectable compared with form-level priming effects.

Fig. 15.

Masked Repetition and Semantic priming (from Holcomb and Grainger, in press).

The above failure to obtain ERP evidence of masked semantic priming could be due to the relatively weak manipulation involved, and the fact that there is no general consensus as to how to measure semantic relatedness. For example, if weak and strong semantic pairs are mixed in an experiment, then priming effects might be watered down and difficult to detect. Non-cognate translation equivalents (e.g., the English word ‘tree’ and its French translation ‘arbre’) arguably provide the closest possible semantic relation between two distinct word forms. They therefore provide an ideal testing ground for the interplay between form-level and semantic-level processes during visual word recognition (e.g., Grainger and Frenck-Mestre 1998). Recently we have run a masked non-cognate translation priming ERP study in order to have a more sensitive measure of priming effects (Midgley et al. in press). This study included both within-language repetition priming and between-language translation priming. We also blocked the target words by language, thus minimizing the bilingual nature of the study. In each of two experiments, participants were presented with target words in a single language (Exp 1 in English, their L2; Exp 2 in French, their L1), while masked primes were presented in both languages. This is important because it has been suggested (Grosjean and Miller 1994) that bilinguals may have different processing modes for situations where both of the bilingual’s languages are required versus when only a single language is used. In Experiment 1, both within-language repetition (tree-TREE) and L1-L2 translation priming (arbre-TREE) produced effects on the N250 component as well as the later N400 component (see Figure 16 top). In Experiment 2, only within-language repetition (L1-L1) produced effects on the N250 while both types of priming (L1-L1 and L2-L1) produced effects on the N400 (see Figure 16 bottom).
Unlike the within-language semantic priming manipulation reviewed above, these results suggest rapid involvement of semantic representations during ongoing form-level processing of printed words. Within the framework of the BIAM, this suggests that when prime–target semantic overlap is high enough, then masked primes can generate significant activity in semantic representations, and this semantic-level activation feeds back to modulate ongoing processing at the level of lexical and sublexical form representations.

Fig. 16.

From Midgley et al. (in press). L1–L2 translation priming (top) and L2–L1 translation priming (bottom).

Other evidence for early effects of semantics has been found in recent work investigating morphological priming (Morris et al. 2007; Morris et al. in press). This research compared priming effects from primes that were true derivations of target words (e.g., farmer-farm) with those from pseudo-derivations (e.g., corner-corn) and from primes with purely orthographic overlap (e.g., scandal-scan). In line with recent behavioral research (see Rastle and Davis in press, for review), we found priming effects from semantically opaque pseudo-derivations in about the same time-window as priming effects from semantically transparent morphologically related primes, accompanied by no effect of orthographic primes. Our most recent work (Morris et al. in press) suggests that morphological structure, independently of semantic transparency, is driving priming effects in the early phase of the N250, and that semantic transparency starts to have an influence in the later phase of this component. In other words, a prime such as ‘corner’ will automatically be parsed into its (pseudo-)morphological constituents (corn + er), but this initial morpho-orthographic segmentation is then perturbed by the semantic incompatibility between ‘corn’ and ‘corner’. In line with the results of Midgley et al., this semantic influence starts about 250 ms post-target onset.

There are at least two possible ways to accommodate this and other evidence (e.g., Dell’Acqua et al. 2007) for fast semantic activation within the framework of our time-course model shown in Figure 12. Recent models of visual object recognition (e.g., Bar et al. 2006) draw a distinction between slow processing of high-spatial frequency information (providing information about the details of the object) and faster processing of low-spatial frequency information (providing information about the gross outline of the object). The visuo-orthographic processing described in our model is thought to be performed by neural processors in the ventral visual stream, with activity spreading from primary visual areas through to occipito-temporal cortex (e.g., Dehaene et al. 2005). This corresponds to the slow high-spatial frequency route of visual object processing, and therefore ignores the possibility of rapid processing of low-spatial frequency information derived from printed words (i.e., word shape). Since all relevant stimuli in the studies cited above were in lowercase, it is possible that some word shape information was available and used to make a fast guess at word identity, hence enabling fast onset of semantic influences relative to ongoing orthographic and phonological processing. Future research could easily test this possibility by manipulating the availability of word shape information in prime stimuli.

An alternative means of integrating early semantic influences in our model is by analogy to the word superiority effect, and the apparent dilemma this effect posed for letter-based approaches to visual word recognition. How can a word be recognized by its component letters if these letters are harder to identify than the word itself (see Grainger 2008, for discussion)? Cascaded interactive processing (McClelland and Rumelhart 1981) provided the response to that dilemma, and provides a similar solution for early semantic effects in visual word recognition. Due to the cascaded nature of processing in the BIAM, activation starts to build up in semantic representations when the bulk of processing is still at the level of sublexical form representations. This early semantic activation can then influence ongoing processing at the level of sublexical form representations via top-down connections. Therefore, the early effects of semantics found in the above-cited studies could reflect the earliest feedback from semantic representations, affecting ongoing processing at the level of form representations. Future research could test this hypothesis by using stimuli that vary in the amount of top-down support they can receive (e.g., random consonant strings vs. pronounceable pseudowords).
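The logic of cascaded processing with top-down feedback can be illustrated with a toy simulation. This is a sketch under strong simplifying assumptions, not an implementation of the BIAM: it uses a single unit per level (sublexical form, whole-word, semantic), and the update rule, the `rate` value, and the `feedback` weight are all hypothetical.

```python
def run_cascade(steps, rate=0.2, feedback=0.0):
    """Toy cascade over three levels: sublexical form, whole-word, semantic.

    On every step each level drifts toward its current input. Activation is
    passed upward continuously (no threshold), and `feedback` scales a
    top-down input from the semantic level to the sublexical level.
    Returns a list of (sublexical, word, semantic) tuples, one per step.
    """
    sub = word = sem = 0.0
    history = []
    for _ in range(steps):
        sub_input = 1.0 + feedback * sem  # stimulus plus top-down support
        new_sub = sub + rate * (sub_input - sub)
        new_word = word + rate * (sub - word)
        new_sem = sem + rate * (word - sem)
        sub, word, sem = new_sub, new_word, new_sem
        history.append((sub, word, sem))
    return history


no_feedback = run_cascade(steps=10)
with_feedback = run_cascade(steps=10, feedback=0.5)
```

In this toy version the semantic unit is already above zero on the third step, when the sublexical unit is still at less than half of its no-feedback asymptote, and enabling feedback raises sublexical activation on later steps. This mirrors, in a purely qualitative way, the argument that early semantic activation can modulate ongoing form-level processing.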

Summary and Conclusions

We have presented a functional architecture for visual word recognition (the bi-modal interactive-activation model, BIAM) that describes a set of component processes and how these processing stages interact and connect to the system designed to recognize spoken words during auditory language processing. We then described some specific mechanisms for orthographic and phonological processing within this general framework, and provided ERP evidence for the connectivity and relative timing of these component processes. The model describes how visual features are first mapped onto a location-specific orthographic code before bifurcating onto two pathways for invariant word recognition. One pathway computes a coarse orthographic code in order to rapidly constrain word identity, and the other computes a more fine-grained orthographic code used to activate phonology. The cooperation of these two routes helps optimize word recognition given the particular nature of the printed word as a visual object and a linguistic stimulus. The relative timing of priming effects found in various ERP components was found to be compatible with the overall architecture of the BIAM and enabled a straightforward mapping of ERP components onto component processes in the model. This not only allowed us to tentatively define the functional significance of these components, but also provided important support for the BIAM. This research paves the way toward formulating a general account of skilled reading that takes into consideration the constraints imposed by the printed word as a visual object (structure in space) as well as the constraints imposed by spoken language (structure in time).

Acknowledgments

Support from NICHD grants HD25889 and HD043251 to Phillip J. Holcomb, and ANR grant 06-BLAN-0337 to Jonathan Grainger.

Biographies

Jonathan Grainger graduated from Manchester University, UK, before obtaining his PhD in Psychology from René Descartes University, Paris, France. He is currently director of the Laboratoire de Psychologie Cognitive at the University of Provence in Marseille, a CNRS-funded research centre in cognitive psychology. He has studied basic processes in visual word recognition in monolinguals and bilinguals, using behavioral experimentation and computational modeling. His latest work has focused on early visuo-orthographic processing of letters and letter strings, with recent publications on this topic in Trends in Cognitive Sciences, Journal of Experimental Psychology: Human Perception and Performance, and Language and Cognitive Processes.

Phillip Holcomb obtained his PhD in Psychology from the New Mexico State University. He is currently a Professor in the Department of Psychology at Tufts University, Medford, MA, and the director of the NeuroCognition Lab. His research, including recent papers in Psychological Science, the Journal of Cognitive Neuroscience, Psychophysiology, Brain Research, and Brain and Language, uses ERPs to examine brain mechanisms of language comprehension, especially those involved in visual word recognition.

Footnotes

Deciding whether a particular ERP effect is due to modulation of underlying negative-going or positive-going EEG activity is frequently difficult, and is always, to some degree, arbitrary. In this regard we could have just as easily referred to the effect illustrated in Figure 3 as an enhancement of the N1 because it clearly overlaps the exogenous N1. However, we have chosen to refer to this as a positive-going effect for two reasons. First, we think it likely that the mismatching unrelated targets are driving the differences between the unrelated and repeated ERPs in this epoch. Second, this nomenclature is also consistent with the naming scheme used for the N250 and N400, where it is the relative polarity of the ERP to the unrelated condition that is used to describe the polarity of the effect. Note, however, that we will violate this scheme when we introduce the P325 effect. Here we will argue that it is the repeated targets that drive the effect.

Works Cited

- Anderson JE, Holcomb PJ. Auditory and visual semantic priming using different stimulus onset asynchronies: An event-related brain potential study. Psychophysiology. 1995;32:177–90. doi: 10.1111/j.1469-8986.1995.tb03310.x.

- Bar M, Kassam KS, Ghuman AS, Boshyan J, Schmid AM, Dale AM, Hamalainen MA, Marinkovic K, Schacter DL, Rosen BR, Halgren E. Top-down facilitation of visual recognition. Proceedings of the National Academy of Sciences USA. 2006;103:449–54. doi: 10.1073/pnas.0507062103.

- Barber HA, Kutas M. Interplay between computational models and cognitive electrophysiology in visual word recognition. Brain Research Reviews. 2007;53:98–123. doi: 10.1016/j.brainresrev.2006.07.002.

- Bentin S, McCarthy G, Wood CC. Event-related potentials, lexical decision and semantic priming. Electroencephalography and Clinical Neurophysiology. 1985;60:343–55. doi: 10.1016/0013-4694(85)90008-2.

- Bentin S, Mouchetant-Rostaing Y, Giard MH, Echallier JF, Pernier J. ERP manifestations of processing printed words at different psycholinguistic levels: Time course and scalp distribution. Journal of Cognitive Neuroscience. 1999;11:35–60. doi: 10.1162/089892999563373.

- Caramazza A, Hillis AE. Spatial representation of words in the brain implied by studies of a unilateral neglect patient. Nature. 1990;346:267–69. doi: 10.1038/346267a0.

- Chauncey K, Holcomb PJ, Grainger J. Effects of stimulus font and size on masked repetition priming: An ERP investigation. Language and Cognitive Processes. 2008;23:183–200. doi: 10.1080/01690960701579839.

- Coltheart M, Rastle K, Perry C, Langdon R, Ziegler JC. DRC: A dual route cascaded model of visual word recognition and reading aloud. Psychological Review. 2001;108:204–256. doi: 10.1037/0033-295x.108.1.204.

- Deacon D, Hewitt S, Yang CM, Nagata M. Event-related potential indices of semantic priming using masked and unmasked words: Evidence that the N400 does not reflect a post-lexical process. Cognitive Brain Research. 2000;9(2):137–46. doi: 10.1016/s0926-6410(99)00050-6.

- Dehaene S. Les neurones de la lecture. Paris: Odile Jacob; 2007.

- Dehaene S, Cohen L, Sigman M, Vinckier F. The neural code for written words: A proposal. Trends in Cognitive Sciences. 2005;9:335–341. doi: 10.1016/j.tics.2005.05.004.

- Dell’Acqua R, Pesciarelli F, Jolicœur P, Eimer M, Peressotti F. The interdependence of spatial attention and lexical access as revealed by early asymmetries in occipito-parietal ERP activity. Psychophysiology. 2007;44:436–443. doi: 10.1111/j.1469-8986.2007.00514.x.

- Diependaele K, Ziegler JC, Grainger J. Fast Phonology and the Bi-Modal Interactive-Activation Model. 2009 Manuscript submitted for publication.

- Dufau S, Grainger J, Holcomb PJ. An ERP investigation of location invariance in masked repetition priming. Cognitive, Affective and Behavioral Neuroscience. 2008;8:222–28. doi: 10.3758/cabn.8.2.222.

- Eddy M, Schmid A, Holcomb PJ. A new approach to tracking the time-course of object perception: Masked repetition priming and event-related brain potentials. Psychophysiology. 2006;43:564–68. doi: 10.1111/j.1469-8986.2006.00455.x.

- Ferrand L. The masked repetition priming effect dissipates when increasing the interstimulus interval: Evidence from word naming. Acta Psychologica. 1996;91:15–25.

- Ferrand L, Grainger J. Phonology and orthography in visual word recognition: Evidence from masked nonword priming. Quarterly Journal of Experimental Psychology. 1992;45A:353–72. doi: 10.1080/02724989208250619.

- Ferrand L, Grainger J. The time-course of phonological and orthographic code activation in the early phases of visual word recognition. Bulletin of the Psychonomic Society. 1993;31:119–22.

- Ferrand L, Grainger J. Effects of orthography are independent of phonology in masked form priming. Quarterly Journal of Experimental Psychology. 1994;47A:365–82. doi: 10.1080/14640749408401116.

- Forster KI. The pros and cons of masked priming. Journal of Psycholinguistic Research. 1998;27:203–33. doi: 10.1023/a:1023202116609.

- Friedrich CK, Kotz S, Friederici AD. ERPs reflect lexical identification in word fragment priming. Journal of Cognitive Neuroscience. 2004;16:541–52. doi: 10.1162/089892904323057281.

- Frost R, Ahissar M, Gotesman R, Tayeb S. Are phonological effects fragile? The effect of luminance and exposure duration on form priming and phonological priming. Journal of Memory and Language. 2003;48:346–78.

- Grainger J. Cracking the orthographic code: An introduction. Language and Cognitive Processes. 2008;23:1–35.

- Grainger J, Frenck-Mestre C. Masked translation priming in bilinguals. Language and Cognitive Processes. 1998;13:601–23. [Google Scholar]

- Grainger J, Whitney C. Does the huamn mnid raed wrods as a wlohe? Trends in Cognitive Sciences. 2004;8:58–59. doi: 10.1016/j.tics.2003.11.006.

- Grainger J, Ziegler J. Cross-code consistency effects in visual word recognition. In: Grigorenko EL, Naples A, editors. Single-word Reading: Biological and Behavioral Perspectives. Mahwah, NJ: Lawrence Erlbaum Associates; 2007. pp. 129–57.

- Grainger J, Ferrand L. Phonology and orthography in visual word recognition: Effects of masked homophone primes. Journal of Memory and Language. 1994;33:218–33.

- Grainger J, Van Heuven W. Modeling letter position coding in printed word perception. In: Bonin P, editor. The Mental Lexicon. New York: Nova Science Publishers; 2003. pp. 1–24.

- Grainger J, Diependaele K, Spinelli E, Ferrand L, Farioli F. Masked repetition and phonological priming within and across modalities. Journal of Experimental Psychology: Learning, Memory and Cognition. 2003;29:1256–69. doi: 10.1037/0278-7393.29.6.1256.

- Grainger J, Muneaux M, Farioli F, Ziegler J. Effects of phonological and orthographic neighborhood density interact in visual word recognition. Quarterly Journal of Experimental Psychology. 2005;58A:981–98. doi: 10.1080/02724980443000386.

- Grainger J, Kiyonaga K, Holcomb PJ. The time-course of orthographic and phonological code activation. Psychological Science. 2006a;17:1021–6. doi: 10.1111/j.1467-9280.2006.01821.x.

- Grainger J, Granier JP, Farioli F, Van Assche E, van Heuven W. Letter position information and printed word perception: The relative-position priming constraint. Journal of Experimental Psychology: Human Perception and Performance. 2006b;32:865–84. doi: 10.1037/0096-1523.32.4.865.

- Grosjean F, Miller J. Going in and out of languages: An example of bilingual flexibility. Psychological Science. 1994;5:201–6.

- Grossi G. Relatedness proportion effects on masked associative priming: An ERP study. Psychophysiology. 2006;43:21–30. doi: 10.1111/j.1469-8986.2006.00383.x.

- Holcomb PJ. Automatic and attentional processing: An event-related brain potential analysis of semantic priming. Brain and Language. 1988;35:66–85. doi: 10.1016/0093-934x(88)90101-0.

- Holcomb PJ. Semantic priming and stimulus degradation: Implications for the role of the N400 in language processing. Psychophysiology. 1993;30:47–61. doi: 10.1111/j.1469-8986.1993.tb03204.x.

- Holcomb PJ, Grainger J. The time-course of masked repetition priming: An event-related brain potential investigation. Journal of Cognitive Neuroscience. 2006;18:1631–43. doi: 10.1162/jocn.2006.18.10.1631.

- Holcomb PJ. The effects of stimulus duration and prime-target SOA on ERP measures of masked repetition priming. Brain Research. 2007;1180:39–58. doi: 10.1016/j.brainres.2007.06.110.

- Holcomb PJ. ERP effects of short interval masked associative and repetition priming. Journal of Neurolinguistics. doi: 10.1016/j.jneuroling.2008.06.004. in press.

- Holcomb PJ, O’Rourke T, Grainger J. An event-related brain potential study of orthographic similarity. Journal of Cognitive Neuroscience. 2002;14:938–50. doi: 10.1162/089892902760191153.

- Holcomb PJ, Anderson A, Grainger J. An electrophysiological investigation of cross-modal repetition priming at different stimulus onset asynchronies. Psychophysiology. 2005a;42:493–507. doi: 10.1111/j.1469-8986.2005.00348.x.

- Holcomb PJ, Reder L, Misra M, Grainger J. Masked priming: An event-related brain potential study of repetition and semantic effects. Cognitive Brain Research. 2005b;24:155–72. doi: 10.1016/j.cogbrainres.2005.01.003.

- Jacobs AM, Rey A, Ziegler JC, Grainger J. MROM-p: An interactive activation, multiple read-out model of orthographic and phonological processes in visual word recognition. In: Grainger J, Jacobs AM, editors. Localist Connectionist Approaches to Human Cognition. Hillsdale, NJ: Erlbaum; 1998. pp. 147–88.

- Kiefer M. The N400 is modulated by unconsciously perceived masked words: Further evidence for an automatic spreading activation account of N400 priming effects. Cognitive Brain Research. 2002;13:27–39. doi: 10.1016/s0926-6410(01)00085-4.

- Kiefer M, Spitzer M. Time course of conscious and unconscious semantic brain activation. Neuroreport. 2000;11(11):2401–7. doi: 10.1097/00001756-200008030-00013.

- Kiyonaga K, Midgley KJ, Holcomb PJ, Grainger J. Masked cross-modal repetition priming: An ERP investigation. Language and Cognitive Processes. 2007;22:337–76. doi: 10.1080/01690960600652471.

- Kouider S, Dupoux E. A functional disconnection between spoken and visual word recognition: Evidence from unconscious priming. Cognition. 2001;82:B35–49. doi: 10.1016/s0010-0277(01)00152-4.

- Luck SJ. An introduction to the event-related potential technique. Cambridge, MA: MIT Press; 2005.

- Lukatela G, Turvey MT. Visual lexical access is initially phonological: 2. Evidence from phonological priming by homophones and pseudohomophones. Journal of Experimental Psychology: General. 1994;123:331–53. doi: 10.1037//0096-3445.123.4.331.

- McCandliss BD, Cohen L, Dehaene S. The visual word form area: Expertise for reading in the fusiform gyrus. Trends in Cognitive Sciences. 2003;7:293–99. doi: 10.1016/s1364-6613(03)00134-7.

- McClelland JL, Rumelhart DE. An interactive activation model of context effects in letter perception. 1. An account of basic findings. Psychological Review. 1981;88:375–407.

- Midgley K, Holcomb PJ, Grainger J. Masked repetition and translation priming in second language learners: A window on the time-course of form and meaning activation using ERPs. Psychophysiology. in press.

- Misra M, Holcomb PJ. The electrophysiology of word-level masked repetition priming. Psychophysiology. 2003;40:115–30. doi: 10.1111/1469-8986.00012.

- Morris J, Frank T, Grainger J, Holcomb PJ. Semantic transparency and masked morphological priming: An ERP investigation. Psychophysiology. 2007;44:506–21. doi: 10.1111/j.1469-8986.2007.00538.x.

- Morris J, Grainger J, Holcomb PJ. An electrophysiological investigation of early effects of masked morphological priming. Language and Cognitive Processes. 2008;23:1021–56. doi: 10.1080/01690960802299386.

- Perea M, Lupker SJ. Can CANISO activate CASINO? Transposed-letter similarity effects with nonadjacent letter positions. Journal of Memory and Language. 2004;51:231–46.

- Peressotti F, Grainger J. The role of letter identity and letter position in orthographic priming. Perception and Psychophysics. 1999;61:691–706. doi: 10.3758/bf03205539.

- Perfetti CA, Bell LC. Phonemic activation during the first 40 ms of word identification: Evidence from backward masking and priming. Journal of Memory and Language. 1991;30:473–85.

- Perry C, Ziegler JC, Zorzi M. Nested incremental modeling in the development of computational theories: The CDP+ model of reading aloud. Psychological Review. 2007;114:273–315. doi: 10.1037/0033-295X.114.2.273.

- Petit J, Grainger J, Midgley KJ, Holcomb PJ. On the time-course of processing in letter perception: A masked priming ERP investigation. Psychonomic Bulletin and Review. 2006;13:674–81. doi: 10.3758/bf03193980.

- Pylkkänen L, Stringfellow A, Marantz A. Neuromagnetic evidence for the timing of lexical activation: An MEG component sensitive to phonotactic probability but not to neighborhood density. Brain and Language. 2002;81:666–78. doi: 10.1006/brln.2001.2555.

- Rastle K, Davis M. Morphological decomposition is based on the analysis of orthography. Language and Cognitive Processes. 2008;23:942–71.

- Rastle K, Brysbaert M. Masked phonological priming effects in English: Are they real? Do they matter? Cognitive Psychology. 2006;53:97–145. doi: 10.1016/j.cogpsych.2006.01.002.

- Schoonbaert S, Grainger J. Letter position coding in printed word perception: Effects of repeated and transposed letters. Language and Cognitive Processes. 2004;19:333–67.

- Stevens M, Grainger J. Letter visibility and the viewing position effect in visual word recognition. Perception and Psychophysics. 2003;65:133–51. doi: 10.3758/bf03194790.

- Tydgat I, Grainger J. Serial position effects in the identification of letters, symbols, and digits. Journal of Experimental Psychology: Human Perception and Performance. 2009 doi: 10.1037/a0013027. in press.

- Van Assche E, Grainger J. A study of relative-position priming with superset primes. Journal of Experimental Psychology: Learning, Memory and Cognition. 2006;32:399–415. doi: 10.1037/0278-7393.32.2.399.

- Van Berkum JJA, Zwitserlood P, Brown CM, Hagoort P. When and how do listeners relate a sentence to the wider discourse? Evidence from the N400 effect. Cognitive Brain Research. 2003;17:701–18. doi: 10.1016/s0926-6410(03)00196-4.

- Welvaert M, Farioli F, Grainger J. Graded effects of number of inserted letters in superset priming. Experimental Psychology. 2008;55:54–63. doi: 10.1027/1618-3169.55.1.54.

- Ziegler J, Ferrand L, Jacobs AM, Rey A, Grainger J. Visual and phonological codes in letter and word recognition: Evidence from incremental priming. Quarterly Journal of Experimental Psychology. 2000;53A:671–92. doi: 10.1080/713755906.