Abstract

Determining whether children prefer reinforcement delivered contingent on their behavior or independent of it is important, given the ubiquity of these programmed schedules of reinforcement. The current study evaluated the efficacy of and preference for social interaction within differential reinforcement of alternative behavior (DRA) and noncontingent reinforcement (NCR) schedules with typically developing children. Results showed that 7 of the 8 children preferred the DRA schedule; 1 child was indifferent. We also demonstrated a high degree of procedural fidelity, which supports the conclusion that preference was influenced by the presence of a contingency under which reinforcement could be obtained. These findings are discussed in terms of (a) the selection of reinforcement schedules in practice, (b) variables that influence children's preferences for contexts, and (c) the selection of experimental control procedures when evaluating the effects of reinforcement.

Keywords: concurrent-chains arrangement, contingency strength, differential reinforcement, noncontingent reinforcement, preference assessment

Two treatments routinely programmed after determining the social function of problem behavior (Iwata, Dorsey, Slifer, Bauman, & Richman, 1982/1994) involve either the contingent or noncontingent delivery of reinforcement. Contingent reinforcement is usually delivered following the occurrence of a target response (e.g., differential reinforcement); by contrast, noncontingent reinforcement (NCR) is delivered independent of responding via a time-based schedule (Vollmer, Iwata, Zarcone, Smith, & Mazaleski, 1993). Selecting either schedule type may be influenced by the relative effectiveness of each (Kahng, Iwata, DeLeon, & Worsdell, 1997) or practical issues associated with implementation (Hagopian, Fisher, & Legacy, 1994).

Kahng et al. (1997) and Hanley, Piazza, Fisher, Contrucci, and Maglieri (1997) both showed that differential reinforcement of an alternative response (DRA) and the noncontingent delivery of the same amount of social reinforcement (i.e., adult attention) produced similar reductions in problem behavior maintained by adult attention. Their collective results suggested that schedule selection could not be determined on the basis of efficacy. It has been argued that NCR schedules may be more practical because constant proximity to the client and observation of behavior are not necessary with these schedules (Hagopian et al., 1994; Vollmer et al., 1993). By contrast, a DRA schedule may be advantageous because the inherent response-dependent contingency strengthens some aspect of a child's repertoire; this feature may ultimately allow children to be more active in educational and social contexts (Durand, 1999).

Individual preferences may be an additional factor to consider when determining how to program social reinforcement. In addition to demonstrating the efficacy of DRA and NCR schedules, Hanley et al. (1997) identified children's preferences for these schedules and used these data as the basis for recommending the means through which social reinforcement should be provided. Their preference assessment involved a concurrent-chains arrangement, in which pressing one of three table-top switches outside a therapy room produced brief access to one of three different schedules (DRA, NCR, or extinction) in the room. With equal rates and distribution of reinforcement presumably obtained under DRA and NCR, the only difference between these two conditions was the programmed contingency. Both children allocated 70% of their selections toward the switch that produced access to the DRA or response-dependent schedule. Therefore, the value of the DRA schedule lay not in its direct effects on behavior but in the observation that the children preferred contingent social interaction.

Determining schedules of reinforcement that are preferred by children has implications beyond the selection of interventions for problem behavior. Identification of schedules that promote desirable behavior while decreasing undesirable behavior has historically been of interest in educational contexts (Neef et al., 2004); however, students' preferences for the delivery schedule of putative reinforcers (e.g., teacher praise and social interaction) have not been evaluated. Given the high frequency of teacher–student interactions and the fact that they are sometimes explicitly programmed (Hall, Lund, & Jackson, 1968; Hart, Reynolds, Baer, Brawley, & Harris, 1968), information regarding the value of social reinforcement schedules to those directly experiencing them should be of interest.

Continued evaluation of children's preferences for contingent and noncontingent reinforcement is important for several additional reasons. First, the findings of Hanley et al. (1997) were limited to only 2 children with intellectual disabilities who had been admitted to an inpatient hospital for the assessment and treatment of severe problem behavior. Additional research should be conducted with a larger number of children, including children of typical development, to determine whether the preference for contingent reinforcement has generality. Second, preference should be assessed within commonly experienced social contexts to determine whether the relation is observed under more typical conditions. Third, measuring and analyzing the extent to which the programmed schedules were implemented with fidelity would increase confidence in the identified relations. Fourth, determining the response–reinforcer relations experienced by the children may allow a description of more specific factors that influence children's preference for the schedule.

Therefore, we initially demonstrated the reinforcing value of social interaction with 8 children of typical development. An evaluation of the efficacy of and preference for obtaining social interaction via a DRA or NCR schedule within a play context was then conducted. The extent to which the schedules were implemented with fidelity, and the response–reinforcer relations experienced by the children, were also described and analyzed.

METHOD

Participants and Setting

Children of typical development enrolled in a full-day, university-based, inclusive preschool participated. Following parental consent procedures and institutional review board approval, children were selected who exhibited a manding repertoire that included the responses “excuse me” and “Kevin” and provided consistent daily assent. At the onset of the study, Amy and Cia were 4 years 7 months old, Dee and Sam were 4 years 4 months old, Ted was 5 years 4 months old, Beth was 3 years 8 months old, Ed was 4 years 8 months old, and Lou was 3 years 7 months old. Sessions were conducted in a room (3 m by 3 m) equipped with a one-way observation window. The child was situated in a chair at the middle of a table (0.5 m by 1.5 m), and the experimenter sat in a chair at the end of the table.

Color Preference Assessment

Prior to all schedule comparisons, the colored cards that served as initial-link stimuli were selected empirically. A preference assessment similar to that of Fisher et al. (1992) was conducted to identify moderately preferred colors in an attempt to decrease the likelihood that selections would be influenced by an existing color bias. No differential consequences followed colored-card selections; praise followed every selection. Moderately preferred colors, defined as neither the most nor the least preferred, were randomly assigned to each schedule described below.
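The color-selection logic can be sketched in Python, assuming a paired-stimulus format in which every color is presented once with every other color and a color's selection percentage is the proportion of its presentations on which it was chosen. The colors, the child's choices, and the helper names below are hypothetical; the exclusion rule (drop the most and least preferred colors) follows the definition above, and excluding every color tied at either extreme is an added assumption.

```python
from collections import Counter
from itertools import combinations


def selection_percentages(trials):
    """Percentage of presentations on which each color was chosen.

    trials: list of (color_a, color_b, chosen) tuples, one per paired presentation.
    """
    presented = Counter()
    chosen = Counter()
    for color_a, color_b, pick in trials:
        presented[color_a] += 1
        presented[color_b] += 1
        chosen[pick] += 1
    return {color: 100 * chosen[color] / presented[color] for color in presented}


def moderately_preferred(percentages):
    """Colors ranked strictly between the most and least preferred (ties at either end excluded)."""
    top, bottom = max(percentages.values()), min(percentages.values())
    return sorted(c for c, p in percentages.items() if bottom < p < top)


# Hypothetical child whose choices always follow this fixed ranking.
ranking = ["red", "blue", "green", "yellow", "purple"]
trials = [(a, b, min(a, b, key=ranking.index)) for a, b in combinations(ranking, 2)]
pct = selection_percentages(trials)
print(moderately_preferred(pct))  # ['blue', 'green', 'yellow']
```

With five colors this leaves three candidates, enough to assign one color to each schedule in a three-schedule comparison.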

Efficacy Evaluation

The efficacy evaluation was conducted before children were allowed to choose among the schedules for two purposes: (a) to teach the association between selecting a particular colored card and experiencing the corresponding schedule of reinforcement and (b) to evaluate the effects of each schedule on rates of child responding. During efficacy sessions, the experimenter directed one selection of each colored card available in the initial link. Following a card selection, contingency-specifying instructions and a brief role play of the schedule's parameters were provided, and then the child entered the session room to experience the schedule for 3 min. The placement of the colored cards was randomly determined for the first session of each session block and rotated clockwise for each subsequent session. The order of efficacy sessions was randomly determined and counterbalanced. The effects on responding were evaluated within a multielement design, and the evaluation ended when visual inspection showed clear patterns in the data. Once discriminated performance was observed in the terminal links, the preference assessment was initiated.
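A minimal sketch of the card-placement and session-order rules follows, under stated assumptions: the cards are treated as a left-to-right row on the door, the “clockwise” rotation is modeled as shifting each card one position with wraparound, and “counterbalanced” is read as each condition appearing once, in random order, within every block. The function names and labels are illustrative only.

```python
import random


def card_positions(cards, n_sessions, rng):
    """Card order (left to right) for each session in a block.

    The first session's order is random; every later session shifts each card
    one position, with the last card wrapping to the first position. Treating
    this shift as the "clockwise" rotation is an assumption of the sketch.
    """
    order = list(cards)
    rng.shuffle(order)
    positions = []
    for _ in range(n_sessions):
        positions.append(list(order))
        order = order[-1:] + order[:-1]  # rotate one position
    return positions


def session_order(conditions, n_blocks, rng):
    """Random order within each block; every condition appears once per block."""
    sequence = []
    for _ in range(n_blocks):
        block = list(conditions)
        rng.shuffle(block)
        sequence.extend(block)
    return sequence


rng = random.Random(1)  # fixed seed so the example is reproducible
print(card_positions(["DRA card", "NCR card", "EXT card"], 3, rng))
print(session_order(["DRA", "NCR", "EXT"], 2, rng))
```

Passing a seeded `random.Random` instance keeps each block's randomization reproducible for record keeping.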

Prior to each efficacy session, the experimenter stood next to the child and said, “Hand me the [color] card.” In the contingent reinforcement (CR) and DRA schedules, the experimenter held up the associated colored card and said, “[Child's name], when you hand me the [color] card, it is your time now; when you say ‘Kevin’ or ‘excuse me’ I can talk with you, and I can play with you.” In NCR, the experimenter said, “[Child's name], when you hand me the [color] card, sometimes I am going to talk with you and play with you, and sometimes I am not.” In the no-reinforcement and extinction schedules, the experimenter held up the associated colored card and said, “[Child's name], when you hand me the [color] card, it is my time now; I will not be able to talk with you, and I will not be able to play with you.” After providing the instructions for a given schedule, the experimenter then initiated a brief role play by saying, “Let's practice, one, two, three, start.” During the role play in the CR and DRA schedules, when the child said, “Kevin” or “excuse me,” 3 to 5 s of social interaction was experienced. If the target response was not readily emitted, a prompt (“say ‘Kevin’ or ‘excuse me’”) was provided. In the NCR role play, social interaction was delivered independent of the child's responding. By contrast, in the no-reinforcement and extinction schedules, the experimenter did not look at the child for 10 s. The schedule interactions were role played twice. The child and experimenter then entered the session room to experience the associated contingencies (described below).

Preference Assessment

Each session consisted of one initial link and one terminal link within a concurrent-chains arrangement (Hanley et al., 1997). The initial link consisted of concurrently available colored cards (10 cm by 10 cm), placed 25 cm apart on the session room door, from which the child selected a single card. Instructions and role plays were no longer provided before the terminal link during the preference assessment. Blocks of three to six sessions were conducted daily; between sessions, a short break (4 to 5 min) occurred in which the experimenter and child engaged in a child-selected activity (e.g., coloring, playing catch). Before each block of sessions, the child entered a room filled with toys and games and selected one item or activity; the selected item was freely available in all terminal links.

An efficacy evaluation followed by a preference assessment was conducted initially for the CR (target response: “Kevin”) and no-reinforcement conditions. A subsequent efficacy evaluation and preference assessment were conducted for the DRA (target response: “excuse me”), NCR, and extinction conditions for all 8 children. Before each initial-link selection, the experimenter asked the child to “hand me the card that you like the best.” If the child did not respond within 5 s, the instruction was repeated; every child made a selection after the second prompt. The experimenter and child then immediately entered the session room to experience the associated schedule. The preference assessment ended when the preference criterion was met: a terminal link was considered preferred when its colored card had been selected four more times than any other card. If the criterion was not met by the 20th selection, the assessment was discontinued.
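The stopping rule lends itself to a short sketch. The function below is a hypothetical helper, not the authors' software: it tallies initial-link selections and stops as soon as one option leads every other option by four selections, or after the 20th selection. The example sequences are invented.

```python
from collections import Counter


def assess_preference(selections, margin=4, max_selections=20):
    """Apply the stopping rule to a sequence of initial-link selections.

    Returns (preferred_option, selections_used); preferred_option is None if no
    option led every other option by `margin` within `max_selections` selections.
    Options never selected count as 0, so they need not be listed.
    """
    counts = Counter()
    used = 0
    for used, choice in enumerate(selections[:max_selections], start=1):
        counts[choice] += 1
        leader, leader_n = counts.most_common(1)[0]
        runner_up = max((n for opt, n in counts.items() if opt != leader), default=0)
        if leader_n - runner_up >= margin:
            return leader, used
    return None, used


print(assess_preference(["DRA", "DRA", "NCR", "DRA", "DRA", "DRA"]))  # ('DRA', 6)
print(assess_preference(["DRA", "NCR"] * 10))  # (None, 20)
```

Under this rule, choosing one card on every trial meets the criterion after four selections, and choosing it on five of six trials meets it after six.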

Descriptions of the Schedule Comparisons

Comparison of CR and no reinforcement

This schedule comparison was conducted to determine whether brief social interaction delivered by the experimenter would function as a reinforcer and to determine if the described concurrent-chains arrangement was capable of detecting children's preferences. In the no-reinforcement condition, responding did not produce any stimulus changes; that is, the experimenter never diverted the direction of his eyes or head from the reading materials. By contrast, during the CR condition, 3 to 5 s of social interaction was programmed to be delivered immediately after the child said “Kevin.”

Comparison of DRA, NCR, and extinction

This schedule comparison evaluated the effectiveness of DRA, NCR, and extinction for reducing the previously reinforced response “Kevin.” The procedures for DRA replicated the manner in which CR was arranged, except the target response, “excuse me” (rather than “Kevin”) was reinforced with 3 to 5 s of social interaction. Extinction replicated all procedures as described for the no-reinforcement schedule. Social interaction was delivered on a time-based schedule in NCR. The frequency and temporal distribution of social interaction delivered in NCR were yoked to those observed in the preceding DRA session. Yoking involved segmenting the duration of a DRA session (i.e., 180 s) into 36 5-s intervals and marking an X for each interval in which a reinforcer was delivered. During the next NCR session, the marked data sheet cued the experimenter to deliver 3 to 5 s of social interaction in every interval marked with an X.
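The yoking procedure can be expressed as a small transformation from DRA reinforcer onsets to NCR delivery windows. This sketch assumes reinforcer onsets are recorded in seconds from session start; the function names and onset times are hypothetical. Because one X is marked per interval, two DRA reinforcers that begin in the same 5-s interval yield only one NCR delivery, which is one reason a check on the amount of reinforcement delivered is useful.

```python
SESSION_S = 180   # DRA session length (3 min)
INTERVAL_S = 5    # yoking interval
N_INTERVALS = SESSION_S // INTERVAL_S  # 36 intervals


def mark_intervals(dra_onsets):
    """One mark ("X") per 5-s interval in which a DRA reinforcer was delivered."""
    marks = [False] * N_INTERVALS
    for t in dra_onsets:
        if 0 <= t < SESSION_S:
            marks[int(t // INTERVAL_S)] = True
    return marks


def ncr_windows(marks):
    """(start, end) in seconds of each NCR interval in which interaction is due."""
    return [(i * INTERVAL_S, (i + 1) * INTERVAL_S) for i, marked in enumerate(marks) if marked]


# Hypothetical reinforcer onsets (s) from one DRA session.
dra_onsets = [2.0, 3.5, 12.0, 179.9]
marks = mark_intervals(dra_onsets)
print(ncr_windows(marks))  # [(0, 5), (10, 15), (175, 180)]
```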

Response Measurement

Within the initial link, each card selection, defined as handing one of the available cards to the experimenter, was scored using paper and pencil. Within the terminal links, the dependent measures included two vocal responses, saying “Kevin” and “excuse me.” In addition, each delivery of 3 to 5 s of social interaction by the experimenter was scored; social interaction was defined as any vocal (e.g., “Wow, that looks really interesting”) or nonvocal (e.g., a head nod and smile) behavior directed toward the child. Terminal-link data were collected continuously in real time on a handheld computer, which provided a second-by-second record of target responses and events.

Interobserver Agreement

Interobserver agreement was assessed by having a second observer simultaneously but independently score card selections in the initial links and child vocal responses and social interaction in the terminal links. Initial-link agreement was calculated by dividing the number of selections for which both observers scored the same card by the total number of selections. Agreement data were collected for 92% of all initial-link selections, and there was 100% agreement across all 8 children. Agreement for child vocal responses and reinforcer deliveries (i.e., episodes of social interaction) was determined by partitioning terminal links into 10-s bins and comparing the observers' records on an interval-by-interval basis. Within each interval, the smaller number of responses was divided by the larger number; if neither observer scored a target response or event in an interval, agreement for that interval was scored as 100%. These quotients were then converted to percentages and averaged across all intervals. At least 30% of sessions were scored by a second observer for all participants. Agreement for child vocal responses averaged 97% across participants (agreement for individual sessions ranged from 74% to 100%). Agreement for the delivery of social interaction averaged 96% across participants (agreement for individual sessions ranged from 71% to 100%).
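The two agreement calculations can be sketched as follows. The sketch assumes each observer's terminal-link record is a list of event times in seconds within a 180-s session, and it treats a 10-s bin in which neither observer scored anything as 100% agreement. The records and function names are hypothetical.

```python
def initial_link_agreement(obs_1, obs_2):
    """Percentage of initial-link selections scored as the same card by both observers."""
    same = sum(a == b for a, b in zip(obs_1, obs_2))
    return 100 * same / len(obs_1)


def bin_counts(times, session_s=180, bin_s=10):
    """Number of scored responses (or events) in each 10-s bin of a session."""
    counts = [0] * (session_s // bin_s)
    for t in times:
        counts[min(int(t // bin_s), len(counts) - 1)] += 1
    return counts


def proportional_agreement(times_1, times_2, session_s=180, bin_s=10):
    """Mean per-bin agreement: smaller count / larger count, empty bins = 100%."""
    bins_1 = bin_counts(times_1, session_s, bin_s)
    bins_2 = bin_counts(times_2, session_s, bin_s)
    scores = [1.0 if x == y == 0 else min(x, y) / max(x, y) for x, y in zip(bins_1, bins_2)]
    return 100 * sum(scores) / len(scores)


# Hypothetical observer records (seconds into a 180-s terminal link).
print(initial_link_agreement(["red", "blue", "red"], ["red", "blue", "red"]))  # 100.0
print(round(proportional_agreement([1, 2, 15], [1, 15, 16]), 1))  # 94.4
```

Because empty bins count as full agreement, sessions with sparse responding tend to produce high session-level averages even when the few scored bins disagree.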

Procedural Fidelity

Our primary question was whether children preferred response-dependent or response-independent reinforcement. Therefore, determining the extent to which the schedules were accurately implemented and reinforcement was correctly yoked allows (a) assessment of the degree to which the schedules experienced by the child matched the programmed schedules and (b) identification of factors such as unequal amounts of reinforcement across DRA and NCR that may have influenced preference.

For the DRA sessions, our procedural fidelity measures assessed (a) whether reinforcers were delivered when and only when a response occurred (dependent fidelity) and (b) whether they were delivered within 2 s following each response (temporal fidelity). The assessment of dependent fidelity allowed errors of omission (withholding scheduled reinforcer deliveries) and commission (delivering unscheduled reinforcers) to be detected, and the assessment of temporal fidelity allowed timing errors (e.g., delayed reinforcement) to be detected.

Response–reinforcer occurrences with fidelity were defined as responses followed by a reinforcer before another response occurred, because on a fixed-ratio (FR) 1 schedule every response should produce 3 to 5 s of social interaction. Occurrences with error included a response not followed by a reinforcer before another response was observed (error of omission) and a reinforcer preceded by another reinforcer before a response was observed (error of commission). Dependent fidelity was calculated by dividing the number of occurrences with fidelity by the total number of occurrences with fidelity and with error and multiplying by 100. The occurrences with dependent fidelity were then tested for temporal fidelity. Occurrences with temporal fidelity were defined as responses followed by a reinforcer within 2 s; temporal fidelity was calculated by dividing that number by the total number of occurrences with dependent fidelity and multiplying by 100. The dependent and temporal fidelity percentages were then averaged across all sessions to yield a single fidelity percentage (and range) for each measure and child.
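A sketch of the dependent and temporal fidelity calculations follows, assuming each DRA session is available as a time-sorted list of response ("R") and reinforcer-onset ("S") events. Two scoring choices are assumptions not stated in the text: a reinforcer delivered before any response is counted as an error of commission, and a response still unreinforced when the session ends is left unscored. The event list is invented.

```python
def dra_fidelity(events, max_delay=2.0):
    """Dependent and temporal fidelity (%) for one DRA (FR 1) session.

    events: time-sorted list of ("R", t) target responses and ("S", t) reinforcer onsets.
    Returns (dependent, temporal); either is None when its denominator is zero.
    """
    delays = []       # response-to-reinforcer delay for each occurrence with fidelity
    omissions = 0     # response not reinforced before the next response
    commissions = 0   # reinforcer with no unreinforced response preceding it
    pending = None    # time of the most recent response still awaiting reinforcement
    for kind, t in events:
        if kind == "R":
            if pending is not None:
                omissions += 1
            pending = t
        elif kind == "S":
            if pending is not None:
                delays.append(t - pending)
                pending = None
            else:
                commissions += 1
    # A response still pending at session end is left unscored (assumption).
    total = len(delays) + omissions + commissions
    dependent = 100 * len(delays) / total if total else None
    timely = sum(d <= max_delay for d in delays)
    temporal = 100 * timely / len(delays) if delays else None
    return dependent, temporal


events = [("R", 1.0), ("S", 1.5), ("R", 10.0), ("S", 13.0),
          ("R", 20.0), ("R", 25.0), ("S", 25.4), ("S", 40.0)]
dependent, temporal = dra_fidelity(events)
print(dependent, round(temporal, 1))  # 60.0 66.7
```

Temporal fidelity is computed only over the occurrences that already passed the dependent test, matching the order of the two checks described above.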

For the NCR sessions, our procedural fidelity measure involved an assessment of whether the number of reinforcer deliveries matched the number programmed to occur based on the yoked DRA session (amount fidelity). This was calculated by determining the number of reinforcers delivered and programmed in each pair of DRA and NCR sessions, and then dividing the smaller number by the larger number and multiplying by 100 to produce a percentage of fidelity. The percentages were averaged across all sessions to yield a single fidelity percentage (and range) for each child.
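Amount fidelity reduces to a ratio per yoked session pair, which is then summarized as a mean and range. In this sketch the session counts are hypothetical, and scoring a pair in which zero reinforcers were both programmed and delivered as 100% is an assumption.

```python
def amount_fidelity(delivered, programmed):
    """Smaller count / larger count x 100 for one yoked DRA-NCR session pair."""
    if delivered == programmed:
        return 100.0  # includes the 0-vs-0 case (an assumption; the text does not address it)
    return 100 * min(delivered, programmed) / max(delivered, programmed)


def summarize(pairs):
    """Mean, minimum, and maximum amount fidelity across session pairs."""
    scores = [amount_fidelity(d, p) for d, p in pairs]
    return sum(scores) / len(scores), min(scores), max(scores)


# Hypothetical (delivered in NCR, programmed from DRA) counts for three session pairs.
mean, low, high = summarize([(12, 12), (11, 12), (14, 12)])
print(f"{mean:.0f}% (range, {low:.0f}% to {high:.0f}%)")  # 92% (range, 86% to 100%)
```

Taking the smaller count over the larger one penalizes both under-delivery and over-delivery in NCR equally.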

In DRA, the percentages of dependent fidelity aggregated across all sessions averaged 100% for Amy, 99% for Cia (range, 86% to 100%), 100% for Dee, 100% for Ted, 99.9% for Beth (range, 96% to 100%), 100% for Ed, 100% for Sam, and 99% for Lou (range, 89% to 100%). The percentage of temporal fidelity aggregated across all sessions averaged 100% for Amy, Cia, Dee, Ted, Beth, Ed, and Sam, and 98% for Lou (range, 89% to 100%). The assessment determined that a high degree of fidelity was achieved for implementing the DRA schedule across all children.

In NCR, the percentage of amount fidelity aggregated across all sessions averaged 100% for Amy, 98% for Cia (range, 88% to 100%) and for Dee (range, 93% to 100%), 96% for Ted (range, 85% to 100%), 91% for Beth (range, 86% to 95%), 98% for Ed (range, 93% to 100%), 98% for Sam (range, 95% to 100%), and 96% for Lou (range, 86% to 100%). These results showed that the amount of reinforcement in NCR closely matched that experienced in DRA for all children; thus, the amount of reinforcement was effectively yoked, and observed preferences are unlikely to be attributable to differences in the amount of reinforcement across schedules.

Contingency Strength Analysis

By programming an equal amount and distribution of reinforcement across DRA and NCR, an attempt was made to isolate the presence and absence of a contingency as the sole difference between the schedules. It is unknown, however, whether a contingency was removed during NCR due to the possibility of responses adventitiously co-occurring with reinforcer deliveries (see Vollmer, Ringdahl, Roane, & Marcus, 1997, for an example of adventitious reinforcement during NCR). Therefore, response and reinforcer occurrences during DRA and NCR were quantified along a contingency strength continuum from 1 to −1 and described in terms of positive, neutral, and negative contingencies, as outlined by Hammond (1980).

The measure of contingency strength quantified two independent correlations between responses and reinforcers. One index described the response conditional probability, defined as the number of times that the target response (either “Kevin” or “excuse me”) occurred within t seconds of an event (social interaction) divided by the total number of target responses. This yielded a proportional score between 0 and 1.

The other index described the event conditional probability, defined as the number of times an event (social interaction) was not preceded within t seconds by a target response divided by the total number of events. This also yielded a proportional score between 0 and 1.

Subtracting the event conditional probability from the response conditional probability produced a contingency strength value between 1 and −1. Window sizes of 2 s, 3 s, 4 s, 5 s, and 10 s were used to define the time period between target responses and events. As the window size increased, so did the likelihood that target responses and events would be counted as contiguous.
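The index can be sketched for one window size as below. The text does not specify the direction of “within t seconds of”; this sketch assumes an event is contiguous with a response when it begins 0 to t s after that response. The session data are hypothetical, and the function reflects the operationalization described above rather than a definitive implementation of Hammond (1980).

```python
def contingency_strength(responses, events, window):
    """Response conditional probability minus event conditional probability (1 to -1).

    responses, events: onset times (s) of target responses and social-interaction events.
    Assumption: a response and an event are "within t seconds" when the event begins
    0 to `window` s after the response.
    """
    if not responses or not events:
        return None  # index undefined without at least one response and one event

    def contiguous(r, e):
        return 0 <= e - r <= window

    # Proportion of responses followed by an event within the window.
    p_response = sum(any(contiguous(r, e) for e in events) for r in responses) / len(responses)
    # Proportion of events NOT preceded by a response within the window.
    p_event = sum(not any(contiguous(r, e) for r in responses) for e in events) / len(events)
    return p_response - p_event


WINDOWS = (2, 3, 4, 5, 10)  # window sizes used in the analysis

# Hypothetical sessions: DRA reinforcers follow responses; NCR events are time-based.
dra = {"responses": [10, 30, 50], "events": [10.5, 31, 51]}
ncr = {"responses": [10, 30, 50], "events": [20, 40, 60, 80]}
for name, session in (("DRA", dra), ("NCR", ncr)):
    print(name, [contingency_strength(session["responses"], session["events"], w) for w in WINDOWS])
```

In the hypothetical NCR session, every event but the last happens to begin exactly 10 s after a response, so widening the window from 5 s to 10 s moves the index from -1 to 0.75. This illustrates how window size alone can change which responses and events are counted as contiguous.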

RESULTS

Efficacy and Preference

Comparison of CR and no reinforcement

Responding during the CR and no-reinforcement conditions is depicted for the first 4 children in Figure 1 and for the second 4 children in Figure 2. The session data depicted prior to the phase line were collected during the efficacy evaluation, and the session data after the phase line were collected during the preference assessment. Reinforcement was programmed for the response depicted in the first row (“Kevin”), whereas reinforcement was withheld for “excuse me,” depicted in the second row.

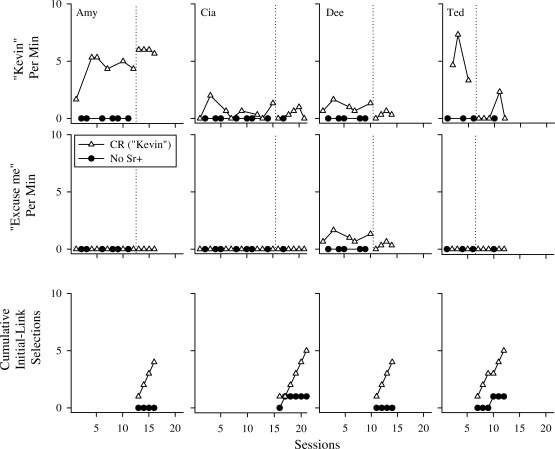

Figure 1.

Responses per minute for “Kevin” (first row) and “excuse me” (second row) during the contingent reinforcement schedule (CR; open triangles) and no-reinforcement schedule (no Sr+; filled circles) and cumulative initial-link selections during the preference assessment (third row) for Amy, Cia, Dee, and Ted across sessions. The data depicted prior to the phase line were collected during the efficacy evaluation, and the data after were collected during the preference assessment.

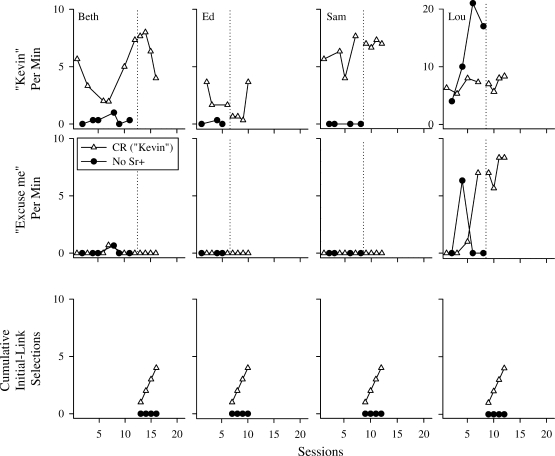

Figure 2.

Responses per minute for “Kevin” (first row) and “excuse me” (second row) during the CR schedule (open triangles) and no-Sr+ schedule (filled circles) and cumulative initial-link selections during the preference assessment (third row) for Beth, Ed, Sam, and Lou across sessions. The data depicted prior to the phase line were collected during the efficacy evaluation, and the data after were collected during the preference assessment.

A similar pattern of responding was observed for 7 of the 8 children. During CR sessions, Amy (M = 5.1 responses per minute), Cia (M = 0.5), Dee (M = 0.7), Ted (M = 2.8), Beth (M = 5.4), Ed (M = 1.8), and Sam (M = 6.5) emitted “Kevin” consistently, albeit at varying levels. By contrast, the same children emitted low or zero rates of “Kevin” in no-reinforcement sessions. These data show that social interaction did indeed serve as a reinforcer for children's vocal responding. Additional evidence of its reinforcing efficacy can be found in the second rows of Figures 1 and 2, which show that 6 of the 8 children rarely (Beth) or never (Amy, Cia, Ted, Ed, and Sam) emitted “excuse me.”

Exceptions to the general patterns were evident in Lou's and Dee's data. Lou was the only child who emitted a higher rate of vocal responding in the no-reinforcement condition (M = 13 responses per minute) than in CR (M = 7). These data suggest that either this response produced its own reinforcement or this pattern represented a burst of socially mediated responding that occurred under extinction-like conditions. Dee chained the responses by emitting “excuse me” immediately before “Kevin,” which produced equal rates of the two responses.

The third row of Figures 1 and 2 depicts cumulative initial-link selections during the preference assessment. All children selected the card associated with the CR condition on every trial or on five of six trials, thus demonstrating a preference for contingent social interaction relative to no interaction. These results also show that (a) the concurrent-chains arrangement produced discriminated responding toward a particular schedule (i.e., it is capable of detecting young children's preferences for schedules of interaction) and (b) social interaction was probably a reinforcing event for Lou.

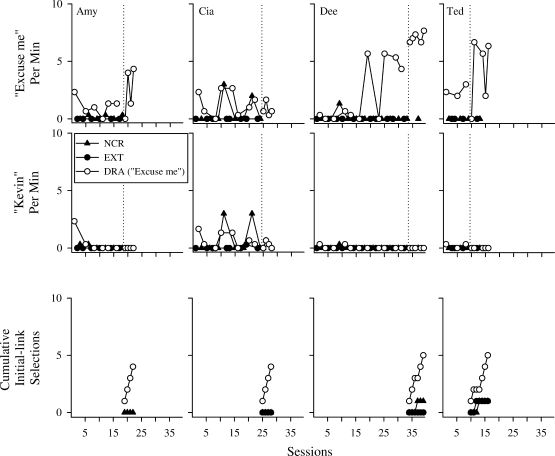

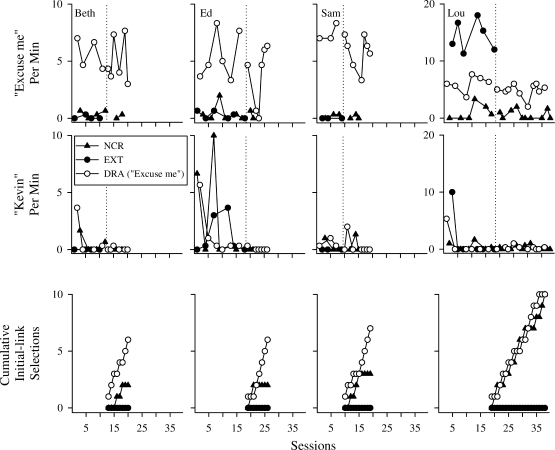

Comparison of DRA, NCR, and extinction

The results for the comparison of DRA, NCR, and extinction are depicted in Figures 3 and 4. Higher rates of “excuse me” were observed for all children in DRA relative to NCR and extinction (the level of responding in extinction was near zero for 7 of the 8 children). Six of the 8 children emitted low to near-zero levels of “Kevin,” the response that previously produced reinforcement in the CR condition, across DRA, NCR, and extinction. Cia, in a number of sessions, chained the responses by emitting “excuse me” immediately prior to “Kevin,” which resulted in similar rates for the two responses. These results further support the notion that social interaction served as a reinforcer for children's vocal responding.

Figure 3.

Responses per minute for “excuse me” (first row) and “Kevin” (second row) during differential reinforcement of alternative behavior (DRA; open circles), noncontingent reinforcement (NCR; filled triangles), and extinction (EXT; filled circles), and cumulative initial-link selections during the preference assessment (third row) for Amy, Cia, Dee, and Ted across sessions. The data depicted prior to the phase line were collected during the efficacy evaluation, and the data after were collected during the preference assessment.

Figure 4.

Responses per minute for “excuse me” (first row) and “Kevin” (second row) during DRA (open circles), NCR (filled triangles), and EXT (filled circles) across sessions, and cumulative initial-link selections during the preference assessment (third row) for Beth, Ed, Sam, and Lou across sessions. The data depicted prior to the phase line were collected during the efficacy evaluation, and the data after were collected during the preference assessment.

Although the conditions did not differ substantially in their efficacy for reducing the previously reinforced response, the preference data revealed more consistent differences among the schedules. Amy and Cia demonstrated exclusive preference for DRA by allocating all initial-link selections toward the colored card associated with the DRA schedule. Dee, Ted, Beth, Ed, and Sam also showed a preference for DRA by allocating the majority of their selections toward this schedule. Finally, Lou allocated selections equally across the two colored cards associated with DRA and NCR. In sum, all children preferred either NCR or DRA to extinction, and 7 of 8 children preferred DRA to NCR.

DRA, NCR, and Extinction as Experimental Control Conditions

Providing exposure to the schedules' associated contingencies was described as an efficacy evaluation because these sessions allowed examination of the reductive effects of DRA, NCR, and extinction on the previously reinforced “Kevin” response. The degree of efficacy achieved by each schedule provides some information regarding the utility of these three schedules as control conditions for the influence of reinforcement (see Thompson & Iwata, 2005, for an extended discussion). Relatively high levels of the target response (“Kevin”) were observed in the initial DRA session for 5 of the 8 children, and responding reoccurred in this condition for only 1 child. Responding was relatively high for only 3 of the 8 children during the initial NCR session, and responding reoccurred in this condition for 2 children. Responding was relatively high for only 1 of the 8 children during the initial extinction session, and responding reoccurred in this condition for only 1 child. These data showed that all conditions served as adequate controls for the effects of reinforcement (i.e., all conditions resulted in a reduction of the initially reinforced response “Kevin”), and no single condition was associated with persistent negative side effects (e.g., extinction bursts, spontaneous recovery).

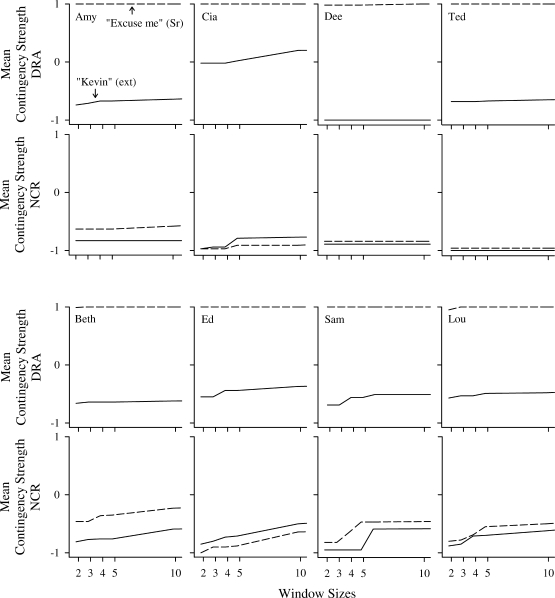

Contingency Strength Analysis

The average contingency strength at progressively increasing window sizes is described graphically across all DRA (first and third rows of Figure 5) and NCR (second and fourth rows of Figure 5) sessions. Contingency strength was at or near 1 across all window sizes for the “excuse me” response during DRA for all children. Thus, reinforcement was obtained through a strong positive contingency for “excuse me” by all children in DRA. For “Kevin,” the response that did not produce reinforcement, a moderately negative contingency was experienced for 7 of the 8 children during DRA, with the exception of Cia (Figure 5, middle left) who experienced a neutral contingency. Recall that Cia often chained the two responses even though one response was now on extinction. All children obtained reinforcement under a moderate to strong negative contingency for both responses at short window sizes (i.e., 2 s to 5 s) in NCR. These contingencies progressively neutralized for the majority of children as the window size increased to 10 s. In sum, a strong positive mean contingency was present in DRA for “excuse me.” Said another way, the probability of reinforcement was much greater following the target response than in its absence during the DRA condition. By contrast, the probability of reinforcement was greater in NCR in the absence of responding.

Figure 5.

Mean contingency strengths for “excuse me” (dotted line) and “Kevin” (solid line) in the DRA (first and third rows) and NCR schedules (second and fourth rows) across progressively increasing window sizes for all children. All sessions from both the efficacy evaluation and preference assessment for each schedule were included in these analyses.

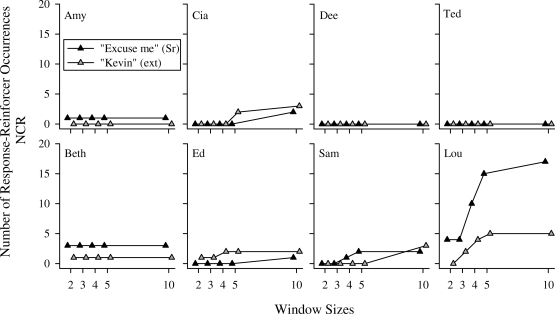

The mean contingency strengths in DRA and those observed in NCR did not differ substantially across children. As a result, variables that may have led Lou to prefer the contexts equally were not identified through an assessment of contingency strength. While conducting the assessment, however, we discovered a notable difference in the number of reinforcers that followed “excuse me” and “Kevin” in NCR for Lou relative to the other children. Because the initial selection was predictive of the preference assessment outcomes for 7 of 8 children, we used the three efficacy sessions in each condition prior to the preference assessment to investigate this potential disparity across children's experiences.

The numbers of response–reinforcer occurrences experienced by the children in NCR across all window sizes are depicted in Figure 6. Very few response–reinforcer occurrences during NCR were observed for the 7 children who preferred the DRA schedule. By contrast, Lou experienced a relatively large number of response–reinforcer occurrences during NCR at all window sizes for both responses. These data provide tentative support that the amount of reinforcement following Lou's responding in NCR obscured the programmed difference between the DRA and NCR schedules, perhaps resulting in his indifference.

Figure 6.

The number of response–reinforcer occurrences experienced in the last three sessions of NCR during the efficacy evaluation for “excuse me” (filled triangles) and “Kevin” (gray triangles) across progressively increasing window sizes for all children.

DISCUSSION

All children showed sensitivity to social interaction as reinforcement, and all preferred to receive it contingently rather than not at all. All three conditions (differential reinforcement, noncontingent reinforcement, and extinction) reduced a target response; these conditions are often arranged either to reduce an existing response (clinical use) or to demonstrate that some event functions as reinforcement (experimental use). The primary purpose of our analysis was to determine which of the three schedules children preferred; the results showed that 7 of 8 children preferred differential reinforcement to noncontingent reinforcement and extinction. These results replicate and extend Hanley et al.'s (1997) findings.

The finding that children do indeed prefer contingencies is closely related to basic research on the contrafreeloading–freeloading phenomenon, which describes how nonhuman animals prefer to “work” for reinforcement rather than obtain equal amounts for free (for a review, see Osborne, 1977). Contrafreeloading (preference for responding to obtain reinforcement) was considered a reliable phenomenon; however, Osborne noted that preference for response-dependent delivery of reinforcement typically eroded when appropriate experimental controls were arranged. Contrafreeloading by young children was nonetheless reported by Singh (1970) in a grouped data analysis. When 32 boys and 28 girls, 5 to 7 years old, were given the opportunity to obtain marbles in a free-operant arrangement either by pressing a lever or for free (by sitting in a chair), the children acquired, on average, 66% of the marbles by lever pressing. Singh and Query (1971) systematically replicated these results with older children (8 to 12 years old) from Native American backgrounds, who obtained 64% of their marbles by lever pressing. As noted earlier, Hanley et al. (1997) also observed contrafreeloading in single-subject analyses with repeated measures for 2 children with intellectual disabilities, using a qualitatively different reinforcer (adult social interaction). The current study extends the generality of contrafreeloading in humans: 7 of 8 typically developing children preferred to obtain social interaction by emitting vocal mands while playing in social contexts rather than to receive the same amount and distribution of social interaction on a time-based schedule. The results tentatively suggest that children prefer to work for reinforcement when a presumably low-effort response is required to produce it.

Procedural fidelity measures showed that the schedules were accurately implemented and that reinforcement in NCR was successfully yoked to the reinforcement obtained in DRA, which ruled out differences in reinforcement amount as a potential confound on children's preferences. Contingency strength analysis confirmed that the independent variable, a response-dependent contingency, was in place during DRA and absent during NCR for all participants.
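Yoking of the kind described above can be sketched as replaying the onsets and durations of social interaction obtained in a DRA session as a time-based NCR schedule, then checking how closely the reinforcement actually delivered in NCR matched the DRA amount. The `Delivery` record, the replay rule, and the percentage-of-DRA fidelity index below are illustrative assumptions, not the study's reported procedure.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Delivery:
    onset: float     # seconds from session start
    duration: float  # seconds of social interaction delivered


def yoke_ncr_schedule(dra_deliveries: List[Delivery]) -> List[Delivery]:
    """Hypothetical yoking rule: replay DRA onsets and durations as a fixed-time NCR schedule."""
    return sorted(dra_deliveries, key=lambda d: d.onset)


def total_reinforcement(deliveries: List[Delivery]) -> float:
    """Total seconds of reinforcement delivered."""
    return sum(d.duration for d in deliveries)


def yoking_fidelity(dra: List[Delivery], ncr_obtained: List[Delivery]) -> float:
    """Obtained NCR reinforcement as a percentage of DRA reinforcement (100 = identical amount)."""
    dra_total = total_reinforcement(dra)
    if dra_total == 0:
        raise ValueError("DRA session contained no reinforcement to yoke")
    return 100.0 * total_reinforcement(ncr_obtained) / dra_total


dra_session = [Delivery(30.0, 5.0), Delivery(10.0, 5.0)]
ncr_schedule = yoke_ncr_schedule(dra_session)
# Suppose the experimenter ended the second NCR delivery half a second early.
ncr_obtained = [Delivery(10.0, 5.0), Delivery(30.0, 4.5)]
print([d.onset for d in ncr_schedule], yoking_fidelity(dra_session, ncr_obtained))
```

Here the yoked schedule replays the DRA onsets in time order (10 s, then 30 s), and the shortened second delivery gives 9.5 of 10 s of reinforcement, a fidelity of 95%. Replaying onsets preserves both the amount and the temporal distribution of reinforcement while removing its dependence on responding.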

It is important to understand why a preference for a strong positive contingency, typically found in differential reinforcement schedules, occurs at all. Factors known to affect preference for different schedules, such as the amount and quality of reinforcement (Mazur, 1994), were considered in the design of our analysis. We measured the amount of reinforcement, and the results confirmed that it was nearly identical across the schedules. The quality or form of social interaction provided by the experimenter was not measured in our study, but preassessment teaching and feedback were arranged to increase the likelihood that quality did not vary across schedules. Future research should measure the type or quality of reinforcement delivered across the schedules more directly, for example by having independent observers who are uninformed of the conditions rate the quality of social reinforcement. Moreover, because response effort favors NCR (some effort is required to produce reinforcement in DRA but not in NCR), it seems counterintuitive that 7 of 8 children preferred DRA to NCR in the current study.

Hanley et al. (1997) suggested that response-dependent reinforcement (e.g., DRA) may be preferred because the contingency allows an individual to modulate when and how much reinforcement is obtained to match momentary fluctuations in the value of the reinforcer. The fact that children in our study also did not maximize reinforcement (i.e., their responding was variable, which resulted in periods without social interaction) suggests that the value of the reinforcer fluctuated for our children as well. Our contingency strength analyses also showed that children did indeed have a means to increase the probability of reinforcement in DRA, and that this mechanism was absent in NCR. These data support the notion that response-dependent reinforcement allows children to obtain reinforcement at the times it is most valued. Furthermore, analysis of the number of response–reinforcer occurrences during NCR revealed a larger number for the 1 child whose selections indicated indifference; thus, preference may be differentially sensitive to the amount of reinforcement that follows responding. This potential influence on preference suggests that a context in which free reinforcers are delivered with some degree of response–reinforcer contiguity may still be preferred. We hope to evaluate this latter notion in future research, because the findings could inform how teachers arrange the delivery of social interaction to their students (i.e., perhaps a mixture of contingent and noncontingent reinforcement is most preferred and most practical in application).

Contingency-specifying instructions were provided to facilitate discriminated performance across the schedules. Because these instructions described different contingencies, they also differed from one another and thus could have biased preference toward either schedule. Nevertheless, several factors militate against considering the instructions the determinants of the children's preferences. First, 7 of the 8 children interacted extensively with both schedules in the efficacy evaluation, and their responding closely conformed to the patterns expected given the types of schedules arranged. Furthermore, 4 of the 8 children experienced NCR in the preference assessment when rules were no longer provided. We believe that these experiences led to control of preferences by the schedules in the terminal links rather than by the instructions. Second, if the terminal-link experiences were irrelevant because the instructions were primarily influencing initial-link responding, the child whose behavior was relatively insensitive to the schedules should also have preferred the DRA condition. This, however, was not observed. Third, the preference assessment involving DRA and NCR in Hanley et al. (1997) did not include presession instructions, yet both participants demonstrated a preference for the DRA schedule. Taken together, this information strongly suggests that interacting with the schedules was the primary influence on children's preferences for the schedules.

At this point, our results suggest that DRA schedules should be favored in clinical application, and in experimental analyses in which demonstrating control by reinforcement is a goal, simply because children prefer DRA schedules to the other schedules evaluated. However, the conditions under which preference for DRA has been observed (a continuous schedule of reinforcement) do not necessarily match the conditions under which these schedules are implemented when recommended as behavioral treatments. Thus, future research should evaluate whether preference for DRA persists when the schedule of reinforcement for the alternative response is thinned (Hanley, Iwata, & Thompson, 2001). Future research should also evaluate the boundary conditions of the DRA preference. For instance, providing reinforcement in DRA contingent on a response that requires more effort (Horner & Day, 1991) may also affect children's preferences for these schedules.

Continued research on children's preferences for contexts promotes sensitivity to personal variables and should probably be considered whenever comparative analyses are conducted with single participants, especially when these analyses suggest that both options are effective. Research on preference for reinforcement schedules or other contexts should also progress from descriptive analyses to functional analyses, so that the specific variables that influence children's preferences can be identified with more confidence. This will ultimately lead to an improved technology for designing reinforcement-based treatments and the contexts that children routinely experience.

REFERENCES

- Durand V.M. Functional communication training using assistive devices: Recruiting natural communities of reinforcement. Journal of Applied Behavior Analysis. 1999;32:247–267. doi: 10.1901/jaba.1999.32-247.

- Fisher W, Piazza C.C, Bowman L.G, Hagopian L.P, Owens J.C, Slevin I. A comparison of two approaches for identifying reinforcers for persons with severe and profound disabilities. Journal of Applied Behavior Analysis. 1992;25:491–498. doi: 10.1901/jaba.1992.25-491.

- Hagopian L.P, Fisher W.W, Legacy S.M. Schedule effects of noncontingent reinforcement on attention-maintained destructive behavior in identical quadruplets. Journal of Applied Behavior Analysis. 1994;27:317–325. doi: 10.1901/jaba.1994.27-317.

- Hall R.V, Lund D, Jackson D. Effects of teacher attention on study behavior. Journal of Applied Behavior Analysis. 1968;1:1–12. doi: 10.1901/jaba.1968.1-1.

- Hammond L.J. The effect of contingency upon the appetitive conditioning of free-operant behavior. Journal of the Experimental Analysis of Behavior. 1980;34:297–304. doi: 10.1901/jeab.1980.34-297.

- Hanley G.P, Iwata B.A, Thompson R.T. Reinforcement schedule thinning following treatment with functional communication training. Journal of Applied Behavior Analysis. 2001;34:17–37. doi: 10.1901/jaba.2001.34-17.

- Hanley G.P, Piazza C.C, Fisher W.W, Contrucci S.A, Maglieri K.M. Evaluation of client preference for function-based treatments. Journal of Applied Behavior Analysis. 1997;30:459–473. doi: 10.1901/jaba.1997.30-459.

- Hart B.M, Reynolds N.J, Baer D.M, Brawley E.R, Harris F.R. Effect of contingent and non-contingent social reinforcement on the cooperative play of a preschool child. Journal of Applied Behavior Analysis. 1968;1:73–76. doi: 10.1901/jaba.1968.1-73.

- Horner R.H, Day M.D. The effects of response efficiency on functionally equivalent competing behaviors. Journal of Applied Behavior Analysis. 1991;24:719–732. doi: 10.1901/jaba.1991.24-719.

- Iwata B.A, Dorsey M.F, Slifer K.J, Bauman K.E, Richman G.S. Toward a functional analysis of self-injury. Journal of Applied Behavior Analysis. 1994;27:197–209. doi: 10.1901/jaba.1994.27-197. (Reprinted from Analysis and Intervention in Developmental Disabilities, 2, 3–20, 1982)

- Kahng S, Iwata B.A, DeLeon I.G, Worsdell A.S. Evaluation of the “control over reinforcement” component in functional communication training. Journal of Applied Behavior Analysis. 1997;30:267–277. doi: 10.1901/jaba.1997.30-267.

- Mazur J.E. Learning and behavior. Englewood Cliffs, NJ: Prentice Hall; 1994.

- Neef N.A, Iwata B.A, Horner R.H, Lerman D, Martens B.A, Sainato D.S, editors. Behavior analysis in education (2nd ed.). Lawrence, KS: Society for the Experimental Analysis of Behavior; 2004.

- Osborne S.R. The free food (contrafreeloading) phenomenon: A review and analysis. Animal Learning & Behavior. 1977;5:221–235.

- Singh D. Preference for bar-pressing to obtain reward over free-loading in rats and children. Journal of Comparative and Physiological Psychology. 1970;73:320–327.

- Singh D, Query W.T. Preference for work over “free-loading” in children. Psychonomic Science. 1971;24:77–79.

- Thompson R.H, Iwata B.A. A review of reinforcement control procedures. Journal of Applied Behavior Analysis. 2005;38:257–278. doi: 10.1901/jaba.2005.176-03.

- Vollmer T.R, Iwata B.A, Zarcone J.R, Smith R.G, Mazaleski J.L. The role of attention in the treatment of attention-maintained self-injurious behavior: Noncontingent reinforcement and differential reinforcement of other behavior. Journal of Applied Behavior Analysis. 1993;26:9–21. doi: 10.1901/jaba.1993.26-9.

- Vollmer T.R, Ringdahl J.E, Roane H.S, Marcus B.A. Negative side effects of noncontingent reinforcement. Journal of Applied Behavior Analysis. 1997;30:161–164. doi: 10.1901/jaba.1997.30-161.