Abstract

Decision makers often face choices whose consequences unfold over time. To explore the neural basis of such intertemporal choice behavior, we devised a novel two-alternative choice task with probabilistic reward delivery and contrasted two conditions that differed only in whether the outcome was revealed immediately or after some delay. In the immediate condition, we simply varied the reward probability of each option and the outcome was revealed immediately. In the delay condition, the outcome was revealed after a delay during which the reward probability was governed by a constant hazard rate. Functional imaging revealed a set of brain regions, such as the posterior cingulate cortex, parahippocampal gyri, and frontal pole, that exhibited activity uniquely associated with the temporal aspects of the task. This engagement of the so-called “default network” suggests that during intertemporal choice, decision makers simulate the impending delay via a process of prospection.

Keywords: decision, fMRI, intertemporal choice, prospection, discounting, temporal resolution of uncertainty

Introduction

Adaptive decisions require the integration of multiple factors in a variety of dimensions. Many of these dimensions (e.g., durability, color) are intrinsic to the goods being offered. However, there are more ethereal dimensions that are just as important, such as those having to do with the timing of decision-relevant events. For example, decision makers exhibit temporal discounting; they behave as though immediately consumable goods are more valuable than those only available after some delay (Frederick et al., 2002; Berns et al., 2007; Rosati et al., 2007; Kalenscher and Pennartz, 2008). The current study seeks to uncover the cognitive and neural mechanisms underlying the integration of temporal factors during decision making.

Research in psychology and economics has investigated how choice behavior is affected by the timing of decision-relevant events. The tasks used to explore such factors are referred to as intertemporal choice and frequently manipulate the time at which rewards are delivered. For example, a subject might choose between $5.00 now and $10.00 in 2 weeks. Previous behavioral work using such tasks has focused on whether decision makers are temporally consistent (i.e., whether preferences change as time elapses) (Strotz, 1955; Ainslie, 1975, 1992; Kirby and Herrnstein, 1995; Kirby, 1997), whereas previous neuroeconomic work has emphasized the neural representation of value of delayed rewards (Cardinal et al., 2001; McClure et al., 2004; Tanaka et al., 2004; Cardinal, 2006; Hariri et al., 2006; Kable and Glimcher, 2007; McClure et al., 2007; Roesch et al., 2007; Wittmann et al., 2007; Kim et al., 2008).

Despite this work, it remains unclear how delay influences decisions. One important factor is the uncertainty associated with waiting. Investigators (Mischel, 1966; Stevenson, 1986; Mazur, 1989; Prelec and Loewenstein, 1991; Rachlin et al., 1991; Mazur, 1995, 1997; Sozou, 1998; Kacelnik, 2003) have suggested that delay exerts its influence on choices via the perceived uncertainty associated with waiting; decision makers may believe that the probability of acquiring promised rewards decreases as waiting time increases. This implies that previous studies could reflect the influence of probability rather than delay per se. However, there is empirical evidence that probability and delay do not exert identical influences (Holt et al., 2003; Green and Myerson, 2004; Chapman and Weber, 2006). Indeed, it has been noted (Green et al., 1999) that impulsivity is associated with weak discounting over probability (i.e., increased preference for low-probability rewards), but strong discounting over delay (i.e., decreased preference for delayed rewards). Other work has illustrated that purely temporal considerations can have behavioral consequences. For example, work on the temporal resolution of uncertainty (Chew and Ho, 1994; Arai, 1997) has found that people exhibit strong preferences between identical gambles that only differ in when the outcome of the choice is revealed. It has been hypothesized that such preferences could be related to the utility associated with temporally extended uncertainty (Wu, 1999). However, there have been no investigations into what neural processes underlie these purely temporal preferences. The current research seeks to explore how time, specifically the time of uncertainty resolution, is incorporated into decisions.

Materials and Methods

Subjects.

Twenty subjects (13 women; all right-handed; mean age, 23.4 years; range, 19–30 years) with normal or corrected-to-normal vision participated in this study. Informed consent was obtained from all subjects, and the study protocol was approved by the Human Investigation Committee of the School of Medicine and the Human Subjects Committee of the Faculty of Arts and Sciences at Yale University.

Procedure.

Subjects were told that they would be asked to make a set of decisions, each offering monetary rewards, and that all the money earned during the experiment would be paid to them immediately after completion of the experiment. The experiment consisted of two conditions that only differed in when uncertainty about rewards was removed: the immediate condition and the delay condition. Trials in both conditions consisted of a decision between two options, one offering 10 cents and the other offering 20 cents (see Fig. 1). Each option was associated with some number (zero to nine) of rectangles. In the delay condition, the number of rectangles both (1) determined the likelihood of the chosen reward and (2) determined how long the subject would have to wait for the reward, if obtained. In the immediate condition, the number of rectangles only determined the likelihood of the chosen reward being obtained because the outcome of the subject's choice was always revealed immediately. There were seven different pairs of options used throughout the experiment: all six nontrivial combinations of zero, three, six, and nine rectangles (zero vs three, zero vs six, zero vs nine, three vs six, three vs nine, and six vs nine, in which the larger number of rectangles was always associated with the larger reward) plus the trivial pair zero versus zero. To familiarize subjects with the task, the timing, and the probabilities, a block of practice trials (∼30 trials from each of the two conditions) was administered before they entered the scanner.
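The set of option pairs described above can be enumerated directly. The following is a minimal sketch; the variable names are illustrative and are not taken from the original experiment code.

```python
from itertools import combinations

# Rectangle counts used in the task.
RECTANGLE_COUNTS = (0, 3, 6, 9)

# Six nontrivial pairs. In each tuple the first entry (fewer rectangles)
# belongs to the 10 cent option and the second (more rectangles) to the
# 20 cent option.
nontrivial_pairs = list(combinations(RECTANGLE_COUNTS, 2))

# The trivial pair: both options have zero rectangles.
option_pairs = [(0, 0)] + nontrivial_pairs

for small_reward_rects, large_reward_rects in option_pairs:
    print(f"10 cents: {small_reward_rects} rectangles | "
          f"20 cents: {large_reward_rects} rectangles")
```

This yields the seven pairs listed in the text, with the larger number of rectangles always attached to the larger reward.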

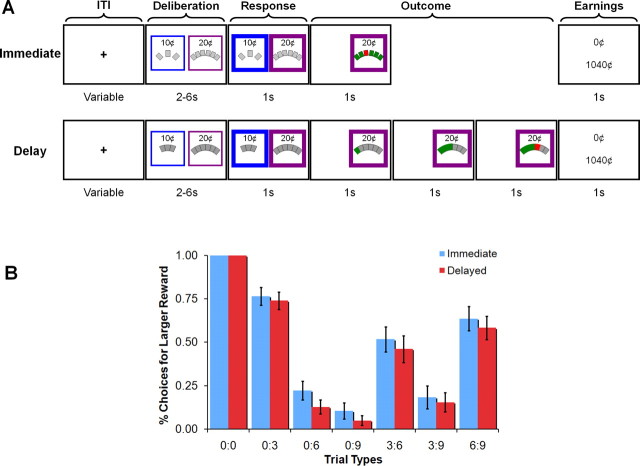

Figure 1.

Design and behavioral results. A, Stimuli and task design. Subjects chose between 10 and 20 cent rewards on each trial. The number of rectangles determined the probability and, in the delay condition, the length of time before the outcome was revealed. B, Choice behavior reflected both the probability of reward delivery and delay (error bars represent the SEM). For example, increasing numbers of rectangles associated with the larger reward option decreased choices for that option. There was also a small but consistent bias to choose the larger reward option more in the immediate condition than in the delay condition.

Each participant completed five functional runs. Each run consisted of two blocks of 29 trials (each of the seven option pairs presented four times, plus a single filler trial, three vs six rectangles, that was always the first trial of the block) for a total of 58 choices per run. One of these blocks consisted of trials from the immediate condition and one consisted of trials from the delay condition. The order of the blocks alternated across runs, and the condition of the first block in the first run was counterbalanced across subjects. An instruction screen preceded each block indicating which of the two tasks was about to begin, and subtle differences in each condition's displays ensured that subjects would not be confused about which task they were completing.

Each trial began with the presentation of the two options available on that trial. On all trials, one of these options offered 10 cents and the other offered 20 cents. These options were presented side by side on the computer screen with the left/right assignment chosen randomly on each trial. To ease subjects' task, the 10 cent option was always presented in a blue square and the 20 cent option was always presented in a yellow square. These options remained on the screen for 2–6 s (50% 2 s, 30% 4 s, and 20% 6 s for a mean of 3.4 s) during which time subjects could not respond. Subjects were instructed to simply consider which of the two options they would prefer. After the appropriate interval had elapsed, the two squares surrounding the options enlarged, which indicated to subjects that they could register their choice. They did so by pressing one of two buttons on the provided button box. At the conclusion of the 1 s response window, the unchosen option was removed from the screen and the reward associated with the chosen option was delivered according to the rule associated with the condition. If no response was made, the trial immediately ended, and subjects were informed of what they had just earned (nothing) and their cumulative total. Otherwise, the trial unfolded according to the rules associated with the current condition.

In the immediate condition, the outcome was immediately revealed. Each of the rectangles changed color (green or red) all at once. Each rectangle had a 0.9 probability of turning green and 0.1 probability of turning red. If all the rectangles turned green, then the subject received the chosen reward. If any of the rectangles turned red, then the subject received nothing. Thus, rewards were delivered with a probability of $0.9^D$, where D is the number of rectangles associated with the chosen option. Rewards associated with zero rectangles, if chosen, were guaranteed (delivered with probability of 1).
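Because each of the D rectangles independently turns green with probability 0.9, the reward probability is 0.9 raised to the power D. The sketch below tabulates that probability and the resulting expected value (reward magnitude multiplied by probability of receipt) for the rectangle counts used in the task. Function and constant names are illustrative assumptions.

```python
P_GREEN = 0.9                      # per-rectangle probability of turning green
RECTANGLE_COUNTS = (0, 3, 6, 9)    # rectangle counts used in the task
REWARDS_CENTS = (10, 20)           # the two reward magnitudes


def reward_probability(n_rectangles: int) -> float:
    """Probability that all rectangles turn green, i.e. 0.9 ** D."""
    return P_GREEN ** n_rectangles


def expected_value(reward_cents: float, n_rectangles: int) -> float:
    """Objective reward magnitude times objective probability of receipt."""
    return reward_cents * reward_probability(n_rectangles)


for d in RECTANGLE_COUNTS:
    p = reward_probability(d)
    evs = ", ".join(f"{r} cents -> {expected_value(r, d):.2f}" for r in REWARDS_CENTS)
    print(f"D = {d}: p(reward) = {p:.3f}; expected value: {evs}")
```

Under these definitions, a 20 cent option with nine rectangles has a lower expected value than a guaranteed 10 cent option, which is one reason choices of the larger reward should fall as its rectangle count grows.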

In the delay condition, the outcome was only revealed after some delay. The rectangles of the chosen option changed color (green or red) sequentially (from left to right) at a rate of one rectangle per second. Each rectangle again had a 0.9 probability of turning green and 0.1 probability of turning red. If all the rectangles turned green, then the subject received the chosen reward. If any of the rectangles turned red, then the trial ended immediately and the subject received nothing. Thus, just as in the immediate condition, rewards were delivered with a probability of $0.9^D$, but additionally, subjects had to wait D seconds to discover whether they would receive the chosen reward. Note also that this results in an interval with a constant hazard rate; all rectangles have the same probability of turning red regardless of when they change color (Sozou, 1998; Dasgupta and Maskin, 2005; Halevy, 2005). Rewards associated with zero rectangles, if chosen, were guaranteed and entailed no delay.
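The sequential reveal in the delay condition can be simulated to illustrate the constant hazard rate: at every second, the chance that the trial ends without reward is 0.1, regardless of how many rectangles have already turned green. This is a minimal sketch with hypothetical function names and an arbitrary random seed, not the original task code.

```python
import random

P_GREEN = 0.9  # per-rectangle probability of turning green


def simulate_delay_trial(n_rectangles: int, rng: random.Random):
    """Reveal rectangles one per second; return (rewarded, seconds_waited)."""
    for second in range(1, n_rectangles + 1):
        if rng.random() >= P_GREEN:      # this rectangle turned red
            return False, second         # trial ends immediately, no reward
    return True, n_rectangles            # every rectangle turned green


def estimated_reward_rate(n_rectangles: int, n_trials: int, seed: int = 0) -> float:
    """Monte Carlo estimate of the probability of reward for D rectangles."""
    rng = random.Random(seed)
    wins = sum(simulate_delay_trial(n_rectangles, rng)[0] for _ in range(n_trials))
    return wins / n_trials


for d in (0, 3, 6, 9):
    print(f"D = {d}: simulated {estimated_reward_rate(d, 20000):.3f}, "
          f"analytic {P_GREEN ** d:.3f}")
```

The simulated reward rates should approach 0.9 raised to the power D, matching the immediate condition, so the two conditions differ in timing but not in overall probability.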

In both conditions, the trial ended by presenting the amount earned for the current trial on the screen for 500 ms followed by the cumulative total for the experiment for another 500 ms. The screen was then cleared, and a small fixation cross was placed in its center. In both conditions, the next trial commenced only after the current trial would have ended if the subject had chosen the 20 cent option and waited the entire D seconds to receive it, regardless of the subject's choice, the amount of time actually waited, or condition. Thus, the trial durations were the same regardless of these factors.

Subjects were paid immediately after exiting the scanner. Mean payment from the experiment itself was $30.95 (range, $29.80–$33.40; SD, $1.14; maximum possible was $58.00) and subjects were given an additional $10.00–$15.00 in compensation.
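The stated maximum payment follows from the trial counts given in the Procedure, assuming a subject received the 20 cent reward on every trial. The short check below works in integer cents; constant names are illustrative.

```python
# Design constants taken from the Procedure section.
OPTION_PAIRS = 7
REPEATS_PER_PAIR = 4
FILLER_TRIALS_PER_BLOCK = 1
BLOCKS_PER_RUN = 2
RUNS = 5
LARGE_REWARD_CENTS = 20

trials_per_block = OPTION_PAIRS * REPEATS_PER_PAIR + FILLER_TRIALS_PER_BLOCK
trials_per_run = trials_per_block * BLOCKS_PER_RUN
total_trials = trials_per_run * RUNS
max_payment_cents = total_trials * LARGE_REWARD_CENTS

print(f"trials per block: {trials_per_block}")
print(f"trials per run:   {trials_per_run}")
print(f"total trials:     {total_trials}")
print(f"maximum payment:  ${max_payment_cents / 100:.2f}")
```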

Functional magnetic resonance image acquisition.

All scans took place in a Siemens Trio 3T scanner with a standard birdcage head coil. Functional images were acquired with a T2*-weighted gradient-echo sequence (repetition time, 2000 ms; echo time, 25 ms; flip angle, 90°; 4 × 4 × 4 mm resolution; no gap); each volume contained 34 axial slices parallel to the anterior commissure/posterior commissure line, covering the entire brain. The experiment was conducted in five functional runs, each acquiring 390 volumes. Visual stimuli were presented using a liquid crystal display projector on a rear-projection screen, which was viewed with an angled mirror attached to the head coil. A magnetic resonance imaging-compatible button box was used to collect subjects' responses.

Functional magnetic resonance image analyses.

Preprocessing and statistical analyses were conducted using the BrainVoyager QX software (version 1.7; Brain Innovation). After the first four volumes of each functional scan were discarded to allow for T1 equilibration effects, each remaining volume was slice-time corrected, aligned to the first volume in each run to correct for head motion, and high-pass filtered (six cycles per scan or 0.0083 Hz). The volumes were then normalized to a standard stereotaxic space (Talairach and Tournoux, 1988), interpolated to 3 mm isotropic voxels, and spatially smoothed with a 6 mm full-width half-maximum Gaussian kernel.

Analyses were conducted by modeling the average signal time course of each voxel using multiple regression. When comparing the task-related activity associated with each condition (delay vs immediate), we dummy-coded the trial onsets of each trial type. We also included separate regressors of no interest representing the onset of the response period and the onset of the outcome presentation. These time courses were then convolved with a canonical, two-gamma hemodynamic response function (HRF) and fit to the time course of each voxel. Individual subjects' contrast maps were taken to a second, group-level analysis in which t values were calculated for each voxel treating intersubject variability as a random effect. Each voxel was assigned the t value associated with the contrast (delay > immediate). The resulting map was then thresholded at p < 0.001 (uncorrected) with a cluster threshold of five contiguous 3 × 3 × 3 mm voxels.
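To illustrate how a dummy-coded onset regressor is built, the sketch below places a 1 at each trial-onset volume and convolves the result with a two-gamma hemodynamic response function. The HRF parameters (gamma shapes of 6 and 16, undershoot ratio of 1/6) are a commonly used convention and are an assumption here; they are not necessarily the BrainVoyager defaults used in the study. The onset times are hypothetical.

```python
import math

TR = 2.0  # seconds; repetition time reported in the acquisition section


def two_gamma_hrf(t, peak_shape=6.0, undershoot_shape=16.0, undershoot_ratio=1 / 6):
    """Two-gamma HRF: a positive response minus a scaled, later undershoot."""
    if t <= 0:
        return 0.0
    peak = t ** (peak_shape - 1) * math.exp(-t) / math.gamma(peak_shape)
    undershoot = (t ** (undershoot_shape - 1) * math.exp(-t)
                  / math.gamma(undershoot_shape))
    return peak - undershoot_ratio * undershoot


def onset_regressor(onsets_s, n_volumes, tr=TR):
    """Dummy-code trial onsets: 1 at each onset volume, 0 elsewhere."""
    stick = [0.0] * n_volumes
    for onset in onsets_s:
        stick[int(round(onset / tr))] = 1.0
    return stick


def convolve_with_hrf(stick, tr=TR, hrf_length_s=32.0):
    """Discrete convolution of a stick function with the HRF sampled every TR."""
    kernel = [two_gamma_hrf(i * tr) for i in range(int(hrf_length_s / tr))]
    out = [0.0] * len(stick)
    for i, amplitude in enumerate(stick):
        if amplitude == 0.0:
            continue
        for j, k in enumerate(kernel):
            if i + j < len(out):
                out[i + j] += amplitude * k
    return out


# Hypothetical onsets (in seconds) for three delay-condition trials.
delay_onsets_s = [10.0, 40.0, 70.0]
delay_regressor = convolve_with_hrf(onset_regressor(delay_onsets_s, n_volumes=50))
print([round(v, 3) for v in delay_regressor[:16]])
```

A separate regressor of this form for each trial type, plus the regressors of no interest, would then be entered together into the multiple regression for each voxel.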

For our parametric analyses, we first set the unconvolved, trial-onset regressors (marking the beginning of the deliberation period) to be equal to the value of the parameter of interest (e.g., expected value) on that trial. These regressors were then normalized and convolved with a canonical, two-gamma HRF. The β values that result from the use of such regressors represent the correlation between the parameter of interest and the activity in each voxel. Thus, a parametric analysis using expected value as the parameter of interest could localize regions whose activity is potentially representing expected value. Individual subject maps were again taken to a second, group-level analysis in which t values were calculated for each voxel treating intersubject variability as a random effect. Each voxel was assigned the one-sample t value testing the distribution of β values against the null hypothesis of μ = 0 (i.e., the hypothesis that no correlation exists). The resulting map was again thresholded at p < 0.001 (uncorrected) with a cluster threshold of five contiguous 3 × 3 × 3 mm voxels.
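The first two steps of the parametric analysis (setting each onset to the trial's parameter value, then normalizing) can be sketched as follows. The text does not specify the normalization, so z-scoring across trials is an assumption here, and the trial values and onset volumes are hypothetical. Convolution with the HRF would follow as a separate step.

```python
from statistics import mean, pstdev


def zscore(values):
    """Center on the mean and scale by the population SD (assumed normalization)."""
    mu = mean(values)
    sd = pstdev(values)
    if sd == 0:
        raise ValueError("parameter must vary across trials")
    return [(v - mu) / sd for v in values]


def parametric_stick(onset_volumes, trial_values, n_volumes):
    """Unconvolved regressor: normalized parameter value at each trial onset."""
    stick = [0.0] * n_volumes
    for volume, value in zip(onset_volumes, zscore(trial_values)):
        stick[volume] = value
    return stick


# Hypothetical trials: onset volume and expected value (cents) of the chosen option.
onset_volumes = [5, 20, 35, 50]
expected_values = [10.0, 20 * 0.9 ** 3, 20 * 0.9 ** 6, 10 * 0.9 ** 9]

stick = parametric_stick(onset_volumes, expected_values, n_volumes=60)
print([round(stick[v], 3) for v in onset_volumes])
```

Because the values are centered, the regressor captures trial-to-trial variation in the parameter rather than the mean response to trial onset.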

All analyses, other than that of choice-related effects, controlled for subjects' choice behavior. This was accomplished by using a regressor that dummy-coded the choice made on each trial. This regressor was 1 when the 20 cent option was chosen and −1 when the 10 cent option was chosen. This regressor was then normalized and convolved with a canonical, two-gamma HRF. The convolved time course was then added to the general linear model as a regressor of no interest. This additional step allows us to avoid misinterpreting choice-related variance in the blood oxygen level-dependent signal as the effect of some other parameter (e.g., value, delay, etc.).

The analyses of choice-related effects were performed similarly. The same dummy-coded choice regressor was now used as the regressor of interest. This analysis was performed in two ways. First, we included this regressor alone. Second, we added an additional regressor that coded the expected value of the chosen option. The expected value regressor was then normalized and convolved with a canonical, two-gamma HRF. The convolved time course was then added to the general linear model as a regressor of no interest. For the reasons outlined above, this second analysis allows us to avoid misinterpreting value-related effects as effects of choice per se.

Region-of-interest (ROI) analyses were conducted by defining spheres centered on voxels of peak significance. The spheres were 8 mm in diameter. These ROIs were interrogated by deriving β values for each subject using a fixed-effects general linear model. Group analyses were conducted by subjecting these β values to subsequent t tests.
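As a rough illustration of what an 8 mm sphere covers, the sketch below lists the voxel offsets whose centers fall within 4 mm of a peak voxel. The inclusion rule (voxel center inside the sphere) and the choice of grid are assumptions; the analysis software may define ROI membership differently, for example on a 1 mm anatomical grid.

```python
import math


def sphere_offsets(diameter_mm: float, voxel_mm: float):
    """Voxel offsets (in voxel units) whose centers lie within the sphere."""
    radius = diameter_mm / 2
    reach = int(radius // voxel_mm)
    offsets = []
    for dx in range(-reach, reach + 1):
        for dy in range(-reach, reach + 1):
            for dz in range(-reach, reach + 1):
                distance_mm = math.sqrt(dx * dx + dy * dy + dz * dz) * voxel_mm
                if distance_mm <= radius:
                    offsets.append((dx, dy, dz))
    return offsets


functional_grid = sphere_offsets(diameter_mm=8, voxel_mm=3)
anatomical_grid = sphere_offsets(diameter_mm=8, voxel_mm=1)
print(f"3 mm grid: {len(functional_grid)} voxels")
print(f"1 mm grid: {len(anatomical_grid)} voxels")
```

On the 3 mm grid, only the peak voxel and its six face neighbors qualify under this rule, so such ROIs are small relative to the 6 mm smoothing kernel.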

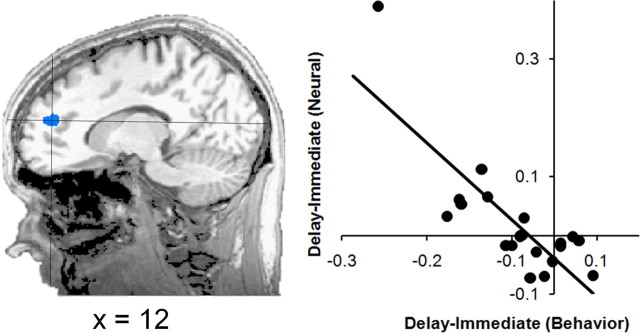

Individual differences in decision making were investigated by computing an index reflecting the degree to which each subject was sensitive to the difference between the immediate and delay conditions of our task. To do so, we computed the difference between the probability of choosing the 20 cent option in the immediate condition and the same probability in the delay condition. This difference was significantly greater than zero (see Results), reflecting our subjects' relative unwillingness to wait in the delay condition. This difference was then entered as a covariate in an analysis of covariance (ANCOVA) to investigate whether the neural differentiation of the two conditions (during the deliberation period) was modulated by the choice pattern of our subjects. Group-level analyses were performed by computing R values (Pearson's correlation coefficient) for each voxel. Each voxel was assigned the R value representing the correlation between the behavioral differences and the t values from the immediate versus delay contrast at that voxel. The resulting map was then thresholded at p < 0.001 (taking into account the fact that R is not normally distributed) with a cluster threshold of five contiguous 3 × 3 × 3 mm voxels. There was a single outlier in terms of both behavioral and neural differentiation in the resulting frontopolar region. However, the obtained relationship between behavior and neural activity in this region (r = −0.81; p < 0.0001) remains significant both when removing this subject (r = −0.70; p < 0.001) and when using Spearman's rank order correlation (r = −0.71; p < 0.0005).
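The behavioral index and the two correlation measures mentioned above can be sketched as follows. All data values are made up for illustration and do not reproduce the reported results; the helper names are hypothetical.

```python
import math
from statistics import mean


def pearson_r(x, y):
    """Pearson product-moment correlation coefficient."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman rank-order correlation: Pearson correlation of the ranks."""
    return pearson_r(average_ranks(x), average_ranks(y))


# Made-up data for six subjects (not the study's data).
p_large_immediate = [0.52, 0.66, 0.48, 0.55, 0.50, 0.47]
p_large_delay = [0.50, 0.45, 0.49, 0.46, 0.47, 0.47]
neural_contrast = [-0.1, -1.9, 0.2, -0.8, -0.3, 0.1]  # immediate minus delay

# Behavioral index: P(choose 20 cents | immediate) - P(choose 20 cents | delay).
sensitivity = [a - b for a, b in zip(p_large_immediate, p_large_delay)]

print(f"Pearson r:    {pearson_r(sensitivity, neural_contrast):.2f}")
print(f"Spearman rho: {spearman_rho(sensitivity, neural_contrast):.2f}")
```

Comparing the two coefficients is a simple way to check whether a relationship depends on one extreme subject, since the rank-based measure is less sensitive to outliers.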

Results

Behavioral results

As expected, reward magnitude, probability, and delay each exerted a significant influence on choice behavior (Fig. 1B). To quantify the relationship between these variables, we applied a 2 (condition: delay vs immediate) by 7 (choice type) repeated-measures ANOVA to arcsine-transformed choice proportions. We observed a significant effect of choice type (F(6,19) = 66.33; p < 0.0001) reflecting the influence of probability. We also observed a significant effect of condition (F(1,19) = 9.73; p < 0.01). This latter effect reflects the fact that subjects chose the 20 cent option more frequently in the immediate condition (M = 50.8% of the time) than in the delay condition (M = 45.48% of the time), most likely because subjects found waiting to be somewhat aversive and thus chose the smaller, less delayed option in the delay condition. There were no other significant effects. The effect of condition is of interest, particularly because its magnitude varied across subjects. Five of our subjects (25% of our sample) chose the smaller reward significantly more frequently in the delay condition than in the immediate condition (values of t(19) > 2.20; values of p < 0.05). An additional 10 subjects (50% of our sample) exhibited this same pattern but failed to reach significance (values of t(19) = 0.07–1.81; values of p = 0.08–0.94). The remaining five subjects (25% of our sample) chose the smaller reward less frequently in the delay condition than in the immediate condition, although none came close to significance (values of t(19) < 1.23; values of p > 0.23).
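Choice proportions are bounded between 0 and 1, and an arcsine transformation is a common way to stabilize their variance before an ANOVA. The text does not state which variant was used; the sketch below assumes the standard arcsine square-root form and applies it to the two reported condition means purely for illustration.

```python
import math


def arcsine_transform(proportion: float) -> float:
    """Arcsine square-root transform of a proportion (assumed variant)."""
    if not 0.0 <= proportion <= 1.0:
        raise ValueError("proportion must lie between 0 and 1")
    return math.asin(math.sqrt(proportion))


# Reported mean proportions of 20 cent choices in each condition.
for label, proportion in (("immediate", 0.508), ("delay", 0.4548)):
    print(f"{label}: p = {proportion:.4f} -> {arcsine_transform(proportion):.4f} rad")
```

In practice the transform would be applied to each subject's proportion for each of the 14 condition-by-choice-type cells before running the repeated-measures ANOVA.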

Activity related to expected value

All of our functional magnetic resonance imaging analyses were performed on activity elicited during the deliberation phase of our task (i.e., time-locked to trial onset). Doing so allows us to focus on the cognitive and neural processes operating during decision making itself. In addition, because the deliberation phase of the delay and immediate conditions includes nearly identical stimuli, timing, and motor preparation, our analyses are untainted by the methodological differences that distinguish the two conditions later in the trial.

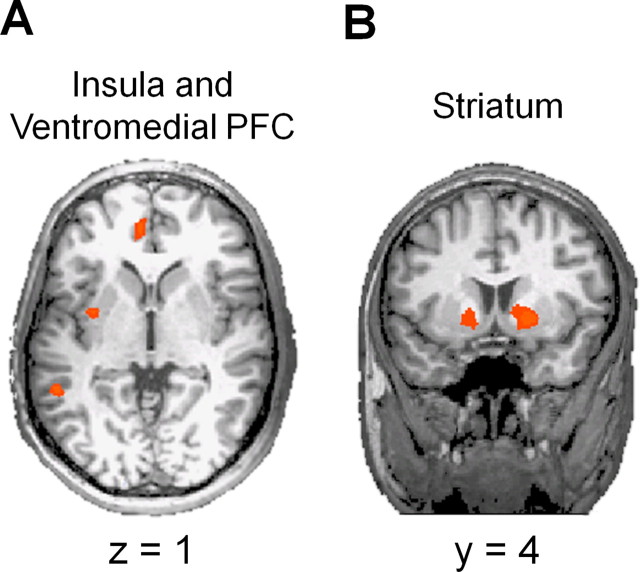

Our initial analyses sought to localize brain regions representing the value of the options under consideration. Thus, we localized regions whose activity was correlated with the expected value (objective value multiplied by the objective probability of receipt) of the chosen option (Hsu et al., 2005). This analysis yielded a large set of regions, many of which were common to both the immediate and delay conditions (supplemental Table S1, available at www.jneurosci.org as supplemental material). These common regions included portions of the posterior cingulate cortex, medial portions of prefrontal cortex, including a large part of the ventromedial region, and portions of left insula (Fig. 2A). All of these regions have previously been implicated in the representation of value, uncertainty, and decision making (McCoy et al., 2003; Sanfey et al., 2003; McCoy and Platt, 2005; Naqvi et al., 2007; Kepecs et al., 2008). There were also regions correlated with expected value in only one of the two conditions. Regions unique to the delay condition included additional lateral and ventral regions in the temporal lobe and dorsolateral prefrontal regions such as the middle and superior frontal gyri. In the delay condition, we also observed an interesting dissociation in insular cortex. A posterior insular region exhibited positive correlations with expected value, whereas more anterior regions exhibited negative correlations (i.e., activity decreased as expected value increased). Additional negative relationships were observed in the right inferior frontal and precentral gyri and the right inferior parietal lobule. There were fewer regions that mirrored expected value during only the immediate condition. These included medial frontal gyrus and right superior parietal lobule.

Figure 2.

A, Value-related effects. Portions of ventromedial prefrontal and insular cortices exhibited activity that was positively correlated with the expected value of the chosen option in both the immediate and delay conditions. PFC, Prefrontal cortex. B, Choice-related effects. Bilateral regions in the striatum exhibited significantly greater activity when participants chose the larger (20 cent) option than when they chose the smaller (10 cent) option. This pattern was observed in both the immediate and delay conditions and remained significant even when controlling for the expected value of the chosen option (see Materials and Methods).

Activity related to choice

We also investigated whether there were brain regions whose activity was directly related to our subjects' choice behavior. To do so, we looked for regions that were differentially active when the subjects chose the smaller and larger reward options. Note that this analysis is equivalent to localizing regions whose activity is related to undiscounted value (i.e., 10 vs 20 cents). This analysis was performed in several ways. The first analysis simply looked for choice-related activity (supplemental Table S2, available at www.jneurosci.org as supplemental material). We also performed this same analysis while controlling (see Materials and Methods) for the expected value of the chosen option (supplemental Table S3, available at www.jneurosci.org as supplemental material). The region most robustly exhibiting choice-related activity in both the delay and immediate conditions was bilateral striatum (Fig. 2B), which exhibited significantly greater activity before subjects chose the option offering 20 cents than before subjects chose the option offering 10 cents. These results can also be taken as suggesting that activity in the striatum is related to the undiscounted value of the chosen option.

Differences between immediate and delay conditions

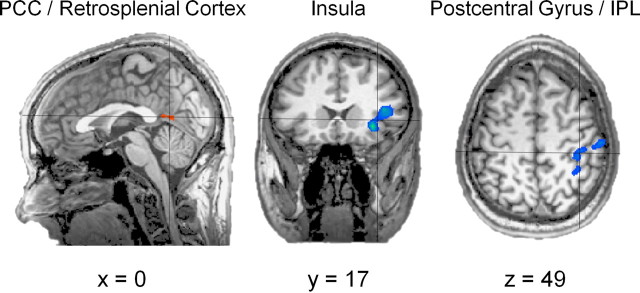

There were several regions that exhibited greater task-related activity in either the delay or the immediate condition (Fig. 3; supplemental Table S4, available at www.jneurosci.org as supplemental material) (thresholded at p < 0.001; cluster threshold, 5). Regions exhibiting greater activity in the immediate condition include anterior portions of the right insula and a small superior portion of the right postcentral gyrus. In contrast, a single region exhibited greater activity in the delay condition than in the immediate condition: medial posterior cingulate/retrosplenial cortex.

Figure 3.

Task-related effects. Posterior cingulate/retrosplenial cortex (in warm colors) was significantly more active during the delay condition than during the immediate condition. In contrast, left insula, right postcentral gyrus, and right inferior parietal lobule (in cool colors) all exhibited significantly greater activity in the immediate version of the task. PCC, Posterior cingulate cortex; IPL, inferior parietal lobule.

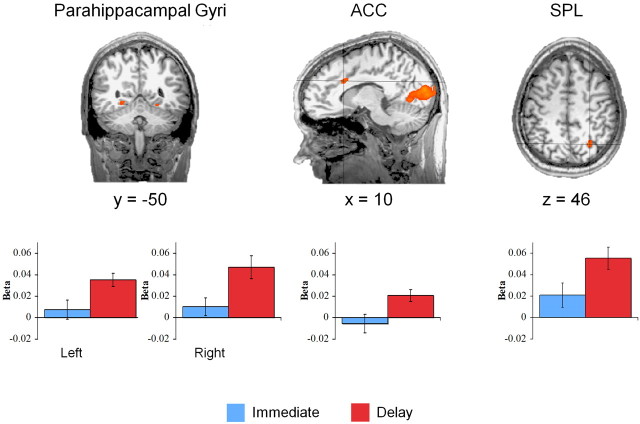

Representation of delay but not probability

We next performed analyses to look for activity correlated with the number of rectangles present during decision making. Recall that an increasing number of rectangles represents a decreasing probability of reward delivery in the immediate condition and represents both increasing delay and decreasing probability in the delay condition. We first localized those regions whose activity was correlated with the number of rectangles associated with the chosen option. These regions are illustrated in Figure 4 (and supplemental Table S5, available at www.jneurosci.org as supplemental material) along with the value of β: the slope derived from the linear regression between the number of rectangles and the neural activity in each region and in each condition. In the immediate condition, this analysis failed to reveal any significant parametric effects. In the delay condition, however, increasing numbers of rectangles were associated with increasing levels of activity in several regions including bilateral parahippocampal cortex, anterior cingulate, and right intraparietal sulcus (IPS). That these same regions did not exhibit parametric effects in the immediate condition suggests that their activity is associated with delay per se, not probability. Indeed, the robust effects in parahippocampal cortex and IPS are consistent with the suggestion that the delay condition engages prospective processes (Okuda et al., 2003; Addis et al., 2007; Buckner and Carroll, 2007; Hassabis et al., 2007; Szpunar et al., 2007). To further explore the difference between the two conditions, we performed ROI analyses on those regions exhibiting significant parametric effects in the delay condition. These comparisons revealed significantly greater parametric effects in the delay condition than in the immediate condition in each of the ROIs (Fig. 4) (all values of t(19) > 2.27; values of p < 0.05). 
Furthermore, despite their absence in the above whole-brain analysis, ROI analyses reveal that each of these regions also exhibits significantly greater activity in the delay condition than in the immediate condition (all values of t(19) > 2.25; values of p < 0.05). In all, these results suggest that the cognitive operations being performed in these regions are uniquely associated with the temporal aspects embodied by the delay condition. These findings also strongly imply that the delay condition is engaging prospective processes during the deliberation period.

Figure 4.

Delay and uncertainty-related effects. Several regions exhibited parametric effects in the delay condition such that their activity was correlated with the number of rectangles associated with the chosen option. These regions included bilateral parahippocampal gyri, anterior cingulate cortex (ACC), and portions of the IPS. SPL, Superior parietal lobule. Plotted below are the β values representing the slope derived from the linear regression between the number of rectangles and neural activity in each ROI and each condition. Each ROI exhibits both significant parametric effects (p < 0.001) and significantly greater activity in the delay condition than in the immediate condition (p < 0.05). Error bars indicate SEM.

Individual differences

Given the heterogeneity of choices exhibited by our subjects, we wanted to explore whether there were systematic neural differences between different decision makers. In particular, we were interested in our subjects' sensitivity to the temporal manipulation. To estimate our subjects' sensitivity to the temporal dimension of our decision-making task, we computed the difference between the probability of choosing the 20 cent option in the immediate and delay conditions separately for each subject. This difference captures our subjects' attitudes toward the delay component per se, while removing other attitudes (e.g., risk aversion) captured by the immediate condition. This difference varied quite widely across subjects with our most extreme subject exhibiting a difference of roughly 20 percentage points between conditions (65.71% in the immediate condition and 45.0% in the delay condition). Neural correlates of these individual differences were investigated using an ANCOVA model, using the above difference as the covariate. We then looked for correlations between this covariate and the contrast values from the delay-immediate comparison reported above. This analysis revealed a region of anterior medial frontal cortex (Brodmann area 10) often referred to as frontopolar cortex (Fig. 5; supplemental Table S6, available at www.jneurosci.org as supplemental material). Those subjects whose choices were similar in the immediate and delay conditions exhibited similar neural activity in frontopolar cortex in the two conditions. In contrast, those subjects who chose the 20 cent option less in the delay condition than in the immediate condition exhibited greater activity in frontopolar cortex in the delay condition than in the immediate condition. This suggests that neural activity in this frontopolar region was uniquely associated with the behavioral discrimination of the delay and immediate conditions.

Figure 5.

Individual differences. We computed the difference between each subject's probability of choosing the 20 cent option in the immediate and delay conditions and looked for brain regions exhibiting a similar differentiation between conditions. A region in frontopolar cortex exhibited this pattern of activity. To the right, subjects' behavioral differences are plotted against their neural differences from the above region of interest.

Discussion

There has been much recent interest in the neural underpinnings of intertemporal choice behavior. For example, there has been recent investigation (McClure et al., 2004) into the neural mechanisms underlying the impatience that is commonly observed behaviorally. There has also been work on the neural representation of discounted value during intertemporal choice (Kable and Glimcher, 2007; Kim et al., 2008). However, these studies have primarily focused on the representation of value. There has been little investigation into what, if any, influence the uniquely temporal features of these decisions exert. Indeed, many assume (often implicitly) that the influences of delay have more to do with the uncertainty associated with waiting than with time per se. Thus, it is important to determine how purely temporal factors exert their influence on decision makers.

We constructed a novel intertemporal choice task, incorporating probabilistic reward delivery and varying whether the outcome was revealed immediately or only after some delay. This design has the novel ability to reveal behavior and neural activity uniquely associated with the temporal aspects of the decision. Although the two versions of our task are superficially similar and elicited similar choice behavior, we observed systematic differences in the neural activity during decision making. The most significant of these differences was that the temporal version of our task engaged a network of brain regions (including parahippocampal cortex, retrosplenial cortex, and frontopolar cortex) previously implicated in the process of prospection, or constructing representations of future events (Addis et al., 2007; Buckner and Carroll, 2007; Szpunar et al., 2007). This finding is consistent with the possibility that, as part of their deliberations, our subjects were simulating the impending delay. Knowing that each decision in our task led to a complex series of events, it seems plausible that our subjects attempted to construct a representation of the delay interval simply to comprehend, anticipate, and evaluate the consequences of their choice. Such a representation would likely include the unfolding visual stimuli, expectations of each rectangle turning red or green, and the expected outcome of their choice. Such processing would explain the neural activity associated with the temporal aspects of the delay condition.

Consistent with previous work in economics (Chew and Ho, 1994; Arai, 1997), delaying the resolution of uncertainty influenced many of our subjects' preferences. It has been argued that choices are modulated by delays because decision makers experience positive or negative utility during the delay interval itself. When outcomes are certain and the outcome is negative, the utility associated with the delay period is referred to as dread (Loewenstein, 1987; Berns et al., 2006; Berns et al., 2007). When outcomes are uncertain and it is the resolution of that uncertainty that is being delayed (as in the current study), this delay period utility has been referred to as anxiety (Wu, 1999). Thus, decision makers are also likely representing the impending delay interval in an attempt to anticipate any anxiety that they might experience as a consequence of their choice.

In addition, we observed a systematic relationship between the tendency to choose delayed rewards and activity in frontopolar cortex, a region that has been implicated in prospective processing (Okuda et al., 2003; Addis et al., 2007; Buckner and Carroll, 2007; Szpunar et al., 2007). Economists have long speculated that temporal preferences are, in part, determined by people's “power to imagine” and their “willingness to put forth the necessary effort” to do so (von Böhm-Bawerk, 1959). Furthermore, it has been suggested (Loewenstein, 1987) that the vividness of such imagination (or the lack thereof) should explain variability in temporal preferences across both individuals and situations. Our results are consistent with this idea in that individuals who exhibited greater frontopolar activity appeared better able to represent future consequences such as anxiety and to modulate their behavior accordingly. In contrast, those individuals who exhibited less frontopolar cortex activity appeared to have “less power of realizing the future” (Marshall, 1916) and failed to discriminate between our delay and immediate conditions.

Perhaps surprisingly, in contrast to previous studies (McClure et al., 2004; Hsu et al., 2005; Kable and Glimcher, 2007; Tanaka et al., 2007), we found little difference between the coding of subjective value in our delay and immediate conditions. This may be attributable to our use of a paradigm that included two equally salient rewards rather than emphasizing one choice option against an unchanging “standard” (McClure et al., 2004; Kable and Glimcher, 2007; Tanaka et al., 2007). It is also likely attributable, in part, to our focus on the deliberation phase of the decision rather than the decision epoch emphasized in previous studies (Hsu et al., 2005), and to our inclusion of objective value as a nuisance regressor (Tanaka et al., 2007). However, the most likely explanation for this difference is that manipulations of the time of reward delivery have a significantly larger influence on behavior (and, presumably, on value signals) than other sorts of temporal manipulations (Hyten et al., 1994).

That being said, our results suggest that there may be differential effects of value in the temporal and nontemporal versions of our task. In the delay condition, but not the immediate condition, we observed neural activity in dorsolateral prefrontal cortex that was parametrically modulated by the expected value of the chosen option. Perhaps more interestingly, we observed neural activity associated with choice (10 vs 20 cents) in regions thought to code for value. Both the delay and immediate conditions elicited greater activity in the ventral striatum during deliberations when subjects subsequently chose the larger, 20 cent reward than when they chose the smaller, 10 cent reward. Furthermore, the delay condition, but not the immediate condition, elicited similar choice-related patterns of neural activity in bilateral insular and anterior cingulate cortices. These differences, particularly those in dorsolateral and medial frontal cortices, could suggest an attempt on subjects' part to override the prepotent aversion to delay (Botvinick et al., 2004; McClure et al., 2004), although this possibility requires additional investigation.

Together, our results are inconsistent with the suggestion that delay and uncertainty influence decisions via identical mechanisms (Mischel, 1966; Stevenson, 1986; Mazur, 1989, 1995, 1997; Rachlin et al., 1991; Sozou, 1998). We included probabilistic reward delivery in both the temporal and nontemporal versions of our task. Thus, neural activity related to the probability of reward delivery would not have differed between the two versions of the task. Indeed, the only procedural difference between the two tasks was the time at which the outcome was revealed. Thus, the behavioral and neural differences we observed between the two versions of our task can only be attributable to this temporal feature. This suggests that there is uniquely temporal processing taking place above and beyond the consideration of uncertainty. The proposed ability to anticipate future anxiety as outlined above is precisely the sort of uniquely temporal process that can explain such differences.

Instead, our results suggest that temporal factors may exert their influence via separate mechanisms. Nonetheless, we fully expect that these temporal signals are combined with reward magnitude signals to form subjective reward values and modulate choice behavior. This might take place in the dorsolateral prefrontal cortex (Kim et al., 2008) or orbitofrontal cortex (Padoa-Schioppa and Assad, 2006). Once committed to a particular choice, the decision maker would experience the utility associated with the delay period, a quantity that might change monotonically throughout the interval (Berns et al., 2006). This direct experience would allow decision makers to learn about their own reactions to temporally extended uncertainty and to better anticipate this factor in future decisions.

Footnotes

This work was supported by National Institutes of Health (NIH) Grants EY014193 and P30 EY000785 (M.M.C.) and NIH Grant MH573246 (X.-J.W.). We thank Nicholas Turk-Browne and the members of the Yale Visual Cognitive Neuroscience Laboratory, the Wang Laboratory, the Lee Laboratory, and the Yale Neuroeconomics Group for helpful feedback and discussion. We also thank Tae-kwan Lee for assistance in early behavioral pilot testing.

References

- Addis DR, Wong AT, Schacter DL (2007) Remembering the past and imagining the future: common and distinct neural substrates during event construction and elaboration. Neuropsychologia 45:1363–1377.

- Ainslie G (1975) Specious reward: a behavioral theory of impulsiveness and impulse control. Psychol Bull 82:463–496.

- Ainslie G (1992) Picoeconomics: the strategic interaction of successive motivational states within the person. Cambridge, UK: Cambridge UP.

- Arai D (1997) Temporal resolution of uncertainty in risky choices. Acta Psychologica 96:15–26.

- Berns GS, Chappelow J, Cekic M, Zink CF, Pagnoni G, Martin-Skurski ME (2006) Neurobiological substrates of dread. Science 312:754–758.

- Berns GS, Laibson D, Loewenstein G (2007) Intertemporal choice—toward an integrative framework. Trends Cogn Sci 11:482–488.

- Botvinick MM, Cohen JD, Carter CS (2004) Conflict monitoring and anterior cingulate cortex: an update. Trends Cogn Sci 8:539–546.

- Buckner RL, Carroll DC (2007) Self-projection and the brain. Trends Cogn Sci 11:49–57.

- Cardinal RN (2006) Neural systems implicated in delayed and probabilistic reinforcement. Neural Netw 19:1277–1301.

- Cardinal RN, Pennicott DR, Sugathapala CL, Robbins TW, Everitt BJ (2001) Impulsive choice induced in rats by lesions of the nucleus accumbens core. Science 292:2499–2501.

- Chapman GB, Weber BJ (2006) Decision biases in intertemporal choice and choice under uncertainty: testing a common account. Mem Cognit 34:589–602.

- Chew SH, Ho JL (1994) Hope: an empirical study of attitude toward the timing of uncertainty resolution. J Risk Uncertain 8:267–288.

- Dasgupta P, Maskin E (2005) Uncertainty and hyperbolic discounting. Am Econ Rev 95:1290–1299.

- Frederick S, Loewenstein G, O'Donoghue T (2002) Time discounting and time preference: a critical review. J Econ Lit 40:351–401.

- Green L, Myerson J (2004) A discounting framework for choice with delayed and probabilistic rewards. Psychol Bull 130:769–792.

- Green L, Myerson J, Ostaszewski P (1999) Amount of reward has opposite effects on the discounting of delayed and probabilistic outcomes. J Exp Psychol Learn Mem Cogn 25:418–427.

- Halevy Y (2005) Diminishing impatience: disentangling time preference from uncertain lifetime. Mimeo: University of British Columbia Department of Economics.

- Hariri AR, Brown SM, Williamson DE, Flory JD, de Wit H, Manuck SB (2006) Preference for immediate over delayed rewards is associated with magnitude of ventral striatal activity. J Neurosci 26:13213–13217.

- Hassabis D, Kumaran D, Vann SD, Maguire EA (2007) Patients with hippocampal amnesia cannot imagine new experiences. Proc Natl Acad Sci U S A 104:1726.

- Holt DD, Green L, Myerson J (2003) Is discounting impulsive? Evidence from temporal and probability discounting in gambling and non-gambling college students. Behav Processes 64:355–367.

- Hsu M, Bhatt M, Adolphs R, Tranel D, Camerer CF (2005) Neural systems responding to degrees of uncertainty in human decision-making. Science 310:1680–1683.

- Hyten C, Madden GJ, Field DP (1994) Exchange delays and impulsive choice in adult humans. J Exp Anal Behav 62:225–233.

- Kable JW, Glimcher PW (2007) The neural correlates of subjective value during intertemporal choice. Nat Neurosci 10:1625–1633.

- Kacelnik A (2003) The evolution of patience. In: Time and decision: economic and psychological perspectives on intertemporal choice (Loewenstein G, Read D, Baumeister RF, eds), pp 115–138. New York: Russell Sage Foundation.

- Kalenscher T, Pennartz CMA (2008) Is a bird in the hand worth two in the future? The neuroeconomics of intertemporal decision-making. Prog Neurobiol 84:284–315.

- Kepecs A, Uchida N, Zariwala HA, Mainen ZF (2008) Neural correlates, computation and behavioural impact of decision confidence. Nature 455:227–231.

- Kim S, Hwang J, Lee D (2008) Prefrontal coding of temporally discounted values during intertemporal choice. Neuron 59:161–172.

- Kirby KN (1997) Bidding on the future: evidence against normative discounting of delayed rewards. J Exp Psychol Gen 126:54–70.

- Kirby KN, Herrnstein RJ (1995) Preference reversals due to myopic discounting of delayed reward. Psychol Sci 6:83–89.

- Loewenstein G (1987) Anticipation and the valuation of delayed consumption. Econ J 97:666–684.

- Marshall A (1916) Principles of economics, Ed 7. London: Macmillan.

- Mazur JE (1989) Theories of probabilistic reinforcement. J Exp Anal Behav 51:87–99.

- Mazur JE (1995) Conditioned reinforcement and choice with delayed and uncertain primary reinforcers. J Exp Anal Behav 63:139–150.

- Mazur JE (1997) Choice, delay, probability, and conditioned reinforcement. Learn Behav 25:131–147.

- McClure SM, Laibson DI, Loewenstein G, Cohen JD (2004) Separate neural systems value immediate and delayed monetary rewards. Science 306:503–507.

- McClure SM, Ericson KM, Laibson DI, Loewenstein G, Cohen JD (2007) Time discounting for primary rewards. J Neurosci 27:5796–5804.

- McCoy AN, Platt ML (2005) Risk-sensitive neurons in macaque posterior cingulate cortex. Nat Neurosci 8:1220–1227.

- McCoy AN, Crowley JC, Haghighian G, Dean HL, Platt ML (2003) Saccade reward signals in posterior cingulate cortex. Neuron 40:1031–1040.

- Mischel W (1966) Theory and research on the antecedents of self-imposed delay of reward. Prog Exp Pers Res 3:85–132.

- Naqvi NH, Rudrauf D, Damasio H, Bechara A (2007) Damage to the insula disrupts addiction to cigarette smoking. Science 315:531–534.

- Okuda J, Fujii T, Ohtake H, Tsukiura T, Tanji K, Suzuki K, Kawashima R, Fukuda H, Itoh M, Yamadori A (2003) Thinking of the future and past: the roles of the frontal pole and the medial temporal lobes. Neuroimage 19:1369–1380.

- Padoa-Schioppa C, Assad JA (2006) Neurons in the orbitofrontal cortex encode economic value. Nature 441:223–226.

- Prelec D, Loewenstein G (1991) Decision making over time and under uncertainty: a common approach. Manage Sci 37:770–786.

- Rachlin H, Raineri A, Cross D (1991) Subjective probability and delay. J Exp Anal Behav 55:233–244.

- Roesch MR, Calu DJ, Schoenbaum G (2007) Dopamine neurons encode the better option in rats deciding between differently delayed or sized rewards. Nat Neurosci 10:1615–1624.

- Rosati AG, Stevens JR, Hare B, Hauser MD (2007) The evolutionary origins of human patience: temporal preferences in chimpanzees, bonobos, and human adults. Curr Biol 17:1663–1668.

- Sanfey AG, Rilling JK, Aronson JA, Nystrom LE, Cohen JD (2003) The neural basis of economic decision-making in the ultimatum game. Science 300:1755–1758.

- Sozou PD (1998) On hyperbolic discounting and uncertain hazard rates. Proc Biol Sci 265:2015–2020.

- Stevenson MK (1986) A discounting model for decisions with delayed positive or negative outcomes. J Exp Psychol Gen 115:131–154.

- Strotz RH (1955) Myopia and inconsistency in dynamic utility maximization. Rev Econ Stud 23:165–180.

- Szpunar KK, Watson JM, McDermott KB (2007) Neural substrates of envisioning the future. Proc Natl Acad Sci U S A 104:642.

- Talairach J, Tournoux P (1988) Co-planar stereotaxic atlas of the human brain: 3-dimensional proportional system: an approach to cerebral imaging. New York: Thieme.

- Tanaka SC, Doya K, Okada G, Ueda K, Okamoto Y, Yamawaki S (2004) Prediction of immediate and future rewards differentially recruits cortico-basal ganglia loops. Nat Neurosci 7:887–893.

- Tanaka SC, Schweighofer N, Asahi S, Shishida K, Okamoto Y, Yamawaki S, Doya K (2007) Serotonin differentially regulates short- and long-term prediction of rewards in the ventral and dorsal striatum. PLoS ONE 2:e1333.

- von Böhm-Bawerk E (1959) Capital and interest, Vol 2. South Holland, IL: Libertarian, Reprint (London: Macmillan, 1889).

- Wittmann M, Leland DS, Paulus MP (2007) Time and decision making: differential contribution of the posterior insular cortex and the striatum during a delay discounting task. Exp Brain Res 179:643–653.

- Wu G (1999) Anxiety and decision making with delayed resolution of uncertainty. Theory Decis 46:159–199.