Abstract

Nonlinear random effects models with finite mixture structures are used to identify polymorphism in pharmacokinetic/pharmacodynamic phenotypes. An EM algorithm for maximum likelihood estimation is developed that uses sampling-based methods to implement the expectation step and results in an analytically tractable maximization step. A benefit of the approach is that no model linearization is performed and the estimation precision can be controlled to any desired level by the sampling process. A detailed simulation study illustrates the feasibility of the estimation approach and evaluates its performance. Applications of the proposed nonlinear random effects mixture model approach to other population pharmacokinetic/pharmacodynamic problems will be of interest for future investigation.

Keywords: Finite mixture models, Mixed effects models, Pharmacokinetics/pharmacodynamics

1. Introduction

There is substantial variability in the way individuals respond to medications, in both treatment efficacy and toxicity. The sources of a drug’s underlying pharmacokinetic and pharmacodynamic variability can include demographic factors (such as age, sex, weight), physiological status (such as renal, liver, cardiovascular function), disease states, genetic differences, interactions with other drugs and environmental factors. In their seminal work, Sheiner, Rosenberg and Melmon (1972) proposed a parametric nonlinear mixed-effects modeling framework for quantifying both within and between subject variability in a drug’s pharmacokinetics, and developed an approximate maximum likelihood solution to the problem. Since the introduction by Beal and Sheiner (1979) of the general purpose software package NONMEM implementing this approach, other approximate maximum likelihood algorithms have been introduced to solve the nonlinear random and mixed effects modeling problem (see Davidian and Giltinan (1995) for an extensive review). An exact maximum likelihood (i.e., no linearization) solution to the parametric population modeling problem based on the EM algorithm has also been proposed by Schumitzky (1995) and fully developed and implemented by Walker (1996). The population modeling framework has had a significant impact on how pharmacokinetic (and pharmacodynamic) variability is quantified and studied during drug development, and on the identification of important covariates associated with a drug’s inter-individual kinetic/dynamic variability.

While population models incorporating measured covariates have proven to be useful in drug development, it is recognized that genetic polymorphisms in drug metabolism and in the molecular targets of drug therapy, for example, can also have a significant influence on the efficacy and toxicity of medications (Evans and Relling, 1999). There is, therefore, a need for population modeling approaches that can extract and model important subpopulations using pharmacokinetic/pharmacodynamic data collected in the course of drug development trials and other clinical studies, in order to help identify otherwise unknown genetic determinants of observed pharmacokinetic/pharmacodynamic phenotypes. The nonparametric maximum likelihood approach for nonlinear random effects modeling developed by Mallet (1986), as well as the nonparametric Bayesian approaches of Wakefield and Walker (1997) and Rosner and Mueller (1997), and the smoothed nonparametric maximum likelihood method of Davidian and Gallant (1993) all address this important problem. In this paper we propose a parametric approach using finite mixture models to identify subpopulations with distinct pharmacokinetic/pharmacodynamic properties.

An EM algorithm for exact maximum likelihood estimation of nonlinear random effects finite mixture models is introduced, extending the previous work of Schumitzky (1995) and Walker (1996). The EM algorithm has been used extensively for linear mixture model applications (see McLachlan and Peel (2000) for a review). The algorithm for nonlinear mixture models presented below has an analytically tractable M step, and uses sampling-based methods to implement the E step. Section 2 of this paper describes the finite mixture model within a nonlinear random effects modeling framework. Section 3 gives the EM algorithm for the maximum likelihood estimation of the model. Section 4 addresses individual subject classification, while an error analysis is presented in Section 5. A detailed simulation study of a pharmacokinetic model is presented in Section 6. Section 7 contains a discussion.

2. Nonlinear Random Effects Finite Mixture Models

A two-stage nonlinear random effects model that incorporates a finite mixture model is given by

$$Y_i \mid \theta_i, \beta \sim N\big(h_i(\theta_i),\; G_i(\theta_i, \beta)\big) \tag{1}$$

and

$$\theta_i \sim \sum_{k=1}^{K} w_k\, N(\mu_k, \Sigma_k) \tag{2}$$

where i=1,…,n indexes the individuals and k=1,…,K indexes the mixing components.

At the first stage represented by (1), Yi = (y1i,…,ymii)T is the observation vector for the ith individual (Yi ∈ Rmi); hi (θi) is the function defining the pharmacokinetic/pharmacodynamic (PK/PD) model, including subject specific variables (e.g., drug doses), and θi is the vector of model parameters (random effects) (θi ∈ Rp). In (1) Gi (θi, β) is a positive definite covariance matrix (Gi ∈ Rmi×mi) that may depend upon θi as well as on other parameters β (fixed effects) (β ∈ Rq).

At the second stage given by (2), a finite mixture model with K multivariate normal components is used to describe the population distribution. The weights {wk} are nonnegative numbers summing to one, denoting the relative size of each mixing component (subpopulation), for which μk (μk ∈ Rp) is the mean vector and Σk (Σk ∈ Rp×p) is the positive definite covariance matrix.
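The second-stage model can be illustrated by drawing individual parameters from a finite mixture of multivariate normals. The following is a minimal sketch, not the authors' implementation: the function name `sample_mixture` and its arguments are hypothetical, and the draw proceeds by first selecting a component label with probabilities {wk} and then sampling from the corresponding normal component.

```python
import numpy as np

def sample_mixture(n, weights, means, covs, rng):
    """Draw n parameter vectors from a K-component normal mixture.

    weights: length-K mixing weights summing to one (hypothetical argument names).
    means:   list of K mean vectors, each of length p.
    covs:    list of K positive definite p x p covariance matrices.
    Returns the (n, p) array of draws and the component label of each draw.
    """
    weights = np.asarray(weights, dtype=float)
    # Step 1: pick a component label for each individual.
    labels = rng.choice(len(weights), size=n, p=weights)
    # Step 2: draw each individual's parameters from its component.
    theta = np.stack(
        [rng.multivariate_normal(means[k], covs[k]) for k in labels]
    )
    return theta, labels
```

The returned labels play the role of the unobserved component membership; in an estimation setting they are not available and must be inferred from the data.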

Letting φ represent the collection of parameters, {β,(wk, μk, Σk),k = 1,…,K}, the population problem involves estimating φ given the observation data {Y1,…,Yn}. The maximum likelihood estimate (MLE) can be obtained by maximizing the overall data likelihood L with respect to φ. Under the i.i.d. assumption of the individual parameters {θi}, L is given by the expression

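$$L(\phi) = \prod_{i=1}^{n} \sum_{k=1}^{K} w_k \int p(Y_i \mid \theta_i, \beta)\, p(\theta_i \mid \mu_k, \Sigma_k)\, d\theta_i \tag{3}$$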

The MLE of φ is defined as φML with L(φML) ≥ L(φ) for all φ in the parameter space.

3. Solution via the EM Algorithm

The EM algorithm, originally introduced by Dempster, Laird and Rubin (1977), is a widely applicable approach to the iterative computation of MLEs. It was used by Schumitzky (1995) and Walker (1996) to solve the nonlinear random effects maximum likelihood problem for a second stage model consisting of a single normal distribution. The EM algorithm is typically formulated in terms of “complete” versus “missing” data structure. Consider the model given by (1) and (2) for the important case

$$G_i(\theta_i, \beta) = \sigma^2 H_i(\theta_i) \tag{4}$$

where Hi (θi) is a known function and β = σ2. The component label vector zi is introduced as a K dimensional indicator such that zi (k) is one or zero depending on whether or not the parameter θi arises from the kth mixing component. The individual subject parameters (θ1,…,θn) are regarded as unobserved random variables. The “complete” data is then represented by Yc = {(Yi, θi, zi),i = 1,…,n} with {θi, zi} representing the “missing” data.

The algorithm starts with φ(0) and moves from φ(r) to φ(r+1) at the rth iteration. At the E-step, define

where log Lc (φ) is the complete data log-likelihood given by

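$$\log L_c(\phi) = \sum_{i=1}^{n} \sum_{k=1}^{K} z_i(k) \left\{ \log w_k + \log p(Y_i, \theta_i \mid \sigma^2, \mu_k, \Sigma_k) \right\} \tag{5}$$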

Now

and by Bayes’ Theorem,

Introducing the notation

then

The expected value of (5) is given by

where

for some constant C.

The M-step takes φ(r) → φ(r+1) where φ(r+1) is the unique optimizer of Q(φ, φ(r)) such that . Let φ′ = {β,(μk, Σk), k=1,…, K}, then the optimizer of Q(φ, φ(r)) relative to φ′ occurs at interior points, and the corresponding components of φ(r+1) are the unique solution to

| (6) |

From the expression of log p(Yi, θi | σ2, μk, Σk),

so

Also,

and

The unique solution of (6) is thus given by

| (7) |

| (8) |

and

| (9) |

The updated estimates {wk(r+1)} are calculated independently. If zi were observable, then the MLE of wk would be (1/n) ∑i zi (k). By replacing each zi by its conditional expectation from the E step, the updating for wk is given by (see McLachlan and Peel, 2000):

$$w_k^{(r+1)} = \frac{1}{n} \sum_{i=1}^{n} E\{ z_i(k) \mid Y, \phi^{(r)} \} \tag{10}$$
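The weight update described above, in which each label is replaced by its conditional expectation, amounts to averaging the posterior membership probabilities over subjects. A minimal sketch follows; the function name `update_weights` and the array layout (one row per subject, one column per component) are assumptions made for illustration.

```python
import numpy as np

def update_weights(tau):
    """Return updated mixing weights from posterior membership probabilities.

    tau: (n, K) array; tau[i, k] is the E-step probability that subject i
         belongs to component k (each row sums to one).
    """
    tau = np.asarray(tau, dtype=float)
    if tau.ndim != 2:
        raise ValueError("tau must be a 2-D array of shape (n, K)")
    # Average over subjects: the expected fraction of subjects per component.
    return tau.mean(axis=0)
```

Because each row of the membership matrix sums to one, the updated weights are nonnegative and sum to one without any explicit constraint handling.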

Dempster et al. (1977) showed that the resulting sequence {φ(r+1)} has the likelihood improving property L (φ(r +1)) ≥ L(φ(r)). It can be shown that the above updates are well-defined, that is for all 1 ≤ k ≤ K, if then so that and are positive definite. Wu (1983) and Tseng (2005) gave the sufficient conditions for the convergence of φ(r) to a stationary point of the likelihood function L(φ). Because convergence is guaranteed only to a stationary point, starting the algorithm from a number of different positions is suggested in order to improve the chance of reaching the global maximum.

In order to implement the algorithm all the integrals in (7)–(10) must be evaluated at each iterative step. For the non-mixture problem involving a relatively simple pharmacokinetic model, Walker (1996) proposed Monte Carlo integration to evaluate the required integrals. We and others (Ng et al., 2005) have found that importance sampling is preferable to the Monte Carlo integration for approximating the integrals in the EM algorithm for a number of representative models of interest in PK/PD. We have also applied importance sampling to the current mixture model.

We note that all the integrals above have the following form

For each mixing component, a number of samples are taken from an envelope distribution, , and used to approximate the integrals as follows

| (11) |

For each mixing component for each subject, the envelope distribution is taken to be a multivariate normal density using the subject’s previously estimated conditional mean and conditional covariance as its mean and covariance. Therefore all the random samples are independent and individual specific. For details of the importance sampling approach in general, see Geweke (1989). The number of independent samples, T, will depend on the complexity of the model and the required accuracy in the integral approximations.
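The importance sampling step can be sketched as a self-normalized estimator of a conditional expectation, with a multivariate normal envelope as described above. This is an illustrative sketch under stated assumptions rather than the implementation used in the paper: the names `mvn_logpdf` and `importance_expectation` are hypothetical, and the target density is supplied only up to a normalizing constant through `log_target`.

```python
import numpy as np

def mvn_logpdf(x, mean, cov):
    """Log-density of N(mean, cov) evaluated at each row of x, shape (T, p)."""
    d = x - mean
    chol = np.linalg.cholesky(cov)
    z = np.linalg.solve(chol, d.T)              # whitened residuals, shape (p, T)
    logdet = 2.0 * np.sum(np.log(np.diag(chol)))
    p = mean.shape[0]
    return -0.5 * (p * np.log(2.0 * np.pi) + logdet + np.sum(z ** 2, axis=0))

def importance_expectation(f, log_target, env_mean, env_cov, T, rng):
    """Approximate E[f(theta)] under a density known up to a constant.

    log_target: maps a (T, p) array of draws to T unnormalized log-densities.
    env_mean, env_cov: mean and covariance of the normal envelope.
    """
    env_mean = np.asarray(env_mean, dtype=float)
    env_cov = np.asarray(env_cov, dtype=float)
    draws = rng.multivariate_normal(env_mean, env_cov, size=T)   # (T, p)
    # Log importance weights: target over envelope, stabilized before exp.
    log_w = log_target(draws) - mvn_logpdf(draws, env_mean, env_cov)
    w = np.exp(log_w - log_w.max())
    w /= w.sum()                                 # self-normalization
    values = np.array([f(d) for d in draws])
    return np.tensordot(w, values, axes=1)
```

Normalizing the weights means the unknown constant in the target cancels, which is convenient when the same draws are used for both the numerator and denominator integrals. Increasing T reduces the Monte Carlo error of the approximation.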

4. Classification of Subjects

It is of interest to assign each individual subject to a subpopulation. Such a classification will allow further investigation into the genetic basis of any identified PK/PD polymorphism. The quantity τi (k) = E{zi (k) | Y, φ} in the E step is the posterior probability that the ith individual belongs to the kth mixing component. The classification involves assigning an individual to the subpopulation associated with the highest posterior probability of membership. For example, for each i, (i =1,…,n), set

or to zero otherwise. No additional computation is required since all the τi (k) are evaluated during each EM step.
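The classification rule can be sketched directly from the posterior membership probabilities. The function name `classify` and the (n, K) array layout are assumptions for illustration.

```python
import numpy as np

def classify(tau):
    """Assign each subject to the component with the highest posterior probability.

    tau: (n, K) array of posterior membership probabilities.
    Returns the label vector (length n) and the (n, K) 0/1 indicator matrix.
    """
    tau = np.asarray(tau, dtype=float)
    labels = tau.argmax(axis=1)
    z_hat = np.zeros(tau.shape, dtype=int)
    # Set the indicator to one for the most probable component of each subject.
    z_hat[np.arange(tau.shape[0]), labels] = 1
    return labels, z_hat
```

In the case of exact ties, `argmax` selects the lowest-numbered component; a different tie-breaking rule could be substituted if needed.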

5. Standard Errors

Assuming the regularity conditions from Philippou and Roussas (1975), it can be shown that asymptotically as n → ∞,

where .

Now

and the gradient components are calculated for k=1,…,K as

and for k=1,…,K−1,

Introduce the notation sϖk = ((sΣk)1,1, (sΣk)2,1 …, (sΣk)p, p), where (sΣk)i, j is the component of the lower triangular part of sΣk in the (i, j) position. Put these results together to produce the vector

so

All the computations can be performed during the importance sampler calculation at the final iteration of the EM algorithm.
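One common way to turn per-subject score vectors into standard errors is to invert the sum of their outer products (the empirical information). The sketch below assumes that form; it is offered as an illustration of the general technique and should not be read as the exact expression used in this section. The function name and array layout are hypothetical.

```python
import numpy as np

def standard_errors_from_scores(scores):
    """Approximate standard errors from per-subject score vectors.

    scores: (n, d) array; row i is the gradient of subject i's log-likelihood
            contribution with respect to the d free parameters, evaluated at
            the final estimate.
    Assumption: the covariance is approximated by the inverse of the sum of
    outer products of the scores.
    """
    scores = np.asarray(scores, dtype=float)
    info = scores.T @ scores            # sum over subjects of s_i s_i^T
    cov = np.linalg.inv(info)
    return np.sqrt(np.diag(cov)), cov
```

Approximate 95% confidence intervals then follow as the estimate plus or minus 1.96 standard errors.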

6. Example

In this section a simulation study is conducted to evaluate the proposed algorithm for calculating the exact MLEs for a population finite mixture model. A one compartment pharmacokinetic model is used, with the observations of plasma concentration given by

where D is a bolus drug administration with 100 units of dose, V represents the volume of distribution and k is the elimination rate constant. For all the individuals, mi = 5 with t1 = 1.5, t2 = 2, t3 = 3, t4 = 4 and t5 = 5.5. The within-individual error is assumed to be i.i.d. with variance 0.01. Data sets were simulated from this model for each of 100 subjects sampled from the following population model:

where Vi and ki are assumed to be independent. A total of 200 such population data sets were generated. This model represents the pharmacokinetics of a drug with an elimination that can be characterized by two distinct subpopulations.
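A data set of this kind can be simulated as sketched below. The population values are taken from the "Population Values" column of Table 1; the additive form of the within-individual error is an assumption made for this sketch, and the function name `simulate_population` is hypothetical.

```python
import numpy as np

TIMES = np.array([1.5, 2.0, 3.0, 4.0, 5.5])   # sampling times from the text
DOSE = 100.0                                   # bolus dose from the text

def simulate_population(n_subjects, rng, w1=0.8, mu_v=20.0, var_v=4.0,
                        mu_k=(0.3, 0.6), var_k=(0.0036, 0.0036), sigma=0.1):
    """Simulate one population data set from a one-compartment model.

    V is drawn from a single normal; k is drawn from a two-component normal
    mixture with weight w1 on the first component. Additive normal error with
    standard deviation sigma is ASSUMED here.
    """
    # Component label: 0 with probability w1, otherwise 1.
    labels = (rng.random(n_subjects) >= w1).astype(int)
    V = rng.normal(mu_v, np.sqrt(var_v), n_subjects)
    k = rng.normal(np.asarray(mu_k)[labels], np.sqrt(np.asarray(var_k))[labels])
    # Noise-free concentrations, shape (n_subjects, number of times).
    conc = (DOSE / V)[:, None] * np.exp(-k[:, None] * TIMES[None, :])
    y = conc + rng.normal(0.0, sigma, conc.shape)
    return y, V, k, labels
```

Calling the function 200 times with independent random streams would produce a collection of population data sets comparable in size to the one described above.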

The formulation of Section 2 has been modified to accommodate the important case where a subset of parameters are modeled by a multivariate normal distribution and the remaining parameters follow a mixture of normals (see modified updating formulas in the Appendix). The MLEs were obtained for each of the 200 population sets using the EM algorithm with importance sampling described above. For each of the estimated parameters φ, its percent prediction error was calculated for each population data set as:

These percent prediction errors were used to calculate the mean prediction error and root mean square prediction error for each parameter. In addition, for each population data set, the calculated standard errors were used to construct 95% confidence intervals for all estimated parameters. The percent coverage of these confidence intervals was then tabulated over the 200 population data sets. Finally, the individual subject classification accuracy was evaluated for each population data set.
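The summary statistics described above can be computed as in the following sketch. It assumes the percent prediction error is defined as 100 × (estimate − true value) / true value, and that the confidence intervals are symmetric normal-theory intervals; both are assumptions for illustration, as are the function names.

```python
import numpy as np

def prediction_error_summary(estimates, true_value):
    """Mean percent prediction error and root mean square percent error.

    estimates: one estimate of the parameter per simulated data set.
    """
    est = np.asarray(estimates, dtype=float)
    pe = 100.0 * (est - true_value) / true_value   # assumed definition of PE
    return pe.mean(), np.sqrt(np.mean(pe ** 2))

def ci_coverage(estimates, std_errors, true_value, z=1.96):
    """Percent of data sets whose interval estimate +/- z*SE covers the truth."""
    est = np.asarray(estimates, dtype=float)
    se = np.asarray(std_errors, dtype=float)
    covered = np.abs(est - true_value) <= z * se
    return 100.0 * covered.mean()
```

For example, estimates of 19 and 21 for a true value of 20 give percent prediction errors of −5 and 5, so the mean error is 0 and the root mean square error is 5.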

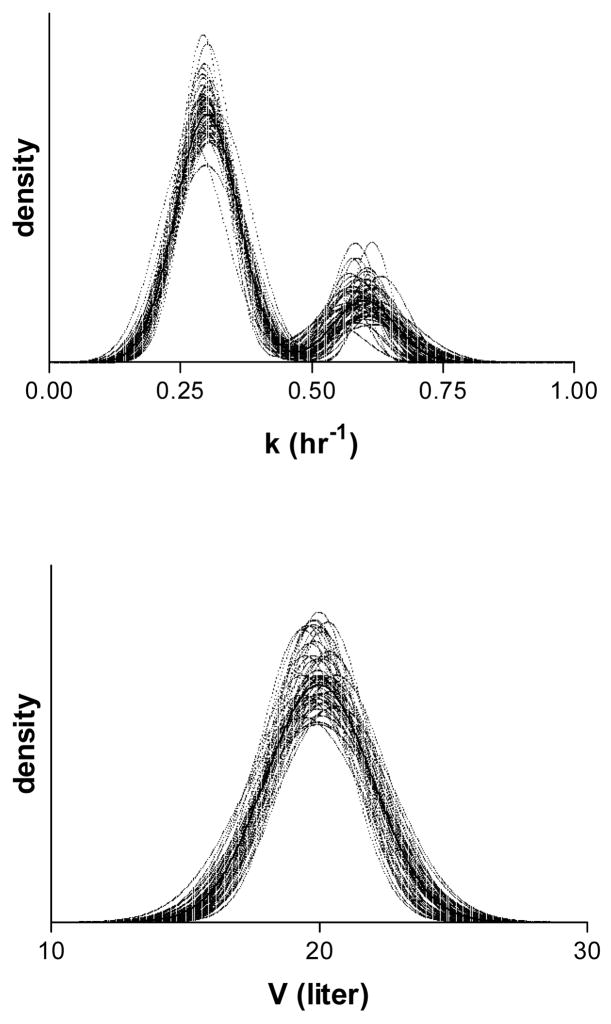

Figure 1 provides a graphical illustration of the results showing the true population distributions of V and k along with the estimated distribution obtained from the 200 simulated population data sets. Quantitative results are presented in Table 1, which gives mean and root mean square prediction errors (RMSE) as well as the percent coverage of the calculated confidence intervals for each of the estimated parameters. The parameter estimates, overall, match the population values and the percent coverage of confidence intervals is reasonable. The estimates of population variance have relatively greater biases and RMSE. Over the 200 population data sets, on average 1.54 out of 100 subjects were classified in the wrong subpopulation. The largest number of subjects misclassified was 4, while all the subjects were correctly classified in 83 of the 200 population data sets.

Fig. 1.

True (solid line) and estimated (dotted line) population densities of k (upper panel) and V (lower panel) from the population simulation analysis.

Table 1.

Mean of parameter estimates (over 200 simulations); Mean percent prediction error (PE) and root mean square percent prediction error (RMSE); Percent coverage of 95% confidence interval

| Parameter | Population Values | Mean of Estimates | Mean PE (%) | RMSE (%) | Coverage of 95% CI (%) |
|---|---|---|---|---|---|
| μ_V | 20 | 19.978 | 0.043586 | 1.0399 | 94.5 |
| μ_k1 | 0.3 | 0.29982 | −0.09045 | 1.6491 | 96.5 |
| μ_k2 | 0.6 | 0.60029 | 0.10042 | 2.6455 | 90.5 |
| w_1 | 0.8 | 0.80448 | 0.55991 | 5.4248 | 94.5* |
| σ_V² | 4 | 3.7605 | −5.0867 | 23.822 | 94.5 |
| σ_k1² | 0.0036 | 0.003573 | −1.0539 | 14.88 | 91.0 |
| σ_k2² | 0.0036 | 0.003195 | −10.857 | 40.236 | 83.5 |
| σ | 0.1 | 0.099931 | −0.06857 | 4.0618 | 95.5 |

*The coverage of the transformed variable is shown.

Central to the calculation of the MLEs is the computation of the integrals in (7)–(9), as approximated by importance sampling in our implementation. Using one of the 200 population data sets we examined the influence of the number of samples (T) used in the importance sampler, as well as the number of EM iterations required to achieve two digits of accuracy for each of the estimated parameters. Table 2 presents the parameter estimates from 50 EM iterations using 1000, 2000 and 3000 samples in the importance sampling. Accuracy to two digits was obtained with 1000 samples. Based on this experience, T was taken to be 1000 in this simulation study and 50 EM iterations were run on each data set.

Table 2.

Parameter estimates by importance sampling with 1000, 2000 and 3000 samples

| Parameter | T=1000 | T=2000 | T=3000 |
|---|---|---|---|
| μ_V | 19.85039 | 19.84843 | 19.85201 |
| μ_k1 | 0.30840 | 0.30837 | 0.30853 |
| μ_k2 | 0.60038 | 0.60029 | 0.60079 |
| w_1 | 0.75164 | 0.75157 | 0.75269 |
| σ_V² | 5.73544 | 5.72227 | 5.70365 |
| σ_k1² | 0.00327 | 0.00323 | 0.00328 |
| σ_k2² | 0.00202 | 0.00203 | 0.00200 |
| σ | 0.09706 | 0.09687 | 0.09692 |

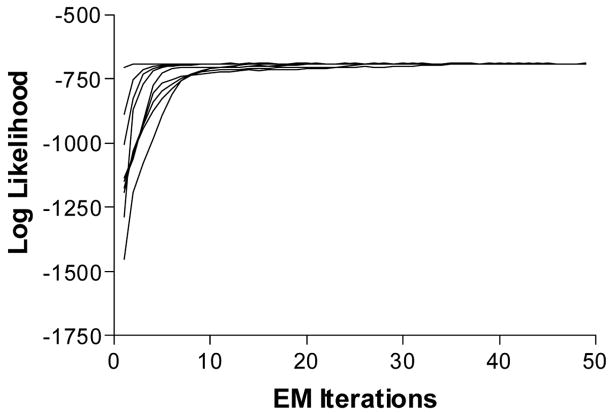

Figure 2 demonstrates the convergence of log-likelihood values for a particular data set when the 50 EM iterations are started from 9 different positions. The log-likelihood values were approximated via Monte Carlo integration

where . In any particular example, of course, the number of EM iterations, the number of samples T used to approximate the integrals, and the choice of starting guesses will depend on the experimental design and the complexity of the model.
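A plain Monte Carlo approximation of the log-likelihood can be sketched by drawing parameters from the current population mixture and averaging the first-stage density over those draws. The sketch below is an illustration under stated assumptions: the one-compartment model with additive normal error is assumed, θ = (V, k) is treated as a two-component mixture over both parameters, and the function name `mc_log_likelihood` is hypothetical.

```python
import numpy as np

def mc_log_likelihood(Y, times, dose, weights, means, covs, sigma, T, rng):
    """Monte Carlo approximation of the mixture-model log-likelihood.

    Y: (n, m) observations; times: (m,) sampling times.
    weights, means, covs: mixture parameters for theta = (V, k).
    sigma: standard deviation of the (assumed additive) within-subject error.
    """
    weights = np.asarray(weights, dtype=float)
    # Draw T parameter vectors from the population mixture.
    labels = rng.choice(len(weights), size=T, p=weights)
    theta = np.stack([rng.multivariate_normal(means[k], covs[k]) for k in labels])
    V, k = theta[:, 0], theta[:, 1]
    pred = (dose / V)[:, None] * np.exp(-k[:, None] * times[None, :])   # (T, m)
    resid = Y[:, None, :] - pred[None, :, :]                            # (n, T, m)
    m = times.size
    log_p = (-0.5 * np.sum(resid ** 2, axis=2) / sigma ** 2
             - 0.5 * m * np.log(2.0 * np.pi * sigma ** 2))              # (n, T)
    # Log of the average density per subject, computed stably.
    mx = log_p.max(axis=1, keepdims=True)
    log_mean = mx[:, 0] + np.log(np.mean(np.exp(log_p - mx), axis=1))
    return float(np.sum(log_mean))
```

Evaluating this quantity at the parameter values from successive EM iterations, with a fixed random seed, gives a trace that can be used to monitor convergence from different starting positions.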

7. Discussion

In this paper, an EM algorithm for maximum likelihood estimation of nonlinear random effects mixture models is presented that has application in pharmacokinetic/pharmacodynamic population modeling studies. It extends the previous work on the use of the EM algorithm for maximum likelihood estimation of nonlinear random effects models to the case of finite mixture models, and reinforces the practicability of using exact (no linearizing approximation) maximum likelihood estimation in PK/PD modeling studies (see also Kuhn and Lavielle (2005) for a stochastic EM variation). The parametric mixture model MLE approach presented also complements previous work on nonparametric Bayesian and smoothed nonparametric MLE, in addressing the increasingly important problem of identifying subpopulations with distinct PK/PD properties in drug development trials. We note that approximate maximum likelihood methods using mixture models are also available in NONMEM.

The EM algorithm has been used extensively for fitting linear mixture models in numerous applications in diverse fields of study. Even for linear problems involving mixtures of normal components, a number of challenges attend the use of the EM algorithm for maximum likelihood estimation (McLachlan and Peel, 2000) that are also relevant to the nonlinear random effects problem. These include: potential unboundedness of the likelihood for heteroscedastic covariance components; local maxima of the likelihood function; and choice of the number of mixing components. Application of the algorithm for nonlinear random effects mixture models presented here can be guided by the extensive work related to these issues for linear mixture modeling.

We have investigated the possible unboundedness of the likelihood for the example considered in this paper. If, for example, in the first component of the mixture, μ1 satisfies hi (μ1) = Yi for some i and Σ1 → 0, then the likelihood will tend to infinity, and the global maximizer will not exist. By restricting the covariance matrices Σk, k = 1,…, K to be equal (homoscedastic components), as is often done in mixture modeling, the unboundedness of the likelihood will be eliminated. In our example with heteroscedastic variance components, each individual has five error-associated observations, while the parameter space is of dimension two. The condition for likelihood singularity is therefore very unlikely to be satisfied.

Future work is also needed to extend the algorithm to include important practical cases involving more general error variance models and random effects covariates.

Fig. 2.

Convergence of log-likelihood by starting the EM algorithm from 9 positions.

Acknowledgments

This work was supported in part by National Institutes of Health grants P41-EB001978 and R01-GM068968.

Appendix

For the example in Section 6, as in other PK/PD problems, it is often reasonable to assume that the mechanism of genetic polymorphism applies to only part of the system, for example, drug metabolism or drug target. It is therefore desirable to partition the parameter θi into two components, one (αi) that follows a mixture of multivariate normals and the second (βi) defined by a single multivariate normal distribution: θi = {αi, βi}, where αi and βi are independent. The EM updates for this special case are given by:

and

The updates for {wk, 1 ≤ k ≤ K} and σ2 are the same as in Section 3.


References

- Beal SL, Sheiner LB. NONMEM User’s Guide, Part I. San Francisco: Division of Clinical Pharmacology, University of California; 1979.

- Davidian M, Gallant AR. The non-linear mixed effects model with a smooth random effects density. Biometrika. 1993;80:475–488.

- Davidian M, Giltinan M. Nonlinear Models for Repeated Measurement Data. Chapman and Hall; New York: 1995.

- Dempster AP, Laird N, Rubin DB. Maximum likelihood from incomplete data via the EM algorithm. J Roy Statist Soc B. 1977;39:1–38.

- Evans WE, Relling MV. Pharmacogenomics: translating functional genomics into rational therapeutics. Science. 1999;286:487–491. doi: 10.1126/science.286.5439.487.

- Geweke J. Bayesian inference in econometric models using Monte Carlo integration. Econometrica. 1989;57:1317–1340.

- Kuhn E, Lavielle M. Maximum likelihood estimation in nonlinear mixed effects models. Comput Stat Data Anal. 2005;49:1020–1038.

- Mallet A. A maximum likelihood estimation method for random coefficient regression models. Biometrika. 1986;73:645–656.

- McLachlan GJ, Peel D. Finite Mixture Models. Wiley; New York: 2000.

- Ng CM, Joshi A, Dedrick RL, Garovoy MR, Bauer RJ. Pharmacokinetic-pharmacodynamic-efficacy analysis of Efalizumab in patients with moderate to severe psoriasis. Pharm Res. 2005;77:1088–1100. doi: 10.1007/s11095-005-5642-4.

- Philippou A, Roussas G. Asymptotic normality of the maximum likelihood estimate in the independent but not identically distributed case. Ann Inst Stat Math. 1975;27:45–55.

- Rosner GL, Muller P. Bayesian population pharmacokinetic and pharmacodynamic analyses using mixture models. J Pharmacokinet Biopharm. 1997;25:209–234. doi: 10.1023/a:1025784113869.

- Schumitzky A. EM Algorithms and two stage methods in pharmacokinetic population analysis. In: D’Argenio DZ, editor. Advanced Methods of Pharmacokinetic and Pharmacodynamic Systems Analysis. II. Plenum Press; New York: 1995. pp. 145–160.

- Sheiner LB, Rosenberg B, Melmon KL. Modeling of individual pharmacokinetics for computer-aided drug dosing. Comput Biomed Res. 1972;5:441–459. doi: 10.1016/0010-4809(72)90051-1.

- Tierney L. Markov chains for exploring posterior distributions (with discussion). Ann Stat. 1994;22:1701–1762.

- Tseng P. An analysis of the EM algorithm and entropy-like proximal point methods. Math Oper Res. 2005;29:27–44.

- Wakefield JC, Walker SG. Bayesian nonparametric population models: formulation and comparison with likelihood approaches. J Pharmacokinet Biopharm. 1997;25:235–253. doi: 10.1023/a:1025736230707.

- Walker S. An EM algorithm for nonlinear random effects models. Biometrics. 1996;52:934–944.

- Wu CF. On the convergence properties of the EM algorithm. Ann Stat. 1983;11:95–103.