Abstract

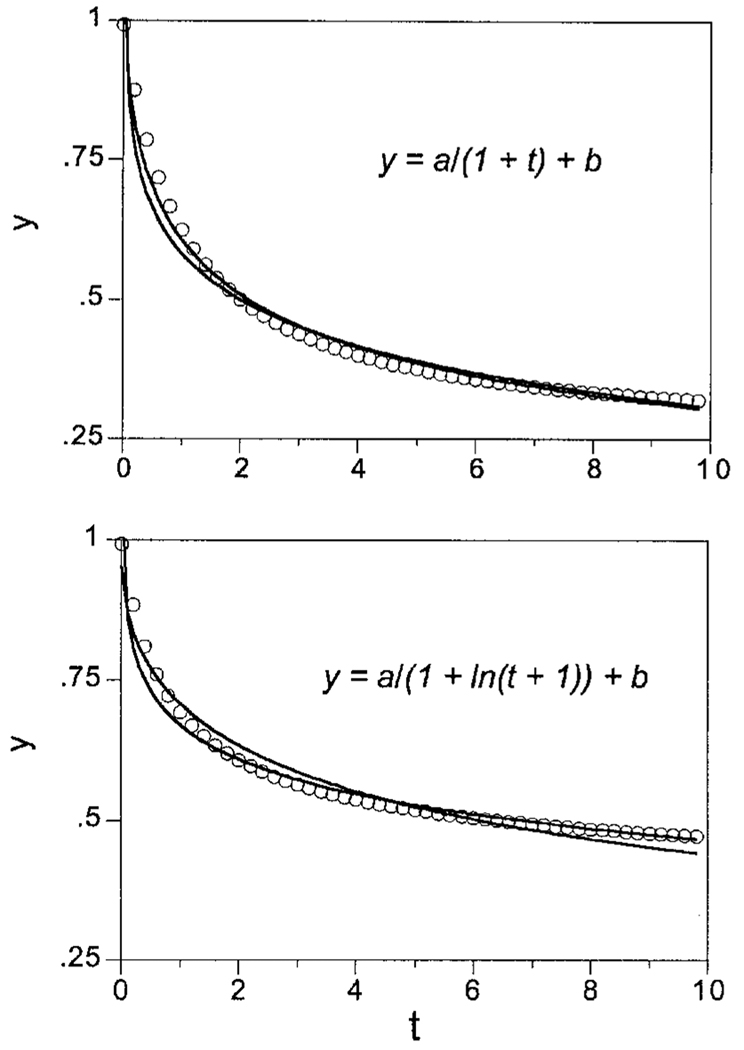

An integrative account of short-term memory is based on data from pigeons trained to report the majority color in a sequence of lights. Performance showed strong recency effects, was invariant over changes in the interstimulus interval, and improved with increases in the intertrial interval. A compound model of binomial variance around geometrically decreasing memory described the data; a logit transformation rendered it isomorphic with other memory models. The model was generalized for variance in the parameters, where it was shown that averaging exponential and power functions from individuals or items with different decay rates generates new functions that are hyperbolic in time and in log time, respectively. The compound model provides a unified treatment of both the accrual and the dissipation of memory and is consistent with data from various experiments, including the choose-short bias in delayed recall, multielement stimuli, and Rubin and Wenzel’s (1996) meta-analyses of forgetting.

When given a phone number but no pencil, we would be unwise to speak of temperatures or batting averages until we have secured the number. Subsequent input overwrites information in short-term store. This is called retroactive interference. It is sometimes a feature, rather than a bug, since the value of information usually decreases with its age (J. R. Anderson & Schooler, 1991; Kraemer & Golding, 1997). Enduring memories are often counterproductive, be they phone numbers, quality of foraging patches (Belisle & Cresswell, 1997), or identity of prey (Couvillon, Arincorayan, & Bitterman, 1998; Johnson, Rissing, & Killeen, 1994). This paper investigates short-term memory in a simple animal that could be subjected to many trials of stimulation and report, but its analyses are applicable to the study of forgetting generally. The paper exploits the data to develop a trace-decay/interference model of several phenomena, including list length effects and the choose-short effect. The model has affinities with many in the literature; its novelty lies in the embedding of a model of forgetting within a decision theory framework. A case is made for the representation of variability by the logistic distribution and, in particular, for the logit transformation of recall/recognition probabilities. Exponential and power decay functions are shown to be special cases of a general rate equation and are generalized to multielement stimuli in which only one element of the complement, or all elements, are necessary for recall. It is shown how the form of the average forgetting function may arise from the averaging of memory traces with variable decay parameters, and examples are given for the exponential and power functions. By way of introduction, the experimental paradigm and companion model are previewed.

The Experiment

Alsop and Honig (1991) demonstrated recency effects in visual short-term memory by flashing a center key-light five times and having pigeons judge whether it was more often red or blue. Accuracy decreased when instances of the minority color occurred toward the end of the list. Machado and Cevik (1997) flashed combinations of three colors eight times on a central key, and pigeons discriminated which color had been presented least frequently. The generally accurate performances showed both recency and primacy effects. The present experiments use a similar paradigm to extend this literature, flashing a series of color elements at pigeons and asking them to vote whether they saw more red or green.

The Compound Model

The compound model has three parts: a forgetting function that reflects interference or decay, a logistic shell that converts memorial strength to probability correct, and a transformation that deals with variance in the parameters of the model.

Writing, rewriting, and overwriting

Imagine that short-term memory is a bulletin board that accepts only index cards. The size of the card corresponds to its information content, but in this scenario 3 × 5 cards are preferred. Tack your card randomly on the board. What is the probability that you will obscure a particular prior card? It is proportional to the area of the card divided by the area of the board. (This assumes all-or-none occlusion; the gist of the argument remains the same for partial overwriting.) Call that probability $q$. Two other people post cards after yours. The probability that the first one will obscure your card is $q$. The probability that your card will escape the first but succumb to the second is $(1 - q)q$. The probability of surviving $n - 1$ successive postings only to succumb to the $n$th is the geometric progression $q(1 - q)^{n-1}$. This is the retroactive interference component. The probability that you will be able to go back to the board and successfully read out what you posted after $n$ subsequent postings is $f(n) = (1 - q)^n$. Discouraged, you decide to post multiple images of the same card. If they are posted randomly on the board, the proportion of the board filled with your information increases as $1 - (1 - q)^m$, from which level it will decrease as others subsequently post their own cards.
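The bulletin-board argument can be checked numerically. The following Python sketch is illustrative only: the function names and the value q = 0.1 are assumptions made for the example, not quantities taken from the experiments.

```python
def survival(q: float, n: int) -> float:
    """Probability that a card is still readable after n later postings."""
    return (1.0 - q) ** n


def overwritten_on(q: float, n: int) -> float:
    """Probability of surviving n - 1 postings and being covered by the nth."""
    return q * (1.0 - q) ** (n - 1)


def coverage(q: float, m: int) -> float:
    """Proportion of the board carrying your information after m random copies."""
    return 1.0 - (1.0 - q) ** m


q = 0.1  # hypothetical ratio of card area to board area
for n in range(1, 6):
    # Columns: postings so far, chance of being covered on this posting,
    # chance of still being readable after it.
    print(n, round(overwritten_on(q, n), 4), round(survival(q, n), 4))
```

The probabilities of being overwritten on postings 1 through n, plus the probability of surviving all n, sum to 1, which is a quick consistency check on the geometric form.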

Variability

The experiment is repeated 100 times. A frequency histogram of the number of times you can read your card on the nth trial will exemplify the binomial distribution with parameters 100 and f(n). There may be additional sources of variance, such as encoding failure: the tack didn’t stick, you reversed the card, and so forth. The decision component incorporates variance by embedding the forgetting function in a logistic approximation to the binomial.
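As a rough illustration of the binomial variability described here, the sketch below computes the distribution of successful readouts over 100 repetitions. The values of q and n are hypothetical, chosen only to make the example concrete.

```python
from math import comb


def binomial_pmf(k: int, trials: int, p: float) -> float:
    """Probability of exactly k successes in `trials` independent attempts."""
    return comb(trials, k) * p ** k * (1.0 - p) ** (trials - k)


q, n, trials = 0.1, 3, 100       # hypothetical overwriting probability and lag
p_read = (1.0 - q) ** n          # f(n): chance the card is readable after n postings

pmf = [binomial_pmf(k, trials, p_read) for k in range(trials + 1)]
mean = sum(k * pk for k, pk in enumerate(pmf))
variance = sum((k - mean) ** 2 * pk for k, pk in enumerate(pmf))

# The mean should equal trials * f(n) and the variance trials * f(n) * (1 - f(n)).
print(round(mean, 4), round(variance, 4))
```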

Averaging

In another scenario, on different trials the cards are of a uniform but nonstandard size: All of the cards on the second trial are 3.5 × 5, all on the third trial are 3 × 4, and so on. The probability q has itself become a random variable. This corresponds to averaging data over trials in which the information content of the target item or the distractors is not perfectly equated, or of averaging over subjects with different-sized bulletin boards (different short-term memory capacities) or different familiarities with the test item. The average forgetting functions are no longer geometric. It will be shown that they are types of hyperbolic functions, whose development and comparison to data constitutes the final contribution of the paper.
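The claim that averaging changes the shape of the forgetting function can be illustrated with a small numerical sketch. It assumes, purely for illustration, that decay rates are exponentially distributed across individuals or items; that distribution, the mean rate of 0.5, and the function names are assumptions of the example, not details taken from the text. Under this assumption the average of the exponential traces is the hyperbola 1/(1 + mean_rate × t).

```python
import math


def mean_exponential_trace(t: float, mean_rate: float, n_points: int = 20000) -> float:
    """Average exp(-rate * t) over exponentially distributed rates.

    Rates are taken at evenly spaced quantiles of the assumed distribution
    (a midpoint rule), so the result is deterministic.
    """
    total = 0.0
    for k in range(n_points):
        u = (k + 0.5) / n_points
        rate = -mean_rate * math.log(1.0 - u)  # inverse CDF of the exponential
        total += math.exp(-rate * t)
    return total / n_points


def hyperbola(t: float, mean_rate: float) -> float:
    """Closed-form average under the same assumption."""
    return 1.0 / (1.0 + mean_rate * t)


for t in (0.0, 1.0, 2.0, 5.0, 10.0):
    print(t, round(mean_exponential_trace(t, 0.5), 4), round(hyperbola(t, 0.5), 4))
```

Each individual trace is exponential, yet the two printed columns agree: the average falls off hyperbolically, more slowly at long delays than any single exponential with the mean rate.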

To provide grist for the model, durations of the interstimulus intervals (ISIs) and the intertrial intervals (ITIs) were manipulated in experiments testing pigeons’ ability to remember long strings of stimuli.

METHOD

The experiments involved pigeons’ judgments of whether a red or a green color occurred more often in a sequence of 12 sequentially presented elements. The analysis consisted of drawing influence curves that show the contribution of each element to the ultimate decision and thereby measure changes in memory of items with time. The technique is similar to that employed by Sadralodabai and Sorkin (1999) to study the influence of temporal position in an auditory stream on decision weights in pattern discrimination. The first experiment gathered a baseline, the second varied the ISI, and the third varied the ITI.

Subjects

Twelve common pigeons (Columba livia) with prior histories of experimentation were maintained at 80%–85% of their free-feeding weight. Six were assigned to Group A, and 6 to Group B.

Apparatus

Two Lehigh Valley (Laurel, MD) enclosures were exhausted by fans and perfused with noise at 72 dB SPL. The experimental chamber in both enclosures measured 31 cm front to back and 35 cm side to side, with the front panel containing four response keys, each 2.5 cm in diameter. Food hoppers were centrally located and offered milo grain for 1.8 sec as reinforcement. Three keys in Chamber A were arrayed horizontally, 8 cm center to center, 20 cm from the floor. A fourth key located 6 cm above the center key was not used. The center in-line key was the stimulus display, and the end keys were the response keys. The keys in Chamber B were arrayed as a diamond, with the outside (response) keys 12 cm apart and 21 cm from the floor. The top (stimulus) key was centrally located 24 cm from the floor. The bottom central key was not used.

Procedure

All the sessions started with the illumination of the center key with white light. A single peck to it activated the hopper, which was followed by the first ITI.

Training 1: Color-naming

A 12-sec ITI comprised 11 sec of darkness and ended with illumination of the houselight for 1 sec. At the end of the ITI, the center stimulus key was illuminated either red or green for 6 sec, whereafter the side response keys were illuminated white. A response to the left key was reinforced if the stimulus had been green, and a response to the right key if the stimulus had been red. Incorrect responses darkened the chamber for 2 sec. After either a reward or its omission, the next ITI commenced. There were 120 trials per session. For the first 2 sessions, a correction procedure replayed all the trials in which the subject had failed to earn reinforcement, leaving only the correct response key lit. For the next 2 sessions, the correction procedure remained in place without guidance and was thereafter discontinued. This categorization task is traditionally called zero-delay symbolic matching-to-sample. By 10 sessions, subjects were close to 100% accurate and were switched to the next training condition.

Training 2: An adaptive algorithm

The procedure was the same as above, except that the 6-sec trial was segmented into twelve 425-msec elements, any one of which could have a red or a green center-key light associated with it. There was a 75-msec ISI between each element. The elements were initially 100% green on the green-base trials and 100% red on the red-base trials. Response accuracy was evaluated in blocks of 10 trials, which initially contained half green-base trials and half red-base trials. A response was scored correct and reinforced if the bird pecked the left key on a trial that contained more than 6 green elements or the right key on a trial that contained more than 6 red elements. If accuracy was 100% in a block, the number of foil elements (a red element on a green-base trial and the converse) was incremented by 2 for the next block of 10 trials; if it was 90% (9 out of 10 correct), the number of foil elements was incremented by 1. Since each block of 10 trials contained 120 elements, this constituted a small and probabilistic adjustment in the proportion of foils on any trial. If the accuracy was 70%, the number of foils was decremented by 1, and if below that, by an additional 1. If the accuracy was 80%, no change was made, so that accuracy converged toward this value. On any one trial, the number of foil elements was never permitted to equal or exceed the number of base color elements, but otherwise the allocation of elements was random. Because the assignments were made to trials pooled over the block, any one trial could contain all base colors or could contain as many as 5 foil colors, even though the probability of a foil may have been, say, 30% for any one element when calculated over the 120 elements in the block. These contingencies held for the first 1,000 trials. Thereafter, the task was made slightly more difficult by increasing the number of foil elements by 1 after blocks of 80% accuracy.
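The block-by-block adjustment rule can be sketched as a small function. This is a reading of the procedure as described, not the original control program: the function name, the treatment of the 1,000-trial boundary, and the per-block cap of 50 foils (10 trials with at most 5 foils each) are assumptions.

```python
MAX_FOILS_PER_BLOCK = 50  # assumed cap: 10 trials x at most 5 foils per 12-element trial


def next_foil_count(foils: int, n_correct: int, trials_completed: int) -> int:
    """Return the number of foil elements for the next block of 10 trials.

    foils            -- foil elements in the block just finished
    n_correct        -- correct responses in that block (0-10)
    trials_completed -- total trials run so far in this condition
    """
    if n_correct == 10:
        step = 2
    elif n_correct == 9:
        step = 1
    elif n_correct == 8:
        # After the first 1,000 trials, 80% blocks also make the task harder.
        step = 1 if trials_completed >= 1000 else 0
    elif n_correct == 7:
        step = -1
    else:
        step = -2
    return max(0, min(MAX_FOILS_PER_BLOCK, foils + step))


print(next_foil_count(foils=30, n_correct=9, trials_completed=200))
```

Because the step is zero at 80% during the first 1,000 trials, accuracy is drawn toward that value from both sides, which is the convergence the procedure relies on.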

Bias to either response key would result in an increased number of reinforcers for those responses, enhancing that bias. Therefore, when the subjects received more reinforcers for one color response in a block, the next block would contain proportionately more trials with the other color dominant. This negative feedback maintained the overall proportion of reinforcers for either base at close to 50% and resulted in relatively unbiased responding. The Training 2 condition was held in force for 20 sessions.

Experiment 1 (baseline)

The procedure was the same as above, except that the number of foils per block was no longer adjusted but was held at 40 (33%) for all the trials except the first 10 of each session. The first 10 trials of each session contained only 36 foils; data from them were not recorded. If no response occurred within 10 sec, the trial was terminated, and after the ITI the same sequence of stimulus elements was replayed. All the pigeons served in this experiment, which lasted for 16 sessions, each comprising 13 blocks of 10 trials. All of the subsequent experimental conditions were identical to this baseline condition, except in the details noted.

Experiment 2 (ISI)

The ISI was increased from 75 to 425 msec, while keeping the stimulus durations constant at 425 msec. The ITI was increased to 20 sec to maintain the same proportion of ITI to trial duration. As is noted below, the ratio of cue duration to ITI has been found to be a powerful factor in discrimination, with smaller ratios supporting greater accuracies than do large ratios. Only Group A experienced this condition, which lasted for 20 sessions, each comprising 12 blocks of 10 trials.

Experiment 3 (ITI)

The ITI was increased to 30 sec, the last 1 sec of which contained the warning stimulus (houselight). Only Group B experienced this condition, which lasted for 20 sessions, each comprising 12 blocks of 10 trials.

RESULTS

Training 2

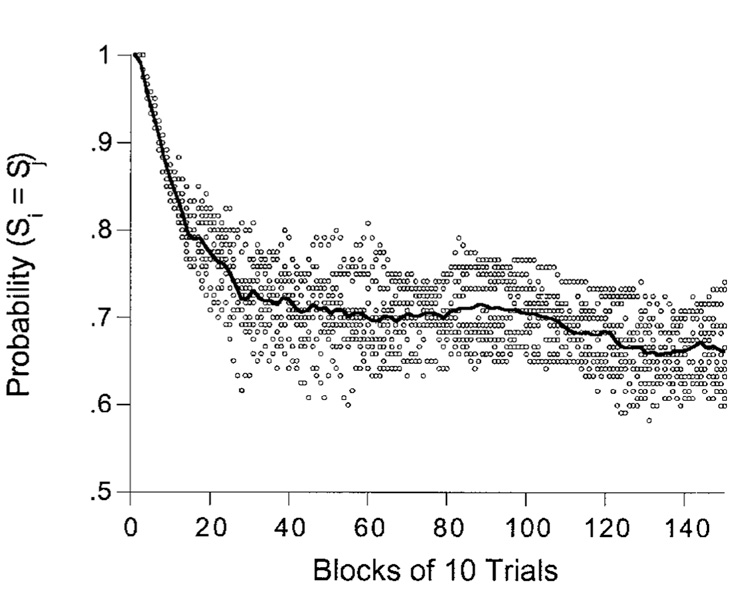

All the subjects learned the task, as can be seen from Figure 1, where the proportion of elements with the same base color is shown as a function of blocks of trials. The task is trivial when this proportion is 1.0, and impossible when it is .5. This proportion was automatically adjusted to keep accuracy around 75%–80%, which was maintained when approximately two thirds of the elements were of the same color.

Figure 1.

The probability that stimulus elements will have the same base color, shown as a function of trials. The program adjusted this probability so that accuracy settled to around 78%.

Experiment 1

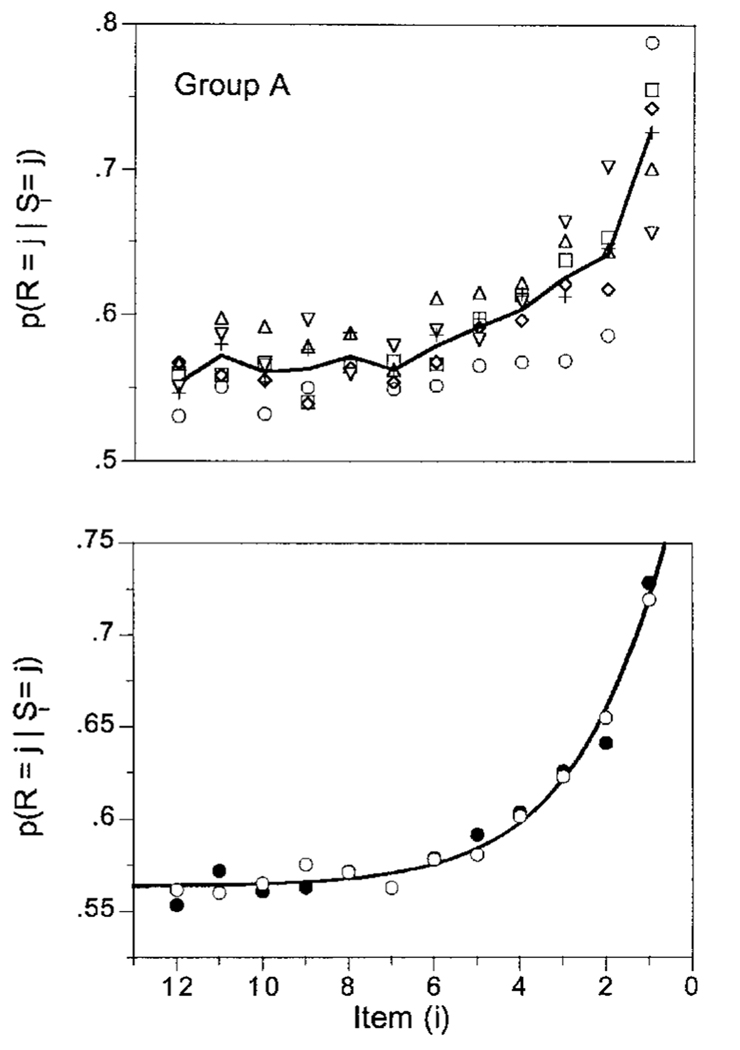

Trials with response latencies greater than 4 sec were deleted from analysis, which reduced the database by less than 2%. Group A was somewhat more accurate than Group B (80% vs. 75%), but not significantly so [t(10) = 1.52, p > .1]; the difference was due in part to Subject B6, whose accuracy was the lowest in this experiment (68%). The subjects made more errors when the foils occurred toward the end of a trial. The top panel of Figure 2 shows the probability of responding R (or G) when the element in the ith position was R (or G), respectively, for each of the subjects in Group A; the line runs through the average performance. The center panel contains the same information for Group B, and the bottom panel the average over all subjects. All the subjects except B6 (squares) were more greatly influenced by elements that occurred later in the list.

Figure 2.

The probability that the response was G (or R) given that the element in the ith position was G (or R). The curves in the top panels run through the averages of the data; the curve in the bottom panel was drawn by Equations 1 and 2.

Forgetting

Accuracy is less than perfect, and the control of the elements over the response varies as a function of their serial position. This may be because the information in the later elements blocks, or overwrites, that written by the earlier ones: retroactive interference. The average memory for a color depends on just how the influence of the elements changes as a function of their proximity to the end of the list, a change manifest in Figure 2. Suppose that each subsequent input decreases the memorial strength of a previous item by the factor q, as in the bulletin board example. This is an assumption of numerous models of short-term memory, including those of Estes (1950; Bower, 1994; Neimark & Estes, 1967), Heinemann (1983), and Roitblat (1983), and has been used as part of a model for visual information acquisition (Busey & Loftus, 1994). The last item will suffer no overwriting, the penultimate item an interference of q so that its weight will be 1 − q, and so on. The influence of an element—its weight in memory—forms a geometrically decreasing series with parameter q and with the index i running from the end of the list to its beginning. The average value of the ith weight is

$$w_i = (1 - q)^{i-1}, \qquad 0 < q < 1. \tag{1}$$

Memory may also decay spontaneously: It has been shown in numerous matching-to-sample experiments that the accuracy of animals kept in the dark after the sample will decrease as the delay lengthens. Still, forgetting is usually greater when the chamber is illuminated during the retention interval or other stimuli are interposed (Grant, 1988; Shimp & Moffitt, 1977; cf. Kendrick, Tranberg, & Rilling, 1981; Wilkie, Summers, & Spetch, 1981).

The mechanism of the recency effect may be due in part to the animals’ paying more attention to the cue as the trial nears its end, thus failing to encode the earliest elements. But these data make more sense looked back upon from the end of the trial where the curve is steepest, which is the vantage of the overwriting mechanism. All attentional models would look forward from the start of the interval and would predict more diffuse, uniform data with the passage of time. If, for instance, there was a constant probability of turning attention to the key over time, these influence curves would be a concave exponential-integral, not the convex exponential that they seem to be.

Deciding

The diagnosticity of each element is buffered by the 11 other elements in the list, so the effects shown in Figure 2 emerge only when data are averaged over many trials (here, approximately 2,000 per subject). It is therefore necessary to construct a model of the decision process. Assign the indices Si = 0 and +1 to the color elements R and G, respectively. (In general, those indices may be given values of MR and MG, indicating the amount of memory available to such elements, but any particular values will be absorbed into the other parameters, and 0 and +1 are chosen for transparency.) One decision rule is to respond “G” when the sum of the color indices is greater than some threshold, theta (θ, the criterion) and “R” otherwise. An appropriate criterion might be θ = 6, half-way between the number of green stimuli present on green-dominant trials (8) and the number present on red-dominant trials (4). If the pigeons followed this rule, performance would be perfect, and Figure 2 would show a horizontal line at the level of .67, the diagnosticity of any single element (see Appendix A).
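The value of .67 can be verified by brute-force enumeration. The sketch below assumes, as the text does for this argument, that every green-dominant trial has exactly 8 green elements and every red-dominant trial exactly 4, with the two trial types equally likely; the function names are illustrative.

```python
from fractions import Fraction
from itertools import combinations

N_ELEMENTS = 12


def sequences(n_green: int):
    """Yield every 12-element sequence (1 = green, 0 = red) with n_green greens."""
    for green_positions in combinations(range(N_ELEMENTS), n_green):
        greens = set(green_positions)
        yield [1 if pos in greens else 0 for pos in range(N_ELEMENTS)]


def diagnosticity(position: int, theta: int = 6) -> Fraction:
    """P(respond G | element at `position` is G) for an unweighted sum rule."""
    green_at_position = 0
    green_and_responded_g = 0
    for n_green in (8, 4):  # green-dominant and red-dominant trials
        for seq in sequences(n_green):
            if seq[position] == 1:
                green_at_position += 1
                if sum(seq) > theta:  # decision rule: respond G above criterion
                    green_and_responded_g += 1
    return Fraction(green_and_responded_g, green_at_position)


print([str(diagnosticity(pos)) for pos in range(N_ELEMENTS)])
```

Every position yields the same fraction, which is why an ideal observer would produce a flat influence curve.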

Designate the weight that each element has in the final decision as Wi, with i = 1 designating the last item, i = 2 the penultimate item, and so on. If, as assumed, the subjects attend only to green, the rule might be

$$\text{respond G if } \sum_{i=1}^{12} W_i S_i > \theta; \text{ otherwise respond R.}$$

The indicated sum is the memory of green. Roberts and Grant (1974) have shown that pigeons can integrate the information in sample stimuli for at least 8 sec. If the weights were all equal to 1, the average sum on green-base trials would be 8, and subjects would be perfectly accurate. This does not happen. Not only are the weights less than 1, they are apparently unequal (Figure 2).

What is the probability that a pigeon will respond G on a trial in which the ith stimulus is G? It is the probability that Wi plus the weighted sum of the other elements will carry the memory over the criterion. Both the elements, Si, and the weights, Wi, conceived as the probability of remembering the ith element, are random variables: Any particular stimulus element is either 0 or 1, with a mean on green-base trials of 2/3, a mean on red-base trials of 1/3, and an overall mean of 1/2. The animal will either remember that element (and thus add it to the sum) or not, with an average probability of remembering it being wi. The elements and weights are thus Bernoulli random variables, and the sum of their products over the 12 elements, Mi, forms a binomial distribution. With a large number of trials, it converges on a normal distribution. In Appendix B, the normal distribution is approximated by the logistic, and it is shown that the probability of a green response on trials in which the ith stimulus element is green and of a red response on trials in which the ith stimulus element is red is

$$p(G \mid S_i = G) = \left[1 + e^{-z_i}\right]^{-1} \tag{2}$$

with

$$z_i = \frac{\mu(N_i) - \theta}{s}.$$

In this model, $\mu(N_i)$ is the average memory of the dominant color given knowledge of the ith element and is a linear function of $w_i$ ($\mu(N_i) = a w_i + b$; see Equation B13), $\theta$ is the criterion above which such memories are called green, and below which they are called red, and $s$ is proportional to the standard deviation, $s = \sqrt{3}\,\sigma/\pi$. The scaling parameters involved in measuring $\mu(N_i)$ may be absorbed by the other parameters of the logistic, to give

$$z_i = \frac{w_i - \theta}{s}.$$

The rate of memory loss is q: As q approaches 0, the influence curves become horizontal, and as it approaches 1, the influence of the last item grows toward exclusivity. The sum of the weights for an arbitrarily long sequence (i → ∞) is 1/q. This may be thought of as the total attentional/memorial capacity that is available for elements of this type, that is, the size of the board relative to the size of the cards. Theta (θ) is the criterial evidence necessary for a green response. The variability of memory is s: The larger s is, the closer the influence curves will be to chance overall. The situation is symmetric for red elements. Equations 1 and 2 draw the curve through the average data in Figure 2, with q taking a value of .36, a value suggesting a memory capacity (1/q) of about three elements. Individual subjects showed substantial differences in the values of q; these will be discussed below.
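A minimal sketch of the compound model follows, assuming the geometric weights implied by the text (weight 1 for the last item, 1 − q for the penultimate, and so on) passed through a logistic function of (w − θ)/s. The value q = .36 is the one reported above; the values of theta and s are placeholders, since the fitted values are not given in this section.

```python
import math


def weights(q: float, n_items: int = 12) -> list:
    """Geometric weights, with i = 1 denoting the last item presented."""
    return [(1.0 - q) ** (i - 1) for i in range(1, n_items + 1)]


def influence_curve(q: float, theta: float, s: float, n_items: int = 12) -> list:
    """Probability that the response agrees with the element i items from the end."""
    return [1.0 / (1.0 + math.exp(-(w - theta) / s)) for w in weights(q, n_items)]


q = 0.36                 # reported for the average data
theta, s = -0.3, 0.75    # hypothetical placeholders, not fitted values

print("capacity 1/q:", round(1.0 / q, 2))
print("sum of 12 weights:", round(sum(weights(q)), 2))
for i, p in enumerate(influence_curve(q, theta, s), start=1):
    print(i, round(p, 3))  # i counts back from the end of the list
```

Raising s in this sketch flattens the curve toward .5, and lowering q flattens it toward a horizontal line, matching the roles the text assigns to the two parameters.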

As an alternative decision tactic, the pigeons might have subtracted the number of red elements remembered from the number of green and chosen green if the residue exceeded a criterion. This strategy is more efficient, an advantage that may be outweighed by its greater complexity. Because these alternative strategies are not distinguishable within the present experiments, the former, noncomparative strategy was assumed for simplicity in the experiments to be discussed below and in scenarios noted by Gaitan and Wixted (2000).

Experiment 2 (ISI)

In this experiment, the ISI was increased from 75 to 425 msec for the subjects in Group A. If the influence of each item decreases with the entry of the next item into memory, the serial-position curves should be invariant. If the influence decays with time, the apparent rate constants should increase by a factor of 1.7, since the trial duration has been increased from 6 to 10.2 sec, with 10.2/6 = 1.7.
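The factor of 1.7 follows directly from the trial timing. The short calculation below assumes that each of the 12 elements is followed by one ISI, which is the reading that reproduces the stated 6-sec and 10.2-sec trial durations; the names are illustrative.

```python
N_ELEMENTS = 12
ELEMENT_MS = 425


def trial_duration_ms(isi_ms: int) -> int:
    """Total trial duration when every element is followed by one ISI."""
    return N_ELEMENTS * (ELEMENT_MS + isi_ms)


baseline_ms = trial_duration_ms(75)    # Experiment 1
long_isi_ms = trial_duration_ms(425)   # Experiment 2
ratio = long_isi_ms / baseline_ms

print(baseline_ms / 1000, long_isi_ms / 1000, round(ratio, 2))
```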

Results

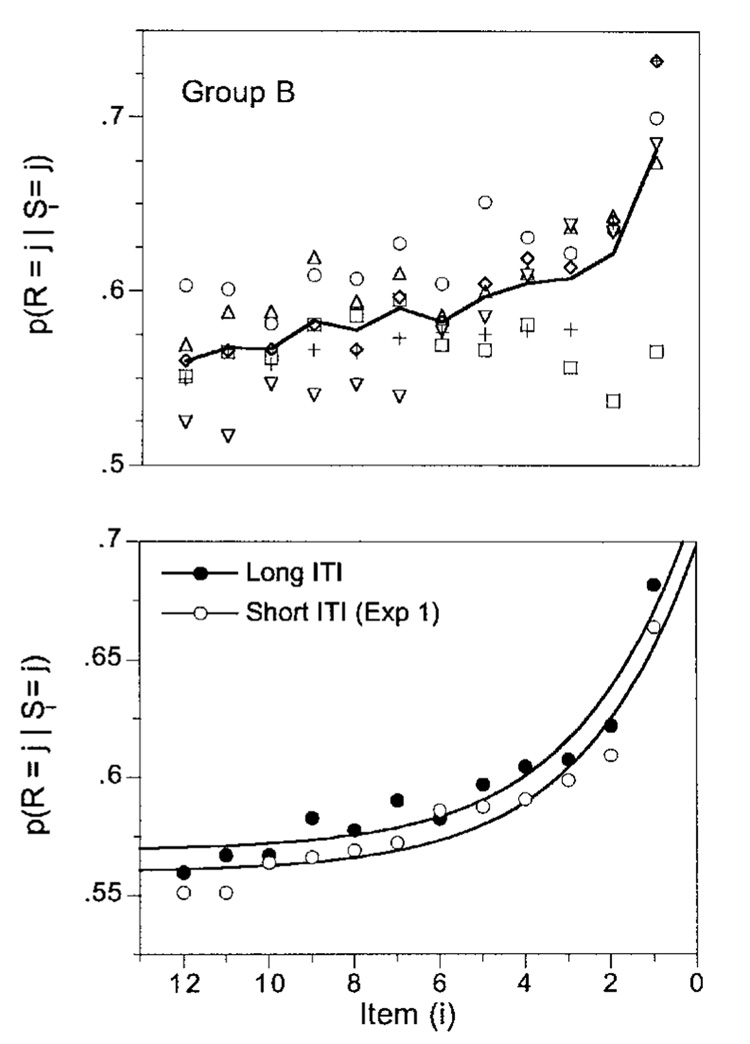

The influence curve is shown in the top panel of Figure 3. The median value of q for these subjects was .40 in Experiment 1 and .37 here; the change in mean values was not significant [matched t(5) = 0.19]. This lack of effect is even more evident in the bottom panel of Figure 3, where the influence curves for the two conditions are not visibly different.

Figure 3.

The probability that the response was G (or R) given that the element in the ith position was G (or R) in Experiment 2. The curve in the top panel runs through the average data; the curves in the bottom panel were drawn by Equations 1 and 2, with the filled symbols representing data from this experiment and the open symbols data from the same subjects in the baseline condition (Experiment 1).

Discussion

This is not the first experiment to show an effect of intervening items, but not of intervening time, before recall. Norman (1966; Waugh & Norman, 1965) found that humans’ memory for items within lists of digits decreased geometrically, with no effect of ISI on the rate of forgetting (the average q for his visually presented lists was .28).

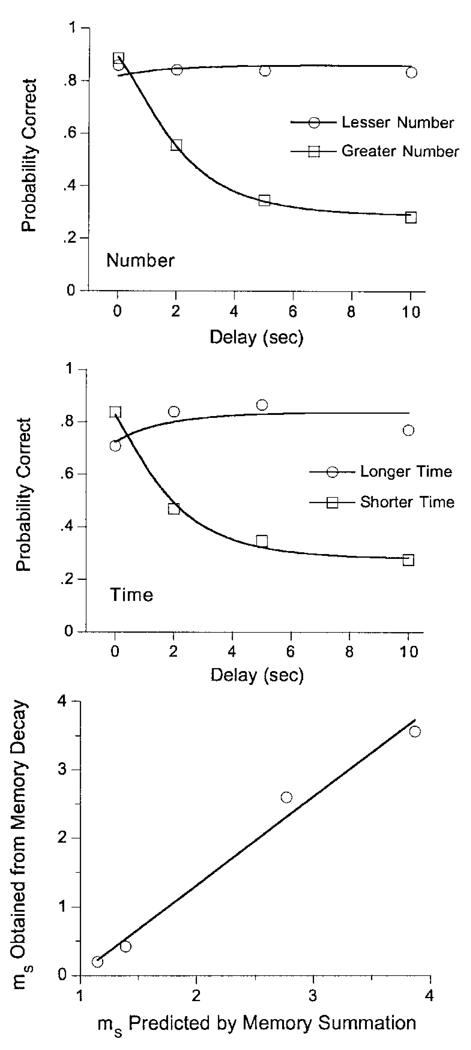

Other experimenters have found decay during the ISI (e.g., Young, Wasserman, Hilfers, & Dalrymple, 1999). Roberts (1972b) found a linear decrease in percent correct as a function of ISIs ranging from 0 to 10 sec. He described a model similar to the present one, but in which decay was a function of time, not of intervening items. In a nice experimental dissociation of memory for number of flashes versus rate of flashing of key lights, Roberts, Macuda, and Brodbeck (1995) trained pigeons to discriminate long versus short stimuli and, in another condition, a large number of flashes from a small number (see Figure 7 below). They concluded that in all cases, their subjects were counting the number of flashes, that their choices were based primarily on the most recent stimuli, and that the recency was time based rather than item based, because the relative impact of the final flashes increased with the interflash interval. Alsop and Honig (1991) came to a similar conclusion. The decrease in impact of early elements was attributed to a decrease in the apparent duration of the individual elements (Alsop & Honig, 1991) or in the number of counts representing them (Roberts et al., 1995), during the presentation of subsequent stimuli.

Figure 7.

The decrease in memory for number of flashes as a function of delay interval in two conditions (Roberts, Macuda, & Brodbeck, 1995). Such decay aids judgments of “fewer flashes” that mediated these choices, as is shown by their uniformly high accuracy. The curves are from Equations 3–6. The bottom panel shows the hypothetical memory for number at the beginning of the delay interval as predicted by the summation model (Equation 4; abscissae) and as implied by the compound model (Equations 3, 5, and 6; ordinates).

The changes in the ISI were smaller in the present study and in Norman’s (1966: 0.1–1.0 sec) than in those evidencing temporal decay. When memory is tested after delay, there is a decrease in performance even if the delay period is dark (although the decrease is greater in the light; Grant, 1988; Sherburne, Zentall, & Kaiser, 1998). It is likely that both overwriting and temporal decay are factors in forgetting, but with short ISIs the former is salient. McKone (1998) found that both factors affected repetition priming with words and nonwords, and Reitman (1974) found that both affected the forgetting of words when rehearsal was controlled. Wickelgren (1970) showed that both decay and interference affected memory of letters presented at different rates: Although forgetting was an exponential function of delay, rates of decay were faster for items presented at a higher rate. Wickelgren concluded that the decay depended on time but occurred at a higher rate during the presentation of an item. Wickelgren’s account is indistinguishable from ones in which there are dual sources of forgetting, temporal decay and event overwriting, with the balance naturally shifting toward overwriting as items are presented more rapidly.

The passage of time is not just confounded with the changes in the environment that occur during it; it is constituted by those changes. Time is not a cause but a vehicle of causes. Claims for pure temporal decay are claims of ignorance concerning external inputs that retroactively interfered with memory. Such claims are quickly challenged by others who hypostasize intervening causes (e.g., Neath & Nairne, 1995). Attempts to block covert rewriting of the target item with competing tasks merely replace rewriting with overwriting (e.g., Levy & Jowaisas, 1971). The issue is not decay versus interference but, rather, the source and rate of interference; if these are occult and homogenous in time, time itself serves as a convenient avatar of them. Hereafter, decay will be used when time is the argument in equations and interference when identified stimuli are used as the argument, without implying that time is a cause in the former case or that no decrease in memory occurs absent those stimuli in the latter case.

Experiment 3 (ITI)

In this experiment, the ITI was increased to 30 sec for subjects in Group B. This manipulation halved the rate of reinforcement in real time and, in the process, devalued the background as a predictor of reinforcement. Will this enhance attention and thus accuracy? The subjects and apparatus were the same as those reported in Experiment 1 for Group B; the condition lasted for 20 sessions.

Results

The longer ITI significantly improved performance, which increased from 75% to 79% [matched t(5) = 4.6]. Figure 4 shows that this increase was primarily due to an improvement in overall performance, rather than to a differential effect on the slope of the influence curves. There was some steepening of the influence curves in this condition, but this change was not significant, although it approached significance with B6 removed from the analysis [matched t(4) = 1.94, p > .05]. The curves through the average data in the bottom panel of Figure 4 share the same value of q = .33.

Figure 4.

The probability that the response was G (or R) given that the element in the ith position was G (or R) in Experiment 3. The curve in the top panel runs through the averages of the data; the curves in the bottom panel were drawn by Equations 1 and 2, with the filled symbols representing data from this experiment and the open symbols data from the same subjects in the baseline condition.

Discussion

In the present experiment, the increased ITI improved performance and did so equally for the early and the late elements. It is likely that it did so both by enhancing attention and by insulating the stimuli (or responses) of the previous trial from those of the contemporary trial, thus providing increased protection from proactive interference. A similar increase in accuracy with increasing ITI has been repeatedly found in delayed matching-to-sample experiments (e.g., Roberts & Kraemer, 1982, 1984), as well as with traditional paradigms with humans (e.g., Cermak, 1970). Grant and Roberts (1973) found that the interfering effects of the first of two stimuli on judging the color of the second could be abated by inserting a delay between the stimuli; although they called the delay an ISI, it functioned as would an ITI to reduce proactive interference.

APPLICATION, EXTENSION, AND DISCUSSION

The present results involve differential stimulus summation: Pigeons were asked whether the sum of red stimulus elements was greater than the sum of green elements. In other summation paradigms—for instance, duration discrimination—they may be asked whether the sum of one type of stimulus exceeds a criterion (e.g., Loftus & McLean, 1999; Meck & Church, 1983). Counting is summation with multiple criteria corresponding to successive numbers (Davis & Pérusse, 1988; Killeen & Taylor, 2000). Effects analogous to those reported here have been discussed under the rubric response summation (e.g., Aydin & Pearce, 1997).

The logistic/geometric provides a general model for summation studies: Equation 1 is a candidate model for discounting the events that are summed as a function of subsequent input, with Equation 2 capturing the decision process. This discussion begins by demonstrating the further utility of the logistic-geometric compound model for (1A) lists of varied stimuli with different patterns of presentation and (1B) repeated stimuli that are written to short-term memory and then overwritten during a retention interval. It then turns to (2) qualitative issues bearing on the interpretation of these data, (3) more detailed examination of the logistic shell and the related log-odds transformation, (4) the form of forgetting functions and their composition in a writing/overwriting model, and finally (5) the implications of averaging across different forgetting functions.

Writing and Overwriting

Heterogeneous Lists

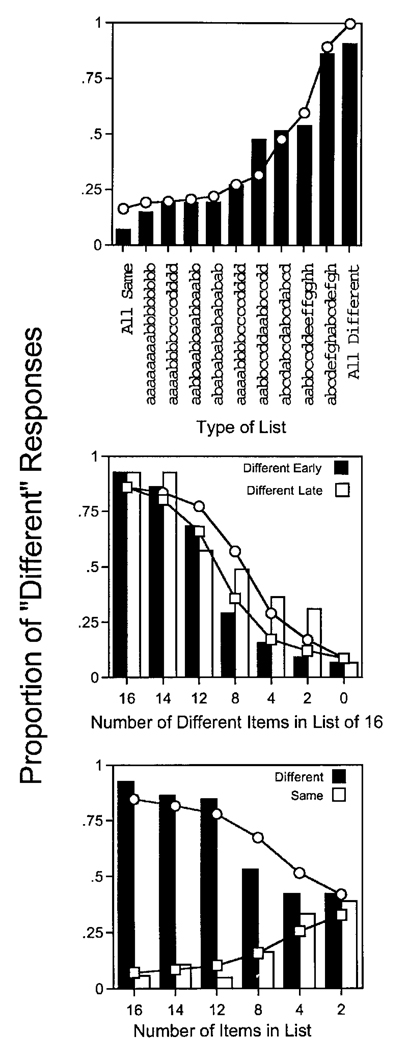

Young et al. (1999) trained pigeons to peck one screen location after the successive presentation of 16 identical icons and another after the presentation of 16 different icons, drawn from a pool of 24. After acquisition, they presented different patterns of similar and different icons: for instance, the first eight of one type, the second eight of a different type, four quartets of types, and so on. The various patterns are indicated on the x-axis in the top panel of Figure 5, and the resulting average proportions of different responses as bars above them.

Figure 5.

The average proportion of different responses made by 8 pigeons when presented a series of 16 icons that were the same or different according to the patterns indicated in each panel (Young, Wasserman, Hilfers, & Dalrymple, 1999). The data are represented by the bars, and the predictions of the compound model (Equations 1 and 3) by the connected symbols.

The compound model is engaged by assigning a value of +1 to a stimulus whenever it is presented for the first time on that list and of −1 when it is a repeat. Because we lack sufficient information to construct influence curves, the variable μ(Ni) in Equation 2 is replaced with mS = Σ wiSi (see Appendix B), where mS is the average memory for novelty at the start of the recall interval:

| (3) |

Equations 1 and 3, with parameters q = .1, θ = − .45, and σ = .37, draw the curve of prediction above the bars. As before,

In Experiment 2a, the authors varied the number of different items in the list, with the variation coming either early in the list (dark bars) or late in the list. The overwriting model predicts that whatever comes last will have a larger effect, and the data show that this is generally the case. The predictions, shown in the middle panel of Figure 5, required parameters of q = .05, θ = .06, and σ = .46.

In Experiment 2b, conducted on alternate days with 2a, Young et al. (1999) exposed the pigeons to lists of different lengths comprising items that were all the same or all different. List length was a strong controlling variable, with short lists much more difficult than long ones. This is predicted by the compound model only if the pigeons attend to both novelties and repetitions, instantiated in the model by adding (+1) to the cumulating evidence when a novelty is observed and subtracting from it (− 1) when a repetition is observed. So configured, the z-scores of short lists will be much closer to 0 than the z-scores of long lists. The data in the top two panels, where list length was always 16, also used this construction but are equally well fit by assuming attention either to novelties alone or to repetitions alone (in which case the ignored events receive weights of 0). The data from Experiment 2b permit us to infer that the subjects do attend to both, since short strings with many novelties are more difficult than long strings with few novelties, even though both may have the same memorial strength for novelty (but different strengths for repetition). The predictions, shown in the bottom panel of Figure 5, used the same parameters as those employed in the analysis of Experiment 2a, shown above them.
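The list-length argument can be illustrated with a minimal sketch. It rests on assumptions that are not confirmed by the text above: that the weights of Equation 1 decline geometrically from the most recent item (weight q) backward, that Equation 3 is a logistic function of (mS − θ)/σ, and that σ acts as the logistic scale. All function names are hypothetical.

```python
import math

def novelty_codes(items):
    """Code each item +1 on its first appearance in the list and -1 on a repeat."""
    seen = set()
    codes = []
    for item in items:
        codes.append(-1 if item in seen else 1)
        seen.add(item)
    return codes

def memory_strength(codes, q):
    """Geometrically discounted sum of codes (assumed form of Equation 1).
    The last item receives weight q, the one before it q * (1 - q), and so on."""
    n = len(codes)
    return sum(q * (1 - q) ** (n - 1 - i) * s for i, s in enumerate(codes))

def p_different(items, q, theta, sigma):
    """Logistic probability of a 'different' report (assumed form of Equation 3)."""
    m_s = memory_strength(novelty_codes(items), q)
    return 1.0 / (1.0 + math.exp(-(m_s - theta) / sigma))

# Parameters reported for Experiments 2a and 2b in the text.
q, theta, sigma = 0.05, 0.06, 0.46
for n in (2, 4, 8, 16):
    all_different = list(range(n))   # every item novel
    all_same = [0] * n               # one novel item followed by repeats
    print(n, round(p_different(all_different, q, theta, sigma), 3),
          round(p_different(all_same, q, theta, sigma), 3))
```

Under these assumptions, the two list types separate progressively as the list lengthens, which is the sense in which short lists are harder than long ones.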

Delayed Recall

Roberts et al. (1995) varied the number of flashes (F = 2 or 8) while holding display time constant (S = 4 sec) for one group of pigeons and, for another group, varied the display time (2 vs. 8 sec) while holding the number of flashes constant at 4. The animals were rewarded for judging which was greater (i.e., more frequent or of longer duration). Figure 6 shows their design for the stimuli. After training to criterion, they then tested memory for these stimuli at delays of up to 10 sec.

Figure 6.

The design of stimuli in the Roberts, Macuda, and Brodbeck (1995) experiment. On the right are listed the hypothetical memory strengths for number of flashes at the start of recall, as calculated from Equations 3–6.

The writing/overwriting model describes their results, assuming continuous forgetting through time with a rate constant of λ = 0.5/sec. Under this assumption, memory for items will increase as a cumulative exponential function of their display time (Loftus & McLean, 1999, provide a general model of stimulus input with a similar entailment). Since display time of the elements is constant, the (maximum) contribution of individual elements is set at 1. Their actual contribution to the memory of the stimulus at the start of the delay interval depends on their distance from it; in extended displays, the contribution from the first element has dissipated substantially by the start of the delay period (see, e.g., Figure 2). The cumulative contribution of the elements to memory at the start of the delay interval, mS, is

$$m_S = \sum_{i=1}^{n} e^{-\lambda t_i} \tag{4}$$

where ti measures the time from the end of the ith flash until the start of the delay interval. This initial value of memory for the target stimulus will be larger on trials with the greater number of stimuli (the value of n is larger) or frequency of stimuli (the values of t are smaller).

During the delay, memories continue to decay exponentially, and when the animals are queried, the memory traces will be tested against a fixed criterion. This aggregation and exponential decay of memorial strength was also assumed by Keen and Machado (1999; also see Roberts, 1972b) in a very similar model, although they did not have the elements begin to decay until the end of the presentation epoch. Whereas their data were indifferent to that choice, both consistency of mechanism and the data of Roberts and associates recommend the present version, in which decay is the same during both acquisition and retention.

The memory for the stimulus at various delays dj is

$$x_j = m_S\, e^{-\lambda d_j} \tag{5}$$

If this exceeds a criterion θ, the animal indicates “greater.”

Equation 3 may be used to predict the probability of responding “greater” given the greater (viz., longer/more numerous) stimulus. It is instantiated here as a logistic function of the distance of xj above threshold: Equation 3, with mS being the cumulation for the greater stimulus and

| (6G) |

The probability of responding “lesser” given the smaller stimulus is then a logistic function of the distance of xj below threshold: Equation 3, with mS being the cumulation for the lesser stimulus and

| (6L) |

To the extent memory decay continues through the interval, memory of the greater decays toward criterion, whereas memory of the lesser decays away from criterion, giving the latter a relative advantage. This provides a mechanism for the well-known choose-short effect (Spetch & Wilkie, 1983). It echoes an earlier model of accumulation and dissipation of memory offered by Roberts and Grant (1974) and is consistent with the data of Roberts et al. (1995), as shown by Figure 7. In fitting these curves, the rate of memory decay (λ in Equation 5) was set to 0.5/sec. The value of the criterion was fixed at θ = 1 for all conditions, and mS was a free parameter. Judgments corresponding to the circles in Figure 7 required a value of 0.6 for s in both conditions, whereas those corresponding to the squares required a value of 1.1 for s in both conditions. The smaller measures of dispersion are associated with the judgments that were aided if the animal was inattentive on a trial (the “fewer flashes” judgments). These were intrinsically easier/more accurate not only because they were helped by forgetting during the delay interval, but also because they were helped by inattention during the stimulus, and this is what the differences in s reflect.

If the model is accurate, it should predict the one remaining free parameter, the level of memory at the beginning of the delay interval, mS. It does this by using the obtained value of λ, 0.5/sec, in Equation 4. The bottom panel of Figure 7 shows that it succeeds in predicting the values of these parameters a priori, accounting for over 98% of their variance. (The nonzero intercept is a consequence of the choice of an arbitrary criterion θ = 1.) This ability to use a coherent model for both the storage (writing) and the report delay (overwriting) stages increases the degrees of freedom predicted without increasing the number used in constructing the mechanism, the primary advantage of hypothetical constructs such as short-term memory.
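The writing and overwriting stages described in this section can be sketched numerically. The sketch below is illustrative only: the flash timing is hypothetical (evenly spaced flashes in a 4-sec display), the logistic form of the decision rule is an assumed reading of Equations 3, 6G, and 6L, and the function names are invented for the example. The values λ = 0.5/sec, θ = 1, and s = 0.6 or 1.1 are those reported in the text.

```python
import math

LAMBDA = 0.5   # decay rate per second, as reported in the text
THETA = 1.0    # criterion, fixed at 1 in the text

def initial_memory(flash_end_times, display_end):
    """Writing stage: each flash contributes exp(-lambda * t_i), where t_i is
    the time from the end of flash i to the start of the delay interval."""
    return sum(math.exp(-LAMBDA * (display_end - t)) for t in flash_end_times)

def memory_at_delay(m_s, delay):
    """Overwriting stage: continued exponential decay during the delay."""
    return m_s * math.exp(-LAMBDA * delay)

def p_correct(m_s, delay, s, greater):
    """Logistic function of the signed distance of memory from the criterion
    (assumed form): above criterion for 'greater', below it for 'lesser'."""
    x = memory_at_delay(m_s, delay)
    z = (x - THETA) / s if greater else (THETA - x) / s
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical timing: F flashes ending at the midpoints of F equal slots.
def evenly_spaced(n_flashes, display=4.0):
    return [(k + 0.5) * display / n_flashes for k in range(n_flashes)]

few = initial_memory(evenly_spaced(2), 4.0)
many = initial_memory(evenly_spaced(8), 4.0)
for delay in (0, 2, 5, 10):
    print(delay,
          round(p_correct(many, delay, 1.1, greater=True), 3),
          round(p_correct(few, delay, 0.6, greater=False), 3))
```

With these hypothetical timings, accuracy on the greater stimulus falls with delay while accuracy on the lesser stimulus rises, which is the choose-short pattern the model is meant to capture.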

Trial Spacing Effects

Primacy versus recency

In the present experiments, there was no evidence of a primacy effect, in which the earliest items are recalled better than the intermediate items. Recency effects, such as those apparent in Figures 2–4, are almost universally found, whereas primacy effects are less common (Gaffan, 1992). Wright (1998, 1999; Wright & Rivera, 1997) has identified conditions that foster primacy effects (well-practiced lists containing unique items, delay between review and report that differentially affects visual and auditory list memories, etc.), conditions absent from the present study. Machado and Cevik (1997) found primacy effects when they made it impossible for pigeons to discriminate the relative frequency of stimuli on the basis of their most recent occurrences and attributed such primacy to enhanced salience of the earliest stimuli. Presence at the start of a list is one way of enhancing salience; others include physically emphasizing the stimulus (Shimp, 1976) or the response (Lieberman, Davidson, & Thomas, 1985); such marking also improves coupling to the reinforcer and, thus, learning in traditional learning (Reed, Chih-Ta, Aggleton, & Rawlins, 1991; Williams, 1991, 1999) and memory (Archer & Margolin, 1970) paradigms.

In the present experiment, there was massive proactive interference from prior lists, which eliminated any potential primacy effects (Grant, 1975). The improvement conferred by increasing the ITI was not differential for the first few items in the list. Generalization of the present overwriting model for primacy effects is therefore not assayed in this paper.

Proactive Interference

Stimuli presented before the to-be-remembered items may bias the subjects by preloading memory; this is called proactive interference. If the stimuli are random with respect to the current stimulus, such interference should eliminate any gains from primacy. Spetch and Sinha (1989; also see Kraemer & Roper, 1992) showed that a priming presentation of the to-be-remembered stimuli before a short stimulus impaired accuracy, whereas presentation before a long stimulus improved accuracy: Prior stimuli apparently summated with those to be remembered. Hampton, Shettleworth, and Westwood (1998) found that the amount of proactive interference varied with species and with whether or not observation of the to-be-remembered item was reinforced. Consummation of the reinforcer can itself fill memory, displacing prior stimuli and reducing interference. It can also block the memory of which response led to reinforcement (Killeen & Smith, 1984), reducing the effectiveness of frequent or extended reinforcement (Bizo, Kettle, & Killeen, 2001). These various effects are all consistent with the overwriting model, recognizing that the stimuli subjects are writing to memory may not be the ones the experimenter intended (Goldinger, 1996).

Spetch (1987) trained pigeons to judge long/short samples at a constant 10-sec delay and then tested at a variety of delays. For delays longer than 10 sec, she found the usual bias for the short stimulus—the choose-short effect. At delays shorter than 10 sec, however, the pigeons tended to call the short stimulus “long.” This is consistent with the overwriting model: Training under a 10-sec delay sets a criterion for reporting “long” stimuli quite low, owing to memory’s dissipation after 10 sec. When tested after brief delays, the memory for the short stimulus is much stronger than that modest criterion.

In asymmetric judgments, such as present/absent, many/few, long/short, passage of time or the events it contains will decrease the memory for the greater stimulus but is unlikely to increase the memory for the lesser stimulus, thus confounding the forgetting process with an apparent shift in bias. But the resulting performance reflects not so much a shift in bias (criterion) as a shift in memories of the greater stimulus toward the criterion and of the lesser one away from the criterion. If stimuli can be recoded onto a symmetric or unrelated set of memorial tags, this “bias” should be eliminated. In elegant studies, Grant and Spetch (1993a, 1993b) showed just this result: The choose-short effect is eliminated when other, non-analogical codes are made available to the subjects and when differential reinforcement encourages the use of such codes (Kelly, Spetch, & Grant, 1999).

As a trace cumulation/decumulation model of memory, the present theory shares the strengths and weaknesses of Staddon and Higa’s (1999a, 1999b) account of the choose-short effect. In particular, when the retention interval is signaled by a different stimulus than the ITI, the effect is largely abolished, with the probability of choosing short decreasing at about the same rate as that of choosing long (Zentall, 1999). These results would be consistent with trace theories if pigeons used decaying traces of the chamber illumination (rather than sample keylight) as the cue for their choices. Experimental tests of that rescue are lacking.

Wixted and associates (Dougherty & Wixted, 1996; Wixted, 1993) analyze the choose-short effect as a kind of presence/absence discrimination in which subjects respond on the basis of the evidence remembered and the evidence is a continuum of how much the stimuli seemed like a signal, with empty trials generally scoring lower than signal trials. Although some of their machinery is different (e.g., they assume that distributions of “present” and “absent” get more similar, rather than both decaying toward zero), many of their conclusions are similar to those presented here.

Context

These analyses focus on the number of events (or the time) that intervenes between a particular stimulus and the opportunity to report, but other factors are equally important. Roberts and Kraemer (1982) were among the first to emphasize the role of the ITI in modulating the level of performance, as was also seen in Experiment 3. Santiago and Wright (1984) vividly demonstrated how contextual effects change not only the level, but also the shape, of the serial position function. Impressive differences in level of forgetting occur depending on whether the delay is constant or is embedded in a set of different delays (White & Bunnell-McKenzie, 1985), or is similar to or different from the stimulus conditions during the ITI (Sherburne et al., 1998). Some of these effects might be attributed to changes in the quality of original encoding affecting initial memorial strength (mS, relative to the level of variability, s); examples are manipulations of attention by varying the duration (Roberts & Grant, 1974), observation (Urcuioli, 1985; Wilkie, 1983), marking (Archer & Margolin, 1970), and surprisingness (Maki, 1979) of the sample. Other effects will require other explanatory mechanisms, including the different kinds of encoding (Grant, Spetch, & Kelly, 1997; Riley, Cook, & Lamb, 1981; Santi, Bridson, & Ducharme, 1993; Shimp & Moffitt, 1977). The compound model may be of use in understanding some of this panoply of effects; to make it so requires the following elaboration.

THE COMPOUND MODEL

The Logistic Shell

The present model posits exponential changes in memorial strength, not exponential changes in the probability of a correct response. Memorial strength is not well captured by the unit interval on which probability resides. Two items with very different memorial strengths may still have a probability of recognition or recall arbitrarily close to 1.0: Probability is not an interval scale of strength. The logistic shell, and the logit transformation that is an intrinsic part of it, constitute a step toward such a scale (Luce, 1959). The compound model is a logistic shell around a forgetting function; its associated log-odds transform provides a candidate measure of memorial strength that is consistent with several intuitions, as will be outlined below.

The theory developed here may be applied to both recognition and recall experiments. Recall failure may be due either to decay of target stimulus traces or to lack of associated cues (handles) sufficient to access those traces (see, e.g., Tulving & Madigan, 1970). By handle is meant any cue, conception, or context that restricts the search space; this may be a prime, a category name, the first letter of the word, or a physical location associated with the target, either provided extrinsically or recovered through an intrinsic search strategy. The handles are provided by the experimenter in cued recall and by the subject in free recall; in recognition experiments, the target stimulus is provided, requiring the subject to recall a stimulus that would otherwise serve as a handle (a name, presence or absence in training list, etc.). Handles may decay in a manner similar to target stimuli (Tulving & Psotka, 1972). The compound model is viable for cued recall, recognition, and free recall, with the forgetting functions in those paradigms being conditional on recovery of target stimulus, handle, or both, respectively. This treatment is revisited in the section on episodic theory, below.

Paths to the Logit

Ad hoc

If the probability p of an outcome is .80, in the course of 100 samples we expect to observe, on the average, 80 favorable outcomes. The odds for such an outcome are 80/20 = p/(1 − p) = 4/1, and the odds against it are 1/4. The “odds” transformation maps probability from the symmetric unit interval to the positive continuum. Odds are intrinsically skewed: 4/1 is farther from indifference (1/1) than is 1/4, even though the distinction between favorable and unfavorable may signify an arbitrary assignment of 0 or 1, heads or tails, to an event. The log-odds transformation carries probability from the unit interval through the positive continuum of odds to the whole continuum, providing a symmetric map for probabilities centered at 0 when p is .50:

$$\Lambda_b[p] = \log_b\!\left[\frac{p}{1-p}\right] \tag{7}$$

Here, the capital lambda-sub-b signifies the log-odds ratio of p, using logarithms to base b. When b = e = 2.718… (that is, when natural logarithms are employed), the log-odds is called the logit transformation. The use of different logarithms merely changes the scale of the log-odds (e.g., Λe[p] = loge[10] × Λ10[p]). White (1985) found that an equation of the form

| (8) |

provided a good description of pigeon short-term memory, with $f(t) = e^{-\lambda t}$ (also see Alsop, 1991). When memorial strength, m, is zero (say, at some extremely remote point in time), the probability of a correct response, p∞, is chance. It follows that c must equal the negative log odds of p∞. When t = 0, memory must be at its original level. Therefore, if f(0) = 1,

| (9) |

The value of p∞ is not necessarily equal to the inverse of the number of choices available. A bias toward one or another response will be reflected in changes in c and, thus, in the probability of being correct by chance for that response.

The logit transformation is a monotonic function of $m_S f(t)$. In the case in which $f(t) = e^{-\lambda t}$, Loftus and Bamber (1990) and Bogartz (1990) have shown that Equation 9 entails that forgetting rates are independent of degree of original learning. Allerup and Ebro (1998) provide additional empirical arguments for the log-odds transformation; Rasch (1960) bases a general theory of measurement on it.
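The properties of the log-odds transformation described in this subsection (symmetry about p = .50 and a change of base that only rescales the measure) can be checked with a short sketch. The function name `log_odds` is hypothetical, chosen for this illustration.

```python
import math

def log_odds(p, base=math.e):
    """Log-odds of probability p, using logarithms to the given base."""
    return math.log(p / (1.0 - p), base)

# Odds of 4/1 and 1/4 are skewed, but their logits are symmetric about 0.
print(log_odds(0.80), log_odds(0.20))

# Indifference (p = .50) maps to 0.
print(log_odds(0.50))

# Changing the base rescales the measure by a constant: ln(10) * base-10 log-odds.
print(log_odds(0.80), math.log(10) * log_odds(0.80, base=10))
```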

In the case of acquisition, p0 is the initial probability of being correct by chance, pmax is the asymptotic accuracy (often, approximately 1.0), f′(t) is some acquisition function, such as $f'(t) = 1 - e^{-\lambda t}$, and

| (10) |

Signal detection theory/Thurstone models

The traditional signal detection theory (SDT) exegesis interprets detectability/memorability indices as normalized differences between mean stimulus positions on a likelihood axis that describes distributions of samples. The currently present or most recently presented stimulus provides evidence for the hypothesis of R (or G). To the extent that the stimulus is clear and well remembered, the evidence is strong, and the corresponding position on the axis (x) is extreme. The observer sets a criterion on the likelihood axis and responds on the basis of whether the sample exceeds or falls short of the criterion. The criterion may be moved along the decision axis to bias reporting toward one stimulus or the other. The underlying distributions are often assumed to be normal but are not empirically distinguishable from logistic functions. It was this Thurstonian paradigm that motivated the logistic model employed to analyze the present data.

Calculate the log-odds of a logistic process by dividing Equation 3 by its complement and taking the natural logarithm of that ratio. The result is

Thus, the logit is the z-score of an underlying logistic distribution.
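The algebra behind this step can be written out under one assumption: that Equation 3 has the standard logistic form, with z denoting the distance of the mean from the criterion in units of the logistic scale. The symbol z is introduced here for illustration only.

```latex
\begin{align*}
p &= \frac{1}{1 + e^{-z}}, &
1 - p &= \frac{e^{-z}}{1 + e^{-z}}, \\[4pt]
\frac{p}{1 - p} &= e^{z}, &
\Lambda_e[p] &= \ln\!\left[\frac{p}{1-p}\right] = z .
\end{align*}
```

On this reading, the odds are an exponential function of the standardized distance, and the natural-log odds return that distance unchanged.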

When the logit is inferred from choice/detection data, it is overdetermined. Redundant parameters are removed by assigning the origin and scale of the discriminability index so that the mean of one distribution (e.g., that for G) is 0 and the standard deviation is the unit, reducing the model to

where m is the distance of the R stimulus above the G stimulus in units of variability and c is the criterion. If memory decreases with time, this is equivalent to Equation 8.

The use of z-scores to represent forgetting was recommended by Bahrick (1965), who christened such transformed units ebbs, both memorializing Ebbinghaus and characterizing the typical fate of memories. In terms of Equation 9,

A disadvantage of this representation is that when asymptotic guessing probabilities are arbitrarily close to 0, their logits will be arbitrarily large negative numbers, causing substantial variability in the ebb owing to the logit’s amplification of data that are near their floor and leading to substantial measurement error. In these cases, stipulation of some standard floor such as Λ(.01) will stabilize the measure while having little negative effect on its functioning in the measurable range of performance.

Davison and Nevin (1999) have unified earlier treatments of stimulus and response discrimination to provide a general stimulus–response detection theory. Their analysis takes the log-odds of choice probabilities as the primary dependent variable. Because traditional SDT converges on this model, as was shown above, it is possible to retroinfer the conceptual impedimenta of SDT as a mechanism for Davison and Nevin’s more empirical approach. Conversely, it is possible to develop more effective and parsimonious SDT models by starting from Davison and Nevin’s reinforcement-based theory, which promises advantages in dealing with bias.

White and Wixted (1999) crafted an SDT model of memory in which the odds of responding, say, R equal the expected ratio of densities of logistic distributions situated m relative units apart, multiplied by the obtained odds of reinforcement for an R versus a G response. Although it lacks closed-form solutions, White and Wixted’s model has the advantage of letting the bias evolve as the organism accrues experience with the stimuli and associated reinforcers; this provides a natural bridge between learning theories and signal detectability theories and thus engages additional empirical degrees of constraint on the learning of discriminations.

Race models

Race models predict response probabilities and latencies as the outcome of two concurrent stochastic processes, with the one that happens to reach its criterion soonest being the one that determines the response and its latency. Link (1992) developed a comprehensive race model based on the Poisson process, which he called wave theory. He derived the prediction that the log-odds of making one of two responses will be proportional to the memorial strength—essentially, Equation 8.

The compound model is a race model with interference/decay: It is essentially a race/erase model. In the race model, evidence favoring one or the other alternative accumulates with each step, as in an add–subtract counter, until a criterion is reached or (as is the case for all of the paradigms considered here) until the trial ends. If rate of forgetting were zero, the compound model would be a race model pure and simple. But with each new step, there is also a decrease in memorial strength toward zero. If the steps are clocked by input, it is called interference; if by time, decay. In either case, some gains toward the criterion are erased. During stimulus presentation, information accumulates much faster than it dissipates, and the race process is dominant; during recall delays, the erase process dominates. The present treatment does not consider latency effects, but access to them via race models is straightforward. The race/erase model will be revisited below.
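The race/erase idea can be sketched as an add–subtract counter whose running total shrinks at every step. This is an illustrative simplification rather than the fitted model: the update rule, the erase fraction `q`, and the function name are assumptions made for the example.

```python
def race_erase(steps, q):
    """Add-subtract counter with erasure.
    steps: evidence per step (+1 for one alternative, -1 for the other, 0 for none).
    q: fraction of the running total erased at each step (0 gives a pure race)."""
    m = 0.0
    trace = []
    for s in steps:
        m = (1.0 - q) * m + s   # erase part of the old total, then add new evidence
        trace.append(m)
    return trace

# With no erasure the counter simply tallies the evidence.
print(race_erase([1, 1, -1, 1], q=0.0))

# With erasure, later events dominate: the same events in reverse order
# leave the counter on opposite sides of zero.
print(race_erase([-1, -1, 1, 1], q=0.5)[-1], race_erase([1, 1, -1, -1], q=0.5)[-1])

# During an empty retention interval only the erase process operates.
print(race_erase([1, 0, 0], q=0.5))
```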

Episodic theory

Memorial variance may arise for composite stimuli having a congeries of features, each element of which decays independently (e.g., Spear, 1978); Goldinger (1998) provides an excellent review. Powerful multitrace episodic theories are available, but these often require simulation for their application (e.g., MINERVA; Hintzman, 1986). Here, a few special cases with closed-form solutions are considered.

•If memory fails when the first (or nth, or last) element is forgotten, probability of a correct response is an extreme value function of time. Consider first the case in which all of n elements are necessary for a correct response. If the probability of an element’s being available at time t is $f(t) = e^{-\lambda t}$, the probability that all will be available is the n-fold product of these probabilities: $p = e^{-\lambda n t}$. Increasing the number of elements necessary for successful performance increases the rate of decay by that factor.

If one particular feature suffices for recall, it clearly behooves the subject to attend to that feature, and increasingly so as the complexity of the stimulus increases. The alternatives are either fastest-extreme-value forgetting or probabilistic sampling of the correct cue, both inferior strategies.

•Consider a display with n features, only one of which suffices for recall, and exponential forgetting. If a subject randomly selects a feature to remember, the expected value of memorial strength of the correct feature is $e^{-\lambda t}/n$. If the subject attempts to remember all features, the memorial strength of the ensemble is $e^{-\lambda t n}$. This attend-to-everything strategy is superior for very brief recall intervals but becomes inferior to probabilistic selection of cues when $\lambda t > \ln(n)/(n - 1)$.

•The dominant strategy at all delay intervals is, of course, to attend to the distinguishing feature, if that can be known. The existence of sign stimuli and search images (Langley, 1996; Plaisted & Mackintosh, 1995) reflects this ecological pressure. Labels facilitate shape recognition by calling attention to distinguishing features (Daniel & Ellis, 1972). If the distinguishing element is the presence of a feature, animals naturally learn to attend to it, and discriminations are swift and robust; if the distinguishing element is the absence of a feature, attention lacks focus, and discrimination is labored and fragile (Dittrich & Lea, 1993; Hearst, 1991), as are attend-to-everything strategies in general.
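The comparison between random selection of one feature and the attend-to-everything strategy, and the delay at which their ranking reverses, can be verified with a short sketch. The function names are hypothetical; the expressions are those given in the text.

```python
import math

def select_one_feature(t, lam, n):
    """Expected strength when one of n features is picked at random to remember."""
    return math.exp(-lam * t) / n

def attend_to_all(t, lam, n):
    """Strength of the ensemble when all n features are held together."""
    return math.exp(-lam * t * n)

def crossover(lam, n):
    """Delay beyond which random selection beats attending to everything:
    lam * t = ln(n) / (n - 1)."""
    return math.log(n) / ((n - 1) * lam)

lam, n = 1.0, 5
t_star = crossover(lam, n)
for t in (0.5 * t_star, t_star, 2.0 * t_star):
    print(round(t, 3), attend_to_all(t, lam, n), select_one_feature(t, lam, n))
```

Before the crossover the ensemble is stronger; at the crossover the two are equal; afterward the randomly selected feature is stronger.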

•Consider next the case in which retrieval of any one of n correlated elements is sufficient for a correct response (for example, faces with several distinguishing features, or landscapes). If memorial decay occurs with constant probability over time, the probability that any one element will have failed by time t is $F(t) = 1 - e^{-\lambda t}$. The probability that all of n such elements will have failed by time t is the n-fold product of those probabilities; the probability of success is its complement:

$$p = 1 - \left(1 - e^{-\lambda t}\right)^{n} \tag{11}$$

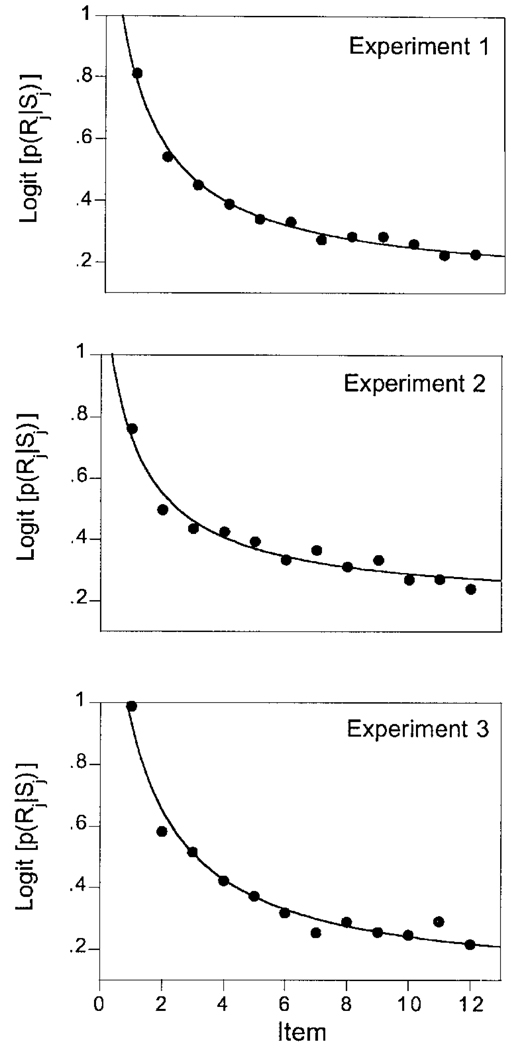

These forgetting functions are displayed in Figure 8. In the limit, the distribution of the largest extreme converges on the Gumbel distribution (exp{−exp[(t − μ) / s]}; Gumbel, 1958), whose form is independent of n and whose mean μ increases as the logarithm of n.

Figure 8.

Extreme value distributions. Bold curve: The elemental distribution, an exponential decay with a time constant of 1. Dashed curves: Probability of recall when that requires 2 or 5 such elements to be active. Continuous curves: Probability when any one of 1 (bold curve), 2, 3, 5, or 10 such elements suffice for recall (Equation 11). Superimposed on the rightmost curve is the best-fitting asymptotic Gumbel distribution.
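The two families of curves in Figure 8 can be generated from the constructions described above: the n-fold product of element availabilities when all elements are needed, and the complement of the n-fold product of failure probabilities when any one suffices. The sketch below uses hypothetical function names and also computes the half-life of the any-one-suffices function, which grows roughly with the logarithm of n.

```python
import math

def all_needed(t, lam, n):
    """Probability that all n elements survive to time t."""
    return math.exp(-lam * n * t)

def any_suffices(t, lam, n):
    """Probability that at least one of n elements survives to time t."""
    return 1.0 - (1.0 - math.exp(-lam * t)) ** n

def half_life_any(lam, n):
    """Time at which any_suffices falls to 0.5 (solved in closed form)."""
    return -math.log(1.0 - 0.5 ** (1.0 / n)) / lam

lam = 1.0  # time constant of 1, as in the Figure 8 caption
for n in (1, 2, 3, 5, 10):
    print(n, round(half_life_any(lam, n), 3), round(math.log(2) / (lam * n), 3))
```

The second column is the half-life when any one element suffices; the third is the half-life when all n elements are required, which shrinks in proportion to n.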

A relevant experiment was conducted by Bahrick, Bahrick, and Wittlinger (1975), who tested memory for high school classmates’ names and pictures over a span of 50 years. For the various cohorts in the study, the authors tested the ability to select a classmate’s portrait in the context of four foils (picture recognition), to select the one of five portraits that went with a name (picture matching), and to recall the names that went with various portraits (picture cued recall). They also tested the ability to select a classmate’s name in the context of four foils (name recognition), to select the one of five names that went with a picture (name matching), and to freely recall the names of classmates (free recall). Equation 9, with the decay function given by Equation 11 with a rate constant λ set to 0.05/year, provided an excellent description of the recognition and matching data. The number of inferred elements was n = 33 for pictures and 3 for names; this difference was reflected in a near-ceiling performance with pictures as stimuli over the first 35 years but a visible decrease in performance after 15 years when names were the stimuli.

Bahrick et al. (1975) found a much faster decline in free and picture-cued recall of names than in recognition and matching. They explained it as being due to the loss of mediating contextual cues. Consider in particular the case of a multielement stimulus in which one element (the handle) is necessary for recall but, given that element, any one of a panoply of other elements is sufficient. In this case, the rate-limiting factor in recall is the trace of the handle. The decrease in recall performance may be described as the product of its trace with the union of the others, $f(t) = e^{-\lambda t}[1 - (1 - e^{-\lambda t})^{n}]$, approximating the dashed curves in Figure 8. If the necessary handle is provided, the probability of correct recall will then be released to follow the course of the recognition and matching data that Bahrick and associates reported (the bracket in the equation; the rightmost curve in Figure 8). If either of two elements is necessary and any of n thereafter suffice, the forgetting function is

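$$f(t) = \left[1 - \left(1 - e^{-\lambda t}\right)^{2}\right]\left[1 - \left(1 - e^{-\lambda t}\right)^{n}\right],$$

and so on.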

Tulving and Psotka (1972) reported data that exemplified retroactive interference on free recall and release from that interference when categorical cues were provided. Their forgetting functions resemble the leftmost and rightmost curves in Figure 8. Bower, Thompson-Schill, and Tulving (1994) found significant facilitation of recall when the category of the response was from the same category as the cue and a systematic decrease in that facilitation as the diagnosticity of the cue categories was undermined. In both studies, the category handle provided access to a set of redundant cues, any one of which could prompt recall.

•The half-life of a memory will thus change with the number of its features, and the recall functions will go from singly inflected (viz., exponential decay) to doubly inflected (ogival), with increases in the number that are sufficient for a correct response. If all features are necessary, the half-life of a memory will decrease proportionately with the number of those features. Whereas the whole may be greater than the sum of its parts, so also will be its rate of decay.

•Figure 8 and the associated equations have been discussed as though they were direct predictions of recall probabilities, rather than predictions of memory strength to then be ensconced within the logistic shell. This was done for clarity. If the ordinates of Figure 8 are rescaled by multiplying by the (inferred) number of elements initially conditioned, the curves will trace the expected number of elements as a function of time. Parameters of the logistic can be chosen so that the functions of the ensconced model look like those shown in Figure 8, and different parameters permit the logistic to accommodate bias and nonzero chance probabilities.

•If a subject compares similar multielement memories from old and new populations by a differencing operation (the standard SDT assumption for differential judgments), or if subpopulations of attributes that are favorable and unfavorable to a response are later compared (e.g., Riccio, Rabinowitz, & Axelrod, 1994), the resulting distribution of strengths will be precisely logistic, since the difference of two independent standard Gumbel variates is the logistic variate (Evans, Hastings, & Peacock, 1993).
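The claim that differencing two Gumbel-distributed strengths yields a logistic variate can be checked by simulation. The sketch below is only an illustration: the sample size, seed, and function names are arbitrary choices, and standard (location 0, scale 1) variates are assumed.

```python
import math
import random


def standard_gumbel(rng):
    """Draw a standard Gumbel variate by inverting its distribution function."""
    u = rng.random()
    while u == 0.0:  # avoid log(0); random() returns values in [0, 1)
        u = rng.random()
    return -math.log(-math.log(u))


def logistic_cdf(x):
    """Distribution function of the standard logistic."""
    return 1.0 / (1.0 + math.exp(-x))


def empirical_cdf_of_difference(x, n_samples=100_000, seed=1):
    """Proportion of differences of two independent Gumbel draws that fall at or below x."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_samples):
        if standard_gumbel(rng) - standard_gumbel(rng) <= x:
            hits += 1
    return hits / n_samples


if __name__ == "__main__":
    for x in (-1.0, 0.0, 1.5):
        print(x, empirical_cdf_of_difference(x), logistic_cdf(x))
```

With samples of this size the empirical proportions should agree with the logistic distribution function to about two decimal places.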

It follows that Equation 9 with an appropriate forgetting function (e.g., Equation 1) can account for the forgetting of single-element stimuli and of multielement stimuli if the decision process involves differencing two similar populations of elements; for absolute judgments concerning multielement stimuli, Equation 11 or a variant should be embedded in Equation 9.

Averaging Logits

Respondents in binary tasks may be correct in two ways: In the present experiment, the pigeons could be correct both when they responded R and when they responded G. How should those probabilities be concatenated? At any one point in time, logits are a linear combination of memory and chance (Equation 9), so that averaged logits should fairly represent their population means (Estes, 1956; Appendix C). As the average of logarithms of probabilities, the average logit is equivalent to a well-known measure of detectability, the geometric mean of the log-odds of the probabilities, which under certain conditions is independent of bias (Appendix B):

| (12) |

It is also possible to average the constituent raw probabilities, but as nonlinear functions of underlying processes, they will be biased estimators of them (Bahrick, 1965). Whenever there are differences in initial memorial strength (mS) or bias (c), the logistic average over response alternatives gives a better estimate of the population parameters than does a probability average.
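A small numerical sketch may make the contrast concrete. It assumes, purely for illustration, that bias enters the two logits additively with opposite sign, so that the logit for a correct R response is m + b and that for a correct G response is m − b; the symbols m and b and the values used are hypothetical. Under that assumption the average logit recovers m exactly, whereas the logit of the averaged probabilities does not.

```python
import math


def logistic(x):
    """Map a logit to a probability."""
    return 1.0 / (1.0 + math.exp(-x))


def logit(p):
    """Log-odds of a probability."""
    return math.log(p / (1.0 - p))


def average_logit(p_r, p_g):
    """Mean of the two logits, equal to the log of the geometric mean of the two odds."""
    return 0.5 * (logit(p_r) + logit(p_g))


def logit_of_average(p_r, p_g):
    """Logit of the arithmetic mean of the raw probabilities."""
    return logit(0.5 * (p_r + p_g))


if __name__ == "__main__":
    m, b = 2.0, 1.5  # assumed strength and bias, in logit units
    p_r, p_g = logistic(m + b), logistic(m - b)
    print(average_logit(p_r, p_g), logit_of_average(p_r, p_g))
```

In this example the probability average underestimates the underlying strength whenever the bias is nonzero, and the two measures coincide when b = 0.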

Summary

There are many reasons for using a log-odds transformation of probabilities. Although the logit is one step farther removed from the data than is probability of recognition or recall, so also is memory, and various circumstances suggest that the logit is closer to an interval measure of memorial strength than are the probabilities on which it is based. It leads naturally to a decision-theoretic representation, parsing strength and bias into representation as memorial strength, mS, and criterion, c, and letting the decrease in strength with time be represented independently by f(t).

The Forgetting Function

The experiments reported here were different from those typically used to establish forgetting functions, since there were many elements both before and after any particular element that could interfere with its memory. Indeed, the situation is worse than the typical interference experiment, where the interfering items are often from a different domain or might be ignored if the subject is clever. Here, the interfering items are from the same stimulus domain, must be processed to perform the task, and will certainly affect accuracy. White (1985; Harper & White, 1997) found that events intervening between a stimulus and its recall disrupted recall and, furthermore, that the events caused the same percentage decrement wherever they were placed in the delay interval, an effect consistent with exponential forgetting. Young et al. (1999) varied ISI from 0 to 4 sec on lists of 16 novel or repeated stimuli and found a graded effect, with accuracy decreasing exponentially with ISI.

Geometric/Exponential Functions

Geometric decrease was chosen as a model of the recency process because it is consistent both with the present data and with other accounts of forgetting (e.g., Loftus, 1985; Machado & Cevik, 1997; McCarthy & White, 1987; Waugh & Norman, 1965). In the limit of many small increments, the geometric series converges to an exponential decay process. Exponential decays are appropriate models for continuous variables, such as the passage of time. The process was represented here as a geometric process in light of the results of Experiment 2, where the occurrence of subsequent stimuli decremented memory. The rate constant in exponential decay (λ) is related to the rate of a geometric decrease by the formula λ = −ln(1 − q)/Δ, with Δ being the ISI. When decay is slow, these are approximately equal (e.g., a q of .100 for stimuli presented once per second corresponds to a λ of 0.105/sec). The rate constants reported here correspond to values of approximately 0.5/item; since items were presented at a rate of around 2 items/sec (Δ = 0.5 sec), this implies a rate of decay on the order of λ = 1/sec.
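The conversion between the geometric rate q and the exponential rate constant λ is easily computed. The following sketch implements λ = −ln(1 − q)/Δ and its inverse; the function names are illustrative only.

```python
import math


def decay_rate_per_second(q, isi):
    """Exponential rate constant implied by a geometric loss q per item at spacing isi (sec)."""
    return -math.log(1.0 - q) / isi


def geometric_loss_per_item(lam, isi):
    """Inverse conversion: proportion lost per item for rate constant lam at spacing isi (sec)."""
    return 1.0 - math.exp(-lam * isi)


if __name__ == "__main__":
    print(round(decay_rate_per_second(0.100, 1.0), 3))  # the example in the text: 0.105
    print(geometric_loss_per_item(1.0, 0.5))            # loss per item at 1/sec and 2 items/sec
```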

Given a memory capacity of c items, each occupying a standard amount m of the capacity, the probability that a memory will survive presentation of a subsequent item equals the unoccupied proportion of memory available for the next item: (c − m)/c, or (1 − m/c) = 1 − q. The probability that the item will survive two subsequent events is that ratio squared. In general, recall of the nth item from the end of the list will decrease as $(1 - m/c)^{n-1}$. This geometric progression recapitulates the bulletin board analogy of the introduction; the average value of q = m/c = .36 in Experiment 1 entails an average capacity of short-term memory, c = m/q, of about three items of size m (the size of the index card in the story, the flash of color in the experiment).
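The geometric recency gradient and the implied capacity can be tabulated directly. The sketch below uses the average q = .36 from Experiment 1; the function names are illustrative, and capacity is expressed in units of the item size m.

```python
def survival_probability(n, q):
    """Probability that the nth item from the end survives the n - 1 items that follow it."""
    return (1.0 - q) ** (n - 1)


def capacity_in_items(q):
    """Capacity c expressed in items of size m, from q = m/c."""
    return 1.0 / q


if __name__ == "__main__":
    q = 0.36
    for n in range(1, 7):
        print(n, survival_probability(n, q))
    print(capacity_in_items(q))
```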

Read-out from memory is also an event, and if the card that is sampled is reposted to the board, it will erase memory of whatever it overlays. Such output interference is regularly found (e.g., M. C. Anderson, Bjork, & Bjork, 1994). More complex stimuli with multiple features should have correspondingly larger storage demands but may utilize multidimensional bulletin boards of greater capacities (Vaughan, 1984). As the item size decreases and n increases, the geometric model morphs into the exponential, and the periodic posting of index cards into a continuous spray of ink droplets.

The change in memory with respect to time

Although the geometric/exponential model provides a good account of the quantitative aspects of forgetting, other processes would also do so. To generalize this approach, consider the differential equation

| $\frac{dM_j}{dt} = -\lambda M_j$ | (13) |

where Mj is the memory for the jth stimulus/element and λ = 1/c. Solution of this equation yields the exponential decay function with parameter λ.

An advantage of the differential form is its greater ease of generalization. Consider, for example, an experiment in which various amounts of study time are given for each item in a list of L items. The more study time allowed for the jth item, the more strongly/often it will be written to memory. This is akin to multiple postings of images of the same card. The differential model for such storage is

During each infinitesimal epoch, writing will be to a new location with probability 1 − Mj/c and will overwrite images of itself with the complement of that probability. As Mj comes to fill memory, the change in its representation as a function of time goes to zero. This differential generates the standard concave exponential-integral equation often used as a model of learning.

Competition

Other items from the list are also being written to memory, however. Assume each has the same parameters as the target item (i.e., λ is constant, and all Mi = Mj). Then, each of these L − 1 items will overwrite an image of the jth item with a probability that is proportional to the area that the jth item occupies: Mj/c = λMj. Therefore, the change in memory at each epoch in time during the study phase is

| (14) |

A solution of this differential equation is

| (14′) |

This writing/overwriting function may be inserted in the logistic shell to give the probability of recalling the jth item. If the same process holds for all L items in a list, the average number of items recalled will be

| (15) |

There are three free parameters in this model: the rate parameter λ, the capacity of memory relative to the spread of the logistic, c/s, and the mean of the logistic relative to the spread, μ/s.
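One reading of the writing/overwriting account that is consistent with the verbal description can be set out as a short derivation. It is a reconstruction rather than a transcription of Equations 14, 14′, and 15: the unit writing rate and the initial condition $M_j(0) = 0$ are assumptions, chosen because they yield the asymptote c/L and an exponent that grows with total study time.

```latex
\begin{align}
% Assumed writing term: a new location is written with probability 1 - M_j/c
\frac{dM_j}{dt} &= 1 - \lambda M_j, \qquad \lambda = 1/c \\
% Overwriting by the other L - 1 items, each with probability \lambda M_j
\frac{dM_j}{dt} &= (1 - \lambda M_j) - (L - 1)\lambda M_j = 1 - L\lambda M_j \\
% Solution under the assumed initial condition M_j(0) = 0
M_j(t) &= \frac{c}{L}\left(1 - e^{-\lambda L t}\right) \\
% Logistic shell and expected number of items recalled from a list of L
\Lambda[p_j(t)] &= \frac{M_j(t) - \mu}{s}, \qquad
N(t) = \frac{L}{1 + e^{-(M_j(t) - \mu)/s}}
\end{align}
```

Written this way, the logit depends on the parameters only through λ, c/s, and μ/s, which matches the count of three free parameters.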

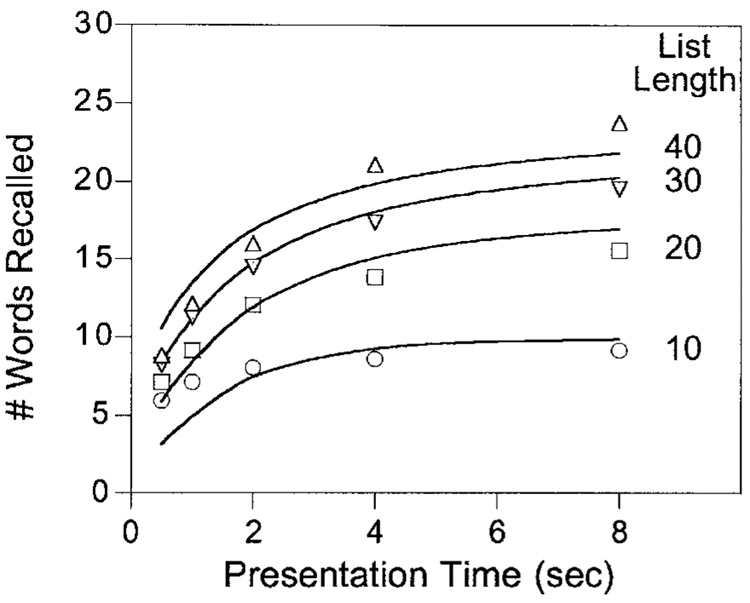

Roberts (1972a) performed the experiment, giving 12 participants lists of 10, 20, 30, and 40 words, with study times for each word of 0.5, 1, 2, 4, and 8 sec, immediately followed by free recall. Figure 9 shows his results. Recall improved with study time, and a greater number (but smaller proportion) of words were recalled from lists as a function of their length. The parameters used to fit the data were $\lambda = 0.018\ \mathrm{sec}^{-1}$, c/s = 67, and μ/s = 1.5.

Figure 9.

Performance of subjects given various durations of exposure to each word on lists of varying length (Roberts, 1972a). The rewriting/overwriting model (Equations 14 and 15) drew the curves.

The model underpredicts performance at the briefest presentations for short lists, an effect that is probably due to iconic/primary memory, a source of help not represented in the present model. In the bulletin board analogy, primary memory comprises the cards in the hand, in the process of being posted to the board. Roberts (1972a) estimated that primary memory contained a relatively constant average of 3.3 items. If this is appropriately added to the number recalled in Equation 15, the parameters readjust, and the variance accounted for increases from 91% to 95%.

The point of modeling is not to fit data but to understand them. Roberts (1972a) conducted his experiment to test the total time hypothesis, according to which a list of 20 words presented at 2 sec per word should yield the same level of recall as a list of 40 words presented at 1 sec per word. Figure 9 shows that this hypothesis generally fails: The asymptotic difference between the 30- and the 40-word lists is smaller than the difference between the 10- and the 20-word lists. If a constant proportion were recalled given constant study time, the reverse should be the case. Equation 14′ tells why the total time hypothesis fails: The exponent increases with total time, so that approach to asymptote will proceed according to the hypothesis; however, the asymptotes (c/L) are inverse functions of list length, and asymptotic probability of recall will vary as Λ[p∞] = (c/L − μ)/s. Long lists will have a lower proportion of their items recalled. When memory capacity (c) is very large relative to number of items in the list, the total time hypothesis will be approximately true. But this will not be the case for very long lists or for lists whose contents are large relative to the capacity of memory. In those cases, the damage from overwriting by other items on the list is greater than the benefit of rewriting the same item.
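The argument can be illustrated numerically with the fitted parameter values reported for Roberts's data. The sketch assumes that the strength of an item grows as (c/L)(1 − e^{−λLt}) and is passed through a logistic with mean μ and spread s; that functional form is a reconstruction from the verbal description and may differ in detail from Equations 14′ and 15. The function names are hypothetical.

```python
import math

# Parameter values reported for the fit to Roberts's data.
LAM = 0.018        # rate parameter, per sec
C_OVER_S = 67.0    # memory capacity relative to the spread of the logistic
MU_OVER_S = 1.5    # mean of the logistic relative to its spread


def recall_logit(list_length, study_time):
    """Assumed logit of recall: (c/s)/L * (1 - exp(-lam*L*t)) - mu/s."""
    filled = 1.0 - math.exp(-LAM * list_length * study_time)
    return (C_OVER_S / list_length) * filled - MU_OVER_S


def proportion_recalled(list_length, study_time):
    """Probability of recalling any one item from the list."""
    return 1.0 / (1.0 + math.exp(-recall_logit(list_length, study_time)))


def items_recalled(list_length, study_time):
    """Expected number of items recalled from the list."""
    return list_length * proportion_recalled(list_length, study_time)


if __name__ == "__main__":
    # Equal total study time (40 sec): 20 words at 2 sec versus 40 words at 1 sec.
    print(items_recalled(20, 2.0), items_recalled(40, 1.0))
    # Predicted asymptotes for each list length, approximated by a very long study time.
    print([items_recalled(n, 1e6) for n in (10, 20, 30, 40)])
```

Under these assumptions the two equal-total-time conditions share the same exponent but differ in asymptote, so neither the proportion nor the number recalled is equated, and the predicted asymptotic gain from 30 to 40 words is smaller than that from 10 to 20 words.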

The overwriting model does not assume that the list-length effect is due to inflation of the parameter s with increased list lengths, as do some other accounts. For recognition memory, at least, such an increase is unlikely to cause the effect (Gronlund & Elam, 1994; Ratcliff, Koon, & Tindall, 1994).

This instantiation of the overwriting model assumes that each of the items in a list competes on a level playing field; if some items receive more strengthening than others, perhaps by differential rehearsal, or are remembered better for any reason, so that their size relative to the bulletin board is greater than that of others, then they overwrite the other items to a greater extent. Equation 14 fixed the size of each item as λ = 1/c, but λ for more salient items will be larger, entailing a greater subtrahend in Equation 14. Ratcliff, Clark, and Shiffrin (1990) found this to be the case, and called it the list-strength effect. Strong items occupy more territory on the mind’s bulletin board; they are therefore themselves more subject to overwriting than are weak items, which are less likely to be impinged upon by presentation or re-presentation of other items. M. C. Anderson et al. (1994) found this also to be the case.

Power Functions

Contrast the above mechanisms with the equally plausible intuition that a memory is supported by an array of associations of varying strengths and that the weakest of these is the first to be sacrificed to new memories. Older memories, although diminished, are more robust because they have already lost their weakest elements, or perhaps because they have entrenched/consolidated their remaining elements. This feature of the “older die harder” is one of Jost’s laws of memory, old laws whose durability epitomizes their content. Mathematically, it may be rendered as

| $\frac{dM_t}{dt} = -\frac{\lambda}{t} M_t$ | (16) |

Here, the rate of decay slows with time, as λ/t. This differential entails a power law decay of memory, $M_t = M_1 t^{-\lambda}$, which is sometimes found (e.g., Wixted & Ebbesen, 1997). The constant of integration, M1, corresponds to memory at t = 1.
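The step from the differential to the power function can be made explicit. The derivation below assumes that the differential takes the form in which the proportional rate of loss is λ/t, as the text describes, and integrates from t = 1.

```latex
\begin{align}
\frac{dM_t}{dt} &= -\frac{\lambda}{t}\, M_t \\
\int_{M_1}^{M_t} \frac{dM}{M} &= -\lambda \int_{1}^{t} \frac{d\tau}{\tau} \\
\ln M_t - \ln M_1 &= -\lambda \ln t \\
M_t &= M_1\, t^{-\lambda}
\end{align}
```

Because the lower limit of integration is t = 1, the constant $M_1$ is the strength of memory one time unit after encoding, and the function is undefined at t = 0.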

Equations 13 and 16 are instances of the more general chemical rate equation:

| (17) |

The rate equation (1) reduces to Equation 13 when γ = 1, (2) entails a power law decay, as does Equation 16, when γ > 1, and (3) has memory grow with time, as might occur with consolidation, when γ < 1.

In their review of five models of perceptual memory, Laming and Scheiwiller (1985) answered their rhetorical question, “What is the shape of the forgetting function,” with the admission, “Frankly, we do not know.” All of the models they studied, which included the exponential, accounted for the data within the limits of experimental error. The form of forgetting functions is likely to vary with the particular dependent variable used (Bogartz, 1990; Loftus & Bamber, 1990; Wixted, 1990; but see Wixted & Ebbesen, 1991). Since probabilities are bound by the unit interval and latencies are not, a function that fits one would require a nonlinear transformation to map it onto the other. Absent a strong theory that identifies the proper variables and their scale, the shape of this function is an ill-posed question (Wickens, 1998).

Summary

Both the variables responsible for increasing failure of recall as a function of time and the nature of the function itself are in contention. This is due, in part, to the use of different kinds of measurement in experimental reports. Here, two potential recall functions have been described: exponential and power functions, along with their differential equations and a general rate equation that takes them as special cases. If successful performance involves retention of multiple elements, each decaying according to one of these functions, the probability of recall failure as a function of time is given by their appropriate extreme value distributions. Most experiments involve both writing and overwriting; the exponential model was developed and applied to a representative experiment.

Average Forms