Abstract

Including gesture in instruction facilitates learning. Why? One possibility is that gesture points out objects in the immediate context and thus helps ground the words learners hear in the world they see. Previous work on gesture’s role in instruction has used gestures that either point to or trace paths on objects, thus providing support for this hypothesis. Here we investigate the possibility that gesture helps children learn even when it is not produced in relation to an object but is instead produced “in the air.” We gave children instruction in Piagetian conservation problems with or without gesture and with or without concrete objects. We found that children given instruction with speech and gesture learned more about conservation than children given instruction with speech alone, whether or not objects were present during instruction. Moreover, children who received instruction in speech and gesture were more likely to give explanations for how they solved the problems that they were not taught during the experiment; this advantage was found only when objects were absent during instruction. Gesture in instruction can thus help learners learn even when those gestures do not direct attention to visible objects, suggesting that gesture can do more for learners than simply ground arbitrary, symbolic language in the physical, observable world.

Keywords: Gesture, Instruction, Action, Learning, Teaching

People gesture when they talk and are often not aware that they have gestured. These gestures express information that forms an integrated system with speech (McNeill, 1992)—gesture can reiterate information expressed in speech, clarify ambiguities, and even add information not found anywhere in the words it accompanies (Goldin-Meadow, 2003). Gesture thus serves as a visual, embodied representation of thought during the communicative process and, in this sense, is representation in action.

One communicative situation in which gesture is particularly important is the interaction between learners and teachers. Children’s gestures communicate to the teacher information about what they know and how they view a problem (e.g., Church & Goldin-Meadow, 1986; Perry, Church & Goldin-Meadow, 1988; Goldin-Meadow, Alibali & Church, 1993; Alibali & Goldin-Meadow, 1993) and, in turn, teachers use gesture when providing instruction to children of all ages. For example, teachers use gesture more than other nonverbal materials (e.g., counting blocks) when instructing 1st graders in mathematical notions (Flevares & Perry, 2001). When teaching older students, high school science teachers “layer” explanations of physics problems, with visible objects as one layer, explanation-rich speech as a second layer, and meaningful gestures as a third layer that ties speech to the objects to which it refers and thus grounds the lesson in the world (Roth & Welzel, 2001).

Importantly, children pay attention to the gestures they see and glean information from them. Kelly (2001) found that preschool-aged children understood a message that was divided between gesture and speech better than they understood either speech or gesture alone (see also Morford & Goldin-Meadow, 1992). Kelly & Church (1997) showed seven- and eight-year-old children videotapes of other children participating in conservation tasks; the children were able to glean information from the gestures they saw and did so even when the gestures conveyed information not found in the speaker’s talk (see also Goldin-Meadow & Singer, 2003).

Not only do children pay attention to information conveyed in gesture, they learn from it. Children instructed in mathematical equivalence problems (e.g., 3 + 4 + 5 = __ + 5) are more likely to learn when the instruction includes speech and gesture than when it includes only speech (Perry, Berch & Singleton, 1995; Singer & Goldin-Meadow, 2005). Preschoolers instructed in symmetry also learn more when the lesson includes speech and gesture than when it includes speech alone (Valenzeno, Alibali & Klatzky, 2003). The gestures used in these experiments were pointing and tracing gestures, gestures that have the potential to help children learn by directing their attention to relevant aspects of the problems. But children also profit from instruction containing iconic gestures, gestures whose form captures aspects of the objects or actions they represent (e.g., a C-shaped “width” gesture whose form reflects the relative size of the diameter of the container it represents, Church & Goldin-Meadow, 1986). Church, Ayman-Nolley and Mahootian (2004) used iconic gestures when instructing 1st grade children in Piagetian conservation tasks, and found that children learned more when the lesson contained speech and iconic gesture than when it contained speech alone.

The gestures in Church et al.’s (2004) conservation study differed from those in the mathematical equivalence (Perry et al., 1995) and symmetry (Valenzeno et al., 2003) studies in that they were iconic. However, none of these studies used ungrounded iconic gestures to teach children: all of the iconic gestures used in the Church et al. study were produced near the objects to which they referred (as were the pointing and tracing gestures used in the other studies). Take, for example, the iconic “width” gesture described earlier. If the C-hand is produced near the container to which it refers, the gesture is grounded and its meaning (as well as the meaning of the accompanying words) becomes more transparent and thus more likely to be accessible to a young learner.

This indexical function of gesture may be precisely why children profit from instruction that includes gesture (see Valenzeno et al., 2003, p. 187). Gesture has iconic properties that are symbolic in that they stand for objects and actions, but it is also perceptual and produced in real space. Gesture can therefore serve as an embodied bridge linking arbitrary, symbolic speech to the highly perceptual, experienced physical world. It is quite likely that gestures, particularly pointing gestures, do serve this linking function. Indeed, Glenberg and Robertson (1999) found that people who listened to instructions while watching a person point toward relevant parts of a map and compass performed better when later asked to use the compass than people who either listened to or read the instructions. Glenberg and Robertson argue that comprehension is best when listeners can index the words they hear to relevant parts of the objects they see, a function beautifully served by gesture in their study.

But it is also possible that gesture can improve comprehension and, as a result, learning even when it does not serve an indexical function. The shapes and movements of an iconic gesture can convey meaning independent of the context in which it occurs in a way that pointing gestures cannot. Iconic gestures might therefore be able to facilitate comprehension and learning when they are not directed toward particular objects and are instead produced “in the air.” Indeed, iconic gestures that are not produced near objects have been shown to scaffold children’s understanding of speech, especially when that speech is complex (Hodapp, Goldfield & Boyatzis, 1984; McNeil, Alibali & Evans, 2000). These studies suggest that gesture’s success in facilitating learning may stem not from its ability to link words to the visible world, but from its ability to convey additional information that frames the information conveyed in those words.

Our study explores the conditions under which iconic gestures promote learning. It differs from previous studies in two respects. First, although the adults’ iconic gestures in some previous studies were not produced on the objects to which they referred (i.e., they were ungrounded), the objects were visible to the child. The ungrounded iconic gestures that we used in our study referred to objects that were not visible in the immediate context. Second, in our study, we asked whether watching an adult produce ungrounded iconic gestures can lead to cognitive change (as opposed to merely better comprehension of language in the moment). If so, children should be more likely to learn when given instruction containing speech and ungrounded iconic gesture than when given instruction containing speech alone. Alternatively, gesture may be able to bring about cognitive change only when it refers to objects in the visible context; that is, only when it serves to ground speakers’ words in the visible world. If so, then children given instruction containing speech and ungrounded iconic gesture should be no more likely to learn than children given instruction containing speech alone.

To test these alternative possibilities, we presented children with instruction containing iconic gestures referring to objects that were either present in or absent from the visible context. Iconic gestures referring to present objects were produced near the objects to which they referred (i.e., grounded); iconic gestures referring to absent objects were produced “in the air” (ungrounded). If gesture facilitates learning even when it refers to objects that are not visible, we will have evidence that gesture’s role in learning is not restricted to helping ground words in the world of objects.

Experiment 1

Children participated in a pretest-instruction-posttest paradigm involving a series of Piagetian conservation tasks. For half the children, the objects used in the Piagetian tasks were present during instruction; for the other half, the objects were absent. In addition, half the children received instruction containing speech alone; half received instruction containing speech and gesture. In the objects present condition, the experimenter produced her gestures near the objects (e.g., a gesture aligning the first checker in row 1 with the first checker in row 2, the second checker in row 1 with the second checker in row 2, etc., produced over the two rows of checkers). In the objects absent condition, she produced the same gestures in the space where the objects had recently been.

We expected children in the objects present conditions to be more likely to profit from instruction containing speech and gesture than instruction containing speech alone, thus replicating Church et al. (2004). The crucial question is whether gesture helps children learn when the objects are absent and the experimenter produces her gestures in empty space. If gesture confers the same benefit in the objects absent conditions, we will have evidence that gesture can stand on its own without props in teaching children a new concept. If it does not, our findings will lend support to the hypothesis that gesture facilitates learning exclusively by drawing the learner’s attention to the task objects.

Method

Participants

Participants were 61 kindergarten and first grade students (35 5-year-olds, 22 6-year-olds, 4 7-year-olds) from Chicago area public and private schools; 52% of the children were female, 48% male; 83% were Caucasian, 14% African-American, and 3% Asian-American; 26% of the children were of Hispanic ethnicity. Children participated individually in one 45-minute experimental session during the normal school day. Each child was given a conservation pretest, followed by instruction in conservation, and finally a posttest comparable to the pretest. Children were randomly assigned to one of the four conditions: objects present/gesture + speech; objects present/speech alone; objects absent/gesture + speech; objects absent/speech alone.

Pretest

The pretest consisted of eight conservation tasks, two tasks tapping conservation of liquid quantity, two conservation of number, two conservation of length, and two conservation of matter. At the beginning of each trial, the child was presented with two identical objects (e.g., two identical glasses containing the same amount of water) and was asked whether the two contained the same amount, number, or length. Once the child agreed that the two contained the same amount, one of the objects was transformed (e.g., water in a tall, thin glass was poured into a short, wide dish) and the child was again asked whether the two contained the same amount. Regardless of the answer, the child was asked for the reasoning behind his or her judgment. The object was then transformed back to its original state (e.g., the water was poured from the short, wide dish back into the tall, thin glass) and the child was once again asked whether the two contained the same amount. Since the goal of the study was to teach conservation, the three children who answered more questions correctly than incorrectly on the pretest (5 or more correct judgments plus correct justifications) did not continue in the experiment.

Instruction

Each child was given instruction in liquid and number conservation by a second experimenter, different from the one who had administered the pretest. The instruction session consisted of three liquid trials and three number trials. Liquid and number trials were presented in counterbalanced blocks, and the three trials within each block were presented in random order. In each trial, the experimenter first gave the child instruction and then asked the child to solve a new problem. For example, on a liquid trial, the experimenter put two identical glasses containing the same amount of water on the table. She said, “I think these two have the same amount of water.” She then poured the liquid from one of the glasses into a differently shaped glass and said, “I think these two glasses have the same amount of water,” followed by the training statement: “One of the glasses is taller and the other one is shorter, but the shorter glass is wider and the taller glass is skinnier. So it makes up for it.” This statement illustrates the compensation explanation, the idea that there is more than one dimension on which the amount in the glasses can be measured and that those dimensions compensate for one another.
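The trial structure just described (two counterbalanced blocks, with three trials in random order inside each block) can be sketched in a few lines of Python. This is an illustration only: the function name, the trial labels, and the choice to alternate block order by a child's index are assumptions, not details reported in the procedure.

```python
import random

def instruction_order(child_index, seed=None):
    """Return a hypothetical six-trial instruction order for one child."""
    rng = random.Random(seed)
    # Counterbalance block order across children (assumed: alternate by index).
    blocks = ["liquid", "number"] if child_index % 2 == 0 else ["number", "liquid"]
    order = []
    for task in blocks:
        trials = [f"{task}-{i}" for i in (1, 2, 3)]
        rng.shuffle(trials)  # randomize the three trials within the block
        order.extend(trials)
    return order

print(instruction_order(0, seed=1))  # liquid block first
print(instruction_order(1, seed=1))  # number block first
```

Keeping the shuffle inside each block guarantees that the three liquid trials and the three number trials are never interleaved, which is what a blocked design requires.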

The training statement was produced in speech alone (i.e., without gestures) in two speech alone conditions. In one of these conditions, the objects stayed on the table within the child’s view throughout the instruction (objects present); in the other, the experimenter took the glasses off the table before producing the training statement (objects absent).

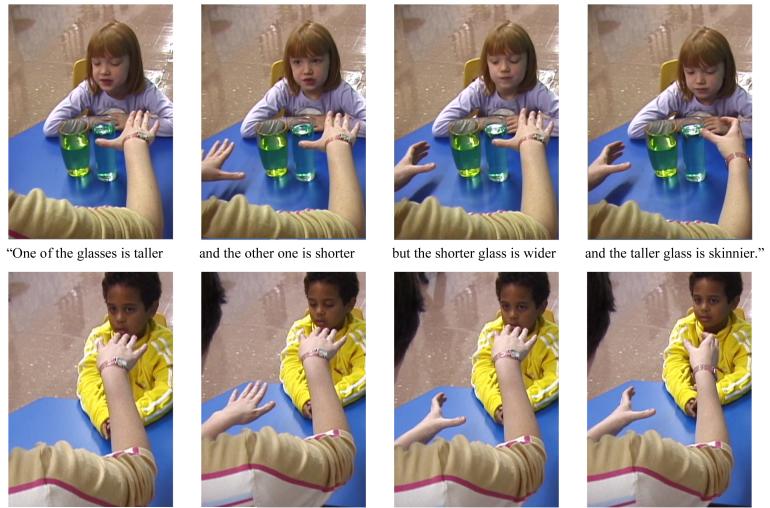

In the two gesture and speech conditions, the experimenter produced the training statement using the same words and intonation pattern as in the speech alone conditions but she also gestured (see Figure 1). In one of these conditions, the experimenter produced her iconic gestures near the task objects (objects present); in the other, she produced them in the air near the spot where the task objects had been (objects absent). For example, when the objects were present, the experimenter indicated the height of the glasses by producing two flat palms perpendicular to the table near the top of the water level in the glasses, and then indicated the widths of the glasses by producing two appropriately sized C-shaped hands near the glasses. The gestures illustrated the heights (the flat palms) and the widths of the glasses (the C-shapes) in the same response, a key component of the compensation explanation. When the objects were absent, the experimenter produced the same gestures in the air in the spot where the glasses had been.

Figure 1.

Stills from a video illustrating gesture plus speech instruction in the objects present (top) and objects absent (bottom) conditions. In the middle is the speech that accompanied the gestures; speech was identical in all conditions.

After the training statement, the experimenter replaced the objects (in the objects absent condition), returned the transformed object to its initial state, and said, “I think these two glasses have the same amount of water in them” in all four conditions.

In the second half of each training trial, the child tackled a new problem involving different containers and received feedback on his or her performance. The experimenter put two identical glasses with equal amounts of water on the table and asked the child whether the two had the same amount of water. Conservation objects were visible to the children as they made their similarity judgment of “same” or “different”. Then, in the two objects absent conditions, the glasses were removed from the table and placed out of the child’s view; in the two objects present conditions, the glasses were left on the table. The child was then asked to justify his or her judgment. If the child gave a correct answer (i.e., said the glasses had the same amount of water), the experimenter said, “I think you’re right. I think they do have the same amount of water,” and then gave the training statement again. If the child gave an incorrect answer, the experimenter said, “Actually, I think they have the same amount of water,” and then gave the training statement. The experimenter produced the training statement without gestures in the two speech alone conditions, and with gestures in the two gesture and speech conditions. Finally, the experimenter replaced the objects (in the objects absent conditions), returned the transformed object to its initial state, and asked the child whether the two glasses had the same amount of water. Number trials followed a similar procedure, except that the experimenter’s spoken training statement illustrated the principle of one-to-one correspondence, as did her gestures (see Table 1).

Table 1.

Examples of correct explanations children produced in speech and gesture

| Explanation | Speech | Gesture |
|---|---|---|
| Compensation | “This one is taller but it’s also wider” | Show the height of one or both objects, then the width of one or both objects |
| Reversibility | “If you moved it back, it would be the same” | Mime the action that would return the object to its original state |
| 1-to-1 Correspondence | “Each checker lines up with one in the other line” | Point to a checker in one row and the corresponding checker in the other row, etc. |
| Identity by Counting | “1 2 3 4 5 6, 1 2 3 4 5 6” | Point to each checker in row 1 then each checker in row 2 |
| Add-Subtract | “You didn’t add any and didn’t take any away” | Take-away swiping gesture near the object |
| Initial Equality | “They were the same before you changed them” | N/A |
| Identity | “It’s the same water no matter what” | N/A |
| Comparison + just | “This one is just taller” | N/A |
| Description + just | “The one is just a little bit rolled up” | N/A |
| Transformation + just | “You just poured this one in a different glass” | N/A |

Posttest

The posttest was administered by the same experimenter who had given the child the pretest. The posttest questions were the same questions given in the pretest, but presented in a different order. As in the pretest, the child was given no feedback on his or her answers.

Explanations and coding

The equality judgment (same or different) that the child gave for each question during the pretest, instruction, and posttest was recorded, along with the problem solving explanations the child expressed in speech and gesture when justifying that judgment. Two trained coders transcribed all of the child’s speech and gesture according to a previously developed system (Church & Goldin-Meadow, 1986); see Table 1 for examples of the correct explanations children produced in speech and gesture. On the first pass through the tape, the child’s speech was transcribed and coded without looking at the video. On the second pass, the child’s gesture was transcribed and coded without listening to the audio. Reliability was assessed on approximately 15% of trials; agreement between coders was 93% for speech explanations and 94% for gesture explanations. Children were given credit for solving a problem correctly if they produced a “same” judgment and gave a correct explanation in speech. Requiring a correct explanation (along with a correct judgment) ensures that the child has an understanding of the problem and is not just declaring the objects to be the same because the experimenter did.
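The reliability figures above read as simple percent agreement between the two coders. Assuming that is the measure used (the text does not name it), the computation can be sketched as follows; the function name and the five example codes are hypothetical and are not data from the study.

```python
def percent_agreement(coder_a, coder_b):
    """Percentage of trials on which two coders assigned the same code."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("need two equal-length, non-empty code lists")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100.0 * matches / len(coder_a)

# Hypothetical codes for five trials (not data from the study).
coder_1 = ["compensation", "identity", "reversibility", "add-subtract", "identity"]
coder_2 = ["compensation", "identity", "reversibility", "identity", "identity"]
print(percent_agreement(coder_1, coder_2))  # 80.0
```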

As mentioned earlier, three children who were correct on 5 or more problems on the pretest (and thus did not have room to show improvement on the posttest) did not continue in the experiment past the pretest. Previous work has shown that children who produce gesture-speech mismatches on conservation tasks are in a state of transition and are more ready to learn than children who do not produce mismatches (Church & Goldin-Meadow, 1986; see also Perry et al., 1988; Pine et al., 2004). Six children in this experiment produced mismatches on the pretest. By chance, these mismatchers were not equally distributed across the four conditions; we therefore excluded these children from the analyses. Thus, data from 52 children (25 boys and 27 girls) were included in the analyses (N = 14 in objects present/gesture+speech condition; N = 12 in objects present/speech alone condition; N = 13 in objects absent/gesture+speech condition; N = 13 in objects absent/speech alone condition).

We calculated children’s improvement after instruction by subtracting the number of problems solved correctly (“same” judgment plus a correct spoken explanation) on the pretest from the number solved correctly on the posttest. We also calculated the number of correct explanations each child added to his or her spoken repertoire from pretest to posttest.
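The scoring rule and the two dependent measures can be made concrete with a short sketch. The class, function names, and example child below are hypothetical. Treating a child's "repertoire" as the set of distinct correct explanation types is also an assumption about how explanations were counted.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Response:
    judgment: str              # "same" or "different"
    explanation: str           # hypothetical label for the spoken explanation
    explanation_correct: bool  # whether coders judged that explanation correct

def is_correct(r):
    # Credit requires a "same" judgment AND a correct spoken explanation.
    return r.judgment == "same" and r.explanation_correct

def n_correct(responses):
    return sum(is_correct(r) for r in responses)

def correct_types(responses):
    # Assumed: a repertoire is the set of distinct correct explanation types.
    return {r.explanation for r in responses if r.explanation_correct}

def improvement(pre, post):
    # Problems solved on the posttest minus problems solved on the pretest.
    return n_correct(post) - n_correct(pre)

def explanations_added(pre, post):
    # Correct explanation types present at posttest but not at pretest.
    return len(correct_types(post) - correct_types(pre))

# Hypothetical child (not data from the study): eight problems per test.
wrong = Response("different", "taller", False)
pre = [Response("same", "identity", True)] + [wrong] * 7
post = ([Response("same", "identity", True)] * 2
        + [Response("same", "compensation", True)] * 2
        + [wrong] * 4)

print(n_correct(pre) < 5)             # True: below the pretest cutoff of 5
print(improvement(pre, post))         # 3
print(explanations_added(pre, post))  # 1
```

In this example the child solves one pretest problem and four posttest problems, so the improvement score is 3, and one new explanation type (compensation) enters the repertoire.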

Results and Discussion

Children in all four groups solved approximately the same number of problems correctly on the pretest: M = 0.50 (SD = 1.28) objects present/gesture+speech; M = 0.33 (SD = 0.89) objects present/speech alone; M = 0.31 (SD = 0.63) objects absent/gesture+speech; M = 0.46 (SD = 1.13) objects absent/speech alone. There were no significant differences between the gesture+speech and speech alone groups, F (1, 48) = 0.19, ns; no significant differences between the objects present and objects absent groups, F (1, 48) = 0.01, ns; and no interaction, F (1, 48) = 0.33, ns. Children in all four groups also expressed approximately the same number of correct explanations in speech on the pretest: M = 0.50 (SD = 1.29) objects present/gesture+speech; M = 0.33 (SD = 0.78) objects present/speech alone; M = 0.46 (SD = 1.13) objects absent/gesture+speech; M = 0.39 (SD = 0.65) objects absent/speech alone. There were no significant differences between the gesture+speech and speech alone groups, F (1, 48) = 0.19, ns; no significant differences between the objects present and objects absent groups, F (1, 48) = 0.001, ns; and no interaction, F (1, 48) = 0.03, ns.

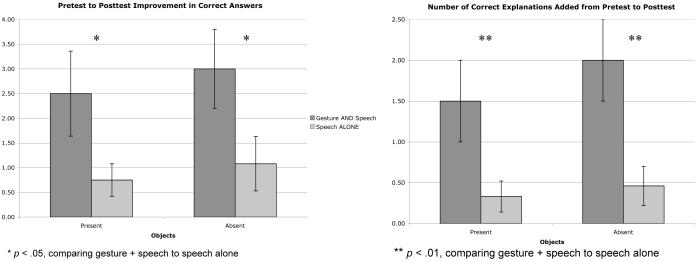

We looked next at improvement from pretest to posttest. Children improved significantly more when their instruction contained gesture and speech than when it contained speech alone (see Figure 2, left graph), F (1, 48) = 7.07, p < .05, Cohen’s (1988) f = 0.25. There was no effect of the presence vs. absence of objects, F (1, 48) = 0.36, ns, and no interaction, F (1, 48) = 0.02, ns. In other words, gestures produced in conjunction with speech facilitated learning whether or not those gestures were produced in relation to visible objects. We found a similar effect of gesture in instruction when the number of correct spoken explanations the child added from pretest to posttest was used as the dependent measure (see Figure 2, right graph), F (1, 48) = 11.45, p < .01, Cohen’s f = 0.34. Again, there was no effect of presence vs. absence of objects, F (1, 48) = 0.62, ns, and no interaction, F (1, 48) = 0.22, ns. Gesture helped children learn correct explanations whether or not those gestures were produced in relation to objects in the immediate context.

Figure 2.

Number of correct answers (left graph) and correct explanations (right graph) children added to their repertoires on the posttest in Experiment 1. Responses are categorized according to whether children received instruction in gesture and speech or speech alone, and according to whether the task objects were visible during instruction.

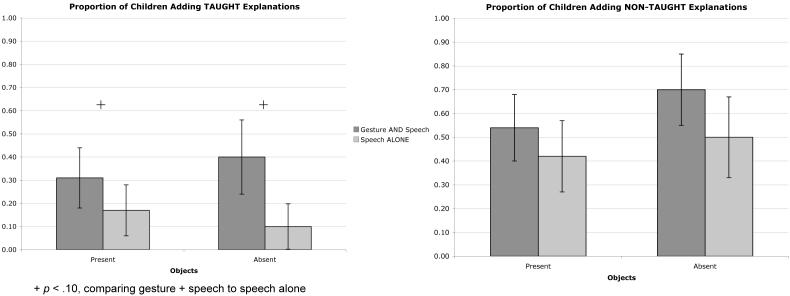

Did gesture help children learn the specific explanation that the experimenter taught during the instruction? Only 4 of the 25 children (16%) given instruction in speech alone added the explanation taught by the experimenter to their spoken repertoires (see Figure 3, left graph). But 12 of the 27 children (44%) given instruction in gesture plus speech added the explanation taught by the experimenter (Figure 3, left graph), significantly more than in the speech alone group (χ2 (1, N = 52) = 4.93, p < .05). Note that adding gesture to the explanation expressed in speech during instruction helped the children acquire the explanation in speech. Thus, adding gesture to instruction made the speech that accompanied the gesture more useful to the student.

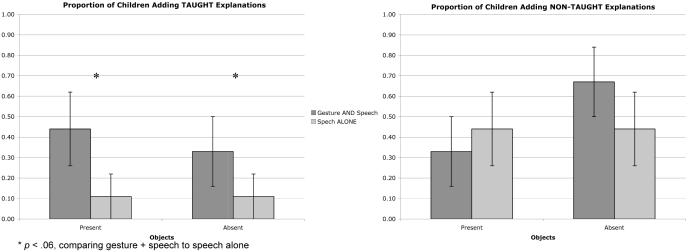

Figure 3.

Proportion of children who added the explanation taught by the experimenter (left graph) and who added correct explanations not taught by the experimenter (right graph) to their spoken repertoires after instruction in Experiment 1. Responses are categorized according to whether the children received instruction in gesture and speech or speech alone, and according to whether the task objects were visible during instruction.

Not only did adding gesture to instruction help children learn the particular explanation that the experimenter taught, but it also helped children add other correct explanations not taught by the experimenter, thus expanding their repertoire of spoken explanations (see examples in Table 1). Interestingly, this effect was found only when objects were absent during instruction. In the objects absent condition, 9 of the 13 children (69%) given instruction in gesture plus speech added new and correct explanations not taught by the experimenter to their spoken repertoires, compared to 2 of the 13 children (15%) given instruction in speech alone, χ2 (1, N = 26) = 7.72, p < .01 (Figure 3, two right columns in the graph on the right). In contrast, in the objects present condition, 5 of the 14 children (36%) given instruction in gesture plus speech added correct explanations not taught by the experimenter to their spoken repertoires, compared to 3 of the 12 children (25%) given instruction in speech alone, χ2 (1, N = 26) = 0.35, ns (Figure 3, two left columns on the graph on the right). Adding gesture to instruction allowed children to go beyond what they had been taught, helping them develop additional ways to explain why quantity does not change when an object is rearranged—but only when the task objects were absent during instruction.
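The three chi-square statistics reported for Figure 3 can be recomputed from the counts given in the text. The sketch below uses the uncorrected Pearson formula for a 2 x 2 table. That the authors used this uncorrected form is an inference from the fact that it reproduces the reported values; the text does not state it. The function name and table labels are illustrative.

```python
import math

def pearson_chi2_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for [[a, b], [c, d]]."""
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # With 1 degree of freedom, the upper-tail p-value is erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p

# Rows: gesture+speech, speech alone. Columns: added, did not add.
tables = {
    "taught explanation, all children": (12, 15, 4, 21),
    "untaught explanations, objects absent": (9, 4, 2, 11),
    "untaught explanations, objects present": (5, 9, 3, 9),
}
for label, cells in tables.items():
    chi2, p = pearson_chi2_2x2(*cells)
    print(f"{label}: chi2 = {chi2:.2f}, p = {p:.3f}")
```

Rounded to two decimals, the statistics come out to 4.93, 7.72, and 0.35, matching the values reported above. Several expected cell counts in the per-condition tables are small, so a reader rerunning the analysis might also want to check an exact test.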

Previous research with instruction on mathematical equivalence problems has found that simply asking children to gesture prior to instruction makes it more likely that they will reveal hidden implicit knowledge and subsequently learn from instruction (Broaders, Cook, Mitchell & Goldin-Meadow, 2007). In addition, children who receive instruction that includes gesture have been shown to be more likely to produce gesture themselves (Cook & Goldin-Meadow, 2006). Perhaps children in our study who saw gesture were more likely to produce gestures of their own during training; if so, the act of producing gesture might have been the mechanism underlying learning. However, we found no evidence for this effect in our data. We calculated how often a child produced at least one gesture on each training trial (interrater reliability for this decision was 100%). We found that children were no more likely to gesture when given instruction with gesture than when given instruction without gesture: children in the gesture plus speech conditions produced gesture on an average of 4.11 trials out of the six training trials (SD = 1.87; range = 0-6 trials), compared to 4.24 trials (SD = 1.85; range = 0-6 trials) for children in the speech alone conditions, F (1, 48) = 0.21, ns. Interestingly, however, children were more likely to gesture when objects were present than when they were absent: children in the objects present conditions gestured on an average of 5.15 trials (SD = 1.16; range = 0-6 trials), compared to 3.19 trials (SD = 1.90; range = 0-6 trials) for children in the objects absent conditions, F (1, 48) = 20.02, p < .001, Cohen’s f = 0.21. But note that children in the objects present conditions did not improve more than children in the objects absent conditions after training (M = 1.69, SD = 2.60 vs. M = 2.04, SD = 2.62; F (1, 48) = 0.36, ns, Figure 2, left graph).

Our study demonstrates that including iconic gestures in instruction makes that instruction more effective, and thus replicates Church et al. (2004). However, our study takes the phenomenon one step further by providing the first evidence that gesture can help children learn even when it does not direct attention to concrete objects.

Experiment 2

The findings from Experiment 1 suggest that gesture does not promote learning exclusively by directing the learner’s attention to relevant aspects of the task objects, that is, by grounding the speaker’s words in the world. Note, however, that in our study when the experimenter produced gestures in the objects absent condition, she produced them in the space where the objects had just been seen. As a result, the space could have served as a place-holder for the objects and the experimenter’s gestures could have facilitated learning by directing the child’s attention to this place-holder. Under this view, the objects in the objects absent condition were, in effect, present, albeit in the child’s mental image of the space. Our goal in Experiment 2 was to determine whether gestures can facilitate learning even when they are produced in a new space, one that has not been previously associated with the task objects.

Children again participated in a pretest-instruction-posttest paradigm involving Piagetian conservation tasks, and again were given instruction with speech alone or with speech plus iconic gestures. For half the children, the task objects were present during the experimenter’s instructions. For half, they were absent but, this time, the gestures in the absent condition were produced in a new space distinct from the one where the objects had been. Our question was whether children would learn from gestures produced during instruction even if those gestures were produced in a space that had not previously been associated with the task objects. If so, we will have evidence that gesture does not have to be strongly tied by space to its associated objects in order to facilitate learning.

Method

Participants

Participants were 52 kindergarten and first grade students (14 5-year-olds, 15 6-year-olds, 23 7-year-olds) from Chicago area public and private schools; 44% of the children were female, 56% male; 69% were Caucasian, 28% African-American, and 3% Asian-American; 26% of the children were of Hispanic ethnicity. Children participated individually in the experiment during the normal school day. The experiment consisted of a pretest, instruction by an experimenter, and a posttest. As in Experiment 1, children who scored 5 or higher on the pretest did not complete the rest of the experiment (N = 3). Data from children who produced gesture-speech mismatches on the pretest (N = 4) were also eliminated because they were not equally distributed across the four conditions; 45 children (25 boys and 20 girls) were included in the analyses (N = 13 in objects present/gesture+speech condition; N = 10 in objects present/speech alone condition; N = 12 in objects absent/gesture+speech condition; N = 10 in objects absent/speech alone condition).

Pretest and Posttest

The pretest and posttest were administered exactly as in Experiment 1.

Instruction

The instruction procedure was similar to Experiment 1 except that both experimenters participated in this phase. As in the first experiment, children were instructed in both liquid and number conservation. We describe the procedure for one liquid instruction trial to illustrate the differences between Experiment 2 and Experiment 1. The child was first shown two identical glasses of water by experimenter 1 who said, “I think these two glasses have the same amount of water.” He then poured the water from one of the glasses into a shorter and wider glass and said, “I think these two glasses have the same amount of water.” Experimenter 1 then passed the glasses to experimenter 2 to put on a table behind the child (objects present conditions) or placed them on the floor out of the child’s view (objects absent conditions). The child turned around to face experimenter 2 (and the objects, in the objects present conditions) who gave the training sentence with speech alone or gesture plus speech, as described in Experiment 1. The child then turned back around to face experimenter 1 who put the glasses back on the table in front of him, poured the water back into its original glass, and said, “I think these two glasses have the same amount of water”.

The child was then given a new problem with different task objects to solve. Experimenter 1 put two new glasses containing equal amounts of water in front of the child. Once the child had agreed that the two glasses contained the same amount of water, experimenter 1 poured the water from one of the glasses into a differently shaped container and asked the child whether the two containers had the same amount of water. Once the child offered a judgment (“same” or “different”), the experimenter asked the child to turn around and face experimenter 2 who asked the child to justify his or her judgment. In the objects present conditions, the glasses were passed to experimenter 2 and the child was able to refer to them when explaining his or her judgment. In the objects absent conditions, the glasses were placed on the ground out of the child’s view so that the child had no access to the objects during the explanation. After the child had explained his or her judgment, experimenter 2 presented the training statement and gave the child feedback as in Experiment 1. The child then turned back around to face experimenter 1 who returned the glasses to their original position and state, and asked the child whether the two glasses contained the same amount of water.

Coding

Categorizing children as correct or incorrect on a trial, as well as coding the explanations children expressed in speech and gesture, was done following the procedure described in Experiment 1.

Results and Discussion

As in Experiment 1, we calculated the number of problems children in each group solved correctly on the pretest: M = 1.15 (SD = 1.46) objects present/gesture+speech; M = 0.50 (SD = 0.80) objects present/speech alone; M = 0.30 (SD = 0.48) objects absent/gesture+speech; M = 0.80 (SD = 1.14) objects absent/speech alone. There were no significant differences between the gesture+speech and speech alone groups, F (1, 41) = 0.06, ns, and no significant differences between the objects present and objects absent groups, F (1, 41) = 0.75, ns. However, there was a marginally significant interaction between the two factors, F (1, 41) = 3.27, p < .08, Cohen’s f = 0.14. The number of correct explanations expressed in speech on the pretest showed the same unequal pattern: M = 0.85 (SD = 1.14) objects present/gesture+speech; M = 0.33 (SD = 0.49) objects present/speech alone; M = 0.30 (SD = 0.48) objects absent/gesture+speech; M = 0.50 (SD = 0.71) objects absent/speech alone. There were no significant differences between the gesture+speech and speech alone groups, F (1, 41) = 0.45, ns, and no significant differences between the objects present and objects absent groups, F (1, 41) = 0.66, ns, but there was a marginally significant interaction, F (1, 41) = 2.32, p < .13, Cohen’s f = 0.09.

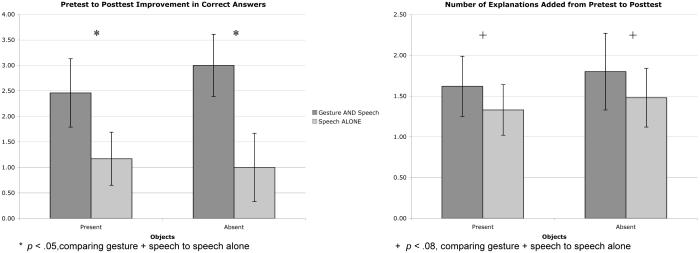

We found that, as in Experiment 1, children improved significantly more from pretest to posttest when their instruction contained gesture and speech than when it contained speech alone (Figure 4, left graph): M = 2.46 (SD = 2.40) objects present/gesture + speech; M = 1.17 (SD = 1.80) objects present/speech alone; M = 3.00 (SD = 1.94) objects absent/gesture + speech; M = 1.00 (SD = 2.11) objects absent/speech alone. There was a main effect of gesture, F (1, 41) = 6.91, p < .05, Cohen’s f = 0.22, no effect of presence vs. absence of objects, F (1, 41) = 0.09, ns, and no interaction, F (1, 41) = 0.32, ns. Because pretest performance was not equal across the four groups, in addition to analyzing improvement from pretest to posttest, we also analyzed the posttest scores and used pretest scores as a covariate. We again found a main effect of gesture, F (1, 40) = 6.69, p < .05, Cohen’s f = 0.17, no effect of presence vs. absence of objects, F (1, 40) = 0.10, ns, and no interaction, F (1, 40) = 0.35, ns. As in Experiment 1, gestures produced in conjunction with speech facilitated learning, even if those gestures were produced in a space that had not been previously associated with the task objects.
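The error degrees of freedom in these tests follow from the design. As a brief worked check, assuming a standard 2 × 2 between-subjects analysis with N = 45 children in k = 4 cells, and one extra parameter for the pretest covariate in the second analysis (the symbols N and k are our notation, not the original's):

$$
df_{\text{error}} = N - k = 45 - 4 = 41, \qquad df_{\text{error, covariate}} = N - k - 1 = 45 - 4 - 1 = 40
$$

These values match the F (1, 41) and F (1, 40) reported for the improvement-score and covariate analyses, respectively.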

Figure 4.

Number of correct answers (left graph) and correct explanations (right graph) children added to their repertoires on the posttest in Experiment 2. Responses are categorized according to whether children received instruction in gesture and speech or speech alone, and according to whether the task objects were visible during instruction.

Children also added more correct explanations to their spoken repertoires after instruction with gesture than without it (Figure 4, right graph): M = 1.62 (SD = 1.32) objects present/gesture + speech; M = 0.92 (SD = 1.08) objects present/speech alone; M = 1.80 (SD = 1.48) objects absent/gesture + speech; M = 1.00 (SD = 1.15) objects absent/speech alone. There was a marginal effect of gesture, F (1, 41) = 3.90, p < .06, Cohen’s f = 0.10, no effect of presence vs. absence of objects, F (1, 41) = 0.13, ns, and no interaction, F (1, 41) = 0.02, ns. When we analyzed the posttest scores using pretest scores as a covariate to control for the unequal pretest performance across groups, we again found a marginally significant effect of gesture, F (1, 40) = 3.32, p < .08, Cohen’s f = 0.05, no effect of the presence vs. absence of objects, F (1, 40) = 0.50, ns, and no interaction, F (1, 40) = 0.63, ns.

As in Experiment 1, we found that more children in the gesture plus speech instruction conditions added the explanation taught by the experimenter to their spoken repertoires than in the speech alone conditions, although here the effect was marginal: 8 of 23 children (35%) in the gesture plus speech conditions, compared to 3 of 22 children (14%) in the speech alone conditions, χ2 (1, N = 45) = 2.72, p < .10 (Figure 5, left graph). Since it was not possible to use pretest score as a covariate in these chi-square analyses, we used a different approach to equate for performance inequalities at pretest: we matched children across the four groups on the number of correct explanations expressed in speech at the pretest, and then examined the explanations that these children added. This approach resulted in a sample of 36 children, 9 in each group; the data are presented in Figure 6. We found that, as in Experiment 1, more children added the explanation taught by the experimenter to their spoken repertoires after instruction when the instruction included both speech and gesture (7 of 18 children, 39%) than when it included speech alone (2 of 18 children, 11%), χ2 (1, N = 36) = 3.71, p < .06 (Figure 6, left graph).
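The chi-square values in this paragraph can be approximately recomputed from the reported counts. The sketch below is not the authors' analysis code. It assumes an uncorrected Pearson chi-square on a 2 × 2 table (instruction condition by whether the child added the taught explanation); the text does not state whether a continuity correction was applied, and the function name `pearson_chi_square` is hypothetical.

```python
def pearson_chi_square(a, b, c, d):
    """Uncorrected Pearson chi-square for the 2x2 table [[a, b], [c, d]].

    Rows are instruction conditions; columns are
    (added the taught explanation, did not add it).
    """
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    observed = ((a, b), (c, d))
    stat = 0.0
    for i in range(2):
        for j in range(2):
            # Expected count under independence of row and column.
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed[i][j] - expected) ** 2 / expected
    return stat


# Full sample: 8 of 23 (gesture plus speech) vs. 3 of 22 (speech alone).
full_sample = pearson_chi_square(8, 23 - 8, 3, 22 - 3)

# Matched sample: 7 of 18 (gesture plus speech) vs. 2 of 18 (speech alone).
matched_sample = pearson_chi_square(7, 18 - 7, 2, 18 - 2)

print(f"full sample:    {full_sample:.3f}")
print(f"matched sample: {matched_sample:.3f}")
```

Under these assumptions the statistic comes out near 2.72 for the full sample and near 3.70 for the matched sample, close to the reported 2.72 and 3.71.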

Figure 5.

Proportion of children who added the explanation taught by the experimenter (left graph) and who added correct explanations not taught by the experimenter (right graph) to their spoken repertoires after instruction in Experiment 2. Responses are categorized according to whether the children received instruction in gesture and speech or speech alone, and according to whether the task objects were visible during instruction.

Figure 6.

Proportion of children who added the explanation taught by the experimenter (left graph) and who added correct explanations not taught by the experimenter (right graph) to their spoken repertoires after instruction in Experiment 2, when children are matched for their performance (number of correct explanations) at pretest (N = 9 per condition). Responses are categorized according to whether the children received instruction in gesture and speech or speech alone, and according to whether the task objects were visible during instruction.

When we analyzed the number of new explanations not taught by the experimenter in the full sample of 45 children, we found that children in the gesture plus speech conditions added more non-taught explanations than children in the speech alone conditions, but the differences were not reliable and, unlike Experiment 1, the effect was not dramatically different for the objects absent condition than for the objects present condition (Figure 5, right graph). However, when we controlled for pretest differences and used the matched sample of 36 children, we found that, as in Experiment 1, more children added new correct explanations not taught by the experimenter when given instruction in gesture plus speech than when given instruction in speech alone, but only when the objects were absent during instruction. In the objects absent condition, 6 out of 9 children (67%) given instruction in gesture plus speech added new and correct explanations not taught by the experimenter to their spoken repertoires, compared to 4 out of 9 children (44%) given instruction in speech alone (Figure 6, two right columns in the graph on the right). In contrast, in the objects present condition, 3 out of 9 children (33%) given instruction in gesture plus speech vs. 4 out of 9 children (44%) given instruction in speech alone added non-taught explanations to their spoken repertoires (Figure 6, two left columns in the graph on the right). Although this effect did not reach statistical significance even in the objects absent condition, the trends in Experiment 2 were comparable to those in Experiment 1 (compare the right graphs in Figures 3 and 6), and when data from the two experiments are combined, there is a robust effect of including gesture in instruction on adding untaught explanations when the task objects are absent. In the objects absent condition, 15 out of 22 children (68%) added untaught explanations to their spoken repertoires when given instruction in gesture plus speech, compared to 6 out of 22 (27%) given instruction in speech alone, χ2 (1, N = 44) = 7.38, p < .01. Comparable numbers in the objects present condition for the studies combined were 8 out of 23 (35%) vs. 7 out of 21 (33%), χ2 (1, N = 44) = 0.01, ns.
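To see how the two combined-sample statistics map onto the reported significance levels, a chi-square value with 1 degree of freedom can be converted to a p-value directly. This is a minimal sketch, not the authors' procedure: it relies on the fact that a chi-square variable with 1 df is a squared standard normal, so its upper-tail probability is erfc(sqrt(x / 2)). The function name `chi_square_p_value_df1` is hypothetical.

```python
import math


def chi_square_p_value_df1(stat):
    """Upper-tail p-value for a chi-square statistic with 1 degree of freedom.

    With 1 df the statistic is distributed as Z**2 for standard normal Z,
    so P(X > stat) = P(|Z| > sqrt(stat)) = erfc(sqrt(stat / 2)).
    """
    if stat < 0:
        raise ValueError("a chi-square statistic cannot be negative")
    return math.erfc(math.sqrt(stat / 2.0))


# Statistics as reported for the two experiments combined.
p_objects_absent = chi_square_p_value_df1(7.38)
p_objects_present = chi_square_p_value_df1(0.01)

print(f"objects absent:  p = {p_objects_absent:.4f}")
print(f"objects present: p = {p_objects_present:.4f}")
```

The first p-value falls below .01 and the second is far from any conventional threshold, in line with the "p < .01" and "ns" reported for the objects absent and objects present comparisons.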

Again as in Experiment 1, children were no more likely to gesture during training when given instruction with gesture than without it: children in the gesture plus speech conditions gestured on an average of 3.87 trials (SD = 1.84; range = 0-6 trials), compared to 3.27 trials (SD = 2.29; range = 0-6 trials) for children in the speech alone conditions, F (1, 41) = 1.20, ns. Unlike Experiment 1, however, we did not find that children gestured significantly more when objects were present: children in the objects present conditions gestured on 3.84 trials (SD = 1.80; range = 0-6 trials), compared to 3.25 trials (SD = 2.38; range = 0-6 trials) for children in the objects absent conditions, F (1, 41) = 0.92, ns.

In sum, we found in Experiment 2, as in Experiment 1, that children who were given instruction in gesture plus speech were more likely to learn than children given instruction in speech alone. This pattern held even when the gestures were produced in a neutral space not previously associated with the task objects. Gesture thus does not require a spatial connection to props in order to be an effective teaching tool.

General Discussion

These studies are the first to provide experimental evidence that gesture can facilitate cognitive change even when those gestures are not produced in relation to concrete objects and are not presented in a space associated with the objects. Importantly, gesture helped children make more effective use of the spoken instruction they received: the children in the gesture plus speech conditions were not only more likely than children in the speech alone conditions to learn the particular explanation that the experimenter taught, but they were also more likely to generate their own correct explanations to justify their new-found belief in the conservation of quantity. Interestingly, this second effect was found only when the task objects were absent during instruction, suggesting that gesture was effective in bringing out new explanations primarily when there were no concrete objects to reference. Finally, unlike previous work (Cook & Goldin-Meadow, 2006), seeing gesture in instruction did not make children more likely to gesture themselves, suggesting that the beneficial effects we found for gesture in our study could not be attributed to the child’s gesturing (cf. Broaders et al., 2007; Cook, Mitchell & Goldin-Meadow, 2007).

We began our study by asking why adding gesture to instruction helps children learn. Previous work had suggested that watching a speaker gesture helps the listener index the speaker’s words to the visible physical environment (Glenberg & Robertson, 1999). During instruction, the instructor’s gestures may help children ground the spoken component of the instruction in the immediate context (e.g., Valenzeno et al., 2003). Under this view, seeing gesture helps children understand how the speech they hear relates to the objects they see, as speech, gesture, and the environment all give meaning to one another (cf. Goodwin, 2003). Gesture may very well play this type of grounding role when produced in relation to concrete objects. But gesture can be, and often is, produced “in the air,” creating its own space not tied to the immediate real-world space. Our studies demonstrate that gesture can be just as effective as a teaching tool when it is produced in the air, not tied to concrete objects. However, it is worth noting that the words the experimenter used in our study were all familiar to children of this age. Had the experimenter used unfamiliar terms, it might have been necessary for her gestures to be grounded on the task objects in order for learning to occur. Thus, it is possible that our findings on ungrounded gesture may hold only when terminology is familiar to the learner.

Given the evidence in the literature for spatial indexing of objects in memory (e.g., Spivey & Geng, 2001), we might have expected gestures produced in a space associated with an object to be an effective recall cue for that object. And, indeed, they were (see the objects absent condition in Experiment 1). But we also found that gestures do not have to be produced in a space previously associated with an object in order to facilitate learning about that object (the objects absent condition in Experiment 2). In other words, although gestures can help learners by drawing attention to spaces that have become place-holders for objects, they do not always work via this mechanism. When objects are absent, children seem to be able to index the gestures to their mental image of the objects even if there is no spatial link between the gestures and the objects.

Why then do ungrounded iconic gestures facilitate learning? Iconic gestures, be they grounded or not, may encourage comparison, an important aspect of many learning situations. The shapes and placements of the instructor’s two-handed gestures allowed children to simultaneously consider the two containers. This simultaneity may have encouraged the children to compare the height and width dimensions of the objects, an essential step in understanding conservation. Indeed, situations that promote comparison between two objects have been shown to lead children to categorize the objects at a deep conceptual level, whereas situations that do not promote comparison lead children to categorize at a relatively shallow perceptual level (Gentner & Namy, 1999; Namy & Gentner, 2002). In our study, gesture may have encouraged children to compare the relevant dimensions, thus allowing them to think at a deep conceptual level and ignore the shallow perceptual information that is so salient to non-conservers. Gesture is, in fact, routinely used in classrooms to promote comparison. A cross-cultural study of how analogy is implemented in classrooms in the United States, Japan, and Hong Kong found that middle school teachers, particularly those in Japan and Hong Kong, used gestures to highlight comparisons between familiar source analogs and the to-be-learned targets (Richland, Zur & Holyoak, 2007).

Receiving instruction that contains both speech and gesture helped children in our study generate their own new correct explanations—but only when the objects were absent during instruction. Why? It is possible that when gestures are not grounded on objects, they encourage children to form more abstract representations of the problem, which, in turn, help them master the task and go beyond what they have learned. Concrete representations typically help children learn how to solve a specific problem. But it frequently takes a more abstract problem representation to get children to go beyond the problem on which they were taught (Kaminski, Sloutsky & Heckler, 2006; see Goldstone & Sakamoto, 2003 for similar work with adults). When the objects were present in our study, the knowledge the children gained during instruction may have been more tied to the specific objects they saw. In contrast, when the objects were absent, the children’s new knowledge may have been formulated at a more abstract level, allowing them not only to solve more problems but also to generate more correct explanations of their own.

Note that we found an effect of adult gesture on child speech—children who saw gesture made better use of the instructor’s speech than children who did not see gesture. The experimenter expressed the same explanation in both gesture and speech in her instruction. By giving two renditions of the explanation, the experimenter effectively gave the child two opportunities to grasp the explanation and learn from it, which may have facilitated learning. However, there are other possibilities. Rather than (or perhaps in addition to) providing another opportunity for the learner to grasp the explanation presented in speech, gesture might have lightened the learner’s cognitive load. Producing gesture has been shown to reduce the amount of cognitive effort the speaker expends, compared to not producing gesture (Goldin-Meadow, Nusbaum, Kelly & Wagner, 2001; Wagner, Nusbaum & Goldin-Meadow, 2004). It is possible that seeing gesture in instruction serves a similar function for listeners, reducing cognitive load and freeing up resources that can then be put toward learning. In our study, children who saw gesture in their instruction (with or without objects present) were more likely than children who did not see gesture to solve the problems correctly and to learn the specific explanation that the experimenter taught. Moreover, when no objects were present during instruction, children who saw gesture were more likely than children who did not see gesture to generate correct explanations that the experimenter had not taught them, thus extending the knowledge they had gained during instruction. Gesture may have lightened the learners’ load, providing them with extra resources that allowed them to learn from their instruction and even go beyond it.

We have shown that including gesture in instruction improves that instruction whether or not the gestures are produced in relation to concrete objects. Our findings constitute the first experimental evidence that gesture can do more than simply ground abstract language in the physical environment during learning interactions. It can stand on its own—without props—to facilitate learning a new concept. Although more research is needed to understand the mechanism by which gesture improves learning, it is clear from our findings that including gesture in instruction makes that instruction more effective, even if the gestures do not point out objects in the real world.

Acknowledgements

This research was supported by grant number R01 HD47450 from the NICHD to Goldin-Meadow and grant number SBE 0541957 from the NSF. Thanks to Mary-Anne Decatur and Hector Santana for help with data collection and coding. We also thank Zac Mitchell, Sam Larson, Pat Zimmerman, and Terina Yip for additional help with data collection. Thanks to Dedre Gentner for suggesting Experiment 2 and to the anonymous reviewers whose input greatly improved this paper. We thank the students, teachers and principals at the participating schools for their generous support.

References

- Alibali MW, Goldin-Meadow S. Gesture-speech mismatch and mechanisms of learning: What the hands reveal about a child’s state of mind. Cognitive Psychology. 1993;25(4):468–523. doi: 10.1006/cogp.1993.1012.

- Broaders S, Cook SW, Mitchell Z, Goldin-Meadow S. Making children gesture reveals implicit knowledge and leads to learning. Journal of Experimental Psychology: General. 2007; in press. doi: 10.1037/0096-3445.136.4.539.

- Church RB, Ayman-Nolley S, Mahootian S. The role of gesture in bilingual education: Does gesture enhance learning? International Journal of Bilingual Education & Bilingualism. 2004;7(4):303–320.

- Church RB, Goldin-Meadow S. The mismatch between gesture and speech as an index of transitional knowledge. Cognition. 1986;23(1):43–71. doi: 10.1016/0010-0277(86)90053-3.

- Cohen J. Statistical Power Analysis for the Behavioral Sciences. 2nd Edition. Erlbaum; Hillsdale, NJ: 1988.

- Cook SW, Goldin-Meadow S. The role of gesture in learning: Do children use their hands to change their minds? Journal of Cognition and Development. 2006;7:211–232.

- Cook SW, Mitchell Z, Goldin-Meadow S. Gesturing makes learning last. Cognition. 2007; in press. doi: 10.1016/j.cognition.2007.04.010.

- Flevares LM, Perry M. How many do you see? The use of nonspoken representation in first-grade mathematics lessons. Journal of Educational Psychology. 2001;93:330–345.

- Gentner D, Namy L. Comparison in the development of categories. Cognitive Development. 1999;14:487–513.

- Glenberg AM, Robertson DA. Indexical understanding of instructions. Discourse Processes. 1999;28(1):1–26.

- Goldin-Meadow S. Hearing gesture: How our hands help us think. Harvard University Press; Cambridge, MA: 2003.

- Goldin-Meadow S, Alibali MW, Church RB. Transitions in concept acquisition: Using the hand to read the mind. Psychological Review. 1993;100(2):279–297. doi: 10.1037/0033-295x.100.2.279.

- Goldin-Meadow S, Nusbaum H, Kelly SD, Wagner S. Explaining math: Gesturing lightens the load. Psychological Science. 2001;12(6):516–522. doi: 10.1111/1467-9280.00395.

- Goldin-Meadow S, Singer MA. From children’s hands to adults’ ears: Gesture’s role in the learning process. Developmental Psychology. 2003;39(3):509–520. doi: 10.1037/0012-1649.39.3.509.

- Goldstone RL, Sakamoto Y. The transfer of abstract principles governing complex adaptive systems. Cognitive Psychology. 2003;46:414–466. doi: 10.1016/s0010-0285(02)00519-4.

- Goodwin C. The semiotic body in its environment. In: Coupland J, Gwyn R, editors. Discourses of the body. Palgrave/Macmillan; New York, NY: 2003. pp. 19–42.

- Hodapp RM, Goldfield EC, Boyatzis CJ. The use and effectiveness of maternal scaffolding in mother-infant games. Child Development. 1984;55:772–781.

- Kaminski JA, Sloutsky VM, Heckler AF. Do children need concrete instantiations to learn an abstract concept? In: Sun R, Miyake N, editors. Proceedings of the XXVIII Annual Conference of the Cognitive Science Society; 2006.

- Kelly SD. Broadening the units of analysis in communication: Speech and nonverbal behaviours in pragmatic comprehension. Journal of Child Language. 2001;28(2):325–349. doi: 10.1017/s0305000901004664.

- Kelly SD, Church RB. Can children detect conceptual information conveyed through other children’s nonverbal behaviors? Cognition & Instruction. 1997;15(1):107–134.

- McNeil NM, Alibali MW, Evans JL. The role of gesture in children’s comprehension of spoken language: Now they need it, now they don’t. Journal of Nonverbal Behavior. 2000;24:131–150.

- McNeill D. Hand and mind: What gestures reveal about thought. University of Chicago Press; Chicago: 1992.

- Morford M, Goldin-Meadow S. Comprehension and production of gesture in combination with speech in one-word speakers. Journal of Child Language. 1992;19(3):559–580. doi: 10.1017/s0305000900011569.

- Namy LL, Gentner D. Making a silk purse out of two sow’s ears: Young children’s use of comparison in category learning. Journal of Experimental Psychology: General. 2002;131:5–15. doi: 10.1037//0096-3445.131.1.5.

- Perry M, Berch D, Singleton JL. Constructing shared understanding: The role of nonverbal input in learning contexts. Journal of Contemporary Legal Issues. 1995;6:213–236.

- Perry M, Church RB, Goldin-Meadow S. Transitional knowledge in the acquisition of concepts. Cognitive Development. 1988;3(4):359–400.

- Pine KJ, Lufkin N, Lewis C. More gestures than answers: Children learning about balance. Developmental Psychology. 2004;40(6):1059–1067. doi: 10.1037/0012-1649.40.6.1059.

- Richland LE, Zur O, Holyoak KJ. Cognitive supports for analogies in the mathematics classroom. Science. 2007;316(5828):1128–1129. doi: 10.1126/science.1142103.

- Roth W-M, Welzel M. From activity to gestures and scientific language. Journal of Research in Science Teaching. 2001;38(1):103–136.

- Singer MA, Goldin-Meadow S. Children learn when their teacher’s gestures and speech differ. Psychological Science. 2005;16(2):85–89. doi: 10.1111/j.0956-7976.2005.00786.x.

- Spivey M, Geng J. Oculomotor mechanisms activated by imagery and memory: Eye movements to absent objects. Psychological Research. 2001;65:235–241. doi: 10.1007/s004260100059.

- Valenzeno L, Alibali MW, Klatzky R. Teachers’ gestures facilitate students’ learning: A lesson in symmetry. Contemporary Educational Psychology. 2003;28(2):187–204.

- Wagner S, Nusbaum H, Goldin-Meadow S. Probing the mental representation of gesture: Is handwaving spatial? Journal of Memory and Language. 2004;50:395–407.