Abstract

Children vary widely in how quickly their vocabularies grow. Can looking at early gesture use in children and parents help us predict this variability? We videotaped 53 English-speaking parent-child dyads in their homes during their daily activities for 90 minutes every four months between child ages 14 and 34 months. At 42 months, children were given the Peabody Picture Vocabulary Test (PPVT). We found that child gesture use at 14 months was a significant predictor of vocabulary size at 42 months, above and beyond the effects of parent and child word use at 14 months. Parent gesture use at 14 months was not directly related to vocabulary development, but did relate to child gesture use at 14 months which, in turn, predicted child vocabulary. These relations hold even when background factors such as socio-economic status are controlled. The findings underscore the importance of examining early gesture when predicting child vocabulary development.

Keywords: Gestures, parent-child interaction, vocabulary

INTRODUCTION

Young children vary dramatically in the size of their vocabularies. Some 3-year-olds have over 1100 words in their vocabularies; others have fewer than 500 (Hart & Risley, 1995). In an analysis of over 1800 infants and toddlers at 30 months of age, Fenson and colleagues (1994) found that children’s vocabularies ranged from 300 words at the 10th percentile to 650 words at the 90th percentile. How can variability of this sort be explained?

One obvious candidate explanation is the verbal input children receive. Indeed, many studies have found a significant positive relation between the speech parents address to their children and the child’s vocabulary growth (Hart & Risley, 1995; Huttenlocher, Haight, Bryk, Seltzer & Lyons, 1991; Pan, Rowe, Singer & Snow, 2005). However, verbal input is not the whole story. The speech parents address to children accounts for only a portion of the variation in children’s vocabulary growth, leaving room for other possible factors. We suggest that gesture may be one of those other factors. Gesture has the potential to be related to child vocabulary growth in two ways: through the gestures that children themselves produce, and through the gestures that their parents produce. In this study, we examine the role played by gesture – both child’s and parent’s – in vocabulary development.

Parent verbal input

Parents are an important source of language experience for their young children. There is a growing body of work showing a positive relation between the amount and diversity of speech that parents offer children and the child’s vocabulary growth (Hart & Risley, 1995; Huttenlocher et al., 1991; Pan et al., 2005). Children who hear more words and/or a larger variety of words from their parents have faster vocabulary growth over time, and larger vocabularies at given points in time, than children exposed to less speech and less varied vocabulary.

However, when considering parental talk as a predictor of child vocabulary development, other factors come into play. Parents with high socio-economic status (SES, typically measured as parent income and/or education) tend to talk more and use more varied and complex talk with their children than parents with low SES (Hart & Risley, 1995; Hoff, 2003a). It is therefore difficult to separate parental talk from the environmental context within which that talk occurs. Indeed, both factors have been shown to be important in predicting individual differences in children’s vocabulary skill (Hoff, 2003b). In the present study we build on this prior work, first assessing whether early child and parent talk (and gesture) predict subsequent child vocabulary development, and then examining whether these effects hold when environmental factors such as SES are also taken into consideration.

Child gesture use

Gestures appear before speech in a child’s communicative repertoire (Bates, 1976; Bates, Benigni, Bretherton, Camaioni & Volterra, 1979; Greenfield & Smith, 1976). Children’s earliest gestures, produced around 10 months of age, are deictic gestures; for example, holding up (‘showing’) objects and pointing at objects, people and locations (Bates, 1976; Masur, 1990). While most of the gestures that children produce during the early years of language learning are deictic, other types of gestures, most notably iconic gestures, can also be found in children’s gestural repertoires. Iconic gestures are used to depict attributes or actions associated with objects; for example, panting to represent a dog or flapping arms to represent a bird flying (Acredolo & Goodwyn, 1988).

Previous research suggests a link between children’s uses of gesture and early word learning. Iverson & Goldin-Meadow (2005) found that it is possible to predict specific items that will enter a child’s verbal lexicon based on that child’s earlier gestures. In monthly videotapes taken between ages 10 and 24 months, they found that children produced a gesture for an object an average of 3 months prior to producing the word for that object. Furthermore, Acredolo & Goodwyn (1988) have shown that the amount a child gestures relates to the child’s vocabulary development. Children who produced more symbolic gestures at 19 months had larger productive vocabularies at 24 months than children who produced fewer symbolic gestures. Thus, the amount and diversity of children’s early gesture use appears to be related to their early vocabulary growth.

However, we do not yet know whether the gestures children use in the early stages of language development continue to predict their vocabulary growth at later ages. That is, do children’s early gestures explain only the initial differences found in their vocabularies, thus serving as an entry point into word learning but no more? Or do children’s early gestures continue to explain differences in children’s vocabulary skills years later?

Parent gesture use

There is ample evidence that by 12 months of age children are able to understand the gestures other people produce. For example, they can follow an adult’s pointing gesture to a target object (Butterworth & Grover, 1988; Carpenter, Nagell & Tomasello, 1998; Murphy & Messer, 1977). We also know that parents gesture when they interact with their children and that the majority of parent gestures co-occur with speech (Acredolo & Goodwyn, 1988; Greenfield & Smith, 1976; Shatz, 1982). Most of these parent gestures reinforce the message conveyed in speech (Greenfield & Smith, 1976; Iverson, Capirci, Longobardi & Caselli, 1999; Özçalişkan & Goldin-Meadow, 2005), for example, pointing at a cat while saying ‘cat’. Thus, it is possible that parent gesture could facilitate the child’s comprehension, and eventual acquisition, of new words simply by providing nonverbal support for understanding speech. For example, Zukow-Goldring (1996) has shown that when infants in the one-word stage misunderstand caregiver messages, subsequent comprehension is greatly enhanced if parents direct their child’s attention to the perceptual aspects of the context using gesture (e.g., points) or actions (e.g., physically moving the child).

Indeed, studies examining parent-child interaction in naturalistic settings have found a relation between parent gesture use and child vocabulary size. Iverson et al. (1999) found a significant relation between the quantity of maternal pointing and child vocabulary use at 16 months. Similarly, Pan et al. (2005) found that 14- to 36-month-old children whose parents point more when interacting with them have faster vocabulary growth than children whose parents point less. However, in both studies, when parents’ verbal input was taken into account, the relation between parent gesture and child vocabulary disappeared. These findings suggest that parent gesture and parent speech may be measuring similar properties and that, once parent speech is taken into account, nothing is gained by adding parent gesture to analyses of child vocabulary growth.

However, there is other evidence that gesture does play a role in promoting child vocabulary growth. For example, in a training study, Acredolo & Goodwyn (1998) found that when parents were instructed to use symbolic gestures in addition to words, their children not only used more gestures themselves, but also showed greater vocabulary gains than children whose parents were encouraged to use only words or were not trained at all. Note that this effect of parent gesture on child vocabulary could be mediated by child gesture. For example, parent gesture might encourage the child to gesture and those gestures might then influence vocabulary development. Previous work has indeed found a link between parent gesture and child gesture: parents who gesture more have children who gesture more than children of parents who gesture less (Iverson et al., 1999; Namy, Acredolo & Goodwyn, 2000; Rowe, 2000). Of course we can only speculate as to the direction of these effects, as correlation does not imply causation – some parents may gesture more because their children gesture more, and not vice versa. In the current study, we explore whether early parent gesture relates to later child vocabulary, either directly or indirectly through its relation to early child gesture.

The present study

The current study examines gesture use in a heterogeneous sample of parents and children during the early stages of language learning, and explores whether early gesture use predicts vocabulary comprehension years later. We begin by describing, at the group level, the gestures that parents and children produce, with a focus on gesture measures previously found to relate to child vocabulary. We then determine whether child and parent gesture at the early stages of language learning predict child vocabulary during the preschool years, adjusting for child and parent speech and other environmental factors. Finally, if we find (as we will) that early child gesture does play a role in later child vocabulary, it becomes of interest to explore the factors that influence children’s early gesture use.

METHOD

Participants

Fifty-three English-speaking parent-child dyads were drawn from a larger sample of 63 families participating in a longitudinal study of language development in Chicago, Illinois. The larger sample was selected to be representative of English-speaking families in the greater Chicago area in terms of ethnicity and income, and all were raising their children as monolingual English speakers. Ten families from the larger sample were excluded from the analysis because the children were either diagnosed with a disorder that affected their language development, had primary caregivers who changed over the course of the study, or had two primary caregivers present and thus participated in triadic rather than dyadic interactions. Annual income levels varied from less than $15,000 to over $100,000, and five different ethnic groups were represented in the sample (see Table 1). On average, parents had 16 years of education (the equivalent of a college degree) at the start of the study; however, the range was large – from 10 years (less than high school degree) to 18 years (Master’s degree or more). The final sample of 53 contained 52 mothers and 1 father, 27 boys and 26 girls, and 31 first-borns and 22 later-borns.

Table 1.

Sample distribution based on ethnicity and yearly family income

| Ethnicity | Family income ($): 7,500 | 25,000 | 42,500 | 62,500 | 87,500 | 100,000 | Total no. families |
|---|---|---|---|---|---|---|---|
| White | 1 | 3 | 4 | 6 | 8 | 12 | 34 |
| Black | 3 | 3 | 2 | 1 | 0 | 0 | 9 |
| Hispanic | 0 | 1 | 3 | 0 | 0 | 0 | 4 |
| Asian | 0 | 1 | 0 | 2 | 0 | 0 | 3 |
| Mixed | 0 | 2 | 0 | 1 | 0 | 0 | 3 |
| Total | 4 | 10 | 9 | 10 | 8 | 12 | 53 |

Procedure and measures

Parent-child dyads were visited in the home every four months between child age 14 and 34 months, resulting in six visits covering a 20-month period.1 At each visit, dyads were videotaped for 90 minutes engaging in their ordinary activities. A typical videotaped session included toy play, book reading and a meal or snack time. At child age 42 months, the children were given a number of controlled assessments, including the Peabody Picture Vocabulary Test (PPVT III; Dunn & Dunn, 1997).

All speech and gestures in the videotaped sessions were transcribed. The unit of transcription was the utterance, defined as any sequence of words and/or gestures preceded and followed by a pause, a change in conversational turn, or a change in intonational pattern. Transcription reliability was established by having a second coder transcribe 20% of the videotapes; reliability was assessed at the utterance level and was achieved when the second coder agreed with the first on 95% of the transcription decisions.

All dictionary words, as well as onomatopoeic sounds (e.g., woof-woof) and evaluative sounds (e.g., woops, uh-oh), were counted as words. The number of word types (the number of different intelligible word roots) that the child or parent produced served as a measure of spoken vocabulary production at each session.

Children and parents produced gestures indicating objects, people or locations in the surrounding context (deictic gestures, e.g., point at dog), gestures depicting attributes or actions of concrete or abstract referents via hand or body movements (representational gestures, e.g., flapping arms at sides to represent a flying bird), and gestures having pre-established meanings associated with particular gesture forms (conventional gestures, e.g., shaking the head ‘no’). Other actions or hand movements that involved manipulating objects (e.g., turning the page of a book) or were part of a ritualized game (e.g., itsy-bitsy spider) were not considered gestures.

We calculated four separate gesture measures that could potentially relate to child vocabulary. (1) Gesture tokens, or the sheer number of gestures a parent or child produced with or without speech, served as our measure of gesture quantity. (2) Gesture types, or the number of different meanings each parent or child conveyed in gesture, served as our measure of gesture vocabulary. Following Goldin-Meadow & Mylander (1984) and Iverson & Goldin-Meadow (2005), we counted each deictic gesture that indicated a different object as a distinct gesture type. For example, if a child pointed to his dog 10 times during an interaction, those 10 points would count as 1 gesture type (dog) and 10 gesture tokens. We also counted each conventional or representational gesture associated with a different meaning as a distinct gesture type (e.g., head shake conveying the meaning no; arm flap conveying the meaning flying). (3) The proportion of speech utterances containing gesture is a measure that controls for the amount of speech produced. Speech utterances containing gesture were communicative acts in which both gesture and speech were produced with some temporal overlap (e.g., ‘see dog’ + point at dog; ‘cookie’ + eat gesture). Speech utterances were included only if the child produced a recognizable word; in other words, meaningless vocalizations were not counted as speech. We did not code the order or the precise temporal relation in which gesture and speech occurred in these utterances. The measure is the number of utterances containing both speech and gesture divided by the number of all speech utterances (with and without gesture).2 (4) The proportion of gesture utterances containing only gesture (and no speech) tells us how often children and parents use gesture on its own to communicate. Gesture-only utterances are utterances where the child or parent gestures but does not produce recognizable words (e.g., point at dog; hold up ball; shake head). The measure is the number of gesture-only utterances divided by the number of all gesture utterances (with and without speech).
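The four measures can be made concrete with a minimal sketch. The record layout below (an `Utterance` holding a list of recognizable words and a list of gesture meaning labels) is a hypothetical illustration, not the transcript format used in the study, and the example session is invented.

```python
from dataclasses import dataclass, field


@dataclass
class Utterance:
    """One coded utterance (hypothetical layout, not the study's format)."""
    words: list = field(default_factory=list)     # recognizable words only
    gestures: list = field(default_factory=list)  # one meaning label per gesture


def gesture_measures(utterances):
    """Return the four gesture measures for one speaker in one session."""
    all_gestures = [g for u in utterances for g in u.gestures]
    speech_utts = [u for u in utterances if u.words]
    gesture_utts = [u for u in utterances if u.gestures]
    speech_with_gesture = [u for u in speech_utts if u.gestures]
    gesture_only = [u for u in gesture_utts if not u.words]
    return {
        # (1) quantity: every gesture counts, with or without speech
        "gesture_tokens": len(all_gestures),
        # (2) vocabulary: distinct meanings conveyed in gesture
        "gesture_types": len(set(all_gestures)),
        # (3) share of speech utterances that also contain gesture
        #     (returning 0.0 for an empty denominator is an assumption)
        "prop_speech_with_gesture":
            len(speech_with_gesture) / len(speech_utts) if speech_utts else 0.0,
        # (4) share of gesture utterances that contain no speech
        "prop_gesture_only":
            len(gesture_only) / len(gesture_utts) if gesture_utts else 0.0,
    }


# Invented example session
session = [
    Utterance(gestures=["dog"]),                        # point at dog, no speech
    Utterance(gestures=["dog"]),                        # second point at dog
    Utterance(words=["see", "dog"], gestures=["dog"]),  # 'see dog' + point
    Utterance(words=["cookie"], gestures=["eat"]),      # 'cookie' + eat gesture
    Utterance(words=["more"]),                          # speech only
    Utterance(gestures=["no"]),                         # head shake
]
measures = gesture_measures(session)
```

In this invented session the three points at the dog contribute three gesture tokens but only one gesture type, mirroring the pointing example in the text.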

The children’s scores on the PPVT at 42 months served as the outcome measure of later vocabulary comprehension. The PPVT was chosen as the outcome measure because it is a widely used instrument with published norms, and is independent of the measures culled from the early parent-child interactions.

RESULTS

Child and parent gesture use

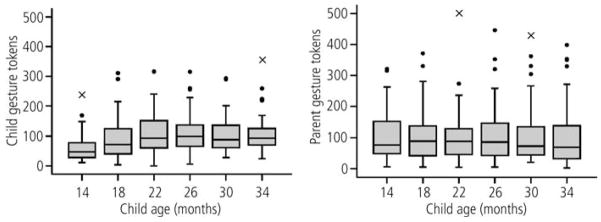

To obtain a sense of how the children and parents used gesture when interacting with one another, we constructed box-plots for each of the four gesture measures at each time point (see Figs 1–4). The boxes in these plots present the median and inter-quartile ranges, the tails represent the 5th and 95th percentiles, and outliers are represented by individual markers. The two panels in Fig. 1 show gesture tokens for children and parents. The children produced an average of 58 gesture tokens during the 90-minute period (SD = 44) at 14 months. But by 22 months, they were producing over 100 gesture tokens (M = 107, SD = 69). Between 22 and 34 months of age, the children remained stable in their number of gesture tokens, with average values between 100 and 115. Interestingly, these later child values mirrored the parents’ values at all time points. Parents produced, on average, between 100 and 115 gesture tokens during the 90-minute period at all six interactions. Thus, by 22 months, the children were producing as many gesture tokens as their parents. There were, however, wide individual differences for both children and parents, as is evident from the relatively large boxes at each time point.

Figure 1.

Distribution of child (left) and parent (right) gesture tokens (note: in Figs 1–4, the boxes present the median values and inter-quartile range; the tails represent the 5th and 95th percentiles; outliers are noted by circles; extreme values are noted by crosses)

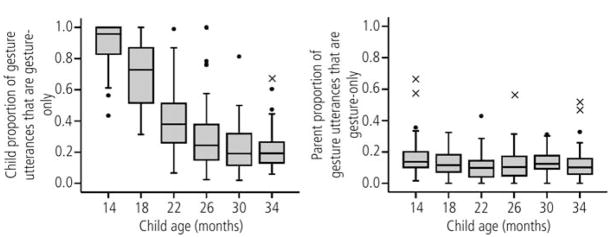

Figure 4.

Distribution of child (left) and parent (right) proportion of gesture utterances that are gesture-only (for symbols, see Fig. 1 caption)

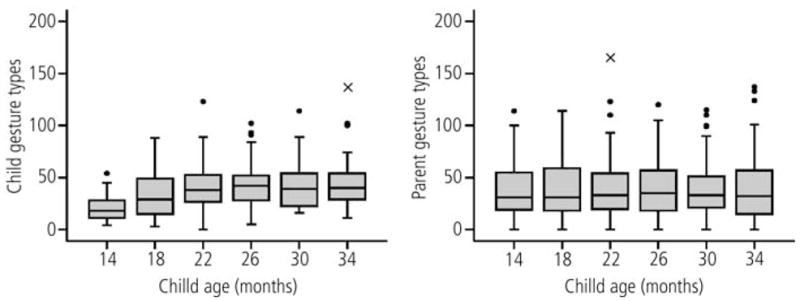

We see a very similar picture in Fig. 2 for gesture types. Children produced an average of 21 different gesture types at 14 months (SD = 12). By 22 months, they were producing an average of 40 gesture types, and between 22 and 34 months, their production remained stable at 40–45 gesture types. Parents produced, on average, 38–42 gesture types (SD range = 26–35) across the six sessions. Thus, by 22 months, the children were conveying the same number of different meanings in gesture as their parents. We examined the overlap between the gesture types and word types produced by each child and by each parent to determine how redundant gesture was with speech. We found that, for children, the mean proportion of gesture types redundant with spoken word types increased from 6% at 14 months to 25% at 34 months; for parents, the mean proportion of gesture types redundant with spoken word types remained relatively stable over time at around 40%. Thus, at the earliest sessions, the children expressed many meanings in gesture that they did not express in speech. But by 34 months, they were approaching the level of redundancy found in their parents’ gesture and speech vocabularies.

Figure 2.

Distribution of child (left) and parent (right) gesture types (for symbols, see Fig. 1 caption)

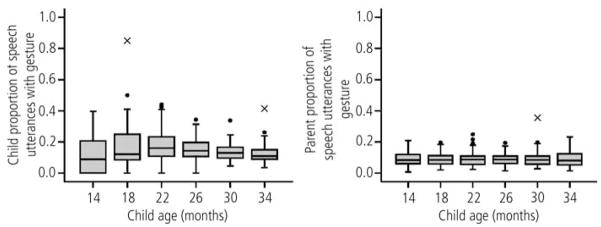

Figure 3 presents plots of the proportion of speech utterances accompanied by gesture. At 14 months, gestures accompanied approximately 11% (SD = 12) of the children’s spoken utterances. This value remained relatively constant across the six sessions, although there was more variability during the earlier than the later months. Parents were quite stable in their proportion of speech utterances accompanied by gesture, with average values of 8.6–9.4% (SD range = 4.0–5.6) across the six sessions. Thus, at 34 months, children were still producing gestures with a greater proportion of their spoken utterances than were their parents.

Figure 3.

Distribution of child (left) and parent (right) proportion of speech utterances accompanied by gesture (for symbols, see Fig. 1 caption)

The most pronounced change in the children’s gesture use was in the proportion of gesture utterances they produced without any speech at all (see Fig. 4; see also Butcher & Goldin-Meadow, 2000). At 14 months, 89% (SD = 14) of the children’s gesture utterances contained only gesture. By 34 months, only 22% (SD = 13) of the children’s gesture utterances were gesture-only utterances. There was, however, much variation in the measure at every age and particularly at 22 and 26 months. Thus, while as a group children’s proportions dropped dramatically between 14 and 26 months, some children dropped at a faster rate than others. Parents, on the other hand, were stable in the proportion of gesture utterances they produced without speech, with averages of 10–17% (SD range = 7–14) across the six sessions. It is interesting to note that the parents used gesture alone most often (17%) at child age 14 months, when the majority of their children’s gestures were also used without speech.

Relation between early gesture and later vocabulary skill

We now ask whether individual differences in gesture use relate to children’s later vocabulary skill. To do so, we first explore whether variation in children’s early gesture use at 14 months is related to their later vocabulary comprehension, as measured by their PPVT scores at 42 months, controlling for the child’s early spoken vocabulary at 14 months. We then examine whether parent speech and/or parent gesture adds predictive value to the analysis.

Children varied widely in their normed PPVT scores at 42 months of age, ranging from a minimum score of 63 to a maximum of 137 (M = 106, SD = 17).3 PPVT scores were correlated with children’s word types at 14 months (r = 0.34, p < 0.05), indicating that children with larger spoken vocabularies at 14 months had larger receptive vocabularies at 42 months than children with smaller spoken vocabularies at 14 months. As a result, when exploring the effect that gesture use at 14 months has on later vocabulary development, it is essential that we control for the number of different words (word types) children produced at 14 months. Partial correlations controlling for children’s word types at 14 months indicate that three of the four child gesture measures at 14 months are related to PPVT scores at 42 months. Specifically, PPVT scores at 42 months are positively related to child gesture tokens (r = 0.41, p < 0.01), child gesture types (r = 0.52, p < 0.001), and child proportion of speech accompanied by gesture (r = 0.38, p < 0.01) at 14 months, controlling for children’s productive vocabularies at 14 months. The proportion of gesture utterances containing only gesture and no speech is negatively related to PPVT scores, controlling for word types, but the relationship does not reach significance. In sum, even controlling for early spoken vocabulary (word types), three of the early child gesture measures relate to later spoken vocabulary, with gesture types showing the strongest relation to PPVT scores.
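For readers less familiar with partial correlations, the standard first-order formula can be sketched as follows. The input values in the example are hypothetical placeholders chosen for illustration; they are not correlations reported in this study.

```python
import math


def partial_corr(r_xy, r_xz, r_yz):
    """First-order partial correlation between x and y, controlling for z.

    r_xy, r_xz and r_yz are the zero-order Pearson correlations.
    """
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# Hypothetical zero-order correlations, for illustration only:
# x = an early gesture measure, y = a later outcome, z = early word types
r_partial = partial_corr(r_xy=0.50, r_xz=0.40, r_yz=0.30)
```

When the control variable is unrelated to both x and y, the partial correlation reduces to the zero-order correlation.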

We conducted follow-up multiple regression analyses to understand better the independent and combined effects that child word types and child gesture types at 14 months have on PPVT scores at 42 months (see Table 2). Model 1 shows the relation between child word types at 14 months and PPVT scores at 42 months. Under this model, the parameter estimate associated with child word types (β = 0.44) tells us that every additional word type produced at 14 months is positively associated with a 0.44-point difference on the PPVT at 42 months. However, the number of different words that children produced at 14 months (word types) explains only 12% of the variance in PPVT scores at 42 months (p < 0.05). Thus, there is variance left over to explain with other variables.

Table 2.

A series of regression models predicting child 42-month PPVT scores based on 14-month speech and gesture measures and background characteristics (N = 52)

| Vocabulary comprehension parameter estimate (SE) | Model 1 | Model 2 | Model 3 | Model 4 | Model 5 | Model 6 |
|---|---|---|---|---|---|---|
| Intercept | 100.26*** (3.16) | 89.35*** (3.97) | 78.13*** (5.94) | 87.19*** (4.17) | 73.03*** (6.02) | 79.20*** (4.92) |
| Child word types (14 months) | 0.44* (0.17) | 0.01 (0.19) | 0.12 (0.18) | 0.05 (0.19) | 0.12 (0.18) | |
| Child gesture types (14 months) | | 0.80*** (0.21) | 0.62** (0.21) | 0.64** (0.23) | 0.63* (0.20) | 0.78*** (0.15) |
| Parent word types (14 months) | | | 0.04* (0.02) | | 0.03† (0.02) | |
| Parent gesture types (14 months) | | | | 0.13 (0.09) | | |
| Family income (US$, in thousands) | | | | | 0.15* (0.06) | 0.18** (0.06) |
| R² statistic (%) | 11.7 | 32.4 | 39.9 | 35.5 | 46.7 | 43.3 |

† p < 0.10; * p < 0.05; ** p < 0.01; *** p < 0.001

In Model 2, we add in the number of child gesture types produced at 14 months. We chose gesture types to use as our gesture predictor since it is the measure with the strongest controlled relation to PPVT. Interestingly, when child gesture types is included in Model 2, the effect of child word types at 14 months reduces to non-significance and child gesture types is a significant positive predictor of PPVT scores (p < 0.001). In other words, the variance in PPVT scores explained by child word types can also be explained by child gesture types, and child gesture types is more strongly related to PPVT scores than child word types. In this model, controlling for child word types, the parameter estimate associated with child gesture types (β = 0.80) indicates that every additional meaning a child conveys in gesture at 14 months is positively associated with a 0.80-point difference on the PPVT at 42 months. As a whole, Model 2 indicates that child word types (ns) and gesture types (p < 0.001) combine to explain approximately 32% of the variance in PPVT scores, with the majority of the variance (27%) explained by child gesture types.

Our next step was to examine whether, controlling for child speech and child gesture, there is added value in including parent speech and gesture as predictors of child vocabulary. We first examined correlations between both parent gesture and speech at child age 14 months and child PPVT scores at 42 months. Parent word types is related to subsequent child PPVT scores (r = 0.41, p < 0.01), as are all four of the parent gesture measures; of the four measures, parent gesture types shows the strongest relation to child PPVT scores (r = 0.42, p < 0.01). In addition, as one might expect, parents who produced more word types produced more gesture types (r = 0.60, p < 0.001), and thus were communicating more meanings or vocabulary items in both modalities.

We continued to build on the multiple regression analyses presented above by including parent word types (Model 3) and parent gesture types (Model 4) at 14 months as predictors of child PPVT scores at 42 months (Table 2), controlling for child word types and child gesture types at 14 months. In Model 3, parent word types is a significant predictor of child PPVT scores (p < 0.05), and child gesture types remains a significant predictor of child PPVT scores (p < 0.01). Under Model 3, parent word types explains an additional 8% of the variance and, taken together, child word types, child gesture types and parent word types explain approximately 40% of the variance in child PPVT scores at 42 months. In Model 4, parent gesture types is not a significant predictor of child PPVT scores once child speech and child gesture are controlled.

As a final step, we explored whether adding background characteristics to Model 3 improves our ability to predict child PPVT scores. The background characteristics of interest include parent education, family income, parent age, child birth order and child gender.4 We found in a series of regression models (not shown) that only two of the background measures were significant predictors of PPVT scores when each was added to Model 3. Specifically, parent education is a positive predictor and explains an additional 5% of the variance, and family income is a positive predictor and explains an additional 6.8% of the variance, controlling for child word types, child gesture types and parent word types. In each case, including the background variable (i.e., education or income) reduced the effect of parent word types to non-significance. Importantly, however, the effect of child gesture on PPVT scores remained significant. We could not fit a model including both education and income as they were collinear predictors whose effects cancelled each other out. The model including family income was the best-fitting model with background characteristics and is presented as Model 5 in Table 2. This model contains child word types (ns), child gesture types (p < 0.05), parent word types (p < 0.10), and family income (p < 0.05) and explains 46.7% of the variance in child PPVT scores at 42 months. When added to Model 5, none of the other background variables – parent education, parent age, child gender or child birth order – is a significant predictor of child PPVT scores above and beyond child word types, child gesture types and parent word types.
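The "additional variance explained" figures for parent word types and family income follow directly from the R² row of Table 2, as the difference between nested models. The short sketch below simply reproduces that arithmetic; the dictionary and function names are illustrative.

```python
# R-squared values (%) as reported in Table 2
r_squared = {"Model 2": 32.4, "Model 3": 39.9, "Model 5": 46.7}


def delta_r2(smaller, larger):
    """Additional variance (%) explained by the larger of two nested models."""
    return round(r_squared[larger] - r_squared[smaller], 1)


# Model 3 adds parent word types to Model 2
added_by_parent_words = delta_r2("Model 2", "Model 3")
# Model 5 adds family income to Model 3
added_by_income = delta_r2("Model 3", "Model 5")
```

The first difference (7.5 points) corresponds to the roughly 8% attributed to parent word types, and the second (6.8 points) matches the figure reported for family income.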

Finally, Model 6 was the most parsimonious model we could fit to the data, as the non-significant effects of child word types and parent word types have been removed. In this model, we see that child gesture types (p < 0.001) and family income (p < 0.01) combine to explain approximately 43% of the variance in child PPVT scores at 42 months.5 The parameter estimate associated with child gesture types (β = 0.78) tells us that, controlling for family income, every additional meaning that a child conveys in gesture at 14 months translates into an additional 0.78 points on the PPVT at 42 months. Similarly, the parameter estimate associated with family income (β = 0.18) tells us that, controlling for child gesture types at 14 months, every additional $1000 of yearly family income is positively associated with a 0.18-point difference on the PPVT at 42 months.
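As a reading aid, the Model 6 estimates from Table 2 can be written as a prediction function. This is a sketch of how the fitted equation is applied, not the analysis code used in the study; the function name and the example child are hypothetical.

```python
def predict_ppvt_model6(gesture_types_14m, income_thousands):
    """Predicted 42-month PPVT score using the Model 6 estimates in Table 2."""
    intercept = 79.20
    b_gesture = 0.78  # points per additional gesture type at 14 months
    b_income = 0.18   # points per additional $1,000 of yearly family income
    return intercept + b_gesture * gesture_types_14m + b_income * income_thousands


# Hypothetical child: 21 gesture types at 14 months, $42,500 yearly family income
predicted = predict_ppvt_model6(21, 42.5)

# Holding income fixed, one more gesture type shifts the prediction by 0.78 points
one_more_type = predict_ppvt_model6(22, 42.5) - predicted
```

For this hypothetical child the predicted score is about 103.2. Because the estimates are associations in observational data, the function describes expected differences between children rather than the effect of changing any one child's gesture use.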

What predicts gesture?

We now know that early child gesture predicts later vocabulary. Our next question is: what predicts early child gesture? We began to address this question by examining whether child speech, parent speech, parent gesture, and background characteristics relate to child gesture types at 14 months. As shown in Table 3, child gesture types at 14 months is positively and significantly related to child word types, parent gesture types and parent education. In follow-up multiple regression analyses (not shown), we found that child word types at 14 months explains approximately 35% of the variance in child gesture types at 14 months (p < 0.001). Thus, children who use more varied vocabulary in speech use more varied vocabulary in gesture, and vice versa. Furthermore, controlling for child word types at 14 months, parent gesture types at 14 months is also significantly related to child gesture types at 14 months (p < 0.001), explaining an additional 14% of the variance. Taken together, the child’s productive speech vocabulary, coupled with the parent’s gesture vocabulary, explains half of the variance in the child’s gesture vocabulary at 14 months. Moreover, none of the other parent or background characteristics (including parent speech) predicts child gesture types, after child word types and parent gesture types have been controlled.
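The 35% figure is consistent with squaring the zero-order correlation in Table 3, since a regression with a single predictor has an R² equal to the squared Pearson correlation. The arithmetic below is only a reading aid, and the variable names are illustrative.

```python
# Table 3: correlation between child word types and child gesture types
r_child_words = 0.59

# Single-predictor regression: R-squared equals r squared (expressed in %)
r2_child_words = r_child_words ** 2 * 100

# Additional variance (%) attributed to parent gesture types in the text
added_by_parent_gesture = 14.0

total_explained = r2_child_words + added_by_parent_gesture
```

The total comes to just under 49%, which is the "half of the variance" referred to above.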

Table 3.

Zero-order correlations (Pearson’s r) between child gesture types at 14 months and child word types, parent word types and parent gesture types at 14 months, and background characteristics (N = 53)

| | Child gesture types (14 months) |
|---|---|
| Child word types (14 months) | 0.59*** |
| Parent word types (14 months) | 0.27† |
| Parent gesture types (14 months) | 0.48*** |
| Parent education | 0.38** |
| Family income | 0.08 |
| Parent age | 0.24† |
| Child gender (male) | −0.04 |
| Child birth order (first-born) | 0.07 |

† p < 0.10; ** p < 0.01; *** p < 0.001

These results underscore two important findings. First, parent gesture relates to child gesture, and this relationship holds even when parent speech is taken into account. Second, the significant relation between parent education and child gesture shown in Table 3 is no longer significant once parent gesture and child word types are taken into account. This finding suggests that the effect of education on child gesture may be mediated by parent gesture types and/or child word types. Indeed, parent gesture types relates to parent education (r = 0.48, p < 0.001) although child word types at 14 months does not.
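The idea that a zero-order relation can shrink once a third variable is controlled can be illustrated with the standard first-order partial correlation formula. The Python sketch below uses three correlations reported in the text: parent education with child gesture types (0.38, Table 3), parent gesture types with child gesture types (0.48, Table 3), and parent gesture types with parent education (0.48, reported above). It controls for parent gesture types only, whereas the analyses reported here also controlled for child word types, so this is a simplified illustration and not the authors' analysis. The function name is our own.

```python
import math


def partial_correlation(r_xy, r_xz, r_yz):
    """Correlation between x and y after partialling out z."""
    denominator = math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))
    if denominator == 0:
        raise ValueError("z must not be perfectly correlated with x or y")
    return (r_xy - r_xz * r_yz) / denominator


# x = parent education, y = child gesture types, z = parent gesture types
R_EDUCATION_CHILD_GESTURE = 0.38           # Table 3
R_EDUCATION_PARENT_GESTURE = 0.48          # reported in the text
R_PARENT_GESTURE_CHILD_GESTURE = 0.48      # Table 3

partial = partial_correlation(R_EDUCATION_CHILD_GESTURE,
                              R_EDUCATION_PARENT_GESTURE,
                              R_PARENT_GESTURE_CHILD_GESTURE)

# Zero-order r is 0.38; the partial correlation is roughly 0.19.
print(round(partial, 2))
```

The drop from 0.38 to roughly 0.19 is in the direction one would expect if part of the relation between parent education and child gesture runs through parent gesture.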

In sum, the results are consistent with the following scenario: (1) parents who produce more gesture types with their 14-month-old children have children who produce more gesture types at that time, and vice versa; (2) the children who produce more gesture meanings at 14 months have larger verbal vocabularies (as measured by the PPVT) at 42 months than children who produce fewer gesture types at 14 months, controlling for early spoken vocabulary, parent input and family income.

DISCUSSION

We have found that, during the 20-month period between 14 and 34 months, children come to use gesture in essentially the same ways as their parents do. Indeed, we found that one of the strongest correlates of child gesture use at 14 months appears to be parent gesture use at 14 months. In turn, child gesture use at 14 months is a significant predictor of child spoken vocabulary two years later. And the relation between early child gesture and later spoken vocabulary growth is robust; it holds even after the child’s early spoken vocabulary and background factors (e.g., family income) are controlled. These findings underscore the importance of gesture – both the child’s and the parent’s – in children’s vocabulary development. We begin by discussing the gestures that parents and children produce during the early stages of language-learning, with a focus on variability across individuals. We then turn to gesture’s role in predicting child vocabulary development.

Parent and child gesture: individual variability

We found that parents were stable in the gestures they used with their children during the period from 14 to 34 months. The children were not stable in their gesture use and, as a group, their gestures became more and more like their parents’ during this time period. Indeed, by 22 months the children were producing the same number of gesture tokens and the same number of gesture types as their parents. The children had not yet achieved parental levels for the other two gesture measures – the proportion of speech accompanied by gesture, and the proportion of gestures produced without speech – but, at the final observation session at 34 months, they were approaching their parents in these measures as well.

There were, however, wide individual differences in how the parents used gesture: some used as many as 114 gesture types per 90 minutes, some as few as 0. Similarly, the children also varied in the number of gestures they used: from 54 types to 4 at 14 months. Interestingly, parental differences in gesture use were systematically related to child differences in gesture use: children whose parents gestured a great deal produced more of their own gestures than children whose parents gestured less. Note, however, that although early parent gesture was related to early child gesture, it did not predict later child spoken vocabulary.⁶ To the extent that parent gesture had an influence on later child vocabulary, it seemed to be through its relation to early child gesture.

The individual differences in the rate at which the children produced their gestures related not only to their parents’ gesture use, but also to their own word use. Children who produced many different word types also produced many different types of gestures. Note that this positive relation between gesture and word vocabulary could have been otherwise. Particularly at the earliest stage of language-learning when children have relatively small spoken vocabularies, a child might use gesture to compensate, developing a relatively large gestural vocabulary. However, our data on associations between measures provide no support for this hypothesis: children with larger vocabularies in gesture at 14 months also had larger vocabularies in speech at 14 months and years later at 42 months. (Note that the compensation hypothesis might be an accurate description of children who are having difficulty learning language, and we are currently investigating this hypothesis in a group of children with unilateral focal brain injury.)

How does child gesture play a role in vocabulary development?

We found that child gesture types at 14 months is one of the most reliable predictors of child vocabulary size years later (see Table 2). Why is it that children who convey many different meanings in gesture during the early stages of language-learning have larger vocabularies later on, compared with children who convey fewer meanings in gesture? One possibility is that child gesture reflects skills responsible for vocabulary learning – in other words, the gesture signals that a child is, or is not, ready to build his or her vocabulary, but plays no role in the actual building. Alternatively, child gesture could be part of the learning mechanism itself. Child gesture has the potential to play a causal role in vocabulary learning in at least two non-mutually exclusive ways.

First, parents may respond to children’s gestures in ways that facilitate word learning. For example, a parent who says the word ‘dog’ in response to the child’s point at a dog is providing a verbal label for an object at just the moment that the child is clearly interested in communicating about the object. Indeed, Goldin-Meadow, Goodrich, Sauer & Iverson (2007) found that when mothers translated their child’s gestures into words, those words tended to become part of the child’s spoken vocabulary several months later. In addition, Masur (1982) found that the number of times a mother provided word labels in response to her child’s pointing gestures predicted the number of object names in the child’s lexicon.

Second, the act of gesturing by the child may itself play a role in word-learning. Iverson & Goldin-Meadow’s (2005) finding that the specific lexical items in children’s gesture vocabulary show up in their verbal vocabularies three months later suggests that gesture may be providing children with an early way for meanings to enter their communicative repertoires. They conclude that gesture may give children an opportunity to practice these meanings, laying the foundation for their appearance in speech (Iverson & Goldin-Meadow, 2005). Indeed, in the current study we found that early in development most of the children’s gestures conveyed meanings that they did not yet convey in speech. In addition, there is evidence that school-aged children who gesture on a maths task in response to the gestures modeled by their instructor are more likely to profit from instruction on the task than children who do not gesture (Cook & Goldin-Meadow, 2006). Importantly, this effect holds even when gesture is experimentally manipulated: children are more likely to benefit from instruction in the maths task when explicitly told to gesture than when told not to gesture (Broaders, Cook, Mitchell & Goldin-Meadow, 2007; Cook, Mitchell & Goldin-Meadow, 2008). We did, in fact, find in our study that children whose parents gestured frequently when they were 14 months old produced more of their own gestures and had larger gesture vocabularies than children whose parents gestured less often. Thus, young language-learning children may see their parents gesturing and may try doing it themselves, which may, in turn, facilitate word-learning (cf. Goldin-Meadow & Wagner, 2005). Of course, correlations do not imply causality. In future work, we hope to conduct experimental manipulations of the sort done with school-aged children to explore the causal relations between parent gesture, child gesture and vocabulary development.

One of the most striking aspects of our findings is that a child’s gesture vocabulary at 14 months is a strong predictor of vocabulary skill two years later. Thus, early gesture does more than predict early success in word learning – it signals that the child will be at an advantage for years to come. This finding is an important one for those interested in identifying children with language delay as early as possible. Early deficits in gesture use could prove to be a useful warning signal. In addition, early intervention programs may find it useful to target the gestures that parents use with their toddlers (along with their speech) since our results suggest that parent gesture is indirectly related (through child gesture) to vocabulary development.

In sum, during the initial stages of language learning, the gestures that children produce in everyday interactions with their parents provide an early window into later vocabulary development, over and above the words that they use. Furthermore, children whose parents gesture a great deal gesture more themselves and, in turn, develop larger verbal vocabularies more quickly than children whose parents gesture less often. Our findings suggest that if our goal is to predict children’s vocabulary development, we need to pay attention not only to what children and parents say with their mouths at the beginning stages of language-learning, but also to what they say with their hands.

Acknowledgments

We thank Kristi Schoendube and Jason Voigt for administrative and technical support, and Karyn Brasky, Laura Chang, Elaine Croft, Kristin Duboc, Jennifer Griffin, Sarah Gripshover, Kelsey Harden, Lauren King, Carrie Meanwell, Erica Mellum, Molly Nikolas, Jana Oberholtzer, Calla Rousch, Lillia Rissman, Becky Seibel, Meredith Simone, Kevin Uttich and Julie Wallman for help in data collection and transcription. We are grateful to the participating children and families. The research was supported by grants from the National Institute of Child Health and Human Development: P01 HD40605 to Susan Goldin-Meadow and F32 HD045099 to Meredith Rowe.

Footnotes

1. As in most longitudinal studies, families occasionally missed a data-collection period. Thus, sample sizes for each visit varied and are as follows for the six visits: 53, 53, 51, 50, 52, 53.

2. Five of the children did not produce any words at 14 months; the proportion of speech utterances accompanied by gesture was not calculated for these children at that one time-point.

3. One child did not complete the PPVT at 42 months, resulting in a sample of 52 for this analysis.

4. Ethnicity was not investigated as its own measure, as the distribution was too skewed for statistical analyses (e.g., over half the sample was White). However, ethnicity was strongly related to both income (see Table 1) and education in this sample, and thus the income measure encompasses much of the ethnicity distribution.

5. There was no significant interaction between income and child gesture. Residuals of Model 6 were examined to ensure that no assumptions were violated.

6. This finding may be surprising given that the way in which mothers move their hands as they speak has been found to influence early child word-learning. For example, Gogate and colleagues (1998, 2000, 2006) have found that mothers tend to move objects in synchrony with the labels they produce for those objects and that this temporal synchrony facilitates word-mapping abilities in pre-lexical children (5–8 months). The parents in our study were likely to have synchronized their gestures with the words they produced (indeed, gesture is routinely synchronized with speech in all speakers; McNeill, 1992). We might therefore have expected this synchrony to have had an effect on child word learning. Note, however, that the children in our study were well beyond the pre-lexical stage and thus may not have needed this type of synchrony to learn words. Moreover, the action-sound synchrony described by Gogate may be quite different from the subtle temporal synchronization found between gesture and speech (Kendon, 1980; McNeill, 1992).

Contributor Information

Meredith L. Rowe, University of Chicago

Şeyda Özçalişkan, University of Chicago

Susan Goldin-Meadow, University of Chicago

References

- Acredolo LP, Goodwyn SW. Symbolic gesturing in normal infants. Child Development. 1988;59:450–466.

- Bates E. Language and context. Orlando: Academic Press; 1976.

- Bates E, Benigni L, Bretherton I, Camaioni L, Volterra V. The emergence of symbols: Cognition and communication in infancy. New York: Academic Press; 1979.

- Broaders SC, Cook SW, Mitchell Z, Goldin-Meadow S. Making children gesture brings out implicit knowledge and leads to learning. Journal of Experimental Psychology: General. 2007;136:539–550. doi: 10.1037/0096-3445.136.4.539.

- Butcher C, Goldin-Meadow S. Gesture and the transition from one- to two-word speech: When hand and mouth come together. In: McNeill D, editor. Language and gesture. New York: Cambridge University Press; 2000. pp. 235–257.

- Butterworth G, Grover L. The origins of referential communication in human infancy. In: Weiskrantz L, editor. Thought without language. Oxford: Clarendon Press; 1988. pp. 5–24.

- Carpenter M, Nagell K, Tomasello M. Social cognition, joint attention, and communicative competence from 9 to 15 months of age. Monographs of the Society for Research in Child Development. 1998;63:5. Serial No. 255.

- Cook SW, Goldin-Meadow S. The role of gesture in learning: Do children use their hands to change their minds? Journal of Cognition and Development. 2006;7:211–232.

- Cook SW, Mitchell Z, Goldin-Meadow S. Gesturing makes learning last. Cognition. 2008;106:1047–1058. doi: 10.1016/j.cognition.2007.04.010.

- Dunn LM, Dunn LM. Peabody Picture Vocabulary Test. 3rd ed. Circle Pines, MN: American Guidance Service; 1997.

- Fenson L, Dale P, Reznick JS, Bates E, Thal D, Pethick S. Variability in early communicative development. Monographs of the Society for Research in Child Development. 1994;59:5. Serial No. 242.

- Gogate LJ, Bahrick LE. Intersensory redundancy facilitates learning of arbitrary relations between vowel-sounds and objects in 7-month-olds. Journal of Experimental Child Psychology. 1998;69(2):133–149. doi: 10.1006/jecp.1998.2438.

- Gogate LJ, Bahrick LE, Watson JD. A study of multimodal motherese: The role of temporal synchrony between verbal labels and gestures. Child Development. 2000;71(4):878–894. doi: 10.1111/1467-8624.00197.

- Gogate LJ, Bolzani LH, Betancourt EA. Attention to maternal multimodal naming by 6- to 8-month old infants and learning of word-object relations. Infancy. 2006;9(3):259–288. doi: 10.1207/s15327078in0903_1.

- Goldin-Meadow S, Goodrich W, Sauer E, Iverson J. Young children use their hands to tell their mothers what to say. Developmental Science. 2007;10(6):778–785. doi: 10.1111/j.1467-7687.2007.00636.x.

- Goldin-Meadow S, Mylander C. Gestural communication in deaf children: The effects and non-effects of parental input on early language development. Monographs of the Society for Research in Child Development. 1984;49:3. Serial No. 207.

- Goldin-Meadow S, Wagner SM. How our hands help us learn. Trends in Cognitive Sciences. 2005;9:230–241. doi: 10.1016/j.tics.2005.03.006.

- Greenfield PM, Smith JH. The structure of communication in early language development. New York: Academic Press; 1976.

- Hart B, Risley TR. Meaningful differences in the everyday experiences of young American children. Baltimore, MD: Paul H. Brookes; 1995.

- Hoff E. The specificity of environmental influence: Socioeconomic status affects early vocabulary development via maternal speech. Child Development. 2003a;74:1368–1378. doi: 10.1111/1467-8624.00612.

- Hoff E. Causes and consequences of SES-related differences in parent-to-child speech. In: Bornstein MH, editor. Socioeconomic status, parenting, and child development. Mahwah, NJ: Erlbaum; 2003b. pp. 147–160.

- Huttenlocher J, Haight W, Bryk A, Seltzer M, Lyons T. Early vocabulary growth: Relation to language input and gender. Developmental Psychology. 1991;27:236–248.

- Iverson JM, Capirci O, Longobardi E, Caselli MC. Gesturing in mother-child interactions. Cognitive Development. 1999;14:57–75.

- Iverson JM, Goldin-Meadow S. Gesture paves the way for language development. Psychological Science. 2005;16:368–371. doi: 10.1111/j.0956-7976.2005.01542.x.

- Kendon A. Gesticulation and speech: Two aspects of the process of utterance. In: Key MR, editor. Relationship of verbal and nonverbal communication. The Hague: Mouton; 1980. pp. 207–228.

- Masur EF. Mothers’ responses to infant’s object-related gestures: Influence on lexical development. Journal of Child Language. 1982;9:23–30. doi: 10.1017/s0305000900003585.

- Masur EF. Gestural development, dual-directional signaling, and the transition to words. In: Volterra V, Erting CJ, editors. From gesture to language in hearing and deaf children. New York: Springer-Verlag; 1990. pp. 18–30.

- McNeill D. Hand in mind: What gestures reveal about thought. Chicago: University of Chicago Press; 1992.

- Murphy CM, Messer DJ. Mothers, infants and pointing: A study of a gesture. In: Schaffer HR, editor. Studies in mother-infant interaction. London: Academic Press; 1977. pp. 325–354.

- Namy LL, Acredolo L, Goodwyn S. Verbal labels and gestural routines in parental communication with young children. Journal of Nonverbal Behavior. 2000;24:63–79.

- Özçalişkan S, Goldin-Meadow S. Do parents lead their children by the hand? Journal of Child Language. 2005;32:481–505. doi: 10.1017/s0305000905007002.

- Pan BA, Rowe ML, Singer JD, Snow CE. Maternal correlates of growth in toddler vocabulary production in low-income families. Child Development. 2005;76(4):763–782. doi: 10.1111/j.1467-8624.2005.00876.x.

- Rowe ML. Pointing and talk by low-income mothers and their 14-month-old children. First Language. 2000;20:305–330.

- Shatz M. On mechanisms of language acquisition: Can features of the communicative environment account for development? In: Wanner E, Gleitman L, editors. Language acquisition: The state of the art. New York: Cambridge University Press; 1982. pp. 102–127.

- Zukow-Goldring P. Sensitive caregiving fosters the comprehension of speech: When gestures speak louder than words. Early Development and Parenting. 1996;5(4):195–211.