Abstract

Classification image and other similar noise-driven linear methods have found increasingly wider applications in revealing psychophysical receptive field structures or perceptual templates. These techniques are relatively easy to deploy, and the results are simple to interpret. However, being a linear technique, the utility of the classification-image method is believed to be limited. Uncertainty about the target stimuli on the part of an observer will result in a classification image that is the superposition of all possible templates for all the possible signals. In the context of a well-established uncertainty model, which pools the outputs of a large set of linear frontends with a max operator, we show analytically, in simulations, and with human experiments that the effect of intrinsic uncertainty can be limited or even eliminated by presenting a signal at a relatively high contrast in a classification-image experiment. We further argue that the subimages from different stimulus-response categories should not be combined, as is conventionally done. We show that when the signal contrast is high, the subimages from the error trials contain a clear high-contrast image that is negatively correlated with the perceptual template associated with the presented signal, relatively unaffected by uncertainty. The subimages also contain a “haze” that is of a much lower contrast and is positively correlated with the superposition of all the templates associated with the erroneous response. In the case of spatial uncertainty, we show that the spatial extent of the uncertainty can be estimated from the classification subimages. We link intrinsic uncertainty to invariance and suggest that this signal-clamped classification-image method will find general applications in uncovering the underlying representations of high-level neural and psychophysical mechanisms.

Keywords: classification image, reverse correlation, spatial uncertainty, invariance, nonlinearity

Introduction

If a system responds linearly to its input by correlating it with a single template (by taking the dot product), then this template can be recovered by presenting the system with samples of white noise and averaging those noise samples that led to the same response. Since the 1980s, this simple form of reverse correlation, also known as spike-triggered averaging, has been routinely applied to map the receptive fields of neurons in the early stages of the sensory systems (e.g., de Boer & de Jongh, 1978; de Boer & Kuyper, 1968; Jones & Palmer, 1987). When applied to visual psychophysics, where the stimulus noise is in the form of an image (or a movie), the technique is often referred to as the “classification-image method” (Ahumada, 2002; Beard & Ahumada, 1999), which owes its roots to the early work of Ahumada and colleagues in auditory psychophysics (Ahumada & Lovell, 1971; Ahumada & Marken, 1975).

In recent years, classification image and similar techniques have been applied to study vernier acuity (Beard & Ahumada, 1999), stereopsis (Neri, Parker, & Blakemore, 1999), illusory-contour perception (Gold, Murray, Bennett, & Sekuler, 2000), identification of facial expression (Adolphs et al., 2005; Gosselin & Schyns, 2003), and surround effect on contrast discrimination (Shimozaki, Eckstein, & Abbey, 2005), to name a few. We can divide these applications into two broad categories. In one category, the primary goal of the investigation was to discern from where in the stimulus an observer extracts information. For example, Gold et al. (2000) found that when observers were asked to judge whether the shape of an illusory square was “thin” or “fat,” they often based their decision on the left and right illusory edges, while ignoring the top and bottom ones, which are equally informative. Adolphs et al.’s (2005) work, showing that a patient’s failure to use information in the eye region of faces impaired the perception of fear, is another example of this category. In the second category, the main purpose of using the classification-image method was to infer the “perceptual template” used by an observer to perform a given task. For example, Beard and Ahumada (1999) showed with classification images that vernier discrimination was mediated by an orientation-tuned mechanism, as had been previously suggested.

The method of classification image can recover a mechanism’s template if the mechanism is equivalent to a linear noisy correlator (Ahumada, 2002). Murray, Bennett, and Sekuler (2002) argued that this requirement can be relaxed to include observer models that have an additive noise whose variance is proportional to the contrast energy of the input (as opposed to being a constant) and to models with nonlinear transducer functions when tested over a narrow range (for a more precise description of the requirements regarding nonlinear transducer functions, see Neri, 2004). Even with these generalizations, the range of observer models for which the classification-image method is valid for inferring the perceptual template appears to be restricted. Physiologists have long maintained that spike-triggered averaging (identical to classification image) is of very limited use for uncovering the receptive field structures of higher order visual neurons. Various higher order techniques, such as spike-triggered covariance (de Ruyter van Steveninck & Bialek, 1988; Rust, Schwartz, Movshon, & Simoncelli, 2004, 2005), are used to augment spike-triggered averaging. Neri and Heeger (2002) recently extended the classification-image method to include the analysis of covariance. Despite its theoretical limitation, the linear version of the classification-image method has been applied to increasingly complex visual tasks, such as face recognition and object categorization, yielding intriguing results.

A simple yet ubiquitous form of nonlinearity generally believed to pose a severe problem to the method of classification image is uncertainty. Murray et al. (2002) described this problem succinctly:

One type of nonlinearity that does pose a problem for the noisy cross-correlator1 model is stimulus uncertainty. Even when observers are told the exact shape and location of the signals that they are to discriminate between, they sometimes behave as if they are uncertain as to exactly where the stimulus will appear or what shape it will take (e.g., Manjeshwar & Wilson, 2001; Pelli, 1985). We can model spatial uncertainty by assuming that the observer has many identical templates that he applies over a range of spatial locations in the stimulus, but the effects of this operation are complex, and it is not obvious precisely how a classification image is related to the template of such an observer, or how the SNR of the classification image is related to quantities such as the observer’s performance level or internal-to-external noise ratio. If an observer is very uncertain about some stimulus properties, such as the phase of a grating signal, a response classification experiment may produce no classification image at all (Ahumada & Beard, 1999).

This problem is more serious because of the equivalence between feature invariance and intrinsic uncertainty (an uncertainty internal to the observer, as opposed to that in the stimuli, or extrinsic uncertainty), which we shall explain next.

Visual processing entails the extraction of “features” from retinal inputs that are relevant to behavior. In theories of object perception, the degree of invariance that a feature possesses is a central issue. Biederman (1987) and Marr (1982), for example, viewed visual processing as a stage-wise process designed to recover, from retinal images, non-accidental features of increasing complexity and invariance (edges, contours, corners, simple volumes, and structural description of volumes). For example, an edge feature is invariant to local contrast and immune to changes in local illumination; a volumetric feature is invariant not only to local and global illumination but also to the observer’s viewpoint. All theories of object recognition involve invariance but differ in the degree of invariance they rely on to make the final determination of object identity (cf. Tjan, 2002; Tjan & Legge, 1998).

Consider a detector that signals the presence of a particular feature (e.g., an edge) while ignoring the specific image properties that the feature was rendered with (e.g., the colors across the edge). It is as if the detector is obligatorily considering all possible versions of the feature (e.g., white–black edge, white–gray edge, red–green edge, etc.). Such a feature detector will exhibit an amount of intrinsic uncertainty, equal to the effective number of orthogonal instances in the equivalent set of the input images that lead to the same response. The notions of “invariance” and “uncertainty,” albeit different in their historical and theoretical origins, are therefore the same.

If the method of classification image indeed could not handle uncertainty, it would be of limited use as a tool to reveal the mechanisms of vision, which undoubtedly involve invariance. Limitations imposed by uncertainty have been noted and partially addressed in the past. Using a vernier-offset detection task, Barth, Beard and Ahumada (1999) rejected the linear observer model (one that does not have uncertainty) by showing a significant discrepancy between classification images from human observers and those from a linear model, when the classification images from the offset-present and offset-absent trials were considered separately. They also estimated the amount of positional and orientation uncertainty in the human observers by explicitly modeling uncertainty as a small Gaussian weighting function. Solomon (2002) likewise pointed out that for a yes–no signal detection task, any difference in the shapes of templates estimated from target-present trials as compared with target-absent trials may be due to uncertainty. Abbey and Eckstein (2002) extended this observation to 2AFC tasks and provided a statistical test for using classification images to detect the presence of observer nonlinearities. Although these studies showed how observer nonlinearity, such as uncertainty, could be detected from classification images, they did not provide a general method for template estimation in the face of large uncertainty. Eckstein, Shimozaki, and Abbey (2002) went one step further to show that, at least for a small amount of positional uncertainty in the task (two possible positions), the classification image computed at each possible position was an unbiased estimate of the underlying templates of a Bayesian ideal observer.

The goal of this paper is to show that with a slight modification to the current practice, the method of classification image is generally applicable even when the task, the visual system, or both possess a great deal of uncertainty (and invariance). This is achieved by understanding the role that a signal plays in a classification-image experiment in the context of a well-established uncertainty model (cf. Pelli, 1985), first proposed by Tanner (1961). Specifically, we will demonstrate the theoretical feasibility and empirical practicality of recovering the perceptual templates of an observer for tasks with a high degree of spatial uncertainty. We will also demonstrate how the degree of uncertainty may be estimated from the resulting classification images.

Overview

The rest of this paper is organized as follows. In the first half of the paper, we will explore the theoretical underpinnings that allow the use of classification-image methods in conditions with high uncertainty. We will illustrate the various aspects of our proposed method analytically and by means of simulations using an ideal-observer model for which we know the ground truth about the observer’s templates. In the second half of the paper, we will demonstrate the practicality of our method via three sets of experiments with human observers. Experiments 1 and 2 will show that we can uncover, within a reasonable number of trials, the perceptual templates for letter identification and detection tasks in conditions with varying degrees of spatial uncertainty. We will also show that the degree of uncertainty can be estimated from the classification images. In Experiment 3, we will demonstrate the potential of our method by using it to measure both the quality of the perceptual templates and the amount of intrinsic spatial uncertainty in human peripheral vision.

Theory

We first consider an ideal observer for identifying known patterns in additive Gaussian noise (Tjan, Braje, Legge, & Kersten, 1995; Tjan & Legge, 1998). An ideal observer is a theoretically optimal decision mechanism for a given task and its stimuli. Strictly speaking, an ideal observer is not a model of any actual observer. Its formulation is completely determined by the given task and its stimuli. An ideal observer establishes the upper bound of the level of performance achievable by any observer, biological or otherwise, and often provides a good starting point for modeling human observers.

A typical task used in a classification-image experiment is to discriminate between two patterns embedded in additive Gaussian white noise. A detection task is a special case of this, where one of the patterns is a blank (noise-only) display. For each of the two patterns, there may be one or more instances. Consider for example a task to identify if the noisy stimulus contains the letter “O” or “X.” A single-instance version of this task is one where there is only one version of “X” and one version of “O.” For a single-instance task, the signal for each response is known exactly, and there is no stimulus uncertainty. Stimulus uncertainty is introduced when different image patterns are to be associated with the same response—for example, in a multiple-instance version of the task, the letters may appear in different fonts, sizes, or positions.

Let Tr,j be the jth version of a noise-free contrast pattern with a response label r. (Unless the context suggests otherwise, we generally represent a 2-D pattern as a column vector by concatenating all columns of an image into a single column.) Let Nσ be a sample of a Gaussian white noise (a multivariate normal distribution of zero mean and diagonal covariance σ²I). A noisy stimulus with a signal contrast of c is

$$I = c\,T_{r,j} + N_\sigma \tag{1}$$

The general form of the ideal observer for identifying the embedded pattern in I with maximum accuracy is to select the response label r that maximizes the posterior probability (Duda & Hart, 1973; Green & Swets, 1974; Peterson, Birdsall, & Fox, 1954). That is,

$$\hat{r} = \arg\max_{r} \Pr(r \mid I) = \arg\max_{r} \sum_{j} \Pr(T_{r,j} \mid I) \tag{2}$$

The summation over j (marginalization) in the second expression follows strictly from probability theory because the occurrence of the different versions of a pattern is mutually exclusive in a single presentation.

Assuming that all patterns are equally likely to occur, by applying the Bayes theorem and the probability density function (p.d.f.) of a normal distribution and by collecting into a constant the terms that do not vary with either r or j, we have:

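$$\Pr(r \mid I) = \sum_{j=1}^{M} \Pr(T_{r,j} \mid I) = k_1 \sum_{j=1}^{M} \exp\!\left(-\frac{\lVert I - c\,T_{r,j} \rVert^{2}}{2\sigma^{2}}\right) = k_2 \sum_{j=1}^{M} \exp\!\left(\frac{c\,I^{T}T_{r,j} - \tfrac{1}{2}c^{2}\,T_{r,j}^{T}T_{r,j}}{\sigma^{2}}\right) \tag{3}$$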

where M is the number of distinct patterns with the same response label, the ks are constants, and the superscript T denotes matrix transpose. We note that $I^{T}I$ does not vary with either r or j and has therefore been treated as a constant.
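The step from the Gaussian density to the exponent can be spelled out as follows. This is a sketch in the notation defined above; the constant $k_1$ is a generic normalizing factor introduced here only for illustration.

$$p(I \mid T_{r,j}) = k_1 \exp\!\left(-\frac{(I - c\,T_{r,j})^{T}(I - c\,T_{r,j})}{2\sigma^{2}}\right) = k_1 \exp\!\left(-\frac{I^{T}I}{2\sigma^{2}}\right) \exp\!\left(\frac{c\,I^{T}T_{r,j} - \tfrac{1}{2}c^{2}\,T_{r,j}^{T}T_{r,j}}{\sigma^{2}}\right)$$

The first exponential factor is common to every r and j, which is why it can be absorbed into a constant.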

Equation 3 provides us with the optimal decision rule for pattern identification with or without stimulus uncertainty. The optimal decision rule is to choose the response r that maximizes a univariate decision variable λ(r):

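$$\lambda(r) = \sum_{j=1}^{M} \exp\!\left(\frac{c\,I^{T}T_{r,j} - \tfrac{1}{2}c^{2}\,T_{r,j}^{T}T_{r,j}}{\sigma^{2}}\right) \tag{4}$$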

An appendix in Tjan and Legge (1998) provides a computationally efficient way of implementing this decision mechanism when the stimulus uncertainty (M) is large (in the tens of thousands).
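As an illustration of the decision rule, the following minimal sketch evaluates the logarithm of the decision variable for each response and picks the larger one. It assumes that the decision variable has the form that the Gaussian likelihood gives, namely a sum over j of exp((c IᵀTr,j − ½c² Tr,jᵀTr,j)/σ²). It uses a plain log-sum-exp for numerical stability and is not the efficient scheme of Tjan and Legge (1998). The 1-D patterns, the function names, and the parameter values are hypothetical.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def log_lambda(stimulus, templates, c, sigma):
    """Log of the assumed decision variable for one response label.

    templates: the M candidate patterns T_{r,j} for that label.
    """
    exponents = [
        (c * dot(stimulus, t) - 0.5 * c * c * dot(t, t)) / sigma ** 2
        for t in templates
    ]
    top = max(exponents)  # factor out the largest term to avoid overflow
    return top + math.log(sum(math.exp(e - top) for e in exponents))

def ideal_response(stimulus, template_sets, c, sigma):
    """Return the label r whose decision variable is largest."""
    return max(
        template_sets,
        key=lambda r: log_lambda(stimulus, template_sets[r], c, sigma),
    )

# Hypothetical toy patterns: two labels, each with two possible positions.
template_sets = {
    "O": [[1, 1, 0, 0, 0, 0], [0, 0, 0, 1, 1, 0]],
    "X": [[1, -1, 0, 0, 0, 0], [0, 0, 0, 1, -1, 0]],
}
c, sigma = 2.0, 1.0
noiseless_o = [c * v for v in template_sets["O"][1]]  # "O" at the second position
choice = ideal_response(noiseless_o, template_sets, c, sigma)
```

Because the logarithm is monotonic, comparing the log values gives the same choice as comparing the decision variables themselves.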

Two special cases of the optimal decision rule are noteworthy. For a task where all signal patterns have the same contrast energy, the dot product $T_{r,j}^{T}T_{r,j}$ is a positive constant and can be removed from the decision rule:

$$\lambda(r) = \sum_{j=1}^{M} \exp\!\left(\frac{c\,I^{T}T_{r,j}}{\sigma^{2}}\right) \tag{5}$$

For a task with equal-energy signals and no stimulus uncertainty (M = 1), the optimal decision rule can be further reduced to that of a linear correlator by taking advantage of the fact that the exponential function is monotonically increasing and by removing all constant terms:

$$\lambda(r) = I^{T}T_{r} \tag{6}$$

What we have shown is that the popular linear observer model (Equation 6), which makes a decision by linearly correlating the input with a template, is the optimal decision mechanism when there is no stimulus uncertainty and when the stimulus noise is white, a well-known result that is worth reiterating. To maintain optimality under these conditions, it is not necessary to know either the signal contrast (c) or the noise variance (σ²). The assumption of a linear observer is the single most important assumption for the classification-image method (Ahumada, 2002; Murray et al., 2002). Also evident from our derivation is the reason why uncertainty presents a significant challenge to the classification-image method: there are no apparent means of approximating the optimal decision variable of Equation 5 with something similar to Equation 6.

The uncertainty model

When stimulus uncertainty is due to the task (multiple input patterns are to be associated to the same response per task requirement), such uncertainty is often referred to as “extrinsic uncertainty” because it is external to an observer. With extrinsic uncertainty, Equation 4 or 5 is the optimal decision rule if the equal contrast energy condition is met. Extrinsic uncertainty is contrasted with “intrinsic uncertainty,” which refers to the uncertainty assumed by the observer. For example, in a letter identification task where there is only one instance of “X” and one instance of “O”, observers may still insist on considering different versions of the letters during a trial either because they lack the precision for encoding certain attributes of the instances (e.g., the exact stimulus size or position) or because they are misinformed about the task. With intrinsic uncertainty, Equation 4 or 5 becomes an ideal-observer model2 of the observer. When M in Equation 4 or 5 is greater than 1, the decision rule would be suboptimal for the task, which has no uncertainty, but it is optimal for the observer with the explicit limitation that the observer had assumed that there was uncertainty in the task. Tanner (1961) pointed out that if an observer did not know the signal exactly and had to consider a number of possibilities, the observer, which could be otherwise ideal, would have a steeper psychometric function compared with that of an ideal observer. Early studies in audition (cf. Green, 1964) and vision (e.g., Foley & Legge, 1981; Nachmias & Sansbury, 1974; Stromeyer & Klein, 1974; Tanner & Swets, 1954) found that when a subject was asked to detect a faint but precisely defined signal, the resulting psychometric function had a slope consistent with the presence of a significant intrinsic uncertainty.

In a seminal paper, Pelli (1985) made the case that intrinsic uncertainty could account for a large range of psychophysical data related to contrast detection and discrimination. Pelli demonstrated that a simple model of intrinsic uncertainty, which was already quite popular at the time of his writing but with properties not well understood, provided an excellent fit to psychophysical data for contrast detection and discrimination in many different conditions. In a nutshell, the uncertainty model makes a decision based on a decision variable of the form:

$$\lambda(r) = \max_{j}\; I^{T}T_{r,j} \tag{7}$$

The model essentially says that the observer selects a response associated with the “loudest” channel. With hindsight, it is not difficult to see why Equation 7 is a reasonable approximation to the optimal decision rule (Equation 5):

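$$\sum_{j=1}^{M} \exp\!\left(\frac{c\,I^{T}T_{r,j}}{\sigma^{2}}\right) \approx \max_{j}\, \exp\!\left(\frac{c\,I^{T}T_{r,j}}{\sigma^{2}}\right) \leftrightarrow \max_{j}\; I^{T}T_{r,j} \tag{8}$$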

We use ↔ to indicate that two functions are monotonically related such that replacing one with the other does not affect the rank order of the values of the function. The only approximation in Equation 8 is the replacement of the sum of a set of exponentials by the largest value from the set (Equations 12 and 13 in Nolte & Jaarsma, 1967). This approximation is reasonable if the largest value is very large relative to the other values to be summed, as is often the case with an exponential function.
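The quality of this approximation is easy to check numerically. In the minimal sketch below, the channel exponents are hypothetical values standing in for c IᵀTr,j/σ². On a log scale, the exact sum of exponentials exceeds the largest term by at most log(M), and the gap becomes negligible when one channel dominates.

```python
import math

def log_sum_exp(values):
    top = max(values)
    return top + math.log(sum(math.exp(v - top) for v in values))

# Hypothetical exponents for M = 5 channels; the first channel dominates.
exponents = [12.0, 3.5, 1.0, -0.5, 2.0]
exact = log_sum_exp(exponents)   # log of the summed exponentials
approx = max(exponents)          # log of the largest exponential alone
gap = exact - approx             # always between 0 and log(M)
```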

The uncertainty model (Equation 7) is the key theoretical foundation that led to our proposed method for obtaining a classification image in the face of uncertainty. The results of Pelli (1985) showing the general validity of this model to a large set of empirical data and the rather ubiquitous applications of the model in visual psychophysics justified this starting point. Nevertheless, we note that our approach does not depend on any subtle assumptions of the uncertainty model beyond Equation 7 and is theoretically robust.

Isolating a channel in the uncertainty model by using a signal

If there is no uncertainty and if the linear observer model (Equation 6) is a good approximation of an actual observer, then it has been established that the classification-image method could uncover the observer templates Tr (cf. Ahumada, 2002). The same, however, could not be said when there is significant extrinsic or intrinsic uncertainty.

An inherent property of the uncertainty model (Equation 7) offers a way to reduce or eliminate intrinsic uncertainty and thus reduce the uncertainty model to a linear observer model. Because the channel with the highest response drives the net output of the uncertainty model, the presence of a relatively strong signal in the noisy stimulus will bias one channel over the others in terms of its contribution to the observer’s response. When the observer makes an incorrect response while the signal is present, we know with relative certainty that the noise suppressed the channel that would otherwise have responded maximally to the signal. The linear kernel associated with this channel can then be recovered using the conventional classification-image technique.

We can illustrate this logic more precisely by combining Equation 7 with the definition of the stimulus (Equation 1):

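$$\begin{aligned} \lambda(r) &= \max_{j}\; I^{T}T_{r,j} \\ &= \max_{j}\left(c\,S^{T}T_{r,j} + N_\sigma^{T}T_{r,j}\right) \\ &\approx c\,S^{T}T_{r,z} + N_\sigma^{T}T_{r,z} = I^{T}T_{r,z} \end{aligned} \tag{9}$$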

where we let $T_{r,z}$, $z \in [1, M]$, denote the channel that has the highest response for signal S. The last line of approximation is justified because (1) for equal-energy signals, $N_\sigma^{T}T_{r,j}$ is statistically identical for all channels j, and (2) the term $S^{T}T_{r,z}$ leads one particular channel to have the highest response most of the time and thus to single-handedly drive the decision variable λ(r). What is critical for this approximation is that the response $S^{T}T_{r,z}$ must be significantly larger than the responses from the other channels. We refer to this requirement as the “signal-clamping” requirement and the approximation in Equation 9 as the signal-clamping approximation.
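The signal-clamping requirement can be illustrated with a small simulation. The sketch below is a toy under stated assumptions and not the simulation reported in this paper. It uses a hypothetical 12-pixel circular display, 12 shifted copies of a 3-pixel template as the channels, and a signal that matches channel 0. It counts how often channel 0 has the largest response at zero contrast and at a high contrast.

```python
import random

def shift(pattern, p):
    """Circularly shift a 1-D pattern by p pixels (with wraparound)."""
    p %= len(pattern)
    return pattern[-p:] + pattern[:-p] if p else list(pattern)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def clamped_fraction(c, sigma, n_trials, rng):
    """Fraction of trials on which the signal's own channel responds most."""
    base = [1.0, 1.0, 1.0] + [0.0] * 9                      # hypothetical template
    channels = [shift(base, p) for p in range(len(base))]   # M = 12 positions
    signal = channels[0]                                    # so z is channel 0
    hits = 0
    for _ in range(n_trials):
        stimulus = [c * s + rng.gauss(0.0, sigma) for s in signal]
        responses = [dot(stimulus, t) for t in channels]
        if responses.index(max(responses)) == 0:
            hits += 1
    return hits / n_trials

rng = random.Random(0)
low = clamped_fraction(0.0, 1.0, 2000, rng)    # no signal: near chance (1/12)
high = clamped_fraction(4.0, 1.0, 2000, rng)   # strong signal: channel 0 dominates
```

With no signal, the maximally responding channel is essentially random. With a strong signal, the same channel wins on nearly every trial, which is the condition under which the max operator can be replaced by a single linear channel.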

In short, we are using a fixed signal to hold on to a specific channel and a varying noise to map the linear kernel of the channel. We refer to this approach as the signal-clamped classification-image method. Our logic is essentially the same as the two-bar method by Movshon, Thompson, and Tolhurst (1978) for mapping the linear component of the receptive field of a complex cell. We can think of a complex cell as an observer with uncertainty in the phase of a grating; such a cell is approximately equivalent to a detector that performs max-response pooling over a large set of detectors, each selective to a specific phase.3 With this perspective, we can think of the two-bar method as using one bar to select a channel of a specific phase, and the other bar, with varying positions relative to the first bar, to map the receptive field of the selected channel.

Properties of signal-clamped classification images

Signal-clamped classification images have distinct properties that can be exploited to estimate the amount of intrinsic uncertainty (or equivalently, the degree of invariance) of an observer. We will illustrate these properties, first analytically and then by simulation using an ideal-observer model (Equation 4), for which we know the ground truth about the observer’s internal templates and the amount of intrinsic uncertainty. We used an ideal-observer model in the simulation instead of the uncertainty model (Equation 7) to show that the analytical properties of signal clamping derived from the uncertainty model do not depend on the absolute validity of the uncertainty model. Whether these properties are valid for a human observer is an empirical question. The three human experiments in the second half of this paper will confirm that these analytical properties of signal-clamped classification images are indeed valid and robust.

Contrast of signal-clamped classification images

For the rest of this paper, we will consider a two-letter identification task (“O” vs. “X”) and a single-letter detection task (detecting “O” against a noisy background). We restrict the form of uncertainty to the uncertainty about the location of the stimulus on the display. The templates (or channels) for a given response are shifted versions of one another but are otherwise identical; that is,

$$T_{r,j} = \operatorname{shift}(T_{r},\, p_{j}) \tag{10}$$

where Tr is the position-normalized template for response r and pj is a position on the display. Our goals are to recover Tr and the range of pj. Possible generalizations of the signal-clamping technique to other types of uncertainty beyond that of shift invariance will be addressed in the General discussions section.

A conventional classification image is a composition of a set of classification subimages. A subimage CIAB is the average of all the noise patterns Nσ (Equation 1) from trials where the signal in the stimulus was A and the observer’s response was B. Consider the two-letter identification task (“O” vs. “X”). The subimage CIOX is the average of the noise patterns NOX from trials where “O” was in the stimulus but the observer responded “X” (we refer to this as an OX trial). An “X” response implies that the internal decision variable for an “X” response was greater than that for an “O” response; that is, λ(“X”) > λ(“O”). Appealing to the uncertainty model (Equation 7) and the composition of a stimulus (Equation 1) and letting Xj = Tx,j and Oj = To,j to improve readability, we have

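$$\lambda(\text{“X”}) > \lambda(\text{“O”}) \;\Rightarrow\; \max_{j}\,(c\,O + N_{OX})^{T}X_{j} > \max_{j}\,(c\,O + N_{OX})^{T}O_{j} \tag{11}$$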

where O (without any subscript) is the “O” signal in the noisy stimulus presented to the observer. If there is no uncertainty (M = 1), Equation 11 becomes the familiar form that underlies the conventional classification image:

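$$(c\,O + N_{OX})^{T}X_{1} > (c\,O + N_{OX})^{T}O_{1} \;\Rightarrow\; N_{OX}^{T}(X_{1} - O_{1}) > c\,O^{T}(O_{1} - X_{1}) \tag{12}$$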

The right-hand side of the inequality is a positive number because a noiseless “O” stimulus will activate the “O” channel (O1) more than the “X” channel (X1); that is, $O^{T}O_{1} > O^{T}X_{1}$. For this inequality to hold, the average noise pattern on the left-hand side must have a positive correlation with the X template and a negative correlation with the O template. Ahumada (2002) showed analytically that

$$E[N_{OX}] \propto X_{1} - O_{1} \tag{13}$$

where E[·] denotes a mathematical expectation (see also Abbey & Eckstein, 2002; Murray et al., 2002). The proportional constant is affected by the probability of an OX trial (stimulus “O”, response “X”), and the internal-to-external noise ratio (ratio between the variances of the noise internal to an observer and that in the stimuli; e.g., see Equation A3 in Murray et al., 2002). CIOX approaches E[NOX] as the number of OX trials (NOX) approaches infinity. For a finite number of trials, the variance of CIOX is rather cumbersome because the probability density of CIOX is a truncated version of the multidimensional Gaussian (Nσ) used to form the stimuli. Ahumada (2002) pointed out that the variance of CIOX is upper bounded by the variance of the nontruncated distribution. Murray et al. (2002, Appendices A and F) further argued that the difference between the upper bound and the actual variance is negligible for a typical classification-image experiment where (1) the amount of the stimulus noise is comparable to the level of the observer’s internal noise, (2) the number of independent image pixels (and hence the dimensionality of stimulus) is large, and (3) the accuracy level is above 75%. All of the experiments in the current study met these three conditions. Thus, CIOX can be approximated as

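$$CI_{OX} \approx E[N_{OX}] + \frac{N_\sigma}{\sqrt{n_{OX}}} \tag{14}$$

where $N_\sigma$ is a sample of white noise from the distribution used to form the stimuli (Equation 1) and $n_{OX}$ is the number of OX trials.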

Equations 13 and 14 show that in a conventional classification-image experiment, where a great deal of effort is directed toward the elimination of uncertainty in the experiment, each classification subimage contains both a positive image of one template and a negative image of the alternative template. In the case of an error trial, the negative image is the template for the presented signal, and the positive image is the template associated with the response.
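The no-uncertainty case can be checked with a short simulation. The sketch below assumes a noiseless linear observer with two hypothetical orthogonal, equal-energy 1-D templates, and an “O” signal at a contrast that gives roughly 75% correct. It averages the noise on the error (OX) trials and correlates the result with X1 − O1. All names and values are illustrative.

```python
import math
import random

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def pearson(a, b):
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    da = [x - ma for x in a]
    db = [y - mb for y in b]
    return dot(da, db) / math.sqrt(dot(da, da) * dot(db, db))

rng = random.Random(1)
o1 = [0.5] * 4 + [0.0] * 4      # hypothetical "O" template (unit energy)
x1 = [0.0] * 4 + [0.5] * 4      # hypothetical "X" template (unit energy)
c, sigma, n_trials = 1.0, 1.0, 4000

noise_sum, n_ox = [0.0] * 8, 0
for _ in range(n_trials):
    noise = [rng.gauss(0.0, sigma) for _ in range(8)]
    stimulus = [c * s + n for s, n in zip(o1, noise)]   # "O" is always shown
    if dot(stimulus, x1) > dot(stimulus, o1):           # observer answers "X"
        n_ox += 1
        noise_sum = [a + n for a, n in zip(noise_sum, noise)]

ci_ox = [a / n_ox for a in noise_sum]                   # classification subimage
r = pearson(ci_ox, [x - o for x, o in zip(x1, o1)])     # similarity to X1 - O1
accuracy = 1.0 - n_ox / n_trials
```

The subimage is positive where the “X” template is nonzero and negative where the “O” template is nonzero, as expected when the error-trial noise average is proportional to X1 − O1.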

Now consider a condition where there is no extrinsic uncertainty (i.e., the Xs and Os were always presented at the same position on the display) but with a significant amount of intrinsic uncertainty (M ≫ 1). Applying the signal-clamping approximation (Equation 9) to the right-hand side of Equation 11, we have

$$\max_{j}\,(c\,O + N_{OX})^{T}X_{j} > (c\,O + N_{OX})^{T}O_{z} \tag{15}$$

The signal-clamping approximation applies only to λ(“O”) because the “O” signal in the stimulus consistently biases one particular “O” channel (Oz in the equation). There is no such trial-to-trial consistency among the “X” channels because none of them are tuned to the “O” signal. Hence, the signal-clamping approximation does not apply to λ(“X”). Following the logic of Ahumada (2002), we can show that (Appendix)

$$E[N_{OX}] \propto E[X_{j}] - O_{z} \tag{16}$$

Furthermore, the relationship between the expected value of the noise (E[NOX]) and the classification subimage (CIOX) remains the same as stated in Equation 14.

Equation 16 shows that the average of the noise patterns of the error trials contains a negative image of exactly one of the many templates for the presented signal. Important for our purpose is that this negative image is not affected by uncertainty and thus provides a good estimate of the unknown template. This is due to the signal-clamping approximation applied to the right-hand side of Equation 11. That is, the presence of a relatively strong “O” signal in the stimulus biased the signal response to precisely one of the many “O” channels; when the observer made an error and responded “X”, we are relatively certain that the noise pattern suppressed the particular “O” channel (Oz in Equations 15 and 16) that would otherwise be responding.

Critically, the signal-clamping approximation is applicable only when the signal contrast in the noisy stimulus is sufficiently strong. The disadvantage of this requirement is that when the signal contrast is high, the number of error trials, which are more informative than the correct trials, will be low, and the average of the noise patterns from the error trials will have a high variance (Equation 14). Hence, the contrast of the signal must be sufficiently high but not too high. As will be shown in the simulations and with human data, a contrast that achieves an accuracy of 75% correct reaches this balance.

Unlike the negative image, the positive image in the average noise pattern is severely affected by uncertainty. This positive image (E[Xj] of Equation 16) corresponds to the average of all the channels associated with the response (“X” in our example). As a result, there will not be any clear positive image in the classification subimages when there is significant intrinsic uncertainty. The clarity of the positive image provides a way to estimate the degree of uncertainty.
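The contrast between the sharp negative image and the diffuse positive image can be seen in a toy simulation of the uncertainty model, where the decision variable for each response is the maximum over its channels. The sketch below rests on several assumptions that are ours and not part of the experiments reported here. The display is a 16-pixel 1-D strip, the “O” and “X” templates are hypothetical 4-pixel bars, and the channels sit at four nonoverlapping positions, so that all eight templates are orthonormal. The “O” signal is always shown at position 0.

```python
import random

def shift(pattern, p):
    """Circularly shift a 1-D pattern by p pixels (with wraparound)."""
    p %= len(pattern)
    return pattern[-p:] + pattern[:-p] if p else list(pattern)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

N_PIX, POSITIONS = 16, (0, 4, 8, 12)           # hypothetical display and channel positions
o_base = [0.5, 0.5, 0.5, 0.5] + [0.0] * 12     # toy "O": a uniform bar
x_base = [0.5, -0.5, 0.5, -0.5] + [0.0] * 12   # toy "X": an alternating bar
o_channels = [shift(o_base, p) for p in POSITIONS]
x_channels = [shift(x_base, p) for p in POSITIONS]

rng = random.Random(2)
c, sigma, n_trials = 2.0, 1.0, 6000
signal = o_channels[0]                         # "O" at position 0, so z is index 0

noise_sum, n_ox = [0.0] * N_PIX, 0
for _ in range(n_trials):
    noise = [rng.gauss(0.0, sigma) for _ in range(N_PIX)]
    stimulus = [c * s + n for s, n in zip(signal, noise)]
    lam_o = max(dot(stimulus, t) for t in o_channels)   # max over "O" channels
    lam_x = max(dot(stimulus, t) for t in x_channels)   # max over "X" channels
    if lam_x > lam_o:                                   # an OX (error) trial
        n_ox += 1
        noise_sum = [a + n for a, n in zip(noise_sum, noise)]

ci_ox = [a / n_ox for a in noise_sum]
proj_o = [dot(ci_ox, t) for t in o_channels]   # projection onto each "O" channel
proj_x = [dot(ci_ox, t) for t in x_channels]   # projection onto each "X" channel
```

In this toy setting, `proj_o` is strongly negative only at the clamped channel, whereas `proj_x` is weakly positive at every position. The positive image is spread over all of the “X” channels, which is why it appears as a low-contrast haze.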

Estimation of spatial uncertainty

In the case of spatial uncertainty, the channels (or templates) are assumed to be shifted versions of one another (Equation 10). If we represent the spatial distribution of the channels with an image S, with each pixel corresponding to a location in the image and the pixel value representing the probability of a channel at the location responding erroneously to noise, then

$$E[X_j] = S * X_z \qquad (17)$$

where * denotes a convolution and Xz is the position-normalized template for “X”. Combining Equations 16 and 17, we have

| (18) |

If S can be parameterized with a small number of parameters (e.g., S being a square region with uniform distribution), then Equation 18 provides a way to estimate both the perceptual templates and the amount of spatial uncertainty. We can obtain these estimates in stages. The classification subimage CIOX, which, in the limit, approaches E[NOX], contains a negative image of the “O” template, unaffected by uncertainty. Likewise, the subimage CIXO provides a direct estimate of the “X” template. Knowing both the “O” and “X” templates, Equation 17 and the corresponding equation for E[NXO] can be used to estimate the spatial uncertainty S.

In practice, the estimation of the templates is never precise, and methods for removing the noise term in the subimages tend to introduce various idiosyncratic artifacts. Fortunately, we will show with simulation that estimation of the spatial uncertainty S appears robust, particularly if it can be parameterized with very few parameters.
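The blurring described by Equation 17 can be illustrated numerically. The following is a minimal sketch, assuming a uniform square S, a circular (wraparound) convolution computed with the FFT, and a toy rectangular template standing in for a letter. The helper names `uniform_square` and `circular_convolve` are hypothetical and are not part of the original method.

```python
import numpy as np

def uniform_square(size, d):
    """Uniform map S: a centered d x d square of equal weights summing to 1."""
    s = np.zeros((size, size))
    lo = size // 2 - d // 2
    s[lo:lo + d, lo:lo + d] = 1.0
    return s / s.sum()

def circular_convolve(s, template):
    """Circular 2-D convolution of s with template via the FFT."""
    # ifftshift moves the center of s to the origin so the output is not displaced.
    spectrum = np.fft.fft2(np.fft.ifftshift(s)) * np.fft.fft2(template)
    return np.real(np.fft.ifft2(spectrum))

size = 64
template = np.zeros((size, size))
template[28:36, 30:34] = 1.0  # toy stand-in for a position-normalized template

# d = 1 corresponds to no uncertainty: the positive image is the template itself.
haze_none = circular_convolve(uniform_square(size, 1), template)
# A large d spreads the same total energy over a wide, low-contrast haze.
haze_high = circular_convolve(uniform_square(size, 32), template)

print("peak without uncertainty:", haze_none.max())
print("peak with d = 32:", haze_high.max())
```

Under these assumptions the summed intensity of the positive image is preserved while its peak contrast falls as d grows, which is why the positive image becomes a faint haze under high uncertainty.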

Classification images with extrinsic uncertainty

So far, we have assumed that there is no spatial uncertainty in the experiment, and the only uncertainty is intrinsic to the observer. In this case, the presentation of an “O” signal at a fixed location will most likely elicit a response from one particular “O” channel. The classification subimages (e.g., CIOX) can be calculated by averaging the noise patterns in the conventional manner:

| (19) |

Because a relatively strong signal is presented at a fixed position, we can obtain a clear template despite the observer’s intrinsic spatial uncertainty. No special operation is needed to reconstruct the classification images.

With a small but important modification, Equations 16 and 18 will hold even when the spatial uncertainty is both in the stimuli and the observer. Such a condition arises in experiments when we want to test a shift-invariant observer by using signals whose positions vary from trial to trial. The modification is to simply shift the noise pattern (with wraparound) by an amount that either recenters the signal with respect to the image or otherwise normalizes its spatial position. That is, if a stimulus at trial i was created by shifting the stimulus O1 by an amount pi:

| (20) |

then we will replace NOX in all of the preceding equations with a shifted version SNOX, where

| (21) |

This modification is valid under the assumption that the templates for a given response are shifted versions of one another (Equation 10).
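The recentering step can be sketched in a few lines. This is an illustration only, assuming the displacement of the signal on each trial is stored as a (row, column) offset from the image center. The function name `recenter_noise` and the sign convention are assumptions, not taken from Equation 21.

```python
import numpy as np

def recenter_noise(noise, signal_shift):
    """Undo the signal's (row, col) displacement by rolling the noise with wraparound."""
    dy, dx = signal_shift
    return np.roll(noise, shift=(-dy, -dx), axis=(0, 1))

rng = np.random.default_rng(0)
noise = rng.normal(0.0, 0.25, size=(128, 128))

# Hypothetical displacement of the signal from the image center on one trial.
shift = (10, -7)
recentered = recenter_noise(noise, shift)

# The noise pixel that sat under the displaced signal center is now at the image center.
center = 64
print(recentered[center, center] == noise[center + shift[0], center + shift[1]])
```

Because the shift wraps around, no noise samples are discarded, and the recentered field has the same statistics as the original.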

Simulations

To illustrate the various properties of the signal-clamped classification images, we consider an observer model that is otherwise optimal except for two limitations: (1) it uses templates that are slightly different from the presented signal and (2) it may have a high degree of intrinsic spatial uncertainty—spatial uncertainty that is not present in the stimuli but nevertheless assumed by the observer. The decision rule for such an ideal-observer model is given by Equation 5. We assume that there is no internal noise in the ideal-observer model. The presence of internal noise before or after the template comparison stage will lower the contrast of the resulting classification images without qualitatively affecting the critical properties that we are trying to illustrate. In contrast, internal noise during template matching will interact with intrinsic uncertainty and can lead to complex effects on the classification images. Template estimation by the signal-clamping method will remain robust under this type of noise; however, such noise will lead to a biased estimation of the spatial extent of an observer’s intrinsic uncertainty when the method that we will be describing in Equations 23a and 23b is used.

We simulated two tasks, a two-letter identification task and a single-letter detection task. For each task, we simulated two levels of intrinsic spatial uncertainty. For each pair of conditions (task and uncertainty level), we estimated the observer templates and the amount of the intrinsic spatial uncertainty from the classification images. We also illustrated the effect of signal clamping by simulating the tasks at two different signal contrast levels, one leading to a 55% correct performance level and another to a 75% correct level.

For the letter identification task, the signals were lowercase “o” and “x” in Times New Roman font with an x-height of 21 pixels. The signals were always presented at the center of a 128 × 128 pixel image. The ideal-observer model used lowercase “p” and “k” from the same font and size as its templates for “o” and “x”, respectively. In the case of no uncertainty (M = 1), the templates were positioned to have the maximum overlap with the signal. In the case of high spatial uncertainty, the center position of a template was uniformly distributed within the central 64 × 64 pixels of the image. There were 1,000 spatially shifted templates for each response (M = 1,000). The relative positions of the signals and the templates are shown in Figure 1a. For each trial, the observer model made a decision according to Equation 5. The external noise had a variance of 1/16 (σ = 0.25), identical to that used in the human experiments. The signal contrast was set to a level to obtain an accuracy of 55% correct (low contrast) or 75% correct (high contrast). The observer model was assumed to know the signal contrast (parameter c in Equation 5).

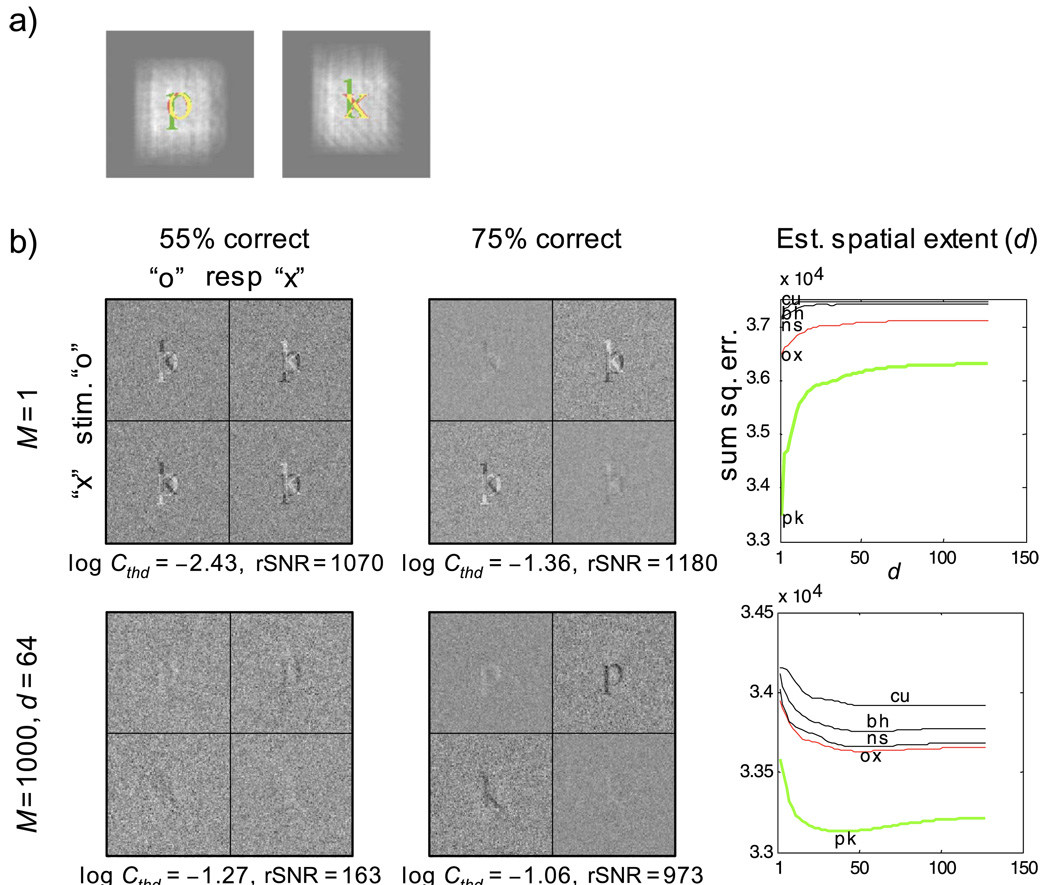

Figure 1.

(a) Signals and templates used for simulating the letter identification task using an ideal-observer model. The white haze shows the spatial extent of the intrinsic spatial uncertainty of the model for M = 1,000 and spatial extent (d) equal to 64 pixels. The templates used by the model are shown in green. The letter stimuli are shown in red and overlapping regions in yellow. (b) Classification images from the ideal-observer model for the letter identification task: first row, simulations with no spatial uncertainty (M = 1); second row, simulations with high spatial uncertainty (M = 1,000, d = 64); left column, low signal-contrast simulations at an accuracy criterion of 55% correct; middle column, high signal-contrast simulations at an accuracy criterion of 75% correct; right column, estimations of the spatial extent (d) of the uncertainty for the high signal-contrast condition (middle column), where each curve is an error function labeled by the templates used to obtain the estimate. The value of d at the minimum of each error function represents the estimated spatial extent of the uncertainty. The minimum of each curve is marked by the position of the first character of the corresponding label. The green curves were obtained using the actual observer templates from the model, the red curves were obtained using the stimuli letters as templates, and the black curves were obtained using pairs of letters that closely resembled (in terms of rms distance) the true templates. The high degree of similarity in the estimated values of d using different putative templates shows the robustness of the method. The stimulus noise had a pixel-wise standard deviation of 0.25. rSNR was computed using only the error trials, as described in Equation 26.

Figure 1b shows the four sets of classification subimages from these four simulated conditions. Consider the high signal contrast conditions (middle column). When there was no spatial uncertainty (first row), the subimages contain an equal portion of both a positive and a negative image of the two templates (“p” and “k”) used by the observer model. As predicted by Equation 13, these are the templates of the observer and not the presented stimuli. Compare these subimages to the ones obtained with a high degree of spatial uncertainty (second row, middle column). As predicted by Equation 16, only one clear template is visible in each subimage. Specifically, for the trials where the signal was “o” and the response was “x”, the classification subimage CIOX contains a clear negative image of the template for the “o” response, which in this case was the letter “p”, the template we built into the ideal-observer model. Remarkably, this image is sharp and unaffected by the high degree of intrinsic uncertainty. This is the main result of the signal-clamping technique. Also, as predicted by Equation 16, there is no clear positive template in CIOX, which is the single most important difference between the two uncertainty levels (M = 1 vs. M = 1,000). It is important to reiterate the point that the negative image in CIOX resembles the observer’s template “p” and not the signal “o” that was presented. The “o” signal biased a “p” template at a particular location, allowing the effect of noise on that particular template to accumulate over all the error trials when the presented signal was “o”. The effect of the noise was on the nonzero regions of the biased template, although these regions may not overlap with the signal (e.g., the descender of the lowercase “p”).

The signal-clamping approximation that led to Equation 16 relies on the fact that there is sufficient signal contrast in the stimulus to select a particular channel for imaging. When the signal contrast was reduced, the image quality of the signal-clamped classification images was markedly degraded (left column of Figure 1b). This is in stark contrast to the conventional classification-image method (or reverse correlation) without uncertainty. When there is no uncertainty, the overall image quality of the classification images improves with a decrease in signal contrast, as is commonly observed. The improvements are due to a decrease in noise for the error-trial subimages (because the number of error trials increases) and an increase in signal for the correct-trial subimages (because with a weak signal, correct responses are often aided by coincidence with noise). With uncertainty, however, these improvements were overridden by a failure of the signal-clamping approximation, allowing the uncertainty to affect the accumulated template images and rendering the templates invisible. This effect is clearly shown in the left column, second row of Figure 1b, where signal contrast was set to a low value to achieve an accuracy of 55%.

We next turn to the estimation of the extent of the spatial uncertainty intrinsic to the observer using Equation 18. We assumed S to be a uniform square region centered in the image with d pixels on a side. Thus,

| (22) |

From Equations 14, 18, and 22, we have

| (23a) |

Likewise,

| (23b) |

The noise terms in Equations 23a and 23b are white and can be made to have the same variance if we multiply both sides of Equations 23a and 23b by √nOX and √nXO, respectively. If we knew the observer’s signal-clamped templates (Oz and Xz), then k, and most importantly the extent of the spatial uncertainty d, can be estimated from the classification subimages for the error trials by minimizing the least-squared error. The right-most column of Figure 1b plots the residual sum-of-squares error for different values of d (with the value of k chosen to minimize the residual at each level of d). The solid green curves were obtained using the veridical observer templates (lowercase “p” and “k”). The value of d at which a global minimum is achieved provides the estimate of the extent of the spatial uncertainty. The estimated values for the two levels of uncertainty are 1 and 35 pixels, respectively, and are indicated by the first character of the template label “pk”. For the high-uncertainty condition, the residual landscape suggests that although the lower bound of d is well defined, the upper bound is not. In the context of this limitation, the estimated values are in good agreement with the veridical values (1 for the no-uncertainty condition and 64 for the high-uncertainty condition).
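The grid search over d can be sketched as follows. This is a simplified illustration, not a transcription of Equations 23a and 23b: it assumes a single error-trial subimage of the form k(Sd * Xz − Oz) plus white noise, as the surrounding prose suggests, and it omits the joint fit across both subimages and the √n weighting. All function names, toy templates, and parameter values are hypothetical.

```python
import numpy as np

def uniform_square(size, d):
    """Uniform map S_d: a centered d x d square of equal weights summing to 1."""
    s = np.zeros((size, size))
    lo = size // 2 - d // 2
    s[lo:lo + d, lo:lo + d] = 1.0
    return s / s.sum()

def circular_convolve(s, template):
    """Circular 2-D convolution; ifftshift puts the center of s at the origin."""
    spectrum = np.fft.fft2(np.fft.ifftshift(s)) * np.fft.fft2(template)
    return np.real(np.fft.ifft2(spectrum))

def residual_for_d(subimage, o_template, x_template, d):
    """Residual sum of squares at extent d, with k set to its least-squares value."""
    size = subimage.shape[0]
    shape = circular_convolve(uniform_square(size, d), x_template) - o_template
    k = np.sum(subimage * shape) / np.sum(shape ** 2)
    return float(np.sum((subimage - k * shape) ** 2))

def estimate_extent(subimage, o_template, x_template, candidates):
    """Return the candidate d with the smallest residual, plus the residual curve."""
    residuals = [residual_for_d(subimage, o_template, x_template, d) for d in candidates]
    return candidates[int(np.argmin(residuals))], residuals

# Synthetic subimage built from toy templates under the assumed model.
size, true_d, true_k = 64, 16, 0.05
o_template = np.zeros((size, size))
o_template[26:38, 26:38] = 1.0
o_template[29:35, 29:35] = 0.0            # square ring standing in for "O"
x_template = np.zeros((size, size))
idx = np.arange(26, 38)
x_template[idx, idx] = 1.0
x_template[idx, idx[::-1]] = 1.0          # diagonal cross standing in for "X"

rng = np.random.default_rng(1)
haze = circular_convolve(uniform_square(size, true_d), x_template)
subimage = true_k * (haze - o_template) + rng.normal(0.0, 0.0005, (size, size))

candidates = list(range(1, 41))
d_hat, residuals = estimate_extent(subimage, o_template, x_template, candidates)
print("estimated d:", d_hat)
```

Plotting `residuals` against `candidates` gives a curve analogous to the residual landscapes in Figure 1b. Substituting different putative templates for `o_template` and `x_template` is one way to probe how sensitive the minimum is to template error.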

The black curves and the one red curve represent the residual landscape of d computed using incorrect observer templates. Each of the three black curves was obtained with a pair of lowercase letters (except “p” and “k”) that resembled the classification subimages as the presumed observer templates. The red curve was obtained with the presented signals (“o” and “x”) as the presumed observer templates. Note that the values of d at the global minimum of each of these residual curves are very similar. This result demonstrates the robustness of the estimate of the spatial extent d of the underlying uncertainty, even when the observer template is not precisely known. In practice, this means that we can obtain a reasonable estimate of the spatial extent by assuming that the observer templates were identical to the presented signals.

Figure 2 shows the results of the single-letter detection task. The signal in this task is the lowercase letter “o” from the two-letter identification task. The ideal-observer model used a lowercase “e” as the template to detect the signal (Figure 2a). Two levels of intrinsic uncertainty were simulated: spatial extents with a uniform distribution of 32 (medium uncertainty) and 64 (high uncertainty) pixels on a side of a square centered on the image. For the condition with the smaller spatial extent, two types of spatial uncertainty were considered: one with a constant M for both levels of spatial extents (M = 1,000) and another with a constant density (M = 1,000 for high uncertainty, M = 250 for medium uncertainty).⁴ The telltale sign of uncertainty is evident in the classification subimages for all conditions (Figure 2b). In particular, the classification subimage from the miss trials (CImiss) shows a negative image of the observer’s template (a lowercase letter “e”), whereas the subimage of the false-alarm trials (CIFA) shows only a positive haze (if there were no uncertainty, it would be a positive image of the observer’s template).

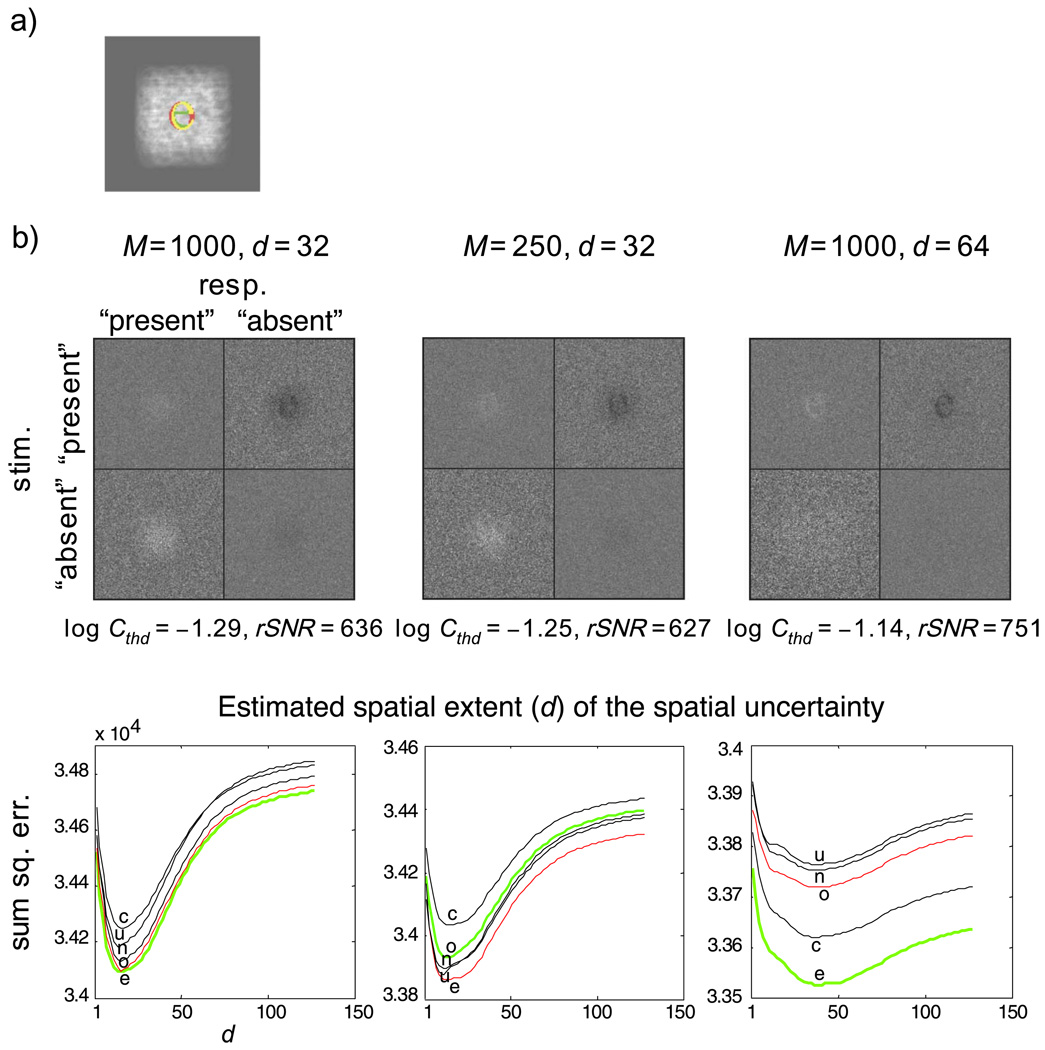

Figure 2.

(a) Signal and template used for simulating the letter detection task with an ideal-observer model. The white haze shows the extent of the intrinsic spatial uncertainty of the model observer for M = 1,000 and spatial extent (d) equal to 64. The template used by the model is shown in green. The letter stimulus is shown in red. The overlapping regions are shown in yellow. (b) Classification images from the ideal-observer model performing the letter detection task at an accuracy level of 75% correct: first column, classification-image and spatial-extent estimations for the medium spatial uncertainty condition (M = 1,000, d = 32); second column, classification-image and spatial-extent estimations for a medium spatial uncertainty condition (M = 250, d = 32), which has the same spatial density of templates as the high-uncertainty condition; third column, classification-image and spatial-extent estimations for the high spatial uncertainty condition (M = 1,000, d = 64). The error functions of spatial-extent estimations are labeled by the putative template used for the estimation. The value of d at the minimum of each curve represents the estimated spatial extent and is marked by the position of the corresponding label. The green curves were obtained using the model’s template, the red curves were obtained using the stimulus letter as the template, and the black curves were obtained using letters that resembled (in terms of rms distance) the model template.

Performance of the ideal-observer model in the two medium-uncertainty conditions was essentially the same in terms of threshold contrast (C250/C1,000 = 1.1) and classification images (Figure 2b, first row, left and middle columns). This is consistent with the finding of Tjan and Legge (1998) that there exists a task-dependent upper bound of the effective level of uncertainty, which can be substantially less than the highest possible level of physical uncertainty. With respect to our current letter detection task, this means that increasing M beyond a density of 250 possible positions per 32 × 32 pixels has no consequence on performance.

For the signal-clamping approximation (Equation 9) to be exact, an observer’s internal templates should be orthogonal, the signal should be strong, or both. Orthogonality is effectively reduced when the spatial extent of the templates is confined to a smaller space. That is, a randomly selected channel will tend to be in closer proximity to the channel at the stimulus position. A reduction in the spatial extent also reduced the threshold contrast for detection (by a factor of about 1.5 for the ideal-observer model). The combined effect of reduced orthogonality and reduced signal contrast was incomplete signal clamping, which resulted in the noticeable dark haze around the negative image of the observer template in CImiss in both of the medium-uncertainty conditions. This dark haze was absent in the high-uncertainty condition.

The white haze in CIFA is noticeably broader and fainter in the high-uncertainty condition compared with the medium-uncertainty condition.

Equations 23a and 23b were used to estimate the spatial extent (d) of the uncertainty. Note that for a detection task, one of the templates (X in this case) is an image of zeros; that is,

$$X_z = \mathbf{0} \qquad (24)$$

The residual landscape for estimating d is plotted in the second row of Figure 2b. As with the case of the letter identification simulation, the green curve represents using the veridical observer template (“e”) to perform the estimation, the red curve represents using the signal in the stimuli as the template, and the three black curves were obtained using other lowercase letters that resembled the classification subimages. Again, the values of d that minimize these residual functions are relatively independent of the assumed observer templates. The averaged estimated value of d was 14.6 pixels for the medium-uncertainty condition and 37.4 pixels for the high-uncertainty condition. Although showing the same ratio of difference as the veridical values (32 vs. 64 pixels, respectively), the estimated values are admittedly a factor of 2 less. This is probably because the simulation used only 1,000 positions within Sd, as opposed to a true uniform distribution of positions.

Summary of the method of signal-clamped classification image

Our main finding here is that by presenting a relatively strong signal in the stimulus, the observer template for the presented signal can be imaged using the conventional classification-image method in the face of a high degree of intrinsic spatial uncertainty. We called this type of classification image obtained with a relatively strong signal embedded in the stimulus the “signal-clamped” classification image. If spatial uncertainty is extrinsic (i.e., in the stimulus), then the only minor change to the calculation of classification images is to shift the noise pattern (with wraparound) to recenter the presented signal in the image (Equation 21). How this finding may be generalized to other types of uncertainties will be addressed in the General discussions section.

We have shown analytically and with simulations the following properties of signal-clamped classification images obtained with a high degree of spatial uncertainty:

Each of the classification subimages from the error trials contains a clear negative image of the observer’s template for the presented signal, unaffected by spatial uncertainty intrinsic or extrinsic to the observer. However, in the presence of uncertainty, the clarity of the template image markedly deteriorates if the contrast of the presented signal is not sufficiently high. The need for a high-contrast signal goes opposite to the conventional practice of using a low-contrast signal to increase the effect of noise on the observer’s response.

Any positive image of the alternative template in a classification subimage for the error trials is blurred by spatial uncertainty, often rendering it indiscernible.

The extent to which these positive template images are blurred provides an estimate of the spatial extent of the uncertainty.

Because of the presence of a relatively strong signal in the stimulus, the classification subimages from the correct trials contain very little contrast and are relatively uninformative. As a result, we do not advocate combining the subimages to form a single classification image as in the conventional approach.

Our discussion has focused, and will continue to focus, on the subimages from the error trials, although the general properties of signal-clamped classification images derived in this section also apply to the subimages from correct trials with merely a sign change. We ignore the correct-trial subimages for the sake of simplicity. We do not lose much because, with a relatively strong signal in the stimulus, the signal-to-noise ratio (SNR) of the correct-trial subimages is often quite low.

Experiments

Three sets of human experiments were conducted to determine the practicality and utility of the signal-clamped classification-image method. Experiments 1 and 2 paralleled the simulation studies and aimed to demonstrate the feasibility of the proposed method and to empirically validate the various properties of signal-clamped classification images. Experiment 1 used the two-letter identification task, whereas Experiment 2 used the single-letter detection task. To compare the effects of spatial uncertainty, both experiments were performed in the fovea where spatial uncertainty of a human observer can be effectively manipulated with the stimulus. We introduced spatial uncertainty into the task by randomizing the signal position within a given region in the stimulus display. Knowing the actual spatial extent of the stimulus-level spatial uncertainty provides a reference for evaluating the estimated spatial extent obtained from the signal-clamped classification-image method.

Experiment 3 tested letter identification in the periphery. The visual periphery is known to have a considerable amount of intrinsic spatial uncertainty (Hess & Field, 1993; Hess & McCarthy, 1994; Levi & Klein, 1996; Levi, Klein, & Yap, 1987). No spatial uncertainty was added to the stimulus. The objective of this experiment was to demonstrate that the method of signal-clamped classification images can be used to uncover the perceptual template in the presence of spatial uncertainty and to estimate the spatial extent of the uncertainty.

General methods

Procedure

In the identification experiments (Experiments 1 and 3), the task was to indicate which of the two lowercase letters “o” or “x” was presented. In the detection experiments (Experiment 2), the task was to indicate whether the lowercase letter “o” was presented.

Each experiment consisted of 10 blocks with 1,050 trials per block. In each trial, a white-on-black letter was presented in a field of Gaussian white noise. The noisy stimuli (letter + noise) were presented at the fovea for Experiments 1 and 2 and at 10 deg in the inferior visual field for Experiment 3. The first 50 trials in each block were calibration trials in which the letter contrast was dynamically adjusted using the QUEST procedure (Watson & Pelli, 1983) as implemented in the Psychophysics Toolbox extension in MATLAB (Brainard, 1997; Pelli, 1997) to obtain a “calibrated” threshold letter contrast for reaching an accuracy level of 75%. The remaining 1,000 trials were divided into five subblocks of 200 trials each, and QUEST was reinitialized to the calibrated value at the beginning of each subblock. During the initial 50 calibration trials, the standard deviation of the prior distribution of the threshold value was set to 5 log units (a practically flat prior), but for each subblock, the prior was narrowed to a standard deviation of 1 log unit. This restricted the variability of the test contrast but still allowed adequate flexibility for the procedure to adapt to the observers’ continuously improving threshold levels.

For the foveal experiments, the letter size was fixed at 48 pt in Times New Roman font (x-height = 22 pixels). For the peripheral experiments, an acuity measurement was first performed for each subject, in which the subject was instructed to identify any of the 26 letters presented at a 10-deg retinal eccentricity in the inferior field. The size of the presented letter was varied using the QUEST procedure to achieve an identification accuracy of 79%. Twice the acuity size so determined was used in the main experiment.

Stimuli

The stimulus for each trial consisted of a white-on-black letter added to a Gaussian, spectrally white noise field of 128 × 128 pixels. Before being presented to the observers, each pixel of this noisy stimulus was duplicated by a factor of 2, such that four screen pixels were used to render a single pixel in the stimulus. This was done to increase the spectral density of the noise. The noise contrast was fixed at 25% rms. At a viewing distance of 105 cm, the noisy stimulus was of size 4.7 deg, and the noise had a two-sided spectral density of 85.5 μdeg². The mean luminance of the noisy background was 19.8 cd/m².
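The quoted spectral density can be checked with a short calculation. The sketch below assumes the common convention that the two-sided spectral density of static white pixel noise equals the squared rms contrast multiplied by the area of one noise pixel. That convention is an assumption here and is not stated in the text, and the variable names are illustrative.

```python
# Values taken from the stimulus description.
image_size_deg = 4.7       # width of the noise field in degrees of visual angle
image_size_pixels = 128    # width of the noise field in stimulus (noise) pixels
rms_contrast = 0.25        # noise contrast, 25% rms

# One stimulus pixel spans 2 x 2 screen pixels, so its side is image width / 128.
pixel_side_deg = image_size_deg / image_size_pixels

# Assumed convention: spectral density = (rms contrast)^2 * (area of one noise pixel).
spectral_density_deg2 = rms_contrast ** 2 * pixel_side_deg ** 2
spectral_density_microdeg2 = spectral_density_deg2 * 1e6

print(f"{spectral_density_microdeg2:.1f} microdeg^2")
```

With the rounded image size of 4.7 deg, the result falls within a few percent of the reported 85.5 μdeg². The calculation also shows why doubling the pixel size raises the spectral density fourfold at a fixed rms contrast.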

For the fovea experiments (Experiments 1 and 2), the letters were of size 0.81 deg (x-height) in visual angle. For the periphery experiment (Experiment 3), the letters were of size 0.85 deg for one subject and 1.15 deg for the other subject. The periphery letter size was 0.3 log units above the subject’s letter acuity at 10 deg eccentricity. The contrast of the target letter was adjusted with a QUEST procedure as described in the Procedure section.

For the experiments with spatial uncertainty, 1,000 uniformly distributed random positions, representing the center of a presented letter, were preselected with replacement from an imaginary square centered in the noise field. The spatial extent of the spatial uncertainty was manipulated by changing the size of the imaginary square: 32 stimulus pixels on a side (i.e., 64 screen pixels because of the factor-of-2 blocking to increase noise spectral density, 1.18 deg of visual angle) for the “medium” level of uncertainty and 64 stimulus pixels (128 screen pixels, 2.37 deg of visual angle) for the “high” level of uncertainty. For the experiments without spatial uncertainty, the letter was always presented at the center of the noise field, marked by a fixation cross before and after stimulus presentation. Figure 3a depicts a noisy stimulus used in the experiment.

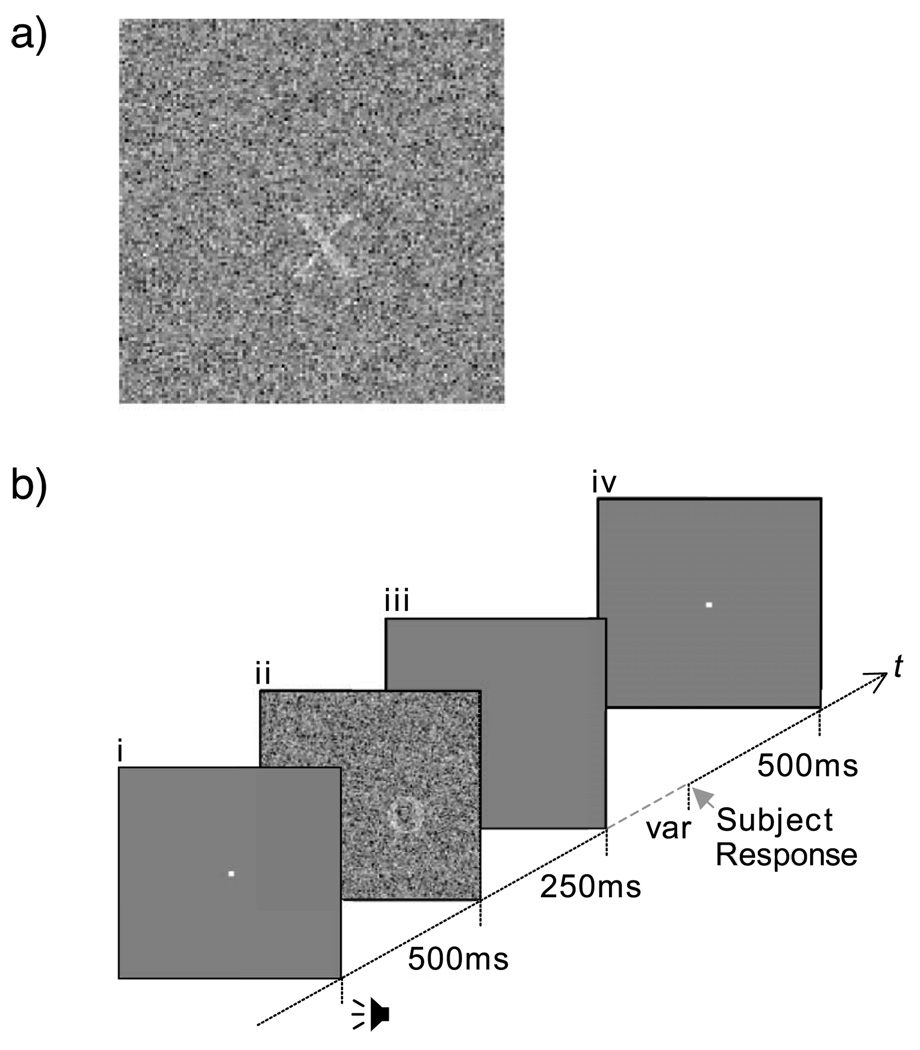

Figure 3.

(a) A sample of the noisy stimulus. (b) Timing of stimuli presentation: (1) fixation beep immediately followed by a fixation screen for 500 ms, (2) stimulus presentation for 250 ms, (3) subject response period (variable) with positive feedback beep for correct trials, and (4) 500 ms delay before onset of next trial.

The stimuli were displayed in the center of a 19-in. CRT monitor (Sony Trinitron CPD-G400), and the monitor was placed at a distance of 105 cm from a subject. The monitor has 11 bits (2,048 levels) of linearly spaced contrast levels. All 11 bits of the contrast levels were addressable to render the noisy stimulus for each trial. This was achieved by using a passive video attenuator (Pelli & Zhang, 1991) and custom-built contrast calibration and control software implemented in MATLAB. Only the green channel of the monitor was used to present the stimuli.

The stimuli were presented according to the following temporal design: (1) a fixation beep immediately followed by a fixation screen for 500 ms, (2) a stimulus presentation for 250 ms, (3) a subject response period (variable) with positive feedback beep for correct trials, and (4) a 500-ms delay before onset of the next trial (see Figure 3b).

At the end of each trial, the following data were collected for the subsequent classification-image reconstruction: the center position of the target letter, the state of the pseudorandom number generator used to produce the noise field, the identity and contrast of the presented letter, and the response of the subject.

Subjects

Five subjects (one of the authors and four paid students at the University of Southern California who were unaware of the purpose of the study) with normal or corrected-to-normal vision participated in the experiments. All had (corrected) acuity of 20/20 in both eyes. Subjects viewed the stimuli binocularly in a dark room. Written informed consent was obtained from each subject before the commencement of data collection. Because of the monotonous nature and long duration of each experiment (approximately 8–10 hr), subjects were allowed (and encouraged) to take breaks whenever they so desired. All the subjects completed their respective experiments in three to five sessions.

Classification-image reconstruction

For the purpose of reconstructing the classification images, the calibration trials in each experiment block were ignored. For the rest of the 10,000 trials, the noise field was first regenerated using the stored random number state. Next, the noise field was shifted with wraparound based on the stored target position information as if to recenter the presented letter (Equation 21). This shifting procedure was obviously unnecessary when there was no spatial uncertainty at the stimulus level (Experiment 3, and one condition in Experiment 1). For each trial, the recentered noise field was then classified into one of four bins based on the presented stimulus and the subjects’ response. The noise fields in each bin were then averaged pixel-wise to form the corresponding classification subimages.
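The binning and averaging step can be sketched as follows. This is a minimal illustration on toy data, assuming the noise fields have already been regenerated and recentered. The simple two-template linear observer used to generate responses is a stand-in with no uncertainty, not the model or the human data from the paper, and all names are hypothetical.

```python
import numpy as np

def classification_subimages(noise_fields, stimuli, responses):
    """Average noise fields pixel-wise within each (stimulus, response) bin."""
    sums, counts = {}, {}
    for noise, stim, resp in zip(noise_fields, stimuli, responses):
        key = (stim, resp)
        sums[key] = sums.get(key, 0.0) + noise
        counts[key] = counts.get(key, 0) + 1
    subimages = {key: sums[key] / counts[key] for key in sums}
    return subimages, counts

# Toy experiment: two disjoint rectangular templates and a linear template matcher.
rng = np.random.default_rng(0)
size, n_trials, contrast, sigma = 16, 4000, 0.1, 0.25
templates = {"o": np.zeros((size, size)), "x": np.zeros((size, size))}
templates["o"][4:6, 4:8] = 1.0
templates["x"][10:12, 8:12] = 1.0

noise_fields, stimuli, responses = [], [], []
for _ in range(n_trials):
    stim = "o" if rng.random() < 0.5 else "x"
    noise = rng.normal(0.0, sigma, (size, size))
    image = contrast * templates[stim] + noise
    # Respond with the template that correlates best with the noisy image.
    resp = max(templates, key=lambda name: np.sum(image * templates[name]))
    noise_fields.append(noise)
    stimuli.append(stim)
    responses.append(resp)

subimages, counts = classification_subimages(noise_fields, stimuli, responses)
ci_ox = subimages[("o", "x")]  # signal "o", response "x": an error-trial subimage
print({key: counts[key] for key in sorted(counts)})
```

In this toy case `ci_ox` is darker over the "o" template and brighter over the "x" template, as expected when there is no uncertainty. Keeping the four bins separate, rather than combining them, matches the recommendation made earlier for signal-clamped classification images.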

Relative SNR of classification subimages

The most practical concern in the signal-clamped classification method is whether the method would require an unreasonably large number of trials to make up for the loss in the number of error trials due to the need to use a relatively strong signal. For our experiments, as it will transpire, 10,000 trials were sufficient to obtain classification images of good quality. We sought to estimate from our data the minimum number of trials that would be needed when uncertainty is high. We did so by computing the relative SNR (rSNR; Murray et al., 2002) as a function of the number of trials; we then compared this function across different uncertainty levels.

Murray et al. (2002) defined rSNR of a classification image C as:

| (25) |

where T′ is an assumed template and σC is the pixel-wise standard deviation of the image C. Murray et al. showed that the discrepancy between T′ and the observer’s actual template only leads to a reduction in the amplitude of rSNR by a constant factor relative to the inherent variability of a classification image, thereby making the measurement less reliable. We modified this approach to measure only the classification subimages of the error trials (e.g., CIOX and CIXO for the letter identification experiment) and only the negative template images in these subimages.

For the two-letter identification task, we define rSNR as follows:

| (26) |

Here, X and O are the presented letter stimuli. Equation 26 is applicable to the letter detection task by setting X to zero. In essence, Equation 26 measured the SNR of the pixels that overlap the negative O template in the subimage CIOX and the negative X template in the subimage CIXO.

Experiment 1: Letter identification with and without spatial uncertainty

Experiment 1 was conducted in two different conditions, as was the case for the simulation study: The first condition (no uncertainty) was intended to replicate past findings without spatial uncertainty; the second condition was intended to verify our signal-clamped classification-image method and the associated theoretical claim that perceptual templates can be uncovered under conditions of spatial uncertainty.

Two subjects (A.O. and B.B.) participated in the no-uncertainty condition. In this condition, the letters (“o” and “x”) were presented at fixation without any spatial uncertainty. The task was to indicate which of the two letters (“o” or “x”) was presented at each trial. The subjects were explicitly told that the letters were always centered at fixation.

Subject A.O. and a third subject, A.S.N., participated in the high-uncertainty condition, in which the letter stimuli (“x” or “o”) were presented at any one of 1,000 different random positions (see General methods section). The set of random positions was chosen from within a square of 64 × 64 stimulus pixels (128 screen pixels or 2.37 deg on a side) centered in the stimulus area. The extent of the spatial uncertainty was not known explicitly to the subjects (except the author A.S.N.).
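A set of positions of this kind could be drawn as in the sketch below. The details of the actual procedure are given in the General methods section, which is not reproduced here, so the sketch assumes that positions are distinct integer stimulus-pixel offsets drawn uniformly from the square; the function name, seed, and offset convention are illustrative.

```python
import numpy as np

def sample_positions(m=1000, extent=64, seed=0):
    """Draw m distinct (row, col) offsets from an extent x extent square.

    ASSUMPTION: positions are integer stimulus-pixel offsets, sampled
    uniformly without replacement and expressed relative to the center.
    """
    rng = np.random.default_rng(seed)
    flat = rng.choice(extent * extent, size=m, replace=False)  # distinct cells
    rows, cols = np.divmod(flat, extent)
    offset = extent // 2
    return np.stack([rows - offset, cols - offset], axis=1)
```

On each trial, one of the rows of the returned array would then be chosen at random as the letter position.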

Results and discussions

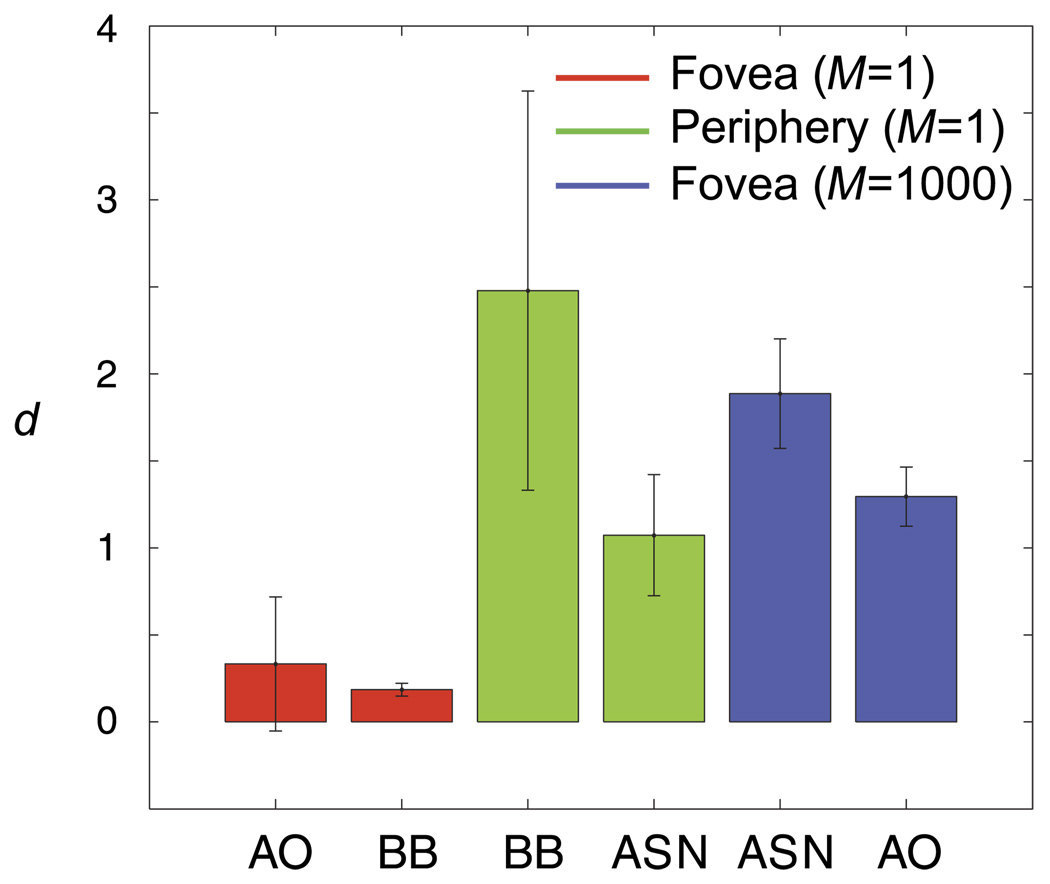

Introducing a high degree of uncertainty into the stimulus elevated the contrast threshold by a factor of 1.74 on average across subjects, although the effect of uncertainty on contrast threshold is not of interest here. The left column of Figure 4 shows the classification subimages for both levels of spatial uncertainty. The results of Experiment 1 bear out the theoretical predictions described earlier, that a clear classification image showing what could be an observer’s perceptual templates can be obtained under high spatial uncertainty within a reasonable number of trials (10,000 in this case). Consider only the classification subimages from the error trials (top right—CIOX and bottom left—CIXO). The most crucial finding is that across uncertainty conditions, there was little or no difference between the negative components of the error-trial classification subimages. This was true both within and between subjects, confirming the general validity of the signal-clamping approximation (Equations 9 and 15).

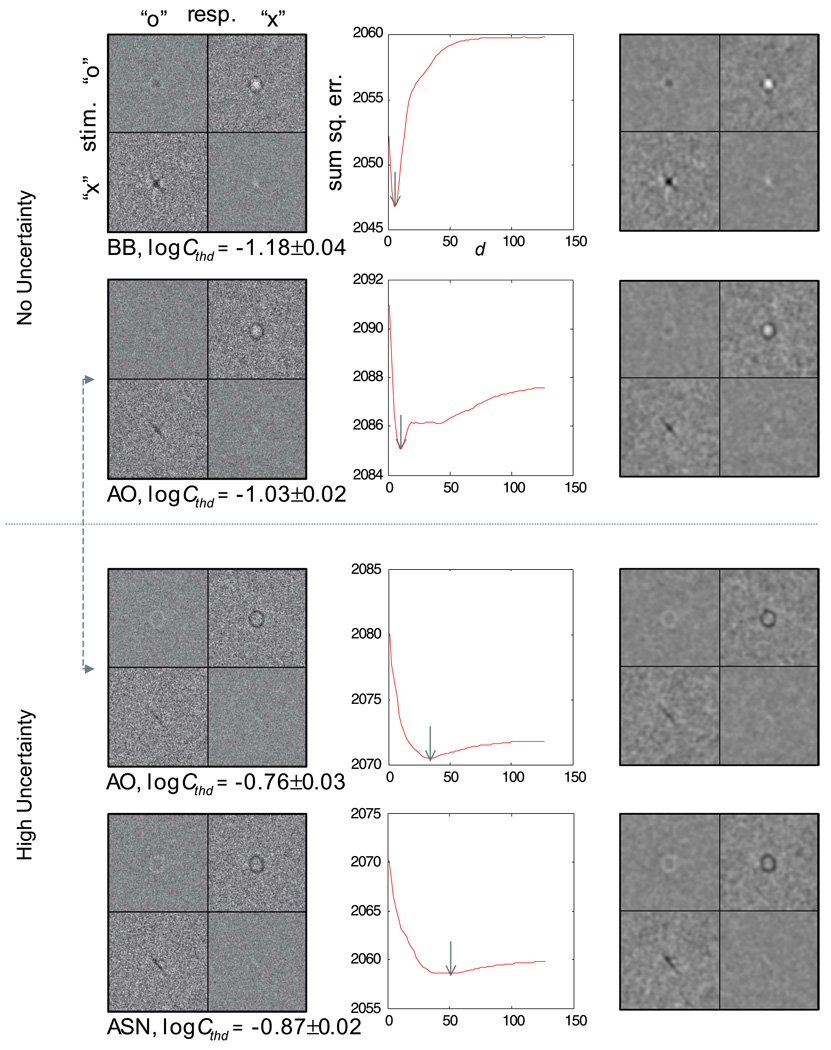

Figure 4.

Classification images for the human observers in the letter identification task (Experiment 1): top two rows, no spatial uncertainty (M = 1); bottom two rows, high spatial uncertainty (M = 1,000, d = 64 stimulus pixels); left column, classification images at a signal contrast corresponding to 75% correct; middle column, the spatial extent of the uncertainty estimated from the classification images in the left column; the value of d at the minimum of each curve (marked by the gray arrow) represents the mean spatial extent of the uncertainty; right column, blurred versions of the classification images from the left column using a Gaussian kernel with a space constant of 1.4 stimulus pixels for visualization purposes only. Image intensities in each column are identically scaled to facilitate across-condition comparisons.

There was a subtle difference in the estimated “x” templates from the two uncertainty conditions. One stroke appeared to be missing in the high-uncertainty condition. With the Times New Roman font used in the experiment, the missing stroke was about one third the width of the other stroke. As a result, the lowercase “x” is not isotropic in its ability to limit spatial uncertainty. It is less able to “clamp” spatial shift of an observer’s internal template along the thicker stroke than across the thicker stroke. Shift, or spatial uncertainty, along the thicker stroke blurred the image of the thinner stroke, rendering it invisible for subject A.S.N. and only partly visible for subject A.O. In other words, we do not think that the observer template for “x” changed as a function of spatial uncertainty; rather, the difference in the observed templates was a result of imperfect signal clamping, which is not always avoidable. We will return to this issue when we consider the perceptual templates in the visual periphery in Experiment 3.

The most noticeable difference between the classification subimages obtained from the two uncertainty conditions is that for the condition without uncertainty in the stimuli (top two rows), the subimages from the error trials showed both a negative and a positive component; for the condition with a high degree of uncertainty (bottom two rows), only the negative component was apparent, with the positive component being smeared out due to the spatial uncertainty. This is predicted by Equations 18, 23a, and 23b and consistent with our simulation results.

To aid visual inspection, particularly regarding the absence of the positive components in the high-uncertainty condition, we blurred the classification subimages with a Gaussian kernel with a space constant of 1.4 stimulus pixels (right column of Figure 4). In the condition where there was no spatial uncertainty in the stimulus, the positive component in the error-trial subimages appeared considerably weaker and less defined than the negative component. Because the positive component is susceptible to uncertainty (Equations 23a and 23b), it stands to reason that there existed measurable amounts of spatial uncertainty internal to the observers. Having no uncertainty in the stimuli does not guarantee the absence of uncertainty intrinsic to an observer.
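The blurring step can be sketched as a separable Gaussian convolution. This is an illustration only: it assumes that the "space constant" corresponds to the standard deviation of the Gaussian, and the truncation radius and edge handling are our choices rather than details taken from the original analysis.

```python
import numpy as np

def gaussian_blur(image, sigma=1.4):
    """Blur a 2-D image with a separable Gaussian kernel.

    ASSUMPTION: sigma (in stimulus pixels) plays the role of the space
    constant; the kernel is truncated at 4 sigma and edges are replicated.
    """
    radius = int(np.ceil(4 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x ** 2 / (2 * sigma ** 2))
    kernel /= kernel.sum()                       # preserve mean intensity
    padded = np.pad(np.asarray(image, dtype=float), radius, mode="edge")
    conv = lambda v: np.convolve(v, kernel, mode="valid")
    rows = np.apply_along_axis(conv, 1, padded)  # blur along columns index
    return np.apply_along_axis(conv, 0, rows)    # then along rows index
```

Because the kernel is normalized, a uniform image is left unchanged, so the blur redistributes intensity without altering the overall level used for across-condition comparisons.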

To estimate the spatial extent of the uncertainty (extrinsic and intrinsic) from the classification images, we fitted Equations 23a and 23b to the two error-trial subimages to obtain a numerical estimate of d in terms of stimulus pixels, using the lowercase stimuli as the presumed templates. As demonstrated in the simulation, the choice of the presumed templates, which may be different from the actual observer templates, does not significantly affect the estimated value of d. The residual landscapes are plotted in the middle column of Figure 4. The standard error of the estimate was determined by bootstrapping (Efron & Tibshirani, 1994). The results are summarized in Table 1. As expected, the estimated spatial extent (d) of the combined uncertainty (extrinsic and intrinsic) was significantly higher in the high-uncertainty condition than in the no-uncertainty condition. Moreover, these values are in reasonable agreement with the veridical values (1 for the no-uncertainty condition and 64 for the high-uncertainty condition).
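The fitting and bootstrapping procedure can be sketched as a grid search over d followed by resampling of trials. In this sketch, `predict` is a hypothetical stand-in for the model subimage given by Equations 23a and 23b (not reproduced here), and only a single subimage is fitted, whereas the analysis described above fits the two error-trial subimages together. All names are illustrative.

```python
import numpy as np

def fit_extent(subimage, predict, d_grid):
    """Grid search: return the d with the smallest squared residual, plus the landscape.

    predict(d) is a HYPOTHETICAL callable returning the model subimage for extent d.
    """
    residuals = np.array([np.sum((subimage - predict(d)) ** 2) for d in d_grid])
    return d_grid[int(np.argmin(residuals))], residuals

def bootstrap_se(noise_fields, predict, d_grid, n_boot=200, seed=0):
    """Resample error trials with replacement, refit d, and return the SD of the estimates."""
    rng = np.random.default_rng(seed)
    noise_fields = np.asarray(noise_fields, dtype=float)
    n = len(noise_fields)
    estimates = []
    for _ in range(n_boot):
        idx = rng.integers(0, n, size=n)         # bootstrap sample of trials
        sub = noise_fields[idx].mean(axis=0)     # recompute the subimage
        estimates.append(fit_extent(sub, predict, d_grid)[0])
    return float(np.std(estimates, ddof=1))
```

The `residuals` array returned by `fit_extent` corresponds to a residual landscape of the kind plotted against d, and its minimum gives the estimated extent.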

Table 1.

The estimated extents of spatial uncertainty for conditions in Experiment 1 in units of stimulus pixels.

| Condition | Subject | d ± SE |
|---|---|---|
| No uncertainty | B.B. | 5 ± 1.0 |
| No uncertainty | A.O. | 9 ± 10.4 |
| High uncertainty | A.O. | 35 ± 4.6 |
| High uncertainty | A.S.N. | 51 ± 8.5 |

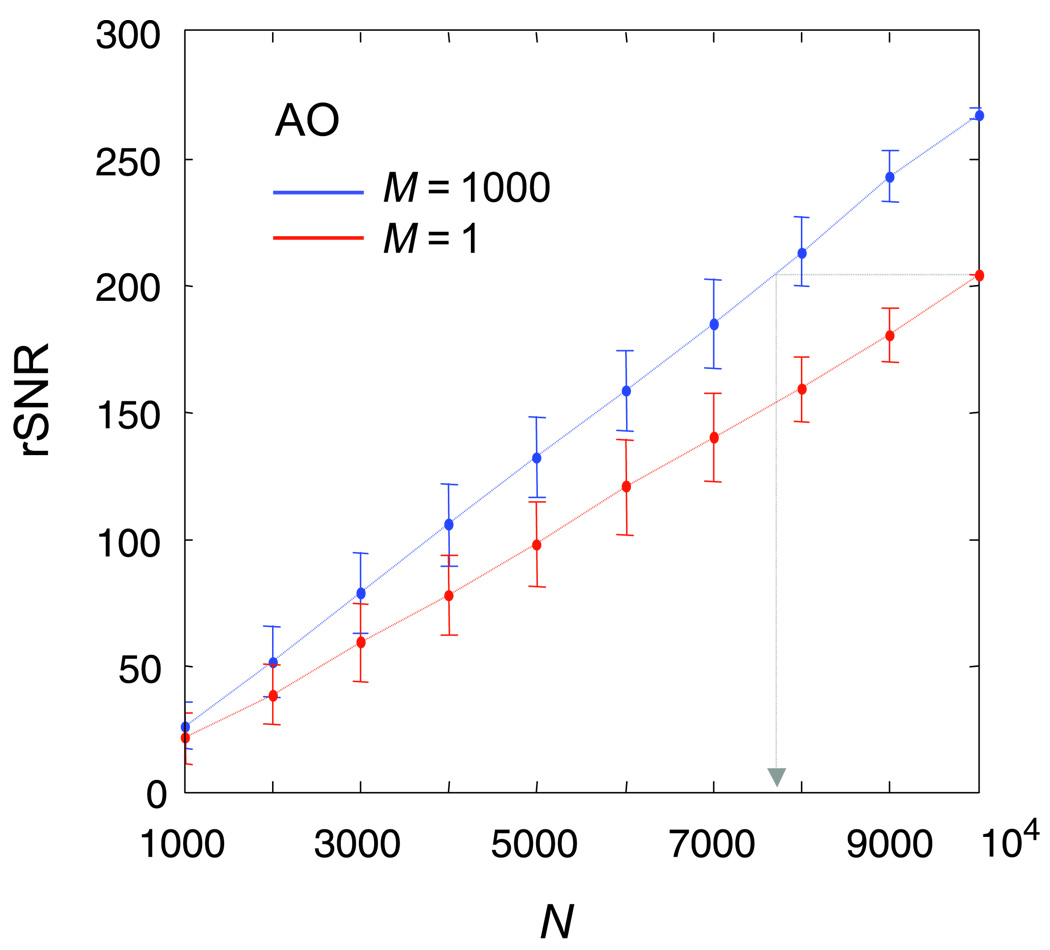

Lastly, we sought to determine the minimum number of trials that would be required to obtain classification subimages of sufficient quality. Figure 5 plots the rSNR (Equation 26) of the error-trial classification subimages for subject A.O. as a function of the number of trials for both the no-uncertainty and high-uncertainty conditions. rSNR increased linearly with the number of trials. This is expected because the pixel-wise variance of a classification subimage is inversely proportional to the number of trials. What is noteworthy is that the rSNR for the high-uncertainty condition was higher than that for the no-uncertainty condition, which is opposite to the results of the ideal-observer simulation (Figure 1). This remained the case even when we changed Equation 26 to include both the negative and positive template images in the calculation. We will address the relationship between rSNR and uncertainty in the General discussions section.
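The linear growth can be seen in one line, under two assumptions made here for illustration: that the SNR measure scales as a squared template projection divided by the pixel-wise variance of the subimage, and that the proportion of error trials p_e stays fixed as trials accumulate. Here N is the total number of trials, σ² is the per-trial pixel variance of the noise, and μ is the expected subimage; these symbols are ours and do not come from the numbered equations.

```latex
\sigma_C^2 = \frac{\sigma^2}{p_e N}
\quad\Longrightarrow\quad
\mathrm{SNR}(N) \;\propto\; \frac{(\mu \cdot T')^2}{\sigma_C^2}
= \frac{p_e\,(\mu \cdot T')^2}{\sigma^2}\, N .
```

The slope of the line therefore depends on both the error rate and the strength of the expected template image, so two conditions can reach the same SNR at different trial counts.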

Figure 5.

rSNR versus number of trials for subject A.O., who participated in both conditions of Experiment 1. The gray arrow marks the approximate number of trials that would be needed in the high-uncertainty condition to achieve the same classification-image quality as the no-uncertainty condition. Error bars are bootstrap standard errors of the mean.

Subjectively speaking, with 10,000 trials, both of the error-trial subimages for the no-uncertainty condition were of sufficient quality. If we use this as a standard, then we only need about 8,000 trials in the high-uncertainty condition to reach the same level of rSNR. We note with interest that although uncertainty leads to an increase in threshold, the increase in threshold, in turn, keeps in check the number of trials required for a signal-clamped classification-image experiment.
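Reading off the number of trials at which one rSNR curve reaches a reference level amounts to inverting a monotonically increasing curve. A minimal sketch follows, assuming the curve has been sampled at a few trial counts and is increasing; the function name is hypothetical, and any numbers passed to it in an example are synthetic rather than measured values.

```python
import numpy as np

def trials_to_match(n_trials, rsnr_curve, target_rsnr):
    """Interpolate the trial count at which an increasing rSNR curve reaches target_rsnr.

    Returns None when the target lies outside the sampled range, so that no
    extrapolation is reported as if it were an estimate.
    """
    n_trials = np.asarray(n_trials, dtype=float)
    rsnr_curve = np.asarray(rsnr_curve, dtype=float)
    if target_rsnr < rsnr_curve.min() or target_rsnr > rsnr_curve.max():
        return None
    # np.interp needs increasing x-coordinates, here the rSNR values
    return float(np.interp(target_rsnr, rsnr_curve, n_trials))
```

The target level would be the rSNR of the reference condition at its full trial count, and the curve would be that of the condition being compared.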

Experiment 2: Letter detection with medium and high degree of uncertainty

The ideal-observer simulations described earlier (see Figure 2) predict that the extent of the spatial uncertainty (d as opposed to M) can be estimated from the classification images. This prediction was tested in Experiment 2.

The task was to detect a lowercase letter “o” in noise. In each trial, the target was either presented at any one of 1,000 different random positions (see General methods section) or not presented with equal probability. Subjects were asked to indicate whether the letter was presented or not. In the medium-uncertainty condition, the total set of random positions was chosen from within a central square of 32 × 32 stimulus pixels (1.18 deg). The extent of the spatial uncertainty was indicated to the subjects by means of a white rectangular bounding box that was displayed during the fixation period immediately prior to the stimulus onset. Subjects J.H. and M.J. participated in this condition.

In the high-uncertainty condition, the 1,000 different random positions were chosen from a central square of 64 × 64 stimulus pixels (2.37 deg), and the extent of this uncertainty range was not explicitly indicated to the subjects. In all other respects, the high-uncertainty condition was identical to the medium-uncertainty condition. Two subjects, J.H. (who also participated in the medium-uncertainty condition) and B.B., participated in this condition.

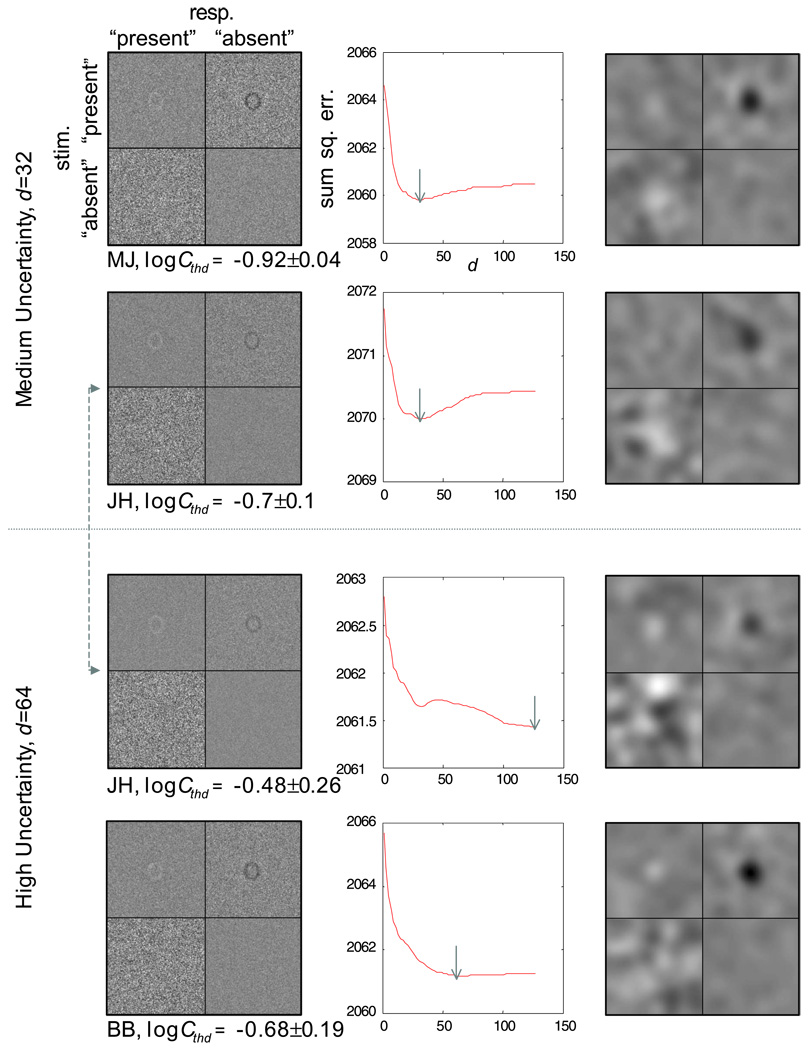

Results and discussions

The resulting classification images and the estimation of the spatial extent of the uncertainty are shown in Figure 6. As predicted by Equation 24 and consistent with the simulation result, a clear negative signal was visible in CImiss (the subimage from the miss trials) in the high-uncertainty condition. Also, as predicted, there was no clear image of the target in CIFA (the subimage from the false-alarm trials). The positive haze in CIFA is not as pronounced as that in the simulation, probably due to the presence of internal noise and intrinsic spatial uncertainty. The presence of a significant amount of intrinsic uncertainty in the observers may also explain the absence of any blurring of the negative template image in CImiss in the medium-uncertainty condition, which was observed in the simulation.

Figure 6.

Classification images for the human observers in the letter detection task (Experiment 2): top two rows, medium spatial uncertainty; bottom two rows, high spatial uncertainty; left column, classification images at a signal contrast corresponding to 75% correct; middle column, estimation of the spatial extent of the uncertainty from the classification images in the left column; the estimated value of d with the minimum residual error is marked by the gray arrows; right column, blurred versions of the classification images in the left column using a Gaussian kernel with a space constant of 14.1 stimulus pixels to visualize the positive haze in the false-alarm trials.

The positive haze in CIFA is more visible for the medium-uncertainty condition if we blur the subimages (using a Gaussian kernel with a space constant of 14.1 stimulus pixels, right column of Figure 6). Such a positive haze around the center of the image appears to be absent from CIFA in the high-uncertainty condition.

The quantitative results for the estimation of spatial extent are depicted as plots of residual versus d (middle column of Figure 6) and summarized in Table 2. These results were obtained by fitting Equation 24 to the classification subimages, using the target letter “o” as the presumed observer template. The spatial extent of the uncertainty (d) was significantly higher in the high-uncertainty condition as compared with the medium-uncertainty condition, both within and between subjects. The standard errors were estimated with bootstrap.

Table 2.

The estimated extents of spatial uncertainty for conditions in Experiment 2 in units of stimulus pixels.

| Condition | Subject | d ± SE |
|---|---|---|
| Medium uncertainty | M.J. | 31 ± 22 |
| Medium uncertainty | J.H. | 31 ± 13 |
| High uncertainty | J.H. | 127 ± 45.3 |
| High uncertainty | B.B. | 65 ± 28 |

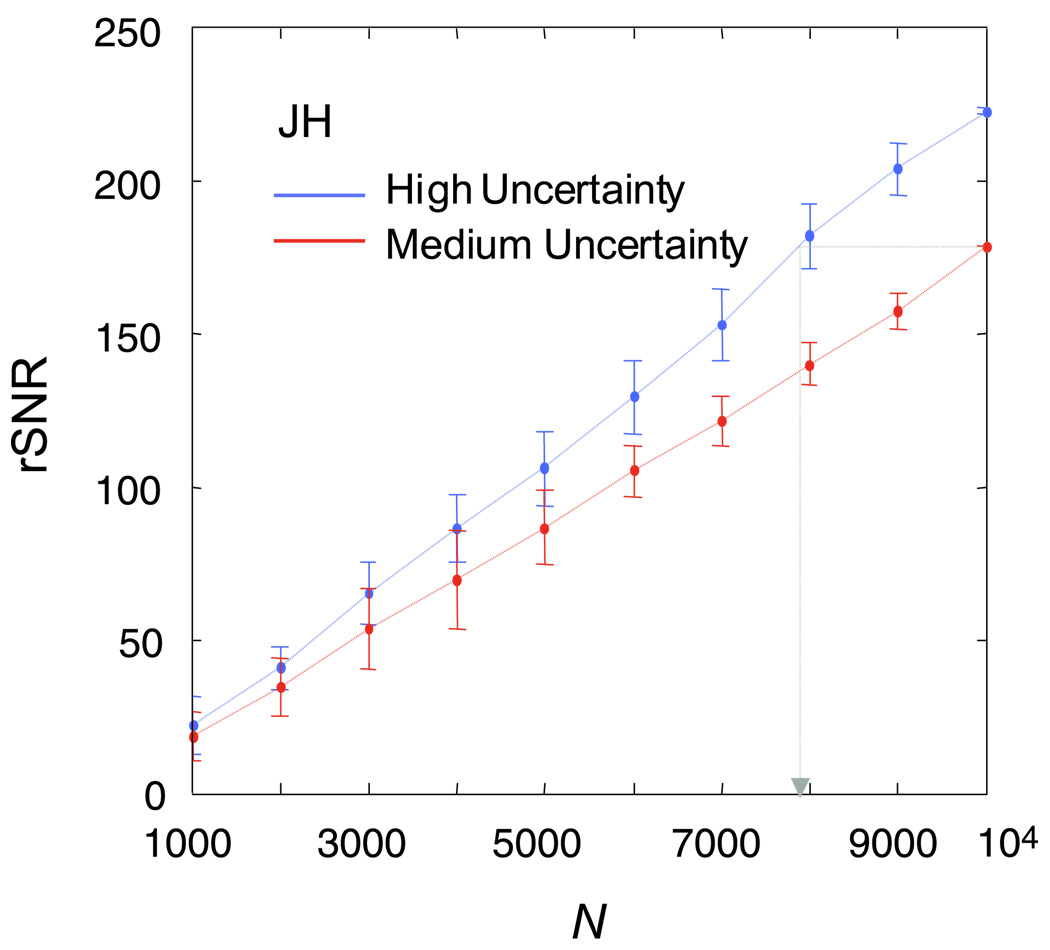

For the subject who participated in both of the uncertainty conditions (J.H.), we plotted rSNR versus number of trials in Figure 7. Consistent with the result of Experiment 1, we found that the rSNR was higher for the condition with a large extent in spatial uncertainty (and a higher detection threshold). However, unlike Experiment 1, this result is consistent with the result of the corresponding ideal-observer model (Figure 2), which also exhibited a higher rSNR in the high-uncertainty condition.

Figure 7.

Plot of rSNR versus number of trials for subject J.H. who participated in both conditions of Experiment 2. The gray arrow marks the approximate number of trials needed in the high-uncertainty condition to achieve the same classification-image quality as the medium-uncertainty condition.

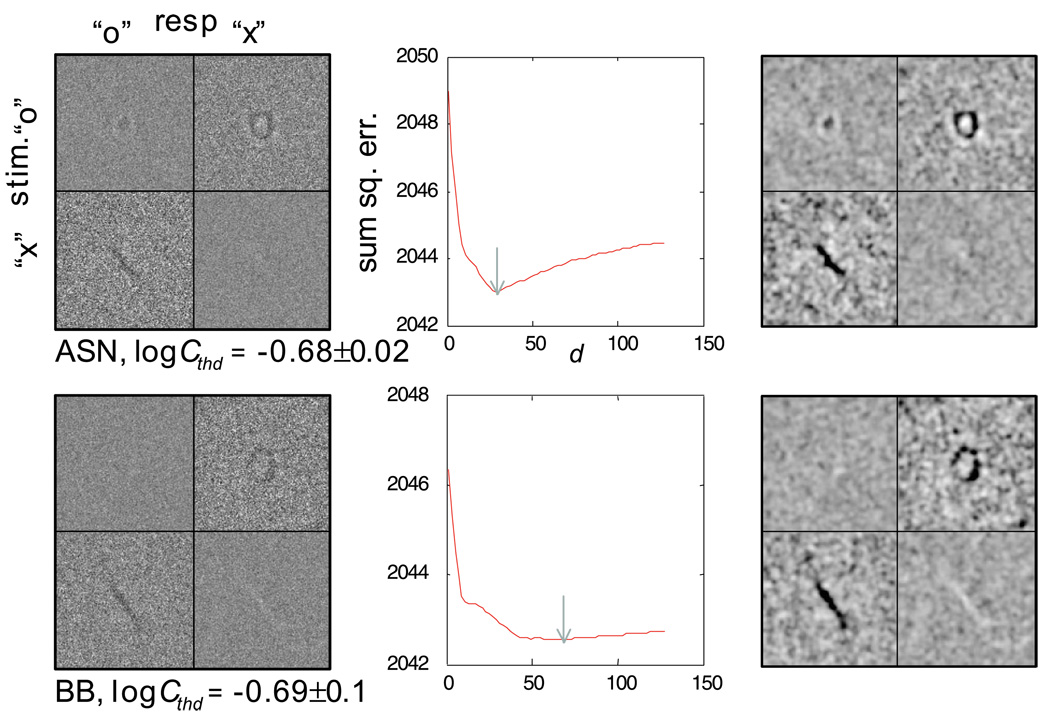

Experiment 3: Letter identification in the periphery

We explicitly manipulated spatial uncertainty in Experiments 1 and 2 to test whether the various properties of signal clamping derived from analysis and simulation were empirically relevant. The results from the two preceding experiments suggest that these properties are indeed valid. In Experiment 3, we used the signal-clamped classification-image method to estimate the letter templates in the visual periphery and to determine the level of intrinsic spatial uncertainty in the periphery (10 deg in the inferior field). It has been suggested that one reason for impoverished form vision in the periphery is a high degree of intrinsic spatial uncertainty. The intrinsic spatial uncertainty may be due to undersampling of the visual space (Levi & Klein, 1996; Levi et al., 1987) or to an uncalibrated disarray in spatial sampling (Hess & Field, 1993; Hess & McCarthy, 1994). The theory of uncalibrated disarray would predict a distorted perceptual template, whereas that of undersampling would not. These predictions are contingent on the possibility of recovering the observer’s template despite the high intrinsic spatial uncertainty in the periphery.

Prior to the main experiment, an acuity measurement was first performed on each subject (see General methods section for details). In the main experiment, letter stimuli (lowercase “x” and “o”) were presented at a fixed retinal eccentricity of 10 deg. There was no stimulus-level spatial uncertainty, and the letter was always presented at the center of the noise field. The subjects were apprised of this fact before the commencement of data collection. Subjects maintained fixation at a green LED and were asked to identify which letter was presented in each trial.