Abstract

Automated facial measurement using computer vision has the potential to objectively document continuous changes in behavior. To examine emotional expression and communication, we used automated measurements to quantify smile strength, eye constriction, and mouth opening in two six-month-old/mother dyads who each engaged in a face-to-face interaction. Automated measurements showed high associations with anatomically based manual coding (concurrent validity); measurements of smiling showed high associations with mean ratings of positive emotion made by naive observers (construct validity). For both infants and mothers, smile strength and eye constriction (the Duchenne marker) were correlated over time, creating a continuous index of smile intensity. Infant and mother smile activity exhibited changing (nonstationary) local patterns of association, suggesting the dyadic repair and dissolution of states of affective synchrony. The study provides insights into the potential and limitations of automated measurement of facial action.

During interaction, infants and mothers create and experience emotional engagement with one another as they move through individual cycles of affect (Cohn & Tronick, 1988; Lester, Hoffman, & Brazelton, 1985; Stern, 1985). Synchronous, rhythmic interaction is predicted by early infant physiological cyclicity (Feldman, 2006) and interaction patterns characterized by maternal responsivity and positive affect predict later toddler internalization of social norms (Feldman, Greenbaum, & Yirmiya, 1999; Kochanska, 2002; Kochanska, Forman, & Coy, 1999; Kochanska & Murray, 2000). Previous investigations of interaction have relied on manual coding of discrete infant and parent behaviors (Kaye & Fogel, 1980; Van Egeren, Barratt, & Roach, 2001) or ordinal scaling of predefined affective engagement states (Beebe & Gerstman, 1984; Cohn & Tronick, 1987; Weinberg, Tronick, Cohn, & Olson, 1999). Both methods rely on labor intensive manual categorization of ongoing behavior streams (Cohn & Kanade, 2007). In the current study, automated measurements were used to measure infant and mother emotional expressions during face-to-face interaction (see Figure 1).

Figure 1. Active Appearance Models (AAM).

a) The AAM is a mesh that tracks and separately models rigid head motion (e.g., yaw, pitch, and roll, visible in the upper left hand portion of the images) and non-rigid facial movement over time (left to right). b) In this output display, each partner's face is outlined to illustrate lip corner movement, mouth opening, the eyes, brows, and the edges of the lower face; these outlines are reproduced in iconic form to the right of each partner.

The temporal precision of automated measurements is ideal for examining infant-parent interaction at various time-scales. Researchers are often interested in capturing a summary measure of infant-parent synchrony and assume these interactive dynamics are stable over the course of an interaction. Yet infant-parent interaction can be characterized as the disruption and repair of synchrony, of matches and mismatches in affective engagement (Schore, 1994; Tronick & Cohn, 1989). Disruption and repair imply time-varying changes in how infant and parent interactive behavior is associated. The current study applies automated measurements to examine the stability of infant-parent interaction dynamics over the course of an interaction.

Automated measurements may offer insights into the dynamics of positive emotional expressions, a building block of early interaction. The smile—in which the zygomatic major pulls the lip corners obliquely upward—is the prototypic expression of joy. Among both infants and adults, stronger smiles involving greater lip corner movement tend to occur during positive events and are perceived as more emotionally positive than weaker smiles (Bolzani-Dinehart et al., 2005; Ekman & Friesen, 1982; Fogel, Hsu, Shapiro, Nelson-Goens, & Secrist, 2006; Schneider & Uzner, 1992). Likewise, smiles involving eye constriction—Duchenne smiles in which orbicularis oculi, pars lateralis, raises the cheek under the eye—tend to occur in positive situations and to be perceived as more emotionally positive than smiles not involving eye constriction (Bolzani-Dinehart et al., 2005; Ekman, Davidson, & Friesen, 1990; Fogel et al., 2006; Fox & Davidson, 1988; Frank, Ekman, & Friesen, 1993; Messinger, 2002; Messinger, Fogel, & Dickson, 2001).

Infant smiles involving mouth opening also tend to occur during emotionally positive periods of interaction (Messinger et al., 2001) and may be perceived as more emotionally positive than other smiles (Beebe, 1973; Bolzani-Dinehart et al., 2005; Messinger, 2002). Among infants, mouth opening tends to accompany cheek-raise smiling, and smiles with these characteristics tend to involve stronger lip corner movement than other smiles (Fogel et al., 2006; Messinger et al., 2001). It is unclear, however, whether continuous changes in one of these parameters are associated with continuous changes in the others (Messinger, Cassel, Acosta, Ambadar, & Cohn, 2008). It is also not clear whether associations between parameters such as eye constriction and mouth opening are dependent on smiling, or whether they are a more general feature of infant facial action. Finally, little is known about how such parameters are associated in mothers when they engage in face-to-face interactions with their infants (Chong, Werker, Russell, & Carroll, 2003). By addressing these questions, we sought to describe differences and similarities in how mothers and their infants expressed positive emotion in interaction with one another.

The laborious quality of manual coding represents a limitation to large-scale measurement of continuous changes in facial expression. Early face-to-face interactions, for example, can challenge infants’ self-regulation abilities, leading to autonomic behaviors such as spitting up (Tronick et al., 2005; Weinberg & Tronick, 1998) and non-smiling actions that may diminish the expression of positive emotion (e.g., dimpling of the lips and lip tightening). Perhaps because of difficulties in reliably identifying such potentially subtle facial actions, little is known about their role in early interaction. Automated measurement is a promising approach to addressing such difficulties (Cohn & Kanade, 2007).

Automated measurement approaches have the potential to produce objective, continuous documentation of behavior (Bartlett et al., 2006; Cohn & Kanade, 2007). Automated measurement of adult faces has led to progress distinguishing spontaneous and posed smiles (Schmidt, Ambadar, Cohn, & Reed, 2006), objectively categorizing pain-related facial expressions (Ashraf, 2007), detecting coordination patterns in head movement and facial expression (Cohn et al., 2004), identifying predictors of expressive asymmetry (Schmidt, Liu, & Cohn, 2006), and documenting post-intervention improvements in facial function (Rogers et al., 2007). Applied to infants, automated measurements have been used to indicate similarities between static positive and negative facial expressions (Bolzani-Dinehart et al., 2005) and to document the dynamics of five segments of infant smiling (Messinger et al., 2008). These studies paved the way for the current implementation of automated measurement of facial expressions during infant-parent interaction.

In this study, automated facial image analysis (computer vision modeling supplemented with machine learning) was used to measure infant and mother smile strength, eye constriction, and mouth opening, as well as infant non-smiling actions. Using a repeated case study of two infant-mother dyads, we assessed the association of these automated measurements with anatomically based manual coding (an index of concurrent validity) and with subjective ratings of positive emotion (an index of construct validity). We then documented the association of the automated measures of facial actions, asking whether infants and mothers smile in a similar fashion. Finally, we described the interactive patterns of infant and mother smiling activity. Specifically, we contrasted overall associations in infant and mother smile activity during relatively lengthy segments of interaction with associations during brief periods of interaction using windowed cross-correlations (Boker, Xu, Rotondo, & King, 2002).

Methods

Participants

We sequentially screened four non-risk six-month-old/mother dyads who took part in an ongoing longitudinal project comparing infants who were and were not at risk for autism. With both partners seated in a standard face-to-face position, mothers were asked to play with their infants as they normally would at home. In two dyads, excessive occlusion precluded automated measurement. In one of these dyads, the mother played peek-a-boo by repeatedly covering her face with her hands; in the other, the lower portion of mother’s face was frequently obscured by her infant’s head (over which she was being video recorded). Efforts to allow automated analysis of occluded faces (Gross, Matthews, & Baker, 2006) were not sufficient to capture these data, an issue to which we return in the discussion. The remaining two dyads are the focus of this report.

These dyads were designated A (male infant) and B (female infant). Both mothers were White. One identified her infant as White; the other identified her infant as White and Black. Both mothers had finished four years of college, and one had a graduate degree. In these dyads, we selected the four longest video segments that involved relatively unobstructed views of each partner’s face. Facial expression played no role in this selection. The segments comprised 157 (Dyad A) and 146 seconds (Dyad B) of each dyad’s 180 second interaction. Periods not modeled were subject to occlusion, typically of the mother’s face, caused either by her hands (e.g., when playing “itsy-bitsy spider”) or by 90 degree head rotation (e.g., during a variant of peek-a-boo).

Comparison Indices: Positive Emotion Ratings and Manual Coding

Positive emotion ratings

We assessed construct validity by examining the correspondence between automated measurements of facial actions and ratings of positive emotion. Data to assess the construct validity of smile-related measures came from the first video segment for each dyad in which automated measurements were conducted (segment 2 for Dyad A and 3 for Dyad B). The two infant and two mother video segments were rated in randomized order. Raters used a joystick interface to move a cursor over a continuous color-coded rating scale to indicate the “positive emotion, joy, and happiness” (from none to maximum) they perceived as the video played. Raters were 32 female and 21 male undergraduate students (M age = 18 years, range: 18–30, 59% White, 20% Hispanic, 13% Black, 6% Asian, and 2% other) obtaining extra credit in an introductory psychology class. Average intra-class correlations over segments were high (M = .95, Range: .88 – .96), indicating the consistency of ratings over time. We used the mean of the ratings in analyses because of the reliability of such aggregated independent measurements (Ariely, 2000).

Manual coding

The Facial Action Coding System (FACS) (Ekman, Friesen, & Hager, 2002) is an anatomically-based gold standard for measuring facial movements (termed Action Units, AUs), which has been adapted for use with infants (BabyFACS) (Oster, 2006). To assess convergent validity and inter-rater reliability, frame-by-frame anatomically-based coding of mother and infant facial movement was conducted by FACS-certified, BabyFACS-trained coders. Both smiling (AU12) and eye constriction caused by orbicularis oculi, pars lateralis (AU6) were coded on 6-point scales, ranging from absent (0), to trace to maximum (1–5, corresponding to the five FACS intensity levels). Smiling and eye constriction were coded for all video segments because this coding was used to train measurement algorithms (see below). Mouth opening was manually coded in the first interaction segment for each dyad in which automated measurements had been conducted (segment 2 for Dyad A and 3 for Dyad B). These segments were also used to assess inter-rater reliability. Mouth opening was coded on a 12-point scale from 0 (jaws together, lips together), to 1 (lips parted, AU25) through the five intensity levels of jaw dropping (AU26) and the five intensity levels of jaw stretching (AU27). There was no variation in lip parting – aside from its presence or absence – that affected degree of mouth opening in this sample (Oster, 2006). For continuous coding of FACS AU intensity, the mean correlation between coders was .81 for infant codes and .70 for mother codes. The presence and absence of mother tickling was coded reliably (mean Kappa = .83, mean 93% agreement) based on the movement of the mother’s hands against the infant’s body.

Infant A occasionally produced a set of non-smiling lower and midface actions that did not have documented affective significance in infants (Oster, 2006). Two of these actions (dimpling, AU14, and lip tightening, AU23) are associated with smile dampening in adults (Ekman, Friesen, & O'Sullivan, 1988; Reed, Sayette, & Cohn, 2007). The remaining actions (spitting-up; upper lip raising, AU10; deepening of the nasolabial furrow, AU11; and lip stretching, AU20) can be elements of infant expressions of negative affect if they occur with actions such as brow lowering (AU4) (Messinger, 2002; Oster, 2006), but this co-occurrence was observed only once in the current study. Based on preliminary analyses showing similarities in how the actions were associated, we created a composite non-smiling action category. The non-smiling actions were coded when they occurred at the trace (A) level or higher. If the actions co-occurred, the dominant action was coded. These actions were coded both in the presence and absence of smiling. Inter-rater reliability was assessed in Infant A’s video segment four (the actions did not occur in A’s segment 2). Reliability in distinguishing the presence and absence of these infrequently occurring actions was adequate (Bakeman & Gottman, 1986; Bruckner & Yoder, 2006) (Kappa = .52, 83% agreement).

Automated Measurements

Computer vision software, CMU/Pitt’s Automated Facial Image Analysis (AFA4), was used to model infant and mother facial movement (see Figure 1). AFA4 uses Active Appearance Models (AAMs), which distinguish rigid head motion parameters (e.g., x translation, y translation, and scale) from expressive facial movement. AAMs involve a shape component and an appearance component. The shape component is a triangulated mesh model of the face containing 66 vertices each of which has an X and Y coordinate (Baker, Matthews, & Schneider, 2004; Cohn & Kanade, 2007). The mesh moves and deforms in response to changes in parameters corresponding to a face undergoing both whole head rigid motion and non-rigid motion (facial movement). The appearance component contains the 256 grey scale values (lightness/darkness) for each pixel contained in the modeled face.

AAMs were trained on approximately 3% of the frames in the video record, typically those that exhibited large variations in appearance with respect to surrounding frames. In these training frames, the software fit the mesh to the videotaped image of the face and a research assistant adjusted the vertices to ensure the fit of the mesh. After training, the AAM independently modeled the entire video sequence (i.e., both training and test frames). We collected data on the AAM training procedure in two of the four video recorded segments contributed by each dyad. Training required approximately 4 minutes per frame for a B.A. level research assistant who was supervised by a Ph.D. level computer vision scientist.

Mouth opening was measured as the mean vertical distance between the upper and lower lips at three points (midline and below the right and left nostril) using the shape component of the AAM. Smile strength, eye constriction caused by orbicularis oculi (pars lateralis), and the non-smiling actions exhibited by Infant A involved complex changes in the shape and appearance of the face. Separate machine learning algorithms were used to measure each of these variables. The algorithms were trained to use a lower dimension representation of the appearance and shape data from the AAM to separately predict three classes of manually coded infant actions: a) smiling intensity (AU12, from absent to maximal), b) eye constriction (AU6, from absent to maximal), and c) non-smiling actions (spitting-up, dimpling, lip tightening, upper lip raising, nasolabial furrow deepening, and lip stretching). Each instance of training was carried out using a separate sample of 13% of the frames; these training frames were randomly selected to encompass the entire range of predicted actions. Measures of association between the algorithms and manual coding (reported below) exclude the training frames.
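
The mouth-opening measure described above reduces to a simple computation on mesh vertices. The following is a minimal sketch, not the AFA4 implementation: the function name, the ordering of the three vertex pairs, and the example coordinates are hypothetical, and image coordinates are assumed to have y increasing downward.

```python
from statistics import mean


def mouth_opening(upper_lip, lower_lip):
    """Mean vertical lip separation at three measurement points.

    upper_lip, lower_lip: sequences of three (x, y) vertices, paired in the
    same order (e.g., below left nostril, midline, below right nostril).
    Assumes image coordinates in which y increases downward.
    """
    if len(upper_lip) != 3 or len(lower_lip) != 3:
        raise ValueError("expected three vertices per lip")
    # Vertical distance at each paired point, then the mean of the three.
    return mean(lo[1] - up[1] for up, lo in zip(upper_lip, lower_lip))


# Hypothetical vertex positions (in pixels) for a single video frame.
upper = [(40.0, 100.0), (50.0, 98.0), (60.0, 100.0)]
lower = [(40.0, 106.0), (50.0, 110.0), (60.0, 106.0)]
opening = mouth_opening(upper, lower)
print(opening)
```

Applying the function to every frame would yield a continuous mouth-opening series of the kind that is correlated with manual coding in the Results.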

Data analysis

This is a descriptive study of expressivity and interaction in two infant-mother dyads using relatively new measurement techniques. Significance tests were employed to identify patterns within and between dyads using video frames as the unit of analysis. Analyses were based on correlation coefficients, which index effect sizes. Correlations were compared using Z-score transformations (Meng, Rosenthal, & Rubin, 1992). Correlations at various levels of temporal resolution were used to describe infant-mother interaction. We used windowed cross-correlations (Boker, Xu, Rotondo, & King, 2002), for example, to examine the association between infant and mother smiling activity over brief, successive windows of interaction.
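
For readers unfamiliar with the comparison procedure cited above, the sketch below shows one common form of the Meng, Rosenthal, and Rubin (1992) test for two correlations that share a variable and are computed on the same observations. It is an illustration only: the function names and the example values are hypothetical, and the text does not specify which form of the test was applied to each comparison.

```python
import math


def fisher_z(r):
    """Fisher r-to-z transformation."""
    return math.atanh(r)


def meng_z(r1, r2, rx, n):
    """Z statistic for the difference between two correlated correlations.

    r1, r2: correlations of two predictors with a common outcome
    rx:     correlation between the two predictors
    n:      number of observations
    """
    r2bar = (r1 ** 2 + r2 ** 2) / 2           # mean squared correlation
    f = min((1 - rx) / (2 * (1 - r2bar)), 1.0)  # f is capped at 1
    h = (1 - f * r2bar) / (1 - r2bar)
    scale = math.sqrt((n - 3) / (2 * (1 - rx) * h))
    return (fisher_z(r1) - fisher_z(r2)) * scale


def two_sided_p(z):
    """Two-sided p value under the standard normal distribution."""
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical values, not taken from the tables in this report.
z_demo = meng_z(r1=0.60, r2=0.30, rx=0.80, n=1000)
p_demo = two_sided_p(z_demo)
print(round(z_demo, 2), p_demo)
```

Because video frames are the unit of analysis, n is large and even modest differences between correlations produce large Z values; this is one reason to read the correlations themselves as effect sizes.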

Results

We first report correlations of automated measurements of facial action with rated positive emotion (construct validity) and with manual FACS/BabyFACS coding (convergent validity). We next examine the associations of automated measurements of smile strength, eye constriction, and mouth opening within infants and within mothers. Next, we examine the overall correlation of infant and mother smiling activity, tickling, and infant non-smiling actions. Finally, we use windowed cross-correlations to investigate local changing patterns of infant-mother synchrony.

Comparison Indices: Positive Emotion Ratings and Manual Coding

Associations with positive emotion ratings

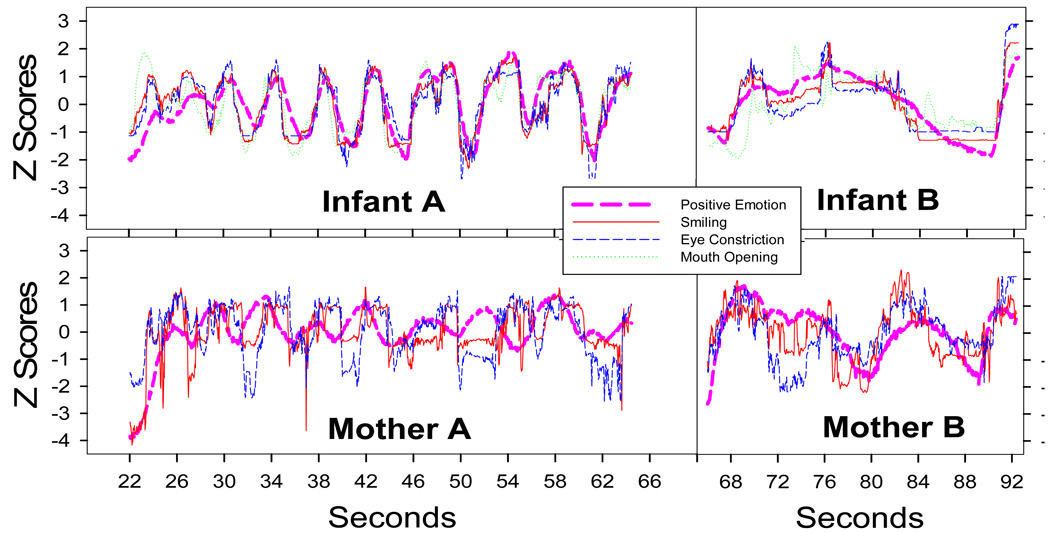

We assessed the association of smile strength, mouth opening, and eye constriction with the mean continuous rating of positive emotion (see Table 1 and Figure 2). We expected ratings to lag the continuously running video as raters assessed affective valence and moved the joystick. After examining cross-correlations, we chose a uniform lag of 3/5 of a second, which optimized the associations of the mean ratings and the automated measurements of facial action. Smile strength was a common predictor of perceived positive emotion among both infants and mothers. Rated infant positive emotion was relatively highly correlated with infant smile strength, eye constriction, and mouth opening (M = .77). Rated mother positive emotion was moderately correlated with mother smile strength (M = .58) and showed lower correlations with eye constriction (M = .29) and with mouth opening (M = .36).
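
The lag selection described above can be sketched as a search over candidate lags for the one that maximizes the correlation between a facial measurement and the later rating. This is an illustrative sketch on synthetic data, not the analysis code: the function names are hypothetical, a frame rate of 30 frames per second is assumed (it is implied by the frame counts and durations in Table 3 but not stated), and the sketch optimizes a single measurement, whereas the study chose one uniform lag across measurements.

```python
import math


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def lagged_r(measure, rating, lag):
    """Correlate measure[t] with rating[t + lag]; lag is in frames, >= 0."""
    if lag == 0:
        return pearson(measure, rating)
    return pearson(measure[:-lag], rating[lag:])


def best_lag(measure, rating, max_lag):
    """Lag (in frames) at which the rating best tracks the measurement."""
    return max(range(max_lag + 1), key=lambda k: lagged_r(measure, rating, k))


FPS = 30  # assumed frame rate
# Synthetic data: the "rating" is the measurement delayed by 18 frames.
measure = [math.sin(t / 20.0) for t in range(600)]
true_lag = 18
rating = [0.0] * true_lag + measure[:-true_lag]

chosen = best_lag(measure, rating, max_lag=45)
print(chosen, chosen / FPS)  # lag in frames and in seconds
```

Under the assumed frame rate, a lag of 18 frames corresponds to the three fifths of a second used in the analyses.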

Table 1.

Correlations of Automated Facial Measurements with FACS/BabyFACS Coding and Emotional Valence Ratings

| Partner | Comparison | Dyad A: Smile Presence | Dyad A: Smile Strength | Dyad A: Eye Constriction | Dyad A: Mouth Open | Dyad B: Smile Presence | Dyad B: Smile Strength | Dyad B: Eye Constriction | Dyad B: Mouth Open |
|---|---|---|---|---|---|---|---|---|---|
| Infant | Coding | 82%, K = .64 | .92 | .88 | .79 | 89%, K = .79 | .94 | .90 | .86 |
| Infant | Rating | | .79 | .73 | .65 | | .90 | .78 | .74 |
| Mother | Coding | 90%, K = .76 | .94 | .94 | .89 | 94%, K = .82 | .90 | .91 | .71 |
| Mother | Rating | | .61 | .24 | .49 | | .54 | .34 | .23 |

Note. Correlations involving emotional valence rating and correlations involving mouth opening are based on the first interaction segment for each dyad in which automated measurements were conducted (segment 2 for Dyad A, n = 1292 frames, and segment 3 for Dyad B, n = 818 frames). Correlations between automated and coded measurements of smile strength and eye constriction are based on all frames except those used for training the automated Support Vector Machines (Dyad A n = 4,100, Dyad B n = 3,815). FACS = Facial Action Coding System. For all correlations and Cohen's Kappas (K), ps < .01.

Figure 2. Smile parameters and rated positive emotion over time.

Infant graphs show the association of automated measurements of smile strength, eye constriction, mouth opening, and rated positive emotion. Mother graphs show the association of automated measurements of smile strength, eye constriction, and rated positive emotion. Positive emotion is offset by three fifths of a second to account for rating lag.

Associations with manual coding

Correlations between automated measurements and FACS/BabyFACS coding of infant and mother smiling, eye constriction, and mouth opening are displayed in Table 1. Correlations were high for both infants (M = .87) and mothers (M = .88), indicating strong correspondences between automated and anatomically-based manual coding. Agreement between manual and automated coding on the absence versus presence of smiles (at the A, “slight,” level or stronger) was also high for both infants and mothers, as assessed by percent agreement (M = 89%) and by Cohen’s Kappa (M = .75). Automated measurements of the presence and absence of non-smiling actions showed adequate agreement with manual measurements, 89%, Kappa = .54 (Bakeman & Gottman, 1986).
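
The two agreement indices reported above can be computed as in the following sketch for presence/absence codes. It is illustrative only: the function names, the threshold used to convert a continuous automated measurement into presence versus absence, and the ten example frames are hypothetical.

```python
def binarize(intensities, threshold=1.0):
    """Presence (1) or absence (0) of an action; the threshold is assumed."""
    return [1 if v >= threshold else 0 for v in intensities]


def agreement_and_kappa(a, b):
    """Percent agreement (as a proportion) and Cohen's kappa for binary codes."""
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    pa, pb = sum(a) / n, sum(b) / n
    # Agreement expected by chance from each coder's base rates.
    expected = pa * pb + (1 - pa) * (1 - pb)
    return observed, (observed - expected) / (1 - expected)


# Hypothetical frame-by-frame intensities (0 = absent, 1-5 = FACS levels).
manual_intensity = [0, 0, 1, 2, 3, 1, 0, 2, 4, 0]
auto_intensity = [0.2, 1.1, 1.4, 2.2, 2.9, 0.7, 0.1, 1.8, 3.6, 0.3]

manual = binarize(manual_intensity)
auto = binarize(auto_intensity)
observed, kappa = agreement_and_kappa(manual, auto)
print(observed, round(kappa, 2))
```

Kappa is lower than raw agreement because it discounts the agreement that the two sets of codes would reach by chance, which matters when an action is present (or absent) in most frames.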

Infant smiling parameters and mother smiling parameters

We correlated automated measurements of smile strength, eye constriction, and mouth opening to understand how these parameters were associated. Similarities and differences between infants and mothers emerged (see Table 2). Smile strength and eye constriction were highly correlated within infants and within mothers, M = .84. In infants, correlations of mouth opening with smile strength (rA = .66, rB = .47) and eye constriction (rA = .64, rB = .54) were moderate to high. In mothers, correlations of mouth opening with smile strength (rA = .19, rB = .52) and with eye constriction (rA = .21, rB = .30) were lower and more variable.

Table 2.

Correlations of Automated Measurements of Smile-Related Actions Within and Between Partners

| | Infant: Smile Strength | Infant: Eye Constriction | Infant: Mouth Opening | Mother: Smile Strength | Mother: Eye Constriction | Mother: Mouth Opening |
|---|---|---|---|---|---|---|
| Infant: Smile Strength | - | .86 | .66 | .38 | .43 | .26 |
| Infant: Eye Constriction | .88 | - | .64 | .31 | .39 | .36 |
| Infant: Mouth Opening | .47 | .54 | - | −.04 | −.09 | −.25 |
| Mother: Smile Strength | .41 | .46 | .13 | - | .82 | .19 |
| Mother: Eye Constriction | .38 | .46 | .14 | .78 | - | .21 |
| Mother: Mouth Opening | .05 | .08 | −.05 | .52 | .30 | - |

Note. Correlations among infant smile parameters are contained in the top left quadrant; correlations among mother parameters are contained in the bottom right quadrant. Within these two quadrants, Dyad A's correlations lie above the diagonal and Dyad B's lie below it. Dyad A's cross-partner correlations are contained in the top right quadrant and those of Dyad B in the bottom left quadrant. The correlation, for example, between infant and mother mouth opening is −.25 for Dyad A and −.05 for Dyad B. Dyad A's correlations reflect 4,713 observations; those of Dyad B reflect 4,377 observations. All correlations have p values below .01.

We conducted partial correlations to determine whether the associations of eye constriction and mouth opening were dependent on smiling. Infant eye constriction and mouth opening exhibited low levels of association while controlling for level of smiling (rA = .23 & rB = .30). Mother eye constriction and mouth opening were not consistently associated when controlling for smiling (rA = .11 & rB = −.20). In sum, the association of these parameters was dependent on smiling in mothers, but showed some independence from smiling among infants. This might be due, in part, to differences in smiling level between the partners. Overall, mothers smiled more intensely (MA = 2.41, MB = 2.62) than infants (MA = 1.80, MB = 1.64). Mothers also smiled for a higher proportion of the interactions (A =.77, B = .84) than infants (A = .66, B = .62).
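
The logic of these partial correlations can be illustrated with the standard first-order formula, which removes the variance that two variables share with a third. The sketch below is not the authors' analysis code: the function name is hypothetical, and the inputs are the rounded Dyad B values from Table 2, so the results only approximate the partial correlations reported above (about .30 for Infant B and about −.20 for Mother B).

```python
import math


def partial_r(r_xy, r_xz, r_yz):
    """Correlation of x and y with z held constant (first-order partial)."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# x = eye constriction, y = mouth opening, z = smile strength.
# Inputs are rounded zero-order correlations for Dyad B from Table 2.
infant_b = partial_r(r_xy=0.54, r_xz=0.88, r_yz=0.47)
mother_b = partial_r(r_xy=0.30, r_xz=0.78, r_yz=0.52)
print(round(infant_b, 2), round(mother_b, 2))
```

Because eye constriction is so strongly correlated with smile strength, small rounding differences in the inputs can shift the partial correlation noticeably, so values recomputed from the table need not match the reported ones exactly.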

Interaction

Overall correlations of individual smiling parameters between partners

In each dyad, infant smile strength and eye constriction were moderately positively correlated with mother smile strength and eye constriction (see Table 2). Each partner’s degree of mouth opening, however, showed weak and sometimes negative associations with the smiling parameters of the other partner.

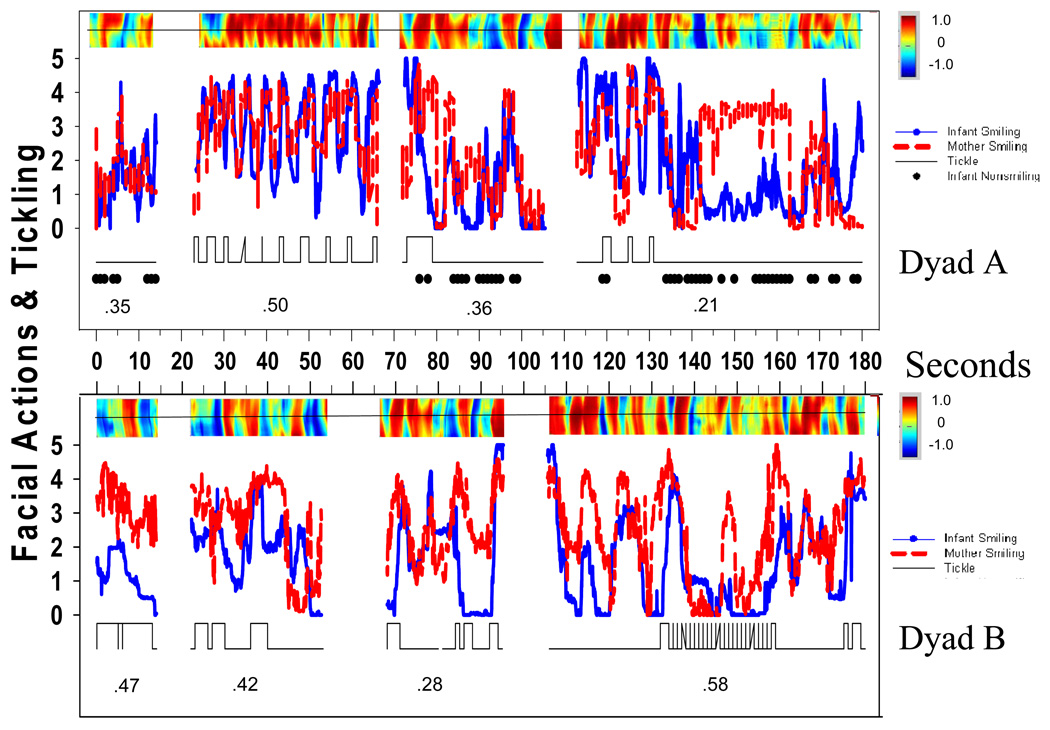

Overall interactive associations of smiling activity and tickling

As smile strength was consistently associated with eye constriction within infants and within mothers, a single index of smiling activity (the mean of these variables) was calculated for each partner over the course of the interaction. As seen in Table 3 and Figure 3, variability and consistency were evident in the correlations involving tickling and smiling activity. In both Dyad A’s and B’s four segments of interaction, infant and mother smiling activity ranged from being weakly to moderately correlated. Mother tickling was typically associated with high levels of mother smiling activity. Mother tickling was frequently associated with high levels of infant smiling activity. An exception was Dyad B’s third video segment in which mother tickling also occurred during ebbs in infant smiling activity, probably as an attempt to elicit infant smiling.

Table 3.

Correlations Between Automated Measurements of Infant and Mother Smiling Activity and Mother Tickling

| Dyad | Segment | n | Seconds | Infant Smile Activity with Mother Smile Activity | Infant Smile Activity with Tickling | Mother Smile Activity with Tickling |
|---|---|---|---|---|---|---|
| A | 1 | 425 | 14 | .35 | . | . |
| A | 2 | 1292 | 43 | .50 | .35 | .29 |
| A | 3 | 991 | 33 | .36 | .30 | .54 |
| A | 4 | 2005 | 67 | .21 | .37 | .35 |
| A | Overall | 4713 | 157 | .40 | .35 | .39 |
| B | 1 | 420 | 14 | .47 | .15 | .16 |
| B | 2 | 913 | 31 | .42 | .44 | .39 |
| B | 3 | 818 | 27 | .28 | −.22 | .52 |
| B | 4 | 2234 | 74 | .58 | .07 | .25 |
| B | Overall | 4385 | 146 | .47 | .06 | .37 |

Note. Pearson's correlations are reported between infant and mother smiling activity. Spearman's correlations are reported for the association of these parameters with the presence of mother tickling. There was no tickling in Dyad A's first segment. All correlations have p values below .01.

Figure 3. Mother tickling and automated measures of infant and mother smiling activity.

Tickling and smiling activity are plotted over seconds. Smiling activity is the mean of smile strength and eye constriction intensity. Correlations between infant and mother smiling activity for each segment of interaction are displayed below that segment. Above each segment of interaction is a plot of the corresponding windowed cross-correlations between infant and mother smiling activity. As illustrated by the color bar to the right of the cross-correlation plots, high positive correlations are deep red, null correlations a pale green, and high negative correlations are deep blue. The horizontal midline of these plots indicates the zero-order correlation between infant and mother smiling activity. The correlations are calculated for successive three second segments of interaction. The plots also indicate the associations of one partner's current smiling activity with the successive activity of the other partner. Area above the midline indicates the correlation of current infant activity with successive lags of mother smiling activity. Area beneath the midline indicates the correlation of mother smiling activity with successive lags of infant smiling activity. Three lags of such activity are shown. For example, the area at the very bottom of a plot shows the correlation of a window of three seconds of current mother activity with a window of three seconds of infant activity that is to occur after three fifths of a second.

Correlations involving non-smiling actions

Infant A’s non-smiling actions occurred for approximately 14.5 seconds. These actions co-occurred with infant smiling in 4.7% of the total video frames, less than the rate of chance co-occurrence, χ² = 49.60, p < .01. The non-smiling actions were associated with dampening of multiple indices of positive interaction. T-tests indicated that non-smiling actions were associated with lower levels of infant and mother smiling activity, MI = .73 and MM = .51 on the six-point intensity metric, ps < .01. In addition, mother tickling very rarely co-occurred with these non-smiling infant actions, a significant absence of association, χ² = 46.0, p < .001 (see Figure 3). Most intriguingly, the association between infant and mother smiling activity was stronger in the absence, r = .44, than in the presence, r = −.34, of Infant A’s non-smiling actions, p < .01.

Windowed cross-correlations of infant and mother smiling activity

Overview

Each segment of each dyad’s interaction was itself characterized by changing levels of local correlation between infant and mother smiling activity (see Figure 3). These were explored with windowed cross-correlations in which a temporally defined window was used to calculate successive local zero-order correlations over the course of an interaction (Boker et al., 2002). Cross-correlations were also calculated using this window. These indicate the degree to which one partner’s current smiling activity predicted the subsequent smiling activity of the other partner. Preliminary analyses revealed the comparability of windows of a range of durations, and a three-second window was chosen.
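
The windowed cross-correlation procedure described above can be sketched as follows. This is a simplified illustration on synthetic data rather than the implementation of Boker et al. (2002): the function names are hypothetical, a frame rate of 30 frames per second is assumed, and the lag spacing (6 frames, giving three lags up to three fifths of a second in each direction) is inferred from the Figure 3 caption rather than stated in the text.

```python
import math


def pearson(x, y):
    """Pearson correlation; returns NaN if either window has no variance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return float("nan")
    return sxy / math.sqrt(sxx * syy)


def windowed_cross_correlation(infant, mother, window, max_lag,
                               lag_step, window_step):
    """One dict per window start, mapping lag (in frames) to a correlation.

    lag > 0: current infant window with a later mother window.
    lag < 0: current mother window with a later infant window.
    lag = 0: the local zero-order correlation.
    """
    lags = range(-max_lag, max_lag + 1, lag_step)
    last_start = len(infant) - window - max_lag
    rows = []
    for start in range(0, last_start + 1, window_step):
        row = {}
        for lag in lags:
            if lag >= 0:
                x = infant[start:start + window]
                y = mother[start + lag:start + lag + window]
            else:
                x = mother[start:start + window]
                y = infant[start - lag:start - lag + window]
            row[lag] = pearson(x, y)
        rows.append(row)
    return rows


FPS = 30  # assumed frame rate
# Synthetic smiling activity: the mother follows the infant by 6 frames.
infant = [math.sin(t / 15.0) for t in range(300)]
mother = [math.sin((t - 6) / 15.0) for t in range(300)]

rows = windowed_cross_correlation(infant, mother, window=3 * FPS,
                                  max_lag=18, lag_step=6, window_step=FPS)
peak_lags = [max(row, key=row.get) for row in rows]
print(peak_lags)
```

Each row corresponds to one column of a plot like those in Figure 3: the value at lag 0 lies on the midline, and the values at positive and negative lags fall above and below it. In real interaction the peak lag and the size of the correlations change from window to window, which is the nonstationarity examined below.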

Local changes in zero-order correlations

Local periods of positive, nil, and negative correlation between infant and mother smiling activity were interspersed throughout both dyads’ interactions. These are displayed on the midline of the rectangular plots in Figure 3. They index processes in which dyadic synchrony (defined as local periods of high correlation) emerged, dissolved, and was repaired. These are evident, for example, in Dyad A’s fourth video segment in which synchronous rises and declines in smiling activity were interrupted by an epoch of relatively low local correlation, which was followed by more synchronous activity. Changes in the level of local correlations index nonstationarity, that is, variability in how infant and mother interact over time.

Local cross-correlations

Changing patterns of cross-correlation were also evident. Around 85 seconds, for example, a decrease in Infant A’s smiling activity was mirrored by the mother; between 90 and 95 seconds, an increase in mother smiling activity was followed by an infant increase. Prominent throughout each dyad’s interaction were symmetries between the top and bottom halves of the cross-correlation plots. These indicate that local periods of positive correlation were characterized by each partner mirroring the other’s changes in level of smiling activity. That is, the current smiling activity of each partner showed comparable correlations with the subsequent activity of the other. This underscores the co-constructed quality of the emotional communication observed.

Discussion

In the current study, automated facial image analysis was used to reliably measure infant non-smiling actions and the smile-related actions of interacting infants and mothers. This allowed quantification of expressive actions with frame-by-frame precision. These automated measurements revealed that smiling involved multiple associated actions that changed continuously in time within each partner. Levels of infant and mother smiling activity were positively associated over the course of interactive sessions but exhibited frequently changing levels of local association (nonstationarity).

Anatomically based manual coding of facial expression is sufficiently difficult and labor intensive as to discourage its widespread use. Automated approaches to facial action measurement are a potential solution to difficulties with manual coding, although commercial offerings relevant to infants are not yet available. These automated approaches are an active research topic in the fields of computer vision and machine learning (Fasel & Luettin, 2003) and frequently involve collaborations between computer scientists and psychologists (Bartlett et al., 2006; Cohn & Kanade, 2007).

There were limitations to the current automated measurement approach. Of four dyads screened, two could not be analyzed because of occlusion. In one dyad, camera positioning was not sufficiently flexible to capture mother’s face as she bent in over the infant, an issue that might be addressed with remote camera-angle adjustment. In another dyad, frequent maternal self-occlusion in peek-a-boo precluded measurement. We are currently working on re-initialization procedures that would render automated measurement of multiple short segments of interaction feasible. Such epochs of self-occlusion hinder both manual and automated measurement; however, to the degree that they hide one partner’s facial expression from the other, they may render measurement of the hidden expressions immaterial to the study of interaction.

Implementing the automated measurements was a two-step process involving training both AAMs and SVMs. SVMs were trained on 13% of frames selected randomly from manual coding of all available data. Efforts to minimize the role of human beings in training AAMs and to circumscribe the coding required to train SVMs are a topic of continuous research activity (Wang et al., 2008, in press). Because current approaches are exploratory and involve both automated and manual measurement, the current study was limited to two dyads and results should be considered preliminary. In these dyads, between 81% and 87% of available video was suitable for automated measurement, quantities comparable to studies employing manual measurement of infant facial expression (Messinger et al., 2001). The current study is the first application of automated measurement to infant-parent interaction and produced a novel portrait of emotional communication dynamics.
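The random selection of training frames can be illustrated with a short Python sketch. It only shows how a fixed fraction of frame indices might be drawn at random. The function name, the seed, the frame count, and the treatment of the remaining frames as a held-out set are assumptions made for illustration, not details reported in the study.

```python
import random


def split_frames(n_frames, train_fraction=0.13, seed=0):
    """Randomly assign a fraction of frame indices to training; keep the rest aside."""
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    n_train = round(n_frames * train_fraction)
    train = sorted(rng.sample(range(n_frames), n_train))
    train_set = set(train)
    held_out = [i for i in range(n_frames) if i not in train_set]
    return train, held_out


# Hypothetical example: 1000 manually coded frames.
train_idx, held_out_idx = split_frames(n_frames=1000)
print(len(train_idx), len(held_out_idx))
```

Sampling at the level of individual frames, as sketched here, draws training examples from the whole session. Frames that are adjacent in time tend to look alike, so a split by segment would be a stricter alternative if the aim were to estimate generalization to unseen video.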

Comparison Indices: Manual Coding and Positive Emotion Ratings

Associations with manual coding

Agreement between human and automated identification of infant non-smiling actions was modest, which was not unexpected for rare, potentially subtle events that may co-occur (Bakeman & Gottman, 1986; Bruckner & Yoder, 2006). Automated measurements were highly associated with FACS/BabyFACS coding of the presence and intensity of smiling, eye constriction, and mouth opening. Overall, associations between manual and automated measurements were comparable to the associations between manual measurements (inter-rater reliability). These findings add to a growing literature documenting convergence between manual FACS and automated measurements of facial action in infants (Messinger et al., 2008) and adults (Bartlett et al., 2005, 2006; Cohn & Kanade, 2007; Cohn, Xiao, Moriyama, Ambadar, & Kanade, 2003; Cohn, Zlochower, Lien, & Kanade, 1999; Tian, Kanade, & Cohn, 2001; Tian, Kanade, & Cohn, 2002). The current use of machine learning algorithms to directly predict the intensity (as well as the presence) of FACS Action Units is an advance important for understanding how emotion communication occurs in time using a gold standard metric (Ekman et al., 2002; Oster, 2006).

Associations with positive emotion ratings

Among infants, automated measurements of smile strength, eye constriction, and mouth opening were highly associated with rated positive emotion. Among mothers, smile strength and, to a lesser degree, mouth opening were moderately associated with rated positive emotion. For both infants and mothers, automated and manual FACS/BabyFACS measurements of smiling-related actions showed comparable associations with rated positive emotion. It was not surprising that infant smiling parameters were more consistently associated with perceived positive emotion than mother smiling parameters. The facial actions of six-month-old infants appear to directly reflect their emotional states during interactions. By contrast, mothers are responsible not only for engaging positively with their infants during interactions but also for simultaneously managing their infants’ emotional states. Mothers’ multiple roles may reduce the degree to which smile strength and other facial actions are perceived as directly indexing maternal positive emotion. At times, for example, ratings of mother positive emotion remained high although smiling levels dropped (see Figure 2), perhaps because mother’s non-smiling attempts to engage the infant were perceived positively.

Infant smiling parameters and mother smiling parameters

Among infants, degree of smiling, eye constriction, and mouth opening were highly associated. Moreover, smiling appeared to ‘bind’ the other two actions together more tightly than would otherwise be the case. Extending previous research (Fogel et al., 2007; Messinger et al., 2001), the current findings indicate that, like smile strength, infant eye constriction and mouth opening vary continuously and that each is strongly associated with continuous ratings of positive emotion (Bolzani-Dinehart et al., 2005; Ekman & Friesen, 1982; Fogel et al., 2006; Schneider & Uzner, 1992). This suggests that early infant positive emotion is a unitary construct expressed through the intensity of smiling and a set of linked facial actions (Messinger & Fogel, 2007). It may be that research comparing different “types” of infant smiling (i.e., Duchenne and open mouth smiles) categorizes phenomena that are frequently continuously linked in time.

Among mothers, degree of smiling and eye constriction—the components of Duchenne smiling—were highly associated. In other words, mothers, like infants, engaged in Duchenne smiling to varying degrees. This raises questions about the utility of dichotomizing smiles as expressions of joy or as non-emotional social signals based on the presence of the Duchenne marker (Harker & Keltner, 2001). It is also intriguing that mother smiling and eye constriction exhibited associations with mouth opening as all these actions were associated with rated positive emotion. Mothers appeared to use mouth opening not only to vocalize, verbalize, and create displays for their infants, but also to express positive emotion with them (Chong et al., 2003; Ruch, 1993; Stern, 1974).

Interaction

Both dyads exhibited moderate overall levels of association between infant and mother smiling activity, which were consistently positive but varied in strength over different segments of interaction. In both dyads, mother smiling activity and tickling were consistently associated. Tickling has a mock aggressive quality (‘I’m gonna get ya’) and high levels of maternal smiling activity may serve to emphasize tickling’s game-like intent (Harris, 1999). Although tickling typically appeared to be successful at eliciting increased infant smiling activity, this was not always the case (e.g., in Dyad B’s third segment), nor were high levels of smiling activity always associated with tickling (Fogel et al., 2006).

The temporal patterning of infant-mother interaction is increasingly being used as a measure of individual differences between dyads that is sensitive to symptoms of maternal psychopathology (Beebe et al., 2007) and predictive of self-regulation and the capacity for empathy (Feldman, 2007). We found that changes in the degree of association between infant and mother smiling activity, both between and within segments of interaction, were the norm. Interactions, then, were characterized by frequent changes in level of correlation that constituted the dissolution and repair of dyadic synchrony. When the association between infant and mother smiling activity changes over the course of interaction, the assumption of bivariate stationarity is violated. Methodologically, this suggests the importance of careful application of time-series techniques that correct for stationarity violations. More generally, changing local synchrony levels suggest caution in the use of comprehensive (time-invariant) summary measures of dyadic interaction. This is underscored by the preponderance of lead-lag symmetries observed in these interactions. Epochs in which one partner’s smiling activity was associated with the other partner’s subsequent smiling were often coincident with, or soon followed by, epochs in which the situation was reversed.

Automated facial measurements provided rich data not only for characterizing smile intensity but also for identifying other subtle actions relevant to face-to-face interaction. Infant A displayed a large set of non-smiling actions including a brief instance of spitting up, upper lip raising, nasolabial furrow deepening, lip stretching, dimpling and lip tightening, which did not have clear affective significance. These actions were associated with lower intensities of mother smiling activity and a reduction in mother tickling, perhaps because his mother recognized them as signals of infant over-arousal even when they occurred with smiling. Strikingly, these actions were associated not only with lower intensities of smiling activity, but also with reductions in infant-mother synchrony, suggesting the actions altered the emotional climate of the interaction. Larger-scale studies utilizing automated measurement could provide data with which to more precisely understand the potentially distinct affective and communicative significance of such actions.

Studies employing automated measurements have the potential to describe normative and disturbed patterns of emotional and communicative development in children. We are currently applying such measurement approaches to children with Autism Spectrum Disorders (ASDs), for example, and to infants who have an older sibling with an ASD and so are themselves at risk for the disorder (Ibanez, Messinger, Newell, Sheskin, & Lambert, 2008). Other potential applications include investigating the development of infants of mothers manifesting different types of depressive symptomatology, infants born prematurely, and infants with prenatal drug exposures. In all these cases, precise measurement may help distinguish difficulties in infant-parent interaction that partially constitute more general patterns of environmental and genetic risk.

Through interaction, infants come to understand themselves as social beings engaged with others. These experiences are thought to contribute to the infant’s understanding of their own developing emotional expressions (Stern, 1985; Tronick, 1989) and to lay the groundwork of responsivity and initiation that underlie basic social competence (Cohn & Tronick, 1988; Kaye & Fogel, 1980). This report presents the first analysis of infant-parent interaction using automated measurements of facial action. We examined the organization of smiling activity in infants and mothers, and explored the micro-structure of infant-mother synchrony. Temporally precise objective measurements offered a close-up view of the flow of emotional interaction which may offer new insights into early communication and development.

ACKNOWLEDGMENTS

The authors thank J.D. Haltigan, Ryan Brewster, and the families who participated in this research. The research was supported by NICHD R01 047417 & R21 052062, NIMH R01 051435, Autism Speaks, and the Marino Autism Research Institute.

Footnotes

In the current study, the Continuous Measurement System (CMS) was used to record continuous ratings of positive emotion via a joystick. It was used separately to conduct continuous FACS/BabyFACS coding via mouse and keyboard. The CMS is available for download at http://www.psy.miami.edu/faculty/dmessinger/dv/index.html.

These machine learning algorithms are known as Support Vector Machines (SVMs) (Chang & Lin, 2001). SVMs have been used in previous work distinguishing the presence of eye constriction (AU6) in adult smiles (Littlewort, Bartlett, & Movellan, 2001). In the current application, the appearance data from the AAM were highly complex, with 256 grayscale values in each of the approximately 10,000 pixels in the AAM for each frame of video. Consequently, we used a Laplacian Eigenmap (Belkin & Niyogi, 2003), a nonlinear data reduction technique, to represent the appearance and shape data as a system of twelve variables per frame.

Contributor Information

Daniel S. Messinger, University of Miami, Coral Gables, FL 33146, (dmessinger@miami.edu)

Mohammad H. Mahoor, University of Denver (mmahoor@du.edu)

Sy-Miin Chow, University of North Carolina (symiin@email.unc.edu)

Jeffrey F. Cohn, University of Pittsburgh and Carnegie Mellon University, Pittsburgh, PA 15260 (jeffcohn@pitt.edu)

References

- Ariely D, Au WT, Bender RH, Budescu DV, Dietz C, Gu H, Wallsten TS, Zauberman G. The effects of averaging subjective probability estimates between and within judges. Journal of Experimental Psychology: Applied. 2000;6:130–147. doi: 10.1037//1076-898x.6.2.130.

- Ashraf AB, Lucey S, Chen T, Prkachin K, Solomon P, Ambadar Z, Cohn J. The painful face: Pain expression recognition using active appearance models. Paper presented at the Proceedings of the ACM International Conference on Multimodal Interfaces; 2007.

- Bakeman R, Gottman J. Observing Interaction: An Introduction to Sequential Analysis. New York, NY: Cambridge University Press; 1986.

- Baker S, Matthews I, Schneider J. Automatic Construction of Active Appearance Models as an Image Coding Problem. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2004;26(10):1380–1384. doi: 10.1109/TPAMI.2004.77.

- Bartlett MS, Littlewort G, Frank M, Lainscsek C, Fasel I, Movellan J. Recognizing Facial Expression: Machine Learning and Application to Spontaneous Behavior. Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition; IEEE Computer Society; 2005. pp. 568–573.

- Bartlett MS, Littlewort G, Frank M, Lainscsek C, Fasel I, Movellan J. Fully Automatic Facial Action Recognition in Spontaneous Behavior. Proceedings of the 7th International Conference on Automatic Face and Gesture Recognition; IEEE Computer Society; 2006. pp. 223–230.

- Beebe B. Ontogeny of positive affect in the third and fourth months of life of one infant. 1973;35(2):1014B.

- Beebe B, Gerstman L. A method of defining “packages” of maternal stimulation and their functional significance for the infant with mother and stranger. International Journal of Behavioral Development. 1984;7(4):423–440.

- Beebe B, Jaffe J, Buck K, Chen H, Cohen P, Blatt S, Kaminer T, Feldstein S, Andrews H. Six-week postpartum maternal self-criticism and dependency and 4-month mother-infant self-and interactive contingencies. Developmental Psychology. 2007;43(6):1360–1376. doi: 10.1037/0012-1649.43.6.1360.

- Belkin M, Niyogi P. Laplacian Eigenmaps for dimensionality reduction and data representation. Neural Computation. 2003;15(6):1373–1396.

- Boker SM, Rotondo JL, Xu M, King K. Windowed cross-correlation and peak picking for the analysis of variability in the association between behavioral time series. Psychological Methods. 2002;7(3):338–355. doi: 10.1037/1082-989x.7.3.338.

- Bolzani-Dinehart L, Messinger DS, Acosta S, Cassel T, Ambadar Z, Cohn J. Adult perceptions of positive and negative infant emotional expressions. Infancy. 2005;8(3):279–303.

- Bruckner CT, Yoder P. Interpreting Kappa in Observational Research: Baserate Matters. American Journal on Mental Retardation. 2006;111(6):433–441. doi: 10.1352/0895-8017(2006)111[433:IKIORB]2.0.CO;2.

- Chang CC, Lin CJ. LIBSVM: a library for support vector machines. 2001.

- Chong SCF, Werker JF, Russell JA, Carroll JM. Three facial expressions mothers direct to their infants. Infant and Child Development. 2003;12:211–232.

- Cohn J, Kanade T. Automated facial image analysis for measurement of emotion expression. In: Coan JA, Allen JB, editors. The handbook of emotion elicitation and assessment. New York: Oxford; 2007. pp. 222–238.

- Cohn J, Reed LI, Moriyama T, Xiao J, Schmidt K, Ambadar Z. Multimodal coordination of facial action, head rotation, and eye motion during spontaneous smiles. Paper presented at the Sixth IEEE International Conference on Automatic Face and Gesture Recognition; 2004.

- Cohn JF, Tronick EZ. Mother-infant face-to-face interaction: The sequence of dyadic states at 3, 6, and 9 months. Developmental Psychology. 1987;23(1):68–77.

- Cohn JF, Tronick EZ. Mother-infant face-to-face interaction: Influence is bidirectional and unrelated to periodic cycles in either partner's behavior. Developmental Psychology. 1988;24(3):386–392.

- Cohn JF, Xiao J, Moriyama T, Ambadar Z, Kanade T. Automatic recognition of eye blinking in spontaneously occurring behavior. Behavior Research Methods, Instruments, and Computers. 2003;35:420–428. doi: 10.3758/bf03195519.

- Cohn JF, Zlochower AJ, Lien J, Kanade T. Automated face analysis by feature point tracking has high concurrent validity with manual FACS coding. Psychophysiology. 1999;36(1):35–43. doi: 10.1017/s0048577299971184.

- Ekman P, Davidson RJ, Friesen W. The Duchenne smile: Emotional expression and brain physiology II. Journal of Personality and Social Psychology. 1990;58:342–353.

- Ekman P, Friesen W, Hager JC. The Facial Action Coding System on CD ROM. Network Information Research Center; 2002.

- Ekman P, Friesen WV. Felt, false, and miserable smiles. Journal of Nonverbal Behavior. 1982;6(4):238–252.

- Ekman P, Friesen WV, O'Sullivan M. Smiles when lying. Journal of Personality and Social Psychology. 1988;54(3):414–420. doi: 10.1037//0022-3514.54.3.414.

- Fasel B, Luettin J. Automatic Facial Expression Analysis: A Survey. Pattern Recognition. 2003;36:259–275.

- Feldman R. From biological rhythms to social rhythms: Physiological precursors of mother-infant synchrony. Developmental Psychology. 2006;42(1):175–188. doi: 10.1037/0012-1649.42.1.175.

- Feldman R. On the origins of background emotions: From affect synchrony to symbolic expression. Emotion. 2007;7:601–611. doi: 10.1037/1528-3542.7.3.601.

- Feldman R, Greenbaum CW, Yirmiya N. Mother-infant affect synchrony as an antecedent of the emergence of self-control. Developmental Psychology. 1999;35(1):223–231. doi: 10.1037//0012-1649.35.1.223.

- Fogel A, Hsu H-C, Shapiro AF, Nelson-Goens GC, Secrist C. Effects of normal and perturbed social play on the duration and amplitude of different types of infant smiles. Developmental Psychology. 2006;42:459–473. doi: 10.1037/0012-1649.42.3.459.

- Fox NA, Davidson RJ. Patterns of brain electrical activity during facial signs of emotion in 10 month old infants. Developmental Psychology. 1988;24(2):230–236.

- Frank MG, Ekman P, Friesen WV. Behavioral markers and the recognizability of the smile of enjoyment. Journal of Personality and Social Psychology. 1993;64(1):83–93. doi: 10.1037//0022-3514.64.1.83.

- Gross R, Matthews I, Baker S. Active Appearance Models with Occlusion. Image and Vision Computing. 2006;24:593–604.

- Harker L, Keltner D. Expressions of positive emotion in women's college yearbook pictures and their relationship to personality and life outcomes across adulthood. Journal of Personality and Social Psychology. 2001;80(1):112–124.

- Harris CR. The mystery of ticklish laughter. American Scientist. 1999;87(4):344–351.

- Ibanez L, Messinger D, Newell L, Sheskin M, Lambert B. Visual disengagement in the infant siblings of children with an Autism Spectrum Disorder (ASD). Autism: International Journal of Research and Practice. 2008;12(5):523–535. doi: 10.1177/1362361308094504.

- Kaye K, Fogel A. The temporal structure of face-to-face communication between mothers and infants. Developmental Psychology. 1980;16(5):454–464.

- Kochanska G. Mutually responsive orientation between mothers and their young children: A context for the early development of conscience. Current Directions in Psychological Science. 2002;11(6):191–195.

- Kochanska G, Forman DR, Coy KC. Implications of the mother-child relationship in infancy for socialization in the second year of life. Infant Behavior & Development. 1999;22(2):249–265.

- Kochanska G, Murray KT. Mother-child mutually responsive orientation and conscience development: From toddler to early school age. Child Development. 2000;71(2):417–431. doi: 10.1111/1467-8624.00154.

- Lester BM, Hoffman J, Brazelton TB. The rhythmic structure of mother-infant interaction in term and preterm infants. Child Development. 1985;56:15–27.

- Littlewort G, Bartlett MS, Movellan JR. Are your eyes smiling? Detecting genuine smiles with support vector machines and Gabor wavelets. Paper presented at the Proceedings of the 8th Annual Joint Symposium on Neural Computation; 2001.

- Meng X-l, Rosenthal R, Rubin DB. Comparing correlated correlation coefficients. Psychological Bulletin. 1992;111:172–175.

- Messinger D. Positive and negative: Infant facial expressions and emotions. Current Directions in Psychological Science. 2002;11(1):1–6.

- Messinger D, Fogel A. The interactive development of social smiling. In: Kail R, editor. Advances in Child Development and Behavior. Vol. 35. Oxford: Elsevier; 2007. pp. 328–366.

- Messinger D, Fogel A, Dickson K. All smiles are positive, but some smiles are more positive than others. Developmental Psychology. 2001;37(5):642–653.

- Messinger DS, Cassel T, Acosta S, Ambadar Z, Cohn J. Infant Smiling Dynamics and Perceived Positive Emotion. Journal of Nonverbal Behavior. 2008;32:133–155. doi: 10.1007/s10919-008-0048-8.

- Oster H. Baby FACS: Facial Action Coding System for Infants and Young Children. Unpublished monograph and coding manual. New York University; 2006.

- Reed LI, Sayette MA, Cohn JF. Impact of depression on response to comedy: A dynamic facial coding analysis. Journal of Abnormal Psychology. 2007;116(4):804–809. doi: 10.1037/0021-843X.116.4.804.

- Rogers CR, Schmidt KL, VanSwearingen JM, Cohn JF, Wachtman GS, Manders EK, Deleyiannis FWB. Automated facial image analysis: Detecting improvement in abnormal facial movement after treatment with Botulinum toxin A. Annals of Plastic Surgery. 2007;58:39–47. doi: 10.1097/01.sap.0000250761.26824.4f.

- Ruch W. Exhilaration and humor. In: Lewis M, Haviland JM, editors. Handbook of emotions. New York: Guilford; 1993. pp. 605–616.

- Schmidt KL, Ambadar Z, Cohn JF, Reed L. Movement differences between deliberate and spontaneous facial expressions: Zygomaticus major action in smiling. Journal of Nonverbal Behavior. 2006;30(1):37–52. doi: 10.1007/s10919-005-0003-x.

- Schneider K, Uzner L. Preschoolers' attention and emotion in an achievement and an effect game: A longitudinal study. Cognition and Emotion. 1992;6(1):37–63.

- Schore AN. Affect Regulation & the Origin of Self: The Neurobiology of Emotional Development. Hillsdale, NJ: Erlbaum; 1994.

- Stern DN. Mother and infant at play: The dyadic interaction involving facial, vocal, and gaze behaviors. In: Lewis M, Rosenblum LA, editors. The effect of the infant on its caregiver. New York: Wiley; 1974. pp. 187–213.

- Stern DN. The interpersonal world of the infant: A view from psychoanalysis and developmental psychology. New York: Basic Books; 1985.

- Tian Y, Kanade T, Cohn JF. Recognizing Action Units for Facial Expression Analysis. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2001;23(2):97–116. doi: 10.1109/34.908962.

- Tian YL, Kanade T, Cohn JF. Evaluation of Gabor-wavelet-based facial action unit recognition in image sequences of increasing complexity. Paper presented at the Proceedings of the Fifth IEEE International Conference on Automatic Face and Gesture Recognition; Washington, DC; 2002.

- Tronick EZ. Emotions and emotional communication in infants. American Psychologist. 1989;44(2):112–119. doi: 10.1037//0003-066x.44.2.112.

- Tronick EZ, Cohn JF. Infant-mother face-to-face interaction: Age and gender differences in coordination and the occurrence of miscoordination. Child Development. 1989;60(1):85–92.

- Tronick EZ, Messinger D, Weinberg KM, Lester BM, LaGasse L, Seifer R, Bauer C, Shankaran S, Bada H, Wright LL, Smeriglio V, Poole K, Liu J. Cocaine Exposure Compromises Infant and Caregiver Social Emotional Behavior and Dyadic Interactive Features in the Face-to-Face Still-Face Paradigm. Developmental Psychology. 2005;41(5):711–722. doi: 10.1037/0012-1649.41.5.711.

- Van Egeren LA, Barratt MS, Roach MA. Mother-infant responsiveness: Timing, mutual regulation, and interactional context. Developmental Psychology. 2001;37(5):684–697. doi: 10.1037//0012-1649.37.5.684.

- Wang Y, Lucey S, Saragih J, Cohn JF. Non-rigid tracking with local appearance consistency constraint. Paper presented at the Eighth IEEE International Conference on Automatic Face and Gesture Recognition; Amsterdam, the Netherlands; 2008. In press.

- Weinberg KM, Tronick EZ. Infant and Caregiver Engagement Phases system. Boston, MA: Harvard Medical School; 1998.

- Weinberg MK, Tronick EZ, Cohn JF, Olson KL. Gender differences in emotional expressivity and self-regulation during early infancy. Developmental Psychology. 1999;35(1):175–188. doi: 10.1037//0012-1649.35.1.175.