Abstract

Partially Observable Markov Decision Processes (POMDPs) provide a rich framework for sequential decision-making under uncertainty in stochastic domains. However, solving a POMDP is often intractable except for small problems due to their complexity. Here, we focus on online approaches that alleviate the computational complexity by computing good local policies at each decision step during the execution. Online algorithms generally consist of a lookahead search to find the best action to execute at each time step in an environment. Our objectives here are to survey the various existing online POMDP methods, analyze their properties and discuss their advantages and disadvantages; and to thoroughly evaluate these online approaches in different environments under various metrics (return, error bound reduction, lower bound improvement). Our experimental results indicate that state-of-the-art online heuristic search methods can handle large POMDP domains efficiently.

1. Introduction

The Partially Observable Markov Decision Process (POMDP) is a general model for sequential decision problems in partially observable environments. Many planning and control problems can be modeled as POMDPs, but very few can be solved exactly because of their computational complexity: finite-horizon POMDPs are PSPACE-complete (Papadimitriou & Tsitsiklis, 1987) and infinite-horizon POMDPs are undecidable (Madani, Hanks, & Condon, 1999).

In the last few years, POMDPs have generated significant interest in the AI community and many approximation algorithms have been developed (Hauskrecht, 2000; Pineau, Gordon, & Thrun, 2003; Braziunas & Boutilier, 2004; Poupart, 2005; Smith & Simmons, 2005; Spaan & Vlassis, 2005). All these methods are offline algorithms, meaning that they specify, prior to the execution, the best action to execute for all possible situations. While these approximate algorithms can achieve very good performance, they often take significant time (e.g. more than an hour) to solve large problems, where there are too many possible situations to enumerate (let alone plan for). Furthermore, small changes in the environment’s dynamics require recomputing the full policy, which may take hours or days.

On the other hand, online approaches (Satia & Lave, 1973; Washington, 1997; Barto, Bradtke, & Singh, 1995; Paquet, Tobin, & Chaib-draa, 2005; McAllester & Singh, 1999; Bertsekas & Castanon, 1999; Shani, Brafman, & Shimony, 2005) try to circumvent the complexity of computing a policy by planning online only for the current information state. Online algorithms are sometimes also called agent-centered search algorithms (Koenig, 2001). Whereas an offline search would compute an exponentially large contingency plan considering all possible contingencies, an online search only considers the current situation and a small horizon of contingency plans. Moreover, some of these approaches can handle environment changes without requiring more computation, which makes online approaches applicable in many contexts where offline approaches are not, for instance when the task to accomplish, as defined by the reward function, changes regularly in the environment. One drawback of online planning is that it generally needs to meet real-time constraints, which greatly reduces the available planning time compared to offline approaches.

Recent developments in online POMDP search algorithms (Paquet, Chaib-draa, & Ross, 2006; Ross & Chaib-draa, 2007; Ross, Pineau, & Chaib-draa, 2008) suggest that combining approximate offline and online solving approaches may be the most efficient way to tackle large POMDPs. In fact, we can generally compute a very rough policy offline using existing offline value iteration algorithms, and then use this approximation as a heuristic function to guide the online search algorithm. This combination enables online search algorithms to plan on shorter horizons, thereby respecting online real-time constraints and retaining a good precision. By doing an exact online search over a fixed horizon, we can guarantee a reduction in the error of the approximate offline value function. The overall time (offline and online) required to obtain a good policy can be dramatically reduced by combining both approaches.

The main purpose of this paper is to draw the attention of the AI community to online methods as a viable alternative for solving large POMDP problems. In support of this, we first survey the various existing online approaches that have been applied to POMDPs, and discuss their strengths and drawbacks. We present various combinations of online algorithms with various existing offline algorithms, such as QMDP (Littman, Cassandra, & Kaelbling, 1995), FIB (Hauskrecht, 2000), Blind (Hauskrecht, 2000; Smith & Simmons, 2005) and PBVI (Pineau et al., 2003). We then compare empirically different online approaches in two large POMDP domains according to different metrics (average discounted return, error bound reduction, lower bound improvement). We also evaluate how the available online planning time and offline planning time affect the performance of different algorithms. The results of our experiments show that many state-of-the-art online heuristic search methods are tractable in large state and observation spaces, and achieve the solution quality of state-of-the-art offline approaches at a fraction of the computational cost. The best methods achieve this by focusing the search on the most relevant future outcomes for the current decision, e.g. those that are more likely and that have high uncertainty (error) on their long-term values, such as to minimize as quickly as possible an error bound on the performance of the best action found. The tradeoff between solution quality and computing time offered by the combinations of online and offline approaches is very attractive for tackling increasingly large domains.

2. POMDP Model

Partially observable Markov decision processes (POMDPs) provide a general framework for acting in partially observable environments (Astrom, 1965; Smallwood & Sondik, 1973; Monahan, 1982; Kaelbling, Littman, & Cassandra, 1998). A POMDP is a generalization of the MDP model for planning under uncertainty, which gives the agent the ability to effectively estimate the outcome of its actions even when it cannot exactly observe the state of its environment.

Formally, a POMDP is represented as a tuple (S, A, T, R, Z, O) where:

S is the set of all the environment states. A state is a description of the environment at a specific moment and it should capture all information relevant to the agent’s decision-making process.

A is the set of all possible actions.

T : S × A × S → [0, 1] is the transition function, where T(s, a, s′) = Pr(s′|s, a) represents the probability of ending in state s′ if the agent performs action a in state s.

R : S × A → ℝ is the reward function, where R(s, a) is the reward obtained by executing action a in state s.

Z is the set of all possible observations.

O : S × A × Z → [0, 1] is the observation function, where O(s′, a, z) = Pr(z|a, s′) gives the probability of observing z if action a is performed and the resulting state is s′.

We assume in this paper that S, A and Z are all finite and that R is bounded.

A key aspect of the POMDP model is the assumption that the states are not directly observable. Instead, at any given time, the agent only has access to some observation z ∈ Z that gives incomplete information about the current state. Since the states are not observable, the agent cannot choose its actions based on the states. It has to consider a complete history of its past actions and observations to choose its current action. The history at time t is defined as:

$$h_t = \{a_0, z_1, \ldots, z_{t-1}, a_{t-1}, z_t\} \tag{1}$$

This explicit representation of the past is typically memory expensive. Instead, it is possible to summarize all relevant information from previous actions and observations in a probability distribution over the state space S, which is called a belief state (Astrom, 1965). A belief state at time t is defined as the posterior probability distribution of being in each state, given the complete history:

$$b_t(s) = \Pr(s_t = s \mid h_t, b_0) \tag{2}$$

The belief state bt is a sufficient statistic for the history ht (Smallwood & Sondik, 1973), therefore the agent can choose its actions based on the current belief state bt instead of all past actions and observations. Initially, the agent starts with an initial belief state b0, representing its knowledge about the starting state of the environment. Then, at any time t, the belief state bt can be computed from the previous belief state bt−1, using the previous action at−1 and the current observation zt. This is done with the belief state update function τ(b, a, z), where bt = τ (bt−1, at−1, zt) is defined by the following equation:

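$$b_t(s') = \tau(b_{t-1}, a_{t-1}, z_t)(s') = \frac{1}{\Pr(z_t \mid b_{t-1}, a_{t-1})}\, O(s', a_{t-1}, z_t) \sum_{s \in S} T(s, a_{t-1}, s')\, b_{t-1}(s) \tag{3}$$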

where Pr(z|b, a), the probability of observing z after doing action a in belief b, acts as a normalizing constant such that bt remains a probability distribution:

$$\Pr(z \mid b, a) = \sum_{s' \in S} O(s', a, z) \sum_{s \in S} T(s, a, s')\, b(s) \tag{4}$$
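As a minimal sketch of Equations 3 and 4, the following Python code updates a belief in a hypothetical two-state model. The model itself (a state that never changes and an observation that matches the state 85% of the time) and the names `predicted`, `obs_prob` and `belief_update` are illustrative assumptions, not part of the original formulation.

```python
# Hypothetical model: 2 states, 1 action (index 0), 2 observations.
# T[s][a][s2] = Pr(s2 | s, a); O[s2][a][z] = Pr(z | a, s2).
T = [[[1.0, 0.0]], [[0.0, 1.0]]]       # the state never changes
O = [[[0.85, 0.15]], [[0.15, 0.85]]]   # observation matches the state 85% of the time


def predicted(b, a, s2):
    """Sum over s of T(s, a, s2) b(s): probability of reaching s2 before observing."""
    return sum(T[s][a][s2] * b[s] for s in range(len(b)))


def obs_prob(b, a, z):
    """Pr(z | b, a), the normalizing constant of Equation 4."""
    return sum(O[s2][a][z] * predicted(b, a, s2) for s2 in range(len(b)))


def belief_update(b, a, z):
    """tau(b, a, z) of Equation 3."""
    norm = obs_prob(b, a, z)
    if norm == 0.0:
        raise ValueError("observation z has zero probability under (b, a)")
    return [O[s2][a][z] * predicted(b, a, s2) / norm for s2 in range(len(b))]


b0 = [0.5, 0.5]
b1 = belief_update(b0, a=0, z=0)   # one observation pointing to state 0
b2 = belief_update(b1, a=0, z=0)   # a second, consistent observation
```

In this toy model, two consistent observations move the probability of state 0 from 0.5 to 0.85 and then to roughly 0.97, while the belief remains a normalized distribution.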

Now that the agent has a way of computing its belief, the next interesting question is how to choose an action based on this belief state.

This action is determined by the agent’s policy π, specifying the probability that the agent will execute any action in any given belief state, i.e. π defines the agent’s strategy for all possible situations it could encounter. This strategy should maximize the amount of reward earned over a finite or infinite time horizon. In this article, we restrict our attention to infinite-horizon POMDPs where the optimality criterion is to maximize the expected sum of discounted rewards (also called the return or discounted return). More formally, the optimal policy π* can be defined by the following equation:

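$$\pi^* = \operatorname*{argmax}_{\pi \in \Pi} \; \mathbb{E}\left[\sum_{t=0}^{\infty} \gamma^t \sum_{s \in S} b_t(s) \sum_{a \in A} R(s, a)\, \pi(b_t, a) \,\middle|\, b_0\right] \tag{5}$$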

where γ ∈ [0, 1) is the discount factor and π(bt, a) is the probability that action a will be performed in belief bt, as prescribed by the policy π.

The return obtained by following a specific policy π, from a certain belief state b, is defined by the value function equation Vπ:

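$$V^{\pi}(b) = \sum_{a \in A} \pi(b, a) \left[ R_B(b, a) + \gamma \sum_{z \in Z} \Pr(z \mid b, a)\, V^{\pi}(\tau(b, a, z)) \right] \tag{6}$$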

Here the function RB(b, a) specifies the immediate expected reward of executing action a in belief b according to the reward function R:

$$R_B(b, a) = \sum_{s \in S} b(s)\, R(s, a) \tag{7}$$

The sum over Z in Equation 6 is interpreted as the expected future return over the infinite horizon of executing action a, assuming the policy π is followed afterwards.

Note that with the definitions of RB(b, a), Pr(z|b, a) and τ (b, a, z), one can view a POMDP as an MDP over belief states (called the belief MDP), where Pr(z|b, a) specifies the probability of moving from b to τ (b, a, z) by doing action a, and RB(b, a) is the immediate reward obtained by doing action a in b.

The optimal policy π* defined in Equation 5 represents the action-selection strategy that will maximize Vπ(b0). Since there always exists a deterministic policy that maximizes Vπ for any belief state (Sondik, 1978), we will generally only consider deterministic policies (i.e. those that assign a probability of 1 to a specific action in every belief state).

The value function V* of the optimal policy π* is the fixed point of Bellman’s equation (Bellman, 1957):

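$$V^*(b) = \max_{a \in A} \left[ R_B(b, a) + \gamma \sum_{z \in Z} \Pr(z \mid b, a)\, V^*(\tau(b, a, z)) \right] \tag{8}$$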

Another useful quantity is the value of executing a given action a in a belief state b, which is denoted by the Q-value:

$$Q^*(b, a) = R_B(b, a) + \gamma \sum_{z \in Z} \Pr(z \mid b, a)\, V^*(\tau(b, a, z)) \tag{9}$$

Here the only difference with the definition of V* is that the max operator is omitted. Notice that Q*(b, a) determines the value of a by assuming that the optimal policy is followed at every step after action a.

We now review different offline methods for solving POMDPs. These are used to guide some of the online heuristic search methods discussed later, and in some cases they form the basis of other online solutions.

2.1 Optimal Value Function Algorithm

One can solve optimally a POMDP for a specified finite horizon H by using the value iteration algorithm (Sondik, 1971). This algorithm uses dynamic programming to compute increasingly more accurate values for each belief state b. The value iteration algorithm begins by evaluating the value of a belief state over the immediate horizon t = 1. Formally, let V be a value function that takes a belief state as parameter and returns a numerical value in ℝ of this belief state. The initial value function is:

$$V_1(b) = \max_{a \in A} R_B(b, a) \tag{10}$$

The value function at horizon t is constructed from the value function at horizon t − 1 by using the following recursive equation:

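$$V_t(b) = \max_{a \in A} \left[ R_B(b, a) + \gamma \sum_{z \in Z} \Pr(z \mid b, a)\, V_{t-1}(\tau(b, a, z)) \right] \tag{11}$$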

The value function in Equation 11 defines the discounted sum of expected rewards that the agent can receive in the next t time steps, for any belief state b. Therefore, the optimal policy for a finite horizon t is simply to choose the action maximizing Vt(b):

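$$\pi_t^*(b) = \operatorname*{argmax}_{a \in A} \left[ R_B(b, a) + \gamma \sum_{z \in Z} \Pr(z \mid b, a)\, V_{t-1}(\tau(b, a, z)) \right] \tag{12}$$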

This last equation associates an action to a specific belief state, and therefore must be computed for all possible belief states in order to define a full policy.
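The recursion of Equations 10 to 12 can be sketched for a single belief as follows. This is not the α-vector value iteration algorithm; it simply unrolls the recursion at one belief, which is only practical for tiny horizons. The two-action model (action 0 "listens", action 1 "commits") and all function names are hypothetical.

```python
GAMMA = 0.9
S, A, Z = range(2), range(2), range(2)
# Hypothetical model. Action 0 "listens" (noisy observation, no reward);
# action 1 "commits" (reward +1 in state 0, -1 in state 1, no information).
T = [[[1.0, 0.0], [1.0, 0.0]], [[0.0, 1.0], [0.0, 1.0]]]      # T[s][a][s2]
O = [[[0.85, 0.15], [0.5, 0.5]], [[0.15, 0.85], [0.5, 0.5]]]  # O[s2][a][z]
R = [[0.0, 1.0], [0.0, -1.0]]                                 # R[s][a]


def obs_prob(b, a, z):
    """Pr(z | b, a)."""
    return sum(O[s2][a][z] * sum(T[s][a][s2] * b[s] for s in S) for s2 in S)


def belief_update(b, a, z):
    """tau(b, a, z); only called when Pr(z | b, a) > 0."""
    norm = obs_prob(b, a, z)
    return [O[s2][a][z] * sum(T[s][a][s2] * b[s] for s in S) / norm for s2 in S]


def value(b, t):
    """Return (V_t(b), a maximizing action) for horizon t >= 1."""
    best_v, best_a = float("-inf"), None
    for a in A:
        q = sum(b[s] * R[s][a] for s in S)            # R_B(b, a)
        if t > 1:                                     # recursive part of Equation 11
            for z in Z:
                p = obs_prob(b, a, z)
                if p > 0.0:
                    q += GAMMA * p * value(belief_update(b, a, z), t - 1)[0]
        if q > best_v:
            best_v, best_a = q, a
    return best_v, best_a


v1, a1 = value([0.5, 0.5], 1)
v2, a2 = value([0.5, 0.5], 2)
```

At the uniform belief the one-step value is 0, whereas the two-step value is 0.315 and is obtained by listening first, which illustrates how the recursion credits information-gathering actions.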

A key result by Smallwood and Sondik (1973) shows that the optimal value function for a finite-horizon POMDP can be represented by hyperplanes, and is therefore convex and piecewise linear. This means that the value function Vt at any horizon t can be represented by a set of |S|-dimensional hyperplanes: Γt = {α0, α1, …, αm}. These hyperplanes are often called α-vectors. Each defines a linear value function over the belief state space associated with some action a ∈ A. The value of a belief state is the maximum value returned by one of the α-vectors for this belief state. The best action is the one associated with the α-vector that returned the best value:

$$V_t(b) = \max_{\alpha \in \Gamma_t} \sum_{s \in S} \alpha(s)\, b(s) \tag{13}$$
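Evaluating a belief against a set of α-vectors, as in Equation 13, amounts to a maximum over dot products. The sketch below assumes each α-vector is stored together with its action; the vectors and action names are made up for illustration.

```python
def evaluate(gamma_t, b):
    """Return (V_t(b), best action) from a list of (action, alpha_vector) pairs."""
    best_v, best_a = float("-inf"), None
    for action, alpha in gamma_t:
        v = sum(alpha[s] * b[s] for s in range(len(b)))   # dot product alpha . b
        if v > best_v:
            best_v, best_a = v, action
    return best_v, best_a


# Hypothetical alpha-vectors over 2 states, each tagged with its action.
gamma_t = [("left", [1.0, 0.0]), ("right", [0.0, 1.0]), ("wait", [0.6, 0.6])]
v_mid, a_mid = evaluate(gamma_t, [0.5, 0.5])     # uncertain belief
v_sure, a_sure = evaluate(gamma_t, [0.9, 0.1])   # confident belief
```

With these vectors the uncertain belief selects "wait" while the confident belief selects "left", showing how the maximizing vector, and hence the action, changes across the belief space.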

A number of exact value function algorithms leveraging the piecewise-linear and convex aspects of the value function have been proposed in the POMDP literature (Sondik, 1971; Monahan, 1982; Littman, 1996; Cassandra, Littman, & Zhang, 1997; Zhang & Zhang, 2001). The problem with most of these exact approaches is that the number of α-vectors needed to represent the value function grows exponentially in the number of observations at each iteration, i.e. the size of the set Γt is in $O(|A||\Gamma_{t-1}|^{|Z|})$. Since each new α-vector requires computation time in $O(|Z||S|^2)$, the resulting complexity of iteration t for exact approaches is in $O(|A||Z||S|^2|\Gamma_{t-1}|^{|Z|})$. Most of the work on exact approaches has focused on finding efficient ways to prune the set Γt, so as to effectively reduce computation.

2.2 Offline Approximate Algorithms

Due to the high complexity of exact solving approaches, many researchers have worked on improving the applicability of POMDP approaches by developing approximate offline approaches that can be applied to larger problems.

In the online methods we review below, approximate offline algorithms are often used to compute lower and upper bounds on the optimal value function. These bounds are leveraged to orient the search in promising directions, to apply branch-and-bound pruning techniques, and to estimate the long term reward of belief states, as we will show in Section 3. However, we will generally want to use approximate methods which require very low computational cost. We will be particularly interested in approximations that use the underlying MDP to compute lower bounds (Blind policy) and upper bounds (MDP, QMDP, FIB) on the exact value function. We also investigate the usefulness of using more precise lower bounds provided by point-based methods. We now briefly review the offline methods which will be featured in our empirical investigation. Some recent publications provide a more comprehensive overview of offline approximate algorithms (Hauskrecht, 2000; Pineau, Gordon, & Thrun, 2006).

2.2.1 Blind policy

A Blind policy (Hauskrecht, 2000; Smith & Simmons, 2005) is a policy where the same action is always executed, regardless of the belief state. The value function of any Blind policy is obviously a lower bound on V * since it corresponds to the value of one specific policy that the agent could execute in the environment. The resulting value function is specified by a set of |A| α-vectors, where each α-vector specifies the long term expected reward of following its corresponding blind policy. These α-vectors can be computed using a simple update rule:

$$\alpha^{a}_{t+1}(s) = R(s, a) + \gamma \sum_{s' \in S} T(s, a, s')\, \alpha^{a}_{t}(s') \tag{14}$$

where $\alpha^{a}_{0}(s) = \min_{s' \in S} R(s', a)/(1 - \gamma)$. Once these α-vectors are computed, we use Equation 13 to obtain the lower bound on the value of a belief state. The complexity of each iteration is in $O(|A||S|^2)$, which is far less than exact methods. While this lower bound can be computed very quickly, it is usually not very tight and thus not very informative.
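The Blind policy bound can be sketched in a few lines. The code below iterates the update of Equation 14 for a fixed number of sweeps on a hypothetical two-state, two-action model, starting each vector from the pessimistic constant min over s of R(s, a) divided by (1 − γ). The model, the sweep count and the function names are assumptions made for illustration.

```python
GAMMA = 0.9
S, A = range(2), range(2)
# Hypothetical model: the state never changes; action 0 earns nothing,
# action 1 earns +1 in state 0 and -1 in state 1.
T = [[[1.0, 0.0], [1.0, 0.0]], [[0.0, 1.0], [0.0, 1.0]]]   # T[s][a][s2]
R = [[0.0, 1.0], [0.0, -1.0]]                              # R[s][a]


def blind_alpha_vectors(iterations=500):
    """Iterate Equation 14 from the pessimistic start min_s R(s, a) / (1 - gamma)."""
    alphas = [[min(R[s][a] for s in S) / (1.0 - GAMMA)] * len(S) for a in A]
    for _ in range(iterations):
        alphas = [[R[s][a] + GAMMA * sum(T[s][a][s2] * alphas[a][s2] for s2 in S)
                   for s in S] for a in A]
    return alphas


def lower_bound(alphas, b):
    """Equation 13 applied to the blind alpha-vectors."""
    return max(sum(alpha[s] * b[s] for s in S) for alpha in alphas)


alphas = blind_alpha_vectors()
lb_uniform = lower_bound(alphas, [0.5, 0.5])
lb_informed = lower_bound(alphas, [0.85, 0.15])
```

In this model the vector for action 1 converges to (10, −10) and the vector for action 0 stays at (0, 0), so the bound is 0 at the uniform belief and 7 at the belief (0.85, 0.15).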

2.2.2 Point-Based Algorithms

To obtain tighter lower bounds, one can use point-based methods (Lovejoy, 1991; Hauskrecht, 2000; Pineau et al., 2003). This popular approach approximates the value function by updating it only for some selected belief states. These point-based methods sample belief states by simulating some random interactions of the agent with the POMDP environment, and then update the value function and its gradient over those sampled beliefs. These approaches circumvent the complexity of exact approaches by sampling a small set of beliefs and maintaining at most one α-vector per sampled belief state. Let B represent the set of sampled beliefs, then the set Γt of α-vectors at time t is obtained as follows:

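$$\begin{aligned}
\alpha^{a}(s) &= R(s, a), \\
\Gamma_t^{a,z} &= \{\alpha_i^{a,z} \mid \alpha_i^{a,z}(s) = \gamma \sum_{s' \in S} T(s, a, s')\, O(s', a, z)\, \alpha'_i(s'),\ \alpha'_i \in \Gamma_{t-1}\}, \\
\Gamma_t^{b} &= \{\alpha_b^{a} \mid \alpha_b^{a} = \alpha^{a} + \sum_{z \in Z} \operatorname*{argmax}_{\alpha \in \Gamma_t^{a,z}} \sum_{s \in S} \alpha(s)\, b(s),\ a \in A\}, \\
\Gamma_t &= \{\alpha_b \mid \alpha_b = \operatorname*{argmax}_{\alpha \in \Gamma_t^{b}} \sum_{s \in S} b(s)\, \alpha(s),\ b \in B\}.
\end{aligned} \tag{15}$$

To ensure that this gives a lower bound, Γ0 is initialized with a single α-vector $\alpha_0(s) = \min_{s' \in S, a \in A} R(s', a)/(1 - \gamma)$. Since |Γt−1| ≤ |B|, each iteration has a complexity in O(|A||Z||S||B|(|S|+|B|)), which is polynomial time, compared to exponential time for exact approaches.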

Different algorithms have been developed using the point-based approach: PBVI (Pineau et al., 2003), Perseus (Spaan & Vlassis, 2005) and HSVI (Smith & Simmons, 2004, 2005) are some of the most recent methods. These methods differ slightly in how they choose belief states and how they update the value function at these chosen belief states. The nice property of these approaches is that one can trade off the complexity of the algorithm against the precision of the lower bound by increasing (or decreasing) the number of sampled belief points.

2.2.3 MDP

The MDP approximation consists in approximating the value function V * of the POMDP by the value function of its underlying MDP (Littman et al., 1995). This value function is an upper bound on the value function of the POMDP and can be computed using Bellman’s equation:

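$$V^{MDP}(s) = \max_{a \in A} \left[ R(s, a) + \gamma \sum_{s' \in S} T(s, a, s')\, V^{MDP}(s') \right] \tag{16}$$

The value V̂(b) of a belief state b is then computed as V̂(b) = Σs∈S VMDP(s)b(s). This can be computed very quickly, as each iteration of Equation 16 can be done in $O(|A||S|^2)$.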

2.2.4 QMDP

The QMDP approximation is a slight variation of the MDP approximation (Littman et al., 1995). The main idea behind QMDP is to assume that all partial observability disappears after a single step. It assumes the MDP solution has been computed to generate $V^{MDP}$ (Equation 16). Given this, we define:

$$Q^{MDP}(s, a) = R(s, a) + \gamma \sum_{s' \in S} T(s, a, s')\, V^{MDP}(s') \tag{17}$$

This approximation defines an α-vector for each action, and gives an upper bound on V* that is tighter than $V^{MDP}$ (i.e. $V^{QMDP}(b) \le V^{MDP}(b)$ for all beliefs b). Again, to obtain the value of a belief state, we use Equation 13, where Γt will contain one α-vector for each a ∈ A.
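To make the MDP and QMDP bounds concrete, the sketch below runs value iteration on the underlying MDP (Equation 16), builds one QMDP α-vector per action (Equation 17), and compares the two upper bounds at a belief. The model, the fixed number of sweeps and the helper names are hypothetical.

```python
GAMMA = 0.9
S, A = range(2), range(2)
# Hypothetical model: the state never changes; action 0 earns nothing,
# action 1 earns +1 in state 0 and -1 in state 1.
T = [[[1.0, 0.0], [1.0, 0.0]], [[0.0, 1.0], [0.0, 1.0]]]   # T[s][a][s2]
R = [[0.0, 1.0], [0.0, -1.0]]                              # R[s][a]


def mdp_values(iterations=500):
    """Value iteration on the underlying MDP (Equation 16)."""
    v = [0.0 for _ in S]
    for _ in range(iterations):
        v = [max(R[s][a] + GAMMA * sum(T[s][a][s2] * v[s2] for s2 in S) for a in A)
             for s in S]
    return v


def qmdp_alpha_vectors(v_mdp):
    """One alpha-vector per action, with alpha_a(s) = Q_MDP(s, a) (Equation 17)."""
    return [[R[s][a] + GAMMA * sum(T[s][a][s2] * v_mdp[s2] for s2 in S) for s in S]
            for a in A]


def dot(alpha, b):
    return sum(x * p for x, p in zip(alpha, b))


b = [0.5, 0.5]
v_mdp = mdp_values()
u_mdp = dot(v_mdp, b)                                               # MDP bound at b
u_qmdp = max(dot(alpha, b) for alpha in qmdp_alpha_vectors(v_mdp))  # QMDP bound at b
```

For this model the MDP values are (10, 0), so the MDP bound at the uniform belief is 5, while the QMDP bound is 4.5, consistent with QMDP being the tighter of the two.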

2.2.5 FIB

The two upper bounds presented so far, QMDP and MDP, do not take into account the partial observability of the environment. In particular, information-gathering actions that may help identify the current state are always suboptimal according to these bounds. To address this problem, Hauskrecht (2000) proposed a new method to compute upper bounds, called the Fast Informed Bound (FIB), which is able to take into account (to some degree) the partial observability of the environment. The α-vector update process is described as follows:

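$$\alpha^{a}_{t+1}(s) = R(s, a) + \gamma \sum_{z \in Z} \max_{\alpha_t \in \Gamma_t} \sum_{s' \in S} O(s', a, z)\, T(s, a, s')\, \alpha_t(s') \tag{18}$$

The α-vectors can be initialized to the α-vectors found by QMDP at convergence, i.e. $\alpha^{a}_{0}(s) = Q^{MDP}(s, a)$. FIB defines a single α-vector for each action and the value of a belief state is computed according to Equation 13. FIB provides a tighter upper bound than QMDP (i.e. $V^{FIB}(b) \le V^{QMDP}(b)$ for all b). The complexity of the algorithm remains acceptable, as each iteration requires $O(|A|^2|S|^2|Z|)$ operations.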
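The following sketch iterates the FIB update of Equation 18, starting from the QMDP α-vectors, on a hypothetical "guessing" model chosen so that the effect of partial observability is easy to see: the state is redrawn uniformly at every step, observations carry no information, and action a earns a reward of 1 only in state a. The model, sweep counts and function names are assumptions.

```python
GAMMA = 0.9
S, A, Z = range(2), range(2), range(2)
# Hypothetical "guessing" model: uniform transitions, uninformative observations.
T = [[[0.5, 0.5], [0.5, 0.5]], [[0.5, 0.5], [0.5, 0.5]]]   # T[s][a][s2]
O = [[[0.5, 0.5], [0.5, 0.5]], [[0.5, 0.5], [0.5, 0.5]]]   # O[s2][a][z]
R = [[1.0, 0.0], [0.0, 1.0]]                               # R[s][a]


def qmdp_alpha_vectors(iterations=500):
    """MDP value iteration followed by one Q-backup per action."""
    v = [0.0 for _ in S]
    for _ in range(iterations):
        v = [max(R[s][a] + GAMMA * sum(T[s][a][s2] * v[s2] for s2 in S) for a in A)
             for s in S]
    return [[R[s][a] + GAMMA * sum(T[s][a][s2] * v[s2] for s2 in S) for s in S]
            for a in A]


def fib_alpha_vectors(iterations=500):
    """Iterate Equation 18, starting from the QMDP alpha-vectors."""
    alphas = qmdp_alpha_vectors()
    for _ in range(iterations):
        alphas = [[R[s][a] + GAMMA * sum(
                       max(sum(O[s2][a][z] * T[s][a][s2] * alpha[s2] for s2 in S)
                           for alpha in alphas)
                       for z in Z)
                   for s in S] for a in A]
    return alphas


def bound(alphas, b):
    """Equation 13 applied to a set of alpha-vectors."""
    return max(sum(alpha[s] * b[s] for s in S) for alpha in alphas)


b = [0.5, 0.5]
u_qmdp = bound(qmdp_alpha_vectors(), b)
u_fib = bound(fib_alpha_vectors(), b)
```

In this particular model the belief is uniform after every step, so the optimal value at the uniform belief is 0.5 / (1 − γ) = 5. QMDP reports 9.5 because it assumes the state becomes known after one step, whereas FIB converges to 5.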

3. Online Algorithms for POMDPs

With offline approaches, the algorithm returns a policy defining which action to execute in every possible belief state. Such approaches tend to be applicable only when dealing with small to mid-size domains, since the policy construction step takes significant time. In large POMDPs, using a very rough value function approximation (such as the ones presented in Section 2.2) tends to substantially hinder the performance of the resulting approximate policy. Even more recent point-based methods produce solutions of limited quality in very large domains (Paquet et al., 2006).

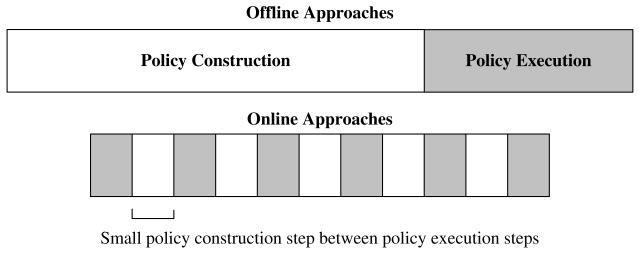

Hence in large POMDPs, a potentially better alternative is to use an online approach, which only tries to find a good local policy for the current belief state of the agent. The advantage of such an approach is that it only needs to consider belief states that are reachable from the current belief state. This focuses computation on a small set of beliefs. In addition, since online planning is done at every step (and thus generalization between beliefs is not required), it is sufficient to calculate only the maximal value for the current belief state, not the full optimal α-vector. In this setting, the policy construction steps and the execution steps are interleaved with one another as shown in Figure 1. In some cases, online approaches may require a few extra execution steps (and online planning), since the policy is locally constructed and therefore not always optimal. However, the policy construction time is often substantially shorter. Consequently, the overall time for the policy construction and execution is normally less for online approaches (Koenig, 2001). In practice, a potential limitation of online planning arises when short real-time constraints must be met. In such cases, the time available to construct the plan is very small compared to the time available to offline algorithms.

Figure 1. Comparison between offline and online approaches.

3.1 General Framework for Online Planning

This subsection presents a general framework for online planning algorithms in POMDPs. Subsequently, we discuss specific approaches from the literature and describe how they vary in tackling various aspects of this general framework.

An online algorithm is divided into a planning phase, and an execution phase, which are applied alternately at each time step.

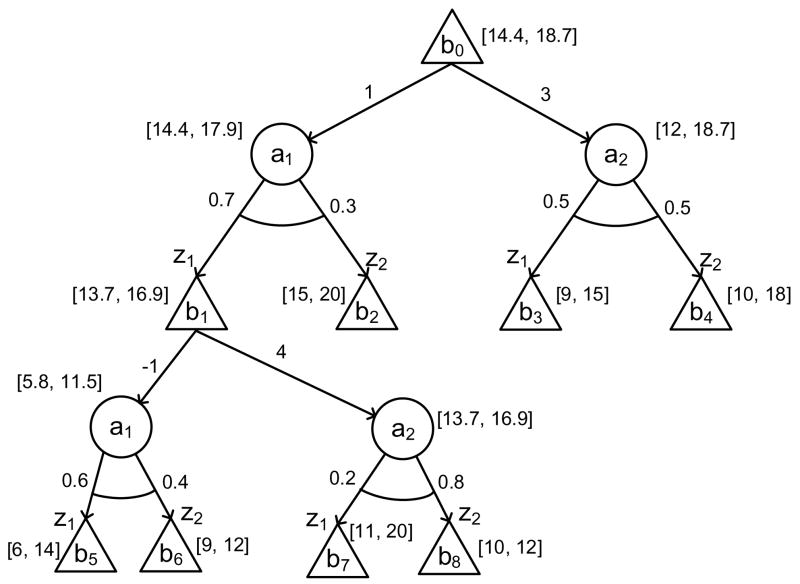

In the planning phase, the algorithm is given the current belief state of the agent and computes the best action to execute in that belief. This is usually achieved in two steps. First a tree of reachable belief states from the current belief state is built by looking at several possible sequences of actions and observations that can be taken from the current belief. In this tree, the current belief is the root node and subsequent reachable beliefs (as calculated by the τ(b, a, z) function of Equation 3) are added to the tree as child nodes of their immediate previous belief. Belief nodes are represented using OR-nodes (at which we must choose an action) and actions are included in between each layer of belief nodes using AND-nodes (at which we must consider all possible observations that lead to subsequent beliefs). Then the value of the current belief is estimated by propagating value estimates up from the fringe nodes, to their ancestors, all the way to the root, according to Bellman’s equation (Equation 8). The long-term value of belief nodes at the fringe is usually estimated using an approximate value function computed offline. Some methods also maintain both a lower bound and an upper bound on the value of each node. An example of how such a tree is constructed and evaluated is presented in Figure 2.

Figure 2. An AND-OR tree constructed by the search process for a POMDP with 2 actions and 2 observations. The belief-state OR-nodes are represented by triangular nodes and the action AND-nodes by circular nodes. The rewards RB(b, a) are represented by values on the outgoing arcs from OR-nodes and probabilities Pr(z|b, a) are shown on the outgoing arcs from AND-nodes. The values inside brackets represent the lower and upper bounds that were computed according to Equations 19 - 22, assuming a discount factor γ = 0.95. Also notice in this example that the action a1 in belief state b1 could be pruned since its upper bound (= 11.5) is lower than the lower bound (= 13.7) of action a2 in b1.

Once the planning phase terminates, the execution phase proceeds by executing the best action found for the current belief in the environment, and updating the current belief and tree according to the observation obtained.

Notice that in general, the belief MDP could have a graph structure with cycles. Most online algorithms handle such a structure by unrolling the graph into a tree. Hence, if they reach a belief that is already elsewhere in the tree, it is duplicated. These algorithms could always be modified to handle generic graph structures by using a technique proposed in the LAO* algorithm (Hansen & Zilberstein, 2001) to handle cycles. However there are some advantages and disadvantages to doing this. A more in-depth discussion of this issue is presented in Section 5.4.

A generic online algorithm implementing the planning phase (lines 5–9) and the execution phase (lines 10–13) is presented in Algorithm 3.1. The algorithm first sets the current belief to the initial belief state and initializes the tree to contain only that belief (lines 2–3). Then given the current tree, the planning phase of the algorithm proceeds by first selecting the next fringe node (line 6) under which it should pursue the search (construction of the tree). The Expand function (line 7) constructs the next reachable beliefs (using Equation 3) under the selected leaf for some pre-determined expansion depth D and evaluates the approximate value function for all newly created nodes. The new approximate value of the expanded node is propagated to its ancestors via the UpdateAncestors function (line 8). This planning phase is conducted until some terminating condition is met (e.g. no more planning time is available or an ε-optimal action is found).

Algorithm 3.1. Generic Online Algorithm.

| Line | Statement |
| --- | --- |
| 1: | Function OnlinePOMDPSolver() |
| | Static: bc: The current belief state of the agent. |
| | T: An AND-OR tree representing the current search tree. |
| | D: Expansion depth. |
| | L: A lower bound on V*. |
| | U: An upper bound on V*. |
| 2: | bc ← b0 |
| 3: | Initialize T to contain only bc at the root |
| 4: | while not ExecutionTerminated() do |
| 5: | while not PlanningTerminated() do |
| 6: | b* ← ChooseNextNodeToExpand() |
| 7: | Expand(b*, D) |
| 8: | UpdateAncestors(b*) |
| 9: | end while |
| 10: | Execute best action â for bc |
| 11: | Perceive a new observation z |
| 12: | bc ← τ(bc, â, z) |
| 13: | Update tree T so that bc is the new root |
| 14: | end while |

The execution phase of the algorithm executes the best action â found during planning (line 10) and gets a new observation from the environment (line 11). Next, the algorithm updates the current belief state and the search tree T according to the most recent action â and observation z (lines 12–13). Some online approaches can reuse previous computations by keeping the subtree under the new belief and resuming the search from this subtree at the next time step. In such cases, the algorithm keeps all the nodes in the tree T under the new belief bc and deletes all other nodes from the tree. Then the algorithm loops back to the planning phase for the next time step, and so on until the task is terminated.

As a side note, an online planning algorithm can also be useful to improve the precision of an approximate value function computed offline. This is captured in Theorem 3.1.

Theorem 3.1 (Puterman, 1994; Hauskrecht, 2000). Let V̂ be an approximate value function and $\epsilon = \sup_b |V^*(b) - \hat{V}(b)|$. Then the approximate value $\hat{V}_D(b)$ returned by a D-step lookahead from belief b, using V̂ to estimate fringe node values, has error bounded by $|V^*(b) - \hat{V}_D(b)| \le \gamma^D \epsilon$.

We notice that for γ ∈ [0, 1), the error converges to 0 as the depth D of the search tends to ∞. This indicates that an online algorithm can effectively improve the performance obtained by an approximate value function computed offline, and can find an action arbitrarily close to the optimal for the current belief. However, evaluating the tree of all reachable beliefs within depth D has a complexity in $O((|A||Z|)^D |S|^2)$, which is exponential in D. This quickly becomes intractable for large D. Furthermore, the planning time available during the execution may be very short and exploring all beliefs up to depth D may be infeasible. This motivates the need for more efficient online algorithms that can guarantee similar or better error bounds.
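The error contraction of Theorem 3.1 can be checked numerically on a model whose optimal value is known in closed form. The sketch below uses a hypothetical "guessing" model (uniform transitions, uninformative observations, reward 1 when the action index matches the state). For this model the optimal value is max(b) + 4.5 and the QMDP bound is 9 + max(b), so ε = 4.5; these closed forms, like all names in the code, are specific to this illustrative model.

```python
GAMMA = 0.9
S, A, Z = range(2), range(2), range(2)
# Hypothetical "guessing" model: every belief becomes uniform after one step.
T = [[[0.5, 0.5], [0.5, 0.5]], [[0.5, 0.5], [0.5, 0.5]]]   # T[s][a][s2]
O = [[[0.5, 0.5], [0.5, 0.5]], [[0.5, 0.5], [0.5, 0.5]]]   # O[s2][a][z]
R = [[1.0, 0.0], [0.0, 1.0]]                               # R[s][a]


def obs_prob(b, a, z):
    return sum(O[s2][a][z] * sum(T[s][a][s2] * b[s] for s in S) for s2 in S)


def belief_update(b, a, z):
    norm = obs_prob(b, a, z)
    return [O[s2][a][z] * sum(T[s][a][s2] * b[s] for s in S) / norm for s2 in S]


def v_hat(b):
    """Offline approximation used at the fringe (the QMDP bound of this model)."""
    return 9.0 + max(b)


def v_star(b):
    """Optimal value of this model in closed form."""
    return max(b) + 4.5


def lookahead(b, depth):
    """D-step lookahead value, using v_hat to evaluate fringe beliefs."""
    if depth == 0:
        return v_hat(b)
    best = float("-inf")
    for a in A:
        q = sum(b[s] * R[s][a] for s in S)
        for z in Z:
            p = obs_prob(b, a, z)
            if p > 0.0:
                q += GAMMA * p * lookahead(belief_update(b, a, z), depth - 1)
        best = max(best, q)
    return best


b = [0.7, 0.3]
eps = 4.5   # sup_b |V*(b) - v_hat(b)| for this model
errors = [abs(v_star(b) - lookahead(b, d)) for d in range(5)]
```

Here the bound happens to be tight: the error at depth D equals γ^D ε, i.e. 4.5, 4.05, 3.645 and so on, while the number of evaluated beliefs grows by a factor of |A||Z| = 4 per level.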

To be more efficient, most of the online algorithms focus on limiting the number of reachable beliefs explored in the tree (or choose only the most relevant ones). These approaches generally differ only in how the subroutines ChooseNextNodeToExpand and Expand are implemented. We classify these approaches into three categories: Branch-and-Bound Pruning, Monte Carlo Sampling and Heuristic Search. We now present a survey of these approaches and discuss their strengths and drawbacks. A few other online algorithms do not proceed via tree search; these approaches are discussed in Section 3.5.

3.2 Branch-and-Bound Pruning

Branch-and-Bound pruning is a general search technique used to prune nodes that are known to be suboptimal in the search tree, thus preventing the expansion of unnecessary lower nodes. To achieve this in the AND-OR tree, a lower bound and an upper bound are maintained on the value Q*(b, a) of each action a, for every belief b in the tree. These bounds are computed by first evaluating the lower and upper bound for the fringe nodes of the tree. These bounds are then propagated to parent nodes according to the following equations:

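$$L_T(b) = \begin{cases} L(b) & \text{if } b \in \mathcal{F}(T) \\ \max_{a \in A} L_T(b, a) & \text{otherwise} \end{cases} \tag{19}$$

$$L_T(b, a) = R_B(b, a) + \gamma \sum_{z \in Z} \Pr(z \mid b, a)\, L_T(\tau(b, a, z)) \tag{20}$$

$$U_T(b) = \begin{cases} U(b) & \text{if } b \in \mathcal{F}(T) \\ \max_{a \in A} U_T(b, a) & \text{otherwise} \end{cases} \tag{21}$$

$$U_T(b, a) = R_B(b, a) + \gamma \sum_{z \in Z} \Pr(z \mid b, a)\, U_T(\tau(b, a, z)) \tag{22}$$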

where ℱ(T) denotes the set of fringe nodes in tree T, UT(b) and LT(b) represent the upper and lower bounds on V*(b) associated to belief state b in the tree T, UT(b, a) and LT(b, a) represent corresponding bounds on Q*(b, a), and L(b) and U(b) are the bounds used at fringe nodes, typically computed offline. These equations have the same form as Bellman’s equation (Equation 8), but they use the lower and upper bounds of the children instead of V*. Several techniques presented in Section 2.2 can be used to quickly compute lower bounds (Blind policy) and upper bounds (MDP, QMDP, FIB) offline.

Given these bounds, the idea behind Branch-and-Bound pruning is relatively simple: if a given action a in a belief b has an upper bound UT (b, a) that is lower than another action ã’s lower bound LT (b, ã), then we know that ã is guaranteed to have a value Q*(b, ã) ≥ Q*(b, a). Thus a is suboptimal in belief b. Hence that branch can be pruned and no belief reached by taking action a in b will be considered.
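The bound propagation of Equations 19 to 22 and the pruning test can be sketched on an explicit AND-OR tree. The classes `OrNode` and `AndNode`, the functions `propagate` and `prunable`, and the numeric fringe bounds below are all hypothetical and serve only to illustrate the mechanics.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

GAMMA = 0.9


@dataclass
class OrNode:
    """Belief node; for a fringe node, lower/upper hold the offline bounds L(b), U(b)."""
    lower: float
    upper: float
    actions: Dict[str, "AndNode"] = field(default_factory=dict)


@dataclass
class AndNode:
    """Action node: immediate reward R_B(b, a) and (Pr(z | b, a), child) pairs."""
    reward: float
    children: List[Tuple[float, OrNode]]


def propagate(node: OrNode) -> Dict[str, Tuple[float, float]]:
    """Apply Equations 19-22 bottom-up; return (L_T(b, a), U_T(b, a)) per action."""
    bounds = {}
    for name, act in node.actions.items():
        for _, child in act.children:
            propagate(child)
        lo = act.reward + GAMMA * sum(p * c.lower for p, c in act.children)
        hi = act.reward + GAMMA * sum(p * c.upper for p, c in act.children)
        bounds[name] = (lo, hi)
    if bounds:   # internal node: maximize over actions; fringe nodes keep L(b), U(b)
        node.lower = max(lo for lo, _ in bounds.values())
        node.upper = max(hi for _, hi in bounds.values())
    return bounds


def prunable(bounds):
    """Actions whose upper bound is below the best lower bound at this belief."""
    best_lower = max(lo for lo, _ in bounds.values())
    return {a for a, (_, hi) in bounds.items() if hi < best_lower}


# Hypothetical depth-1 tree with made-up fringe bounds.
root = OrNode(0.0, 0.0, {
    "a1": AndNode(1.0, [(0.5, OrNode(2.0, 4.0)), (0.5, OrNode(0.0, 2.0))]),
    "a2": AndNode(3.0, [(1.0, OrNode(2.0, 3.0))]),
})
bounds = propagate(root)
pruned = prunable(bounds)
```

In this example the bounds for a1 are (1.9, 3.7) and those for a2 are (4.8, 5.7), so a1 is pruned because its upper bound lies below the lower bound of a2.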

3.2.1 RTBSS

The Real-Time Belief Space Search (RTBSS) algorithm uses a Branch-and-Bound approach to compute the best action to take in the current belief (Paquet et al., 2005, 2006). Starting from the current belief, it expands the AND-OR tree in a depth-first search fashion, up to some pre-determined search depth D. The leaves of the tree are evaluated by using a lower bound computed offline, which is propagated upwards such that a lower bound is maintained for each node in the tree.

To limit the number of nodes explored, Branch-and-Bound pruning is used along the way to prune actions that are known to be suboptimal, thus excluding unnecessary nodes under these actions. To maximize pruning, RTBSS expands the actions in descending order of their upper bound (first action expanded is the one with highest upper bound). By expanding the actions in this order, one never expands an action that could have been pruned if actions had been expanded in a different order. Intuitively, if an action has a higher upper bound than the other actions, then it cannot be pruned by the other actions since their lower bound will never exceed their upper bound. Another advantage of expanding actions in descending order of their upper bound is that as soon as we find an action that can be pruned, then we also know that all remaining actions can be pruned, since their upper bounds are necessarily lower. The fact that RTBSS proceeds via a depth-first search also increases the number of actions that can be pruned since the bounds on expanded actions become more precise due to the search depth.

In terms of the framework in Algorithm 3.1, RTBSS requires the ChooseNextNodeToExpand subroutine to simply return the current belief bc. The UpdateAncestors function does not need to perform any operation since bc has no ancestor (root of the tree T). The Expand subroutine proceeds via depth-first search up to a fixed depth D, using Branch-and-Bound pruning, as mentioned above. This subroutine is detailed in Algorithm 3.2. After this expansion is performed, PlanningTerminated evaluates to true and the best action found is executed. At the end of each time step, the tree T is simply reinitialized to contain the new current belief at the root node.

Algorithm 3.2.

Expand subroutine of RTBSS.

| 1: | Function Expand(b, d) |

| Inputs: b: The belief node we want to expand. | |

| d: The depth of expansion under b. | |

| Static: T: An AND-OR tree representing the current search tree. | |

| L: A lower bound on V *. | |

| U: An upper bound on V *. | |

| 2: | if d = 0 then |

| 3: | LT (b) ← L(b) |

| 4: | else |

| 5: | Sort actions {a1, a2, …, a|A|} such that U(b, ai) ≥ U(b, aj) if i ≤ j |

| 6: | i ← 1 |

| 7: | LT (b) ← −∞ |

| 8: | while i ≤ |A| and U(b, ai) > LT (b) do |

| 9: | LT (b, ai) ← RB(b, ai) + γ Σz ∈ Z Pr(z|b, ai)Expand(τ(b, ai, z), d − 1) |

| 10: | LT (b) ← max{LT (b), LT (b, ai)} |

| 11: | i ← i + 1 |

| 12: | end while |

| 13: | end if |

| 14: | return LT (b) |
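Algorithm 3.2 can be sketched in Python as follows. This is a minimal illustration rather than the authors' implementation: it assumes the classic two-state Tiger problem as the model, uses deliberately crude constant bounds (always listening for L, the best reward forever for U), and returns the best action together with the value. All function names are hypothetical.

```python
GAMMA = 0.95
S, A, Z = range(2), range(3), range(2)  # Tiger: states, actions, observations
LISTEN = 0                              # actions 1 and 2 open door 0 and door 1


def trans(s, a, s2):  # Pr(s2 | s, a): listening keeps the state, opening resets it
    return (1.0 if s == s2 else 0.0) if a == LISTEN else 0.5


def obs(a, s2, z):  # Pr(z | s2, a): listening is 85% accurate
    return (0.85 if z == s2 else 0.15) if a == LISTEN else 0.5


def reward(s, a):
    if a == LISTEN:
        return -1.0
    return -100.0 if a - 1 == s else 10.0  # opening the tiger's door is costly


def r_b(b, a):  # expected immediate reward R_B(b, a)
    return sum(b[s] * reward(s, a) for s in S)


def pr_z(b, a, z):  # Pr(z | b, a)
    return sum(b[s] * trans(s, a, s2) * obs(a, s2, z) for s in S for s2 in S)


def tau(b, a, z):  # belief update tau(b, a, z)
    unnorm = [sum(b[s] * trans(s, a, s2) for s in S) * obs(a, s2, z) for s2 in S]
    total = sum(unnorm)
    return tuple(p / total for p in unnorm)


def L(b):  # assumed offline lower bound: value of listening forever
    return -1.0 / (1.0 - GAMMA)


U_MAX = 10.0 / (1.0 - GAMMA)  # assumed offline upper bound: best reward forever


def upper_q(b, a):  # U(b, a) obtained from the constant upper bound
    return r_b(b, a) + GAMMA * U_MAX


def expand(b, d):
    """Depth-first Branch-and-Bound search; returns (lower bound, best action)."""
    if d == 0:
        return L(b), None
    best_value, best_action = float("-inf"), None
    # Expand actions in descending order of their upper bound.
    for a in sorted(A, key=lambda act: upper_q(b, act), reverse=True):
        if upper_q(b, a) <= best_value:
            break  # this action and all remaining ones are pruned
        q = r_b(b, a)
        for z in Z:
            p = pr_z(b, a, z)
            if p > 0.0:
                q += GAMMA * p * expand(tau(b, a, z), d - 1)[0]
        if q > best_value:
            best_value, best_action = q, a
    return best_value, best_action


value, action = expand((0.5, 0.5), 2)
```

With bounds this loose almost nothing is pruned, which illustrates the point made in the text: the efficiency of the search depends on how tight the offline bounds are.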

The efficiency of RTBSS depends largely on the precision of the lower and upper bounds computed offline. When the bounds are tight, more pruning will be possible, and the search will be more efficient. If the algorithm is unable to prune many actions, searching will be limited to short horizons in order to meet real-time constraints. Another drawback of RTBSS is that it explores all observations equally. This is inefficient since the algorithm could explore parts of the tree that have a small probability of occurring and thus have a small effect on the value function. As a result, when the number of observations is large, the algorithm is limited to exploring over a short horizon.

As a final note, since RTBSS explores all reachable beliefs within depth D (except some reached by suboptimal actions), it can guarantee the same error bound as a D-step lookahead (see Theorem 3.1). Therefore, the online search directly improves the precision of the original (offline) value bounds by a factor γ^D. This aspect was confirmed empirically in different domains where the RTBSS authors combined their online search with bounds given by various offline algorithms. In some cases, their results showed a tremendous improvement of the policy given by the offline algorithm (Paquet et al., 2006).
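To give a sense of scale for this factor, consider the illustrative values γ = 0.95 and D = 5 or D = 20 (assumed here, not taken from the experiments), and write ε for the worst-case error of the offline bound (a symbol introduced only for this example):

$$
\gamma^{D}\,\varepsilon = 0.95^{5}\,\varepsilon \approx 0.774\,\varepsilon,
\qquad
0.95^{20}\,\varepsilon \approx 0.358\,\varepsilon .
$$

Under these assumptions, a depth-5 search removes roughly a quarter of the offline error, and a depth-20 search roughly two thirds.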

3.3 Monte Carlo Sampling

As mentioned above, expanding the search tree fully over a large set of observations is infeasible except for shallow depths. In such cases, a better alternative may be to sample a subset of observations at each expansion and only consider beliefs reached by these sampled observations. This reduces the branching factor of the search and allows for deeper search within a set planning time. This is the strategy employed by Monte Carlo algorithms.

3.3.1 McAllester and Singh

The approach presented by McAllester and Singh (1999) is an adaptation of the online MDP algorithm presented by Kearns, Mansour, and Ng (1999). It consists of a depth-limited search of the AND-OR tree up to a certain fixed horizon D where instead of exploring all observations at each action choice, C observations are sampled from a generative model. The probabilities Pr(z|b, a) are then approximated using the observed frequencies in the sample. The advantage of such an approach is that sampling an observation from the distribution Pr(z|b, a) can be achieved very efficiently in O(log |S| + log |Z|), while computing the exact probabilities Pr(z|b, a) is in O(|S|^2) for each observation z. Thus sampling can be useful to alleviate the complexity of computing Pr(z|b, a), at the expense of a less precise estimate. Nevertheless, a few samples are often sufficient to obtain a good estimate as the observations that have the most effect on Q*(b, a) (i.e. those which occur with high probability) are more likely to be sampled. The authors also apply belief state factorization as in Boyen and Koller (1998) to simplify the belief state calculations.
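The frequency approximation can be sketched as follows. This is an illustration only: it assumes the observation distribution is available as a dictionary so that sampling can be simulated, whereas the algorithm itself draws observations from a generative model without computing Pr(z|b, a). The function name is hypothetical.

```python
import random
from collections import Counter


def sampled_observation_weights(pr_z, num_samples, rng):
    """Approximate Pr(z|b, a) by observed frequencies among C sampled observations.

    pr_z maps each observation to its probability and stands in for the
    generative model. Observations that are never sampled are simply absent
    from the result, so the search does not branch on them.
    """
    observations = list(pr_z)
    weights = [pr_z[z] for z in observations]
    sample = rng.choices(observations, weights=weights, k=num_samples)
    counts = Counter(sample)
    return {z: n / num_samples for z, n in counts.items()}


rng = random.Random(0)
estimate = sampled_observation_weights({"z1": 0.7, "z2": 0.2, "z3": 0.1}, 20, rng)
```

The branching factor under each action is at most C regardless of |Z|, which is what allows the deeper search.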

For the implementation of this algorithm, the Expand subroutine expands the tree up to fixed depth D, using Monte Carlo sampling of observations, as mentioned above (see Algorithm 3.3). At the end of each time step, the tree T is reinitialized to contain only the new current belief at the root.

Algorithm 3.3.

Expand subroutine of McAllester and Singh’s Algorithm.

| 1: | Function Expand(b, d) |

| Inputs: b: The belief node we want to expand. | |

| d: The depth of expansion under b. | |

| Static: T: An AND-OR tree representing the current search tree. | |

| C: The number of observations to sample. | |

| 2: | if d = 0 then |

| 3: | LT (b) ← maxa ∈ A RB(b, a) |

| 4: | else |

| 5: | LT (b) ← −∞ |

| 6: | for all a ∈ A do |

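| 7: | Sample $\hat{Z} = \{z_1, z_2, \ldots, z_C\}$ from distribution Pr(z|b, a) |

| 8: | $L_T(b, a) \leftarrow R_B(b, a) + \gamma \sum_{z \in Z :\, N_z(\hat{Z}) > 0} \frac{N_z(\hat{Z})}{C}\, \mathrm{Expand}(\tau(b, a, z), d - 1)$, where $N_z(\hat{Z})$ is the number of occurrences of z in $\hat{Z}$ |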

| 9: | LT (b) ← max{LT (b), LT (b, a)} |

| 10: | end for |

| 11: | end if |

| 12: | return LT (b) |

Kearns et al. (1999) derive bounds on the depth D and the number of samples C needed to obtain an ε-optimal policy with high probability and show that the number of samples required grows exponentially in the desired accuracy. In practice, the number of samples required is infeasible given realistic online time constraints. However, performance in terms of returns is usually good even with many fewer samples.

One inconvenience of this method is that no action pruning can be done since Monte Carlo estimation is not guaranteed to correctly propagate the lower (and upper) bound property up the tree. In their article, the authors simply approximate the value of the fringe belief states by the immediate reward RB(b, a); this could be improved by using any good estimate of V * computed offline. Note also that this approach may be difficult to apply in domains where the number of actions |A| is large, since every action is still expanded at every belief node, which further limits the search depth achievable within the planning time.

3.3.2 Rollout

Another similar online Monte Carlo approach is the Rollout algorithm (Bertsekas & Castanon, 1999). The algorithm requires an initial policy (possibly computed offline). At each time step, it estimates the future expected value of each action, assuming the initial policy is followed at future time steps, and executes the action with highest estimated value. These estimates are obtained by computing the average discounted return obtained over a set of M sampled trajectories of depth D. These trajectories are generated by first taking the action to be evaluated, and then following the initial policy in subsequent belief states, assuming the observations are sampled from a generative model. Since this approach only needs to consider different actions at the root belief node, the number of actions |A| only influences the branching factor at the first level of the tree. Consequently, it is generally more scalable than McAllester and Singh’s approach. Bertsekas and Castanon (1999) also show that with enough sampling, the resulting policy is guaranteed to perform at least as well as the initial policy with high probability. However, it generally requires many sampled trajectories to provide substantial improvement over the initial policy. Furthermore, the initial policy has a significant impact on the performance of this approach. In particular, in some cases it might be impossible to improve the return of the initial policy by just changing the immediate action (e.g. if several steps need to be changed to reach a specific subgoal to which higher rewards are associated). In those cases, the Rollout policy will never improve over the initial policy.

To address this issue relative to the initial policy, Chang, Givan, and Chong (2004) introduced a modified version of the algorithm, called Parallel Rollout. In this case, the algorithm starts with a set of initial policies. Then the algorithm proceeds as Rollout for each of the initial policies in the set. The value considered for the immediate action is the maximum over that set of initial policies, and the action with highest value is executed. In this algorithm, the policy obtained is guaranteed to perform at least as well as the best initial policy with high probability, given enough samples. Parallel Rollout can handle domains with a large number of actions and observations, and will perform well when the set of initial policies contain policies that are good in different regions of the belief space.

The Expand subroutine of the Parallel Rollout algorithm is presented in Algorithm 3.4. The original Rollout algorithm by Bertsekas and Castanon (1999) is the same algorithm in the special case where the set of initial policies contains only one policy. The other subroutines proceed as in McAllester and Singh’s algorithm.

Algorithm 3.4.

Expand subroutine of the Parallel Rollout Algorithm.

| 1: | Function Expand(b, d) |

| Inputs: b: The belief node we want to expand. | |

| d: The depth of expansion under b. | |

| Static: T: An AND-OR tree representing the current search tree. | |

| Π: A set of initial policies. | |

| M: The number of trajectories of depth d to sample. | |

| 2: | LT (b) ← −∞ |

| 3: | for all a ∈ A do |

| 4: | for all π ∈ Π do |

| 5: | Q̂π(b, a) ← 0 |

| 6: | for i = 1 to M do |

| 7: | b̃ ← b |

| 8: | ã ← a |

| 9: | for j = 0 to d do |

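| 10: | $\hat{Q}_\pi(b, a) \leftarrow \hat{Q}_\pi(b, a) + \frac{1}{M}\, \gamma^{j} R_B(\tilde{b}, \tilde{a})$ |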

| 11: | z ← SampleObservation(b̃, ã) |

| 12: | b̃ ← τ(b̃, ã, z) |

| 13: | ã ← π(b̃) |

| 14: | end for |

| 15: | end for |

| 16: | end for |

| 17: | LT (b, a) = maxπ∈ Π Q̂π (b, a) |

| 18: | end for |
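The estimate computed by Algorithm 3.4 for a single root action can be sketched in Python. This is a minimal illustration: `simulate` is an assumed stand-in for the generative model (it returns the immediate reward and the next belief after sampling an observation), and the toy model used to exercise it is hypothetical.

```python
import random


def parallel_rollout_q(b, a, policies, simulate, depth, num_traj, gamma, rng):
    """Estimate the value of taking action a in belief b under Parallel Rollout.

    For each base policy, average the discounted return of num_traj sampled
    trajectories that start with action a and then follow that policy; the
    estimate is the maximum of these averages over the policy set.
    """
    best = float("-inf")
    for policy in policies:
        total = 0.0
        for _ in range(num_traj):
            belief, action = b, a
            for j in range(depth + 1):
                r, belief = simulate(belief, action, rng)
                total += (gamma ** j) * r
                action = policy(belief)
        best = max(best, total / num_traj)
    return best


def toy_simulate(belief, action, rng):
    # Hypothetical deterministic model: reward 1 for "good", belief unchanged.
    return (1.0 if action == "good" else 0.0), belief


policies = [lambda belief: "good", lambda belief: "bad"]
q_bad = parallel_rollout_q(0, "bad", policies, toy_simulate, 2, 4, 0.5, random.Random(0))
q_good = parallel_rollout_q(0, "good", policies, toy_simulate, 2, 4, 0.5, random.Random(0))
```

Passing a single policy in `policies` gives the original Rollout estimate. Only the root action varies, so the cost grows linearly in |A| rather than exponentially in the depth.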

3.4 Heuristic Search

Instead of using Branch-and-Bound pruning or Monte Carlo sampling to reduce the branching factor of the search, heuristic search algorithms try to focus the search on the most relevant reachable beliefs by using heuristics to select the best fringe belief node to expand. The most relevant reachable beliefs are the ones that would allow the search algorithm to make good decisions as quickly as possible, i.e. by expanding as few nodes as possible.

There are three different online heuristic search algorithms for POMDPs that have been proposed in the past: Satia and Lave (1973), BI-POMDP (Washington, 1997) and AEMS (Ross & Chaib-draa, 2007). These algorithms all maintain both lower and upper bounds on the value of each node in the tree (using Equations 19 – 22) and only differ in the specific heuristic used to choose the next fringe node to expand in the AND/OR tree. We first present the common subroutines for these algorithms, and then discuss their different heuristics.

Recalling the general framework of Algorithm 3.1, three steps are interleaved several times in heuristic search algorithms. First, the best fringe node to expand (according to the heuristic) in the current search tree T is found. Then the tree is expanded under this node (usually for only one level). Finally, ancestor nodes’ values are updated; their values must be updated before we choose the next node to expand, since the heuristic value usually depends on them. In general, heuristic search algorithms are slightly more computationally expensive than standard depth- or breadth-first search algorithms, due to the extra computations needed to select the best fringe node to expand, and the need to update ancestors at each iteration. This was not required by the previous methods using Branch-and-Bound pruning and/or Monte Carlo sampling. If the complexity of these extra steps is too high, then the benefit of expanding only the most relevant nodes might be outweighed by the lower number of nodes expanded (assuming a fixed planning time).

In heuristic search algorithms, a particular heuristic value is associated with every fringe node in the tree. This value should indicate how important it is to expand this node in order to improve the current solution. At each iteration of the algorithm, the goal is to find the fringe node that maximizes this heuristic value among all fringe nodes. This can be achieved efficiently by storing in each node of the tree a reference to the best fringe node to expand within its subtree, as well as its associated heuristic value. In particular, the root node always contains a reference to the best fringe node for the whole tree. When a node is expanded, its ancestors are the only nodes in the tree where the best fringe node reference, and corresponding heuristic value, need to be updated. These can be updated efficiently by using the references and heuristic values stored in the lower nodes via a dynamic programming algorithm, described formally in Equations 23 and 24. Here $H^*_T(b)$ denotes the highest heuristic value among the fringe nodes in the subtree of b, $b^*_T(b)$ is a reference to this fringe node, HT (b) is the basic heuristic value associated to fringe node b, and HT (b, a) and HT (b, a, z) are factors that weigh this basic heuristic value at each level of the tree T. For example, HT (b, a, z) could be Pr(z|b, a) in order to give higher weight to (and hence favor) fringe nodes that are reached by the most likely observations.

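$$
H^*_T(b) = \begin{cases} H_T(b) & \text{if } b \in \mathcal{F}(T) \\ \max_{a \in A} H_T(b, a)\, H^*_T(b, a) & \text{otherwise} \end{cases}
\qquad
H^*_T(b, a) = \max_{z \in Z} H_T(b, a, z)\, H^*_T(\tau(b, a, z))
\tag{23}
$$

$$
\begin{aligned}
b^*_T(b) &= \begin{cases} b & \text{if } b \in \mathcal{F}(T) \\ b^*_T(b, a^T_b) & \text{otherwise} \end{cases} \\
b^*_T(b, a) &= b^*_T(\tau(b, a, z^T_{b,a})) \\
a^T_b &= \operatorname{argmax}_{a \in A} H_T(b, a)\, H^*_T(b, a) \\
z^T_{b,a} &= \operatorname{argmax}_{z \in Z} H_T(b, a, z)\, H^*_T(\tau(b, a, z))
\end{aligned}
\tag{24}
$$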

This procedure finds the fringe node b ∈ ℱ(T) that maximizes the overall heuristic value $H_T(b_c, b) = H_T(b) \prod_{i=1}^{d_T(b)} H_T(b_i, a_i)\, H_T(b_i, a_i, z_i)$, where bi, ai and zi represent the ith belief, action and observation on the path from bc to b in T, and dT (b) is the depth of fringe node b. Note that $H^*_T$ and $b^*_T$ are only updated in the ancestor nodes of the last expanded node. By reusing previously computed values for the other nodes, we have a procedure that can find the best fringe node to expand in the tree in time linear in the depth of the tree (versus exponential in the depth of the tree for the exhaustive search through all fringe nodes). These updates are performed in both the Expand and the UpdateAncestors subroutines, each of which is described in more detail below. After each iteration, the ChooseNextNodeToExpand subroutine simply returns the reference to this best fringe node stored in the root of the tree, i.e. $b^*_T(b_c)$.
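The bookkeeping described here can be sketched in Python. This is a minimal illustration under assumptions: each belief node stores its basic heuristic value, the best heuristic value found in its subtree and a reference to the corresponding fringe node, and the per-action and per-observation weights are stored with the children. The class and function names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(eq=False)
class BeliefNode:
    name: str
    h: float = 0.0  # basic heuristic value, used while the node is a fringe node
    # children[a] = (action weight, {z: (observation weight, child BeliefNode)})
    children: dict = field(default_factory=dict)
    best_value: float = 0.0                      # best weighted value in the subtree
    best_fringe: Optional["BeliefNode"] = None   # fringe node achieving best_value

    def __post_init__(self):
        # A newly created node is a fringe node: it is its own best fringe node.
        self.best_value, self.best_fringe = self.h, self


def refresh(node):
    """Recompute a node's best fringe reference from the values stored in its children."""
    if not node.children:
        node.best_value, node.best_fringe = node.h, node
        return
    node.best_value, node.best_fringe = float("-inf"), None
    for action_weight, branches in node.children.values():
        for obs_weight, child in branches.values():
            value = action_weight * obs_weight * child.best_value
            if value > node.best_value:
                node.best_value, node.best_fringe = value, child.best_fringe


leaf_a, leaf_b, leaf_c = BeliefNode("A", 2.0), BeliefNode("B", 10.0), BeliefNode("C", 100.0)
root = BeliefNode("root")
root.children = {
    "a1": (1.0, {"z1": (0.7, leaf_a), "z2": (0.3, leaf_b)}),
    "a2": (0.0, {"z1": (1.0, leaf_c)}),  # weight 0: e.g. a pruned action
}
refresh(root)
first_choice = root.best_fringe.name
```

After a fringe node is expanded, calling `refresh` on it and then on each of its ancestors up to the root is enough to restore the invariant, which is why the cost per iteration is linear in the depth of the tree.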

The Expand subroutine used by heuristic search methods is presented in Algorithm 3.5. It performs a one-step lookahead under the fringe node b. The main difference with respect to previous methods in Sections 3.2 and 3.3 is that the heuristic value and best fringe node to expand for these new nodes are computed at lines 7–8 and 12–14. The best leaf node in b’s subtree and its heuristic value are then computed according to Equations 23 and 24 (lines 18–20).

Algorithm 3.5.

Expand: Expand subroutine for heuristic search algorithms.

| 1: | Function Expand(b) | |

| Inputs: b: An OR-Node we want to expand. | ||

| Static: bc: The current belief state of the agent. | ||

| T: An AND-OR tree representing the current search tree. | ||

| L: A lower bound on V *. | ||

| U: An upper bound on V *. | ||

| 2: | for each a ∈ A do | |

| 3: | for each z ∈ Z do | |

| 4: | b′ ← τ(b, a, z) | |

| 5: | UT (b′) ← U(b′) | |

| 6: | LT (b′) ← L(b′) | |

| 7: | $H^*_T(b') \leftarrow H_T(b')$ | |

| 8: | $b^*_T(b') \leftarrow b'$ | |

| 9: | end for | |

| 10: | LT (b, a) ← RB(b, a) + γ Σz ∈ Z Pr(z|b, a)LT (τ(b, a, z)) | |

| 11: | UT (b, a) ← RB(b, a) + γ Σz ∈ Z Pr(z|b, a)UT (τ(b, a, z)) | |

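| 12: | $z^T_{b,a} \leftarrow \operatorname{argmax}_{z \in Z} H_T(b, a, z)\, H^*_T(\tau(b, a, z))$ | |

| 13: | $H^*_T(b, a) \leftarrow H_T(b, a, z^T_{b,a})\, H^*_T(\tau(b, a, z^T_{b,a}))$ | |

| 14: | $b^*_T(b, a) \leftarrow b^*_T(\tau(b, a, z^T_{b,a}))$ | |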

| 15: | end for | |

| 16: | LT (b) ← max{maxa ∈ A LT (b, a), LT (b)} | |

| 17: | UT (b) ← min{maxa ∈ A UT (b, a), UT (b)} | |

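| 18: | $a^T_b \leftarrow \operatorname{argmax}_{a \in A} H_T(b, a)\, H^*_T(b, a)$ | |

| 19: | $H^*_T(b) \leftarrow H_T(b, a^T_b)\, H^*_T(b, a^T_b)$ | |

| 20: | $b^*_T(b) \leftarrow b^*_T(b, a^T_b)$ | |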

The UpdateAncestors function is presented in Algorithm 3.6. The goal of this function is to update the bounds of the ancestor nodes, and find the best fringe node to expand next. Starting from a given OR-Node b′, the function simply updates recursively the ancestor nodes of b′ in a bottom-up fashion, using Equations 19–22 to update the bounds and Equations 23–24 to update the reference to the best fringe to expand and its heuristic value. Notice that the UpdateAncestors function can reuse information already stored in the node objects, such that it does not need to recompute τ(b, a, z), Pr(z|b, a) and RB(b, a). However it may need to recompute HT (b, a, z) and HT (b, a) according to the new bounds, depending on how the heuristic is defined.

Algorithm 3.6.

UpdateAncestors: Updates the bounds of all ancestors of an OR-Node

| 1: | Function UpdateAncestors(b′) |

| Inputs: b′: An OR-Node for which we want to update all its ancestors. | |

| Static: bc: The current belief state of the agent. | |

| T: An AND-OR tree representing the current search tree. | |

| L: A lower bound on V *. | |

| U: An upper bound on V *. | |

| 2: | while b′ ≠ bc do |

| 3: | Set (b, a) so that action a in belief b is parent node of belief node b′ |

| 4: | LT (b, a) ← RB(b, a) + γ Σz ∈ Z Pr(z|b, a)LT (τ(b, a, z)) |

| 5: | UT (b, a) ← RB(b, a) + γ Σz ∈ Z Pr(z|b, a)UT (τ(b, a, z)) |

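| 6: | $z^T_{b,a} \leftarrow \operatorname{argmax}_{z \in Z} H_T(b, a, z)\, H^*_T(\tau(b, a, z))$ |

| 7: | $H^*_T(b, a) \leftarrow H_T(b, a, z^T_{b,a})\, H^*_T(\tau(b, a, z^T_{b,a}))$ |

| 8: | $b^*_T(b, a) \leftarrow b^*_T(\tau(b, a, z^T_{b,a}))$ |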

| 9: | LT (b) ← maxa′ ∈ A LT (b, a′) |

| 10: | UT (b) ← maxa′ ∈ A UT (b, a′) |

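| 11: | $a^T_b \leftarrow \operatorname{argmax}_{a' \in A} H_T(b, a')\, H^*_T(b, a')$ |

| 12: | $H^*_T(b) \leftarrow H_T(b, a^T_b)\, H^*_T(b, a^T_b)$ |

| 13: | $b^*_T(b) \leftarrow b^*_T(b, a^T_b)$ |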

| 14: | b′ ← b |

| 15: | end while |

Due to the anytime nature of these heuristic search algorithms, the search usually keeps going until an ε-optimal action is found for the current belief bc, or the available planning time is elapsed. An ε-optimal action is found whenever UT (bc) − LT (bc) ≤ ε or LT (bc) ≥ UT (bc, a′), ∀a′ ≠ argmaxa∈A LT (bc, a) (i.e. all other actions are pruned, in which case the optimal action has been found).
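The stopping test can be sketched as follows. This is a minimal illustration, assuming the root bounds are taken as the maxima of the per-action bounds at the current belief; the function name and the dictionaries are hypothetical.

```python
def epsilon_optimal_action_found(lower_q, upper_q, eps):
    """Check the two stopping conditions at the root belief.

    lower_q[a] and upper_q[a] are the current bounds on Q*(bc, a).
    """
    best = max(lower_q, key=lower_q.get)  # action with the highest lower bound
    root_lower = lower_q[best]
    root_upper = max(upper_q.values())
    if root_upper - root_lower <= eps:
        return True
    # Otherwise stop only if every other action is dominated (pruned).
    return all(root_lower >= upper_q[a] for a in upper_q if a != best)


done_by_pruning = epsilon_optimal_action_found({"a": 1.0, "b": 0.5}, {"a": 3.0, "b": 0.9}, 0.1)
keep_searching = epsilon_optimal_action_found({"a": 1.0, "b": 0.5}, {"a": 3.0, "b": 2.0}, 0.1)
```

In the first call the gap at the root is still 2.0, but action "b" can no longer beat "a", so the search can stop; in the second call neither condition holds.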

Now that we have covered the basic subroutines, we present the different heuristics proposed by Satia and Lave (1973), Washington (1997) and Ross and Chaib-draa (2007). We begin by introducing some useful notation.

Given any graph structure G, let us denote ℱ(G) the set of all fringe nodes in G and ℋG(b, b′) the set of all sequences of actions and observations that lead to belief node b′ from belief node b in the search graph G. If we have a tree T, then ℋT (b, b′) will contain at most a single sequence which we will denote $h_T^{b,b'}$. Now given a sequence h ∈ ℋG(b, b′), we define Pr(hz|b, ha) the probability that we observe the whole sequence of observations hz in h, given that we start in belief node b and perform the whole sequence of actions ha in h. Finally, we define Pr(h|b, π) to be the probability that we follow the entire action/observation sequence h if we start in belief b and behave according to policy π. Formally, these probabilities are computed as follows:

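$$
\Pr(h_z \mid b, h_a) = \prod_{i=1}^{d(h)} \Pr(h_z^i \mid b^{h_{i-1}}, h_a^i)
\tag{25}
$$

$$
\Pr(h \mid b, \pi) = \prod_{i=1}^{d(h)} \Pr(h_z^i \mid b^{h_{i-1}}, h_a^i)\, \pi(b^{h_{i-1}}, h_a^i)
\tag{26}
$$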

where d(h) represents the depth of h (number of actions in the sequence h), $h_a^i$ denotes the ith action in sequence h, $h_z^i$ the ith observation in the sequence h, and $b^{h_i}$ the belief state obtained by taking the first i actions and observations of the sequence h from b. Note that $b^{h_0} = b$.

3.4.1 Satia and Lave

The approach of Satia and Lave (1973) follows the heuristic search framework presented above. The main feature of this approach is to explore, at each iteration, the fringe node b in the current search tree T that maximizes the following term:

$$
\gamma^{d(h_T^{b_c,b})}\, \Pr(h_{T,z}^{b_c,b} \mid b_c, h_{T,a}^{b_c,b})\, (U_T(b) - L_T(b))
\tag{27}
$$

where b ∈ ℱ(T) and bc is the root node of T. The intuition behind this heuristic is simple: recalling the definition of V *, we note that the weight of the value V *(b) of a fringe node b on V *(bc) would be exactly $\gamma^{d(h_T^{b_c,b})}\, \Pr(h_{T,z}^{b_c,b} \mid b_c, h_{T,a}^{b_c,b})$, provided $h_{T,a}^{b_c,b}$ is a sequence of optimal actions. The fringe nodes where this weight is high have the most effect on the estimate of V *(bc). Hence one should try to minimize the error at these nodes first. The term UT (b) − LT (b) is included since it is an upper bound on the (unknown) error V *(b) − LT (b). Thus this heuristic focuses the search in areas of the tree that most affect the value V *(bc) and where the error is possibly large. This approach also uses Branch-and-Bound pruning, such that a fringe node reached by an action that is dominated in some parent belief b is never going to be expanded. Using the same notation as in Algorithms 3.5 and 3.6, this heuristic can be implemented by defining HT (b), HT (b, a) and HT (b, a, z), as follows:

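$$
H_T(b) = U_T(b) - L_T(b), \qquad
H_T(b, a) = \begin{cases} 1 & \text{if } U_T(b, a) > L_T(b) \\ 0 & \text{otherwise} \end{cases}, \qquad
H_T(b, a, z) = \gamma \Pr(z \mid b, a)
\tag{28}
$$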

The condition UT (b, a) > LT (b) ensures that the global heuristic value HT (bc, b′) = 0 if some action in the sequence is dominated (pruned). This guarantees that such fringe nodes will never be expanded.

Satia and Lave’s heuristic focuses the search towards beliefs that are most likely to be reached in the future, and where the error is large. This heuristic is likely to be efficient in domains with a large number of observations, but only if the probability distribution over observations is concentrated over only a few observations. The term UT (b) − LT (b) in the heuristic also prevents the search from doing unnecessary computations in areas of the tree where it already has a good estimate of the value function. This term is most efficient when the bounds computed offline, U and L, are sufficiently informative. Similarly, node pruning is only going to be efficient if U and L are sufficiently tight, otherwise few actions will be pruned.

3.4.2 BI-POMDP

Washington (1997) proposed a slightly different approach inspired by the AO* algorithm (Nilsson, 1980), where the search is only conducted in the best solution graph. In the case of online POMDPs, this corresponds to the subtree of all belief nodes that are reached by sequences of actions maximizing the upper bound in their parent beliefs.

The set of fringe nodes in the best solution graph of G, which we denote ℱ̂(G), can be defined formally as ℱ̂(G) = {b ∈ ℱ(G)| ∃h ∈ HG(bc, b), Pr(h|b, π̂G) > 0}, where π̂G(b, a) = 1 if a = argmaxa′ ∈ A UG(b, a′) and π̂G(b, a) = 0 otherwise. The AO* algorithm simply specifies expanding any of these fringe nodes. Washington (1997) recommends exploring the fringe node in ℱ̂(G) (where G is the current acyclic search graph) that maximizes UG(b) − LG(b). Washington’s heuristic can be implemented by defining HT (b), HT (b, a) and HT (b, a, z), as follows:

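$$
H_T(b) = U_T(b) - L_T(b), \qquad
H_T(b, a) = \begin{cases} 1 & \text{if } a = \operatorname{argmax}_{a' \in A} U_T(b, a') \\ 0 & \text{otherwise} \end{cases}, \qquad
H_T(b, a, z) = 1
\tag{29}
$$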

This heuristic tries to guide the search towards nodes that are reachable by “promising” actions, especially when they have loose bounds on their values (possibly large error). One nice property of this approach is that expanding fringe nodes in the best solution graph is the only way to reduce the upper bound at the root node bc. This was not the case for Satia and Lave’s heuristic. However, Washington’s heuristic does not take into account the probability Pr(hz|b, ha), nor the discount factor γ^d(h), such that it may end up exploring nodes that have a very small probability of being reached in the future, and thus have little effect on the value of V *(bc). Hence, it may not explore the most relevant nodes for optimizing the decision at bc. This heuristic is appropriate when the upper bound U computed offline is sufficiently informative, such that actions with highest upper bound would also usually tend to have highest Q-value. In such cases, the algorithm will focus its search on these actions and thus should find the optimal action more quickly than if it explored all actions equally. On the other hand, because it does not consider the observation probabilities, this approach may not scale well to large observation sets, as it will not be able to focus its search towards the most relevant observations.

3.4.3 AEMS

Ross and Chaib-draa (2007) introduced a heuristic that combines the advantages of BI-POMDP, and Satia and Lave’s heuristic. It is based on a theoretical error analysis of tree search in POMDPs, presented by Ross et al. (2008).

The core idea is to expand the tree such as to reduce the error on V *(bc) as quickly as possible. This is achieved by expanding the fringe node b that contributes the most to the error on V *(bc). The exact error contribution eT (bc, b) of fringe node b on bc in tree T is defined by the following equation:

$$
e_T(b_c, b) = \gamma^{d(h_T^{b_c,b})}\, \Pr(h_T^{b_c,b} \mid b_c, \pi^*)\, (V^*(b) - L_T(b))
\tag{30}
$$

This expression requires π* and V * to be computed exactly. In practice, Ross and Chaib-draa (2007) suggest approximating the exact error (V *(b) − LT (b)) by (UT (b) − LT (b)), as was done by Satia and Lave, and Washington. They also suggest approximating π* by some policy π̂T, where π̂T (b, a) represents the probability that action a is optimal in its parent belief b, given its lower and upper bounds in tree T. In particular, Ross et al. (2008) considered two possible approximations for π*. The first one is based on a uniformity assumption on the distribution of Q-values between the lower and upper bounds, which yields:

| (31) |

where η is a normalization constant such that the sum of the probabilities π̂T (b, a) over all actions equals 1.

The second is inspired by AO* and BI-POMDP, and assumes that the action maximizing the upper bound is in fact the optimal action:

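$$
\hat{\pi}_T(b, a) = \begin{cases} 1 & \text{if } a = \operatorname{argmax}_{a' \in A} U_T(b, a') \\ 0 & \text{otherwise} \end{cases}
\tag{32}
$$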

Given the approximation π̂T of π*, the AEMS heuristic will explore the fringe node b that maximizes:

$$
\gamma^{d(h_T^{b_c,b})}\, \Pr(h_T^{b_c,b} \mid b_c, \hat{\pi}_T)\, (U_T(b) - L_T(b))
\tag{33}
$$

This can be implemented by defining HT (b), HT (b, a) and HT (b, a, z) as follows:

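$$
H_T(b) = U_T(b) - L_T(b), \qquad
H_T(b, a) = \hat{\pi}_T(b, a), \qquad
H_T(b, a, z) = \gamma \Pr(z \mid b, a)
\tag{34}
$$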

We refer to this heuristic as AEMS1 when π̂T is defined as in Equation 31, and AEMS2 when it is defined as in Equation 32.

Let us now examine how AEMS combines the advantages of both the Satia and Lave, and BI-POMDP heuristics. First, AEMS encourages exploration of nodes with loose bounds and possibly large error by considering the term UT (b) − LT (b) as in previous heuristics. Moreover, as in Satia and Lave, it focuses the exploration towards belief states that are likely to be encountered in the future. This is good for two reasons. As mentioned before, if a belief state has a low probability of occurrence in the future, it has a limited effect on the value V*(bc) and thus it is not necessary to know its value precisely. Second, exploring the highly probable belief states increases the chance that we will be able to reuse these computations in the future. Hence, AEMS should be able to deal efficiently with large observation sets, assuming the distribution over observations is concentrated over a few observations. Finally, as in BI-POMDP, AEMS favors the exploration of fringe nodes reachable by actions that seem more likely to be optimal (according to π̂T). This is useful to handle large action sets, as it focuses the search on actions that look promising. If these “promising” actions are not optimal, then this will quickly become apparent. This will work well if the best actions have the highest probabilities in π̂T. Furthermore, it is possible to define π̂T such that it automatically prunes dominated actions by ensuring that π̂T(b, a) = 0 whenever UT(b, a) < LT(b). In such cases, the heuristic will never choose to expand a fringe node reached by a dominated action.
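The differences between the three heuristics can be made concrete with a small Python sketch. It follows our reading of the prose descriptions in Sections 3.4.1 to 3.4.3: Satia and Lave weight each step by the discounted observation probability and zero out dominated actions, BI-POMDP keeps only steps whose action maximizes the upper bound and ignores probabilities and discounting, and AEMS2 combines both. AEMS1 is omitted because it depends on the specific form of Equation 31. The function names, dictionary keys and numeric values are hypothetical.

```python
GAMMA = 0.95  # illustrative discount factor


def satia_lave(step):
    # Zero if the action is dominated, otherwise the discounted observation probability.
    not_pruned = 1.0 if step["upper_q"] > step["lower_b"] else 0.0
    return not_pruned * GAMMA * step["pr_z"]


def bi_pomdp(step):
    # Only actions maximizing the upper bound count; no probability, no discount.
    return 1.0 if step["is_argmax_upper"] else 0.0


def aems2(step):
    # Action weight as in BI-POMDP, observation weight as in Satia and Lave.
    pi_hat = 1.0 if step["is_argmax_upper"] else 0.0
    return pi_hat * GAMMA * step["pr_z"]


def fringe_score(path, gap, step_factor):
    """Overall heuristic value of a fringe node: its bound gap times the step weights."""
    score = gap
    for step in path:
        score *= step_factor(step)
    return score


# One step per (belief, action, observation) on the path from the root to the fringe node.
step1 = {"upper_q": 5.0, "lower_b": 2.0, "pr_z": 0.5, "is_argmax_upper": True}
step2 = {"upper_q": 4.0, "lower_b": 3.0, "pr_z": 0.1, "is_argmax_upper": False}
scores = {
    "satia_lave": fringe_score([step1, step2], 2.0, satia_lave),
    "bi_pomdp": fringe_score([step1, step2], 2.0, bi_pomdp),
    "aems2": fringe_score([step1, step2], 2.0, aems2),
}
```

In this example only Satia and Lave's heuristic gives the fringe node a nonzero score, because the second action on the path is not dominated but does not maximize the upper bound either.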

As a final note, Ross et al. (2008) determined sufficient conditions under which the search algorithm using this heuristic is guaranteed to find an ε-optimal action within finite time. This is stated in Theorem 3.2.

Theorem 3.2

(Ross et al., 2008) Let ε > 0 and bc the current belief. If for any tree T and parent belief b in T where UT (b) − LT (b) > ε, π̂T (b, a) > 0 for a = argmaxa′∈A UT (b, a′), then the AEMS algorithm is guaranteed to find an ε-optimal action for bc within finite time.

We observe from this theorem that it is possible to define many different policies π̂T under which the AEMS heuristic is guaranteed to converge. AEMS1 and AEMS2 both satisfy this condition.

3.4.4 HSVI

A heuristic similar to AEMS2 was also used by Smith and Simmons (2004) for their offline value iteration algorithm HSVI as a way to pick the next belief point at which to perform α-vector backups. The main difference is that HSVI proceeds via a greedy search that descends the tree from the root node b0, going down towards the action that maximizes the upper bound and then the observation that maximizes Pr(z|b, a)(U(τ(b, a, z)) − L(τ(b, a, z))) at each level, until it reaches a belief b at depth d where γ^d (U(b) − L(b)) < ε. This heuristic could be used in an online heuristic search algorithm by instead stopping the greedy search process when it reaches a fringe node of the tree and then selecting this node as the one to be expanded next. In such a setting, HSVI’s heuristic would return a greedy approximation of the AEMS2 heuristic, as it may not find the fringe node which actually maximizes $\gamma^{d(h_T^{b_c,b})}\, \Pr(h_T^{b_c,b} \mid b_c, \hat{\pi}_T)\, (U_T(b) - L_T(b))$. We consider this online version of the HSVI heuristic in our empirical study (Section 4). We refer to this extension as HSVI-BFS. Note that the complexity of this greedy search is the same as finding the best fringe node via the dynamic programming process that updates $H^*_T$ and $b^*_T$ in the UpdateAncestors subroutine.
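The greedy descent used by the online variant can be sketched in Python. This is a minimal illustration, assuming the search tree is stored as nested dictionaries; the layout, the helper `leaf` and the numeric bounds are hypothetical.

```python
def hsvi_bfs_select(node):
    """Greedily descend from the root to a fringe node.

    At each belief node, follow the action with the highest upper bound, then the
    observation maximizing Pr(z|b, a) times the bound gap of the resulting belief.
    """
    while node["children"]:
        children = node["children"]
        action = max(children, key=lambda a: children[a]["upper_q"])
        branches = children[action]["branches"]  # z -> (Pr(z|b, a), child node)
        obs = max(
            branches,
            key=lambda z: branches[z][0] * (branches[z][1]["U"] - branches[z][1]["L"]),
        )
        node = branches[obs][1]
    return node


def leaf(name, lower, upper):
    return {"name": name, "L": lower, "U": upper, "children": {}}


root = {
    "name": "root", "L": 0.0, "U": 10.0,
    "children": {
        "a1": {"upper_q": 9.0,
               "branches": {"z1": (0.9, leaf("x", 1.0, 2.0)), "z2": (0.1, leaf("y", 0.0, 5.0))}},
        "a2": {"upper_q": 7.0,
               "branches": {"z1": (1.0, leaf("w", 0.0, 9.0))}},
    },
}
selected = hsvi_bfs_select(root)["name"]
```

Because each level is decided locally, the descent never reconsiders the branch under "a2", even though node "w" has the largest bound gap in the tree.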

3.5 Alternatives to Tree Search

We now present two alternative online approaches that do not proceed via a lookahead search in the belief MDP. In all online approaches presented so far, one problem is that no learning is achieved over time, i.e. every time the agent encounters the same belief, it has to recompute its policy starting from the same initial upper and lower bounds computed offline. The two online approaches presented next address this problem by presenting alternative ways of updating the initial value functions computed offline so that the performance of the agent improves over time as it stores updated values computed at each time step. However, as is argued below and in the discussion (Section 5.2), these techniques lead to other disadvantages in terms of memory consumption and/or time complexity.

3.5.1 RTDP-BEL

An alternative approach to searching in AND-OR graphs is the RTDP algorithm (Barto et al., 1995) which has been adapted to solve POMDPs by Geffner and Bonet (1998). Their algorithm, called RTDP-BEL, learns approximate values for the belief states visited by successive trials in the environment. At each belief state visited, the agent evaluates all possible actions by estimating the expected reward of taking action a in the current belief state b with an approximate Q-value equation:

$$
Q(b, a) = R_B(b, a) + \gamma \sum_{z \in Z} \Pr(z \mid b, a)\, V(\tau(b, a, z))
\tag{35}
$$

where V(b) is the value learned for the belief b.

If the belief state b has no value in the table, then it is initialized to some heuristic value. The authors suggest using the MDP approximation for the initial value of each belief state. The agent then selects the action with the greatest Q(b, a) value, and the value V(b) in the table is updated with the Q(b, a) value of this action. Finally, the agent executes the chosen action and makes a new observation, ending up in a new belief state. This process is then repeated in this new belief.

The RTDP-BEL algorithm learns a heuristic value for each belief state visited. To maintain an estimated value for each belief state in memory, it needs to discretize the belief state space to have a finite number of belief states. This also allows generalization of the value function to unseen belief states. However, it might be difficult to find the best discretization for a given problem. In practice, this algorithm needs substantial amounts of memory (greater than 1GB in some cases) to store all the learned belief state values, especially in POMDPs with large state spaces. The implementation of the RTDP-Bel algorithm is presented in Algorithm 3.7.

Algorithm 3.7.

RTDP-Bel Algorithm.

| 1: | Function OnlinePOMDPSolver() |

| Static: bc: The current belief state of the agent. | |

| V0: Initial approximate value function (computed offline). | |

| V: A hashtable of beliefs and their approximate value. | |

| k: Discretization resolution. | |

| 2: | Initialize bc to the initial belief state and V to an empty hashtable. |

| 3: | while not ExecutionTerminated() do |

| 4: | For all a ∈ A: Evaluate Q(bc, a) = RB(bc, a) + γΣz∈Z Pr(z|bc, a) V(Discretize(τ(bc, a, z), k)) |

| 5: | â ← argmaxa∈A Q(bc, a) |

| 6: | Execute best action â for bc |

| 7: | V(Discretize(bc, k)) ←Q(bc, â) |

| 8: | Perceive a new observation z |

| 9: | bc ← τ(bc, â, z) |

| 10: | end while |

The function Discretize(b, k) returns a discretized belief b′ where b′(s) = round(kb(s))/k for all states s ∈ S, and V(b) looks up the value of belief b in a hashtable. If b is not present in the hashtable, the value V0(b) is returned by V. Supported by experimental data, Geffner and Bonet (1998) suggest choosing k ∈ [10, 100], as it usually produces the best results. Notice that for a discretization resolution of k there are O((k + 1)^|S|) possible discretized beliefs. This implies that the memory storage required to maintain V is exponential in |S|, which quickly becomes intractable, even for mid-size problems. Furthermore, learning good estimates for this exponentially large number of beliefs usually requires a very large number of trials, which might be infeasible in practice. This technique can sometimes be applied in large domains when a factorized representation is available. In such cases, the belief can be maintained as a set of distributions (one for each subset of conditionally independent state variables) and the discretization applied separately to each distribution. This can greatly reduce the possible number of discretized beliefs.
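The discretized lookup can be sketched in Python. This is a minimal illustration, assuming beliefs are tuples of probabilities and V0 is any callable; the class name `BeliefValueTable` is hypothetical.

```python
def discretize(b, k):
    """Round every belief entry to the nearest multiple of 1/k.

    Python's round() sends exact halves to the nearest even integer, and the
    rounded entries need not sum to 1; both are accepted in this sketch.
    """
    return tuple(round(k * p) / k for p in b)


class BeliefValueTable:
    """Hashtable of learned values, falling back to V0 for unseen beliefs."""

    def __init__(self, v0, k):
        self.v0, self.k, self.table = v0, k, {}

    def value(self, b):
        key = discretize(b, self.k)
        return self.table[key] if key in self.table else self.v0(key)

    def update(self, b, q):
        self.table[discretize(b, self.k)] = q


values = BeliefValueTable(v0=lambda b: -1.0, k=10)
before = values.value((0.26, 0.74))  # unseen belief: falls back to V0
values.update((0.3, 0.7), 4.0)
after = values.value((0.26, 0.74))   # same cell as (0.3, 0.7) at resolution k = 10
```

The second lookup shows the generalization mentioned above: beliefs that fall in the same cell share one learned value.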

3.5.2 SOVI

A more recent online approach, called SOVI (Shani et al., 2005), extends HSVI (Smith & Simmons, 2004, 2005) into an online value iteration algorithm. This approach maintains a priority queue of the belief states encountered during the execution and proceeds by doing α-vector updates for the current belief state and the k belief states with highest priority at each time step. The priority of a belief state is computed according to how much the value function has changed at its successor belief states since the last time it was updated. Its authors also propose other improvements to the HSVI algorithm to improve scalability, such as a more efficient α-vector pruning technique and avoiding the use of linear programs to update and evaluate the upper bound. The main drawback of this approach is that it is hardly applicable in large environments with short real-time constraints, since it needs to perform a value iteration update with α-vectors online, and this can have very high complexity as the number of α-vectors representing the value function increases (i.e. O(k|S||A||Z|(|S| + |Γt−1|)) to compute Γt).

3.6 Summary of Online POMDP Algorithms

In summary, we see that most online POMDP approaches are based on lookahead search. To improve scalability, different techniques are used: branch-and-bound pruning, search heuristics, and Monte Carlo sampling. These techniques reduce the complexity from different angles. Branch-and-bound pruning lowers the complexity related to the action space size. Monte Carlo sampling has been used to lower the complexity related to the observation space size, and could also potentially be used to reduce the complexity related to the action space size (by sampling a subset of actions). Search heuristics lower the complexity related to actions and observations by orienting the search towards the most relevant actions and observations. When appropriate, factored POMDP representations can be used to reduce the complexity related to state. A summary of the different properties of each online algorithm is presented in Table 1.

Table 1.

Properties of various online methods.

| Algorithm | ε-optimal | Anytime | Branch & Bound | Monte Carlo | Heuristic | Learning |
|---|---|---|---|---|---|---|
| RTBSS | yes | no | yes | no | no | no |
| McAllester | high probability | no | no | yes | no | no |
| Rollout | no | no | no | yes | no | no |
| Satia and Lave | yes | yes | yes | no | yes | no |
| Washington | acyclic graph | yes | implicit | no | yes | no |
| AEMS | yes | yes | implicit | no | yes | no |
| HSVI-BFS | yes | yes | implicit | no | yes | no |
| RTDP-Bel | no | no | no | no | no | yes |
| SOVI | yes | yes | no | no | no | yes |
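
To make the branching argument of this summary concrete, the following sketch counts the belief nodes of an exhaustive lookahead tree, under the simplifying assumption that every action and every observation is expanded at every level (no pruning, no terminal beliefs). The function name `lookahead_belief_nodes` is hypothetical and the numbers are illustrative only, not measurements from the experiments.

```python
def lookahead_belief_nodes(num_actions: int, num_observations: int, depth: int) -> int:
    """Count belief nodes in a full lookahead tree of the given depth.

    Each belief node has num_actions action children, and each action has
    num_observations successor beliefs, so level d holds
    (num_actions * num_observations) ** d belief nodes.
    """
    branching = num_actions * num_observations
    return sum(branching ** d for d in range(depth + 1))


# Full expansion with 5 actions and 30 observations, depth 2.
full = lookahead_belief_nodes(5, 30, 2)

# Same depth when only 3 observations are sampled per action
# (the Monte Carlo idea of reducing the observation branching).
sampled = lookahead_belief_nodes(5, 3, 2)
```

Under these assumptions, sampling a few observations per action shrinks the tree by orders of magnitude, whereas pruning and search heuristics reduce the number of action and observation branches that are actually expanded.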

4. Empirical Study

In this section, we compare several online approaches in two domains found in the POMDP literature: Tag (Pineau et al., 2003) and RockSample (Smith & Simmons, 2004). We consider a modified version of RockSample, called FieldVisionRockSample (Ross & Chaib-draa, 2007), that has a larger observation space than the original RockSample. This environment is introduced as a means to test and compare the different algorithms in environments with large observation spaces.

4.1 Methodology

For each environment, we first compare the real-time performance of the different heuristics presented in Section 3.4 by limiting their planning time to 1 second per action. All heuristics were given the same lower and upper bounds so that their results would be comparable. The objective here is to evaluate which search heuristic is most efficient in different types of environments. To this end, we have implemented the different search heuristics (Satia and Lave, BI-POMDP, HSVI-BFS and AEMS) in the same best-first search algorithm, so that we can directly measure the efficiency of the heuristic itself. Results were also obtained for different lower bounds (Blind and PBVI) to verify how this choice affects the heuristic’s efficiency. Finally, we compare how online and offline times affect the performance of each approach. Except where stated otherwise, all experiments were run on an Intel Xeon 2.4 GHz with 4 GB of RAM; processes were limited to 1 GB of RAM.

4.1.1 Metrics to compare online approaches

We compare performance first and foremost in terms of average discounted return at execution time. However, what we really seek with online approaches is to guarantee better solution quality than that provided by the original bounds. In other words, we seek to reduce the error of the original bounds as much as possible. This suggests that a good metric for the efficiency of online algorithms is to compare the improvement in terms of the error bounds at the current belief before and after the online search. Hence, we define the error bound reduction percentage to be:

$$\mathrm{EBR}(b) = 1 - \frac{U_T(b) - L_T(b)}{U(b) - L(b)} \qquad (36)$$

where UT(b), LT(b), U(b) and L(b) are defined as in Section 3.2. The best online algorithm should provide the highest error bound reduction percentage, given the same initial bounds and real-time constraint.

Because the EBR metric does not necessarily reflect true error reduction, we also compare the return guarantees provided by each algorithm, i.e. the lower bounds on the expected return provided by the computed policies for the current belief. Because improvement of the lower bound compared to the initial lower bound computed offline is a direct indicator of true error reduction, the best online algorithm should provide the greatest lower bound improvement at the current belief, given the same initial bounds and real-time constraint. Formally, we define the lower bound improvement to be:

$$\mathrm{LBI}(b) = L_T(b) - L(b) \qquad (37)$$

In our experiments, both the EBR and LBI metrics are evaluated at each time step for the current belief. We are interested in seeing which approach provides the highest EBR and LBI on average.
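
As a minimal sketch of how these two metrics might be computed at a single belief, the code below assumes that EBR is one minus the ratio of the bound gap after the search to the initial bound gap, and that LBI is the difference between the lower bound after the search and the initial lower bound. The function names and the example numbers are hypothetical.

```python
def error_bound_reduction(u_t: float, l_t: float, u: float, l: float) -> float:
    """EBR at a belief: 1 - (U_T - L_T) / (U - L), as a fraction in [0, 1].

    u_t, l_t: upper and lower bounds at the current belief after the search.
    u, l:     initial (offline) upper and lower bounds at the same belief.
    """
    initial_gap = u - l
    if initial_gap <= 0:
        raise ValueError("initial bounds must satisfy U(b) > L(b)")
    return 1.0 - (u_t - l_t) / initial_gap


def lower_bound_improvement(l_t: float, l: float) -> float:
    """LBI at a belief: improvement of the lower bound over its initial value."""
    return l_t - l


# Illustrative numbers: the search tightens the bounds from [2, 10] to [6, 8].
ebr = error_bound_reduction(u_t=8.0, l_t=6.0, u=10.0, l=2.0)
lbi = lower_bound_improvement(l_t=6.0, l=2.0)
```

In this example the gap shrinks from 8 to 2, so three quarters of the initial error bound is removed; averaging such values over all time steps of a run would give the per-step figures of the kind reported in the tables.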

We also consider other metrics pertaining to complexity and efficiency. In particular, we report the average number of belief nodes maintained in the search tree. Methods that have lower complexity will generally be able to maintain bigger trees, but the results will show that this does not always relate to higher error bound reduction and returns. We will also measure the efficiency of reusing part of the search tree by recording the percentage of belief nodes that were reused from one time step to the next.

4.2 Tag

Tag was initially introduced by Pineau et al. (2003). This environment has also been used more recently in the work of several authors (Poupart & Boutilier, 2003; Vlassis & Spaan, 2004; Pineau, 2004; Spaan & Vlassis, 2004; Smith & Simmons, 2004; Braziunas & Boutilier, 2004; Spaan & Vlassis, 2005; Smith & Simmons, 2005). For this environment, an approximate POMDP algorithm is necessary because of its large size (870 states, 5 actions and 30 observations). The Tag environment consists of an agent that has to catch (Tag) another agent while moving in a 29-cell grid domain. The reader is referred to the work of Pineau et al. (2003) for a full description of the domain. Note that for all results presented below, the belief state is represented in factored form. The domain is such that an exact factorization is possible.

To obtain results in Tag, we run each algorithm in each starting configuration 5 times (i.e. 5 runs for each of the 841 different starting joint positions, excluding the 29 terminal states). The initial belief state is the same for all runs and consists of a uniform distribution over the possible joint agent positions.

Table 2 compares the different heuristics by presenting 95% confidence intervals on the average discounted return per run (Return), the average error bound reduction percentage per time step (EBR), the average lower bound improvement per time step (LBI), the average number of belief nodes in the search tree per time step (Belief Nodes), the average percentage of belief nodes reused per time step (Nodes Reused), and the average online planning time used per time step (Online Time). In all cases, we use the FIB upper bound and the Blind lower bound. Note that the average online time is slightly lower than 1 second per step because algorithms sometimes find ε-optimal solutions in less than a second.

Table 2.

Comparison of different search heuristics on the Tag environment using the Blind policy as a lower bound.

| Heuristic | Return | EBR (%) | LBI | Belief Nodes | Nodes Reused (%) | Online Time (ms) |
|---|---|---|---|---|---|---|
| RTBSS(5) | −10.31 ± 0.22 | 22.3 ± 0.4 | 3.03 ± 0.07 | 45066 ± 701 | 0 | 580 ± 9 |
| Satia and Lave | −8.35 ± 0.18 | 22.9 ± 0.2 | 2.47 ± 0.04 | 36908 ± 209 | 10.0 ± 0.2 | 856 ± 4 |
| AEMS1 | −6.73 ± 0.15 | 49.0 ± 0.3 | 3.92 ± 0.03 | 43693 ± 314 | 25.1 ± 0.3 | 814 ± 4 |
| HSVI-BFS | −6.22 ± 0.19 | 75.7 ± 0.4 | 7.69 ± 0.06 | 64870 ± 947 | 54.1 ± 0.7 | 673 ± 5 |
| BI-POMDP | −6.22 ± 0.15 | 76.2 ± 0.5 | 7.81 ± 0.06 | 79508 ± 1000 | 54.6 ± 0.6 | 622 ± 4 |
| AEMS2 | −6.19 ± 0.15 | 76.3 ± 0.5 | 7.81 ± 0.06 | 80250 ± 1018 | 54.8 ± 0.6 | 623 ± 4 |

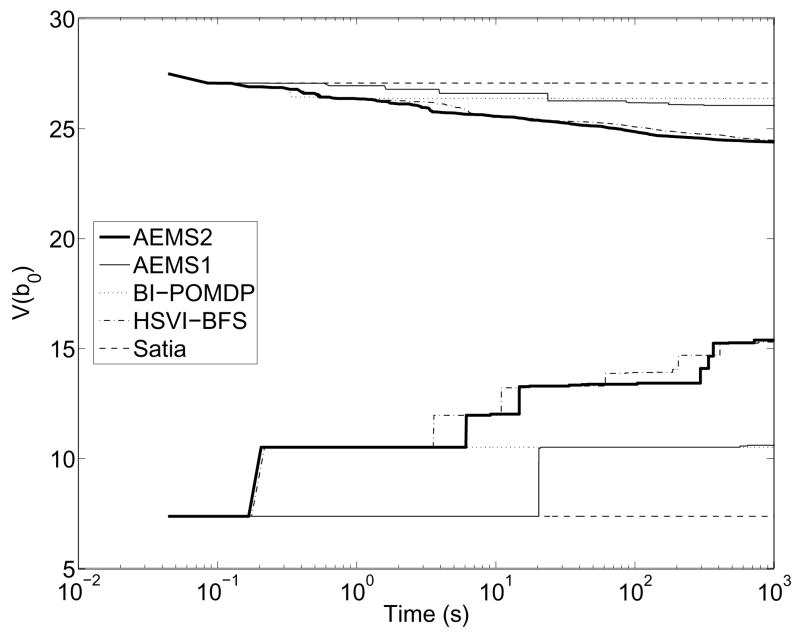

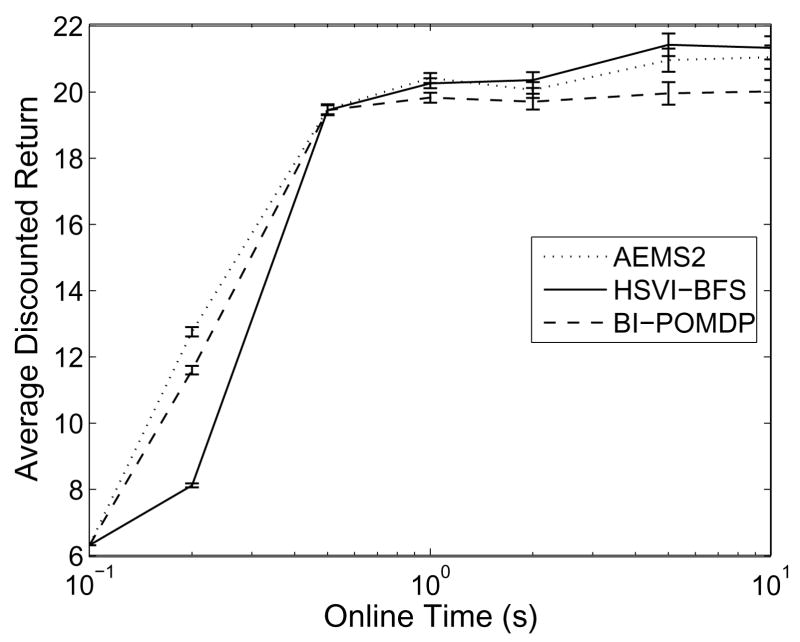

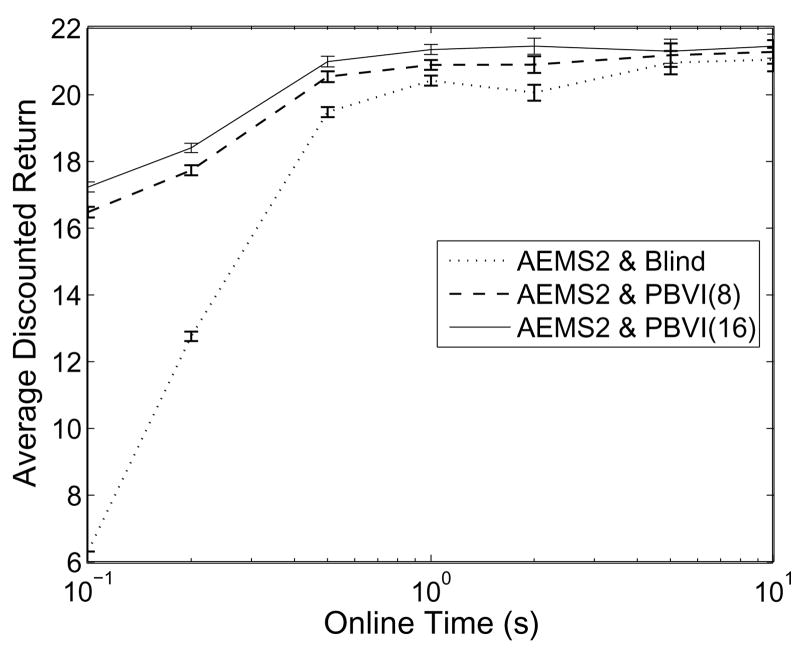

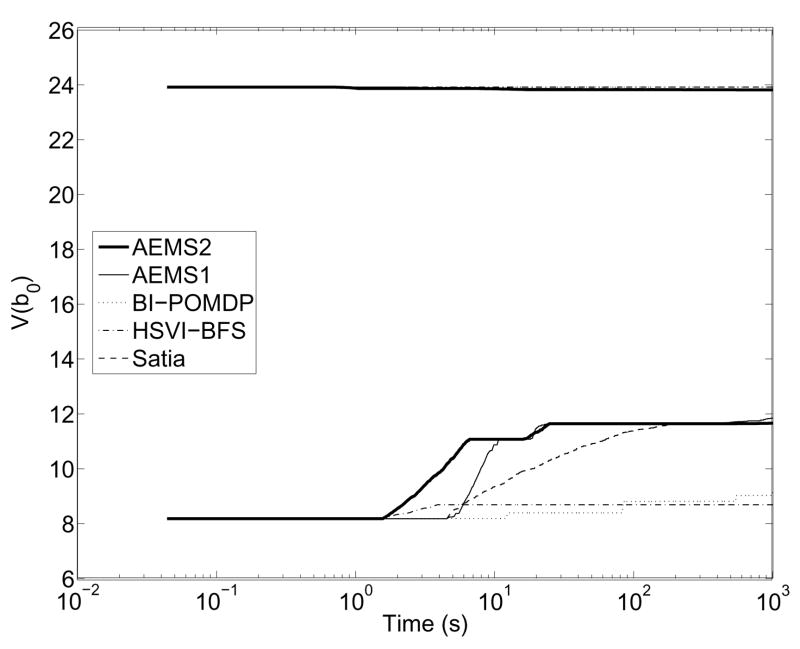

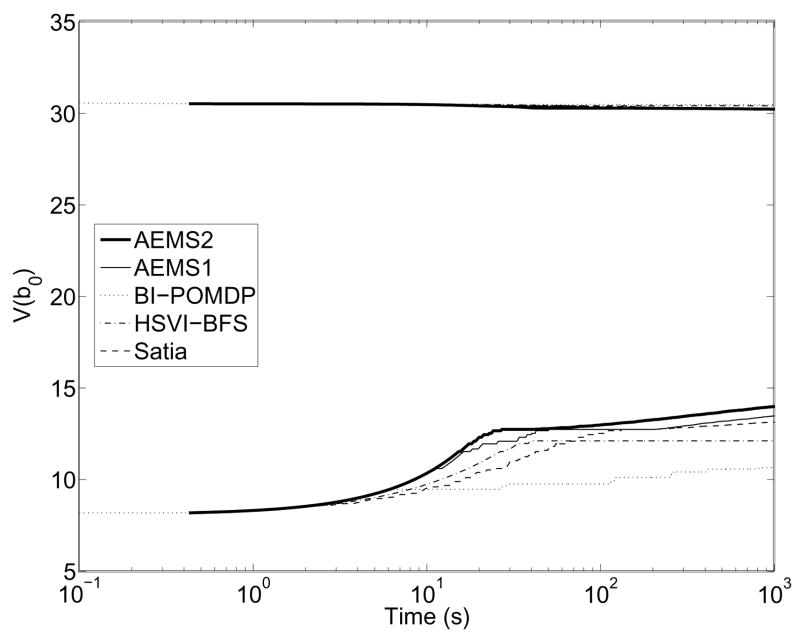

We observe that the efficiency of HSVI-BFS, BI-POMDP and AEMS2 differs only slightly in this environment and that they outperform the three other heuristics: RTBSS, Satia and Lave, and AEMS1. The difference can be explained by the fact that the latter three methods do not restrict the search to the best solution graph. As a consequence, they explore many irrelevant nodes, as shown by their lower error bound reduction percentage, lower bound improvement, and percentage of nodes reused. This poor reuse percentage explains why Satia and Lave and AEMS1 were limited to a lower number of belief nodes in their search tree, compared to the other methods, which reached averages around 70K. The results of HSVI-BFS, BI-POMDP and AEMS2 do not differ much here because these three heuristics only differ in the way they choose the observations to explore in the search. Since only two observations are possible after the first action and observation, and one of these observations leads directly to a terminal belief state, there was very little room for the heuristics to differ significantly. Due to this limitation of the Tag domain, we now compare these online algorithms in a larger and more complex domain: RockSample.

4.3 RockSample

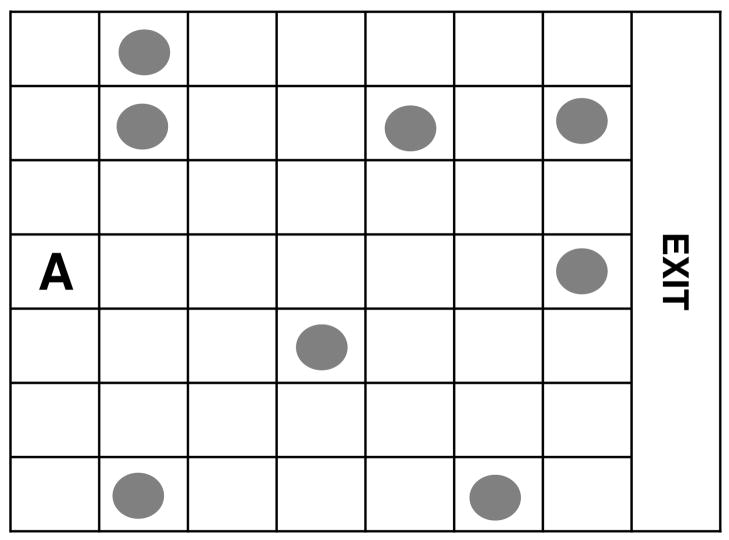

The RockSample problem was originally presented by Smith and Simmons (2004). In this domain, an agent has to explore the environment and sample some rocks (see Figure 3), similarly to what a real robot would do on the planet Mars. The agent receives rewards by sampling rocks and by leaving the environment (at the extreme right of the environment). A rock can have a scientific value or not, and the agent has to sample only good rocks.

Figure 3.

RockSample[7,8].

We define RockSample[n, k] as an instance of the RockSample problem with an n × n grid and k rocks. A state is characterized by k + 1 variables: XP, which defines the position of the robot and can take values {(1, 1), (1, 2),…, (n, n)}, and k variables, $X^R_1$ through $X^R_k$, representing each rock, which can take values {Good, Bad}.

The agent can perform k + 5 actions: {North, South, East, West, Sample, Check1,…, Checkk}. The four motion actions are deterministic. The Sample action samples the rock at the agent’s current location. Each Checki action returns a noisy observation from {Good, Bad} for rock i.

The belief state is represented in factored form by the known position and a set of k probabilities, namely the probability of each rock being good. Since the observation of a rock state is independent of the other rock states (it only depends on the known robot position), the complexity of computing Pr(z|b, a) and τ(b, a, z) is greatly reduced. Effectively, the computation of Pr(z|b, Checki) reduces to: $\Pr(z \mid b, \mathit{Check}_i) = \Pr(\mathit{Accurate} \mid X_P, \mathit{Check}_i)\,\Pr(X^R_i = z) + (1 - \Pr(\mathit{Accurate} \mid X_P, \mathit{Check}_i))\,(1 - \Pr(X^R_i = z))$. The probability that the sensor is accurate on rock i is $\Pr(\mathit{Accurate} \mid X_P, \mathit{Check}_i) = (1 + \eta(X_P, i))/2$, where $\eta(X_P, i) = 2^{-d(X_P, i)/d_0}$, $d(X_P, i)$ is the Euclidean distance between position XP and the position of rock i, and $d_0$ is a constant specifying the half-efficiency distance. $\Pr(X^R_i = z)$, the probability that rock i has value z, is obtained directly from the probability (stored in b) that rock i is good. Similarly, τ(b, a, z) can be computed quite easily, as the move actions deterministically affect variable XP, and a Checki action only changes the probability associated with rock i according to the sensor’s accuracy.
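
The factored computation described above can be sketched as follows. This is an illustration under stated assumptions rather than the authors' code: the sensor accuracy is assumed to be (1 + η)/2 with η = 2^(−d/d0), as in the original RockSample formulation, the belief update for a Check action is assumed to be a plain Bayes update of the checked rock's probability, and all function names are hypothetical.

```python
import math
from typing import Tuple

Position = Tuple[float, float]


def sensor_accuracy(robot_pos: Position, rock_pos: Position, d0: float) -> float:
    """Assumed accuracy (1 + eta) / 2 with eta = 2 ** (-distance / d0)."""
    distance = math.dist(robot_pos, rock_pos)  # Euclidean distance
    eta = 2.0 ** (-distance / d0)
    return (1.0 + eta) / 2.0


def check_observation_prob(p_good: float, accuracy: float, z_good: bool) -> float:
    """Pr(z | b, Check_i) using only rock i's probability of being good."""
    p_z = p_good if z_good else 1.0 - p_good  # Pr(rock i has value z)
    return accuracy * p_z + (1.0 - accuracy) * (1.0 - p_z)


def update_rock_belief(p_good: float, accuracy: float, z_good: bool) -> float:
    """Posterior probability that rock i is good after observing z (Bayes rule)."""
    likelihood_good = accuracy if z_good else 1.0 - accuracy
    likelihood_bad = 1.0 - accuracy if z_good else accuracy
    numerator = likelihood_good * p_good
    evidence = numerator + likelihood_bad * (1.0 - p_good)
    if evidence == 0.0:
        raise ValueError("observation has zero probability under this belief")
    return numerator / evidence


# Robot at distance 5 from the rock with half-efficiency distance d0 = 5.
acc = sensor_accuracy((0.0, 0.0), (3.0, 4.0), 5.0)
posterior = update_rock_belief(0.5, acc, z_good=True)
```

Because only the probability of the checked rock changes, both the observation probability and the belief update cost constant time per Check action, instead of time proportional to the size of the joint state space.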