Abstract

The brain basis of bilinguals’ ability to use two languages at the same time has been a hotly debated topic. On the one hand, behavioral research has suggested that bilingual dual language use involves complex and highly principled linguistic processes. On the other hand, brain-imaging research has revealed that bilingual language switching involves neural activations in brain areas dedicated to general executive functions not specific to language processing, such as general task maintenance. Here we address the involvement of language-specific versus cognitive-general brain mechanisms for bilingual language processing by studying a unique population and using an innovative brain-imaging technology: bimodal bilinguals proficient in signed and spoken languages and functional Near-Infrared Spectroscopy (fNIRS; Hitachi ETG-4000), which, like fMRI, measures hemodynamic change, but which also has the advantage of permitting movement for unconstrained speech and sign production. Participant groups included (i) hearing ASL-English bilinguals, (ii) ASL monolinguals, and (iii) English monolinguals. Imaging tasks included picture naming in “Monolingual mode” (using one language at a time) and in “Bilingual mode” (using both languages either simultaneously or in rapid alternation). Behavioral results revealed that accuracy was similar among groups and conditions. By contrast, neuroimaging results revealed that bilinguals in Bilingual mode showed greater signal intensity within posterior temporal regions (“Wernicke’s area”) than in Monolingual mode. Significance: Bilinguals’ ability to use two languages effortlessly and without confusion involves the use of language-specific posterior temporal brain regions. This research with both fNIRS and bimodal bilinguals sheds new light on the extent and variability of brain tissue that underlies language processing, and addresses the tantalizing question of how language modality, sign versus speech, affects language representation in the brain.

Introduction

The ability to learn multiple languages is not only useful, it is also a marvel of the human language capacity that taunts the scientific mind. While it is remarkable for an individual to achieve proficiency in more than one language, it is especially remarkable to be able to use multiple languages within one conversation without confusing them. Scientific understanding of the human language capacity is incomplete without an in-depth understanding of how the bilingual brain enables the use of multiple languages with ease across various language contexts. Thus, one of the most prominent research questions in neurolinguistics, psycholinguistics, and cognitive neuroscience investigates the brain mechanisms that allow healthy bilinguals to use their two languages without confusion and in a highly linguistically principled manner (Abutalebi et al., 2008; Abutalebi, Cappa & Perani, 2001; Abutalebi & Green, 2007; Christoffels, Firk, & Schiller, 2007; Crinion et al., 2006; Dehaene et al., 1997; Dijkstra & Van Heuven, 2002; Fabbro, 2001; Grosjean, 1997; Hernandez, Dapretto, Mazziotta, & Bookheimer, 2001; Kim, Relkin, Lee & Hirsch, 1997; Kovelman, Shalinsky, Berens, & Petitto, 2008; Paradis, 1977, 1997; Perani, Abutalebi, et al., 2003; Perani, 2005; Perani et al., 1996; Perani et al., 1998; Price, Green, & von Studnitz, 1999; Rodriguez-Fornells, Rotte, Heinze, Noesselt, & Muente, 2002; van Heuven, Schriefers, Dijkstra, & Hagoort, 2008; Venkatraman, Siong, Chee, & Ansari, 2006).

As suggested by François Grosjean (1997), bilinguals typically find themselves either in a “Monolingual mode,” using one language at a time, or in a “Bilingual mode,” using two languages in rapid alternation. Being in a Bilingual mode can at times lead to mixed-language productions, otherwise known as “code-switching,” and both the nature of this fascinating process and the underlying mechanisms that give rise to it have especially attracted the attention of scientists.

Intra-utterance use of two languages has been found to be a complex, rule-governed, language-specific behavior that takes into account the structure and meaning of both of the bilingual’s languages, and this is surprisingly the case even in the youngest bilinguals (Cantone & Muller, 2005; Grosjean & Miller, 1994; Holowka, Brosseau-Lapre, & Petitto, 2002; Lanza, 1992; MacSwan, 2005; Paradis, Nicoladis, & Genesee, 2000; Petitto & Holowka, 2002; Petitto, Katerelos, Levy, Gauna, Tetreault, & Ferraro, 2001; Petitto & Kovelman, 2003; Poplack, 1980). For instance, in French, adjectives typically follow the noun that they modify, whereas, in English, adjectives precede the noun (e.g., “table rouge” in French versus “red table” in English). Even 3-year-old French-English bilingual children typically do not mix, alter, or insert new lexical items into each respective language’s noun-adjective canonical patterning, and, thus, avoid making a significant grammatical violation in either of their two languages (Petitto et al., 2001).

One of the most common types of intra-utterance dual language use, however, occurs at the lexical level of language organization. Here, a bilingual may place an “open-class” lexical item (noun, verb, adverb, adjective or interjection) from language A into an utterance or phrase in language B (Poplack, 1980). For instance, a French-English bilingual might say, “Yesterday we ate crème glacée,” (“ice cream” in French spoken in Quebec, Canada). Thus, bilinguals must know how to navigate between their respective languages’ sets of lexical items.

Current theories of bilingual lexico-semantic representation have assumed the existence of a combined lexical store, in which each lexical item is connected to a number of semantic features in a common semantic store (Ameel, Storms, Malt, & Sloman, 2005; Dijkstra & Van Heuven, 2002; Dijkstra, Van Heuven, & Grainger, 1998; Green, 1998; Kroll & Sunderman, 2003; Monsell, Matthews, & Miller, 1992; Von Studnitz & Green, 2002). Words in two languages that share overlapping semantic representations within the common semantic store are called “translation equivalents” (e.g., “mother” in English and “mère” in French). The idea that there is a common store is supported, for instance, by the fact that bilinguals can be semantically primed in one language to produce a word in the other language (Dijkstra & Van Heuven, 2002; Kerkhofs, Dijkstra, Chwilla, & de Bruijn, 2006; Kroll & Sunderman, 2003). Moreover, behavioral and imaging research has shown that bilinguals are likely to have both of their languages active to some extent at all times (e.g., studies in word priming, and comprehension of cognates, homophones and homographs, Doctor & Klein, 1992; Kerkhofs, et al., 2006; van Hell & De Groot, 1998; van Heuven et al., 2008).

How do bilinguals successfully operate in bilingual mode without confusing their languages, with respect to the semantic and grammatical content of their mixed utterances, and to their language selection? Contemporary research on the underlying brain mechanisms that make possible bilingual dual language use leaves many questions. On the one hand, bilingual language use has been said to be a highly principled language process which involves activation of both languages in a linguistically-based (rule-governed) manner (Grosjean, 1997; MacSwan, 2005; Petitto et al., 2001). On the other hand, bilingual language also appears to involve cognitive control and allocation of attention, with current research suggesting that bilinguals’ ability to use their languages is akin to many other types of general cognitive processes (Abutalebi, 2008; Abutalebi & Green, 2007; Bialystok, 2001; Crinion et al., 2006; Green, 1998; Meuter & Allport, 1999; Thomas & Allport, 2000).

The ideas that language-specific mechanisms and cognitive-general mechanisms are involved in dual language use are not necessarily in conflict with each other. In fact, both types of processing seem crucial. The bilingual must preserve the overall linguistic integrity of the utterance while also rapidly selecting one of the competing linguistic representations, selecting the appropriate phonological encoding for that representation, and finally sending the correct articulation-motor command.

It is noteworthy that the overwhelming majority of brain imaging studies with bilinguals, including our own, support the idea that cognitive-general mechanisms are heavily involved in dual language use in Bilingual mode. In their recent theoretical overviews of the bilingual behavioral, imaging and lesion literature, Abutalebi & Green (2007) and Abutalebi (2008) outline the network of brain regions that has been consistently shown to participate in dual language selection. Prefrontal cortex has been shown to participate in bilingual language use during both language production and comprehension (e.g., Hernandez et al., 2000; 2001; Kovelman, Shalinsky, Berens & Petitto, 2008; Rodriguez-Fornells et al., 2002) and, importantly, prefrontal cortex typically participates in other tasks that require complex task monitoring and response selection as well (Wager, Jonides, & Reading, 2004). Anterior cingulate cortex (ACC), which typically plays a role in selective attention, error monitoring and interference resolution (Nee, Wager, & Jonides, 2007), also participates in the language selection process (e.g., Abutalebi et al., 2008; Wang, Xue, Chen, Xue, & Dong, 2007). Parietal regions, including supramarginal gyrus, and subcortical regions, particularly the caudate nucleus, are also thought to be key to bilingual dual language use, as shown by imaging studies with healthy bilinguals (Abutalebi et al., 2007; 2008; Crinion et al., 2006; Green, Crinion, & Price, 2006; Khateb et al., 2007), as well as by cases of pathological language switching following caudate lesions (Abutalebi, Miozzo, & Cappa, 2000; Mariën, Abutalebi, Engelborghs, & De Deyn, 2005). Most likely, it is the complex interplay between these regions that constitutes the cognitive basis for bilingual language use (Abutalebi, 2008).

But can bilingual language use be dependent on cognitive-general mechanisms alone? Only a handful of studies have suggested that language-dedicated brain regions (including classic Broca’s and Wernicke’s areas) might also show a modulation in activity as a function of dual language use (Abutalebi et al., 2007; Chee, Soon, & Lee, 2003). However, these studies are limited to examining receptive language.

How do we bring into greater focus the function of language-dedicated brain mechanisms during dual language use? During dual language use, unimodal (speech-speech, e.g., English-French) bilinguals must inhibit competing alternatives in the same modality. It is possible that this competition is reduced in bimodal (sign language-speech) bilinguals, where competition for language articulation and language comprehension/perception is less direct. As complex as it might be to use both the hands and mouth simultaneously or in rapid alternation during Bilingual mode, these two articulation modalities do not physically preclude each other (consider the fact that people can gesture while they speak). Might it be the case that, in unimodal bilinguals, activations in language-specific regions are occluded by the overwhelmingly high activations in cognitive-general regions, which might result in part from the high attention-sensory/motor costs of integrating and differentiating two languages within one modality (thus, possibly driving high statistical thresholds for selected activations)? Bimodal bilinguals who know a signed and a spoken language (hence “bimodal bilinguals”) therefore represent an excellent case for studying the underlying mechanisms of dual language use, particularly on the language-specific level, as their language production and comprehension faculties might experience reduced levels of interference.

Prefrontal activations in particular have been consistently observed during blocks of sustained dual language production and comprehension (e.g., Hernandez et al., 2000; Kovelman, Shalinsky, Berens, & Petitto, 2008). Cognitive-general mechanisms operate in a semi-hierarchical arrangement, where prefrontal cortex most likely represents the effortful “top-down” control, while ACC, parietal and subcortical regions are involved in more automated aspects of attention allocation. Unimodal or bimodal, all bilinguals must choose the appropriate language at any given moment. Importantly, however, for bimodal bilinguals, the costs of selection errors are reduced: if the competition is not perfectly resolved, both languages can “come out” simultaneously, which does occasionally happen even when hearing signers interact with non-signers (Emmorey et al., 2004; Petitto et al., 2001). Given the reduced cost of selection errors, bimodal bilinguals might not devote as many resources to top-down monitoring as unimodal bilinguals. This population, therefore, offers a clear window into language-related processing mechanisms.

Studying bimodal bilinguals who are proficient in signed and spoken languages is a powerful tool for revealing the underlying principles of bilingual acquisition, processing, and code-switching (cf. Petitto et al., 2001; Emmorey et al., 2004). Bimodal bilinguals, child and adult, commonly produce intra-utterance code-switching much like unimodal bilinguals when in Bilingual mode. Unlike unimodal bilinguals, they take full advantage of their bimodality and commonly produce open-class words in both languages simultaneously. During simultaneous mixing (also called “code-blending” rather than “code-switching”; Emmorey et al., 2004), the two words in different languages are typically semantically congruent (i.e., they are similar or identical in their meaning), revealing that even in the youngest bilinguals, their two languages come together in concert rather than in unprincipled confusion (Emmorey et al., 2004; see especially Petitto et al., 2001; Petitto & Holowka, 2002; Petitto & Kovelman, 2003).

If the ability to use two languages in the same context is uniquely a general cognitive ability, then during Bilingual mode, even sign-speech bimodal bilinguals should only show changes in activation in cognitive-general regions. In particular, sustained dual-language cognitive effort should result in high activations in the prefrontal regions (Buckner et al., 1996). Alternatively, if language-specific mechanisms also play a key role in dual language use, once the competition from two spoken languages using one mouth is reduced, sign-speech bimodal bilinguals in Bilingual mode might show increased recruitment of classic language brain tissue, such as left inferior frontal gyrus (particularly in BA 44/45, classic “Broca’s area”) and left superior temporal gyrus (particularly in the posterior part of left STG, classic “Wernicke’s area”).

The present study represents a principled attempt to reconcile decades of behavioral and imaging work by investigating whether language-specific mechanisms, as has been shown for cognitive-general mechanisms, play a role in dual language use. To investigate this question, we use a novel technology, functional Near-Infrared Spectroscopy (fNIRS) brain-imaging. Like fMRI, which measures the blood oxygen level-dependent (BOLD) signal, fNIRS measures changes in the brain’s blood oxygenation while a person is performing specific cognitive tasks. Due to the nature of NIRS imaging, we do not measure the activation in subcortical and ACC regions; however, we do not question whether or not these regions are involved in dual language use, as we believe our colleagues have provided ample evidence to that effect (cf. Abutalebi & Green, 2007). Dual language use most likely involves a complex network of cortical and subcortical regions, which are both language-dedicated and cognitive-general. This study focuses on examining how cognitive-general and language-specific cortical regions participate in dual language use. A key advantage over fMRI for the purposes of our language study is that fNIRS places minimal restriction on body motion and is nearly completely silent (see the Methods section for further details on the spatial and temporal resolution, as well as other technical characteristics of fNIRS).

Here we use fNIRS brain-imaging technology to evaluate sign-speech bimodal bilinguals during overt picture-naming. Monolinguals were tested in their native language (English or ASL). Bimodal ASL-English bilinguals were tested in each of their languages separately, as well as in simultaneous (naming pictures in ASL and in English at the same time) and alternating (naming pictures either in ASL or in English in rapid alternation) Bilingual modes. The study included English and ASL monolinguals as control groups, in order to ensure that bilingual participants were linguistically and neurologically comparable to their monolingual counterparts in each language.

Methods

Participants

A total of 32 adults participated in this study (5 hearing bimodal bilinguals, 20 hearing monolinguals, 7 deaf monolinguals; given the rarity of our deaf and bilingual populations, we were indeed fortunate to achieve such samples). All participants received compensation for their time. The treatment of all participants and all experimental procedures were in full compliance with the ethical guidelines of NIH and the university Ethical Review Board.

Bilingual Participants

Five hearing right-handed American Sign Language (ASL)-English bilinguals participated in this experiment (see Table 1). All bilingual participants were children of deaf adults (CODAs) and had high, monolingual-like, language proficiency in each of their two languages, as was established with the participant language assessment methods described below. All participating individuals achieved the required accuracy of at least 80%. The bilingual participants received their intensive dual-language exposure to both ASL and English at home within the first five years of life, as early dual language exposure is key to comparable linguistic processing in bilinguals (Kovelman, Baker & Petitto, 2008; Kovelman, Baker & Petitto, in press; Perani, Abutalebi et al., 2003; Wartenburger, Heekeren, Abutalebi, Cappa, Villringer, & Perani, 2003). All bilingual participants used both English and ASL consistently from the first onset of bilingual exposure to the present, had at least one deaf parent who used ASL, and learned to read in English between the ages of 5–7. Bilingual participants had no exposure to a language other than English and ASL until after age 10 and only in the format of a foreign language class.

Table 1.

Descriptive information and language background for participant groups.

| Group | Mean age (range) | Age of language exposure (Eng) | Age of language exposure (ASL) | Parents’ native language(s) | Mean performance on language proficiency tasks (Eng) | Mean performance on language proficiency tasks (ASL) |
|---|---|---|---|---|---|---|
| English monolinguals (n = 20) | 19 (18–25) | Birth | | English only | 95.67% | |
| ASL monolinguals (n = 7) | 26 (19–42) | | Birth–4 | ASL, English, both | | 100% |
| ASL-English bilinguals (n = 5) | 24 (16–32) | Birth | Birth | ASL, English, both | 96.4% | 97.95% |

Monolingual Participants

Twenty hearing right-handed English monolinguals and seven deaf right-handed ASL monolinguals participated in this experiment (see Table 1). All monolingual participants completed language assessment tasks in English or in ASL with the required accuracy of 80% and above. English monolinguals came from monolingual English families and had no other language exposure until after age 10 and only in the format of a foreign language class. ASL participants were profoundly deaf, with five of the seven being born deaf (congenitally deaf), and the remaining two deaf participants having lost their hearing by age 12 months. Six of the deaf participants were exposed to ASL from birth, while one was first exposed to ASL at age four. All ASL monolinguals studied English only in a school/class format and indicated that they experienced difficulty understanding English on our extensive “Bilingual Language Background & Use Questionnaire” (BLBU). Previous research has established that adult deaf individuals typically achieve the equivalent of 4th grade level reading comprehension in English (e.g., Traxler, 2000). All our monolingual participants had taken “second language” classes in school (including English, Spanish, French, German and other languages). Their proficiency in the variety of second languages learned at school was assessed via self-report in the BLBU questionnaire.

Language Assessments

Bilingual Language Background & Use Questionnaire

All participants were first administered an extensive Bilingual Language Background and Use Questionnaire that has been standardized and used across multiple spoken and signed language populations (Penhune, Cismaru, Dorsaint-Pierre, Petitto, & Zatorre, 2003; Petitto, Zatorre et al., 2000; Petitto et al., 2001). This questionnaire enabled us to achieve confidence both in our “bilingual” and our “monolingual” group assignments and in their early-exposed, highly-proficient status in their language(s). This tool permitted us to determine the age of first bilingual language exposure, language(s) used in the home by all caretakers and family members/friends, language(s) used during/throughout schooling, language(s) of reading instruction, cultural self-identification and language maintenance (language(s) of the community in early life and language(s) used throughout development up until the present).

Language Competence/Expressive Proficiency (LCEP)

This task was administered to assess participants’ language production in each of their languages, and has been used effectively to measure both signed and spoken language proficiency and competency (e.g., Kegl, 1994; Kovelman, Baker & Petitto, 2008, in press; Kovelman, Shalinsky, Berens, & Petitto, 2008; Petitto et al., 2000; Senghas, 1994; Senghas & Kegl, 1994). The task includes two one-minute cartoons containing a series of events that the participant watches and then describes to an experimenter. Monolingual participants described each of the two cartoons either in English or in ASL (as relevant); bilingual participants described one of the cartoons in English to a native English-speaker and one of the cartoons in ASL to a different experimenter who was a native ASL speaker (the order of the language presentation and cartoons was randomized across participants). Sessions were videotaped and highly proficient speakers of English and of ASL trained as linguistic coders identified the presence or absence of semantic, phonological, syntactic, and morphological errors. Each participant was required to produce at least 80% correct utterances in each native language in order to participate in the experiment. Inter-rater reliability for both transcription and coding for a subset of participants (25%) was 98.0%.

English Grammaticality Judgment Behavioral Task

An English grammaticality judgment task was administered to English monolinguals and ASL-English bilinguals. In this grammaticality judgment task, modeled after ones used by Johnson and Newport (1989), McDonald (2000), and Winitz (1996), participants were presented with grammatical and ungrammatical sentences and instructed to read each sentence and indicate whether or not the sentence was grammatical. Examples: I see a book (grammatical); I see book (ungrammatical). This type of task is effective at identifying individuals’ proficiency and age of first exposure to the language; crucially, only those exposed to the language before age 7 have been observed to perform with high accuracy on this task. All English-speaking participants had to score at least 80% correct to be eligible.

Experimental Tasks

Picture Naming

Black line-drawing pictures of everyday objects (e.g., table, lamp) were selected from the Peabody Picture Vocabulary Test (PPVT; Dunn & Dunn, 1981) and the International Picture Naming Project (Abbate & La Chappelle, 1984; Max Planck Institute for Psycholinguistics; Kaplan, Goodglass, & Weintraub, 1983; Oxford Junior Workbooks, 1965). There were 4 different sets of pictures and the background color (described below) of the pictures was altered to elicit responses in specific languages from participants. We used a block design, with 5 blocks for each of the 4 language conditions (English, ASL, Simultaneous, and Alternation). Each run was preceded by 60 seconds of rest/baseline. Each 35 s block contained 14 pictures (1.5 s picture presentation and 1 s inter-picture fixation interval), with 20 second rest-periods between blocks during which a white fixation cross on a black background was presented. Participants received a break and a reminder of instructions between the runs.
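The block timing above can be checked with a few lines of arithmetic. The following is a minimal Python sketch with illustrative names of our own choosing. The run total rests on an assumption the text does not state explicitly: that the 20 s rest periods fall only between the five blocks (four rests per run), with no rest after the final block.

```python
# Timing parameters taken from the task description.
PICTURE_S = 1.5             # picture presentation
FIXATION_S = 1.0            # inter-picture fixation interval
PICTURES_PER_BLOCK = 14
BLOCKS_PER_RUN = 5
INITIAL_REST_S = 60.0       # rest/baseline preceding each run
BETWEEN_BLOCK_REST_S = 20.0


def block_duration_s():
    """Duration of one block: 14 trials of picture plus fixation."""
    return PICTURES_PER_BLOCK * (PICTURE_S + FIXATION_S)


def run_duration_s():
    """Duration of one run, ASSUMING rests occur only between blocks."""
    rests = (BLOCKS_PER_RUN - 1) * BETWEEN_BLOCK_REST_S
    return INITIAL_REST_S + BLOCKS_PER_RUN * block_duration_s() + rests


print(block_duration_s())  # 35.0, matching the stated 35 s block
print(run_duration_s())    # 315.0 under the assumption above
```

If a rest period also followed the last block, the run would be 20 s longer.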

The participant’s task was to name the picture they saw on each trial. Responses were scored later from video-audio recordings synchronized with the imaging data collection. For ASL-English bilinguals, the background color of the pictures cued the required response in each of the four Picture Naming runs (5 blocks each). In the Monolingual mode English run, a white background for all pictures indicated that an English response was required. In the Monolingual mode ASL run, blue backgrounds indicated that an ASL response was required. In the Bilingual mode Simultaneous run, grey backgrounds indicated that all pictures should be named in both ASL and English simultaneously. In the Bilingual mode Alternating run, blocks contained both blue (ASL) and white (English) background pictures, indicating that participants should alternate between the two languages; the ordering of ASL and English trials within these blocks was randomized. English and ASL monolinguals saw the same pictures, but were asked to disregard differences in background color and to name the pictures in English only or in ASL only, respectively. The order of experimental runs was randomized across participants. Picture naming stimuli were presented using E-Prime software on a flat-screen Dell monitor connected to a Dell laptop running Windows XP. Task order was randomized for each participant. Prior to imaging, participants were trained in the task using stimuli not used in the experiment.

fNIRS Imaging

General Information

fNIRS holds several key technological brain-imaging advantages over fMRI. Rather than yielding BOLD, which is a ratio between oxygenated and deoxygenated hemoglobin, fNIRS yields separate measures of deoxygenated and oxygenated hemoglobin and thus provides a closer measure of the underlying neuronal activity than fMRI. While fNIRS does not record deep into the human brain (maximum depth ~4 cm), it has excellent spatial resolution that is outstanding for studies of human higher cognition and language, and it has better temporal resolution than fMRI (hemodynamic response, HR, of less than ~5 s), as well as a remarkably good sampling rate of 10 times per second. Unlike MRI scanners, fNIRS scanners are very small (the size of a desktop computer), portable, and particularly child friendly (children and adults sit normally in a comfortable chair, and babies can be studied while seated on mom’s lap). It is fNIRS’ detailed signal yield (an [HbO] index rather than BOLD), its rapid sampling rate, relative silence, high motion tolerance, and participant-friendly set-up that have contributed to the rapidly growing use of fNIRS as one of today’s leading brain-imaging technologies.

Apparatus & Procedure

To record the hemodynamic response we used a Hitachi ETG-4000 with 44 channels, acquiring data at 10 Hz (Figure 1a). The lasers were factory set to 690 nm and 830 nm. The 16 lasers and 14 detectors were segregated into two 5 × 3 arrays corresponding to 30 probes (15 probes per array; Figure 1a). Once the participant was comfortably seated, one array was placed on each side of the participant’s head. Positioning of the array was accomplished using the 10–20 system (Jasper, 1957) to maximally overlay regions classically involved in language, verbal, and working memory areas in the left hemisphere as well as their homologues in the right hemisphere.
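The probe and channel counts above are consistent with a simple grid model, sketched below in Python. The model is an assumption on our part rather than something stated in the text: emitters and detectors alternate in a checkerboard pattern with an emitter in the corner, and each pair of horizontally or vertically adjacent optodes forms one recording channel. The function name is illustrative.

```python
def count_probes_and_channels(rows=3, cols=5):
    """Count emitters, detectors, and channels on one optode grid.

    ASSUMPTIONS (not stated in the text): checkerboard layout with an
    emitter in the corner; every pair of adjacent optodes is one channel.
    """
    emitters = sum(
        1 for r in range(rows) for c in range(cols) if (r + c) % 2 == 0
    )
    detectors = rows * cols - emitters
    # Horizontal neighbours plus vertical neighbours.
    channels = rows * (cols - 1) + cols * (rows - 1)
    return emitters, detectors, channels


emitters, detectors, channels = count_probes_and_channels()
# Two arrays, one per hemisphere.
print(2 * emitters, 2 * detectors, 2 * channels)  # 16 14 44
```

Under these assumptions, two 3 × 5 arrays give 16 emitters, 14 detectors, and 44 channels, in agreement with the figures reported for the apparatus.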

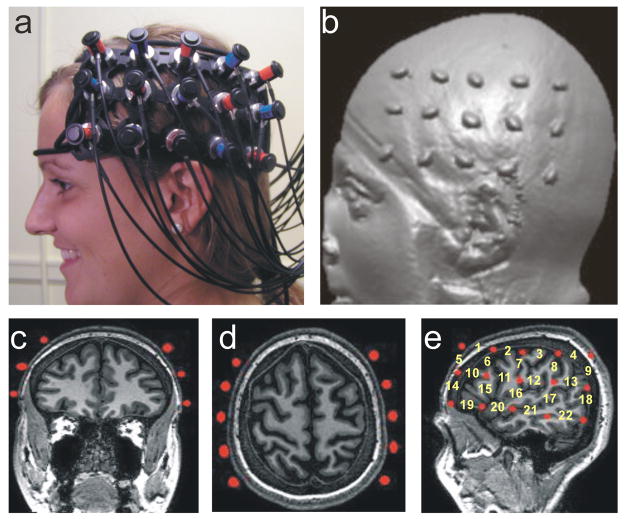

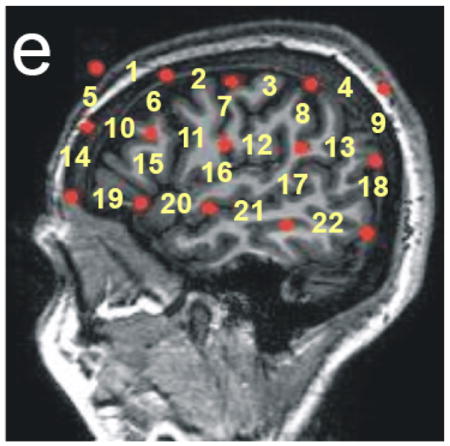

Figure 1.

Hitachi ETG-4000 Imaging System, Neuroanatomical Probe Positioning, and MRI Neuroanatomical Co-Registration. (a) Participant with Hitachi 24-channel ETG-4000, with lasers set to 698 nm and 830 nm, in place and ready for data acquisition. The 3×5 optode arrays were positioned on participants’ heads using rigorous anatomical localization measures including the 10–20 system and MRI co-registration (see b-e). (b) MRI co-registration was conducted by having participants (N = 9) wear two 3×5 arrays with vitamin E capsules in the MRI scanner. (c-e) Anatomical MRI images were analyzed in coronal (c), axial (d) and sagittal (e) views, allowing us to identify the location of optodes (vitamin E capsules) with respect to underlying brain structures. (e) Anatomical view of the position of the fNIRS channels.

Channels were tested for noise prior to the beginning of the recording session. Digital photographs were taken of the positioning of the probe arrays on the participant’s head before and after the recording session to identify whether the arrays had moved during testing. An MPEG video recording was synchronized with the testing session, so that any apparent movement artifacts could be confirmed during offline analysis and participants’ responses could be scored.

After the recording session, data were exported and analyzed using Matlab (The Mathworks Inc.). Conversion of the raw data to hemoglobin values was accomplished in two steps. Under the assumption that scattering is constant over the path length, we first calculated the attenuation for each wavelength by comparing the optical density of light intensity during the task to the calculated baseline of the signal. We then used the attenuation values for each wavelength and sampled time points to solve the modified Beer-Lambert equation to convert the wavelength data to a meaningful oxygenated and deoxygenated hemoglobin response (HbO and Hb respectively).
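The two-step conversion can be illustrated with a minimal Python sketch (the study itself used Matlab, and this is not the authors’ code). The function names are ours. The extinction coefficients and path length in the demonstration are placeholder numbers chosen only to show that the algebra round-trips; they are not tabulated physiological values, and `path_length` stands for the effective optical path (for example, source-detector distance multiplied by a differential path-length factor), which is an assumption about how the constant-scattering term is handled.

```python
import math


def attenuation_change(intensity, baseline):
    """Step 1: change in optical density relative to baseline, -log10(I / I0)."""
    return -math.log10(intensity / baseline)


def mbll_concentrations(dA1, dA2, ext, path_length):
    """Step 2: solve the 2x2 modified Beer-Lambert system for (dHbO, dHb).

    ext = ((e_HbO_w1, e_Hb_w1), (e_HbO_w2, e_Hb_w2)) holds the extinction
    coefficients at the two wavelengths. The model for each wavelength is
        dA = path_length * (e_HbO * dHbO + e_Hb * dHb).
    """
    (a, b), (c, d) = ext
    det = a * d - b * c
    if det == 0:
        raise ValueError("extinction matrix is singular")
    d_hbo = (dA1 * d - b * dA2) / (det * path_length)
    d_hb = (a * dA2 - c * dA1) / (det * path_length)
    return d_hbo, d_hb


# Round-trip check with PLACEHOLDER coefficients (not real tabulated values).
ext = ((0.3, 2.0), (1.0, 0.7))
path = 6.0
true_hbo, true_hb = 0.5, -0.2
dA1 = path * (ext[0][0] * true_hbo + ext[0][1] * true_hb)
dA2 = path * (ext[1][0] * true_hbo + ext[1][1] * true_hb)
print(mbll_concentrations(dA1, dA2, ext, path))
```

With two wavelengths and two chromophores the system is exactly determined, so a direct 2 × 2 solve is sufficient; the same function would be applied at every channel and sampled time point.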

MRI Coregistration

For MRI (anatomical) co-registration, a 5×3 array of Vitamin E tablets was constructed with the tablets placed precisely at each of the optode locations used during our fNIRS experiments above (Figure 1b-e). The array was then placed on the participant’s head using the 10–20 coordinate system and secured in place with MRI-safe tape and straps. Anatomical scans were then acquired from 9 participants using a Philips 3T MRI scanner. Foam padding was placed in the head coil to limit subject head movement during image acquisition. T1-weighted three-dimensional magnetization-prepared rapid acquisition gradient echo (3D-MPRAGE) sagittal images were obtained. Scanning parameters were as follows: echo time (TE) = 4.6 ms, repetition time (TR) = 9.8 ms, flip angle = 8 degrees, acquisition matrix = 256 × 256, 160 sagittal slices, and voxel size = 1 × 1 × 1 mm with no gap.

These scans confirmed that the placement of the Vitamin E tablets, and hence the placement of our fNIRS recorded channels, indeed covered the anatomical locations anticipated by the 10–20 coordinate system (see Figure 1b-e).

Results

Behavioral Results

Twenty English monolinguals completed the Picture naming task. Due to camera failure, behavioral responses for one English monolingual participant were not recorded; these data are thus omitted in this behavioral analysis. Participants’ average scores and standard deviations for this task are reported in Table 2.

Table 2.

Mean (and standard deviation) accuracy score for each language group and condition on the behavioral picture naming task.

| Group | English | ASL | Simultaneous | Alternating |
|---|---|---|---|---|
| English monolinguals | 89.8 (.05) | | | |
| ASL monolinguals | | 95.2 (.03) | | |
| ASL-English bilinguals | 90.0 (.05) | 88.5 (.10) | 91.6 (.09) | 90.4 (.05) |

We first compared the three language groups (hearing/native ASL-English bilinguals, ASL monolinguals, and English monolinguals) on their overall picture naming performance. For this comparison, scores for hearing/native ASL-English bilinguals were obtained by averaging across the four naming conditions (ASL, English, Alternating, Simultaneous); see Table 2 for behavioral scores. A one-way ANOVA on these average percent correct scores revealed a marginal effect of language group (F(2, 28) = 3.0, p = 0.066). Post-hoc comparisons revealed that the ASL monolinguals (mean = 95.2% correct) performed marginally better than the English monolinguals (mean = 89.8%) on this task (Tukey’s Honestly Significant Difference (HSD), p = 0.057). No other group comparisons reached significance.
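For readers less familiar with the statistic, the one-way ANOVA F ratio reported above can be computed as in the following sketch. The scores in the example are toy numbers, not the study's data, and the function name is our own. Note that three groups with 31 participants in total would give the reported degrees of freedom (2, 28); the total of 31 is inferred from those degrees of freedom rather than stated here.

```python
def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of per-group score lists."""
    all_scores = [x for g in groups for x in g]
    grand_mean = sum(all_scores) / len(all_scores)
    group_means = [sum(g) / len(g) for g in groups]
    # Between-group sum of squares: group size times squared mean deviation.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, group_means))
    # Within-group sum of squares: squared deviations from each group's mean.
    ss_within = sum((x - m) ** 2
                    for g, m in zip(groups, group_means) for x in g)
    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Toy data for illustration only (three groups of three scores).
toy_f, toy_df1, toy_df2 = one_way_anova([[1, 2, 3], [2, 3, 4], [5, 6, 7]])
```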

For hearing/native ASL-English bilinguals, we further compared performance on each of the naming conditions, to determine whether there were differences in difficulty across conditions (ASL, English, Alternating, Simultaneous). A repeated-measures ANOVA on these percent correct scores revealed no significant effect of condition, F(3,12) <1, p > .05, ns.

Imaging results

Identifying functional regions of interest

The first step in our analysis was to use Principal Components Analysis (PCA) to identify channels that formed data-driven clusters of activation, which shaped our functional regions of interest. The first and second principal components (PC), which explain the largest proportion of the variance, were used for each. To determine functional clusters for English, data from the hearing/native ASL-English bilinguals and English monolinguals were used. To determine functional clusters for ASL, data from the hearing/native ASL-English bilinguals and ASL monolinguals were used.
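The logic of this step can be illustrated with a short sketch. It is not the procedure used in the study: it assumes the input is a matrix with one row per observation and one column per channel, computes the channel correlation matrix, and extracts only the first component by power iteration. Loadings are taken as the eigenvector scaled by the square root of its eigenvalue, which is one common convention; all function names are hypothetical.

```python
import math

def correlation_matrix(rows):
    """Correlation between channels; rows are observations, columns are channels."""
    n, p = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(p)]
    sds = [math.sqrt(sum((r[j] - means[j]) ** 2 for r in rows) / (n - 1))
           for j in range(p)]
    z = [[(r[j] - means[j]) / sds[j] for j in range(p)] for r in rows]
    return [[sum(r[i] * r[j] for r in z) / (n - 1) for j in range(p)]
            for i in range(p)]

def first_component(matrix, iterations=1000):
    """Leading eigenvector and eigenvalue of a symmetric matrix (power iteration)."""
    p = len(matrix)
    v = [1.0 + 0.1 * i for i in range(p)]  # arbitrary non-uniform starting vector
    for _ in range(iterations):
        w = [sum(matrix[i][j] * v[j] for j in range(p)) for i in range(p)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    eigenvalue = sum(v[i] * matrix[i][j] * v[j]
                     for i in range(p) for j in range(p))
    return v, eigenvalue

def first_pc_loadings(rows):
    """Loadings on the first component and the proportion of variance it explains."""
    corr = correlation_matrix(rows)
    v, eigenvalue = first_component(corr)
    loadings = [x * math.sqrt(eigenvalue) for x in v]
    # The trace of a correlation matrix equals the number of channels.
    explained = eigenvalue / len(corr)
    return loadings, explained
```

The sign of a component is arbitrary, so only the relative signs of loadings across channels are meaningful. Extracting the second component would require removing the first from the matrix before repeating the iteration, which is not shown.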

First, we present PCA results for English, for the left and right hemispheres. We then present PCA results for ASL, again first for the left and then for the right hemisphere. Finally, we present the PCA results for the two Bilingual mode conditions in bimodal bilinguals. Throughout our description of the PCA results for ASL we note the similarities and differences between the two languages, as well as the similarities and differences between Monolingual and Bilingual modes. The active channels and loadings for the clusters identified in the first component for each condition (English, ASL, and Bilingual mode) are presented in Table 3. See the estimated anatomical location of channels in Figure 1e.

Table 3.

Clusters in left and right hemisphere for the first principal component. Please refer to Figure 1e with regard to channel locations.

| Condition | Cluster no. | Left hemisphere cluster | Loading* | Channels | Right hemisphere cluster | Loading* | Channels |
|---|---|---|---|---|---|---|---|
| English | 1 | Anterior perisylvian | − | 15, 19, 20 | Anterior perisylvian | − | 15, 19, 20 |
| English | 2 | DLPFC | + | 10, 11, 14 | DLPFC | + | 1, 5 |
| English | 3 | Parietal | ++ | 8, 9, 13 | Parietal | ++ | 3, 4, 8, 11, 13 |
| ASL | 1 | Anterior perisylvian | − | 15, 19, 20 | Anterior perisylvian | − | 15, 19, 20 |
| ASL | 2 | DLPFC | − | 6, 10, 14 | DLPFC | − | 10, 14 |
| ASL | 3 | Parietal-Motor | ++ | 2, 4, 7, 9 | Parietal | ++ | 8, 9, 13 |
| Bilingual Mode | 1 | Temporal-parietal | − | 16, 20, 21 | Anterior perisylvian-parietal | − | 7, 12, 15, 16, 20 |
| Bilingual Mode | 2 | Inferior frontal-parietal | ++ | 2, 8, 11, 13, 14, 19 | Frontal-parietal-temporal | ++ | 1, 2, 9, 10, 13, 14, 18, 21, 22 |
| Bilingual Mode | 3 | Medial frontal-posterior temporal | ++ | 10, 12, 17, 18 | Inferior Frontal-posterior temporal | + | 11, 17 |
| Bilingual Mode | 4 | | | | Medial & Inferior Frontal | ++ | 5, 6, 19 |

Loadings: “++” indicates positive loading greater than 0.5, “+” indicates a positive loading less than 0.5, “−” indicates a negative loading.
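The coding scheme in the note above can be written as a small helper. This is only a sketch: the function name is hypothetical, and the treatment of the boundary values (a loading of exactly 0 or exactly 0.5) is our assumption, since the note does not specify it.

```python
def loading_symbol(loading):
    """Map a numeric loading to the symbols used in Table 3.

    Assumption of this sketch: loadings of exactly 0 or exactly 0.5
    are coded "+", because the table note does not define these cases.
    """
    if loading < 0:
        return "−"
    if loading > 0.5:
        return "++"
    return "+"
```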

English Left Hemisphere PCA

In the English condition with hearing/native ASL-English bilinguals and English monolinguals, the PCA yielded a first component that explained 27% of the variance and comprised the following clusters: (1) Anterior perisylvian cluster, covering inferior frontal gyrus (IFG) as well as anterior superior temporal gyrus (STG), (2) Dorsal lateral prefrontal cortex (DLPFC) cluster overlaying SFG/MFG, and (3) Parietal cluster, maximally overlaying motor and parietal regions.

The second component for English in the left hemisphere explained 17% of the variance and helped identify larger functional clusters that appear to reflect functional networks. In particular, we identified the following. (1) Parieto-temporal cluster. Negative loadings fell on channels maximally overlaying parietal (channels: 4, 9, 13) and temporal regions (including inferior, middle and superior temporal gyri, spanning anterior-to-posterior temporal regions; channels: 12, 16–18, 20, 21). (2) Frontal-temporal cluster. Low and medium value loadings (0.1–0.6) fell on frontal (including inferior and middle frontal gyri; channels: 10, 15, 19), motor (channels: 2, 7), and posterior inferior temporal (channel 22) regions. (3) Medial frontal cluster. High loadings (0.7–0.9) fell on frontal lobe regions (predominantly including middle and superior frontal gyri; channels: 1, 5, 6, 14).

English Right Hemisphere PCA

In the English condition with hearing/native ASL-English bilinguals and English monolinguals, right hemisphere PCA results closely resembled those observed in the left hemisphere. The first principal component explained 27% of the variance. Clusters included (1) Anterior perisylvian cluster maximally overlaying IFG and anterior STG, (2) DLPFC cluster, identical to the left hemisphere, including frontal regions, predominantly MFG, and again (3) Parietal cluster.

The second principal component for English RH explained 14% of the variance. Two functional clusters were obtained. (1) Distributed network cluster. Negative and low (below 0.4) loadings fell on channels maximally overlaying a full variety of cortical regions covered by the fNIRS probes: frontal (channels: 1, 2, 5, 19), parieto-temporal (channels: 4, 8, 9, 12, 13, 17, 18, 22), and motor (channels: 3, 7) regions. (2) Frontal cluster. Medium and high loadings (above 0.4) consistently fell on extensive frontal and fronto-temporal regions (channels: 6, 10, 11, 14, 15, 16, 20, 21).

ASL Left Hemisphere PCA

In the ASL condition with hearing/native ASL-English bilinguals and ASL monolinguals, the first PC explained 30% of the variance. The following clusters were obtained: (1) Anterior perisylvian cluster, as in English, maximally overlaying inferior frontal gyrus and anterior superior temporal gyrus clustered together (IFG & anterior STG), (2) DLPFC cluster overlaying frontal lobe regions of middle and superior frontal gyri (SFG, MFG), and (3) Parietal-motor cluster, including parietal and motor regions. As in English, ASL channels overlaying parietal regions received the highest loadings (above 0.7).

The second PCA component for ASL (LH) explained 18% of the variance and also appeared to identify larger functional clusters. (1) Parieto-frontal cluster. Negative loadings fell on channels maximally overlaying parietal (channels: 4, 8, 9, 13) and frontal (channels: 1, 2, 6) regions. Recall that in English, negative loadings helped identify a parietal-temporal rather than parietal-frontal cluster. (2) Frontal-temporal cluster. Similar to English, but with wider temporal coverage, this cluster had low and medium value loadings (0.1–0.6) on frontal (channels: 5, 15, 19) and temporal (channels: 12, 17, 18, 20–22) regions. (3) Medial frontal cluster. As in English, high loadings (0.7–0.9) fell on channels maximally overlaying frontal regions (channels: 10, 11, 14).

ASL Right Hemisphere PCA

In the ASL condition with hearing/native ASL-English bilinguals and ASL monolinguals, the first PC explained 27% of the variance. Right hemisphere PCA results closely resembled those observed with the left hemisphere. Areas identified included: (1) Anterior perisylvian cluster overlaying IFG and anterior STG, (2) DLPFC cluster, and (3) Parietal cluster. As in the left hemisphere and as in English, most of the channels with high loadings (greater than 0.7) were channels maximally overlaying motor and parietal regions (channels 8, 9, 13). As in the left hemisphere, frontal regions received negative loadings in ASL (compared to low loadings in English).

The second principal component for ASL RH explained 17% of the variance. Similar to English RH, two functional clusters were obtained. (1) Distributed network cluster. Negative and low (below 0.4) loadings fell on channels maximally overlaying a full variety of cortical regions covered by the fNIRS probes: frontal (channels: 1, 2, 5, 11), parieto-temporal (channels: 4, 8, 9, 12, 13, 16, 17, 18, 20, 21, 22), and motor (channels: 3, 7) regions. (2) Inferior-medial frontal cluster. Medium and high loadings (above 0.4) consistently fell on frontal (IFG, MFG) and superior temporal regions (channels: 6, 10, 14, 15, 16, 19).

Bilingual mode PCA Left Hemisphere

In order to increase the power of this analysis, both Alternating and Simultaneous bimodal conditions were combined. The first PC explained 36% of the variance. There was a stark difference between PCA results for Monolingual mode as compared to Bilingual mode. Instead of yielding units of language, general-cognitive, and sensory-motor processing, this set of PCA results for both first and second components yielded functional processing networks. Clusters identified were (1) Temporal-parietal cluster covering anterior/superior and medial temporal regions, sensory/motor and parietal regions, (2) Inferior frontal-parietal cluster including IFG, and parietal/motor regions, and (3) Medial frontal-posterior temporal cluster on frontal regions (MFG), motor and posterior/posterior-inferior temporal regions.

The second component in the left hemisphere explained 27% of the variance and suggested that the anterior and posterior language regions (“Broca’s”, “Wernicke’s” areas) and parietal regions (which, among other functions, support key linguistic processing in sign language) were indeed working together during Bilingual mode. (1) Inferior frontal-posterior temporal-parietal cluster. The highest loadings fell on inferior frontal (channel: 15), posterior/inferior temporal (channels: 17, 21, 22), and parietal regions (channels: 4, 9). Similar to the first component, the other clusters from the second component were also “multi-region” clusters that incorporated channels maximally overlaying a variety of cortical regions.

Bilingual mode PCA Right Hemisphere

In order to increase the power of this analysis, both Alternating and Simultaneous bimodal conditions were combined. The first PC explained 50% of the variance. Similar to the left hemisphere, we observed that channels grouped into large networks rather than smaller anatomical units. These clusters were (1) Anterior perisylvian-parietal cluster, covering inferior frontal/anterior STG regions and motor/parietal regions, (2) Frontal-parietal-temporal cluster, covering a large area of frontal, parietal and temporal regions, (3) Inferior frontal-posterior temporal cluster, including inferior frontal gyrus and posterior temporal regions, and (4) Medial-inferior frontal cluster, including anterior inferior and middle frontal gyri.

The second principal component for Bilingual mode RH explained 20% of the variance. Similar to English and ASL RH, two functional clusters were obtained. (1) Distributed network cluster. Negative and low (below 0.4) loadings fell on channels maximally overlaying a full variety of cortical regions covered by the fNIRS probes: frontal (channels: 1, 2, 5, 10, 14, 19), parietal (channels: 4, 8, 9), temporal (channels: 12, 13, 17, 18, 21, 22), and motor (channel: 3) regions. (2) Frontal cluster. Medium and high loadings (above 0.4) consistently fell on frontal and superior temporal regions (channels: 6, 11, 15, 16, 20).

ROIs: Predicted & Functional

In our introduction we hypothesized that we might see task-related brain activations in the frontal lobe, including left inferior frontal gyrus and DLPFC, in posterior temporal regions, and in parietal regions. The channels selected for ROI analysis were governed by these predictions, by MRI coregistration (see Figure 1b-e), and by the data-driven clusters identified by the first PCs described above. In this way, we identified seven regions of interest: (i) Anterior perisylvian cluster (inferior frontal gyrus and anterior aspect of the superior temporal gyrus; channels: 15, 19, 20), (ii) DLPFC (prefrontal cortex, primarily including MFG; channels: 10, 14), (iii) Superior frontal (SFG; channels: 1, 5), (iv) Posterior temporal (posterior STG and posterior MTG, overlapping with classic “Wernicke’s area”; channel: 17), (v) Parietal (superior and inferior parietal lobules; channels: 4, 8, 9, 13), (vi) Sensory-motor (postcentral and precentral gyri; channel: 7), and (vii) Posterior frontal/motor (dorsal frontal regions adjacent to primary motor cortex and primary motor cortex; channels: 6, 11). Means and standard deviations of signal changes for each ROI, each group and each experimental condition are presented in Table 4. Please see Figure 1e for anatomical localization of individual channels.
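The channel-to-ROI assignment listed above can be expressed as a simple lookup. The sketch below assumes that a participant's ROI value is the unweighted mean of the signal change across the channels in that ROI; the text does not state how channels were combined, so this is an illustrative choice, and the names `ROI_CHANNELS` and `roi_means` are hypothetical.

```python
# Channel assignments as listed in the text (same numbering in each hemisphere).
ROI_CHANNELS = {
    "anterior_perisylvian": [15, 19, 20],
    "dlpfc": [10, 14],
    "superior_frontal": [1, 5],
    "posterior_temporal": [17],
    "parietal": [4, 8, 9, 13],
    "sensory_motor": [7],
    "posterior_frontal_motor": [6, 11],
}

def roi_means(channel_values):
    """Average per-channel signal change into the seven ROIs.

    channel_values: dict mapping channel number -> signal change for one
    participant, one condition and one hemisphere.
    Assumption: channels within an ROI are weighted equally.
    """
    return {
        roi: sum(channel_values[ch] for ch in channels) / len(channels)
        for roi, channels in ROI_CHANNELS.items()
    }
```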

Table 4.

Mean (and standard deviation) percent signal change for each region of interest (ROI) in the brain for each language group and task conditions in (a) left and (b) right hemispheres.

(a) Left Hemisphere

| Group | Condition | Anterior Perisylvian | Posterior Temporal | DLPFC | Superior frontal | Parietal | Posterior frontal/motor | Sensory-Motor |
|---|---|---|---|---|---|---|---|---|
| English Monolinguals | English | 0.54(0.19) | 0.31(0.23) | 0.32(0.18) | 0.26(0.23) | 0.27(0.17) | 0.30(0.17) | 0.27(0.17) |
| ASL Monolinguals | ASL | 0.41(0.23) | 0.27(0.19) | 0.48(0.22) | 0.52(0.22) | 0.28(0.24) | 0.33(0.14) | 0.49(0.29) |
| ASL-English Bilinguals | English | 0.62(0.23) | 0.16(0.10) | 0.43(0.28) | 0.16(0.09) | 0.22(0.14) | 0.30(0.21) | 0.29(0.18) |
| ASL-English Bilinguals | ASL | 0.48(0.30) | 0.17(0.07) | 0.35(0.30) | 0.30(0.23) | 0.31(0.11) | 0.34(0.23) | 0.43(0.26) |
| ASL-English Bilinguals | Simultaneous | 0.52(0.18) | 0.36(0.25) | 0.33(0.28) | 0.26(0.17) | 0.28(0.10) | 0.48(0.19) | 0.44(0.27) |
| ASL-English Bilinguals | Alternating | 0.40(0.19) | 0.38(0.27) | 0.38(0.22) | 0.21(0.11) | 0.27(0.12) | 0.27(0.15) | 0.49(0.35) |

(b) Right Hemisphere

| Group | Condition | Anterior Perisylvian | Posterior Temporal | DLPFC | Superior frontal | Parietal | Posterior frontal/motor | Sensory-Motor |
|---|---|---|---|---|---|---|---|---|
| English Monolinguals | English | 0.53(0.21) | 0.27(0.17) | 0.30(0.19) | 0.24(0.13) | 0.34(0.21) | 0.31(0.18) | 0.23(0.16) |
| ASL Monolinguals | ASL | 0.38(0.12) | 0.28(0.24) | 0.43(0.28) | 0.41(0.13) | 0.30(0.18) | 0.21(0.18) | 0.41(0.19) |
| ASL-English Bilinguals | English | 0.52(0.21) | 0.22(0.14) | 0.31(0.19) | 0.28(0.11) | 0.14(0.12) | 0.34(0.15) | 0.41(0.26) |
| ASL-English Bilinguals | ASL | 0.62(0.16) | 0.31(0.11) | 0.34(0.09) | 0.41(0.20) | 0.23(0.08) | 0.36(0.18) | 0.49(0.34) |
| ASL-English Bilinguals | Simultaneous | 0.53(0.24) | 0.28(0.10) | 0.31(0.19) | 0.37(0.16) | 0.28(0.22) | 0.35(0.26) | 0.49(0.36) |
| ASL-English Bilinguals | Alternating | 0.50(0.24) | 0.27(0.12) | 0.21(0.07) | 0.33(0.18) | 0.25(0.06) | 0.40(0.18) | 0.39(0.24) |

ROI analysis of hearing/native ASL-English bilinguals in Bilingual versus Monolingual modes

Left Hemisphere

A 2 (language modes: Monolingual (ASL & English) versus Bilingual (Alternation & Simultaneous), within factor) X 7 (ROIs, within factor) repeated-measures ANOVA yielded no significant main effect of language modes (F(1, 4) = 1.1), no significant difference between ROIs (F(6, 24) < 1), and a significant mode by ROI interaction (F(6, 24) = 3.7, p < 0.01). Figure 2 shows left hemisphere brain activations in bilinguals during Bilingual and Monolingual modes, suggesting that the interaction might stem from greater recruitment of the posterior temporal and sensory-motor regions during Bilingual mode, and greater recruitment of inferior frontal/anterior STG regions during Monolingual mode.

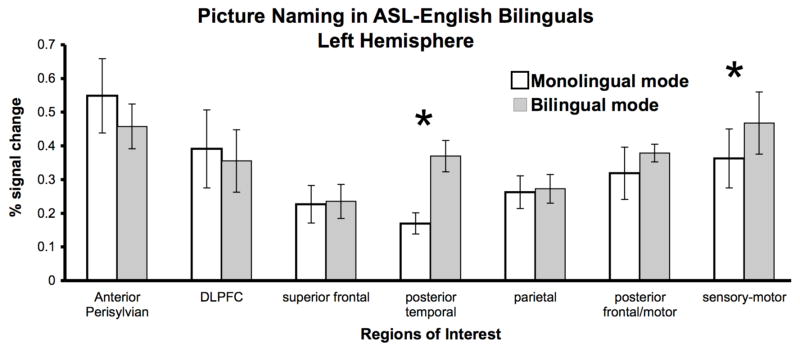

Figure 2.

Brain activation in ASL-English bilinguals during Monolingual and Bilingual modes. Bilinguals showed a significant left hemisphere ROI × Language Mode interaction (p < 0.01). Wilcoxon Signed Ranks test comparisons revealed that there was greater activation in posterior temporal and sensory-motor ROIs in the Bilingual mode as compared to the Monolingual mode (p < 0.05).

We further investigated the source and location of potential differences between Bilingual and Monolingual modes in the left hemisphere. Given the small number of bilingual subjects, we conducted a non-parametric test for matched pairs on the 7 ROIs. Wilcoxon Signed Ranks test revealed that during Bilingual mode, hearing/native ASL-English bilinguals had significantly greater activation in the left posterior temporal region (Z(4) = −2.02, p < 0.05) and left sensory-motor region (Z(4) = −2.02, p < 0.05). The results revealed that all ranks were positive only for Posterior temporal and Sensory-motor ROIs, which means that all subjects showed an increase in activation during bilingual mode in these two regions.
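The reported Z value can be reproduced with the normal approximation to the Wilcoxon signed-rank statistic, as sketched below. The sketch assumes five bilingual participants (the number implied by the F(1, 4) degrees of freedom above), no tied absolute differences, and a two-sided test; the difference scores in the example are made-up values whose only relevant property is that all five are positive.

```python
import math

def wilcoxon_z(differences):
    """Normal-approximation z and two-sided p for the Wilcoxon signed-rank test.

    Zero differences are dropped; tied absolute differences are assumed
    not to occur (a simplification of this sketch).
    """
    d = [x for x in differences if x != 0]
    n = len(d)
    ranked = sorted(d, key=abs)  # rank 1 = smallest absolute difference
    w_plus = sum(rank for rank, x in enumerate(ranked, start=1) if x > 0)
    w_minus = n * (n + 1) / 2 - w_plus
    w = min(w_plus, w_minus)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w - mean) / sd
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))
    return z, p_two_sided

# Hypothetical Bilingual-minus-Monolingual differences, all positive.
z, p = wilcoxon_z([0.10, 0.25, 0.05, 0.30, 0.15])
```

With five differences of the same sign, the smaller rank sum is 0 and z is approximately −2.02, matching the value reported for both ROIs.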

Right Hemisphere

A 2 × 7 repeated-measures ANOVA yielded no significant differences between language modes (F(1, 4) <1), a marginally significant difference between ROIs (F(6, 24) = 3.0, p = 0.02), and no significant interactions, (F(6, 24) <1). As can be seen in Table 4, in both Monolingual and Bilingual modes, right inferior frontal/anterior STG regions appear to have the greatest involvement in the task (the highest percent signal change).

ROI analysis of hearing/native ASL-English bilinguals in Bilingual mode: Alternating versus Simultaneous

Alternating versus Simultaneous

There were no significant main effects or interactions for our ROIs in either hemisphere, as revealed by two (one for each hemisphere) 2 (Alternating & Simultaneous conditions, within factor) × 7 (ROIs, within factor) repeated-measures ANOVAs (LH: condition F(1, 4) < 1, ROI F(6, 24) = 2.0; RH: condition F(1, 4) < 1, ROI F(6, 24) = 1.7, p = 0.16; all ns).

ROI analysis of English Picture Naming: ASL-English Bilinguals and English Monolinguals

The comparison of bilingual and monolingual groups was conducted to ensure that bilinguals indeed had an overall native-like neural organization for each of their languages. However, we note that the discrepancy in the sample sizes of monolinguals and bilinguals prevents us from making strong claims about these comparisons.

Left Hemisphere English: A 2 (groups: English monolinguals and bilinguals, between factor) × 7 (ROI, within factor) mixed-measures ANOVA yielded a significant main effect for ROI (F(6, 138) = 7.3, p < 0.01), no significant differences between the groups and no significant interactions.

Right Hemisphere English: A 2 × 7 mixed-measures ANOVA yielded a significant main effect for ROI (F(6, 138) = 5.4, p < 0.01), no significant differences between the groups and no significant interactions. Follow-up t-tests revealed that ASL-English bilinguals had marginally less recruitment of parietal areas as compared to English monolinguals (t(23) = 1.9, p = 0.06).

Left Hemisphere ASL: A 2 (groups: ASL monolinguals & bilinguals, between factor) × 7 (ROI, within factor) mixed-measures ANOVA revealed no significant main effects or interactions.

Right Hemisphere ASL: A 2 × 7 mixed-measures ANOVA revealed significant ROI differences (F(6, 60) = 3.0, p < 0.01), and no significant group differences or interactions.
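The degrees of freedom reported for these designs follow directly from the number of participants, groups and ROIs, as the sketch below shows. The participant totals used in the example (25 for the English comparison, 12 for the ASL comparison, 5 bilinguals) are inferred from the reported degrees of freedom rather than stated in this section, and the function name is hypothetical.

```python
def mixed_anova_df(n_total, n_groups, n_levels):
    """Degrees of freedom for one between-subject and one within-subject factor.

    Returns ((df_group, df_group_error), (df_within, df_within_error)).
    With n_groups = 1 this reduces to a one-way repeated-measures design.
    """
    df_group = n_groups - 1
    df_group_error = n_total - n_groups
    df_within = n_levels - 1
    df_within_error = df_group_error * df_within
    return (df_group, df_group_error), (df_within, df_within_error)

# Inferred totals: 25 participants reproduce F(6, 138) and t(23);
# 12 participants reproduce F(6, 60); 5 bilinguals reproduce F(6, 24).
english_df = mixed_anova_df(25, 2, 7)
asl_df = mixed_anova_df(12, 2, 7)
bilingual_df = mixed_anova_df(5, 1, 7)
```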

Discussion

Here we addressed the involvement of language-specific versus cognitive-general brain mechanisms in bilingual language use by studying simultaneous and alternating dual-language production in a very special group of bilinguals – specifically, bilinguals who were exposed to a spoken and a signed language from very early in life.

Our primary finding is that the bilinguals showed greater recruitment of left posterior temporal brain regions (overlapping with the classic “Wernicke’s area”) during Bilingual mode as compared to Monolingual mode. These results suggest that left posterior temporal regions may play a key role in bilinguals’ ability to code-switch and use both languages appropriately at the same time, a finding that stands in contrast to accounts in which bilinguals’ ability to use two languages at the same time involves cognitive-general brain mechanisms alone.

Behaviorally, all of our groups performed with high and overall comparable accuracy during the Picture Naming task. Interestingly, bilinguals also performed with equally high accuracy when completing the task either in Bilingual or in Monolingual modes.

Hearing/native ASL-English bilinguals showed similar accuracy when using their two languages across a variety of language contexts: one at a time (Monolingual mode), two in rapid alternation (Bilingual mode), and even both simultaneously (Bilingual mode). Bilinguals were also just as accurate as English and ASL monolinguals. This pattern of bilingual performance is commensurate with our own and previous findings showing that bilinguals are just as accurate on language tasks during Bilingual mode as they are in Monolingual mode, and just as accurate as monolinguals (Caramazza & Brones, 1980; Grosjean & Miller, 1994; Kovelman, Shalinsky, Berens & Petitto, 2007; Van Heuven, Dijkstra & Grainger, 1998). How then does the bilingual brain accomplish such a fascinating feat?

Our fNIRS brain imaging results revealed that in Bilingual mode, as compared to Monolingual mode, participants showed greater recruitment of left posterior temporal regions (STG/MTG). We believe that our results should generalize to unimodal bilinguals, because we observed an increase in signal change in posterior temporal regions during both the Simultaneous and Alternating Bilingual mode conditions (the latter being a mode of production in unimodal bilinguals as well), and also because our findings corroborate those of Chee et al. (2003) and Abutalebi et al. (2007) with unimodal bilinguals. Chee et al. observed an increase in activation in prefrontal regions as well as in posterior temporal regions (including posterior STG and supramarginal gyrus), when Chinese-English bilinguals were presented with words in both of their languages within the same trial (“mixed-language” condition). Chee and colleagues interpreted their results with the same line of reasoning as we do here; they suggest that increased signal change in posterior temporal regions is most likely due to the increased language-specific demands of having to differentiate and/or integrate semantic information.

Our findings are also commensurate with those by Abutalebi et al. (2007), where the researchers observed an increase in posterior temporal regions (MTG in particular) when bilinguals listened to sentences that contained lexico-semantic code-switches. The recent study by Abutalebi and colleagues used an event-related paradigm that was capable of detecting both sustained as well as rapidly-changing, switching-related brain activity. Importantly, their results were fully consistent with our hypotheses, and showed that both language-dedicated and cognitive general mechanisms were involved in bilingual dual-language use.

Posterior temporal regions have been consistently implicated in semantic and phonological processing in native sign and spoken language users (Emmorey, Allen, Bruss, Schenker, & Damasio, 2003; Penhune, Cismaru, Dorsaint-Pierre, Petitto, & Zatorre, 2003; Petitto, Zatorre et al., 2000; Zatorre, Meyer, Gjedde, & Evans, 1996). Increased activation in posterior temporal regions might have been driven by an increased demand of keeping lexical-semantic items and their phonological representations maximally active in both languages at the same time (Grosjean, 1997; Kroll & Sunderman, 2003; Paradis, 1997). The dual language comprehension studies by Chee et al. (2003) and Abutalebi et al. (2007), and our present dual language production study converge in suggesting that language-dedicated posterior temporal cortical regions are indeed heavily involved in both the perception and production of lexico-semantic information in Bilingual mode. Moreover, the present brain-imaging findings offer new support for decades of behavioral research suggesting language-specific processing involvement when bilinguals must use both of their languages in the same context.

Dual use of hands and mouth incurred greater activations in left sensory-motor regions. Increased activation in sensory-motor regions is not surprising as participants had to make full use of both of their expressive language modalities. Was the posterior temporal activation also driven purely by motor rather than linguistic demands? Previous research suggests that posterior temporal regions are linked to the processing of manual tools (Johnson-Frey, Newman-Norland, & Grafton, 2005). Could the increased activation in posterior regions have been due to the manual production of semantic information? If so, then left posterior temporal activation should have been of equal intensity during ASL Monolingual mode and Bilingual modes, and both of these conditions should have yielded higher activations than English Monolingual mode. This was not the case in this study (see Table 4), nor in other imaging studies that have compared sign to speech in posterior temporal regions (e.g., Emmorey et al., 2005; Penhune et al., 2003; Petitto et al., 2000).

Bilingual mode lexical tasks require bilinguals to activate and simultaneously operate their extensive dual-language phonological and lexico-semantic inventory. Our principal component analysis for Bilingual mode (particularly the second component) revealed that dual language processing required high coordination of multiple brain regions, particularly highlighting an extensive frontal-temporal-parietal network. Temporal regions are thought to “decode” phonological units, parietal regions are thought to provide the temporary maintenance space for verbal material, and frontal regions are thought to analyze the linguistic units as well as to exert control over the phonological/verbal working memory processes (e.g., Baldo & Dronkers, 2006). Therefore, our data might be consistent with the idea that Bilingual mode requires intensive involvement of the bilinguals’ phonological working memory (for further discussion of the role of verbal working memory in Bilingual mode see also Kovelman, Shalinsky, Berens & Petitto, 2008).

Previous research using similar paradigms (block as well as event-related designs) comparing bilinguals across Monolingual and Bilingual modes has commonly shown increased bilateral recruitment of prefrontal regions, particularly within DLPFC (BA 9/46), during bilingual language switching (e.g., Hernandez et al., 2000; 2001; Rodriguez-Fornells et al., 2002; Wang et al., 2007). A carefully designed bilingual language switching study by Abutalebi et al. (2008) demonstrated that when a monolingual language switching control condition is introduced, it can fully account for DLPFC involvement during Bilingual mode. Moreover, Abutalebi & Green (2007) suggested that DLPFC involvement might also depend on the level of bilingual proficiency: the higher the proficiency, the less “effortful” the language use, and hence the lower the prefrontal activation. Here we studied Bilingual mode processing in bimodal bilinguals, a population that was highly proficient in both of their native languages and that also allowed us to reduce sustained articulation-motor competition demands. Thus, possibly due to both of these factors combined, we found no evidence of increased DLPFC activation.

It is not our intention to claim that bilingual code-mixing is free of general-cognitive task switching demands. We do, however, bring new evidence suggesting that some of the cognitive-general sustained and effortful top-down control in unimodal bilinguals might be due to the pressure to “finalize” language selection on the articulatory-motor level, which is reduced in bimodal bilinguals. Our results also show that language-dedicated mechanisms do play a key role in dual language processing. Finally, we agree with many of our colleagues that bilingual language switching ability is a complex phenomenon that most likely relies both on language-specific and cognitive-general mechanisms, which together involve a complex interplay of cortical and subcortical regions (to mention just a few: Abutalebi et al., 2007; Khateb et al., 2007; van Heuven et al., 2008).

Conclusion

This study used functional Near-Infrared Spectroscopy (fNIRS) to study bilingual language processing in early-exposed and highly proficient bimodal sign-speech ASL-English bilinguals. The results suggest that language-specific brain areas (including posterior temporal regions, the classic “Wernicke’s area”) are indeed involved in the complex dual-language use ability of bilinguals. Bilinguals showed highly accurate performance when speaking or signing in one language at a time (Monolingual mode), or when using both of their languages in rapid alternation or simultaneously (Bilingual mode). While doing so, bimodal bilinguals showed greater recruitment of left posterior temporal areas in Bilingual mode, a neuroimaging finding that concurs with decades of linguistic and psycholinguistic work on language-specific or linguistic constraints on bilingual code-switching (MacSwan, 2005; Paradis et al., 2000; Petitto et al., 2001; Poplack, 1980). These findings offer novel insights into the nature of human language ability, especially pertaining to the mystery of the neural mechanisms that underlie bilingual language use.

Acknowledgments

We are grateful to the individuals who participated in this study. We sincerely thank Matthew Dubins and Elizabeth Norton for their careful reading of, and helpful comments on the drafts of this manuscript. We also thank Krystal Flemming, Karen Lau, and Doug McKenney. Petitto (Principal Investigator) is grateful to the following granting agencies for funding this research: The National Institutes of Health R01 (Fund: 483371 Behavioral and neuroimaging studies of bilingual reading) and The National Institutes of Health R21 (Fund: 483372 Infants’ neural basis for language using new NIRS). Petitto also thanks The University of Toronto Scarborough for research funds.

Footnotes

Send reprint requests to Petitto (corresponding author) at petitto@utsc.utoronto.ca. For related research see http://www.utsc.utoronto.ca/~petitto/index.html and http://www.utsc.utoronto.ca/~petitto/lab/index.html.


References

- Abbate MS, La Chappelle NB. Pictures, please! An articulation supplement. Tucson, AZ: Communication Skill Builders; 1984.

- Abutalebi J. Neural aspects of second language representation and language control. Acta Psychologica. 2008;128(3):466–478. doi: 10.1016/j.actpsy.2008.03.014.

- Abutalebi J, Annoni JM, Zimine I, Pegna AJ, Seghier ML, Lee-Jahnke H, et al. Language control and lexical competition in bilinguals: An event-related fMRI study. Cerebral Cortex. 2008;18:1496–1505. doi: 10.1093/cercor/bhm182.

- Abutalebi J, Brambati SM, Annoni JM, Moro A, Cappa SF, Perani D. The neural cost of the auditory perception of language switches: An event-related fMRI study in bilinguals. Journal of Neuroscience. 2007;27:13762–13769. doi: 10.1523/JNEUROSCI.3294-07.2007.

- Abutalebi J, Cappa SF, Perani D. The bilingual brain as revealed by functional neuroimaging. Bilingualism: Language and Cognition. 2001;4(2):179–190.

- Abutalebi J, Green D. Bilingual language production: the neurocognition of language representation and control. Journal of Neurolinguistics. 2007;20:242–275.

- Abutalebi J, Miozzo A, Cappa SF. Do subcortical structures control language selection in bilinguals? Evidence from pathological language mixing. Neurocase. 2000;6:101–106.

- Ameel E, Storms G, Malt BC, Sloman SA. How bilinguals solve the naming problem. Journal of Memory and Language. 2005;53:60–80.

- Baldo J, Dronkers N. The role of inferior parietal and inferior frontal cortex in working memory. Neuropsychology. 2006;20:529–538. doi: 10.1037/0894-4105.20.5.529.

- Bialystok E. Bilingualism in development: Language, literacy, and cognition. New York: Cambridge University Press; 2001.

- Buckner RL, et al. Detection of cortical activation during averaged single trials of a cognitive task using functional magnetic resonance imaging. Proceedings of the National Academy of Sciences USA. 1996;93(25):14878–14883. doi: 10.1073/pnas.93.25.14878.

- Cantone KF, Muller N. Codeswitching at the interface of language-specific lexicons and the computational system. International Journal of Bilingualism. 2005;9(2):205–225.

- Caramazza A, Brones I. Semantic classification by bilinguals. Canadian Journal of Psychology. 1980;34(1):77–81.

- Chee MWL, Soon CS, Lee HL. Common and segregated neuronal networks for different languages revealed using functional magnetic resonance adaptation. Journal of Cognitive Neuroscience. 2003;15(1):85–97. doi: 10.1162/089892903321107846.

- Christoffels IK, Firk C, Schiller NO. Bilingual language control: An event-related brain potential study. Brain Research. 2007;1147:192–208. doi: 10.1016/j.brainres.2007.01.137.

- Crinion J, Turner R, Grogan A, Hanakawa T, Noppeney U, Devlin JT, et al. Language control in the bilingual brain. Science. 2006;312:1537–1540. doi: 10.1126/science.1127761. [DOI] [PubMed] [Google Scholar]

- Dehaene S, Dupoux E, Mehler J, Cohen L, Paulesu E, Perani D, et al. Anatomical variability in the cortical representation of first and second language. Neuroreport. 1997;8(17):3809–3815. doi: 10.1097/00001756-199712010-00030. [DOI] [PubMed] [Google Scholar]

- Dijkstra T, Van Heuven WJB. The architecture of the bilingual word recognition system: From identification to decision. Bilingualism: Language & Cognition. 2002;5(3):175–197. [Google Scholar]

- Dijkstra T, Van Heuven WJB, Grainger J. Simulating cross-language competition with the bilingual interactive activation model. Psychologica Belgica. 1998;38(3–4):177–196. [Google Scholar]

- Doctor EA, Klein D. Phonological processing in bilingual word recognition. In: Harris RJ, editor. Cognitive Processing in Bilinguals. Advances in Psychology. Vol. 83. Oxford, England: North-Holland; 1992. pp. 237–252. [Google Scholar]

- Dunn LM, Dunn LM. Peabody Picture Vocabulary Test-Revised. Circle Pines, MN: American Guidance Service; 1981. [Google Scholar]

- Emmorey K, Allen JS, Bruss J, Schenker N, Damasio H. A morphometric analysis of auditory brain regions in congenitally deaf adults. Proceedings of the National Academy of Sciences USA. 2003;100(17):10049–10054. doi: 10.1073/pnas.1730169100. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Emmorey K, Borinstein HB, Thompson R. In: Cohen J, et al., editors. Bimodal bilingualism: Code-blending between spoken English and American Sign Language; Proceedings of the 4th International Symposium on Bilingualism; Somerville, MA: Cascadilla Press; 2004. [Google Scholar]

- Emmorey K, Grabowski T, McCullough S, Ponto LL, Hichwa RD, Damasio H. The neural correlates of spatial language in English and American Sign Language: a PET study with hearing bilinguals. NeuroImage. 2005;24(3):832–840. doi: 10.1016/j.neuroimage.2004.10.008. [DOI] [PubMed] [Google Scholar]

- Fabbro F. The bilingual brain: Cerebral representation of languages. Brain & Language. 2001;79(2):211–222. doi: 10.1006/brln.2001.2481. [DOI] [PubMed] [Google Scholar]

- Green DW. Mental control of the bilingual lexico-semantic system. Bilingualism: Language & Cognition. 1998;1(2):67–81. [Google Scholar]

- Green DW, Crinion J, Price CJ. Convergence, degeneracy and control. Language Learning. 2006;56:99–125. doi: 10.1111/j.1467-9922.2006.00357.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Grosjean F. Processing mixed language: Issues, findings and models. In: de Groot AMB, Kroll JF, editors. Tutorials in bilingualism: Psycholinguistic perspectives. Mahwah, NJ: Lawrence Erlbaum; 1997. pp. 225–254. [Google Scholar]

- Grosjean F, Miller JL. Going in and out of languages: An example of bilingual flexibility. Psychological Science. 1994;5(4):201–206. [Google Scholar]

- Hernandez AE, Dapretto M, Mazziotta J, Bookheimer S. Language switching and language representation in Spanish-English bilinguals: An fMRI study. NeuroImage. 2001;14(2):510–520. doi: 10.1006/nimg.2001.0810. [DOI] [PubMed] [Google Scholar]

- Hernandez AE, Martinez A, Kohnert K. In search of the language switch: An fMRI study of picture naming in Spanish-English bilinguals. Brain and Language. 2000;73(3):421–431. doi: 10.1006/brln.1999.2278. [DOI] [PubMed] [Google Scholar]

- Holowka S, Brosseau-Lapre F, Petitto LA. Semantic and conceptual knowledge underlying bilingual babies’ first signs and words. Language Learning. 2002;52(2):205–262. [Google Scholar]

- Jasper HH. Report of the Committee on Methods of Clinical Examination in Electroencephalography. Electroencephalography and Clinical Neurophysiology. 1957;10:371–375. [Google Scholar]

- Johnson JS, Newport EL. Critical period effects in second language learning: The influence of maturational state on the acquisition of English as a second language. Cognitive Psychology. 1989;21(1):60–99. doi: 10.1016/0010-0285(89)90003-0. [DOI] [PubMed] [Google Scholar]

- Johnson-Frey SH, Newman-Norlund R, Grafton ST. A distributed left hemisphere network active during planning of everyday tool use skills. Cerebral Cortex. 2005;15(6):681–695. doi: 10.1093/cercor/bhh169. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kaplan E, Goodglass H, Weintraub S. Boston Naming Test. Philadelphia, PA: Lee & Febiger; 1983. [Google Scholar]

- Khateb A, Abutalebi J, Michel CM, Pegna AJ, Lee-Jahnke H, Annoni JM. Language selection in bilinguals: a spatio-temporal analysis of electric brain activity. International Journal of Psychophysiology. 2007;65(3):201–213. doi: 10.1016/j.ijpsycho.2007.04.008. [DOI] [PubMed] [Google Scholar]

- Kegl J. The Nicaraguan Sign Language Project: An overview. Signpost/International Sign Linguistics Quarterly. 1994;7(1):24–31. [Google Scholar]

- Kerkhofs R, Dijkstra T, Chwilla DJ, de Bruijn E. Testing a model for bilingual semantic priming with interlingual homographs: RT and N400 effects. Brain Research. 2006;1068(1):170–183. doi: 10.1016/j.brainres.2005.10.087. [DOI] [PubMed] [Google Scholar]

- Kim KHS, Relkin NR, Lee KM, Hirsch J. Distinct cortical areas associated with native and second languages. Nature. 1997;388(6638):171–174. doi: 10.1038/40623. [DOI] [PubMed] [Google Scholar]

- Kovelman I, Baker SA, Petitto LA. Bilingual and monolingual brains compared using fMRI: Is there a neurological signature of bilingualism? Journal of Cognitive Neuroscience. 2008;20(1):1–17. doi: 10.1162/jocn.2008.20011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kovelman I, Baker SA, Petitto LA. Age of bilingual language exposure as a new window into bilingual reading development. Bilingualism: Language & Cognition. doi: 10.1017/S1366728908003386. in press. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kovelman I, Shalinsky M, Berens MS, Petitto LA. Shining new light on the brain’s “Bilingual Signature:” A functional Near Infrared Spectroscopy investigation of semantic processing. NeuroImage. 2008;39(3):1457–1471. doi: 10.1016/j.neuroimage.2007.10.017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kroll JR, Sunderman G. Cognitive processes in second language learners and bilinguals: The development of lexical and conceptual representations. In: Doughty C, Long M, editors. The handbook of second language acquisition. Oxford, England: Blackwell; 2003. pp. 104–129. [Google Scholar]

- Lanza E. Can bilingual two-year-olds code-switch? Journal of Child Language. 1992;19(3):633–658. doi: 10.1017/s0305000900011600. [DOI] [PubMed] [Google Scholar]

- MacSwan J. Codeswitching and generative grammar: A critique of the MLF model and some remarks of “Modified Minimalism”. Bilingualism: Language and Cognition. 2005;8(1):1–22. [Google Scholar]

- Mariën P, Abutalebi J, Engelborghs S, De Deyn PP. Acquired subcortical bilingual aphasia in an early bilingual child: Pathophysiology of pathological language switching and language mixing. Neurocase. 2005;11:385–398. doi: 10.1080/13554790500212880. [DOI] [PubMed] [Google Scholar]

- McDonald JL. Grammaticality judgments in a second language: Influences of age of acquisition and native language. Applied Psycholinguistics. 2000;21(3):395–423. [Google Scholar]

- Meuter RFI, Allport A. Bilingual language switching in naming: Asymmetrical costs of language selection. Journal of Memory and Language. 1999;40(1):25–40. [Google Scholar]

- Monsell S, Matthews GH, Miller DC. Repetition of lexicalization across languages: A further test of the locus of priming. Quarterly Journal of Experimental Psychology: Human Experimental Psychology. 1992;44A(4):763–783. doi: 10.1080/14640749208401308. [DOI] [PubMed] [Google Scholar]

- Nee DE, Wager TD, Jonides J. Interference resolution: Insights from a meta-analysis of neuroimaging tasks. Cognitive, Affective, & Behavioral Neuroscience. 2007;7(1):1–17. doi: 10.3758/cabn.7.1.1. [DOI] [PubMed] [Google Scholar]

- Paradis J, Nicoladis E, Genesee F. Early emergence of structural constraints on code-mixing: Evidence from French-English bilingual children. Bilingualism: Language & Cognition. 2000;3(3):245–261. [Google Scholar]

- Paradis M. Bilingualism and aphasia. In: Whitaker H, Whitaker HA, editors. Studies in Neurolinguistics. Vol. 3. New York: Academic Press; 1977. pp. 65–121. [Google Scholar]

- Paradis M. The cognitive neuropsychology of bilingualism. In: de Groot AMB, editor. Tutorials in bilingualism: Psycholinguistic perspectives. Mahwah, NJ: Lawrence Erlbaum; 1997. pp. 331–354. [Google Scholar]

- Paradis J, Nicoladis E, Genesee F. Early emergence of structural constraints on code-mixing: Evidence from French-English bilingual children. Bilingualism: Language & Cognition. 2000;3(3):245–261. [Google Scholar]

- Penhune VB, Cismaru R, Dorsaint-Pierre R, Petitto LA, Zatorre RJ. The morphometry of auditory cortex in the congenitally deaf measured using MRI. NeuroImage. 2003;20(2):1215–1225. doi: 10.1016/S1053-8119(03)00373-2. [DOI] [PubMed] [Google Scholar]

- Perani D. The neural basis of language talent in bilinguals. Trends in Cognitive Science. 2005;9(5):211–213. doi: 10.1016/j.tics.2005.03.001. [DOI] [PubMed] [Google Scholar]

- Perani D, Abutalebi J, Paulesu E, Brambati S, Scifo P, Cappa SF, Fazio F. The role of age of acquisition and language usage in early, high-proficient bilinguals: an fMRI study during verbal fluency. Human Brain Mapping. 2003;19(3):170–182. doi: 10.1002/hbm.10110. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Perani D, Paulesu E, Galles NS, Dupoux E, Dehaene S, Bettinardi V, Cappa SF, Fazio F, Mehler J. The bilingual brain: Proficiency and age of acquisition of the second language. Brain. 1998;121(10):1841–1852. doi: 10.1093/brain/121.10.1841. [DOI] [PubMed] [Google Scholar]

- Perani D, Dehaene S, Grassi F, Cohen L, Cappa SF, Dupoux E, Fazio F, Mehler J. Brain processing of native and foreign languages. Neuroreport. 1996;7(15–17):2439–2444. doi: 10.1097/00001756-199611040-00007. [DOI] [PubMed] [Google Scholar]

- Petitto LA, Holowka S. Evaluating attributions of delay and confusion in young bilinguals: Special insights from infants acquiring a signed and a spoken language. Journal of Sign Language Studies. 2002;3(1):4–33. [Google Scholar]

- Petitto LA, Katerelos M, Levy BG, Gauna K, Tetreault K, Ferraro V. Bilingual signed and spoken language acquisition from birth: Implications for the mechanisms underlying early bilingual language acquisition. Journal of Child Language. 2001;28(2):453–496. doi: 10.1017/s0305000901004718. [DOI] [PubMed] [Google Scholar]

- Petitto LA, Kovelman I. The Bilingual Paradox: How signing-speaking bilingual children help us to resolve it and teach us about the brain’s mechanisms underlying all language acquisition. Learning Languages. 2003;8:5–18. [Google Scholar]

- Petitto LA, Zatorre RJ, Gauna K, Nikelski EJ, Dostie D, Evans AC. Speech-like cerebral activity in profoundly deaf people processing signed languages: Implications for the neural basis of human language. Proceedings of the National Academy of Sciences USA. 2000;97(25):13961–13966. doi: 10.1073/pnas.97.25.13961. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Poplack S. Sometimes I’ll start a sentence in English y termino en Espanol. Linguistics. 1980;18:581–616. [Google Scholar]

- Price CJ, Green DW, von Studnitz R. A functional imaging study of translation and language switching. Brain. 1999;122:2221–2235. doi: 10.1093/brain/122.12.2221. [DOI] [PubMed] [Google Scholar]

- Rodriguez-Fornells A, Rotte M, Heinze HJ, Noesselt T, Muente TF. Brain potential and functional MRI evidence for how to handle two languages with one brain. Nature. 2002;415(6875):1026–1029. doi: 10.1038/4151026a. [DOI] [PubMed] [Google Scholar]

- Senghas A. Nicaragua’s lessons for language acquisition. Signpost/International Sign Linguistics Quarterly. 1994;7(1):32–39. [Google Scholar]

- Senghas RJK, Kegl J. Social considerations in the emergence of Idioma de Signos Nicaraguense. Signpost/International Sign Linguistics Quarterly. 1994;7(1):40–46. [Google Scholar]

- Traxler CB. Measuring up to performance standards in reading and mathematics: Achievement of selected deaf and hard-of-hearing students in the national norming of the 9th Edition Stanford Achievement Test. Journal of Deaf Studies and Deaf Education. 2000;5:337–348. doi: 10.1093/deafed/5.4.337. [DOI] [PubMed] [Google Scholar]