Abstract

With many predictors, choosing an appropriate subset of the covariates is a crucial, and difficult, step in nonparametric regression. We propose a Bayesian nonparametric regression model for curve-fitting and variable selection. We use the smoothing spline ANOVA framework to decompose the regression function into interpretable main effect and interaction functions. Stochastic search variable selection via MCMC sampling is used to search for models that fit the data well. We also show that variable selection is highly sensitive to the choice of hyperparameters, and we develop a technique for selecting hyperparameters that control the long-run false positive rate. The method is used to build an emulator for a complex computer model for two-phase fluid flow.

Keywords: Bayesian hierarchical modeling, Nonparametric regression, Markov chain Monte Carlo, Smoothing spline ANOVA, Variable selection

1 Introduction

Nonparametric regression techniques have become a popular tool for analyzing complex computer model output. For example, we consider a two-phase fluid flow simulation study (Vaughn et al., 2000) carried out by Sandia National Labs as part of the 1996 compliance certification application for the Waste Isolation Pilot Plant (WIPP) in New Mexico. The computer model simulates the waste panel’s condition 10,000 years after the waste panel has been penetrated by a drilling intrusion. The simulation model uses several input variables describing various environmental conditions. The objectives are to predict waste pressure for new sets of environmental conditions and to determine which environmental factors have the largest effect on the response. Since the simulation model is computationally intensive, we would like to develop an emulator, i.e., a statistical model to replicate the output of the complex computer model, to address these objectives.

The nonparametric regression model for response $y_i$ is $y_i = \mu + f(x_{1i},\ldots,x_{pi}) + \epsilon_i$, $i = 1,\ldots,n$, where $\mu$ is the intercept, $f$ is an unknown function of the covariates $x_{1i},\ldots,x_{pi}$, and $\epsilon_i$ is the error. With many predictors, choosing an appropriate subset of the covariates is a crucial, and difficult, step in fitting a nonparametric regression model. Several methods exist for curve fitting and variable selection in multiple nonparametric regression. Multivariate adaptive regression splines (MARS; Friedman, 1991) is a stepwise procedure that selects variables and knots for a spline basis for each curve. However, stepwise selection is well known to be unstable and highly sensitive to small changes in the data because it is a discrete procedure (Breiman, 1995). To address this, Lin and Zhang (2006) propose the component selection and smoothing operator (COSSO) for smoothing spline analysis of variance models. The COSSO is a penalization technique that performs variable selection via continuous shrinkage of the norm of each functional component.

The Bayesian framework offers several potential advantages for nonparametric regression. For example, missing data and non-Gaussian likelihoods can easily be incorporated in the Bayesian model. Also, prediction is improved via Bayesian model averaging, and posterior model probabilities are natural measures of model uncertainty.

A common approach is to model computer output as a Gaussian process. For example, the “blind kriging” method of Joseph et al. (2008) assumes that the response is the sum of a mean trend and a Gaussian process, and performs variable selection on the mean trend, which is taken to be a sum of second-order polynomials and interactions. However, all potential predictors are included in the Gaussian process covariance, so blind kriging does not perform variable selection on the overall model.

In contrast, Linkletter et al. (2006) model the regression function $f$ as a $p$-dimensional Gaussian process whose covariance depends on the $p$ covariates. They perform variable selection on the overall model using stochastic search variable selection via MCMC sampling (e.g., George and McCulloch, 1993; Chipman, 1996; George and McCulloch, 1997; Mitchell and Beauchamp, 1998) to include or exclude variables from the covariance of the Gaussian process. While this method of variable selection improves prediction for complex computer model output, it is difficult to interpret the relative contribution of each covariate, or group of covariates, to the $p$-dimensional fitted surface. Also, their covariance function yields a model that includes all higher-order functional interactions among the important predictors; that is, their model cannot reduce to an additive model in which the response surface is a sum of univariate functions. Therefore, the functional relationship between a predictor and the outcome always depends on the values of all the other predictors included in the model. As a result, this model is well suited to a complicated response surface; however, in many cases estimation and prediction can be improved by assuming a simpler model.

Shively et al. (1999), for instance, propose a model for variable selection in additive nonparametric regression. They take an empirical Bayesian approach and give each main effect function an integrated Brownian motion prior. Wood et al. (2002) extend the work of Shively et al. (1999) to non-additive models. They again assume integrated Brownian motion priors for the main effect functions and model interactions between predictors as two-dimensional surfaces with thin plate spline priors. However, it is difficult to interpret the relative contributions of the main effect and interaction terms because the spans of these terms overlap. To perform model selection, they use data-based priors for the parameters that control the prior variance of the functional components, which allows a BIC approximation of the posterior probability of each model under consideration. This approach requires computing posterior summaries for every candidate model, which is infeasible with many predictors, especially when high-order interaction terms are considered. Gustafson (2000) also includes two-way interactions but, to ensure identifiability, main effects are not allowed in the model simultaneously with interactions, and each predictor may interact with at most one other predictor. Complex computer models often have many interaction terms, so this is a significant limitation.

In this paper, we propose a Bayesian model for variable selection and curve fitting in nonparametric multivariate regression. Our model uses the functional analysis of variance framework (Wahba, 1990; Wahba et al., 1995; Gu, 2002) to decompose the function $f$ into main effects $f_j$, two-way interactions $f_{jk}$, and so on, i.e.,

$$f(x_1,\ldots,x_p) = \sum_{j=1}^{p} f_j(x_j) + \sum_{j<k} f_{jk}(x_j, x_k) + \cdots \qquad (1)$$

Our Bayesian smoothing spline ANOVA (BSS-ANOVA) model is equipped with stochastic constraints that ensure each component is identified, so that its contribution to the overall fit can be studied independently. Rather than confining the regression functions to the span of a finite set of basis functions, as in Bayesian splines, we use a more general Gaussian process prior for each regression function.

We perform variable selection using stochastic search variable selection via MCMC sampling to search for models that fit the data well. The orthogonality of the functional ANOVA framework is particularly important when the objective is variable selection. For example, assume two variables have important main effects but their interaction is not needed. If the interaction is modeled haphazardly, so that its span includes the main effect spaces, the inclusion probability could be split between the model with main effects and no interaction and the model with the interaction alone, since both can give the same fit. In this case, the inclusion probabilities for the main effects and the interaction could be less than 1/2, and we would fail to identify the important terms. Because of the orthogonality, our model includes only interactions that explain features of the data that cannot be explained by the main effects alone. Also, due to the additive structure of our regression function, we can easily include categorical predictors, which are problematic for Gaussian process models (although Qian et al. (2008) suggest a way to incorporate categorical predictors into a GP model). Our model is also computationally efficient: we avoid enumerating all possible models, and we avoid inverting large matrices at each MCMC iteration. We show that stochastic search variable selection can be sensitive to the choice of hyperparameters, and we overcome this problem by specifying hyperparameters that control the long-run false positive rate. Bayesian model averaging is used for prediction and is shown to improve predictive accuracy.

The paper proceeds as follows. Sections 2 and 3 introduce the model. The MCMC algorithm for stochastic search variable selection is described in Section 4. Section 5 presents a brief simulation study comparing our model with other nonparametric regression procedures; our Bayesian model compares favorably with MARS, the COSSO, and Linkletter et al.’s method in terms of predictive performance and selection of important variables. Section 6 analyzes the WIPP data and illustrates the advantages of the Bayesian approach for quantifying uncertainty in variable selection. Section 7 concludes.

2 A Bayesian smoothing spline ANOVA (BSS-ANOVA) model

2.1 Simple nonparametric regression

For ease of presentation, we introduce the nonparametric model first in the single-predictor case and then extend to the multiple-predictor case in Section 2.2. The simple nonparametric regression model is

$$y_i = \mu + f(x_i) + \epsilon_i, \quad i = 1,\ldots,n, \qquad (2)$$

where $f$ is an unknown function of a single covariate $x_i \in [0,1]$ and $\epsilon_i \stackrel{iid}{\sim} N(0, \sigma^2)$. The regression function $f$ is typically restricted to a particular class of functions. We consider the subset of the $M$th-order Sobolev space that includes only functions that integrate to zero and have $M$ proper derivatives, i.e., $f \in \mathcal{F}_M$, where

| (3) |

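To ensure that each draw from $f$’s prior is a member of this space, we select a Gaussian process prior with $\mathrm{cov}(f(s), f(t)) = \sigma^2\tau^2 K_1(s,t)$, where the kernel is defined as

$$K_1(s,t) = \sum_{m=1}^{M+1} c_m\,\frac{B_m(s)B_m(t)}{(m!)^2} + (-1)^{M}\,\frac{B_{2M+2}(|s-t|)}{(2M+2)!}.$$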

Here $c_m > 0$ are known constants and $B_m$ is the $m$th Bernoulli polynomial. Wahba (1990) shows that each draw from this Gaussian process resides in $\mathcal{F}_M$ and that the posterior mode, assuming $c_m = \infty$ for $m \le M$ and $c_{M+1} = 1$, is the $(M+1)$st-order smoothing spline. Steinberg and Bursztyn (2004) give an additional interpretation of this kernel: for the same choices of $c_m$, this Gaussian process model is equivalent to a Bayesian trigonometric regression model with diffuse priors for the low-order polynomial trends, a proper Gaussian prior for the $(M+1)$st-degree polynomial, and independent Gaussian priors for the coefficients of the trigonometric basis functions, with variances that depend on the frequencies of the trigonometric functions.

For the remainder of the paper we select $M = 1$ and set $c \equiv c_1 = \cdots = c_{M+1}$. Draws from the prior are therefore continuously differentiable, with the path properties of integrated Brownian motion. As discussed in Section 3, variable selection requires $c < \infty$. In this kernel, the term $c\sum_{m=1}^{M+1} B_m(s)B_m(t)/(m!)^2$ controls the variability of the $(M+1)$st-degree polynomial trend, and $(-1)^M B_{2M+2}(|s-t|)/(2M+2)!$ is the stationary covariance of the deviation from the polynomial trend. In our analyses in Sections 5 and 6, the constant $c$ is set to 100 to give vague, yet proper, priors for the linear and quadratic trends. Our model thus essentially fits a quadratic response surface regression plus a remainder term, which is a zero-mean stationary Gaussian process constrained to be orthogonal to the quadratic trend. We have intentionally overparameterized with $\tau^2$ and $\sigma^2$ for reasons that will become clear in Section 3.
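As a concrete illustration, the NumPy sketch below evaluates $K_1$ for $M = 1$ and $c = 100$, assuming the Bernoulli-polynomial form $K_1(s,t) = c\,[B_1(s)B_1(t) + B_2(s)B_2(t)/4] - B_4(|s-t|)/24$, i.e., Wahba's kernel with all $c_m$ set equal. The function names `bernoulli_polys` and `k1_kernel` are hypothetical, not taken from the authors' code.

```python
import numpy as np


def bernoulli_polys(x):
    """Bernoulli polynomials B1, B2, B4 evaluated elementwise at x."""
    x = np.asarray(x, dtype=float)
    b1 = x - 0.5
    b2 = x**2 - x + 1.0 / 6.0
    b4 = x**4 - 2.0 * x**3 + x**2 - 1.0 / 30.0
    return b1, b2, b4


def k1_kernel(s, t, c=100.0):
    """Main-effect kernel K1(s, t) for M = 1 (assumed Bernoulli-polynomial form).

    Returns the len(s) x len(t) matrix with entries K1(s_i, t_j).
    """
    s = np.asarray(s, dtype=float)[:, None]
    t = np.asarray(t, dtype=float)[None, :]
    b1s, b2s, _ = bernoulli_polys(s)
    b1t, b2t, _ = bernoulli_polys(t)
    _, _, b4d = bernoulli_polys(np.abs(s - t))
    trend = b1s * b1t + b2s * b2t / 4.0   # sum_m B_m(s)B_m(t)/(m!)^2 for m = 1, 2
    stationary = -b4d / 24.0              # (-1)^M B_4(|s - t|)/4! with M = 1
    return c * trend + stationary


x = np.random.default_rng(0).uniform(size=40)
Sigma_1 = k1_kernel(x, x)                 # unscaled covariance matrix for one covariate
```

Because $\int_0^1 B_m(x)\,dx = 0$ for $m \ge 1$ and $B_4(x) = B_4(1-x)$, each column of $K_1$ averages to zero over $s \in [0,1]$, which is what makes prior draws integrate to zero.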

2.2 Multiple regression

The nonparametric multiple regression model for response $y_i$ is $y_i = \mu + f(x_{1i},\ldots,x_{pi}) + \epsilon_i$, where $x_{1i},\ldots,x_{pi} \in [0,1]$ are covariates, $f \in \mathcal{F}$ is the unknown function, and $\epsilon_i \stackrel{iid}{\sim} N(0, \sigma^2)$. To perform variable selection, we use the ANOVA decomposition of the space $\mathcal{F}$ into orthogonal subspaces, i.e.,

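$$\mathcal{F} = \bigoplus_{j=1}^{p}\mathcal{F}_j \;\oplus\; \bigoplus_{k<l}\left(\mathcal{F}_k \otimes \mathcal{F}_l\right) \;\oplus\; \cdots \qquad (4)$$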

where $\oplus$ is the direct sum, $\otimes$ is the direct product, and each $\mathcal{F}_j$ is given by (3) (see Wahba (1990) or Gu (2002) for more details). Assume that each $f_j$ is a Gaussian process with covariance $\sigma^2\tau_j^2 K_1(x_j, x_j')$ and that each $f_{kl}$ is a Gaussian process with covariance $\sigma^2\tau_{kl}^2 K_2(x_k, x_k', x_l, x_l')$, where

| (5) |

For large $c$, the final term $(c-1)K_P(x_{ki}, x_{ki'})K_P(x_{li}, x_{li'})$ gives a vague prior to the low-order bivariate polynomial trend. Using this kernel, $f_j \in \mathcal{F}_j$ and $f_{kl} \in \mathcal{F}_k \otimes \mathcal{F}_l$. This ensures that each draw from this space satisfies $\int_0^1 f_j(x_j)\,dx_j = 0$, which identifies the intercept. It also identifies the main effects by forcing the interactions to satisfy $\int_0^1 f_{kl}(x_k, x_l)\,dx_k = \int_0^1 f_{kl}(x_k, x_l)\,dx_l = 0$. These constraints allow a straightforward interpretation of each term’s effect.
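The identifying constraints can be checked numerically. The sketch below uses, as an illustrative assumption, the plain tensor-product kernel $K_1(s_k,t_k)K_1(s_l,t_l)$ built from a Bernoulli-polynomial $K_1$ with $M = 1$; the exact $K_2$ in (5) may weight the polynomial-by-polynomial part differently (the text above mentions a $(c-1)K_PK_P$ term). Integrating over $x_k$ with $x_l$ held fixed gives covariances of essentially zero, so interaction draws cannot absorb the main effects or the intercept. Function names are hypothetical.

```python
import numpy as np


def k1_kernel(s, t, c=100.0):
    """Assumed main-effect kernel for M = 1 (Bernoulli-polynomial form)."""
    s = np.asarray(s, dtype=float)[:, None]
    t = np.asarray(t, dtype=float)[None, :]
    d = np.abs(s - t)
    trend = (s - 0.5) * (t - 0.5) + (s**2 - s + 1 / 6) * (t**2 - t + 1 / 6) / 4.0
    stationary = -(d**4 - 2 * d**3 + d**2 - 1 / 30) / 24.0
    return c * trend + stationary


def tensor_kernel(sk, sl, tk, tl, c=100.0):
    """Illustrative interaction kernel K1(sk, tk) * K1(sl, tl); not necessarily (5).

    Rows index points (sk[i], sl[i]); columns index points (tk[j], tl[j]).
    """
    return k1_kernel(sk, tk, c) * k1_kernel(sl, tl, c)


# Midpoint grid over x_k in [0, 1], with x_l held fixed at 0.3.
grid = (np.arange(1000) + 0.5) / 1000
sl_fixed = np.full_like(grid, 0.3)
tk, tl = np.array([0.2, 0.7]), np.array([0.4, 0.9])
# Approximates cov(integral over s of f_kl(s, 0.3), f_kl(tk[j], tl[j])) for each j.
marginal_cov = tensor_kernel(grid, sl_fixed, tk, tl).mean(axis=0)
```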

Higher-order interactions can also be included, but these terms are difficult to interpret. We therefore combine all higher-order interactions into a single process. Let the higher-order interaction space be

| (6) |

where $A^C$ is the complement of $A$. The covariance of the Gaussian process $f_0 \in \mathcal{F}_0$ is

| (7) |

Defining the covariance this way ensures that $f_0$ is orthogonal to each main effect and interaction term.

The finite-dimensional model for the vector of observations $\mathbf{y} = (y_1,\ldots,y_n)'$ is

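$$\mathbf{y} = \boldsymbol{\mu} + \sum_{j=1}^{p}\mathbf{f}_j(\mathbf{x}_j) + \sum_{k<l}\mathbf{f}_{kl}(\mathbf{x}_k, \mathbf{x}_l) + \mathbf{f}_0(\mathbf{x}_1,\ldots,\mathbf{x}_p) + \boldsymbol{\varepsilon}, \qquad (8)$$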

where $\boldsymbol{\mu} = (\mu,\ldots,\mu)'$ is the intercept, $\mathbf{x}_j = (x_{j1},\ldots,x_{jn})'$ is the vector of observations of the $j$th covariate, $\mathbf{f}_j(\mathbf{x}_j)$ is the $j$th main effect function evaluated at the $n$ observations, $\mathbf{f}_{kl}(\mathbf{x}_k, \mathbf{x}_l)$ is the vectorized interaction, $\mathbf{f}_0(\mathbf{x}_1,\ldots,\mathbf{x}_p)$ captures higher-order interactions, and $\boldsymbol{\varepsilon} \sim N(\mathbf{0}, \sigma^2 I_n)$. We assume the intercept $\mu$ has a flat prior and that $\sigma^2 \sim \mathrm{InvGamma}(a/2, b/2)$. The priors for the main effect and interaction functions are defined through the kernels of Sections 2.1 and 2.2 as

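$$\mathbf{f}_j(\mathbf{x}_j) \sim N(\mathbf{0}, \sigma^2\tau_j^2\Sigma_j), \qquad (9)$$

$$\mathbf{f}_{kl}(\mathbf{x}_k, \mathbf{x}_l) \sim N(\mathbf{0}, \sigma^2\tau_{kl}^2\Sigma_{kl}), \qquad (10)$$

$$\mathbf{f}_0(\mathbf{x}_1,\ldots,\mathbf{x}_p) \sim N(\mathbf{0}, \sigma^2\tau_0^2\Sigma_0), \qquad (11)$$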

where the $(i,i')$ element of the covariance matrix $\Sigma_j$ is $K_1(x_{ji}, x_{ji'})$, the $(i,i')$ element of $\Sigma_{kl}$ is $K_2(x_{ki}, x_{ki'}, x_{li}, x_{li'})$, $\Sigma_0$ is defined similarly following (7), and $\tau_j$, $\tau_{kl}$, and $\tau_0$ are unknown, with priors given in Section 3. To help specify priors for $\tau_j$, $\tau_{kl}$, and $\tau_0$, we rescale $\Sigma_j$, $\Sigma_{kl}$, and $\Sigma_0$ to have trace $n$. After this standardization, $\sigma\tau_j$ ($\sigma\tau_{kl}$) can be thought of as the typical prior standard deviation of an element of $\mathbf{f}_j$ ($\mathbf{f}_{kl}$).
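After rescaling, the average diagonal element of $\Sigma_j$ is 1, so a typical element of $\mathbf{f}_j$ has prior variance $\sigma^2\tau_j^2$. The sketch below shows the rescaling and one prior draw from (9). The exponential-plus-constant matrix is only a stand-in covariance, not $K_1$, and the function names are hypothetical.

```python
import numpy as np


def rescale_to_trace_n(sigma):
    """Scale a covariance matrix so that its trace equals its dimension n."""
    n = sigma.shape[0]
    return sigma * (n / np.trace(sigma))


def draw_prior_component(sigma_j, sigma2, tau_j, rng):
    """Draw f ~ N(0, sigma2 * tau_j^2 * Sigma_j) using an eigendecomposition.

    eigh (rather than Cholesky) also copes with singular Sigma_j.
    """
    evals, evecs = np.linalg.eigh(sigma_j)
    evals = np.clip(evals, 0.0, None)          # drop tiny negative round-off
    z = rng.standard_normal(evals.shape[0])
    return np.sqrt(sigma2) * tau_j * (evecs @ (np.sqrt(evals) * z))


rng = np.random.default_rng(1)
x = rng.uniform(size=50)
# Stand-in PSD covariance (NOT K_1): exponential kernel plus a constant.
raw = 3.0 * np.exp(-np.abs(x[:, None] - x[None, :])) + 0.5
sigma_j = rescale_to_trace_n(raw)
f_j = draw_prior_component(sigma_j, sigma2=1.0, tau_j=0.5, rng=rng)
```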

2.3 Categorical predictors

Complex models often have categorical variables that represent different states or point to different submodels to be used in the analysis. The BSS-ANOVA framework is also amenable to such unordered categorical predictors. Assume $x_i \in \{1, 2, \ldots, G\}$ is categorical and $f(x_i) = \theta_{x_i}$, where $\theta_g$ is the effect of level $g$, $g = 1,\ldots,G$. To identify the intercept we enforce the sum-to-zero constraint $\sum_{g=1}^{G}\theta_g = 0$. This model can also be written in the kernel framework by taking $f$ to be a mean-zero Gaussian process with singular covariance $\mathrm{cov}(f(s), f(t)) = \sigma^2\tau^2 K_1(s,t)$, where the kernel is defined as $K_1(s,t) = K_N(s,t)$,

| (12) |

and $I(\cdot)$ is the indicator function. Note that with unordered categorical predictors we exclude the low-order polynomial trend, i.e., $K_P(s,t) = 0$ for all $s$ and $t$.
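A natural kernel matching this description, used below as an assumption rather than a transcription of (12), is $K_N(s,t) = I(s=t) - 1/G$: the covariance of independent unit-variance level effects after centering them to sum to zero. The sketch checks that draws satisfy the sum-to-zero constraint and that the covariance is singular (rank $G-1$), which is why generalized inverses appear in Section 4. Names are hypothetical.

```python
import numpy as np


def categorical_kernel(s, t, G):
    """Assumed kernel for an unordered factor with levels 1..G: I(s == t) - 1/G."""
    s = np.asarray(s)[:, None]
    t = np.asarray(t)[None, :]
    return (s == t).astype(float) - 1.0 / G


G = 3
levels = np.arange(1, G + 1)
K = categorical_kernel(levels, levels, G)      # covariance of (theta_1, ..., theta_G)
evals, evecs = np.linalg.eigh(K)
evals = np.where(evals > 1e-10, evals, 0.0)    # K is singular: one zero eigenvalue
rng = np.random.default_rng(2)
theta = evecs @ (np.sqrt(evals) * rng.standard_normal(G))

# Main effect at observed levels x = (1, 3, 3, 2): f(x_i) = theta_{x_i}.
x_obs = np.array([1, 3, 3, 2])
f_obs = theta[x_obs - 1]
```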

Interactions involving categorical predictors with the kernel given in (12) are handled no differently than interactions between continuous predictors. For example, assume $x_1 \in \{1,\ldots,G\}$ is categorical and $x_2 \in [0,1]$ is continuous. The kernel-based interaction is equivalent to the model $f_{12}(x_1, x_2) = h_{x_1}(x_2)$ for some $h_{x_1} \in \mathcal{F}_M$; that is, the effect of $x_2$ differs across the levels of $x_1$. An attractive feature of this kernel is that it enforces the restrictions $\int_0^1 h_{x_1}(x_2)\,dx_2 = 0$ for all $x_1 \in \{1,\ldots,G\}$ and $\sum_{g=1}^{G} h_g(x_2) = 0$ for all $x_2 \in [0,1]$, which separate the interaction from the main effects.

3 Variable selection

It is common in variable selection to represent the subset of covariates included in the model with indicator variables $\gamma_j$ and $\gamma_{kl}$, where $\gamma_j$ is one if the main effect for $x_j$ is in the model and zero otherwise, and $\gamma_{kl}$ is one if the interaction between $x_k$ and $x_l$ is in the model and zero otherwise. To avoid enumerating all possible models, stochastic search variable selection (e.g., George and McCulloch, 1993; Chipman, 1996; George and McCulloch, 1997; Mitchell and Beauchamp, 1998) assigns priors to the binary indicators and computes model probabilities using MCMC sampling. To perform variable selection in the nonparametric setting, we specify priors for the standard deviations $\tau_j$ and $\tau_{kl}$ in terms of the indicators $\gamma_j$ and $\gamma_{kl}$, so that the priors have positive mass at zero. Given that $\tau_j$ ($\tau_{kl}$) is zero and $c$ is finite, the curve $f_j$ ($f_{kl}$) is identically zero and the term is removed from the model. This approach differs slightly from the original formulation of George and McCulloch (1993), who give small but nonzero variance to negligible variables; setting the variance exactly to zero instead removes variables from the model completely.

Parameterization, identification, and prior selection for the hypervariances in Bayesian hierarchical models are notoriously problematic and an area of active research. After a comparative study of several commonly used priors, Gelman (2006) recommends either a uniform or a half-Cauchy prior on the standard deviation. Following this recommendation, we assume , where $\rho$ is the median of the half-Cauchy prior. The interaction standard deviations $\tau_{kl}$ are modeled similarly.
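The zero-inflated prior used in Section 4, $\tau_j = \gamma_j\eta_j$, is easy to simulate. The sketch assumes $\gamma_j \sim \mathrm{Bernoulli}(p)$ with a fixed inclusion probability `p_incl` (a hypothetical value chosen for illustration) and $\eta_j$ half-Cauchy with scale $\rho$. The median of $\eta_j$ is $\rho$ because $P(|C| < 1) = 1/2$ for a standard Cauchy variable $C$. The function name is hypothetical.

```python
import numpy as np


def sample_tau_prior(rho, p_incl, size, rng):
    """Draw tau = gamma * eta, gamma ~ Bernoulli(p_incl), eta ~ half-Cauchy(rho).

    p_incl is a hypothetical fixed inclusion probability used for illustration.
    """
    gamma = rng.uniform(size=size) < p_incl
    eta = rho * np.abs(rng.standard_cauchy(size=size))   # half-Cauchy, median rho
    return np.where(gamma, eta, 0.0)


rng = np.random.default_rng(3)
tau = sample_tau_prior(rho=0.5, p_incl=0.5, size=200_000, rng=rng)
```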

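Variable selection can be sensitive to the prior standard deviation. To illustrate this effect on model selection, first consider the simpler case of multiple linear regression with orthogonal covariates, i.e., $\mathbf{y} = \sum_{j=1}^{p}\gamma_j\mathbf{x}_j\beta_j + \boldsymbol{\epsilon}$, where $\gamma_j \stackrel{iid}{\sim} \mathrm{Bern}(0.5)$, $\boldsymbol{\beta} \sim N(\mathbf{0}, \sigma^2\tau^2 I_p)$, and $\boldsymbol{\epsilon} \sim N(\mathbf{0}, \sigma^2 I_n)$. Assuming $\sigma^2 \sim \mathrm{InvGamma}(a/2, b/2)$, the marginal posterior log odds of $\gamma_j = 1$ are approximately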

| (13) |

where $\hat\beta_j$ is the least squares estimate of $\beta_j$ and $\hat\sigma^2 = (\mathbf{y}'\mathbf{y} + b)/(n + a)$ is the posterior mode of $\sigma^2$. If $\tau = 0$, the log odds are zero for any value of $\hat\beta_j$; as $\tau$ goes to infinity, the log odds decline to negative infinity for any value of $\hat\beta_j$. Therefore, if $\tau$’s prior is chosen haphazardly, the influence of the data can be completely overwhelmed by the prior standard deviation.
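To see both limits numerically, the sketch uses a closed form that we derive under extra simplifying assumptions; it is not a transcription of (13). Assume orthonormal columns ($\mathbf{x}_j'\mathbf{x}_j = 1$), prior odds of 1, and $\sigma^2$ fixed at $\hat\sigma^2$. Then $\mathbf{x}_j'\mathbf{y} = \hat\beta_j$ is $N(0, \sigma^2(1+\tau^2))$ when $\gamma_j = 1$ and $N(0, \sigma^2)$ when $\gamma_j = 0$. The log odds are therefore $-\tfrac12\log(1+\tau^2) + \frac{\tau^2}{1+\tau^2}\frac{\hat\beta_j^2}{2\hat\sigma^2}$. The function name is hypothetical.

```python
import numpy as np


def log_odds_inclusion(beta_hat, sigma2_hat, tau):
    """Log posterior odds of gamma_j = 1 in a simplified orthonormal linear model.

    Assumes x_j'x_j = 1, beta_j ~ N(0, sigma2 * tau^2), prior odds 1, and sigma2
    fixed at sigma2_hat (our derivation for illustration, not eq. (13) itself).
    """
    shrink = tau**2 / (1.0 + tau**2)
    return -0.5 * np.log1p(tau**2) + shrink * beta_hat**2 / (2.0 * sigma2_hat)


# The same data summary (beta_hat = 3, sigma2_hat = 1) under increasingly vague priors.
taus = np.array([0.0, 1.0, 10.0, 1e3, 1e6])
odds = log_odds_inclusion(beta_hat=3.0, sigma2_hat=1.0, tau=taus)
```

With $\hat\beta_j = 3$ the data favor inclusion for moderate $\tau$, yet the same data favor exclusion once $\tau$ is large enough, which is the sensitivity described above.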

Given the subtle relationship between $\tau$ and the posterior of $\gamma_j$, it is difficult to choose a prior for $\tau$ that accurately depicts our prior model uncertainty. To alleviate this issue, we select priors for the standard deviations that give desirable long-run false positive rates. The marginal log odds for the univariate nonparametric model in Section 2.1 (analogous to (13) for linear regression) are approximately

| (14) |

Appendix A.1 shows that under the null distribution $\mathbf{y} \sim N(\mathbf{0}, \sigma^2 I_n)$,

| (15) |

where the expected value is taken with respect to $\mathbf{y}$. This suggests that $\tau$’s prior should be scaled by , e.g., we take . This is similar to the unit-information prior of Kass and Wasserman (1995), which uses -scaling, and to the approach of Ishwaran and Rao (2005), who use -scaling for the Bayesian linear regression model to give desirable frequentist properties. Note that because our prior depends on the sample size $n$, the procedure is not technically fully Bayesian; however, it could easily be made fully Bayesian by incorporating reliable prior information about $\tau$.

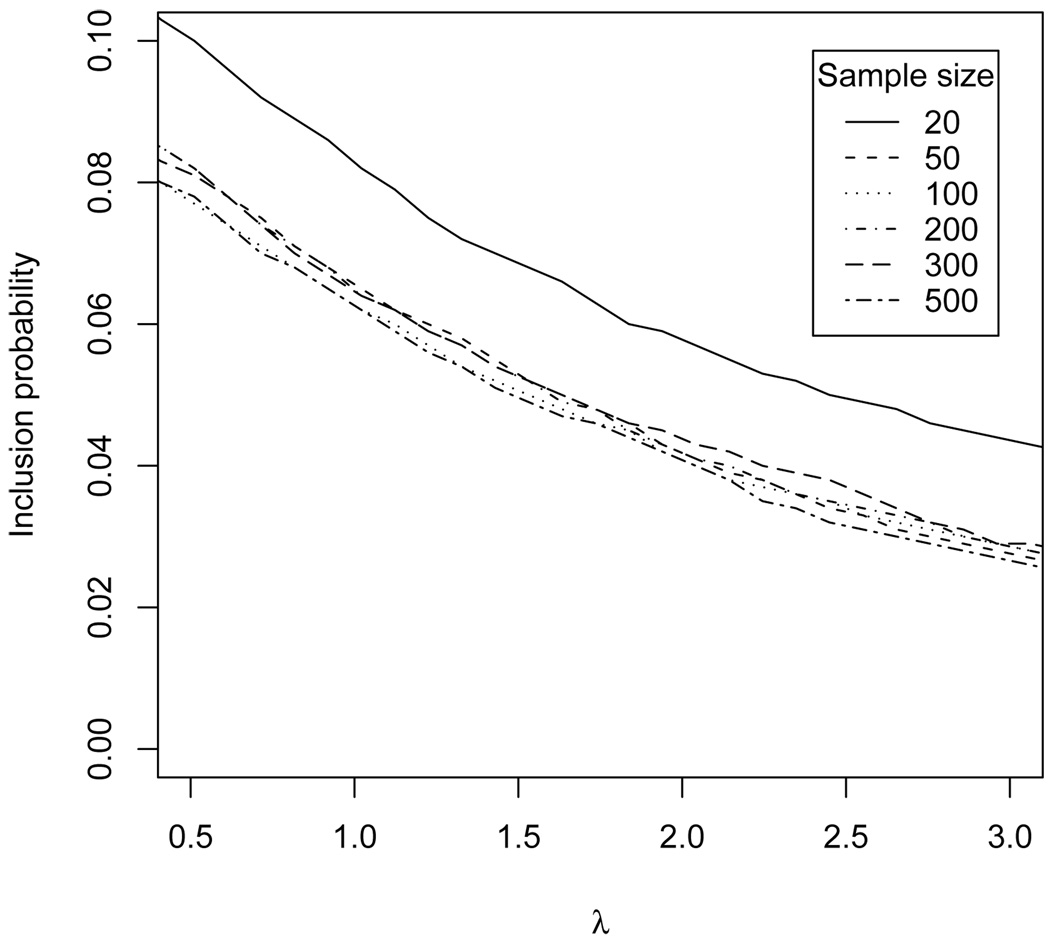

To select $\lambda$, we randomly generate 10,000 data sets $\mathbf{y}$ for various $n$, assuming $\mathbf{y} \sim N(\mathbf{0}, I_n)$. For each simulated data set, we compute $E(\pi \mid \mathbf{y})$. Since it is common to select a variable if $E(\pi \mid \mathbf{y}) > 0.5$ (e.g., Barbieri and Berger, 2004), Figure 1 shows the proportion of the 10,000 data sets that give $E(\pi \mid \mathbf{y}) > 0.5$ for each $n$ and $\lambda$. Once $\tau$’s prior is tuned to depend on $n$, the false positive rate remains stable for $n \ge 50$ and is around 0.05 for $\lambda = 2$. Although this result applies to the univariate model, we also use half-Cauchy priors with $\lambda = 2$ for each standard deviation in the multivariate model. The simulation study in Section 5 verifies that this prior controls the false positive rate in the multiple-predictor setting as well, even in the presence of correlated predictors.

Figure 1.

Plot of the probability (with respect to y’s null distribution) that E(π|y, λ) > 0.5 by λ.
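The calibration idea behind Figure 1 can be illustrated in the simplified orthonormal linear model, used here as a stand-in because the paper's nonparametric calculation is not reproduced. Under the null, $\hat\beta_j \sim N(0, \sigma^2)$. The share of null data sets whose inclusion probability exceeds 0.5 can then be estimated by Monte Carlo, as in Figure 1, or computed exactly as $P\{\chi^2_1 > (1+\tau^2)\tau^{-2}\log(1+\tau^2)\}$. The false positive rate changes substantially with $\tau$, which is why the prior scale has to be tuned. Function names are hypothetical.

```python
import math

import numpy as np


def inclusion_prob(beta_hat, sigma2, tau):
    """P(gamma_j = 1 | y) in the simplified orthonormal linear model, prior odds 1."""
    shrink = tau**2 / (1.0 + tau**2)
    log_odds = -0.5 * np.log1p(tau**2) + shrink * beta_hat**2 / (2.0 * sigma2)
    return 1.0 / (1.0 + np.exp(-log_odds))


def mc_false_positive_rate(tau, n_sims=10_000, sigma2=1.0, seed=0):
    """Monte Carlo share of null data sets whose inclusion probability exceeds 0.5."""
    rng = np.random.default_rng(seed)
    beta_hat = rng.normal(0.0, math.sqrt(sigma2), size=n_sims)   # null: beta_j = 0
    return float(np.mean(inclusion_prob(beta_hat, sigma2, tau) > 0.5))


def exact_false_positive_rate(tau):
    """Closed form P(chi^2_1 > (1 + tau^2) / tau^2 * log(1 + tau^2)) for tau > 0."""
    threshold = (1.0 + tau**2) / tau**2 * math.log1p(tau**2)
    return math.erfc(math.sqrt(threshold / 2.0))


for tau in [0.5, 2.0, 20.0]:
    print(tau, mc_false_positive_rate(tau), exact_false_positive_rate(tau))
```

As $\tau \to 0$ the rate approaches $P(\chi^2_1 > 1) \approx 0.32$, and it falls toward zero as $\tau$ grows, so no single unscaled choice is safe. The paper's remedy is the $n$-dependent half-Cauchy scale with $\lambda = 2$.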

4 MCMC algorithm

This section describes the algorithm used to draw MCMC samples from the posterior of the models defined in Sections 2 and 3. Gibbs sampling is used for $\mu$ and $\sigma^2$. The full conditionals for these parameters are

| (16) |

| (17) |

where

| (18) |

| (19) |

For categorical predictors the covariance matrices are singular, so we use generalized inverses.

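Let $\Theta_j$ denote all parameters in the model other than the $j$th main effect parameters $\mathbf{f}_j(\mathbf{x}_j)$ and $\tau_j$. Draws from $p(\mathbf{f}_j(\mathbf{x}_j), \tau_j \mid \Theta_j)$ are made by first integrating over $\mathbf{f}_j(\mathbf{x}_j)$ and drawing from $p(\tau_j \mid \Theta_j)$, and then sampling $\mathbf{f}_j(\mathbf{x}_j)$ given $\tau_j$ and $\Theta_j$. Integrating over $\mathbf{f}_j(\mathbf{x}_j)$ gives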

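$$p(\tau_j \mid \Theta_j) \propto |\Omega_j|^{-1/2}\exp\left(-\frac{\mathbf{z}_j'\Omega_j^{-1}\mathbf{z}_j}{2\sigma^2}\right) g(\tau_j \mid \lambda), \qquad (20)$$

where $\Omega_j = I_n + \tau_j^2\Sigma_j$, $\mathbf{z}_j = \mathbf{y} - \boldsymbol{\mu} - \mathbf{f}(\mathbf{x}_1,\ldots,\mathbf{x}_p) + \mathbf{f}_j(\mathbf{x}_j)$, and $g(\tau_j \mid \lambda)$ is the half-Cauchy density function. Samples are drawn from $p(\tau_j \mid \Theta_j)$ using adaptive rejection sampling with candidates taken from $\tau_j$’s prior. Note that we do not sample $\gamma_j$ or $\eta_j$ directly; rather, we directly sample the standard deviation $\tau_j = \gamma_j\eta_j$ under its zero-inflated half-Cauchy prior. Given $\tau_j$, the main effect curve has full conditional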

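$$\mathbf{f}_j(\mathbf{x}_j) \mid \tau_j, \Theta_j \sim N\left(\tau_j^2\Sigma_j(I_n + \tau_j^2\Sigma_j)^{-1}\mathbf{z}_j,\; \sigma^2\tau_j^2\Sigma_j(I_n + \tau_j^2\Sigma_j)^{-1}\right), \qquad (21)$$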

and is updated using Gibbs sampling. This approach is also used to update the interaction curves.
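Integrating $\mathbf{f}_j$ out of $\mathbf{z}_j = \mathbf{f}_j(\mathbf{x}_j) + \boldsymbol{\varepsilon}$ gives $\mathbf{z}_j \sim N(\mathbf{0}, \sigma^2(I_n + \tau_j^2\Sigma_j))$. The unnormalized log full conditional of $\tau_j > 0$ is therefore a Gaussian log marginal likelihood plus the log half-Cauchy density. The sketch below evaluates it from a one-time eigendecomposition of $\Sigma_j$. The projection $\Gamma_j'\mathbf{z}_j$ is computed once and reused for every candidate $\tau_j$, so each evaluation is a pair of $O(n)$ sums. The point mass at $\tau_j = 0$ and the rejection step used in the paper are not shown, and all names are hypothetical.

```python
import numpy as np


def log_marginal_tau(tau, u, evals, sigma2):
    """log N(z; 0, sigma2 * (I + tau^2 Sigma)) given u = Gamma' z and Sigma's eigenvalues.

    Sigma = Gamma diag(evals) Gamma' with Gamma orthonormal (all n pairs kept).
    """
    v = sigma2 * (1.0 + tau**2 * evals)      # eigenvalues of the marginal covariance
    return -0.5 * (u.size * np.log(2.0 * np.pi) + np.sum(np.log(v)) + np.sum(u**2 / v))


def log_half_cauchy(tau, scale):
    """Log density of a half-Cauchy(scale) distribution at tau >= 0."""
    return np.log(2.0 / (np.pi * scale)) - np.log1p((tau / scale) ** 2)


def log_post_tau(tau, u, evals, sigma2, scale):
    """Unnormalized log full conditional of tau_j on tau_j > 0."""
    return log_marginal_tau(tau, u, evals, sigma2) + log_half_cauchy(tau, scale)


rng = np.random.default_rng(4)
n = 30
A = rng.standard_normal((n, n))
Sigma = A @ A.T / n                          # stand-in covariance matrix
evals, evecs = np.linalg.eigh(Sigma)         # computed once, outside the MCMC loop
z = rng.standard_normal(n)
u = evecs.T @ z                              # reused for every candidate tau
grid = np.linspace(0.05, 3.0, 60)
log_post = np.array([log_post_tau(t, u, evals, 1.3, scale=0.5) for t in grid])
```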

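Inverting the $n \times n$ matrix $I_n + \tau_j^2\Sigma_j$ at each MCMC iteration can be cumbersome for large data sets. However, matrix inversion can be avoided by computing the spectral decomposition of $\Sigma_j$ outside the MCMC algorithm. Let $\Sigma_j = \Gamma_j D_j \Gamma_j'$, where $\Gamma_j$ is the $n \times n$ orthonormal eigenvector matrix and $D_j$ is the diagonal matrix of eigenvalues $d_{j1} \ge \cdots \ge d_{jn}$. Then $\mathbf{f}_j(\mathbf{x}_j)$ can be updated by drawing $\mathbf{b}_j \sim N\left(\tau_j^2 D_j(I_n + \tau_j^2 D_j)^{-1}\Gamma_j'\mathbf{z}_j,\; \sigma^2\tau_j^2 D_j(I_n + \tau_j^2 D_j)^{-1}\right)$ and setting

$$\mathbf{f}_j(\mathbf{x}_j) = \Gamma_j\mathbf{b}_j. \qquad (22)$$

This sampling procedure requires inverting only the diagonal matrix $I_n + \tau_j^2 D_j$.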

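In practice, retaining all $n$ eigenvector/eigenvalue pairs in the spectral decomposition of $\Sigma_j$ may be unnecessary. A reduced model replaces $\Sigma_j$ with $\Gamma_{jK} D_{jK}\Gamma_{jK}'$, where $\Gamma_{jK}$ is the $n \times K$ matrix formed by the first $K$ columns of $\Gamma_j$ and $D_{jK}$ is the diagonal matrix with diagonal elements $d_{j1},\ldots,d_{jK}$. Analogous simplifications may be used for the interaction curves.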
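The spectral update in (21)–(22), including the reduced-rank variant, can be sketched as follows. The sketch assumes $\Sigma_j$ was eigendecomposed once before sampling. With weights $w_i = \tau_j^2 d_i/(1+\tau_j^2 d_i)$, the draw is $\mathbf{b}_j \sim N(w \odot \Gamma_{jK}'\mathbf{z}_j,\ \sigma^2\,\mathrm{diag}(w))$, so only a diagonal matrix is involved. Passing `sigma2=0` returns the conditional mean, which makes the identity $\tau_j^2\Sigma_j(I_n+\tau_j^2\Sigma_j)^{-1}\mathbf{z}_j = \Gamma_j\,\mathrm{diag}(w)\,\Gamma_j'\mathbf{z}_j$ easy to check. The function name is hypothetical.

```python
import numpy as np


def draw_component_spectral(z, evecs, evals, tau, sigma2, rng, K=None):
    """One Gibbs draw of f_j = Gamma_K b_j using a precomputed eigendecomposition.

    b_j ~ N(w * (Gamma_K' z), sigma2 * w) with w = tau^2 d / (1 + tau^2 d).
    K=None keeps all n eigenpairs; otherwise the K largest are kept.
    """
    order = np.argsort(evals)[::-1]              # eigh returns ascending eigenvalues
    keep = order if K is None else order[:K]
    gamma_k = evecs[:, keep]
    d = np.clip(evals[keep], 0.0, None)
    w = tau**2 * d / (1.0 + tau**2 * d)          # diagonal shrinkage weights
    b = w * (gamma_k.T @ z) + np.sqrt(sigma2 * w) * rng.standard_normal(d.size)
    return gamma_k @ b


rng = np.random.default_rng(5)
n = 25
A = rng.standard_normal((n, n))
Sigma = A @ A.T / n                              # stand-in covariance matrix
evals, evecs = np.linalg.eigh(Sigma)
z = rng.standard_normal(n)
f_full = draw_component_spectral(z, evecs, evals, tau=0.8, sigma2=1.0, rng=rng)
f_red = draw_component_spectral(z, evecs, evals, tau=0.8, sigma2=1.0, rng=rng, K=5)
```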

MCMC sampling is carried out in the freely available software package R (R Development Core Team, 2006). We generate 20,000 samples from the posterior and discard the first 5,000 as burn-in. Convergence is monitored by inspecting trace plots of the deviance and several of the variance parameters. The computational cost of each MCMC iteration is on the order of (number of terms in the model) × $K^2$, so computation becomes more time-consuming as the number of interactions increases. For the WIPP data in Section 6, the two-way interaction model runs in a few hours on an ordinary PC.

We compare models using the deviance information criterion (DIC) of Spiegelhalter et al. (2002), defined as $\mathrm{DIC} = \bar{D} + p_D$, where $\bar{D}$ is the posterior mean of the deviance, $p_D = \bar{D} - \hat{D}$ is the effective number of parameters, and $\hat{D}$ is the deviance evaluated at the posterior mean of the parameters in the likelihood. The model’s fit is measured by $\bar{D}$ and its complexity by $p_D$; models with smaller DIC are preferred.
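In practice, $\bar{D}$ is the average deviance over the retained MCMC draws, and $\hat{D}$ is obtained by plugging posterior means into the likelihood. The following is a minimal sketch for a Gaussian likelihood; the helper names are hypothetical.

```python
import numpy as np


def gaussian_deviance(y, mean, sigma2):
    """Deviance -2 log L for y with independent N(mean, sigma2) errors."""
    r = np.asarray(y, dtype=float) - np.asarray(mean, dtype=float)
    return r.size * np.log(2.0 * np.pi * sigma2) + np.sum(r**2) / sigma2


def dic(deviance_draws, deviance_at_posterior_mean):
    """Return (DIC, pD) with pD = Dbar - Dhat and DIC = Dbar + pD."""
    d_bar = float(np.mean(deviance_draws))
    p_d = d_bar - deviance_at_posterior_mean
    return d_bar + p_d, p_d


print(dic([10.0, 12.0, 14.0], 11.0))   # prints (13.0, 1.0)
```

For model (8), each draw's deviance would use the mean $\boldsymbol{\mu}$ plus the sampled component vectors together with that draw's $\sigma^2$.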

5 Simulation study

In this section we conduct a simulation study comparing the BSS-ANOVA model described in Section 2 with MARS, the COSSO, and the Gaussian process model of Linkletter et al. (2006). MARS analyses are done in R using the “polymars” function in the “polspline” package. The Gaussian process model of Linkletter et al. assumes that $f(x_1,\ldots,x_p)$ is multivariate normal with mean $\mu$ and covariance

| (23) |

The prior for each correlation parameter $\rho_k$ is a mixture of a Uniform(0,1) distribution and a point mass at one, with the point mass having prior probability 0.25. If $\rho_k = 1$, $x_k$ does not appear in the covariance and is effectively removed from the model.

5.1 Setting

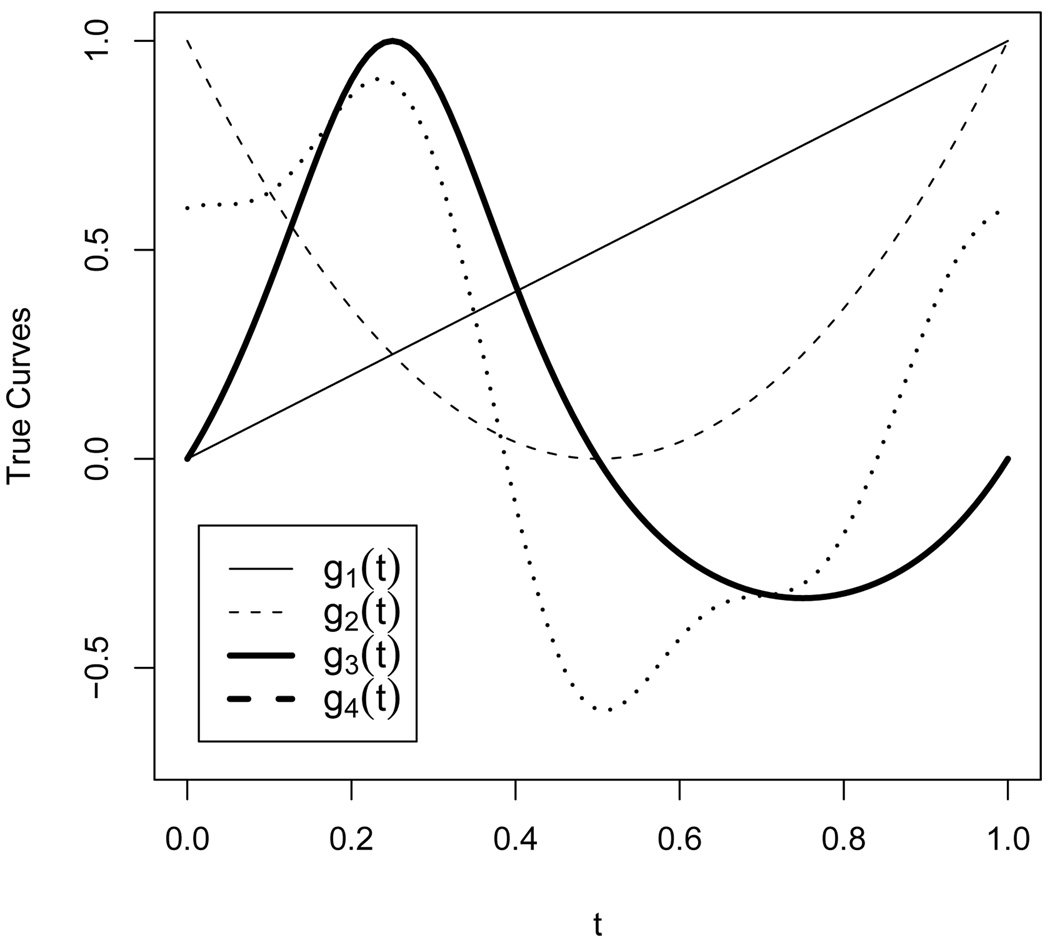

Data are generated from the underlying models in Table 1, with 50 simulated data sets for each simulation scenario. Following Lin and Zhang (2006), we specify models using four building-block functions (plotted in Figure 2):

$g_1(t) = t$

$g_2(t) = (2t - 1)^2$

$g_3(t) = \sin(2\pi t)/(2 - \sin(2\pi t))$

$g_4(t) = 0.1\sin(2\pi t) + 0.2\cos(2\pi t) + 0.3\sin^2(2\pi t) + 0.4\cos^3(2\pi t) + 0.5\sin^3(2\pi t)$.

Table 1.

Simulation study design.

| Design | n | p | σ (error SD) | f(x1, …, xp) |
|---|---|---|---|---|
| 1 | 100 | 10 | 2.28 | 5g1(x1) + 3g2(x2) + 4g3(x3) + 6g4(x4) |
| 2 | 100 | 4 | 2.28 | 5g1(x1) + 3g2(x2) + 4g3(x1x2x3) |
| 3 | 100 | 6 | 2.28 | 5g1(x1) + 3g2(x2) + 4g3(x3) + 6g4(x4) + 4g3(x1x2) |

Figure 2.

Plots of the true functions used in the simulation study.
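The four building-block functions and Design 1 of Table 1 can be simulated as follows. This is a sketch assuming independent Uniform(0,1) covariates and Gaussian noise with standard deviation σ from Table 1; the function names are hypothetical.

```python
import numpy as np

TWO_PI = 2.0 * np.pi


def g1(t):
    return t


def g2(t):
    return (2.0 * t - 1.0) ** 2


def g3(t):
    return np.sin(TWO_PI * t) / (2.0 - np.sin(TWO_PI * t))


def g4(t):
    s, c = np.sin(TWO_PI * t), np.cos(TWO_PI * t)
    return 0.1 * s + 0.2 * c + 0.3 * s**2 + 0.4 * c**3 + 0.5 * s**3


def simulate_design1(n=100, p=10, sigma=2.28, rng=None):
    """Design 1 of Table 1 with independent Uniform(0, 1) covariates.

    Returns the covariates, the true mean curve f, and the noisy response y.
    """
    rng = np.random.default_rng() if rng is None else rng
    x = rng.uniform(size=(n, p))
    f = 5 * g1(x[:, 0]) + 3 * g2(x[:, 1]) + 4 * g3(x[:, 2]) + 6 * g4(x[:, 3])
    y = f + sigma * rng.standard_normal(n)
    return x, f, y


x, f, y = simulate_design1(rng=np.random.default_rng(7))
```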

The covariates $x_1,\ldots,x_p$ are generated on the interval [0,1] using three covariance structures: independence, compound symmetry (CS), and autoregressive (AR). In the independence case, the covariates are independent Uniform(0,1) variables. To draw covariates with a compound symmetric covariance, we sample $w_0,\ldots,w_p$ as independent Uniform(0,1) variables and define $x_j = (w_j + t w_0)/(1 + t)$, which gives $\mathrm{corr}(x_j, x_{j'}) = t^2/(1 + t^2)$ for any pair $(j, j')$. The autoregressive covariates are generated by sampling $w_1,\ldots,w_p$ as independent Normal(0,1) variables and defining $x_1 = w_1$ and $x_j = \rho x_{j-1} + (1 - \rho^2)^{1/2} w_j$ for $j = 2,\ldots,p$, which gives $\mathrm{corr}(x_j, x_{j'}) = \rho^{|j-j'|}$. These covariates are then trimmed to $[-2.5, 2.5]$ and scaled to [0,1].
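The two correlated designs can be generated as below. This sketch interprets “trimmed” as clipping to $[-2.5, 2.5]$ and scales linearly via $(x + 2.5)/5$; both readings are assumptions, as are the function names. With $t = 1$ the compound-symmetric correlation is $t^2/(1+t^2) = 0.5$.

```python
import numpy as np


def cs_covariates(n, p, t, rng):
    """Compound-symmetric covariates x_j = (w_j + t * w_0) / (1 + t), w ~ Uniform(0, 1)."""
    w = rng.uniform(size=(n, p + 1))
    return (w[:, 1:] + t * w[:, [0]]) / (1.0 + t)


def ar_covariates(n, p, rho, rng):
    """AR(1) covariates on the normal scale, clipped to [-2.5, 2.5], scaled to [0, 1].

    Clipping is our interpretation of "trimmed"; it is an assumption.
    """
    w = rng.standard_normal(size=(n, p))
    x = np.empty_like(w)
    x[:, 0] = w[:, 0]
    for j in range(1, p):
        x[:, j] = rho * x[:, j - 1] + np.sqrt(1.0 - rho**2) * w[:, j]
    return (np.clip(x, -2.5, 2.5) + 2.5) / 5.0


rng = np.random.default_rng(6)
x_cs = cs_covariates(20_000, 3, t=1.0, rng=rng)
x_ar = ar_covariates(20_000, 4, rho=0.5, rng=rng)
```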

The methods are compared in terms of prediction accuracy and variable selection. For each data set and method we compute

| (24) |

where $f$ is the true mean curve, $\hat{f}$ is the estimated value (for the Bayesian methods, the posterior mean averaged over all models), $x_i$, $i = 1,\ldots,n$, are the observed design points, and $z_j$, $j = 1,\ldots,1000$, are unobserved locations drawn independently from the covariate distribution.

We also record the true positive and false positive rates for each method. The true (false) positive rate is computed as the proportion of the important (unimportant) variables included for each data set, averaged over all simulated data sets. A variable is deemed to be included in the BSS-ANOVA model if its posterior inclusion probability is greater than 0.5. Linkletter et al. use an added-variable method to select important variables at a given Type I error level. For computational convenience, we instead deem a covariate to be in their model if the posterior median of $\rho_k$ is less than 0.5, which gives a Type I error of approximately 0.05 in the simulations below. We also tune MARS’s generalized cross-validation penalty to give a Type I error near 0.05 (gcv = 2.5 for design 1 and gcv = 2 for designs 2 and 3).
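The rate computation just described can be written compactly. In this sketch, each row of `incl_prob` holds the posterior inclusion probabilities for one simulated data set, and a variable counts as selected when its probability exceeds 0.5. The function name is hypothetical.

```python
import numpy as np


def selection_rates(incl_prob, truly_active, threshold=0.5):
    """Average true- and false-positive rates over simulated data sets.

    incl_prob: (n_datasets, p) array of posterior inclusion probabilities.
    truly_active: length-p boolean mask marking variables in the true model.
    """
    selected = np.asarray(incl_prob) > threshold
    active = np.asarray(truly_active, dtype=bool)
    tpr = selected[:, active].mean(axis=1).mean()    # per data set, then averaged
    fpr = selected[:, ~active].mean(axis=1).mean()
    return float(tpr), float(fpr)


probs = np.array([[0.9, 0.6, 0.2, 0.7],
                  [0.4, 0.8, 0.1, 0.3]])
print(selection_rates(probs, [True, True, False, False]))   # prints (0.75, 0.25)
```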

Main-effects-only models are used for design 1 for MARS, the COSSO, and the BSS-ANOVA model; all possible two-way interactions are included as candidates for the other designs. The $f_0$ component is included in all BSS-ANOVA fits.

5.2 Results

For each simulation design, the BSS-ANOVA prediction MSE is substantially smaller than that of MARS and the COSSO (Table 2a). Although MARS is able to mimic many of the important features of the true curves, its piecewise linear fit does not match the smooth true curves in Figure 2. As also shown by Lin and Zhang (2006), the COSSO improves on MARS. Although the fitted curves from the COSSO are often similar to those of the Bayesian model, the Bayesian model achieves smaller MSE through model averaging. It may also be possible to improve the performance of the frequentist methods using non-Bayesian model averaging such as bagging (Breiman, 1996).

Table 2.

Results of the simulation study. PMSE is reported as the mean (standard error) over the simulated data sets for each simulation setting. The true (false) positive rate is the proportion of the important (unimportant) variables included for each data set, averaged over all simulated data sets. A variable is deemed to be included in the BSS-ANOVA model if its posterior inclusion probability is greater than 0.5.

(a) Prediction mean squared error (PMSE)

| Design | Correlation of the predictors | MARS | COSSO | BSS-ANOVA | Linkletter |
|---|---|---|---|---|---|
| 1 | Ind | 3.23 (0.28) | 2.33 (0.13) | 1.67 (0.08) | 3.50 (0.12) |
| 1 | CS (t=1) | 7.60 (0.83) | 6.08 (0.40) | 4.11 (0.27) | 7.39 (0.24) |
| 1 | AR (ρ=0.5) | 5.86 (0.44) | 5.37 (0.33) | 3.72 (0.18) | 6.38 (0.22) |
| 2 | Ind | 2.26 (0.08) | 1.68 (0.04) | 1.63 (0.04) | 1.40 (0.05) |
| 3 | Ind | 5.03 (0.38) | 4.79 (0.23) | 2.72 (0.09) | 4.50 (0.08) |

(b) Inclusion rate for variables not in the true model (false positive rate)

| Design | Correlation of the predictors | MARS | COSSO | BSS-ANOVA | Linkletter |
|---|---|---|---|---|---|
| 1 | Ind | 0.04 | 0.06 | 0.03 | 0.03 |
| 1 | CS (t=1) | 0.04 | 0.13 | 0.03 | 0.08 |
| 1 | AR (ρ=0.5) | 0.03 | 0.12 | 0.05 | 0.06 |
| 2 | Ind | – | – | – | – |
| 3 | Ind | 0.04 | 0.13 | 0.10 | 0.11 |

(c) Inclusion rate for variables in the true model (true positive rate)

| Design | Correlation of the predictors | MARS | COSSO | BSS-ANOVA | Linkletter |
|---|---|---|---|---|---|
| 1 | Ind | 0.78 | 0.91 | 0.91 | 0.81 |
| 1 | CS (t=1) | 0.74 | 0.83 | 0.79 | 0.80 |
| 1 | AR (ρ=0.5) | 0.75 | 0.82 | 0.78 | 0.80 |
| 2 | Ind | – | – | – | – |
| 3 | Ind | 0.67 | 0.77 | 0.82 | 0.89 |

The BSS-ANOVA model also maintains the nominal false positive rate: in all simulations with reported inclusion rates, 3–10% of the truly uninformative variables are included in the model (Table 2b), supporting the choice of hyperparameters in Section 3. (Inclusion rates are not given for design 2 because it does not fall within our BSS-ANOVA model, which does not include three-way interactions.) To further check the hyperparameter selection, we also simulated 50 data sets from the null model with $p = 10$ unimportant predictors and $\sigma = 2.28$ (not shown in Table 2); the false selection rate was no more than 7.5% for independent, CS, or AR(1) covariates. Also, despite the potential effects of concurvity (Gu, 1992; Gu, 2004), the nonparametric analogue of multicollinearity, the BSS-ANOVA model identifies truly important predictors at a high rate even with correlated predictors.

The BSS-ANOVA model also outperforms Linkletter et al.’s method for designs 1 and 3. These designs exclude some of the interactions involving the important main effects, so Linkletter et al.’s full-interaction model is not appropriate. Linkletter et al.’s method does perform well for design 2, in which every important variable appears in the three-way interaction. This illustrates that Linkletter et al.’s method is preferred if the response surface is a complicated function of high-order interactions among all of the important predictors, whereas the proposed method is likely to perform well if the response surface is a sum of simple univariate and bivariate functions.

6 Analysis of the WIPP data

In this section we analyze the WIPP data described in Section 1. The outcome variable of interest is the cumulative brine flow (m³) into the waste repository at 10,000 years for a drilling intrusion at 1,000 years that penetrates the repository and an underlying region of pressurized brine (an E1 intrusion in the terminology of Helton et al., 2000). The four main pathways by which brine enters the repository are flow through the anhydrite marker beds, drainage from the disturbed rock zone, flow down the intruding borehole from overlying formations, and brine flow up the borehole from a pressurized brine pocket. There are $n = 300$ observations, and we include $p = 11$ possible predictors. The predictors in the two-phase fluid flow model describe various environmental conditions; they are described briefly in Appendix A.2 and in detail in Vaughn et al. (2000). All of the predictors are continuous except for the pointer variable for microbial degradation of cellulose (WMICDFLG), which has three levels: (1) no microbial degradation of cellulose, (2) microbial degradation of only cellulose, and (3) microbial degradation of cellulose, plastic, and rubber.

We compare the BSS-ANOVA model with two-way interactions to the model of Linkletter et al. (2006). It is difficult to incorporate categorical predictors in Linkletter et al.’s Gaussian process model, so to facilitate the comparison we order the three categories of microbial degradation of cellulose by their within-level mean response and treat the ordered variable as continuous. The inclusion probabilities in Table 3 for the BSS-ANOVA main effects are fairly similar to those for Linkletter et al.’s model. Five variables have posterior inclusion probabilities equal to 1.00 under both models: anhydrite permeability (ANHPRM), borehole permeability (BHPERM), bulk compressibility of the brine pocket (BPCOMP), halite porosity (HALPOR), and microbial degradation of cellulose (WMICDFLG). This set of important variables is consistent with previous analyses of this model using stepwise regression approaches (Helton, 2000; Storlie, 2007).

Table 3.

Comparison of variable importance for the WIPP data. “Inc. Prob.” is the posterior inclusion probability and “L2 norm” is the posterior 95% interval of the term’s L2 norm. A dash indicates that the quantity is not available for that model.

| Term | BSS-ANOVA Inc. Prob. | BSS-ANOVA L2 norm | Linkletter et al. Inc. Prob. | BSS-ANOVA best model L2 norm |
|---|---|---|---|---|
| ANHPRM | 1.00 | (0.43, 1.10) | 1.00 | (0.41, 0.89) |
| BHPRM | 1.00 | (1.59, 3.13) | 1.00 | (1.81, 2.88) |
| BPCOMP | 1.00 | (0.78, 1.67) | 1.00 | (0.83, 1.45) |
| BPPRM | 0.08 | (0.00, 0.03) | 0.01 | – |
| HALPOR | 1.00 | (0.56, 1.67) | 1.00 | (0.58, 1.09) |
| HALPRM | 0.46 | (0.00, 0.10) | 0.03 | – |
| SHPRMCLY | 0.28 | (0.00, 0.07) | 0.01 | – |
| SHPRMSAP | 0.12 | (0.00, 0.03) | 0.07 | – |
| SHPRMHAL | 0.11 | (0.00, 0.04) | 0.00 | – |
| SHRBRSAT | 0.66 | (0.00, 0.14) | 0.03 | (0.01, 0.13) |
| WMICDFLG | 1.00 | (0.66, 1.55) | 1.00 | (0.80, 1.57) |
| BPCOMP × WMICDFLG | 1.00 | (0.41, 0.99) | – | (0.44, 0.92) |
| BPCOMP × BHPRM | 0.93 | (0.00, 0.21) | – | (0.04, 0.34) |
| SHPRMSAP × WMICDFLG | 0.85 | (0.00, 0.18) | – | (0.02, 0.15) |
| SHRGSSAT × SHPRMHAL | 0.60 | (0.00, 0.10) | – | (0.01, 0.12) |

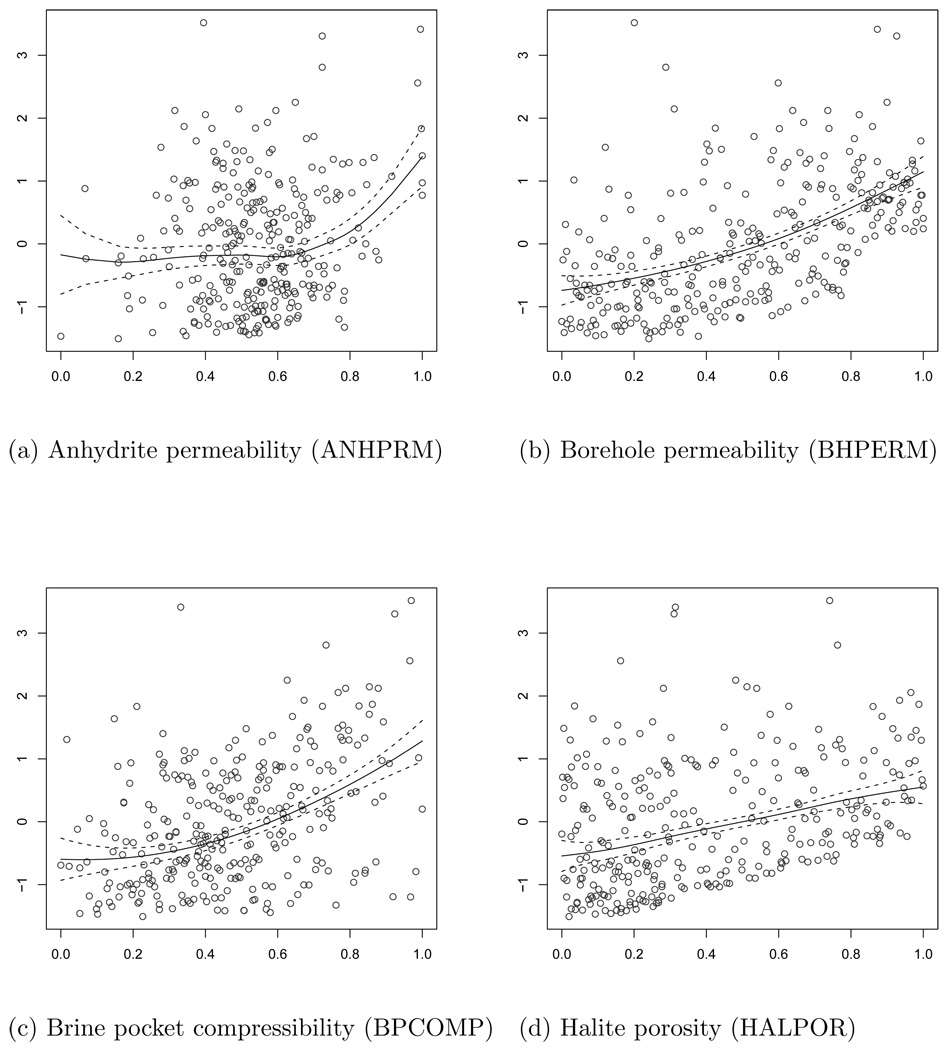

The posterior mean curves from the BSS-ANOVA model for several predictors are plotted in Figure 3. Because of the BSS-ANOVA decomposition, the estimated main effect curves are interpretable on their own; there is no need to numerically integrate over the other predictors as in partial dependence plots (Hastie et al., 2001). The effects of bulk compressibility (BPCOMP) and borehole permeability (BHPRM) are positive. Increasing BPCOMP increases the amount of brine that leaves the brine pocket for each unit drop in pressure, and increasing BHPRM both reduces the pressure in the repository and reduces resistance to flow between the brine pocket and the repository. Both changes result in larger brine flow into the repository through the borehole. Positive effects are also indicated for ANHPRM and HALPOR. These result from reducing the resistance to flow in the anhydrite and halite, respectively, which increases brine flow from the marker beds. Notice also that the effect of ANHPRM is flat over the first half of its range. This is because the permeability must exceed a threshold before it is high enough to counteract the pressure in the repository and allow brine to flow from the marker beds. There is also an overall negative effect when going from level 1 to 2 to 3 of the microbial degradation flag (WMICDFLG), as seen in Figure 4b. This is because the more microbial gas is generated, the higher the repository pressure, which discourages brine inflow.

Figure 3.

Raw data vs. main effect curves (i.e., $f_j(x_j)$) for the WIPP data. The solid lines are the medians and the dashed lines are 95% intervals.
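For contrast, the partial dependence computation that the BSS-ANOVA decomposition avoids can be sketched as below: for each grid value of predictor j, that column is fixed in every observation and the fitted function is averaged over the observed values of the other predictors (Hastie et al., 2001). The function `partial_dependence` and the toy `predict` are hypothetical; any fitted model with a vectorized prediction function could be substituted.

```python
import numpy as np


def partial_dependence(predict, X, j, grid):
    """Partial dependence of a fitted function on predictor j.

    For each grid value, column j of X is set to that value in every row,
    and the predictions are averaged over the observed values of the
    remaining predictors.
    """
    X = np.asarray(X, dtype=float)
    curve = np.empty(len(grid))
    for g, value in enumerate(grid):
        X_mod = X.copy()
        X_mod[:, j] = value
        curve[g] = predict(X_mod).mean()
    return curve


# Hypothetical fitted function with a main effect and an interaction.
def predict(X):
    return X[:, 0] ** 2 + X[:, 0] * X[:, 1]


X_obs = np.array([[0.3, -1.0], [0.7, 1.0]])
pd_curve = partial_dependence(predict, X_obs, j=0, grid=[0.0, 1.0, 2.0])
# The x0 * x1 term averages to zero because the observed x1 values are -1 and 1,
# so pd_curve equals grid ** 2, i.e. [0., 1., 4.].
```

Each grid point requires a full pass over the data, and the result depends on the empirical distribution of the other predictors; a main effect curve from the ANOVA decomposition is read off directly.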

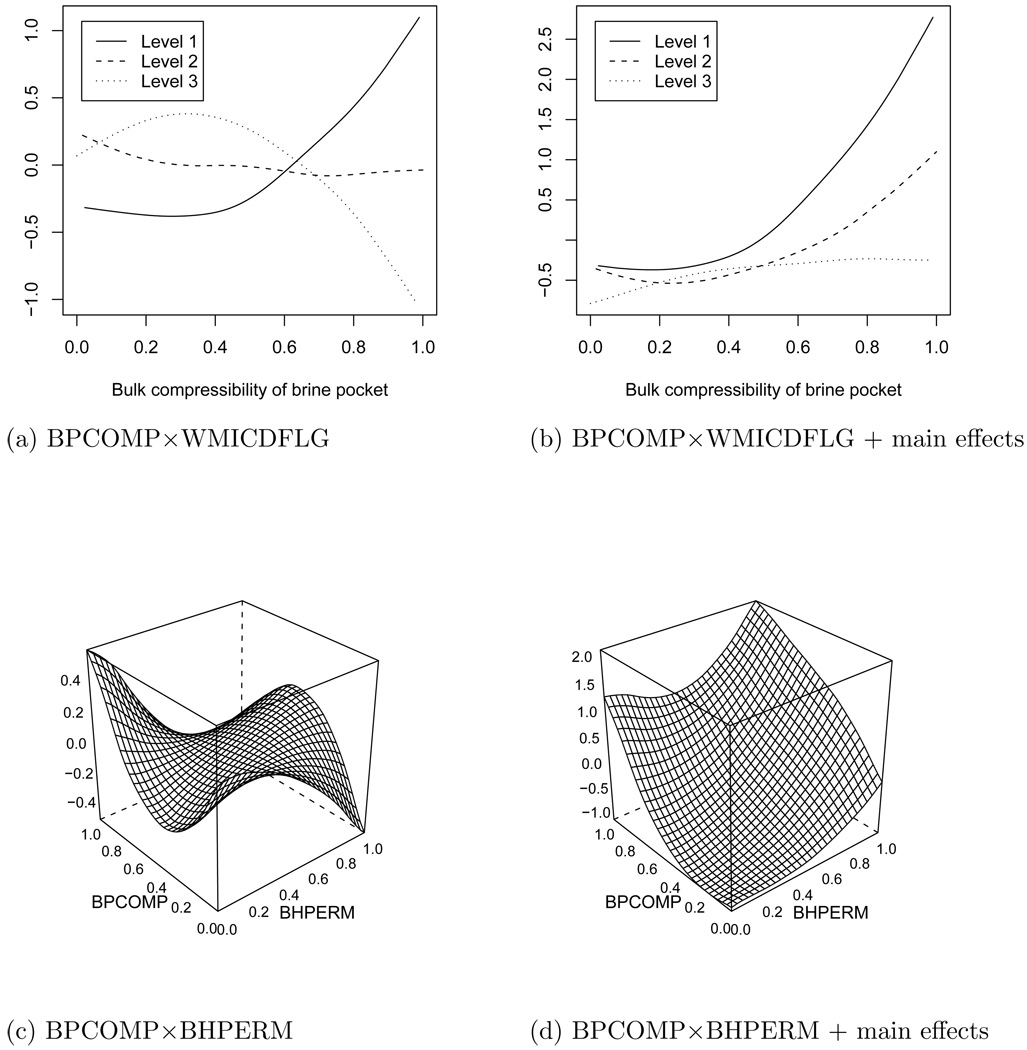

Figure 4.

Interaction plots for bulk compressibility of brine pocket (BPCOMP) × microbial degradation of cellulose (WMICDFLG) and bulk compressibility of brine pocket (BPCOMP) × borehole permeability (BHPRM). Panels (a) and (c) give the posterior means of $f_{jk}(x_j, x_k)$, and panels (b) and (d) give the posterior means of $f_j(x_j) + f_k(x_k) + f_{jk}(x_j, x_k)$.

Under Linkletter et al.’s model, the inclusion probabilities for the remaining variables are less than 0.10. The BSS-ANOVA model identifies an additional main effect, residual brine saturation in the shaft (SHRBRSAT), with inclusion probability 0.66. This association is somewhat surprising because the shaft seals are quite effective, so flow is unlikely to go down the shaft. This is being investigated further.

For each predictor, the inclusion probability of the BSS-ANOVA main effect is as high as or higher than the inclusion probability under Linkletter et al.’s model. This may be because including a variable in the Gaussian process model requires including all of its interactions, whereas the additive model can simply add a main effect curve. The posterior mean curves in Figure 3 are fairly smooth, suggesting that low-order polynomial fits are adequate. The priors for these low-order polynomial components are vague under the BSS-ANOVA model, so the model can essentially reduce to quadratic regression for these predictors. This is a very different fit from Linkletter et al.’s model, which for most draws is a full Gaussian process in these five dimensions. For these data, DIC (smaller is better) prefers the BSS-ANOVA decomposition (DIC = 362; pD = 68.4) to Linkletter et al.’s model (DIC = 375; pD = 65.3). Note that we do not use DIC for variable selection, which is done using the Bayesian variable selection algorithm described in Section 3; we use DIC only to compare the fits of the non-nested BSS-ANOVA and Gaussian process models, both of which average over several models defined by the binary inclusion indicators.
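DIC combines the posterior mean deviance D̄ with the effective number of parameters, DIC = D̄ + pD, where pD = D̄ − D(θ̄) (Spiegelhalter et al., 2002). A minimal sketch for a Gaussian likelihood follows, assuming MCMC draws of the fitted mean vector and the error variance are available. Plugging in the posterior means of these two quantities for D(θ̄) is a choice made for this sketch; other plug-in parameterizations give different values of pD. The function names are hypothetical.

```python
import numpy as np


def gaussian_deviance(y, mean, sigma2):
    """-2 * log-likelihood for independent N(mean_i, sigma2) observations."""
    y = np.asarray(y, dtype=float)
    resid = y - np.asarray(mean, dtype=float)
    return y.size * np.log(2.0 * np.pi * sigma2) + np.sum(resid ** 2) / sigma2


def dic(y, mean_draws, sigma2_draws):
    """Return (DIC, pD) from S posterior draws.

    mean_draws: array (S, n) of fitted mean vectors, one row per MCMC draw.
    sigma2_draws: array (S,) of error-variance draws.
    """
    mean_draws = np.asarray(mean_draws, dtype=float)
    sigma2_draws = np.asarray(sigma2_draws, dtype=float)
    dev = np.array([gaussian_deviance(y, m, s2)
                    for m, s2 in zip(mean_draws, sigma2_draws)])
    d_bar = dev.mean()  # posterior mean deviance
    d_hat = gaussian_deviance(y, mean_draws.mean(axis=0), sigma2_draws.mean())
    p_d = d_bar - d_hat  # effective number of parameters
    return d_bar + p_d, p_d


# Toy check: identical draws carry no posterior uncertainty, so pD = 0.
dic_value, p_d = dic([0.0, 0.0], [[0.0, 0.0], [0.0, 0.0]], [1.0, 1.0])
```

Because each draw of the fitted mean already averages over the inclusion indicators visited by the sampler, the same computation applies to both the BSS-ANOVA and Gaussian process fits.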

Of the 55 possible two-way interactions in the BSS-ANOVA model, 4 have inclusion probability greater than 0.5 (Table 3). Also, the f0 term for higher-order terms is included only 7% of the time. The interaction with the highest inclusion probability (1.00) is between bulk compressibility of brine pocket (BPCOMP) and microbial degradation of cellulose (WMICDFLG). Figures 4a and 4b plot the fitted values (posterior means, averaging over all models) for this pair of predictors. Figure 4a clearly demonstrates the BSS-ANOVA constraints on interactions: the curve for each level of WMICDFLG integrates to zero, and the three curves sum to zero at each value of BPCOMP. Figure 4b is the sum of the interaction and main effect curves. Here we see an increasing trend in BPCOMP for each level of WMICDFLG; however, the trend is nearly flat at level 3 of WMICDFLG, which corresponds to microbial degradation of cellulose, plastic, and rubber. This is reasonable because the gas produced by the degradation could generate enough pressure to make brine inflow negligible over this range of BPCOMP. Figures 4c and 4d plot the fitted values for the interaction between bulk compressibility of brine pocket (BPCOMP) and borehole permeability (BHPRM), which has the second largest inclusion probability (0.93). Notice that in the upper right corner the interaction indicates a decrease in brine inflow relative to the additive effects. This is very interesting: at large values of BHPRM and BPCOMP, so much brine flows down the borehole that the repository saturates and its pressure rises to hydrostatic, which reduces brine inflow from the brine pocket. These important interactions were not studied in previous analyses of this problem. They could help give the scientists an improved understanding, or a confirmation, of their model.
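The two constraints visible in Figure 4a can be checked with a discrete analogue. On a grid of BPCOMP values crossed with the three WMICDFLG levels, an interaction surface satisfies them when each level’s curve averages to zero over the grid and the three curves sum to zero at every grid point. The sketch below, assuming an equally weighted grid, projects an arbitrary array onto these constraints by double centering; it only illustrates the constraints and is not how the BSS-ANOVA prior enforces them. The function name `double_center` is hypothetical.

```python
import numpy as np


def double_center(h):
    """Project a (levels x grid) array onto the interaction constraints.

    Subtracting row means removes each level's average over the grid,
    subtracting column means removes the across-level average at each
    grid point, and adding back the grand mean corrects for removing it twice.
    """
    h = np.asarray(h, dtype=float)
    row_means = h.mean(axis=1, keepdims=True)  # per level, averaged over the grid
    col_means = h.mean(axis=0, keepdims=True)  # per grid point, averaged over levels
    return h - row_means - col_means + h.mean()


rng = np.random.default_rng(0)
f_jk = double_center(rng.normal(size=(3, 50)))  # 3 levels x 50 grid points
each_level_averages_to_zero = np.allclose(f_jk.mean(axis=1), 0.0)
levels_sum_to_zero = np.allclose(f_jk.sum(axis=0), 0.0)
```

Under these constraints the interaction carries no information that a main effect could absorb, which is why Figure 4b, and not Figure 4a, shows the full effect of the pair.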

We measure variable importance with the posterior 95% intervals of the L2-norms of the main effects $f_j$ and the interactions $f_{jk}$. The L2-norms are proportional to the proportion of variation in the model explained by each term. We approximate the integrals defining these norms by sums over the n = 300 design points. The L2-norm intervals in Table 3 show that, of the predictors included with probability 1.00, borehole permeability (BHPRM) generally explains the largest proportion of the variance in the fitted function. Also, even though some interactions are selected with probability greater than 0.5, these terms explain less variation in the fitted surface than the important main effects. This sensitivity analysis accounts for variable selection uncertainty: the L2-norm is computed at every MCMC iteration, including iterations that exclude the term (where the norm is zero), which is why the intervals for terms that are not always included have lower limit 0.00. Another common approach to sensitivity analysis is to first select the important variables and then compute their L2-norms using the model that includes only the selected variables. To illustrate how these approaches differ, we refit the BSS-ANOVA model using only the terms with inclusion probability greater than 0.5. The resulting L2-norms are given in Table 3 (“BSS-ANOVA best model”). The intervals for this model are generally narrower than the intervals from the full model. Therefore, accounting for variable selection uncertainty in sensitivity analysis gives wider, more realistic posterior intervals.
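A minimal sketch of the selection-aware L2-norm interval follows. It assumes that the draws of one term evaluated at the design points are stored row by row, with all-zero rows in iterations where the term is excluded, and it uses the root mean square over the design points as the discrete norm; that normalization is an assumption of this sketch, since only the approximation by sums over the design points is stated above. The function name is hypothetical.

```python
import numpy as np


def l2_norm_interval(term_draws, level=0.95):
    """Posterior interval for the L2 norm of one ANOVA term.

    term_draws: array (S, n), the term evaluated at the n design points in
    each of S MCMC iterations. Rows are all zero in iterations where the
    term is excluded, so selection uncertainty is carried into the interval.
    """
    term_draws = np.asarray(term_draws, dtype=float)
    # Assumed normalization: root mean square over the design points.
    norms = np.sqrt(np.mean(term_draws ** 2, axis=1))
    alpha = (1.0 - level) / 2.0
    return np.quantile(norms, [alpha, 1.0 - alpha])


# Hypothetical draws: the term is excluded in the first 40% of iterations
# and equals 0.5 at every design point in the remaining iterations.
draws = np.zeros((1000, 300))
draws[400:, :] = 0.5
interval = l2_norm_interval(draws)  # lower limit 0.0, upper limit 0.5
```

Because excluded iterations contribute norms of exactly zero, any term excluded in more than 2.5% of iterations gets a lower limit of zero, whereas refitting with only the selected terms discards those iterations.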

Section 3 develops a method for selecting the hyperparameter λ, which controls the strength of the priors on the variances. Based on these results, we recommend using λ = 2. For these data, however, the posterior inclusion probabilities are robust to the choice of λ. We refit the model with λ ∈ {1, 2, 3}; the posterior mean number of terms in the model (i.e., the posterior mean of the sum of the binary inclusion indicators) was 16.4 with λ = 1, 14.8 with λ = 2, and 13.4 with λ = 3. Also, for all three choices of λ the same subset of terms has inclusion probability greater than 0.5, with the sole exception that halite permeability (HALPRM) is included in the model with inclusion probability 0.51 when λ = 1 (compared to 0.46 with λ = 2).
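Both summaries used above can be computed from the MCMC draws of the binary inclusion indicators. In the sketch below (the array layout and function name are hypothetical), the posterior mean number of terms equals the sum of the marginal inclusion probabilities, and the terms with probability above 0.5 form the selected subset; this thresholding rule is closely related to the median probability model of Barbieri and Berger (2004).

```python
import numpy as np


def summarize_indicators(indicator_draws, threshold=0.5):
    """Summarize S MCMC draws of T binary inclusion indicators.

    Returns the marginal inclusion probabilities, the posterior mean number
    of included terms, and the indices of terms above the threshold.
    """
    draws = np.asarray(indicator_draws, dtype=float)  # shape (S, T), entries 0/1
    inc_prob = draws.mean(axis=0)  # marginal inclusion probability of each term
    mean_size = draws.sum(axis=1).mean()  # equals inc_prob.sum() by linearity
    selected = np.flatnonzero(inc_prob > threshold)
    return inc_prob, mean_size, selected


# Hypothetical draws for three terms over four MCMC iterations.
draws = [[1, 1, 0],
         [1, 0, 0],
         [1, 1, 1],
         [1, 0, 0]]
inc_prob, mean_size, selected = summarize_indicators(draws)
# inc_prob = [1.0, 0.5, 0.25], mean_size = 1.75, selected = [0]
```

A term with probability exactly 0.5, like the second term here, is excluded under the strict inequality, which is why a shift such as HALPRM moving from 0.46 to 0.51 changes the selected subset.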

7 Discussion

This paper presents a fully-Bayesian procedure for variable selection and curve-fitting for nonparametric regression. Our model uses the smoothing splines ANOVA decomposition and selects components via stochastic search variable selection. We tune the model to have a desired false positive rate. The simulation study shows that the Bayesian model has advantages over other nonparametric variable selection models in terms of both prediction accuracy and variable selection. The model is used to build an emulator for complex computer model output.

Another challenge in the analysis of complex computer model output is jointly modeling computer model output and actual field data. A common approach is to model both the true response and the bias between field and simulated data with separate Gaussian processes. Our approach could be used in this case to identify important variables for both Gaussian processes, that is, to identify conditions that affect the true process and to identify potentially different variables that predict a discrepancy between simulated and real data. Also, although we applied our method to the deterministic WIPP model, our simulation study suggests that the BSS-ANOVA model is also adept at estimating the mean response for data having random errors.

Appendix

A.1 Approximate expected log odds of π = 1

For the univariate nonparametric model in Section 2.1, integrating over $f$ and $\sigma^2$ gives

| (25) |

Assuming the data are standardized so that y′y = n and assuming a = b, for large n we have

| (26) |

Taking the expected value with respect to y ~ N (0, I) gives

| (27) |

| (28) |

where $d_1, \ldots, d_n$ are the eigenvalues of Σ. Recalling that Σ is scaled so that trace, a first-order Taylor series at $\tau^2 = 0$ gives .
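The final step relies on two standard facts, which the sketch below checks numerically: the log-determinant of I + τ²Σ equals the sum of log(1 + τ² dᵢ) over the eigenvalues dᵢ of Σ, and its first-order Taylor expansion at τ² = 0 is τ² Σᵢ dᵢ = τ² trace(Σ). That the marginal covariance under π = 1 involves the matrix I + τ²Σ is an assumption of this sketch, and the random covariance matrix is purely illustrative.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 6
A = rng.normal(size=(n, n))
Sigma = A @ A.T / n  # illustrative symmetric positive definite matrix
d = np.linalg.eigvalsh(Sigma)  # eigenvalues d_1, ..., d_n

tau2 = 1e-3
# log|I + tau2 * Sigma| computed directly ...
exact = np.linalg.slogdet(np.eye(n) + tau2 * Sigma)[1]
# ... and through the eigenvalues: sum_i log(1 + tau2 * d_i)
via_eigs = np.sum(np.log1p(tau2 * d))
# First-order Taylor expansion at tau2 = 0: tau2 * sum_i d_i = tau2 * trace(Sigma)
first_order = tau2 * np.trace(Sigma)
```

Since 0 ≤ x − log(1 + x) ≤ x²/2 for x ≥ 0, the error of the first-order term is at most (τ⁴/2) Σᵢ dᵢ², so the approximation is accurate when τ² is small relative to the eigenvalues.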

A.2 Variable Descriptions for Two-Phase Fluid Flow Example

ANHPRM - Logarithm of anhydrite permeability (m²)

BHPRM - Logarithm of borehole permeability (m²)

BPCOMP - Bulk compressibility of brine pocket (Pa⁻¹)

BPPRM - Logarithm of intrinsic brine pocket permeability (m²)

HALPOR - Halite porosity (dimensionless)

HALPRM - Logarithm of halite permeability (m²)

SHPRMSAP - Logarithm of permeability (m²) of asphalt component of shaft seal

SHPRMCLY - Logarithm of permeability (m²) of clay components of shaft

SHPRMHAL - Pointer variable (dimensionless) used to select permeability in crushed salt component of shaft seal at different times

SHRBRSAT - Residual brine saturation in shaft (dimensionless)

WMICDFLG - Pointer variable for microbial degradation of cellulose. WMICDFLG = 1, 2, and 3 indicate no microbial degradation of cellulose, microbial degradation of only cellulose, and microbial degradation of cellulose, plastic, and rubber, respectively.

Footnotes

The authors thank the National Science Foundation (Reich, DMS-0354189; Bondell, DMS-0705968) and Sandia National Laboratories (SURP Grant 22858) for partial support of this work. The authors would also like to thank Dr. Hao Zhang of North Carolina State University for providing code to run the COSSO model and Jon Helton for his help with the analysis of the two-phase flow model. Lastly, the authors are grateful to the reviewers, associate editor, and co-editors for their constructive comments, many of which are incorporated in the current version of this article.

References

- Barbieri M, Berger J. Optimal predictive model selection. Annals of Statistics. 2004;32:870–897.

- Breiman L. Better subset regression using the nonnegative garrote. Technometrics. 1995;37:373–384.

- Breiman L. Bagging predictors. Machine Learning. 1996;24:123–140.

- Chipman H. Bayesian variable selection and related predictors. Canadian Journal of Statistics. 1996;24:17–36.

- Friedman JH. Multivariate adaptive regression splines. Annals of Statistics. 1991;19:1–141.

- Gelman A. Prior distributions for variance parameters in hierarchical models (Comment on Article by Browne and Draper) Bayesian Analysis. 2006;1:515–534.

- George EI, McCulloch RE. Variable selection via Gibbs sampling. Journal of the American Statistical Association. 1993;88:881–889.

- George EI, McCulloch RE. Approaches for Bayesian variable selection. Statistica Sinica. 1997;7:339–373.

- Gustafson P. Bayesian regression modeling with interactions and smooth effects. Journal of the American Statistical Association. 2000;95:745–763.

- Gu C. Model Diagnostics for Smoothing Spline ANOVA Models. The Canadian Journal of Statistics. 2004;32:347–358.

- Gu C. Smoothing Spline ANOVA models. Springer-Verlag; 2002.

- Gu C. Diagnostics for Nonparametric Regression Models with Additive Terms. Journal of the American Statistical Association. 1992;87:1051–1058.

- Hastie T, Tibshirani R, Friedman J. The Elements of Statistical Learning: Data Mining, Inference, and Prediction. Springer; 2001.

- Helton JC, Bean JE, Economy K, Garner JW, MacKinnon RJ, Miller J, Schreiber JD, Vaughn P. Uncertainty and sensitivity analysis for two-phase flow in the vicinity of the repository in the 1996 performance assessment for the Waste Isolation Pilot Plant: disturbed conditions. Reliability Engineering and System Safety. 2000;69:263–304.

- Ishwaran H, Rao JS. Spike and slab variable selection: frequentist and Bayesian strategies. Annals of Statistics. 2005;33:730–773.

- Joseph VR, Hung Y, Sudjianto A. Blind Kriging: A New Method for Developing Metamodels. Journal of Mechanical Design. 2008;130 031102.

- Kass RE, Wasserman L. A reference Bayesian test for nested hypotheses and its relationship to the Schwarz criterion. Journal of the American Statistical Association. 1995;90:928–934.

- Lin Y, Zhang HH. Component selection and smoothing in smoothing spline analysis of variance models. Annals of Statistics. 2006;34:2272–2297.

- Linkletter C, Bingham D, Hengartner N, Higdon D, Ye KQ. Variable Selection for Gaussian Process Models in Computer Experiments. Technometrics. 2006;48:478–490.

- Mitchell TJ, Beauchamp JJ. Bayesian variable selection in linear regression (with discussion) Journal of the American Statistical Association. 1988;83:1023–1036.

- Qian Z, Wu H, Wu CFJ. Gaussian Process Models for Computer Experiments with Qualitative and Quantitative Factors. Technometrics. 2008 In press.

- R Development Core Team. R: A Language and Environment for Statistical Computing. 2006 http://www.R-project.org.

- Shively T, Kohn R, Wood S. Variable selection and function estimation in nonparametric regression using a data-based prior (with discussion) Journal of the American Statistical Association. 1999;94:777–806.

- Spiegelhalter DJ, Best NG, Carlin BP, van der Linde A. Bayesian measures of model complexity and fit (with discussion and rejoinder) J. Roy. Statist. Soc., Ser. B. 2002;64:583–639.

- Steinberg DM, Bursztyn D. Data analytic tools for understanding random field regression models. Technometrics. 2004;46:411–420.

- Storlie CS, Helton JC. Multiple predictor smoothing methods for sensitivity analysis: example results. Reliability Engineering and System Safety. 2007;93:55–77.

- Wahba G. Spline Models for Observational Data. vol. 59. SIAM. CBMS-NSF Regional Conference Series in Applied Mathematics; 1990.

- Wahba G, Wang Y, Gu C, Klein R, Klein B. Smoothing spline ANOVA for exponential families, with application to the WESDR. Annals of Statistics. 1995;23:1865–1895.

- Wood S, Kohn R, Shively T, Jiang W. Model selection in spline nonparametric regression. Journal of the Royal Statistical Society: Series B. 2002;64:119–140.

- Vaughn P, Bean JE, Helton JC, Lord ME, MacKinnon RJ, Schreiber JD. Representation of Two-Phase Flow in the Vicinity of the Repository in the 1996 Performance Assessment for the Waste Isolation Pilot Plant. Reliability Engineering and System Safety. 2000;69:205–226.