Abstract

Studies of explicit processing of facial expressions by individuals with autism spectrum disorder (ASD) have found a variety of deficits and preserved abilities compared to their typically developing (TD) peers. However, little attention has been paid to their implicit processing abilities for emotional facial expressions. The question has also been raised whether preferential attention to the mouth region of a speaker’s face by ASD individuals has resulted in a relative lipreading expertise. We present data on implicit processing of pseudo-dynamic facial emotions and visual speech in adolescents with autism. We compared 25 ASD and 25 TD participants on their ability to recreate the sequences of four dynamic emotional facial expressions (happy, sad, disgust, fear) as well as four spoken words (with, bath, thumb, watch) using six still images taken from a video sequence. Typical adolescents were significantly better at recreating the dynamic properties of emotional expressions than those of facial speech, while the autism group showed the reverse accuracy pattern. For Experiment 2 we obscured the eye region of the stimuli and found no significant difference between the 22 adolescents with ASD and 22 TD controls. Fearful faces achieved the highest accuracy results among the emotions in both groups.

Reading Faces for Information about Words and Emotions in Adolescents with Autism

Autism is a complex developmental disorder that includes social, pragmatic, and language deficits, which can have a devastating impact on a person’s ability to conduct daily face-to-face conversation. The capacity to recognize and respond to nonverbal conversational cues, including facial expressions, is central to effective human communication. Typically developing (TD) infants develop the capacity to recognize faces and facial expressions very early. They preferentially attend to faces within a few hours of birth (Goren, Sarty, & Wu, 1975; Johnson & Morton, 1991); at four months they can already discern emotional facial expressions (Montague & Walker-Andrews, 2001). Although there is evidence that the ability to process facial expressions of emotions (FEEs) continues to improve throughout childhood (Egan, Brown, Goonan, Goonan, & Celano, 1998), adult competence is reached by early adolescence (Custrini & Feldman, 1989; Batty & Taylor, 2006). In contrast, individuals with autism spectrum disorders (ASD), including autism, Asperger syndrome, and pervasive developmental disorder-not otherwise specified (PDD-NOS), experience a variety of difficulties and use atypical strategies in both face recognition and the identification of emotional facial expressions that persist through adulthood (Schultz, 2005, for review).

A rapidly growing literature exists on the recognition of FEEs by children and adults with ASD. Some studies found that individuals with ASD are able to match emotions during slowed (Tardif, Lainé, Rodriguez, & Gepner, 2007) or even strobe-like presentations (Gepner, Deruelle, & Grynfeltt, 2001) and to recognize basic emotions, although not complex ones (Castelli, 2005). Other studies, however, indicate that children with ASD show a deficit in labeling, categorizing, matching, or identifying static facial emotions (Tantam et al., 1989; Gepner et al., 1996; Gross, 2004; Celani, Battacchi, & Arcidiacono, 1999; see Jemel, Mottron, & Dawson, 2006, for review), in processing dynamic expressions (Lindner & Rosén, 2006), and in the categorical perception of emotional expressions (Teunisse & de Gelder, 2001).

When trying to interpret an emotional facial expression, typical adults initially focus their gaze on the eye region of a face. However, when they are attending to a spoken word, their gaze focuses more directly on the lower half of the face since the mouth is more relevant for processing facial speech (Buchan, Paré, & Munhall, 2007). In contrast, several studies of face processing in individuals with ASD suggest that they focus on the lower half of the face, particularly the mouth, in a variety of social or emotional contexts (Joseph & Tanaka, 2003; Klin, Jones, Schultz, Volkmar, & Cohen 2002; Pelphrey et al., 2002, Dawson, Webb, Carver, Panagiotides & McPartland, 2004). The lack of attention toward the eye region of the face may account for some of the deficits identified in studies of face and emotion recognition.

In a classic study, Langdell (1978) found that children with ASD were better than their age-matched peers in face identification when only the lower part of the face was shown. Gross (2004) found that very young children with ASD were relatively less able than a TD cohort to match emotions of humans, dogs, and apes based on the upper face alone, and their error patterns suggested that primary attention was focused on the lower portion of the face. Baron-Cohen et al. (1997; 2001) found that typical adults were able to rely exclusively on the eye region of a face to extract information about an expressed complex emotion or mental state, whereas individuals with ASD were significantly impaired on such tasks. Spezio, Adolphs, Hurley, and Piven (2007) demonstrated not only that TD individuals visually attend to the eye region of a face, but that the eyes are crucial to their successful identification of emotional expressions. In contrast, participants with ASD used mostly information from the mouth to try to determine facial emotion. Some studies, however, indicate that individuals with ASD are able to attend to the eye region of faces (Lahaie et al., 2006; van der Geest et al., 2002; Bar-Haim et al., 2006). Using a task similar to that of Baron-Cohen et al. (1997, 2001), Roeyers, Buysse, Ponnet, and Pichal (2001) and Ponnet et al. (2004) found that high-functioning adults with ASD can identify emotions based on photographs of eyes alone. The difference between these results and those obtained by Baron-Cohen et al. may be explained by the fact that Roeyers et al. used posed expressions and a slightly different task design, as well as by differences in autism symptomatology among their respective ASD populations. The differences in these data raise the question of whether individuals with ASD do in fact use information from a speaker’s eye region without being specifically prompted to do so, especially when the eyes are presented within the context of an entire, natural face.

Another potential cause for the different face processing results obtained across studies may be the presence or absence of dynamic transitions in the facial emotion stimuli. Back, Ropar, and Mitchell (2007) used computer-manipulated stimuli that preserved the dynamic components of either the entire face or only the eye or mouth region and found that adolescents with ASD were less able to determine complex emotions than their TD peers in all conditions. Lindner and Rosén (2006) found deficits in the ASD population’s ability to process static and dynamic expressions in a small sample of children and adolescents (aged 5–16), and Gepner et al. (2001) showed that children with ASD were able to recognize dynamic facial emotions only in slowed, strobe-like dynamic presentations, but demonstrated deficits in the processing of normal-paced dynamic expressions. The question remains whether preserving the natural dynamic transitions of emotional facial expressions allows individuals with ASD to successfully interpret the depicted emotion, or not. The ability to process the dynamic transitions of facial expressions is an important measure of the processing skills required during successful social interaction.

The studies mentioned so far rely on explicit processing tasks involving the recognition, labeling, or matching of emotional facial expressions. Studies of typical individuals show that implicit face processing may develop before explicit recognition ability reaches maturity (Lobaugh, Gibson, & Taylor, 2006) and involve different neuronal activation patterns (Habel et al. 2007, Hariri, Bookheimer, & Mazziotta, 2000), especially for fearful faces (Lange et al. 2003). Very little work has been done to date on implicit emotional facial expression processing among individuals with ASD. Critchley et al. (2000) found that adults with ASD and a typical control group had less neurological activation for implicit than explicit processing of facial expression stimuli, but that the ASD group showed significantly less neuronal activation for both explicit and implicit FEE processing compared to their TD peers. These neuro-imaging data raise the as yet unanswered question of whether individuals with ASD differ in their behavioral approach to implicit emotion processing as well. Implicit processing of emotional faces is an important component of social interaction. Understanding the ability of individuals with ASD to process facial expressions implicitly is highly relevant to understanding their ability to function in a social environment.

Although it is likely that individuals with ASD have deficits in the processing of dynamic emotional facial expressions, at least for rapid dynamic stimuli in explicit task designs, there is evidence to suggest that they are as competent as their typically developing peers at recognizing visual speech, i.e. lipreading. Lipreading is used by TD individuals during daily conversation and can boost comprehension of spoken language in noisy or distracting environments (e.g. Sumby & Pollack, 1954; Lidestam, Lyxell, & Lundeberg, 2001; Ross, Saint-Amour, Leavitt, Javitt, & Foxe, 2007). Typical infants are sensitive to the facial movements that accompany speech as early as two months of age (Dodd, 1979; Kuhl & Meltzoff, 1982, 1984) and can detect audio-visual mismatches through speech reading by five months (e.g. Rosenblum, Schmuckler, & Johnson, 1997). Their silent lipreading skills, however, are relatively poor through late adolescence (Kishon-Rabin & Henkin, 2000) and are inferior to the lipreading skills of hearing-impaired adolescents, who presumably rely more heavily on lipreading in daily life (Elphick, 1996; Lyxell & Holmberg, 2000).

Individuals with ASD pay greater attention to the mouth region of the face (e.g. Klin et al., 2002) and may therefore have acquired an enhanced lipreading expertise. Again, the evidence is contradictory. Some studies showed that ASD participants performed more poorly than TD controls on a static face matching task involving lipreading (Deruelle, Rondan, Gepner, & Tardif, 2004), and on a silent lipreading task using a dynamic computer generated face (Williams et al., 2004), while others found preserved matching skills for lipreading in the ASD population based on static images of real faces portraying the intonation of single vowels (Gepner et al., 2001) or dynamic videos of vowel-consonant-vowel syllables (de Gelder, Vroomen, & van der Heide, 1991). To some extent these different findings may be due to differences in stimuli, which range from static to dynamic and real to computer generated. There is evidence to suggest that typical individuals are less successful at processing full language visual speech information from computer generated “speakers” than from real people (Lidestam & Beskow, 2006; Lidestam et al., 2001), which might explain the differences in results obtained for these two types of stimuli. It is noteworthy that the task using dynamic videos of real faces and speech sounds (de Gelder et al., 1991) determined that individuals with ASD had good lipreading skills, even compared to their TD peers. The question remains whether the use of real faces and preserved dynamic transitions will allow individuals with ASD to speech-read full language stimuli accurately. Being able to lipread could help individuals with ASD to comprehend spoken language in daily conversation.

The studies described in this paper were designed to answer several of the questions that emerge from the literature, specifically regarding the ability of adolescents with autism to implicitly process dynamic facial expressions of emotion, a skill required for everyday social interaction. We also aim to investigate whether the documented attention to the mouth region in this population has led to enhanced lipreading skills which could be used to enhance spoken language comprehension. The direct comparison of these face-based stimuli enables us to assess the autism and TD groups’ competence at extracting information from emotional and speech-based facial expressions encountered during everyday communication.

Experiment 1

In Experiment 1, we aim to determine whether high functioning adolescents with autism are as familiar as typical controls with the dynamic properties of four basic emotions (happy, sad, fear, disgust) by asking them to recreate the dynamic sequence of emotional facial expressions using six still images extracted from a video clip, a task assumed to require attention to the entire face and particularly the eyes. We based our experimental design on the paradigm developed by Edwards (1998), who found that typical adults were able to recreate the dynamic sequences of several basic emotions using 14–18 images taken from video clips depicting their onset, apex, and offset. In order to ascertain whether individuals with autism derive benefit from their reported preferential attention to the mouth region, we also investigated their ability to recreate the dynamic sequences of four words (with, watch, bath, thumb), a task that relies exclusively on attention to the mouth. Our task is designed not to require explicit labeling or matching so that we can investigate knowledge of facial expressions in the absence of secondary verbal task demands. Based on the target group’s reduced neurological involvement for implicit processing, as well as their deficits in explicit dynamic FEE processing, we hypothesized that participants with autism would perform worse than controls on the emotional facial expression task. However, due to their preferential attention to the mouth, and thereby possibly acquired relative lipreading expertise, we expected them to perform better than controls on the lipreading task.

Method

Participants

Two groups participated in this study: 25 adolescents with autism (22 males, 3 females, mean age 13;8) and 25 typically developing (TD) controls (20 males, 5 females, mean age 14;1). The participants were recruited through advocacy groups for parents of children with autism, local schools, and advertisements placed in local magazines, newspapers, and on the internet. Only participants who spoke English as their native and primary language were included. The Kaufman Brief Intelligence Test, Second Edition (K-BIT 2, Kaufman & Kaufman, 2004) was used to assess IQ and the Peabody Picture Vocabulary Test (PPVT-III, Dunn & Dunn, 1997) was administered to assess receptive vocabulary. We did not administer the PPVT-III to one participant, due to time constraints, but since his verbal IQ data fell within the matched range, he was included in the final sample. Using a multivariate ANOVA with group as the independent variable we confirmed that the groups were matched on age (F(1,49)=.23, p=.64), sex (χ2(1, N=50)=.6, p=.44), verbal IQ (F(1,49)= 2.43, p=.13), nonverbal IQ (F(1,49)=2.65, p=.11), and receptive vocabulary (F(1,49)=.42, p=.42) (Table 1).

Table 1.

Group Matching for Autism and TD in Experiment 1

| | Autism Mean (StDev) | Typical Controls Mean (StDev) |
|---|---|---|
| Age | 13;8 (3;3) | 14;1 (3;0) |
| Verbal IQ | 105.04 (19.35) | 112.60 (14.58) |
| Nonverbal IQ | 107.56 (10.80) | 113.00 (12.75) |
| PPVT | 111.63 (19.97) | 115.29 (9.6) |

Diagnosis of autism

The participants in the autism group met DSM-IV criteria for full autism, based on expert clinical impression and confirmed by the Autism Diagnostic Interview-Revised (ADI-R; Lord, Rutter & LeCouteur, 1994) and the Autism Diagnostic Observation Schedule (ADOS; Lord, Rutter, DiLavore, & Risi, 1999), which were administered by trained examiners. Participants with known genetic disorders were excluded.

Stimuli

The stimuli for this task were created by having a professional female actor portray five emotions (sad, happy, disgust, fear, and anger) and speak five words (watch, thumb, with, bath, moth) while facing directly into a camera. The recordings of each emotion included a few seconds of a neutral face, followed by the emotion moving from onset, through apex, and offset to baseline. The word stimuli were single-syllable complete words with a CVC structure, where both the initial and final consonants were either labial or interdental, i.e. produced by movements of the lips, or the tongue between the teeth. Limiting the word productions to these types of highly visible consonants ensured that they could successfully be read on the speaker’s lips. Each emotion and word was recorded several times and three judges selected the best portrayal for further manipulation.

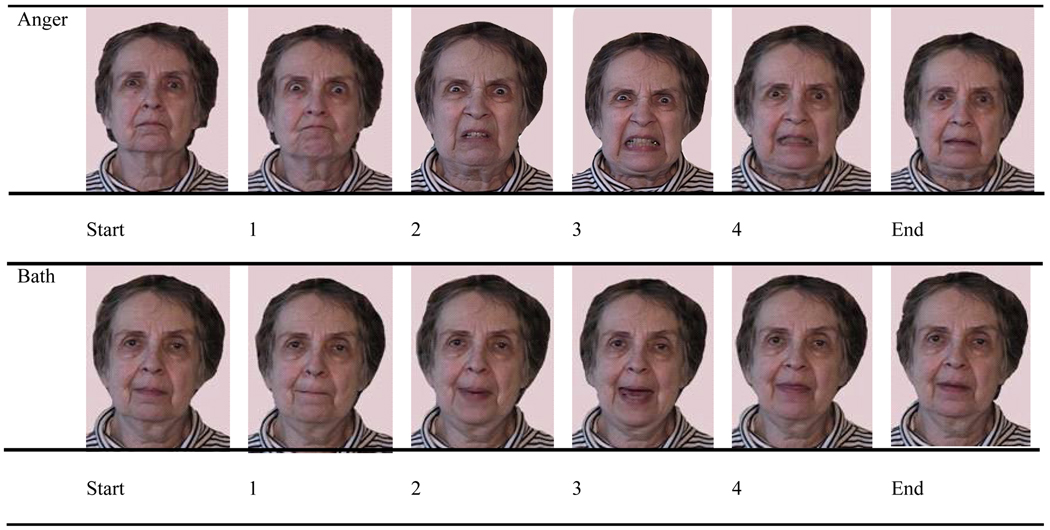

Once the best production of each stimulus was selected, we extracted six still images from every clip. The images were selected evenly across the clips, with three images chosen from the onset phase and three from the offset phase of the emotion. Previous research has shown that no single video frame contains the apex of all facial features simultaneously (Grossman 2001; Grossman & Kegl 2006), so we did not attempt to designate a central still frame as the depiction of maximum expression. Images were chosen to be noticeably different from the adjacent still frames and to represent the entire dynamic range of the facial emotion or word evenly (see Fig. 1 for examples of an emotion and a word sequence). The selected images were cropped to be of equal size and the background was changed to a solid neutral color in order to provide the least possible distraction from the face. The images were then printed in color and laminated. We also created labels for each emotion and word as well as a laminated “timeline” represented by six numbered spaces in which the participants could place the images during the task.

Figure 1.

Sample Emotion and Word Sequences

Procedures

Participants arrived at the testing site with a primary caregiver who gave informed consent. We also obtained assent of any minor participant over the age of 12. At the beginning of the study, the participant was given one example of a word (moth) and an emotion (anger) as training stimuli. The experimenter laid out the six cards of the first training stimulus in random order. We then showed and told the participant the label of that word or emotion, and the experimenter placed the first and last images of the sequence (representing the least deviation from neutral) in their designated spaces, labeled “start” and “end,” on the timeline. The participants were told that the person in the pictures either portrayed an emotion or said a word. We emphasized that the emotion was portrayed from onset, through peak, and offset, and that the start and end of the emotion were shown in the two pictures already placed on the timeline. This last instruction was crucial to reinforcing that the most expressive images were expected to appear in the center of the timeline, not at either end. Participants were then asked to place the remaining four images in the correct sequence on the timeline within a 30-second time limit. This time limit was imposed because Edwards (1998) found that participants performed more poorly when they were given more time to consider their responses. Based on pilot testing we determined that 30 seconds was an appropriate time limit for our adolescent population. After the participant completed the first training item, the experimenter showed the participant the correct sequence for that item and explained the dynamic contours of the stimulus, while giving the participant a chance to look over the correct sequence. Instructions were phrased to emphasize visualization, such as “imagine what a person looks like when she gets angry and then gets over it,” or “picture the face of a person saying the word ‘moth’ and how her mouth looks while she says all the sounds of the word.” This procedure was repeated for the second training item, and the order of training items was counterbalanced across participants.

After the training was completed, the task stimuli (sad, happy, disgust, fear, and with, watch, bath, thumb) were presented in a randomized order within category (emotion and word). Presentation of the categories was counterbalanced across participants in both groups so that half the participants saw the emotion stimuli first and the other half the word stimuli. Participants were reminded of the 30-second time limit, which was enforced with a stopwatch that was not visible to the participants in order to minimize pressure on their performance. All participants included in the final analysis completed the task within the allotted time without requiring reminders; one additional participant exceeded the time limit, and his data were excluded.

Data analysis

We counted each correctly placed image, resulting in accuracy scores of 0, 1, 2, or 3 for each stimulus item. The maximum score was 3, rather than 4, since placing three cards correctly automatically ensures the accuracy of the fourth card. The numbers of correctly placed images for the four emotion stimuli were summed, and the same was done for the four word stimuli. We thereby created a cumulative accuracy score of total correct images for emotions (maximum = 12) and total correct images for words (maximum = 12). This coding scheme allowed us to give partial credit by counting each correctly placed image. Since the offset of an emotion is processed and produced dynamically differently from the onset (Ekman, 1984; Edwards, 1998; Grossman, 2001), we did not count reversely placed images (i.e. image #1 in place of image #4) or complete sequence reversals (4,3,2,1) as correct. This strategy also enabled us to directly compare the accuracy levels for emotions with those for words, since it was not possible to reverse a word sequence, which begins and ends with different consonants.
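The scoring rule described above can be sketched in a few lines of Python. This is only an illustration of the rule as stated in the text: the function names and the encoding of a response as a tuple of image numbers are assumptions made for the example, not part of the original study materials.

```python
# Illustrative sketch of the scoring rule; all names here are hypothetical.
# A response is encoded as the order in which the four movable images
# (numbered 1-4 by their true position) were placed on the timeline.
CORRECT_ORDER = (1, 2, 3, 4)


def score_sequence(placed):
    """Return the 0-3 accuracy score for one stimulus item."""
    if sorted(placed) != list(CORRECT_ORDER):
        raise ValueError("placed must be a permutation of images 1-4")
    # Count images that sit in their true position.
    n_correct = sum(1 for p, c in zip(placed, CORRECT_ORDER) if p == c)
    # Three correct cards force the fourth, so a perfect sequence scores 3.
    return min(n_correct, 3)


def category_score(sequences):
    """Sum the item scores over the four stimuli of a category (maximum 12)."""
    return sum(score_sequence(s) for s in sequences)


def percent_correct(total, maximum=12):
    """Express a cumulative category score as a percentage of the maximum."""
    return 100.0 * total / maximum


# Example: one fully correct item, one with two images swapped,
# one complete reversal, and one with both pairs swapped.
responses = [(1, 2, 3, 4), (1, 2, 4, 3), (4, 3, 2, 1), (2, 1, 4, 3)]
total = category_score(responses)
print(total, round(percent_correct(total), 2))
```

Under this encoding a complete reversal earns no credit, because no image sits in its true position, which matches the decision not to count reversed sequences as correct.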

In order to establish the score that could be achieved on this task by chance, we calculated all 24 possible permutations of images within a given sequence of four images and assessed accuracy levels for each of those 24 sequences. As an example, the sequence “1,2,3,4” achieved the accuracy score of 3, “1,2,4,3” scored an accuracy level of 2, “1,3,2,4” had an accuracy of 2, etc. We then summed the occurrences of each accuracy score (0, 1, 2, 3) for all 24 possible sequences and established the statistical likelihood of each of them occurring. This yielded a 4% chance of creating a completely correct sequence, a 25% chance of having two images correct, a 33% chance of placing one image correctly, and a 38% chance of creating a completely incorrect sequence. We calculated the mean chance accuracy level for each stimulus item by multiplying each possible score (0, 1, 2, 3) by its associated probability (.38, .33, .25, .04, respectively). The resulting weighted scores (0, .33, .50, .12) were then summed to obtain a mean chance-level accuracy of 0.95 out of 3, or approximately 32%.
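The chance-level calculation can be checked by enumerating the 24 permutations directly. The sketch below assumes the same scoring rule as in the Data analysis section (count of images in their true position, capped at 3); the function names are illustrative and exact fractions are used to avoid rounding.

```python
from collections import Counter
from fractions import Fraction
from itertools import permutations

CORRECT = (1, 2, 3, 4)  # true order of the four movable images


def item_score(seq):
    """Number of images in their true position, capped at 3."""
    matches = sum(1 for a, b in zip(seq, CORRECT) if a == b)
    return min(matches, 3)


def chance_distribution():
    """Probability of each score (0-3) if the four images are placed at random."""
    counts = Counter(item_score(p) for p in permutations(CORRECT))
    total = sum(counts.values())  # 24 possible orderings
    return {score: Fraction(counts.get(score, 0), total) for score in range(4)}


def expected_chance_score(dist):
    """Probability-weighted sum of the possible scores."""
    return sum(score * prob for score, prob in dist.items())


dist = chance_distribution()
expected = expected_chance_score(dist)
for score, prob in dist.items():
    print(score, prob, round(float(prob), 3))
print("expected score:", expected, "as proportion of 3:", round(float(expected / 3), 4))
```

The enumeration gives 9, 8, 6, and 1 sequences for scores of 0, 1, 2, and 3, so the exact expected score is 23/24 (about 0.958), or roughly 32% of the maximum; the value of 0.95 in the text reflects rounding of the individual probabilities before summing.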

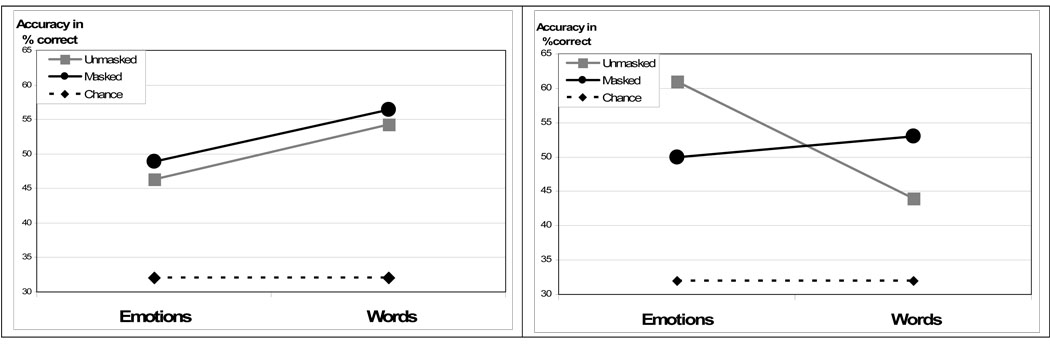

Results

The data for this study are presented in Table 2. A 2 (group) by 2 (category: emotion vs. word) repeated measures ANOVA of accuracy revealed a significant group by task interaction (F(1,48)=11.21, p=.002), but no main effect for group (F(1,48)=.38, p=.54) or task (F(1,48)=1.45, p=.23). Pairwise comparisons within category showed that the TD participants were significantly better at recreating sequences of emotion than the participants with autism (t(48)=2.6, p=.012), whereas the autism group was significantly better at recreating the word stimuli than their TD peers (t(48)=2.26, p=.029). Pairwise comparisons within group revealed a significant advantage for emotions over words in the TD participants (t(24)=2.8, p=.01), and a trend towards significance in the autism group for words over emotions (t(23)=1.9, p=.076).

Table 2.

Accuracy Levels for each Stimulus in Experiment 1

| Emotions (Number correct) | | Sad | Happy | Disgust | Fear | All emotions % correct (StDev) |
|---|---|---|---|---|---|---|
| Autism | Mean (StDev) | 1.48 (1.26) | 1.20 (1.32) | 1.44 (1.66) | 2.28 (1.46) | 46.33 (21.12) |
| TD | Mean (StDev) | 1.76 (1.39) | 1.96 (1.59) | 1.76 (1.51) | 2.52 (1.36) | 61.00 (18.59) |
| Words (Number correct) | | Watch | With | Thumb | Bath | All words % correct (StDev) |
| Autism | Mean (StDev) | 2.00 (.91) | 1.16 (1.11) | 2.08 (1.15) | 1.92 (1.35) | 54.33 (13.84) |
| TD | Mean (StDev) | 1.08 (.86) | 1.20 (.96) | 1.40 (1.04) | 1.68 (1.11) | 44.00 (18.24) |
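As a consistency check, the between-group comparisons reported in the Results can be approximately reproduced from the percent-correct means and standard deviations in Table 2. The sketch below assumes that these comparisons were independent-samples t-tests with pooled variance and 25 participants per group; the paper does not state the test variant, so this is an assumption, and the helper name is illustrative.

```python
import math


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Independent-samples t statistic with pooled variance, from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / standard_error, df


# Percent-correct summary values taken from Table 2 (n = 25 per group assumed).
t_emotion, df_emotion = pooled_t(61.00, 18.59, 25, 46.33, 21.12, 25)  # TD vs. autism
t_word, df_word = pooled_t(54.33, 13.84, 25, 44.00, 18.24, 25)        # autism vs. TD

print("emotions:", round(t_emotion, 2), "df =", df_emotion)
print("words:", round(t_word, 2), "df =", df_word)
```

Both statistics come out close to the reported values of 2.6 and 2.26 on 48 degrees of freedom, which suggests the summary table and the reported comparisons are mutually consistent under this assumption.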

A separate ANOVA for emotion within each group (Bonferroni corrected) revealed a main effect for emotion in the autism group (F(3,72)=3.1, p=.033), but not the TD group (F(3,72)=1.87, p=.14). Participants with autism were most accurate on fear (M=1.92) and least accurate on happy (M=1.08); the difference between these two emotions was statistically significant (p=.038). No other emotion comparisons reached statistical significance in the autism group. There was also a main effect for words in the autism group (F(3,72)=4.78, p=.004), but not the TD group (F(3,72)=1.92, p=.13). Pairwise comparisons of word accuracy scores in the autism group revealed significant differences between the lowest accuracy score (“with,” M=1.08) and the highest scores (“watch” and “thumb”, both M=1.88) at p=.024 for “watch” and p=.002 for “thumb”.

Discussion

The findings of this experiment support our initial hypothesis that the autism group would be better at sequencing words than emotions while the typical controls would show the reverse pattern, with a significant advantage of emotions over words. The data suggest that the documented attention to the mouth region in individuals with ASD may indeed have led to greater expertise with the dynamic properties of visual speech and therefore higher accuracy scores for the sequencing of word stimuli. Although most emotional expressions are formed by the movements of the mouth as well as the eye region, visual speech relies exclusively on mouth movement and should therefore be more affected by increased attention to the mouth. The autism group’s relative difficulties at processing pseudo-dynamic facial expressions of emotion may at least partially be due to a lack of familiarity with the dynamic properties of the eye region of faces, which is crucial to the successful processing of facial emotion.

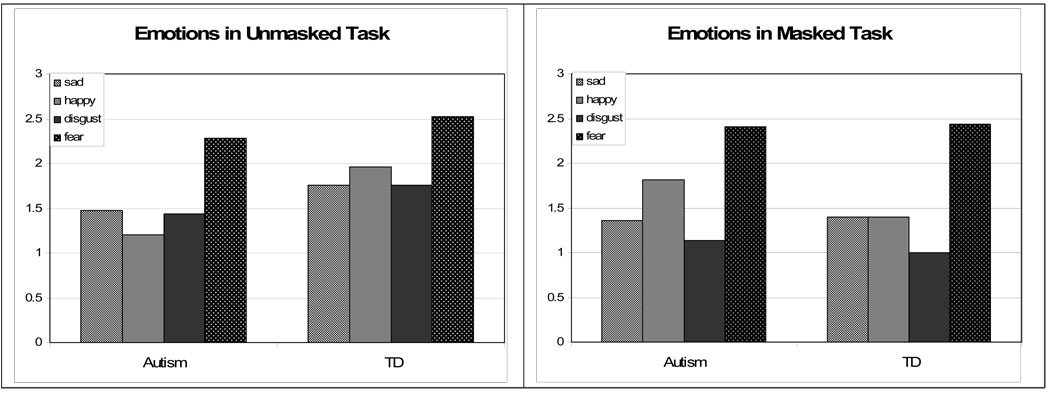

An unexpected result was the high accuracy achieved for the sequencing of fearful faces in both participant groups. Although this difference only reached statistical significance for the adolescents with autism, it was still present in the TD group (Fig. 4). This finding stands in contrast to other studies that have found relatively poor performance on the processing of fear among typical populations and individuals with ASD (e.g. Ashwin, Baron-Cohen, Wheelwright, O’Riordan, & Bullmore, 2007; Humphreys, Minshew, Leonard, & Behrmann, 2007). Many studies have also documented an advantage for happy face processing, even in individuals with ASD (e.g. Grossman, Klin, Carter, & Volkmar, 2000). Our results, however, indicate that the TD group was not more accurate for happy faces than for any of the other expressions and that the autism group even demonstrated their poorest accuracy levels for these expressions. It is possible that the implicit nature of our task design allowed participants to demonstrate their familiarity with the dynamic contours of the evolutionarily important fear faces in the absence of secondary verbal task demands.

Figure 4.

Accuracy for each Emotion within Group

Overall, the results support our hypothesis that adolescents with autism prefer local processing of the mouth region for both types of facial stimuli. This preferential processing of the mouth may explain their advantage over the TD group for the word stimuli, which are exclusively conveyed by the mouth, as well as their relatively poor performance on the emotions, for which information from the eyes is critical. The question raised by this task is whether individuals with autism derive any benefit from the information conveyed by the eye region at all and whether emotions can be successfully processed by attending only to the mouth, even by TD individuals. We pursued this question in the second experiment.

Experiment 2

Experiment 2 replicates the design of Experiment 1, except that the eye region of the stimuli is obscured. This study was designed to determine whether individuals with autism derive any benefit from the eye region of faces during face processing, and also to demonstrate that the eyes are crucial to the determination of facial emotion in typical adolescents. Our hypothesis underlying this task was that the autism group would show no difference in their performance on emotion and speech stimuli compared to their results in Experiment 1. In contrast, we expected the lack of information from the eyes to cause TD participants to perform more poorly on the emotion task than in Experiment 1, but they would show the same level of accuracy for speech stimuli as before.

Method

Participants

We recruited a second set of participants for this study. Although 12 out of 22 adolescents with autism and 5 out of 22 typical controls in Experiment 2 also participated in Experiment 1, a minimum of 5 months had passed between the two studies (mean 11 months, maximum 16 months), so we were confident none of the participants remembered the stimuli in detail. Furthermore, no feedback of accuracy on the task stimuli had been provided to the participants in the first study. There were 22 participants who met criterion for full autism (17 males, 5 females, mean age 13;11) and 22 TD participants (20 males, 2 females, mean age 14;2). The groups were matched on age (F(1,43)=.12, p=.74), sex (χ2(1, N=44)=1.53, p=.22), verbal IQ (F(1,43)=.63, p=.43), nonverbal IQ (F(1,43)=.78, p=.38), and receptive vocabulary (F(1,43)=.52, p=.48) (Table 3).

Table 3.

Group Matching for Autism and TD in Experiment 2

| Category | Autism Mean (StDev) | Typical Controls Mean (StDev) |
|---|---|---|
| Age | 13.9 (3.02) | 14.2 (2.24) |
| Verbal IQ | 103.36 (20.9) | 107.32 (10.41) |
| Nonverbal IQ | 110.32 (9.57) | 107.82 (9.19) |
| Vocabulary (PPVT) | 109.77 (21.03) | 111.61 (11.57) |

Stimuli

The stimuli were identical to those used in Experiment 1, with the exception that the eye region of the face was obscured by a large sleep mask (Fig. 2). The mask was positioned to fully cover the eyes, upper cheeks, eyebrows, and lower forehead, which tend to move with the eyes and provide crucial information about eye involvement in an emotion (Ekman, 1984).

Figure 2.

Masked Face Stimulus

Procedures

The procedures were the same as in Experiment 1.

Results

The results for this study are presented in Table 4. We conducted a 2 (group) by 2 (category) ANOVA for total accuracy, which revealed no main effect for task (F(1,42)=2.07, p=.16) or group (F(1,42)=.075, p=.79), and no group by task interaction (F(1,42)=0.38, p=.54). Separate ANOVAs within each participant group revealed a main effect for emotion in the autism group (F(3,63)=3.24, p=.028) and the TD group (F(3,63)=8.05, p<.001). Post-hoc pairwise comparisons (Bonferroni corrected) showed that the participants with autism were significantly better at recreating the sequence for the highest scoring fear expressions (M=1.9) than the lowest scoring sad faces (M=1.05), p=.01. The TD group’s highest accuracy levels were also for fearful faces (M=2.23) and their lowest scores were for disgust (M=.91). The accuracy scores for fear in the TD group were significantly higher than those for sad (M=1.55, p=.046), happy (M=1.32, p=.006) and disgust (M=.91, p<.001). A comparison of word scores revealed no main effect for words in the TD group (F(3,63)=2.33, p=.083) or the autism group (F(3,63)=1.09, p=.36) (Fig. 4).

Table 4.

Accuracy Levels for each Stimulus in Experiment 2

| Emotions (number correct) | Statistic | Sad | Happy | Disgust | Fear | All emotions % correct (StDev) |
|---|---|---|---|---|---|---|
| Autism | Mean (StDev) | 1.36 (.85) | 1.82 (1.44) | 1.14 (1.58) | 2.41 (1.22) | 48.86 (18.41) |
| TD | Mean (StDev) | 1.40 (.957) | 1.40 (1.118) | 1.00 (1.500) | 2.44 (1.193) | 50.00 (18.37) |

| Words (number correct) | Statistic | Watch | With | Thumb | Bath | All words % correct (StDev) |
|---|---|---|---|---|---|---|
| Autism | Mean (StDev) | 1.41 (.80) | 2.05 (1.13) | 1.55 (.80) | 2.32 (1.39) | 56.44 (16.63) |
| TD | Mean (StDev) | 1.52 (1.229) | 1.68 (1.069) | 1.24 (1.052) | 2.40 (1.225) | 53.03 (20.01) |

Comparison of performance across studies

Figure 3 shows the data for each participant group’s performance across category (words, emotions) and studies (unmasked, masked). We conducted a 2 (category) by 2 (study) ANOVA for accuracy within each group to ascertain whether performance across the two studies, masked and unmasked, was significantly different within each group. For the autism group there was a main effect for category (F(1,45)=5.8, p=.02), but no significant effect for study (F(1,45)=.33, p=.57) and no task by study interaction (F(1,45)=.004, p=.95), indicating that the ASD participants performed better on words than emotions in both studies. There was no main effect for task (F(1,45)=2.82, p=.1) or study (F(1,45)=.75, p=.79) in the TD group, but we did find a significant task by study interaction (F(1,52)=5.46, p=.013), with the accuracy on masked emotions having dropped significantly in comparison to the unmasked emotions in Experiment 1. Post-hoc pairwise comparisons (Bonferroni corrected) for masked vs. unmasked emotions within each group showed a significant advantage for unmasked emotions over masked emotions in the TD group (p=.044). There was no difference between masked and unmasked words in either group.

Figure 3.

Performance across Studies within Group

Discussion

The aim of these experiments was to determine how adolescents with autism approach implicit processing of communicative facial stimuli and how familiar they are with the dynamic properties of emotional facial expressions and visual speech. We presented emotions and words in a pseudo-dynamic form, preserving dynamic contours, but reducing presentation speed and complexity of dynamic transitions by extracting individual frames from a video sequence. The group by task interaction in Experiment 1 clearly supports our hypothesis, with the autism group outperforming the TD group on words, but scoring lower than their typical peers on emotion stimuli. These results support findings in the literature that describe deficits in explicit processing of dynamic emotional expression in individuals with ASD (Lindner & Rosén, 2006; Back et al., 2007), but preserved lipreading skills (Gepner et al., 2001; De Gelder et al., 1991). Our results go further and demonstrate not just preserved, but enhanced lipreading skills in adolescents with autism for these pseudo-dynamic stimuli.

Comparing the performance of both groups across the two study tasks, we find that the autism group showed no difference in accuracy levels for masked vs. unmasked stimuli. One possible interpretation is that individuals with autism do not use the information contained in the eye region of emotional facial expressions. A second interpretation is that the eyes are simply not necessary to process and recreate the dynamic properties of FEEs. The second interpretation can be discarded because of the performance difference in the TD group across the masked and unmasked modalities. The TD individuals demonstrated a significant advantage for emotions over words in Experiment 1. However, in Experiment 2, where the eye region was not visible, the typical group’s accuracy results for emotional expressions dropped significantly and were no longer distinguishable either from their own low accuracy scores for words or from the emotion processing scores of the autism group. Based on this significant performance gap in the TD group across the two studies and the lack of change in the corresponding accuracy levels of the autism group, we conclude that the eyes do provide crucial information towards the processing of dynamic facial expressions and that adolescents with autism do not have access to, or choose to ignore, this information when attempting to recreate the dynamic properties of basic emotions.

Our results support prior findings that adolescents with ASD have deficits in their ability to explicitly process basic dynamic facial emotions (e.g. Lindner & Rosén, 2006), but contrast with those of Gepner et al. (2001), who found preserved facial emotion processing in ASD for slowed, strobe-like presentations. One explanation for this discrepancy can be found in the differences in stimuli and task designs across studies. Gepner et al. (2001) used a more explicit task involving matching of a still photograph to a dynamic sequence. It is also not clear whether our cohort of participants with ASD is comparable to the group studied by Gepner et al., since different assessment tools were used to determine autism symptomology in the two studies. But more importantly, the emotion stimuli used in Gepner et al.’s study portrayed only the onset of the emotion up to the maximum expression; the emotional sequences in the experiments presented here contained the entire dynamic range from onset through peak and offset. It is possible that including the entire dynamic contour of emotional facial expressions increased the processing demands sufficiently to lower the accuracy scores of our autistic participants. It is also likely that the implicit vs. explicit processing demands of the two tasks account for at least some of the differences between our results. Overall, our data confirm the documented difficulties that individuals with ASD have in processing FEEs and suggest that their deficits in interpreting emotional expressions are tied to an avoidance of the eye region of the face. We conclude that the autism group has less familiarity with the dynamic aspects of emotional facial expressions than the TD group, as demonstrated by their relatively poor performance on all emotion stimuli when compared to the TD group in Experiment 1, and compared to their own performance on words.

In contrast to their relatively poor performance on emotional expressions, the autism group outperformed the TD group on the sequencing of word stimuli, due in part to the fact that the TD group scored very low on the word task. A possible explanation for this poor performance on lipreading in the typical group can be found in the documented developmental aspect of lipreading ability in typical individuals (Bar-Haim et al., 2006). Full adult lipreading ability did not appear to develop until late adolescence, effectively placing the majority of our TD participants below the threshold of lipreading competence. The autism group, on the other hand, may have gained earlier expertise in this domain due to their preferential visual attention to the lower part of the face and the mouth during social interaction (Klin et al., 2002; Joseph & Tanaka, 2003; Langdell, 1978). The fact that lipreading is a skill that can be trained and improved upon through daily exposure has been demonstrated by studies of adolescents with hearing impairments. These individuals, due to their greater need for daily lipreading, acquire earlier expertise for the processing and interpretation of dynamic properties of the mouth during visual speech (Elphick, 1996; Lyxell & Holmberg, 2000). In the same vein, our participants with autism may have developed greater familiarity with speech-related mouth movements due to their presumed greater daily visual attention to the mouth regions of their conversation partners.

An alternative interpretation of these data is that adolescents with autism do not actually have greater skill at lipreading, but rather a developmental advantage at the age of sampling that may disappear with time. Their preferential attention to the mouth region of a speaker may have led them to develop full adult competence for lipreading earlier than their typical peers, rather than reach a higher skill level in this area overall. Further studies of lipreading using groups of adult participants with autism and typical controls are necessary to decide whether the lipreading advantage we found in individuals with autism remains constant as they age.

A surprising result is that both groups consistently achieved their highest accuracy levels for the fearful faces in both the masked and unmasked tasks. This good performance for fear faces is the only consistent ranking finding among the emotions or words in either participant group. There are no clear patterns of accuracy rankings among the words and no single emotion that consistently achieves the lowest accuracy levels. Although the literature shows that the highest accuracy levels and fastest reaction times are often reached for the identification of happy faces (Bullock & Russell, 1986; Custrini & Feldman, 1989; Grossman et al., 2000; Phillippot & Feldman, 1990), and that participants, including those with ASD, are particularly poor at processing fearful faces (Ashwin et al., 2007; Humphreys et al., 2007; Dawson et al., 2004; Ogai et al., 2003; Pelphrey et al., 2002; Howard et al., 2000), we found no such pattern here. There are at least some data to support that different emotions are processed equally well (Egan et al., 1998), or even that happy and fear are processed better than other basic emotions during an implicit task in typical adults (Edwards, 1998). But it is still surprising that both groups maintained this processing advantage for fear faces even in Experiment 2, which provided no information from the eye region of the face.

Although fearful faces carry many of their defining facial characteristics in the eyes (e.g. Morris, deBonis & Dolan, 2002), they do also involve defining movements of the lips and jaw (Ekman & Friesen, 1978), thereby possibly allowing our participants to accurately process this facial emotion from the mouth movements alone, as they apparently did in Experiment 2. Also, Dadds et al. (2006) found that children with mild callous-unemotional traits showed no difference in fear processing accuracy whether their gaze was exclusively directed to the eyes or the mouth region of a face, indicating that it is possible to accurately identify fearful faces through attention only to the mouth. These data, combined with the involvement of the lips and jaw in producing fearful faces as defined by the Facial Action Coding System (FACS), support our claim that the mouth does carry crucial information for the processing of fearful faces, which may help explain the high accuracy results achieved by our participants for this emotion.

Another difference between our study and prior research on the processing of fearful faces in individuals with ASD is that our stimuli preserved the dynamic contours, although not the dynamic speed, of the emotional expressions. Natural conversational facial expressions are defined not only by their constellations of facial features at any given moment, but rather by the dynamic changes and interactions of these features over time. We hypothesize that the dynamic properties of fearful faces, which evoke an immediate neurological reaction in humans (Whalen et al., 1998, 2004), are quite familiar to adolescents with autism and successfully recreated, and that a different level of cognitive processing is needed to successfully connect the expression with a verbal label or visual match. In a recent study by Humphreys et al. (2007), individuals with ASD were significantly worse at labeling fearful faces than at recognizing them in a same-different task. Humphreys et al. (2007) argued that this performance difference in the ASD group was based on the added language component of the labeling task, which increased difficulty and therefore lowered accuracy. Our results support that hypothesis. In our implicit emotion processing task, adolescents with ASD and their TD peers were able to recreate the dynamic properties of fearful faces significantly better than any of the other emotions presented, contrary to findings of explicit emotion processing tasks with the same populations. It is possible that the lack of explicit second-layer task demands and preservation of dynamic contours in our design enabled participants in both groups to deal with these expressions on a more immediate level, demonstrating easy familiarity with fearful faces over other basic emotions, which do not evoke the same hard-wired neuro-physiological responses as fear. However, considering that our results are based on a single sample of fear, we cannot generalize these findings, even though our stimuli were judged to be clear expressions of their target emotion during the stimulus creation process. Further studies of implicit processing of dynamic fearful faces by individuals with autism are required to determine the general validity of this finding.

Conclusion

In an implicit processing task assessing familiarity with the dynamic properties of emotional facial expressions and visual speech, adolescents with autism show the reverse pattern of competence for emotion and word sequencing compared with their TD peers. The autism group is more accurate for words than emotions and the TD group is more accurate for emotions than words. The autism group’s performance does not change whether or not information from the eye region is accessible, indicating that they do not use this information for processing of basic emotions. The TD group, on the other hand, demonstrates a significant drop in accuracy for recreating dynamic properties of facial emotional expressions in trials with obscured eye regions, suggesting that the dynamic information contained in the eyes, brows, lower forehead and upper cheeks is crucial to the successful processing and recreation of emotional facial expressions in typical adolescents. The attention preference to the lower face among individuals with autism may also lead to an acquired lipreading expertise and explain their advantage for the processing of visual speech compared to their typical peers. Surprisingly, both groups reach their highest accuracy rates for fearful faces, contradicting literature showing poor performance for the recognition, labeling, or matching of fear faces in both typical and autistic populations. We propose that an implicit task design and preservation of dynamic contours in the emotional stimuli play a significant role in the ability of both groups of adolescents to interpret fearful faces.

During social interactions, processing of emotional or speech-based facial actions happens on an implicit level. Unfortunately, implicit processing of facial stimuli in individuals with ASD has not been studied extensively to date. This paper’s results highlight the reduced ability of adolescents with autism to implicitly process facial emotion as well as their enhanced capacity for processing facial speech. Further studies are required to ascertain how individuals with ASD implicitly process a speaker’s emotional state and whether they enhance their comprehension of spoken language through lipreading.

Acknowledgments

The authors wish to thank Rhyannon Bemis, Chris Connolly, Danielle Delosh, Alex B. Fine, Meaghan Kennedy, and Janice Lomibao for their assistance in conducting this study. We also thank Geraldine Owen, whose face is portrayed in our stimuli and the children and families who gave their time to participate in this study.

Funding was provided by NAAR, by NIDCD (U19 DC03610; H. Tager-Flusberg, PI), which is part of the NICHD/NIDCD Collaborative Programs of Excellence in Autism, and by grant M01-RR00533 from the General Clinical Research Center program of the National Center for Research Resources, National Institutes of Health.

References

- Ashwin C, Baron-Cohen S, Wheelwright S, O’Riordan M, Bullmore ET. Differential activation of the amygdala and the ‘social brain’ during fearful face-processing in Asperger Syndrome. Neuropsychologia. 2007;45:2–14. doi: 10.1016/j.neuropsychologia.2006.04.014.

- Back E, Ropar D, Mitchell P. Do the eyes have it? Inferring mental states from animated faces in autism. Child Development. 2007;78:397–411. doi: 10.1111/j.1467-8624.2007.01005.x.

- Bar-Haim Y, Shulman C, Lamy D, Reuveni A. Attention to Eyes and Mouth in High-Functioning Children with Autism. Journal of Autism and Developmental Disorders. 2006;36. doi: 10.1007/s10803-005-0046-1.

- Baron-Cohen S, Wheelwright S, Hill J, Raste Y, Plumb I. The "Reading the Mind in the Eyes" Test revised version: a study with normal adults, and adults with Asperger syndrome or high-functioning autism. Journal of Child Psychology & Psychiatry & Allied Disciplines. 2001;42:241–251.

- Baron-Cohen S, Wheelwright S, Jolliffe T. Is there a "language of the eyes"? Evidence from normal adults and adults with autism or Asperger syndrome. Visual Cognition. 1997;4:311–331.

- Batty M, Taylor MJ. The development of emotional face processing during childhood. Developmental Science. 2006;9:207–220. doi: 10.1111/j.1467-7687.2006.00480.x.

- Buchan JN, Paré M, Munhall K. Spatial statistics of gaze fixations during dynamic face processing. Social Neuroscience. 2007;2:1–13. doi: 10.1080/17470910601043644.

- Bullock M, Russell JA. Concepts of emotion in developmental psychology. In: Izard CE, Read PB, editors. Measuring emotions in infants and children. Vol. 2. New York: Cambridge University Press; 1986. pp. 75–89.

- Castelli F. Understanding emotions from standardized facial expressions in autism and normal development. Autism. 2005;9:428–449. doi: 10.1177/1362361305056082.

- Celani G, Battacchi MW, Arcidiacono L. The understanding of the emotional meaning of facial expressions in people with autism. Journal of Autism and Developmental Disorders. 1999;29:57–66. doi: 10.1023/a:1025970600181.

- Critchley HD, Daly EM, Bullmore ET, Williams SCR, Van Amelsvoort T, Robertson DM, Rowe A, Phillips M, McAlonan G, Howlin P, Murphy DGM. The functional neuroanatomy of social behavior: Changes in cerebral blood flow when people with autistic disorder process facial expressions. Brain: A Journal of Neurology. 2000;123:2203–2212. doi: 10.1093/brain/123.11.2203.

- Custrini RJ, Feldman RS. Children’s social competence and nonverbal encoding and decoding of emotions. Journal of Clinical Child Psychology. 1989;18:336–342.

- Dadds MR, Perry Y, Hawes DJ, et al. Attention to the eyes reverses fear-recognition deficits in child psychopathy. British Journal of Psychiatry. 2006;189:280–281. doi: 10.1192/bjp.bp.105.018150.

- Dawson G, Webb SJ, Carver L, Panagiotides H, McPartland J. Young children with autism show atypical brain responses to fearful versus neutral facial expressions of emotion. Developmental Science. 2004;7:340–359. doi: 10.1111/j.1467-7687.2004.00352.x.

- De Gelder B, Vroomen J, van der Heide L. Face recognition and lip-reading in autism. European Journal of Cognitive Psychology. 1991;3:69–86.

- Deruelle C, Rondan C, Gepner B, Tardif C. Spatial frequency and face processing in children with autism and Asperger syndrome. Journal of Autism & Developmental Disorders. 2004;34:199–210. doi: 10.1023/b:jadd.0000022610.09668.4c.

- Dodd B. Lip reading in infants: Attention to speech presented in- and out-of synchrony. Cognitive Psychology. 1979;11:478–484. doi: 10.1016/0010-0285(79)90021-5.

- Dunn LM, Dunn LM. Peabody Picture Vocabulary Test. 3rd ed. Circle Pines, MN: American Guidance Service; 1997.

- Edwards K. The face of time: Temporal cues in facial expressions of emotion. Psychological Science. 1998;9:270–276.

- Egan GJ, Brown RT, Goonan L, Goonan BT, Celano M. The development of decoding of emotions in children with externalizing behavioral disturbances and their normally developing peers. Archives of Child Neurology. 1998;13:383–396.

- Ekman P. Expression and the nature of emotion. In: Scherer K, Ekman P, editors. Approaches to emotion. Hillsdale, N.J: Lawrence Erlbaum; 1984. pp. 319–343.

- Ekman P, Friesen WV. The Facial Action Coding System (FACS): A technique for the measurement of facial action. Palo Alto, CA: Consulting Psychologists Press; 1978.

- Elphick R. Issues in comparing the speechreading abilities of hearing-impaired and hearing 15–16 year-old pupils. British Journal of Educational Psychology. 1996;66:357–365. doi: 10.1111/j.2044-8279.1996.tb01202.x.

- Gepner B, de Gelder B, de Schonen S. Face processing in autistics: Evidence for a generalized deficit? Child Neuropsychology. 1996;2:123–139.

- Gepner B, Deruelle C, Grynfelt S. Motion and emotion: A novel approach to the study of face processing by young autistic children. Journal of Autism and Developmental Disorders. 2001;31:37–45. doi: 10.1023/a:1005609629218.

- Goren CC, Sarty M, Wu PY. Visual following and pattern discrimination of face-like stimuli by newborn infants. Pediatrics. 1975;56:544–549.

- Gross TF. The perception of four basic emotions in human and nonhuman faces by children with autism and other developmental disabilities. Journal of Abnormal Child Psychology. 2004;32:469–480. doi: 10.1023/b:jacp.0000037777.17698.01.

- Grossman JB, Klin A, Carter AS, Volkmar FR. Verbal bias in recognition of facial emotions in children with Asperger’s Syndrome. Journal of Child Psychology and Psychiatry. 2000;41:369–379.

- Grossman RB. Dynamic Facial Expressions in American Sign Language: Behavioral, Neuroimaging, and Facial-Coding Analyses for Deaf and Hearing Subjects. Dissertation. Boston, MA: Boston University; 2001.

- Grossman RB, Kegl J. To Capture a Face: A Novel Technique for the Analysis and Quantification of Facial Expressions in American Sign Language. Sign Language Studies. 2006;6(3).

- Habel U, Windischberger C, Derntl B, Robinson S, Kryspin-Exner I, Gur RC, Moser E. Amygdala activation and facial expressions: Explicit emotion discrimination versus implicit emotion processing. Neuropsychologia. 2007;45:2369–2377. doi: 10.1016/j.neuropsychologia.2007.01.023.

- Hariri A, Tessitore A, Mattay VS, Fera F, Weinberger DR. The amygdala response to emotional stimuli: a comparison of faces and scenes. NeuroImage. 2002;17:317–323. doi: 10.1006/nimg.2002.1179.

- Howard MA, Cowell PE, Boucher J, Broks P, Maves A, Farrant A, Roberts N. Convergent neuroanatomical and behavioral evidence of an amygdala hypothesis of autism. Neuroreport. 2000;11:2931–2935. doi: 10.1097/00001756-200009110-00020.

- Humphreys K, Minshew N, Leonard GL, Behrmann M. A fine-grained analysis of facial expression processing in high-functioning adults with autism. Neuropsychologia. 2007;45:685–695. doi: 10.1016/j.neuropsychologia.2006.08.003.

- Jemel B, Mottron L, Dawson M. Impaired face processing in autism: Fact or artifact? Journal of Autism and Developmental Disorders. 2006;36:91–106. doi: 10.1007/s10803-005-0050-5.

- Johnson MH, Morton J. Biology and cognitive development: The case of face recognition. Oxford: Blackwell; 1991.

- Joseph RM, Tanaka J. Holistic and part-based face recognition in children with autism. Journal of Child Psychology and Psychiatry. 2003;44:529–542. doi: 10.1111/1469-7610.00142.

- Kaufman A, Kaufman N. Manual for the Kaufman Brief Intelligence Test Second Edition. Circle Pines, MN: American Guidance Service; 2004.

- Kishon-Rabin L, Henkin Y. Age-related changes in the visual perception of phonologically significant contrasts. British Journal of Audiology. 2000;34:363–374. doi: 10.3109/03005364000000152.

- Klin A, Jones W, Schultz R, Volkmar F, Cohen D. Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Archives of General Psychiatry. 2002;59:809–816. doi: 10.1001/archpsyc.59.9.809.

- Kuhl PK, Meltzoff AN. The bimodal perception of speech in infancy. Science. 1982;218(4577):1138–1141. doi: 10.1126/science.7146899.

- Kuhl PK, Meltzoff AN. The intermodal representation of speech in infants. Infant Behavior and Development. 1984;7:361–381.

- Lahaie A, Mottron L, Arguin M, Berthiaume C, Jemel B, Saumier D. Face perception in high-functioning autistic adults: evidence for superior processing of face parts, not for a configural face processing deficit. Neuropsychology. 2006;20:30–41. doi: 10.1037/0894-4105.20.1.30.

- Langdell T. Recognition of faces: an approach to the study of autism. Journal of Child Psychology and Psychiatry. 1978;19:255–268. doi: 10.1111/j.1469-7610.1978.tb00468.x.

- Lange K, Williams LM, Young AW, Bullmore ET, Brammer MJ, Williams SC, Gray J, Phillips M. Task instructions modulate neural responses to fearful facial expressions. Biological Psychiatry. 2003;53:226–232. doi: 10.1016/s0006-3223(02)01455-5.

- Lidestam B, Beskow J. Visual phonemic ambiguity and speechreading. Journal of Speech Language & Hearing Research. 2006;49:835–847. doi: 10.1044/1092-4388(2006/059).

- Lidestam B, Lyxell B, Lundeberg M. Speech-reading of synthetic and natural faces: effects of contextual cueing and mode of presentation. Scandinavian Audiology. 2001;30:89–94. doi: 10.1080/010503901300112194.

- Lindner JL, Rosén LA. Decoding of emotion through facial expression, prosody and verbal content in children and adolescents with Asperger’s syndrome. Journal of Autism and Developmental Disorders. 2006;36:769–777. doi: 10.1007/s10803-006-0105-2.

- Lobaugh NJ, Gibson E, Taylor MJ. Children recruit distinct neural systems for implicit emotional face processing. NeuroReport. 2006;17:215–219. doi: 10.1097/01.wnr.0000198946.00445.2f.

- Lord C, Rutter M, DiLavore PC, Risi S. Autism Diagnostic Observation Schedule - WPS (ADOS-WPS). Los Angeles, CA: Western Psychological Services; 1999.

- Lord C, Rutter M, Le Couteur A. Autism Diagnostic Interview-Revised: a revised version of a diagnostic interview for caregivers of individuals with possible pervasive developmental disorders. Journal of Autism and Developmental Disorders. 1994;24:659–685. doi: 10.1007/BF02172145.

- Lyxell B, Holmberg I. Visual speechreading and cognitive performance in hearing-impaired and normal hearing children (11–14 years). British Journal of Educational Psychology. 2000;70:505–518. doi: 10.1348/000709900158272.

- Montague DPF, Walker-Andrews AS. Peekaboo: A new look at infants’ perception of emotion expressions. Developmental Psychology. 2001;37:826–838.

- Morris JS, deBonis M, Dolan RJ. Human amygdala responses to fearful eyes. Neuroimage. 2002;17:214–222. doi: 10.1006/nimg.2002.1220.

- Ogai M, Matsumoto H, Suzuki K, Ozawa F, Fukuda R, Uchiyama I, Suckling J, Isoda H, Mori N, Takei N. fMRI study of recognition of facial expressions in high-functioning autistic patients. Neuroreport. 2003;14:559–563. doi: 10.1097/00001756-200303240-00006.

- Pelphrey KA, Sasson NJ, Reznick JS, Paul G, Goldman BD, Piven J. Visual scanning of faces in autism. Journal of Autism and Developmental Disorders. 2002;32:249–261. doi: 10.1023/a:1016374617369.

- Phillippot P, Feldman RS. Age and social competence in preschooler’s decoding of facial expression. British Journal of Social Psychology. 1990;29:43–54. doi: 10.1111/j.2044-8309.1990.tb00885.x.

- Ponnet KS, Roeyers H, Buysse A, De Clercq A, van der Heyden E. Advanced mind-reading in adults with Asperger Syndrome. Autism. 2004;8:249–266. doi: 10.1177/1362361304045214.

- Roeyers H, Buysse A, Ponnet KS, Pichal B. Advanced mind reading tests: Empathetic accuracy in adults with a pervasive developmental disorder. Journal of Child Psychology and Psychiatry. 2001;42:271–278.

- Rosenblum L, Schmuckler MA, Johnson JA. The McGurk effect in infants. Perception and Psychophysics. 1997;59:347–357. doi: 10.3758/bf03211902.

- Ross LA, Saint-Amour D, Leavitt VM, Javitt DC, Foxe JJ. Do you see what I am saying? Exploring visual enhancement of speech comprehension in noisy environments. Cerebral Cortex. 2007;17:1147–1153. doi: 10.1093/cercor/bhl024.

- Schultz RT. Developmental deficits in social perception in autism: The role of the amygdala and fusiform face area. International Journal of Developmental Neuroscience. 2005;23:125–141. doi: 10.1016/j.ijdevneu.2004.12.012.

- Spezio ML, Adolphs R, Hurley RSE, Piven J. Abnormal use of facial information in high-functioning autism. Journal of Autism and Developmental Disorders. 2007;37:929–939. doi: 10.1007/s10803-006-0232-9.

- Sumby WH, Pollack I. Visual contribution to speech intelligibility in noise. Journal of the Acoustical Society of America. 1954;26:212–215.

- Tantam D, Monaghan L, Nicholson H, Stirling J. Autistic children's ability to interpret faces: a research note. Journal of Child Psychology & Psychiatry & Allied Disciplines. 1989;30:623–630. doi: 10.1111/j.1469-7610.1989.tb00274.x.

- Tardif C, Lainé F, Rodriguez M, Gepner B. Slowing down presentation of facial movements and vocal sounds enhances facial expression recognition and induces facial-vocal imitation in children with autism. Journal of Autism and Developmental Disorders. 2007;37:1469–1484. doi: 10.1007/s10803-006-0223-x.

- Teunisse JP, de Gelder B. Impaired categorical perception of facial expressions in high-functioning adolescents with autism. Child Neuropsychology. 2001;7:1–14. doi: 10.1076/chin.7.1.1.3150.

- van der Geest J, Kemner C, Verbaten M, van Engeland H. Gaze behavior of children with pervasive developmental disorder toward human faces. Archives of General Psychiatry. 2002;59:809–816. doi: 10.1111/1469-7610.00055.

- Whalen PJ, Kagan J, Cook RG, Davis FC, Kim H, Polis S, McLaren DG, Somerville LH, McLean AA, Maxwell JS, Johnstone T. Human amygdala responsivity to masked fearful eye whites. Science. 2004;306:2061. doi: 10.1126/science.1103617.

- Whalen PJ, Rauch SL, Etcoff NL, McInerney SC, Lee M, Jenike MA. Masked presentations of emotional facial expressions modulate amygdala activity without explicit knowledge. Journal of Neuroscience. 1998;18:411–418. doi: 10.1523/JNEUROSCI.18-01-00411.1998.

- Williams JHG, Massaro DW, Peel NJ, Bosseler A, Suddendorf T. Visual-auditory integration during speech imitation in autism. Research in Developmental Disabilities. 2004;25:559–575. doi: 10.1016/j.ridd.2004.01.008.