Abstract

Constraint-based lexical models of language processing assume that readers resolve temporary ambiguities by relying on a variety of cues, including particular knowledge of how verbs combine with nouns. Previous experiments have demonstrated verb bias effects only in structurally complex sentences, and have been criticized on the grounds that such effects could be due to a rapid reanalysis stage in a two-stage modular processing system. In a self-paced reading experiment and an eyetracking experiment, we demonstrate verb bias effects in sentences with simple structures that should require no reanalysis, and thus provide evidence that the combinatorial properties of individual words influence the earliest stages of sentence comprehension.

Keywords: language, sentence comprehension, parsing, verb bias, garden-path sentences, eyetracking, self-paced reading

The apparent everyday ease of language comprehension conceals considerable complexity in the processes that are necessary to its success. Certain kinds of sentences, however, reveal the underlying complexity by leading to characteristic errors of interpretation called “garden paths.” “Garden-pathing” occurs when a sentence is misinterpreted at first and then must be reinterpreted. Since they require reanalysis, garden-path sentences generally take longer to understand than other sentences. One goal of language comprehension research is to provide explanations for why and how certain kinds of sentences lead to garden-pathing, and thus provide a fuller account of the human capacity for language.

Sentence (1a) below is an example of a sentence that is likely to garden-path most readers.

1a. The professor read the newspaper had been destroyed.

1b. The professor read the newspaper during his break.

The phrase the newspaper in (1a) is likely to be interpreted initially as the direct object of read, but the rest of the sentence makes it clear that that interpretation is incorrect. Instead, newspaper in (1a) turns out to be the subject of an embedded clause. Thus, the role of the phrase the newspaper is temporarily ambiguous in sentences like (1), since it could turn out to be either the direct object of read as in (1b), or the subject of an embedded clause as in (1a). Most readers would probably make the direct object interpretation of the newspaper at first in these examples, and thus be surprised when the word had disambiguates the sentence in the unexpected direction in (1a). Potential mistakes could easily be avoided, of course, if people waited until they reached the word had in (1a) or during in (1b) before deciding how newspaper should be integrated into the sentence. However, considerable evidence indicates that people generally do not wait. Instead, as soon as they recognize each word, they try to integrate it into a continually evolving interpretation of the sentence. Since there are many kinds of sentences containing temporary ambiguities, people are often faced with uncertainty about how to integrate incoming words into the sentence structure. In (1a), a decision must be made about whether or not the newspaper is the thing being read. How and when people make such decisions, and what kinds of information influence those decisions, has been the focus of intense debate in psycholinguistics.

Broadly speaking, two classes of comprehension theories have been developed. Modular two-stage theories (e.g., Frazier, 1990) argue that comprehension is made manageable by first trying to resolve ambiguities in the simplest possible way. According to the Minimal Attachment Principle of the Garden Path Model (Frazier & Fodor, 1978), for example, nouns following verbs are first interpreted as direct objects because that is the simplest alternative. If wrong, this interpretation is then revised at a second reanalysis stage. Simpler sentence structures are thought to be easier and thus faster for comprehenders to construct, so a system that always pursues those first could be especially efficient. Of course, the relative efficiency of a system that works in this way depends on how often the initial choices are wrong, as well as the cost of revising them when they are.

In the second type of comprehension model, multiple factors continuously contribute to and constrain decisions about ambiguities throughout comprehension. Such models have been most fully developed in the constraint satisfaction framework (e.g., MacDonald, Pearlmutter, & Seidenberg, 1994; Trueswell & Tanenhaus, 1994). Such interactive models generally incorporate some limited capacity to pursue multiple interpretations in parallel, each weighted according to the evidence in its favor. The likelihood that a verb will be followed by a particular type of sentence structure constitutes one important source of evidence in most such models, leading to the use of the label “constraint-based lexical models” (Trueswell & Tanenhaus, 1994). Knowledge tied to verbs is particularly influential because verbs tend to place strong constraints on how the other words in a sentence can combine. For example, the verb read is one that can be followed by simple direct objects, or more complex embedded clauses, or various kinds of prepositional phrases, but it is most likely to be followed by a direct object (i.e., it has a “Direct-object bias”). Thus, comprehenders might find a sentence like example (1a), in which read is followed by an embedded clause, particularly difficult because it violates their verb-based expectation for a direct object. For sentences like example (1a), then, both kinds of models make the same prediction, but for partially different reasons.

Proponents of both kinds of models find supporting evidence in the literature, in part because it has proved to be quite difficult to clearly distinguish the initial decisions of the parser from subsequent reanalysis stages when initial decisions are incorrect. Evidence for the initial effects of multiple types of information, which some theorists have taken as support for an interactive system, has been interpreted by modular two-stage theorists as reflecting reanalysis. This impasse has arisen in part because most research so far has been restricted to testing the comprehension of temporarily ambiguous sentences that turn out to have the more complex of their possible structures. Various relevant factors have been found to reliably ease the interpretation of such sentences, but it remains possible that such effects are due to reanalysis. Frazier (1995) has argued that it would be more convincing to show that the comprehension of simple structures is also affected by the same kinds of cues. Since sentences with simple structures should never require reanalysis according to the Garden Path Model, their comprehension should not be affected by the kinds of cues that have been shown to affect more complex structures. As Frazier (1995) notes:

“It may be significant that garden paths have never been convincingly demonstrated in the processing of analysis A (the structurally simplest one)... In such cases, if analysis A ultimately proves to be correct, perceivers should show evidence of having been garden-pathed by a syntactically more complex analysis even though the syntactically simpler analysis is correct.” (p. 441)

The studies reported here follow the strategy suggested by Frazier, and examine the effect of one particular kind of cue on the comprehension of one particular kind of temporarily ambiguous sentence. The sentences contain a temporary ambiguity about whether a noun phrase immediately following a verb is its direct object or the subject of an embedded clause, as illustrated earlier in example (1). The relevant cue that is manipulated is the relative likelihood of the main verb being used in sentences with different kinds of structures (called “verb bias” here). Our studies include sentences that are resolved in the simplest possible way, as well as ones resolved in the more complex way.

The goal of the studies reported here is to determine whether verb bias influences the earliest stages of sentence processing by investigating its effects in temporarily ambiguous sentences that turn out to have the simpler of their possible structures. Since such sentences should never require reanalysis, evidence that verb bias affects their comprehension would provide strong evidence that the earliest stages of sentence processing are influenced by information tied to specific words, as predicted by constraint-based lexical models.

Method

Participants

Sixty-three native English speakers (23 males, 55 right-handed, mean age 21) participated for either partial course credit or a minimal sum. Due to experimenter error, only 54 participants’ data were available for analysis.

Materials

Verb bias was assessed in a norming study in which a group of 108 participants wrote sentence continuations for proper name + verb fragments. The norming study is described in more detail in Garnsey et al. (1997). Continuations were hand coded for each verb for the number of 1) direct objects, 2) that-clauses, and 3) other types of sentence continuations. Verbs were classified as Direct-object bias if they were used at least twice as often with a direct object as with an embedded clause in the norming study, and as Clause-bias if they were used at least twice as often with an embedded clause as with a direct object. Twenty verbs fitting these criteria were chosen for each verb type, with most of the verbs being used far more frequently with their preferred continuation. (See Table 1; biases for individual verbs are given in Appendix 2.) The two sets of verbs were matched in length and approximately matched in frequency, on average.

Table 1.

Verb Properties

| | Mean DO-bias strength of verb¹ | Mean Clause-bias strength¹ | Mean frequency of verb² | Mean length of verb | Mean frequency (mean summed length) of 2-word disambig region for clause continuations | Mean frequency² (mean summed length) of 2-word disambig region for direct object continuations | Mean frequency (mean summed length) of first disambig word for clause continuations | Mean frequency (mean summed length) of first disambig word for direct object continuations |
|---|---|---|---|---|---|---|---|---|
| Direct-object bias verbs | 76% | 13% | 190 | 7.9 | 7117 (9.0) | 10628 (9.8) | 1287 (5.7) | 968 (5.5) |
| Clause-bias verbs | 11% | 58% | 108 | 7.6 | 6834 (9.5) | 10758 (9.3) | 852 (5.8) | 834 (5.8) |

¹ Bias was calculated as the percentage of sentences with a particular structure, out of all sentences containing the verb.
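The bias measure and the two-to-one classification criterion described in this section can be illustrated with a short sketch. This is only a minimal illustration under stated assumptions, not the coding procedure actually used in the norming study: the function names and the "unclassified" label are hypothetical, and the counts passed in would come from the hand-coded continuations.

```python
def bias_percentages(n_direct_object, n_clause, n_other):
    """Return (DO-bias %, Clause-bias %) out of all continuations for one verb.

    Follows the description of bias as the percentage of sentences with a
    particular structure, out of all sentences containing the verb.
    """
    total = n_direct_object + n_clause + n_other
    if total == 0:
        raise ValueError("no continuations recorded for this verb")
    return 100.0 * n_direct_object / total, 100.0 * n_clause / total


def classify_verb(n_direct_object, n_clause):
    """Apply the 'at least twice as often' criterion to one verb's counts.

    The label 'unclassified' is a hypothetical name for verbs meeting
    neither criterion.
    """
    if n_direct_object > 0 and n_direct_object >= 2 * n_clause:
        return "Direct-object bias"
    if n_clause > 0 and n_clause >= 2 * n_direct_object:
        return "Clause-bias"
    return "unclassified"
```

With made-up counts, `classify_verb(82, 14)` falls in the Direct-object bias class and `classify_verb(30, 20)` meets neither criterion.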

For each verb, three sentence versions were constructed containing the same subject noun and post-verbal noun. One version had a direct-object continuation (as shown in 2b and 3b below), and the other two both had embedded clause continuations (as shown in 2a and 3a below). One of the embedded clause versions was temporarily ambiguous, while the other was made unambiguous by including the complementizer that. Thirty plausible noun + verb + noun combinations were taken directly from items used by Garnsey et al. (1997), but new sentence continuations were written for them to allow better matching of the disambiguating regions across direct object and embedded clause continuations. Another 48 sentences were newly created. For each of 38 verbs, two item sets with different subject and post-verbal nouns were created, and for two additional verbs a single item set was created, thus yielding 78 experimental item sets overall. (There was only one item set each for two of the verbs because counterbalancing the design required the total number of item sets to be a multiple of three.) In all items, the temporary ambiguity about whether the second noun phrase in the sentence was a direct object of the main verb or the subject of an embedded clause was always immediately disambiguated by either a verb in the embedded clause versions (e.g., might in 2a and could in 3a) or a conjunction or preposition in the direct object versions (e.g., because in 2b and when in 3b). Since it is impossible to create a grammatical English sentence where the complementizer that is followed by a direct object, the design could not be completely crossed. Half of the items contained Direct-object bias verbs as in (3) and half contained Clause-bias verbs as in (2). All experimental sentences contained 11 words in their ambiguous versions and 12 words in their unambiguous versions.

Clause-bias verb

2a. The ticket agent admitted (that) the mistake might not have been caught.

2b. The ticket agent admitted the mistake because she had been caught.

Direct-Object bias verb

3a. The CIA director confirmed (that) the rumor could mean a security leak.

3b. The CIA director confirmed the rumor when he testified before Congress.

The temporarily ambiguous nouns were all plausible as direct objects of the verbs they followed (with the exception of two items for which a less plausible direct object was used in error in Experiment 1; both of these items had Direct-object bias verbs and were dropped from all analyses). Plausibility was assessed in two kinds of norming studies. In one, people rated the plausibility of sentences like “The ticket agent admitted the mistake.” on a 7-point scale (7 = highly plausible), where a period following the post-verbal noun made it clear that it was a direct object. Mean plausibility ratings were 5.8 for items with Clause-bias verbs and 6.6 for items with Direct-object bias verbs. In the second kind of norming study, people rated fragments like “The ticket agent admitted that the mistake …” as the beginnings of sentences, to evaluate the plausibility of the embedded clause interpretations of the items. Mean plausibility when such fragments contained Clause-bias verbs was 6.4, and 6.0 when they contained Direct-object bias verbs.

Notice that there was a trade-off in the two kinds of plausibility ratings, such that plausibility was rated slightly higher whenever the sentence structure matched the verb’s bias. It should not be surprising that people rate sentences as more plausible when the verb is used in the way it most often is, and a similar pattern was observed by Garnsey et al. (1997) for a partially overlapping set of items. The ratings show that the materials met the goal of using nouns that were plausible both as direct objects and as subjects of embedded clauses, but they highlight the difficulty of evaluating plausibility. It is not clear that matching plausibility ratings even more closely across verb types would have the desired effect of more closely equating the plausibility of sentences with the two kinds of verbs. That is, higher ratings for direct objects following Clause-bias verbs might require using verb/noun pairings that are actually even more plausible than the ones that produce the same ratings for Direct-object bias verbs. We concluded that our materials were sufficiently matched in plausibility, given that Garnsey et al. (1997) found no early effects of far larger plausibility differences (3.6 on the same rating scale, compared to 0.8 here) on initial reading times when verbs were strongly biased, as they are here. However, to rule out the possibility that any observed reading time differences might be due to differences in the plausibility of the temporarily ambiguous noun as a direct object, rather than or in addition to verb bias, the results were analyzed for both the full set of items and for a subset that equated plausibility ratings across verb bias. In the set that equated rated plausibility, the 11 highest-rated items with Clause-bias verbs and the 11 lowest-rated items with Direct-object bias verbs were dropped, leaving 56 items over which the average rating was 6.4 for both verb types. 
The items that were dropped in this analysis are indicated in Appendix 1.

The two words following the post-verbal noun, which are underlined in (2) and (3), are called the “disambiguating region” here. It was not possible to closely match the frequency of the two words in the disambiguating region across the two types of sentence continuations while also satisfying other constraints on stimulus naturalness and plausibility. On average, the two words in the disambiguating region of direct object continuations were more frequent than those in the embedded clause continuations. However, this is not particularly problematic because our most important predictions concern the effect of the preceding main verb on reading times for the same type of continuation, and within continuation type, the frequencies of the disambiguating words were well-matched for the two types of verbs.

However, within continuation type there were small but potentially problematic length differences. The two-word disambiguation toward a clause continuation was 9.5 characters long on average after a Clause-bias verb, while it was 9.0 characters long on average after a Direct-object bias verb. The opposite pattern was true for direct object continuations, which were 9.8 characters long after Direct-object bias verbs and 9.3 characters long on average after Clause-bias verbs. Thus, in both cases the two-word disambiguating region tended to be a half character longer, on average, in continuations that matched verb bias. These differences arose because the length of the first but not the second word in the two-word disambiguating region was carefully controlled during stimulus construction. In spite of this, we decided to group the two words into a single analysis region to increase the stability of the results at this critical region, since it is well-known that short, high-frequency words (like most of the two words in the disambiguating region in these sentences) tend to be read very quickly and even skipped fairly often in free reading. Such words often fail to show reliable differences in reading time between conditions even when longer words immediately before and after do. Although a two-word disambiguating region was used, the analysis took a two-pronged approach to mitigate the possibility that length differences might obscure effects of verb bias at the disambiguation. First, as is customary, we corrected reading times for length differences, using procedures described below in the results section for Experiment 1. Second, to rule out the possibility that the length-correction procedure might distort results at the crucial disambiguating region, we also analyzed uncorrected reading times for just the first disambiguating word, since its length was matched better across conditions. 
If the pattern of uncorrected condition means for the first word is similar to that for the length-corrected two-word region, we can be assured that the pattern is not an artifact of the length-correction procedure.

The 78 experimental item sets were counterbalanced over three lists such that participants saw only one version from each item set and saw equal numbers of items in each condition. Each verb appeared twice in a list, once with a direct object continuation and once with a clause continuation, with different subject and post-verbal nouns each time, with the exceptions that two verbs appeared only once per list, as described above, and that one verb, asserted, was followed by the same noun, belief, in both of its items by mistake. In the latter case, the rest of the two asserted sentences were different. The experimental items were presented intermixed with 119 distractors and preceded by 10 practice items for a total of 207 trials. There were six types of distractors, including two reduced that-clauses, 23 conjoined clauses, 27 sentences with subject relative clauses, 24 sentences with infinitive clauses, 20 sentences with transitively biased verbs used in simple transitive sentences such as The politician ignored the scandal in his brief speech, and 23 sentences with intransitively biased verbs used in simple intransitive sentences such as The physical act of respiration begins with the diaphragm. The distractors varied in length from 7 to 14 words.
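One common way to counterbalance three sentence versions over three lists is a Latin-square rotation, sketched below. This is an assumption about how such lists could be built, not a description of the procedure actually used here. In particular, the sketch does not enforce the additional constraint that each verb appear once with each continuation type within a list, and the condition labels and function name are hypothetical.

```python
CONDITIONS = ("ambiguous clause", "unambiguous clause", "direct object")


def build_lists(n_item_sets, conditions=CONDITIONS):
    """Rotate sentence versions across lists in a Latin-square fashion.

    Returns one dict per list, mapping item-set index -> condition shown.
    Each item set appears in a different condition on each list, and each
    list contains equal numbers of items per condition.
    """
    n_conditions = len(conditions)
    if n_item_sets % n_conditions != 0:
        raise ValueError("number of item sets must be a multiple of "
                         "the number of conditions")
    lists = []
    for list_index in range(n_conditions):
        assignment = {
            item: conditions[(item + list_index) % n_conditions]
            for item in range(n_item_sets)
        }
        lists.append(assignment)
    return lists
```

Calling `build_lists(78)` gives three lists with 26 item sets per sentence version, which illustrates why the total number of item sets needs to be a multiple of three.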

Each sentence in the experiment was followed by a yes/no comprehension question. Since the meaning of the sentences differed between the direct object and clause continuations, the comprehension question sometimes differed across versions in order to fit the sentence. Items were pseudo-randomly ordered with the constraints that 1) the first two sentences in the list were distractor items; 2) there were no more than two experimental items in a row; and 3) there were no more than 4 trials in a row with the same answer to the post-sentence question. Trials containing the same verb were as widely spaced as possible, with a minimum of 25 trials between the two repetitions of the same verb. Each list was presented in the same order, and each participant saw only one list.

Procedure

Participants read 207 sentences in a word-by-word self-paced moving-window paradigm. Sentences were displayed on a computer screen using the MEL (Microcomputer Experimental Laboratory) software package. Each sentence was followed by a yes/no comprehension question that was answered by pushing a key on a computer keyboard.

Each trial began with dashes on the screen in place of all non-space characters in the sentence. After the first spacebar push, the trial number was displayed at the left side of the screen. With each successive spacebar push, the next word appeared and the previous word reverted to dashes. All sentences fit on exactly one line of the computer screen. Participants were instructed to use whichever hand they felt most comfortable using to push the spacebar and answer the questions. The experiment lasted about 35 minutes.
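The moving-window display can be made concrete with a small sketch that generates the successive screen states for one sentence. It is an illustration only: the original experiment was run in MEL, the function name is hypothetical, and the sketch leaves out key-press timing and the trial-number display.

```python
import re


def moving_window_frames(sentence):
    """Return the sequence of displays for a word-by-word moving window.

    The first frame shows dashes in place of every non-space character.
    Each later frame reveals exactly one word, with all other words
    (including the one just read) shown as dashes.
    """
    words = sentence.split(" ")
    masked = [re.sub(r"\S", "-", word) for word in words]
    frames = [" ".join(masked)]
    for i, word in enumerate(words):
        frames.append(" ".join(masked[:i] + [word] + masked[i + 1:]))
    return frames


if __name__ == "__main__":
    for frame in moving_window_frames("The ticket agent admitted the mistake."):
        print(frame)
```

In a real experiment, the reading time for a word would be the interval between the key press that reveals it and the next key press.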

Results

Two items were dropped from further analysis when errors in them were discovered (indicated in Appendix 1). Both of the dropped items contained Direct-object bias verbs.

Accuracy in answering the comprehension questions was generally high, with 91% correct performance overall. However, questions following sentences containing Direct-object bias verbs were answered slightly less accurately (89%) than those following sentences containing Clause-bias verbs (92%), reliable only by participants (F1(1,53) = 11.7, p < .01; F2(1,74) = 2.3, p > .1; MinF′(1,105) = 1.9, p > .1). This difference came almost entirely from sentences with direct object continuations, which were responded to 8% less accurately when they contained Direct-object bias verbs than when they contained Clause-bias verbs, exceeding the 95% confidence interval of 4% on the difference by both participants and items. Because only one sentence type showed such a difference, there was an interaction between verb bias and sentence structure in the omnibus ANOVA, but only by participants (F1(2,106) = 8.4, p < .01; F2(2,148) = 2.8, p > .1; MinF′(2,121) = 2.1, p > .1). There was no main effect of sentence structure on accuracy.

Trials on which there was an incorrect response to the comprehension question were omitted from analyses of the reading time data, resulting in an overall loss of 9% of the data. The primary analyses reported here are based on reading times corrected for length differences (Ferreira & Clifton, 1986). A regression equation characterizing the relationship between region length and reading time was calculated separately for each participant, based on the experimental items only. (Length correction was also calculated using all items including distractors, which produced the same pattern of results.) Expected reading times were calculated for each region length for each participant based on the regression equations, and then subtracted from the actual reading times to yield length-corrected residual reading times. The length-corrected data were then trimmed at three standard deviations from the overall mean across conditions for a particular participant in a particular region, such that values exceeding the cutoff were replaced with the value at three standard deviations. Approximately 1.4% of the data were replaced in this way. Analyses were then performed separately for each of the sentence regions indicated below in (4) with slashes separating the regions. The critical disambiguating region is underlined.

4. The ticket agent/admitted/that/the mistake/might not/have been caught.
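The length-correction and trimming steps can be sketched as follows for a single participant. This is a minimal illustration under several assumptions rather than the analysis code actually used: it fits an ordinary least-squares line by hand, uses the sample standard deviation for the cutoff, and expects the caller to pass in the values for one participant (and, for trimming, one region). All function names are hypothetical.

```python
from statistics import mean, stdev


def fit_line(lengths, times):
    """Least-squares fit of reading time on region length (one participant)."""
    mean_len, mean_time = mean(lengths), mean(times)
    sxx = sum((x - mean_len) ** 2 for x in lengths)
    if sxx == 0:
        raise ValueError("region lengths must vary to estimate a slope")
    sxy = sum((x - mean_len) * (y - mean_time)
              for x, y in zip(lengths, times))
    slope = sxy / sxx
    intercept = mean_time - slope * mean_len
    return slope, intercept


def residual_reading_times(lengths, times):
    """Actual minus length-predicted reading time for each observation."""
    slope, intercept = fit_line(lengths, times)
    return [t - (intercept + slope * x) for x, t in zip(lengths, times)]


def trim_to_n_sd(values, n_sd=3.0):
    """Replace values beyond n_sd standard deviations with the cutoff value.

    Intended for one participant's residuals in one region, pooled across
    conditions. Uses the sample standard deviation (an assumption).
    """
    center, spread = mean(values), stdev(values)
    low, high = center - n_sd * spread, center + n_sd * spread
    return [min(max(v, low), high) for v in values]
```

A negative residual means that a region was read faster than its length alone would predict for that participant, which is why many cells in the reading time tables are negative.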

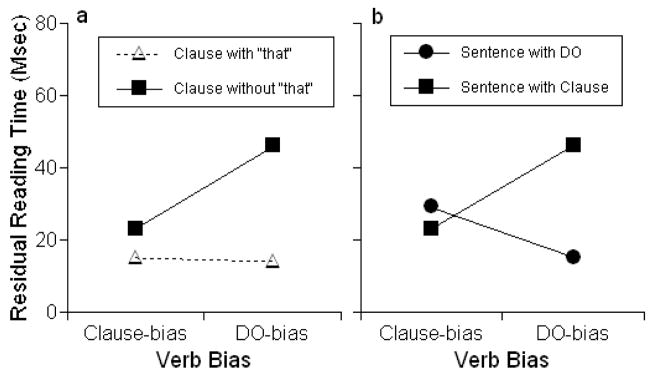

Since the design was necessarily incompletely crossed, two separate analyses of variance (ANOVAs) were conducted on partially overlapping subsets of the data, for both question response accuracy and reading time measures. First, a 2 (verb bias) × 2 (ambiguity: with or without that) ANOVA tested the effect of verb bias in sentences with embedded clauses. (The label ANOVA1 is used in tables throughout the paper for this analysis.) This analysis omitted the conditions with direct object continuations and specifically tested whether the results replicated previous studies showing an effect of verb bias in sentences with embedded clause continuations. A second 2 (verb bias) × 2 (sentence continuation type) ANOVA tested the effect of verb bias in temporarily ambiguous sentences with the two different kinds of continuations (labeled ANOVA2 in tables throughout). This analysis omitted unambiguous sentences and compared only the temporarily ambiguous conditions. All analyses were conducted by both participants and items, and MinF′ values were also computed (Clark, 1973). For the most part, ANOVA results will be reported in tables (with the occasional isolated result reported in the text instead). 95% confidence intervals were calculated for pairwise contrasts of interest (Masson & Loftus, 2003). Mean length-corrected residual reading times are shown for all conditions in all regions in Table 2 below, and the results for just the disambiguating region are also shown in Figures 1a and 1b. Results of both ANOVAs at both the temporarily ambiguous noun phrase and the disambiguating verb region are shown in Table 3.
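For readers unfamiliar with MinF′, the sketch below shows how it can be computed from a by-participants F1 and a by-items F2 using the standard formula from Clark (1973). The function name and argument names are hypothetical, and the sketch is an illustration rather than the software used for the reported analyses.

```python
def min_f_prime(f1, df1_error, f2, df2_error):
    """Compute MinF' and its denominator degrees of freedom.

    f1, df1_error: by-participants F and its error (denominator) df
    f2, df2_error: by-items F and its error (denominator) df
    The numerator df of MinF' is the same as that of F1 and F2.
    """
    if f1 < 0 or f2 < 0 or f1 + f2 == 0:
        raise ValueError("F values must be non-negative and not both zero")
    min_f = (f1 * f2) / (f1 + f2)
    df_error = (f1 + f2) ** 2 / (f1 ** 2 / df2_error + f2 ** 2 / df1_error)
    return min_f, df_error


if __name__ == "__main__":
    # Rounded F values for the V x A interaction at the disambiguating
    # region (ANOVA1), taken from Table 3.
    print(min_f_prime(19.4, 53, 14.3, 74))
```

Applied to the rounded values F1(1,53) = 19.4 and F2(1,74) = 14.3 from Table 3, this gives a MinF′ of about 8.2 with roughly 127 denominator degrees of freedom. The table reports 8.3, and the small discrepancy presumably reflects rounding of the F values before they were entered here.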

Table 2.

Mean residual reading times in Experiment 1 (msec)

| | Subject NP | admitted/confirmed | (that) | Temp. Ambig. NP | Disambig. | Rest of sentence |
|---|---|---|---|---|---|---|
| Clause-bias verb + that + Clause | 19 | 59 | 23 | −5 | 15 | −69 |
| Clause-bias verb + Clause | 19 | 50 | - | 27 | 23 | −64 |
| Clause-bias verb + DO | 21 | 47 | - | 28 | 29 | −62 |
| DO-bias verb + that + Clause | 9 | 65 | 26 | 3 | 14 | −70 |
| DO-bias verb + Clause | 17 | 68 | - | 17 | 46 | −61 |
| DO-bias verb + DO | 20 | 65 | - | 21 | 15 | −60 |


Figure 1.

Residual reading times at the disambiguating region in Experiment 1

Table 3.

Analysis of variance results at the temporarily ambiguous NP and the disambiguating verb region for length-corrected reading times in Experiment 1

| Region | Analysis | Source | F1 (by participants) | df1 | F2 (by items) | df2 | MinF′ | df |
|---|---|---|---|---|---|---|---|---|
| Temporarily Ambiguous NP | ANOVA1 | Verb | 3.1! | 1,53 | 2.4 | 1,74 | 1.3 | 1,127 |
| | | Ambiguity | <1 | 1,53 | <1 | 1,74 | <1 | |
| | | V × A | <1 | 1,53 | <1 | 1,74 | <1 | |
| | ANOVA2 | Verb | <1 | 1,53 | <1 | 1,74 | <1 | |
| | | Structure | 30.4** | 1,53 | 36.5** | 1,74 | 16.6** | 1,119 |
| | | V × S | 4.3* | 1,53 | 4.0* | 1,74 | 2.1 | 1,125 |
| Disambig | ANOVA1 | Verb | <1 | 1,53 | <1 | 1,74 | <1 | |
| | | Ambiguity | 9.9** | 1,53 | 7.1** | 1,74 | 4.1* | 1,127 |
| | | V × A | 19.4** | 1,53 | 14.3** | 1,74 | 8.3** | 1,127 |
| | ANOVA2 | Verb | 11.3** | 1,53 | 3.7! | 1,74 | 2.8! | 1,113 |
| | | Structure | 16.8** | 1,53 | 26.9** | 1,74 | 10.4** | 1,109 |
| | | V × S | 6.0* | 1,53 | 7.8** | 1,74 | 3.4! | 1,117 |

Note. ANOVA1 includes only temporarily ambiguous and unambiguous sentences with Clause continuations. (Clause-bias examples: “The ticket agent admitted the mistake might not have been caught” vs. “The ticket agent admitted that the mistake might not have been caught”). ANOVA2 includes only temporarily ambiguous sentences with either Clause or DO continuations. (Clause-bias examples: “The ticket agent admitted the mistake might not have been caught” vs. “The ticket agent admitted the mistake because she had been caught”).

\* p < .05; \*\* p < .01; ! p < .10

Disambiguating region

Since the hypotheses driving this study primarily concern what happens at the disambiguating region, results from that region will be described first. As noted above in Table 2, the disambiguating region was read 21 msec more slowly overall in temporarily ambiguous sentences without that. However, this ambiguity effect was 24 msec larger in sentences with Direct-object bias verbs than in sentences with Clause-bias verbs, leading to a reliable interaction between verb bias and ambiguity. Reading times were also 12 msec slower overall in sentences with Direct-object bias verbs than with Clause-bias verbs, resulting in a main effect of verb bias by participants. This pattern of results replicates previous studies finding that people had disproportionately more difficulty when a Direct-object bias verb was followed by a clause without the complementizer that (e.g., Garnsey et al., 1997; Trueswell et al., 1993) than when a Clause-bias verb was.

The central focus of the current study, however, is what happens in sentences with direct object continuations, which was assessed in a second ANOVA testing the effect of verb bias in temporarily ambiguous sentences with both kinds of continuations (i.e., direct objects and embedded clauses), illustrated in Figure 1b and summarized in Table 3 as ANOVA2. As noted in the table, the disambiguating region was read 13 msec more slowly overall in embedded clause continuations than in direct object continuations, producing a reliable main effect of continuation type. However, as Figure 1b shows, this effect came entirely from sentences with Direct-object bias verbs. In sentences with Clause-bias verbs, there was actually a small difference in the opposite direction. This pattern resulted in a reliable interaction between sentence continuation type and verb bias. There was no main effect of verb bias in this analysis.

The nature of the interaction between verb bias and sentence continuation type illustrated in Figure 1b suggests that reading times were longer whenever there was a mismatch between verb bias and sentence continuation, and this was confirmed in pairwise comparisons. Embedded clause continuations were read significantly more slowly after Direct-object bias verbs than after Clause-bias verbs (mean difference = 22.9 msec, 95% CI = 9.8 to 36.1). The reverse was also true: direct object continuations were read significantly more slowly after Clause-bias verbs than after Direct-object bias verbs (mean difference = −14.5 msec, 95% CI = −27 to −2.1). It is worth pointing out here that despite the greater conceptual and structural complexity of embedded clauses, Clause continuations were actually read slightly faster (mean difference = 6 msec, 95% CI = −4.1 to 15.9) than direct object continuations after Clause-bias verbs, although this difference did not approach reliability.

In the Materials section above, we described two ways in which the sentences were not matched across verb type as well as would be ideal, and described two additional analyses that would be done to help rule out alternative interpretations of the results based on small but possibly important mismatches in sentence properties. In the first of those additional analyses, we analyzed uncorrected reading times for just the first disambiguating word, since the length of the first disambiguating word was matched better across sentences with the two kinds of verbs than the two-word disambiguating region was. (See Table 1 above for length and frequency information about the first disambiguating word, and Table 8 in Appendix 3 for uncorrected and untrimmed reading times for all sentence regions. Note, however, that the disambiguating region whose uncorrected, untrimmed reading times are presented in Table 8 is the two-word region.)

The pattern of results for the first disambiguating word was similar to that for the corrected two-word disambiguating region, though weaker. These results are listed in Appendix 3. In general, the numeric pattern across condition means was similar for uncorrected times at the first disambiguating word and corrected times on the two-word disambiguating region, but the differences were smaller and less reliable in the single-word analysis. Recall that the effect of verb bias was predicted to be smaller in sentences with direct object continuations than in those with clause continuations, so it is not surprising that it would fail to reach reliability in an analysis of a less stable single-word measure on a short, high-frequency function word. Most crucially, uncorrected reading times on just the first disambiguating word in direct object continuations were numerically longer following Clause-bias verbs than following Direct-object bias verbs, so the similar but statistically reliable pattern observed for the length-corrected reading times on the two-word disambiguating region cannot be an artifact of the length-correction procedure.

In the second additional analysis done to rule out alternative explanations of the results, only items that equated plausibility ratings across verb type were analyzed. Again the pattern of results was similar. These results are also listed in Appendix 3. Crucially, it cannot be the case that small overall plausibility differences between sentences with the two types of verbs were responsible for the pattern of results at the disambiguation in the full set of items, since the pattern stayed the same when only items equating plausibility ratings across verb type were included.

The temporarily ambiguous noun phrase

There was a main effect of ambiguity at the noun phrase, which was read 23 msec more slowly in ambiguous sentences than in unambiguous ones. Although there was no main effect of the verb’s bias at the noun phrase, there was a reliable interaction between verb bias and ambiguity such that the inclusion of that decreased reading times on the noun phrase by 17 msec more in sentences with Clause-bias verbs than it did in sentences with Direct-object bias verbs (95% CI = 0.5 to 33.0). Although the word that itself was read almost equally quickly following both kinds of verbs (26 vs. 23 msec; Fs < 1), the interaction at the noun phrase after it suggests that the mismatch between a verb that predicts a direct object and a complementizer which clearly contradicts that prediction may have slowed readers when they reached the following noun phrase in sentences with Direct-object bias verbs.

Other sentence regions

Only two other sentence regions showed any reliable differences. At the main verb itself, Direct-object bias verbs were read 17 msec slower than Clause-bias verbs, leading to a main effect of verb bias in the analysis that included only the temporarily ambiguous conditions (F1(1,53) = 7.0, p < .05; F2(1,74) = 5.7, p < .05; MinF′(1,127) = 3.1, p < .1). A similar numeric pattern was also reported by Garnsey et al. (1997) for a partially overlapping set of materials. In both sets of materials, Direct-object bias verbs were read more slowly in spite of the fact that they were actually higher frequency than the Clause-bias verbs.

In the analysis that included only clause continuations, the sentence-final region was read 6 msec more slowly in ambiguous than in unambiguous sentences, which led to a main effect of ambiguity that was reliable only by participants (F1(1,53) = 7.4, p < .05; F2(1,74) = 2.9, p < .1; MinF′(1,118) = 2.1, p > .1).

Correlations between reading times and verb properties

A comparison of the temporarily ambiguous and unambiguous versions of sentences with embedded clause continuations showed that readers had more difficulty at the disambiguation in the ambiguous versions when the verb had a stronger Direct-object bias (r = .30, p < .01) and when it had a stronger that-preference (r = .34, p < .01), and less difficulty when the verb had a stronger Clause-bias (r = −.25, p < .05). The same pattern was observed in a comparison of the ambiguous sentences with the two kinds of continuations, where the disambiguating regions in embedded clause continuations were read more slowly as both the verb’s Direct-object bias and its that-preference increased (Direct-object bias: r = .40, p < .01, that-preference: r = .46, p < .01) and more quickly as the verb’s Clause-bias increased (r = −.28, p < .05).

The complementizer that in the unambiguous sentences was read more quickly as the overall frequency of the verb preceding it increased (r = −.34, p < .05). This may be a kind of spillover effect often seen in reading time data, i.e., difficulty in reading a less familiar word often spills over onto the word following it as well.

Discussion

Prior experience with the relative likelihood of the different argument structures that are possible for a verb guided readers’ interpretation of sentences containing those verbs. The new and most important finding here was that verb bias affected reading times in simple direct object continuations. Pairwise comparisons in the main analysis using a two-word disambiguating region and an item analysis demonstrated that when readers were led by verb bias to expect an embedded clause but got a direct object instead, they slowed down reliably compared to when the verb had predicted a direct object all along. In followup analyses using either just the first disambiguating word or a subset of items closely matched in rated plausibility, a similar pattern was observed, though the effects were weaker, in part because of the reduced number of items, and did not always reach reliability in the single-word analysis. Consistent with previous studies, however, readers also used their prior experience with verbs to help them understand embedded clauses. As expected, readers had very little trouble at the disambiguating region of sentences that contained the complementizer that, regardless of verb bias, since this word strongly predicts an upcoming clause. If the sentence did not contain that, however, readers were helped if the sentence contained a Clause-bias verb and hindered if the sentence contained a Direct-object bias verb. The finding that comprehension of clauses is aided by a verb that strongly predicts an embedded clause replicates earlier findings by Trueswell et al. (1993) and Garnsey et al. (1997).

Finding verb bias effects in sentences with direct object continuations provides strong evidence that lexical properties such as verb bias guide the earliest parsing decisions in temporary ambiguities. If the comprehension system initially analyzed every noun following a verb as a direct object, then direct object continuations should be read equally quickly after Clause-bias verbs and Direct-object bias verbs, since no revision should be required in either case. The results reported here are not consistent with that prediction. The speed-accuracy tradeoff obtained in comprehension questions for Direct-object bias verbs followed by direct object continuations (i.e., these continuations were read more quickly but the subsequent comprehension questions were answered less accurately) provides further indirect evidence on this point. Previous authors have noted that although comprehension processes are by and large “automatic, highly overlearned mental procedures,” this does not mean that they are error-free (McElree & Nordlie, 1999, p. 486). If a Clause-bias verb followed by a direct object in fact causes a reader to devote more mental resources to a presumed upcoming complex clause than a Direct-object bias verb followed by a direct object does, then questions about the more complex sentences may counterintuitively be answered more accurately. This is especially true if participants are not fully engaged during every sentence of the study. This evidence is of course largely indirect, since it occurs after the complete sentence has already been displayed. It also does not distinguish between classes of theories, since a serial model would also presumably predict that Direct-object bias verbs followed by direct object continuations should be answered more accurately. Whatever the source, however, a speed-accuracy tradeoff should not affect the results of the experiment above, since all trials on which the comprehension question was answered incorrectly were discarded from the analyses reported here.

Finally, previous studies of similar sentences have found reading times to be correlated with properties of the verbs in the sentences (e.g., Garnsey et al., 1997; Trueswell et al., 1993), and the same was true here. In addition to Direct-object bias and Clause-bias strength, another verb property that has sometimes been found to be correlated with reading times is that-preference. A verb’s that-preference was calculated as the percentage of all sentences in the norming study containing that verb followed by an embedded clause that explicitly included the complementizer that. That-preference is typically positively correlated with Direct-object bias (r = .58 here), since the more likely a verb is to be followed by direct objects, the more likely it is that that will be included when it is instead followed by an embedded clause. Conversely, the more likely a verb is to be followed by embedded clauses, the less likely it is that that will be included, so that-preference is generally negatively correlated with Clause-bias (r = −.54 here). Of course, Direct-object bias and Clause-bias are also inevitably negatively correlated with each other (r = −.73 here). We calculated only simple correlations between each of these three verb properties and reading times because we were interested in using graded effects of verb properties to help diagnose how quickly knowledge about the verbs began to influence reading times, rather than in trying to determine the independent contributions of each verb property. As noted above, readers had a more difficult time in sentences without that which continued as clauses when the verb had a stronger Direct-object bias or a stronger preference for being followed by that.
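The two calculations described above can be illustrated with a minimal sketch, assuming that norming responses have already been tallied per verb. The function names and the example counts are hypothetical and are not taken from the norming study; the correlation is an ordinary Pearson r.

```python
from math import sqrt


def that_preference(clauses_with_that, clauses_without_that):
    """Percentage of embedded-clause completions that included 'that'."""
    total = clauses_with_that + clauses_without_that
    if total == 0:
        raise ValueError("verb was never followed by an embedded clause")
    return 100.0 * clauses_with_that / total


def pearson_r(xs, ys):
    """Simple (zero-order) Pearson correlation between two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


# Hypothetical counts: 30 clause completions with 'that', 10 without.
example_preference = that_preference(30, 10)
```

Under these hypothetical counts, a verb followed by an embedded clause in 40 norming completions, 30 of which included that, would have a that-preference of 75%.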

The general picture that emerges from Experiment 1, therefore, is that prior experience with verbs guided readers’ interpretation of all sentences. This is suggested by the relative difficulty experienced by readers at a disambiguation that was not congruent with their verb-based expectations, and the relative ease at a disambiguation that was consistent with those expectations. However, a note of caution is in order. Experiment 1 used a self-paced moving window reading paradigm, which is unlike normal reading in several respects. In part because readers cannot go back and re-read earlier sentence regions when they encounter difficulty, self-paced reading times are probably influenced by both initial processing difficulty and the work done to recover from that difficulty. In order to obtain a clearer picture of what readers do when they are free to read more normally, Experiment 2 replicated Experiment 1 using eyetracking.

Experiment 2

The results of Experiment 1 supported the claim that readers use their prior experience with verbs in order to constrain their interpretations of sentences. However, participants in Experiment 1 were tested using self-paced reading, in which participants only see one word at a time. Thus, the purpose of the second experiment was to replicate the findings of Experiment 1 under conditions that more closely approximated natural reading. Accordingly, the stimuli from Experiment 1 were tested using an eyetracker.

Method

Participants

Eighty-four participants (37 males, 70 right-handed, mean age 21) participated for a small payment. All had normal uncorrected vision. All were native speakers of English except for one, who was excluded from further analyses. An additional 8 participants were dropped for reasons described below, leaving 75 participants whose results are reported here.

Procedure

The stimuli and procedures in Experiment 2 were identical in all respects to Experiment 1, except that participants’ eye movements were monitored using a Fifth Generation Dual Purkinje Eyetracker interfaced with an IBM-compatible PC. Stimuli were presented on a black and white ViewSonic monitor, at a font size equivalent to four characters per degree of visual angle. Although viewing of stimuli was binocular, only the right eye was monitored. Horizontal and vertical eye positions were sampled once per millisecond, and head position was held constant by a bite bar prepared for every participant. The eyetracker was calibrated for each participant at the beginning of the experimental session and after every break.

A trial began with the trial number displayed at the left side of the screen. Once the participant fixated the trial number and pushed a button, the trial began. An entire sentence was presented on one line of the monitor, and participants were instructed to read the sentence as normally as possible. Once finished, participants were instructed to look at a box on the right side of the screen and push the “yes” button to continue to the comprehension question. As in Experiment 1, each question was a “yes/no” comprehension question that participants answered by pushing buttons held in either hand.

Stimuli

The stimuli were identical to Experiment 1, except that two item errors were corrected.

Reading Time Measures

Reading patterns were examined using several different measures, including first-pass reading time, proportion of trials on which the first pass ended with a regressive eye movement, regression-path reading time (also sometimes called “right-bounded time”), and total time. First-pass reading time for a region summed all of the fixations made on that region before the eyes moved to any other region of the sentence. First-pass times for a region were included in the analyses reported below only if the participant looked at that region before fixating any region further to the right, in order to prevent contamination of the first-pass measure by trials where participants already knew from looking ahead what the eventual sentence continuation would be. For total times, all fixations on a region, including those during both initial reading and re-reading, were included.

Both first-pass and total times combine fixations that are spatially contiguous, as noted by Liversedge, Paterson, and Pickering (1998). Another approach described by Liversedge et al. is to sum fixations that are temporally rather than spatially contiguous. “Regression-path reading time” is a measure that combines all temporally contiguous fixations from the time the reader first reaches the region of interest until the reader first advances to the right beyond that region. This is an important distinction, since if the current region causes the reader to regress to earlier portions of the sentence, the fixations on earlier regions resulting from those regressions will be included in the regression-path time for the current region. If readers respond to processing difficulty by regressing to earlier portions of the sentence rather than by slowing down or rereading the same region, both the proportion of trials on which the first pass ends with a regression and the regression-path reading time will be increased. Thus, both of these measures can reveal effects that are missed by both first-pass time and total-time. (See Liversedge et al., 1998, for a more complete discussion, and Clifton, Traxler, Mohamed, Williams, Morris, & Rayner, 2003, for another recent paper using the regression path reading time measure.)
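The definitions of these measures can be made concrete with a short sketch. It assumes a trial is represented as a temporally ordered list of (region, duration) fixations with regions numbered from left to right; that representation and the function name are assumptions made for illustration, not the format used by the eyetracking software in this experiment.

```python
from typing import List, Tuple

# A fixation is (region_index, duration_msec); fixations are in temporal
# order and regions are numbered left to right from 0. This layout is an
# assumption for illustration only.
Fixation = Tuple[int, int]


def reading_measures(fixations: List[Fixation], region: int) -> dict:
    """Compute first-pass, regression-path, and total time for one region."""
    # Total time: every fixation on the region, initial reading or re-reading.
    total = sum(dur for reg, dur in fixations if reg == region)
    result = {
        "first_pass": None,
        "first_pass_regression": None,
        "regression_path": None,
        "total": total,
    }

    # Index of the first fixation that lands on the region, if any.
    first = next((i for i, (reg, _) in enumerate(fixations) if reg == region), None)
    if first is None:
        return result

    # Leave the early measures undefined if the reader had already fixated
    # a region further to the right before reaching this one.
    if any(reg > region for reg, _ in fixations[:first]):
        return result

    # First pass: spatially contiguous fixations on the region itself.
    i = first
    first_pass = 0
    while i < len(fixations) and fixations[i][0] == region:
        first_pass += fixations[i][1]
        i += 1

    # Regression path: temporally contiguous fixations, including any on
    # earlier regions, until the eyes first move right of the region.
    j = first
    regression_path = 0
    while j < len(fixations) and fixations[j][0] <= region:
        regression_path += fixations[j][1]
        j += 1

    result["first_pass"] = first_pass
    # True when the fixation that ends the first pass lands to the left.
    result["first_pass_regression"] = i < len(fixations) and fixations[i][0] < region
    result["regression_path"] = regression_path
    return result


# Hypothetical trial: the reader regresses from region 2 back to region 1.
trial = [(0, 200), (1, 180), (2, 220), (2, 150), (1, 190), (2, 210), (3, 230)]
measures = reading_measures(trial, region=2)
```

In this hypothetical trial the regression from region 2 adds the re-reading of region 1 to the regression-path time for region 2 but not to its first-pass or total time, which is the distinction drawn above between temporally and spatially contiguous fixations.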

Results

As in Experiment 1, accuracy in answering the comprehension questions was generally high, with 90% correct performance overall. Also as in Experiment 1, questions following sentences containing Direct-object bias verbs tended to be answered 2% less accurately than those following sentences containing Clause-bias verbs, which was reliable only by participants (F1(1,74) = 5.9, p < .05; F2 < 1; mean difference = −0.018, 95% CI = −0.03 to 0.003). Unlike Experiment 1, there was also a main effect of sentence structure on accuracy by participants, with 1.4% fewer correct responses after sentences with a clause structure (F1(2,148) = 3.5, p < .05; F2 < 1; mean difference = 0.014, 95% CI = −0.003 to 0.03). As in Experiment 1, there was also an interaction between verb bias and sentence structure by participants (F1(2,148) = 6.5, p < .01; F2(2,152) = 2.4, p > .1; MinF′(2,126) = 1.8, p > .1), again because the effect of verb bias was greater for sentences with direct object continuations (5%) than for sentences with embedded clause continuations (1.8%).

Sentences were divided into regions as in Experiment 1. (In the example below, the critical disambiguating region is “might not.”)

5. The ticket agent/admitted/that/the mistake/might not/have been caught.

Trials included in the analysis had to meet the following criteria. First, entire trials were excluded from analysis if the comprehension question was not answered correctly or if the eyetracker lost track of the eye for an extended period, resulting in a loss of 9% and < 1% of the data, respectively. Second, an entire region was excluded from the analysis for first-pass times, regression-path times, or total times if the eyetracker lost track of the participant’s eyes in that region during collection of the data contributing to that measure, resulting in a further loss of < 1% of the data from participants included in the analysis. Third, a region was excluded from the analysis of first-pass reading time and regression-path time if the participant fixated on any word to the right of the region before fixating on the region itself, resulting in a loss of approximately 2% of the data in every condition except those containing the complementizer that, where approximately 5% of the data were lost. As in Experiment 1, times for a particular participant at a particular region greater than three standard deviations from the mean were replaced with the cutoff value at three standard deviations from the mean, resulting in approximately 1.6% of the data being replaced in first pass times and total times, and 1.5% of the data being replaced in regression-path duration times.
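The outlier replacement step described above can be sketched as follows. This is a minimal illustration under stated assumptions: it uses the sample standard deviation and clips both tails, neither of which is specified in the text, and the function name and example values are hypothetical.

```python
from statistics import mean, stdev


def clip_outliers(times, n_sd=3.0):
    """Replace values beyond n_sd standard deviations with the cutoff value.

    `times` holds the reading times for one participant at one region.
    Assumption: sample SD, with both the upper and lower tails clipped.
    """
    if len(times) < 2:
        return list(times)
    center = mean(times)
    spread = stdev(times)
    lower = center - n_sd * spread
    upper = center + n_sd * spread
    return [min(max(t, lower), upper) for t in times]


# Hypothetical data: nineteen ordinary times and one very long one.
raw = [300] * 19 + [2000]
clipped = clip_outliers(raw)
```

Only the single extreme value is changed in this example; it is pulled in to the three-standard-deviation cutoff rather than being discarded, so the cell keeps the same number of observations.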

Cells with three or fewer trials remaining in a condition for a participant or an item after all of the exclusion criteria were applied were replaced with the grand mean across conditions for the individual participant or the individual item at the appropriate region of the sentence, in order to eliminate empty or extremely noisy cells (1.4% of the data were replaced in this way). Finally, participants who had 5 or more cells (out of the 34 condition × region cells in the design) replaced were excluded entirely from the analysis, resulting in the loss of 8 participants. All results reported below are based on the remaining 75 participants.

As in Experiment 1, two different analyses of variance (ANOVAs) were conducted on partially overlapping subsets of the design. First, a 2 (verb bias) × 2 (ambiguity) ANOVA that included only the sentences with embedded clauses was performed, to evaluate the joint effects of verb bias and ambiguity. Second, a 2 (verb bias) × 2 (sentence continuation) ANOVA was performed to evaluate the effects of verb bias in temporarily ambiguous sentences where the ambiguity resolved as either a direct object structure or an embedded clause structure. All analyses were performed on length-corrected reading times by both participants and items, calculated in the same way as described for Experiment 1.
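For readers unfamiliar with length correction, the sketch below shows one common way residual reading times are computed: for each participant, raw reading times are regressed on region length in characters, and the residual (observed minus predicted time) is analyzed. This is an assumption about the general technique and is not a restatement of the exact procedure described for Experiment 1; the function name and example values are hypothetical.

```python
def residual_reading_times(lengths, times):
    """Residuals from a least-squares line predicting time from length.

    `lengths` are region lengths in characters and `times` the matching
    raw reading times (msec) for a single participant. Assumes a simple
    linear fit per participant, which is a common but not universal choice.
    """
    n = len(lengths)
    mean_len = sum(lengths) / n
    mean_time = sum(times) / n
    sxx = sum((x - mean_len) ** 2 for x in lengths)
    sxy = sum((x - mean_len) * (y - mean_time) for x, y in zip(lengths, times))
    slope = sxy / sxx
    intercept = mean_time - slope * mean_len
    # Negative residuals mean the region was read faster than its length predicts.
    return [y - (intercept + slope * x) for x, y in zip(lengths, times)]


# Hypothetical participant: four regions of different lengths.
example_residuals = residual_reading_times([5, 8, 10, 14], [310, 380, 460, 565])
```

Because the residuals are deviations from each participant's own regression line, they sum to zero within a participant, which is why many of the cell means in the tables of residual times are negative.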

Also as in Experiment 1, two additional sets of ANOVAs were performed, one on a subset of 56 items that equated the rated plausibility of items with the two types of verbs, and the other on uncorrected reading times on just the first word of the two-word disambiguating regions (see Appendix 3 for uncorrected reading times). Two items that were dropped from the analyses in Experiment 1 were included here because errors in them were fixed.

Disambiguating region

Once again, results at the disambiguating region are the most important ones for distinguishing among processing theories, so those will be described first. Also, since the primary motivation for the eyetracking study was to obtain measures of early processing, first-pass reading times will be described first, followed by regression-path times and then total times.

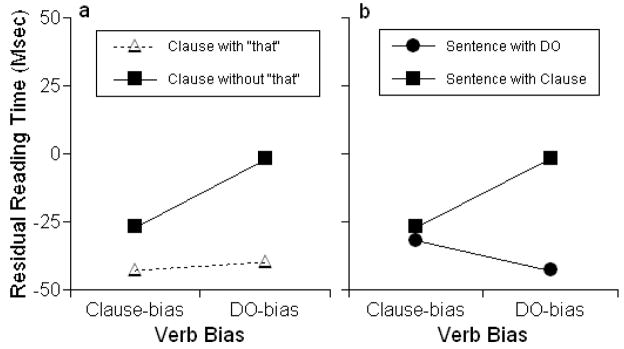

First-pass reading times

First-pass reading times at each region are summarized in Table 4, first-pass times at just the disambiguating region are shown in Figures 2a and 2b, and the results of the ANOVAs for that region are summarized in Table 5. As noted in the table, the analysis including only sentences with embedded clauses revealed that first-pass reading times at the disambiguating region were significantly slower in temporarily ambiguous sentences than in unambiguous ones, resulting in a main effect of ambiguity. First-pass times on the disambiguation were also significantly slower overall in embedded clause sentences with Direct-object bias verbs than in those with Clause-bias verbs, leading to a main effect of verb bias that was reliable by participants but not by items. Although ambiguity slowed first-pass reading more in sentences with Direct-object bias verbs than in ones with Clause-bias verbs, the interaction between verb bias and ambiguity was only marginal by both participants and items. This particular interaction has been found to be more robust in several previous studies (e.g., Garnsey et al., 1997; Trueswell et al., 1993; see, however, Kennison, 2001). The interaction failed to reach reliability here in part because there was a small effect of verb bias in the unambiguous sentences (4 msec) as well as in the ambiguous ones (24 msec). Although the 24-msec difference in ambiguous sentences exceeded its 95% confidence interval of 17 msec by participants and 21 msec by items, the overall pattern was not robust enough to produce a reliable interaction.

Table 4.

Mean residual reading times in Experiment 2 (msec)

| Condition | Subject NP | | | admitted/confirmed | | | (that) | | | Post-verb NP | | | Disambig. | | | Rest of sentence | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | 1st | RP | T | 1st | RP | T | 1st | RP | T | 1st | RP | T | 1st | RP | T | 1st | RP | T |
| Clause-bias verb + that + Clause | 88 | - | 37 | −9 | −25 | −53 | 42 | −8 | −31 | −60 | −30 | −89 | −43 | −46 | −16 | −59 | - | −26 |
| Clause-bias verb + Clause | 84 | - | 39 | −7 | −19 | −16 | - | - | - | −32 | −19 | −56 | −27 | −12 | 13 | −62 | - | −58 |
| Clause-bias verb + DO | 95 | - | 45 | 2 | −19 | 10 | - | - | - | −21 | −7 | −31 | −32 | 4 | −30 | −53 | - | −64 |
| DO-bias verb + that + Clause | 84 | - | 34 | −14 | −34 | −53 | 49 | 0 | −8 | −58 | −13 | −62 | −40 | −26 | −28 | −16 | - | −37 |
| DO-bias verb + Clause | 83 | - | 51 | −5 | −36 | 23 | - | - | - | −43 | −30 | 11 | −2 | 34 | 110 | −69 | - | 33 |
| DO-bias verb + DO | 80 | - | 15 | −7 | −25 | −27 | - | - | - | −22 | −25 | −66 | −43 | −28 | −51 | −58 | - | −94 |

Note. 1st = Mean residual first-pass reading times; RP = Mean residual regression-path times (not calculated at first or last sentence positions); T = Mean residual total times.

Figure 2.

Residual first-pass reading times at the disambiguating region in Experiment 2

Table 5.

ANOVAs for length-corrected times at the disambiguating region for all reading time measures in Experiment 2

| Measure | Analysis | Source | F1 (by participants) | df | F2 (by items) | df | MinF′ | df |
|---|---|---|---|---|---|---|---|---|
| First-Pass Time | ANOVA1 | Verb | 5.2* | 1,74 | 2.5 | 1,76 | 1.7 | 1,135 |
| | | Ambiguity | 15.8** | 1,74 | 15.9** | 1,76 | 7.9** | 1,151 |
| | | V × A | 3.4! | 1,74 | 3.3! | 1,76 | 1.7 | 1,151 |
| | ANOVA2 | Verb | 1.6 | 1,74 | 1.1 | 1,76 | <1 | |
| | | Structure | 12.4** | 1,74 | 7.2** | 1,76 | 4.6* | 1,142 |
| | | V × S | 7.8** | 1,74 | 5.0* | 1,76 | 3.1! | 1,145 |
| Regression-Path Time | ANOVA1 | Verb | 11.4** | 1,74 | 7.3** | 1,76 | 4.4* | 1,144 |
| | | Ambiguity | 17.8** | 1,74 | 20.5** | 1,76 | 9.5** | 1,150 |
| | | V × A | 1.6 | 1,74 | 3.1! | 1,76 | 1.1 | 1,137 |
| | ANOVA2 | Verb | <1 | 1,74 | <1 | 1,76 | <1 | |
| | | Structure | 3.5! | 1,74 | 2.6 | 1,76 | 1.5 | 1,148 |
| | | V × S | 8.9** | 1,74 | 10.8** | 1,76 | 4.9* | 1,149 |
| Total Time | ANOVA1 | Verb | 12.1** | 1,74 | 2.9! | 1,76 | 2.3 | 1,110 |
| | | Ambiguity | 31.7** | 1,74 | 29.9** | 1,76 | 15.4** | 1,151 |
| | | V × A | 18.1** | 1,74 | 21.1** | 1,76 | 9.7** | 1,150 |
| | ANOVA2 | Verb | 54.4** | 1,74 | 5.0* | 1,76 | 3.4! | 1,134 |
| | | Structure | 39.6** | 1,74 | 28.3** | 1,76 | 16.5** | 1,147 |
| | | V × S | 23.5** | 1,74 | 10.9** | 1,76 | 7.4** | 1,134 |

Note. ANOVA1 includes only temporarily ambiguous and unambiguous sentences with Clause continuations. (Clause-bias examples: “The ticket agent admitted the mistake might not have been caught” vs. “The ticket agent admitted that the mistake might not have been caught”). ANOVA2 includes only temporarily ambiguous sentences with either Clause or DO continuations. (Clause-bias examples: “The ticket agent admitted the mistake might not have been caught” vs. “The ticket agent admitted the mistake because she had been caught”).

Significance markers: * p < .05; ** p < .01; ! p < .10
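The MinF′ values in Table 5 combine the by-participants and by-items F ratios. As a minimal sketch, assuming the standard definition MinF′ = F1·F2 / (F1 + F2) with denominator degrees of freedom (F1 + F2)² / (F1²/n2 + F2²/n1), where n1 and n2 are the error degrees of freedom of F1 and F2, the first row of the table can be reproduced as follows. The function name is hypothetical, and small discrepancies elsewhere in the table are to be expected because the tabled F values are rounded.

```python
def min_f_prime(f1, df1_error, f2, df2_error):
    """Return (MinF', denominator df) from by-participants and by-items Fs.

    f1, df1_error: F ratio and error df from the by-participants analysis.
    f2, df2_error: F ratio and error df from the by-items analysis.
    The numerator df is unchanged and is therefore not computed here.
    """
    min_f = (f1 * f2) / (f1 + f2)
    denom_df = (f1 + f2) ** 2 / (f1 ** 2 / df2_error + f2 ** 2 / df1_error)
    return min_f, denom_df


# First-pass time, ANOVA1, effect of verb bias (values from Table 5).
min_f, denom_df = min_f_prime(5.2, 74, 2.5, 76)
```

Because MinF′ can never exceed the smaller of F1 and F2, an effect that is reliable by participants but weak by items, such as this one, will generally not be reliable by MinF′.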

As in Experiment 1, for our purposes the most informative test of the effect of verb bias comes from the second ANOVA, which included only ambiguous (that-less) sentences that have the two kinds of continuations (see Figure 2b and compare to Figure 1b). Also as in Experiment 1, the most important result in this analysis was a reliable interaction between verb bias and sentence continuation, reflecting the fact that people’s first pass on the disambiguating region was longer whenever the sentence continuation did not match the main verb’s bias. Pairwise comparisons showed that embedded clause continuations were read 24 msec slower after Direct-object bias verbs than after Clause-bias verbs, exceeding the 95% confidence interval on the difference of 17 msec by participants and 21 msec by items. However, unlike Experiment 1, the 11-msec difference in the opposite direction in sentences with direct object continuations did not exceed the 95% confidence intervals of 16 msec by participants and 21 msec by items. Thus, unlike moving-window reading times in Experiment 1, first-pass times on the two-word disambiguating region in sentences with direct object continuations were not reliably slower after Clause-bias verbs than after Direct-object bias verbs, though there was an 11-msec difference in the expected direction. Finally, there was also a main effect of sentence continuation in first-pass times on the two-word disambiguating region, with first-pass times 23 msec slower overall in sentences with clause continuations than in sentences with direct object continuations. The main effect of verb bias was not reliable.

The pattern of results was similar when uncorrected first-pass times on just the first word of the disambiguating region were analyzed, but less so when the two-word region was analyzed for a subset of 56 items that equated rated plausibility across verb type. These results are summarized in Appendix 3. Given that results for this second analysis changed slightly when comparing only the subset of sentences with equal plausibility, it is possible that the effect observed in first-pass times in sentences with direct object continuations was due to small differences in plausibility rather than to verb bias. We will return to this point in the discussion section below.

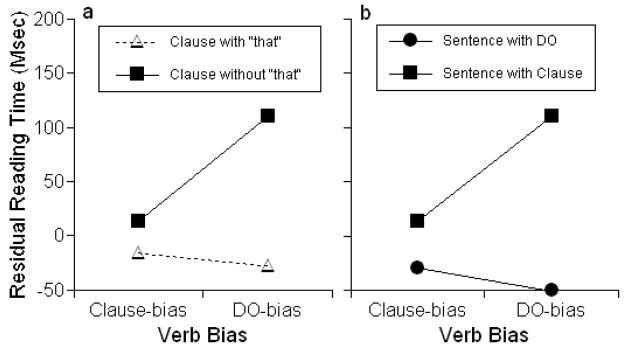

Regression-path time

Readers typically respond to difficulty by either slowing down or going back to re-read earlier sentence regions, or both. The analysis of first pass times showed that readers slowed down reliably when a clause continuation followed a Direct-object bias verb but that when a direct object continuation followed a Clause-bias verb, their slowing did not reach reliability. An examination of how often the first pass on the disambiguating region ended with a regression suggested that readers might be having difficulty that was not adequately captured by the first pass time measure. In both direct object and clause-continuation sentences, the first pass on the disambiguation was slightly more likely to end with a regression whenever the continuation was not the one predicted by verb bias (16% vs. 14%, for both kinds of continuation). Although this difference was small and did not lead to any reliable effects in an analysis of the proportions themselves, it did suggest that it might be informative to examine what happened following regressions from the disambiguating region. As described earlier, regression-path reading time is a measure that captures both slowing down and what happens following regressions. Regression-path times for each region are summarized in Table 4, the pattern at the disambiguating region is shown in Figure 3, and the ANOVA results at the disambiguating region are shown in Table 5. Regression-path times were not calculated for the main subject noun phrase region of the sentence since there was nowhere to regress to, or for the final region of the sentence since many of the regressions originating there are actually sweeps back to the left side of the screen in preparation for reading the comprehension question following the sentence.

Figure 3.

Residual regression-path reading times at the disambiguating region in Experiment 2

At the disambiguating region, regression-path results were generally similar to the first-pass times reported above, with one important exception, which is apparent from comparing Figures 2b and 3. In comparison to sentences where the verb was followed by the kind of continuation it predicted, readers regressed at the end of their first pass on the disambiguation and spent more time re-reading earlier sentence regions both when a Clause-bias verb was followed by a direct object continuation (32 msec more than Direct-object bias verb + direct object, exceeding the 31-msec 95% confidence interval on the difference by participants, but not the 40-msec 95% confidence interval by items), and when a Direct-object bias verb was followed by a clause continuation (46 msec more than Clause-bias verb + clause, exceeding the 95% confidence intervals on the difference of 41 msec by participants and 35 msec by items). This pattern led to a reliable interaction between verb bias and sentence continuation by both participants and items. No other differences at the disambiguation or any other regions of the sentence were reliable in the analysis of the regression-path measure in temporarily ambiguous sentences (ANOVA2 in Table 5).

When non-length-corrected regression-path times were analyzed for just the first word of the two-word disambiguating region, the pattern was similar. These results are summarized in Appendix 3. When the subset of 56 items that equated rated plausibility across verb type was analyzed, the pattern of results was numerically similar but statistically weaker. These results are also summarized in Appendix 3.

The regression-path measure showed that a mismatch between verb bias and sentence continuation appeared to cause difficulty in both kinds of sentences, but the difficulty was still more robust when a clause continuation followed a Direct-object bias verb than when a direct object continuation followed a Clause-bias verb, as predicted in the introduction. In addition, the nature of the response to that difficulty differed somewhat in these two conditions. When an embedded clause was initially encountered following a Direct-object bias verb, readers both slowed down and went back to re-read, but when a direct object was initially encountered following a Clause-bias verb, they mainly immediately went back to re-read without first slowing down much on the disambiguation. Possible reasons for these different kinds of response to difficulty will be addressed later in the discussion section.

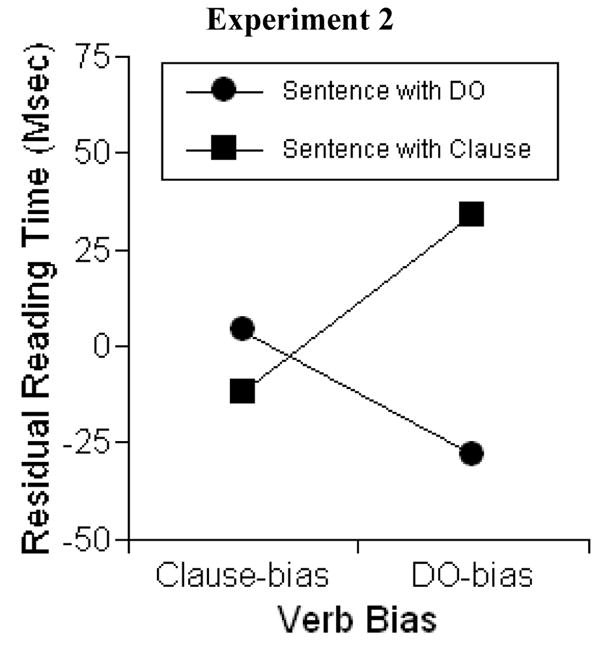

Total times

Total reading times are shown in Figures 4a and 4b. Total times at the disambiguating region showed a similar pattern of results to the other two measures, though they were most similar to first-pass times, since total times and first-pass times both combine spatially contiguous fixations.

Figure 4.

Residual total reading times at the disambiguating region in Experiment 2

In the ANOVA including only sentences with embedded that-clauses (see Figure 4a), readers spent longer on the disambiguating region in temporarily ambiguous sentences (without that) than in unambiguous versions, resulting in a main effect of ambiguity. However, this effect was larger in sentences with Direct-object bias verbs (138 msec) than in sentences with Clause-bias verbs (29 msec), leading to a reliable interaction between verb bias and ambiguity. Total reading times were also longer overall in sentences with Direct-object bias verbs than in ones with Clause-bias verbs, resulting in a main effect of verb bias that was reliable by participants and marginal by items.

Figure 4b shows the pattern of total reading times in temporarily ambiguous sentences with both kinds of continuations. People spent 102 msec more time on the disambiguating region of sentences with embedded clauses than in sentences with direct objects, resulting in a main effect of sentence continuation. There was also a main effect of verb bias, with longer total times in sentences with Direct-object bias verbs than in sentences with Clause-bias verbs. In addition, sentence continuation interacted reliably with verb bias.

Pairwise comparisons showed that the interaction in Figure 4b came from longer times when sentence continuation mismatched verb bias. Embedded clause continuations were read 97 msec more slowly after Direct-object bias verbs than after Clause-bias verbs, exceeding the 95% confidence intervals on the difference of 38 msec by participants and 58 msec by items. However, direct object continuations were read only 21 msec more slowly after Clause-bias verbs than after Direct-object bias verbs, which did not exceed the 95% confidence intervals on the difference of 28 msec by participants and 49 msec by items. Follow-up analyses of uncorrected times on just the first disambiguating word and on a plausibility-rating-matched subset of items are not reported for total times, since those analyses were intended to provide evidence relevant to interpreting effects observed in reading time measures that tap into early processing stages.

The temporarily ambiguous noun phrase

ANOVA results at the temporarily ambiguous noun phrase are shown in Table 6 for all measures. Neither first-pass times nor regression-path times on the temporarily ambiguous noun phrase showed any effect of the bias of the preceding verb in any of the ANOVAs (all Fs <= 2). There were, however, effects of ambiguity in the ANOVA evaluating the effects of ambiguity in sentences that contained embedded clauses. First-pass times on noun phrases preceded by that were 44 msec shorter than on noun phrases not preceded by that, which was reliable by both participants and items.

Table 6.

Analysis of variance results at the temporarily ambiguous NP region for all reading time measures in Experiment 2

| Measure | ANOVA | Source | F1 (by participants) | df | F2 (by items) | df | MinF′ | df |
|---|---|---|---|---|---|---|---|---|
| First-Pass Time | ANOVA1 | Verb | <1 | 1,74 | <1 | 1,76 | <1 | |
| | | Ambiguity | 7.5** | 1,74 | 12.9** | 1,76 | 4.7* | 1,140 |
| | | V × A | 1.8 | 1,74 | 1.5 | 1,76 | <1 | |
| | ANOVA2 | Verb | 1.2 | 1,74 | <1 | 1,76 | <1 | |
| | | Structure | 5.9* | 1,74 | 4.6* | 1,76 | 2.6 | 1,149 |
| | | V × S | <1 | 1,74 | 1.9 | 1,76 | <1 | |
| Regression-Path Time | ANOVA1 | Verb | <1 | 1,74 | <1 | 1,76 | <1 | |
| | | Ambiguity | <1 | 1,74 | <1 | 1,76 | <1 | |
| | | V × A | 4.9* | 1,74 | 2.7 | 1,76 | 1.7 | 1,140 |
| | ANOVA2 | Verb | 2.4 | 1,74 | <1 | 1,76 | <1 | |
| | | Structure | <1 | 1,74 | <1 | 1,76 | <1 | |
| | | V × S | <1 | 1,74 | <1 | 1,76 | <1 | |
| Total Time | ANOVA1 | Verb | 22.7** | 1,74 | 4.0* | 1,76 | 3.4! | 1,102 |
| | | Ambiguity | 9.2** | 1,74 | 18.8** | 1,76 | 6.2* | 1,135 |
| | | V × A | 2.7 | 1,74 | 3.4! | 1,76 | 1.5 | 1,149 |
| | ANOVA2 | Verb | 2.1 | 1,74 | <1 | 1,76 | <1 | |
| | | Structure | 3.4! | 1,74 | 4.0* | 1,76 | 1.8 | 1,150 |
| | | V × S | 10.8** | 1,74 | 11.4** | 1,76 | 7.8** | 1,133 |

Note. ANOVA1 includes only temporarily ambiguous and unambiguous sentences with Clause continuations. (Clause-bias examples: “The ticket agent admitted the mistake might not have been caught” vs. “The ticket agent admitted that the mistake might not have been caught”). ANOVA2 includes only temporarily ambiguous sentences with either Clause or DO continuations. (Clause-bias examples: “The ticket agent admitted the mistake might not have been caught” vs. “The ticket agent admitted the mistake because she had been caught”).

Significance levels: * p < .05; ** p < .01; ! p < .10
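The MinF′ column of Table 6 combines the by-participants and by-items F ratios into a single conservative statistic. The text does not spell out the computation, so the sketch below assumes the standard definition, minF′ = F1·F2 / (F1 + F2), with denominator degrees of freedom (F1 + F2)² / (F1²/n2 + F2²/n1), where n1 and n2 are the error degrees of freedom of F1 and F2. The function name `min_f_prime` is hypothetical, and because the tabled F values are rounded, recomputed values may differ slightly from those reported.

```python
def min_f_prime(f1: float, df1_error: int, f2: float, df2_error: int):
    """Combine by-participants (F1) and by-items (F2) F ratios into minF'.

    Assumes the standard definition:
        minF' = F1 * F2 / (F1 + F2)
        df    = (F1 + F2)^2 / (F1^2 / df2_error + F2^2 / df1_error)
    Returns the statistic and its (unrounded) denominator degrees of freedom.
    """
    total = f1 + f2
    statistic = (f1 * f2) / total
    denominator_df = total ** 2 / (f1 ** 2 / df2_error + f2 ** 2 / df1_error)
    return statistic, denominator_df


# Total Time, ANOVA1, Verb row of Table 6: F1(1,74) = 22.7, F2(1,76) = 4.0.
statistic, denominator_df = min_f_prime(22.7, 74, 4.0, 76)
print(f"minF'(1,{round(denominator_df)}) = {statistic:.1f}")
```

For this row the sketch gives minF′(1,102) = 3.4, the value listed in the table. Because minF′ can never exceed the smaller of F1 and F2, an effect that is reliable in both separate analyses can still fall short of reliability in the combined test, as it does here.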

In the ANOVA evaluating the effect of the sentence continuation in temporarily ambiguous sentences only, there was also a reliable effect of the continuation following the noun phrase in first-pass times, with noun phrases followed by clause continuations being read 15 msec faster than those followed by direct object continuations. Since the sentences with different continuations were still identical at this point, the effect in first-pass times must be due to preview. One possible explanation for the direction of this effect is that the first word of the disambiguating region was a bit more frequent for clause continuations than for direct object continuations (1070 vs 901, on average; see Table 1), so to the extent that there was any preview of the next word, that word was a bit more familiar on average in clause continuations.

Other regions in the sentence

At the final region of the sentence, there was an interaction between ambiguity and verb type in both first-pass times and in total times, because there was a larger effect of ambiguity at the ends of sentences with Direct-object bias verbs than in sentences with Clause-bias verbs.

In the unambiguous conditions, first-pass times on the complementizer that were slightly shorter after Clause-bias verbs than after Direct-object bias verbs: by 7 msec by participants, equaling the 95% confidence interval on the difference of 7 msec, and by 13 msec by items, exceeding the 95% confidence interval on the difference of 11 msec. The same pattern was found in total times, which were shorter after Clause-bias verbs by 23 msec by participants, exceeding the 95% confidence interval of 16 msec, and by 33 msec by items, exceeding the 95% confidence interval of 24 msec. In regression-path times, a difference in the same direction did not reach reliability. The effect of verb bias in first-pass times suggests that comprehenders found a complementizer more felicitous after Clause-bias verbs than after Direct-object bias verbs. These results replicate similar results in Garnsey et al. (1997).

Correlations between reading times and verb properties

Correlations between properties of the verbs and reading times were analyzed just as in Experiment 1, and are reported in Table 7 alongside those from Experiment 1. Verb properties correlated reliably with reading times in much the same way in both experiments, suggesting that verb properties began to influence reading times quite rapidly. Since the most important aspect of the current study is the comparison of temporarily ambiguous sentences with direct object and clause continuations, in the interests of brevity correlation results will be reported only for that comparison.

Table 7.

Correlations between verb properties and reading time measures at the disambiguating region in temporarily ambiguous sentences, across Experiments 1 and 2

| Continuation | Clause-bias: Expt. 1 Reading Times | Clause-bias: Expt. 2 First-pass Times | Clause-bias: Expt. 2 Regression-path Times | DO-bias: Expt. 1 Reading Times | DO-bias: Expt. 2 First-pass Times | DO-bias: Expt. 2 Regression-path Times | That-preference: Expt. 1 Reading Times | That-preference: Expt. 2 First-pass Times | That-preference: Expt. 2 Regression-path Times |
|---|---|---|---|---|---|---|---|---|---|
| Clause continuation | −.25* | −.13 | −.32** | .33** | .24* | .27* | .39** | .21 | .38** |
| DO continuation | .12 | .14 | .08 | −.21! | −.13 | −.20! | −.22! | −.17! | −.16 |
| Difference (C − DO) | −.28* | −.19! | −.27* | .40** | .27* | .32** | .46** | .28* | .37** |

Note: ! = p < .1, * = p < .05, ** = p < .01

Correlations above and below dashed line are reliably different from each other at p < .05

Reading times were longer at the disambiguating region in clause continuations after verbs with stronger Direct-object bias (first pass r = .27, p < .05; regression-path r = .32, p < .01) and stronger that-preference (first pass r = .28, p < .05; regression-path r = .37, p < .01), just as was found for moving window times in Experiment 1. Also as in Experiment 1, reading times on the disambiguation toward the clause interpretation were shorter following verbs with stronger Clause-bias (first pass r = −.19, p < .1; regression path r = −.27, p < .05).

In Experiment 1, the only verb property that reliably affected reading times on the complementizer that in the unambiguous sentences was the overall frequency of the verb. In contrast, in Experiment 2 first-pass times on that were reliably affected by verbs’ Clause-bias strength (r = −.28, p < .01), Direct-object bias strength (r = .29, p < .01), and that-preference (r = .23, p < .05).

The overall pattern of the correlation results across both studies will be addressed in more detail in the general discussion section.

Discussion

The results for the subset of the Experiment 2 design including only ambiguous and unambiguous sentences with embedded clauses showed that Clause-bias verbs reliably eased the comprehension of the ambiguous versions, thereby replicating several previous studies (Garnsey et al., 1997; Trueswell et al., 1993) as well as Experiment 1 here. This effect was observed in all three of the measures used in Experiment 2: first-pass reading time, regression-path time, and total reading time.

More important is what happened in ambiguous sentences with Clause and direct object continuations. The interaction between verb bias and sentence continuation reported in Experiment 1 was also replicated in all three reading-time measures in Experiment 2. In all measures, the interaction reflected the fact that readers slowed down whenever the sentence continuation violated verb-based expectations. However, the reliability levels of an important component of the interaction varied across the three measures. While the degree of slowing when a clause continuation followed a Direct-object bias verb was robust enough to reach reliability in all measures, the slowing observed when a direct object continuation followed a Clause-bias verb reached reliability only in the regression-path reading time measure, with trends in the predicted direction in the other measures. Thus, readers’ immediate response to violations of verb-based expectations was somewhat different in the two kinds of sentence continuations.

In Clause continuations, readers stayed on the disambiguation long enough to produce reliably longer first-pass times, and then they also went back and re-read earlier sentence regions, resulting in reliably longer regression-path times as well. In contrast, in direct object continuations, readers went straight back and re-read earlier regions without staying on the disambiguation long enough to produce reliably longer first-pass times. Thus, both kinds of violation of verb-based expectations led to difficulty, but the response to that difficulty differed. One possible explanation for this differing response has to do with what readers encountered, compared to what they were expecting. Embedded clauses are both structurally and conceptually more complex than direct objects. Clause-bias verbs led readers to expect a complex clause, but what they found instead was a simple direct object, while Direct-object bias verbs led readers to expect a simple direct object and what they found instead was a complex clause. It may be harder to recover from encountering something complex when you expect something simple than it is to recover from encountering something simple when you expect something complex. If so, this would explain why readers both slowed down (first-pass times) and went back and re-read earlier regions (regression-path times) when they encountered something complex while expecting something simple, but mainly just went back and re-read earlier regions when they encountered something simple while expecting something complex.