Abstract

Objective

Home health care technology has grown in rural areas. However, a significant limitation has been the need for costly and repetitive training to enable patients to use their home telemedicine unit (HTU) efficiently. This work describes the evaluation of an architecture for remote training of patients in a telemedicine environment, called REmote Patient Education in a Telemedicine Environment (REPETE), and examines its viability. REPETE was implemented and evaluated in the context of the IDEATel project, a large-scale telemedicine project focusing on Medicare beneficiaries with diabetes in New York State.

Methods

A number of qualitative and quantitative evaluation tools were developed and used to study the effectiveness of the remote training sessions, evaluating a) task complexity, b) changes in patient performance, and c) the communication between trainer and patient. Specifically, the effectiveness of the training was evaluated using a measure of web skills competency, a user satisfaction survey, a cognitive task analysis and an interaction analysis.

Results

Patients not only reported that the training was beneficial, but also showed significant improvements in their ability to effectively perform tasks. Our qualitative evaluations scrutinizing the interaction between the trainer and patient showed that while there was a learning curve for both the patient and trainer when negotiating the shared workspace, the mutually visible pointer used in REPETE enhanced the computer-mediated instruction.

Conclusions

REPETE is an effective remote training tool for older adults in the telemedicine environment. Patients demonstrated significant improvements in their ability to perform tasks on their home telemedicine unit.

Keywords: telemedicine, older adults, training

1 Introduction

Despite best efforts to make home healthcare technology easy to use, patients frequently require multiple training sessions to master the skills needed to use these devices, particularly devices that include web access. In geographically distributed telemedicine projects such as IDEATel [1, 2], where telemedicine can be most advantageous, home-based training sessions can be costly because of the time needed to reach the patients' homes [3]. As a result, the ability to provide timely assistance or on-site training can be seriously disrupted.

A potentially viable solution to this problem would be to develop a method to remotely educate patients to use their home healthcare devices. Remote training may be more cost effective, and may be able to provide for more frequent training, as well as provide assistance on demand. Conventional telephone support solutions are frequently unsatisfactory in populations with limited computer experience. For example, elderly telemedicine patients frequently lack the expressive vocabulary for speaking the language of graphical user interfaces such as scroll bars and other widgets [4]. This is compounded by problems associated with older adults' lack of visual acuity and limits in their ability to selectively attend to relevant screen features [5]. Therefore, the patient may not be able to connect the trainer's words to the images on the screen. Due to the difficulty of orienting users to specific objects on the computer screen, success with training using only verbal descriptions is likely to be very limited.

To address these difficulties, the REmote Patient Education in a Telemedicine Environment (REPETE) architecture [6, 7] was developed for training patients in a telemedicine environment. Using a collaborative workspace and methodologies commonly used in the field of Computer-Supported Cooperative Work (CSCW) [8], this architecture provides a remote control mechanism for HTU training of telemedicine patients without requiring a trainer to travel to the patient's home.

The focus of this paper is on the methods and evaluation tools developed to study the effectiveness of the training performed using REPETE and the results of those evaluations. Below, we briefly introduce the REPETE architecture and the prior research supporting the remote training approach used in REPETE.

2 Background

2.1.1 IDEATel

The REPETE remote training architecture was developed to provide an additional training method in the Informatics for Diabetes Education and Telemedicine (IDEATel) project. The IDEATel project is a randomized controlled study of the efficacy of a home telemedicine system for diabetes care of underserved rural and inner-city residents, supported by a cooperative agreement from the Centers for Medicare and Medicaid Services [1]. The subjects in the study were Medicare beneficiaries with diabetes living in medically underserved areas of New York State. The project was initially funded for 4 years beginning in 2000 and was renewed for an additional 4-year cycle.

Patients in the intervention group received an HTU, a custom computer that connects to the Internet using dialup networking over a telephone line. The HTU provided the following functions: synchronous videoconferencing, electronic transmission of finger-stick glucose and blood pressure readings, secure messaging, web-based review of one's clinical data, and access to web-based educational materials. These functions were designed to enable patients to self-monitor their health and to facilitate accurate communication of a patient's health status to clinicians [9]. Patients had regular video televisits with nurse case managers [10].

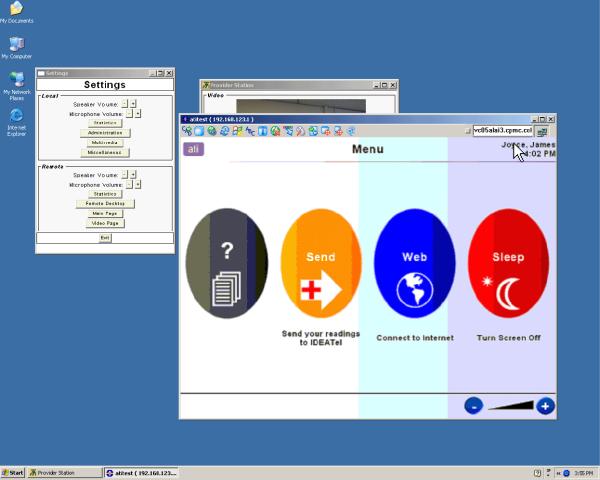

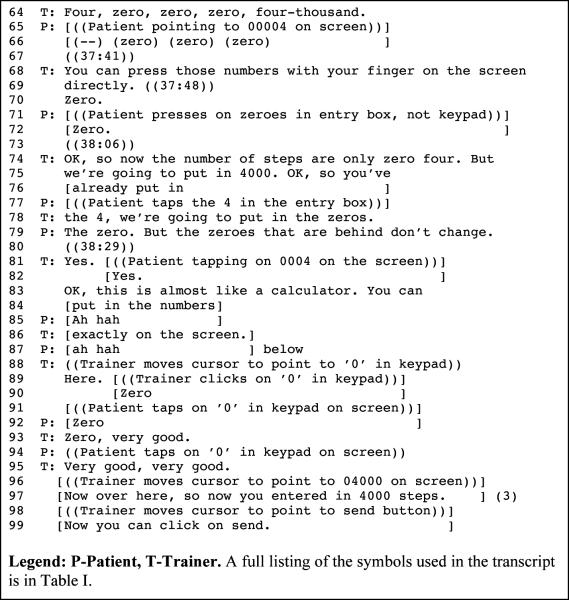

The HTU was designed from the ground up to be used by older adults with minimal computer experience and training. Two generations of hardware and software were used in the IDEATel project. Research performed on the Generation 1 HTU demonstrated that, despite efforts to reduce complexity, many older adults still had considerable difficulty using the system, largely because they had difficulty learning to use the mouse and mastering other aspects of the graphical user interface [4]. Based on these lessons, the new HTUs (Generation 2 HTU) included an integrated touch screen to minimize the need for a mouse when interacting with the HTU. The patient portal site, myIDEATel, was also redesigned using lessons learned in studies of the Generation 1 portal, Diabetes Manager, as well as from a variety of other studies. Various researchers have proposed computer system design guidelines for older adults [11-13], many of which target the proper design of websites for older adults [14, 15]. These recommendations include increasing the size, brightness, and contrast of visual objects, and they were incorporated into the myIDEATel design. Figure 1 shows a patient interacting with myIDEATel on a Generation 2 HTU.

Figure 1.

Patient on Generation 2 HTU using myIDEATel to send a message to a provider

Despite the redesign of the system to better suit the needs of older adults, many IDEATel patients still had trouble using the myIDEATel portal. Patients received some basic training on how to use the Internet and the portal at the time of installation, but many could not use the portal independently without additional training. In addition, because the portal software had been redesigned, many patients felt that they needed additional training to use the new portal.

2.1.2 Cognitive Aging

It is worth noting that adults undergo age-related changes in cognitive and perceptual-motor function that can affect their use of computers. For example, older adults have slower dynamic visual attention, requiring more time when scanning the environment and shifting focus from one place to another [13]. Older adults are also more affected by stimuli such as flashing lights, which more easily capture their attention [5]. In addition, older adults have poorer visual acuity in the periphery [13]. With reduced visual acuity, older adults may have more difficulty segmenting the areas of the screen that depict windows, widgets, and even the cursor. This difficulty must be taken into account when developing a remote training solution. Evidence also indicates that older adults show significant declines in effortful (serial) visual search tasks, which require effortful processing and focused attention. In contrast, older adults do not show significant declines in automatic (parallel) visual search tasks, such as detecting motion [16]. Many studies have indicated that motion can be very effective in capturing attention, even in the periphery of the visual field [17-20], which may be valuable when designing a remote training solution for older adults.

2.1.3 CSCW

Based on the prior research in cognitive aging, we hypothesized that techniques developed in Computer Supported Cooperative Work (CSCW) could enhance the effectiveness of a remote training solution. CSCW is an interdisciplinary field that “examines computer-assisted coordinated activities such as problem solving and communication carried out by a group of collaborating individuals” [21]. It has been suggested that CSCW be applied to the design of medical information systems [22]. Many features in these collaborative environments are used to increase the level of common ground available to their users. Common ground is the mutual knowledge, beliefs, and assumptions used in the communication process [23]. Clark and Brennan discussed common ground and how it is established in the context of a variety of media, including face-to-face, telephone, video teleconference, terminal teleconference, answering machines, e-mail, and letters. They described it as the coordination of both the content and the process of what the participants are doing [23]. A number of articles have discussed difficulties in distributed collaboration environments related to workspace awareness, a factor in establishing common ground [24-26]. Gutwin and Greenberg define workspace awareness as “the up-to-the-moment understanding of another person's interaction with the shared workspace” [24]. Berlage used a mouse to generate mutually visible remote pointer (telepointer) gestures in a shared workspace to support and accelerate communication between collaborating clinicians in a diagnostic cardiology task [27].

2.1.4 REPETE

Based on cognitive aging studies [28-30], using motion and verbal cueing to direct attention to the appropriate area on the screen is hypothesized to be useful in remote training. This training method leverages the types of cueing studied in CSCW by using the mouse for deictic referencing (e.g. “this one,” “that one,” “here”) and gestures in addition to voice communication. However, to date, there has been little if any formal research studying the effectiveness of using these CSCW techniques to support training.

Using knowledge gained from the cognitive aging and CSCW literature as a starting point, we developed an architecture and an approach to remote training called REPETE. A central aspect of REPETE is the ability of a trainer to remotely view and control a patient's HTU screen in order to demonstrate and assist with the operation of the HTU. REPETE was designed as a vehicle for providing personalized training and reiteration of previously received training, as suggested by recommended practices for providing computer training to older adults [15, 31]. REPETE also had to work within the constraints of the home telemedicine environment, including dialup telephone networks.

REPETE provides the trainer with an ability to observe the actions of the patient, take over control of the HTU and direct attention to different areas of the screen. It leverages the existing H.323 video-conferencing capabilities used by many home telehealth devices and simultaneously supports voice communication alongside the remote control capabilities over a single telephone line. This approach allows the trainer to use verbal cues to direct attention and describe the actions being taken by the trainer. A screenshot of the trainer's workspace is shown in Figure 2.
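The paper does not describe how REPETE arbitrates input when both the trainer and the patient move the pointer. As a minimal sketch, assuming a single shared pointer with no locking (the most recent event always wins), the model below shows how near-simultaneous input from the two parties could be logged as a control clash. `PointerEvent`, `SharedCursor`, and the 0.5-second `clash_window` are hypothetical and are not part of REPETE.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PointerEvent:
    source: str  # "trainer" or "patient" (hypothetical labels)
    t: float     # seconds since the session started
    x: int
    y: int


@dataclass
class SharedCursor:
    """Hypothetical single pointer that both trainer and patient can move.

    There is no lock: the most recent event always moves the pointer. An event
    from one party that arrives within `clash_window` seconds of an event from
    the other party is logged as a control clash ("tug of war").
    """
    clash_window: float = 0.5
    position: tuple = (0, 0)
    owner: Optional[str] = None  # party that produced the latest event
    last_t: float = 0.0
    clashes: list = field(default_factory=list)

    def apply(self, ev: PointerEvent) -> tuple:
        if (self.owner is not None and ev.source != self.owner
                and ev.t - self.last_t <= self.clash_window):
            self.clashes.append((self.last_t, ev.t))
        self.position = (ev.x, ev.y)
        self.owner, self.last_t = ev.source, ev.t
        return self.position
```

Logging clashes in this way is one possible means of locating "tug of war" episodes in a session log; an explicit floor-control lock would prevent them, at the cost of requiring a hand-off whenever control changes.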

Figure 2.

REPETE Trainer's Workspace. Figure shows the trainer's workspace with a view of the remote patient's HTU with the main HTU screen visible.

The optimizations and other technical aspects of REPETE, such as optimizing it to work over a dialup telephone connection, are described and evaluated in a previous study [32]. Although the telemedicine system was capable of videoconferencing, bandwidth limitations prevented the trainer from viewing the patient during a remote training session. In addition, because a video overlay would take up a significant portion of an interface whose screen real estate was already very constrained, the patient was also unable to view the trainer.

This article discusses the evaluation of the REPETE architecture with patients. We evaluated the architecture by attempting to train IDEATel patients to perform the various tasks supported by the IDEATel data review portal, myIDEATel, over a telephone line.

The objectives of this study were as follows: 1) to understand effective methods for establishing computer mediated remote training with an older adult home telemedicine population; 2) to quantify the effectiveness of computer mediated remote training; and 3) to determine participants' satisfaction with remote training using REPETE.

3 Methods

3.1 Methodology Overview

In this evaluation, ten patients were selected from the New York City IDEATel intervention subgroup. Using a web skills competency instrument, we performed a pre- and post-test of a series of web skills. The pre- and post-training evaluations of their web skills competency are compared as a measure of the efficacy of remote training.

The subjects' interactions with the system were videotaped throughout the training sessions. The videotapes made it possible to perform in-depth analyses of the interaction, to identify difficulties in the training model, and to inform future studies. Lastly, the subjects were interviewed after the study and asked to judge their satisfaction with the training methods.

The videotapes of the training sessions were analyzed using a combination of methods: a) a web skills competency benchmark to assess changes in performance, b) a cognitive task analysis to characterize system tasks in terms of complexity and potential problems, and c) an interaction analysis to characterize the communication process between trainer and patient. The evaluation instruments used in this study are described in further detail in Section 3.3.

3.2 Subject Recruitment

The study participants were drawn from IDEATel participants living near Columbia University Medical Center. From this smaller patient list, subjects who needed more training were identified and contacted by IDEATel staff via telephone. The study procedure was explained. We emphasized that the goal was to try a new feature of the HTU and to see how well the new feature performed. It was also explained that as part of their participation in the study, they would receive additional training on how to use their HTU. They were also informed that participation in the study was completely voluntary and would not affect their status in the IDEATel study or any future care they might receive at the Columbia University Medical Center. The study was approved by the Columbia University IRB and written informed consent was obtained from all participants.

3.3 Evaluation Tool Development

3.3.1 Overview

The training sessions focused on a set of core tasks a patient would perform while using the myIDEATel website, and they were videotaped. The sessions were analyzed using a combination of qualitative evaluation methods and a user satisfaction survey.

3.3.2 Web Skills Competency Instrument

We had previously developed a set of training benchmarks (performance levels on basic tasks) based on a cognitive task analysis (CTA) [33]. In applying the tool to this question, the first step was to perform a CTA of the IDEATel patient portal website, myIDEATel. The tasks, goals, and actions identified in the CTA were used as a list of steps that a subject would need to perform. This list of steps defined the optimal approach to completing each task and was used as the basis of comparison for the steps the patient performed. Based on this analysis, five archetypal tasks were selected for the current study: 1) logging into the myIDEATel website; 2) reviewing monitoring data; 3) entering pedometer data; 4) sending messages to a provider; and 5) reviewing messages from a provider. These tasks comprise the core activities an IDEATel patient would perform when interacting with the myIDEATel website. Based on pilot testing and a desire to limit the possibility of subject fatigue, we elected to focus on tasks 2-4.
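The comparison between a patient's steps and the optimal CTA step list can be sketched with Python's standard `difflib.SequenceMatcher`, which aligns two sequences and reports the steps that were missed or added. This is an illustrative assumption rather than the procedure used in the study, and the step names in the usage example are hypothetical.

```python
from difflib import SequenceMatcher


def compare_steps(optimal, observed):
    """Align observed steps with an optimal CTA step list.

    Returns (missed, extra): steps in the optimal path the patient skipped,
    and steps the patient performed that are not on the optimal path.
    """
    missed, extra = [], []
    matcher = SequenceMatcher(None, optimal, observed, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag in ("delete", "replace"):
            missed.extend(optimal[i1:i2])
        if tag in ("insert", "replace"):
            extra.extend(observed[j1:j2])
    return missed, extra


# Hypothetical pedometer-entry steps, for illustration only.
optimal = ["open pedometer page", "select date", "type step count", "click submit"]
observed = ["open pedometer page", "type step count", "click back", "click submit"]
missed, extra = compare_steps(optimal, observed)
```

Here `missed` is `["select date"]` and `extra` is `["click back"]`. Counting such deviations is one way a coder might document departures from the optimal path alongside the ordinal benchmark score.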

3.3.3 Web Skills Competency Benchmark

A web skills competency benchmark was developed and scored on an ordinal scale from 1 to 5, where 1 indicates that a patient is unable to perform the task and 5 indicates that the patient can perform the task autonomously. The primary factor contributing to a patient's score was the degree to which the patient could perform the task autonomously; autonomy, in turn, depends on the patient's self-efficacy and confidence in using the system as well as the patient's ability to recover from errors. The original study procedure intended for the patients to be scored before and after training. However, pilot testing of the training methodology indicated that few patients, if any, would be able to initiate these tasks without assistance before training. For this reason, rather than score all patients as unable to perform any of the tasks before training, evaluations of competency during the first pass through the training material were used as pre-scores. Post-training scores are evaluations of competency after training, once the patients felt that they were ready to try the task themselves.
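The scoring rule can be sketched as follows, assuming that each task's benchmark scores are recorded in the order of the training passes: the first guided pass supplies the pre-score and the final pass supplies the post-score. The dictionary layout, subject IDs, and the name `pre_post_scores` are hypothetical.

```python
BENCHMARK_MIN, BENCHMARK_MAX = 1, 5  # 1 = unable to perform, 5 = autonomous


def pre_post_scores(passes):
    """Map {(subject, task): [score per pass, in order]} to {(subject, task): (pre, post)}.

    Pre = score on the first guided pass; post = score on the last pass.
    """
    result = {}
    for key, scores in passes.items():
        if not scores:
            raise ValueError(f"no scored passes for {key}")
        for s in scores:
            # Ordinal benchmark: only the integers 1..5 are valid.
            if s not in range(BENCHMARK_MIN, BENCHMARK_MAX + 1):
                raise ValueError(f"score {s} for {key} is outside 1-5")
        result[key] = (scores[0], scores[-1])
    return result


# Hypothetical scores for one subject on tasks 2 and 3.
pp = pre_post_scores({("S01", 2): [2, 3, 4], ("S01", 3): [3]})
```

Under this rule, a task scored on only one pass yields identical pre- and post-scores and therefore contributes a zero difference to any paired test.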

3.3.4 Conversation and Interaction Analysis

In addition to coding the videos with the web skills competency benchmark, sections of the interaction between the trainer and the patient were transcribed and annotated following the conversation analysis notation developed by Gail Jefferson [34, 35]. Because REPETE is a communication tool, analyzing these transcripts with conversation and interaction analysis techniques provided a uniform and reliable way of observing how successfully the trainer and patient communicated through conversation and telepointer gestures. The subset of symbols used in these transcripts is shown in Table I. The fine-grained detail of these transcripts allows for a very detailed microanalysis, which helped elucidate whether communication between the trainer and patient had succeeded.

Table I.

Symbols Used in Conversation Analysis

| Symbol | Description |
|---|---|
| [ ] | used for overlapping or simultaneous speech |
| (n) | used to indicate n seconds of silence |
| - | cut-off or self-interruption |
| (( )) | transcriber's description of events |
| (word) | uncertainty about word on transcriber's part |

Due to the highly gestural nature of the training, it was necessary to extend the notation to include non-vocal communication such as gestures and actions being made on the computer. Throughout the training sessions, many interesting events were not expressed verbally but in nonvocal behavior and communication, such as the gaze of the patient towards certain areas of the screen and the on-screen gestures used by the trainer. Other researchers have investigated gaze direction [36] and hand gestures [37] and have found ways to incorporate these events into their transcripts. The approach used in this research is to encode the gaze and gestures used in the training sessions as transcriber's descriptions of events, in conjunction with the notation for overlapping and simultaneous speech. For gestures or gaze overlapping with speech, a comment describing the gesture or gaze was added and aligned with the corresponding speech using square brackets ([ ]) on adjacent lines. Sessions conducted in Spanish were translated into English before being transcribed. The translations were conducted by a native Spanish speaker, fully fluent in both English and Spanish and well acquainted with the IDEATel project.
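The bracket-alignment convention for gestures and gaze can be illustrated with a small rendering helper. This is a hypothetical sketch (`align_overlap` is not a tool used in the study) that places the opening bracket of the speech line and of the transcriber's description at the same column; only the onset of the overlap is marked, and the example utterance is invented.

```python
def align_overlap(speaker, speech, onset, gesture):
    """Render one speech turn and an overlapping non-vocal event.

    `onset` is the character index in `speech` where the gesture or gaze
    begins. Both lines receive an opening '[' at the same column, and the
    event is written as a transcriber's description in (( )).
    """
    if not 0 <= onset <= len(speech):
        raise ValueError("onset must fall within the utterance")
    prefix = f"{speaker}: "
    speech_line = prefix + speech[:onset] + "[" + speech[onset:]
    event_line = " " * (len(prefix) + onset) + "[((" + gesture + "))"
    return speech_line, event_line


# Hypothetical trainer (T) turn with an overlapping telepointer gesture.
s, e = align_overlap("T", "you scroll down with this arrow", 21,
                     "moves pointer to scroll arrow")
print(s)
print(e)
```

Printed one above the other, the two lines show the gesture starting at the word "this", matching the adjacent-line bracket alignment described above.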

3.3.5 User Satisfaction Tool

In addition to qualitative evaluation benchmarks, in order to judge the satisfaction of patients with being trained using REPETE, we also developed a second evaluation tool, the user satisfaction survey. In this survey we interviewed the patients concerning their experience with training using the REPETE architecture. Questions concerning comfort with computers were selected from preexisting metrics for determining comfort with computers such as the Attitudes Towards Computers Questionnaire [38] and the Computer Attitude Scale [39]. Questions concerning training feedback were based on questions asked in the Computers for Elder Learning course [40].

3.4 Study Procedure

After signing consent forms, the patients were audiotaped for a pre-training interview. The patients were asked questions concerning their demographics such as level of education and how often they used the computer. They were also asked five questions to gauge their comfort with computers.

After the pre-training interview, the remote training session began. The remote training sessions were both videotaped and audiotaped. The first time the patients were guided through the tasks served as pre-training evaluations for the web skills competency benchmark. If the patient was guided through a task multiple times, the last time through the training served as the post-training evaluation. The pre- and post-training evaluations of their web skills competency were compared as a measure of the efficacy of remote training.

The patients were trained on the five tasks of the web skills competency instrument discussed in Section 3.3.2 using the remote training method. In order to limit subject fatigue, pre-training and post-training measurements were only made for tasks 2-4. A new feature in myIDEATel (as compared to the Phase I website) was the use of a calendar to set ranges for dates. For data retrieval tasks, the patients were presented with two calendars, one to indicate the start date and a second to indicate the end date of the date range for which the patient would retrieve measurements. After training, the patients were asked to repeat these tasks without assistance. Lastly, in order to evaluate acceptability of the remote training methodology, they were asked five questions regarding their satisfaction with the REPETE training method and two questions regarding how well they were able to hear the trainer and see what occurred on the screen. These questions are shown in the Appendix. We reviewed the videotapes and analyzed how well the user completed each task.

All subjects were familiar with the IDEATel HTU and had previously received some training on how to use the old data review portal, Diabetes Manager. The remote training sessions coincided with the introduction of a new portal, myIDEATel, which provided several new functions. The interface for the new myIDEATel portal was designed to be similar in structure to the older Diabetes Manager; the most notable addition to the data review website was a pedometer result entry module. The subjects had not received training on the myIDEATel website and had limited experience using it. Subjects were assessed pre- and post-training using the web skills competency instrument. For three subjects, scores assigned using the instrument were also validated by a second rater, and when the two raters' scores disagreed, a third rater was used to arbitrate. The scores of the two raters were compared using a one-way repeated measures ANOVA in Stata/SE 9.1. This statistical measure was selected instead of the kappa statistic because we were primarily interested in the direction and magnitude of the change from pre- to post-test scores rather than in exact matches. Given the small number of subjects, the analysis is best viewed as exploratory. All interactions of the patient with the HTU were videotaped and audiotaped.
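A one-way repeated measures ANOVA across raters can be sketched as below, assuming that the raters form the within-subject factor and that each scored task pass is treated as a subject. The function computes only the F statistic and its degrees of freedom; a p-value would come from the F distribution (for example, `scipy.stats.f.sf`). This is a generic sketch, not the Stata procedure used in the study, and `rm_anova_f` is a hypothetical name.

```python
def rm_anova_f(scores_by_rater):
    """One-way repeated measures ANOVA with raters as the within factor.

    scores_by_rater: k equal-length lists; position i in every list is the
    same scored item (e.g. one task pass rated from video).
    Returns (F, df_rater, df_error).
    """
    k = len(scores_by_rater)
    n = len(scores_by_rater[0])
    if k < 2 or n < 2 or any(len(r) != n for r in scores_by_rater):
        raise ValueError("need >= 2 raters scoring the same >= 2 items")
    grand = sum(map(sum, scores_by_rater)) / (k * n)
    rater_means = [sum(r) / n for r in scores_by_rater]
    item_means = [sum(r[i] for r in scores_by_rater) / k for i in range(n)]
    ss_total = sum((x - grand) ** 2 for r in scores_by_rater for x in r)
    ss_rater = n * sum((m - grand) ** 2 for m in rater_means)  # between raters
    ss_item = k * sum((m - grand) ** 2 for m in item_means)    # between items
    ss_error = ss_total - ss_rater - ss_item                   # rater x item residual
    df_rater, df_error = k - 1, (k - 1) * (n - 1)
    if ss_error <= 0:
        raise ValueError("no residual variance; F is undefined")
    return (ss_rater / df_rater) / (ss_error / df_error), df_rater, df_error


# Hypothetical scores from two raters on the same three items.
f, df1, df2 = rm_anova_f([[1, 2, 3], [2, 2, 5]])
```

With two raters, this F equals the square of the paired t statistic on the rater differences; for the example above both give 3.0 with (1, 2) degrees of freedom.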

4 Results

The mean age of the subjects in the remote training evaluation study was 73.8. The male/female ratio was skewed, with 80% of the subjects being female. The level of education amongst the subjects varied: 50% had less than a high school education, 20% were high school graduates, and 30% had some college or more. One subject, Subject 3, had only a primary school education in the Dominican Republic. Because of her low level of education, her daughter assisted her in nearly all tasks and the pair was considered as a single unit. None of the patients studied had a computer other than the one provided by the IDEATel project. However, one subject, Subject 4, mentioned that she had access to a computer at her senior residence and that she had taken computer classes there.

All ten subjects reported that they thought that the training difficulty was just right, the training speed was just right, the training was well organized, and that the training was interesting. All subjects also felt that REPETE training helped them learn.

The interview guide appears in the Appendix. Its statements were scored on a 5-point Likert scale, with 1 being strongly disagree and 5 being strongly agree. Patients did not have trouble hearing the trainer or seeing what was on the screen. For the statement “It was easy to hear the instructor,” the mean response was 4.8. For the statement “It was easy to see what happened on the screen,” the mean response was 4.55. During remote training, occasional audio dropout occurred. However, it was never mentioned as a problem, likely because it usually occurred during web page downloads, when little dialogue took place between the patient and the trainer because both were waiting for the system to respond and refresh the screen before continuing the training session.

During the interviews, the patients were asked 5 questions assessing their agreement with statements concerning their comfort with computers before and after the training. Overwhelmingly, the subjects believed that working with computers was worthwhile and enjoyable. Most also felt comfortable with computers. On the statement of whether computers made them nervous, the responses were mixed. With regard to whether computers were confusing, nearly all of the responses in the pre-training condition were neutral. Given the short duration of the training, we did not expect their comfort with computers to change significantly, but we believed that the information would be worth investigating. Nevertheless, after the training, six of the ten subjects shifted in the desired direction, disagreeing with the statement “Computers are confusing.”

4.1 Results from web skills competency benchmark

Results from the web skills competency benchmark were generally positive. The general trend from pre- to post-training was an increase in web competency, and there were no decreases in competency. A Wilcoxon signed-rank test [41] was performed to compare pre- and post-training scores; the values were computed using Stata/SE 9.1. For the data retrieval (Task 2) and data entry (Task 3) tasks, there was a significant difference in the competency of the patients pre- and post-training (Z=-2.414, p=0.016 and Z=-2.53, p=0.011, respectively). However, for Task 4, the messaging task, there was no significant difference in web skill competency between pre- and post-training (Z=-1, p=0.317).
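The signed-rank test can be sketched in pure Python using the normal approximation. This is a generic textbook version, not a reproduction of Stata's `signrank`: it drops zero differences and corrects the variance for tied ranks, whereas Stata may handle zero differences differently, so its Z values need not match this sketch exactly. Differences are taken as pre minus post, so a negative z indicates that post-training scores tended to be higher.

```python
import math
from collections import Counter


def average_ranks(values):
    """1-based ranks of `values`, with tied values sharing their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # average of positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def wilcoxon_signed_rank(pre, post):
    """Normal-approximation Wilcoxon signed-rank test on paired scores.

    Zero differences are dropped, the variance is corrected for tied ranks,
    and no continuity correction is applied. Returns (z, two_sided_p).
    """
    diffs = [a - b for a, b in zip(pre, post) if a != b]
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0  # no change at all: no evidence of a difference
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24
    var -= sum(t ** 3 - t for t in Counter(abs(d) for d in diffs).values()) / 48
    z = (w_plus - mean) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p
```

For instance, with hypothetical scores in which nine of ten patients are unchanged and one improves by a point, `wilcoxon_signed_rank([3] * 10, [3] * 9 + [4])` gives z = -1 and p ≈ 0.317. With only ten pairs, an exact permutation p-value may be preferable to the normal approximation.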

Interrater reliability was assessed by having a second rater code videotapes from three subjects (S04, S07, and S09). The scores from the first and second raters were compared using a one-way repeated measures ANOVA in Stata/SE 9.1. For these three subjects, the scores of the two raters did not differ significantly (F=3.0, df=5, p=0.127; F=2.2, df=5, p=0.204; and F=1.0, df=5, p=0.5, respectively). All scores from the two raters were within 1 point of each other: 44.4% of the paired scores differed by zero points and 55.6% differed by 1 point. Although small, the differences clustered around the evaluation of Task 3; for this task, the first two raters disagreed 4 times out of 6. A third rater was used to arbitrate these disagreements, and in those cases rater 3 agreed with rater 1 twice and with rater 2 twice. This indicates that the discrepancy between the two raters is likely due to a thresholding problem between categories, in which raters score one point above or below each other because the item being scored is borderline between two adjacent categories. New Z and p-values calculated for Task 3 using the arbitrated scores (Z=-2.449, p=0.014) still indicate a significant difference between pre- and post-training.
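The agreement summary and the arbitration step can be sketched as below, assuming that wherever raters 1 and 2 disagree, the arbitrated score is the one assigned by rater 3. The function names and example scores are hypothetical.

```python
from collections import Counter


def agreement_profile(r1, r2):
    """Percentage of paired scores at each absolute rater difference."""
    if not r1 or len(r1) != len(r2):
        raise ValueError("raters must score the same, non-empty set of items")
    counts = Counter(abs(a - b) for a, b in zip(r1, r2))
    return {diff: 100 * c / len(r1) for diff, c in sorted(counts.items())}


def arbitrate(r1, r2, r3):
    """Keep scores on which raters 1 and 2 agree; otherwise take rater 3's."""
    return [a if a == b else c for a, b, c in zip(r1, r2, r3)]


# Hypothetical benchmark scores from three raters on four items.
rater1, rater2, rater3 = [3, 4, 2, 5], [3, 5, 2, 4], [1, 5, 1, 5]
profile = agreement_profile(rater1, rater2)
final = arbitrate(rater1, rater2, rater3)
```

Here `profile` is `{0: 50.0, 1: 50.0}` and `final` is `[3, 5, 2, 5]`; the arbitrated scores could then be passed back through the signed-rank test to recompute Z and p.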

The results of this analysis indicate that the subjects exhibited measurable positive changes in competency for the data retrieval (Task 2) and data entry (Task 3) tasks. However, for Task 4, there was no measurable change in competency pre- and post-training. The reasons for this lack of change in competency are discussed more fully in later sections.

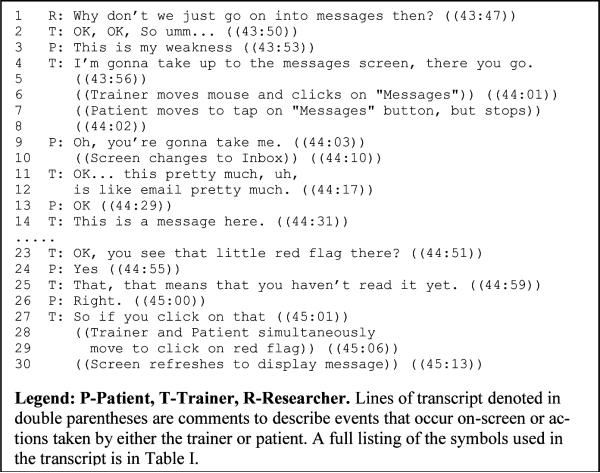

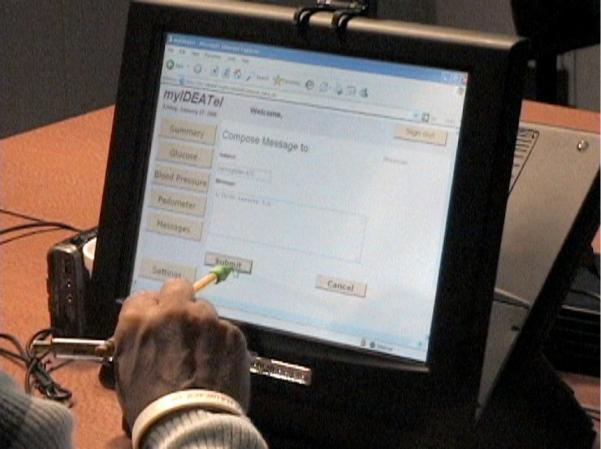

4.2 Results from Interaction Analysis Microcoding

Detailed transcripts and annotations using the notation based on that developed by Jefferson were generated. A full transcript of one session was produced, and partial transcripts were generated from short sections of four other training sessions that were deemed to contain interesting artifacts concerning the training process. Transcripts from short sections of the remote training are included and discussed in detail below.

Figure 3 illustrates the interaction of a patient being shown the messaging task. Prior to training, the user was unfamiliar with this task and was unable to compose a message. After training, the subject was able to complete the task and compose a message to his provider without mistakes. The interaction illustrates various aspects of the remote training scenario. For example, in lines 4-10 of Figure 3, when the trainer was attempting to demonstrate the messaging component of myIDEATel, both the trainer and patient attempted to control the system simultaneously, producing a temporary clash of control. In lines 4 and 9, turn-taking was then negotiated through dialog between the trainer and patient. In line 23, when the trainer was describing the red flag denoting a new message, he neglected to use the telepointer to gesture and provide additional visual cues to the patient. This example shows that this remote training method requires the trainer to learn a number of techniques for the interaction to be optimal. At the end of the task, in lines 28-29, the trainer and patient had another tug-of-war over control of the system.

Figure 3.

Partial transcript of dialog and interaction between trainer and patient. In this transcript, the trainer is teaching the patient how to read a message from his provider.

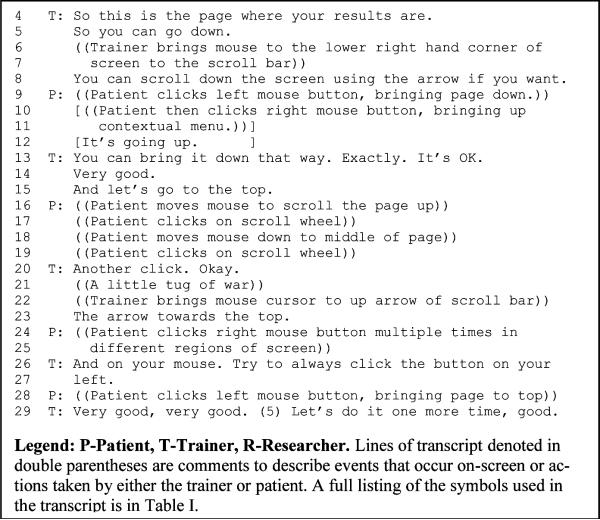

Figure 4 shows a transcript of an interaction in which the trainer is demonstrating to the patient how to scroll up and down the screen. In lines 5-8, the trainer describes how to scroll down the web page: in lines 6-7, the trainer uses the cursor to show the patient where to look on the screen, and in line 8, the trainer tells the patient that she can scroll down using the arrow. In lines 9-11, the patient tries to use the mouse to click on the arrow in the scroll bar, clicking with both the left and right mouse buttons. In lines 13-29, the patient is guided to scroll the page back up to the top. In lines 16-19 and 24-25, the patient does not consistently click the left mouse button; rather, she clicks the left mouse button, the right mouse button, and the scroll wheel. The trainer realizes that the patient is unaware that she needs to click the left mouse button, and in lines 26 and 27, tells the patient to always use the left mouse button. In addition, in lines 21 and 22, the trainer and the patient have a “tug of war,” fighting over control of the cursor. This “tug of war” arose because the patient was trying to click in the wrong location on the screen while the trainer was trying to direct her to click in the right location.

Figure 4.

Excerpt from transcript of interaction between Patient (P) and Trainer (T), with trainer demonstrating how to scroll on a web page.

This transcript is interesting for a number of reasons. Although the trainer was unable to see the patient or the patient's actions directly, the trainer was able to infer from the on-screen actions that the patient did not know to use the left mouse button. For example, the trainer could tell that the wrong mouse button was being pressed from the contextual menu triggered by the right mouse button and from an on-screen icon indicating that the scroll button had been pressed. The trainer and the patient were also able to negotiate control over the cursor without any verbal exchanges.

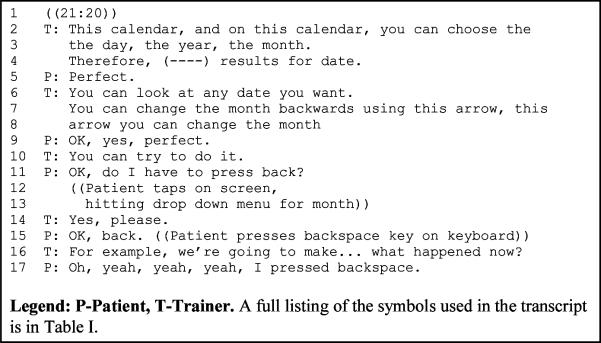

Figure 5 shows an excerpt from a transcript of a trainer guiding a patient through the process of choosing a date in the calendar. In lines 2-6, the trainer describes the task that the patient is about to do. In lines 7-8, the trainer explains how to go to the previous month in the calendar, which is to tap an arrow on the screen pointing to the left (backwards). In line 9, the patient acknowledges that she understands. In line 10, the trainer instructs the patient to try going to the previous month. In line 11, the patient asks whether she needs to press back. In lines 12-13, the patient taps on the screen using the eraser of the pencil, hitting the drop-down menu for choosing the month. In line 14, the trainer confirms that the patient needs to tap the back arrow. In line 15, the patient says that she is hitting back, and presses Backspace on the keyboard. In line 16, the trainer starts to describe what they are going to do next and then notices that something is wrong. In line 17, the patient tells her what she had pressed.

Figure 5.

Excerpt from transcript of interaction between Patient (P) and Trainer (T), with patient trying to change month.

This excerpt illustrates some of the advantages and disadvantages of training using REPETE. Because the trainer is not sitting next to the patient, she was unable to prevent the patient from pressing the Backspace key. Interestingly, in lines 15-16, the trainer adjusts for the delay in viewing the patient's screen remotely and begins, in anticipation, to give instructions for the next step. She is then surprised by what she sees on her screen. Without the remote viewing capability of REPETE, which contributes significantly to establishing common ground between the patient and the trainer, the trainer would not have known that the patient had taken an unexpected action and that the system had behaved unexpectedly. With telephone support alone, the training would probably have continued for quite some time before the trainer realized that the patient was looking at a different screen.

Figure 6 shows a partial transcript of a patient being instructed on how to enter the number of steps from her pedometer into the myIDEATel web site. The trainer has instructed the patient to enter the number 4000 as the pedometer reading. At the beginning of this excerpt, the patient has already entered the digit 4. In lines 64-70, the trainer instructs the patient to enter the zeroes. In lines 71-81, the patient taps on the digits displaying the entered steps instead of the digits on the keypad. In lines 74-78, the trainer explains to the patient that she has entered only 4 steps as the reading. At this point, the trainer does not recognize the source of the patient's confusion and assumes that she simply does not know how to enter the remaining digits. In lines 82-90, the trainer describes how to tap the on-screen buttons to record the pedometer steps. The trainer then gestures, pointing to the keypad, and demonstrates its use by clicking on the ‘0’. In lines 91-94, the patient enters the remaining zeroes. In lines 95-99, the trainer explains to the patient that she has entered 4000 steps and that they are now going to submit the result.

Figure 6.

Excerpt from transcript of interaction between Patient (P) and Trainer (T), with patient trying to enter steps.

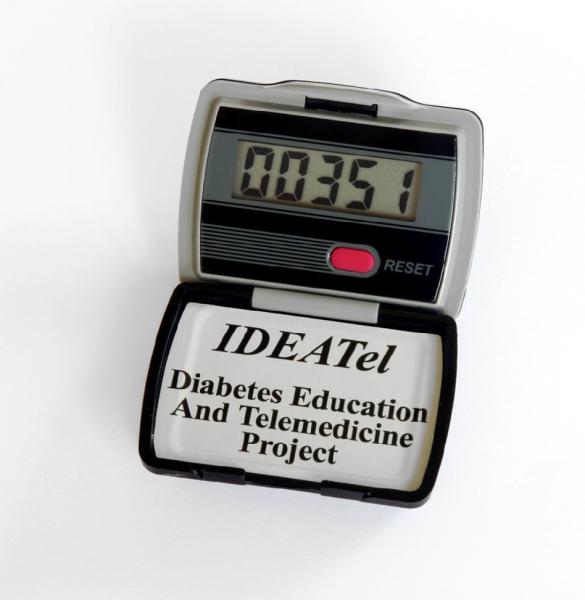

From this excerpt, we can make a number of interesting observations. Based on the corresponding video, as well as the patient's comment that “the zeroes behind don't change,” the patient appears to have been confused by what she was seeing on the screen. One explanation is that the patient was trying to enter the zeroes by tapping on the results display. However, this explanation is unlikely because the patient was able to enter the first digit, 4. A more likely possibility is that the user interface itself was confusing to the patient. The pedometer entry interface was designed to be zero-padded, so that 351 steps would read 00351, similar to how the count appears on the pedometer itself (see Figure 7). The interface was also designed to behave like a calculator, filling the number in from the right, unlike most text boxes, which fill in from the left. The reasoning behind this design was to allow patients to match the entry display to the display on the pedometer. After the trainer demonstrated the interface in lines 88-89 by entering one of the zeroes, the patient did not appear to have any additional trouble completing the pedometer data entry task.
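The zero-padded, right-filling entry behavior described above can be sketched as a small state machine. This is a minimal illustration, assuming a five-digit display (matching the 00351 example) and a keypad that produces one digit per press; the class `PedometerEntry` and its methods are hypothetical and are not the myIDEATel implementation. In this sketch, after the first keypress the display already reads 00004, so leading zeroes are visible before any zero has been entered.

```python
class PedometerEntry:
    """Hypothetical calculator-style entry field for a pedometer reading.

    Digits enter from the right and the display is left-padded with zeroes,
    so 351 steps reads "00351", as described for the myIDEATel interface.
    """

    def __init__(self, width: int = 5) -> None:
        self.width = width  # assumed number of display positions
        self.digits = ""    # digits typed so far, oldest first

    def press(self, key: str) -> None:
        """Handle a keypad press; digits beyond the display width are ignored."""
        if len(key) != 1 or key not in "0123456789":
            raise ValueError("the keypad only produces single digits")
        if len(self.digits) < self.width:
            self.digits += key

    def display(self) -> str:
        """Zero-padded reading shown to the patient."""
        return self.digits.rjust(self.width, "0")

    def value(self) -> int:
        """Numeric step count that would be submitted."""
        return int(self.digits) if self.digits else 0


# Entering 4000, as in the Figure 6 excerpt:
entry = PedometerEntry()
entry.press("4")
print(entry.display())  # 00004
for _ in range(3):
    entry.press("0")
print(entry.display())  # 04000
print(entry.value())    # 4000
```

A text box that fills from the left would instead show "4", then "40", and so on; the calculator-style design trades that familiar behavior for a display that mirrors the pedometer.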

Figure 7.

The IDEATel Pedometer

After the study was completed, logs of myIDEATel utilization were examined. Because data collection for this study spanned six months, the logs were examined between 4 and 10 months after each subject's remote training session. Subjects 1, 2 and 7 used the system substantially after training, and we examined the logs for the actions they performed during September and October 2006. Subject 1 had no logins before training, but had 100 login sessions over the 10-month period after receiving remote training; during September and October, he reviewed his results 16 times. Subject 2 was logged as having 8 myIDEATel sessions during the 3-month period before remote training and 17 sessions over the 9-month period after training; she reviewed her results twice in September and October. Subject 7 logged in 23 times and entered 11 pedometer results over the 5-month period after receiving remote training. In addition, during September and October, she checked for new messages 3 times and reviewed her results once. Although Subject 7 scored only a 3 on the web skills competency instrument for the pedometer task in her post-training assessment, she appears to have been able to enter her pedometer results.
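Because the pre- and post-training windows above differ in length, the raw session counts are easier to compare when normalized to sessions per month. The short calculation below uses only the counts and window lengths reported in the text; the dictionary layout and the function names are illustrative. The length of Subject 1's pre-training window is not reported, but with zero logins the rate is zero regardless, and no pre-training figure is reported for Subject 7.

```python
# Login counts and observation windows as reported in the text.
# Each window is (sessions, months); None means the text gives no figure.
LOGINS = {
    "Subject 1": {"before": (0, None), "after": (100, 10)},
    "Subject 2": {"before": (8, 3), "after": (17, 9)},
    "Subject 7": {"before": None, "after": (23, 5)},
}


def per_month(window):
    """Return sessions per month, or None when the rate cannot be computed."""
    if window is None:
        return None
    sessions, months = window
    if sessions == 0:
        return 0.0  # no logins at all, whatever the window length
    if months is None:
        return None
    return sessions / months


def fmt(rate):
    """Format a rate for printing; None becomes 'n/a'."""
    return "n/a" if rate is None else f"{rate:.2f}/month"


for subject, windows in LOGINS.items():
    before = per_month(windows["before"])
    after = per_month(windows["after"])
    print(f"{subject}: before {fmt(before)}, after {fmt(after)}")
```

On these figures, Subject 1 goes from 0 to 10.00 sessions per month, Subject 2 from about 2.67 to 1.89, and Subject 7 averages 4.60 after training. Session counts alone say nothing about which tasks were performed in each session.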

Unfortunately, on the basis of the log information alone, we cannot know whether these subjects performed these tasks with or without assistance; it is possible that family members or friends helped them. However, based upon our interviews and observations during data collection, we consider it highly likely that these subjects were indeed performing these tasks unassisted. More importantly, for at least Subjects 1 and 7, there was a behavior change: they were going online to review and enter data.

5 Discussion

The REPETE architecture provided an effective remote training platform for home telehealth, producing improvements in both subjective measures of patient attitudes and objective measures of task performance. The effectiveness of the training with older adults also demonstrated that REPETE can operate successfully over narrowband networking.

REPETE allows trainers to observe patient actions in near real time. There were occasional situations in which the trainer and the patient were out of sync. However, there were few, if any, situations in which being out of sync posed any real difficulty to the trainer or the patient; it necessitated some adjustments, but all participants were able to negotiate them.

The trainer was able to control the patient's HTU in the training sessions. This feature was used heavily in each of the training sessions described and evaluated. The trainer was able to speak to the patient while using the mouse cursor to support deictic reference to on-screen objects. The trainers frequently used a smooth circular gesture with the cursor to direct the patients' attention during the training sessions.

There is little literature on remote training over narrowband connections such as dial-up networking. Surprisingly, few, if any, computer training studies with novice users have assessed changes in task performance; many have focused only on changes in user attitudes [40, 42-44]. In addition, there are very few studies, if any, of distance education that is one-on-one, synchronous, or directed at older adults. The vast majority of distance learning studies have focused on asynchronous teaching using web-based tools, such as forums, to support virtual classrooms [45-47]. Other studies have focused on evaluating the level of satisfaction with health portals [48]. Many studies have noted concerns about patients' computer literacy and the frequent frustration they experience when using web-based healthcare resources [49-51].

To the best of our knowledge, this work represents the first documented integration of H.323 voice communication and remote control protocols for remote training, and also the first use of remote control training in conjunction with a touchscreen computer. This combination presents a set of unique challenges. In a mouse-based system, the trainer sees the cursor move before the trainee selects a target. However, while the trainee is reaching toward a touchscreen, no such feedback reaches the trainer; the only feedback arrives at the moment the screen is touched. The trainer must therefore be cognizant of this limitation and continually ask the patient for status. For this reason, the parallel audio channel in the REPETE architecture becomes even more critical.
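The asymmetry between mouse and touchscreen input can be made concrete with a toy event model. The sketch assumes that the trainer's mirrored view shows only two kinds of pointer events, moves and presses; `PointerEvent`, `cues_before_first_press`, and the coordinates are hypothetical and do not describe REPETE's remote control protocol. It counts how many movements the trainer could observe before the trainee's first selection.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PointerEvent:
    """Hypothetical pointer event as relayed to the trainer's mirrored screen."""
    kind: str  # "move" or "press"
    x: int
    y: int


def cues_before_first_press(events: List[PointerEvent]) -> int:
    """Count pointer moves the trainer could watch before the first selection."""
    cues = 0
    for event in events:
        if event.kind == "press":
            break
        if event.kind == "move":
            cues += 1
    return cues


# Mouse: the cursor travels toward the target, then the button is pressed.
mouse_session = [
    PointerEvent("move", 100, 300),
    PointerEvent("move", 220, 260),
    PointerEvent("move", 310, 240),
    PointerEvent("press", 310, 240),
]
# Touchscreen: the finger's approach is invisible; only the touch is relayed.
touch_session = [PointerEvent("press", 310, 240)]

print(cues_before_first_press(mouse_session))  # 3
print(cues_before_first_press(touch_session))  # 0
```

Some touchscreen drivers may also emit a pointer move to the touch point at the moment of contact, but such an event arrives together with the press and so gives the trainer no advance warning.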

The results also demonstrate that in-person training approaches must be modified for the remote training domain. One example was the pointer “tug-of-war”: we observed situations in which both the trainer and the patient were trying to control the pointer at the same time. This tug-of-war was the source of some frustration and confusion. In addition, the trainer frequently used the same pointer to gesture to different areas of the screen. With experience, both trainer and trainee soon learned to recognize these situations. Technology solutions, such as visually indicating who has cursor control or providing a secondary screen-overlay cursor that does not control the remote cursor, might also prove beneficial.
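One way to realize the first suggestion, visually indicating who has cursor control, is a simple floor-control policy. The sketch below is a hypothetical illustration and not part of REPETE: whoever last moved the cursor holds it, and the other party's input is ignored until the holder has been idle for an assumed timeout (1.5 s here, chosen arbitrarily). The `holder` attribute is what an on-screen indicator would display. The class name, its parameters, and the timeout value are all assumptions.

```python
import time
from typing import Callable, Optional


class CursorFloor:
    """Hypothetical floor control for a cursor shared by trainer and patient.

    The party who last moved the cursor holds the floor. Input from the
    other party is ignored until the holder has been idle for
    `idle_timeout` seconds. `holder` could drive an on-screen indicator.
    """

    def __init__(self, idle_timeout: float = 1.5,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.idle_timeout = idle_timeout  # assumed value, not from the study
        self.clock = clock
        self.holder: Optional[str] = None
        self.last_input = 0.0

    def request(self, party: str) -> bool:
        """Return True if `party`'s pointer input should be applied."""
        now = self.clock()
        idle = now - self.last_input
        if self.holder is None or self.holder == party or idle >= self.idle_timeout:
            self.holder = party
            self.last_input = now
            return True
        return False


# Simulated clock so the example is deterministic.
now = [0.0]
floor = CursorFloor(idle_timeout=1.5, clock=lambda: now[0])
print(floor.request("trainer"))  # True: nobody held the floor
now[0] = 0.5
print(floor.request("patient"))  # False: trainer moved 0.5 s ago
now[0] = 2.5
print(floor.request("patient"))  # True: trainer idle for 2.5 s
print(floor.holder)              # patient
```

Blocking input in this way prevents the tug-of-war but can leave a patient's clicks silently ignored, so such a policy depends on the indicator being clear; the secondary overlay cursor avoids the conflict altogether because it never takes control.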

The evaluation has some obvious limitations. Only ten patients were evaluated; however, as a proof of concept, even this small number is adequate to demonstrate the functionality of the architecture. In addition, subjects participated in only a single training session and there was no follow-up competency testing; it is important to know whether they retained their skills over time. The subjects in this study were significantly less computer literate than subjects in many other computer training and usage studies of older adults [52]; we therefore believe the results are likely to be generalizable to the typical elderly population. This architecture was designed for narrowband communication. However, broadband is increasingly common, and a wider channel would make a richer array of resources available. In a higher-bandwidth scenario, a web camera could be left running to allow the trainer to monitor the patient's actions, giving the trainer gaze awareness and a higher level of workspace awareness. With this capability, the experience from both the trainer's and the patient's perspective would likely be significantly different.

The REPETE architecture provided better common ground than telephone support through the addition of the shared workspace. However, there were some issues concerning workspace awareness [8]. In particular, REPETE lacks gaze awareness [53]: there were situations in which the trainer was not aware that the patient was looking in the wrong place or was waiting. Enabling the trainer to visually monitor the patient's actions through a web camera, as would be feasible on a broadband network, would provide gaze awareness and help address the lack of copresence in REPETE.

Issues with scrolling on touchscreens might be resolved by enlarging the scroll bar widgets. A wider shaft and larger scroll arrows would make it easier for the centroid of the finger's contact area to land within the required areas. However, this reduces the available screen real estate in an environment where screen space is already limited.

In addition to remote training, the REPETE architecture was used in a variety of remote technical support situations. REPETE was used both to update the ISP phone numbers on all of the deployed HTUs and to audit the devices, ensuring that patient information was programmed into each HTU correctly. These tasks would normally require an installer to travel to each patient's home to change or confirm the configuration of each HTU individually. Using REPETE, the technical support staff was able to update the ISP numbers and audit over 250 HTUs without leaving the office. These two technical support scenarios saved over $130,000 in technical support costs and reduced the time needed to complete these tasks.

In summary, this evaluation demonstrates the effectiveness of remote training using the REPETE architecture, which has been successfully demonstrated with a small number of elderly subjects. We believe the training method used in this study is generalizable to other distance learning situations. We also employed novel methods of analysis to provide an in-depth look into the communication process. As home telemedicine becomes ubiquitous, remote training and online assistance will become increasingly important, and the REPETE remote training approach may serve as an instrumental part of the solution.

Acknowledgements

This work is supported by National Library of Medicine Training Grant NO1-LM07079 and Centers for Medicare and Medicaid Services Cooperative Agreement 95-C-90998. Special thanks to Vinicio Nunez, Jenia Pevzner, and Martha Rodriquez for their help in recruiting and training patients.

Appendix

Comfort with computers

Scale of 1-5, 1 being strongly disagree and 5 strongly agree:

I feel comfortable with computers.

Computers make me nervous.

Computers are confusing.

I think working with computers would be enjoyable and stimulating.

Learning about computers is worthwhile.

Training Methodology Questions

Was the training too easy or too difficult or just right?

Was the training taught too fast or too slow or just right?

Was the training poorly organized or well organized?

Was the training boring or interesting?

Did the training help you learn?

It was easy to hear the instructor.

It was easy to see what happened on the screen.


References

- [1] Starren J, Hripcsak G, Sengupta S, Abbruscato CR, Knudson PE, Weinstock RS, Shea S. Columbia University's Informatics for Diabetes Education and Telemedicine (IDEATel) project: technical implementation. J Am Med Inform Assoc. 2002;9:25–36. doi: 10.1136/jamia.2002.0090025.

- [2] Shea S, Starren J, Weinstock RS, Knudson PE, Teresi J, Holmes D, Palmas W, Field L, Goland R, Tuck C, Hripcsak G, Capps L, Liss D. Columbia University's Informatics for Diabetes Education and Telemedicine (IDEATel) Project: rationale and design. J Am Med Inform Assoc. 2002;9:49–62. doi: 10.1136/jamia.2002.0090049.

- [3] Starren J, Tsai C, Bakken S. The role of nurses in installing telehealth technology in the home. Comput Inform Nurs. 2005;23:181–189. doi: 10.1097/00024665-200507000-00004.

- [4] Kaufman DR, Patel VL, Hilliman C, Morin PC, Pevzner J, Weinstock RS, Goland R, Shea S, Starren J. Usability in the real world: assessing medical information technologies in patients' homes. J Biomed Inform. 2003;36:45–60. doi: 10.1016/s1532-0464(03)00056-x.

- [5] Fisk AD. Designing for older adults: principles and creative human factors approaches. CRC Press; Boca Raton: 2004.

- [6] Lai AM, Starren JB, Shea S, IDEATel Consortium. Architecture for remote training of home telemedicine patients. AMIA Annu Symp Proc. 2005:1015.

- [7] Lai AM, Kaufman DR, Starren J. Training Digital Divide Seniors to use a Telehealth System: A Remote Training Approach. AMIA Annu Symp Proc. 2006:459–463.

- [8] Gutwin C, Greenberg S. The Importance of Awareness for Team Cognition in Distributed Collaboration. In: Salas E, Fiore SM, editors. Team Cognition: Understanding the Factors that Drive Process and Performance. APA Press; Washington, DC: 2004. pp. 177–201.

- [9] Rahimpour M, Lovell NH, Celler BG, McCormick J. Patients' perceptions of a home telecare system. International Journal of Medical Informatics. 2008;77:486–498. doi: 10.1016/j.ijmedinf.2007.10.006.

- [10] Shea S, Weinstock RS, Starren J, Teresi J, Palmas W, Field L, Morin P, Goland R, Izquierdo RE, Wolff LT, Ashraf M, Hilliman C, Silver S, Meyer S, Holmes D, Petkova E, Capps L, Lantigua RA. A randomized trial comparing telemedicine case management with usual care in older, ethnically diverse, medically underserved patients with diabetes mellitus. J Am Med Inform Assoc. 2006;13:40–51. doi: 10.1197/jamia.M1917.

- [11] Fisk AD, Rogers WA, Charness N, Czaja SJ, Sharit J. Designing for older adults: principles and creative human factors approaches. CRC Press; Boca Raton: 2004.

- [12] Morrell RW, Echt KV. Designing Written Instructions for Older Adults: Learning to Use Computers. In: Fisk AD, Rogers WA, editors. Handbook of human factors and the older adult. Academic Press, Inc.; San Diego, CA: 1997. pp. 335–361.

- [13] Morrell RW. Older adults, health information, and the World Wide Web. Lawrence Erlbaum Associates; Mahwah, NJ: 2002.

- [14] Kurniawan S, Zaphiris P. Research-Derived Web Design Guidelines for Older People. Assets '05: Proceedings of the 7th international ACM SIGACCESS Conference on Computers and Accessibility; Baltimore, MD, USA: ACM Press; 2005. pp. 129–135.

- [15] Demiris G, Finkelstein SM, Speedie SS. Considerations for the Design of a Web-based Clinical Monitoring and Educational System for Elderly Patients. J Am Med Inform Assoc. 2001;8:468–472. doi: 10.1136/jamia.2001.0080468.

- [16] Oken BS, Kishiyama SS, Kaye JA. Age-related differences in visual search task performance: relative stability of parallel but not serial search. J Geriatr Psychiatry Neurol. 1994;7:163–8. doi: 10.1177/089198879400700307.

- [17] Petersen HE, Dugas DJ. The relative importance of contrast and motion in visual detection. Hum Factors. 1972;14:207–16. doi: 10.1177/001872087201400302.

- [18] Hillstrom AP, Yantis S. Visual motion and attentional capture. Perception & Psychophysics. 1994;55:399–411. doi: 10.3758/bf03205298.

- [19] McLeod P, Driver J, Crisp J. Visual search for a conjunction of movement and form is parallel. Nature. 1988;332:154–155. doi: 10.1038/332154a0.

- [20] Bartram L, Ware C, Calvert T. Moving Icons: Detection And Distraction. Proc. of the IFIP TC.13 International Conference on Human-Computer Interaction (INTERACT 2001); Tokyo, Japan. 2001.

- [21] Baecker RM. Readings in groupware and computer-supported cooperative work: assisting human-human collaboration. Morgan Kaufmann; San Mateo, CA: 1993.

- [22] Pratt W, Reddy MC, McDonald DW, Tarczy-Hornoch P, Gennari JH. Incorporating ideas from computer-supported cooperative work. J Biomed Inform. 2004;37:128–37. doi: 10.1016/j.jbi.2004.04.001.

- [23] Clark HH, Brennan SE. Grounding in Communication. In: Resnick LB, Levine JM, Teasley SD, editors. Perspectives on Socially Shared Cognition. American Psychological Association; Washington, DC: 1991. pp. 127–149.

- [24] Gutwin C, Greenberg S. A Descriptive Framework of Workspace Awareness for Real-Time Groupware. Computer Supported Cooperative Work (CSCW). 2002;11:411–446.

- [25] Gutwin C, Greenberg S. The Importance of Awareness for Team Cognition in Distributed Collaboration. In: Salas E, Fiore SM, editors. Team cognition: understanding the factors that drive process and performance. 1st ed. American Psychological Association; Washington, DC: 2004. pp. 177–201.

- [26] Wang H, Chee YS. Supporting Workspace Awareness in Distance Learning Environments: Issues and Experiences in the Development of a Collaborative Learning System. Proceedings of the Ninth International Conference on Computers in Education/SchoolNet 2001; Seoul, South Korea. 2001. pp. 1109–1116.

- [27] Berlage T. Augmented-reality communication for diagnostic tasks in cardiology. IEEE Trans Inf Technol Biomed. 1998;2:169–73. doi: 10.1109/4233.735781.

- [28] Hillstrom AP, Yantis S. Visual motion and attentional capture. Perception & Psychophysics. 1994;55:399–411. doi: 10.3758/bf03205298.

- [29] Bartram L, Ware C, Calvert T. Moving Icons: Detection And Distraction. Proc of the IFIP TC.13 International Conference on Human-Computer Interaction (INTERACT 2001); Tokyo, Japan. 2001.

- [30] Khan A, Matejka J, Fitzmaurice G, Kurtenbach G. Spotlight: directing users' attention on large displays. Proceedings of the SIGCHI conference on Human factors in computing systems; Portland, Oregon, USA: ACM; 2005. pp. 791–798.

- [31] Mayhorn CB, Stronge AJ, McLaughlin AC, Rogers WA. Older Adults, Computer Training, and the Systems Approach: A Formula for Success. Educational Gerontology. 2004;30:185–203.

- [32] Lai AM, Starren JB, Kaufman DR, Mendonça EA, Palmas W, Nieh J, Shea S. The REmote Patient Education in a Telemedicine Environment Architecture (REPETE). Telemedicine and e-Health. 2008;14. doi: 10.1089/tmj.2007.0066.

- [33] Kaufman DR, Pevzner J, Hilliman C. Redesigning a Telehealth Diabetes Management Program for a Digital Divide Seniors Population. Home Health Care Management & Practice. 2006;18:223–234.

- [34] Atkinson JM, Heritage J. Structures of social action: studies in conversation analysis. Cambridge University Press; New York: 1984.

- [35] Hutchby I, Wooffitt R. Conversation analysis: principles, practices, and applications. Polity Press; Cambridge, UK and Malden, MA: 1998.

- [36] Goodwin C. Conversational organization: interaction between speakers and hearers. Academic Press; New York: 1981.

- [37] Schegloff EA. On some gestures' relation to talk. In: Atkinson JM, Heritage J, editors. Structures of Social Action: Studies in Conversation Analysis. Cambridge University Press; New York, NY: 1984. pp. 266–296.

- [38] Jay GM, Willis SL. Influence of direct computer experience on older adults' attitudes toward computers. J Gerontol. 1992;47:P250–7. doi: 10.1093/geronj/47.4.p250.

- [39] Loyd BH, Loyd DE. The Reliability and Validity of an Instrument for the Assessment of Computer Attitudes. Educational and Psychological Measurement. 1985;45:903–908.

- [40] Brigden S, Petker C. Computers for Elder Learning. Province of British Columbia Ministry of Advanced Education and the Department of Human Resources Development Canada National Literacy Secretariat; Chilliwack, BC: 2002.

- [41] Wilcoxon F. Individual Comparisons by Ranking Methods. Biometrics. 1945;1:80–83.

- [42] Campbell RJ, Nolfi DA. Teaching Elderly Adults to Use the Internet to Access Health Care Information: Before-After Study. J Med Internet Res. 2005;7:e19. doi: 10.2196/jmir.7.2.e19.

- [43] Czaja SJ, Sharit J. Age Differences in Attitudes Toward Computers. J Gerontol B Psychol Sci Soc Sci. 1998;53B:P329–40. doi: 10.1093/geronb/53b.5.p329.

- [44] Dyck JL, Smither JA-A. Age Differences in Computer Anxiety: The Role of Computer Experience, Gender, and Education. J Educational Computing Research. 1994;10:239–248.

- [45] Fahy PJ, Ally M. Student Learning Style and Asynchronous Computer-Mediated Conferencing (CMC) Interaction. American Journal of Distance Education. 2005;19:5–22.

- [46] Morris LV, Wu S-S, Finnegan CL. Predicting Retention in Online General Education Courses. American Journal of Distance Education. 2005;19:23–36.

- [47] Austin Z, Dean MR. Impact of Facilitated Asynchronous Distance Education on Clinical Skills Development of International Pharmacy Graduates. American Journal of Distance Education. 2006;20:79–91.

- [48] Bickmore TW, Caruso L, Clough-Gorr K, Heeren T. 'It's just like you talk to a friend' relational agents for older adults. Interacting with Computers. 2005;17:711–735.

- [49] Rezailashkajani M, Roshandel D, Ansari S, Zali MR. A web-based patient education system and self-help group in Persian language for inflammatory bowel disease patients. International Journal of Medical Informatics. 2008;77:122–128. doi: 10.1016/j.ijmedinf.2006.12.001.

- [50] Dey A, Reid B, Godding R, Campbell A. Perceptions and behaviour of access of the Internet: A study of women attending a breast screening service in Sydney, Australia. International Journal of Medical Informatics. 2008;77:24–32. doi: 10.1016/j.ijmedinf.2006.12.002.

- [51] Lee M, Delaney C, Moorhead S. Building a personal health record from a nursing perspective. International Journal of Medical Informatics. 2007;76:S308–S316. doi: 10.1016/j.ijmedinf.2007.05.010.

- [52] Lee T-I, Yeh Y-T, Liu C-T, Chen P-L. Development and evaluation of a patient-oriented education system for diabetes management. International Journal of Medical Informatics. 2007;76:655–663. doi: 10.1016/j.ijmedinf.2006.05.030.

- [53] Ishii H, Kobayashi M. ClearBoard: a seamless medium for shared drawing and conversation with eye contact. CHI '92: Proceedings of the SIGCHI conference on human factors in computing systems; Monterey, CA. 1992.