Abstract

Objective

To develop a framework that public health practitioners could use to measure the value of public health services.

Data Sources

Primary data were collected from August 2006 through March 2007. We interviewed 46 respondents, including public health practitioners in four states, leaders of national public health organizations, and academic researchers.

Study Design

Using a semi-structured interview protocol, we conducted a series of qualitative interviews to define the component parts of value for public health services and identify methodologies used to measure value and data collected.

Data Collection/Extraction Methods

The primary form of analysis is descriptive, synthesizing information across respondents as to how they measure the value of their services.

Principal Findings

Our interviews did not reveal a consensus on how to measure value or a specific framework for doing so. Nonetheless, the interviews identified some potential strategies, such as cost accounting and performance-based contracting mechanisms. The interviews noted implementation barriers, including limits to staff capacity and data availability.

Conclusions

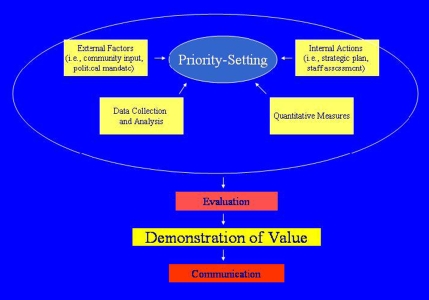

We developed a framework that considers four component elements to measure value: external factors that must be taken into account (e.g., mandates); key internal actions that a local health department must take (e.g., staff assessment); appropriate quantitative measures; and communication of value to elected officials and the public.

Keywords: Public health systems, measures of value, public health services

Public health practitioners understand that there is inherent value in maintaining governmental public health services (GPHS) to protect the population against the spread of disease. But the reality of continuing budgetary constraints facing GPHS suggests that neither politicians nor the public shares a similar perception. To change public attitudes, GPHS must demonstrate and communicate measurable contributions to the population's health and allocate resources to those activities likely to achieve maximum value. At the margin, choices need to be made regarding which services to preserve, which can be cut while minimizing harm to population health outcomes, and which need additional resources. This is especially true at a time when public health systems are expected to incorporate multiple mandates (both funded and unfunded), such as emergency preparedness.

In this article, we propose a framework that public health practitioners might use to measure the value of the various services local health departments (LHDs) offer. To develop the framework, we synthesize the results of case study interviews and the characteristics of five current strategies for measuring value that our respondents identify. After describing the study methods and the strategies, we set forth our proposed framework, its rationale, and limitations. We conclude with a discussion of the practice and policy implications.

METHODS

To understand how public health practitioners define and measure the value of public health services, we conducted a series of qualitative interviews with leaders of national public health organizations, state and local public health practitioners, academics, and elected officials (such as local boards of health). Using a semistructured interview protocol, we asked respondents to define the component parts of value for public health services and to identify what the metrics of value should be, what methodologies they use to measure value, and what data they collect. Altogether, we interviewed 46 respondents: 24 from LHDs; 7 from state health agencies; 8 representing national organizations; 4 academics; and 3 members of local boards of health. State and local respondents are located in four states (three in the Midwest and one on the West Coast) and include small rural health departments, larger urban ones, and midsized departments. Some departments are located in affluent areas; others are in poor areas.

To identify respondents, we used a snowball sampling strategy starting with contacts at national public health organizations and contacts developed from previous research projects. Everyone we contacted agreed to be interviewed. We promised confidentiality to all respondents. The lead author conducted all of the interviews and took detailed notes (the interviews were not audio or video recorded). Most of the interviews were in-person with one individual. Two interviews were small focus groups (5 and 10 respondents, respectively), three interviews were with 2 respondents, and one was via telephone.

We did not use a qualitative software program to conduct the analysis because we determined that we could adequately analyze the data through reading and re-reading iteratively the interview notes. To assist the analysis, a research assistant (RA) coded the interview notes, then the lead author reviewed the coding and suggested collapsing the categories. The RA recoded the interview notes, and the lead author again separately reviewed the interview notes to verify the coding. We analyzed the distribution of the content codes to identify patterns and relationships (i.e., common themes). The primary form of analysis is descriptive, synthesizing information across respondents as to how they approach the problem of measuring the value of their services.

RESULTS

We first asked respondents to characterize the components of value. The two most frequently mentioned components are prevention and public health's intangible core values (centered around notions of social justice). A third component is quality of services. According to our respondents, the important aspects of quality are performance standards, accreditation, and community assessment tools. A fourth component is communication. Although not a uniform response, many argue strongly that communication to the public and policy makers is an essential attribute of value. The final component is the importance of process.

We then asked respondents to describe potential models for measuring the value of public health services. As the context for the framework we are proposing, we examine the pros and cons of each approach elicited (Table 1).

Table 1.

Pros and Cons of Models

| Model(s) | Pros | Cons |
|---|---|---|
| Cost accounting | Deliberative and transparent process of decision making and fee setting; informs political process; realistic (recognizes constraints and trade-offs) | Subjective method (not scaled); time consuming; omits quality-of-care measurements; does not account for intangible values |
| Performance-based contracting | Deliberative, disciplined, and accountable process; enables flexible funding arrangements; less focused on monitoring budget allocations; captures intangible values in negotiation process | Requires strong and continuous political support and willingness to sanction failure; requires substantial investment in evaluation; likely to foster status quo |
| Logic models | Focuses on value and impact (expenditures linked to outcomes); provides context for data collection and analysis; evaluates specific program contributions to public health | Inadequately defines output or outcome measures; time consuming; requires substantial investment in evaluation |
| Performance standards and accreditation | Widely used in other fields and to define quality of care; indicates best practices of services delivered | Limited ability to define value of services; lacks evidentiary/research base |
| Quantifying outputs | Easier to measure; can be tied to quality indicators to estimate program value | Not reflective of outcomes; difficult to communicate to policy makers |

Cost Accounting

The most promising model combines cost-accounting methods, community assessment, and an internal consensus-building process to set priorities for allocating program resources (see Note 1). Since the 1980s, its developers have used the model to rank programs according to a priority score based on each program's cost, revenue sources, community input, and staff assessment. The goal is to allocate resources to those programs most highly valued through the process. Depending on its ranking, a program may see an increase or decrease in funding.

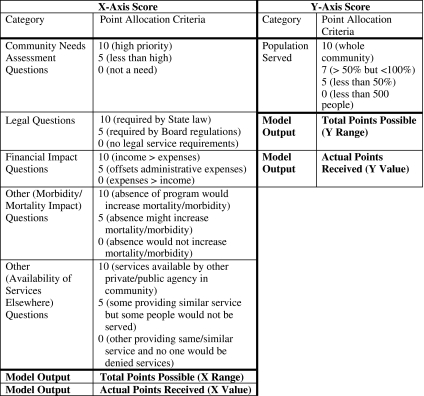

The model uses a point system to determine a program's public health importance (the X-axis in Figure 1). Staff award points for several categories, starting with the community needs assessment the LHD conducts every 5 years. Programs the community identifies as high priority receive more points than others with lower priority. Likewise, legally mandated services receive more points than discretionary programs. The third category is the estimated financial impact, determined through cost-accounting methods (i.e., income relative to expenses). Next, staff determine the extent to which a program's absence would increase morbidity and mortality. Finally, staff assess whether the service would be available elsewhere in the community for the same number of people. The points assigned are summed to provide the public health importance score.
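The summation described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the field names, the 0 to 5 scale, and the example ratings are hypothetical, since the interviews did not yield the point values the developers actually use.

```python
from dataclasses import dataclass


@dataclass
class ProgramRatings:
    """Staff-assigned points for one program (hypothetical 0-5 scale per category)."""
    community_priority: int  # priority given in the community needs assessment
    legal_mandate: int       # mandated services score higher than discretionary ones
    financial_impact: int    # from cost accounting (income relative to expenses)
    health_impact: int       # expected rise in morbidity/mortality without the program
    availability: int        # higher when no one else could serve the same number of people


def importance_score(ratings: ProgramRatings) -> int:
    """Sum the five category scores into a public health importance score."""
    return (
        ratings.community_priority
        + ratings.legal_mandate
        + ratings.financial_impact
        + ratings.health_impact
        + ratings.availability
    )


# Hypothetical ratings for an immunization program.
immunization = ProgramRatings(5, 5, 3, 5, 4)
print(importance_score(immunization))  # 22
```

An unweighted sum mirrors the description in the text; a department that values some categories more than others would need to add weights, which the model as described does not specify.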

Figure 1.

Cost-Accounting Model Calculation Method and Outputs

Source: Lake County Health Department (Ohio). See Note 1.

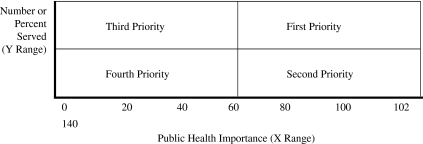

Each program is then assigned points according to the percentage of the population served (the Y-axis in Figure 1). The more people the program serves, the higher the score. Based on the total public health importance score relative to the population served, the program is placed into a priority quadrant (Figure 2). All services in the department are then ranked in priority order.

Figure 2.

Priority-Setting Schematic

Source: Joel Lucia and Jeff Campbell, Department of Health, Lake County, Ohio.
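One way to picture the quadrant placement and ranking step is the sketch below. The cutoffs, the quadrant labels, and the tie-breaking rule (importance first, then population served) are assumptions made for illustration; the schematic does not state how the developers combine the two scores when ranking.

```python
def priority_quadrant(importance: float, population_score: float,
                      importance_cutoff: float, population_cutoff: float) -> str:
    """Place a program in one of four quadrants using hypothetical cutoffs."""
    level = "high" if importance >= importance_cutoff else "low"
    reach = "high" if population_score >= population_cutoff else "low"
    return f"{level} importance, {reach} reach"


def rank_programs(programs: dict[str, tuple[float, float]]) -> list[str]:
    """Rank program names by (importance, population score), highest first.

    Assumption: importance is compared first and population served breaks ties.
    """
    return sorted(programs, key=lambda name: programs[name], reverse=True)


# Hypothetical scores: (public health importance, population-served points).
programs = {
    "Immunization": (22, 4),
    "Home visits": (14, 2),
    "Food inspection": (22, 5),
}

for name in rank_programs(programs):
    importance, reach = programs[name]
    print(name, "->", priority_quadrant(importance, reach, 15, 3))
```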

After the rankings are completed, each division director meets with the board of health to describe the ranking's rationale. This meeting is the process through which the LHD and elected officials determine how to allocate public health resources each year.

Several assumptions animate the approach as follows: (1) given budget constraints, public health services are not equal; (2) discontinuing an existing service is difficult; (3) once established, fees stay the same for years; and (4) decisions to begin new programs are usually driven by grants, rather than community need. The model's key advantages are, first, that the deliberative process allows for staff involvement in program decisions, as well as a defensible method for making decisions at the margin. Second, it informs the political process and provides transparency for political decisions and accountability. Third, it recognizes that difficult choices must be made.

At the same time, the model has several limitations. First, it does not measure quality of care. Second, it is very time consuming. Third, much of it is subjective (especially the values derived for public health importance). For instance, this approach does not specify the criteria for establishing priorities and lacks an adequate scaling mechanism to assess community input. Fourth, there is no accounting for public health's potential intangible values. In response, the developers argue that staff take into account both the tangible and intangible values during their discussions in valuing each program.

Performance-Based Contracting

In an alternative approach, Wisconsin is experimenting with performance-based contracts through which state and local public health departments negotiate contracts for the state to buy products and services from LHDs (Chapin and Fetter 2002). The state and LHDs negotiate exactly what the LHD will provide for the state's investment and what outcomes will result. Respondents characterize the model as a quasi-market process that “moves away from the entitlement or social goods mentality” toward a social exchange based on value, in essence a social willingness to pay. Through the negotiations, an LHD sets priorities for which services it values most. Each LHD will negotiate to provide different levels and types of services reflecting local needs. If the LHD can provide the expected results for less money, it keeps the difference. If the LHD does not meet its expected performance targets, it must reimburse the state for a portion of the money.
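The two settlement rules at the end of this description can be expressed as a small function. This is a minimal sketch, not the Wisconsin contract terms: the function name, the 25 percent reimbursement share, and the dollar figures are hypothetical, because the source says only that "a portion" must be repaid.

```python
def settle_contract(payment: float, actual_cost: float, targets_met: bool,
                    reimbursement_share: float = 0.25) -> dict[str, float]:
    """Return what the LHD keeps as savings and what it owes back to the state.

    reimbursement_share is a hypothetical stand-in for the negotiated
    "portion of the money" repaid when targets are missed.
    """
    if not 0.0 <= reimbursement_share <= 1.0:
        raise ValueError("reimbursement_share must be between 0 and 1")
    if targets_met:
        # Results delivered: any amount not spent stays with the LHD.
        savings = max(payment - actual_cost, 0.0)
        return {"retained_by_lhd": savings, "owed_to_state": 0.0}
    # Targets missed: part of the payment goes back to the state.
    return {"retained_by_lhd": 0.0, "owed_to_state": payment * reimbursement_share}


print(settle_contract(100_000.0, 90_000.0, targets_met=True))
print(settle_contract(100_000.0, 90_000.0, targets_met=False))
```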

A major advantage of this model is the discipline and accountability it forces in creating an explicit priority-setting process with measurable goals, and the flexibility it provides to LHDs to explore cross-program synergies. LHDs are responsible for meeting goals; how they deliver the product is at their discretion. Respondents argue that the model facilitates difficult choices because of the flexibility and the opportunity to pool funding with other LHDs (e.g., environmental health across several rural LHDs). Another advantage is freeing LHDs from categorical funding silos.

As with the cost-accounting model, the intangibles are captured in the negotiation process. If an LHD wants to factor in core public health values such as social justice, it is free to do so, as long as it meets the objectives of the state funding. Still, the state buys a specific activity or product, not the social values per se that may be incorporated into the product.

Regardless of its potential advantages, implementing the Wisconsin approach has been difficult. For this model to work, respondents note, it requires strong and continuous political support and a willingness to sanction failure to meet the contractual productivity goals. A second problem is that it requires a substantial investment in evaluation. Whether the model works can only be determined after several years of data collection and analysis. Third, according to state-level respondents, LHDs resist changing the status quo. LHDs fear that because so many aspects of meeting productivity goals (such as increased vaccination rates) are beyond their control, they could lose funding in the next round.

Logic Models

Several LHDs in our sample use logic models to evaluate programs through specific performance indicators. (Logic models are defined as systematic and visual displays of the sequence of actions that describe what a program is and will do, what the outcomes are, and how the program will be evaluated. A logic model links investments to results and will typically display inputs, activities, outputs, outcomes, and impact.)2 The stated purpose is to connect themes of performance management, continuous quality improvement (CQI), and strategic planning. Doing so promises to improve the quality of services provided, which, in turn, is a measure of value.

Proponents of this approach suggest that using logic models is a way to assure value because the exercise links expenditures to outcomes. Logic models have the added attraction of providing context for the data to be collected and analyzed and allowing elected officials to follow the process to results. The logic model is a useful tool for identifying existing data, gaps in data, and developing the database needed to demonstrate value. An integral aspect of the logic model approach is an evaluation plan to show how much each program contributes to population health (i.e., through reductions in morbidity and mortality). A rigorous evaluation process using the logic model helps articulate why money should be invested in “x” or “y” program.
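The five elements a logic model typically displays lend themselves to a simple record structure, and a check for empty stages is one way to surface the data gaps mentioned above. The sketch below is illustrative only; the class, its method, and the home visiting example are hypothetical and do not come from any respondent's logic model.

```python
from dataclasses import dataclass, field


@dataclass
class LogicModel:
    """A program's logic model: inputs -> activities -> outputs -> outcomes -> impact."""
    program: str
    inputs: list[str] = field(default_factory=list)
    activities: list[str] = field(default_factory=list)
    outputs: list[str] = field(default_factory=list)
    outcomes: list[str] = field(default_factory=list)
    impact: list[str] = field(default_factory=list)

    def missing_stages(self) -> list[str]:
        """List the stages with no entries, i.e., where measures or data are lacking."""
        stages = ["inputs", "activities", "outputs", "outcomes", "impact"]
        return [stage for stage in stages if not getattr(self, stage)]


# Hypothetical program that tracks outputs but has no outcome or impact measures.
home_visiting = LogicModel(
    program="Home visiting",
    inputs=["nurse staff time"],
    activities=["home visits to new mothers"],
    outputs=["number of visits completed"],
)
print(home_visiting.missing_stages())  # ['outcomes', 'impact']
```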

Performance Standards/Accreditation

The academic and national respondents in our sample are the primary proponents of using performance standards and accreditation (which are widely used in other fields) to measure value. Proponents assert that evidence-based performance standards indicate best practices that will help improve quality and efficiency, and therefore enhance value. By improving workforce competency and efficient delivery mechanisms, this approach will improve quality and enhance the system's credibility (and accountability) with policy makers. At this point, however, performance standards do not provide an evidentiary base showing quality improvements or other outcome measures. The absence of a research base linking inputs to outputs is an impediment to using performance standards to measure value.

Quantifying Outputs

Our interviews suggest that numbers of services provided and people served are necessary but not sufficient as an approach to measuring value. For instance, vaccination rates and reductions in infant mortality are important indicators of program productivity, yet the number of home health visits has little meaning in and of itself. A typical LHD response is that “numbers are important as outputs, or measures of productivity—not as outcomes.” No respondent equated numbers with outcomes. An additional concern of quantifying outputs is that they are hard to communicate effectively to policy makers. “Burying people with numbers loses the human drama. People don't receive information that way. [We] need to communicate the impact of public health on people—how it affected someone's life.”

Developing the Framework

When we assess the systematic models in conjunction with respondents' views, the cost-accounting approach, even with its limitations, appears to be the model that best incorporates the most important component parts of value. The model also benefits from the experience gained in being used to make programmatic choices, indicating that it is feasible. Therefore, we use the cost-accounting approach as our point of departure, although the framework borrows elements from the other models described above.

In setting forth the framework, we have erred on the side of comprehensiveness. We recognize that the framework will need to be streamlined based on feedback from practitioners. At this stage, we consider the framework to be an aid or guide for LHDs to assess the value of their services; it will not provide a single answer regarding value.

Our interviews suggest various categories that should be included in the framework. Among the features are whether the service is mandated; is available elsewhere; would increase morbidity/mortality if not provided; is financially viable; is effective; serves a critical mass of people; and reflects core public health values. Based on the interviews, several other facets of public health must also be captured, such as quality of care, intangible values (e.g., social justice), and investments in prevention.

An important aspect of the framework is to develop a process for defining value through priority setting to determine how the community and public health practitioners assess the importance of specific services. The process is important for gaining consensus among the staff.

Very few respondents include an evaluation component to determine whether public health services are achieving their stated goals or whether the services could be provided in a more cost-effective way. Resource limitations, along with a general lack of staff expertise, make it difficult for LHDs to invest in program evaluation. Nonetheless, implementing rigorous, periodic program evaluations is a key component of the framework.

The Proposed Framework

Our proposed framework (Diagram 1) considers four component elements to determine program priorities. First, what are the external factors that must be taken into account? Second, what are the key internal actions that an LHD must take? Third, what are the appropriate quantitative measures to assess value? Fourth, how can value be practically measured and communicated to elected officials and to the public?

Diagram 1.

Proposed Framework

External Factors

LHDs do not operate in a vacuum. Each LHD has an array of external stakeholders, constituents, and responsibilities that must be factored into programmatic decisions. The framework considers four external factors.

Community Needs Assessment

The first factor involves developing a process for assessing community needs, identifying gaps in services the community wants, and engaging the community in deciding what services to provide. Although there are countless ways of obtaining community input, our respondents mention surveys (mail or web-based), focus groups, and stakeholder priority-setting sessions as their primary methods.

Mandates

Determining which services are legally mandated is the second factor. Along with federal mandates, each state and even each county may mandate that an LHD provide specific services. Beyond the legal mandates, elected officials have a different set of metrics regarding programs to adopt or support. As our interviews disclose, some services are not supportable in certain areas, while some programs are retained regardless of any objective metrics of the service's value or need.

Revenue Sources

Third, the cost-accounting framework examines extensively each program's revenue sources. Those sources include general revenue, grants, contracts, local taxes, and fees. The purpose is to compare revenue with cost (and its impact on the community) to assess the program's viability.

Private Sector Alternatives

The fourth factor is to determine alternative program delivery options. In an era of private sector dominance, LHDs often look to the private sector to avoid duplication of services and, more importantly, to assess whether the private sector would provide services no longer feasible or cost-effective for LHDs to offer. The difficulty in this strategy is determining the long-term impact to the population being served and to the community if services are shifted to the private sector, how the private sector provider would be monitored, and how much money would be saved if shifted to the private sector.

Internal Factors

Not only do most LHDs operate in isolation from one another, they are organized and operate differently internally, even relative to others in the same state. The problems LHDs face, such as organizational structure, staff capacity, and available resources, vary substantially across LHDs. As a result, each LHD faces different incentives (and limits) in weighing these factors based on the organization's distinct cultures and options. The framework considers four internal factors.

Strategic Plan

Several respondents (though not a majority) mention the importance of developing and implementing a strategic plan as part of the process of measuring value. Although potentially a useful tool, a strategic plan is not a necessary component of deriving measures of value.

Staff Assessment

Both as a process mechanism and for the substantive determination of a program's value, involving all staff in program assessment is central to implementing the framework. The framework incorporates the criteria used in the cost-accounting approach for rating and then ranking each program. These criteria help identify the core public health programs unique to population health that the LHD provides; the impact on vulnerable populations from the failure to maintain current spending levels; and potential increases in health care disparities. Using the criteria facilitates comparisons across programs that serve different constituencies and provide differing types of services. Ranking the programs then informs policy makers about which programs to retain, cut, or eliminate.

Quality of Services

In our interviews, the quality of a service emerged as an important component of value. While the framework does not explicitly address quality of services, using the framework will isolate factors contributing to overall quality. For instance, the framework helps determine whether an LHD is the most efficient and cost-effective service provider, on what basis (tangible or intangible) each service contributes to improving the public's health, whether services are based on the best available scientific evidence, and the data supporting outcomes analyses.

Our interview results support the use of performance standards and the accreditation process as mechanisms for examining quality of care. Logic models may be an effective way of implementing performance standards. We are cognizant that performance standards are at a nascent stage in public health and may be of limited utility right now.

Data Collection and Analysis

Our interviews suggest that LHDs have generally not developed data collection and analysis strategies. A shortcoming many of our respondents note with regard to measuring value is the paucity of outcomes measures and data. Without adequate outcomes data, it will be difficult to assess the value of any given service or program. As others have noted, LHDs lack sophisticated information technology infrastructure and data collection systems, limiting their ability to measure program outcomes. Unfortunately, our interviews were not designed to develop either a methodology or a specific set of questions to ascertain outcomes. Nevertheless, a core element of our framework is collecting and analyzing data to identify program outcomes, specify the data needed to assess outcomes, and develop appropriate data collection and analysis strategies. Otherwise, it will be difficult to set program priorities.

Methodologies

The third feature of the framework is to select the quantitative methodologies for measuring value. Our review of the economic evaluation literature does not provide a single, obvious choice of an applicable methodology, but cost-utility analysis (CUA) has emerged as a favored analytic technique for economic evaluation in health care (Neumann, Jacobson, and Palmer 2008). CUA presents the impact of services or programs in terms of incremental costs per incremental quality-adjusted life years or QALYs. CUA thus incorporates the impact in terms of the prolongation and quality of life, two crucial aspects of showing value for public health services.
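Written as a formula, the ratio that CUA reports is the incremental cost-effectiveness ratio. The notation below is a conventional rendering rather than one taken from our interviews: $C_1$ and $Q_1$ denote the cost and QALYs with the program, and $C_0$ and $Q_0$ those of the comparator (for example, no program).

$$
\text{ICER} = \frac{C_1 - C_0}{Q_1 - Q_0}
$$

As a purely hypothetical illustration, a program that costs \$50,000 more than the comparator and yields 2.5 additional QALYs has a ratio of \$50,000 / 2.5 = \$20,000 per QALY gained.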

In Neumann, Jacobson, and Palmer (2008), we discuss a broader range of methodologies, such as cost-effectiveness, cost-benefit, and cost-utility analyses, that LHDs should also consider using. We conclude that QALYs offer two advantages over other metrics: they capture in a single measure gains from both reduced morbidity and reduced mortality, and they incorporate the value or preferences people have for different outcomes. CUAs have their own limitations, as we also note, but they provide a means for comparing diverse programs in a consistent and defensible fashion. Until a consensus approach emerges, a variety of methodological strategies will be compatible with the framework.

Communication

The final element of our framework is communicating value to policy makers and the public. Our interviews suggest that LHDs may need to communicate with and engage each of these audiences differently, although respondents differed about the most effective strategies. Demonstrating tangible value from investments in public health will resonate with policy makers and the community and enhance the presentation of individual stories.

DISCUSSION

A key premise of our research is the idea that public health practitioners lack effective mechanisms for measuring the value of the services they provide. As a result, public health is inevitably short-changed in battles over resource allocation decisions for public services. Without the ability to measure and communicate that investments in public health services add value to population health, LHDs will continue to have difficulty competing for scarce governmental resources. The framework we propose is one step toward a more robust public health system.

We used the following criteria to develop a framework that is both conceptually rigorous and useful to practitioners. Whether these criteria are inherently incompatible and whether any one framework can address all of the dimensions simultaneously remains to be tested.

First, the framework should represent a general measure of overall value of the public health system's goods and services. Second, the framework should operate to enable practitioners to make tradeoffs at the margins between desirable services. In an era of constrained resources, LHDs may need to choose which programs to retain, which to eliminate, or which to cut. Third, the framework should distinguish between the value of public health as a system and the value of specific services. Fourth, the framework should incorporate both tangible and intangible measures of value. Fifth, the framework should be a vehicle for communicating the value of public health services to the community and its elected representatives. Sixth, the framework must be feasible. Seventh, the framework should function as a mechanism to hold practitioners accountable for public health activities.

Finally, the framework should guide the data collection and analysis processes. Key to successfully using the framework is developing the evidentiary base. As our interviews suggest, scientific effectiveness (i.e., evidence-based public health) should guide program decisions. A framework can help LHDs decide which data to collect and how the data should be analyzed, along with identifying gaps in the data and how those gaps can be filled. Implementing the framework can identify lost opportunities (and related opportunity costs) resulting from investments in public health services that do not provide value to the public's health.

The primary policy implication of our study is that the demand to demonstrate value through quantitative measures is likely to increase. Like it or not, public health practitioners will be forced to make difficult tradeoffs among desirable programs and populations. Budget reductions mean that not all services can be offered and that wrenching choices are inevitable. No framework can substitute for human judgment and experience in deciding which programs to cut or retain. But an operational framework can be effective for evaluating all programs under similar rules, providing a sound analytical rationale for decision making, and articulating the decisions to policy makers and the public.

As academics, we face the conundrum of trying to develop elegant solutions that will satisfy peer reviewers without ignoring realities that practitioners face in implementing proposed solutions. This project is a good example. The framework must be practicable, yet robust enough to achieve results that would otherwise be unavailable. Thus, the proposed framework needs to be tested empirically to determine its practicability and robustness.

Implementing the Framework

Aside from overall feasibility, the key challenges for implementing the framework are obtaining data, standardizing metrics, incorporating both tangible and intangible measures of value, and convincing skeptical elected officials to accept the resulting measures of value. Without developing better outcomes measures, along with improved data collection and analysis, there is no easy way to define and measure value. As our interviews indicate, currently available data for measuring value may be inadequate and LHDs often lack the staff capacity to implement a framework. Even if LHDs have staff capacity, the data needed to assess value may not be available or easily collected. Apart, perhaps, from the resources available to conduct the analyses, nothing in our results suggests that a health department's size or location would change the nature of the framework or how it might be operationalized.

Our respondents identify staff capacity issues as a major impediment to measuring value. Workforce concerns have generally been at the forefront of discussions regarding the delivery of public health services. Indeed, many respondents say they lack staff capability to conduct even rudimentary cost-benefit or cost-effectiveness analyses (CBAs/CEAs). Several suggest the need for academic partnerships to provide the analytical capability to conduct these analyses. We support such collaborations for providing added analytical capacity.

Potential Limitations

Underlying the development of the framework is an assumption that successful implementation will benefit LHDs. But a case can be made that using the framework will actually harm vulnerable populations. For example, it could leave LHDs worse off than before if health officers recommend an area to cut while other agencies abjure similar actions. It could result in cutting programs that would exacerbate existing inequities in service delivery. For particularly hard-to-reach populations, measures of value may expose them to fewer services. Another concern is that the framework essentially replaces the core values animating public health with what amounts to a market-based approach. If so, the entire exercise can be counterproductive.

Two other limitations should be mentioned. First, additional interviews might have identified other models. Second, a weakness of qualitative research is its lack of generalizability. Even so, our results have generated a testable framework for subsequent analysis.

Potential Benefits

At the same time, there are significant potential benefits from adopting this or a similar framework. As a start, the framework provides a mechanism for LHDs to identify and support programs that improve public health and eliminate those that are not producing a commensurate benefit. The framework could also be used to involve elected officials and the community in the process. Involving these groups directly in the process can raise public health's profile and mobilize public support for investing in population health.

Most importantly, our interviews suggest that the status quo is not sustainable. Our framework is hardly a panacea, and the implementation barriers are daunting. Even so, the barriers are not insurmountable, and the framework responds to the perceived need to develop new strategies that will enhance population health. Using the framework creates some risk, but so does relying on current approaches.

Acknowledgments

Joint Acknowledgment/Disclosure Statement: This research project was funded by the Robert Wood Johnson Foundation under the Changes in Health Care Financing and Organization Program (HCFO) (grant ID #56782). We are grateful to the Foundation and Academy Health for their funding and support. We particularly want to thank Bonnie Austin, J.D., Sharon Arnold, Ph.D., Debra Perez, Ph.D., and Kate Papa, M.P.H., for their insightful comments and support throughout the research. We received significant research assistance from Jennifer A. Palmer, M.S., Margaret Parker, M.H.S.A., and Sejica Kim, M.H.S.A. (who helped prepare Table 1 and Figures 1 and 2).

Disclosures: None.

Disclaimers: None.

NOTES

1. The developers, Joel Lucia and Jeff Campbell of the Department of Health, Lake County, OH, have waived confidentiality so that we can attribute due credit to them.

Supporting Information

Additional supporting information may be found in the online version of this article:

Appendix SA1: Author Matrix.

Please note: Wiley-Blackwell is not responsible for the content or functionality of any supporting materials supplied by the authors. Any queries (other than missing material) should be directed to the corresponding author for the article.

REFERENCES

- Chapin J, Fetter B. Performance-based Contracting in Wisconsin Public Health: Transforming State-Local Relations. The Milbank Quarterly. 2002;80:97–124. doi: 10.1111/1468-0009.00004.

- Neumann PJ, Jacobson PD, Palmer JA. Measuring the Value of Public Health Systems: The Disconnect between Health Economists and Public Health Practitioners. American Journal of Public Health. 2008;98:2173–80. doi: 10.2105/AJPH.2007.127134.
