Abstract

We consider asymptotic properties of the maximum likelihood and related estimators in a clustered logistic joinpoint model with an unknown joinpoint. Sufficient conditions are given for the consistency of confidence bounds produced by the parametric bootstrap; one of the conditions required is that the true location of the joinpoint is not at one of the observation times. A simulation study is presented to illustrate the lack of consistency of the bootstrap confidence bounds when the joinpoint is an observation time. A removal algorithm is presented which corrects this problem, but at the price of an increased mean square error. Finally, the methods are applied to data on yearly cancer mortality in the United States for individuals age 65 and over.

Keywords: logistic joinpoint regression, confidence estimation, parametric bootstrap, maximum likelihood, mortality trends

1 Introduction

There are a wide variety of statistical methods for analyzing nonlinear models. If one is interested only in summarizing the trends in the data and in obtaining a good flexible non-linear fit, then one may take advantage of many types of spline models existing in the literature (see [10, 11, 13, 14, 20, 21, 23, 36, 42] and the references therein). On the other hand, if one’s interest lies mainly in estimating and making inferences on the location of structural changes in the underlying model, the model frequently considered is that of a segmented regression in which the knots (also referred to as joinpoints) are unknown (see, for instance, [15, 16, 25, 26, 27, 29, 31, 37]).

Segmented regression models are popular, for instance, as tools for modeling general disease trends, and were originally introduced in the context of epidemiological studies of occupational exposures for modeling threshold limit values in logistic regression models with a single joinpoint (see [18, 41]). Subsequently, various multiple joinpoint algorithms have also been applied to disease trend models. For instance, Kim et al. [28] suggested a sequential backward selection algorithm for testing the number of joinpoints in a model that uses the least-squares criterion under squared-error loss and a goodness-of-fit measure based on the F-statistic, and applied the algorithm to model U.S. yearly cancer rates. The algorithm has been implemented in the free software Joinpoint, version 3.3 (see http://srab.cancer.gov/joinpoint/), which facilitates fitting and testing the model for Gaussian and Poisson regressions. Czajkowski et al. [7] compared the above algorithm with a forward selection algorithm in the logistic joinpoint regression setting with multiple joinpoints, where model parameters were estimated by maximum likelihood, and applied the methods to model longitudinal data on cancer mortality in a cohort of chemical workers. An R package ljr [6], available at http://www.R-project.org [34], has been developed implementing both the backward and forward algorithms for the logistic joinpoint model. Many alternative approaches for selecting the number of change points based on information theory have also been considered in a variety of contexts (see [8, 30, 32, 40, 43] and the references therein).

When working with statistics having complicated distributions such as those encountered in segmented regression models, the bootstrap and parametric bootstrap are common tools used for confidence estimation (see, for instance, [4, 9, 12, 22]). In many cases, the bootstrap is effective, but there are also cases where the bootstrap is not consistent when parameters are on the boundary of the parameter space. Several examples are discussed in [1]. In this paper, we are interested in consistency of parametric bootstrap confidence bounds in the context of the logistic joinpoint regression. In particular, we describe herein a situation in which the consistency of the bootstrap confidence bounds fails, in the sense that they are not asymptotically correct. We refer to Section 7.4 of [39] for a general discussion of the bootstrap confidence bounds. The material in this paper discusses the behavior of the bootstrap for a segmented regression model; however, see [24] for a detailed discussion of the asymptotic behavior of the bootstrap for detecting changes in a multiphase linear regression model which differs from ours in that it does not impose continuity constraints.

The paper is organized as follows. In Section 2, we introduce a clustered logistic joinpoint model and discuss maximum likelihood estimation of its parameters. The consistency and asymptotic normality of the maximum likelihood and related estimators are discussed in Section 3. Section 4 gives sufficient conditions for the consistency of the parametric bootstrap, illustrates via simulation a situation when the parametric bootstrap is not consistent, and suggests a removal algorithm to restore consistency for the simulated example. Finally, in Section 5, the methods proposed are applied to data on yearly cancer mortality in the United States for individuals age 65 and over.

2 Clustered Logistic Joinpoint Model

Suppose that Y1,…, YN are independent Binomial random variables such that Yi is the sum of mi independent Bernoulli random variables each with probability of success p0i. Denote the realizations of these random variables as y1,…, yN, respectively, let y = [y1,…, yN]Τ, and let . Furthermore, let p0i have the functional form

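$$p_{0i} = \frac{\exp(\eta_{0i})}{1 + \exp(\eta_{0i})} \tag{1}$$

where

$$\eta_{0i} = \alpha_0 + \beta_0 t_i + \delta_0 (t_i - \tau_0)_+ + \gamma_0^{\mathsf T} x_i \tag{2}$$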

and t+ = max{t, 0}. Here x1,…, xN are fixed q-dimensional covariates, and the ‘times’ t1 ≤ … ≤ tN are ordered covariates. Also, α0 is the unknown intercept, τ0 is the unknown joinpoint, β0 is the unknown slope coefficient for ti before τ0, δ0 ≠ 0 is the change in the slope coefficient after τ0, and γ0 is the unknown q-dimensional vector of coefficients for the fixed covariates.
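As a small illustration of the model just described, the following Python sketch evaluates the success probability for a given time and parameter vector. The function name `joinpoint_prob` and its argument order are hypothetical and chosen only for this example; the parameter values are those of the simulation design in Section 4.2 (no extra covariates, α0 = β0 = 0, δ0 = 0.2, τ0 = 4).

```python
import math

def joinpoint_prob(t, tau, alpha, beta, delta, gamma=(), x=()):
    """Success probability under a logistic joinpoint model.

    eta = alpha + beta*t + delta*max(t - tau, 0) + gamma'x
    p   = 1 / (1 + exp(-eta))
    """
    eta = alpha + beta * t + delta * max(t - tau, 0.0)
    eta += sum(g * xk for g, xk in zip(gamma, x))
    return 1.0 / (1.0 + math.exp(-eta))

# Observation times 1..7 with a joinpoint at 4: the probability is flat
# at 0.5 up to the joinpoint and increases afterwards.
times = [1, 2, 3, 4, 5, 6, 7]
probs = [joinpoint_prob(t, tau=4.0, alpha=0.0, beta=0.0, delta=0.2) for t in times]
for t, p in zip(times, probs):
    print(t, round(p, 4))
```

The positive-part term is what makes the linear predictor continuous but not differentiable in τ at an observation time, which is the source of the difficulties studied in the rest of the paper.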

2.1 Maximum Likelihood Estimation

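In this section, the maximum likelihood estimate of θ0 is derived. The log-likelihood function for the sample y1,…, yN is given by

$$l(\theta) = \sum_{i=1}^{N}\left\{ y_i\,\eta_i(\theta) - m_i \log\left(1 + e^{\eta_i(\theta)}\right) + \log\binom{m_i}{y_i}\right\}$$

where θ = [α, β, τ, δ, γΤ]Τ and

$$\eta_i(\theta) = \alpha + \beta t_i + \delta (t_i - \tau)_+ + \gamma^{\mathsf T} x_i.$$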

It is useful to consider the jth super log-likelihood function

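$$\begin{aligned} l_j(\theta) ={} & \sum_{i=1}^{j}\left\{ y_i\left(\alpha + \beta t_i + \gamma^{\mathsf T} x_i\right) - m_i \log\left(1 + e^{\alpha + \beta t_i + \gamma^{\mathsf T} x_i}\right)\right\} \\ & + \sum_{i=j+1}^{N}\left\{ y_i\left(\alpha + \beta t_i + \delta (t_i - \tau) + \gamma^{\mathsf T} x_i\right) - m_i \log\left(1 + e^{\alpha + \beta t_i + \delta (t_i - \tau) + \gamma^{\mathsf T} x_i}\right)\right\} + \sum_{i=1}^{N}\log\binom{m_i}{y_i} \end{aligned} \tag{3}$$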

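for j = 1,…,N − 1. Note that

$$l(\theta) = l_j(\theta) \quad \text{whenever } \tau \in [t_j, t_{j+1}],$$

and lj is infinitely differentiable with respect to θ, but l is not differentiable at τ = ti for i = 1,…,N if δ ≠ 0. Letting ϕ(θ) = [α, β, α − δτ, β + δ, γΤ]Τ, we can express (3) as

$$l_j(\theta) = \sum_{i=1}^{N}\left\{ y_i\, z_i^{(j)\mathsf T}\phi(\theta) - m_i \log\left(1 + e^{z_i^{(j)\mathsf T}\phi(\theta)}\right) + \log\binom{m_i}{y_i}\right\}$$

where $z_i^{(j)} = [1, t_i, 0, 0, x_i^{\mathsf T}]^{\mathsf T}$ for i ≤ j and $z_i^{(j)} = [0, 0, 1, t_i, x_i^{\mathsf T}]^{\mathsf T}$ for i > j.

This is a logistic regression model with the design matrix $Z^{(j)} = [z_1^{(j)},\ldots,z_N^{(j)}]^{\mathsf T}$ and the canonical parameter vector ϕ(θ).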
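The reparametrization can be checked numerically. The sketch below is illustrative only: it assumes the rows of the jth design matrix take the form [1, ti, 0, 0, xiΤ] for i ≤ j and [0, 0, 1, ti, xiΤ] for i > j, which is one layout consistent with ϕ(θ) as defined above, and the helper names `phi`, `design_row`, and `linear_predictor` are hypothetical. It confirms that the row times ϕ(θ) reproduces the joinpoint linear predictor when τ lies in (tj, tj+1).

```python
def phi(alpha, beta, tau, delta, gamma=()):
    """Canonical parameters [alpha, beta, alpha - delta*tau, beta + delta, gamma]."""
    return [alpha, beta, alpha - delta * tau, beta + delta, *gamma]

def design_row(i, j, t_i, x_i=()):
    """Row for observation i (1-based) of the jth design matrix (assumed layout)."""
    if i <= j:
        return [1.0, t_i, 0.0, 0.0, *x_i]   # segment before the joinpoint
    return [0.0, 0.0, 1.0, t_i, *x_i]       # segment after the joinpoint

def linear_predictor(row, phi_vec):
    return sum(r * p for r, p in zip(row, phi_vec))

# Hypothetical parameter values; tau lies in (t_4, t_5), so j = 4.
times = [1, 2, 3, 4, 5, 6, 7]
alpha, beta, tau, delta = 0.3, -0.1, 4.5, 0.2
j = 4
max_gap = 0.0
for i, t_i in enumerate(times, start=1):
    direct = alpha + beta * t_i + delta * max(t_i - tau, 0.0)
    via_phi = linear_predictor(design_row(i, j, t_i), phi(alpha, beta, tau, delta))
    max_gap = max(max_gap, abs(direct - via_phi))
print(max_gap)
```

Because the positive part disappears once the interval containing τ is fixed, each lj is an ordinary logistic regression log-likelihood in ϕ, which is why standard fitting routines can be reused.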

The function lj is an infinitely differentiable function with respect to ϕ. Differentiating lj with respect to ϕ, we have

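$$\frac{\partial l_j}{\partial \phi} = Z^{(j)\mathsf T}\left(y - \mu^{(j)}(\theta)\right) \tag{4}$$

where $\mu^{(j)}(\theta) = [m_1 p_1^{(j)}(\theta),\ldots,m_N p_N^{(j)}(\theta)]^{\mathsf T}$ with $p_i^{(j)}(\theta) = \exp\{z_i^{(j)\mathsf T}\phi(\theta)\}/[1 + \exp\{z_i^{(j)\mathsf T}\phi(\theta)\}]$ and $z_i^{(j)\mathsf T}$ denoting the ith row of $Z^{(j)}$. Setting (4) to zero, dividing by n, and simplifying, we obtain

$$\sum_{i=1}^{N} u_i\left(\frac{y_i}{m_i} - p_i^{(j)}(\theta)\right) z_i^{(j)} = 0 \tag{5}$$

where ui = mi/n. If the matrix $Z^{(j)}$ is of full rank, then the solution to (5) is the unique maximizer of lj since

$$-\frac{1}{n}\,\frac{\partial^2 l_j}{\partial \phi\,\partial \phi^{\mathsf T}} = Z^{(j)\mathsf T}\, U\, W^{(j)}(\theta)\, Z^{(j)}$$

is positive definite, where W(j)(θ) is a diagonal matrix with diagonal elements $p_i^{(j)}(\theta)\{1 - p_i^{(j)}(\theta)\}$, i = 1,…,N, and

$$U = \mathrm{diag}(u_1,\ldots,u_N). \tag{6}$$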

For each j = 1,…,N − 1, denote the maximizer of lj as

$$\hat\theta_n^{(j)} = \phi^{-1}\bigl(\hat\phi_n^{(j)}\bigr) \tag{7}$$

by the invariance property of the method of maximum likelihood (for example, see [33]), where $\hat\phi_n^{(j)}$ is the solution to (5).
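Since the back-transformation from the canonical parameters to θ is needed every time lj is maximized, it may help to write it out. The following worked inversion uses only the definition ϕ(θ) = [α, β, α − δτ, β + δ, γΤ]Τ; the component labels ϕ1,…,ϕ5 are introduced here for illustration, and the formula for τ assumes ϕ4 ≠ ϕ2 (that is, δ ≠ 0).

$$\alpha = \phi_1, \qquad \beta = \phi_2, \qquad \delta = \phi_4 - \phi_2, \qquad \tau = \frac{\phi_1 - \phi_3}{\phi_4 - \phi_2}, \qquad \gamma = \phi_5 .$$

The expression for τ follows from ϕ3 = α − δτ, so τ is the time at which the two fitted line segments intersect.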

The following algorithm can be used to compute the maximum likelihood estimate (MLE) θ̂n of θ0.

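1. For j = 1,…,N − 1, compute the maximizer $\hat\theta_n^{(j)}$ of lj given by (7). If its joinpoint component $\hat\tau_n^{(j)}$ lies in (tj, tj+1), then compute $l(\hat\theta_n^{(j)})$; otherwise, the MLE of τ0 is not in (tj, tj+1) and there is no need to evaluate l at any θ such that τ ∈ (tj, tj+1).

2. For j = 2,…,N − 1, fix τ = tj so that the model is equivalent to logistic regression with covariates 1, ti, (ti − tj)+, and xi, i = 1,…,N. Then fit a logistic regression model to obtain the respective candidate estimates $\tilde\alpha^{(j)}$, $\tilde\beta^{(j)}$, $\tilde\delta^{(j)}$, and $\tilde\gamma^{(j)}$ of α0, β0, δ0, and γ0. Denote the candidate estimate of θ0 as $\tilde\theta_n^{(j)} = [\tilde\alpha^{(j)}, \tilde\beta^{(j)}, t_j, \tilde\delta^{(j)}, \tilde\gamma^{(j)\mathsf T}]^{\mathsf T}$ and compute $l(\tilde\theta_n^{(j)})$.

The MLE of θ0 is the value of θ which maximizes l(θ) among the values at which we evaluate l in steps 1 and 2.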

See [7] for a detailed description of an algorithm for computing the MLE in the logistic joinpoint regression model with multiple joinpoints.
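The two steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the implementation used in the ljr package: it takes q = 0 (no extra covariates), assumes distinct observation times, restricts step 1 to j = 2,…,N − 2 so that each segment has at least two time points (a simple stand-in for the full-rank requirement), and fits each logistic regression by a plain Newton-Raphson iteration without safeguards. All function names are hypothetical.

```python
import math

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[piv] = M[piv], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x

def fit_logistic(Z, y, m, iters=100, tol=1e-10):
    """Newton-Raphson fit of a binomial logistic regression.

    Returns (coefficients, log-likelihood without the binomial-coefficient constant).
    """
    d = len(Z[0])
    coef = [0.0] * d
    for _ in range(iters):
        p = [1.0 / (1.0 + math.exp(-sum(zk * ck for zk, ck in zip(z, coef)))) for z in Z]
        grad = [sum(z[a] * (yi - mi * pi) for z, yi, mi, pi in zip(Z, y, m, p))
                for a in range(d)]
        hess = [[sum(z[a] * z[b] * mi * pi * (1.0 - pi) for z, mi, pi in zip(Z, m, p))
                 for b in range(d)] for a in range(d)]
        step = solve(hess, grad)
        coef = [c + s for c, s in zip(coef, step)]
        if max(abs(s) for s in step) < tol:
            break
    eta = [sum(zk * ck for zk, ck in zip(z, coef)) for z in Z]
    loglik = sum(yi * e - mi * math.log1p(math.exp(e)) for yi, mi, e in zip(y, m, eta))
    return coef, loglik

def joinpoint_mle(t, y, m):
    """Two-step search for the MLE of (alpha, beta, tau, delta) with q = 0."""
    N = len(t)
    candidates = []
    # Step 1: maximize each super log-likelihood l_j and keep the result only
    # if the implied joinpoint falls strictly inside (t_j, t_{j+1}).
    for j in range(2, N - 1):  # j = 2..N-2 (1-based)
        Z = [[1.0, ti, 0.0, 0.0] if i <= j else [0.0, 0.0, 1.0, ti]
             for i, ti in enumerate(t, start=1)]
        (a1, b1, a2, b2), ll = fit_logistic(Z, y, m)
        delta = b2 - b1
        if delta != 0.0:
            tau = (a1 - a2) / delta
            if t[j - 1] < tau < t[j]:
                candidates.append((ll, (a1, b1, tau, delta)))
    # Step 2: fix tau at each interior observation time and fit an ordinary
    # logistic regression on 1, t_i, (t_i - t_j)_+.
    for j in range(2, N):  # j = 2..N-1 (1-based)
        tj = t[j - 1]
        Z = [[1.0, ti, max(ti - tj, 0.0)] for ti in t]
        (a, b, dl), ll = fit_logistic(Z, y, m)
        candidates.append((ll, (a, b, tj, dl)))
    # The MLE is the candidate with the largest log-likelihood.
    return max(candidates, key=lambda c: c[0])[1]

# Noise-free check: expected counts from a model with joinpoint 4.5.
t = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
m = [1000] * 7
y = [mi / (1.0 + math.exp(-0.2 * max(ti - 4.5, 0.0))) for ti, mi in zip(t, m)]
alpha_hat, beta_hat, tau_hat, delta_hat = joinpoint_mle(t, y, m)
print(alpha_hat, beta_hat, tau_hat, delta_hat)
```

The binomial-coefficient term is omitted from the log-likelihood because it is the same for every candidate and does not affect the comparison. With real counts, a production implementation would also need step-halving or a similar safeguard in the Newton iteration and an explicit rank check on each design matrix.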

3 Asymptotic properties

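In this section, the consistency and the asymptotic distribution of the maximum likelihood estimator are established. Consider the function F(j) : ℝN × ℝ4+q → ℝ4+q such that, with $z_i^{(j)\mathsf T}$ denoting the ith row of the design matrix $Z^{(j)}$ and $p_i^{(j)}(\theta) = \exp\{z_i^{(j)\mathsf T}\phi(\theta)\}/[1 + \exp\{z_i^{(j)\mathsf T}\phi(\theta)\}]$ the corresponding fitted probability,

$$F^{(j)}(p, \theta) = \sum_{i=1}^{N} u_i\left(p_i - p_i^{(j)}(\theta)\right) z_i^{(j)}$$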

where p = [p1,…, pN]Τ and pi ∈ (0, 1) for i = 1,…,N. In particular, we will be interested in values of k such that

$$\tau_0 \in [t_k, t_{k+1}]. \tag{8}$$

Throughout this paper, k shall be reserved for values such that (8) holds. If τ0 ∈ (tk, tk+1), then k is unique. If τ0 = tℓ for some ℓ, then (8) holds for both k = ℓ − 1 and k = ℓ.
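The two cases can be made concrete with a short Python sketch. It reads (8) as the condition that τ0 lies in the closed interval [tk, tk+1], which is what the two remarks above imply; the function name `intervals_containing` is hypothetical.

```python
def intervals_containing(tau0, t):
    """Return every k (1-based) with t_k <= tau0 <= t_{k+1}."""
    return [k for k in range(1, len(t)) if t[k - 1] <= tau0 <= t[k]]

times = [1, 2, 3, 4, 5, 6, 7]
between = intervals_containing(4.5, times)  # joinpoint strictly between observation times
at_time = intervals_containing(4, times)    # joinpoint at the observation time t_4
print(between, at_time)
```

With these times, a joinpoint at 4.5 gives the single index k = 4, while a joinpoint at t4 = 4 gives both k = 3 and k = 4, so two super log-likelihoods are correctly specified at once.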

Letting p0 = [p01,…, p0N]Τ, the following lemma uses the Implicit Function Theorem (see, for example, [35]) to express ϕ(θ) as a function of p. Proofs of lemmas and theorems are deferred to Appendix A.

Lemma 3.1

Suppose that F(k)(p, θ) = 0 and (8) holds. If Z(k) is full rank, then in an open neighborhood of (p0, θ0), say Bp0, there exists a function g(k) such that g(k)(p) = ϕ(θ) for (p, θ) ∈ Bp0 and the second partial derivatives of g(k) exist and are continuous.

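Implicit differentiation can be used to obtain the explicit form for the partial derivatives of g(k). Differentiating both sides of F(k)(p, θ) = 0 with respect to pΤ, we obtain

$$Z^{(k)\mathsf T} U - Z^{(k)\mathsf T}\, U\, W^{(k)}(\theta)\, Z^{(k)}\, \frac{\partial g^{(k)}}{\partial p^{\mathsf T}} = 0$$

where $\partial g^{(k)}/\partial p^{\mathsf T}$ is the matrix of partial derivatives of each component of g(k) with respect to pΤ. If Z(k) is full rank, then we have

$$\frac{\partial g^{(k)}}{\partial p^{\mathsf T}} = \left(Z^{(k)\mathsf T}\, U\, W^{(k)}(\theta)\, Z^{(k)}\right)^{-1} Z^{(k)\mathsf T} U.$$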

Let p̂n = [y1/m1,…, yN/mN]Τ. Lemma 3.1 can be used to show that the maximizer of lk is a strongly consistent estimator of θ0 for any k such that the model is correctly specified. The following lemma states this result for the case when each of the mi’s increases linearly, although the linearity can be relaxed to deal with differing rates of divergence. Let $\hat\theta_n^{(k)}$ be as defined by (7).

Lemma 3.2

For sufficiently large n0, suppose there exist constants 0 < c1 < c2 such that nc1 ≤ mi ≤ nc2 for i = 1,…,N when n ≥ n0. If Z(k) is full rank and (8) holds, then $\hat\theta_n^{(k)} \to \theta_0$ a.s. as n → ∞.

In this lemma and the following theorems, N is taken to be fixed. This setting is reasonable for analyzing data retrospectively when there is a large amount of data at each observation time or in each time-specific cluster of observations (see, for example, [7]). Since N is fixed, few assumptions are required to achieve consistent estimators. In practice, the assumption that Z(k) is full rank for values of k such that (8) holds is needed so that the parameters are identifiable. In other settings in which N is allowed to increase to infinity, additional assumptions are required on the spacing of the covariates and/or the location of the change-point parameter to ensure that there is enough data in each part of the input space to estimate all of the parameters consistently. However, the specific assumptions required depend on the particular way that N increases. For discussion about typical assumptions for some other change-point problems, see [2] and [32].

Under the conditions of Lemma 3.2, it is seen that the estimator of θ0 based on k such that the model is correctly specified is consistent. Next, it must be shown that, for each j such that τ0 ∉ [tj, tj+1], the maximum value of lj is less than the maximum value of lk. To prove this, we introduce the saturated likelihood function s : ℝN → ℝ defined by

| (9) |

which provides a parameter for each distinct set of observed covariates. Using this concept, it can be shown that the maximum likelihood estimator (maximizer of l) is strongly consistent. As before, let θ̂n denote the maximum likelihood estimator of θ0.

Theorem 3.1

Under the conditions of Lemma 3.2 for each k such that (8) holds, it follows that θ̂n → θ0 a.s. as n→∞.

Next, we examine the asymptotic distribution of θ̂n. Since consistency has been established, it suffices to consider the distribution(s) of with k given by (8).

There are two cases which must be examined. First consider the more complicated one when τ0 = tℓ for some ℓ. In this case, we must consider the joint behavior of . Denote by the (q+4)×(q+4) matrix of partial derivatives of the components of ϕ−1. Let 𝒜(0,S) denote the (multivariate) normal distribution with zero mean vector and covariance matrix S. Also, let ⇒ denote convergence in distribution. The following theorem proves the asymptotic normality of the joint distribution of these quantities. The proof is given in Appendix A.

Theorem 3.2

For sufficiently large n, suppose there exist constants 0 < c1 < c2 such that nc1 ≤ mi ≤ nc2. If τ0 = tℓ for some ℓ and Z(k) is full rank for k = ℓ − 1 and k = ℓ, then

| (10) |

as n→∞ where and W(k) = W(k)(θ0) for k = ℓ − 1 and k = ℓ, and U is defined by (6).

The result for the second case where τ0 ∈ (tk, tk+1) for some k is given in Theorem 3.3. The proof is omitted since it can be proven in a manner similar to Theorem 3.2. Note that when n is sufficiently large, as shown in the proof of Theorem 3.1.

Theorem 3.3

For sufficiently large n, suppose there exist constants 0 < c1 < c2 such that nc1 ≤ mi ≤ nc2. If τ0 ∈ (tk, tk+1) for some k and Z(k) is full rank, then

as n → ∞ where , and U is defined by (6).

4 Consistency of Bootstrap Confidence Bounds

Suppose we use the parametric bootstrap to generate Binomial random variables with sizes mi and probabilities of success p̂0i for i = 1,…,N and b = 1,…, B where B is the number of bootstrap samples. Set and let denote the bth bootstrap replication of θ̂n, with P and P* being the probability measures under θ0 and, respectively.

4.1 Consistency when τ0 ≠ tℓ for any ℓ

We shall consider the consistency of the bootstrap percentile method. For simplicity, we describe the one-sided interval for a parameter ζ0, but the results extend to two-sided intervals with proper modifications. Set. Then the bootstrap estimator of the upper bound of the α-level one-sided percentile confidence interval for ζ0 is . The following lemma gives sufficient conditions for the consistency of bootstrap confidence intervals of this form. For a proof, see Theorem 7.9 of [39].

Lemma 4.1

Let and. Suppose that

1. supx |Hn(x) − H^B(x)| = op(1);

2. supx |Hn(x) − Ψ(x)| = o(1) for some distribution function Ψ(x) that is continuous, strictly increasing, and symmetric about zero.

Then P(ζBP ≤ ζ0) → 1 − α as n → ∞.

Thus, in order to prove consistency of bootstrap confidence intervals for aΤθ0 where a is a fixed non-zero (q + 4)-dimensional vector, we need to check conditions (1) and (2). If τ0 ∈ (tk, tk+1), Theorem 3.3 verifies condition (2) of Lemma 4.1. So, for the case when τ0 ∈ (tk, tk+1), we next verify condition (1) in the following result.

Theorem 4.1

Under the conditions of Theorem 3.3, it follows that

| (11) |

as n → ∞.

Hence, from the last theorem, we see that the conditions for bootstrap confidence interval consistency given in Lemma 4.1 hold when τ0 ∈ (tk, tk+1) since (11) implies convergence in probability. Continuity of Ψ will not hold if τ0 = tℓ for some ℓ as shown by Theorem 3.2.

4.2 Simulation when τ0 = tℓ for some ℓ

To illustrate the behavior of the bootstrap estimates and confidence bounds when τ0 = tℓ for some ℓ, we perform the following simulation study. Suppose that we have equally-spaced observation times ti = i for i = 1,…,N = 7, no additional covariates (q = 0), true coefficient values α0 = β0 = 0 and δ0 = 0.2, and a joinpoint at τ0 = 4 for the model specified by (1) and (2). For various choices of m1 =…= m7, we simulate R = 1, 000, 000 data sets and compute the estimate of τ0 and the bootstrap estimate of τ0 for each data set.

One simple way to see that Theorem 4.1 does not hold for this case is to use our simulation to estimate the proportion of times that τ̂n equals τ0 = 4 and compare this with the proportion of times that the bootstrap estimate equals τ0. These quantities, P̂(τ̂n = 4) and its bootstrap counterpart, as well as empirical estimates of the mean square error of τ̂n and the bootstrap estimate of this quantity, are reported in Table 1 for mi = 10^3, 10^5, 10^7, and 10^9; the computations use the contributed R package ljr [6]. Clearly, the bootstrap underestimates the true probability that τ̂n = 4 as seen in columns 2 and 4 of Table 1 and overestimates the true MSE of τ̂n as seen in columns 3 and 5.

Table 1.

Simulation results for the bootstrap method applied to the MLE.

| mi, i = 1,…,7 | P̂(τ̂n = τ0) | Empirical MSE of τ̂n | Bootstrap estimate of P(τ̂n = τ0) | Bootstrap estimate of MSE of τ̂n |
|---|---|---|---|---|
| 10^3 | .2007 | 3.967 × 10^−1 | .1097 | 4.779 × 10^−1 |
| 10^5 | .2023 | 2.792 × 10^−3 | .1157 | 3.545 × 10^−3 |
| 10^7 | .2020 | 2.899 × 10^−5 | .1155 | 3.698 × 10^−5 |
| 10^9 | .2020 | 3.122 × 10^−7 | .1160 | 3.972 × 10^−7 |

The estimate P̂(τ̂n = 4) = .2020 when mi = 10^9 agrees with the theoretical value suggested by Theorem 3.2. As n → ∞, note that

where

and

Thus, for large n, we have

this bivariate normal probability can be computed using the contributed R package mvtnorm [17].

4.3 Removal Algorithm

Herein we discuss a modified estimator of τ0 for which bootstrap consistency holds as a remedy for the lack of consistency of the parametric bootstrap when τ0 = ti for some i. First, compute θ̂n. Then remove the observation(s) at the observation time tℓ which is closest to τ̂n and re-fit the MLE without the observation(s), denoting the result as

If the matrix Z(k), with k defined by (8), is still full rank without the observation(s) and the mi satisfy the condition in Lemma 3.2, then bootstrap consistency holds for this algorithm even if τ0 = tℓ for some ℓ (since these observations will be removed with probability 1 as n → ∞).
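The removal step itself is simple bookkeeping, as the following Python sketch suggests. It is written against a hypothetical interface: `fit(t, y, m)` stands for any routine that returns the fitted parameters as a dictionary with a key "tau". The tie-breaking rule (choosing the earlier time when two observation times are equally close) is an assumption of this sketch and is not specified in the text.

```python
def removal_estimate(t, y, m, fit):
    """Refit after dropping the observation time closest to the estimated joinpoint.

    `fit(t, y, m)` is a hypothetical callable returning a dict with key "tau".
    Returns (fit on all data, fit on reduced data, removed observation time).
    """
    full = fit(t, y, m)
    # Observation time closest to the estimated joinpoint (ties: earlier time).
    closest = min(set(t), key=lambda ti: (abs(ti - full["tau"]), ti))
    # Drop every observation recorded at that time, then refit.
    keep = [i for i, ti in enumerate(t) if ti != closest]
    reduced = fit([t[i] for i in keep], [y[i] for i in keep], [m[i] for i in keep])
    return full, reduced, closest

def toy_fit(t, y, m):
    # Stand-in for demonstration only: NOT a joinpoint fit.
    return {"tau": 4.2, "n_obs": len(t)}

times = [1, 2, 3, 4, 5, 6, 7]
counts = [50, 52, 49, 51, 55, 58, 62]
sizes = [100] * 7
full, reduced, removed = removal_estimate(times, counts, sizes, toy_fit)
print(removed, full["n_obs"], reduced["n_obs"])
```

In a bootstrap setting the same two-stage procedure would be applied to every bootstrap sample, so that the bootstrap distribution reflects the removal step as well.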

As an illustration of the effect of using this algorithm, we use the same simulated data sets considered in Section 4.2 and compare the results for the bootstrap based on the method of maximum likelihood with the bootstrap based on the removal algorithm. Letting τ̂(.95) and its removal-algorithm analogue represent the 95th percentiles of the empirical distributions of τ̂n and of the removal-algorithm estimate, Table 2 reports the estimated tail probabilities for the bootstrap method applied to each estimator. Clearly, the bootstrap overestimates the 95th percentile of the distribution of τ̂n − τ0, but provides a good estimate of the corresponding percentile for the removal-algorithm estimate even for relatively small sample sizes. However, the tradeoff for obtaining accurate confidence bounds is the loss of information caused by discarding part of the data. This can also be seen in Table 2 by observing that the estimates of the mean square errors for the removal-algorithm estimate are higher than those for τ̂n.

Table 2.

Comparison of results for the MLE and the estimates based on the removal algorithm.

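| mi, i = 1,…,7 | Estimated tail probability (MLE) | Empirical MSE of τ̂n | Estimated tail probability (removal algorithm) | Empirical MSE of removal-algorithm estimate |
|---|---|---|---|---|
| 10^3 | .0625 | 3.967 × 10^−1 | .0496 | 7.910 × 10^−1 |
| 10^5 | .0670 | 2.792 × 10^−3 | .0500 | 4.738 × 10^−3 |
| 10^7 | .0676 | 2.899 × 10^−5 | .0499 | 4.730 × 10^−5 |
| 10^9 | .0676 | 3.122 × 10^−7 | .0499 | 5.061 × 10^−7 |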

5 Example

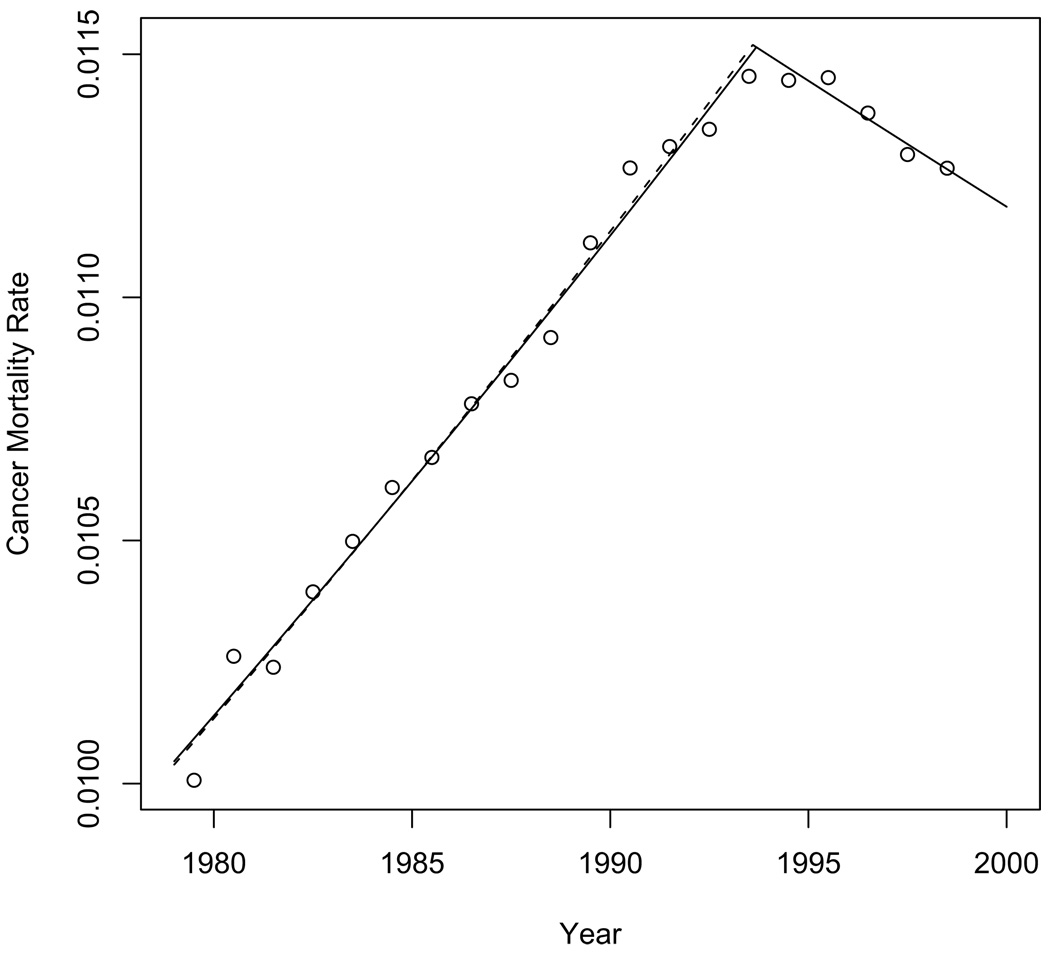

We now apply the method of clustered logistic joinpoint regression to model yearly cancer mortality in the United States for individuals age 65 and over during the period 1979–1998. The data set was obtained from the CDC WONDER database [5], and it includes yi, the number of deaths in the ith observed year due to neoplasms (ICD-9 codes 140–239); mi, the population during the ith observed year; and ti = 1978.5 + i, the midpoint of the ith observed year. So, we use the model given by (1) and (2) with q = 0. The observed cancer mortality rates yi/mi are plotted versus time in Figure 1.

Figure 1.

Observed US yearly cancer mortality rates for individuals age 65 and over. The solid line gives the fitted model based on all of the data. The dashed line gives the fitted model with the 15th observation (where t15 = 1993.5) removed.

Table 3 gives the parameter estimates for each of the unknown parameters and the fitted probabilities based on maximum likelihood are illustrated by the solid curve in Figure 1. The estimated joinpoint τ̂n = 1993.686 supports previous findings which attribute the decrease in cancer mortality to improvements in prevention, detection, and treatments [19].

Table 3.

Parameter estimates in the logistic joinpoint regression model for the US yearly 65+ cancer mortality data.

| Parameter | MLE (all data) | Estimate with 15th observation removed |
|---|---|---|
| α0 | −23.17397 | −23.44145 |
| β0 | .0093903 | .0095251 |
| δ0 | −.0140205 | −.0141553 |
| τ0 | 1993.686 | 1993.595 |

Next, we obtain estimated 95% confidence bounds for τ based on the parametric bootstrap. Using the estimates listed in Table 3 as our model parameters, we generate R = 100000 bootstrap samples and compute the estimate of τ for each sample. Then our estimated confidence bounds are the 2.5 and 97.5 percentiles of the bootstrap distribution of τ. In this manner, we obtain the confidence bounds (1993.371, 1993.930).
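The mechanics of this percentile interval can be sketched in Python as follows. The sketch is generic and rests on several assumptions: `estimator(y, m)` is a hypothetical callable returning the scalar estimate of interest (for the analysis above it would refit the joinpoint model and return the estimate of τ), binomial draws are generated as sums of Bernoulli trials, quantiles use the nearest-rank rule, and the default number of replications is far smaller than the 100000 used in the text.

```python
import math
import random

def rbinom(m, p, rng):
    """Draw one Binomial(m, p) variate as a sum of m Bernoulli trials."""
    return sum(rng.random() < p for _ in range(m))

def bootstrap_percentile_bounds(m, p_hat, estimator, B=1000, level=0.95, seed=0):
    """Parametric bootstrap percentile bounds for a scalar estimator.

    m         : list of binomial sizes m_i
    p_hat     : list of fitted success probabilities under the estimated model
    estimator : hypothetical callable (y, m) -> float
    """
    rng = random.Random(seed)
    reps = []
    for _ in range(B):
        # Simulate a data set from the fitted model and re-estimate.
        y_star = [rbinom(mi, pi, rng) for mi, pi in zip(m, p_hat)]
        reps.append(estimator(y_star, m))
    reps.sort()
    lo_q, hi_q = (1.0 - level) / 2.0, (1.0 + level) / 2.0
    lower = reps[max(0, math.ceil(lo_q * B) - 1)]
    upper = reps[min(B - 1, math.ceil(hi_q * B) - 1)]
    return lower, upper

def pooled_rate(y, m):
    # Toy estimator for demonstration only: NOT the joinpoint estimate.
    return sum(y) / sum(m)

sizes = [200] * 7
fitted = [0.5] * 7
lower, upper = bootstrap_percentile_bounds(sizes, fitted, pooled_rate, B=200)
print(lower, upper)
```

For population-sized mi, as in the mortality data, summing Bernoulli trials would be far too slow, and a dedicated binomial generator would be needed instead.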

Note that the 15th observed year t15 = 1993.5 falls within this interval. Thus, there might be a problem with consistency of the bootstrap as discussed in Section 4. We can attempt to remedy this possible bias by repeating our analysis with i = 15 removed from the data set. When the model is fit without the 15th observation, the estimate of the joinpoint changes slightly to 1993.595. The estimated coefficients change very little (see Table 3) and the fitted probabilities based on the removal algorithm are illustrated by the dashed curve in Figure 1.

The 95% estimated confidence bounds with observation 15 omitted are (1993.318,1993.851). While it is true that the interval still contains 1993.5, this value is no longer in our data set since it has been removed, and it seems reasonable to claim that it is unlikely that we are dealing with a situation where τ0 = tℓ for some ℓ and, thus, the consistency of the bootstrap confidence bounds is more plausible.

6 Summary and Conclusion

After presenting details necessary for the computation of the maximizers of the super log-likelihood functions and the MLE of the parameters in the clustered logistic joinpoint model, we considered the asymptotic properties of these estimators. Sufficient conditions for the consistency of the MLE were given. Asymptotic normality of the MLE was also shown under the same conditions, provided that the true location of the joinpoint was not at one of the observation times. Under this latter proviso we also showed the consistency of the bootstrap confidence bounds.

However, if the true location of the joinpoint was one of the observation times, then it was shown that the joint distribution of the maximizers of indices corresponding to the neighboring intervals was asymptotically normal. A simulation study was performed to illustrate the lack of consistency of the bootstrap method in generating confidence bounds in this case due to asymptotic bias. It was also shown that we could remove this bias and obtain consistent estimates via a removal algorithm at the cost of a higher MSE.

Finally, the model and the methods were used to analyze yearly cancer mortality in the United States for individuals age 65 and over. The bootstrap confidence interval included one observation time, so the model was refit without that observation. There is only a slight change in the resulting fitted model, but the consistency of the bootstrap is more plausible with the second fit. An R package ljr [6] capable of fitting these models is available in the contributed packages at http://www.R-project.org [34].

A Proofs

Proof of Lemma 3.1

Since F(k) is infinitely differentiable and F(k)(p0, θ0) = 0, it remains to verify that the determinant of the matrix of partial derivatives of F(k) with respect to ϕ at (p0, θ0), which equals Z(k)ΤDZ(k) up to sign, is not zero in order to apply the Implicit Function Theorem. Here D = UW(k)(θ0) is an N × N diagonal matrix with diagonal elements di = mip0i(1 − p0i)/n for i = 1,…,N. Since di ∈ (0, 1), Z(k)ΤDZ(k) has a nonzero determinant if and only if Z(k) is full rank.

Proof of Lemma 3.2

The strong law of large numbers implies that p̂n → p0 a.s. as n → ∞. For all θ, the left side of (5) converges to F(k)(p0, θ) a.s. The continuity of F(k) implies that.

It is clear that θ0 is the unique value of θ such that F(k)(p0, θ) = 0 by the same argument as the one used to show that the solution to (5) is a unique maximizer of lj when yi/mi is replaced by pi. Thus, it follows that $\hat\theta_n^{(k)} \to \theta_0$ a.s. as n→∞.

Proof of Theorem 3.1

In view of Lemma 3.2, it suffices to show that, with probability 1,if τ0 ∈ [tk, tk+1] but τ0 ∉ [tj, tj+1] From Lemma 3.2, we have. Using the continuity of , it follows that . for i = 1,…,N. Hence, we have as n→∞.

Let be the set of points considered by the saturated model and let

be the set of fitted probabilities corresponding to restricting τ ∈ [tk, tk+1]. Clearly 𝒜k ⊂ 𝒮 for all k. Furthermore, p0 ∈ 𝒜k if τ0 ∈ [tk, tk+1] and p0 ∉ 𝒜j if τ0 ∉ [tj, tj+1]. Thus, maxθ∈𝒜j l(θ) < s(p0) and so that maxθ∈𝒜j l(θ) < maxθ∈𝒜k l(θ) with probability 1.

Consequently, if k is unique, then τ̂n ∈ (tk, tk+1) a.s. as n → ∞. If k is not unique, then τ̂n ∈ (tℓ−1, tℓ+1) a.s. as n →∞, and we have as n → ∞ by Lemma 3.2. In either case, it follows that θ̂n → θ0 a.s. as n → ∞.

Proof of Theorem 3.2

Take any a ∈ ℝ2(q+4) and let. Using a multivariate Taylor series expansion, we obtain

| (12) |

where H(p̃n) is the Hessian matrix of aΤg evaluated at a point p̃n on the segment connecting p̂n and p0. Note that

| (13) |

as n → ∞. Since H(p̃n) is symmetric, we can apply the singular value decomposition to obtain H(p̃n) = C(p̃n)Λ(p̃n)C(p̃n)Τ where C(·) is orthogonal and Λ(·) is diagonal with entries λ1 ≥ … ≥ λ2(q+4). Note that λ1 is bounded as n → ∞. Thus, (13) implies that

| (14) |

Hence, (12), (14), and Slutsky’s Theorem (see [3]) imply that

as n → ∞. So, the Cramér-Wold Criterion (see, for example, [38]) implies that

| (15) |

as n → ∞. Sincefor k =ℓ−1, ℓ and, a similar argument can be used to show that (15) implies (10).

Proof of Theorem 4.1

Simple modifications can be made to the arguments given in Theorem 3.2 to show that

as n → ∞, where

As n → ∞, we have p̂n → p0 a.s. and θ̂n → θ0 a.s. so that Sn → S0 a.s. where

Thus, by Slutsky’s Theorem, we have

as n → ∞. Thus for any non zero vector a ∈ Rq+4 with probability one andhave the same weak limit. The triangle inequality and the fact that in this case the weak convergence is uniform, yield (11).

Acknowledgement

The research was partially sponsored by the National Cancer Institute under grant R15 CA106248-02. The authors thank an anonymous reviewer and the associate editor for their helpful comments which helped to improve the paper.


References

- 1. Andrews DWK. Inconsistency of the bootstrap when a parameter is on the boundary of the parameter space. Econometrica. 2000;68(2):399–405.

- 2. Bai J. Estimation of a change point in multiple regression models. The Review of Economics and Statistics. 1997;79(4):551–563.

- 3. Bilodeau M, Brenner D. Theory of Multivariate Statistics. New York: Springer-Verlag; 1999.

- 4. Carpenter J, Bithell J. Bootstrap confidence intervals: when, which, what? A practical guide for medical statisticians. Statistics in Medicine. 2000;19:1141–1164. doi: 10.1002/(sici)1097-0258(20000515)19:9<1141::aid-sim479>3.0.co;2-f.

- 5. Centers for Disease Control and Prevention, National Center for Health Statistics. Compressed Mortality File 1979–1998. CDC WONDER On-line Database, compiled from Compressed Mortality File CMF 1968–1988, Series 20, No. 2A, 2000 and CMF 1989–1998, Series 20, No. 2E, 2003. Accessed at http://wonder.cdc.gov/cmf-icd9.html on March 23, 2008.

- 6. Czajkowski M, Gill R, Rempala G. ljr: Logistic Joinpoint Regression. 2007. R package version 1.0-1.

- 7. Czajkowski M, Gill R, Rempala G. Model selection in logistic joinpoint regression with applications to analyzing cohort mortality patterns. Statistics in Medicine. 2008;27:1508–1526. doi: 10.1002/sim.3017.

- 8. Chen J, Gupta AK. Parametric Statistical Change Point Analysis. Boston: Birkhäuser; 2000.

- 9. Davison AC, Hinkley DV. Bootstrap Methods and their Application. Cambridge: Cambridge University Press; 1997.

- 10. de Boor C. A Practical Guide to Splines. Berlin: Springer; 1978.

- 11. Dierckx P. Curve and Surface Fitting with Splines. Oxford: Clarendon; 1993.

- 12. Efron B, Tibshirani R. An Introduction to the Bootstrap. New York: Chapman and Hall; 1993.

- 13. Eilers PHC, Marx BD. Flexible smoothing with B-splines and penalties. Statistical Science. 1996;11(2):89–102.

- 14. Eubank RL. Spline Smoothing and Nonparametric Regression. New York: Marcel Dekker; 1988.

- 15. Feder PI. On asymptotic distribution theory in segmented regression problems – identified case. Annals of Statistics. 1975;3:49–83.

- 16. Gallant AR, Fuller WA. Fitting segmented polynomial regression models whose joinpoints have to be estimated. Journal of the American Statistical Association. 1973;68:144–147.

- 17. Genz A, Bretz F, Hothorn T. mvtnorm: Multivariate Normal and T Distribution. 2008. R package version 0.8-3.

- 18. Gössl C, Küchenhoff H. Bayesian analysis of logistic regression with an unknown change point and covariate measurement error. Statistics in Medicine. 2001;20:3109–3121. doi: 10.1002/sim.928.

- 19. Grady DUS. Cancer Death Rates Are Found to Be Falling. New York Times. 2007 October 15. Accessed at http://www.nytimes.com/2007/10/15/us/15cancer.html on March 23, 2008.

- 20. Green PJ, Silverman BW. Nonparametric Regression and Generalized Linear Models. London: Chapman & Hall; 1994.

- 21. Gu C. Smoothing Spline ANOVA Models. New York: Springer; 2002.

- 22. Hall P. The Bootstrap and Edgeworth Expansion. New York: Springer-Verlag; 1992.

- 23. Hastie T, Tibshirani R, Friedman J. Elements of Statistical Learning: Data Mining, Inference, and Prediction. New York: Springer; 2001.

- 24. Hušková M, Picek J. Bootstrap in detection of changes in linear regression. Sankhyā. 2005;67:200–226.

- 25. Jandhyala VK, MacNeill IB. Tests for parameter changes at unknown times in linear regression models. Journal of Statistical Planning and Inference. 1991;27:291–316.

- 26. Jandhyala VK, MacNeill IB. Iterated partial sum sequences of regression residuals and tests for changepoints with continuity constraints. Journal of the Royal Statistical Society B. 1997;59:147–156.

- 27. Jarušková D. Testing appearance of linear trend. Journal of Statistical Planning and Inference. 1998;70:263–276.

- 28. Kim H-J, Fay MP, Feuer EJ, Midthune DN. Permutation tests for joinpoint regression with applications to cancer rates. Statistics in Medicine. 2000;19:335–351. doi: 10.1002/(sici)1097-0258(20000215)19:3<335::aid-sim336>3.0.co;2-z.

- 29. Kim H-J, Fay MP, Yu B, Barrett MJ, Feuer EJ. Comparability of segmented regression models. Biometrics. 2004;60:1005–1014. doi: 10.1111/j.0006-341X.2004.00256.x.

- 30. Kim H-J, Yu B, Feuer EJ. Selecting the number of change-points in segmented linear regression. Statistica Sinica. preprint.

- 31. Kim J, Kim H-J. Asymptotic results in segmented multiple regression. Journal of Multivariate Analysis. preprint.

- 32. Liu J, Wu S, Zidek JV. On segmented multivariate regression. Statistica Sinica. 1997;7:497–525.

- 33. Pawitan Y. In All Likelihood: Statistical Modelling and Inference Using Likelihood. Oxford: Oxford University Press; 2001.

- 34. R Development Core Team. R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing; 2008. ISBN 3-900051-07-0, URL http://www.R-project.org.

- 35. Rempala GA, Szatzschneider K. Bootstrapping Parametric Models of Mortality. Scandinavian Actuarial Journal. 2004;1:53–78.

- 36. Ruppert D. Selecting the number of knots for penalized splines. Journal of Computational and Graphical Statistics. 2002;11(4):735–757.

- 37. Seber GAF, Wild CJ. Nonlinear Regression. New York: Wiley; 1989.

- 38. Serfling RJ. Approximation Theorems of Mathematical Statistics. New York: Wiley; 1980.

- 39. Shao J. Mathematical Statistics. New York: Springer; 1999.

- 40. Tiwari RC, Cronin KA, Davis W, Feuer EJ. Bayesian model selection for join point regression with application to age-adjusted cancer rates. Applied Statistics. 2005;54:919–939.

- 41. Ulm K. A statistical method for assessing a threshold in epidemiological studies. Statistics in Medicine. 1991;10:341–349. doi: 10.1002/sim.4780100306.

- 42. Wahba G. Spline Models for Observational Data. Philadelphia: Society for Industrial and Applied Mathematics; 1990.

- 43. Yao Y-C. Estimating the number of change-points via Schwarz’ criterion. Statistics and Probability Letters. 1988;6:181–189.