Abstract

The spontaneous tendency to synchronize our facial expressions with those of others is often termed emotional contagion. It is unclear, however, whether emotional contagion depends on visual awareness of the eliciting stimulus and which processes underlie the unfolding of expressive reactions in the observer. It has been suggested either that emotional contagion is driven by motor imitation (i.e., mimicry) or that it is one observable aspect of the emotional state arising when we see the corresponding emotion in others. Emotional contagion reactions to different classes of consciously seen and “unseen” stimuli were compared by presenting pictures of facial or bodily expressions either to the intact or to the blind visual field of two patients with unilateral destruction of the visual cortex and ensuing phenomenal blindness. Facial reactions were recorded using electromyography, and arousal responses were measured with pupil dilatation. Passive exposure to unseen expressions evoked faster facial reactions and higher arousal than did seen stimuli, indicating that emotional contagion also occurs when the triggering stimulus cannot be consciously perceived because of cortical blindness. Furthermore, stimuli that are very different in their visual characteristics, such as facial and bodily gestures, induced highly similar expressive responses. This shows that the patients did not simply imitate the motor pattern observed in the stimuli but resonated to their affective meaning. Emotional contagion thus represents an instance of truly affective reactions that may be mediated by visual pathways of old evolutionary origin bypassing cortical vision while still providing a cornerstone for emotion communication and affect sharing.

Keywords: affective blindsight, electromyography, emotional body language, motor resonance, emotional contagion, face

Facial and bodily gestures play a fundamental role in social interactions, as shown by the spontaneous tendency to synchronize our facial expressions with those of another person during face-to-face situations, a phenomenon termed emotional contagion (1). This rapid and unintentional transmission of affects across individuals highlights the intimate correspondence between perception of emotions and expressive motor reactions originally envisaged by Darwin. Moreover, emotional contagion has been proposed as the precursor of more complex social abilities such as empathy or the understanding of others' intentions and feelings (2, 3). Ambiguity nevertheless persists about the nature of emotional contagion, and the neuropsychological mechanisms underlying automatic expressive reactions in the observer are still underexplored.

A crucial question concerns the degree of automaticity of emotional contagion and the role of visual awareness of the eliciting stimulus in the unfolding of facial reactions. Available evidence shows that perceptual mechanisms that bypass visual cortex are sufficient for processing emotional signals, most notably facial expressions (4, 5), akin to phenomena previously shown in animal research (6). The clearest example is provided by patients with lesions to the visual cortex, as they may reliably discriminate by guessing the affective valence of facial expressions projected to their clinically blind visual field (affective blindsight), despite having no conscious perception of the stimuli to which they are responding (7, 8). It is still unknown, however, whether nonconscious perception of emotions in cortically blind patients may lead to spontaneous facial reactions or to other psychophysiological changes typically associated with emotional responses. Furthermore, whether emotional processing in the absence of cortical vision is confined to specific stimulus categories (e.g., facial expressions) or emotions (e.g., fear) is still the subject of debate (9).

One related issue concerns the processes underlying emotional contagion. A current proposal posits that the observable (i.e., expressive) aspects of emotional contagion are based on mimicry (1–3). According to this view, we react to the facial expression seen in others with the same expression on our own face because the perception of an action, whether emotionally relevant or neutral, prompts imitation in the observer, a process that implies a direct motor matching between the effectors perceived and those activated by the observer (motor resonance) (10). An alternative account considers emotional contagion as an initial marker of affective, rather than motor-mimetic, reactions that unfold because the detection of an emotional expression induces the corresponding emotional state in the observer (11, 12). Since the same emotional state may be displayed by different expressive actions such as facial gestures or body postures, there is no need to postulate a direct motor matching between the observed and executed action, provided that the two convey the same affective meaning (13, 14). Therefore, exposing participants to facial as well as bodily expressions of the same emotion would help disentangle motor from affective accounts of emotional contagion, because it makes it possible to assess whether stimuli that are very different in their visual characteristics nevertheless trigger similar affective and facial reactions in the observer.

In the present study, we addressed these debated issues within a single experimental design applied to two selected patients, D.B. and G.Y., with unilateral destruction of occipital visual cortex and ensuing phenomenal blindness over one half of their visual fields. These patients provide a unique opportunity to compare directly, under the most stringent testing conditions, emotional reactions to different classes of (consciously) seen and “unseen” stimuli. Indeed, all parameters of stimulus presentation may be kept constant while only the position where the stimuli are projected is varied (intact vs. blind visual field). Furthermore, reactions arising in response to unseen stimuli in these subjects can be unambiguously considered to be independent of early visual cortical processing and also of conscious perception.

Facial and bodily expressions were presented in alternating blocks either to the intact or to the blind visual field for 2 s in a simple 2 × 2 exposure paradigm. Within each block, fearful or happy expressions were randomly intermingled. Facial movements were objectively measured through electromyography (EMG) to index expressive facial responses unknowingly generated by the patients. Specifically, activity in the zygomaticus major (ZM) and corrugator supercilii (CS) muscles was recorded, as these two muscles are differentially involved in smiling and frowning, respectively (15). Pupil dilatation was also independently assessed as a psychophysiological index of autonomic activity that might be expected to accompany early stages of emotional, but not motor-mimetic, responses (16). Indeed, phasic pupil dilatation provides a sensitive measure of increases in autonomic arousal induced by sympathetic system activity (17). Finally, at stimulus offset, which was signaled by an acoustic tone, the patients were asked to categorize by button press the emotional expression just presented (happy or fearful) in a two-alternative forced-choice (2AFC) task, thus providing a behavioral measure of emotion recognition. In blocks in which the stimuli were projected to their blind field, the patients were required to “guess” the emotion displayed.

Results

Behavioral Results.

All expressions were recognized significantly above chance level by D.B. (mean accuracy in the seeing field: 90.6%; in the blind field: 87.6%; P < 0.0001, by binomial tests for all eight conditions) and G.Y. (mean accuracy in the seeing field: 86.8%; in the blind field: 73.5%; P ≤ 0.05) (Tables S1 and S2). Notably, accuracy of (nonconscious) emotion discrimination in the blind field was not significantly different from that in the seeing field and was influenced neither by affective valence (happy vs. fear) nor by type of expression (faces vs. bodies) [D.B.: χ² (1) ≤ 0.001, P ≥ 0.99; G.Y.: χ² (1) ≤ 0.001, P ≥ 0.95, for all cross-tabulations]. This confirms previous results of accurate discrimination of facial expressions in G.Y. and extends the same findings to bodily expressions and to patient D.B., who has the opposite field of cortical blindness.
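To make the chance-level comparison concrete, the sketch below computes an exact binomial tail probability for one condition of 32 two-alternative trials (chance = 0.5). It assumes a one-sided test against chance, which the text does not specify; the function name is illustrative only.

```python
from math import comb


def binomial_sf(k, n, p=0.5):
    """One-sided exact probability P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


N_TRIALS = 32  # trial repetitions per condition (see Materials and Methods)

# Smallest number of correct 2AFC responses that beats chance at alpha = 0.05
threshold = next(k for k in range(N_TRIALS + 1) if binomial_sf(k, N_TRIALS) < 0.05)
print(threshold, round(binomial_sf(threshold, N_TRIALS), 4))
```

Under these assumptions the threshold is 22 correct responses out of 32 (about 69% accuracy), since P(X ≥ 21) is roughly 0.055 while P(X ≥ 22) is roughly 0.025.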

EMG Results.

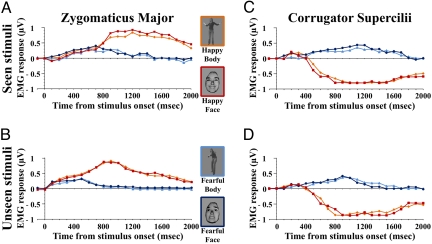

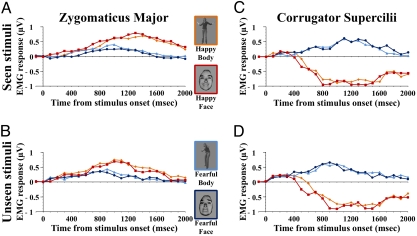

Figs. 1 and 2 display the mean rectified EMG responses relative to prestimulus baseline in patients D.B. and G.Y., respectively.

Fig. 1.

EMG responses in patient D.B. (A) Mean responses in the ZM for seen stimuli. (B) Mean responses in the ZM for unseen stimuli. (C) Mean responses in the CS for seen stimuli. (D) Mean responses in the CS for unseen stimuli. Frame color on the stimuli corresponds to coding of EMG response waveforms to the same class of stimuli.

Fig. 2.

EMG responses in patient G.Y. (A) Mean responses in the ZM for seen stimuli. (B) Mean responses in the ZM for unseen stimuli. (C) Mean responses in the CS for seen stimuli. (D) Mean responses in the CS for unseen stimuli. Frame color on the stimuli corresponds to coding of EMG response waveforms to the same class of stimuli.

D.B. Amplitude Profile.

Mean peaks of rectified EMG response amplitudes were analyzed in a 2 × 2 × 2 factorial design with emotion (happiness vs. fear), expression (face vs. body), and visual field (intact vs. blind) as within-subjects factors. A separate repeated-measures analysis of variance (ANOVA) was performed for each muscular region.
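Because every factor has two levels, each main effect and interaction in this design has one numerator degree of freedom, and its repeated-measures F equals the squared one-sample t statistic of the corresponding contrast score. The reported F (1, 31) with 32 repetitions per condition suggests (our inference, not stated in the text) that trial repetitions served as the repeated unit. The sketch below assumes data arranged as an array of shape (32, 2, 2, 2) with axes (repetition, emotion, expression, visual field); the function name is hypothetical, and P values could then be read from the F(1, n − 1) distribution (e.g., `scipy.stats.f.sf`).

```python
import itertools

import numpy as np

FACTORS = ("emotion", "expression", "visual field")


def within_effects_f(data):
    """F(1, n - 1) for every main effect and interaction of a 2 x 2 x 2 within design.

    data: array of shape (n, 2, 2, 2); axis 0 is the repeated unit and
    axes 1-3 are the levels of emotion, expression, and visual field.
    """
    data = np.asarray(data, dtype=float)
    n = data.shape[0]
    signs = np.array([-1.0, 1.0])  # contrast weights for the two levels
    results = {}
    for order in (1, 2, 3):
        for combo in itertools.combinations(range(3), order):
            # Cell weight = product of the level signs of the factors in this effect
            weights = np.ones((2, 2, 2))
            for factor in combo:
                shape = [1, 1, 1]
                shape[factor] = 2
                weights = weights * signs.reshape(shape)
            scores = (data * weights).sum(axis=(1, 2, 3))  # one contrast per unit
            mean, sd = scores.mean(), scores.std(ddof=1)
            if sd == 0:
                f_value = float("inf") if mean != 0 else float("nan")
            else:
                f_value = float((mean / (sd / np.sqrt(n))) ** 2)  # F = t squared
            results[" x ".join(FACTORS[f] for f in combo)] = f_value
    return results
```

Since F does not depend on the sign of the contrast, the order in which the two levels of each factor are stored does not matter.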

ZM activity was enhanced in response to happy as compared to fearful expressions, as shown by the significant main effect of emotion [F (1, 31) = 644.2, P < 0.0001]. No other main effect or interaction resulted in significant outcomes [F (1, 31) ≤ 2.99, P ≥ 0.093; for all factors and interactions]. This indicates that facial and bodily expressions of happiness were equally effective in inducing a spontaneous ZM response that, in turn, was similar under conditions of either conscious or nonconscious stimulus perception.

The CS was more activated by fearful than by happy expressions [F (1, 31) = 405.8, P < 0.0001]. There was also a significant emotion × expression × visual field interaction [F (1, 31) = 4.29, P = 0.047]. Posthoc Bonferroni tests on the three-way interaction showed that fearful facial expressions evoked greater CS response than fearful bodily expressions, but only when the stimuli were projected to the intact field and, therefore, consciously perceived (P = 0.016). Conversely, facial and bodily expressions of fear evoked the same CS activity when presented to the blind field (P = 1).

D.B. Temporal Profile.

An ANOVA with the same factors and levels was also computed on mean peak latencies to reveal possible differences in the time course of the EMG response.

In the ZM, the main effects of emotion and visual field were significant, as was their interaction [F (1, 31) = 205.5, P < 0.0001; F (1, 31) = 93.1, P < 0.0001; F (1, 31) = 65.7, P < 0.0001; respectively]. Posthoc comparisons on the interaction showed that the EMG response to the happy expressions (i.e., to those expressions that boosted ZM activity) was significantly faster (peaking at around 900 ms from stimulus onset) when the stimuli were presented to the blind field than when they were presented to the seeing field (when peak activity occurred at around 1,200 ms) (P < 0.0001). This effect was not present in response to fearful expressions, which produced a similar temporal profile of ZM activity in both visual fields (P = 1).

A similar pattern of results was observed in the CS muscle, with significant main effects of emotion and visual field and a significant emotion × visual field interaction [F (1, 31) = 4041.9, P < 0.0001; F (1, 31) = 78.9, P < 0.0001; F (1, 31) = 61.9, P < 0.0001; respectively]. CS activity reached peak amplitude more rapidly in response to nonconsciously than to consciously perceived fearful expressions (around 900 ms vs. 1,100 ms from exposure, respectively) (P < 0.0001). Here too, the faster response to unseen stimuli was specifically associated with the emotion that evoked greater CS activity, as this effect was not observed in response to happy expressions (P = 0.81).

G.Y. Amplitude Profile.

The ZM was more reactive to happy than to fearful expressions [F (1, 31) = 25.8, P < 0.0001]. The expression × visual field and the emotion × expression × visual field interactions were also significant [F (1, 31) = 5.8, P = 0.02; F (1, 31) = 5.5, P < 0.03; respectively]. Posthoc testing revealed that happy faces induced greater ZM response amplitude than happy bodies in the seeing visual field (P = 0.04) but elicited the same amplitude when shown in the blind field (P = 1).

CS activity increased in response to fearful but not to happy expressions, as shown by the significant main effect of emotion [F (1, 31) = 317.8, P < 0.0001]. No other factor or interaction reached significance, indicating similar CS responses to faces and bodies alike and no difference between the blind and intact field [F (1, 31) ≤ 1.7, P ≥ 0.2].

G.Y. Temporal Profile.

The ANOVA computed on the ZM showed a significant effect of emotion, visual field, and of their interaction [F (1, 31) = 38.7, P < 0.0001; F (1, 31) = 43.7, P < 0.0001; F (1, 31) = 9.3, P = 0.005; respectively]. The interaction indicates that happy expressions induced faster ZM activity when shown to the blind field (peaking at around 1,000 ms from stimulus onset) as contrasted to the intact field (peaking at around 1,300 ms) (P < 0.0001); an effect that was not observed for fearful expressions (P = 0.6).

The CS showed a temporal profile similar to that reported for the ZM, with significant effects of emotion, visual field, and emotion × visual field [F (1, 31) = 2482.1, P < 0.0001; F (1, 31) = 48.8, P < 0.0001; F (1, 31) = 18.3, P = 0.0002; respectively]. Activity in the CS unfolded more rapidly in response to unseen (peaking about 900 ms after stimulus exposure) than to seen fearful expressions (1,100 ms) (P < 0.0001). Happy expressions, which failed to trigger CS activity, did not induce a different temporal response as a function of conscious or nonconscious stimulus perception (P = 1).

Pupillometric Results.

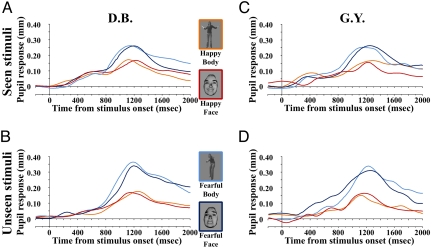

Mean pupillary response waveforms relative to baseline are shown in Fig. 3 for D.B. and G.Y.

Fig. 3.

Pupil responses in patients D.B. and G.Y. (A) Mean pupil responses for seen stimuli in patient D.B. (B) Mean pupil responses for unseen stimuli in patient D.B. (C) Mean pupil responses for seen stimuli in patient G.Y. (D) Mean pupil responses for unseen stimuli in patient G.Y. Frame color on the stimuli corresponds to coding of pupil response waveforms to the same class of stimuli.

D.B. Amplitude Profile.

Mean peak amplitudes of phasic pupil dilatation were submitted to a repeated-measures ANOVA with the same factors and levels previously considered in the analysis of EMG data. There was a significant main effect of emotion, showing that pupillary dilatation was boosted more by fearful than by happy expressions [F (1, 31) = 55.2, P < 0.0001]. The emotion × visual field interaction was also significant [F (1, 31) = 5.2, P = 0.03], as fearful, but not happy, expressions evoked greater dilatation when presented to the blind than to the seeing visual field (P = 0.013). Notably, neither the main effect of expression nor any interaction with this factor reached significance, showing that facial and bodily expressions were equally effective in inducing physiological arousal [F (1, 31) ≤ 1.1, P ≥ 0.3].

D.B. Temporal Profile.

The same ANOVA computed on amplitude data was also performed on mean peak latencies, showing no significant main effect or interaction [F (1, 31) ≤ 1.4, P ≥ 0.2]. This indicates that the temporal profile of pupillary dilatation was similar across all conditions, peaking in the time range 1,120–1,260 ms from stimulus onset.

G.Y. Amplitude Profile.

The ANOVA showed greater pupillary dilatation in response to fearful expressions, as indicated by the significant main effect of emotion [F (1, 31) = 52.2, P < 0.0001]. Although the emotion × visual field interaction only approached statistical significance [F (1, 31) = 3.5, P = 0.07], the trend was in the same direction as reported for D.B., with greater dilatation when fearful expressions were presented to the blind visual field.

G.Y. Temporal Profile.

Mean peak of pupillary dilatation took place for all conditions around the time-range 1,120–1,280 ms from stimulus onset. No main effect or interaction was found in the ANOVA assessing the temporal profile of peak pupillary dilatation [F (1, 31) ≤ 3.9, P ≥ 0.06, for all factors and interactions].

Discussion

Emotional Contagion for Seen and Unseen Inducers.

The first noteworthy finding is that passive exposure to either seen or unseen expressions resulted in highly comparable psychophysiological responses that systematically reflected the affective valence and arousal components of the stimuli. Specifically, happy expressions selectively modulated EMG activity in the ZM, whereas fearful expressions increased responses in the CS. Consistent with expressive facial reactions, we also observed emotion-specific pupil responses indicative of autonomic arousal, with increased magnitude of pupillary dilatation for fearful as compared to happy expressions. These pupillary changes cannot be ascribed to physical parameters of the stimuli (e.g., brightness or light flux changes), because the different stimuli were matched in their physical characteristics such as size and mean luminance. Moreover, pupillary changes associated with these basic stimulus attributes or with orienting responses have faster latencies than those related to arousal responses (around 250 ms vs. 1,000 ms) (17).

Autonomic activity is generally considered to reflect the intensity rather than the valence of stimuli. Therefore, the greater dilatation in response to fearful than to happy stimuli may simply indicate that the former were more effective than the latter in inducing an arousal response. An alternative explanation would be that coping with threatening situations requires greater energy resources from the organism. There is indeed some evidence that several basic emotions, such as happiness, fear, or anger, are associated with specific patterns of autonomic nervous system activity (18). Nevertheless, differentiating between emotions on the basis of their specific autonomic architecture would require simultaneous recording of various measures (e.g., pupil size, heart rate, skin conductance, and blood pressure), and this possibility therefore remains speculative as far as the present study is concerned.

Previous evidence of spontaneous expressive reactions to nonconsciously perceived emotional stimuli rests on a single study by Dimberg and colleagues, who recorded facial EMG to backwardly masked, and thus undetected, facial expressions (19). However, unlike in the present case, these responses were not contrasted with those generated by conscious stimulus perception, which precluded a direct comparison of the conscious and nonconscious processing modes. Moreover, experimentally induced nonconscious vision in neurologically intact observers is subject to intersubject variability and to differences in the criteria used to assess below-awareness thresholds (20, 21). Hence, masking provides weaker support for emotional contagion to unseen stimuli than does the study of patients with permanent cortical blindness.

Aside from these similarities, we also found that emotional contagion responses have different amplitude and temporal dynamics depending on whether the eliciting stimulus is seen or unseen. Indeed, facial reactions were faster and autonomic arousal was higher for unseen than for seen stimuli. This effect was not unspecific (e.g., a generalized speeding up of facial reactions in both muscles irrespective of stimulus valence) but was selective for the emotion displayed by the stimuli, arising for the ZM in response to happy expressions and for the CS in response to fearful expressions. Likewise, enhanced pupillary dilatation for unseen as compared to seen stimuli was specific to fearful expressions.

Evidence that affective reactions unfold even more rapidly and intensely when induced by a stimulus of which the subject is not aware is in line with data showing that emotional responses may be stronger when triggered by causes that remain inaccessible to introspection (22). These temporal differences are also consistent with the neurophysiological properties of the partly different pathways thought to sustain conscious vs. nonconscious emotional processing. Indeed, conscious emotional evaluation involves detailed perceptual analysis in cortical visual areas before the information is relayed to emotion-sensitive structures such as the amygdala or the orbitofrontal cortex (6). Nonconscious emotional processing, on the other hand, is carried out by a subcortical route that rapidly conveys coarse information to the amygdala via the superior colliculus and pulvinar, thereby bypassing primary sensory cortices (4, 5, 23).

The Nature of Emotional Contagion.

To what extent do emotional contagion responses originate from motor mimicry or rather are induced by an emotional state in the observer contingent upon visual detection of the stimulus valence? The present results plead in favor of the second alternative for the following reasons.

First, facial and bodily expressions evoked very similar facial reactions in the observers. This convincingly demonstrates that the patients did not simply match the muscular pattern observed in the stimuli, but rather resonated to their affective meaning with relative independence from the information-carrier format. According to the direct motor-matching hypothesis (2), we should have detected no facial reactions in response to bodily expressions, because no simple or direct motor correspondence exists between facial muscles responsible for engendering an expressive reaction in the observer's face, on the one hand, and arm/trunk muscles that convey emotional information in the projected body postures, on the other. Admittedly, it is possible that facial reactions arise in response to bodily expressions because of a filling-in process that adds to the body stimulus the missing facial information. Nevertheless, this possibility would not provide an argument in favor of the motor account of emotional contagion, because it would indicate that an observed expressive action is simulated only after its meaning has been previously evaluated.

Second, unseen face and body stimuli triggered emotion-specific expressive responses. As discussed above, these highly automatic reactions fit well with current evidence that phylogenetically ancient structures are able to attribute emotional value to environmental stimuli and to initiate appropriate responses toward them even in the absence of stimulus awareness and visual input from the cortex (4, 6). Although mimicry of nonemotional expressions may also unfold spontaneously, as in contagious yawning or in the orofacial imitation of adult facial gestures by newborn infants, these phenomena typically occur under conditions of normal visibility of the eliciting stimuli (24, 25). Therefore, motor resonance seems to exhibit a lesser degree of automaticity than emotional processing, as it is sensitive to attentional load and visual awareness (26). Since perceptual consciousness of the stimulus was prevented in our patients by damage to the visual cortex, motor resonance is unlikely to explain emotional contagion responses, at least for stimuli projected to the cortically blind field.

Lastly, in addition to expressive facial reactions, we have been able to show arousal responses induced by the presentation of faces and bodies alike. This physiological component is a typical marker of ongoing emotional responses and was found to covary with the affective valence of the stimuli, being higher for fearful than for happy expressions (16, 18). A comparison of the temporal profiles of facial and pupillary reactions reveals that the two parameters have a closely similar unfolding over time, both peaking at around 1,100 ms from stimulus exposure. This temporal coincidence between expressive motor reactions and arousal responses is consistent with the notion that detection of emotional signals activates affect programs of old evolutionary origin that encompass integrated and simultaneous outputs like expressive behaviors, action tendencies, and autonomic changes (13, 27). The processing sequence envisaged by motor theories of emotional contagion, on the other hand, predicts an initial nonemotional mimicry, possibly carried out by the cortical mirror-neurons system, and a subsequent emotional response when the information is transferred via the insula to subcortical limbic areas processing its emotional significance (2). Even though our data were acquired with a temporal resolution of the order of milliseconds, there was no indication of such sequencing between facial and pupillary responses in the patients.

Of course, although our findings show that emotional contagion represents an instance of truly affective reactions in the observer that is neither reducible to nor dependent on motor resonance, they do not rule out the possible involvement of nonaffective imitative components. For instance, motor resonance responses may take place at later stages and under specific contextual conditions to modulate emotional contagion (28). The present data provide some evidence that this might be the case. Indeed, facial reactions were more intense in response to facial than to bodily expressions, but only under conditions of conscious stimulus perception. Importantly, however, expressive responses were faster for nonconscious stimuli, and in the latter case no difference between faces and bodies was found. This suggests that motor resonance may facilitate emotional contagion responses but critically depends on perceptual awareness and cortical vision, and comes into play only after nonconscious perceptual structures that bypass visual cortex have evaluated the affective valence of the stimuli.

Emotion Recognition, Affective Blindsight, and Conscious Experience.

A longstanding issue in affective blindsight is which stimulus categories or attributes can be processed in the absence of cortical vision and awareness to enable emotion recognition (29). The initial reports used facial expressions and affective pictures, with positive results for the former and negative results for the latter, thereby suggesting a special status for faces in conveying nonconscious emotional information (7–9). The present results show that affective blindsight also arises for bodily expressions, consistent with our previous findings that the same body stimuli may be processed implicitly in patients with hemispatial neglect (30). D.B. and G.Y. were in fact both able to discriminate correctly the emotional valence of unseen facial as well as bodily expressions in a 2AFC task. Overall, the findings indicate that nonconscious recognition of emotions in blindsight is not specific to faces but rather to biologically primitive expressions at relatively low spatial frequencies.

To what extent is affective blindsight really affective? In principle, nonconscious discrimination of emotional expressions may not differ from discrimination of complex neutral images of animals or objects (31). The phenomenon might thus be confined to the visual domain and reduced to a specific instance of shape recognition. The present findings, however, provide direct evidence that this is not the case. Although the patients had no conscious experience of the stimuli presented to their blind field, they were actually in an emotional state, as shown by the presence of expressive and arousal responses typical of emotions. The presence of somatic changes that go beyond activity in the visual system is relevant to understanding the process that leads blindsight patients to guess correctly the emotion of stimuli they do not see. It is possible that the patients unwittingly “sense” the somatic changes elicited by the stimulus and use them as a guide when deciding which emotion is displayed. This surmise is reminiscent of the facial-feedback hypothesis (32) or of more elaborate theories about the relationship between bodily changes and emotion understanding, such as the somatic-marker hypothesis (33). It is also consistent with previous reports showing that patients with visual agnosia, who are unable to discriminate consciously between different line orientations or shapes, may nevertheless use kinesthetic information from bodily actions to compensate for their visuoperceptive deficits (34). This issue clearly deserves further study, and future work should address the affective resonance and influence exerted by unseen emotions over conscious experience and intentional behavior toward the normally perceived world.

Materials and Methods

Subjects.

D.B. is a 69-year-old male patient who underwent surgical removal of his right medial occipital lobe at age 33 because of an arteriovenous malformation, giving rise to a left homonymous hemianopia that, 44 months postoperatively, contracted to a left inferior quadrantanopia (35).

G.Y. is a 53-year-old male patient with right homonymous hemianopia following damage to his left medial occipital lobe at age 7 due to a traumatic brain injury; see ref. 36 for a recent structural and functional description of G.Y.'s lesion and outcomes.

Both patients gave informed consent to participate in the study.

Stimuli and Procedure.

Face stimuli were modified from Ekman's series (37), and body stimuli were modified from the set developed by de Gelder et al. (11). As in the original set of stimuli, facial information was removed from body images by blurring. The whole set used in the present study consisted of 32 gray-scale images (16 faces, half expressing happiness and half fear, and 16 whole-body postures, half expressing happiness and half fear). All stimuli subtended a visual angle of 8° × 10.5° at a viewing distance of 50 cm from the screen of a 21-in CRT monitor and had a mean luminance of 15 cd/m².
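The angular sizes above translate into on-screen dimensions through standard viewing geometry. The sketch below is an illustration, not a reported calculation: it uses the full-angle formula 2·d·tan(θ/2) for a stimulus centered on the line of sight (an approximation for the eccentric stimuli used here) and d·tan(θ) for the 7° offset from fixation; the helper names are hypothetical.

```python
import math

VIEWING_DISTANCE_CM = 50.0  # viewing distance reported above


def extent_cm(angle_deg, distance_cm=VIEWING_DISTANCE_CM):
    """Size of a stimulus, centred on the line of sight, that subtends angle_deg."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


def offset_cm(eccentricity_deg, distance_cm=VIEWING_DISTANCE_CM):
    """On-screen distance from fixation of a point at the given eccentricity."""
    return distance_cm * math.tan(math.radians(eccentricity_deg))


width, height = extent_cm(8.0), extent_cm(10.5)
print(f"{width:.2f} x {height:.2f} cm; 7 deg offset = {offset_cm(7.0):.2f} cm")
```

Under these assumptions the images measure roughly 7.0 × 9.2 cm, with their centers about 6.1 cm below and to the side of fixation.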

The stimuli were projected singly for 2,000 ms to the lower visual quadrants of the patients, 7° below and 7° to the left or right of a fixation cross (1.26°), against a dark background (20 cd/m²). Because in recent years patient D.B. has reported visible after-images of the stimuli when they were turned off (38), particular care was taken to avoid this phenomenon in the present study. Accordingly, the mean luminance of the visual stimuli was kept darker than the background luminance, a procedure that had proved effective in preventing after-images in D.B. The stimuli were projected only if the patients were fixating the cross and were immediately removed from the screen if the patients shifted their gaze during presentation. In case of unsteady fixation during stimulus presentation, the trial was automatically discarded and replaced by a new one presented at the end of the block. Under these conditions, the patients never reported the presence of a stimulus in their blind field or any associated conscious visual feeling such as after-images, and they judged their recognition performance to be “at chance” and “blind guessing.”
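The fixation-contingent replacement rule can be sketched as a simple queue: a trial broken by unsteady fixation is discarded and the same condition is re-presented at the end of the block. This is an illustrative sketch only. `fixation_held` is a hypothetical stand-in for the eye tracker's online check, re-presenting the same condition is our reading of "replaced by a new one", and the `max_attempts` safeguard is an added assumption.

```python
from collections import deque


def run_block(trials, fixation_held, max_attempts=10):
    """Present trials in order; a trial with unsteady fixation is repeated at block end.

    trials: list of trial labels for one block.
    fixation_held: hypothetical callable(trial, attempt) -> bool reporting whether
        gaze stayed on the cross for the whole exposure.
    Returns the trials in the order in which they were validly completed.
    """
    queue = deque(enumerate(trials))  # index keeps repeated labels distinct
    attempts = {}
    completed = []
    while queue:
        idx, trial = queue.popleft()
        attempts[idx] = attempts.get(idx, 0) + 1
        if attempts[idx] > max_attempts:
            raise RuntimeError(f"trial {idx} never completed with steady fixation")
        if fixation_held(trial, attempts[idx]):
            completed.append(trial)
        else:
            queue.append((idx, trial))  # discard this presentation, re-queue at the end
    return completed
```

For example, if fixation breaks on the first presentation of trial "b" in the block ["a", "b", "c"], the completed order becomes ["a", "c", "b"].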

Faces and bodies were presented in four alternating blocks, either to the intact or blind visual field. Within each block, happy and fearful expressions were randomly intermingled and separated by an interstimulus interval (ISI) of 5 s. Overall, this resulted in eight different conditions [two emotions (happiness and fear) × two types of expressions (face and body) × two visual fields (intact and blind)], each including 32 trial repetitions.
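The counts above can be checked with a small schedule builder: four blocks (expression type × visual field), each mixing 32 happy and 32 fearful trials in random order, for 8 conditions × 32 trials = 256 trials in total. This is a hypothetical sketch. The block order is an assumed alternation, and cycling each emotion's 8 images four times to reach 32 trials is inferred from the stimulus counts rather than stated in the text.

```python
import random

EMOTIONS = ("happy", "fear")
REPS_PER_CONDITION = 32
IMAGES_PER_EMOTION = 8  # 8 happy + 8 fearful images per expression type

# Hypothetical block order in which expression type and visual field alternate
BLOCK_ORDER = [("face", "intact"), ("body", "blind"), ("face", "blind"), ("body", "intact")]


def build_session(block_order=BLOCK_ORDER, seed=0):
    """Return a list of blocks; each trial is (emotion, expression, field, image_id)."""
    rng = random.Random(seed)
    session = []
    for expression, field in block_order:
        block = [
            (emotion, expression, field, rep % IMAGES_PER_EMOTION)  # each image shown 4 times
            for emotion in EMOTIONS
            for rep in range(REPS_PER_CONDITION)
        ]
        rng.shuffle(block)  # happy and fearful trials randomly intermingled
        session.append(block)
    return session


session = build_session()
print(len(session), [len(block) for block in session])  # 4 blocks of 64 trials
```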

EMG Recording and Data Reduction.

Facial EMG was recorded with bipolar surface Ag/AgCl electrodes placed over the CS and the ZM on both sides of the face, following the guidelines of Fridlund and Cacioppo (15). The EMG signal was sampled at 1,024 Hz and band-pass filtered (20–500 Hz). The data were then segmented into 3,000 ms epochs, separately for each condition and muscle region, each epoch comprising 1,000 ms of prestimulus baseline and 2,000 ms of stimulus exposure; the epochs were full-wave rectified and integrated (time constant = 20 ms). For each trial, a baseline EMG magnitude, corresponding to the tonic level of muscle activity, was calculated by averaging the activity recorded during the 1,000 ms preceding stimulus onset. Phasic EMG responses were scored and averaged over 100 ms intervals starting from stimulus onset (20 time bins in total) and expressed as μV of change from baseline by subtracting the mean baseline EMG signal from all subsequent samples. Finally, a mean EMG response-from-baseline waveform was obtained for each patient and condition by averaging the values at each time point across trials.
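The reduction steps above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' pipeline: the band-pass stage is assumed to have been applied already (for example with `scipy.signal.butter` and `scipy.signal.filtfilt`, not shown), integration with a 20 ms time constant is modeled as a first-order leaky (RC-type) integrator, and all function names are hypothetical. One practical detail is that 100 ms is not a whole number of samples at 1,024 Hz (it is 102.4 samples), so each sample is assigned to a bin from its time stamp.

```python
import numpy as np

FS_HZ = 1024        # EMG sampling rate (Hz), as reported
BASELINE_MS = 1000  # prestimulus baseline
POST_MS = 2000      # stimulus exposure
BIN_MS = 100        # scoring interval
TAU_S = 0.020       # integration time constant (20 ms)


def rectify_and_integrate(x, fs=FS_HZ, tau=TAU_S):
    """Full-wave rectify, then smooth with a first-order leaky integrator.

    Assumes `x` has already been band-pass filtered (20-500 Hz).
    """
    rect = np.abs(np.asarray(x, dtype=float))
    alpha = 1.0 - np.exp(-1.0 / (fs * tau))  # weight of each new sample
    out = np.empty_like(rect)
    acc = rect[0]  # start the integrator at the first rectified value
    for i, value in enumerate(rect):
        acc += alpha * (value - acc)
        out[i] = acc
    return out


def binned_change_from_baseline(epoch, fs=FS_HZ):
    """Subtract the mean 1,000 ms baseline and average the 2,000 ms
    post-onset signal in 100 ms bins (20 values)."""
    epoch = np.asarray(epoch, dtype=float)
    n_base = fs * BASELINE_MS // 1000   # 1,024 samples
    n_post = fs * POST_MS // 1000       # 2,048 samples
    baseline = epoch[:n_base].mean()    # tonic activity for this trial
    post = epoch[n_base:n_base + n_post] - baseline
    # Bin index from integer time stamps: sample i falls at i * 1000 / fs ms.
    bin_idx = (np.arange(n_post) * 1000) // (fs * BIN_MS)
    return np.bincount(bin_idx, weights=post) / np.bincount(bin_idx)


def mean_waveform(raw_epochs):
    """Average the binned response-from-baseline values across trials."""
    per_trial = [binned_change_from_baseline(rectify_and_integrate(e)) for e in raw_epochs]
    return np.mean(per_trial, axis=0)


# Usage with synthetic noise: 32 trials of 3,000 ms each.
rng = np.random.default_rng(0)
epochs = [rng.normal(0.0, 1.0, FS_HZ * 3) for _ in range(32)]
print(mean_waveform(epochs).shape)  # (20,)
```

The per-sample loop keeps the integrator explicit; with SciPy available, the same recursion could be written as a one-pole `scipy.signal.lfilter` call.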

Eye Movements Control, Pupillometric Recording, and Data Reduction.

Eye movements and pupil diameter were monitored with an infrared camera (RED-III pan tilt) connected to an eye-tracking system that analyzed monocular pupil and corneal reflection online (sampling rate 50 Hz). Minor artifacts were corrected by linear interpolation, and a five-point smoothing filter was then applied to the data. The artifact-free, smoothed pupillary data were segmented into 3,000 ms epochs, each including 1,000 ms of prestimulus period and 2,000 ms after stimulus onset, separately for each patient and condition. For each trial, a baseline pupil diameter was calculated by averaging the samples recorded during the 1,000 ms preceding stimulus onset. The data were then expressed as differences from baseline by subtracting the mean baseline pupil diameter from all subsequent samples. Finally, a mean pupillary response-from-baseline waveform was obtained for each condition by averaging the values at each time point across trials.
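A comparable sketch for the pupil data follows, again in Python with NumPy and with hypothetical function names. It assumes that artifact samples have already been flagged as NaN, interprets the five-point smoothing filter as a centred moving average, and, for brevity, applies interpolation and smoothing to each 3,000 ms epoch (150 samples at 50 Hz) rather than to the continuous recording as described above.

```python
import numpy as np

FS_EYE_HZ = 50      # eye-tracker sampling rate (Hz), as reported
BASELINE_MS = 1000  # prestimulus period


def interpolate_artifacts(x):
    """Replace artifact samples (assumed flagged as NaN) by linear interpolation."""
    x = np.asarray(x, dtype=float).copy()
    bad = np.isnan(x)
    if bad.any():
        idx = np.arange(x.size)
        x[bad] = np.interp(idx[bad], idx[~bad], x[~bad])
    return x


def smooth_5pt(x):
    """Centred five-point moving average; edges padded with the end values."""
    padded = np.pad(np.asarray(x, dtype=float), 2, mode="edge")
    return np.convolve(padded, np.ones(5) / 5.0, mode="valid")


def pupil_change_from_baseline(epoch, fs=FS_EYE_HZ):
    """Post-onset pupil trace minus the mean diameter of the baseline period."""
    clean = smooth_5pt(interpolate_artifacts(epoch))
    n_base = fs * BASELINE_MS // 1000  # 50 samples at 50 Hz
    return clean[n_base:] - clean[:n_base].mean()


def mean_pupil_waveform(epochs):
    """Average the baseline-corrected traces across trials, point by point."""
    return np.mean([pupil_change_from_baseline(e) for e in epochs], axis=0)


# Usage: a flat 3,000 ms epoch (150 samples) with a short artifact gap.
trace = np.full(150, 4.0)
trace[60:63] = np.nan
print(pupil_change_from_baseline(trace).shape)  # (100,)
```

Edge padding keeps the smoothed trace the same length as the input, so the 50-sample baseline and 100-sample post-onset segments stay aligned with stimulus onset.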


Acknowledgments.

This study is dedicated to the precious memory of our dear friend and colleague Luca Latini Corazzini, whose assistance during the testing sessions was vital to this work. We thank D.B. and G.Y. for their patient collaboration and J. LeDoux and G. Berlucchi for valuable and constructive comments. Thanks are also due to A. Avenanti, C. Sinigaglia, and C. Urgesi for helpful discussions. M.T. was supported by a Veni grant (451-07-032) from the Netherlands Organization for Scientific Research and partly by the Fondazione Carlo Molo. B.d.G. was supported by a Communication with Emotional Body Language (COBOL) grant from EU FP6-2005-NEST-Path and by the Netherlands Organization for Scientific Research. G.G., L.C., and P.P. were supported by PRIN 2007 funding from the Italian Ministry for University Education and Research and by the Fondazione Carlo Molo. L.W. was supported by the McDonnell Oxford Cognitive Neuroscience Centre.

Footnotes

The authors declare no conflict of interest.

This article contains supporting information online at www.pnas.org/cgi/content/full/0908994106/DCSupplemental.

References

- 1. Hatfield E, Cacioppo JT, Rapson RL. Emotional Contagion. Cambridge, UK: Cambridge Univ Press; 1994.

- 2. Carr L, Iacoboni M, Dubeau MC, Mazziotta JC, Lenzi GL. Neural mechanisms of empathy in humans: A relay from neural systems for imitation to limbic areas. Proc Natl Acad Sci USA. 2003;100:5497–5502. doi: 10.1073/pnas.0935845100.

- 3. Gallese V, Keysers C, Rizzolatti G. A unifying view of the basis of social cognition. Trends Cogn Sci. 2004;8:396–403. doi: 10.1016/j.tics.2004.07.002.

- 4. Morris JS, de Gelder B, Weiskrantz L, Dolan RJ. Differential extrageniculostriate and amygdala responses to presentation of emotional faces in a cortically blind field. Brain. 2001;124:1241–1252. doi: 10.1093/brain/124.6.1241.

- 5. Morris JS, Ohman A, Dolan RJ. A subcortical pathway to the right amygdala mediating “unseen” fear. Proc Natl Acad Sci USA. 1999;96:1680–1685. doi: 10.1073/pnas.96.4.1680.

- 6. LeDoux JE. The Emotional Brain. New York, NY: Simon & Schuster; 1996.

- 7. de Gelder B, Vroomen J, Pourtois G, Weiskrantz L. Non-conscious recognition of affect in the absence of striate cortex. Neuroreport. 1999;10:3759–3763. doi: 10.1097/00001756-199912160-00007.

- 8. Pegna AJ, Khateb A, Lazeyras F, Seghier ML. Discriminating emotional faces without primary visual cortices involves the right amygdala. Nat Neurosci. 2005;8:24–25. doi: 10.1038/nn1364.

- 9. de Gelder B, Pourtois G, Weiskrantz L. Fear recognition in the voice is modulated by unconsciously recognized facial expressions but not by unconsciously recognized affective pictures. Proc Natl Acad Sci USA. 2002;99:4121–4126. doi: 10.1073/pnas.062018499.

- 10. Urgesi C, Candidi M, Fabbro F, Romani M, Aglioti SM. Motor facilitation during action observation: Topographic mapping of the target muscle and influence of the onlooker's posture. Eur J Neurosci. 2006;23:2522–2530. doi: 10.1111/j.1460-9568.2006.04772.x.

- 11. de Gelder B, Snyder J, Greve D, Gerard G, Hadjikhani N. Fear fosters flight: A mechanism for fear contagion when perceiving emotion expressed by a whole body. Proc Natl Acad Sci USA. 2004;101:16701–16706. doi: 10.1073/pnas.0407042101.

- 12. Moody EJ, McIntosh DN, Mann LJ, Weisser KR. More than mere mimicry? The influence of emotion on rapid facial reactions to faces. Emotion. 2007;7:447–457. doi: 10.1037/1528-3542.7.2.447.

- 13. de Gelder B. Towards the neurobiology of emotional body language. Nat Rev Neurosci. 2006;7:242–249. doi: 10.1038/nrn1872.

- 14. Dimberg U. Facial reactions to fear-relevant and fear-irrelevant stimuli. Biol Psychol. 1986;23:153–161. doi: 10.1016/0301-0511(86)90079-7.

- 15. Fridlund AJ, Cacioppo JT. Guidelines for human electromyographic research. Psychophysiology. 1986;23:567–589. doi: 10.1111/j.1469-8986.1986.tb00676.x.

- 16. Harrison NA, Singer T, Rotshtein P, Dolan RJ, Critchley HD. Pupillary contagion: Central mechanisms engaged in sadness processing. Soc Cogn Affect Neurosci. 2006;1:5–17. doi: 10.1093/scan/nsl006.

- 17. Barbur JL. In: The Visual Neurosciences. Chalupa LM, Werner JS, editors. Cambridge, MA: MIT Press; 2004. pp. 641–656.

- 18. Levenson RW. Blood, sweat, and fears: The autonomic architecture of emotion. Ann NY Acad Sci. 2003;1000:348–366. doi: 10.1196/annals.1280.016.

- 19. Dimberg U, Thunberg M, Elmehed K. Unconscious facial reactions to emotional facial expressions. Psychol Sci. 2000;11:86–89. doi: 10.1111/1467-9280.00221.

- 20. Pessoa L, Japee S, Ungerleider LG. Visual awareness and the detection of fearful faces. Emotion. 2005;5:243–247. doi: 10.1037/1528-3542.5.2.243.

- 21. Tamietto M, de Gelder B. Affective blindsight in the intact brain: Neural interhemispheric summation for unseen fearful expressions. Neuropsychologia. 2008;46:820–828. doi: 10.1016/j.neuropsychologia.2007.11.002.

- 22. Winkielman P, Berridge KC. Unconscious emotion. Curr Dir Psychol Sci. 2004;13:120–123.

- 23. LeDoux JE. In: Frontiers of Consciousness. Weiskrantz L, Davies MS, editors. Oxford, UK: Oxford University Press; 2008. pp. 76–86.

- 24. Meltzoff AN, Moore MK. Newborn infants imitate adult facial gestures. Child Dev. 1983;54:702–709.

- 25. Platek SM, Critton SR, Myers TE, Gallup GG. Contagious yawning: The role of self-awareness and mental state attribution. Brain Res Cogn Brain Res. 2003;17:223–227. doi: 10.1016/s0926-6410(03)00109-5.

- 26. Chong TT, Cunnington R, Williams MA, Mattingley JB. The role of selective attention in matching observed and executed actions. Neuropsychologia. 2009;47:786–795. doi: 10.1016/j.neuropsychologia.2008.12.008.

- 27. Frijda NH. The Laws of Emotion. Mahwah, NJ: Lawrence Erlbaum Associates; 2007.

- 28. Kilner JM, Marchant JL, Frith CD. Modulation of the mirror system by social relevance. Soc Cogn Affect Neurosci. 2006;1:143–148. doi: 10.1093/scan/nsl017.

- 29. Heywood CA, Kentridge RW. Affective blindsight? Trends Cogn Sci. 2000;4:125–126. doi: 10.1016/s1364-6613(00)01469-8.

- 30. Tamietto M, Geminiani G, Genero R, de Gelder B. Seeing fearful body language overcomes attentional deficits in patients with neglect. J Cogn Neurosci. 2007;19:445–454. doi: 10.1162/jocn.2007.19.3.445.

- 31. Trevethan CT, Sahraie A, Weiskrantz L. Form discrimination in a case of blindsight. Neuropsychologia. 2007;45:2092–2103. doi: 10.1016/j.neuropsychologia.2007.01.022.

- 32. Tomkins SS. Affect, Imagery, Consciousness. New York, NY: Springer; 1962–1963.

- 33. Damasio AR. Descartes' Error: Emotion, Reason, and the Human Brain. New York, NY: G.P. Putnam's Sons; 1994.

- 34. Murphy KJ, Racicot CI, Goodale MA. The use of visuomotor cues as a strategy for making perceptual judgments in a patient with visual form agnosia. Neuropsychology. 1996;10:396–401.

- 35. Weiskrantz L. Blindsight: A Case Study Spanning 35 Years and New Developments. Oxford, UK: Oxford University Press; 2009.

- 36. Tamietto M, et al. Collicular vision guides nonconscious behavior. J Cogn Neurosci. 2009 Mar 25; doi: 10.1162/jocn.2009.21225.

- 37. Ekman P, Friesen W. Pictures of Facial Affect. Palo Alto, CA: Consulting Psychologists Press; 1976.

- 38. Weiskrantz L, Cowey A, Hodinott-Hill I. Prime-sight in a blindsight subject. Nat Neurosci. 2002;5:101–102. doi: 10.1038/nn793.
