Abstract

We propose and study a unified procedure for variable selection in partially linear models. A new type of double-penalized least squares is formulated, using the smoothing spline to estimate the nonparametric part and applying a shrinkage penalty on parametric components to achieve model parsimony. Theoretically we show that, with proper choices of the smoothing and regularization parameters, the proposed procedure can be as efficient as the oracle estimator (Fan and Li, 2001). We also study the asymptotic properties of the estimator when the number of parametric effects diverges with the sample size. Frequentist and Bayesian estimates of the covariance and confidence intervals are derived for the estimators. One great advantage of this procedure is its linear mixed model (LMM) representation, which greatly facilitates its implementation by using standard statistical software. Furthermore, the LMM framework enables one to treat the smoothing parameter as a variance component and hence conveniently estimate it together with other regression coefficients. Extensive numerical studies are conducted to demonstrate the effective performance of the proposed procedure.

Keywords: Key words and phrases: Semiparametric regression, Smoothing splines, Smoothly clipped absolute deviation, Variable selection

1. Introduction

Partially linear models are popular semiparametric modeling techniques which assume the mean response of interest to be linearly dependent on some covariates, whereas its relation to other additional variables are characterized by nonparametric functions. In particular, we consider a partially linear model Y = XT β + f(T) + ε, where X are explanatory variables of primary interest, β are regression parameters, f(·) is an unknown smooth function of the auxiliary covariate T, and the errors are uncorrelated. This model is a special case of general additive models (Hastie and Tibshirani, 1990). Estimation of β and f has been studied in various contexts including kernel smoothing (Speckman, 1998), smoothing splines (Engle et al., 1986; Heckman, 1986; Wahba, 1990; Green and Silverman, 1994; Gu, 2002), and penalized splines (Ruppert et al., 2003; Liang, 2006).

Often times, the number of potential explanatory variables, d, is large, but only a subset of them are predictive to the response. Variable selection is necessary to improve prediction accuracy and model interpretability of final models. In this paper, we treat f(T) as a nuisance effect and mainly focus on automatic selection, estimation and inferences for important linear effects in the presence of T. For linear models, numerous variable selection methods have been developed such as stepwise selection, best subset selection, and shrinkage methods like nonnegative garrote (Breiman, 1995), least absolute selection and shrinkage operator (LASSO; Tibshirani, 1996), smoothly clipped absolute deviation (SCAD; Fan and Li, 2001), least angle regression (Efron et al., 2004), adaptive lasso (Zou, 2006; Zhang and Lu, 2006). Information criteria commonly used for model comparison include Mallows Cp (Mallows, 1973), Akaike’s Information Criteria (Akaike, 1973) and Bayesian Information Criteria (BIC; Schwarz, 1978). A thorough review on variable selection for linear models is given in Linhart and Zucchini (1986) and Miller (2002).

Though there is a vast amount of work on variable selection for linear models, limited work has been done on model selection for partially linear models as noted in Fan and Li (2004). Model selection for partially linear models is challenging, since it consists of several interrelated estimation and selection problems: nonparametric estimation, smoothing parameter selection, and variable selection and estimation for linear covariates. Fan and Li (2004) has done some pioneering work in this area. In the framework of kernel smoothing, Fan and Li (2004) proposed an effective kernel estimator for nonparametric function estimation while using the SCAD penalty for variable selection; they were among the first to extend the shrinkage selection idea to partially linear models. Bunea (2004) proposed a class of sieve estimators based on penalized least squares for semiparametric model selection, and established the consistency property of their estimator. Bunea and Wegkamp (2004) suggested another two-stage estimation procedure and proved that the estimator is minimax adaptive under some regularity conditions. Recently, variable selection for high dimensional data, either d diverges with n or d > n, has been actively studied. Fan and Peng (2004) established asymptotic properties of the nonconcave penalized likelihood estimators for linear model variable selection when d increases with the sample size. Xie and Huang (2007) studied the SCAD-penalized regression for partially linear models for high dimensional data, where polynomial regression splines are employed for model estimation.

In this work, we propose a new regularization approach for model selection in the context of partially smoothing spline models and study its theoretical and computational properties. As we show in the paper, the elegant smoothing spline theory and formulation can be used to develop a simple yet effective procedure for joint function estimation and variable selection. Inspired by Fan and Li (2004), we adopt the SCAD penalty for model parsimony due to its nice theoretical properties. We will show that the new estimator has the oracle property if both smoothing and regularization parameters are chosen properly as n → ∞, when the dimension d is fixed. In the more challenging case when dn → ∞ as n→ ∞, the estimator is shown to be -consistent and be able to select important variables correctly with probability tending to one. In addition to these desired asymptotic properties, the new approach also has advantages in computation and parameter estimation. It naturally owns a linear mixed model (LMM) representation, which allows one to take advantage of standard software and implement it without much extra programming effort. This LMM framework further facilitates the process of tuning multiple parameters: the smoothing parameter in the roughness penalty and the regularization parameter associated with the shrinkage penalty. In our work, the smoothing parameter is treated as an additional variance component and estimated jointly with the residual variance using the restricted maximum likelihood (REML) approach, and therefore a two-dimensional grid search can be avoided. We also show that the local quadratic approximation (LQA; Fan and Li, 2001) technique used for computation provides us a convenient and robust sandwich formula for standard errors of the resulting estimates.

The rest of the article is organized as follows. In Section 2 we propose the double penalized least squares method for joint variable selection and model estimation, and establish the asymptotic properties of the resulting estimator β̂. We further study the large-sample properties of the estimator, such as the estimation consistency and variable selection consistency, in situations when the input dimension increases with the sample size n. In Section 3 we suggest a linear mixed model (LMM) representation for the proposed procedure, which leads to an iterative algorithm with easy implementation. We also discuss how to select the tuning parameters. In Section 4, we derive the covariance estimates for β̂ and f̂, from both Frequentist and Bayesian perspectives. Sections 5 and 6 present simulation results and a real data application. Section 7 concludes the article with a discussion.

2. Double-Penalized Least Squares Estimators and Their Asymptotics

2.1. Double-Penalized Least Squares Estimators

Suppose that the sample consists of n observations. For the ith observation, denote by yi the response, by xi the covariate vector from which important covariates are to be selected, and by ti the covariate whose effect cannot be adequately characterized by a parametric function. We consider the following partially linear model:

| (2.1) |

where β is a d×1 vector of regression coefficients, f(t) is an arbitrary twice-differentiable smooth function, and εi’s are assumed to be uncorrelated random variables with mean zero and a common unknown variance σ2. Define Y = (y1, ···, yn)T. Without loss of generality, we further assume that ti ∈[0, 1] and f (t) is in the Sobolev space {f (t): f, f′ are absolutely continuous, and J2(f) < ∞}, where .

To simultaneously achieve the estimation of the nonparametric function f(t) and the selection of important variables, we propose a double-penalized least squares (DPLS) approach by minimizing

| (2.2) |

The first penalty term in (2.2) penalizes the roughness of the nonparametric fit f(t) and the second penalty pλ2 (|βj|) is the shrinkage penalty on βj ‘s. To our best knowledge, there has been little work on the DPLS in literature. We call the minimizer of (2.2) double-penalized least squares estimators (DPLSEs). There are two tuning parameters in (2.2): λ1 ≥ 0 is a smoothing parameter which balances smoothness of f(t) with fidelity to data, and λ2 ≥ 0 is a regularization parameter controlling the amount of shrinkage used in the variable selection. Choices of tuning parameters are very important to assure effective model selection and estimation, which will be discussed later. In the DPLS (2.2), we adopt the nonconcave SCAD penalty proposed by Fan and Li (2001), which is a piecewise quadratic function and satisfies

| (2.3) |

where a > 2 is also a tuning parameter. Fan and Li (2001) showed that the SCAD penalty function results in consistent, sparse and continuous estimators in linear models.

2.2 Asymptotic Theory: d fixed

First we lay out regularity conditions on xi, ti and ε which are necessary for the theoretical results. Denote the true coefficients as , where β20 = 0 and β10 consists of all q nonzero components. Assume the uncorrelated random variables εi’s have uniformly bounded absolute third moments. In addition, we assume that x1, …, xn are independently and identically distributed with mean zero, finite positive definite covariance matrix R, and that the components of xi have finite third and fourth moments. As in Heckman (1986), we assume that are distinct values in [0, 1] and satisfy , where u(·) is a continuous and strictly positive function independent of n.

Define X = (x1, …, xn)T, ε = (ε1, …, εn)T and f = (f(t1), …, f(tn))T. The partially linear model (2.1) can then be expressed as Y = Xβ + f + ε. It can be shown that for given λ1 and λ2, minimizing the DPLS (2.2) leads to a smoothing spline estimate for f(·). Hence by theorem (2.1) in Green and Silverman (1994), we can rewrite the DPLS (2.2) as

| (2.4) |

where K is the nonnegative definite smoothing matrix defined by Green and Silverman (1994). Given λ1, λ2, and β, the DPLS minimizer of (2.4) is given by f̂(β) = (I + nλ1K)−1(Y − Xβ), where A(λ1) = (I + nλ1K)−1 is equivalent to the linear smoother matrix in Craven and Wahba (1979) and Heckman (1986). Plugging f̂(β) into (2.4), we obtain a penalized profile least squares only of β:

We call the quadratic term in Q(β) as the profile least squares and denote it by L(β).

In the following, we establish the asymptotic theory for our estimator in terms of both estimation and variable selection. Proofs of these results involve the second-order Taylor expansion of pλ2 (|β|), and we will adapt the derivations of Fan and Li (2001) to our partially linear model context. Compared to the linear models studied in Fan and Li (2001), the major difficulty here is due to the appearance of the nonparametric component f in (2.1), which can affect the linear estimate β through the smoother matrix A(λ1). In Lemma 1, we first establish some theoretical properties of L(β), which are useful for the proofs of Lemma 2 and Theorems 1 and 2 later in this section.

Lemma 1

Let L′(β0) and L″(β0) be the gradient vector and Hessian matrix of L respectively, evaluated at β0. Assume that Xi are independent and identically distributed with finite fourth moments. If λ1n → 0 and as n → ∞, then

,

.

From Lemma 1, we have n−1/2L′(β0) = Op(1), n−1L″ (β0) = R + op(1) and , where Rjk is the (j, k)th element of R. Using these results, we can prove the root-n consistency of the DPLSE β̂ and its oracle properties. Since the derivations of Theorems 1, 2, and Lemma 2 given in the following are similar to those in Fan and Li (2001), they are omitted in the paper.

Theorem 1

As n → ∞, if λ1n →0, and λ2n → 0, then there exists a local minimizer β̂ of Q(β) such that ||β̂− β0|| = Op(n−1/2).

Theorem 1 says that if we choose proper sequences of λ1n and λ2n as n → ∞, then the DPLSE β̂ is root-n consistent. In the following, we establish through Lemma 2 and Theorem 2 that β̂ can perform as well as the oracle procedure in variable selection.

Lemma 2

As n → ∞, if λ1n → 0, , λ2n → 0, and n1/2λ2n → ∞, then with probability tending to 1, for any β1 which satisfies ||β1 − β10||= O(n−1/2) and any constant C > 0,

Theorem 2

As n → ∞, if λ1n →0, , λ2n → 0, and n1/2→λ2n → ∞, then with probability tending to 1, the local minimizer in Theorem 1 must satisfy:

Sparsity: β̂2 = 0.

Asymptotic normality: , where R11 is the q × q upper-left sub-matrix of R.

2.3 Asymptotic Theory: dn → ∞ as n → ∞

In this section, we study the sampling properties of the DPLSEs in the situation where the number of linear predictors tends to ∞ as the sample size n goes to ∞. Similar to Fan and Peng (2004), we show that under certain regularity conditions, the DPLSEs are -consistent and also consistent in selecting important variables, where dn is the dimension of β to emphasize its dependence on the sample size n. Similarly, we re-define the number of important parametric effects as qn. We write the true regression coefficients as and the DPLSE estimator as . For any square matrix G, denote its smallest eigenvalue and largest eigenvalue respectively by Λmin(G) and Λmax(G). The following are the regularity conditions assumed to facilitate the technical derivations.

- (C1) The elements {βn10, j}’s of βn10 satisfy

(C2) There exist constants c1 and c2 such that

Both conditions above are adopted from Fan and Peng (2004), which is the first work to study the large-sample properties of the non-concave penalized estimators for linear models when the dimension of data diverges with the sample size n. As pointed out by Fan and Peng (2004), the condition (C1) gives the rate at which the penalized estimator can distinguish nonvanishing parameters from 0. Condition (C2) assumes that the R is positive definite and its eigenvalues are uniformly bounded.

Theorem 3

Under the conditions (C1) and (C2), as n → ∞, if λ1n →0, , λ2n →0, and , then there exists a local minimizer β̂n of Q(βn) such that .

Theorem 3 says that if we choose proper sequences of λ1n and λ2n as n → ∞, then the DPLSE β̂n is -consistent. This consistency rate is the same as the result of Fan and Peng (2004), where the number of parameters diverges in linear models. It is also the same as the result of the M-estimator studied by Huber (1973) in the diverging dimension situations. In the next, Theorem 4 shows that β̂n is also consistent in variable selection, i.e, unimportant linear predictors will be estimated as exactly zeros with probability tending to one. All the proofs are given in the Appendix.

Theorem 4

Under the regularity conditions (C1) and (C2), as n → ∞, if λ1n →0, , λ2n →0, , and , then with probability tending to 1, the local minimizer in Theorem 3 must satisfy β̂n2 = 0.

3. Computational Algorithm and Parameter Tuning

We reformulate the DPLS into a linear mixed model (LMM) representation for ease of computation. The LMM allows us to treat the smoothing parameter as a variance component and provides a unified estimation and inferential framework. An iterative algorithm is then outlined.

3.1. Linear Mixed Model (LMM) Representation

Let t = (t1, …, tn)T be the vector of distinct ti’s and f = (f (t1), …, f(tn))T. In the case where there are ties in ti’s, an incidence matrix can be used to cast the DPLS into a linear mixed model framework as in Zhang et al. (1998). The partially linear model (2.1) can then be expressed as

| (3.1) |

If εi’s were normally distributed, then minimizing (2.4) with respect to (β, f) is equivalent to maximizing the double-penalized likelihood

| (3.2) |

where ℓ(β, f; Y) = − (n/2) log σ2 − (Y − Xβ − f)T (Y −Xβ −f)/(2σ2). Following Green (1987), we may write f via a one-to-one linear transformation as f = Tδ + Ba, where T = [1, t], 1 is the vector of 1’s with length n, δ and a are of length 2 and n − 2 respectively, and B = L(LTL)−1 with L being an n × (n − 2) full rank matrix satisfying K = LLT and LT T = 0. It follows that fT Kf = aTa and yields an equivalent double-penalized log-likelihood

| (3.3) |

where X* = [T, X], β* = (δT, βT)T.

For fixed β* (and given λ1,λ2,σ2), (3.3) can be treated as the joint log-likelihood for the following linear mixed model (LMM) subject to the SCAD penalty on β

| (3.4) |

where β* represent fixed effects, and a are random effects with a ~ N(0, τI), τ = 2σ2/(nλ1), and θ = (τ, σ2) are variance components. We then conduct variable selection by maximizing the penalized log-likelihood of β* subject to the SCAD penalty

| (3.5) |

where V = σ2In +τBBT is the variance of Y under mixed model representation (3.4). After selecting important variables and obtaining estimates β̂*, we can use δ̂ and the best linear unbiased prediction (BLUP) estimate â to construct the smoothing spline fit f̂(t). This LMM representation suggests that the inverse of the smoothing parameter τ can be treated as a variance component and hence can be jointly estimated with σ2 using the maximum likelihood or restricted maximum likelihood (REML) approach during the variable selection process under the working distributional assumption that were normal. However, it should be noted that the above mixed model representation is merely a framework convenient for computation. The asymptotic results in Section 2 do not depend on the normal error assumption. Simulation results in Section 5 indicate that our procedure is quite robust to the distributional assumption for εi’s.

The SCAD penalty function defined by (2.3) is not differentiable at the origin, causing difficulty in maximizing (3.5) with gradient based methods such as the Newton-Raphson. Following Fan and Li (2001, 2004), we use a local quadratic approximation (LQA) approach. Assuming is an initial value close to the maximizer of (3.5), we have the following local approximation:

where ξ is a pre-specified threshold.

Using Taylor expansions, we can approximate (3.5) by

| (3.6) |

where . For fixed θ = (τ, σ2)we apply the Newton-Raphson method to maximize (3.6) and get the updating formula

| (3.7) |

It is easy to recognize that (3.7) is equivalent to an iterative ridge regression algorithm.

We propose to alternately estimate (β, f) and (τ, σ2) iteratively. The initial values for β*, τ and σ2 are obtained by the MIXED procedure in SAS to fit the linear mixed model (3.4) with all the covariates. We then use formula (3.7) to iteratively update β̂*. The LMM framework allows us to treat τ = σ2/(nλ1) as an extra variance component based on selected important linear covariates, so that we can estimate it together with the error variance σ2 using the restricted maximum likelihood (REML). There is rich literature on the use of REML to estimate smoothing parameters and variance components (e.g. Wahba, 1985; Speed, 1991; Zhang et al., 1998). For example, Zhang et al. (1998) estimated the smoothing parameter via REML for longitudinal data with a nonparametric baseline function and complex variance structures. The partially linear model (3.1) has a similar form as (2) of Zhang et al. (1998), with only two variance components (τ, σ2), and hence the estimation proceeds similarly.

3.2. Choice of Tuning Parameters

Although the smoothing parameter λ1 (or equivalently τ) is readily estimated in the LMM framework, we still need to estimate the SCAD tuning parameters (λ2, a). To find their optimal values, one common approach could be a two-dimensional grid search using some data-driven criteria, such as CV and GCV (Craven and Wahba, 1979), which can be rather computationally prohibitive. Fan and Li (2001) showed numerically that a = 3.7 minimizes the Bayesian risk and recommended its use in practice. Thus we set a = 3.7 and only tune λ2 in our implementation.

Many selection criteria, such as cross validation (CV), generalized cross validation (GCV), BIC and AIC selection can be used for parameter tuning. Wang et al. (2007) suggested using the BIC for the SCAD estimator in linear models and partially linear models, and proved its model selection consistency property, i.e. the optimal parameter chosen by the BIC can identify the true model with probability tending to one. We will also use the BIC to select the optimal λ2 from a gridded range under working normal distributional assumption for εi.

Given λ2, suppose q variables are selected by the algorithm in Section 3. Let X1 be the sub-matrix of X for the q important variables and β1 be the corresponding q × 1 regression coefficient vector. Then we may use the estimation method of Zhang et al. (1998) to solve the partially linear model (2.1). Consequently Ŷ = SY, where S is a smoother matrix with q1 = trace(S). The BIC criterion is then computed as BIC(λ2) = −2ℓ+ q1 log n, where ℓ = −(n/2) log(2πσ̂2) − (Y−X1β̂1 − f̂)T (Y −X1β̂1 − f̂)/(2σ̂2). For each grid point of λ2, the iterative ridge regression results in a model with a set of important covariates, and we compute the BIC for this selected model. Based on our empirical evidence and the fact that BIC is consistent in selecting correct models under certain conditions (Schwarz, 1978), we chose BIC over GCV for tuning λ2 in our numerical analysis.

4. Frequentist and Bayesian Covariance Estimates

We derive the frequentist and Bayesian covariance formulas for β̂ and f̂ parallel to Sections 3.4 and 3.5 in Zhang et al. (1998), except that we also take into account the bias introduced by the imposed penalty for the variable selection. Using these covariance estimates, we are able to construct confidence intervals for the regression coefficients and the nonparametric function. The proposed covariance estimates are evaluated via simulation in Section 5.

4.1. Frequentist Covariance Estimates

From frequentists’ point of view, cov(Y|t, x) = σ2I, and we can write β̂* = (δ̂T, β̂T)T as an approximately linear function of Y: β̂* = QY. Let , where Q1 and Q2 are partitions of Q with dimensions corresponding to (δT, βT)T, so that δ̂ = Q1Y, and β̂ = Q2Y. The estimated covariance matrix for β̂ is given by

| (4.1) |

where σ̂2 is the estimated error variance. It is easy to show that the empirical BLUP estimate of a is â = Ã(Y − X*β*) = SaY, where Sa= Ã(I−X*Q) and . Therefore f̂ = Tδ̂+ Bâ = (TQ1 + BSa)Y and its covariance

| (4.2) |

4.2. Bayesian Covariance Estimates

The LMM representation in Section 3.1 and (3.3) suggests a prior for f(t) of the form f = Tδ + Ba, with a ~ N(0, τI) and a flat prior for δ. As a prior for β, a reasonable choice appears to be the one with kernel , where Σλ2 is a diagonal matrix defined in Section 3.1. The definition of the SCAD penalty function (2.3) implies that some diagonal elements of the matrix Σλ2 can be zero, corresponding to those coefficients with |βj| > aλ2. Assume after reordering, Σλ2 = diag(0, Σ22), where Σ22 has positive diagonal elelments. It follows that β can be partitioned into , where β1 can be regarded as “fixed” effects and β2 as “random” effects with . The matrix X is partitioned into [X1, X2] accordingly. Now we reformulate the mixed model (3.4) as: Y = Tδ+X1β1 + X2β2 + B*a + ε, or as Y = χγ + Zb + ε, where χ = [T, X1], , Z = [X2, B*] and is the new random effect distributed as b ~ N(0, Σb) with a block diagonal covariance matrix . Under the reformulated linear mixed model, β consists of both fixed and random effects. Therefore the Bayesian covariances for (β̂,f̂) are

| (4.3) |

| (4.4) |

These Bayesian variance estimates can be viewed to account for the bias in β̂ and f̂ due to imposed penalties (Wahba, 1983).

5. Simulation Studies

We conduct Monte Carlo simulation studies to evaluate the finite sampling performance of the proposed DPLS method in terms of both model estimation and variable selection. Furthermore, we compare our procedure with the SCAD and LASSO methods proposed by Fan and Li (2004). In the following, these three methods are respectively referred to as “DPLSE”, “SCAD” and “LASSO”. When implementing Fan and Li (2004), we adopt their approach to choose the kernel bandwidth: first compute the difference based estimator (DBE) for β and then select the bandwidth using the plug-in method of Ruppert et al. (1995). To select the SCAD and LASSO tuning parameters, we tried both BIC and GCV and found that BIC generally gave better performance, so BIC was used for tuning in the SCAD and LASSO.

We simulate the data from a partially linear model y = xT β + f(t) + ε. Adopting the configuration in Tibshirani (1996) and Fan and Li (2001, 2004), we generate the correlated covariates x = (x1, …, x8)T from a standard normal distribution with AR(1) corr(xi, xj) = 0.5|i−j|, and we set the true coefficients β = (3, 1.5, 0, 0, 2, 0, 0, 0)T. Two types of non-normal errors are used to demonstrate that the proposed normal likelihood based REML estimation is robust to the distributional assumption of errors. We compare three methods in a 2 × 2 × 2 factorial experiment. There are two combinations of (f, ε): 1. f1(t) = 4 sin(2πt/4) with ε1 ~ C0t6; 2. f2(t) = 5β(t/20, 11, 5) + 4β(t/20, 5, 11) where , with a mixture normal error ε2 ~ C0 (0.5N (1, 1) + 0.5N (−1, 3)). The scale C0 is chosen such that the error variance is σ2 = 1 or 9. Consider two sample sizes n = 100 and n = 200. The number of observed unique time points ti’s is chosen to be 50 in all the settings.

As in Fan and Li (2004), we use the mean squares error (MSE) for β̂ and f̂ to respectively evaluate goodness-of-fit for parametric and nonparametric estimation. They are defined as MSE(β̂) = E(||β̂− β||2), and . In practice, we compute MSE(f̂) by averaging over the design knots. Under each setting, we carry out 100 Monte Carlo (MC) simulation runs and report the MC sample mean and standard deviation (given in the parentheses) for the MSEs. To evaluate variable selection performance of each method, we report the number of correct zero coefficients (denoted as “Corr.”), the number of coefficients incorrectly set to 0 (denoted as “Inc.”), and the model size. In addition, we report the point estimate, bias, and the 95% coverage probability of frequentist and Bayesian confidence intervals for the DPLSE.

5.1. Overall Model Selection and Estimation Results

Table 5.1 compares three variable selection procedures when σ2 = 1. The DPLSE outperforms other methods in terms of both estimation and variable selection in all scenarios, and SCAD performs better than LASSO. Overall, the DPLSE achieves a sparser model, with both “Corr.” and “Inc.” closer to the oracle (5 & 0 respectively). In our implementation for the SCAD and LASSO, the bandwidth selected using the plug-in method occasionally caused numerical problems and failed to converge. Therefore, the results of SCAD and LASSO are only based on converged cases.

Table 5.1.

Comparison of variable selection procedures (σ2 = 1)a

| Model | Zero coef. | |||||

|---|---|---|---|---|---|---|

| (n, f) | Method | MSE(β̂) | MSE(f̂) | Size (3) | Corr.(5) | Inc.(0) |

| (100, f1) | DPLSE | 0.05 (0.06) | 0.07 (0.04) | 3.22 | 4.78 | 0 |

| SCAD | 0.09 (0.09) | 0.17 (0.07) | 3.39 | 4.61 | 0 | |

| LASSO | 0.10 (0.09) | 0.17 (0.07) | 3.82 | 4.18 | 0 | |

| (100, f2) | DPLSE | 0.06 (0.06) | 0.14 (0.05) | 3.21 | 4.79 | 0 |

| SCAD | 0.08 (0.08) | 0.28 (0.10) | 3.31 | 4.69 | 0 | |

| LASSO | 0.13 (0.10) | 0.29 (0.11) | 3.69 | 4.31 | 0 | |

| (200, f1) | DPLSE | 0.02 (0.02) | 0.04 (0.02) | 3.08 | 4.92 | 0 |

| SCAD | 0.02 (0.02) | 0.09 (0.03) | 3.26 | 4.74 | 0 | |

| LASSO | 0.03 (0.02) | 0.09 (0.03) | 3.45 | 4.55 | 0 | |

| (200, f2) | DPLSE | 0.02 (0.02) | 0.08 (0.03) | 3.07 | 4.93 | 0 |

| SCAD | 0.03 (0.03) | 0.19 (0.05) | 3.24 | 4.76 | 0 | |

| LASSO | 0.04 (0.03) | 0.19 (0.05) | 3.53 | 4.47 | 0 | |

SCAD and LASSO estimates are based on M converged MC samples, where M ≥ 90 except M = 72 for (200, f1).

Table 5.2 presents the results for a high variance case σ2 = 9. We notice that, as σ2 increases from 1 to 9, although there is a substantial amount of increase in the MSEs, the DPLSE still maintains very good performance in model selection. The MSEs of the DPLSE are consistently smaller than those of SCAD and LASSO (not reported here to save space). The incidence of incorrect zero coefficients occurs seldom for n = 100 and never occurs for n = 200.

Table 5.2.

DPLSE model selection and estimation results (σ2 = 9)

| Zero coef. | |||||

|---|---|---|---|---|---|

| (n, f) | MSE(β̂) | MSE(f̂) | Model Size (3) | Corr. (5) | Inc. (0) |

| (100, f1) | 0.58 (0.67) | 0.55 (0.39) | 3.23 | 4.75 | 0.02 |

| (200, f1) | 0.22 (0.24) | 0.27 (0.15) | 3.12 | 4.88 | 0 |

| (100, f2) | 0.71 (0.75) | 0.92 (0.49) | 3.21 | 4.77 | 0.02 |

| (200, f2) | 0.22 (0.22) | 0.48 (0.19) | 3.97 | 4.93 | 0 |

5.2. Performance of DPLSE for Parametric Estimation

Table 5.3 presents the point estimate, relative bias, empirical standard error, model-based frequentist and Bayesian standard errors of the estimate. To save space, we only report the point estimation results for the parameters which are truly nonzero. The point estimate is the MC sample average and the empirical standard error is computed by the MC standard deviation. Relative bias is the ratio of the bias and the true value.

Table 5.3.

DPLSE point estimation results for four selected scenarios.

| Scenario | Model | Point | Relative Empirical | Model-based SE | 95% CP | |||

|---|---|---|---|---|---|---|---|---|

| (n, σ2, f) | Parameter | Estimate | Bias | SE | Freq. | Bayesian | Freq. | Bayesian |

| (100, 1, f1) | β1 | 3.011 | 0.004 | 0.129 | 0.128 | 0.129 | 0.95 | 0.95 |

| β2 | 1.500 | 0.000 | 0.113 | 0.106 | 0.107 | 0.94 | 0.94 | |

| β5 | 2.024 | 0.012 | 0.134 | 0.105 | 0.107 | 0.89 | 0.90 | |

| (200, 1, f1) | β1 | 3.006 | 0.002 | 0.086 | 0.087 | 0.087 | 0.94 | 0.95 |

| β2 | 1.502 | 0.002 | 0.086 | 0.087 | 0.088 | 0.95 | 0.96 | |

| β5 | 1.994 | −0.002 | 0.075 | 0.076 | 0.077 | 0.96 | 0.96 | |

| (200, 1, f2) | β1 | 3.009 | 0.003 | 0.088 | 0.087 | 0.088 | 0.94 | 0.94 |

| β2 | 1.497 | −0.002 | 0.088 | 0.088 | 0.088 | 0.96 | 0.97 | |

| β5 | 1.996 | −0.002 | 0.074 | 0.077 | 0.078 | 0.98 | 0.99 | |

| (200, 9, f2) | β1 | 3.037 | 0.012 | 0.242 | 0.261 | 0.263 | 0.94 | 0.94 |

| β2 | 1.487 | −0.009 | 0.302 | 0.264 | 0.265 | 0.96 | 0.96 | |

| β5 | 1.983 | −0.012 | 0.246 | 0.230 | 0.232 | 0.96 | 0.96 | |

We report the results in four scenarios with varying n, σ2 and f(t), and those in other scenarios are similar and hence omitted. We observe that β̂ is roughly unbiased in all scenarios. Both Bayesian and frequentist SEs of β̂j’s obtained from (4.1) and (4.3) agree well with the empirical SEs; all SEs decrease as n increases or σ2 decreases. Bayesian SEs are slightly larger than their frequentist counterparts, since they also account for bias in β̂j. The confidence intervals based on either Bayesian or frequentist SEs achieve the nominal coverage probability, indicating the accuracy of the SE formulas. Overall, the DPLSE works very well for estimating model parameters.

5.3. Performance of f̂ (t) and Pointwise Standard Errors

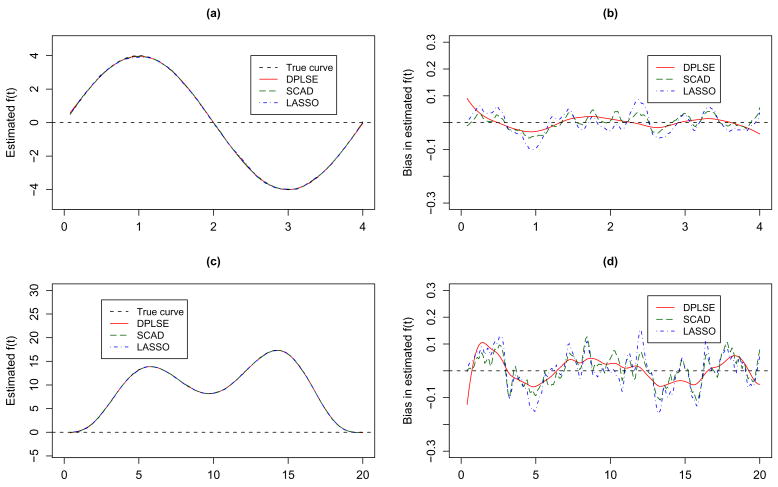

In Figure 5.1 we plot the pointwise estimates and biases for estimating f1(t) and f2(t) when n = 200 and σ2 = 1 for all three methods.

Figure 5.1.

Plots of f̂ (t) and pointwise biases (SCAD and LASSO are based on converged MC samples). Plots (a) and (b) are for f1; plots (c) and (d) are for f2. Here n = 200 and σ2 = 1. The horizontal axis is t in all the four plots.

In plots (a) and (c), the averaged fitted curves are almost indistinguishable from the true nonparametric function, indicating small biases in f̂ (t) for all three methods. Pointwise biases are magnified in plots (b) and (d), which show that the DPLSE overall has smaller bias than the other two methods. The SCAD and LASSO fits have slightly larger and rougher pointwise biases, which indicates under-smoothing due to a small bandwidth selected by the plug-in method. Our method is more advantageous in that it automatically estimates the smoothing parameter and controls the amount of smoothing more appropriately by treating τ = 1/(nλ1) as a variance component.

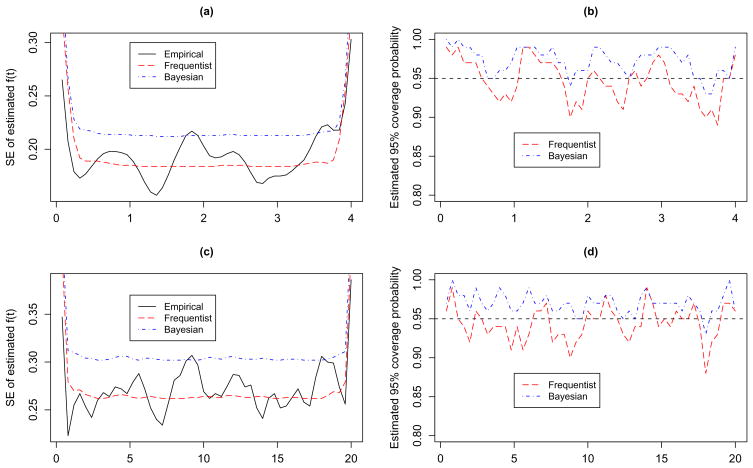

Figure 5.2 depicts the pointwise standard errors and pointwise coverage probabilities of confidence intervals given by the covariance formulas (4.2) and (4.4). Here n = 200 and σ2 = 1; (a) and (b) are for f1 with t6 errors, and (c) and (d) are for f2 with mixture normal errors.

Figure 5.2.

Plots of pointwise frequentist and Bayesian standard errors and coverage probability rates. Plots (a) and (b) are for f1(t); plots (c) and (d) are for f2(t). The horizontal axis is t in all plots.

We note that the frequentist pointwise SEs interlace with the empirical SEs, whereas the Bayesian pointwise SEs are a little larger than the frequentist counterparts. Accordingly, as shown in plots (b) and (d), the pointwise coverage probability rates for frequentist confidence intervals are around the nominal level, whereas most of the Bayesian coverage probabilities are higher than 95%.

6. Real Data Application

We apply the proposed DPLS method to the Ragweed Pollen Level data, which was analyzed in Ruppert et al. (2003). The data was collected in Kalamazoo, Michigan during the 1993 ragweed season, and it consists of 87 daily observations of ragweed pollen level and relevant information. The main interest is to develop accurate models to forecast daily ragweed pollen level. The raw response ragweed is the daily ragweed pollen level (grains/m3). Among the explanatory variables, x1 is an indicator of significant rain, where x1 = 1 if there is at least 3 hour steady or brief but intense rain and x1 = 0 otherwise; x2 is temperature (°F); x3 is wind speed (knots). The x-covariates are standardized first. Since the raw response is rather skewed, Ruppert et al. (2003) suggested a square root transformation . Marginal plots suggest a strong nonlinear relationship between y and the day number in the current ragweed pollen season. Consequently, a semiparametric regression model with a nonparametric baseline f(day) is reasonable. Ruppert et al. (2003) fitted a semiparametric model with x1, x2 and x3, whereas we add quadratic and interaction terms and consider a more complex model:

The tuning parameter selected by BIC is λ2 = 0.177. Table 6.1 gives the DPLSE for the regression coefficients and their corresponding frequentist and Bayesian standard errors.

Table 6.1.

Estimated coefficients and frequentist and Bayesian SE for ragweed pollen level data

| Full model | Selected model | ||||||

|---|---|---|---|---|---|---|---|

| Variable | Parameter | Frequentist | Bayesian | Parameter | Frequentist | Bayesian | |

| Estimate | SE | SE | Estimate | SE | SE | ||

| x1 | 0.64 | 0.22 | 0.23 | 0.70 | 0.18 | 0.18 | |

| x2 | 1.31 | 0.37 | 0.39 | 1.16 | 0.36 | 0.37 | |

| x3 | 0.87 | 0.19 | 0.20 | 0.76 | 0.19 | 0.20 | |

|

|

0.53 | 0.23 | 0.24 | 0 | - | - | |

|

|

0.04 | 0.19 | 0.19 | 0 | - | - | |

| x1x2 | 0.26 | 0.19 | 0.19 | 0 | - | - | |

| x1x3 | 0.02 | 0.22 | 0.23 | 0 | - | - | |

| x2x3 | 0.34 | 0.20 | 0.20 | 0 | - | - | |

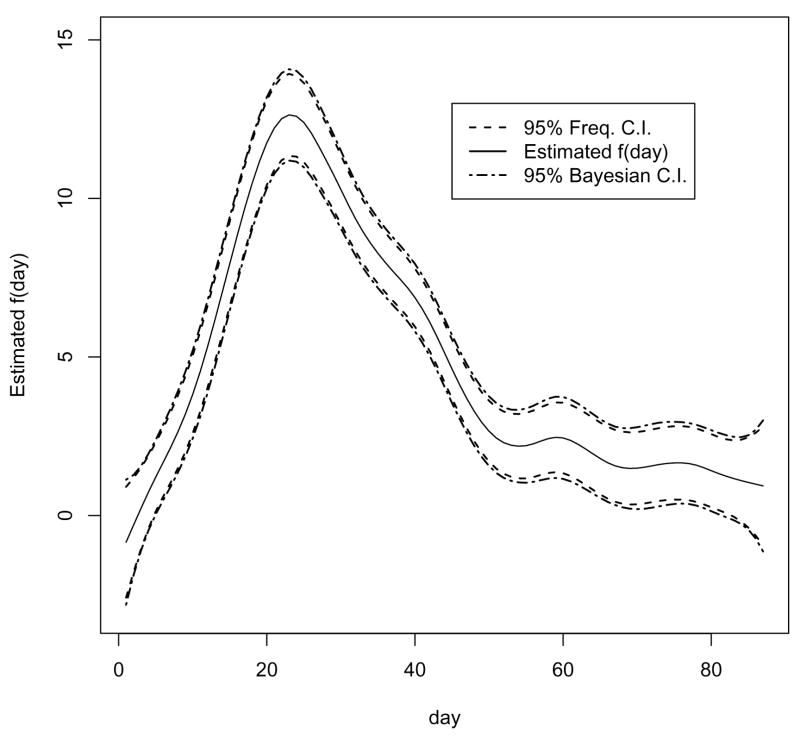

For comparison, we also fitted the full model via traditional partially splines with only roughness penalty on f. Table 6.1 shows that the final fitted model is ŷ = f̂ (day) + β̂1x1 + β̂2x2 + β̂3x3, indicating that the linear main effect model suffices. All the estimated coefficients are positive, suggesting that the ragweed pollen level increases as each of the covariates increases. The shrinkage estimates have relatively smaller standard errors than those under the full model. Figure 6.1 depicts the estimated nonparametric function f̂ (day) and its frequentist and Bayesian 95% pointwise confidence intervals. The plot indicates that the baseline f(day) climbs rapidly to the peak on around day 25 and plunges until day 60, and decreases steadily thereafter.

Figure 6.1.

Plot of estimated f(day) and its frequentist and Bayesian 95% pointwise confidence intervals for the Ragweed Pollen Level data.

7. Discussion

We propose a new regularization method for simultaneous variable selection and model estimation in partially linear models via double-penalized least squares. Under certain regularity conditions, the DPLSE β̂ is root-n consistent and has the oracle property. To facilitate computation, we reformulate the problem into a linear mixed model (LMM) framework, which allows us to estimate the smoothing parameter λ1 as an additional variance component instead of conducting the conventional two-dimensional grid search together with the other tuning parameter λ2. Another advantage of the LMM representation is that standard software can be used to implement the DPLS. Simulation studies show that the new method works effectively in terms of both variable selection and model estimation. We have derived both frequentist and Bayesian covariance formulas for the DPLSEs and empirical results favor the frequentist SE formulas for f(t). Furthermore, our empirical results suggest that the DPLSE is robust to the distributional assumption of errors, giving strong support for its application in general situations.

In this paper, we have studied the large sample properties of the new estimators when the dimension d satisfies: (i) d fixed, or (ii) dn → ∞ as n → ∞ with dn < n. In future research we will investigate the properties and performance of our estimators for the more challenging situation d ≫ n. Our major challenges will be to study how the convergence rate and asymptotic distributions of the linear components, in the presence of nuisance nonparametric components, will be affected when d > n. Very recently, Ravikumar et al. (2008) and Meier et al. (2008) consider the sparse estimation and function smoothing for additive models in high dimensional data settings. We will see how these works can be adapted to tackle our challenges in the future.

The proposed DPLS method assumes that the errors are uncorrelated. In future research, we will generalize it to model selection for correlated data such as longitudinal data. Another interesting problem is model selection for generalized semiparametric models, e.g. E(Y) = g{Xβ + f(t)}, where g is a link function. In that case we will consider the double-penalized likelihood and investigate asymptotic properties for the resulting estimators.

Acknowledgments

The authors thank the editor, the associate editor, and two reviewers for their constructive comments and suggestions. The research of Hao Zhang was supported by in part by National Science Foundation DMS-0645293 and by National Institute of Health R01 CA085848-08. The research of Daowen Zhang was supported by National Institute of Health R01 CA85848-08.

Appendix

Proofs

Proof of Lemma 1

Differentiating L(β) in Q(β) and evaluating at β0, we get:

| (A1) |

| (A2) |

For the partially linear model, we have Y − Xβ0 = f + ε. Substitution into (A1) yields

| (A3) |

Now, the proof of Theorem 1 in Heckman (1986) and its four propositions can be used. Under regularity conditions, we have that if λ1n → 0 and , then

| (A4) |

| (A5) |

Parts (a) and (b) are obtained by applying Slutsky’s theorem to (A1) and (A2).

To prove Theorems 3 and 4, we need the following lemma. Its proof can be derived in the similar fashion as Lemma 1 above and Theorem 1 of Heckman (1986). To save space, we only state the results below and omit the proof. For any vector v, we use[v]i to denote its ith component. For any matrix G, we use [G]ij to denote its (i, j)th element.

Lemma 3

Under the regularity conditions (C1) and (C2), if λ1n → 0, then

[L′(βn0)]i = Op(n1/2),

.

Proof of Theorem 3

Let . We need to show that for any given ε > 0, there exists a large constant C such that

| (A6) |

Let Δn(r) = Q(βn0 + cnr) − Q(βn0). Recall that the first qn components of βn0 are nonzero, pλ2n (0) = 0 and pλ2n(·) is nonnegative. By Taylor’s expansion, we have

By Lemma 3(a), we have

By Lemma 3(b), under the regularity condition (C2), we have

the last equation above is due to the dimension condition . With regard to I3 and I4, we have

and

Under the condition (C1), and when n is large enough and λ2n → 0. So, both I3 and I4 are dominated by I2. Therefore, by allowing C to be large enough, all terms I1, I3, I4 are dominated by I2, which is positive. This proves (A6) and completes the proof.

Proof of Theorem 4

Let . It suffices to show that as n → ∞ with probability tending to 1, for any βn1 satisfying and j = qn + 1, …, dn,

| (A7) |

| (A8) |

By Taylor expansion and the fact that L(βn) is quadratic in βn, we get

By Lemma 3 and the regularity conditions (C1) and (C2), we have

so . Since , from

we can see that the sign of is totally determined by the sign of βnj. Therefore, (A7) and (A8) hold for j > qn, which leads to β̂n2 = 0. Combining with the result of Theorem 2, there is a -consistent local minimizer β̂n of Q(βn), and β̂n has the form . This completes the proof.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Contributor Information

Xiao Ni, Email: xni@stat.ncsu.edu.

Hao Helen Zhang, Email: hzhang2@stat.ncsu.edu.

Daowen Zhang, Email: zhang@stat.ncsu.edu.

References

- Akaike H. Maximum likelihood identification of Gaussian autoregressive moving average models. Biometrika. 1973;60:255–265. [Google Scholar]

- Breiman L. Better subset selection using the nonnegative garrote. Technometrics. 1995;37:373–384. [Google Scholar]

- Bunea F. Consistent covariate selection and post model selection inference in semiparametric regression. Annals of Statistics. 2004;32:898–927. [Google Scholar]

- Bunea F, Wegkamp M. Two-stage model detection procedures in partially linear regression. The Canadian Journal of Statistics. 2004;32:105–118. [Google Scholar]

- Craven P, Wahba G. Smoothing noisy data with spline functions: Estimating the correct degree of smoothing by the method of generalized cross-validation. Numerishe Mathematik. 1979;31:377–403. [Google Scholar]

- Efron B, Hastie T, Johnstone I, Tibshirani R. Least angle regression. Annals of Statistics. 2004;32:407–451. [Google Scholar]

- Engle R, Granger C, Rice J, Weiss A. Nonparametric estimates of the relation between weather and electricity sales. Journal of American Statistical Association. 1986;81:310–386. [Google Scholar]

- Fan J, Li R. Variable selection via nonconcave penalized likelihood and its oracle properties. Journal of American Statistical Association. 2001;96:1348–1360. [Google Scholar]

- Fan J, Li R. New estimation and model selection procedures for semi-parametric modeling in longitudinal data analysis. Journal of American Statistical Association. 2004;99:710–723. [Google Scholar]

- Fan J, Peng H. Nonconcave penalized likelihood with a diverging number of parameters. Annals of Statistics. 2004;32:928–961. [Google Scholar]

- Green PJ. Penalized likelihood for general semi-parametric regression models. Int Statist Rev. 1987;55:245–260. [Google Scholar]

- Green PJ, Silverman BW. Nonparametric Regression and Generalized Linear Models. Chapman and Hall; London: 1994. [Google Scholar]

- Gu C. Smoothing Spline ANOVA Models. Springer; New York: 2002. [Google Scholar]

- Hastie T, Tibshirani RJ. Generalized Additive Models. Chapman and Hall; London: 1990. [DOI] [PubMed] [Google Scholar]

- Heckman NE. Spline smoothing in a partly linear model. J R Statist Soc B. 1986;48:244–248. [Google Scholar]

- Liang H. Estimation in partially linear models and numerical comparisons. Computational Statistics and Data Analysis. 2006;50:675–687. doi: 10.1016/j.csda.2004.10.007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Linhart H, Zucchini W. Model Selection. New York: Wiley; 1986. [Google Scholar]

- Mallows C. Some comments on cp. Technometrics. 1973;15:661–675. [Google Scholar]

- Meier L, Van de Geer S, Bühlmann P. High-dimensional additive modeling. 2008 http://arxiv.org/abs/0806.4115v1.

- Miller AJ. Subset Selection in Regression. Chapman and Hall; London: 2002. [Google Scholar]

- Ravikumar P, Liu H, Lafferty J, Wasserman L. Spam: Sparse additive models. Advances in Neural Information Processing systems. 2008;20:1202–1208. [Google Scholar]

- Ruppert D, Sheather S, Wand M. An effective bandwidth selector for local least squares regression. Journal of American Statistical Association. 1995;90:1257–1270. [Google Scholar]

- Ruppert D, Wand MP, Carroll RJ. Semiparametric Regression. Cambridge University Press; Cambridge, New York: 2003. [Google Scholar]

- Schwarz G. Estimating the dimension of a model. Annals of Statistics. 1978;6:461–464. [Google Scholar]

- Speckman P. Kernel smoothing in partial linear models. J R Statist Soc B. 1988;50:413–436. [Google Scholar]

- Speed T. Discussion of ‘blup is a good thing: the estimation of random effects’ by G. K. Robinson. Statistical Science. 1991;6:15–51. [Google Scholar]

- Tibshirani R. Regression shrinkage and selection via the lasso. J R Statist Soc B. 1996;58:267–288. [Google Scholar]

- Wahba G. Bayesian ‘confidence intervals’ for the cross-validated smoothing spline. J R Statist Soc B. 1983;45:133–150. [Google Scholar]

- Wahba G. A comparison of GCV and GML for choosing the smoothing parameter in the generalized spline smoothing problem. Annals of Statistics. 1985;13:1378–1402. [Google Scholar]

- Wahba G. Spline Models for Observation Data. Society for Industrial and Applied Mathematics 1990 [Google Scholar]

- Wang H, Li R, Tsai CL. Tuning parameter selector for SCAD. Biometrika. 2007;94:553–568. doi: 10.1093/biomet/asm053. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zhang D, Lin X, Raz J, Sowers M. Semiparametric stochastic mixed models for longitudinal data. Journal of American Statistical Association. 1998;93:710–719. [Google Scholar]

- Zhang H, Lu W. Adaptive lasso for cox’s proportional hazards model. Biometrika. 2007;94:691–703. [Google Scholar]

- Zou H. The adaptive lasso and its oracle properties. Journal of American Statistical Association. 2006;101:1418–1429. [Google Scholar]