Abstract

Objectives

To examine the effect on peer review of asking reviewers to have their identity revealed to the authors of the paper.

Design

Randomised trial. Consecutive eligible papers were sent to two reviewers who were randomised to have their identity revealed to the authors or to remain anonymous. Editors and authors were blind to the intervention.

Main outcome measures

The quality of the reviews was independently rated by two editors and the corresponding author using a validated instrument. Additional outcomes were the time taken to complete the review and the recommendation regarding publication. A questionnaire survey was undertaken of the authors of a cohort of manuscripts submitted for publication to find out their views on open peer review.

Results

Two editors’ assessments were obtained for 113 out of 125 manuscripts, and the corresponding author’s assessment was obtained for 105. Reviewers randomised to be asked to be identified were 12% (95% confidence interval 0.2% to 24%) more likely to decline to review than reviewers randomised to remain anonymous (35% v 23%). There was no significant difference in quality (scored on a scale of 1 to 5) between anonymous reviewers (3.06 (SD 0.72)) and identified reviewers (3.09 (0.68)) (P=0.68, 95% confidence interval for difference −0.19 to 0.12), and no significant difference in the recommendation regarding publication or time taken to review the paper. The editors’ quality score for reviews (3.05 (SD 0.70)) was significantly higher than that of authors (2.90 (0.87)) (P<0.005, 95% confidence interval for difference −0.26 to −0.03). Most authors were in favour of open peer review.

Conclusions

Asking reviewers to consent to being identified to the author had no important effect on the quality of the review, the recommendation regarding publication, or the time taken to review, but it significantly increased the likelihood of reviewers declining to review.

Key messages

Arguments in favour of open peer review include increased accountability, fairness, and transparency.

Preliminary evidence suggests that open peer review leads to better quality reviews.

We conducted a randomised controlled trial to examine the feasibility and impact of asking BMJ reviewers to sign their reviews.

There were no differences in the quality of reviews between those who were randomised to be identified and those who were not.

Most reviewers agreed to be identified to authors, and most of the authors surveyed were in favour of open peer review.

Introduction

The BMJ, like other journals, is constantly trying to improve its system of peer review. After failing to confirm that blinding of reviewers to authors’ identities improved the quality of reviews,1 we decided to experiment with open peer review. There are several reasons for this. Firstly, no evidence exists that anonymous peer review (in which the reviewers know the authors’ identities but not vice versa) is superior to other forms of peer review. Secondly, some preliminary evidence suggests that open peer review may produce better opinions.1–3 Thirdly, if reviewers have to sign their reviews they may put more effort into their reviews and so produce better ones (although signing may lead them to blunt their opinions for fear of causing offence and so produce poorer ones). Fourthly, several editors have argued for open peer review,4–6 and some journals, particularly outside biomedicine, practise it already. Fifthly, open peer review should increase both the credit and accountability for peer reviewing, both of which seem desirable. Sixthly, the internet opens up the possibility of the whole peer review process being conducted openly online, and it seems important to gather evidence on the effects of open peer review. Seventhly, and most importantly, it seems unjust that authors should be “judged” by reviewers hiding behind anonymity: either both should be unknown or both known, and it is impossible to blind reviewers to the identity of authors all of the time.1,7

We therefore conducted a randomised controlled trial to confirm that open review did not lead to poorer quality opinions than traditional review and that reviewers would not refuse to review openly (because open review would then be unworkable).

Methods

The study had a paired design. Consecutive manuscripts received by the BMJ and sent by editors for peer review during the first seven weeks of 1998 were eligible for inclusion. Four potential clinical reviewers were selected by one of the BMJ’s 13 editors. Two of these four reviewers were chosen to review the manuscript. The other two were kept in reserve in case a selected reviewer declined. The selected reviewers were randomised either to be asked to have their identity revealed to authors (intervention group) or to remain anonymous (control group), forming a paired sample. Randomisation was carried out by a researcher using a computerised randomisation program.

The BMJ has a general policy of routinely seeking consent from all authors submitting papers to the journal to take part in our ongoing programme of research. All reviewers were informed that they were part of a study at the time they were asked to provide a review of the manuscript. They were also sent two questionnaires. If unable or unwilling to provide a review for the particular manuscript, they were asked to complete the first questionnaire, which listed four possible reasons for declining and a fifth option allowing them to give details of other reasons for declining. If a reviewer declined he or she played no further part in the study, and a replacement reviewer was randomly chosen from the remaining two reviewers previously selected by the editor. This process was continued until two consenting reviewers were found, the manuscript being returned to the editor if necessary to select further potential reviewers. All reviewers were aware of the details of the author(s). Reviewers providing a review were asked to complete the second questionnaire stating how long they spent on their review and giving their recommendation regarding publication of the manuscript.

When both reviews were received, they and the manuscript were passed to the responsible editor, who was asked to assess the quality of the reviews using a validated review quality instrument (see Appendix).8 A second editor, randomly selected from the other 12 editors, independently assessed review quality. Editors did not know which of the reviewers had consented to be identified to the author. The corresponding author of each manuscript was sent anonymous copies of the two reviews, told that a decision on the manuscript had not yet been reached, and asked to assess their quality using the review quality instrument. A decision on whether to publish the paper was made in the journal’s usual manner and conveyed to the corresponding author after he or she had assessed the quality of both reviews. Our intention was to reveal the identity of the reviewer who had consented to be identified when notifying the author of the decision. However, this did not occur in every case because of difficulties encountered in changing our normal procedure.

After the randomised study was completed, we sent a short questionnaire to the corresponding authors of a cohort of 400 consecutive manuscripts submitted for publication in October 1998 in order to find out their views on open peer review.

Review quality instrument

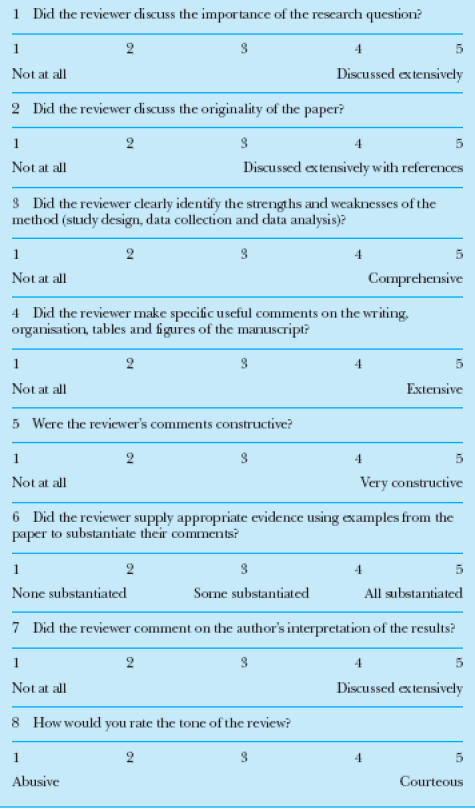

The review quality instrument (version 4) consists of seven items (importance of the research question, originality, method, presentation, constructiveness of comments, substantiation of comments, interpretation of results) each scored on a five-point Likert scale (1=poor, 5=excellent). It is a modified form of version 3, which was previously validated.8 A total score is based on the mean of the seven item scores. The quality of each review was based, firstly, on the means of the two editors’ scores and, secondly, on the corresponding author’s scores (for each item and total score). We used the mean of the two editors’ scores to improve the reliability of the method. We used two additional outcome measures: the time taken to write the review and the reviewer’s recommendation regarding publication (publish with minor revision, publish with major revision, reject).
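The scoring described above can be illustrated with a short sketch. It is not the instrument itself: the item keys, function names, and example ratings are hypothetical, chosen only to show how a total score (the mean of seven item scores) and the mean of two editors' scores might be computed.

```python
from statistics import mean

# Hypothetical short keys for the seven items of the instrument.
ITEMS = (
    "importance", "originality", "method", "presentation",
    "constructiveness", "substantiation", "interpretation",
)


def total_score(ratings):
    """Return the mean of the seven item scores (each 1 to 5) for one rater."""
    missing = [item for item in ITEMS if item not in ratings]
    if missing:
        raise ValueError(f"missing items: {missing}")
    for item in ITEMS:
        if not 1 <= ratings[item] <= 5:
            raise ValueError(f"{item} must be between 1 and 5")
    return mean(ratings[item] for item in ITEMS)


def editors_mean(editor_a, editor_b):
    """Average two editors' ratings item by item and add the total score."""
    per_item = {item: (editor_a[item] + editor_b[item]) / 2 for item in ITEMS}
    per_item["total"] = mean(per_item[item] for item in ITEMS)
    return per_item


# Made-up ratings for a single review, for illustration only.
editor_a = dict.fromkeys(ITEMS, 3)
editor_b = dict.fromkeys(ITEMS, 4)
print(editors_mean(editor_a, editor_b)["total"])  # 3.5
```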

Statistical analysis

With a maximum difference in review quality scores of 4 (scores being rated from 1 to 5), we considered a difference of 10% (that is, 0.4/4) to be editorially significant. To detect such a difference (α=0.05, β=0.10, SD=1.2), we needed 95 manuscripts available for analysis.
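The figure of 95 manuscripts can be reproduced with a standard normal-approximation formula for a paired comparison, n = ((z₁₋α/₂ + z₁₋β) × SD / δ)². The sketch below assumes a two-sided test and treats the stated SD of 1.2 as the standard deviation of the paired differences; the text does not say which formula was actually used, and the function name is illustrative.

```python
from math import ceil
from statistics import NormalDist


def paired_sample_size(delta, sd, alpha=0.05, beta=0.10):
    """Pairs needed to detect a mean paired difference `delta`.

    Normal approximation, two-sided significance level `alpha`,
    power 1 - `beta`, and `sd` taken as the SD of paired differences.
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(1 - beta)
    return ceil(((z_alpha + z_beta) * sd / delta) ** 2)


print(paired_sample_size(delta=0.4, sd=1.2))  # 95
```

Under these assumptions the unrounded value is about 94.6, which rounds up to the 95 manuscripts stated above.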

Since distributions of scores and differences were sufficiently close to a normal distribution to make t tests reliable, we used paired t tests to compare outcome measures between reviewers in the intervention and control groups and between editors’ and authors’ evaluations of review quality. We used McNemar’s χ2 test to compare recommendations regarding publication and to compare the number of reviewers in each group who declined to provide a review. Weighted κ statistics were used to measure interobserver reliability, with a maximum difference of 1 in scores between editors representing agreement.9
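As an illustration of the weighted κ statistic, the sketch below uses agreement weights of 1 when two scores differ by at most one point and 0 otherwise. This weighting is one reading of the description above, not a confirmed account of the analysis, and the function name and example scores are hypothetical.

```python
from collections import Counter


def weighted_kappa(scores_a, scores_b, tolerance=1):
    """Weighted kappa for two raters using 0/1 agreement weights.

    Two scores count as agreeing when they differ by at most `tolerance`.
    kappa = (observed - expected) / (1 - expected), where `expected` is the
    weighted agreement implied by the raters' marginal distributions.
    """
    if len(scores_a) != len(scores_b) or not scores_a:
        raise ValueError("need two non-empty score lists of equal length")
    n = len(scores_a)

    def agree(x, y):
        return 1.0 if abs(x - y) <= tolerance else 0.0

    observed = sum(agree(a, b) for a, b in zip(scores_a, scores_b)) / n

    freq_a = Counter(scores_a)
    freq_b = Counter(scores_b)
    expected = sum(
        agree(i, j) * count_a * count_b
        for i, count_a in freq_a.items()
        for j, count_b in freq_b.items()
    ) / (n * n)

    if expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)


# Made-up scores from two editors for five reviews, for illustration only.
print(weighted_kappa([1, 2, 3, 4, 5], [1, 2, 3, 4, 5]))  # 1.0
```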

Results

Recruitment and randomisation

The 125 consecutive eligible papers received by the BMJ during the recruitment period were entered into the study. Eleven papers (9%) were excluded after randomisation because it was not possible to obtain two suitable reviews without causing an unacceptable delay in the editorial process. Two editors’ assessments were obtained for 113 papers (226 reviews), and the corresponding author’s assessment was obtained for 104 of these papers (208 reviews). The remaining paper was assessed by the author but by only one editor and was excluded from the analysis.

In order to assess the success of randomisation, we compared reviewers in terms of the characteristics known to be associated with review quality (mean age, place of residence, postgraduate training in epidemiology or statistics, and current involvement in medical research).10 There were no substantial differences between the intervention and control groups (table 1).

Table 1.

Characteristics of 125 reviewers randomised to remain anonymous (control) and 125 randomised to be identified (intervention). Values are numbers (percentages) unless stated otherwise

| Characteristic | Anonymous reviewers | Identified reviewers |
|---|---|---|
| Mean (SD) age (years) | 51.1 (9.0) (n=120) | 51.2 (8.4) (n=120) |
| Place of residence: | | |
| United Kingdom | 106/124 (85) | 106/123 (86) |
| North America | 8/124 (6) | 6/123 (5) |
| Other | 10/124 (8) | 11/123 (9) |
| Postgraduate training in epidemiology or statistics | 67/120 (56) | 62/119 (52) |
| Involved in medical research | 103/120 (86) | 103/121 (85) |

Totals less than 125 are because of missing data.

Reviewers declining to provide a review

Of the 250 reviewers initially invited to participate, 73 declined—29 (23%) in the group randomised to remain anonymous (anonymous reviewers) and 44 (35%) in the group randomised to be asked for consent to be identified (identified reviewers). In 11 of the 125 randomised papers both reviewers declined, in 18 only the anonymous reviewer declined, and in 33 only the identified reviewer declined. Thus, the difference between identified reviewers and anonymous reviewers in declining to review was 12% (35% v 23%, 95% confidence interval 0.2% to 24%), which is marginally significant (McNemar’s χ2=3.84, P=0.0499). Of the 44 identified reviewers who declined to review, nine (19%) gave as their main reason their opposition in principle to open peer review.
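The McNemar statistic quoted above can be checked from the discordant pairs alone (33 papers in which only the identified reviewer declined and 18 in which only the anonymous reviewer declined). The sketch below applies the continuity-corrected form, which reproduces the reported value of 3.84; that the correction was used is inferred from the arithmetic rather than stated in the text, and the function name is illustrative.

```python
from math import erfc, sqrt


def mcnemar(b, c, continuity=True):
    """McNemar's chi-squared test (1 df) from the two discordant counts.

    Returns (statistic, p_value). The p value uses the identity that the
    upper tail of a chi-squared distribution with 1 df is erfc(sqrt(x / 2)).
    """
    if b + c == 0:
        raise ValueError("no discordant pairs")
    diff = abs(b - c) - (1 if continuity else 0)
    statistic = max(diff, 0) ** 2 / (b + c)
    p_value = erfc(sqrt(statistic / 2))
    return statistic, p_value


# Discordant pairs reported above: identified only = 33, anonymous only = 18.
statistic, p_value = mcnemar(33, 18)
print(round(statistic, 2))  # 3.84
print(p_value < 0.05)       # True (the p value is just under 0.05)
print((33 - 18) / 125)      # 0.12, the difference in proportions declining
```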

Effect of identification on review quality, time taken, and recommendation

There was no significant difference between the anonymous and the identified reviewers in the mean total score for quality allocated by editors (3.06 v 3.09) (table 2). The reviews produced by identified reviewers were judged to be slightly better for five and slightly worse for two of the seven items, but none of these differences was significant.

Table 2.

Effect of reviewers being randomised to be identified on the quality of their review (editors’ assessments) and time taken to review. Values are means (standard deviations) unless stated otherwise

| Item | Anonymous reviewers (n=113) | Identified reviewers (n=113) | Difference (95% CI) |
|---|---|---|---|
| Item of quality*: | | | |
| Importance | 2.77 (0.96) | 2.86 (0.87) | −0.09 (−0.31 to 0.12) |
| Originality | 2.46 (1.18) | 2.47 (1.21) | −0.01 (−0.29 to 0.28) |
| Method | 3.38 (0.99) | 3.28 (1.00) | 0.10 (−0.13 to 0.34) |
| Presentation | 2.88 (1.05) | 2.91 (0.96) | −0.03 (−0.25 to 0.19) |
| Constructiveness of comments | 3.51 (0.89) | 3.56 (0.79) | −0.04 (−0.23 to 0.14) |
| Substantiation of comments | 3.16 (0.92) | 3.36 (0.90) | −0.19 (−0.40 to 0.01) |
| Interpretation of results | 3.22 (0.94) | 3.18 (0.95) | 0.04 (−0.18 to 0.26) |
| Mean total score | 3.06 (0.72) | 3.09 (0.68) | −0.03 (−0.19 to 0.12) |
| Time taken to review (hours) | 2.25 (1.46) | 2.20 (1.76) | 0.05 (−0.33 to 0.43) |

*Items scored on a five-point scale (1=poor, 5=excellent).

Similarly, there was no significant difference between the anonymous and the identified reviewers in the mean total score allocated by the corresponding authors (2.83 v 2.99). The reviews produced by identified reviewers were judged to be slightly better for all seven items, but only one difference (for substantiation of comments) reached significance (−0.36, 95% confidence interval −0.64 to −0.08).

Comparing the mean total score for reviews in which the recommendation was to reject the paper with those in which the recommendation was to publish (subject to revision), we found no significant difference in editors’ assessments (3.04 v 3.14) but a highly significant difference in authors’ assessments (2.65 v 3.20; difference 0.54, 95% confidence interval 0.31 to 0.78). Similar results were obtained when we compared reviews of papers that were actually rejected with those of papers that were accepted with or without revision.

The anonymous and identified reviewers spent similar times carrying out their reviews (table 2).

The distribution of recommendations regarding publication was broadly similar (table 3). The anonymous reviewers rejected 8% more manuscripts than the identified reviewers (48% v 40%), though the difference was not significant (McNemar’s χ2=1.08, P=0.30), whereas the identified reviewers were more likely to give a positive recommendation (56% v 47%), again not significant (χ2=0.3, P=0.58). Of the 103 papers that were given a clear recommendation by both reviewers, the identified reviewers recommended publication of 56% and the anonymous group recommended publication of 51% (difference 5%, −9% to 19%).

Table 3.

Effect of reviewers being randomised to be identified on their recommendation for publication of reviewed manuscript. Values are numbers (percentages)

| Recommendation | Anonymous reviewers (n=114) | Identified reviewers (n=114) |
|---|---|---|
| Publish without revision | 1 (1) | 2 (2) |
| Publish after minor revision | 33 (29) | 41 (36) |
| Publish after major revision | 19 (17) | 20 (18) |
| Reject | 55 (48) | 46 (40) |
| Other | 1 (1) | 1 (1) |
| Missing data | 5 (4) | 4 (4) |

Comparison between the two editors’ independent assessments

We examined the interobserver reliability of the review quality instrument (version 4). Weighted κ statistics for items 1 to 7 were between 0.38 and 0.67, and were over 0.5 for four of the items. (A weighted κ statistic of <0.4 represents poor agreement, 0.4-0.75 is fair to good, and >0.75 is excellent.11) The correlation between the mean total scores for the two editors was 0.66 (0.69 for the anonymous reviewers, 0.64 for the identified reviewers).

Comparison between editors’ and authors’ assessments

The mean total score for quality allocated by authors was significantly lower than that allocated by editors (2.90 v 3.05; difference −0.14, −0.26 to −0.03). For two of the items (importance and originality), the authors’ ratings were higher than those of the editors, but the difference was significant only for importance. For the remaining five items (method, presentation, constructiveness of comments, substantiation of comments, and interpretation of results), the editors’ ratings were higher, and all of these differences were significant (table 4). The correlation between the mean total scores given by the editors and authors was 0.52 for anonymous reviewers and 0.35 for identified reviewers.

Table 4.

Comparison of editors’ and authors’ assessments of quality of reviews. Values are means (standard deviations) unless stated otherwise

| Item of quality* | Author’s assessment (n=208) | Editors’ assessment (n=208) | Mean difference (95% CI) |
|---|---|---|---|
| Importance | 2.96 (1.24) | 2.79 (0.90) | 0.18 (0.01 to 0.34) |
| Originality | 2.50 (1.25) | 2.46 (1.20) | 0.04 (−0.14 to 0.21) |
| Method | 3.12 (1.10) | 3.31 (1.00) | −0.19 (−0.35 to −0.03) |
| Presentation | 2.64 (1.21) | 2.85 (0.99) | −0.21 (−0.38 to −0.04) |
| Constructiveness of comments | 3.30 (1.18) | 3.50 (0.84) | −0.19 (−0.37 to −0.02) |
| Substantiation of comments | 2.89 (1.21) | 3.23 (0.92) | −0.31 (−0.48 to −0.14) |
| Interpretation of results | 2.90 (1.21) | 3.19 (0.97) | −0.28 (−0.46 to −0.11) |
| Mean total score | 2.90 (0.87) | 3.05 (0.70) | −0.14 (−0.26 to −0.03) |

*Items scored on a five-point scale (1=poor, 5=excellent).

Response to authors’ questionnaire survey

Of the 400 questionnaires we sent out, 346 (87%) were returned. Of the respondents, 216 (62%) reported themselves to be the authors of research papers or short reports, 248 (72%) were men, 250 (72%) were aged 31-50, 228 (66%) worked in the United Kingdom, 53 (15%) worked in primary care, and 164 (47%) worked in secondary care (the remainder worked in epidemiology, public health, or some other non-clinical discipline). The characteristics of authors of research papers and short reports were similar to those of the entire sample.

Of the 346 responses received, 192 (55%) were in favour of reviewers being identified and 90 (26%) were against. The remainder had no view, had no particular preference, or gave a non-specific response. Most (228 (66%)) stated that if the BMJ introduced open peer review it would make no difference to their decision on whether to submit any future manuscripts to the BMJ, 104 (30%) said they would be more or much more likely to submit manuscripts to the BMJ, and only five (under 2%) said they would be less or much less likely to submit manuscripts. Analysis restricted to responses from authors of research papers and short reports gave an almost identical result.

Discussion

Reviewers who were to be identified to authors produced reviews of similar quality to those of anonymous reviewers and spent a similar amount of time on them, but they were slightly more likely to recommend publication (after revision) and were significantly more likely to decline to review. The strengths of the study include its randomised design, the fact that it used a validated instrument, and its power to detect editorially significant differences between groups. This is the first time that the effect of identifying reviewers to the authors of a manuscript has been studied in a randomised trial on consecutive manuscripts and that authors have been asked to assess the quality of reviews before learning of the fate of their manuscript.

Our finding that authors rated reviews which recommended publication higher than those which recommended rejection is hardly surprising. Even though the authors were unaware of the ultimate fate of their paper, they seemed to be influenced by the opinion of their paper expressed in the review. Editors, on the other hand, did not seem to be influenced by a reviewer’s opinion of the merit of a paper when they assessed the quality of the review. These findings also show the ability of the review quality instrument to discriminate between reviews of differing qualities.

Comparison with other studies

McNutt et al reported that reviewers who chose to sign their reviews were more constructive in their comments.2 This difference from our findings may be explained by the fact that, while they randomised reviewers to blind or unblind review, they allowed them to choose whether or not to sign their reviews. Reviewers who chose to sign might have produced better reviews than those who did not. Alternatively, those who felt that they had done a good job might have been more willing to sign their reviews.

In contrast, a study in which 221 reviewers commented on the same paper found that those who were randomised to have their identity revealed to the authors were no better at detecting errors in the paper than those who remained anonymous.3 In our previous trial reviewers randomised to have their identity revealed to a co-reviewer, but not to the authors of the paper, produced slightly higher quality reviews, the difference being statistically but not editorially significant.1

Neither of the trials in which reviewers were randomised to sign their reviews found a significant effect on the rate of reviewers declining to review.1,3 Our present study suggests that more reviewers will decline to review if their identity will be revealed to authors. The number of reviewers declining to review was too small to allow meaningful analysis of the reasons for declining or the characteristics of the reviewers who declined.

Limitations of study

This study has several methodological limitations. Firstly, we confined our assessment to intermediate outcomes—the quality of the review, time taken, and recommendation to publish. It is likely that our intermediate outcomes are positively associated with the final outcome (quality of the published paper), but we cannot demonstrate this since there is at present no agreed validated instrument for assessing the quality of manuscripts.

Secondly, although the review quality instrument can assess the content and tone of a review, it is unable to determine its accuracy. It is therefore possible for a review to be highly rated but to contain erroneous observations and comments.

Thirdly, we do not know whether there was a Hawthorne effect because all reviewers knew they were taking part in a study. In our previous trial we included a group of reviewers who were unaware they were part of a study, to enable us to assess any Hawthorne effect. No such effect was apparent.1

Fourthly, this study was undertaken in a general medical journal. Given the differences between such a journal and a small, specialist journal in respect of the selection of reviewers and the size of the pool of reviewers from which selection can be made, it is possible that the results would be different if a similar study were conducted in a specialist journal.

Finally, the sensitivity of the review quality instrument is unknown, but total scores for the 226 reviews approximated to a normal distribution and extended over the full range of possible values, from 1 to 5. Previous use of the review quality instrument has produced similar findings.9

This study leaves a number of questions unanswered. Firstly, we do not know if the results would have been the same if we had concealed the names of the authors of manuscripts from the reviewers (blinding). In our previous study we found that blinding had no effect, with or without the additional intervention of identifying the reviewer to a co-reviewer,1 and a study that looked specifically at blinding reviewers to authors also found no effect.3 Secondly, we do not know the effect if authors knew the identity of a reviewer at the time they made their assessment. Thirdly, there is a risk that the practice of open peer review might lead to reviewers being less critical. Although there was a suggestion that this might be so (identified reviewers were more likely to recommend publication), the difference was not significant.

Conclusion

These results suggest that open peer review is feasible in a large medical journal and would not be detrimental to the quality of the reviews. It would seem that ethical arguments in favour of open peer review outweigh any practical concerns against it. The results of our questionnaire survey of authors also suggest that authors would support a move towards open peer review.

However, we would like to see similar studies undertaken in other large journals to establish the generalisability of our results. We would also strongly urge small, specialist journals to consider doing similar studies in order to ascertain whether the results we have found are only applicable to large, general medical journals.

Acknowledgments

We thank the authors and peer reviewers who took part in this study; the participating editors—Kamran Abbasi, Tony Delamothe, Luisa Dillner, Sandra Goldbeck-Wood, Trish Groves, Christopher Martyn, Tessa Richards, Roger Robinson, Jane Smith, Tony Smith, and Alison Tonks; the BMJ’s papers secretaries—Sue Minns and Marita Batten; and Andrew Hutchings of the London School of Hygiene and Tropical Medicine for additional statistical analysis.

Appendix: Review Quality Instrument (Version 4)


Footnotes

Funding: The BMA provided financial support for this study.

Competing interests: None declared. Three of the authors are members of the BMJ editorial staff. The paper was therefore subject to a modified peer review process in order to minimise the potential for bias. An external adviser, Dr Robin Ferner, took the place of the editor responsible for the paper, and he selected and corresponded with the peer reviewers and chaired the advisory committee that decides which papers to publish and whether revisions should be made. The paper underwent two revisions before the decision was made to publish. No BMJ editors were involved in the process at any stage.

References

- 1. Van Rooyen S, Godlee F, Smith R, Evans S, Black N. The effect of blinding and masking on the quality of peer review: a randomized trial. JAMA. 1998;280:234–237. doi: 10.1001/jama.280.3.234.

- 2. McNutt RA, Evans AT, Fletcher RH, Fletcher SW. The effects of blinding on the quality of peer review. JAMA. 1990;263:1371–1376.

- 3. Godlee F, Gale C, Martyn C. Effect on the quality of peer review of blinding reviewers and asking them to sign their reports: a randomized controlled trial. JAMA. 1998;280:237–240. doi: 10.1001/jama.280.3.237.

- 4. Rennie D. Commentary on: Fabiato A. Anonymity of reviewers. Cardiovasc Res. 1994;28:1142–1143. doi: 10.1093/cvr/28.8.1134.

- 5. Lock S. Commentary on: Fabiato A. Anonymity of reviewers. Cardiovasc Res. 1994;28:1141. doi: 10.1093/cvr/28.8.1134.

- 6. Smith R. Commentary on: Fabiato A. Anonymity of reviewers. Cardiovasc Res. 1994;28:1144. doi: 10.1093/cvr/28.8.1134.

- 7. Justice AC, Winker MA, Berlin JA, Rennie D. Does masking author identity improve peer review quality? A randomized controlled trial. JAMA. 1998;280:240–242. doi: 10.1001/jama.280.3.240.

- 8. Van Rooyen S, Black N, Godlee F. Development of the review quality instrument (RQI) for assessing peer reviews of manuscripts. J Clin Epidemiol (in press).

- 9. Cohen J. Weighted kappa: nominal scale agreement with provision for scaled disagreement or partial credit. Psychol Bull. 1968;70:213–220. doi: 10.1037/h0026256.

- 10. Black N, van Rooyen S, Godlee F, Smith R, Evans S. What makes a good reviewer and a good review in a general medical journal? JAMA. 1998;280:231–233. doi: 10.1001/jama.280.3.231.

- 11. Fleiss J. Statistical methods for rates and proportions. 2nd ed. New York: John Wiley; 1981.