Abstract

Magnetoencephalography was used to examine the effect of individual differences on the temporal dynamics of emotional face processing by grouping subjects based on their ability to detect masked valence-laden stimuli. Receiver operating characteristic curves and a nonparametric sensitivity measure were used to categorize subjects into those that could and could not reliably detect briefly presented fearful faces that were backward-masked by neutral faces. Results showed that, in a cluster of face-responsive sensors, the strength of the M170 response was modulated by valence only when subjects could reliably detect the masked fearful faces. Source localization of the M170 peak using synthetic aperture magnetometry identified sources in face processing areas such as right middle occipital gyrus and left fusiform gyrus that showed the valence effect for those target durations at which subjects were sensitive to the fearful stimulus. Subjects who were better able to detect fearful faces also showed higher trait anxiety levels. These results suggest that individual differences between subjects, such as trait anxiety levels and sensitivity to fearful stimuli, may be an important factor to consider when studying emotion processing.

Keywords: MEG, face-selective M170, sensitivity to fearful faces, valence effect, trait anxiety

Face processing is one of the most important aspects of human cognition, and processing of emotional faces in particular has high evolutionary and social relevance. The neural dynamics underlying face processing have been widely studied. Electroencephalography (EEG) studies (Bentin et al., 1996; Botzel & Grusser, 1989; George, Evans, Fiori, Davidoff, & Renault, 1996) have revealed a characteristic negative deflection around 170 ms after the onset of a face, referred to as the face-selective N170 response. Using magnetoencephalography (MEG), investigators (Furey et al., 2006; Halgren, Raij, Marinkovic, Jousmaki, & Hari, 2000; Lee, Simos, Sawrie, Martin, & Knowlton, 2005; Liu, Harris, & Kanwisher, 2002; Liu, Higuchi, Marantz, & Kanwisher, 2000; Sams, Hietanen, Hari, Ilmoniemi, & Lounasmaa, 1997; Swithenby et al., 1998) have reported a similar increase in magnetic field strength measured at the scalp about 170 ms after face onset (M170). An important social aspect of face processing is how the emotional content (especially negative) of a face is processed. There is growing evidence from both EEG and MEG studies that the face-selective N170/M170 component may also be sensitive to emotion. In particular, negative expressions have been shown to elicit larger amplitudes of the N170/M170 response than neutral expressions (Blau, Maurer, Tottenham, & McCandliss, 2007; Eger, Jedynak, Iwaki, & Skrandies, 2003; Lewis et al., 2003; Liu, Ioannides, & Streit, 1999; Streit et al., 1999).

Emotion processing is a complex process that can vary widely from individual to individual. Recent studies have shown that an individual’s ability to process emotional stimuli can depend on several factors, including personality traits such as introversion and extraversion (Fink, 2005; Hamann & Canli, 2004), emotional abilities (Freudenthaler, Fink, & Neubauer, 2006), and anxiety levels (Cornwell, Johnson, Berardi, & Grillon, 2006; Engels et al., 2007; Fox, Russo, & Georgiou, 2005; Rossignol, Philippot, Douilliez, Crommelinck, & Campanella, 2005), to name only a few. Each of these factors may influence performance on the task at hand and processing of the emotional faces. A popular task employed in studies of emotion processing (especially fear processing) is the detection of emotional stimuli during near-threshold presentation using backward masking (Liddell, Williams, Rathjen, Shevrin, & Gordon, 2004; Ohman, 2005; Pessoa, Japee, Sturman, & Ungerleider, 2006; Pessoa, Japee, & Ungerleider, 2005; Phillips et al., 2004; Soares & Ohman, 1993; Suslow et al., 2006; Williams, Palmer, Liddell, Song, & Gordon, 2004). However, few studies have looked at the direct effects of individual differences in fear detection thresholds on the neural correlates and dynamics of emotional face processing.

In a recent functional magnetic resonance imaging (fMRI) study, Pessoa et al. (2006) investigated the neural correlates of target detectability using a near-threshold fear detection task and found that masked fearful stimuli evoked stronger responses than neutral ones in the fusiform gyrus (FG) and amygdala only when subjects were able to reliably detect the fearful stimuli. In that study, a method based on signal detection theory was used to quantify the ability to detect fearful faces for each subject, and the results showed that a valence effect (fearful > neutral) was observed in BOLD signals only when subjects’ sensitivity to fearful faces was significantly higher than that expected based purely on chance.

The goal of the present study was to extend this work using magnetoencephalography to investigate the neural dynamics that underlie the modulation of emotion processing by fear detection, thus taking into consideration individual differences in target detectability among subjects. As the M170 response has been linked to face and emotion processing by others, in this study the effect of different abilities to detect emotion on the M170 face response was investigated. Of particular interest was the comparison of the M170 response to fearful and neutral faces between groups of subjects who could reliably detect briefly presented and masked fearful faces and those who could not. To this end, MEG data were collected while subjects performed a near-threshold fear detection task involving backward masking of emotional and neutral faces. Using a sensitivity index based on signal detection theory, groups of subjects who were aware or unaware of briefly presented and masked fearful faces were first identified (Pessoa et al., 2006; Szczepanowski & Pessoa, 2007). Neuromagnetic signals related to processing of emotional faces that were detected or undetected were then compared. Trait anxiety scores for a subset of subjects were used to examine the correlation of general anxiety levels with behavior. Furthermore, synthetic aperture magnetometry (SAM) was used for source localization to identify regions in the brain that gave rise to the valence-related differences in M170 response measured at the scalp.

Method

Subjects

Thirty-one normal volunteers (17 women) aged 28.9 (mean) ± 5.8 (SD) years participated in the study, which was approved by the National Institute of Mental Health (NIMH) Institutional Review Board. All subjects were in good health with no past history of psychiatric or neurological disease (as assessed by a medical history, physical and neurological examination administered by a board-certified clinician) and gave informed consent. Subjects had normal or corrected-to-normal vision, achieved via nonmetallic glasses during the scan. Eight of the 31 subjects also completed a State–Trait Anxiety Inventory (STAI), which is a questionnaire that measures anxiety levels in adults and differentiates between the temporary condition of “state anxiety” and the more general and long-standing quality of “trait anxiety” (Spielberger, 1983).

Trial Structure

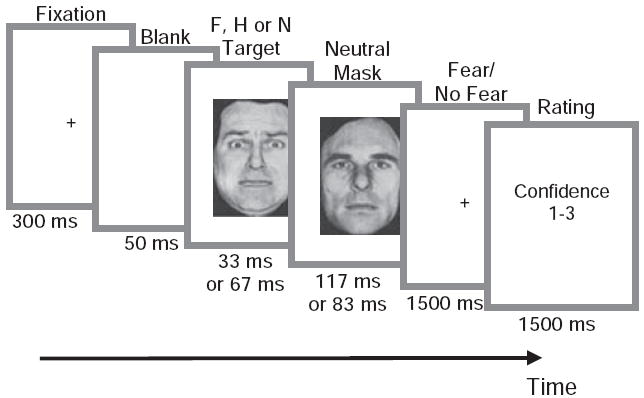

Trial structure and stimuli used were almost identical to those used in our previous fMRI study (Pessoa et al., 2006). In short, each trial began with a white fixation cross shown for 300 ms, followed by a 50-ms blank screen, followed by a pair of faces presented consecutively (see Figure 1). Each pair of faces consisted of a fearful, happy or neutral target face that was immediately followed by a neutral face, which served as a mask. The duration of the first face was either 33 or 67 ms. The total duration of the face pair was fixed at 150 ms. Subjects were instructed that the stimulus would always comprise two faces, but that the first would be very brief and the pair could appear as a single face. They were instructed to respond “fear” if they perceived fear, however briefly. Following the presentation of each face pair, subjects indicated “fear” or “no fear” with a button press using an MEG compatible fiber-optic response device. On each trial, subjects also rated the confidence (via button press) in their response on a scale of 1 to 3, with 1 = low confidence, and 3 = high confidence. Subjects were allowed 1.5 s to make their first “fear—no fear” response and another 1.5 s to provide their confidence rating. Total trial duration was 3.5 s. Each subject was shown 108 fearful, 54 happy and 108 neutral target faces at each stimulus duration (67 and 33 ms), in a total of 9 runs, each lasting 5 min 34 s. Thus within each run, the subject viewed 60 stimuli (belonging to 6 different conditions) presented in a random order. Faces subtended 4 degrees of visual angle. Target presentation times (onset and durations) were recorded along with MEG data via an ADC channel using an optical sensor placed directly in front of the projector lens during the experiment.

Figure 1.

Schematic of trial structure. On each trial, subjects fixated and viewed a fearful (F), happy (H), or neutral (N) target presented for 33 ms or 67 ms and masked by a neutral face presented for 117 ms or 83 ms. Subjects indicated with an appropriate button press whether they had seen a fearful face or not and then rated (via button press) the confidence in their response using a 1 to 3 scale. A modified version of a figure originally published in Cerebral Cortex (2006, Vol. 16, No. 3, Oxford University Press).
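The timing and trial counts given for the task can be checked with a few lines of arithmetic. The sketch below only restates numbers quoted in the text; the constant and function names are illustrative and not part of the original study materials.

```python
# Timing values quoted in the trial-structure description (all in ms).
FIXATION_MS = 300
BLANK_MS = 50
FACE_PAIR_MS = 150      # target + mask, fixed total duration
RESPONSE_MS = 1500      # window for the fear / no-fear response
CONFIDENCE_MS = 1500    # window for the confidence rating
TARGET_DURATIONS_MS = (33, 67)

# Number of targets shown at each stimulus duration, as listed in the text.
TARGETS_PER_DURATION = {"fearful": 108, "happy": 54, "neutral": 108}
N_RUNS = 9


def mask_duration_ms(target_ms):
    """The mask fills whatever remains of the fixed 150-ms face pair."""
    return FACE_PAIR_MS - target_ms


trial_ms = FIXATION_MS + BLANK_MS + FACE_PAIR_MS + RESPONSE_MS + CONFIDENCE_MS
total_trials = sum(TARGETS_PER_DURATION.values()) * len(TARGET_DURATIONS_MS)
trials_per_run = total_trials // N_RUNS
n_conditions = len(TARGETS_PER_DURATION) * len(TARGET_DURATIONS_MS)
```

Under these values the mask lasts 117 ms after a 33-ms target and 83 ms after a 67-ms target, in agreement with the Figure 1 caption.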

Stimuli

Face stimuli were obtained from the Ekman set (Ekman & Friesen, 1976), the Karolinska Directed Emotional Faces (KDEF) set (Lundqvist et al., 1998), as well as from a set developed and validated by Alumit Ishai at NIMH. A mix of faces from different face datasets was used to increase the number of unique faces, so that subjects would not habituate to any particular face. All faces were cropped and intensity normalized to the same mean intensity to minimize differences between the three face datasets. Fifty-four instances each of fearful, happy, and neutral faces were employed. Thus each fearful face was repeated only four times throughout the entire experiment (displayed twice for 33 ms and twice for 67 ms). Happy faces were included to closely match fearful faces in terms of low-level features, such as brightness around the mouth and eyes, as both fearful and happy faces tend to be brighter than neutral ones in these regions. Thus, the inclusion of happy faces precluded subjects from utilizing a strategy of “detecting” fearful faces by simply using such low-level cues. The inclusion of happy faces also precluded subjects from adopting a strategy of indicating “fear” whenever facial features deviated from those of a neutral face. However, happy faces were not included in the MEG data analyses.

Behavioral Data Analysis

Behavioral response data were analyzed using receiver operating characteristic (ROC) curves (Green & Swets, 1966; Macmillan & Creelman, 1991), in a manner previously described (Pessoa et al., 2006, 2005). In brief, ROC curves were created for each subject based on yes-no fear response and confidence rating data; sensitivity to fearful stimuli was determined using A’, the area under the ROC curve, and tested for significance for each individual. Fear detection was considered reliable when A’ values were significantly greater than 0.5 (Hanley & McNeil, 1982), the value of the area under the ROC curve associated with chance performance (y = x line). Otherwise, fearful stimuli were considered undetected. The p value adopted for statistical significance of A’ was 0.05.
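As an illustration of this kind of analysis, the sketch below builds an ROC curve from rating-category counts and computes the area under it with the trapezoidal rule. It rests on several assumptions that the text does not spell out: the yes-no response and the three confidence levels are combined into six ordered categories, the standard error follows the Hanley and McNeil (1982) approximation evaluated at the observed area, and the test against 0.5 is one-sided. All function names and the response counts are hypothetical.

```python
import math


def roc_area(target_counts, nontarget_counts):
    """Trapezoidal area under the ROC curve.

    Both arguments give response counts per rating category, ordered from
    the most confident "fear" response to the most confident "no fear"
    response (six categories for yes/no x three confidence levels).
    """
    n_target = sum(target_counts)
    n_nontarget = sum(nontarget_counts)
    hits = false_alarms = 0
    prev_hit_rate = prev_fa_rate = 0.0
    area = 0.0
    for t, n in zip(target_counts, nontarget_counts):
        hits += t
        false_alarms += n
        hit_rate = hits / n_target
        fa_rate = false_alarms / n_nontarget
        # Trapezoid between the previous and the current ROC point.
        area += (fa_rate - prev_fa_rate) * (hit_rate + prev_hit_rate) / 2
        prev_hit_rate, prev_fa_rate = hit_rate, fa_rate
    return area


def hanley_mcneil_se(area, n_target, n_nontarget):
    """Standard error of the ROC area (Hanley & McNeil approximation)."""
    q1 = area / (2 - area)
    q2 = 2 * area ** 2 / (1 + area)
    variance = (area * (1 - area)
                + (n_target - 1) * (q1 - area ** 2)
                + (n_nontarget - 1) * (q2 - area ** 2)) / (n_target * n_nontarget)
    return math.sqrt(max(variance, 0.0))


def p_above_chance(area, n_target, n_nontarget):
    """One-sided p value for area > 0.5 under a normal approximation."""
    se = hanley_mcneil_se(area, n_target, n_nontarget)
    if se == 0:
        return 0.0 if area > 0.5 else 1.0
    z = (area - 0.5) / se
    return 0.5 * math.erfc(z / math.sqrt(2))


# Hypothetical counts for one subject and one target duration.
fearful = [40, 20, 10, 12, 14, 12]      # responses to fearful targets
non_fearful = [8, 10, 12, 18, 25, 35]   # responses to non-fearful targets
a_prime = roc_area(fearful, non_fearful)
reliable = p_above_chance(a_prime, sum(fearful), sum(non_fearful)) < 0.05
```

A subject whose response distribution is the same for fearful and non-fearful targets yields an area of 0.5, which corresponds to the chance diagonal.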

MEG Data Acquisition

MEG data were acquired using a whole-head CTF MEG system (VSM MedTech Systems Inc., Coquitlam, BC, Canada). The system comprised a whole-head array of 275 first-order gradiometer/SQUID sensors housed in a magnetically shielded room (Vacuumschmelze, Germany). Synthetic third-gradient balancing was used to remove background noise during acquisition. Head localization was performed at the beginning and at the end of each session to determine the position of the subject’s head and quantify movement during the recording session. Subjects whose movement exceeded 1 cm from the start to end of the recording session were excluded from analysis. A sampling rate of 600 Hz and a bandwidth of 0 to 150 Hz were used for data acquisition.

MEG Data Analysis

Preprocessing

Each subject’s MEG data were first preprocessed using CTF software. Specifically, data were baseline corrected by removing DC offset based on each run. A high-pass filter at 0.7 Hz (zero phase-shift 4th order Butterworth IIR filter with roll-off of 160 dB/decade) was applied to remove low-frequency components along with a 3.1 Hz width notch filter at 60 Hz (zero phase-shift 2nd order Butterworth IIR filter with roll-off of 80 dB/decade) to remove power-line noise and its harmonics. As onset and duration of stimuli were critical to the study, markers were created using an optical sensor signal recorded on a supplementary ADC channel. Four types of markers were created tagging the onsets of the 4 stimulus types (2 target stimulus durations × 2 emotion types) along with markers identifying subjects’ button presses (yes-fear or no-fear).
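The roll-off values quoted for these filters follow a simple rule of thumb: a Butterworth filter of order n rolls off at about 20n dB/decade per pass. The sketch below assumes that the zero phase shift was obtained by running each filter forward and backward (two passes), which the text does not state explicitly; the function name is illustrative.

```python
def zero_phase_rolloff_db_per_decade(order, passes=2):
    """Asymptotic roll-off of a Butterworth filter applied `passes` times.

    Each pass of an order-n Butterworth filter contributes 20 * n dB/decade;
    forward-backward (zero-phase) filtering is assumed to use two passes.
    """
    return 20 * order * passes


high_pass_rolloff = zero_phase_rolloff_db_per_decade(4)  # 0.7-Hz high-pass
notch_rolloff = zero_phase_rolloff_db_per_decade(2)      # 60-Hz notch
```

Under this assumption the 4th-order high-pass gives 160 dB/decade and the 2nd-order notch gives 80 dB/decade, matching the figures in the text.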

Identification of face-sensitive sensors

To identify sensors that responded maximally to faces for each subject, an average waveform for each of the four conditions (fearful faces displayed for 33 ms, neutral faces displayed for 33 ms, fearful faces displayed for 67 ms and neutral faces displayed for 67 ms) at each sensor was created. The scalp distributions of the resulting average waveforms were examined to locate groups of sensors in the left and right hemispheres that responded maximally to faces around 170 ms after stimulus onset (traditionally called the M170 response). Four sensors in each hemisphere that showed the largest M170 response were picked for further analysis (see Supplementary Figure 1 for data from a representative subject). Data from these eight sensors were first baseline corrected and then low-pass filtered at 30 Hz (zero phase-shift 6th order Butterworth IIR filter with roll-off of 240 dB/decade) to remove high-frequency oscillations. Next, for each subject, data were rectified by computing the root-mean-square at each time point, and an average waveform for each group of face-responsive sensors for each of the four conditions was created. All further analyses were performed on these baseline corrected, low-pass filtered and rectified averages (one waveform for each condition for each hemisphere for each subject). A similar procedure was adopted to identify sensors that responded maximally to faces at approximately 100 ms poststimulus and referred to here as the M100 response. Two subjects did not show an M100 response and were excluded from the M100 analysis.
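The rectification step can be sketched as follows, assuming that the root-mean-square was taken across the four selected sensors of a hemisphere at each time point, which is how the description above reads. The function name and the toy values are hypothetical.

```python
import math


def rms_across_sensors(sensor_waveforms):
    """Root-mean-square across sensors at each time point.

    sensor_waveforms: one equal-length list of samples per sensor.
    Returns a single non-negative waveform with the same number of samples.
    """
    n_sensors = len(sensor_waveforms)
    return [math.sqrt(sum(v * v for v in samples) / n_sensors)
            for samples in zip(*sensor_waveforms)]


# Two toy sensors with opposite polarity (illustrative values only).
toy_sensors = [[3.0, -4.0, 0.0], [-3.0, 4.0, 0.0]]
rectified = rms_across_sensors(toy_sensors)
```

Because the values are squared before averaging, sensors on either side of a dipolar field pattern contribute to the same positive deflection rather than cancelling.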

Analysis of M170 response

To study differences in the M170 response based on emotion, the temporal location of the peak of the M170 response for each of the four conditions of interest (masked fearful and neutral targets, each displayed for 33 ms or 67 ms) was first identified for each hemisphere for each subject. As there was a certain amount of between-subjects variability in the temporal location of this peak (range of latencies across subjects: 120 to 188 ms, see Results), each subject’s data were then recentered about the M170 peak and a window of width ± 100 ms (± 60 samples) around the M170 peak was extracted for each subject. This allowed a comparison of the M170 response across conditions at the group level, which was done in two ways. First, to quantify the magnitude of the M170 valence effect, average amplitudes of the fearful and neutral traces in a ± 10 ms window around the M170 peak were computed for each subject, for each hemisphere, and for each stimulus duration. Next, these amplitudes were compared using a four-way repeated measures analysis of variance (ANOVA) with the factors group (between-subjects factor: normals and high performers; see Results for how these groups were defined) × hemisphere (left and right) × stimulus duration (67 ms and 33 ms) × valence (fearful and neutral). Second, to locate exactly where around the M170 peak the fear trace differed from the neutral trace, paired t tests were conducted at each time point to compare responses to fearful faces versus neutral faces for 67- and 33-ms conditions, separately. The M100 data were analyzed in a similar way.
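The recentering and windowing steps can be sketched as below. The 600-Hz sampling rate and the window widths come from the text; the peak search range, the function names, and the toy waveform are assumptions made for illustration.

```python
SAMPLING_RATE_HZ = 600  # acquisition rate quoted in the text


def ms_to_samples(ms):
    """Convert a duration in ms to a whole number of samples."""
    return round(ms * SAMPLING_RATE_HZ / 1000)


def find_peak(waveform, start, stop):
    """Index of the largest value in waveform[start:stop].

    The search range is a hypothetical parameter; the text does not state
    how the peak search was bounded.
    """
    return max(range(start, stop), key=lambda i: waveform[i])


def recenter(waveform, peak_index, half_width_ms=100):
    """Extract peak +/- half_width_ms, so the peak sits at the centre."""
    half = ms_to_samples(half_width_ms)
    if peak_index - half < 0 or peak_index + half >= len(waveform):
        raise ValueError("epoch too short to recenter around this peak")
    return waveform[peak_index - half:peak_index + half + 1]


def mean_around_peak(recentered, half_width_ms=10):
    """Mean amplitude in a +/- half_width_ms window around the centre."""
    centre = len(recentered) // 2
    half = ms_to_samples(half_width_ms)
    window = recentered[centre - half:centre + half + 1]
    return sum(window) / len(window)


# Toy rectified waveform with a single peak at sample 200 (illustrative only).
toy = [100 - abs(i - 200) for i in range(400)]
peak = find_peak(toy, 150, 250)
segment = recenter(toy, peak)
amplitude = mean_around_peak(segment)
```

At 600 Hz, ± 100 ms corresponds to ± 60 samples and ± 10 ms to ± 6 samples, so each recentered segment holds 121 samples and each amplitude estimate averages 13 samples.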

Source localization using event-related field synthetic aperture magnetometry (SAMerf)

SAMerf is a tool to estimate source activity of event-related responses within the brain based on sensor covariance (Cheyne, Bostan, Gaetz, & Pang, 2007; Robinson, 2004). It is based on the traditional SAM technique (Vrba & Robinson, 2001), which uses a beamformer method to create a linear combination of MEG sensors, such that MEG signals from the desired source are summed constructively while those at other locations are suppressed. The procedure is repeated independently for a specified set of voxel locations (in this case 6 mm × 6 mm × 6 mm voxels covering the entire brain). Unlike traditional SAM, SAMerf accentuates phase-locked activity by averaging virtual channels at each voxel location and creating a source image based on the average. To compute the SAMerf maps, a traditional SAM covariance matrix was first calculated for a window beginning 300 ms prior to stimulus onset and ending 300 ms after stimulus onset. Since SAMerf primarily localizes the evoked response, which tends to be mainly low frequency, a frequency band of 1–30 Hz was used to compute this matrix. In order to perform the SAM analyses, high resolution anatomical MRI scans were required for each subject. These were obtained on a 1.5 Tesla or 3 Tesla GE scanner using standard SPGR (FOV = 24 cm; 256 × 256 matrix; flip angle = 20°; Bandwidth = 15.63; 124 slices; slice thickness = 1.3 mm) or MPRAGE (FOV = 22 cm; 256 × 256 matrix; flip angle = 12°; Bandwidth = 31.25; 124 slices; slice thickness = 1.2 mm) anatomical sequences. Each subject’s anatomical scan was spatially normalized to the Talairach brain atlas (Talairach & Tournoux, 1988) using AFNI (Cox, 1996).

Event-related field SAM (SAMerf) was then computed for an active window of ± 20 ms around the peak of the M170 response for each subject and a control window spanning 40 ms of prestimulus baseline, from −115 ms to −75 ms relative to stimulus onset. In SAMerf (Cheyne et al., 2007; Robinson, 2004), the estimated time series of activity at each voxel are averaged around an event before calculating mean source power in the time windows of interest. The resulting SAMerf images represent the logarithmic ratio of mean power in the active window to mean power in a control window for each condition. Each subject’s SAMerf images were then converted to z-scores (by subtracting the mean and rescaling the values such that the standard deviation equals 1) and coregistered to that subject’s Talairach-normalized anatomical scan. These images were then entered into a group-level paired t test to identify sources that differed in their response to fearful compared to neutral targets for the 67- and 33-ms conditions separately. To correct for multiple comparisons, a cluster size threshold correction was applied to the resulting statistical maps, allowing only clusters with a volume of at least 864 mm³ and a p threshold < .01. This threshold was determined by running Monte Carlo simulations using AlphaSim in AFNI and was associated with a probability < .01 corrected for multiple comparisons.
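The per-voxel quantities described above can be illustrated with a short sketch. It is not the SAMerf implementation: the base of the logarithm (natural log here), the use of the population standard deviation for the z-scores, and the assumption that the statistical maps stayed on the 6-mm grid are all choices made for illustration, and the function names are hypothetical.

```python
import math
from statistics import mean, pstdev


def log_power_ratio(active, control):
    """Log ratio of mean power in the active vs. the control window.

    active, control: samples of the trial-averaged virtual-channel time
    series at one voxel. Natural log is an assumption.
    """
    p_active = mean(v * v for v in active)
    p_control = mean(v * v for v in control)
    return math.log(p_active / p_control)


def zscore(values):
    """Subtract the mean and scale to unit (population) standard deviation."""
    m = mean(values)
    s = pstdev(values)
    return [(v - m) / s for v in values]


# Cluster-size threshold expressed in voxels, assuming 6-mm isotropic voxels.
VOXEL_EDGE_MM = 6
MIN_CLUSTER_MM3 = 864
min_cluster_voxels = MIN_CLUSTER_MM3 // VOXEL_EDGE_MM ** 3
```

On a 6-mm grid each voxel occupies 216 mm³, so the 864-mm³ minimum corresponds to four contiguous voxels.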

Results

Of the 31 subjects scanned, four subjects exhibited considerable movement during the scan and were excluded from analysis (see Methods for exclusion criterion).

Behavior

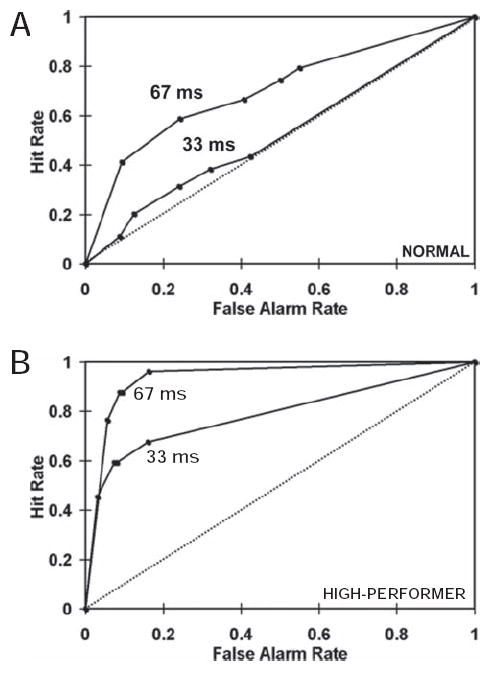

To characterize behavioral performance, for each subject, the area under the ROC curve (called A’) was determined for the 33- and 67-ms conditions, separately. A total of 12/27 subjects reliably detected 67-ms target faces (A’ values significantly greater than 0.5; p < .05), but could not detect 33-ms targets (A’ values not significantly greater than 0.5; p > .1). These subjects who were aware of masked 67-ms targets but unaware of masked 33-ms targets were referred to as “normals” (Figure 2A). Nine of the 27 subjects were able to reliably detect both 33- and 67-ms masked targets (p < .05). These subjects, who were aware of both 67- and 33-ms masked targets, were referred to as “high performers” (Figure 2B). An additional 3 participants exhibited a trend toward being high performers (0.05 < p < .1 at 33 ms) but did not qualify for inclusion in either group, and were therefore excluded from further analysis. Three subjects were discarded as they were unable to detect either 67-ms or 33-ms masked targets. Twelve “normals” and 9 “high performers” were used in the final analyses.

Figure 2.

Receiver Operating Characteristic (ROC) curves used to quantify sensitivity to fearful target faces. Chance performance is indicated by the diagonal line (i.e., the same number of false alarms and hits) and better-than-chance behavior is indicated by curves that extend to the upper left corner. The area under the ROC curve is the nonparametric sensitivity measure A’. A. ROC curves from a representative individual from the group of 12 normals who reliably detected 67-ms targets but were not able to detect 33-ms targets. B. ROC curves from a representative individual from the group of 9 high performers who reliably detected both 67- and 33-ms targets.
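The grouping rule described in the Behavior section can be written as a small decision function. This is a sketch of the rule as stated in the text, taking the per-subject p values for A’ at each duration as input; the function name, the labels, and the handling of cases the text does not mention are assumptions.

```python
def classify_subject(p33, p67, alpha=0.05, trend=0.10):
    """Assign a subject to a group from the p values of A' at 33 and 67 ms."""
    if p67 >= alpha:
        # Covers subjects who detected neither duration; other patterns
        # with undetected 67-ms targets are not described in the text.
        return "excluded (67-ms targets not detected)"
    if p33 < alpha:
        return "high performer"
    if p33 < trend:
        return "excluded (trend at 33 ms)"
    return "normal"


# Subject counts reported in the Results.
n_scanned, n_moved = 31, 4
n_normals, n_high, n_trend, n_nondetectors = 12, 9, 3, 3
n_analyzed = n_normals + n_high
```

The four behavioral categories account for all 27 subjects who remained after the movement exclusion, and 21 of them entered the final analyses.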

MEG

Sensor analysis

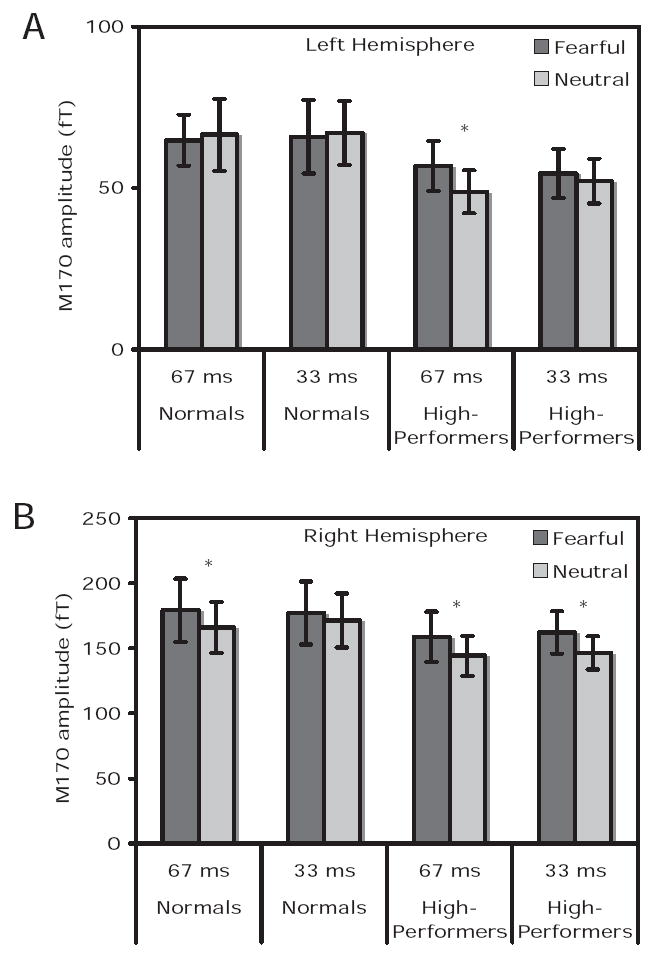

Statistical analyses of the average waveforms centered around the M170 response (see Figure 3A and B) revealed a strong laterality effect (right > left; F(1, 19) = 49.8; p = .000001), a main effect of valence (fearful > neutral; F(1, 19) = 8.5; p = .009) and a hemisphere by valence interaction (F(1, 19) = 6.7; p = .02). No significant interactions by group were observed. However, since the behavioral data provided a strong a priori hypothesis that the two groups would show differences in the M170 valence effect, these data were further explored via pairwise comparisons separately for the two groups.

Figure 3.

Average M170 amplitudes in femtoTesla (fT) within a 20-ms window centered around the peak. Panels A and B show average amplitudes for the two groups of subjects for 67- and 33-ms conditions, for left and right hemisphere sensors, respectively. Dark gray boxes are for fearful targets and light gray boxes for neutral targets. Asterisks indicate those conditions where the response to fearful targets is greater than the response to neutral targets at p < .05.

For the group of normals, for the right hemisphere data, pairwise comparisons revealed a significant difference between M170 responses to masked fearful and neutral target stimuli displayed for 67 ms. Specifically, in a 20-ms window centered about the peak, the M170 amplitude for the fearful trace was significantly greater than the neutral trace, but only for the 67-ms condition (one-tailed paired t test, t(11) = 1.8; p = .05). No difference between the fearful and neutral traces was seen for the group of normals for the 33-ms condition (t(11) = 0.8; p = .22). Left hemisphere pairwise comparisons did not reveal any significant results for this group.

Similar pairwise comparisons for the group of high performers revealed significantly greater right hemisphere M170 responses to fearful relative to neutral targets for both 67-ms (t(8) = 2.2; p = .03) and 33-ms (t(8) = 1.8; p = .05) conditions. For the left hemisphere data, only the comparison of M170 responses for fearful relative to neutral targets displayed for 67 ms reached significance (t(8) = 2.1; p = .03).

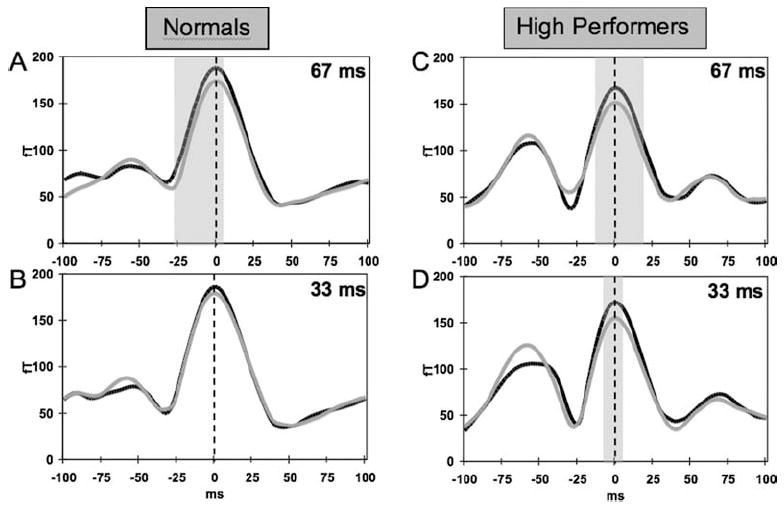

To further probe the temporal location of this difference, the right hemisphere fearful and neutral traces (which showed the stronger valence effects) were compared at each time point in the recentered data for each group using paired t tests. These comparisons showed that for the group of normals the response to fearful targets was greater than the response to neutral targets in a window ranging from −27 ms to + 3 ms around the peak of the M170 for the 67-ms condition (p < .05). No significant difference was observed for the 33-ms condition. Figures 4A and 4B show the group-averaged right hemisphere fearful and neutral waveforms for the normal group for the 67- and 33-ms conditions, respectively. For the high performing group, the response to fearful targets differed significantly from neutral targets (p < .05) in a window ranging from −12 ms to + 20 ms around the peak of the M170 for the 67-ms condition (Figure 4C). For the 33-ms condition, the valence difference for the high performing group was seen in a smaller window, ranging from about −7 ms to + 2 ms around the peak of the M170 response (Figure 4D). In summary, differential M170 responses for fearful versus neutral faces were observed only when subjects were able to reliably detect the fearful faces.

Figure 4.

Average recentered waveforms (see Methods) in right hemisphere face-sensitive sensors for the two groups of subjects for the four conditions. Panels A and B show average waveforms for the group of normals for the 67- and 33-ms target durations, respectively. Panels C and D show data for the group of high performers for the 67- and 33-ms conditions, respectively. Black traces are for fearful targets and gray traces are for neutral targets. Vertical dashed line indicates location of M170 peak. Y-axis shows waveform amplitude in femtoTesla (fT) and x-axis shows time around peak of M170 response in milliseconds (ms). Shaded areas indicate time points at which difference between fearful and neutral target faces was significant (p < .05).
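The time-point-by-time-point comparison behind the shaded regions can be sketched as a paired t statistic computed at every sample of the recentered waveforms. The sketch returns only the t values and degrees of freedom; converting them to p values requires a t distribution, which is left out here. Function names and toy data are hypothetical.

```python
import math
from statistics import mean, stdev


def paired_t(a, b):
    """Paired t statistic and degrees of freedom for matched samples.

    Raises ZeroDivisionError if all paired differences are identical.
    """
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    return mean(diffs) / (stdev(diffs) / math.sqrt(n)), n - 1


def pointwise_paired_t(fear, neutral):
    """t statistic at each time point.

    fear, neutral: one recentered waveform per subject, in the same
    subject order, all of equal length.
    """
    return [paired_t(f, n)[0] for f, n in zip(zip(*fear), zip(*neutral))]


# Four toy subjects, two time points each (illustrative values only).
toy_fear = [[3.0, 1.0], [5.0, 2.0], [7.0, 3.0], [9.0, 5.0]]
toy_neutral = [[1.0, 1.0], [2.0, 1.0], [3.0, 4.0], [4.0, 4.0]]
t_values = pointwise_paired_t(toy_fear, toy_neutral)
```

With n subjects in a group, each of these tests has n − 1 degrees of freedom.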

The latency of the peak of the M170 response was also examined via a similar analysis of variance. No significant effects of group, hemisphere, stimulus duration or valence, or interactions between these factors were observed.

With respect to the M100, a four-way analysis of variance (group × hemisphere × duration × valence) revealed a strong laterality effect, right > left; F(1, 17) = 98.9; p = .00000002; a group by stimulus duration interaction, F(1, 17) = 7.9; p = .01, and a group by hemisphere by duration interaction, F(1, 17) = 7.1; p = .02. These interactions were further explored via pairwise comparisons between responses to fearful and neutral targets for the two groups separately. For the normal group, these tests showed no significant differences between responses to fearful and neutral targets for either the 67-ms, left: t(9) = 0.7; p = .5, right: t(9) = 0.9; p = .4; or 33-ms, left: t(9) = −0.1; p = .9, right: t(9) = 0.5; p = .6, conditions. Similar pairwise analyses of M100 data for the high-performing group revealed a valence effect only for the 33-ms condition. In this condition, both left and right hemispheres showed a strong trend toward larger M100 magnitudes for neutral relative to fearful targets, left: t(8) = −1.5; p = .08; right: t(8) = −1.7; p = .06. Pairwise comparisons for the 67-ms condition did not yield significant results, left: t(8) = −0.7; p = .5, right: t(8) = −0.4; p = .7. Latency of the M100 response was also examined, but no significant effects of group, hemisphere, duration or valence, or interactions between these factors were found.

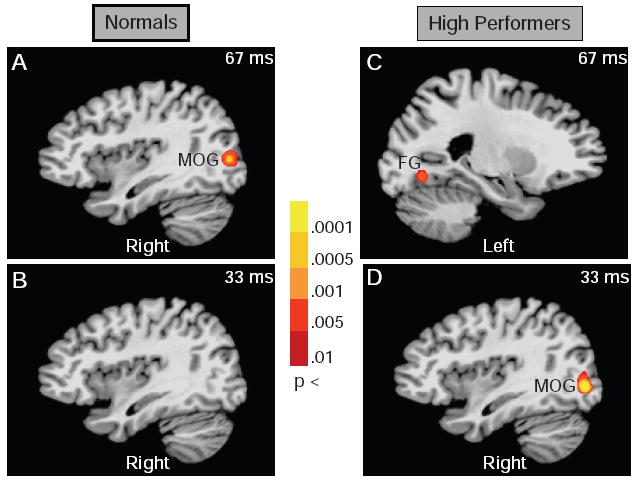

Source analysis

SAMerf images were used to localize sources of the M170 evoked response for each subject. Only eight subjects of the nine were included in the SAM analysis for the group of high performers, as an anatomical MRI scan (required for SAM computation) for one subject could not be collected due to relocation. In the normal group, all 12 subjects were included in the SAM analysis. For the normal group, a paired two-sample t test (thresholded at p < .01, corrected) between the SAM volumes for fearful targets versus neutral targets displayed for 67 ms showed a significant source localized to the right middle occipital gyrus (MOG; peak Talairach coordinates: x = 36, y = −78, z = 5; Figure 5A). Critically, however, this same location did not show a difference between fearful and neutral target faces displayed for 33 ms for this group (see Figure 5B). In contrast, for the high performing group, statistical analysis of the SAM volumes (thresholded at p < .01, corrected) for fearful versus neutral targets revealed a source localized to the left FG (peak Talairach coordinates: x = −22, y = −66, z = −8; Figure 5C) for the 67-ms condition, and one localized to the right MOG (peak Talairach coordinates: x = 36, y = −78, z = −2; Figure 5D) for the 33-ms condition. Thus, the valence effect that was observed at the sensor level was localized to face processing areas within the brain (Grill-Spector, Knouf, & Kanwisher, 2004; Haxby et al., 2001; Ishai, Ungerleider, Martin, & Haxby, 2000; Ishai, Ungerleider, Martin, Schouten, & Haxby, 1999; Kanwisher, McDermott, & Chun, 1997), but only for conditions in which the subject could reliably detect the fearful stimulus.

Figure 5.

SAMerf results: Images show paired t test comparison of SAMerf volumes of M170 evoked response for fearful versus neutral target faces. Panels A and B show SAMerf results for group of normals for 67- and 33-ms conditions, respectively. Panels C and D show SAMerf data for group of high performers for 67- and 33-ms conditions, respectively. Threshold for paired t test: p < .01 (corrected). Frequency band: 1–30 Hz; Active window: 40-ms adaptive window around M170 for each subject; Control window: −115 ms to −75 ms prestimulus baseline. In panels A and D, significant sources were localized to the right middle occipital gyrus (MOG) and in panel C to the left fusiform gyrus (FG).

Trait Anxiety Scores

Five of the 12 normal subjects and 3 of the 9 high-performing subjects completed the STAI. Trait anxiety data for these eight subjects were tabulated and compared across the two groups of subjects. A nonparametric Mann–Whitney U test showed that the trait anxiety scores for the group of high performers, N = 3; 35.3 (mean) ± 1.5 (SD), were significantly higher (U = 0.5; p = .04) than those of the group of normal individuals, N = 5; 25.4 (mean) ± 5.1 (SD). Furthermore, the trait anxiety scores for these 8 subjects showed a significant positive correlation with the A’ values for the 33-ms condition (nonparametric Spearman rank order correlation; R = 0.8; p = .03). Trait anxiety did not correlate significantly with A’ values for the 67-ms condition (R = 0.5; p = .2) or with the average M170 amplitude difference in a ± 10 ms window between fear and neutral traces for the 33-ms (R = −0.2; p = .6) or 67-ms conditions (R = −0.4; p = .4).
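For readers unfamiliar with the Mann–Whitney U statistic, the sketch below computes it directly from its definition as a count over all pairs of observations, with ties counted as one half. The scores used are invented for illustration and are not the study's STAI data; the function name is hypothetical.

```python
def mann_whitney_u(x, y):
    """Smaller of the two Mann-Whitney U statistics for samples x and y.

    U for x counts the (x_i, y_j) pairs in which x_i exceeds y_j, with
    ties contributing 0.5; U for y is the complement out of len(x) * len(y).
    """
    u_x = sum(1.0 if xi > yj else 0.5 if xi == yj else 0.0
              for xi in x for yj in y)
    u_y = len(x) * len(y) - u_x
    return min(u_x, u_y)


# Invented scores for groups of 3 and 5 (NOT the study's data).
group_a = [34, 35, 37]
group_b = [20, 22, 25, 26, 34]
u = mann_whitney_u(group_a, group_b)
```

With group sizes of 3 and 5 there are 15 pairs, so U ranges from 0 (complete separation) to 7.5 (complete overlap); a value of 0.5 arises when the groups are separated except for a single tied pair.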

Discussion

The current study examined the link between individual differences in sensitivity to fearful faces and the emotional modulation of the M170 face-selective response. Subjects were grouped based on their ability to reliably detect fearful faces during a fear detection task and the difference between the evoked response to masked fearful and neutral target faces was measured.

First, M170 amplitudes were larger for fearful faces than for neutral faces only in those conditions where subjects could reliably detect the fearful stimuli. Second, using SAM techniques, this valence effect of the M170 was localized to face processing areas located on the FG and MOG. These results parallel those of our fMRI study (Pessoa, 2005; Pessoa et al., 2006), where activation in the FG and amygdala was found only for conditions in which subjects could reliably detect the fearful faces. There has been considerable debate in the literature about the automaticity of emotion processing, with some researchers suggesting that emotional stimuli (especially fearful) may be processed without reaching conscious awareness (Dolan & Vuilleumier, 2003; Ohman, 2002). Some studies have reported brain activations in response to emotional stimuli even when these stimuli were not consciously perceived (as assessed by the subjects’ subjective report; Morris, Ohman, & Dolan, 1998; Ohman, Esteves, & Soares, 1995; Whalen et al., 1998). The current study, along with the previous fMRI study, assessed perception of emotional stimuli via objective signal detection theory measures. Both showed brain activations only when subjects reported having seen the emotional stimulus. Thus both studies provide important support for the alternate notion that emotion processing may not be an entirely automatic process.

Third, trait anxiety scores from a subset of subjects indicated that those subjects who were better able to detect briefly presented fearful faces had higher generalized anxiety levels. Trait anxiety is a measure of a subject’s “anxiety proneness and refers to a general tendency to respond with anxiety to perceived threats in the environment” (Spielberger, 1983). In the present study, the high-performing group, which detected rapidly presented fearful faces better and more reliably, showed higher trait anxiety scores. Trait anxiety not only differentiated the two groups categorically; the significant correlation between trait anxiety scores and A’ at 33 ms (the condition that critically differentiated the two groups of subjects) also showed that sensitivity to rapidly presented fearful faces varied parametrically with general anxiety levels. This suggests that the higher a person’s anxiety proneness, the more aware that person is of threatening or fearful stimuli in the environment. Thus, it is likely that the behavioral differences observed between the two groups reflect underlying differences in trait anxiety. Taken together, these results indicate that successful target detection is tightly linked to the effect of valence on the M170 face response and that individual differences between subjects (here, generalized anxiety levels) are an important factor to consider in studies of emotion processing.

Individual Differences in M170

A survey of the literature related to the effects of emotion (especially negative) on face processing reveals mixed results. On the one hand, there is growing evidence from EEG and MEG studies that emotion modulates the N170/M170 response. For example, using EEG, several groups (Blau et al., 2007; Eger et al., 2003; Williams et al., 2006) have reported that negative facial expressions, compared to neutral and positive ones, elicited the largest amplitude change at the N170. Similarly, using MEG, several investigators (Lewis et al., 2003; Liu et al., 1999; Streit et al., 1999) have demonstrated that the M170 is sensitive to emotional expressions. On the other hand, there are both EEG and MEG studies (Ashley, Vuilleumier, & Swick, 2004; Esslen, Pascual-Marqui, Hell, Kochi, & Lehmann, 2004; Herrmann et al., 2002; Ishai, Bikle, & Ungerleider, 2006), including some masking studies of subliminal perception (Liddell et al., 2004; Williams et al., 2004), that have found no effect of emotion on the N170/M170, reporting instead only an affect-sensitive later component, typically around 230 ms poststimulus. What might explain this discrepancy regarding the N170/M170 in the literature?

Emotion processing is complex, and an individual’s ability to detect and process emotional information can depend on several factors. There are, of course, differences in visual perception per se among subjects. For example, some studies have reported individual differences in contrast sensitivity (Peterzell & Teller, 1996) and in the ability to detect low-contrast patterns (Ress, Backus, & Heeger, 2000). Accordingly, one interpretation of the present data could be that, due to differences in visual perception, some subjects may be better at detecting rapidly presented and masked stimuli. Hence, the difference in valence modulation of the M170 response between the two groups of subjects shown here may reflect differences in the speed and/or efficiency of processing of these rapidly presented faces.

Alternatively, other factors can influence emotion processing, including personality traits such as introversion and extraversion (Bono & Vey, 2007; Canli, 2004; Fink, 2005; Hamann & Canli, 2004), emotional abilities (Freudenthaler et al., 2006; Meriau et al., 2006), affective synchrony (Rafaeli, Rogers, & Revelle, 2007), neuroticism (Haas, Omura, Constable, & Canli, 2007), mood and anxiety levels (Cornwell et al., 2006; Engels et al., 2007; Etkin et al., 2004; Fox et al., 2005; McClure et al., 2007; Most, Chun, Johnson, & Kiehl, 2006; Rossignol et al., 2005; Somerville, Kim, Johnstone, Alexander, & Whalen, 2004; Vasey, el-Hag, & Daleiden, 1996; Wang, LaBar, & McCarthy, 2006), stress (Zorawski, Blanding, Kuhn, & LaBar, 2006; Zorawski, Cook, Kuhn, & LaBar, 2005) and psychopathy (Gordon, Baird, & End, 2004). All of these factors may influence performance on a task that involves emotional faces and, in turn, the dynamics of face processing itself.

Indeed, the present study specifically shows that detection of fearful faces presented in a masked fashion is linked to valence modulation of the M170 response, but that this modulation is seen only under conditions where a subject reliably detects the emotional stimuli. Anxiety measures obtained from some of the subjects indicate that the performance differences reported in this study may in fact be driven by underlying differences in anxiety levels among the subjects. Most previous studies examining the N170/M170 and its modulation by emotion did not account for individual differences between subjects. Thus, it is conceivable that this key factor may, at least in part, account for the conflicting reports in the literature of the effects of emotion on the temporal dynamics of face processing.

Although differences in the M170 valence effects were observed between the two groups, this difference did not manifest itself as a group-by-duration-by-valence interaction. A likely reason for the lack of an interaction in the ANOVA is the combination of subtle valence effects and the small number of subjects in the two groups (normals = 12; high performers = 9). This limitation could be overcome by collecting data from a larger group of subjects. With respect to the correlation of the valence effects with anxiety scores, STAI trait anxiety scores have previously been reported to correlate with measures of depression (Dobson, 1985; Nitschke, Heller, Imig, McDonald, & Miller, 2001). Thus, further work is necessary to assess the relative contributions of anxiety and depression to the different behavioral profiles of the two groups of subjects in this study.

One issue that this study does not address is causality between behavior and the valence modulation of the M170. Based on the current results, one can surmise a link between a subject’s ability to detect masked fearful faces and the modulation of that subject’s M170 response by the emotional content of the masked faces. However, it remains an open question whether successful target detection is required for valence modulation of the M170 response, or whether perception of fear depends on an enhanced M170 response for fearful compared to neutral faces. Further experiments, perhaps using transcranial magnetic stimulation (to suppress activity in one or more regions involved in emotion processing, such as the FG and amygdala), may be able to address this issue of causality.

Source Localization of M170

SAM results show that the peak of the M170 response was localized to the FG and MOG. Both of these regions have been shown by others to be involved in face processing (Grill-Spector et al., 2004; Haxby et al., 2001; Ishai et al., 1999, 2000; Kanwisher et al., 1997). The difference between fearful and neutral conditions was localized to these regions only for situations where the subjects were able to reliably detect the fearful stimulus. It is interesting that the SAM analysis for the 67-ms data of the normal group and the 33-ms data of the high-performing group localized to the same region in right MOG. This paralleled the behavioral similarity that was evident from the ROC curves (see Figure 2) and the average sensitivity for the two groups for these target durations. The average A’ for the group of normals for the 67-ms target duration was 0.65, which was remarkably close in value to the average A’ of 0.64 for the group of high performers for the 33-ms target duration. Thus, the behavior and M170 source location of the high-performing group for the 33-ms condition mimicked those of the normal group for the 67-ms condition.
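To make the sensitivity values discussed above more concrete, the sketch below computes a nonparametric area under an ROC curve by the trapezoidal rule, where an area of 0.5 corresponds to chance and 1.0 to perfect detection. It assumes that sensitivity is taken as the area under ROC points built from (false alarm rate, hit rate) pairs; the function name and the example points are hypothetical, and the exact A’ computation used in this study may differ.

```python
def roc_area(points):
    """Trapezoidal area under an ROC curve.

    points: iterable of (false_alarm_rate, hit_rate) pairs, each in [0, 1].
    The end points (0, 0) and (1, 1) are added automatically.
    """
    curve = sorted(set(points) | {(0.0, 0.0), (1.0, 1.0)})
    area = 0.0
    # Sum the trapezoids between consecutive ROC points.
    for (x0, y0), (x1, y1) in zip(curve, curve[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area


# Hypothetical ROC points (illustration only, NOT the study's data).
example_points = [(0.1, 0.3), (0.3, 0.6), (0.6, 0.85)]
print(roc_area(example_points))

# Points on the diagonal give chance-level sensitivity.
print(roc_area([(0.25, 0.25), (0.5, 0.5)]))
```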

The average A’ of 0.82 for the high-performing group for the 67-ms condition is a significant deviation from the value expected for chance performance (A’ = 0.5), and this was associated with a more anterior source in the left FG compared with the right MOG. It is possible that anterior regions in the face processing network are more involved in the processing of a face and its emotional content when the target object’s visibility is higher (i.e., when images are more clearly visible to subjects). Similarly, earlier areas in the face processing network may be involved when subjects, although able to detect faces better than chance, have a slightly lower sensitivity to fearful faces that are presented for short durations and masked by neutral faces.

There is some evidence for the idea of a link between “clarity” or “recognizability” of an object with the locus of cortical activity in visual processing areas. For example, in an fMRI study involving subliminal visual priming of masked object images, Bar and colleagues (Bar et al., 2001) showed that activity in the temporal lobe strengthened and was situated more anteriorly as subjects were able to more confidently identify the objects. This phenomenon may in turn be linked to the decline in temporal frequency tuning that has been observed along the object processing stream. For example, McKeeff and colleagues (McKeeff, Remus, & Tong, 2007; McKeeff & Tong, 2007) have found that early processing areas respond best to rapidly presented objects, while more anterior regions such as the FG respond best to objects presented at a slower rate. The present results are thus consistent with the idea that FG activation is linked to perception occurring under “more confident” visual awareness, compared to activation in earlier processing areas (such as the MOG in the present study), which may be linked more to perception under lower confidence.

The larger M170 amplitudes observed in the right hemisphere compared to the left hemisphere are consistent with reports of greater right hemisphere activation during face perception in general (Yovel & Kanwisher, 2004). This lateralization effect is also consistent with a model of emotion processing (Heller, 1993) that suggests that a right-hemisphere network (including frontal, parietal, temporal, and occipital regions) is involved in detecting and responding to threat-related stimuli. Further, activity in the nodes of this right-hemisphere network has also been shown to depend on the level of threat and anxiety, especially anxious arousal (Engels et al., 2007; Heller, Nitschke, Etienne, & Miller, 1997; Nitschke, Heller, Palmieri, & Miller, 1999). The greater right-sided activity seen in this study, especially in the individuals with higher trait anxiety levels, may thus be related to the functional specialization of the right hemisphere in processing threat-related information.

The lateralization of the SAM group results matched the results observed at the scalp, except for the 67-ms condition in the high-performing group. For this condition, a significant difference between fearful and neutral targets was found in both hemispheres at the sensor level. At the source level, however, only a lateralized source in left FG was found in the SAM results for this group. On further examination of these data, another source in right FG was revealed at a lower threshold (p < .05, uncorrected). Thus, an asymmetry between left and right hemisphere intersubject variance in the relative difference between fearful and neutral targets may be the reason for the left lateralization of this result at the source level.

Magnitude of M100

Previous studies of face perception have suggested that the M100 response, although often greater for faces than other stimuli, such as houses (Liu et al., 2002), buildings (Herrmann et al., 2005) and scrambled faces (Herrmann et al., 2005), is more sensitive to image features and reflects an early perceptual processing phase (Halgren et al., 2000; VanRullen & Thorpe, 2001). With regard to the sensitivity of the M100 to emotion, there are very few reports in the literature that find valence effects at this early latency (Williams et al., 2006). The larger M100 response observed in the present study for neutral targets compared to fearful targets for the 33-ms condition in the high-performing group is an unexpected result that warrants further investigation.

Conclusions

The M170 is characteristically associated with face processing, and there is growing evidence that this component is emotion-sensitive. The present study provides evidence that individual differences in behavior among subjects (in this case, sensitivity to masked fearful faces) are linked to the temporal dynamics of emotional face processing. Both the presence and the absence of the M170 valence modulation were demonstrated within the same study by taking into account behavioral differences between individual subjects. Specifically, those subjects who could reliably detect masked fearful faces showed larger M170 amplitudes for those fearful faces compared to neutral faces. This suggests that behavioral performance and personality measures, such as trait anxiety, are important aspects to consider when studying emotion processing.

Supplementary Material

Identification of Face-Selective Sensors. A. Butterfly plot of all responses to all stimuli across all sensors for a representative subject. X-axis represents time in milliseconds (ms) after stimulus onset. Y-axis represents strength of magnetic field in femtotesla (fT). B. Scalp distribution of the M170 response to faces (located from about 120 ms to about 150 ms after stimulus onset for this subject) with the most face-sensitive sensors in each hemisphere circled in black (4 on right and 4 on left). Colors represent strength of magnetic field in femtotesla (fT); red: field into (sink), blue: field out (source).

Acknowledgments

This study was supported by the National Institute of Mental Health Intramural Research Program. Luiz Pessoa was supported in part by 1 R01 MH071589-01. We thank Alumit Ishai for making available a set of emotional faces, Tom Holroyd for help with data collection and analysis, and Tracy Jill Doty and Stacia Friedman-Hill for providing the STAI data.

References

- Ashley V, Vuilleumier P, Swick D. Time course and specificity of event-related potentials to emotional expressions. Neuroreport. 2004;15:211–216. doi: 10.1097/00001756-200401190-00041.

- Bar M, Tootell RB, Schacter DL, Greve DN, Fischl B, Mendola JD, et al. Cortical mechanisms specific to explicit visual object recognition. Neuron. 2001;29:529–535. doi: 10.1016/s0896-6273(01)00224-0.

- Bentin S, Allison T, Puce A, Perez E, McCarthy G. Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience. 1996;8:551–565. doi: 10.1162/jocn.1996.8.6.551.

- Blau VC, Maurer U, Tottenham N, McCandliss BD. The face-specific N170 component is modulated by emotional facial expression. Behavior & Brain Function. 2007;3:7. doi: 10.1186/1744-9081-3-7.

- Bono JE, Vey MA. Personality and emotional performance: Extraversion, neuroticism, and self-monitoring. Journal of Occupational Health Psychology. 2007;12:177–192. doi: 10.1037/1076-8998.12.2.177.

- Botzel K, Grusser OJ. Electric brain potentials evoked by pictures of faces and non-faces: A search for “face-specific” EEG-potentials. Experimental Brain Research. 1989;77:349–360. doi: 10.1007/BF00274992.

- Canli T. Functional brain mapping of extraversion and neuroticism: Learning from individual differences in emotion processing. Journal of Personality. 2004;72:1105–1132. doi: 10.1111/j.1467-6494.2004.00292.x.

- Cheyne D, Bostan AC, Gaetz W, Pang EW. Event-related beamforming: A robust method for presurgical functional mapping using MEG. Clinical Neurophysiology. 2007;118:1691–1704. doi: 10.1016/j.clinph.2007.05.064.

- Cornwell BR, Johnson L, Berardi L, Grillon C. Anticipation of public speaking in virtual reality reveals a relationship between trait social anxiety and startle reactivity. Biological Psychiatry. 2006;59:664–666. doi: 10.1016/j.biopsych.2005.09.015.

- Cox RW. AFNI: Software for analysis and visualization of functional magnetic resonance neuroimages. Computers & Biomedical Research. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014.

- Dobson KS. An analysis of anxiety and depression scales. Journal of Personality Assessment. 1985;49:522–527. doi: 10.1207/s15327752jpa4905_10.

- Dolan RJ, Vuilleumier P. Amygdala automaticity in emotional processing. Annals of the New York Academy of Sciences. 2003;985:348–355. doi: 10.1111/j.1749-6632.2003.tb07093.x.

- Eger E, Jedynak A, Iwaki T, Skrandies W. Rapid extraction of emotional expression: Evidence from evoked potential fields during brief presentation of face stimuli. Neuropsychologia. 2003;41:808–817. doi: 10.1016/s0028-3932(02)00287-7.

- Ekman P, Friesen WV. Pictures of facial affect. Palo Alto, CA: Consulting Psychologists Press; 1976.

- Engels AS, Heller W, Mohanty A, Herrington JD, Banich MT, Webb AG, et al. Specificity of regional brain activity in anxiety types during emotion processing. Psychophysiology. 2007;44:352–363. doi: 10.1111/j.1469-8986.2007.00518.x.

- Esslen M, Pascual-Marqui RD, Hell D, Kochi K, Lehmann D. Brain areas and time course of emotional processing. Neuroimage. 2004;21:1189–1203. doi: 10.1016/j.neuroimage.2003.10.001.

- Etkin A, Klemenhagen KC, Dudman JT, Rogan MT, Hen R, Kandel ER, et al. Individual differences in trait anxiety predict the response of the basolateral amygdala to unconsciously processed fearful faces. Neuron. 2004;44:1043–1055. doi: 10.1016/j.neuron.2004.12.006.

- Fink A. Event-related desynchronization in the EEG during emotional and cognitive information processing: Differential effects of extraversion. Biological Psychology. 2005;70:152–160. doi: 10.1016/j.biopsycho.2005.01.013.

- Fox E, Russo R, Georgiou GA. Anxiety modulates the degree of attentive resources required to process emotional faces. Cognitive, Affective, & Behavioral Neuroscience. 2005;5:396–404. doi: 10.3758/cabn.5.4.396.

- Freudenthaler HH, Fink A, Neubauer AC. Emotional abilities and cortical activation during emotional information processing. Personality and Individual Differences. 2006;41:685–695.

- Furey ML, Tanskanen T, Beauchamp MS, Avikainen S, Uutela K, Hari R, et al. Dissociation of face-selective cortical responses by attention. Proceedings of the National Academy of Sciences, USA. 2006;103:1065–1070. doi: 10.1073/pnas.0510124103.

- George N, Evans J, Fiori N, Davidoff J, Renault B. Brain events related to normal and moderately scrambled faces. Cognitive Brain Research. 1996;4:65–76. doi: 10.1016/0926-6410(95)00045-3.

- Gordon HL, Baird AA, End A. Functional differences among those high and low on a trait measure of psychopathy. Biological Psychiatry. 2004;56:516–521. doi: 10.1016/j.biopsych.2004.06.030.

- Green DM, Swets JA. Signal detection theory and psychophysics. New York: Wiley; 1966.

- Grill-Spector K, Knouf N, Kanwisher N. The fusiform face area subserves face perception, not generic within-category identification. Nature Neuroscience. 2004;7:555–562. doi: 10.1038/nn1224.

- Haas BW, Omura K, Constable RT, Canli T. Emotional conflict and neuroticism: Personality-dependent activation in the amygdala and subgenual anterior cingulate. Behavioral Neuroscience. 2007;121:249–256. doi: 10.1037/0735-7044.121.2.249.

- Halgren E, Raij T, Marinkovic K, Jousmaki V, Hari R. Cognitive response profile of the human fusiform face area as determined by MEG. Cerebral Cortex. 2000;10:69–81. doi: 10.1093/cercor/10.1.69.

- Hamann S, Canli T. Individual differences in emotion processing. Current Opinion in Neurobiology. 2004;14:233–238. doi: 10.1016/j.conb.2004.03.010.

- Hanley JA, McNeil BJ. A method of comparing the areas under the receiver operating characteristic curves derived from the same cases. Radiology. 1982;148:839–849. doi: 10.1148/radiology.148.3.6878708.

- Haxby JV, Gobbini MI, Furey ML, Ishai A, Schouten JL, Pietrini P. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science. 2001;293(5539):2425–2430. doi: 10.1126/science.1063736.

- Heller W. Neuropsychological mechanisms of individual differences in emotion, personality and arousal. Neuropsychology. 1993;7:476–489.

- Heller W, Nitschke JB, Etienne MA, Miller GA. Patterns of regional brain activity differentiate types of anxiety. Journal of Abnormal Psychology. 1997;106:376–385. doi: 10.1037//0021-843x.106.3.376.

- Herrmann MJ, Aranda D, Ellgring H, Mueller TJ, Strik WK, Heidrich A, et al. Face-specific event-related potential in humans is independent from facial expression. International Journal of Psychophysiology. 2002;45:241–244. doi: 10.1016/s0167-8760(02)00033-8.

- Herrmann MJ, Ehlis AC, Ellgring H, Fallgatter AJ. Early stages (P100) of face perception in humans as measured with event-related potentials (ERPs). Journal of Neural Transmission. 2005;112:1073–1081. doi: 10.1007/s00702-004-0250-8.

- Ishai A, Bikle PC, Ungerleider LG. Temporal dynamics of face repetition suppression. Brain Research Bulletin. 2006;70:289–295. doi: 10.1016/j.brainresbull.2006.06.002.

- Ishai A, Ungerleider LG, Martin A, Haxby JV. The representation of objects in the human occipital and temporal cortex. Journal of Cognitive Neuroscience. 2000;12(Suppl 2):35–51. doi: 10.1162/089892900564055.

- Ishai A, Ungerleider LG, Martin A, Schouten JL, Haxby JV. Distributed representation of objects in the human ventral visual pathway. Proceedings of the National Academy of Sciences, USA. 1999;96:9379–9384. doi: 10.1073/pnas.96.16.9379.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: A module in human extrastriate cortex specialized for face perception. Journal of Neuroscience. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Lee D, Simos P, Sawrie SM, Martin RC, Knowlton RC. Dynamic brain activation patterns for face recognition: A magnetoencephalography study. Brain Topography. 2005;18:19–26. doi: 10.1007/s10548-005-7897-9.

- Lewis S, Thoma RJ, Lanoue MD, Miller GA, Heller W, Edgar C, et al. Visual processing of facial affect. Neuroreport. 2003;14:1841–1845. doi: 10.1097/00001756-200310060-00017.

- Liddell BJ, Williams LM, Rathjen J, Shevrin H, Gordon E. A temporal dissociation of subliminal versus supraliminal fear perception: An event-related potential study. Journal of Cognitive Neuroscience. 2004;16:479–486. doi: 10.1162/089892904322926809.

- Liu J, Harris A, Kanwisher N. Stages of processing in face perception: An MEG study. Nature Neuroscience. 2002;5:910–916. doi: 10.1038/nn909.

- Liu J, Higuchi M, Marantz A, Kanwisher N. The selectivity of the occipitotemporal M170 for faces. Neuroreport. 2000;11:337–341. doi: 10.1097/00001756-200002070-00023.

- Liu L, Ioannides AA, Streit M. Single trial analysis of neurophysiological correlates of the recognition of complex objects and facial expressions of emotion. Brain Topography. 1999;11:291–303. doi: 10.1023/a:1022258620435.

- Lundqvist D, Flykt A, Ohman A. The Karolinska Directed Emotional Faces - KDEF. CD-ROM from Department of Clinical Neurosciences, Psychology section, Karolinska Institute; 1998.

- Macmillan NA, Creelman CD. Detection theory: A user’s guide. New York: Cambridge University Press; 1991.

- McClure EB, Monk CS, Nelson EE, Parrish JM, Adler A, Blair RJ, et al. Abnormal attention modulation of fear circuit function in pediatric generalized anxiety disorder. Archives of General Psychiatry. 2007;64:97–106. doi: 10.1001/archpsyc.64.1.97.

- McKeeff TJ, Remus DA, Tong F. Temporal limitations in object processing across the human ventral visual pathway. Journal of Neurophysiology. 2007;98:382–393. doi: 10.1152/jn.00568.2006.

- McKeeff TJ, Tong F. The timing of perceptual decisions for ambiguous face stimuli in the human ventral visual cortex. Cerebral Cortex. 2007;17:669–678. doi: 10.1093/cercor/bhk015.

- Meriau K, Wartenburger I, Kazzer P, Prehn K, Lammers CH, van der Meer E, et al. A neural network reflecting individual differences in cognitive processing of emotions during perceptual decision making. Neuroimage. 2006;33:1016–1027. doi: 10.1016/j.neuroimage.2006.07.031.

- Morris JS, Ohman A, Dolan RJ. Conscious and unconscious emotional learning in the human amygdala. Nature. 1998;393(6684):467–470. doi: 10.1038/30976.

- Most SB, Chun MM, Johnson MR, Kiehl KA. Attentional modulation of the amygdala varies with personality. Neuroimage. 2006;31:934–944. doi: 10.1016/j.neuroimage.2005.12.031.

- Nitschke JB, Heller W, Imig JC, McDonald RP, Miller GA. Distinguishing dimensions of anxiety and depression. Cognitive Therapy and Research. 2001;25:1–22.

- Nitschke JB, Heller W, Palmieri PA, Miller GA. Contrasting patterns of brain activity in anxious apprehension and anxious arousal. Psychophysiology. 1999;36:628–637.

- Ohman A. Automaticity and the amygdala: Nonconscious responses to emotional faces. Current Directions in Psychological Science. 2002;22:62–66.

- Ohman A. The role of the amygdala in human fear: Automatic detection of threat. Psychoneuroendocrinology. 2005;30:953–958. doi: 10.1016/j.psyneuen.2005.03.019.

- Ohman A, Esteves F, Soares JJ. Preparedness and preattentive associative learning: Electrodermal conditioning to masked stimuli. Journal of Psychophysiology. 1995;9:99–108.

- Pessoa L. To what extent are emotional visual stimuli processed without attention and awareness? Current Opinion in Neurobiology. 2005;15:188–196. doi: 10.1016/j.conb.2005.03.002.

- Pessoa L, Japee S, Sturman D, Ungerleider LG. Target visibility and visual awareness modulate amygdala responses to fearful faces. Cerebral Cortex. 2006;16:366–375. doi: 10.1093/cercor/bhi115.

- Pessoa L, Japee S, Ungerleider LG. Visual awareness and the detection of fearful faces. Emotion. 2005;5:243–247. doi: 10.1037/1528-3542.5.2.243.

- Peterzell DH, Teller DY. Individual differences in contrast sensitivity functions: The lowest spatial frequency channels. Vision Research. 1996;36:3077–3085. doi: 10.1016/0042-6989(96)00061-2.

- Phillips ML, Williams LM, Heining M, Herba CM, Russell T, Andrew C, et al. Differential neural responses to overt and covert presentations of facial expressions of fear and disgust. Neuroimage. 2004;21:1484–1496. doi: 10.1016/j.neuroimage.2003.12.013.

- Rafaeli E, Rogers GM, Revelle W. Affective synchrony: Individual differences in mixed emotions. Personality & Social Psychology Bulletin. 2007;33:915–932. doi: 10.1177/0146167207301009.

- Ress D, Backus BT, Heeger DJ. Activity in primary visual cortex predicts performance in a visual detection task. Nature Neuroscience. 2000;3:940–945. doi: 10.1038/78856.

- Robinson SE. Localization of event-related activity by SAM(erf). Neurologic Clinics Neurophysiology. 2004;2004:109.

- Rossignol M, Philippot P, Douilliez C, Crommelinck M, Campanella S. The perception of fearful and happy facial expression is modulated by anxiety: An event-related potential study. Neuroscience letters. 2005;377:115–120. doi: 10.1016/j.neulet.2004.11.091. [DOI] [PubMed] [Google Scholar]

- Sams M, Hietanen JK, Hari R, Ilmoniemi RJ, Lounasmaa OV. Face-specific responses from the human inferior occipitotemporal cortex. Neuroscience. 1997;77:49–55. doi: 10.1016/s0306-4522(96)00419-8. [DOI] [PubMed] [Google Scholar]

- Soares JJ, Ohman A. Backward masking and skin conductance responses after conditioning to nonfeared but fear-relevant stimuli in fearful subjects. Psychophysiology. 1993;30:460–466. doi: 10.1111/j.1469-8986.1993.tb02069.x. [DOI] [PubMed] [Google Scholar]

- Somerville LH, Kim H, Johnstone T, Alexander AL, Whalen PJ. Human amygdala responses during presentation of happy and neutral faces: Correlations with state anxiety. Biological Psychiatry. 2004;55:897–903. doi: 10.1016/j.biopsych.2004.01.007. [DOI] [PubMed] [Google Scholar]

- Spielberger C. Manual for the State-Trait Anxiety Inventory (Form Y) Palo Alto, CA: Consulting Psychologists Press; 1983. [Google Scholar]

- Streit M, Ioannides AA, Liu L, Wolwer W, Dammers J, Gross J, et al. Neurophysiological correlates of the recognition of facial expressions of emotion as revealed by magnetoencephalography. Cognitive Brain Research. 1999;7:481–491. doi: 10.1016/s0926-6410(98)00048-2. [DOI] [PubMed] [Google Scholar]

- Suslow T, Ohrmann P, Bauer J, Rauch AV, Schwindt W, Arolt V, et al. Amygdala activation during masked presentation of emotional faces predicts conscious detection of threat-related faces. Brain & Cognition. 2006;61:243–248. doi: 10.1016/j.bandc.2006.01.005. [DOI] [PubMed] [Google Scholar]

- Swithenby SJ, Bailey AJ, Brautigam S, Josephs OE, Jousmaki V, Tesche CD. Neural processing of human faces: A magnetoencephalographic study. Experimental brain Research. 1998;118:501–510. doi: 10.1007/s002210050306. [DOI] [PubMed] [Google Scholar]

- Szczepanowski R, Pessoa L. Fear perception: Can objective and subjective awareness measures be dissociated? Journal of Vision. 2007;7:10. doi: 10.1167/7.4.10. [DOI] [PubMed] [Google Scholar]

- Talairach J, Tournoux P. Co-planar stereotaxic atlas of the human brain. New York: Thieme Medical Publishers; 1988. [Google Scholar]

- VanRullen R, Thorpe SJ. The time course of visual processing: From early perception to decision-making. Journal of Cognitive Neuroscience. 2001;13:454–461. doi: 10.1162/08989290152001880. [DOI] [PubMed] [Google Scholar]

- Vasey MW, el-Hag N, Daleiden EL. Anxiety and the processing of emotionally threatening stimuli: Distinctive patterns of selective attention among high- and low-test-anxious children. Child Development. 1996;67:1173–1185. [PubMed] [Google Scholar]

- Vrba J, Robinson SE. Signal processing in magnetoencephalography. Methods. 2001;25:249–271. doi: 10.1006/meth.2001.1238. [DOI] [PubMed] [Google Scholar]

- Wang L, LaBar KS, McCarthy G. Mood alters amygdala activation to sad distractors during an attentional task. Biological Psychiatry. 2006;60:1139–1146. doi: 10.1016/j.biopsych.2006.01.021.

- Whalen PJ, Rauch SL, Etcoff NL, McInerney SC, Lee MB, Jenike MA. Masked presentations of emotional facial expressions modulate amygdala activity without explicit knowledge. Journal of Neuroscience. 1998;18:411–418. doi: 10.1523/JNEUROSCI.18-01-00411.1998.

- Williams LM, Liddell BJ, Rathjen J, Brown KJ, Gray J, Phillips M, et al. Mapping the time course of nonconscious and conscious perception of fear: An integration of central and peripheral measures. Human Brain Mapping. 2004;21:64–74. doi: 10.1002/hbm.10154.

- Williams LM, Palmer D, Liddell BJ, Song L, Gordon E. The “when” and “where” of perceiving signals of threat versus nonthreat. Neuroimage. 2006;31:458–467. doi: 10.1016/j.neuroimage.2005.12.009.

- Yovel G, Kanwisher N. Face perception: Domain specific, not process specific. Neuron. 2004;44:889–898. doi: 10.1016/j.neuron.2004.11.018.

- Zorawski M, Blanding NQ, Kuhn CM, LaBar KS. Effects of stress and sex on acquisition and consolidation of human fear conditioning. Learning & Memory. 2006;13:441–450. doi: 10.1101/lm.189106.

- Zorawski M, Cook CA, Kuhn CM, LaBar KS. Sex, stress, and fear: Individual differences in conditioned learning. Cognitive, Affective & Behavioral Neuroscience. 2005;5:191–201. doi: 10.3758/cabn.5.2.191.

Supplementary Materials

Identification of Face-Selective Sensors. A. Butterfly plot of the responses to all stimuli across all sensors for a representative subject. The x-axis represents time in milliseconds (ms) after stimulus onset; the y-axis represents the strength of the magnetic field in femtoTesla (fT). B. Scalp distribution of the M170 response to faces (located from about 120 ms to about 150 ms after stimulus onset for this subject), with the most face-sensitive sensors in each hemisphere circled in black (4 on the right and 4 on the left). Colors represent the strength of the magnetic field in femtoTesla (fT): red, field into (sink); blue, field out (source).