Abstract

Over the past decade, renewed interest in the auditory system has resulted in a surge of anatomical and physiological research in the primate auditory cortex and its targets. Anatomical studies have delineated multiple areas in and around primary auditory cortex and demonstrated connectivity among these areas, as well as between these areas and the rest of the cortex, including prefrontal cortex. Physiological recordings of auditory neurons have found that species-specific vocalizations are useful in probing the selectivity and potential functions of acoustic neurons. A number of cortical regions contain neurons that are robustly responsive to vocalizations, and some auditory responsive neurons show more selectivity for vocalizations than for other complex sounds. Demonstration of selectivity for vocalizations has prompted the question of which features are encoded by higher-order auditory neurons. Results based on detailed studies of the structure of these vocalizations, as well as the tuning and information-coding properties of neurons sensitive to these vocalizations, have begun to provide answers to this question. In future studies, these and other methods may help to define the way in which cells, ensembles, and brain regions process communication sounds. Moreover, the discovery that several nonprimary auditory cortical regions may be multisensory and responsive to vocalizations with corresponding facial gestures may change the way in which we view the processing of communication information by the auditory system.

Keywords: prefrontal cortex, acoustic, communication, temporal lobe, complex sounds, frontal lobe

INTRODUCTION

In the past decade, new developments in auditory cortical organization and the processing of acoustic signals have changed our view of the cortical auditory system. Anatomical studies have provided a new core-belt framework for the organization of the auditory cortex, revealing many more nonprimary auditory cortical areas than were previously demonstrated. With the anatomical demonstration of these areas, physiological research has shown that neurons in nonprimary auditory cortices are driven best by complex acoustic stimuli including, and sometimes in particular, species-specific vocalizations. In the following sections, we summarize some of the salient developments in the study of nonprimary auditory cortices and the communication stimuli that drive higher-order auditory neurons. Although substantial progress on this problem has been made, further investigation will be necessary to understand completely the way in which complex sounds, such as vocalizations or human language, are processed within the many nodes in the auditory cortical network.

ANATOMICAL ORGANIZATION OF THE CORTICAL AUDITORY NETWORK

In the past decade, several key anatomical studies have refined our view of the organization of the primate cortical auditory system. This new anatomical organization, which draws on previous notions of a core-belt system, has prompted further research to map the boundaries of the numerous fields in the temporal neocortex that are acoustically responsive. This research has clarified the physiological response properties of auditory neurons in primary and nonprimary areas and has yielded a greater understanding of auditory cortical circuitry.

Organization of Core, Belt, and Parabelt Auditory Areas

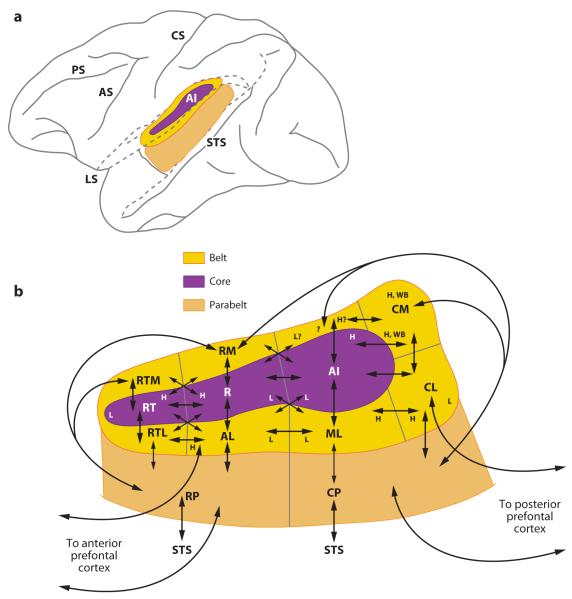

The auditory cortical system of primates contains a core region of three primary areas surrounded by a belt region of secondary areas, which are in turn bounded by a parabelt auditory cortex (Figure 1). Recent studies indicate that the centrally located core region contains three subdivisions including the primary auditory area (AI), a rostral field (R), and an even further rostral temporal field (RT) (Morel et al. 1993; Kosaki et al. 1997; Hackett et al. 1998a, 1999). The three core areas each receive direct thalamic projections and respond robustly with short latencies to pure tones. They have a well-developed layer IV of granule cells, which stain densely for parvalbumin, cytochrome oxidase, and acetylcholinesterase (Hackett et al. 1998a). Differences in the tonotopic map and in the gradation of staining for these three markers have been helpful in differentiating the core areas.

Figure 1.

Organization of auditory cortex and connections. (a) Lateral brain schemata showing the location of auditory cortical areas in the macaque brain. (b) Magnified view of the primary auditory cortex, or core (purple) surrounded by the lateral and medial belt areas (yellow), which are bounded laterally by the parabelt cortex (orange). Connections between the core and belt areas are indicated. The progression of frequency tuning across an area is indicated by an “H” (high) or “L” (low). Projections to the cortex of the superior temporal sulcus (STS) and the prefrontal cortex are indicated with arrows. Diagram in (b) adapted from Hackett et al. 1998a.

The auditory belt and its subdivisions can be differentiated from the core using anatomical and physiological methods. Anatomically, the belt cortex stains less densely than the core for parvalbumin, acetylcholinesterase, and myelin (Hackett et al. 1998a,b). Rauschecker and colleagues (Rauschecker et al. 1995, Tian et al. 2001) have used physiological characteristics, i.e., frequency reversals, to delineate three fields within the lateral belt: an antero-lateral (AL), middle-lateral (ML), and caudal-lateral field (CL) (Figure 1). In addition to the presence of frequency reversals that differentiate neighboring lateral belt fields (Figure 2b), subsequent studies have characterized AL as being selective for call type (i.e., auditory object selectivity) and CL as being sensitive to the spatial location of sounds (Tian et al. 2001). The parabelt lies adjacent and lateral to the auditory lateral belt regions, on the dorsal surface of the superior temporal gyrus (STG). There is a stepwise reduction in staining for parvalbumin, acetylcholinesterase, and cytochrome oxidase; staining is heaviest in the core, moderate in the belt, and lightest in the parabelt (Hackett 2003). The cytoarchitecture of the parabelt is distinguished from the lateral belt by the presence of large pyramidal cells in layer IIIc and layer V (Seltzer & Pandya 1978, Galaburda & Pandya 1983). Unlike the primary and belt auditory areas, the parabelt has not been reanalyzed cytoarchitectonically or physiologically for the boundaries of rostral and caudal subdivisions since the original architectonic studies of the STG (Seltzer & Pandya 1978, Galaburda & Pandya 1983), but rostral and caudal parabelt regions can be appreciated from connectional data (Hackett et al. 1999).

Figure 2.

Connections of the prefrontal cortex with physiologically characterized regions of the belt and parabelt auditory cortex. (a) Color-coded schematic of the core (purple) and belt (yellow) region of the auditory cortex. (b) Physiological map of recordings from the lateral belt region. Numbers indicate the best center frequency for each electrode penetration (black or white dots) in kHz. Injections of different anterograde and retrograde tracers (colored regions) are shown with respect to these recordings. The boundaries of antero-lateral belt auditory cortex (AL), middle-lateral belt auditory cortex (ML), and caudal-lateral belt auditory cortex (CL) are delineated by a bounded line and are derived from the frequency reversal points. (c) Three coronal sections through the prefrontal cortex indicating anterograde and retrograde labeling, which resulted from the color-coded injections placed in the lateral belt/parabelt auditory cortex in (b). (d) A summary of the projections from rostral and caudal auditory cortex showing that dual streams emanating from the caudal and rostral auditory cortex innervate dorsal and ventral prefrontal cortex, respectively. Adapted from Romanski et al. (1999b). NCR, no clear response.

Local Cortico-Cortical Projections

The three core auditory cortical regions, AI, R, and RT, function in parallel so that lesions in one core field do not abolish pure tone responses in the other, indicating separate, parallel thalamic inputs, which drive each of the core regions independently (Rauschecker et al. 1997). The core regions of the auditory cortex are densely connected with each other (Hackett et al. 1998a, Kaas & Hackett 1998) (Figure 1), and each core region is most densely connected with the adjacent belt region (Morel et al. 1993, Hackett et al. 1998a). This pattern of local connectivity, in which areas connect to adjacent areas, is typical of the auditory cortex and typifies connectivity in other areas of the cortex (Averbeck & Seo 2008). For example, lesion studies have shown that auditory information flows from core area AI to medial belt area CM (caudal medial belt auditory cortex) and that these connections are distinct from those of rostral core area R to anterior medial belt areas RM (rostral medial belt auditory cortex) and lateral belt AL (Morel et al. 1993, Rauschecker et al. 1997). Additional anatomical studies have confirmed connections of R and RT with lateral belt areas AL and ML and of AI with ML and CL (Hackett et al. 1998a,b). Thus, information leaves the auditory core as a series of parallel, topographically organized streams, with each core area receiving independent afferents from the acoustic thalamus.

The auditory belt continues this topographic connectivity with projections to the parabelt and beyond. In addition to its connectivity with AI, medial belt area CM has connections with somatosensory areas in retroinsular cortex (area Ri) and granular insula (Ig) as well as multisensory areas Tpt (temporo-parietal area) in the caudal STG and TPO (temporal parieto-occipital area in STS), located on the dorsal bank of the superior temporal sulcus (STS) (Hackett et al. 2007, Smiley et al. 2007). In contrast, medial belt area RM is mainly connected with the parabelt. The caudal lateral belt (areas ML and CL) projects to the caudal parabelt, whereas the rostral belt areas AL and rostral ML are most densely connected with the rostral parabelt (Hackett et al. 1998a,b) (Figure 1). Beyond the belt and parabelt, auditory afferents target fields in the rostral STG and in the STS. The upper bank of the STS receives a dense projection from the parabelt (Hackett et al. 1999). The connections are mainly formed with the polysensory area TPO, located in the dorsal bank of the STS and also with area TAa (temporal area in STS) located on the lip of the STG. Continuing the pattern of topographic, local connectivity, the rostral STG areas TS1 (temporal lobe area 1) and TS2 (temporal lobe area 2) receive projections from the rostral parabelt, whereas the caudal parabelt projects to area Tpt, located caudally on the STG. Area Tpt appears to be multisensory and has connections with parietal cortex (Smiley et al. 2007). The topographic nature of auditory connections to caudal and rostral regions may be similar in nature to the dorsal/ventral streams of the visual system (Rauschecker 1998a,b).

Connections Beyond the Auditory Cortex

Researchers have examined in detail the connections of the temporal lobe auditory areas to the frontal lobe. Both the belt and the parabelt have connections with the prefrontal cortex that are organized as distinct rostral and caudal streams. Early anatomical studies indicated that a rostro-caudal topography exists such that caudal STG and caudal prefrontal cortex (PFC) (areas 8a and caudal area 46) are reciprocally connected (Pandya & Kuypers 1969, Chavis & Pandya 1976, Petrides & Pandya 1988, Barbas 1992, Romanski et al. 1999a, Petrides & Pandya 2002), whereas the rostral STG is reciprocally connected with rostral principalis (rostral 46 and 10) and orbito-frontal areas (areas 11 and 12) (Pandya et al. 1969, Pandya & Kuypers 1969, Chavis & Pandya 1976). In the past decade, studies have characterized these temporo-prefrontal connections in greater detail utilizing the core-belt organization (Morel et al. 1993, Kaas & Hackett 1998). These studies include the analysis of injections into the auditory belt (Romanski et al. 1999b), the parabelt (Hackett et al. 1999), and the PFC (Hackett et al. 1999, Romanski et al. 1999a). Together these studies have refined the direct rostral-caudal topography that was previously noted, showing that the rostral frontal lobe areas are densely connected with anterior belt and parabelt regions (Romanski et al. 1999a). Moreover, the caudal parabelt and belt are reciprocally connected with caudal and dorsolateral frontal lobe. The densest projections to the frontal lobe originate in higher-order auditory processing regions, including the parabelt, the rostral STG (Petrides & Pandya 1988, Romanski et al. 1999a), and the dorsal bank of the STS, including multisensory area TPO and area TAa (Romanski et al. 1999a).

Although these anatomical studies suggest that auditory information is received by PFC, more direct evidence of acoustic innervation of the frontal lobe has been obtained by combining anatomical and physiological methods. In Romanski et al. (1999b), the lateral belt auditory areas AL, ML, and CL were physiologically identified, as in previous studies by Rauschecker et al. (1995), and injections of anterograde and retrograde anatomical tracers were placed into each of the belt areas (Figure 2b). Analysis of the anatomical connections revealed that five specific regions of the frontal lobes received input from the lateral belt, including the frontal pole (area 10), the principal sulcus (area 46), ventrolateral prefrontal cortex (VLPFC) (areas 12vl/47 and 45), the lateral orbital cortex (areas 11 and 12o), and the dorsal periarcuate region (area 8a) (Figure 2c,d). Moreover, these connections were topographically organized such that projections from AL typically involved the frontal pole, the rostral principal sulcus, anterior VLPFC, and the lateral orbital cortex. In contrast, projections from area CL targeted the dorsal periarcuate cortex and the caudal principal sulcus, as well as caudal portions of the VLPFC (area 45) and, in two cases, premotor cortex (area 6d). The frontal pole and the lateral orbital cortices were devoid of anterograde labeling from injections into area CL. The topographic specificity of this rostro-caudal, frontal-temporal connectivity is indicative of separate streams of auditory information that target distinct functional domains of the frontal lobe. One pathway, originating in CL, targets caudal dorsolateral prefrontal cortex (DLPFC); the other pathway, originating in AL, targets rostral and ventral prefrontal areas (Figure 2c,d). 
Previous studies of the visual system have demonstrated that some spatial and nonspatial visual streams target dorsal-spatial and ventral-object prefrontal regions, respectively (Ungerleider & Mishkin 1982, Barbas 1988, Wilson et al. 1993, Webster et al. 1994). The anatomical and physiological studies of the auditory cortical system suggest that pathways originating from anterior and posterior auditory belt and parabelt cortices are analogous to the “what” and “where” streams of the visual system; the anterior auditory belt targets ventral prefrontal areas involved in processing what features of an acoustic stimulus and the posterior auditory cortex projects to caudal DLPFC and carries information regarding where an auditory stimulus is (Romanski et al. 1999b, Tian et al. 2001).

Thus, a cascade of auditory afferents from two divergent streams targets the PFC. Small streams enter the frontal lobe from early auditory processing stations (such as the lateral and medial belt), and successively larger projections originating in the parabelt, anterior temporal lobe, and the dorsal bank of the STS join the flow of auditory information destined for the frontal lobe. A cascade of inputs from increasingly more complex auditory association cortex may be necessary to represent all qualities of a complex auditory stimulus; that is, crudely processed information from early unimodal belt association cortex may arrive first at specific prefrontal targets, while complex information (such as voice identity and emotional relevance) from increasingly higher association areas may follow. This hierarchy of inputs allows for a rich representation of complex auditory stimuli to reach the PFC in an efficient manner.

RESPONSES TO COMPLEX SOUNDS AND VOCALIZATIONS IN THE TEMPORAL LOBE

Distinctions in the connectivity and chemoarchitecture of nonprimary auditory cortices prompted investigations of the electrophysiological characteristics of these regions. Although neurons in primary auditory cortex respond well to pure tones, neurons in belt and parabelt regions often produced only weak responses to these stimuli (Rauschecker et al. 1995, Rauschecker & Tian 2004). Thus, studies of the regions beyond the primary auditory cortex have been based largely on complex sounds and especially vocalizations.

Vocalization Processing in the Primary Auditory Cortex

Early studies of vocalization processing in the cortex utilized species-specific calls in squirrel monkeys and measured neuronal responses in the temporal lobe (Newman & Wollberg 1973, Wollberg & Sela 1980). These studies found robust responses to these stimuli, but they did not explore the specific features of the vocalizations encoded by auditory cortical neurons. Also, psychoacoustic studies with Japanese macaques showed that it was possible to employ vocalizations as test stimuli to study sound localization, frequency discrimination, and categorization of complex sounds (May et al. 1986, Moody et al. 1986). These early studies advanced the study of primate auditory processing and promoted the use of species-specific vocalizations.

In the past decade, careful attention to the behavioral and acoustic characteristics of non-human primate vocalizations, together with knowledge of the receptive field properties of primary auditory cortical neurons, has advanced our understanding of call coding by auditory cortical neurons (Wang 2000). Work by Wang and colleagues was aimed at understanding auditory cortical processing in the marmoset. Despite the fact that less is known about the anatomy of the cortical auditory system in marmosets, they are a valuable experimental animal for studying vocalizations because they vocalize extensively in captivity, and therefore auditory representations of vocalizations, with and without concomitant production of vocalizations, can be studied (Eliades & Wang 2005).

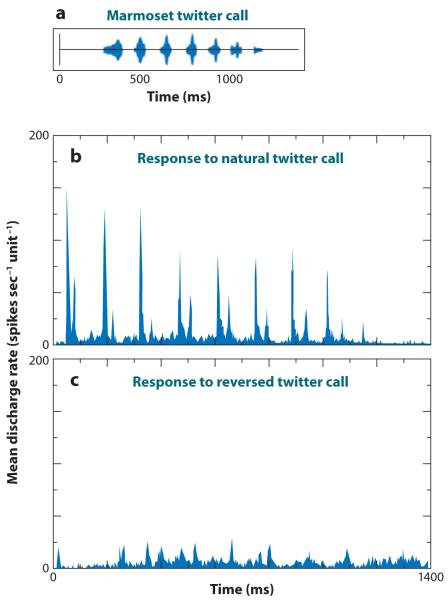

Early investigations of the neural representation of the twitter call in marmosets established several important findings. First, the responses of marmoset primary auditory cortex neurons tended to lock to the temporal envelope of the call (Figure 3) (Wang et al. 1995). Because the frequency-modulated (FM) sweep in each phrase of the twitter call spans many frequencies, neurons with a range of center frequencies responded to each phrase, and as such, there is a distributed population response to the call. Further work in this same study, using parametrically manipulated stimuli that were time-expanded, time-compressed, or time-reversed, showed that the locking of the neural response to the phrase structure of the calls was strongest, in a subset of selective neurons, for natural calls and weaker when the calls were manipulated in any way (Figure 3) (Wang et al. 1995).

Figure 3.

Averaged responses to a natural twitter call and its time-reversed version recorded from the marmoset primary auditory cortex. Sampled cells in the auditory cortex were categorized as selective if they responded more to the natural twitter call than to the reversed twitter call, whereas nonselective units responded more to the reversal. The waveform of an example twitter call is shown in (a). In (b), the averaged PSTH (bin width = 2.0 ms) for the selective population of neurons (n = 93) to the forward twitter call is shown. In (c), the selective population response to the time-reversed version of the call is shown. Adapted from Wang (2000).
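
The selectivity criterion described in this caption can be illustrated with a minimal sketch. It assumes that each trial is a list of spike times in milliseconds and that "responded more" means a higher mean spike count per trial; both are assumptions made for illustration, and the function names `psth` and `is_selective` are hypothetical rather than taken from the original analysis. Only the 2.0 ms bin width comes from the caption.

```python
def psth(trials, duration_ms, bin_ms=2.0):
    """Peristimulus time histogram: mean firing rate (spikes/s) per bin.

    trials: list of trials, each a list of spike times in ms (assumed format).
    """
    n_bins = int(round(duration_ms / bin_ms))
    counts = [0] * n_bins
    for spikes in trials:
        for t in spikes:
            idx = int(t // bin_ms)
            if 0 <= idx < n_bins:  # ignore spikes outside the analysis window
                counts[idx] += 1
    # Convert summed counts to spikes per second, averaged over trials.
    scale = 1000.0 / (bin_ms * len(trials))
    return [c * scale for c in counts]


def is_selective(natural_trials, reversed_trials):
    """Label a unit selective if it fires more to the natural call.

    The comparison of mean spike counts is an illustrative assumption.
    """
    mean_nat = sum(len(s) for s in natural_trials) / len(natural_trials)
    mean_rev = sum(len(s) for s in reversed_trials) / len(reversed_trials)
    return mean_nat > mean_rev
```

Averaging the output of `psth` across all units labeled selective would give a population histogram of the kind shown in panels (b) and (c).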

Neurophysiology of the Auditory Belt

In contrast to the extensive body of research on the physiology of primary auditory cortex, physiological response properties of lateral belt auditory neurons have only recently been examined. Because lateral belt neurons are only weakly responsive to pure tones, characterization of multiple regions and receptive fields was delayed until other techniques and stimuli were tried. Rauschecker and colleagues (1995) used band-passed noise bursts with defined center frequencies to drive lateral belt neurons. This approach allowed them to delineate frequency reversals successfully in the tonotopic map as they moved their electrode across the lateral belt, revealing frequency reversals that would define the boundaries for three separate auditory fields AL, ML, and CL (Figure 2b). They also determined that neurons in the lateral belt responded vigorously to species-specific rhesus macaque calls digitized from a library of calls collected in the wild by Hauser (1996). In their study, Rauschecker and colleagues (1995, Tian et al. 2001) found that 89% of lateral belt neurons responded to one or more of seven different vocalization call types. More than half of the vocalization-responsive neurons preferred harmonic and noisy vocalizations over pure tones or band-passed noise and were unaffected by changes in sound level of the vocalization stimuli. In further testing, calls were high-pass filtered, low-pass filtered, or segmented to test components containing the best frequency or best bandwidth for a given cell. The results showed that whereas some cells predictably altered firing levels when the best frequency was removed from the vocalization, other neurons exhibited nonlinear summation (Rauschecker et al. 1995). For example, frequencies outside a neuron’s tuning range, which by definition did not evoke a response, led to clear facilitation of the response when combined with frequencies inside the tuning range (Rauschecker et al. 1995, Rauschecker 1998b).
In other cases, two complex sounds evoked a response only when combined in the right temporal order (Rauschecker et al. 1997). In other auditory systems, this facilitation in both the spectral and the temporal domains is known as combination sensitivity (Suga et al. 1978, Margoliash & Fortune 1992). Nonlinear summation seems to be the main mechanism creating such selectivity in monkey auditory cortex, although nonlinear suppression effects are also observed.
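
The use of frequency reversals to mark field boundaries can be sketched as follows. This is a simplified illustration and not the procedure of the cited studies: it assumes a single row of electrode penetrations ordered along the rostrocaudal axis, each with one best center frequency in kHz, and the function name `reversal_points` is hypothetical. Real maps are two-dimensional and noisy, so smoothing or additional criteria would be needed in practice.

```python
import math


def reversal_points(best_freqs_khz):
    """Return indices of penetrations at which the tonotopic gradient reverses.

    best_freqs_khz: best center frequencies (kHz) for penetrations ordered
    along one axis of the lateral belt (assumed one-dimensional layout).
    """
    # Compare frequencies on an octave (log2) scale.
    logs = [math.log2(f) for f in best_freqs_khz]
    reversals = []
    prev_sign = 0
    for i in range(1, len(logs)):
        diff = logs[i] - logs[i - 1]
        sign = (diff > 0) - (diff < 0)
        if sign == 0:
            continue  # a plateau does not change the gradient direction
        if prev_sign != 0 and sign != prev_sign:
            reversals.append(i - 1)  # the turning point is the previous site
        prev_sign = sign
    return reversals
```

With the made-up sequence `[16, 8, 4, 2, 4, 8, 16, 8, 4]`, the gradient turns at the penetrations with best frequencies of 2 kHz and 16 kHz, which would split the row into three putative fields.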

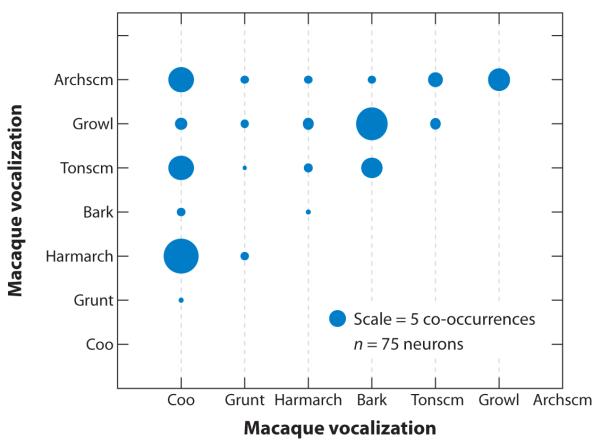

Rauschecker and colleagues also examined spatial preferences in lateral belt neurons by assessing the responses to species-specific vocalizations presented at different azimuth positions. Their findings indicated that caudal belt neurons in ML and CL show the greatest spatial selectivity, whereas anterior field AL appears to be more selective for call type (Tian et al. 2001). These results support the notion that auditory object identity and spatial selectivity may be processed by two separate auditory streams. With respect to call type selectivity, the neurons in AL, which send projections to the parabelt, STS, and directly to the VLPFC, show vocalization selectivity to calls with similar acoustic features. Furthermore, when AL neurons responded to several vocalizations, the most common grouping that evoked similar responses was coos, harmonic arches, and arched screams (Tian et al. 2001) (Figure 4), all of which have a harmonic stack as a defining acoustic feature.

Figure 4.

Bubble graph of co-occurrence of responses to macaque vocalizations in neurons that selectively responded to two out of seven macaque calls. The diameter of the circles is proportional to the number of co-occurrences (the scale bubble = 5 co-occurrences). Neurons responded with a higher probability to calls from the same phonetic category, as in the cases of “coo” and “harmonic arch,” which are both harmonic calls, or “bark” and “growl,” which are both noisy calls. Semantic category does not seem to play a major role at this processing level. Adapted from Tian et al. (2001).
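
The co-occurrence counts that underlie a bubble graph of this kind can be tallied with a short sketch. The input format (one list of call names per neuron) and the function name `call_cooccurrence` are assumptions made for illustration; the call labels in the usage note are examples and not data from the study.

```python
from collections import Counter
from itertools import combinations


def call_cooccurrence(responses):
    """Count how often each pair of call types drives the same neuron.

    responses: one entry per neuron, listing the call types that evoked
    a response in that neuron (assumed input format).
    """
    counts = Counter()
    for calls in responses:
        # Sort so that each pair is stored under a single, order-free key.
        for pair in combinations(sorted(set(calls)), 2):
            counts[pair] += 1
    return counts
```

For instance, `call_cooccurrence([["coo", "harmonic arch"], ["harmonic arch", "coo"], ["bark", "growl"]])` counts the coo and harmonic arch pair twice and the bark and growl pair once; in a bubble graph, each count would set the diameter of the corresponding circle.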

In addition to its role in complex sound processing, the lateral belt may be a first step in multisensory processing. A study by Ghazanfar et al. (2008) recorded single-unit and LFP signals in the STS and in the lateral belt auditory cortex. Of the single-unit lateral belt neurons tested with vocalizations (n = 36), ~29 cells showed some modulation by simultaneously presented face stimuli and were judged to be multisensory. Neural activity was enhanced or suppressed when a vocalization was combined with the corresponding facial gesture (Figure 5). These multisensory responses may be due to feedback projections from neurons in the dorsal bank of the STS, which are also multisensory.

Figure 5.

Multisensory responsive neuron in the lateral belt auditory cortex of the macaque monkey. A single-unit response to a species-specific vocalization, a coo (voice, green), the corresponding dynamic movie of that vocalization (face, blue), and their simultaneous presentation (face + voice, red) are shown in the top panel as smoothed histograms and in the bottom raster panels. The response to the face + voice condition elicited an increase in response compared with the voice- or face-alone conditions. Adapted from Ghazanfar et al. (2008).

Other cortical regions within the anterior temporal lobe that receive projections from belt and parabelt auditory cortices are also involved in the processing of complex sounds including vocalizations. This finding has been shown in 2-deoxy-glucose (DG) experiments where vocalizations evoked activity along the entire length of the STG as well as several other regions within the temporal, parietal, and frontal lobes (Poremba et al. 2004). Further studies have characterized responses to vocalizations and other complex sounds in the anterior temporal lobe, and within the auditory parabelt, and have found varying degrees of specificity for natural sounds and species-specific vocalizations (Kikuchi et al. 2007). As researchers move outside of primary auditory cortex and explore the full extent of auditory responsive areas in the temporal lobe, new sound-sensitive areas emerge. A recent study (Petkov et al. 2008) has suggested that the anterior temporal lobe may be specifically involved in vocalizations and voice identity processing in a manner that is similar to area TAa in the human STS, which is robustly active during vocal identity discrimination (Belin et al. 2000, Fecteau et al. 2005). This study used fMRI in macaques to delineate vocalization-sensitive areas in the anterior temporal lobe.

Complex Sound Processing in the STS

Despite the fact that auditory afferents are known to project to the STS, there have been no systematic studies of electrophysiological responses to communication-relevant auditory stimuli in the STS of awake-behaving animals. Three studies recorded single-cell responses to auditory and other modality sensory stimuli in anesthetized animals (Benevento et al. 1977, Bruce et al. 1981, Hikosaka et al. 1988). These studies targeted the posterior two-thirds of the dorsal and ventral banks of the STS. Recordings were mainly confined to polymodal area TPO and unimodal visual processing regions. The tested auditory stimuli consisted of pure tones and clicks (Benevento et al. 1977), and in two studies, complex sounds included monkey calls, human vocalizations, and environmental sounds (Bruce et al. 1981, Hikosaka et al. 1988). In Benevento et al. (1977), unimodal auditory responses, although sparse (n = 14/107 cells), were observed and included onset and offset excitatory responses and onset inhibitory responses. Hikosaka et al. (1988) also reported a small number of unimodal auditory responses in the caudal STS polysensory region. The responses in caudal STS were all broadly tuned with little stimulus specificity. Baylis et al. (1987) recorded > 2600 neurons in alert rhesus macaques across a much wider region of the temporal lobe including areas TPO and TAa. Approximately 50% of the neurons in TAa were responsive to auditory stimuli, whereas only 8% of the recorded neurons in TPO were auditory responsive, indicating that area TAa is predominantly auditory and area TPO is truly multisensory.

A recent study carefully examined the responses of STS neurons in alert nonhuman primates to complex sounds and actions, including monkeys vocalizing, lip-smacking, paper ripping, etc. (Barraclough et al. 2005). The primary goal of the study was to investigate whether STS neurons coding the sight of actions also integrated the sound of those actions. In this study, pictures or movies of an action were presented separately or combined with the accompanying sound. Approximately 32% of cells in the anterior STS responded to the auditory component of the audio-visual stimulus. However, most of these cells were multimodal, and the auditory responses were not tested further. Ghazanfar et al. (2008) also evaluated responses to faces and vocalizations in the STS with similar conclusions regarding the multisensory nature of area TPO in the STS. In summary, a number of temporal lobe recordings indicate that TAa is a likely candidate for continuing the rostral stream of auditory information from the parabelt auditory cortex and the anterior temporal lobe areas because it is more responsive to complex auditory stimuli than is area TPO (Baylis et al. 1987, Barraclough et al. 2005). Additional support for this distinction is suggested by the anatomical connections of areas TPO and TAa because TPO receives more input from visual processing regions (Seltzer & Pandya 1989, Hackett et al. 1999).

PREFRONTAL CORTEX: PROCESSING OF VOCALIZATIONS

Early Studies in Old and New World Primates

The PFC has long been thought to play a role in the processing of complex and especially communication-relevant sounds. For more than a century, the inferior frontal gyrus in the human brain (including Broca’s area) has been linked with speech and language processes (Broca 1861). Neuroimaging studies of the human brain have shown activation of ventrolateral frontal lobe areas such as Brodmann’s areas 44, 45, and 47 in auditory working memory, phonological processing, comprehension, and semantic judgment (Buckner et al. 1995, Demb et al. 1995, Fiez et al. 1996, Stromswold et al. 1996, Zatorre et al. 1996, Gabrieli et al. 1998, Stevens et al. 1998, Friederici et al. 2003). In animal studies, some investigators have reported that large lesions of lateral frontal cortical regions (which include the sulcus principalis region) in primates disrupt performance of auditory discrimination tasks (Weiskrantz & Mishkin 1958, Gross & Weiskrantz 1962, Gross 1963, Goldman & Rosvold 1970).

Several studies have demonstrated that neurons in the PFC respond to auditory stimuli or are active during auditory tasks in Old and New World primates (Newman & Lindsley 1976; Ito 1982; Azuma & Suzuki 1984; Vaadia et al. 1986; Watanabe 1986, 1992; Tanila et al. 1992, 1993; Russo & Bruce 1994; Bodner et al. 1996), but extensive analysis of the encoding of complex sounds at the single-cell level was lacking. In these studies, weakly responsive auditory neurons were found sporadically and were distributed across a wide region of the PFC. Few of the early electrophysiological studies observed robust auditory responses in macaque prefrontal neurons. A single study noted phasic responses to click stimuli in the lateral orbital cortex, area 12o (Benevento et al. 1977). The lack of auditory activity in the PFC of nonhuman primates in earlier studies may have two causes. First, studies have often confined electrode penetrations to caudal and dorso-lateral PFC (Ito 1982; Azuma & Suzuki 1984; Tanila et al. 1992, 1993; Bodner et al. 1996), where presumptive auditory inputs to the frontal lobe are more dispersed. Second, recent data have shown that the auditory responsive zone in the macaque ventrolateral prefrontal cortex (VLPFC) is small, making this region difficult to locate unless anatomical data or other physiological landmarks are utilized (O’Scalaidhe et al. 1997, 1999; Romanski et al. 1999a, 1999b).

Auditory Responsive Domain in VLPFC

The demonstration of direct projections from physiologically characterized regions of the auditory belt cortex to distinct prefrontal targets has helped guide electrophysiological recording studies in the PFC (Romanski et al. 1999b). Using this anatomical information, an auditory responsive domain has been defined within areas 12/47 and 45 (collectively referred to as VLPFC) of the primate PFC (Romanski & Goldman-Rakic 2002). Neurons in VLPFC are responsive to complex acoustic stimuli including, but not limited to, species-specific vocalizations (Figure 6). The auditory-responsive neurons are located adjacent to a face-responsive region that has been previously described (Wilson et al. 1993; O’Scalaidhe et al. 1997, 1999), which suggests the possibility of multimodal interactions, discussed below. VLPFC auditory neurons are located in an area that has been shown to receive acoustic afferents from ventral stream auditory neurons in the anterior belt, the parabelt, and the dorsal bank of the STS (Hackett et al. 1999; Romanski et al. 1999a,b; Diehl et al. 2008). The discovery of complex auditory responses in the macaque VLPFC is in line with human fMRI studies indicating that a homologous region of the human brain, area 47 (pars orbitalis), is activated specifically by human vocal sounds compared with animal and nonvocal sounds (Fecteau et al. 2005). The precise homology between the monkey prefrontal auditory area and auditory processing areas of the human brain has not been definitively characterized and awaits further study.

Figure 6.

Location of auditory responsive cells in the ventrolateral prefrontal cortex (PFC). On the left, three coronal sections with electrode tracks (red lines) are shown with locations of auditory responsive cells indicated (black ticks). On the right, the lateral surface of the macaque brain is shown, indicating the location from which the coronal sections were taken (black lines, A, B, C) and from where auditory responsive cells were recorded as red dots in areas 12 and 45, which make up the VLPFC. The blue shaded area within area 45 delimits the region in which visually responsive neurons, including face cells, were found. Abbreviations: ps, principal sulcus; as, arcuate sulcus; los, lateral orbital sulcus.

The initial study reporting auditory responses in the nonhuman primate VLPFC characterized the auditory responsive cells as being responsive to several types of complex sounds including species-specific vocalizations, human speech sounds, environmental sounds, and other complex acoustic stimuli (Figure 7) (Romanski & Goldman-Rakic 2002). Whereas 74% of the auditory neurons in this study responded to vocalizations, fewer than 10% of cells responded to pure tones or noise stimuli. Some neurons had phasic responses that peaked at the onset of the stimulus (Figure 7a), whereas other cells produced sustained responses to complex stimuli that lasted the length of, or sometimes beyond the duration of, the auditory stimulus (Figure 7b). The demonstration of neurons in the VLPFC responsive to complex auditory stimuli expanded the circuitry for complex auditory processing and prompted a number of research questions about the role of PFC in auditory processing.

Figure 7.

Types of responses to auditory stimuli by prefrontal neurons. The responses of two single units to three different exemplars of auditory stimuli are shown in raster and histogram plots. The onset of the auditory stimulus (vocalizations in the first two rows; noise and other stimuli in the last row) is at time “0” and the duration of the stimulus is depicted by the length of the gray bar. A neuron with a phasic response to the onset of auditory stimuli is shown in (a) and a neuron that produced a sustained response to auditory stimuli is depicted in (b).

Representation of Vocalizations in VLPFC

Since investigators localized a discrete sound-processing region in the PFC of nonhuman primates, research has focused on determining what the neurons in this prefrontal area encode; perhaps neurons at higher levels of the auditory hierarchy process complex stimuli in a more abstract manner than do lower-order sensory neurons or show evidence of greater selectivity. As mentioned above, studies have shown that VLPFC auditory neurons do not readily respond to simple acoustic stimuli such as pure tones (Romanski & Goldman-Rakic 2002) but are robustly responsive to vocalizations and other complex sounds (Averbeck & Romanski 2004, Gifford et al. 2005, Romanski et al. 2005, Russ et al. 2008). Would these higher-order auditory neurons be more likely to process the referential meaning within communication sounds or complex acoustic features that are a part of these and other sounds? PET and fMRI studies have suggested that the human inferior frontal gyrus, or ventral frontal lobe, plays a role in semantic processing (Demb et al. 1995, Poldrack et al. 1999). Would nonhuman primates have a frontal lobe homologue that contains neurons that encode the referents of particular vocalizations?

In playback experiments using rhesus macaque vocalizations, monkeys respond behaviorally in a similar manner to vocalizations with similar functional referents, regardless of acoustic similarity (Hauser 1998, Gifford et al. 2003). Thus the neural circuit guiding this behavior may include the VLPFC, which receives auditory information and is involved in a number of complex cognitive processes. However, it is highly unlikely that individual neurons would be semantic detectors. Such representations, i.e., neurons that have a very selective response to a specific stimulus (so-called grandmother cells) or a set of related stimuli, are rarely found in the macaque brain, where distributed representations are the norm (see, for example, Averbeck et al. 2003). However, rather sparse representations have recently been reported in the human medial temporal lobe (Quiroga et al. 2008), where a single cell may respond best to various referents of a single famous individual.

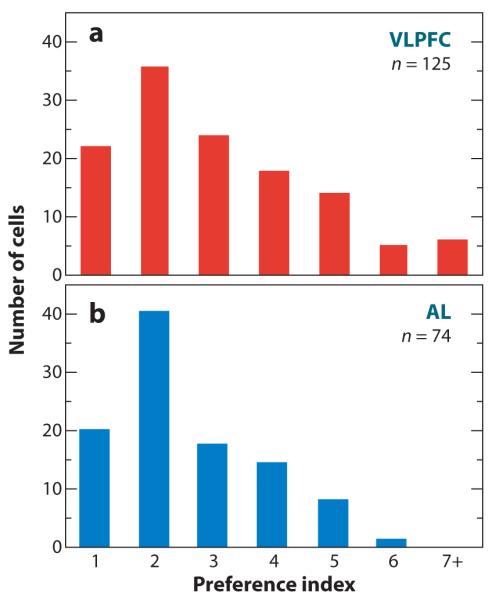

In a series of studies (Romanski et al. 2005, Averbeck & Romanski 2006), investigators have examined the coding properties of VLPFC neurons with respect to macaque vocalizations. VLPFC neurons were tested with a behaviorally and acoustically categorized library of Macaca mulatta calls (Hauser 1996, Hauser & Marler 1993), which contained exemplars from each of 10 identified call categories. Neurons in this study responded to between 1 and 4 of the different calls (Figure 8a) (Romanski et al. 2005). It is interesting to note the similarity of call selectivity in VLPFC and in the lateral belt auditory cortex, where call preference indices also range between 1 and 4 for 75% of the recorded population (Figure 8b) (Tian et al. 2001). Although these analyses suggest that prefrontal neurons are not simply call detectors, where a call detector would be defined as a cell that responded with a high degree of selectivity to only a single vocalization, they do not offer direct evidence about whether neurons provide information about multiple call categories.

Figure 8.

A comparison of selectivity to vocalizations in (a) ventrolateral prefrontal cortex (VLPFC) and (b) the anterior lateral belt (AL). The number of cells responding to one or more vocalizations on the basis of the neuron’s half-peak response to all stimuli (Romanski et al. 2005, Rauschecker et al. 1995) is shown in the bar graph.

A number of studies have related information to stimulus or, more importantly, vocalization selectivity. Although firing rates, which form the basis of the call selectivity index, and information (specifically Shannon information; Cover & Thomas 2006) are related, the relationship is indirect. For example, if a neuron responded to 5 calls from a list of 10 calls but had a different response to each of those 5 calls, it may show a selectivity index of one, two, three, four, or five, even though it was actually providing considerable information about all five of the calls to which it responded. In contrast to this response profile, a neuron that responded to five of the calls but had a similar response to all five calls would show a selectivity of five, but it would provide less information because the response of the neuron would provide information only about whether one of the five responsive calls or one of the five nonresponsive calls had been presented.
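The distinction can be made concrete with a small numerical sketch. The code below is illustrative only. It assumes equiprobable calls and noise-free (deterministic) responses, so that the Shannon information about call identity reduces to the entropy of the response distribution, and it assumes a half-peak criterion for the selectivity index. The firing rates and the function names (`selectivity_index`, `noiseless_information_bits`) are hypothetical and are not taken from the cited studies.

```python
import math
from collections import Counter


def selectivity_index(rates):
    """Count the calls whose mean rate is at least half of the peak rate
    (assumed half-peak criterion; baseline subtraction is ignored)."""
    peak = max(rates)
    return sum(1 for r in rates if r >= 0.5 * peak)


def noiseless_information_bits(rates):
    """Information (bits) about call identity, assuming equiprobable calls
    and a deterministic response, so that I(call; response) = H(response)."""
    n = len(rates)
    counts = Counter(rates)  # calls sharing a rate are indistinguishable
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


# Hypothetical mean firing rates (spikes/s) to 10 calls.
graded = [50, 40, 30, 20, 10, 0, 0, 0, 0, 0]  # different response to each of 5 calls
flat = [50, 50, 50, 50, 50, 0, 0, 0, 0, 0]    # same response to each of 5 calls

graded_index = selectivity_index(graded)
flat_index = selectivity_index(flat)
graded_bits = noiseless_information_bits(graded)
flat_bits = noiseless_information_bits(flat)
```

Under these assumptions the graded neuron has a selectivity index of 3 yet carries about 2.16 bits, whereas the flat neuron has an index of 5 but carries only 1 bit, which is the dissociation described above. With trial-to-trial variability, the information values would be lower than these noise-free bounds.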

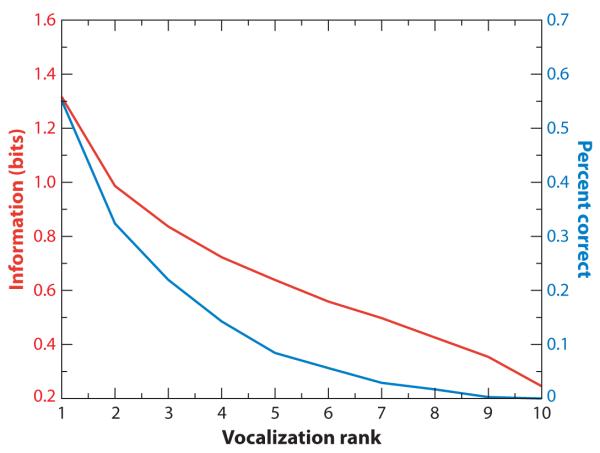

Thus, decoding analyses and associated information theoretic techniques were used to examine the amount of information single neurons provide about individual stimuli (Romanski et al. 2005). Specifically, these analyses characterized the number of stimuli that a single cell could discriminate and how well it could discriminate them. These analyses showed that single cells, on average, could correctly classify their best call in ~55% of individual trials, where 10% is chance. Performance for the second- and third-best calls fell quickly to ~32% and 22% (Figure 9). The information estimates showed similar values and decreased accordingly. Thus, although single neurons certainly were not detecting individual calls, their classification performance dropped off quickly. This result is similar to the encoding of faces by temporal lobe “face” cells (Rolls & Tovee 1995).
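A per-call percent-correct measure of this kind can be sketched as follows. This is not the decoder used in the cited study, whose details are not given here. It is a deliberately simple nearest-mean classifier on single-trial spike counts, evaluated with twofold cross-validation (even trials versus odd trials). The spike counts, the three-call stimulus set, and the function names are all hypothetical.

```python
def nearest_mean_decode(train, test):
    """train, test: dict mapping call name -> list of single-trial spike counts.
    Each test trial is assigned to the call with the nearest training mean.
    Returns a dict mapping call name -> fraction of test trials decoded correctly."""
    means = {call: sum(v) / len(v) for call, v in train.items()}
    result = {}
    for call, trials in test.items():
        hits = sum(
            1 for x in trials
            if min(means, key=lambda c: abs(x - means[c])) == call
        )
        result[call] = hits / len(trials)
    return result


def twofold_fraction_correct(counts):
    """Twofold cross-validation: fit on even trials and test on odd trials,
    then swap the two halves and average the per-call performance."""
    even = {c: v[0::2] for c, v in counts.items()}
    odd = {c: v[1::2] for c, v in counts.items()}
    first = nearest_mean_decode(even, odd)
    second = nearest_mean_decode(odd, even)
    return {c: (first[c] + second[c]) / 2 for c in counts}


# Hypothetical spike counts for one neuron, four trials per call.
counts = {
    "coo": [20, 22, 19, 21],
    "grunt": [5, 6, 12, 4],
    "bark": [7, 5, 6, 11],
}
result = twofold_fraction_correct(counts)
```

In this toy example the neuron's best call (coo) is decoded on every held-out trial, whereas the two weakly driven calls are confused with each other and are decoded at or near the chance level of one in three. The same qualitative pattern, good performance for the best call and a rapid fall-off thereafter, is what the text describes for VLPFC neurons.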

Figure 9.

Total information (in bits) and average percent correct (as percent × 0.01). The graph shows a tuning curve for the population average of VLPFC cells rank-ordered according to optimum vocalization. On average, VLPFC cells contain 1.3 bits of information (red line) about the optimum vocalization and 1 bit about the second-best vocalization. This result drops abruptly for additional vocalizations. In terms of the decoding analysis (percent correct, blue line), VLPFC cells are above chance (which would be 10%) at discriminating the best vocalization for any given neuron, and this number drops abruptly beyond two vocalizations. Adapted from Romanski et al. (2005).

Auditory responsive neurons in the VLPFC have been further examined by determining how they classify different vocalization types. Investigators have used a hierarchical cluster analysis based on the neural response to exemplars from each of 10 classes of vocalizations. In this analysis, stimuli that gave rise to similar firing rates in prefrontal neurons were clustered together, and stimuli that gave rise to different firing rates fell into different clusters (Figure 10). After dendrograms were fit to individual neurons, a consensus tree (Margush & McMorris 1981) was built from the clusters that occurred most frequently across neurons. Because different neurons were tested with different lists of vocalization exemplars from each call category, any consistent clustering of particular stimuli across lists would suggest that the VLPFC neurons were really responding similarly to those classes of stimuli, rather than to individual tokens, in a consistent manner.
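The per-neuron clustering step can be illustrated with a minimal sketch. The code below assumes average-linkage agglomerative clustering on the absolute difference between mean firing rates. The linkage rule and distance measure used in the cited work are not stated here, so both are assumptions, as are the firing rates and the function name `agglomerate`. The consensus-tree step across neurons is not shown.

```python
def agglomerate(mean_rates):
    """Average-linkage agglomerative clustering of stimuli by mean firing rate.
    mean_rates: dict mapping stimulus name -> mean rate for one neuron.
    Returns the merges in order as (cluster_a, cluster_b, distance) tuples."""
    clusters = [(name,) for name in mean_rates]
    merges = []

    def dist(a, b):
        # Mean absolute rate difference over all cross-cluster stimulus pairs.
        total = sum(abs(mean_rates[i] - mean_rates[j]) for i in a for j in b)
        return total / (len(a) * len(b))

    while len(clusters) > 1:
        pairs = [
            (dist(a, b), a, b)
            for i, a in enumerate(clusters)
            for b in clusters[i + 1:]
        ]
        d, a, b = min(pairs, key=lambda p: p[0])  # closest pair of clusters
        clusters = [c for c in clusters if c not in (a, b)] + [a + b]
        merges.append((a, b, d))
    return merges


# Hypothetical mean rates (spikes/s) of one neuron to five call types.
rates = {
    "coo": 30.0,
    "warble": 28.0,
    "grunt": 8.0,
    "shrill_bark": 12.0,
    "copulation_scream": 11.0,
}
merges = agglomerate(rates)
```

Reading the merges in order gives the dendrogram for this hypothetical neuron: the shrill bark and copulation scream join first, then the coo and warble, and the two resulting groups join last. Repeating this for every neuron and retaining the groupings that recur most often is the idea behind the consensus tree.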

Figure 10.

Typical prefrontal responses to macaque vocalizations and cluster analysis of mean response. (a, d) The neuronal response to 5 of 10 vocalization stimuli that were presented during passive fixation is shown (spike density function). (b, e) The mean response to all 10 vocalizations is depicted in a bar graph. (c, f) The dendrogram created from a hierarchical cluster analysis of the mean response is shown. Auditory responses that are similar cluster together, and these clusters are color-coded to match the bar graph and spike density function graphs. The cell in (a) responded best to the warble and coo stimuli, which are acoustically similar and to which the neuron responded in a similar manner. The cell in (d) responded best to 2 types of screams. The responses to these stimuli were similar and clustered together as shown in the dendrogram (f).

At the population level, we found a few classes of stimuli that often clustered together (Figure 11a). For example, aggressive calls and grunts, coos and warbles, and copulation screams and shrill barks all clustered together relatively often. When the vocalizations themselves were analyzed for similar spectral structure, several of the same clusters emerged, notably warbles and coos and aggressive calls and grunts (Figure 11b). Thus, the clusters, which were consistent across the neural population, were all composed of stimuli that were acoustically similar. Using other analysis methods, Tian et al. (2001) found similar results in lateral belt auditory cortex responses to a subset of rhesus macaque vocalizations (Figure 4). In this study, Tian et al. (2001) demonstrated that lateral belt neurons tended to respond in a similar manner to calls that had similar acoustic morphology.

Figure 11.

Vocalization and neuronal consensus trees. Consensus trees, based on the dendrograms for the vocalizations analyzed in Averbeck & Romanski (2006) (a) and for the neuronal response to the vocalizations reported in Romanski et al. 2005 (b) are shown. (a) Dendrograms were derived for each of the 12 vocalization lists, and a consensus tree of these was generated to indicate the common groupings according to acoustic features of the vocalizations. Warbles and coos and aggressive calls and grunts are two groups that occurred in the analysis of the vocalizations and in the analysis of the neural response to those vocalizations. Adapted from Romanski et al. (2005).

Not all studies agree on the way vocalizations are categorized by neurons in the frontal lobe. One study examined responses to vocalization sequences in which one vocalization was presented several times, after which it was followed by a different vocalization that differed either semantically or acoustically (similar to the habituation/dishabituation task used previously; Saffran et al. 1996, Gifford et al. 2003). Although this task differs in important ways from the task used in Romanski et al. (2005), both are passive listening tasks. Gifford et al. (2005), however, found that summed population neural responses tended to be different when there were transitions between semantically different categories but not between semantically similar categories. Summed population responses, however, lose much of the information present in neural responses (Reich et al. 2001, Montani et al. 2007). Thus, there may be information at the single-cell level that is being lost when population responses are examined. Moreover, although the early responses to some semantically similar calls appeared similar, after ~200 ms the neural responses appear to diverge. This is about the same time that acoustic differences between the relevant stimuli emerge. Furthermore, to truly distinguish semantic categorization from acoustic categorization, it seems important to show that sounds that have a similar acoustic morphology but differ in semantic context do not evoke a similar response, which has not yet been demonstrated. Finally, it may not be possible to dissociate acoustics and semantics because some calls that share a number of acoustic features may be uttered under similar behavioral contexts (Hauser & Marler 1993).

Another recent study has used neural decoding approaches to compare how well STG and VLPFC neurons discriminate among the 10 vocalization classes (Russ et al. 2008). This study showed that STG neurons carry more information about the vocalizations than do VLPFC neurons and also suggested that information about stimuli was maximal with extremely small bin sizes. It is difficult to interpret this result, however, because one needs more trials than parameters to estimate decoding models, usually by at least a factor of 10 (Averbeck 2009), unless regularization techniques are being used (Machens et al. 2004). The small bin sizes in the analysis used in Russ et al. (2008), however, led to the opposite situation (i.e., when a bin size of 2 ms is used over a window of 700 ms, to estimate means for 10 stimuli, 3500 parameters need to be estimated; whereas, in fact, only on the order of several hundred trials were available in total to fit these models). Similar analyses (Averbeck & Romanski 2006) have found that a bin size of 60 ms is optimal using twofold cross validation, which minimizes the problem of overfitting. Overfitting is often still present with leave-one-out cross validation.
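The arithmetic behind this argument is easy to check. The sketch below counts the mean parameters a binned decoding model must estimate (one mean per time bin per stimulus) and compares that count with the number of available trials. The figure of 300 trials is an assumed stand-in for "several hundred", and the function name is hypothetical.

```python
def n_mean_parameters(window_ms, bin_ms, n_stimuli):
    """One mean per time bin per stimulus. Bins that do not fit completely
    inside the window are dropped (floor division)."""
    n_bins = window_ms // bin_ms
    return n_bins * n_stimuli


N_TRIALS = 300  # assumed illustrative value for "several hundred trials"

params_2ms = n_mean_parameters(700, 2, 10)    # 350 bins x 10 stimuli
params_60ms = n_mean_parameters(700, 60, 10)  # 11 bins x 10 stimuli

trials_per_param_2ms = N_TRIALS / params_2ms
trials_per_param_60ms = N_TRIALS / params_60ms
```

With 2-ms bins there are 3500 parameters, so under the assumed trial count there is less than one-tenth of a trial per parameter. With 60-ms bins there are 110 parameters and roughly three trials per parameter, which is a far less underdetermined problem even though it remains below the factor of 10 mentioned above.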

Selectivity in VLPFC neurons is also somewhat controversial. In Russ et al. (2008), a preference index was calculated for VLPFC neurons, and it showed that prefrontal neurons responded to more than 5 of 10 vocalizations, indicating very little selectivity in prefrontal neurons. This result stands in contrast to that of Romanski et al. (2005), which calculated an average preference index for VLPFC neurons of 2–3 call types out of 10 (Figure 8) in neurons that had first been shown to be responsive to the vocalization category. Lack of selectivity (i.e., high call preference index values) in some studies could be due to inclusion of neurons that were not responding to the vocalizations because this practice would lead to an increase in this metric.

Computational Approaches to Understanding Vocalization Feature Coding in VLPFC

Although the previous analyses have determined that prefrontal neurons respond to complex sounds including vocalizations, they still do not address the question of which features of the stimuli are actually driving the neural responses. One way to address this question is to use a feature elimination approach (Rolls et al. 1987, Rauschecker et al. 1995, Tanaka 1997, Rauschecker 1998a, Kayaert et al. 2003). In this approach, one starts with a complex stimulus that robustly drives the neuron to respond, and then one removes features from the stimulus. If the neuron still responds to the reduced stimulus, the remaining features must be driving the neural response. This approach has been used in studies of marmoset primary auditory cortex neurons and macaque belt auditory cortex (Wang 2000; Rauschecker et al. 1995; Rauschecker 1998a,b).

One approach to this question is to use principal and independent component analysis (PCA/ICA) to rigorously define feature dimensions (Averbeck & Romanski 2004). PCA identifies features that correspond closely to the dominant spectral or second-order components of the calls, for example, the formants, whereas ICA identifies features related to components beyond second order. Specifically, ICA can be used to extract features that retain bi-spectral (third- or higher-order) nonlinear components of the calls. This study showed that after projecting each call into a subset of the principal or independent components, the dominant Fourier components seen in the spectrogram were preserved (Figure 12a,b and 12d,f). The independent components, however, retained power across multiple harmonically related frequencies (Figure 12c,e,g). Furthermore, examination of the bi-coherence, which shows phase locking across harmonically related frequencies, showed that the ICA subspace tended to retain phase information across multiple harmonically related frequencies, whereas the PCA retained phase information only at frequencies that had more power, which tended to be lower frequencies. This difference matters because phase may be highly important for stimulus identification (Oppenheim & Lim 1981).
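The filtering operation referred to here, projecting a call into a subset of components, can be written compactly. The expression below is a generic sketch of projection onto the first k principal components and is not taken from the cited study. The symbols are assumptions: x is a call waveform (or a segment of it) treated as a vector, x̄ is the mean over the call set, and w_i are the orthonormal eigenvectors of the call covariance ordered by decreasing variance.

$$
\hat{\mathbf{x}}_{\mathrm{PCA}} = \bar{\mathbf{x}} + \sum_{i=1}^{k} \left[ \mathbf{w}_i^{\top} (\mathbf{x} - \bar{\mathbf{x}}) \right] \mathbf{w}_i , \qquad k = 10
$$

Independent component basis vectors are generally not orthogonal, so the analogous operation is assumed here to be a least-squares projection onto the span of the first k basis vectors, collected as the columns of a matrix A_k:

$$
\hat{\mathbf{x}}_{\mathrm{ICA}} = \bar{\mathbf{x}} + \mathbf{A}_k \left( \mathbf{A}_k^{\top} \mathbf{A}_k \right)^{-1} \mathbf{A}_k^{\top} (\mathbf{x} - \bar{\mathbf{x}})
$$

In both cases the filtered call keeps only the part of the signal that lies in the chosen feature subspace, which is what allows the neural response to the reduced stimulus to be compared with the response to the original.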

Figure 12.

Principal and independent component filtering of a coo. (a) Spectrogram of an unfiltered coo. (b) Spectrogram of a coo after projecting into the first 10 principal components. (c) Spectrogram of a coo after projecting into the first 10 independent components. (d) Fourier representation of the first principal component. (e) Fourier representation of the first independent component. (f) Time representation of the first principal component. (g) Time representation of the first independent component.

Preliminary neurophysiological evidence suggests that the independent components preserve more of the features that are important to the neural responses. In many cases, the neural responses to the ICA-filtered calls were similar to those seen to the original call (Figure 13a), and in some cases the response to the ICA-filtered call was even stronger than the response to the unfiltered call (Figure 13b). This result could occur if some of the feature dimensions present in the original call were actually suppressive, in which case removing them would result in a stronger response. Thus, this approach provides a tool for uncovering the important stimulus features in macaque vocalizations that may be driving neural responses. Furthermore, it allows one to compare the ability of PCA and ICA to identify features of vocalizations that are most relevant to auditory neurons.

Figure 13.

Single neuron responses to original and filtered calls. (a) Response of a single VLPFC neuron to a shrill bark and to the same shrill bark filtered with either the first 10 principal or independent components. (b,d) Mean firing rate to original and filtered calls. (c) Response of a single VLPFC neuron to a grunt and the same grunt filtered with either the first 10 principal or independent components.

Complementary to the work on feature elimination, other techniques have been used to identify which features of the vocalizations are driving the time-varying neural responses (Averbeck & Romanski 2006, Cohen et al. 2007). In this work, rather than taking a feature-elimination approach to try to preserve neural responses to reduced stimuli, investigators compared models that attempt to predict bin-by-bin neural responses on the basis of the time course of the vocalizations. In one study, Averbeck & Romanski (2006) examined first the features of the vocalizations that were useful for discriminating among the classes of calls because these are likely to be the behaviorally relevant features and are therefore the features being processed by the auditory system. Models were then used to examine how these might be encoded in the responses of single neurons.

This study found that global features of the auditory stimuli, including frequency and temporal contrast, were not highly useful for discriminating among stimuli (Figure 14). Dynamic features of the stimuli captured by a Hidden Markov Model (HMM), however, were much more effective at discriminating among stimuli (~40% discrimination performance for global features, 75% for dynamic features). Thus, the HMM captured much more of the statistical structure of the vocalizations necessary to distinguish among them.
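To illustrate why a model of stimulus dynamics can discriminate calls that share the same global statistics, the sketch below classifies a short symbol sequence using one HMM per call class and the standard forward recursion. It is a toy construction, not the model from the cited study. The two classes, the two-symbol alphabet (0 = low-frequency frame, 1 = high-frequency frame), all probabilities, and the function names are hypothetical, and a uniform prior over classes is assumed.

```python
def forward_likelihoods(obs, pi, A, B):
    """HMM forward recursion without scaling (adequate for short sequences).
    pi: initial state probabilities, A[i][j]: transition i -> j,
    B[i][o]: probability that state i emits symbol o.
    Returns P(o_1..o_t | model) for every t."""
    n = len(pi)
    alpha = [pi[i] * B[i][obs[0]] for i in range(n)]
    out = [sum(alpha)]
    for o in obs[1:]:
        alpha = [
            sum(alpha[i] * A[i][j] for i in range(n)) * B[j][o]
            for j in range(n)
        ]
        out.append(sum(alpha))
    return out


def class_posteriors(obs, models):
    """models: dict mapping class name -> (pi, A, B).
    Returns, for each time step, the posterior probability of each class
    given the observations so far (Bayes' rule with a uniform prior)."""
    like = {k: forward_likelihoods(obs, *m) for k, m in models.items()}
    post = []
    for t in range(len(obs)):
        z = sum(like[k][t] for k in like)
        post.append({k: like[k][t] / z for k in like})
    return post


# Both hypothetical classes use the same symbols equally often overall but in
# opposite temporal order: "rising" goes low -> high, "falling" goes high -> low.
left_to_right = [[0.5, 0.5], [0.0, 1.0]]
models = {
    "rising": ([1.0, 0.0], left_to_right, [[0.9, 0.1], [0.1, 0.9]]),
    "falling": ([1.0, 0.0], left_to_right, [[0.1, 0.9], [0.9, 0.1]]),
}
posteriors = class_posteriors([0, 0, 1, 1], models)
```

For the sequence low, low, high, high, the posterior for the "rising" class starts at 0.9 and exceeds 0.99 by the last frame, even though a measure that ignores temporal order, such as the overall proportion of high and low frames, could not separate the two classes. For long sequences the unscaled recursion would underflow, and a scaled or log-domain version would be needed.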

Figure 14.

Temporal and spectral modulation of coo and gekker. (a, top) Spectrogram of coo. (Second from top) Modulation spectra of coo (i.e., Fourier transform of spectrogram). (Third from top) Average frequency modulation, computed by averaging across the time dimension in modulation spectra. (Bottom) Average temporal modulation, computed by averaging across the frequency dimension in modulation spectra.

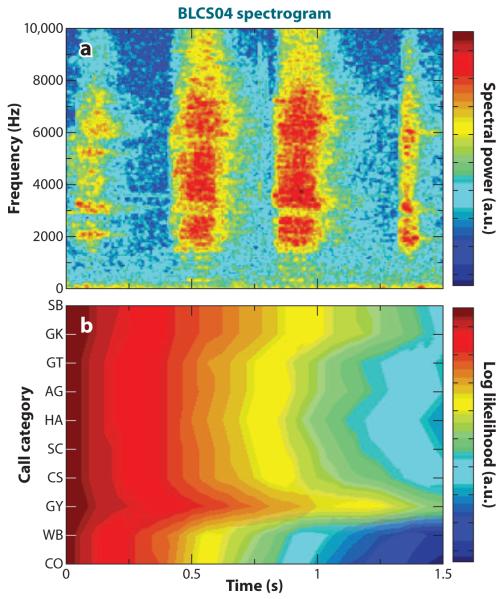

The hypothesis that VLPFC neural responses could be accounted for by using the HMM was subsequently examined. The HMM produced, for each vocalization, an estimate of the probability that the vocalization comes from each of the 10 classes as a function of time (Figure 15b). Thus, the HMM maps the time-frequency representation of the vocalization onto a time-probability representation, where it tracks the probability of the vocalization belonging to each of the 10 classes as a function of time. Because there is a time-frequency representation and a corresponding time-probability representation, linear transformations of these representations to predict neural responses could be examined. The linear transformation of the time-frequency representation is often known as a spectral-temporal receptive field (STRF). The linear transformation of the probability representation was analogously called a linear-probabilistic receptive field (LPRF). The LPRF was better able to predict the neural responses than was the STRF (Averbeck & Romanski 2006). This finding suggests that the time-probability representation may be closer to the input of VLPFC neurons than a time-frequency representation would be, if one assumes that neurons cannot compute highly nonlinear functions of their inputs.
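The parallel between the two models can be made explicit. The expressions below are a generic sketch of linear receptive-field models and are not copied from the cited study. All symbols are assumptions: s(f, t) is the spectrogram power at frequency f and time t, p(k, t) is the HMM probability of call class k at time t, τ indexes time lags, h and g are the fitted weights, and c and c′ are constant offsets.

$$
\hat{r}_{\mathrm{STRF}}(t) = c + \sum_{f} \sum_{\tau} h(f, \tau)\, s(f, t - \tau)
$$

$$
\hat{r}_{\mathrm{LPRF}}(t) = c' + \sum_{k=1}^{10} \sum_{\tau} g(k, \tau)\, p(k, t - \tau)
$$

The two models have the same linear form and differ only in the representation on which they operate. A better fit for the second model therefore speaks to which representation is more nearly linearly related to the firing rate, rather than to any difference in model complexity of kind.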

Figure 15.

Spectrogram (a) and time-probability plot (b) of a copulation scream. Time probability values were generated by the Hidden Markov Model.

Much of the work just described has developed out of an interaction between theoretical and experimental approaches. This work can be further developed in several directions, both theoretical and experimental. For example, one of the most fruitful ways to assess sensory processing is to try to understand how signals evolve across synapses. Therefore, a better understanding of the representation of vocalizations in the areas that send acoustic information to VLPFC, using the same tools that have been previously used to study VLPFC, would be highly useful. For example, it is well known that spectral-temporal receptive fields can accurately characterize representations very early in the auditory system, whereas the representation in VLPFC can be better characterized using an HMM, which is related specifically to categories of macaque vocalizations. Where in the brain does the processing or computation take place that develops this categorical representation from the spectral-temporal representation? Has it happened already at the level of the sensory thalamus, or is it carried out at the cortical level? Recordings at early stages of auditory processing, using similar stimulus sets and analyzed with the HMM, could help resolve this question. Clarification in this area could have direct relevance for understanding why strokes or lesions at various stages of auditory processing induce particular deficits.

Moreover, although much of the work up to this point has focused on the sensory representation in VLPFC, there may also be an associated motor representation or this sensory representation may be important for motor processing at some level. Although some studies have claimed that the ventral premotor area in the macaque (area F5), which contains mirror neurons, is the evolutionary precursor to the human language system (Rizzolatti & Arbib 1998), some features of VLPFC suggest that it too could be an important evolutionary precursor to human language areas. First, it clearly contains a representation of vocalizations, which are a more likely candidate precursor to human language than are hand movements. Second, the VLPFC representation is just ventral to a significant motor-sequence representation in caudal area 46 (Isoda & Tanji 2003, Averbeck et al. 2006). Third, previous studies in nonhuman primates have suggested that VLPFC may be important in the association of a stimulus with a particular motor response, known as conditional association or action selection (Petrides 1985, Rushworth et al. 2005). One might consider the process of articulation and phonation as an elaborate series of conditional associations of specific acoustic stimuli with precise articulatory movements. Thus the combination of a motor sequence representation and a vocalization representation may be an important component of a macaque system that could be a precursor to the human language system.

Future studies that include recordings in more natural settings where callers and listeners can be monitored electrophysiologically may allow us to determine which features, behavioral or acoustic, are processed by VLPFC neurons. Combining vocalizations with the corresponding facial gestures may also offer clues to semantic processing by the PFC. This possibility is intriguing because of the discovery that some VLPFC neurons are, in fact, multisensory (Sugihara et al. 2006) and respond to both particular vocalizations and the accompanying facial gesture.

MULTISENSORY PROCESSING OF VOCALIZATIONS

Over the past decade, research on multisensory processing has indicated that many previously identified “unimodal” auditory cortical areas may be multisensory in nature (Ghazanfar & Schroeder 2008). Although studies have previously documented audio-visual responses in association cortices by testing auditory and visual stimuli separately (Benevento et al. 1977, Bruce et al. 1981, Hikosaka et al. 1988), more recent studies have used paradigms that rely on the comparison of simultaneous presentation of related auditory and visual stimuli with unimodal presentations. These studies have also utilized natural stimuli, including vocalizations, to search for multisensory responsive cells. For example, single neurons in the STS were found to be modulated by the sight of actions paired with the corresponding sound (Barraclough et al. 2005). In the auditory cortex, several studies have examined the neural response to vocalizations paired with corresponding facial gestures and have found multisensory activity in early auditory-processing regions. As mentioned previously, Ghazanfar and colleagues (2005, 2008) recorded single units and local field potential activity in primary and lateral belt auditory cortex and found evidence of multisensory integration for dynamic face and vocalization stimuli (Figure 5) early in the auditory cortical hierarchy.

The juxtaposition of auditory and visual responsive cells in the ventral PFC and the widespread connectivity of the frontal lobes make them a likely candidate for integrating audiovisual signals related to communication. Several single-unit recording studies demonstrated responses to both auditory and visual stimuli in the VLPFC. Benevento et al. (1977) found neurons in VLPFC (area 12o) that were responsive to simple auditory and visual stimuli (clicks and light flashes), and at least some of these interactions were due to convergence on single cortical cells. Fuster and colleagues recorded from the lateral frontal cortex during an audio-visual matching task (Bodner et al. 1996, Fuster et al. 2000). In this task, prefrontal cells responded selectively to tones, and most of them also responded to colors according to the task rule (Fuster et al. 2000). Using natural stimuli, Romanski and colleagues demonstrated multisensory responses to simultaneously presented faces and vocalizations in more than half of the recorded population of single units (Figure 16) (Sugihara et al. 2006). Neurons were tested with dynamic faces, vocalizations, or simultaneously presented faces + vocalizations. VLPFC multisensory neurons responded either to the auditory and visual stimuli when presented separately or to their simultaneous presentation (Sugihara et al. 2006). Sugihara et al. (2006) further characterized multisensory responses as an enhancement (Figure 16a) or a suppression (Figure 16b) of the unimodal response, and they determined that multisensory suppression was more common in prefrontal neurons. Furthermore, some previously identified vocalization or face-responsive cells in the PFC may, in fact, have been bimodal (O’Scalaidhe et al. 1997, Cohen et al. 2005, Romanski et al. 2005, Gifford et al. 2005). 
This determination would not be surprising in light of the many studies describing multisensory responses in cortical and subcortical regions (Ghazanfar & Schroeder 2008) and given the need to specifically test simultaneous face and vocalization stimuli to reveal these responses. The demonstration that some prefrontal neurons are responsive to both faces and species-specific vocalizations does not negate their role in complex auditory processing but merely adds to the amount of information that they may carry as part of an auditory-processing hierarchy.
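The distinction between multisensory enhancement and suppression can be sketched numerically. The code below uses an index that is common in the multisensory literature, the percentage change of the bimodal response relative to the larger of the two unimodal responses. Whether the study cited above used this exact index is not stated here, so it should be read as an assumption. The firing rates, the tolerance parameter, and the function names are hypothetical, and a real analysis would rely on a statistical test across trials rather than a fixed threshold on mean rates.

```python
def multisensory_index(a, v, av):
    """Percentage change of the audio-visual response (av) relative to the
    best unimodal response, max(a, v). Rates are in spikes/s above baseline."""
    best = max(a, v)
    return 100.0 * (av - best) / best


def classify_interaction(a, v, av, tol=10.0):
    """Label a response as enhancement or suppression when the index exceeds
    an assumed tolerance (in percent); otherwise report no clear change."""
    idx = multisensory_index(a, v, av)
    if idx > tol:
        return "enhancement"
    if idx < -tol:
        return "suppression"
    return "no clear change"


# Hypothetical mean responses of two neurons (audio, visual, audio-visual).
enhanced = classify_interaction(a=10.0, v=20.0, av=30.0)
suppressed = classify_interaction(a=10.0, v=20.0, av=15.0)
```

In this example the first neuron's bimodal response is 50% above its best unimodal response (enhancement), and the second neuron's is 25% below it (suppression).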

Figure 16.

Multisensory neuronal responses in prefrontal cortex (PFC). The responses of two single units are shown in (a) and (b) as raster/spike density plots to a vocalization alone (Audio, A) and a face (Visual, V) and both presented simultaneously (Audio-Visual, AV). A bar graph of the mean response to these stimuli is shown at the right. The cell in (a) exhibited multisensory enhancement, and the cell in (b) demonstrated multisensory suppression. The location where multisensory neurons (red circles) were found in the VLPFC is depicted on a lateral view of the frontal lobe in (c).

CONCLUSIONS

Anatomical and physiological analyses of nonprimary auditory cortex have expanded our knowledge of the processing of complex sounds, including communication-relevant vocalizations. Neurophysiological recording and neuroimaging studies have revealed that many auditory cortical areas show a preference for species-specific vocalizations. With the increasing use of neuroimaging techniques, including fMRI and magnetoencephalography, knowledge gained from animal studies is being applied to the study of the human brain with encouraging results that point to a similarity in the organization of the auditory cortex among primates (Hackett et al. 2001). In the years ahead, knowledge of which auditory features, or other aspects of a stimulus, are encoded by neurons at every point in the auditory hierarchy, up to and including the frontal lobe, will allow us to understand the neuronal mechanisms underlying the processing of complex sounds, including those of human language.

SUMMARY POINTS

Anatomical analysis of the auditory cortex in the temporal lobe has indicated that it is organized as a core region of the primary auditory cortex surrounded by a belt of association auditory cortical areas and further bounded by a parabelt auditory region. These cortical regions are reciprocally connected with each other in a topographic manner.

The belt and the parabelt are connected with higher auditory-processing regions in the STS and with prefrontal cortical regions. The projections from the auditory cortex to the prefrontal cortex appear to be organized as dorsal and ventral streams analogous to those of the visual system: Caudal auditory cortical regions project more densely to dorsal-caudal prefrontal cortex, whereas anterior belt and parabelt auditory cortices are reciprocally connected with rostral and ventral prefrontal cortex.

Species-specific vocalizations have been used to understand auditory processing in early and late auditory cortical processing regions. Neurons in several regions within the temporal lobe auditory cortical system have shown a preference for species-specific vocalization stimuli compared with other complex and simple sounds.

An auditory responsive domain exists in the primate prefrontal cortex, located in areas 12 and 45 (ventrolateral prefrontal cortex, VLPFC), where neurons show robust responses to complex acoustic stimuli, including species-specific vocalizations, human vocalizations, environmental sounds, and other complex sounds.

Auditory neurons in the VLPFC respond to species-specific vocalizations from a behaviorally and acoustically organized library of 10 call categories. Prefrontal neurons typically respond to 2–3 species-specific vocalization types, which appear to have similar acoustic morphology.

Predictions of the responses of ventrolateral prefrontal neurons with hidden Markov models suggest that the responses of these neurons reflect sophisticated, dynamic processing of information relevant to the behavioral/acoustic categories of the calls.

Some neurons in the ventrolateral prefrontal cortex are multisensory and respond to both vocalizations and the corresponding facial gestures. Multisensory neurons have been localized to areas 12 and 45 and include some of the same prefrontal areas where unimodal auditory or visual neurons were previously recorded.

FUTURE ISSUES

Which key features do auditory neurons in different regions of the auditory cortical hierarchy encode?

What role does the ventral prefrontal cortex play in complex auditory object encoding?

How do auditory cortical regions respond to sounds during natural behaviors such as discrimination of conspecific calls in a group setting?

Are all auditory association neurons multisensory?

Are there auditory responsive regions in other portions of the prefrontal cortex, such as the medial prefrontal cortex, and how might their role differ from that of the regions in the ventrolateral prefrontal cortex?

Are the frontal auditory areas important for call production as well as call processing?

How do neural representations of vocalizations evolve and change with experience?

Glossary

- AI

primary or core auditory area

- R

rostral core auditory area

- RT

rostral temporal core auditory area

- AL

antero-lateral belt auditory cortex

- ML

middle-lateral belt auditory cortex

- CL

caudal-lateral belt auditory cortex

- STG

superior temporal gyrus

- TPO

temporal parieto-occipital area in STS

- STS

superior temporal sulcus

- TAa

temporal area in STS

- PFC

prefrontal cortex

- VLPFC

ventrolateral prefrontal cortex

- DLPFC

dorsolateral prefrontal cortex

Footnotes

DISCLOSURE STATEMENT

The authors are not aware of any affiliations, memberships, funding, or financial holdings that might be perceived as affecting the objectivity of this review.

LITERATURE CITED

- Averbeck BB. Noise correlations and information encoding and decoding. In: Rubin J, Josic K, Matias M, Romo R, editors. Coherent Behavior in Neuronal Networks. Springer; New York: 2009. In press.

- Averbeck BB, Crowe DA, Chafee MV, Georgopoulos AP. Neural activity in prefrontal cortex during copying geometrical shapes. II. Decoding shape segments from neural ensembles. Exp. Brain Res. 2003;150:142–53. doi: 10.1007/s00221-003-1417-5.

- Averbeck BB, Romanski LM. Principal and independent components of macaque vocalizations: constructing stimuli to probe high-level sensory processing. J. Neurophysiol. 2004;91:2897–909. doi: 10.1152/jn.01103.2003.

- Averbeck BB, Romanski LM. Probabilistic encoding of vocalizations in macaque ventral lateral prefrontal cortex. J. Neurosci. 2006;26:11023–33. doi: 10.1523/JNEUROSCI.3466-06.2006.

- Averbeck BB, Seo M. The statistical neuroanatomy of frontal networks in the macaque. PLoS Comput. Biol. 2008;4:e1000050. doi: 10.1371/journal.pcbi.1000050.

- Averbeck BB, Sohn JW, Lee D. Activity in prefrontal cortex during dynamic selection of action sequences. Nat. Neurosci. 2006;9:276–82. doi: 10.1038/nn1634.

- Azuma M, Suzuki H. Properties and distribution of auditory neurons in the dorsolateral prefrontal cortex of the alert monkey. Brain Res. 1984;298:343–46. doi: 10.1016/0006-8993(84)91434-3.

- Barbas H. Anatomic organization of basoventral and mediodorsal visual recipient prefrontal regions in the rhesus monkey. J. Comp. Neurol. 1988;276:313–42. doi: 10.1002/cne.902760302.

- Barbas H. Architecture and cortical connections of the prefrontal cortex in the rhesus monkey. Adv. Neurol. 1992;57:91–115.

- Barraclough NE, Xiao D, Baker CI, Oram MW, Perrett DI. Integration of visual and auditory information by superior temporal sulcus neurons responsive to the sight of actions. J. Cogn. Neurosci. 2005;17:377–91. doi: 10.1162/0898929053279586.

- Baylis GC, Rolls ET, Leonard CM. Functional subdivisions of the temporal lobe neocortex. J. Neurosci. 1987;7:330–42. doi: 10.1523/JNEUROSCI.07-02-00330.1987.

- Belin P, Zatorre RJ, Lafaille P, Ahad P, Pike B. Voice-selective areas in human auditory cortex. Nature. 2000;403:309–12. doi: 10.1038/35002078.

- Benevento LA, Fallon J, Davis BJ, Rezak M. Auditory-visual interaction in single cells in the cortex of the superior temporal sulcus and the orbital frontal cortex of the macaque monkey. Exp. Neurol. 1977;57:849–72. doi: 10.1016/0014-4886(77)90112-1.

- Bodner M, Kroger J, Fuster JM. Auditory memory cells in dorsolateral prefrontal cortex. Neuroreport. 1996;7:1905–8. doi: 10.1097/00001756-199608120-00006.

- Broca P. Remarques sur le siège de la faculté du langage articulé, suivies d’une observation d’aphémie (perte de la parole). Bull. Société d’Anthropol. 1861;2:330–37.

- Bruce C, Desimone R, Gross CG. Visual properties of neurons in a polysensory area in superior temporal sulcus of the macaque. J. Neurophysiol. 1981;46:369–84. doi: 10.1152/jn.1981.46.2.369.

- Buckner RL, Raichle ME, Petersen SE. Dissociation of human prefrontal cortical areas across different speech production tasks and gender groups. J. Neurophysiol. 1995;74:2163–73. doi: 10.1152/jn.1995.74.5.2163.

- Chavis DA, Pandya DN. Further observations on corticofrontal connections in the rhesus monkey. Brain Res. 1976;117:369–86. doi: 10.1016/0006-8993(76)90089-5.

- Cohen YE, Russ BE, Gifford GW, III. Auditory processing in the posterior parietal cortex. Behav. Cogn. Neurosci. Rev. 2005;4:218–31. doi: 10.1177/1534582305285861.

- Cohen YE, Theunissen F, Russ BE, Gill P. Acoustic features of rhesus vocalizations and their representation in the ventrolateral prefrontal cortex. J. Neurophysiol. 2007;97:1470–84. doi: 10.1152/jn.00769.2006.

- Cover TA, Thomas JA. Elements of Information Theory. 2nd ed. Wiley Intersci.; New York: 2006.

- Demb JB, Desmond JE, Wagner AD, Vaidya CJ, Glover GH, Gabrieli JD. Semantic encoding and retrieval in the left inferior prefrontal cortex: a functional MRI study of task difficulty and process specificity. J. Neurosci. 1995;15:5870–78. doi: 10.1523/JNEUROSCI.15-09-05870.1995.

- Diehl MM, Bartlow-Kang J, Sugihara T, Romanski LM. Distinct temporal lobe projections to auditory and visual regions in the ventral prefrontal cortex support face and vocalization processing. Soc. Neurosci. Abstr. 2008;34:387–25.

- Eliades SJ, Wang X. Dynamics of auditory-vocal interaction in monkey auditory cortex. Cereb. Cortex. 2005;15:1510–23. doi: 10.1093/cercor/bhi030.

- Fecteau S, Armony JL, Joanette Y, Belin P. Sensitivity to voice in human prefrontal cortex. J. Neurophysiol. 2005;94:2251–54. doi: 10.1152/jn.00329.2005.

- Fiez JA, Raife EA, Balota DA, Schwarz JP, Raichle ME, Petersen SE. A positron emission tomography study of the short-term maintenance of verbal information. J. Neurosci. 1996;16:808–22. doi: 10.1523/JNEUROSCI.16-02-00808.1996.

- Friederici AD, Ruschemeyer SA, Hahne A, Fiebach CJ. The role of left inferior frontal and superior temporal cortex in sentence comprehension: localizing syntactic and semantic processes. Cereb. Cortex. 2003;13:170–77. doi: 10.1093/cercor/13.2.170.

- Fuster JM, Bodner M, Kroger JK. Cross-modal and cross-temporal association in neurons of frontal cortex. Nature. 2000;405:347–51. doi: 10.1038/35012613.

- Gabrieli JDE, Poldrack RA, Desmond JE. The role of left prefrontal cortex in language and memory. Proc. Natl. Acad. Sci. USA. 1998;95:906–13. doi: 10.1073/pnas.95.3.906.

- Galaburda AM, Pandya DN. The intrinsic architectonic and connectional organization of the superior temporal region of the rhesus monkey. J. Comp. Neurol. 1983;221:169–84. doi: 10.1002/cne.902210206.

- Ghazanfar AA, Chandrasekaran C, Logothetis NK. Interactions between the superior temporal sulcus and auditory cortex mediate dynamic face/voice integration in rhesus monkeys. J. Neurosci. 2008;28:4457–69. doi: 10.1523/JNEUROSCI.0541-08.2008.

- Ghazanfar AA, Maier JX, Hoffman KL, Logothetis NK. Multisensory integration of dynamic faces and voices in rhesus monkey auditory cortex. J. Neurosci. 2005;25:5004–12. doi: 10.1523/JNEUROSCI.0799-05.2005.

- Ghazanfar AA, Schroeder CE. Is neocortex essentially multisensory? Trends Cogn. Sci. 2008;10:278–85. doi: 10.1016/j.tics.2006.04.008.

- Gifford GW, III, Hauser MD, Cohen YE. Discrimination of functionally referential calls by laboratory-housed rhesus macaques: implications for neuroethological studies. Brain Behav. Evol. 2003;61:213–24. doi: 10.1159/000070704.

- Gifford GW, III, Maclean KA, Hauser MD, Cohen YE. The neurophysiology of functionally meaningful categories: Macaque ventrolateral prefrontal cortex plays a critical role in spontaneous categorization of species-specific vocalizations. J. Cogn. Neurosci. 2005;17:1471–82. doi: 10.1162/0898929054985464.

- Goldman PS, Rosvold HE. Localization of function within the dorsolateral prefrontal cortex of the rhesus monkey. Exp. Neurol. 1970;27:291–304. doi: 10.1016/0014-4886(70)90222-0.

- Gross CG. A comparison of the effects of partial and total lateral frontal lesions on test performance by monkeys. J. Comp. Physiol. Psychol. 1963;56:41–47. doi: 10.1037/h0048041.

- Gross CG, Weiskrantz L. Evidence for dissociation of impairment on auditory discrimination and delayed response following lateral frontal lesions in monkeys. Exp. Neurol. 1962;5:453–76. doi: 10.1016/0014-4886(62)90057-2.

- Hackett TA. Primate Audition: Ethology and Neurobiology. CRC Press; New York: 2003. The comparative anatomy of the primate auditory cortex; pp. 199–225.

- Hackett TA, De La Mothe LA, Ulbert I, Karmos G, Smiley J, Schroeder CE. Multisensory convergence in auditory cortex. II. Thalamocortical connections of the caudal superior temporal plane. J. Comp. Neurol. 2007;502:924–52. doi: 10.1002/cne.21326.

- Hackett TA, Preuss TM, Kaas JH. Architectonic identification of the core region in auditory cortex of macaques, chimpanzees, and humans. J. Comp. Neurol. 2001;441:197–222. doi: 10.1002/cne.1407.

- Hackett TA, Stepniewska I, Kaas JH. Subdivisions of auditory cortex and ipsilateral cortical connections of the parabelt auditory cortex in macaque monkeys. J. Comp. Neurol. 1998a;394:475–95. doi: 10.1002/(sici)1096-9861(19980518)394:4<475::aid-cne6>3.0.co;2-z.