Abstract

Rhythmic local field potentials (LFP) arise from coordinated neural activity. Inference of neural function based on the properties of brain rhythms remains a challenging data analysis problem. Algorithms that characterize non-stationary rhythms with high temporal and spectral resolution may be useful for interpreting LFP activity on the timescales in which they are generated. We propose a Kalman smoother based dynamic autoregressive model for tracking the instantaneous frequency (iFreq) and frequency modulation (FM) of noisy and non-stationary sinusoids such as those found in LFP data. We verify the performance of our algorithm using simulated data with broad spectral content, and demonstrate its application using real data recorded from behavioral learning experiments. In analyses of ripple oscillations (100-250 Hz) recorded from the rodent hippocampus, our algorithm identified novel repetitive, short timescale frequency dynamics. Our results suggest that iFreq and FM may be useful measures for the quantification of small timescale LFP dynamics.

Keywords: autoregressive model, Kalman smoother, frequency modulation, adaptive filter, hippocampus, ripple oscillation

1 Introduction

Understanding how local field potentials (LFPs) are modulated in amplitude and frequency may facilitate the use of LFP activity to infer neural function. To this end, determining whether or not small timescale variations in LFP activity correspond to functional or noise processes is important. In particular, the instantaneous oscillation frequency (iFreq) and frequency modulation (FM) of brain rhythms may be informative measures of network state. Here, we derive and discuss a novel analytical framework that will facilitate the characterization of LFP activity using iFreq and FM.

The activation of neural circuits and the characteristics of LFP activity are intimately tied to cognitive function and behavioral state. For example, during sleep, slow oscillations (< 1 Hz) observed in the neocortex emerge when thalamocortical circuits are engaged (Steriade et al., 1993); in addition, delta waves (1-4 Hz) and spindles (7-14 Hz) are found to be superimposed on the positive half-wave of such slow oscillations (Steriade, 2006). In the hippocampus (HPC), the prominent θ -rhythm (6-12 Hz) is associated with sensorimotor integration during locomotion and can be sufficiently driven by cholinergic activity from the medial septal nuclei and diagonal band of Broca (Bland, 1986). The θ -rhythm has also been linked to cognitive function; Jones et. al. demonstrated that the θ -rhythm in the prefrontal cortex and hippocampus became more coherent during behavioral epochs where more demand is placed on working memory (Jones and Wilson, 2005). Communication between the hippocampus and prefrontal cortex may also be an important component of the memory consolidation circuit; Molle et. al. found that the phase of the slow oscillation and the amount of multi-unit activity in the prefrontal cortex was strongly correlated with the activation of hippocampal sharp-wave ripple events (Molle et al., 2006). Coherent gamma band activity (20-70 Hz) between brain regions have been shown to coordinate activity between brain structures during learning (Miltner et al., 1999; Popescu et al., 2009). The ability to characterize LFP activity with both temporal and spectral precision may help us to understand the neural computations underlying brain function.

The spectrogram, which is commonly used to quantify and visualize LFP characteristics, may be computed using two families of algorithms: parametric and non-parametric. The non-parametric class of algorithms generally require very few assumptions to be made about the data, but have well-known trade-offs between temporal resolution, spectral resolution, and estimation uncertainty (Percival and Walden, 1993). Because of these trade-offs, it has been difficult to systematically explore rhythmic field potential activity on the same timescale of neuronal interactions. Alternatively, parametric methods involve the use of an explicit data-generating model. More specifically, an autoregressive (AR) process may be used to model activity that is generated by one or more superimposed and noisy sinusoids, like the activity found in LFP recordings. The AR model is composed of parameters, which define the general structure of the model, and coefficients, which are realized by fitting the AR model to the data (Percival and Walden, 1993). Although visualization of the power spectral density (PSD) is a powerful inference tool, the primary advantage of the AR model is that the underlying process that produced the observed data can be inferred directly from the AR parameters and coefficients without ever constructing a PSD plot or spectrogram. Therefore, it is advantageous to estimate quantities such as iFreq and FM from LFP data using AR modelling.

In this paper, we are most interested in a group of solutions that fall under the class of “time-varying autoregressive models” or TVARs (Arnold et al., 1998; Bartoli and Cerutti, 1983; Baselli et al., 1997; Bohlin, 1977; Foffani et al., 2004; Tarvainen et al., 2004; Zetterberg, 1969). More specifically, TVARs explicitly model LFP activity as being generated by one or more superimposed, stochastic, dynamic oscillators. In general, TVARs largely consist of a three-step process: 1) assuming a general structure for the AR model, 2) employing an adaptive filter framework for dynamic AR model estimation, and 3) using the resulting TVAR model to infer oscillatory dynamics of the data. Although considerable progress has been made in these areas, with respect to LFP analysis, there are non-trivial obstacles to overcome before we can achieve the goal of performing reliable estimation of iFreq and FM from single-trial data.

In particular, the algorithm we developed addresses the following significant challenges. First, the combination of brain rhythms that are activated, and their relative amplitude of activation, is truly dynamic over small time-intervals; therefore, an important question is how to choose the AR model parameters so that the resulting TVAR model characterizes the data correctly during times of interest. Second, brain rhythms are characterized by activity occurring in well established frequency bands, however, no elegant methodology exists for constraining the peaks of the AR-PSD to pre-defined frequency bands of interest; for example, when there are zero or multiple peaks within a frequency band, the interpretation of the model may be ambiguous. Third, the choice of adaptive filter framework can greatly affect the quality of the TVAR model estimates. Last, a challenge is to understand the assumptions of the adaptive filter, and to ensure that the adaptive filter and data input are mutually compatible.

Hereafter, we derive and apply a fixed-interval Kalman smoother based TVAR model to track the iFreq and compute the FM of oscillations present in real and simulated LFP data. A Kalman filter based approach was chosen over other adaptive filters for the wealth of existing theoretical and practical knowledge from which to draw from (Arnold et al., 1998; Mendel, 1987; Tarvainen et al., 2004), and more specifically, for its ability to reduce estimation delays that are known to occur with adaptive filtering (Tarvainen et al., 2004). During the process, we illustrate common situations that would lead to the erroneous estimation of iFreq and FM. We discuss and provide examples of when these situations may arise and provide concrete methods for detecting and side-stepping these conditions. The final algorithm was independently validated by comparing our AD-KS algorithm to Hilbert transform and short-time Fourier transform algorithms. Finally, application of the AD-KS was demonstrated by quantifying the frequency structure of brief ripple oscillations recorded from the CA1 subfield of the rat hippocampus.

2 Methods

We begin the methodology section by defining a general model for a single stochastic and dynamic oscillation that is appropriate for characterizing rhythmic field potential activity. The narrow-band oscillatory model is fully defined by two functions of time: instantaneous frequency (iFreq) and instantaneous amplitude (iAMP). Given a brain rhythm of interest, the iAMP of the rhythm may be functionally related to behavioral state (Sinnamon, 2006; Wyble et al., 2004). Unlike the iAMP measure, iFreq and frequency modulation (FM) have been far less utilized to characterize the fine-timescale and physiological meaning of LFP dynamics. This may be due to the lack of established methods for computing iFreq and FM in the neuroscience literature. The goal of this paper is to put forth an algorithm for computing reliable, single-trial, and readily interpretable estimates of iFreq and FM.

We estimate iFreq by applying a time-varying autoregressive (TVAR) model based on a fixed-interval Kalman smoother (KS) framework. The abbreviation KS will refer to the fixed-interval Kalman smoother as multiple smoothing strategies exist (Anderson and Moore, 1971). We formulate the univariate AR model and state precisely how the AR model parameterizes iFreq. Then, we derive the KS for adapting the AR model over time and, thereby, describe how to estimate iFreq and FM.

2.1 Sinusoidal process with time-varying amplitude and frequency

A sinusoid that is modulated in amplitude and frequency may be written in discrete time as

| (1.1) |

where m(n) is the amplitude envelope or iAMP signal and f (n) is the time-varying frequency.

We are interested in the estimation of f (n), as a measure to characterize LFP data as well as a prerequisite to the computation of FM,

| (1.2) |

where Δt is the sampling interval associated with the discrete-time observations y(n)

With respect to a narrow-band LFP rhythm, the amplitude envelope, m(n), contains information about the instantaneous power of the rhythm. In the brain, oscillatory power is highly correlated with behavioral and cognitive state, and can be modulated differentially depending on task stages (Sinnamon, 2006; Wyble et al., 2004). In addition to providing physiological information, the signal m(n) is useful for normalization procedures that remove abrupt amplitude changes that are difficult for our model to fully capture.

Let y (n) be the signal of interest and let H {y (n)} be the Hilbert transform of y (n). In order to estimate m(n), the real data signal is converted into a complex representation using the Hilbert transformer. For a narrow-band signal, the Hilbert transformer converts an oscillation on the real axis into a signal on the complex plane, ξ (n) = y (n) + i·H {y (n)}, where the modulus, m(n) = |ξ(n)| = |y (n) + i·H {y (n)}|, is the amplitude envelope of the signal and the argument, ϕ(n)=arctan(ξimag(n)/ξreal(n)), is the phase (Oppenheim et al., 1999).

We established that the estimation of iFreq, f (n), is required for obtaining FM in our proposed framework. Consequently, the accuracy of frequency estimates is the primary focus of this section. We chose to use the TVAR framework for its ability to explicitly model dynamically evolving sinusoids (Arnold et al., 1998; Bencheqroune et al., 1999; Foffani et al., 2004; Ko and Li, 1994; Mainardi et al., 1995; Tarvainen et al., 2004). In this section, we first cover the static univariate AR model, which assumes that the data stream comes from one electrode and the power spectrum is constant over time. Subsequently, we derive the Kalman framework for adapting the AR model coefficients over time and on a sample-by-sample basis.

2.2 The autoregressive process

In the observation interval t∈(0,T], let time be discretized such that the discrete time index, n, obeys the sampling interval equation t = nΔ, fs=1/Δ is the sampling frequency, and J = T / Δ is the largest discrete time index. Let y (n) denote the measured LFP signal, v (n) denote the zero-mean Gaussian measurement white noise with variance , and the operator x* denote the complex conjugate value of x. Then the AR model of order p or AR(p) model is

| (1.3) |

with z-transform and power spectral density (PSD) functions, respectively,

| (1.4) |

| (1.5) |

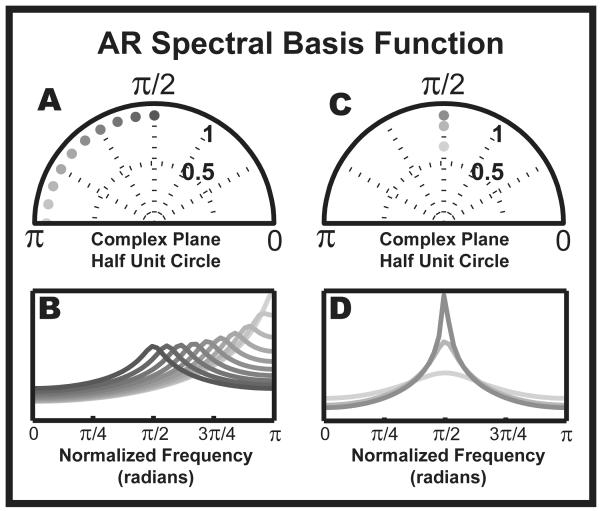

It is important to note the relationship between the AR model order and the assumed structure of the data, as well as how the AR coefficients (a1,…,ap) specify the shape of the PSD. The denominator of (1.4) is referred to as the characteristic polynomial as it fully captures the behavior of the model. Once the polynomial is factored, the roots (referred to as poles) will indicate if y (n) has unstable, oscillatory, or damped components (Box et al., 2008). We are most interested in the oscillatory components, which are given when two roots of the characteristic polynomial have non-zero imaginary components and are complex conjugates of each other. In practice, the model order p is generally constrained to be even such that when all the roots have complex conjugates amongst themselves, the PSD of an AR(p) process, S (z), will be a multi-modal function that resembles a summation of peaks, where each peak represents a brain rhythm. The relative height, shape, and location of the peaks are entirely determined by the poles of the transfer function. The phase of the pole determines where the center of the peak is positioned on the frequency axis with 0 phase corresponding to 0 Hz and π phase corresponding to fs/2 Hz, the Nyquist frequency. The height and width of the peak are determined by the modulus of the pole (Figure 1). The peak sharpens as the pole approaches the unit circle. Ultimately, the shape of the PSD is only as valid as the data is stationary in structure. Therefore, for LFP recordings, which are both non-stationary and stochastic, we must extend the AR model to a TVAR model in order to explicitly describe the temporally evolving harmonics of the time series.

Figure 1.

Parametric spectral basis functions. The AR poles completely specify the basis function for the AR PSD. The parameters which define the basis are pole phase and pole magnitude. This figure explores the parameter space and visualizes the range of the AR spectral basis function. (A) and (B): Altering the pole phase results in a systematic shift in the location of the peak. (C) and (D): Altering the pole magnitude alters the spread of the basis function. The differences in peak basis power in (B) and (D) can be viewed as distributions for observed-frequency variance. When the basis peak is sharp, the variance in the frequency of oscillation is small, like that of a narrow-band oscillation. Otherwise, the AR model indicates that the data is a noisy oscillator that possibly contains wide-band background activity. The value of radians is equivalent to half the sampling frequency of the data.

2.3 Instantaneous frequency estimation using the fixed interval Kalman smoother

Some methods of estimation assume that ak is fixed over time (Oppenheim et al., 1999), but the analysis of nonstationary brain rhythms requires a time-varying approach such that ak→ak (n). The Kalman filter (KF) framework can be thought of as a sequential Bayesian estimator that optimizes the AR model coefficients given incremental observations of data with Gaussian variability (Arnold et al., 1998). Here, we derive a KF version of the TVAR.

We begin by writing the AR process in discrete state-space form, a representation that is convenient for formulating adaptive filters,

| (1.6) |

| (1.7) |

where x(n) = [a1(n),…,ap(n)]T is the hidden or state variable which corresponds to the AR coefficients at discrete-time n, C(n) = [y(n − 1),… y(n − p)] is the observation transformation vector, w(n) is the state transition noise with covariance , and v (n) is the observation noise with covariance . The diagonal form of Σw is an assumption that allows the AR coefficients to evolve independently of one another as well as simplifies the algorithm by reducing the number of parameters. Given the values for , , p, and the data y (n), the KF estimates the variable x(n) for each discrete-time point in the observation interval, n∈[1, J].

The one-step prediction equations use past information to predict the state variables and their statistics under a zero mean Gaussian random walk assumption:

| (1.8) |

| (1.9) |

| (1.10) |

| (1.11) |

The Kalman gain, K (n), scales the innovation term, y (n) − y (n | n − 1), such that x (n | n) is the minimum mean-square error estimate of x(n):

| (1.12) |

| (1.13) |

| (1.14) |

This classic KF uses only past and present observations, [y (0),…, y (n)], to estimate the state x(n). As a consequence, the estimate of x(n) will incur some lagged response. In order to compensate for this lag, an additional temporal smoothing step, called the Kalman smoother (KS), can be used to reduce delays and decrease the variance of state estimates. All together, the KS estimation procedure begins with a forward pass, where x (n | n) is computed from n=1 to n = J; then transitions to a backward pass that propagates future information into the past from n = J−1 to n=1:

| (1.15) |

| (1.16) |

From the smoothed AR coefficient estimates, x(n|J)=[a1(n|J),…,ap(n|J)]T, the dominant frequency components of the signal may be estimated at time index n. Let the frequency corresponding to oscillation k be fk (n|J) for 1 ≤ k ≤ p / 2 and p even. We solve for iFreq, fk(n|J), as

| (1.17) |

| (1.18) |

| (1.19) |

In the case where the poles are complex conjugates, , for i ≠ k and 1 ≤ i,k ≤ p, the TVAR contains p / 2 oscillators. The complex phase of the poles, αk(n|J), are proportional to the dominant oscillation frequency according to (1.19). Here, rk(n|J) is the magnitude of the pole, ωk(n|J) is the phase of the pole on the complex plane, and fs is the sampling frequency of the observed signal. If p is chosen to be odd, at least one pole must be real. Very low frequency or baseline components in the time series are captured by real poles. For narrow band signals of higher frequency, it is generally more appropriate to choose p to be even.

FM is defined as the derivative of iFreq and is computed by taking the approximated derivative of f (n | J),

| (1.20) |

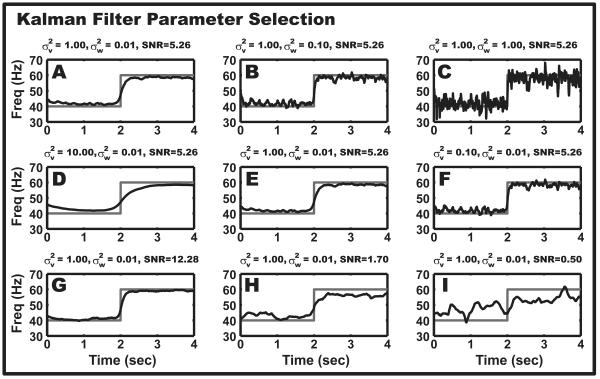

For an AR(p) process, the KF parameters are initialized as follows: n0 = p + 1 and C(n0) = [y(p),…, y(2), y(1)]. The initial state and state covariance matrix, x(n0|n0) and Σx(n0|n0), are initialized using the Yule-Walker equations. An initial guess for the observation variance, , may be determined by computing the variance of the temporal difference of the LFP: . We found reasonable starting values for the state transition variance to range between and depending upon the amount of noise in the system (Figure 3). These variance parameters may be fine tuned by choosing a small snippet of representative data, performing the Kalman smoother estimation, and using the goodness-of-fit criteria to determine the validity of the parameter values (see Testing Goodness-of-Fit). In addition, visual inspection of the frequency estimate is helpful for verifying the degree of desired smoothness. Increasing informs the Kalman filter that the observations are less reliable and, therefore, the state transition should be less affected by perturbations in the observation; the result is a smoother frequency estimate (Figure 3, Panel D-F). Decreasing informs the Kalman filter that the state vector should evolve more slowly over time, which directly results in a smoother frequency estimate (Figure 3, Panel A-C).

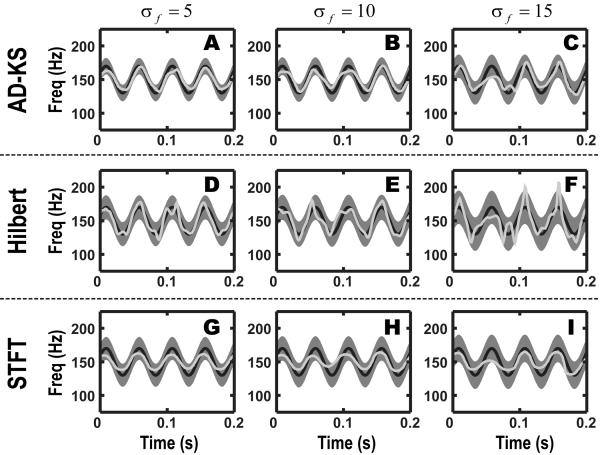

Figure 3.

Parameter selection for Kalman smoother. Behavior of the Kalman smoother as defined by the interaction between KF variances and data SNR. Each analysis is based on the same deterministic, narrow-band oscillation with a 40Hz to 60Hz step change. The data is simulated at 100Hz. The differences in the data sets, as noted by the SNR, are the amount of noise added to the signal before filtering by the KS. (A-C): The first row of panels shows the inverse relationship between the value of and the smoothing of the iFreq estimate. (D-F): The second row of panels shows that smoothing of the iFreq estimate increases as increases. (G-I): The third row of panels demonstrates the behavior of the KS as the signal becomes increasingly masked by noise.

2.4 Interactions between AR components

AR models are advantageous in time-frequency analysis because they represent a flexible class of PSD functions that are best described as a linear summation of peaks, where the location, height, and width of each peak is dictated by the poles of the AR model. The overlap between side lobes of the peaks consequently means that the power at any given frequency is based on the interaction between multiple poles (Box et al., 2008; Nguyen et al., 2008). The 1/ f rule, which is well known in the analysis of brain rhythms, states that the expected power of an oscillation is inversely proportional to the frequency of the oscillation (Buzsáki, 2006). This has strong implications for frequency estimation where the side lobes of poles at lower frequencies may dominate poles at higher frequencies; higher frequency poles can therefore vary as a function of activity at lower frequencies. For example, if the KF (p ≥ 4) reveals changes in frequency in the γ -rhythm, these changes may be due to actual γ -rhythm modulation or may be a side effect of activity in θ, α, or β -rhythms.

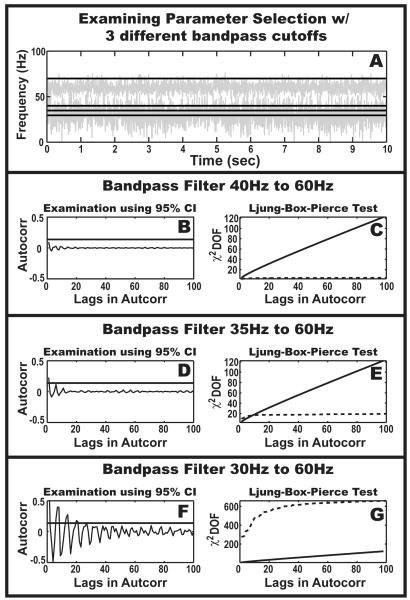

An effective way to address this interaction is to isolate and analyze each rhythm individually. Bandpass filtering the observation signal prior to Kalman filtering by assuming an AR(2) model, which is appropriate for modeling a single oscillation, helps to ensure that changes in pole amplitude and location within a frequency band of interest are truly a result of only one brain rhythm. In general, the parameters for bandpass filtering will affect the quality of the iFreq estimate. Choosing a pass band that is too large may result in the presence of more than one oscillation. In this case, the frequency represented by the AR(2) parameter estimates will be a weighted average of all the oscillations that are present. The Ljung-Box-Pierce test can be used to detect when such a condition has occurred so that the user may readjust the limits of the bandpass filtering (see Testing Goodness-of-Fit).

When the cutoff frequencies for the bandpass filter are appropriate, the next step is resampling the data to create a symmetric power distribution in the frequency domain about the point at π / 2 radians. This geometry is necessary because AR components become more asymmetric in shape as their center frequency moves closer to either 0 or π radians (Figure 1), and the interplay between data and AR model is not uniform over all frequencies. As bandpass filtering the data may create sharp edges in the PSD that can add to the complexity of the data-model interactions, a symmetric power distribution will help to balance these interactions on the frequency axis, such that the estimator does not a-priori have a preference for lower or higher frequencies.

To obtain an approximately symmetric power distribution about π / 2 radians, we first define the frequency range for the brain rhythm of interest (f̃1, f̃2), and then evaluate the target central frequency parameter f̃c = 0.5(f̃1 + f̃2). Second, we define the new Nyquist and sampling frequencies to be f̃n≃2f̃c and f̃s ≜ 2f̃n = 2(f̃1 + f̃2), respectively. Resampling the signal to approximately f̃s and then bandpass filtering between f̃1 and f̃2 will provide the largest symmetric range of motion for the pole in the frequency band of interest. In particular, we use a Hamming window bandpass filter which provides a flat passband; the length of the filter is chosen to provide a transition band that is ~5-6% of the Nyquist frequency.

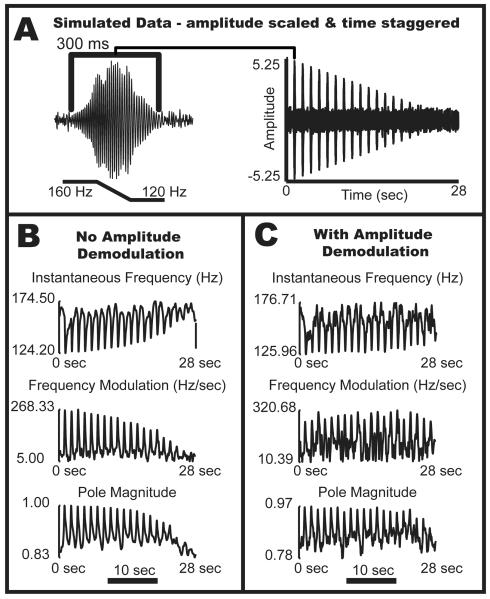

2.5 The amplitude-demodulated Kalman smoother

The Kalman gain, K (n), in (1.12) provides the minimum mean square estimate of the state vector at each timestep by utilizing the Gaussian statistics of the state evolution equation, (1.6), and the observation equation, (1.7). More specifically, the Kalman gain can be thought of as a ratio between the 1) scaled one-step state variance and 2) expected observation variance given the observation equation, one-step state variance, and the a-priori observation variance. Normally, the Kalman gain is independent of the observations, y (n), however, the Kalman gain in (1.7) is expressed as a function of the observations [y (n−1),…, y (n−p)]. A critical consequence is that the Kalman gain is no longer solely determined by the Gaussian noise statistics of the linear system equations. In Figure 2, we demonstrate how variations in observation amplitude may bias the estimates of the pole phase through the Kalman gain. Ignoring this condition will still allow the KF to track oscillation dynamics over time, but in a consistently biased manner (Figure 2, Panel B).

Figure 2.

Amplitude demodulation improves model-data agreement. Biased instantaneous frequency (iFreq) estimation may occur when large, fast variations in the data are not adequately modeled by a single noisy oscillator model. In order to demonstrate this scenario, (A) simulated data was created by combining a fast, frequency and amplitude modulated signal with non-additive but temporally flanking background noise. The maximum amplitude of the chirps linearly decreased from 5 to 0.25, with 20 chirps in total. The noise surrounding the chirps is white with variance 0.25. The sampling frequency of this simulated data was 800 Hz. (B): Kalman-Smoother based estimates without amplitude demodulation yields biased frequency estimates (p=2, , ) (C): Kalman-Smoother based estimates after amplitude demodulation resulted in more consistent estimates of frequency dynamics considering a 20-fold amplitude change between the first and last chirps (p=2, , ).

The bias may be corrected by making the variances Σx(n|n − 1) and consistent with the statistics of the observations around the time point n. One solution is to explicitly model the heteroskedasticity of the data using dynamic variance models such as GARCH or to use an expectation-maximization to optimize the local variance values according to some likelihood function (Amisigo and van de Giesen, 2005; Wong et al., 2006). While such approaches are valid, they introduce an additional set of “hyper” parameters and a layer of complexity that may not be necessary.

A straightforward alternative is to regularize the amplitude statistics of the LFP observations while preserving its frequency information. This may be accomplished by amplitude demodulating the input signal y (n) using m(n), the modulus of the Hilbert transformer:

| (1.21) |

The signal yAD(n) has variation in peak-amplitude that is extremely predictable and well modeled by the KF. This process of amplitude demodulation combined with Kalman filtering and smoothing will be referred to as the amplitude-demodulated Kalman smoother (AD-KS). In tracking sinusoids in noise, this manipulation decreases the systematic scaling of the Kalman gain with amplitude. The trade off here, as shown in Figure 2-C, is the variance of the estimate may also increase. These variations can be systematically reduced by either increasing the value of or decreasing the value of which would lead to smoother estimates in AR parameter estimation (Figure 3).

2.6 Testing goodness-of-fit

Post-hoc analyses of the autoregressive model fit may be used to determine the suitability of the chosen bandpass filtering parameters given an observation interval. More specifically, a goodness-of-fit test can indicate when additional harmonic structure in the data was not captured by the dynamic AR model, thus indicating that the pass-band of the filter is too wide.

One output of the Kalman filter is the residual time-series which contains the component of the input signal that is not captured by the adaptive AR(2) process. Ideally, if the filter captures all the rhythmic structure in the data, the residual should be uncorrelated with itself and the autocorrelation of the residual should be flat, which corresponds to a white process. It has been shown that the Ljung-Box-Pierce statistic (Box et al., 2008),

| (1.22) |

(where Nw is the length of the data segment used to compute the autocorrelation, v̂ is the residual output of the Kalman filter and is assumed to be a white noise process, γk is the value of the autocorrelation at lag k, and K is the number of lags in the autocorrelation used to compute the statistic), is distributed according to χ2 (K − 2) for an AR(2) process residual. When v̂ is highly autocorrelated, the distribution of γk will be inflated and Q(K) will pass outside the right-sided 95% confidence interval of the χ2 (K − 2) distribution. In Figure 4, an example is shown where the pass-band of the pre-filter is expanded to gradually include another oscillation. As the presence of a second oscillation increases, the AR(2) model attempts to capture both oscillations but fails. This inability is quantified in Figure 4-(F,G), where the autocorrelation of the residual clearly shows harmonic structure and the test statistic, Q(20), is clearly outside of the expected range of that given by white noise.

Figure 4.

Ljung-Box-Pierce test for lack-of-model-fit. (A): As the passband becomes larger from 40-70Hz, to 35-70Hz, and then 30-70Hz, more of the low frequency oscillation is included in the input to the AR(2) Kalman filter. The addition of another component breaks the AR(2) assumption of a single oscillation. The Ljung-Box-Pierce test can be used to detect when the single oscillation assumption is violated. (B),(D),(F): The right-half of the autocorrelation of the filter residuals is shown for three scenarios. The solid horizontal line is the theoretical 95% confidence interval of the autocorrelation of a white noise process based on Ljung-Box-Pierce statistic. If multiple lagged-peaks lie above the confidence interval, this roughly indicates a violation of the model. (C),(E),(G): The output of the Ljung-Box-Pierce Test quantifies the lack of fit by comparing a null model of uncorrelated residuals with the empirically computed residuals as function of lags in the residual-autocorrelation function.

2.7 Algorithm summary

We now summarize the steps outlined above for addressing the problem of instantaneous frequency estimation using AD-KS algorithm.

Determine the spectral-band of interest, down-sample the data, and then band-pass filter between f̃1 and f̃2 to obtain yBP(n).

Compute the amplitude demodulated signal, yAD(n), by normalizing yBP(n) with its respective amplitude envelope, m(n), which is computed with the Hilbert transformer.

Run the AD-KS algorithm on yAD(n), and obtain the residual, ε(n) , and the time-varying AR coefficients, A(n), (1.6)-(1.16).

Confirm that the residual error, ε(n), is approximately white noise by using the Ljung-Box-Pierce test. If the test fails, re-adjust the band-pass filtering parameters, re-adjust the AD-KS state variance or observation variance, or decrease the size of the observation window for analysis.

Convert the AR coefficient estimates, A(n), into iFreq estimates using (1.19), and FM estimates using (1.20).

2.8 Comparison of methods

In order to validate the performance of our proposed methodology, we compare iFreq estimates obtained using the AD-KS algorithm with more conventional methods, 1) the Hilbert transform and 2) short-time Fourier transform (STFT). It is well known that frequency can be computed using the derivative of the instantaneous phase, we therefore estimate iFreq using the Hilbert transform by taking the two-point sample derivative of phase. The STFT is a commonly used method for describing time-varying spectral activity. We use a STFT with a window size of 40 points, which corresponds to 50 ms of data (sampling frequency of data is 800 Hz). The STFT is advanced by one data point at a time such that the overlap is 39 points. The spectrogram obtained via the STFT is interpolated on the frequency axis using a piecewise cubic spline at a resolution of 0.4 Hz. The iFreq estimate is then taken to be the frequency of maximum power at each time point. The AD-KS variance parameters were set to and .

The simulated data for this comparison is generated from the following equations:

| (1.23) |

| (1.24) |

The observation, y(n), is a simulated oscillation with noisy, time-varying frequency with additive observation noise. The terms ey (n) and ef (n) are white noise terms with standard deviations set to: s.d.(ey)=0.4 and s.d.(ef)=[5, 10, 20] Hz . The term t(n)=nΔ converts the time index n into units of seconds, where Δ=1/800 Hz and n=[1,800]. The resulting 1 second of simulated data is a wide-band oscillation with frequency modulated between 130 Hz and 170 Hz at a rate of 40 Hz; the width of the spectral-band is determined by the s.d. of ef(n). The signal-to-noise ratio of the observed time-domain signal is approximately 6 dB. The goal of the simulation is to track f(n) despite the presence of noise ef(n).

The relative performance of the three algorithms is visually summarized in Figure 5. The mean-squared-error (MSE) for the smallest to largest noise conditions were 35.40, 40.34, and 60.13 Hz2 for the AD-KS algorithm; 197.72, 169.80, and 176.95 Hz2 for the Hilbert transform; 71.65, 74.37, and 92.23 Hz2 for the interpolated-STFT. Qualitatively, we found that the Hilbert transform was most sensitive to noise, and the interpolated-STFT was biased towards the baseline frequency of 150Hz and incurred some delay in response. The AD-KS performed statistically better than the other two methods under all three noise conditions (p < 0.05, Bootstrap sample-mean test of MSE distribution).

Figure 5.

Comparison of methods. Each row represents a different method of instantaneous frequency estimation, while each column represents a different signal-to-noise condition. The respective methods are (A-C): Kalman smoother, (D-F): Hilbert transform, (G-I): interpolated short-time Fourier transform. The simulation data is a wide-band oscillation of variable center frequency that is modulated at 20 Hz (solid gray trace) and sampled at 800 Hz. The shaded area represents the 95% confidence bounds of the frequency estimates (n=100 simulations). The black line represents the true oscillation frequency over time. The light-gray line represents a single-trial estimate.

3 Results

Information transfer between the hippocampus and neocortex is important for the consolidation of spatial and episodic memory. This process of information transfer is referred to as memory consolidation and may be mediated by a phenomena called ensemble sequence “replay” (Foster and Wilson, 2006; Lee and Wilson, 2002). We know that this process of replay is associated with a rise in multi-unit activity and the presence of ripples (100-250 Hz oscillations with marked increases in power lasting, on average, from 75ms to 100ms) in the CA1 region of the hippocampus (Chrobak and Buzsaki, 1996; Vanderwolf, 1969). Because ripple oscillations may be generated by the same neural circuits as ensemble replay activity, the features of the ripple may allow us to deduce the mechanisms for replay induction and the nature of information transmission during memory consolidation processes.

Although the frequency content of ripples is defined by the 100-250 Hz band, the dynamics of ripples oscillations within that band has not been fully characterized. Past studies that have advanced the analysis of ripples using wavelet methods did not identify fine timescale structure of ripple events (Gillis et al., 2005; Ponomarenko et al., 2004; Sirota et al., 2003). Here, we apply the AD-KS filter to briefly demonstrate how iFreq and FM may be used to quantify the dynamic frequency structure of ripple events and possibly extend the definition of ripples into the spectral-temporal domain (Nguyen et al., 2009).

LFPs were recorded from electrodes placed in stratum oriens of dorsal CA1 hippocampus of a rat. The LFP data presented here was obtained during one 45 minute epoch where the rat was spatially restricted in a behavior box. During this time, the rat could either be awake, sleeping in REM, or sleeping in slow-wave sleep. The LFP signal was bandpass filtered between 1 and 475 Hz, digitized at a rate of 1 kHz, and recorded to hard disk. Ripple events were detected by thresholding the root-mean-square power in the 100-250 Hz band as in Csicsvari et al. (1999). All experimental and surgical procedures were approved by the Committee on Animal Care at Massachusetts Institute of Technology and followed NIH guidelines.

3.1 Analysis of Hippocampal Ripple Events

A single LFP channel was chosen for analysis and the data trace was resampled to 800 Hz and then bandpass filtered with cutoffs of f̄1=100 Hz and f̄2=250 Hz and filter length 100 as prescribed by the section Interactions between AR components. A total of 1932 ripple events were detected. The KS parameters were chosen to be and and the AD-KS was applied to the data. The KS parameters were validated with the Ljung-Box-Pierce test using only the AR residuals during ripple events (see Testing Goodness-of-Fit). The measures of iFreq and FM were only considered within the temporal bounds of each ripple event.

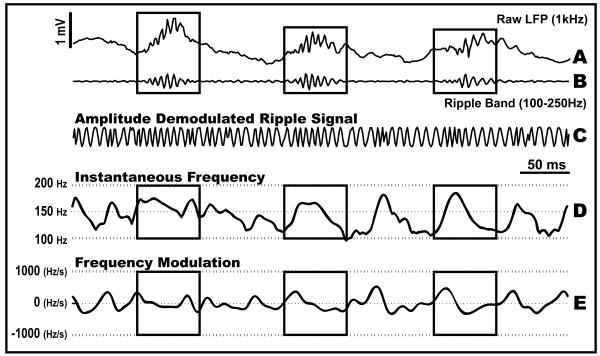

In Figure 6, we plot three typical ripple events occurring over a time span of 0.5 sec in addition to their respective iFreq and FM dynamics. The beginning and end of each ripple were determined by an amplitude threshold equal to the mean of the rectified-ripple band signal added to the s.d. of the rectified-ripple band signal (Csicsvari et al., 1999). Within the last two boxes in Figure 6, we find in these examples that at the beginning of the ripple, the iFreq increases abruptly with a maximal rate of approximately 500Hz/sec and then decreases with a maximal rate of approximately −300Hz/sec.

Figure 6.

Real data analysis: ripple example. The LFP was recorded from the CA1 area in stratum oriens. (A): 500 ms trace of raw data sampled at 1kHz. (B): Bandpass filtered signal between 100-250 Hz retains high frequency oscillations while removing sharp wave activity. (C): The ripple signal is normalized by its amplitude envelope to produce a sinusoid that is mostly modulated in frequency. (D): The iFreq estimate given by the ADKF ( p=2, , ). (E): The respective FM estimate derived from the iFreq estimate above.

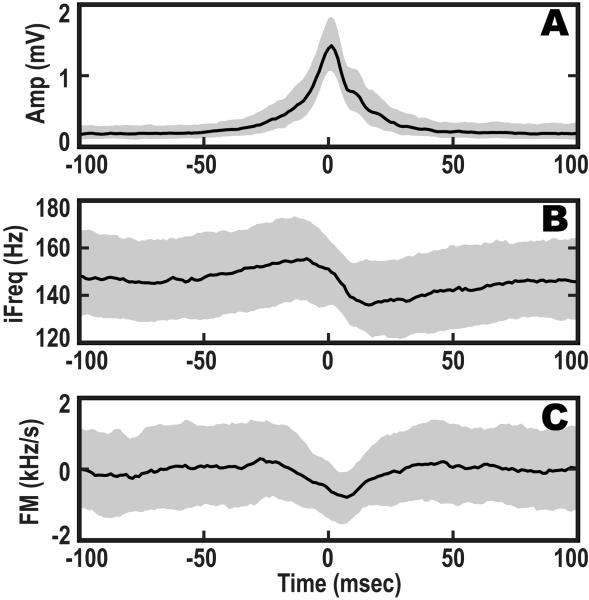

In order to investigate the generality of this dynamic frequency structure, we computed amplitude-peak triggered-averages of the ripple amplitude envelope, iFreq, and FM traces over all detected ripples (Figure 7). Here, we compute novel statistics of small timescale amplitude and frequency dynamics. In Figure 7-A,B, we see that the iFreq increases before the peak amplitude, and then decreases abruptly across the amplitude peak of the ripple. This is recapitulated in Figure 7-C, where the FM is generally positive before the amplitude-peak and then becomes negative.

Figure 7.

Real data analysis: ripple population statistics. The ripple events (N=931) were aligned by the temporal location of the peak-amplitude. The solid lines represent the median activity, while the solid gray background represents the 25% to 75% probability boundaries. (A): distribution of the ripple amplitude envelope dynamics, (B): distribution of the instantaneous frequency dynamics, and (C): distribution of the frequency modulation dynamics.

In Figure 7, the gray boundaries report the 25% and 75% confidence intervals instead of the standard deviations because over time the distribution shape for the respective amplitude or frequency dynamics may change in a nondeterministic fashion. The variation in the ripple amplitude was greatest at approximately 3.7 ms after center of the ripple statistics (Figure 7-A), which is marked by a 95% confidence interval that spans 0.22 mV. The variation in ripple frequency is smallest at ~2.4 ms, where the width of the 95% confidence interval was ~65 Hz (Figure 7-B). While the point of smallest variation for FM, (Figure 7-C), was at 0 ms with a 95% confidence interval spanning ~4.6 kHz/s. These results suggest that ripple oscillations of CA1 may be structured in the temporal-spectral domain with highly organized stages of start (increasing frequency), middle (negative frequency modulation with increase in synchrony), and end (decrease in oscillation amplitude to zero) (Klausberger et al., 2003; Nguyen et al., 2009).

4 Discussion

We developed a compact Kalman smoother algorithm for estimating iFreq with minimized bias. Our method was designed to be simple and interpretable, so that it may be more accessible to the neuroscience community. Furthermore, the interpretation of the iFreq and FM measures is facilitated by the fact that brain rhythms are generally band-limited phenomena, and that our measurements are restricted to physiologically relevant frequency bands.

We illustrated several characteristics of the general AD-KS framework that may lead to erroneous estimates of iFreq. For example, we noted that an amplitude-induced bias may result from a mismatch between the assumed observation and state variances, (), and the actual data observation and state variances over short time intervals. In particular, if there is a variance mismatch such that the variations in the data are smaller than expected, the tracking of iFreq will be diminished (Figure 2). We addressed this model mismatch by amplitude demodulating the signal such that the variation in amplitude was constant throughout the entire dataset. Our simulations show that amplitude-induced tracking bias was greatly reduced as a result. The second issue of importance was the fact that interactions between poles can have undesired effects on iFreq estimates. By first bandpass filtering the observation signal in some band of interest, we were able to reduce estimation bias due to cross-talk between oscillations. As two additional benefits, we were able to avoid the prerequisite problem of AR model identification, and also avoid the pole tracking problem (Mainardi et al., 1995).

In contrast to previous reports that define iFreq and FM to be identically the same (Foffani et al., 2005), our framework defines iFreq and FM to be different measures. Considering the amplitude-demodulation step, the structure of the AR model, and the smooth adaptation of the AD-KS, the iFreq quantity may be interpreted as the most likely oscillation frequency of a noisy oscillator at any point in time. The term FM, measured in Hz/s, as we have defined here can be interpreted as the best estimate of the instantaneous frequency change afforded by both the temporal precision of the sampling rate and the smoothness of the AD-KS estimation procedure. FM may be more useful than iFreq when the LFP event has systematic frequency sweeps but vary greatly in average frequency, like in the hippocampal ripple example. In addition, FM is particularly unique and useful because it measures the rate of change in frequency and is, therefore, less sensitive to offsets in frequency. Thus, we expect FM activity to identify instances of strong input into a neural system that results in transitions in population synchrony.

Our analysis of ripple events demonstrated that iFreq and FM are useful measures for quantifying dynamic, short-timescale frequency structure in brain oscillations. The ripple event has been studied extensively in-vivo and in-vitro (Behrens et al., 2005; Chrobak et al., 2000; Csicsvari et al., 1999; Foffani et al., 2007; Klausberger et al., 2003; Molle et al., 2006; Nguyen et al., 2009) as an important mechanism for information processing and memory consolidation (Buzsáki and Chrobak, 1995; Foster and Wilson, 2006; Ji and Wilson, 2007; Lee and Wilson, 2002). However, to the best of our knowledge, only one previous technical paper has offered an algorithm for addressing the time-frequency analysis of ripple events (Gillis et al., 2005). The approach of Gillis et. al. used the wavelet transform to characterize time-frequency activity and used the separation of a two-component mixture model, which models background noise and the ripple event, to determine the best filter parameters for analyzing ripples. In this way, Gillis et al. (2005) treat the parameters of the wavelet transform as the descriptor of ripple associated timescales. In contrast, our approach first characterizes individual ripple events with continuous spectral resolution and with temporal resolution limited only by the Nyquist frequency. The resulting discretized estimates of oscillation dynamics, iFreq and FM, are powerful characterizations because they measure modulations of brain rhythms on the timescale of neuronal interactions. In Figure 7, we show that some ripple events have frequency dynamics that may be described with the same template. The unique iFreq trace of individual ripple events may be considered “noise”; however, we are working to understand if it is possible to use these variations from the mean to infer information about the unique conditions under which each oscillatory event was generated (Nguyen et al., 2009).

Brain rhythms are thought to be bounded in frequency; however, since brain rhythms are frequency modulated, a constant passband may not be sufficient to isolate a single brain rhythm. The possibility of shrinking the passband may clip oscillations of interest, while widening the passband may allow undesired activity to be included. One possibility is to develop a data driven algorithm to automatically choose the parameters of the bandpass filter in light of the signal isolation problem. Alternatively, one could consider using time-varying passbands to continuously isolate LFP activity of interest. The capacity for such a filter potentially exists in this AD-KS algorithm; by subtracting the residuals, v(n) , in (1.7) from the input signal yAD(n) one obtains a signal that has been filtered with a dynamic passband. Thus, the AD-KS algorithm may be used to “shape” the passband slowly over several iterations if constrained properly. Aside from this methodology, it is currently unclear how to verify if a dynamic passband filter is correct. Thus future development in this direction will also require integrating physiological results to construct informative models in which to identify the time-varying frequency components associated with the neural circuit(s) under study.

In practice, it is possible to implement a modified version of the algorithm in real-time. The AR(2)-KF requires as its most complex operation, the inversion of a 2×2 matrix. On the other hand, implementing the AR(2)-KS in real-time using a fixed-interval KS is not possible since we cannot define the end time of the fixed-interval. Alternatively, a fixed-lag KS can be used in real-time applications with a penalty of increased computational complexity and some temporal delay, where the delay is equal to the number of lags multiplied by the sampling interval of the data (Mendel, 1987). Regardless, such a delay would be necessary as the Hilbert transform requires a window of data around the current sample for the computation of the fast Fourier transform.

The measures of iFreq and FM may be useful in a wide range of applications in the neurosciences. For example, in order to understand the fundamental timescale on which functional neural computations are reflected in LFP signals, we require methods that can examine both small and large scales in time. In addition, this algorithm may provide a framework for bridging results between in-vivo and ex-vivo experiments. For instance, repeatable iFreq and FM signatures that are found under specific pharmacological manipulations ex-vivo may be used to decode LFP signals recorded in-vivo (Atallah and Scanziani, 2009). Understanding how to better decode LFPs may lead to multimodal solutions to the neural prosthetic problem. For instance, it may be possible to decode parallel spike trains conditional on the computational state of the neural circuit (Kemere et al., 2008) in addition to using features extracted from the LFP (Rasch et al., 2008).

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Amisigo BA, van de Giesen NC. Using a spatio-temporal dynamic state-space model with the EM algorithm to patch gaps in daily riverflow series. Hydrology and Earth System Sciences. 2005;9:209–24. [Google Scholar]

- Anderson BDO, Moore JB. Linear optimal control. Prentice-Hall; Englewood Cliffs, N.J.: 1971. [Google Scholar]

- Arnold M, Miltner WHR, Witte H, Bauer R, Braun C. Adaptive AR modeling of nonstationary time series by means of Kalman filtering. IEEE Trans. Biomed. Eng. 1998;45:553–62. doi: 10.1109/10.668741. [DOI] [PubMed] [Google Scholar]

- Atallah BV, Scanziani M. Instantaneous Modulation of Gamma Oscillation Frequency by Balancing Excitation with Inhibition. Neuron. 2009;62:566–77. doi: 10.1016/j.neuron.2009.04.027. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bartoli F, Cerutti S. An Optimal Linear Filter for the Reduction of Noise Superimposed to the Eeg Signal. J. Biomed. Eng. 1983;5:274–80. doi: 10.1016/0141-5425(83)90001-8. [DOI] [PubMed] [Google Scholar]

- Baselli G, Porta A, Rimoldi O, Pagani M, Cerutti S. Spectral decomposition in multichannel recordings based on multivariate parametric identification. IEEE Trans. Biomed. Eng. 1997;44:1092–101. doi: 10.1109/10.641336. [DOI] [PubMed] [Google Scholar]

- Behrens CJ, van den Boom LP, de Hoz L, Friedman A, Heinemann U. Induction of sharp wave-ripple complexes in vitro and reorganization of hippocampal networks. Nat. Neurosci. 2005;8:1560–7. doi: 10.1038/nn1571. [DOI] [PubMed] [Google Scholar]

- Bencheqroune A, Benseddik M, Hajjari A. Tracking of time-varying frequency of sinusoidal signals. Signal Processing. 1999;78:191–9. [Google Scholar]

- Bland BH. The Physiology and Pharmacology of Hippocampal-Formation Theta Rhythms. Prog. Neurobiol. 1986;26:1–54. doi: 10.1016/0301-0082(86)90019-5. [DOI] [PubMed] [Google Scholar]

- Bohlin T. Analysis of EEG signals with changing spectra using a short-word Kalman estimator. Math. Biosci. 1977;35:221–59. [Google Scholar]

- Box GEP, Jenkins GM, Reinsel GC. Time series analysis : forecasting and control. 4th ed. John Wiley; Hoboken, N.J.: 2008. [Google Scholar]

- Buzsáki G. Rhythms of the brain. Oxford University Press; Oxford ; New York: 2006. [Google Scholar]

- Buzsáki G, Chrobak JJ. Temporal structure in spatially organized neuronal ensembles: a role for interneuronal networks. Curr. Opin. Neurobiol. 1995;5:504–10. doi: 10.1016/0959-4388(95)80012-3. [DOI] [PubMed] [Google Scholar]

- Chrobak JJ, Buzsaki G. High-frequency oscillations in the output networks of the hippocampal-entorhinal axis of the freely behaving rat. J. Neurosci. 1996;16:3056–66. doi: 10.1523/JNEUROSCI.16-09-03056.1996. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chrobak JJ, Lorincz A, Buzsaki G. Physiological patterns in the hippocampo-entorhinal cortex system. Hippocampus. 2000;10:457–65. doi: 10.1002/1098-1063(2000)10:4<457::AID-HIPO12>3.0.CO;2-Z. [DOI] [PubMed] [Google Scholar]

- Csicsvari J, Hirase H, Czurko A, Mamiya A, Buzsaki G. Fast network oscillations in the hippocampal CA1 region of the behaving rat. J. Neurosci. 1999;19:RC20. doi: 10.1523/JNEUROSCI.19-16-j0001.1999. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Foffani G, Bianchi AM, Baselli G, Priori A. Movement-related frequency modulation of beta oscillatory activity in the human subthalamic nucleus. J. Physiol.-London. 2005;568:699–711. doi: 10.1113/jphysiol.2005.089722. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Foffani G, Bianchi AM, Priori A, Baselli G. Adaptive autoregressive identification with spectral power decomposition for studying movement-related activity in scalp EEG signals and basal ganglia local field potentials. J. Neural Eng. 2004;1:165–73. doi: 10.1088/1741-2560/1/3/006. [DOI] [PubMed] [Google Scholar]

- Foffani G, Uzcategui YG, Gal B, Menendez de la Prida L. Reduced Spike-Timing Reliability Correlates with the Emergence of Fast Ripples in the Rat Epileptic Hippocampus. Neuron. 2007;55:930–41. doi: 10.1016/j.neuron.2007.07.040. [DOI] [PubMed] [Google Scholar]

- Foster DJ, Wilson MA. Reverse replay of behavioural sequences in hippocampal place cells during the awake state. Nature. 2006;440:680–3. doi: 10.1038/nature04587. [DOI] [PubMed] [Google Scholar]

- Gillis JA, Luk WP, Zhang L, Skinner FK. Characterizing in vitro hippocampal ripples using time-frequency analysis. Neurocomputing. 2005;65-66:357–64. [Google Scholar]

- Ji DY, Wilson MA. Coordinated memory replay in the visual cortex and hippocampus during sleep. Nat. Neurosci. 2007;10:100–7. doi: 10.1038/nn1825. [DOI] [PubMed] [Google Scholar]

- Jones MW, Wilson MA. Theta rhythms coordinate hippocampal-prefrontal interactions in a spatial memory task. PLoS Biol. 2005;3:402. doi: 10.1371/journal.pbio.0030402. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kemere C, Santhanam G, Yu BM, Afshar A, Ryu SI, Meng TH, Shenoy KV. Detecting neural-state transitions using hidden Markov models for motor cortical prostheses. J. Neurophysiol. 2008;100:2441–52. doi: 10.1152/jn.00924.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Klausberger T, Magill PJ, Marton LF, Roberts JDB, Cobden PM, Buzsaki G, Somogyi P. Brain-state- and cell-type-specific firing of hippocampal interneurons in vivo. Nature. 2003;421:844–8. doi: 10.1038/nature01374. [DOI] [PubMed] [Google Scholar]

- Ko CC, Li CP. An Adaptive Iir Structure for the Separation, Enhancement, and Tracking of Multiple Sinusoids. IEEE Trans. Signal Processing. 1994;42:2832–4. [Google Scholar]

- Lee AK, Wilson MA. Memory of sequential experience in the hippocampus during slow wave sleep. Neuron. 2002;36:1183–94. doi: 10.1016/s0896-6273(02)01096-6. [DOI] [PubMed] [Google Scholar]

- Mainardi LT, Bianchi AM, Baselli G, Cerutti S. Pole-Tracking Algorithms for the Extraction of Time-Variant Heart-Rate-Variability Spectral Parameters. IEEE Trans. Biomed. Eng. 1995;42:250–9. doi: 10.1109/10.364511. [DOI] [PubMed] [Google Scholar]

- Mendel JM. Lessons in digital estimation theory. Prentice-Hall; Englewood Cliffs, N.J.: 1987. [Google Scholar]

- Miltner WHR, Braun C, Arnold M, Witte H, Taub E. Coherence of gamma-band EEG activity as a basis for associative learning. Nature. 1999;397:434–6. doi: 10.1038/17126. [DOI] [PubMed] [Google Scholar]

- Molle M, Yeshenko O, Marshall L, Sara SJ, Born J. Hippocampal sharp wave-ripples linked to slow oscillations in rat slow-wave sleep. J. Neurophysiol. 2006;96:62–70. doi: 10.1152/jn.00014.2006. [DOI] [PubMed] [Google Scholar]

- Nguyen DP, Barbieri R, Wilson MA, Brown EN. Instantaneous frequency and amplitude modulation of EEG in the hippocampus reveals state dependent temporal structure. Engineering in Medicine and Biology Society, 2008; 30th Annual International Conference of the IEEE; 2008; pp. 1711–5. EMBS 2008. [DOI] [PubMed] [Google Scholar]

- Nguyen DP, Kloosterman F, Barbieri R, Brown EN, Wilson MA. Characterizing the dynamic frequency structure of fast oscillations in the rodent hippocampus. Front Integr Neurosci. 2009;3:11. doi: 10.3389/neuro.07.011.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Oppenheim AV, Schafer RW, Buck JR. Discrete-time signal processing. 2nd ed. Prentice Hall; Upper Saddle River, N.J.: 1999. [Google Scholar]

- Percival DB, Walden AT. Spectral analysis for physical applications : multitaper and conventional univariate techniques. Cambridge University Press; Cambridge ; New York, NY, USA: 1993. [Google Scholar]

- Ponomarenko AA, Korotkova TM, Sergeeva OA, Haas HL. Multiple GABA(A) receptor subtypes regulate hippocampal ripple oscillations. Eur. J. Neurosci. 2004;20:2141–8. doi: 10.1111/j.1460-9568.2004.03685.x. [DOI] [PubMed] [Google Scholar]

- Popescu AT, Popa D, Pare D. Coherent gamma oscillations couple the amygdala and striatum during learning. Nat. Neurosci. 2009;12:801–U161. doi: 10.1038/nn.2305. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rasch MJ, Gretton A, Murayama Y, Maass W, Logothetis NK. Inferring spike trains from local field potentials. J. Neurophysiol. 2008;99:1461–76. doi: 10.1152/jn.00919.2007. [DOI] [PubMed] [Google Scholar]

- Sinnamon HM. Decline in hippocampal theta activity during cessation of locomotor approach sequences: Amplitude leads frequency and relates to instrumental behavior. Neuroscience. 2006;140:779–90. doi: 10.1016/j.neuroscience.2006.02.058. [DOI] [PubMed] [Google Scholar]

- Sirota A, Csicsvari J, Buhl D, Buzsaki G. Communication between neocortex and hippocampus during sleep in rodents. Proc. Natl. Acad. Sci. U. S. A. 2003;100:2065–9. doi: 10.1073/pnas.0437938100. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Steriade M. Grouping of brain rhythms in corticothalamic systems. Neuroscience. 2006;137:1087–106. doi: 10.1016/j.neuroscience.2005.10.029. [DOI] [PubMed] [Google Scholar]

- Steriade M, Nunez A, Amzica F. A Novel Slow (Less-Than-1 Hz) Oscillation of Neocortical Neurons in-Vivo - Depolarizing and Hyperpolarizing Components. J. Neurosci. 1993;13:3252–65. doi: 10.1523/JNEUROSCI.13-08-03252.1993. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tarvainen MP, Hilturien JK, Ranta-Aho PO, Karjalainen PA. Estimation of nonstationary EEG with Kalman smoother approach: An application to event-related synchronization (ERS) IEEE Trans. Biomed. Eng. 2004;51:516–24. doi: 10.1109/TBME.2003.821029. [DOI] [PubMed] [Google Scholar]

- Vanderwolf CH. Hippocampal Electrical Activity and Voluntary Movement in Rat. Electroencephalogr. Clin. Neurophysiol. 1969:407–18. doi: 10.1016/0013-4694(69)90092-3. [DOI] [PubMed] [Google Scholar]

- Wong KFK, Galka A, Yamashita O, Ozaki T. Modelling non-stationary variance in EEG time series by state space GARCH model. Comput. Biol. Med. 2006;36:1327–35. doi: 10.1016/j.compbiomed.2005.10.001. [DOI] [PubMed] [Google Scholar]

- Wyble BP, Hyman JM, Rossi CA, Hasselmo ME. Analysis of theta power in hippocampal EEG during bar pressing and running behavior in rats during distinct behavioral contexts. Hippocampus. 2004;14:662–74. doi: 10.1002/hipo.20012. [DOI] [PubMed] [Google Scholar]

- Zetterberg LH. Estimation of parameters for a linear difference equation with application to EEG analysis. Math. Biosci. 1969;5:227–75. [Google Scholar]