Abstract

Reward information is represented by many subcortical areas and neuron types, which constitute a complex network. Its output is usually mediated by the basal ganglia, where behaviors leading to rewards are disinhibited and behaviors leading to no reward are suppressed. Midbrain dopamine neurons modulate these basal ganglia neurons differentially using signals related to reward-prediction error. Recent studies suggest that other types of subcortical neurons assist, instruct, or work in parallel with dopamine neurons. Such reward-related neurons are found in areas that have been associated with stress, pain, mood, emotion, memory, and arousal. These results suggest that reward needs to be understood in a larger framework of animal behavior.

Introduction

Animals can maintain their body states only by actively foraging for food and water and can ensure the survival of their own species only by actively acquiring appropriate mates. This suggests that many animals share common neural mechanisms of such reward-directed behaviors. Over the course of evolution many new brain areas have emerged, notably the cerebral cortex. However, it is likely that phylogenetically older structures (collectively called subcortical structures) have retained fundamental mechanisms for reward-directed behavior. Indeed, lesions in subcortical structures such as the hypothalamus and the basal ganglia render animals unable to control goal-directed behaviors even when their basic sensory and motor functions appear normal. On the other hand, mammals whose cerebral cortex was removed in infancy could perform many reward-directed behaviors normally. Supporting these earlier discoveries, recent studies have provided evidence that many subcortical areas represent reward information. In this review we first characterize the nature of reward representation in each area and then discuss the possible subcortical mechanisms of reward-directed behavior.

Striatum

Neurons in the dorsal part of the striatum are activated both by preparing and executing actions, and by anticipating and receiving rewards. Thus the dorsal striatum is well-positioned to guide motivated behavior, since its neural information could be used to select the action whose reward value is greatest. Indeed, although some striatal neurons act as pure reward predictors, others anticipate the reward value of specific cues and actions [1–3]. These signals are probably computed within the dorsal striatum, as they are different from reward representations in striatum-projecting regions of frontal cortex [4•,5]. Interestingly, some neurons track the value of specific actions, regardless of whether the animal chooses to perform them [2]; but other neurons track values in terms of choice, signaling the ‘chosen value’ or ‘non-chosen value’ [6•]. Also, although many neurons link actions to their expected rewards, fewer neurons appear to link actions to their resulting actual rewards [7•]. This is consistent with recent findings that electrical stimulation of the caudate nucleus can act much like a reinforcer, biasing animals to prepare actions that were ‘rewarded’ with stimulation [8•,9•], but that stimulation is equally reinforcing for both contralateral and ipsilateral actions [9•].

Compared to the dorsal striatum, the ventral striatum has been linked more clearly with stimulus-outcome learning than with action selection. At the level of single cells, however, neurons in both regions encode a similar mixture of signals, responding to both actions and rewards [10]. The different functions of dorsal and ventral striatum may emerge more clearly when viewed at the level of neural populations. Functional imaging studies in humans have found striatal activity to correlate with an astounding variety of reward-related variables [11–13]. These studies have led to theories that different parts of the striatum predict rewards at different timescales [14•]; or preferentially encode predictions vs. deviations from predictions [15]; or have distinct selectivity for the outcomes of freely chosen actions [16]. Functional segregation within the striatum has also been investigated in lesion and inactivation studies in rats, suggesting that different parts of the dorsal striatum have distinct roles in learning stimulus-action vs. action-outcome associations [17•], and that dorsal and ventral striatum may make different contributions to skill learning and expression [18•].

Dopamine neurons

A series of pioneering studies by Schultz and colleagues has shown that midbrain dopamine neurons behave like a ‘reward-prediction error’ signal: they fire a burst of spikes when the reward value is higher than expected, but are inhibited when the value is lower [19]. These dopaminergic value signals combine information about several aspects of rewards, including their probability, magnitude, and delay [20,22••].
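The prediction-error idea can be made concrete with a minimal sketch. The Python snippet below assumes a simple Rescorla-Wagner-style update, which is a textbook simplification and not the analysis used in the cited studies; the function name `prediction_error_trace` and the learning rate `alpha` are illustrative assumptions.

```python
def prediction_error_trace(rewards, alpha=0.2, v0=0.0):
    """Return (errors, values) for a sequence of reward magnitudes.

    Assumed update rule (illustrative only):
        delta = r - V          # positive: better than expected; negative: worse
        V     = V + alpha * delta
    """
    value = v0
    errors, values = [], []
    for r in rewards:
        delta = r - value          # prediction error on this trial
        errors.append(delta)
        value += alpha * delta     # move the prediction toward the outcome
        values.append(value)
    return errors, values


# Hypothetical session: a reward of 1.0 on ten trials, then the reward is omitted.
errors, values = prediction_error_trace([1.0] * 10 + [0.0])
```

In this sketch the error is largest on the first rewarded trial, shrinks as the reward becomes predicted, and turns negative when the expected reward is omitted, which parallels the burst and the inhibition described above.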

Yet if dopamine neurons are to guide decision-making, they must not only signal the value of individual reward outcomes, but also the value of an opportunity to choose between them. A recent investigation found that when monkeys are presented with a pair of potential actions, dopamine neurons signal the value of the monkey’s chosen action, even if it is the less-valuable option [21••]. However, a second study in rats reported a very different result: that dopamine neurons initially signal the value of the better action [22••]. One possible reason for this difference is that the two studies recorded from different populations of dopamine neurons [22••] – one in the substantia nigra pars compacta (SNc), and the other in the ventral tegmental area (VTA).

Indeed, there is evidence that the SNc and VTA have distinct roles in reward learning. For example, rats learn and perform orienting to reward-predictive cues via a pathway from the central amygdala to the SNc to the dorsolateral striatum [23]. A similar circuit may exist in monkeys, where reward-motivated orienting depends on striatal dopamine transmission, with distinct contributions from different dopamine receptors [24••,25]. In contrast to the SNc, the VTA is not a part of this pathway, but VTA lesions reduce the potency of reward-associated cues to drive reward-seeking actions [26,23].

Lateral habenula

The lateral habenula has been implicated in many emotional and cognitive functions including anxiety, stress, pain, learning and attention [27]. In addition, recent studies reported that the lateral habenula also plays a crucial role in reward processing, especially in relation to midbrain dopamine neurons. Matsumoto and Hikosaka [28••] found that lateral habenula neurons in monkeys responded to rewards and reward-predicting sensory stimuli. They were excited by non-reward-predicting stimuli and inhibited by reward-predicting stimuli. In addition, lateral habenula neurons were excited when the expected reward was omitted, and inhibited when a reward was given unexpectedly. All of these responses were opposite to those of dopamine neurons.

The opposite response pattern led to the hypothesis that the responses of lateral habenula neurons are causally related to the responses of dopamine neurons. On unrewarded trials, the excitation of lateral habenula neurons started earlier than the inhibition of dopamine neurons [28••]. Moreover, electrical stimulation of the lateral habenula inhibits dopamine neurons [28••,29•]. These observations suggest that the excitation of lateral habenula neurons can trigger the inhibition of dopamine neurons. Thus, the lateral habenula is likely to be a major source of negative reward-related signals in dopamine neurons, and perhaps in other subcortical areas as well.

Hypothalamus

Damage to the hypothalamus, especially the lateral hypothalamus and the mediodorsal hypothalamus, disrupts feeding behavior. Earlier studies showed that neurons in the lateral hypothalamus become active in anticipation of food rewards, responding to the sight of foods or the arbitrary sensory cues that predict the upcoming food rewards. It was subsequently discovered that a group of lateral hypothalamic neurons contain orexin (hypocretin) and serve both to maintain arousal level and to promote feeding [30]. Recent studies have revealed that reward-seeking behavior is, at least partly, mediated by the orexin neuron-induced activation of VTA dopamine neurons projecting to the nucleus accumbens [31,32•]. Mice lacking the orexin precursor gene showed no morphine-induced place preference [32•]. Orexin also mediates rewarding effects of sexual behavior. In rats orexin neurons were activated during copulation, which in turn increased the dopamine level in the nucleus accumbens [33•].

Amygdala

Although previous research on the amygdala tended to focus on the influence of emotions on perception and cognition, recent studies by Salzman and his colleagues highlighted value representation in the amygdala. Paton et al. [34••] examined the value representation while monkeys were conditioned in a Pavlovian procedure in which the monkeys formed associations between conditioned stimuli and rewards or aversive airpuffs. They found that separate populations of neurons in the amygdala represent the positive and negative values assigned to the conditioned stimuli. Belova et al. [35•] examined the responses of amygdala neurons to the rewards and airpuffs themselves under two conditions: one in which the outcomes occurred predictably, the other in which the outcomes occurred unpredictably. They found that many amygdala neurons responded differently to rewards and airpuffs, and that these responses were frequently modulated by the prediction. The reward representation in the amygdala may serve to promote memory consolidation [36]. Paz et al. [37••] demonstrated that reward-dependent activation of basolateral amygdala neurons facilitates impulse transmission from perirhinal to entorhinal neurons. Because the rhinal cortices constitute the main route for impulse traffic into and out of the hippocampus, the perirhinal and entorhinal interaction seems likely to be linked to memory formation. Indeed, the strength of the interaction was tightly correlated with animals’ associative learning. This finding may explain how animals form more vivid memories of emotionally charged events.

Serotonin neurons

Serotonin is involved in many functions, ranging from the development of the brain [38] to social behaviors [39]. There is no consensus so far on the exact roles and mechanisms of serotonin function. Some of the recognized theories include (1) defense mechanisms [40], (2) temporal discounting of reward value [41], and (3) a negative reward signal that acts as an opponent of dopamine signals [42]. The last theory postulates that the phasic discharge of serotonin neurons acts as a negative prediction-error signal. However, pure opponency seems too simple, considering that the serotonin and dopamine systems interact at various levels [41]. Recent studies seem to support the temporal discounting theory [14].
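To illustrate what temporal discounting means in practice, the following minimal Python sketch assumes exponential discounting with a single discount factor `gamma`. The exponential form, the function names, and the numerical values are assumptions chosen for illustration; they are not taken from the cited studies.

```python
def discounted_value(reward, delay, gamma):
    """Present value of a reward delivered after `delay` time steps (assumed exponential form)."""
    return reward * gamma ** delay


def preferred_option(options, gamma):
    """Return the label of the option with the highest discounted value."""
    return max(options, key=lambda name: discounted_value(*options[name], gamma))


# Hypothetical choice: each option is (reward magnitude, delay in time steps).
options = {"small_soon": (1.0, 1), "large_late": (3.0, 6)}

steep = preferred_option(options, gamma=0.6)     # heavy discounting of delayed reward
shallow = preferred_option(options, gamma=0.95)  # light discounting of delayed reward
```

With steep discounting the small immediate reward is preferred, whereas with shallow discounting the large delayed reward wins. Under the temporal discounting theory, a change in serotonin level would correspond to a shift along this axis.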

Despite these many experiments and theories, it was unclear whether serotonin neurons carried reward information. Using reward-biased saccade tasks, Nakamura et al. [43••] showed that neurons in the monkey dorsal raphe, presumably including serotonin neurons, changed their activity differentially depending on the value of the expected reward, as well as the received reward. In striking contrast to dopamine neurons, the response to the reward was invariant whether or not it was expected.

Pallidum

The pallidum is divided into the dorsal pallidum (internal and external globus pallidus) and the ventral pallidum. A series of studies from Berridge and colleagues has suggested that reward information is strongly represented in the ventral pallidum. Their recent work has teased apart the ‘liking’ (hedonic feelings) and ‘wanting’ (motivation) systems in the limbic part of the basal ganglia [44•,45]. They concluded that while nucleus accumbens and ventral pallidum acted together to represent ‘liking’, nucleus accumbens alone could represent ‘wanting’, independent of the ventral pallidum. One tempting conclusion drawn from recent experiments is that the opioid system is necessary for hedonic experience, ‘liking’, whereas the dopamine system is important for motivation, ‘wanting’ [46].

Although less well known, reward signals also reach the dorsal pallidum. The dorsal pallidal neurons may inherit reward information from the dorsal striatum, where neurons are known to be modulated quite strongly by expected rewards [1,15]. It should be noted that a portion of neurons in the internal segment of the globus pallidus (GPi) project to the lateral habenula [47]. A recent study has shown that the lateral habenula-projecting GPi neurons encode strong reward prediction signals similar to those of lateral habenula neurons (S Hong and O Hikosaka, abstract in Soc Neurosci Abstr 2007, 749.25). Moreover, the latency of this reward-related modulation was shorter for these neurons than for lateral habenula neurons, which is consistent with an excitatory connection. These results suggest that GPi may initiate reward-related signals through its effects on the lateral habenula, which then influences the dopaminergic and serotonergic systems.

Conclusions

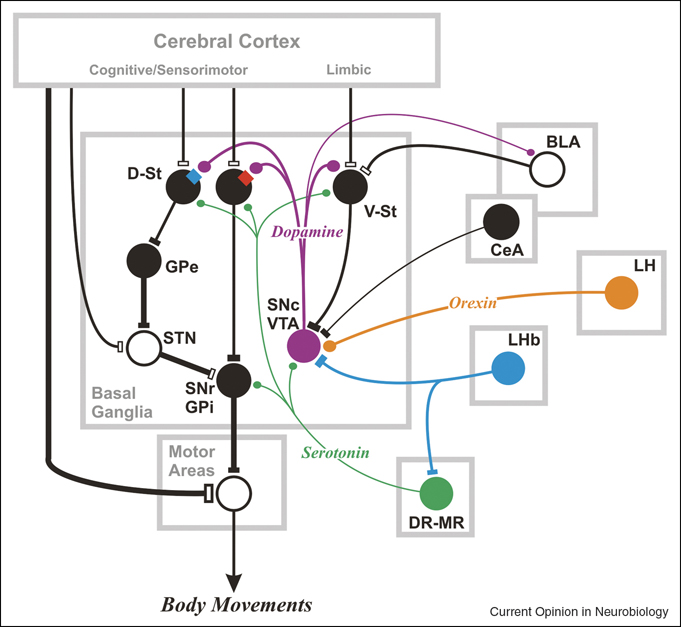

Recent studies suggest that a number of subcortical areas and neuron types represent reward information and constitute complex networks (Figure 1). As theoretical studies have suggested [48], different types of neurons appear to contribute to different aspects of reward-based learning and decision-making. Unlike dopamine neurons and lateral habenula neurons, dorsal raphe neurons (including serotonin neurons) do not represent reward-prediction error, and amygdala neurons do so only partially. Unlike neurons in the striatum and the amygdala, dopamine and serotonin neurons as well as lateral habenula neurons do not encode specific sensorimotor signals, such as target direction. Unique among subcortical areas (as tested so far), dorsal striatal neurons have activity related to the value of specific actions, goal positions or object identities [1–3]. These signals would create a bias in the basal ganglia network so that the action preferred by the striatal neurons is more likely to occur [49]. This may be the ultimate mechanism of action for subcortical reward-directed activity.
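The notion of a value-dependent bias on action selection can be sketched in a few lines of Python. The softmax rule used here is a common modeling assumption and is not proposed by the studies cited above; `choice_probabilities`, the inverse-temperature parameter `beta`, and the example values are hypothetical.

```python
import math


def choice_probabilities(action_values, beta=2.0):
    """Softmax over action values; a larger beta means a stronger bias toward high values."""
    peak = max(action_values.values())
    # Subtract the peak value before exponentiating for numerical stability.
    weights = {a: math.exp(beta * (v - peak)) for a, v in action_values.items()}
    total = sum(weights.values())
    return {a: w / total for a, w in weights.items()}


# Hypothetical action values for two saccade directions.
probs = choice_probabilities({"left": 1.0, "right": 0.2})
```

In this sketch the action with the higher value is more likely, but not certain, to be selected, and equal values yield equal probabilities.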

Figure 1.

Subcortical areas and their connections that can control reward-directed behavior. Central to the subcortical network are the basal ganglia, which are capable of disinhibiting actions that lead to rewarding outcomes and suppressing actions that lead to non-rewarding or punishing outcomes. The basal ganglia mechanism becomes functional owing to signals mediated by neuromodulators (e.g. dopamine, serotonin, orexin, acetylcholine) and signals originating outside the basal ganglia (e.g. amygdala, lateral habenula), in addition to the inputs from the cerebral cortical areas. The target of the SNr-GPi, denoted as ‘Motor Areas’, may be subcortical motor areas, such as the superior colliculus, or ventral thalamic nuclei which are connected with motor cortical areas. Direct pathway neurons in D-St express dopamine D1 receptors (red square); indirect pathway neurons express D2 receptors (blue square). V-St may include striosomes (or patches) in the D-St. For clarity, many connections and neuron types are omitted. BLA: basolateral amygdala, CeA: central amygdala, DR: dorsal raphe, D-St: dorsal striatum, GPe: external segment of globus pallidus, GPi: internal segment of globus pallidus, LH: lateral hypothalamus, LHb: lateral habenula, MR: medial raphe, SNc: substantia nigra pars compacta, SNr: substantia nigra pars reticulata, STN: subthalamic nucleus, V-St: ventral striatum, VTA: ventral tegmental area. Filled circles indicate inhibitory neurons; open circles indicate excitatory neurons. LHb neurons inhibit SNc-VTA and DR-MR neurons, but their action is mediated by inhibitory interneurons. SNc-VTA contains dopamine neurons, DR-MR contains serotonin neurons, and LH contains orexin neurons, all of which exert modulatory effects on their target neurons.

Interestingly, the new players in the subcortical reward network have traditionally been associated with other functions: serotonin neurons with mood, stress, and sleep; the amygdala with emotion and memory; the lateral habenula with circadian rhythm, pain, and stress; orexin neurons with arousal. This implies that reward needs to be understood in a larger framework of animal behaviors. For example, omission of an expected reward is similar to punishment [50], which animals would want to avoid. Arousal is the state in which motor behaviors are activated in general, while reward-seeking behaviors involve a large part of motor behaviors, including locomotion and orienting. It is thus feasible that the reward network and the arousal (or circadian) network have evolved by sharing the same mechanism.

Acknowledgement

This work was supported by the intramural research program of the National Eye Institute.

References and recommended reading

Papers of particular interest, published within the period of review, have been highlighted as:

• of special interest

•• of outstanding interest

- 1. Lauwereyns J, Watanabe K, Coe B, Hikosaka O. A neural correlate of response bias in monkey caudate nucleus. Nature. 2002;418:413–417. doi: 10.1038/nature00892.

- 2. Samejima K, Ueda Y, Doya K, Kimura M. Representation of action-specific reward values in the striatum. Science. 2005;310:1337–1340. doi: 10.1126/science.1115270.

- 3. Lau B, Glimcher PW. Value representations in the primate striatum during matching behavior. Neuron. 2008;58:451–463. doi: 10.1016/j.neuron.2008.02.021.

- 4. Ding L, Hikosaka O. Comparison of reward modulation in the frontal eye field and caudate of the macaque. J Neurosci. 2006;26:6695–6703. doi: 10.1523/JNEUROSCI.0836-06.2006. This study compared the activity of FEF and caudate neurons during an eye movement task in which the direction of the saccade target indicated the reward size. Both areas had direction-selective neurons that were modulated by reward, but only the caudate had neurons that were selective purely for reward size.

- 5. Kobayashi S, Kawagoe R, Takikawa Y, Koizumi M, Sakagami M, Hikosaka O. Functional differences between macaque prefrontal cortex and caudate nucleus during eye movements with and without reward. Exp Brain Res. 2007;176:341–355. doi: 10.1007/s00221-006-0622-4.

- 6. Pasquereau B, Nadjar A, Arkadir D, Bezard E, Goillandeau M, Bioulac B, Gross CE, Boraud T. Shaping of motor responses by incentive values through the basal ganglia. J Neurosci. 2007;27:1176–1183. doi: 10.1523/JNEUROSCI.3745-06.2007. This study compared the activity of putamen and GPi neurons during free choices between arm movements, where the available reward for each movement was instructed by a visual cue. Both regions contained neurons that encoded the reward value of the chosen action, but surprisingly, a similar number of neurons encoded the value of the non-chosen action (or even a combination of the two).

- 7. Lau B, Glimcher PW. Action and outcome encoding in the primate caudate nucleus. J Neurosci. 2007;27:14502–14514. doi: 10.1523/JNEUROSCI.3060-07.2007. This study recorded from caudate neurons during a delayed saccade task in which rewards were delivered on a random fraction of trials. During the reward period, many neurons were tuned to saccade direction or reward value, but surprisingly, these two signals occurred primarily in separate neurons.

- 8. Williams ZM, Eskandar EN. Selective enhancement of associative learning by microstimulation of the anterior caudate. Nat Neurosci. 2006;9:562–568. doi: 10.1038/nn1662. This study found that during stimulus-response learning, many caudate neurons signaled the strength of a learned association, but during the feedback period, more neurons were correlated with the learning rate. Furthermore, if feedback was supplemented with electrical stimulation of the caudate, it strengthened the association between stimulus and response. This could be used to either enhance or impair learning (by stimulating after correct trials or error trials, respectively). Interestingly, these effects were found in the anterior caudate, but not the anterior putamen.

- 9. Nakamura K, Hikosaka O. Facilitation of saccadic eye movements by postsaccadic electrical stimulation in the primate caudate. J Neurosci. 2006;26:12885–12895. doi: 10.1523/JNEUROSCI.3688-06.2006. This study found that electrical stimulation of an oculomotor region of the caudate nucleus can reinforce saccadic eye movements. If saccades to a specific spatial location were ‘rewarded’ with electrical stimulation, then future saccades to that location were facilitated, while saccades to other locations were suppressed. Interestingly, stimulation was equally good at reinforcing both contraversive and ipsiversive saccades.

- 10. Taha SA, Nicola SM, Fields HL. Cue-evoked encoding of movement planning and execution in the rat nucleus accumbens. J Physiol. 2007;584:801–818. doi: 10.1113/jphysiol.2007.140236.

- 11. Knutson B, Cooper JC. Functional magnetic resonance imaging of reward prediction. Curr Opin Neurol. 2005;18:411–417. doi: 10.1097/01.wco.0000173463.24758.f6.

- 12. Delgado MR. Reward-related responses in the human striatum. Ann N Y Acad Sci. 2007;1104:70–88. doi: 10.1196/annals.1390.002.

- 13. Fisher HE, Aron A, Brown LL. Romantic love: a mammalian brain system for mate choice. Philos Trans R Soc Lond B Biol Sci. 2006;361:2173–2186. doi: 10.1098/rstb.2006.1938.

- 14. Tanaka SC, Schweighofer N, Asahi S, Shishida K, Okamoto Y, Yamawaki S, Doya K. Serotonin differentially regulates short- and long-term prediction of rewards in the ventral and dorsal striatum. PLoS ONE. 2007;2:e1333. doi: 10.1371/journal.pone.0001333. This fMRI study replicated a previous result that the BOLD signal in the dorsal striatum is correlated with long-term reward predictions, while ventral striatum is more correlated with short-term predictions. Moreover, the correlation between the short-term reward seeking and ventral striatum activity became stronger when the level of serotonin was lower. Also, a higher than average serotonin level boosted the correlation between the long-term reward seeking and dorsal striatum activity.

- 15. Haruno M, Kawato M. Different neural correlates of reward expectation and reward expectation error in the putamen and caudate nucleus during stimulus-action-reward association learning. J Neurophysiol. 2006;95:948–959. doi: 10.1152/jn.00382.2005.

- 16. O’Doherty J, Dayan P, Schultz J, Deichmann R, Friston K, Dolan RJ. Dissociable roles of ventral and dorsal striatum in instrumental conditioning. Science. 2004;304:452–454. doi: 10.1126/science.1094285.

- 17. Yin HH, Knowlton BJ, Balleine BW. Inactivation of dorsolateral striatum enhances sensitivity to changes in the action-outcome contingency in instrumental conditioning. Behav Brain Res. 2006;166:189–196. doi: 10.1016/j.bbr.2005.07.012. This study allowed rats to gain extensive experience with pressing a lever to get rewards, then changed the task so that pressing the lever instead delayed rewards. Normal rats obstinately persisted in pressing the lever, but inactivating the dorsolateral striatum allowed rats to overcome their habit and learn the new task.

- 18. Atallah HE, Lopez-Paniagua D, Rudy JW, O’Reilly RC. Separate neural substrates for skill learning and performance in the ventral and dorsal striatum. Nat Neurosci. 2007;10:126–131. doi: 10.1038/nn1817. This study manipulated striatal activity in rats as they used odor cues to locate food rewards. In well-trained rats, inactivating either dorsal or ventral striatum had only small effects on performance; but if rats were still learning the task, inactivating either structure caused large impairments. Remarkably, when the dorsal striatum was later returned to normal function, these impaired rats immediately began performing as well as experts, indicating that they had been learning all along despite their blocked performance.

- 19. Schultz W. Predictive reward signal of dopamine neurons. J Neurophysiol. 1998;80:1–27. doi: 10.1152/jn.1998.80.1.1.

- 20. Schultz W. Multiple dopamine functions at different time courses. Annu Rev Neurosci. 2007;30:259–288. doi: 10.1146/annurev.neuro.28.061604.135722.

- 21. Morris G, Nevet A, Arkadir D, Vaadia E, Bergman H. Midbrain dopamine neurons encode decisions for future action. Nat Neurosci. 2006;9:1057–1063. doi: 10.1038/nn1743. This study compared SNc dopamine neuron activity during instructed and free-choice versions of a reward-guided arm-movement task. When a monkey was shown cues indicating each action’s reward value, dopamine neurons signaled the value of the monkey’s later action, regardless of whether it was instructed or freely chosen, and regardless of whether it was the best available choice. Their results have the surprising implication that dopamine neurons start receiving information about an animal’s choice a mere 130 ms after the options are presented.

- 22. Roesch MR, Calu DJ, Schoenbaum G. Dopamine neurons encode the better option in rats deciding between differently delayed or sized rewards. Nat Neurosci. 2007;10:1615–1624. doi: 10.1038/nn2013. This study compared VTA dopamine neuron activity in rats during instructed and free choices between rewards with different magnitudes and delays. This is the first study to show that dopamine neurons signal the preference for immediate rather than delayed rewards. In addition, when rats were given a free choice, the initial response of dopamine neurons always signaled a positive value, even on trials when rats chose the low-value option. According to simple action-value theories, this task is formally quite similar to the one used by Morris et al. (2006); the theories may need to be revised to account for their conflicting results.

- 23. El-Amamy H, Holland PC. Dissociable effects of disconnecting amygdala central nucleus from the ventral tegmental area or substantia nigra on learned orienting and incentive motivation. Eur J Neurosci. 2007;25:1557–1567. doi: 10.1111/j.1460-9568.2007.05402.x.

- 24. Nakamura K, Hikosaka O. Role of dopamine in the primate caudate nucleus in reward modulation of saccades. J Neurosci. 2006;26:5360–5369. doi: 10.1523/JNEUROSCI.4853-05.2006. This study injected dopamine receptor antagonists into an oculomotor region of the caudate nucleus while monkeys made saccades to visual targets, in a task where the target location indicated the upcoming reward size. Blocking D1 receptors selectively slowed reaction times to high-value targets, whereas blocking D2 receptors slowed reaction times to contralateral low-value targets. This result has implications for subcortical reward circuitry, since D1 and D2 receptors are expressed on separate striatal neurons, which project to separate ‘direct’ and ‘indirect’ pathways through the basal ganglia (see Figure 1).

- 25. Hikosaka O. Basal Ganglia Mechanisms of Reward-oriented Eye Movement. Ann N Y Acad Sci. 2007;1104:229–249. doi: 10.1196/annals.1390.012.

- 26. Corbit LH, Janak PH, Balleine BW. General and outcome-specific forms of Pavlovian-instrumental transfer: the effect of shifts in motivational state and inactivation of the ventral tegmental area. Eur J Neurosci. 2007;26:3141–3149. doi: 10.1111/j.1460-9568.2007.05934.x.

- 27. Lecourtier L, Kelly PH. A conductor hidden in the orchestra? Role of the habenular complex in monoamine transmission and cognition. Neurosci Biobehav Rev. 2007;31:658–672. doi: 10.1016/j.neubiorev.2007.01.004.

- 28. Matsumoto M, Hikosaka O. Lateral habenula as a source of negative reward signals in dopamine neurons. Nature. 2007;447:1111–1115. doi: 10.1038/nature05860. This study compared the activity of lateral habenula neurons and that of dopamine neurons while monkeys were performing a reward-biased saccade task. Lateral habenula neurons were excited by a no-reward-predicting target and inhibited by a reward-predicting target. Dopaminergic neurons in the substantia nigra pars compacta exhibited the opposite pattern of activity. Furthermore, weak electrical stimulation of the lateral habenula elicited strong inhibitions in dopamine neurons. These findings suggest that the lateral habenula transmits negative reward signals to dopamine neurons, thereby controlling oculomotor behavior.

- 29. Ji H, Shepard PD. Lateral habenula stimulation inhibits rat midbrain dopamine neurons through a GABA(A) receptor-mediated mechanism. J Neurosci. 2007;27:6923–6930. doi: 10.1523/JNEUROSCI.0958-07.2007. This study demonstrated that electrical stimulation of the lateral habenula inhibits dopamine neurons, and suggested that this habenular-induced inhibition is mediated indirectly by activation of putative GABAergic neurons in the ventral midbrain. This indirect pathway from the lateral habenula to dopamine neurons is a major candidate for a source of reward-related signals in dopamine neurons.

- 30. Willie JT, Chemelli RM, Sinton CM, Yanagisawa M. To eat or to sleep? Orexin in the regulation of feeding and wakefulness. Annu Rev Neurosci. 2001;24:429–458. doi: 10.1146/annurev.neuro.24.1.429.

- 31. Harris GC, Wimmer M, Aston-Jones G. A role for lateral hypothalamic orexin neurons in reward seeking. Nature. 2005;437:556–559. doi: 10.1038/nature04071.

- 32. Narita M, Nagumo Y, Hashimoto S, Khotib J, Miyatake M, Sakurai T, Yanagisawa M, Nakamachi T, Shioda S, Suzuki T. Direct involvement of orexinergic systems in the activation of the mesolimbic dopamine pathway and related behaviors induced by morphine. J Neurosci. 2006;26:398–405. doi: 10.1523/JNEUROSCI.2761-05.2006. Injection of orexin A or orexin B in the VTA facilitated morphine-induced place preference and increased the level of dopamine in the nucleus accumbens, and the former effect was suppressed by an orexin A antagonist.

- 33. Muschamp JW, Dominguez JM, Sato SM, Shen RY, Hull EM. A role for hypocretin (orexin) in male sexual behavior. J Neurosci. 2007;27:2837–2845. doi: 10.1523/JNEUROSCI.4121-06.2007. Dopamine neurons in the anterior VTA, which receive inputs from hypothalamic orexin neurons, increased activity (assessed by Fos-ir) during copulation. Systemic administration of an orexin antagonist impaired copulatory behavior.

- 34. Paton JJ, Belova MA, Morrison SE, Salzman CD. The primate amygdala represents the positive and negative value of visual stimuli during learning. Nature. 2006;439:865–870. doi: 10.1038/nature04490. This study demonstrated that amygdala neurons represent the positive and negative value of predicted outcomes. They used a Pavlovian procedure in which monkeys formed associations between conditioned visual stimuli and reward or aversive-airpuff. Separate populations of neurons in the amygdala represented the value of reward and airpuff assigned with the conditioned stimuli. Notably, when the association between the conditioned stimuli and the outcomes was reversed, the value representation in the amygdala was updated rapidly enough to account for monkeys’ associative learning.

- 35. Belova MA, Paton JJ, Morrison SE, Salzman CD. Expectation modulates neural responses to pleasant and aversive stimuli in primate amygdala. Neuron. 2007;55:970–984. doi: 10.1016/j.neuron.2007.08.004. This study demonstrated that amygdala neurons responded to reward and aversive-airpuff themselves, and that these responses were frequently modulated by expectation. Specifically, the responses were larger when the outcomes were unexpected than when the outcomes were expected.

- 36. McGaugh JL. The amygdala modulates the consolidation of memories of emotionally arousing experiences. Annu Rev Neurosci. 2004;27:1–28. doi: 10.1146/annurev.neuro.27.070203.144157.

- 37. Paz R, Pelletier JG, Bauer EP, Pare D. Emotional enhancement of memory via amygdala-driven facilitation of rhinal interactions. Nat Neurosci. 2006;9:1321–1329. doi: 10.1038/nn1771. The question of interest is how animals form vivid memories of emotionally charged events. This study suggested that the value representation in the amygdala is involved in this process. The authors found that reward-dependent activation of basolateral amygdala neurons facilitates impulse transmission from perirhinal to entorhinal neurons, and that the interaction between perirhinal and entorhinal cortices is linked to memory formation. Thus, the basolateral amygdala would promote memory formation by using its reward-dependent activation to facilitate the interaction between perirhinal and entorhinal cortices.

- 38. Bonnin A, Torii M, Wang L, Rakic P, Levitt P. Serotonin modulates the response of embryonic thalamocortical axons to netrin-1. Nat Neurosci. 2007;10:588–597. doi: 10.1038/nn1896.

- 39. Edwards DH, Kravitz EA. Serotonin, social status and aggression. Curr Opin Neurobiol. 1997;7:812–819. doi: 10.1016/s0959-4388(97)80140-7.

- 40. Deakin JFW, Graeff FG. 5-HT and mechanisms of defence. J Psychopharmacol. 1991;5:305–316. doi: 10.1177/026988119100500414.

- 41. Doya K. Metalearning and neuromodulation. Neural Netw. 2002;15:495–506. doi: 10.1016/s0893-6080(02)00044-8.

- 42. Daw ND, Kakade S, Dayan P. Opponent interactions between serotonin and dopamine. Neural Netw. 2002;15:603–616. doi: 10.1016/s0893-6080(02)00052-7.

- 43. Nakamura K, Matsumoto M, Hikosaka O. Reward-dependent modulation of neuronal activity in the primate dorsal raphe nucleus. J Neurosci. 2008;28:5331–5343. doi: 10.1523/JNEUROSCI.0021-08.2008. Using monkeys performing a reward-biased saccade task, this study showed that the activity of DRN neurons was different from the activity of dopamine neurons. Whereas dopamine neurons predominantly responded to a reward-predicting sensory stimulus, DRN neurons responded to both the reward-predicting stimulus and the reward itself. Whereas dopamine neurons responded to a reward only when it was larger or smaller than expected, DRN neurons reliably coded the value of the received reward whether or not it was expected. These results suggest that the DRN, probably including serotonin neurons, signals the reward value associated with the current behavior.

- 44. Tindell AJ, Smith KS, Pecina S, Berridge KC, Aldridge JW. Ventral pallidum firing codes hedonic reward: when a bad taste turns good. J Neurophysiol. 2006;96:2399–2409. doi: 10.1152/jn.00576.2006. The authors demonstrate that neural activity in the ventral pallidum (VP) correlates positively with the animal’s behavioral ‘liking’. This was done by recording neural activity in the VP while assessing the behavioral hedonic impact of intense salt water (normally ‘disliked’) versus sucrose solution (always ‘liked’). When the animals were deprived of salt (NaCl), they ‘liked’ the salt water almost as much as sucrose. This behavioral change was reflected in the neural activity of the VP, where the firing rate increased dramatically after the delivery of salt water. The authors concluded that the neural activity in the VP may represent hedonic pleasures.

- 45. Smith KS, Berridge KC. Opioid limbic circuit for reward: interaction between hedonic hotspots of nucleus accumbens and ventral pallidum. J Neurosci. 2007;27:1594–1605. doi: 10.1523/JNEUROSCI.4205-06.2007.

- 46. Leknes S, Tracey I. A common neurobiology for pain and pleasure. Nat Rev Neurosci. 2008;9:314–320. doi: 10.1038/nrn2333.

- 47. Parent M, Levesque M, Parent A. Two types of projection neurons in the internal pallidum of primates: single-axon tracing and three-dimensional reconstruction. J Comp Neurol. 2001;439:162–175. doi: 10.1002/cne.1340.

- 48. Doya K. Modulators of decision making. Nat Neurosci. 2008;11:410–416. doi: 10.1038/nn2077.

- 49. Hikosaka O, Nakamura K, Nakahara H. Basal Ganglia orient eyes to reward. J Neurophysiol. 2006;95:567–584. doi: 10.1152/jn.00458.2005.

- 50. Seymour B, Singer T, Dolan R. The neurobiology of punishment. Nat Rev Neurosci. 2007;8:300–311. doi: 10.1038/nrn2119.