Abstract

Objective

To evaluate an instructional module's effectiveness at changing third-year doctor of pharmacy (PharmD) students' ability to identify and correct prescribing errors.

Design

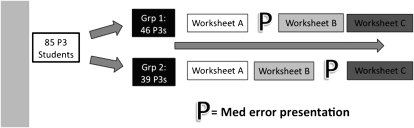

Students were randomized into 2 groups. Using a computer-based module, group 1 completed worksheet A, watched a presentation on medication errors, and then completed worksheets B and C. Group 2 completed worksheets A and B, watched the presentation, and then completed worksheet C.

Assessment

Both groups scored a median of 50% on worksheet A and 66.7% on worksheet C, a significant within-group improvement (p < 0.001). Median scores on worksheet B differed between groups (p = 0.0014). Group 1 viewed the presentation before completing worksheet B and scored 62.5%, while group 2 viewed the presentation after scoring 50% on worksheet B.

Conclusion

The module effectively taught pharmacy students to identify and correct prescribing errors.

Keywords: prescribing errors, computer-based learning, computer-assisted instruction, medication errors

INTRODUCTION

The principles of evidence-based medicine have pervaded medical research and practice, and the gold standard of evidence-based medicine is the randomized controlled trial.1 By definition, the randomized controlled trial involves random assignment of subjects to groups so that, theoretically, any variability (or bias) due to known or unknown factors is evenly distributed across groups and can therefore be eliminated as a possible source of differences among the groups. Using the randomized controlled trial in medical research provides a more rigorous evaluation of different treatments and services and more clearly demonstrates cause-effect inferences.2 For the same reason, the randomized controlled trial is encouraged (although rarely implemented) in educational research.3-5 This study was an attempt to implement the randomized controlled trial in the evaluation of a curricular intervention aimed at improving third-year (P3) doctor of pharmacy (PharmD) students' ability to identify and correct prescribing errors.

The rationale for using the randomized controlled trial in the current study was based on the need to obtain evidence that would enable educators to make course-related decisions based on defensible cause-effect inferences. Otherwise, educators' inferences would follow from findings at the level of non-experimental descriptive and correlational evidence. While non-experimental studies do have a role among education-related investigations, definitive causal inferences cannot be made from correlational data. Evidence from correlational data is much less convincing than randomized controlled trial-based evidence, especially when decisions regard the effectiveness of an instructional module and whether its use should be continued within a college course. In a quest to identify effective instructional strategies, different instructional modules should be attempted and evaluated using the most rigorous research designs where possible. Evaluation outcomes should inform future instructional revisions; thus, the randomized controlled trial may best inform academicians as to whether an educational intervention such as an instructional strategy has a noteworthy effect on an outcome among learners.

The specific focus of the present study on a prescribing error instructional module was prompted by a noted general discrepancy between the status of medication error instruction in pharmacy curricula and the occurrence of medication errors in patient care. While medication error instruction has been developed to a limited extent within pharmacy curricula across the United States,6 medication errors are ubiquitous in healthcare, and prescribing errors are the most problematic subset of medication errors.7 The eminent Institute of Medicine has acknowledged this problem,8,9 and The Joint Commission has disseminated medication safety standards (including those to avoid prescribing errors) with which all accredited healthcare institutions must comply.10 Hence, it is critical both that PharmD students are exposed to instruction on prescribing errors and that academicians provide rigorous evidence for the effectiveness of any such change to instruction in any coursework.

The purpose of the current investigation, therefore, was to evaluate the effectiveness of a prescribing error instructional module in improving students' ability to identify and correct prescribing errors. Effectiveness was determined by comparing 2 groups of students exposed to a presentation on prescribing errors at different times. The following specific research questions were addressed: (1) Did P3 students who were exposed to the prescribing error module perform better at identifying and correcting prescribing errors compared to students who were not exposed to this instructional module? (2) While still providing students with the same educational experience, can a randomized controlled trial design be feasibly implemented into an educational setting?

DESIGN

During the 2007-2008 academic year, 96 third-year PharmD students completed the prescribing errors instructional module evaluated in this study. The students were exposed to this module during the laboratory component of the Patient Care Rounds course at the University of Toledo College of Pharmacy. The University's Social, Behavioral, and Education Institutional Review Board approved this study. Students were informed regarding the reasons and methods for this study, and signed an informed consent for the use of their data in this research.

Prescribing Error Module

When first offered, the Patient Care Rounds course included only an in-class discussion component that focused on therapeutic problems within medication prescriptions; it did not highlight the essential parts of a medication order (ie, drug, dose, route, and frequency). Understanding these prescription elements might foster students' ability to identify and correct medication order problems. Content related to medication safety was therefore added in the form of the prescribing error module, which was developed by the investigators and evaluated in this study. The goal of this module was to enable students to actively engage with prescribing error problems, as well as to provide the course instructors with a meaningful strategy for evaluating students' ability to identify and correct prescribing errors.

The module on prescribing errors used a PowerPoint presentation that provided a background of medical and medication errors in general, described the medication use process and error-prone components, and reviewed the Institute of Medicine recommendations and Joint Commission standards. Specific prescribing error types, rationale for institutional policies, and policy specifics were explained as well. The PowerPoint presentation was embedded with audio and converted into a Flash presentation, which was then uploaded to Blackboard (Blackboard Inc, Washington DC). To encourage active engagement of the students with the course material, 3 application exercises in the form of worksheets (described below) were created using the quiz feature within Blackboard. Students accessed the presentations and worksheets during regular class time in a networked classroom with individual computers.

Worksheets

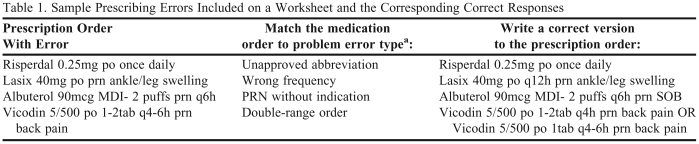

Each worksheet included 20 questions that were based on actual medication errors identified by pharmacists at an affiliated academic medical center. The errors were defined by the American Society of Health-System Pharmacists and/or The Joint Commission.10,11 Two questions were asked about each of the 10 errors included on each worksheet. The first question required students to match an incorrect prescription order to the type of error they identified from it. The second question required students to write the corrected version of the prescription order. Examples are given in Table 1.

Table 1.

Sample Prescribing Errors Included on a Worksheet and the Corresponding Correct Responses

a Error types: wrong drug, wrong dose, wrong route, wrong frequency, double-range order, PRN without indication, unapproved abbreviation, correct order

In creating the worksheet questions, Bloom's Taxonomy was employed as a framework.12 The questions asking students to match the error to its error type required that students at least comprehend prescribing error types because the answers to the questions were not explicitly stated in the presentation. By using actual institutional order errors already flagged and corrected by pharmacists, students simulated “real world” experiences and used higher-level thinking skills within Bloom's Taxonomy.

The worksheets added an active-learning component to an otherwise didactic presentation module. All 3 worksheets (A, B, and C) were designed for students to complete in less than 10 minutes during regular class time. Although the format used for each worksheet was the same, the worksheets differed from one another in the specific prescribing errors used. Because each worksheet presented different medication errors in the same pattern, students could not simply memorize content between worksheets but instead had to use a process of identifying and correcting prescribing errors. This design allowed a meaningful comparison of each group's performance on subsequent worksheets.

Randomized Controlled Trial Design

When P3 students completed the course registration process, they selected a preferred laboratory section day: either Wednesday or Friday. For this module, students on each day were later assigned randomly (via coin toss) into 1 of 2 study groups. Students in both groups 1 and 2 in the Wednesday laboratory section completed worksheet A first. Next, students in group 1 viewed a presentation while students in group 2 completed worksheet B. Afterwards, students in group 1 completed worksheet B while students in group 2 watched the presentation. Following this, both groups of students completed worksheet C. This same procedure was repeated with the students who had selected the Friday laboratory section. As seen in Figure 1, randomization of students into the 2 groups ensured that any differences identified between groups 1 and 2 could be confidently attributed to the module and not to any potential confounding factors. Delaying the time at which group 2 completed worksheet B allowed group 2 to be treated as a control group without denying these students the same educational experience as students in group 1.

Figure 1.

Methodology: Randomization and Worksheet Sequence
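The allocation procedure described above can be illustrated with a minimal Python sketch. It assumes an independent fair coin toss per student within each laboratory section; the function name `assign_groups`, the seed, and the student identifiers are hypothetical and are not taken from the study.

```python
import random

# Worksheet/presentation order for each study group, as described in the text.
SEQUENCES = {
    1: ["worksheet A", "presentation", "worksheet B", "worksheet C"],
    2: ["worksheet A", "worksheet B", "presentation", "worksheet C"],
}


def assign_groups(students_by_section, seed=None):
    """Assign each student to group 1 or 2 by an independent fair coin toss.

    students_by_section maps a laboratory section name to a list of
    (hypothetical) student identifiers. A simple coin toss does not
    guarantee equal group sizes.
    """
    rng = random.Random(seed)
    assignment = {}
    for section, students in students_by_section.items():
        for student in students:
            group = 1 if rng.random() < 0.5 else 2
            assignment[student] = (section, group)
    return assignment


# Hypothetical roster; identifiers are placeholders, not study data.
roster = {
    "Wednesday": ["W01", "W02", "W03", "W04"],
    "Friday": ["F01", "F02", "F03", "F04"],
}
groups = assign_groups(roster, seed=2008)
for student, (section, group) in groups.items():
    print(student, section, group, " -> ".join(SEQUENCES[group]))
```

Because students are randomized within each section, the Wednesday and Friday sessions each contain both groups, and the only planned difference between groups is whether the presentation precedes or follows worksheet B.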

EVALUATION AND ASSESSMENT

Data Analysis Methods

For statistical analyses, Analyse-it, version 2.11 (Analyse-it, Leeds, UK) was used. To answer the first research question and measure change in the students' ability to identify and correct prescribing errors, students' performance on each worksheet was scored, using only the correct matches from odd-numbered questions. Guessing was excluded by double-checking student responses to the even-numbered questions. If, for example, a correct answer was chosen for an odd-numbered matching question, the related even-numbered question required an answer that adequately addressed the prescribing error. Percent correct was used as the cumulative score for each worksheet. A Mann-Whitney test was conducted between the 2 groups for each of the 3 worksheets. Additionally, each student's score on worksheet A was paired with their scores on worksheets B and C with separate Friedman ANOVA tests conducted for students in groups 1 and 2. Significant Friedman ANOVA differences were followed up with post-hoc pairwise Wilcoxon signed rank tests to find where the differences existed. Because of the multiple planned tests, the experiment-wise alpha was set at 0.05, and the Bonferroni-adjusted significance threshold became 0.01 for each a priori test in the analysis (5 tests performed).
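As an illustration of the scoring rule and the Bonferroni adjustment described above, the following Python sketch may be helpful. It assumes that credit is given only when both the odd-numbered matching question and its paired even-numbered correction are acceptable, and it divides by the number of item pairs supplied; the exact denominator used by the investigators is not stated, and the function names and sample responses are hypothetical.

```python
def worksheet_percent_correct(item_pairs):
    """Return percent correct for one worksheet.

    item_pairs is a list of (match_correct, correction_adequate) booleans,
    one pair per prescribing error: the odd-numbered matching question and
    its even-numbered written correction. Credit is given only when both
    are true, so a lucky guess on the matching question earns nothing.
    """
    if not item_pairs:
        raise ValueError("worksheet has no scored items")
    credited = sum(1 for match_ok, fix_ok in item_pairs if match_ok and fix_ok)
    return 100.0 * credited / len(item_pairs)


def bonferroni_threshold(experimentwise_alpha, n_tests):
    """Per-test significance threshold under a Bonferroni adjustment."""
    return experimentwise_alpha / n_tests


# Hypothetical responses for one student on a 10-error worksheet.
responses = [
    (True, True), (True, False), (False, False), (True, True), (True, True),
    (False, True), (True, True), (False, False), (True, True), (True, False),
]
print(worksheet_percent_correct(responses))  # 5 of 10 pairs credited
print(bonferroni_threshold(0.05, 5))         # alpha of 0.05 split over 5 tests
```

With an experiment-wise alpha of 0.05 and 5 planned tests, the per-test threshold works out to the 0.01 value reported in the text.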

Student Outcomes

Eighty-five students were analyzed in this study, while 11 students did not give consent (5 students from group 1 and 6 students from group 2). The median scores in group 1 (students who completed worksheet B after watching the presentation) were 50% on worksheet A, 62.5% on worksheet B, and 66.7% on worksheet C. For group 2, the median scores were 50% on worksheet A, 50% on worksheet B, and 66.7% on worksheet C. Differences in scores between groups 1 and 2 were not significant on either worksheet A or worksheet C (p = 0.24 and p = 0.98, respectively), but a significant difference was noted for worksheet B (p = 0.0014). Importantly, the effect size (Cohen's d) between group 1 and group 2 on worksheet B was 0.85. In addition, each group demonstrated a significant change in percent correct scores over the worksheet series (p < 0.0001 for each group). The post-hoc comparisons were significant in group 1 for scores on worksheets A vs B and A vs C, but not for scores on B vs C (p < 0.0001, p < 0.0001, p = 0.28, respectively). For group 2, the post-hoc comparisons were significant for scores on worksheets A vs C and B vs C, but not for scores on A vs B (p < 0.0001, p = 0.0002, p = 0.07, respectively).
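For readers unfamiliar with the effect size reported above, the sketch below shows one common way to compute Cohen's d, using the pooled sample standard deviation. The text does not state which formula the investigators used, so the pooled-SD form is an assumption, and the scores shown are hypothetical values chosen only for illustration; they are not the study data.

```python
from statistics import mean, median, stdev


def cohens_d(sample_1, sample_2):
    """Cohen's d using the pooled sample standard deviation."""
    n1, n2 = len(sample_1), len(sample_2)
    pooled_var = (
        (n1 - 1) * stdev(sample_1) ** 2 + (n2 - 1) * stdev(sample_2) ** 2
    ) / (n1 + n2 - 2)
    return (mean(sample_1) - mean(sample_2)) / pooled_var ** 0.5


# Hypothetical worksheet B percent-correct scores; NOT the study data.
group_1 = [62.5, 75.0, 50.0, 62.5, 87.5, 62.5]
group_2 = [50.0, 37.5, 62.5, 50.0, 50.0, 62.5]

print(median(group_1), median(group_2))
print(round(cohens_d(group_1, group_2), 2))
```

By Cohen's conventional benchmarks, a d of about 0.8 or more is considered a large effect, which is the range into which the reported value of 0.85 falls.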

Prescribing Error Module

The module was smoothly and easily implemented in both groups as evidenced by the absence of any substantial time delays incurred during the module's use. All students took a similar amount of time (<10 minutes) to complete each worksheet and no relationship was identified between performance and time taken to complete the worksheets. Randomization appeared sufficient as evidenced by the 2 groups' scores on worksheet A not differing significantly. Additionally, there was no indication that randomization was compromised or had introduced artificiality into the study (ie, the order of computer access times for students in both groups did not suggest that students in either group had taken the presentation or series of worksheets out of order and no students had reassigned themselves to the other group).

DISCUSSION

Computers have been widely used as a medium for delivering instruction within a technology-driven healthcare setting. While students' perceptions of computer-based learning appear favorable, few strong experimental designs have been used to evaluate student learning outcomes.13,14 Three major limitations of past research were (1) non-comparative or descriptive results, (2) lack of reported reliability, and (3) lack of an assessment of the study's effectiveness.

The present study attempted to overcome these weaknesses. First, this study used an experimental design with randomization and comparison groups. Second, generalizability and reliability/replicability of the study results are suggested through using the same pedagogy that was shown to decrease institutional prescribing errors among a cohort of internal medicine residents.15 Third, the outcome of students identifying and correcting prescribing errors simulates a practice of pharmacists revising errors with the potential to affect patient safety.

A number of internal validity concerns were addressed.16 Biases from selection, maturation, and history were mitigated using randomization. Testing bias was accounted for by using 3 different worksheets. Instrumentation bias was minimized by using the same worksheets in both groups to allow an effective comparison of exposure to this prescribing error presentation. Lastly, ambiguous temporal precedence was prevented by using an explicit, specific ordering of the module and worksheets for each group without any deviations (ie, treating both groups the same).

Important to using randomized controlled trials in education is that both groups receive the same educational experience. As a result, many studies lack randomization. As well, other education-related randomized controlled trial designs had used concurrent exposure to different educational formats (eg, in-person didactic lectures versus computer-based modules). This study provides a novel experimental design.

This study has noted limitations and delimitations. Only technical errors due to prescription writing were assessed. This focus leaves out prescribing error problems that involve pharmacotherapy concerns such as omitted medications, duplicate medications, and medication administration timing issues. Furthermore, this instructional module highlighted prescribing errors, and did not spend substantial time elucidating other medication-related errors. However, within the medication use process, prescribing errors do form a prominent and avoidable error type.7,9 Instruction related to this common error type appears prudent.

While the quality of the evidence supporting the effect of the module is fairly strong, the study has a major limitation regarding the breadth of high-quality evidence. Only this single study supports this module in preparing third-year pharmacy students to identify and correct prescribing errors. The module was implemented in a natural clinical skills laboratory setting and was implemented once and only during one week's class session of this course. However, both groups received the same (optimal) educational experience because of this randomized controlled trial design over that short time period. No longer-term analyses such as knowledge retention could be done because, following the study, both groups were exposed to the instruction intervention.

Even after completing the module, the average score (percent of correct answers) was 67%, suggesting that some questions on each worksheet remained challenging among this class of students. Thus, 33% of questions may still provide additional room for improvement by future classes of students using the same worksheets. In controlling as many confounders as possible, investigators chose not to allow computer-based feedback to students once each worksheet was completed. Students were not aware of the extent of their performance. Learning from tests can be influential.17 Not allowing students to learn from past worksheet performances was a major limitation of this study design (though it removed “learning from the test” as a confounder of the module instruction in causing an improvement in students' performance). Providing feedback to students after each worksheet performance should substantially build performances further. To bolster students' understanding and performance on these worksheets further, instructors might also encourage students to use a drug information source for looking up accepted ranges of dosing, frequency, and available routes.

SUMMARY

This paper describes evaluation of a module for instruction in identifying and preventing prescribing errors using a stringent randomized controlled research design. Upon module completion, PharmD students' ability to identify and correct prescribing errors had improved as documented through a series of worksheets. Course instructors hope that future students can use this computer-based module prior to the scheduled class time for this topic and allow in-class discussion to build beyond the technical prescribing errors illustrated in the module to include discussion of therapeutic errors as well.

ACKNOWLEDGEMENTS

The authors would like to thank Sharrel Pinto, PhD, for her assistance with initial study design conception, and Mary Powers, PhD, for reviewing this manuscript.

This study was presented at the 2008 Annual Meeting of the American College of Clinical Pharmacy in Louisville, KY.

REFERENCES

- 1. Moayyedi P. Evidence-based medicine - the emperor's new clothes? Am J Gastroenterol. 2008;103(12):2967–9. doi: 10.1111/j.1572-0241.2008.02193_3.x.

- 2. Ho PM, Peterson PN, Masoudi FA. Evaluating the evidence: is there a rigid hierarchy? Circulation. 2008;118(16):1675–84. doi: 10.1161/CIRCULATIONAHA.107.721357.

- 3. Coalition for Evidence-Based Policy. Bringing Evidence-Driven Progress to Education: a Recommended Strategy for the U.S. Department of Education. Washington, DC: U.S. Department of Education, 2002. Available at: http://coalition4evidence.org/wordpress/?page_id=18. Accessed January 21, 2009.

- 4. Coalition for Evidence-Based Policy. Identifying and implementing educational practices supported by rigorous evidence: A user friendly guide. Washington, DC: U.S. Department of Education, 2003. Available at: http://coalition4evidence.org/wordpress/?page_id=18. Accessed January 21, 2009.

- 5. Baron J. Making policy work: the lesson from medicine. Educ Week. 2007;26(38):32–3.

- 6. Johnson MS, Latif DA. Medication error instruction in schools of pharmacy curricula: a descriptive study. Am J Pharm Educ. 2002;66(4):364–70.

- 7. Leape LL, Bates DW, Cullen DJ, et al. Systems analysis of adverse drug events. JAMA. 1995;274(1):35–43.

- 8. Institute of Medicine. To Err is Human: Building a Safer Health System. Washington, DC: National Academy Press; 1999.

- 9. Institute of Medicine. Preventing Medication Errors: Quality Chasm Series. Washington, DC: National Academy Press; 2006.

- 10. The Joint Commission. Comprehensive Accreditation Manual for Hospitals. Oakbrook Terrace, IL: Joint Commission on Accreditation of Healthcare Organizations; 2004.

- 11. ASHP. Standard definition of a medication error. Am J Hosp Pharm. 1982;39(2):321.

- 12. Bloom BS, editor. Taxonomy of Educational Goals. Handbook I: Cognitive Domain. New York, NY: McKay; 1956.

- 13. Embi PJ, Biddinger PW, Goldenhar LM, Schick LC, Kaya B, Held JD. Preferences regarding the computerized delivery of lecture content: a survey of medical students. American Medical Informatics Association 2006 Symposia Proceedings. [abstract]

- 14. Lee WR. Computer-based learning in medical education: a critical review. J Am Coll Radiol. 2006;3(10):793–8. doi: 10.1016/j.jacr.2006.02.010.

- 15. Peeters MJ, Pinto SL. Assessing the impact of an educational program on decreasing prescribing errors at a university hospital. J Hosp Med. 2009;4(2):97–101. doi: 10.1002/jhm.387.

- 16. Shadish WR, Cook TD, Campbell DT. Experimental and quasi-experimental designs for generating causal inference. Boston, MA: Houghton Mifflin Company; 2002.

- 17. Larson DP, Butler AC, Roediger HL. Test-enhanced learning in medical education. Med Educ. 2008;42(10):959–66. doi: 10.1111/j.1365-2923.2008.03124.x.