Abstract

Objectives

To create a valid assessment tool to evaluate the readiness of pharmacy students for advanced pharmacy practice experiences (APPEs).

Design

The Triple Jump Examination (TJE) was tailored to the 4-year, 2-plus-2 curriculum of the College. It consisted of (1) a written, case-based, closed-book examination, (2) a written, case-based, open-book examination, and (3) an objective structured clinical examination (OSCE). The TJE was administered at the end of each of the first 4 academic semesters. Progression of students to APPEs was dependent on achieving a preset minimum cumulative (weighted average) score in the 4 consecutive TJE examinations.

Assessment

The predictive utility of the examination was demonstrated by a strong correlation between the cumulative TJE scores and the preceptor grades in the first year (P3) of APPEs (r = 0.60, p < 0.0001). Reliability of the TJE was shown by strong correlations among the 4 successive TJE examinations. A survey probing the usefulness of TJE indicated acceptance by both students and faculty members.

Conclusion

The TJE program is an effective tool for the assessment of pharmacy students' readiness for the experiential years. In addition, the TJE provides guidance for students to achieve preparedness for APPE.

Keywords: assessment, examination, predictive validity, objective structured clinical examination (OSCE), advanced pharmacy practice experience (APPE)

INTRODUCTION

The importance of assessing pharmacy students' progress toward desired outcomes is gaining increasing attention. Assessment of readiness to begin experiential education, including comprehensive, formative, and summative testing of ability-based learning, is also emphasized in the current Accreditation Council for Pharmacy Education (ACPE) guidelines.1 In US medical education, advancement to a higher level of learning depends on passing the United States Medical Licensing Examination (USMLE Step 1). Despite the apparent need in pharmacy education for a similar standardized examination to document readiness for experiential learning, currently there is no established national examination. Colleges and schools of pharmacy therefore are left to establish the criteria or develop their own test to determine readiness to progress to advanced pharmacy practice experiences (APPEs). To date, most schools have not implemented such a progress examination, as evidenced by a 2006 survey of US colleges and schools of pharmacy, which found that only 13 out of 68 used any high-stakes examination prior to APPEs.2

Progress examinations at different pharmacy schools have recently been reviewed by Plaza.3 To date, the most studied comprehensive pharmacy examination preceding experiential education is the “Milemarker” examination.4-6 The Milemarker is a cumulative, case-based examination used by the College of Pharmacy at the University of Houston. This high-stakes assessment is administered yearly at the end of the spring semester. While the Milemarker is innovative with regard to its scope and schedule, it has the traditional multiple-choice, closed-book format.

Several other examinations are administered by other colleges and schools before students begin APPEs; however, advancement is not dependent on passing them. A case-based “progress assessment” using an audience response system was considered useful by students and pharmacy practice residents.7 The Pharmacy Curriculum Outcomes Assessment (PCOA), a comprehensive multiple-choice examination administered by the National Association of Boards of Pharmacy, has been offered nationwide since 2007.8 None of the above assessments has a live patient interaction component, and no claim has been made regarding their predictive validity toward subsequent performance in APPEs or the North American Pharmacist Licensure Examination (NAPLEX).

In the spirit of the ACPE guidelines, the College of Pharmacy at Touro University - California (TUCOP) has sought to develop its own progress examination program to evaluate student readiness for APPEs. The TUCOP assessment includes 2 written examinations (closed-book and open-book format), as well as a live patient-interaction component in the form of an objective structured clinical examination (OSCE).9,10 The three-tiered evaluation is called the Triple Jump Examination (TJE), and is tailored to the curriculum of TUCOP. Briefly, TUCOP has a 4-year (2-plus-2) curriculum, with 2 years (P1-P2) of foundational studies and introductory pharmacy practice experiences (IPPEs), followed by 2 years (P3-P4) of advanced pharmacy practice experiences (APPEs). The P1 and P2 curriculum is horizontally integrated among 4 core course areas, called tracks: Biological Sciences (Track I), Pharmaceutical Sciences (Track II), Social, Behavioral and Administrative Sciences (Track III), and Clinical Sciences (Track IV). There is emphasis on case-based learning and small group discussions with active-learning exercises. Students write short essays, give presentations, and practice OSCEs in preparation for APPEs (as well as for the TJE). Because TUCOP students enter the experiential part of the program after only 2 years of professional education, it is especially important to evaluate their readiness for APPEs. The goal of TJE is to assess all competencies necessary for success in APPEs, including competencies that are ill-suited for assessment by traditional examinations and course grades. The TJE includes both formative and summative elements. The features of the TJE, including schedule and grading, are presented here in detail, along with evidence of the effectiveness, reliability, and predictive validity of this new assessment tool.

DESIGN

Format and Schedule of the Triple Jump Examination (TJE)

The TJE was administered at the end of each academic (P1-P2) semester. Each TJE had 3 components: a case-based closed-book written examination (in the morning), followed by an open-book written examination based on the same clinical case (in the afternoon), and an OSCE (on the following day). The formats of the written examinations were similar. Students received a clinical case, including patient presentation, complaints, signs, symptoms, and laboratory results. The tests included 1 to 3 questions from each track. Through the 4 consecutive semesters, the cases (as well as the relevant questions) were made increasingly complex, paralleling the increasing knowledge and experience of the students. The questions tested the knowledge and understanding of concepts from all previous semesters, thus emphasizing the cumulative nature of the assessment. The closed-book session was designed to evaluate the students' knowledge and ability to analyze a case. The open-book test was designed to evaluate research skills, problem solving, and the ability to organize and communicate acquired information. The open-book questions were more complex and demanding. These questions generally extended beyond the material taught in the classroom and were therefore not amenable to targeted learning. In order to mimic real-life scenarios of information gathering, the students were allowed to use notes, textbooks, and electronic resources including computers with Internet connections. (All students were required to have a laptop computer, and wireless Internet connection was available in the classrooms.) Students were graded on knowledge of the material (knowledge and comprehension), correct interpretation of the supplied data (analysis and synthesis), and written communication skills (organization and clarity).

The OSCE component of the TJE took place on the following day, and included scenarios designed to mimic patient consultations in a pharmacy (eg, a parent asking for a cough medicine recommendation for a child, or advice on receiving immunization against influenza). Clinical faculty members created the cases, and patient-actors (standardized patients9,10) were trained to portray the patients. Based on the “patient” presentation, the students were expected to ask relevant questions (eg, about allergies), recommend appropriate medication therapy, or refer the patient to a physician. Each student participated in 2 simulated one-on-one encounters with different patients, each presenting a different case. Students were graded on knowledge of the material relevant to the case (knowledge and comprehension), their behavior and interaction with the patient (professionalism), and their communication skills. The OSCE assessment for the first 2 semesters focused primarily on professionalism and communication skills in an effort to identify potential problems in conduct and demeanor that were hard to detect in traditional evaluations.

Grading the TJE: The Semester Grades

Performances in the above categories were graded on an ordinal integer scale of 1 to 4, defined as 1 = insufficient, 2 = approaching proficient, 3 = proficient, and 4 = outstanding. As an anchor-point on this scale, “proficient” was interpreted as a level that should be attained toward the end of APPE years. This same 4-level scale was applied throughout the TJE scoring system in order to increase uniformity and clarity of the results. The written answers were graded by faculty members. The students' performance in the OSCE was first evaluated by the standardized patient using an objective structured questionnaire, which typically required only yes/no markings (eg, “Did the student ask about allergies?”). The role of the standardized patient in the OSCE grading process has been discussed recently.10 The results were reviewed and finalized by clinical science faculty members. All grades were entered into a report card (for a sample, see http://209.209.34.25/webdocs/COP/TJE.htm).

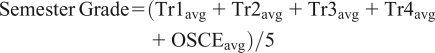

A semester grade for each semester-end examination (TJE1 to TJE4) was calculated from the track and OSCE grades as follows. In each track the students received 3 grades for the open-book part (for knowledge and comprehension, analysis and synthesis, and organization and clarity) and 3 more grades for the closed-book part (using the same criteria). From these 6 grades, the track average (Travg) was calculated for each of the 4 tracks (Tr1-Tr4). The OSCE average (OSCE avg) was calculated from 3 grades only (knowledge and comprehension, professionalism, and communication). The semester grade was calculated as the mean of these 5 averages.

$$\text{Semester grade} = \frac{Tr1_{avg} + Tr2_{avg} + Tr3_{avg} + Tr4_{avg} + OSCE_{avg}}{5}$$

In this mean value, the weight of an OSCE grade (eg, for professionalism) was twice as much as that of a single track grade (eg, for organization and clarity in the Track 1 open-book test).
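The averaging scheme described above can be illustrated with a minimal sketch. The function name, the data layout (a dictionary of 6 grades per track plus a list of 3 OSCE grades), and the example student are assumptions made for illustration; they are not taken from the College's actual report card.

```python
TRACKS = ("Tr1", "Tr2", "Tr3", "Tr4")  # hypothetical labels for Tracks I-IV


def semester_grade(track_grades, osce_grades):
    """Mean of the 4 track averages and the OSCE average.

    track_grades: dict mapping each track label to its 6 grades
                  (3 closed-book + 3 open-book), each on the 1-4 scale.
    osce_grades:  the 3 OSCE grades, each on the 1-4 scale.
    """
    all_grades = [g for t in TRACKS for g in track_grades[t]] + list(osce_grades)
    if any(g < 1 or g > 4 for g in all_grades):
        raise ValueError("grades must lie on the 1-4 scale")
    track_avgs = [sum(track_grades[t]) / len(track_grades[t]) for t in TRACKS]
    osce_avg = sum(osce_grades) / len(osce_grades)
    averages = track_avgs + [osce_avg]
    return sum(averages) / len(averages)


# Hypothetical student: "proficient" (3) in every track grade,
# "outstanding" (4) in every OSCE grade.
example_tracks = {t: [3, 3, 3, 3, 3, 3] for t in TRACKS}
example_osce = [4, 4, 4]
print(semester_grade(example_tracks, example_osce))  # 3.2
```

Because the OSCE average is built from 3 grades and each track average from 6, a 1-point change in a single OSCE grade moves the semester grade by 1/15, whereas a 1-point change in a single track grade moves it by 1/30.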

The “passing” semester grade has been defined as 2.5, ie, halfway between “proficient” and “approaching proficient.” The TJE semester grades were not included in the official transcript or the GPA. No retakes were offered to students in any of the first 3 semesters. However, all students were required to review their TJE results with their faculty advisor after each TJE, emphasizing the formative function of TJEs in the first 3 semesters. The 4 TJE track component scores allowed insight into a particular student's learning challenges. Low OSCE scores might reveal issues regarding the student's professional demeanor that needed addressing prior to participating in experiential programs. The faculty advisor could recommend a student for individual tutoring and remediation, based on either specific deficiencies, or weak general performance.

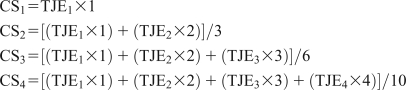

Weighted Cumulative Scores and the TJE Criteria of Progression

The summative goal of the TJE was to determine whether students were sufficiently prepared for experiential education. It was reflected in a calculated cumulative TJE score (CS), computed as an increasingly weighted average of the successive semester TJE grades. The objective of this calculation was to integrate the final (summative) assessment with the preceding series of (mostly) formative TJEs. Increasing the importance (weight) of successive TJE semester grades allowed slowly maturing students to improve without overly penalizing them for their early shortcomings. The cumulative scores (CSn) were calculated from the successive examinations (TJE1 to TJE4) using a 1:2:3:4 ratio as follows:

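$$CS_n = \frac{\sum_{i=1}^{n} i \cdot TJE_i}{\sum_{i=1}^{n} i}, \qquad n = 1, \ldots, 4$$

so that, for example, $CS_4 = (TJE_1 + 2\,TJE_2 + 3\,TJE_3 + 4\,TJE_4)/10$.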

Thus, at the conclusion of the TJE series the relative weight of the first examination in CS4 was only 10%, while that of the last TJE was much higher, 40%. (For example, a student with TJE1=2.8, TJE2=3.1, TJE3=3.4, and TJE4=3.7, would have a CS1=2.8, CS2=3.0, CS3=3.2, and a CS4=3.4.) After each TJE the students received a report card, showing all the component scores, semester grades, and cumulative scores (see sample report card in http://209.209.34.25/webdocs/COP/TJE.htm). The cumulative scores also served as diagnostic tools that helped the students as well as the faculty to assess overall progress and decide whether an intervention was needed. Thus the cumulative grading scheme was the mathematical expression of the deliberate, gradual shift in the character of the TJE over the first 2 years of education from a mostly formative evaluation toward a summative, high-stakes assessment.
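The 1:2:3:4 weighting can be checked with a short sketch that reproduces the worked example given in the text. The function name is an assumption introduced here for illustration.

```python
def cumulative_scores(semester_grades):
    """Return [CS1, ..., CSn], weighting the i-th semester grade by i."""
    scores = []
    weighted_sum = 0.0
    weight_total = 0
    for i, grade in enumerate(semester_grades, start=1):
        weighted_sum += i * grade   # running sum of i * TJE_i
        weight_total += i           # running sum of the weights 1..i
        scores.append(weighted_sum / weight_total)
    return scores


# Worked example from the text: TJE1=2.8, TJE2=3.1, TJE3=3.4, TJE4=3.7
example = cumulative_scores([2.8, 3.1, 3.4, 3.7])
print([round(cs, 1) for cs in example])  # [2.8, 3.0, 3.2, 3.4]
```

With 4 examinations the weights sum to 10, which is why the first TJE contributes 10% and the last contributes 40% of CS4.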

The minimum passing grade for CS4, which allowed the student to progress to the experiential part of the program, was set at 2.5, ie, halfway between “approaching proficient” and “proficient.” In addition, grades of 1 (“insufficient”) in any of the final OSCE components prohibited advancement to APPEs, since the OSCE was considered to model the situations that students would encounter in APPEs and must be prepared for. Students not meeting these passing criteria had the opportunity to sit for one more TJE prior to the start of APPEs. A prerequisite for the TJE retake was that the student must have passed all other courses. Progression was dependent on achieving an (unweighted) TJE grade equal to or higher than 2.5 on the retake and no scores of 1 on any OSCE component. Failure of the retake examination triggered a mandatory evaluation of the student's preparedness, led by the Assistant Dean of Student Affairs.
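The progression rule above can be expressed as a small decision sketch. The function and constant names are hypothetical, and the sketch assumes that a score exactly equal to 2.5 counts as passing, which matches the description of 2.5 as the minimum passing grade.

```python
PASSING_SCORE = 2.5   # minimum CS4 (or unweighted retake grade) stated in the text
INSUFFICIENT = 1      # lowest level on the 1-4 scale


def may_progress(cs4, final_osce_grades):
    """True if the student meets both criteria after the fourth TJE."""
    return cs4 >= PASSING_SCORE and INSUFFICIENT not in final_osce_grades


def passes_retake(passed_all_courses, retake_grade, retake_osce_grades):
    """True if the student was eligible for, and passed, the single retake."""
    if not passed_all_courses:  # prerequisite for sitting the retake
        return False
    return retake_grade >= PASSING_SCORE and INSUFFICIENT not in retake_osce_grades


# Hypothetical students
print(may_progress(2.9, [3, 3, 4]))         # True
print(may_progress(3.1, [1, 3, 4]))         # False: an OSCE grade of 1
print(passes_retake(True, 2.6, [2, 3, 3]))  # True
```

Note that a high cumulative score does not compensate for an “insufficient” OSCE component; the two criteria are applied jointly.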

ASSESSMENT

TJE Outcomes

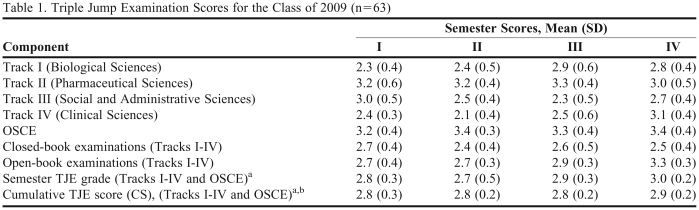

Sixty-three of 64 students of the Class of 2009 completed the four P1-P2 semesters, as well as four TJEs in sequence in the spring of 2007. Table 1 shows the average TJE scores for Tracks I-IV, OSCE, closed-book, and open-book tests across the 4 semesters. Also shown are the semester TJE grades and weighted cumulative scores (CS1-CS4). The range of mean grades for different tracks in the course of 4 semesters was 2.1 to 3.3; for OSCEs, the range was 3.2 to 3.4. Both the unweighted and weighted TJE averages were relatively consistent over the 4 semesters. The average of cumulative TJE scores by the end of the fourth semester (CS4), ie, at the summative point, was 2.9 ± 0.2. In terms of the grading definitions, this meant that the majority of students were either close to, or surpassed the “proficient” level.

Table 1.

Triple Jump Examination Scores for the Class of 2009 (n=63)

Abbreviations: TJE = Triple Jump Examination;

aSee text for definition of semester grade and cumulative score

bNumber of students with cumulative TJE score below 2.5 for semester I = 5 (7.9%); semester II = 3 (4.8%); semester III = 4 (6.3%); semester IV = 2 (3.2%).

At the end of the P2 year, 4 students failed to meet the TJE passing criteria. Two students had CS4 scores below the 2.5 cutoff, and 2 different students scored a 1 (insufficient) on one of their final OSCE components. Three of these 4 students passed on re-examination. The student who failed the re-examination and another student who did not complete the coursework were not allowed to progress to APPEs and were directed to repeat several courses.

Validity

Predictive validity was a central issue with the TJE program since one of its principal objectives was to determine readiness for the experiential part of the curriculum. Therefore, students' TJE performances (CS4) were compared with preceptor evaluations (APPE grades) during their first APPE year (P3). Sixty-one students completed 324 APPEs in community pharmacy practice, ambulatory care, acute care, and institutional pharmacy practice during their first APPE year. (The first community pharmacy APPE in the P3 year was technically part of the IPPE program. Nevertheless, this APPE was included in the calculations.) Five failing APPE grades (1.5%) were received by 4 students (out of 61; 6.6%). Three students received 1 failing APPE grade each, and a single student received 2 failing APPE evaluations. The cumulative TJE scores (CS4) of these 4 students were 2.8, 2.7, 2.7, and 2.5.

The students' average APPE grades were calculated as follows. The grade for each APPE was expressed as a percentage score (from 70% to 100%). Since failing grades were reported non-numerically as “unsatisfactory,” for the purpose of the following calculations they were set equal to 65%. For each student, the average APPE grade for the P3 year was calculated as the average of all (5 or 6) APPE grades. The average APPE grade for the class was 88.2% ± 4.8%, which in terms of letter grades corresponded to a high B.
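A minimal sketch of this averaging step follows. The function name and the example grades are hypothetical; only the substitution of 65% for an “unsatisfactory” grade comes from the text.

```python
UNSATISFACTORY_SCORE = 65.0  # value assigned to non-numeric failing grades


def average_appe_grade(grades):
    """Average a student's APPE grades (percentages or 'unsatisfactory')."""
    if not grades:
        raise ValueError("at least one APPE grade is required")
    numeric = [
        UNSATISFACTORY_SCORE if g == "unsatisfactory" else float(g)
        for g in grades
    ]
    return sum(numeric) / len(numeric)


# Hypothetical student with 5 APPEs, one of them failed
print(average_appe_grade([92, 85, "unsatisfactory", 88, 90]))  # 84.0
```

Setting failing grades to 65% places them just below the 70% floor of the passing range, so a single failure lowers the average without dominating it.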

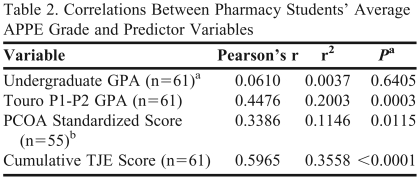

The correlations of APPE grades with TJE scores and other available variables were examined in detail (Table 2). There was a strong correlation between the students' cumulative TJE scores and P3 APPE grades (r = 0.60, p < 0.0001). As interpreted from the coefficient of determination (r2), TJE explained 36% of the variability in APPE performance. TJE was a much stronger predictor of APPE performance than any other variable examined (Table 2). The correlation of APPE grades with P1-P2 GPA was also strong (r = 0.45, p = 0.0003), accounting for 20% of the variance. A somewhat smaller but significant correlation (r = 0.34, p = 0.0115) was found with students' score on PCOA.8 Undergraduate GPA showed a weak and nonsignificant correlation with APPE grades (r = 0.06, p = 0.64).
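The relationship between a Pearson correlation coefficient and the share of variance explained can be sketched as follows. The paired data are invented purely for illustration and are not the study's data; the function names are likewise assumptions.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant data")
    return sxy / sqrt(sxx * syy)


def variance_explained(r):
    """Coefficient of determination for a simple (one-predictor) correlation."""
    return r ** 2


# Hypothetical CS4 scores and average APPE grades (%) for 6 students
cs4 = [2.5, 2.7, 2.9, 3.0, 3.2, 3.4]
appe = [80.0, 86.0, 84.0, 90.0, 89.0, 93.0]
print(round(pearson_r(cs4, appe), 2))

# Reported correlations from the text
print(round(variance_explained(0.60), 2))  # 0.36
print(round(variance_explained(0.45), 2))  # 0.2
```

Squaring the reported coefficients recovers the percentages quoted in the text: 0.60 corresponds to 36% and 0.45 to about 20% of the variance.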

Table 2.

Correlations Between Pharmacy Students' Average APPE Grade and Predictor Variables

Abbreviations: APPE = advanced pharmacy practice experience; GPA = grade point average; PCOA = Pharmacy Curriculum Outcomes Assessment; TJE = Triple Jump Examination.

aP value for r = 0 between first year average APPE grade and associated predictor variable

bPCOA, a comprehensive multiple-choice examination for pharmacy students, offered nationally since 2007,8 was recommended although not mandatory at Touro University College of Pharmacy.

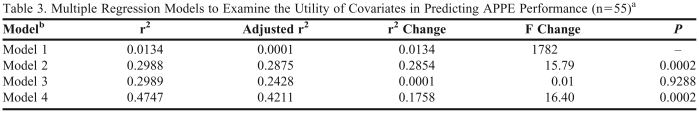

To further assess the impact of various independent variables, a set of hierarchical multivariate models was developed to predict student APPE performance (Table 3). In addition to the previously discussed covariates, age was included as some studies have suggested it may be a predictor of pharmacy student performance.11 Due to concerns of collinearity in the model, the variance inflation factor (VIF) for each predictor variable was calculated. None of the VIFs were greater than 2.98, indicating that collinearity among the independent variables was not a critical problem. After controlling for age and undergraduate GPA, the Touro P1-P2 GPA was a significant predictor of APPE performance (Model 2; Table 3). In contrast, the addition of the PCOA standardized score8 (Model 3) did not increase the predictive power of the model (p = 0.9288). However, after controlling for all other variables (age, undergraduate GPA, Touro GPA, and standardized PCOA score), TJE was a significant predictor of APPE performance (Model 4). In fact, the inclusion of TJE scores nearly doubled the predictive ability of the model, from 24% to 42%, as measured by adjusted r2 values (p = 0.0002).
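For readers less familiar with the two diagnostics used above, the standard textbook definitions can be sketched as follows. This is not the authors' analysis code; the function names are hypothetical and the input values in the example are illustrative only.

```python
def variance_inflation_factor(r_squared_j):
    """VIF for predictor j, given the r-squared obtained by regressing
    predictor j on all the other predictors in the model."""
    if not 0.0 <= r_squared_j < 1.0:
        raise ValueError("r_squared_j must be in [0, 1)")
    return 1.0 / (1.0 - r_squared_j)


def adjusted_r_squared(r_squared, n_obs, n_predictors):
    """Adjusted r-squared, penalizing for the number of predictors."""
    if n_obs - n_predictors - 1 <= 0:
        raise ValueError("too few observations for this many predictors")
    return 1.0 - (1.0 - r_squared) * (n_obs - 1) / (n_obs - n_predictors - 1)


# A predictor uncorrelated with the others has VIF = 1
print(variance_inflation_factor(0.0))  # 1.0

# Illustrative only: an unadjusted r-squared of 0.50 with n = 55 students
# and 5 predictors (the size of Model 4)
print(round(adjusted_r_squared(0.50, 55, 5), 3))  # 0.449
```

Under this definition, the largest reported VIF of 2.98 would correspond to roughly two-thirds of a predictor's variance being shared with the other predictors.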

Table 3.

Multiple Regression Models to Examine the Utility of Covariates in Predicting APPE Performance (n=55)a

Models include only the students who took the PCOA8 (Pharmacy Curriculum Outcomes Assessment), which was recommended although not mandatory at TUCOP for the Class of 2009.

bModel 1: Constant, Age, Undergrad GPA; Model 2: Constant, Age, Undergrad GPA, Touro P1-P2 GPA; Model 3: Constant, Age, Undergrad GPA, Touro P1-P2 GPA, PCOA Score; Model 4: Constant, Age, Undergrad GPA, Touro P1-P2 GPA, PCOA Score, TJE [Cumulative TJE score (CS4)]

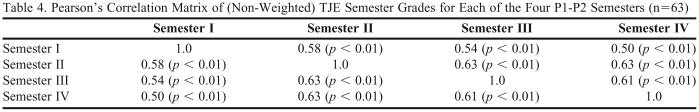

Other aspects of validity and reliability of the TJE program are briefly considered here, applying the terms as defined in a recent review.3 Content validity of the TJE was assured by involving all teaching faculty members in formulating TJE questions and creating cases that resembled scenarios relevant to clinical practice and discussed during the preceding semesters. The same faculty members performed the grading, thereby providing a measure of construct validity. Reliability was investigated by calculating the correlations among (nonweighted) TJE semester grades. The correlation matrix indicates a reasonable degree of reliability as shown by the relatively high Pearson's correlation coefficients across the 4 successive P1-P2 semesters (Table 4).

Table 4.

Pearson's Correlation Matrix of (Non-Weighted) TJE Semester Grades for Each of the Four P1-P2 Semesters (n=63)

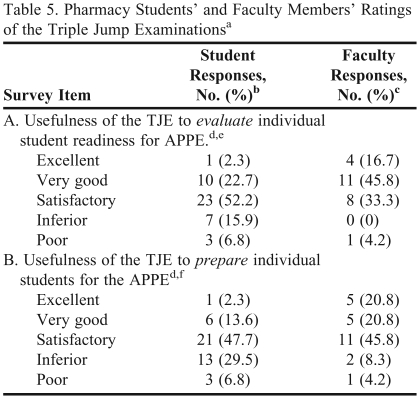

The perceptions of students and faculty members regarding the usefulness of TJE were surveyed using the Blackboard Academic Suite (Blackboard Inc, Washington, DC; see Table 5). The surveys were conducted at the conclusion of the first of 2 APPE years (P3) of the inaugural student cohort. At the time the survey was conducted, neither students nor faculty members were aware of the predictive validity of these examinations. The usefulness of TJE in evaluating the readiness for APPE (ie, the summative goal) was rated as satisfactory, very good, or excellent by 77% of the students, and 96% of the faculty members (p < 0.01; Fisher's Exact Test). The usefulness of TJE in preparing students for APPEs (ie, the formative value; Table 5B) was rated as satisfactory, very good, or excellent by 64% of the students and 87% of faculty members (p < 0.01; Fisher's Exact Test). Overall, faculty ratings were significantly higher for both items.

Table 5.

Pharmacy Students' and Faculty Members' Ratings of the Triple Jump Examinationsa

Abbreviations: TJE = Triple Jump Examinations; APPE = advanced pharmacy practice experience

Survey was administered at the conclusion of the first APPE year (end of P3 year).

bStudent response rate was 73% (44 out of 60; 1 student left the program during the P3 Year).

cFaculty response rate was 96% (24 out of 25 faculty members)

dRating scale: excellent = 5, very good = 4, satisfactory = 3, inferior = 2, poor = 1.

eMean (SD) response to survey item A: students = 3.0 (0.9); faculty = 3.7 (0.9); p < 0.01

fMean (SD) response to survey item B: students = 2.8 (0.9); faculty = 3.5 (1.0); p < 0.01

DISCUSSION

The Triple Jump Examination was designed in response to a call for outcomes assessment by ACPE,1 and in recognition of the increased need for assessment in higher education in general.12 Recent reviews of progress examinations3 and cumulative examinations13 at US colleges and schools of pharmacy, as well as the assessment of professional competence in healthcare professions14 are available in the literature. In comparison, the TJE series at TUCOP is one of the most comprehensive assessments used in pharmacy education. The main distinguishing features of TJE are the wide range of competencies tested, the longitudinal dynamics of the program, and TJE's demonstrated ability to predict students' subsequent performance in APPEs.

An important aspect of any assessment is whether it evaluates the desired educational outcomes and competencies.15 Most traditional examinations are limited to probing the knowledgebase using multiple-choice tests. In contrast, the TJE assesses a wide range of competencies using a variety of testing methods: case-based closed-book and open-book tests, and patient care skills examination. The TJE program conforms to the current, broad guidelines developed by CAPE16 and ACPE.1 A more specific list of competencies was compiled in the 1998 version of the CAPE Educational Outcomes.17 Of these, the TJE addresses most of the general ability-based outcomes, notably those related to thinking, communication, ethical decision making, social awareness and interaction, and self-learning abilities. Several of the professional practice-based outcomes are also assessed, including most of those listed under providing pharmaceutical care (gather and organize information, interpret and evaluate pharmaceutical data, document pharmaceutical care activity, and display the attitudes required to render pharmaceutical care).

Longitudinal assessment across all four P1-P2 semesters is an important feature of TJE. Each semester's TJE provides a stage-appropriate appraisal of student development. The increasing complexity of TJEs reflects how learning is progressing from lower levels (knowledge and comprehension) to higher levels (application, analysis, and synthesis).18,19 The scheme of weighted average scores emphasizes the cumulative character of the TJE series. The first 2 TJEs have relatively low weights (10% and 20%) in the final score (CS4). Thus, both students and faculty members get an early signal if improvement is necessary, and corrective measures can be implemented in a timely manner. On the other hand, since students tend to discount no-stakes examinations,4 the inclusion of early TJE results into the final cumulative score (CS4) provides a powerful incentive for students to perform to the best of their ability in all 4 TJEs. Another implication of the increasing weight of successive TJEs is that the character of the examinations is gradually shifting from a mostly formative to an essentially summative evaluation. At its conclusion, the series of four TJEs adds up to a cumulative high-stakes evaluation of their readiness for the experiential component of the PharmD program.

In the series of TJE examinations presented here, the class average was 2.9 at the end of the P2 year. The majority of students were either close to, or surpassed the “proficient” level. Predicting poor or failing performance in clinical internships is difficult.20,21 A related problem is the setting of the passing grade, which is somewhat arbitrary in any untested instrument. Nevertheless, in the present assessment, the passing cumulative TJE score of 2.5 turned out to be a realistic cut-off point. This is supported by the finding that failures occurred in only 5 (1.5%) of 324 APPEs. Furthermore, out of the 2 students who embarked on the APPEs after barely meeting the minimum TJE requirement (CS4=2.5), 1 was unsuccessful and failed 2 APPEs, while the other showed satisfactory performance. Setting the bar markedly higher or lower probably would have resulted in poorer promotion decisions.

Predictive validity toward performance in subsequent stage(s) of education is a good indicator of an assessment's effectiveness. There has been considerable interest in the predictive utility of preadmission and preclinical tests and other variables with regard to success in medical and pharmacy education. Several of those studies bear relevance to TJE's predictive value for APPEs and to our methodology. In the medical field, USMLE Step 1 results and the composite clinical evaluation score (CES) given by preceptors at the end of family medicine APPEs in the third year showed only a weak correlation (r2 = 0.03, p < 0.006).22 Data commonly collected before and during medical school had only modest predictive ability of competency during internships.21 In pharmacy education, McCall and coworkers reported that from among various preadmission indicators (age, Pharmacy College Admission Test (PCAT) scores, prepharmacy GPA, and California Critical Thinking Skills Test), the strongest predictor of NAPLEX results was the composite PCAT score (r = 0.40).11 Using stepwise regression models, Lobb and coworkers reported that prepharmacy math/science GPA combined with PCAT score strongly correlated with a student's first-year academic performance (adjusted r2 = 0.34), predictably showing similarity in consecutive academic achievements.23 Other, nontraditional test scores did not improve the predictive utility of the analysis. Relatively little attention has been paid to the predictors of performance of pharmacy students in APPEs (clerkship or clinical APPEs). The now defunct Basic Pharmaceutical Sciences Examination (BPSE) showed no correlation with performance in clinical coursework.24 Low achievement in timed case-based tasks has been shown to be a better indicator of poor performance in APPEs than lecture-based examination scores, a finding that is similar to our experience.20

We found that the TJE was a robust predictor of APPE performance, explaining 36% of variability. The correlation of preceptor grades (during the first experiential year) with TJE scores was considerably stronger than their correlation with students' GPA in the first 2 years in pharmacy school. Multivariate linear regression analysis showed that the addition of TJE scores to other available predictors nearly doubled the ability to predict the students' APPE performance (from 24% to 42%, near the high end of the range seen in educational literature). While the quantitative results of this study support the effectiveness of the TJE program, so far the findings are limited to a single cohort of approximately 60 students.

Beyond the assessment of student preparedness (summative function), the implementation of TJE had several other beneficial effects. Students were made aware of the need for multiple skills required in APPEs and professional practice, and were supported in developing those proficiencies (formative function). The opinion survey indicated acceptance of the TJE by the majority of students (Table 5). As for the faculty, the creation and evaluation of the TJE compelled the teachers to regularly reflect on curricular goals and outcomes. Involvement of the whole faculty in the challenge of building an assessment system from the ground up fostered interdepartmental cooperation and collegiality, and prevented the overburdening of individual faculty members.25 Accordingly, in the survey (Table 5) the faculty members rated the TJE program relatively high even before knowing the predictive validity of the examination.

The examination program presented here, or elements of it, should be adaptable for use by most colleges of pharmacy or other healthcare professions as a useful complement to traditional multiple-choice examinations.

SUMMARY

The current ACPE guidelines emphasize the need for comprehensive, formative, and summative testing of ability-based learning. The Triple Jump Examination (TJE) program serves these aims during the P1-P2 years. The strong correlation of TJE scores with preceptor grades in the first APPE year and the results of the hierarchical multivariate model together demonstrate the efficacy of this new program. The results support the use of TJE as a separate and valid tool for the assessment of readiness of pharmacy students for APPEs.

ACKNOWLEDGEMENT

The authors wish to thank Ruth Nemire, PharmD, PhD, for her support and review of this manuscript.

REFERENCES

- 1. Accreditation Council for Pharmacy Education. Accreditation Standards and Guidelines for the Professional Program in Pharmacy Leading to the Doctor of Pharmacy Degree. The Accreditation Council for Pharmacy Education Inc. Available at: http://www.acpe-accredit.org/pdf/ACPE_Revised_PharmD_Standards_Adopted_Jan152006.pdf. Accessed May 8, 2009.

- 2. Kirschenbaum HL, Brown ME, Kalis MM. Programmatic curricular outcomes assessment at colleges and schools of pharmacy in the United States and Puerto Rico. Am J Pharm Educ. 2006;70(1):Article 8. doi: 10.5688/aj700108.

- 3. Plaza CM. Progress examinations in pharmacy education. Am J Pharm Educ. 2007;71(4):Article 66. doi: 10.5688/aj710466.

- 4. Sansgiry SS, Nadkarni A, Lemke T. Perceptions of PharmD students towards a cumulative examination: the Milemarker process. Am J Pharm Educ. 2004;68(4):Article 93.

- 5. Sansgiry SS, Chanda S, Lemke T, Szilagyi J. Effect of incentives on student performance on Milemarker examinations. Am J Pharm Educ. 2006;70(5):Article 103. doi: 10.5688/aj7005103.

- 6. Szilagyi JE. Curricular progress assessments: the Milemarker. Am J Pharm Educ. 2008;72(5):Article 101. doi: 10.5688/aj7205101.

- 7. Kelley KA, Beatty SJ, Legg JE, McAuley JW. A progress assessment to evaluate pharmacy students' knowledge prior to beginning advanced pharmacy practice experiences. Am J Pharm Educ. 2008;72(4):Article 88. doi: 10.5688/aj720488.

- 8. National Association of Boards of Pharmacy (NABP). Pharmacy Curriculum Outcomes Assessment (PCOA) examination. Described at: http://www.nabp.net/ under Assessment Programs, PCOA. Accessed May 8, 2009.

- 9. Barrows HS. An overview of the uses of standardized patients for teaching and evaluating clinical skills. Acad Med. 1993;68(6):443–51. doi: 10.1097/00001888-199306000-00002.

- 10. Austin Z, Gregory P, Tabak D. Simulated patients vs. standardized patients in objective structured clinical examinations. Am J Pharm Educ. 2006;70(5):Article 119. doi: 10.5688/aj7005119.

- 11. McCall KL, MacLaughlin EJ, Fike DS, Ruiz B. Preadmission predictors of PharmD graduates' performance on the NAPLEX. Am J Pharm Educ. 2007;71(1):Article 5. doi: 10.5688/aj710105.

- 12. Anderson HM, Anaya G, Bird E, Moore DL. A review of educational assessment. Am J Pharm Educ. 2005;69(1):Article 12.

- 13. Ryan GJ, Nykamp D. Use of cumulative examinations at U.S. schools of pharmacy. Am J Pharm Educ. 2000;64(4):409–12.

- 14. Epstein RM, Hundert EM. Defining and assessing professional competence. JAMA. 2002;287(2):226–35. doi: 10.1001/jama.287.2.226.

- 15. Smith SR, Dollase RH, Boss JA. Assessing students' performances in a competency-based curriculum. Acad Med. 2003;78(1):97–107. doi: 10.1097/00001888-200301000-00019.

- 16. American Association of Colleges of Pharmacy, Center for the Advancement of Pharmaceutical Education (CAPE), Advisory Panel on Educational Outcomes. Educational outcomes 2004. Available at: http://www.aacp.org/resources/education/Documents/CAPE2004.pdf. Accessed September 8, 2009.

- 17. American Association of Colleges of Pharmacy, Center for the Advancement of Pharmaceutical Education (CAPE), Advisory Panel on Educational Outcomes. Educational outcomes, revised version 1998. Available at: http://www.aacp.org/resources/education/Documents/CAPE1994.pdf. Accessed September 8, 2009.

- 18. Bloom BS, Krathwohl DR. Taxonomy of educational objectives: the classification of educational goals, by a committee of college and university examiners. Handbook I: Cognitive Domain. New York: Longmans, Green; 1956.

- 19. Miller GE. The assessment of clinical skills/competence/performance. Acad Med. 1990;65(9 Suppl):S63–76. doi: 10.1097/00001888-199009000-00045.

- 20. Culbertson VL. Pharmaceutical care plan examinations to identify students at risk for poor performance in advanced pharmacy practice experiences. Am J Pharm Educ. 2008;72(5):Article 111. doi: 10.5688/aj7205111.

- 21. Greenburg DL, Durning SJ, Cohen DL, Cruess D, Jackson JL. Identifying medical students likely to exhibit poor professionalism and knowledge during internship. J Gen Intern Med. 2007;22(12):1711–7. doi: 10.1007/s11606-007-0405-z.

- 22. Myles T, Galvez-Myles R. USMLE Step 1 and 2 scores correlate with family medicine clinical and examination scores. Fam Med. 2003;35(7):510–3.

- 23. Lobb WL, Wilkin NE, McCaffrey DJ, Wilson MC, Bentley JP. The predictive utility of nontraditional test scores for first-year pharmacy student academic performance. Am J Pharm Educ. 2006;70(6):Article 128. doi: 10.5688/aj7006128.

- 24. Fassett WE, Campbell WH. Basic Pharmaceutical Science Examination as a predictor of student performance during clinical training. Am J Pharm Educ. 1984;48:239–42.

- 25. Garavalia LS. Selecting appropriate assessment methods: asking the right questions. Am J Pharm Educ. 2002;66(2):108–12.