Abstract

Faculty members' contributions to research and scholarship are measured by a variety of indices. Assessment also has become an integral part of the Accreditation Council for Pharmacy Education's accreditation process for professional programs. This review describes some of the newer indices available for faculty scholarship assessment. Recently described metrics include the h-index, m-quotient, g-index, h(2) index, a-index, m-index, r-index, ar index, and the creativity index. Of the newer scholarship metrics available, the h-index and m-quotient will likely have the most widespread application in the near future. However, there is no substitute for thoughtful peer review by experienced academicians as the primary method of research and scholarship assessment.

Keywords: research, literature, scholarship, assessment, evaluation

INTRODUCTION

Strategic planning and assessment are essential elements of academic pharmacy. Measuring outcome parameters in a strategic plan can help determine how well goals are being met. The Accreditation Council for Pharmacy Education (ACPE) expanded the nature of assessment in academic pharmacy with its 2007 guidelines.1 These changes recently prompted the American Association of Colleges of Pharmacy to devote its 11th annual Institute to evaluation, assessment, and outcomes.2 However, it is often difficult to know how to measure a particular area or goal, and which parameters measure change most accurately.3,4 For example, scholarship can be measured by the amount and type of grant support for research; the number of books, book chapters, or abstracts published; and, most classically, the number of journal articles published.5 It is this last parameter that has undergone a recent renaissance: new measures of the depth, breadth, and creativity of journal article publishing have been developed in recent years. The purpose of this paper is to describe these new scholarship metrics.

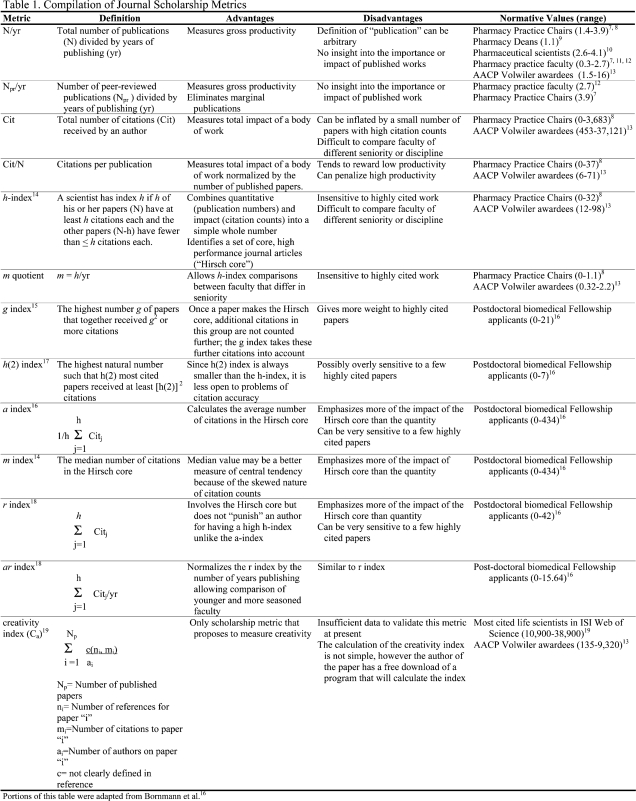

Journal article publishing, as a measure of faculty scholarship, has historically been tracked simply as the number of papers a faculty member has published. This evaluation has since been refined by distinguishing peer-reviewed from non-peer-reviewed publications, separating research publications from other types of scholarship (eg, review articles, case reports, letters to the editor), and counting the papers on which the faculty member was first or senior author. Citation analysis extended the evaluation of journal article publishing to the impact or usefulness of a faculty member's work, using as a surrogate marker the number of times a paper has been cited by other authors.6 When the Institute for Scientific Information (ISI) began compiling and publishing citation data, the number of times a faculty member's articles had been cited in the literature became part of scholarship assessment. In the last 5 years, a host of new parameters has been introduced to analyze and quantify a faculty member's impact and standing in a particular discipline. Table 1 lists these parameters and describes their calculation, advantages, disadvantages, and normative values in the disciplines for which data are available.7-19 Because there are too many of these parameters to describe fully here, we highlight several of the newer indices that hold promise as useful scholarship metrics.

Table 1.

NEW INDICES

h-index and m quotient

Created by Hirsch in 2005, the h-index combines the quantity of published papers with the impact reflected in their citation counts.14 The definition of the h-index is listed in Table 1. A faculty member with an h-index of 20 has published 20 papers that have each been cited at least 20 times, but does not have 21 papers that have each been cited at least 21 times. These “h” papers are considered a group of high-performance publications and have been named the “Hirsch core.”20 The h-index is easily calculated and is now available as a regular feature of the ISI Web of Science. It is a “balanced” metric: it is insensitive both to an extensive body of work that is largely uncited and to a small number of publications with unusually high citation counts. The h-index correlates well with peer assessment and has some predictive value for future academic success.21,22 One drawback of the h-index is that it favors scholars who have published consistently over many years,23,24 which makes it difficult to compare the h-index of a junior faculty member with that of a senior faculty member. The m quotient was introduced to normalize the h-index for career length by dividing it by the number of years since the faculty member's first publication (m = h/n).14 The m quotient therefore allows the h-indices of faculty members at very different career stages to be compared. The h-indices and m quotients of pharmacy practice chairs have recently been published.8 Data are also available for physics, the field in which these metrics were originally developed.16 These parameters are discipline specific, and comparing them among faculty members from different disciplines is generally not useful.23-28
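As an illustration of the two definitions above, the following is a minimal Python sketch that computes an h-index from a list of per-paper citation counts and divides it by the number of publishing years to obtain the m quotient. The citation counts and the 12-year publishing span are hypothetical, and the function names are illustrative only; real values would come from a citation database such as the ISI Web of Science.

```python
from typing import Sequence


def h_index(citations: Sequence[int]) -> int:
    """Largest h such that h papers have each been cited at least h times."""
    ranked = sorted(citations, reverse=True)  # most-cited paper first
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank  # the paper at this rank still has >= rank citations
        else:
            break  # counts only decrease from here, so h cannot grow further
    return h


def m_quotient(h: int, years_publishing: int) -> float:
    """h-index divided by the number of years since the first publication."""
    if years_publishing <= 0:
        raise ValueError("years_publishing must be positive")
    return h / years_publishing


# Hypothetical citation counts for one faculty member's papers.
cites = [45, 30, 22, 18, 9, 7, 6, 3, 1, 0]
h = h_index(cites)
print(h)                             # 6
print(round(m_quotient(h, 12), 2))   # 0.5
```

In this example the seventh-ranked paper has only 6 citations, so the Hirsch core stops at 6 papers; the heavily cited first paper and the uncited tail do not change the result, which is the "balanced" behavior described above.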

a-index

The a-index, a variation of the h-index, is the average number of citations received by the Hirsch core publications. It therefore assesses the impact of a faculty member's most productive core of publications. The a, m, r, and ar indices (see Table 1 for definitions; the m-index here is distinct from the m quotient) all measure the impact of the Hirsch core publications, each in a different way. Bornmann and colleagues16 found that peer assessments correlated better with indices that measure the quality of the productive core than with indices that measure its quantity (the h, g, and h(2) indices and the m quotient; Table 1).
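Because Table 1 is not reproduced here, the sketch below assumes the definitions commonly given in the bibliometrics literature: the a-index is the mean citation count of the Hirsch core, the m-index is its median, and the r-index is the square root of the total citations received by the core. The ar index additionally requires the age of each paper and is omitted. The citation counts are hypothetical and the function names are illustrative, not taken from any particular tool.

```python
import math
from statistics import median
from typing import List, Sequence


def hirsch_core(citations: Sequence[int]) -> List[int]:
    """Citation counts of the h most-cited papers (the Hirsch core)."""
    ranked = sorted(citations, reverse=True)
    h = 0
    # Grow the core while the next-ranked paper has at least (rank) citations.
    while h < len(ranked) and ranked[h] >= h + 1:
        h += 1
    return ranked[:h]


def a_index(citations: Sequence[int]) -> float:
    """Mean citations per Hirsch-core paper."""
    core = hirsch_core(citations)
    return sum(core) / len(core) if core else 0.0


def m_index(citations: Sequence[int]) -> float:
    """Median citations of the Hirsch core (not the m quotient)."""
    core = hirsch_core(citations)
    return float(median(core)) if core else 0.0


def r_index(citations: Sequence[int]) -> float:
    """Square root of the total citations received by the Hirsch core."""
    return math.sqrt(sum(hirsch_core(citations)))


# Hypothetical citation counts for one faculty member's papers.
cites = [45, 30, 22, 18, 9, 7, 6, 3, 1, 0]
print(hirsch_core(cites))         # [45, 30, 22, 18, 9, 7]
print(round(a_index(cites), 2))   # 21.83
print(m_index(cites))             # 20.0
print(round(r_index(cites), 2))   # 11.45
```

With these data the a-index (21.83) exceeds the median-based m-index (20.0) because the single most-cited paper pulls the mean upward; the median is less sensitive to such an outlier, which is one reason several core-quality variants exist.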

Creativity Index

The creativity index (Ca), recently developed by Soler, is the only metric that claims to measure a faculty member's creativity.19 It is based on the number of citations a paper receives and the number of references the paper cites, normalized by the number of authors of the paper. Soler has described the rationale as follows:

…imagine that two scientists, Alice and Bob, address independently an important and difficult problem in their field. Bob takes an interdisciplinary approach and discovers that a method developed in a different field just fits their need. Simultaneously, Alice faces the problem directly and reinvents the same method by herself (thus making less references in her publication). All other factors being equal, both papers will receive roughly the same number of citations, since they transmit the same knowledge to their field. But it may be argued that Alice's work was more creative in some sense, and that her skills might possibly (but not necessarily) be more valuable in a given selection process. Eventually, the usefulness of different merit indicators will depend on how well they correlate with real human made selections.19

Because the calculation of the creativity index is somewhat complicated, its everyday use for assessing departmental faculty members is not very practical. However, Soler provides a Web page with a downloadable program that calculates the index from an ISI Web of Science file; instructions for its use are given in his paper.19

DISCUSSION

The ISI has historically been the exclusive provider of citation analysis data, but other providers have emerged. Scopus (Elsevier B.V., New York, NY) and Google Scholar (Google, Inc, Mountain View, CA) have ended ISI's role as the only source of citation data. Because the 3 databases draw on different citation networks, the same search run on each will probably return 3 different results.29 This must be kept in mind when evaluating faculty citation counts obtained from different sources.

Citation analysis, as a technique for measuring impact, has limitations.30-34 Methodological issues such as misspelled author names, homographs (ie, scientists with the same name in different disciplines), inconsistent use of author initials, and author name changes are potential problems.30,34 Databases such as ISI, Scopus, and Google Scholar have limitations and differ in their journal coverage.33 Publication errors in source journals and errors in the ISI, Scopus, or Google Scholar databases can also confound results. Finally, self-citations, negative citations, and the absence of citations from emerging sources (eg, Web metrics) can further complicate results. Nevertheless, taken in context, citation analysis is a useful addition to the overall assessment of a faculty member's scholarly work.33,35 Because many of the metrics outlined in this paper use citation analysis in their calculation, the drawbacks of this method must be understood.

The new indices for measuring scholarship can provide unique insights into the evaluation of journal scholarship. However, no single parameter fully represents all aspects of a scholar's work, and each metric has strengths and weaknesses, as described in Table 1. Further, nothing replaces the thoughtful review of unbiased senior peers and colleagues in assessing scholarship.36,37 Peer review, although imperfect, is the gatekeeper of scholarly publishing and remains the foundation of the academic tenure and promotion system. Of the available new indices, the h-index and its derivative, the m quotient, appear to be finding the most acceptance among academic and research establishments. The h-index is a simple whole number that is easily calculated, correlates well with peer assessments, and has some predictive value for future success.21,38-40 In addition, it takes into account both quantitative (number of papers) and qualitative (citations received) aspects of scholarship. The original Hirsch paper describing the h-index had been cited 385 times as of January 23, 2009 (Google Scholar). Most of the newer indices do not yet have a sufficient track record to warrant routine calculation. An intriguing aspect of the new indices is the possibility of calculating departmental or college-wide parameters.41 For example, h-index data from a sufficient number of departments of a given type might serve as a marker for comparing those departments across a wide range of colleges of pharmacy. Such data would, of course, need to be normalized by the number of departmental faculty members and by type of college (eg, public, private, health-sciences based, non-health-sciences based), and caution should be exercised when comparing metrics across colleges with different missions and goals. The skewed nature of publication data should also be taken into account.
As a rough guide, approximately 20% of the faculty members in a given college or department typically account for 80% of its publications.8 A few prolific faculty members can therefore pull a mean well above what a typical member produces, so measures of central tendency should be interpreted with caution. Appropriately normalized data may eventually help in assessing the amount and quality of scholarship conducted at various colleges of pharmacy and in establishing institutional goals.
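The effect of this skew on departmental summaries can be illustrated with a short Python sketch. The publication counts below are hypothetical and are not drawn from reference 8, and `top_share` is an illustrative helper rather than an established bibliometric function; it reports the fraction of a department's publications produced by its most productive 20% of faculty and compares the mean with the median.

```python
from statistics import mean, median
from typing import Sequence


def top_share(counts: Sequence[int], fraction: float = 0.2) -> float:
    """Share of all publications produced by the most productive `fraction` of faculty."""
    ranked = sorted(counts, reverse=True)
    # Size of the top group, rounded to the nearest whole person (at least 1).
    k = max(1, round(fraction * len(ranked)))
    total = sum(ranked)
    return sum(ranked[:k]) / total if total else 0.0


# Hypothetical publication counts for a 10-member department.
pubs = [60, 40, 8, 6, 5, 4, 3, 2, 1, 1]
print(f"top 20% share: {top_share(pubs):.2f}")                 # top 20% share: 0.77
print(f"mean: {mean(pubs):.1f}, median: {median(pubs):.1f}")   # mean: 13.0, median: 4.5
```

Here 2 of 10 faculty members produce about 77% of the papers, and the mean (13.0) is nearly three times the median (4.5), so a department-level mean alone would overstate what a typical member publishes.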

CONCLUSION

While many new indices have interesting and unique attributes, none is a perfect metric for measuring scholarship. Nothing replaces thoughtful peer assessment of the work itself in judging the quality of faculty scholarship. However, the new metrics, combined with discipline-specific normative values, may help administrators and department chairs evaluate individual faculty members within the larger context of their scientific discipline. The same measures can also be applied to departments, research groups, or an entire college of pharmacy. To be more useful for these purposes, normative values for academic pharmacy would need to be generated.

REFERENCES

- 1. Accreditation Standards and Guidelines for the professional program in pharmacy leading to the Doctor of Pharmacy Degree. Adopted: January 15, 2006. Effective: July 1, 2007. Accreditation Council for Pharmacy Education, Chicago, IL 2006. http://www.acpe-accredit.org/pdf/ACPE_Revised_PharmD_Standards_Adopted_Jan152006.pdf Accessed April 24, 2009.

- 2. MacKinnon GE III. Evaluation, Assessment, and Outcomes in Pharmacy Education: The 2007 AACP Institute. Am J Pharm Educ. 2008;72(5):Article 96. doi: 10.5688/aj720596.

- 3. Lehmann S, Jackson AD, Lautrup B. Measures and mismeasures of scientific quality. Available at: http://arxiv.org/abs/physics/0512238 Accessed April 24, 2009.

- 4. Boyce EG. Finding and Using Readily Available Sources of Assessment Data. Am J Pharm Educ. 2008;72(5):Article 102. doi: 10.5688/aj7205102.

- 5. Leslie SW, Corcoran GB, MacKichan JJ, Undie AS, Vanderveen RP, Miller KW. Pharmacy scholarship reconsidered: The report of the 2003-2004 research and graduate affairs committee. Am J Pharm Educ. 2004;68(3):Article S6.

- 6. Garfield E. Citation indexes in sociological and historical research. American Documentation. 1963;14:289–91.

- 7. Jungnickel PW. Scholarly performance and related variables: A comparison of pharmacy practice faculty and departmental chairpersons. Am J Pharm Educ. 1997;61(1):34–44.

- 8. Thompson DF, Callen EC, Nahata MC. Publication Metrics and Record of Pharmacy Practice Chairs. Ann Pharmacother. 2009;43:268–75. doi: 10.1345/aph.1L400.

- 9. Thompson DF, Callen EC. Publication records among college of pharmacy deans. Ann Pharmacother. 2008;42(1):142–3. doi: 10.1345/aph.1K431.

- 10. Thompson DF, Harrison KE. Basic science pharmacy faculty publication patterns from research-intensive US Colleges, 1999-2003. Pharm Educ. 2005;5(2):83–6.

- 11. Thompson DF, Segars LW. Publication rates in US schools and colleges of pharmacy, 1976-1992. Pharmacotherapy. 1995;15(4):487–94.

- 12. Coleman CI, Schlesselman LS, Lao E, White CM. Numbers and impact of published scholarly works by pharmacy practice faculty members at accredited US colleges and schools of pharmacy (2001-2003). Am J Pharm Educ. 2007;71(3):Article 44. doi: 10.5688/aj710344.

- 13. Thompson DF, Callen EC. Bibliometric analysis of Volwiler awardees [abstract]. Meeting Abstracts. 110th Annual Meeting of the American Association of Colleges of Pharmacy, Boston, Massachusetts, July 18-22, 2009. Am J Pharm Educ. 2009;73(4):Article 39.

- 14. Hirsch JE. An index to quantify an individual's scientific research output. Proc Natl Acad Sci. 2005;102:16569–72. doi: 10.1073/pnas.0507655102.

- 15. Egghe L. Theory and practice of the g-index. Scientometrics. 2006;69(1):131–52.

- 16. Bornmann L, Mutz R, Daniel H-D. Are there better indices for evaluation purposes than the h index? A comparison of nine different variants of the h index using data from biomedicine. J Am Soc Info Sci Technol. 2008;59:830–7.

- 17. Jin B. h-index: An evaluation indicator proposed by scientists. Science Focus. 2006;1(1):8–9.

- 18. Jin B, Liang L, Rousseau R, Egghe L. The r and ar-indices: complementing the h-index. Chinese Sci Bull. 2007;52(6):855–63.

- 19. Soler JM. A rational indicator of scientific creativity. Available at: http://www.citebase.org/fulltext?format=application%2Fpdf&identifier=oai%3AarXiv.org%3Aphysics%2F0608006 Accessed April 24, 2009.

- 20. Burrell Q. On the h-index, the size of the Hirsch core and Jin's a-index. J Informetrics. 2007;1(2):170–7.

- 21. Hirsch JE. Does the h index have predictive power? Proc Natl Acad Sci. 2007;104:19193–8. doi: 10.1073/pnas.0707962104.

- 22. van Raan AFJ. Comparison of the Hirsch-index with standard bibliometric indicators and with peer judgement for 147 chemistry research groups. Scientometrics. 2006;67(3):491–502.

- 23. Wendl MC. h-index: However ranked, citations need context [letter]. Nature. 2007;449(7161):403. doi: 10.1038/449403b.

- 24. Kelly CD, Jennions MD. h-index: age and sex make it unreliable [letter]. Nature. 2007;449(7161):403. doi: 10.1038/449403c.

- 25. Bornmann L, Daniel H-D. Does the h-index for ranking of scientists really work? Scientometrics. 2005;65(3):391–2.

- 26. Oppenheim C. Using the h-index to rank influential British researchers in information science and librarianship. J Am Soc Info Sci Technol. 2007;58(3):297–301.

- 27. Imperial J, Rodriguez-Navarro A. Usefulness of Hirsch's h-index to evaluate scientific research in Spain. Scientometrics. 2007;71(2):271–82.

- 28. Iglesias JE, Pecharroman C. Scaling the h-index for different scientific ISI fields. Scientometrics. 2007;73(3):303–20.

- 29. Meho LI, Yang K. Impact of data sources on citation counts and rankings of LIS faculty: Web of Science versus Scopus and Google Scholar. J Am Soc Info Sci Technol. 2007;58(13):2105–25.

- 30. MacRoberts MH, MacRoberts BR. Problems of citation analysis: A critical review. J Am Soc Info Sci. 1989;40(5):342–9.

- 31. Seglen PO. Citation frequency and journal impact: Valid indicators of scientific quality? J Intern Med. 1991;229(2):109–11. doi: 10.1111/j.1365-2796.1991.tb00316.x.

- 32. Seglen PO. Citation rates and journal impact factors are not suitable for evaluation of research. Acta Orthop Scand. 1998;69(3):224–9. doi: 10.3109/17453679809000920.

- 33. Meho LI, Rogers Y. Citation counting, citation ranking, and h-index of human-computer interaction researchers: A comparison between Scopus and Web of Science. J Am Soc Info Sci Technol. 2008;59(11):1711–26.

- 34. Leydesdorff L. Caveats for the use of citation indicators in research and journal evaluations. J Am Soc Info Sci Technol. 2008;59(2):278–87.

- 35. Meho LI. The rise and rise of citation analysis. Physics World. 2007;20(1):32–6.

- 36. Kassirer JP, Campion EW. Peer review: Crude and understudied, but indispensable. JAMA. 1994;272(2):96–7. doi: 10.1001/jama.272.2.96.

- 37. Jefferson T, Wager E, Davidoff F. Measuring the quality of editorial peer review. JAMA. 2002;287(21):2786–9. doi: 10.1001/jama.287.21.2786.

- 38. Costas R, Bordons M. The h-index: Advantages, limitations, and its relation with other bibliometric indicators at the micro level. J Informetrics. 2007;1(3):193–203.

- 39. Vanclay JK. On the robustness of the h-index. J Am Soc Info Sci Technol. 2007;58(10):1547–50.

- 40. Bornmann L, Daniel H-D. Does the h-index for ranking of scientists really work? Scientometrics. 2005;65(3):391–2.

- 41. Arencibia-Jorge R, Barrios-Almaguer I, Fernandez-Hernandez S, Carvajal-Espino R. Applying successive h-indices in the institutional evaluation: A case study. J Am Soc Info Sci Technol. 2008;59(1):155–7.