Abstract

Background

Researchers and medical practitioners have long sought the ability to continuously and automatically monitor patients beyond the confines of a doctor's office. We describe a smart home monitoring and analysis platform that facilitates the automatic gathering of rich databases of behavioral information in a manner that is transparent to the patient. Collected information will be automatically or manually analyzed and reported to the caregivers and may be interpreted for behavioral modification in the patient.

Method

Our health platform consists of five technology layers. The architecture is designed to be flexible, extensible, and transparent, to support plug-and-play operation of new devices and components, and to provide remote monitoring and programming opportunities.

Results

The smart home-based health platform technologies have been tested in two physical smart environments. Data collected in these test beds are processed and analyzed by our activity recognition and chewing classification algorithms. All of these components have yielded accurate analyses for subjects in the smart environment test beds; for example, activity recognition reached 98% accuracy under three-fold cross-validation.

Conclusions

This work represents an important first step in the field of smart environment-based health monitoring and assistance. The architecture can be used to monitor the activity, diet, and exercise compliance of diabetes patients and evaluate the effects of alternative medicine and behavior regimens. We believe these technologies are essential for providing accessible, low-cost health assistance in an individual's own home and for providing the best possible quality of life for individuals with diabetes.

Keywords: activity recognition, assessment consoles, connected health, health monitoring, sensor data analysis, smart homes

Introduction

Medical researchers and practitioners have long sought the ability to continuously and automatically monitor diabetes patients. Additionally, the use of monitoring data to influence treatment dosage or regimen in real time is an important objective of behavior modification practice. These capabilities can help healthcare systems to overcome key obstacles to delivering acceptable quality of service at a reasonable unit cost per patient. A primary obstacle is patient noncompliance with respect to diet, exercise, and medication. For example, since the 1980s, the prevalence of overweight and obese people has increased at an alarming rate. Data1 show that 60% of all adults in Florida (the retirement capital of the world) are overweight or obese. Excess body weight contributes to diabetes and the resulting $312 billion annual cost to the U.S. economy.

In practice, implementing semi-automated, pervasive healthcare monitoring and delivery has proven to be a very difficult process. The reasons for this include, but are not limited to, economic capabilities, lifestyle choices, ethnic customs, information access, and physical limitations, as well as the generalized structure of our health care delivery system.2,3 While all persons with type 1 diabetes rely on daily insulin injections, most people with type 2 diabetes can control their diabetes by pursuing a healthy meal plan and exercise program, losing excess weight, and taking oral medication. Many people with diabetes also need to take medications to control their cholesterol and blood pressure.

Government, academia, and business professionals have responded to this situation by designing educational programs,4–9 personal monitoring devices such as glucose monitors and calorie counters, and technology that transmits patient health data to the care provider. Unfortunately, monitoring and connectivity devices are often applied in isolation, enforce rigid hardware requirements, and must be used, operated, and maintained by patients in a manner consistent with monitoring, diagnosis, or treatment requirements. Additionally, apart from self-reports to researchers, doctors, nurses, or caregivers, there is little or no knowledge of how patients behave in response to these monitoring devices, so there is no way to verify whether behavior modification is being implemented correctly. Commercializing such technologies will require a high level of interoperability, zero configuration (plug and play), transparency, flexibility, and extensibility.

A promising direction for subject or patient monitoring uses emerging technologies that connect sensors, monitoring personnel, and caregivers via smart spaces (also called intelligent environments). Several academic and industrial research projects have developed concepts and technology for smart homes with healthcare plugins to provide graphical feedback on behavioral patterns,10 to monitor residents' health status,11–14 to provide reminders of daily activities,15,16 and to assess cognitive abilities.17 In parallel, advances in the underlying hardware and software have enabled smart healthcare.18–20

In response to the preceding challenges, we developed a monitoring and analysis platform consisting of economically deployable connectivity technology and personal wearable devices. This will enable automatic gathering of rich databases of behavioral information in a manner transparent to the patient. This health data will be analyzed and reported to caregivers for diagnosis and treatment. The technology is interoperable (usable across many hardware and software platforms), self-integrating (able to insert itself into existing and future sensor suites or networks), transparent (to the subject, patient, and researcher for ease of applications development), and plug and play (for ease of installation and commercialization).

In this article, we introduce our health platform architecture and explain how information from smart homes, personal monitoring devices, and data analysis software can be combined to provide a comprehensive approach to behavioral monitoring.

Methods

We have developed approaches and technologies that support transparent self-integration of a very wide variety of devices to implement interoperability, remote monitoring, and remote programmability. This supports wide-area deployment in laboratory, clinical, and residential/occupational monitoring, diagnostic, and behavior modification applications. Our approaches also use machine learning technologies to automatically create models of resident behaviors, find interesting patterns in collected data, and generate inferences from these patterns.

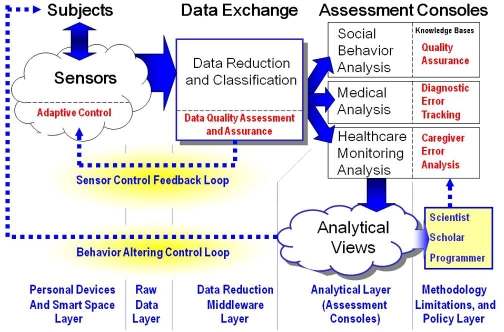

Our health platform architecture, shown in Figure 1, consists of five layers. First, the “personal devices and smart space layer” contains the set of available smart space or personal sensors. Second, the “raw data layer” accepts data streams generated by the sensors in Layer 1. These data are continuously streamed out of smart spaces into the third layer, “data reduction middleware layer,” which performs statistical analysis as well as data reduction and classification. For example, software at this layer performs error analysis to measure data quality and data fusion to combine multisensor outputs into an intelligible data stream.

Figure 1.

Health platform architecture.

Fourth, the “analytical layer” includes monitoring consoles and assessment consoles. These consoles help social and healthcare researchers and practitioners monitor their patients, perform domain-specific analyses, and report relevant information and recommendations concerning patients' health and behavior back to researchers, practitioners, and caregivers. Fifth, the “methodology limitations and policy layer” is a knowledge base that ensures data quality and compliance with stated requirements.
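To make the data flow between layers concrete, the following Python sketch chains one hypothetical function per layer. The `Reading` type, the mean-based fusion, the fixed alert limits, and the single `release_permitted` flag standing in for the policy knowledge base are all illustrative assumptions, not the platform's actual interfaces.

```python
import math
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class Reading:
    """One sample from a Layer 1 device (hypothetical format)."""
    sensor_id: str
    value: float


def raw_data_layer(readings: List[Reading]) -> List[Reading]:
    """Layer 2: accept the streams produced by Layer 1 devices unchanged."""
    return list(readings)


def data_reduction_layer(readings: List[Reading]) -> Dict[str, float]:
    """Layer 3: discard non-finite readings (a crude data-quality check) and
    reduce each sensor's stream to one summary value, its mean."""
    by_sensor: Dict[str, List[float]] = {}
    for r in readings:
        if math.isfinite(r.value):
            by_sensor.setdefault(r.sensor_id, []).append(r.value)
    return {sid: mean(vals) for sid, vals in by_sensor.items()}


def analytical_layer(summary: Dict[str, float], limits: Dict[str, float]) -> List[str]:
    """Layer 4: turn reduced data into console messages for caregivers."""
    return [f"{sid} above limit ({val:.1f} > {limits[sid]:.1f})"
            for sid, val in sorted(summary.items())
            if sid in limits and val > limits[sid]]


def policy_layer(messages: List[str], release_permitted: bool) -> List[str]:
    """Layer 5: release reports only when the stated requirements are met."""
    return messages if release_permitted else []


# Layer 1 is simulated by a list of readings; the NaN stands for a faulty sample.
readings = [Reading("pulse", 88.0), Reading("pulse", 112.0), Reading("temp", float("nan"))]
report = policy_layer(
    analytical_layer(data_reduction_layer(raw_data_layer(readings)), {"pulse": 95.0}),
    release_permitted=True,
)
print(report)  # ['pulse above limit (100.0 > 95.0)']
```

Keeping each layer a separate function mirrors the architecture's separation of concerns: any one stage can be replaced without touching the others.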

The key distinguishing features of our health monitoring architecture are as follows:

Flexibility and Extensibility: Our approach is sufficiently flexible that heterogeneous monitoring devices (e.g., cameras, spectral and thermal sensors, location sensors, and physiological monitors) and their supporting technologies need to be integrated only once and can then be deployed anywhere without significant additional effort. This directly facilitates system extensibility and maintainability, a feature not found in previous technologies.21,22

Interoperability and Transparency: Our approach facilitates transparent self-integration, while overcoming legacy system limitations usually found in hospital, clinical, and laboratory monitoring, diagnostic, and information systems.21,22

Self-Integration: Our technology allows devices to transparently self-integrate into back-end systems (including legacy systems) without the help of system integrators or engineers,23,24 thus facilitating plug-and-play expansion over a wide variety of standards and protocols.

Remote Monitoring and Programming: Our technology also supports remote programming and status monitoring of all devices, sensors, or communication networks in the system to facilitate interactive monitoring and configuration in response to study, clinical, or regulatory constraints.21,22

Model Generation and Analysis: Our machine learning algorithms analyze collected data on each patient to build a model of patient diet, exercise, activity, and health profile. Other programs can use this model to classify the data into predetermined categories (e.g., compliant or noncompliant and health risk or healthy), predict upcoming events, and detect trends in data values.25,26
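As a rough illustration of the classification and trend-detection tasks above, the sketch below labels daily exercise minutes as compliant or noncompliant against a target and estimates a trend with an ordinary least-squares slope. This is a deliberately simple stand-in chosen for clarity; it is not the machine learning algorithms cited above,25,26 and the target value and data are hypothetical.

```python
from typing import List, Sequence


def trend_slope(values: Sequence[float]) -> float:
    """Ordinary least-squares slope of values against day index 0..n-1."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two values to estimate a trend")
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    num = sum((i - x_mean) * (y - y_mean) for i, y in enumerate(values))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den


def classify_compliance(daily_minutes: Sequence[float], target: float) -> List[str]:
    """Label each day against a caregiver-set exercise target."""
    return ["compliant" if m >= target else "noncompliant" for m in daily_minutes]


exercise_minutes = [30, 25, 20, 15, 10]  # illustrative values, not study data
print(trend_slope(exercise_minutes))  # -5.0 (minutes lost per day)
print(classify_compliance(exercise_minutes, 20))
# ['compliant', 'compliant', 'compliant', 'noncompliant', 'noncompliant']
```

A negative slope flags a declining trend even while individual days still meet the target, which is the kind of early warning the model generation step is meant to surface.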

In the following sections, we detail each layer of our architecture and illustrate how it enables behavioral health monitoring and facilitates behavioral alteration.

Personal Devices and Smart Spaces Layer

Layer 1 of our architecture contains physical components that capture data related to the health of an individual. These components may include commercially available wearable sensors or sensor-rich smart spaces. Many currently available wearable sensors are noninvasive and worn next to the skin, such as an Actigraph watch, which estimates energy expenditure using an accelerometer, and a wireless pulse monitor. Other sensors that can be woven into clothing are under development,27 allowing any number of sensors to be worn without additional burden on the patient.

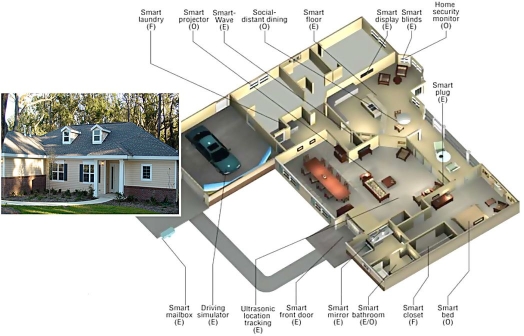

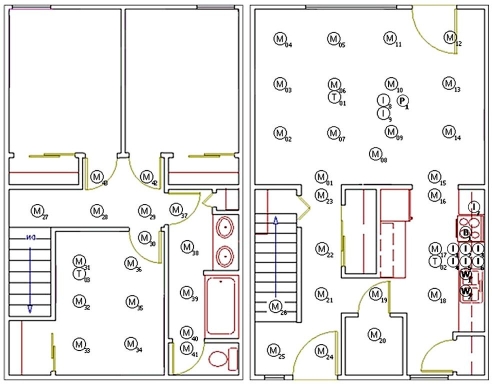

As an example, we have equipped two physical smart space test beds for our health platform. The first is the Gator Tech smart house (GTSH, see Figure 2),21,22 a 2500 sq ft house located in the Oak Hammock retirement community. The second is the CASAS smart apartment on the Washington State University campus (see Figure 3).28 Both test beds share common features, including sensors for motion, light, temperature, humidity, and door usage, and power-line controllers that automate control of lights and devices. These coordinated facilities are two of very few research facilities in the United States where human subjects take part in leading-edge healthcare research by living in the smart space for varying periods of time.

Figure 2.

The Gator Tech smart house.

Figure 3.

The CASAS apartment. Circles indicate the positions of motion (M), temperature (T), and item (I) sensors throughout the environment.

Raw Data Layer

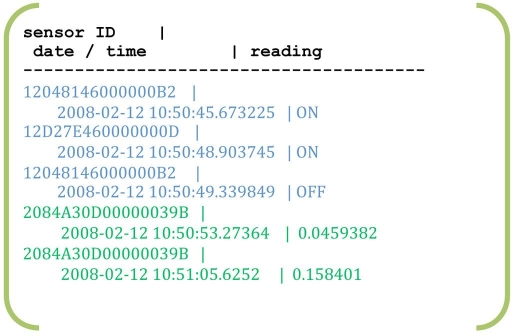

The amount of data generated by wearable devices and smart spaces is enormous, amounting to thousands of readings per day. Figure 4 shows a small sample of data captured in the CASAS smart apartment. The raw data layer is responsible for capturing raw data in a form that is quickly accessible for manual interpretation or automatic analysis. Pushing the raw data to the software components that process it, however, is the responsibility of the middleware layer, which we describe next.

Figure 4.

Sensor data generated while the resident was washing hands, including motion ON/OFF readings and water flow amounts.
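A raw data layer typically has to turn text logs like the one in Figure 4 into typed records. The sketch below assumes a whitespace-separated layout of date, time, sensor ID, and reading; that layout, the sensor IDs (`M17`, `AD1-B`), and the sample values are assumptions made for illustration rather than a specification of the CASAS format.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, List, Union


@dataclass
class SensorEvent:
    timestamp: datetime
    sensor_id: str
    value: Union[str, float]  # "ON"/"OFF" for motion, a number for flow meters


def parse_events(lines: Iterable[str]) -> List[SensorEvent]:
    """Parse 'date time sensor value' lines into typed events."""
    events: List[SensorEvent] = []
    for line in lines:
        parts = line.split()
        if len(parts) != 4:
            continue  # skip blank or malformed lines instead of failing
        date_str, time_str, sensor_id, raw = parts
        ts = datetime.fromisoformat(f"{date_str} {time_str}")
        try:
            value: Union[str, float] = float(raw)
        except ValueError:
            value = raw  # categorical reading such as ON/OFF
        events.append(SensorEvent(ts, sensor_id, value))
    return events


# Hypothetical log lines in the assumed layout.
sample = [
    "2008-02-27 12:43:27.416392 M17 ON",
    "2008-02-27 12:43:29.082231 AD1-B 0.0425",
    "2008-02-27 12:43:33.500000 M17 OFF",
]
events = parse_events(sample)
print([(e.sensor_id, e.value) for e in events])
# [('M17', 'ON'), ('AD1-B', 0.0425), ('M17', 'OFF')]
```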

Data Reduction Middleware Layer

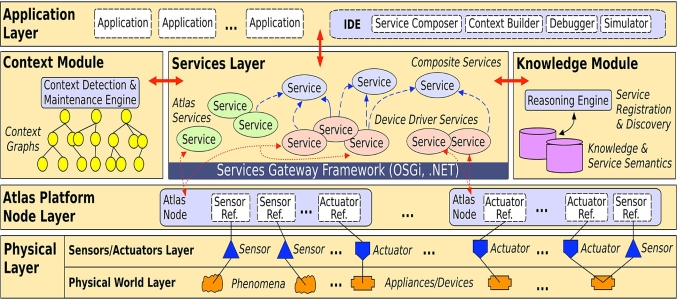

In order to achieve our goal of creating a smart home-based health platform that is flexible, extensible, transparent, and interoperable, we treat physical sensors, physical actuators, and software components as software services. We achieve this with our Atlas sensor platform (shown in Figure 5).

Figure 5.

The Atlas middleware.

Atlas contains hardware and software adaptors, which abstract devices, sensors, and networks into software entities that can be readily manipulated by programmers without additional effort by systems integrators or hardware engineers.29 Atlas supports a plug-and-play approach. When powered on, a device such as a sensor or controller automatically registers itself, allowing programmers to access it in the same manner as a software service.
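The plug-and-play behavior described above can be illustrated with a minimal service registry in which a device adaptor registers itself when powered on and applications then call it like any other service. `ServiceRegistry`, `TemperatureSensorAdaptor`, and the service name are hypothetical; this is not the Atlas API, only a sketch of the pattern under those assumptions.

```python
from typing import Callable, Dict, List


class ServiceRegistry:
    """Hypothetical registry: devices announce themselves on power-up and are
    then looked up and invoked like ordinary software services."""

    def __init__(self) -> None:
        self._services: Dict[str, Callable[[], float]] = {}
        self._listeners: List[Callable[[str], None]] = []

    def register(self, name: str, read: Callable[[], float]) -> None:
        self._services[name] = read
        for notify in self._listeners:
            notify(name)  # tell interested applications a new device appeared

    def unregister(self, name: str) -> None:
        self._services.pop(name, None)

    def on_new_service(self, callback: Callable[[str], None]) -> None:
        self._listeners.append(callback)

    def call(self, name: str) -> float:
        return self._services[name]()


class TemperatureSensorAdaptor:
    """Stand-in for a hardware adaptor that exposes a device as a service."""

    def __init__(self, registry: ServiceRegistry, name: str) -> None:
        self.registry = registry
        self.name = name

    def power_on(self) -> None:
        self.registry.register(self.name, self.read)  # self-registration

    def read(self) -> float:
        return 21.5  # a real adaptor would query the device here


registry = ServiceRegistry()
seen: List[str] = []
registry.on_new_service(seen.append)
TemperatureSensorAdaptor(registry, "kitchen/temperature").power_on()
print(seen, registry.call("kitchen/temperature"))  # ['kitchen/temperature'] 21.5
```

The application code never touches device details: it only learns a service name and calls it, which is the property that lets programmers treat sensors as software.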

Once selected, the devices are adapted via Atlas into our service-oriented device architecture.30 Atlas then enables these devices to connect to a smart space through wireless local connectivity, which makes the devices securely accessible from remote internet sites as software services. This conversion from devices (hardware) to services (software) is a major enabler and a unique capability for the creation and programming of our health assessment consoles. In addition, mobile phones can be integrated into the system and used as a channel to deliver health data and recommendations to the subjects or patients.

Analytical Layer

Care providers usually do not have the time or expertise to interpret the large volumes of raw data generated by smart spaces. The analytical layer of our health platform therefore provides configurable, user-friendly consoles that present data in an understandable format. For example, one graphical tool shows the energy expended during each labeled activity, along with totals summarized over the past day, week, and month. Such a display gives patients real-time feedback on their behavioral habits and on the effects of their lifestyle choices and changes.
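A console like the energy display described above mostly needs a windowed aggregation. The sketch below assumes per-activity energy estimates are already available as `(day, activity, kcal)` records, a hypothetical layout, and approximates day, week, and month as trailing windows of 1, 7, and 30 days.

```python
from collections import defaultdict
from datetime import date, timedelta
from typing import Dict, List, Tuple

# (day, activity label, estimated kcal): a hypothetical record layout
Record = Tuple[date, str, float]


def energy_by_activity(records: List[Record], today: date, days: int) -> Dict[str, float]:
    """Sum energy per activity over the trailing window [today - days + 1, today]."""
    start = today - timedelta(days=days - 1)
    totals: Dict[str, float] = defaultdict(float)
    for day, activity, kcal in records:
        if start <= day <= today:
            totals[activity] += kcal
    return dict(totals)


def console_summary(records: List[Record], today: date) -> Dict[str, Dict[str, float]]:
    """Trailing windows of 1, 7 and 30 days approximate day, week, and month."""
    return {label: energy_by_activity(records, today, n)
            for label, n in (("day", 1), ("week", 7), ("month", 30))}


today = date(2008, 6, 19)
records = [  # illustrative values only
    (today, "cooking", 60.0),
    (today, "walking", 150.0),
    (today - timedelta(days=3), "walking", 120.0),
    (today - timedelta(days=20), "walking", 200.0),
]
summary = console_summary(records, today)
print(summary["week"])  # {'cooking': 60.0, 'walking': 270.0}
```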

The consoles also allow caregivers to remotely interact with the patients and their environments. For example, caregivers might want to change parameters in the patient's profile, advise the patient, or alter the patient's prescribed behavior. Such closed-loop feedback is a powerful tool with which physicians and caregivers can study the impact of different regimens.

One concern that arises in collecting and analyzing these data is privacy. To ensure that only the intended recipients view the information, all collected data are encrypted before they are stored or transmitted. Healthcare providers, insurance companies, and family members can access only the data sources and reports to which the patient has granted them access.
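The patient-controlled access rule can be sketched as a consent list checked before any report is shown. `ConsentRegistry` and the viewer and source names are hypothetical. Encryption of stored and transmitted data is deliberately left out of this sketch; in practice it would rely on a vetted cryptography library rather than hand-written code.

```python
from typing import Dict, Set


class ConsentRegistry:
    """Hypothetical patient-controlled access list: each viewer sees only the
    data sources the patient has explicitly granted."""

    def __init__(self) -> None:
        self._grants: Dict[str, Set[str]] = {}

    def grant(self, viewer: str, source: str) -> None:
        self._grants.setdefault(viewer, set()).add(source)

    def revoke(self, viewer: str, source: str) -> None:
        self._grants.get(viewer, set()).discard(source)

    def can_view(self, viewer: str, source: str) -> bool:
        return source in self._grants.get(viewer, set())

    def visible_reports(self, viewer: str, reports: Dict[str, str]) -> Dict[str, str]:
        """Filter reports down to the sources this viewer may see."""
        return {src: rep for src, rep in reports.items() if self.can_view(viewer, src)}


consent = ConsentRegistry()
consent.grant("dr_smith", "glucose")
consent.grant("daughter", "activity")
reports = {"glucose": "glucose report", "activity": "activity report", "diet": "diet report"}
print(consent.visible_reports("dr_smith", reports))  # {'glucose': 'glucose report'}
print(consent.visible_reports("insurer", reports))   # {}
```

Default-deny is the key design choice: a viewer with no grants, such as the insurer above, sees nothing until the patient acts.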

To date, we have designed two types of assessment programs that provide insights relevant for behavioral monitoring of diabetes patients. The first is an algorithm that tracks an individual's daily activities, which allows the caregiver to monitor behavioral compliance and judge functional well-being of the patient. The second is an algorithm that recognizes chewing behaviors from video, which is a useful step in monitoring the overall dietary habits of an individual.

Activity Recognition

In order to recognize activities being performed by smart home residents, mechanisms such as naïve Bayes classifiers, Markov models, and dynamic Bayes networks could be employed to mathematically combine and classify sensor data streams.31–33 However, existing approaches must be enhanced to work in real-world situations, where tasks are interleaved, activities are incomplete, and learned models must be adapted for new individuals.

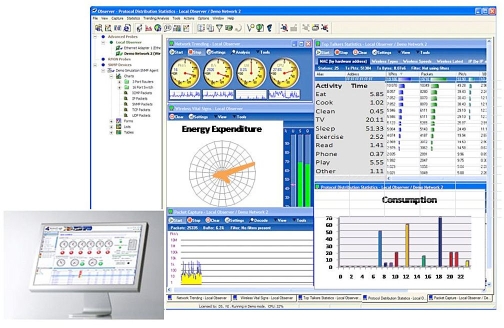

We have designed an approach to probabilistically identify activities in a smart space from sensor data while the activity is being performed. Specifically, a hidden Markov model is constructed for each task to be recognized. Since a fixed number of prior sensor events provide context for activity recognition, an activity can be identified even as the resident moves from one task to another. By quantifying probabilistic anomalies in the received sensor data, we can compute a metric of how completely an activity is performed, with a qualitative description of omitted steps. The results of tracking activities will be displayed in an assessment console (shown in Figure 6).

Figure 6.

Generic assessment consoles.
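The recognition scheme described above, one hidden Markov model per activity scored over a fixed window of recent sensor events, can be sketched with the scaled forward algorithm. The two-state left-to-right models, their probabilities, the event names, and the `1e-6` floor for unseen events are all hand-picked assumptions; in the actual system the models are learned from sensor data.

```python
import math
from collections import deque
from typing import Deque, Dict, List, Sequence


class DiscreteHMM:
    """HMM over discrete sensor-event symbols (parameters supplied by hand here)."""

    def __init__(self, start: List[float], trans: List[List[float]],
                 emit: List[Dict[str, float]], floor: float = 1e-6) -> None:
        self.start, self.trans, self.emit, self.floor = start, trans, emit, floor

    def log_likelihood(self, obs: Sequence[str]) -> float:
        """Scaled forward algorithm: log P(obs | model) as a sum of log scale factors."""
        n = len(self.start)
        alpha = [self.start[s] * self.emit[s].get(obs[0], self.floor) for s in range(n)]
        total = sum(alpha)
        log_p = math.log(total)
        alpha = [a / total for a in alpha]
        for o in obs[1:]:
            alpha = [sum(alpha[p] * self.trans[p][s] for p in range(n))
                     * self.emit[s].get(o, self.floor) for s in range(n)]
            total = sum(alpha)
            log_p += math.log(total)
            alpha = [a / total for a in alpha]
        return log_p


class WindowedRecognizer:
    """Scores the last `window` events against every activity model, so the
    label can change as the resident moves from one task to another."""

    def __init__(self, models: Dict[str, DiscreteHMM], window: int = 10) -> None:
        self.models = models
        self.events: Deque[str] = deque(maxlen=window)

    def update(self, event: str) -> str:
        self.events.append(event)
        scores = {name: m.log_likelihood(list(self.events)) for name, m in self.models.items()}
        return max(scores, key=scores.get)


topology = [[0.5, 0.5], [0.0, 1.0]]  # left-to-right: begin -> finish
models = {
    "wash_hands": DiscreteHMM([1.0, 0.0], topology,
                              [{"sink_motion": 0.9, "water_flow": 0.1},
                               {"water_flow": 0.8, "sink_motion": 0.2}]),
    "cook": DiscreteHMM([1.0, 0.0], topology,
                        [{"stove_on": 0.7, "pot_item": 0.3},
                         {"pot_item": 0.6, "stove_on": 0.4}]),
}
recognizer = WindowedRecognizer(models, window=3)
stream = ["sink_motion", "water_flow", "water_flow", "stove_on", "pot_item", "pot_item"]
labels = [recognizer.update(e) for e in stream]
print(labels[2], labels[-1])  # wash_hands cook
```

Normalizing the forward variables at each step keeps long windows from underflowing while still yielding the exact log-likelihood.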

Analysis of Chewing Motions

Our second analysis program focuses on assessment of diet routines and behavior compliance. Here, video imagery is processed to detect and analyze chewing behavior by segmenting mouth regions of video sequences containing chewing-related features. Next, we compute frequency spectra in spatial and temporal dimensions that directly support classification of oral activity into categories such as chewing, talking, or yawning. The analyzed chewing activity can be used to estimate the quantity and type of food an individual is consuming.
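The temporal half of the spectral analysis can be sketched on a one-dimensional signal, such as a per-frame measure of mouth opening extracted from the segmented mouth region. The sketch finds the dominant frequency with a direct discrete Fourier transform and treats a hypothetical 0.8 to 2.5 Hz band as chewing; the band, the magnitude cutoff, and the synthetic signals are assumptions, and the spatial spectra mentioned above are not modeled.

```python
import cmath
import math
from typing import Sequence, Tuple


def dominant_frequency(signal: Sequence[float], fps: float) -> Tuple[float, float]:
    """Return (frequency in Hz, magnitude) of the strongest non-DC DFT bin."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [x - mean for x in signal]  # remove DC so it cannot dominate
    best_k, best_mag = 1, -1.0
    for k in range(1, n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(centered))
        if abs(coeff) > best_mag:
            best_k, best_mag = k, abs(coeff)
    return best_k * fps / n, best_mag


def classify_mouth_motion(signal: Sequence[float], fps: float,
                          band: Tuple[float, float] = (0.8, 2.5),
                          min_magnitude: float = 1.0) -> str:
    freq, mag = dominant_frequency(signal, fps)
    if mag < min_magnitude:
        return "no facial motion"
    return "chewing" if band[0] <= freq <= band[1] else "other oral activity"


fps = 30.0
frames = range(120)  # four seconds of video
chewing = [math.sin(2 * math.pi * 1.5 * t / fps) for t in frames]  # 1.5 Hz jaw motion
fast = [math.sin(2 * math.pi * 4.0 * t / fps) for t in frames]     # 4 Hz motion
still = [0.0 for _ in frames]
print(classify_mouth_motion(chewing, fps), classify_mouth_motion(fast, fps),
      classify_mouth_motion(still, fps))
# chewing other oral activity no facial motion
```

Because this rule looks only at temporal frequency, talking at the same rhythm as chewing would be labeled chewing, which is consistent with the confusion case reported in the Results.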

Feedback Loops

For complete patient data analysis, closed-loop feedback or the ability to change the analysis approach based on prior results and user response must be provided. As shown in Figure 6, the health platform should offer the following types of feedback:

The Sensor Control Feedback Loop will be directed by data quality assessment implemented in the data reduction and middleware layer. This will allow sensors to be automatically and adaptively configured from a remote monitoring station in response to performance requirements. For example, suppose a blood pressure monitor exhibits wide fluctuations in output. In this case, the history of blood pressure data from that sensor would be analyzed to determine whether or not the sensor exhibits failure characteristics or whether the patient was being medicated or exercised in ways that might cause such fluctuations. In the former case, the sensor could be reconfigured or replaced. In the latter case, the patient's caregiver, researcher, or physician would be notified, and corrective behaviors would be recommended.
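The blood pressure example can be expressed as a small decision rule. In the sketch below, wide fluctuation means a population standard deviation above a threshold; physiologically implausible values, or large jumps with no logged medication or exercise event, point to the sensor, and explained fluctuations are routed to the caregiver. Every threshold here is a hypothetical placeholder, not a clinical recommendation.

```python
from statistics import pstdev
from typing import Collection, Sequence, Tuple


def diagnose_fluctuation(readings: Sequence[float],
                         context_indices: Collection[int],
                         max_std: float = 15.0,
                         plausible: Tuple[float, float] = (60.0, 250.0),
                         max_jump: float = 40.0) -> str:
    """Classify a window of systolic readings (mmHg) from one monitor.

    context_indices marks readings taken right after a logged event, such as a
    medication dose or the start of exercise, that could explain a jump.
    """
    if len(readings) < 2 or pstdev(readings) <= max_std:
        return "stable"
    implausible = any(not plausible[0] <= r <= plausible[1] for r in readings)
    unexplained_jumps = [i for i in range(1, len(readings))
                         if abs(readings[i] - readings[i - 1]) > max_jump
                         and i not in context_indices]
    if implausible or unexplained_jumps:
        return "suspect sensor: reconfigure or replace"
    return "patient-related change: notify caregiver"


print(diagnose_fluctuation([120, 121, 119, 122], set()))               # stable
print(diagnose_fluctuation([120, 122, 30, 125, 260, 121], set()))      # suspect sensor
print(diagnose_fluctuation([118, 120, 165, 170, 160, 130], {2}))       # patient-related
```

The same jump at index 2 is treated as a sensor problem when no event explains it and as a patient-related change when exercise was logged, which is the distinction the feedback loop needs to make.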

The Behavior Altering Control Loop transforms data from the assessment consoles into behavior modification directives for each patient. For example, suppose that a patient's wearable glucose sensor produces data whose analysis indicates an abnormally high glucose level. Further assume that the patient is about to prepare dinner. After receiving salient sensor data, the analytical layer would inform the caregiver of the elevated glucose level (sensor data) and impending dinner preparation (context). An analysis console would then generate the recommendation that sucrose levels in the patient's dinner be reduced, for example, by elimination of sweet desserts. The healthcare personnel would then employ a monitoring console to inform the patient that his or her glucose level is high and that sweet desserts should be avoided that evening.
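The glucose-and-dinner scenario can be sketched as a rule that combines a sensor reading with the recognized activity and routes the result through the caregiver before it reaches the patient. The 180 mg/dL threshold, the `prepare_dinner` label, and the approval flag are illustrative assumptions only.

```python
from dataclasses import dataclass
from typing import List, Optional

HIGH_GLUCOSE_MG_DL = 180.0  # hypothetical threshold; a clinician would set this per patient


@dataclass
class Snapshot:
    glucose_mg_dl: float   # latest wearable glucose reading
    current_activity: str  # e.g. the label produced by activity recognition


def recommend(snapshot: Snapshot) -> Optional[str]:
    """Combine sensor data with activity context into a directive, if any."""
    if (snapshot.glucose_mg_dl > HIGH_GLUCOSE_MG_DL
            and snapshot.current_activity == "prepare_dinner"):
        return "Glucose is high: reduce sucrose at dinner, e.g. avoid sweet desserts."
    return None


def behavior_loop(snapshot: Snapshot, caregiver_approves: bool) -> List[str]:
    """The caregiver sees every recommendation; the patient sees only approved ones."""
    advice = recommend(snapshot)
    if advice is None:
        return []
    messages = [f"to caregiver: {advice}"]
    if caregiver_approves:
        messages.append(f"to patient: {advice}")
    return messages


print(behavior_loop(Snapshot(240.0, "prepare_dinner"), caregiver_approves=True))
print(behavior_loop(Snapshot(110.0, "prepare_dinner"), caregiver_approves=True))  # []
```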

Results

Our smart home-based health platform has been implemented and offers a health monitoring technology that is currently being investigated for diabetes patients. At the physical and raw data layers, we have implemented and successfully demonstrated our technologies in the GTSH and the CASAS smart apartment. At the middleware layer, we have utilized the Atlas architecture. The resulting software entities can be readily manipulated by programmers in commonly available languages such as Java, C, or C++.

Because a key to the health application of this technology is the development of analysis tools, we have implemented and tested our activity recognition and chewing classification technologies. In the case of activity recognition, we brought 20 adult participants into the CASAS smart apartment one at a time. In each case, the participant was asked to perform a sequence of five activities: (1) look up a number in the phone book, dial the number, and write down the cooking instructions heard on the recording; (2) wash hands in the kitchen sink; (3) cook a pot of oatmeal as specified from the phone directions; (4) eat the oatmeal while taking medicine; and (5) clean the dishes.

Sensor information was collected from the apartment during the study, and Markov models were learned for each of the five activities. Using three-fold cross validation, the Markov model classification algorithm achieved 98% recognition accuracy.
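The evaluation procedure, though not the Markov models themselves, can be sketched as generic k-fold cross-validation. A toy sensor-voting classifier stands in for the learned models so the example stays short; it is not the study's classifier, and the tiny data set is synthetic. With real data, one reasonable choice (an assumption here) is to split by participant so that no individual appears in both training and test folds.

```python
from collections import Counter
from typing import Callable, Dict, List, Sequence, Tuple

Example = Tuple[List[str], str]  # (sensor event sequence, activity label)
Predictor = Callable[[List[str]], str]


def k_fold_accuracy(data: Sequence[Example], k: int,
                    train: Callable[[List[Example]], Predictor]) -> float:
    """Mean test accuracy over k folds; fold i holds examples with index % k == i."""
    if len(data) < k:
        raise ValueError("need at least k examples")
    accuracies = []
    for i in range(k):
        test = [ex for j, ex in enumerate(data) if j % k == i]
        train_set = [ex for j, ex in enumerate(data) if j % k != i]
        predict = train(train_set)
        correct = sum(predict(seq) == label for seq, label in test)
        accuracies.append(correct / len(test))
    return sum(accuracies) / k


def train_sensor_votes(examples: List[Example]) -> Predictor:
    """Toy stand-in for per-activity Markov models: each sensor votes for the
    activities it co-occurred with during training."""
    votes: Dict[str, Counter] = {}
    for seq, label in examples:
        for sensor in seq:
            votes.setdefault(sensor, Counter())[label] += 1

    def predict(seq: List[str]) -> str:
        tally: Counter = Counter()
        for sensor in seq:
            tally.update(votes.get(sensor, Counter()))
        return tally.most_common(1)[0][0] if tally else "unknown"

    return predict


data = [(["sink", "water"], "wash_hands"), (["stove", "pot"], "cook")] * 3
print(k_fold_accuracy(data, k=3, train=train_sensor_votes))  # 1.0
```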

In a separate experiment, we brought an additional 20 participants into the apartment and asked them to perform the activities while introducing an error, such as misdialing the number, leaving the water or stove burner on, forgetting the medicine, or cleaning dishes without water. When we tested our algorithm's ability to recognize these errors, all injected errors were detected except for one case among the last four tasks. In this “mistaken” case, the participant forgot to make the scripted error and performed the task correctly, so there was no error to detect.
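The kinds of errors injected in this experiment can be illustrated with a deterministic checklist: a completeness score over expected steps, the list of omitted steps, and a check for a device left on. This is a simplification of the probabilistic anomaly measure described in the Activity Recognition section, and the step and sensor names are hypothetical.

```python
from typing import List, Sequence, Tuple

Event = Tuple[str, str]  # (sensor, reading), e.g. ("water", "ON")


def completeness(expected_steps: Sequence[Event],
                 observed: Sequence[Event]) -> Tuple[float, List[Event]]:
    """Fraction of expected steps that appear in the observed events, plus the
    steps that never appeared (a qualitative description of omissions)."""
    seen = set(observed)
    omitted = [step for step in expected_steps if step not in seen]
    return 1 - len(omitted) / len(expected_steps), omitted


def left_on(observed: Sequence[Event], device: str) -> bool:
    """True if the device's last reported state is ON (e.g. water left running)."""
    state = "OFF"
    for sensor, reading in observed:
        if sensor == device and reading in ("ON", "OFF"):
            state = reading
    return state == "ON"


hand_washing = [("water", "ON"), ("soap", "USED"), ("water", "OFF")]
observed = [("sink_motion", "ON"), ("water", "ON"), ("soap", "USED"), ("sink_motion", "OFF")]
score, omitted = completeness(hand_washing, observed)
print(round(score, 2), omitted, left_on(observed, "water"))
# 0.67 [('water', 'OFF')] True
```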

Our chewing classification algorithm was tested on collected video sequences for five different activities (chewing with mouth open, chewing with mouth closed, talking, making faces, and no facial motion). In the preliminary study, the behavioral classification results were widely separated in pattern space for chewing versus clutter data such as talking, making faces, or no facial motion. We were also able to automatically distinguish chewing with mouth closed versus chewing with mouth open. In one case, chewing was confused with talking that occurred at the same temporal frequency and pattern of jaw motion as chewing.

Conclusions

We have designed a smart home-based software architecture that assists in behavioral monitoring for diabetes patients. Through personal connected devices and smart home technologies, we are implementing ways to gather data that will help us overcome many of the obstacles to adequate diabetes care. Through advances in analysis and diagnosis, this information will be used to improve the effectiveness of diabetes self-management education as well as the information available to health care professionals providing diabetes care.

We successfully demonstrated a software architecture that provides flexible, interoperable, plug-and-play integration of health monitoring components from smart home data collection through data analysis and recommendation. This architecture will support behavioral monitoring for diabetes patients beyond information that can be collected by self-reports or measured in a medical practitioner's office. Although software components have not yet been designed for the methodology limitations and policy layer, the integration of these components to support behavior alteration can be readily achieved. Application of these innovative, emerging technologies can thus produce dramatic improvements in the lives of those living with diabetes and reduce the public and private health care costs associated with treating the disease.

While we have demonstrated the ability to integrate health monitoring software components together into a comprehensive system, we still have many issues to address related to the individual behavioral monitoring algorithms. In the next stage of this project, we will perform intensive studies to validate the accuracy and understandability of our individual health assessment consoles with participants from target populations. We will also gather initial feedback from healthcare providers based on data collected from these participants.

Abbreviations

- GTSH: Gator Tech smart house

References

- 1. Centers for Disease Control and Prevention. www.cdc.gov/diabetes. Accessed June 19, 2008.

- 2. National Institute of Diabetes and Digestive and Kidney Diseases of the National Institutes of Health. www.niddk.nih.gov. Accessed June 19, 2008.

- 3. U.S. Department of Health and Human Services, Office of Minority Health. www.omhrc.gov. Accessed June 19, 2008.

- 4. Children with Diabetes. www.childrenwithdiabetes.com/index_cwd.htm. Accessed June 19, 2008.

- 5. American Diabetes Association. www.diabetes.org/weightloss-and-exercise.jsp. Accessed June 19, 2008.

- 6. National Institute of Diabetes and Digestive and Kidney Diseases. www.niddk.nih.gov/health/nutrit/nutrit.htm. Accessed June 19, 2008.

- 7. Shape Up America. www.shapeup.org. Accessed June 19, 2008.

- 8. National Institutes of Health National Diabetes Education Program. ndep.nih.gov/about/factsheet.htm. Accessed June 19, 2008.

- 9. Centers for Disease Control and Prevention Diabetes Public Health Resource. www.cdc.gov/diabetes/index.htm. Accessed June 19, 2008.

- 10. Barger TS, Brown DE, Alwan M. Health-status monitoring through analysis of behavioral patterns. IEEE Trans Syst Man Cybern Part A. 2005;35(1):22–27.

- 11. Carter J, Rosen M. Unobtrusive sensing of activities of daily living: a preliminary report. Proc First Joint BMES/EMBS Conf (IEEE, 1999); Oct. 13–16, 1999; p. 678.

- 12. Cook DJ, Das SK, editors. Smart environments: technologies, protocols and applications. Hoboken: John Wiley and Sons; 2004.

- 13. Helal A, Mokhtari M, Abdulrazak B. The engineering handbook on smart technology for aging, disability and independence. Hoboken: John Wiley and Sons; 2007.

- 14. Ogawa M, Suzuki R, Otake S, Izutsu T, Iwaya T, Togawa T. Long-term remote behavioral monitoring of elderly by using sensors installed in ordinary houses. Proc Int IEEE-EMBS Special Topic Conf Microtechnologies in Medicine and Biology (IEEE, 2002); May 2–4, 2002; pp. 322–335.

- 15. Kautz H, Arnstein L, Borriello G, Etzioni O, Fox D. An overview of the assisted cognition project. Proc AAAI Workshop on Automation as Caregiver: The Role of Intelligent Technology in Elder Care (AAAI, 2002); July 29, 2002; pp. 60–65.

- 16. Mihailidis A, Barbenel JC, Fernie G. The efficacy of an intelligent cognitive orthosis to facilitate handwashing by persons with moderate to severe dementia. Neuropsychological Rehabilitation. 2004;14(1-2):135–171.

- 17. Pollack ME, Brown L, Colbry D, McCarthy CE, Orosz C, Peintner B, Ramakrishnan S, Tsamardinos I. Autominder: An intelligent cognitive orthotic system for people with memory impairment. Robot Auton Syst. 2003;44(3-4):273–282.

- 18. Mann WC, Helal A. Technology and chronic conditions in later years: reasons for new hope. In: Wahl HW, Tesch-Roemer C, Hoff A, editors. Aging and society. New York: Baywood Publishing Company; 2004.

- 19. Noel HC, Vogl DC, Erdos JJ, Cornwall D, Levin F. Home telehealth reduces healthcare costs. Telemed J E Health. 2004;10(2):170–183. doi: 10.1089/tmj.2004.10.170.

- 20. Warren S, Craft RL. Designing smart healthcare technology into the home of the future. Proc Int BMEEMBS Conf Serving Humanity, Advancing Technology (IEEE, 1999); Oct. 12, 1999; pp. 12–16.

- 21. Helal S, Mann W, El-Zabadani H, King J, Kaddoura Y, Jansen E. Gator Tech smart house: a programmable pervasive space. IEEE Computer Magazine. 2005;38(3):50–60.

- 22. Helal A, Mann W, Lee C. Assistive environments for individuals with special needs. In: Cook D, Das SK, editors. Smart environments: technology, protocols and applications. Hoboken: John Wiley and Sons; 2005. pp. 361–383.

- 23. El-Zabadani H, Helal A, Abdulrazak B, Jansen E. Self-sensing spaces: smart plugs for smart environments. Proc Third International Conf Smart Homes and Health Telematics (ICOST) (IOS, 2005); July 4-6, 2005; pp. 91–98.

- 24. El-Zabadani H, Helal A, Schmaltz M. PerVision: an integrated pervasive computing/computer vision approach to tracking objects in a self-sensing space. Proc Fourth International Conf Smart Homes and Health Telematics (ICOST) (IOS, 2006); June 2006.

- 25. Gopalratnam K, Cook DJ. Online sequential prediction via incremental parsing: the active LeZi algorithm. IEEE Intell Syst. 2007;22(1):52–58.

- 26. Jakkula V, Cook DJ. Anomaly detection using temporal data mining in a smart home environment. Methods Inf Med. 2008;47(1):70–75. doi: 10.3414/me9103.

- 27. Park S, Jayaraman S. Wearable sensor systems: opportunities and challenges. Proc IEEE Engineering in Medicine and Biology Annual Conf; 2005. pp. 4153–4155.

- 28. Singla G, Cook DJ, Schmitter-Edgecombe M. Incorporating temporal reasoning into activity recognition for smart home residents. Proc AAAI Workshop on Spatial and Temporal Reasoning (AAAI, 2008); July 13, 2008.

- 29. King J, Bose R, Pickles S, Helal A, Vanderploeg S, Russo J. Atlas – a service-oriented sensor platform. Proc First IEEE International Workshop on Practical Issues in Building Sensor Network Applications (AAAI, 2006); Nov. 12-16, 2006.

- 30. de Deugd S, Carroll R, Kelly KE, Millett B, Ricker J. SODA: service oriented device architecture. IEEE Pervasive Computing. 2006;5(3):94–96.

- 31. Liao L, Fox D, Kautz H. Location-based activity recognition using relational Markov networks. Proc Int Joint Conf Artificial Intelligence; 2005. pp. 773–778.

- 32. Philipose M, Fishkin KP, Perkowitz M, Patterson DJ, Fox D, Kautz H, Hähnel D. Inferring activities from interactions with objects. IEEE Pervasive Computing. 2004;3(4):50–57.

- 33. Wren CR, Munguia-Tapia E. Toward scalable activity recognition for sensor networks. Proc Int Workshop in Location and Context-Awareness; 2006. pp. 168–185.