Abstract

Existing optimal estimators of nonequilibrium path-ensemble averages are shown to fall within the framework of extended bridge sampling. Using this framework, we derive a general minimal-variance estimator that can combine nonequilibrium trajectory data sampled from multiple path-ensembles to estimate arbitrary functions of nonequilibrium expectations. The framework is also applied to obtain asymptotic variance estimates, which are a useful measure of statistical uncertainty. In particular, we develop asymptotic variance estimates pertaining to Jarzynski’s equality for free energies and the Hummer–Szabo expressions for the potential of mean force, calculated from uni- or bidirectional path samples. These estimators are demonstrated on a model single-molecule pulling experiment. In these simulations, the asymptotic variance expression is found to accurately characterize the confidence intervals around estimators when the bias is small. Consequently, it mischaracterizes the confidence intervals of unidirectional estimates with large bias, but for this model it largely reflects the true error of a bidirectional estimator derived by Minh and Adib.

INTRODUCTION

Path-ensemble averages play a central role in nonequilibrium statistical mechanics, akin to the role of configurational ensemble averages in equilibrium statistical mechanics. Expectations of various functionals over processes where a system is driven out of equilibrium by a time-dependent external potential have been shown to be related to equilibrium properties, including free energy differences1, 2 and thermodynamic expectations.3, 4 The latter relationship, between equilibrium and nonequilibrium expectations, has been applied to several specific cases, such as the potential of mean force (PMF) along the pulling coordinate5, 6, 7 (or other observed coordinates8) in single-molecule pulling experiments, RNA folding free energies as a function of a control parameter,9 the root mean square deviation from a reference structure,10 the potential energy distribution10 and average,11 and the thermodynamic length.12

Compared to equilibrium sampling, nonequilibrium processes may be advantageous for traversing energetic barriers and accessing larger regions of phase space per unit time. This is useful, for example, in reducing the effects of experimental apparatus drift or increasing the sampling of barrier-crossing events. Thus, there has been interest in calculating equilibrium properties from nonequilibrium trajectories collected in simulations or laboratory experiments. Indeed, single-molecule pulling data have been used to experimentally verify relationships between equilibrium and nonequilibrium quantities.13, 14

While many estimators for free energy differences3, 15, 16, 17 and equilibrium ensemble averages can be constructed from nonequilibrium relationships, they will differ in the efficiency with which they utilize finite data sets, leading to varying amounts of statistical bias and uncertainty. Characterization of this bias and uncertainty is helpful in comparing the quality of different estimators18 and assessing the accuracy of a particular estimate. The statistical uncertainty of an estimator is usually quantified by its variance in the asymptotic, or large sample, limit, where estimates from independent repetitions of the experiment often approach a normal distribution about the true value due to the central limit theorem. It is an important goal to find an optimal estimator which minimizes this asymptotic variance.

Although numerical estimates of the asymptotic variance may be provided by bootstrapping (e.g., Ref. 19), closed-form expressions can provide computational advantages in the calculation of confidence intervals, allow comparison of asymptotic efficiency,18, 20 and facilitate the design of adaptive sampling strategies to target data collection in a manner that most rapidly reduces statistical error.21, 22, 23 In the asymptotic limit, the statistical error in functions of the estimated parameters can be estimated by propagating this variance estimate via a first-order Taylor series expansion. While this procedure is relatively straightforward for simple estimators, it can be difficult for estimators that involve arbitrary functions (e.g., nonlinear or implicit equations) of nonequilibrium path-ensemble averages.

Fortunately, the extended bridge sampling (EBS) estimators,20, 24, 25, 26 a class of equations for estimating the ratios of normalizing constants, are known to have both minimal-variance forms and associated asymptotic variance expressions. Recently, Shirts and Chodera27 applied the EBS formalism to generalize the Bennett acceptance ratio,15 producing an optimal estimator combining data from multiple equilibrium states to compute free energy differences, thermodynamic expectations, and their associated uncertainties. Here, we apply the EBS formalism to estimators utilizing nonequilibrium trajectories. We first construct a general minimal-variance path-average estimator that can use samples collected from multiple nonequilibrium path ensembles. We then show that some existing path-average estimators using uni- and bidirectional data are special cases of this general estimator, proving their optimality. This also allows us to develop asymptotic variance expressions for estimators based on Jarzynski’s equality1, 2 and the Hummer–Szabo expressions for the PMF.5, 6, 28 We then demonstrate them on simulation data from a simple one-dimensional system and comment on their applicability.

EXTENDED BRIDGE SAMPLING

Suppose that we sample Ni paths (trajectories) from each of K path ensembles indexed by i=1,2,…,K. The path-ensemble average of an arbitrary functional F[X] in path ensemble i is defined by

$$\langle F\rangle_i \equiv \int dX\, F[X]\,\rho_i[X], \tag{1}$$

where ρi[X] is a probability density over trajectories,

$$\rho_i[X] = c_i^{-1}\, q_i[X], \qquad c_i = \int dX\, q_i[X], \tag{2}$$

with unnormalized density qi[X]>0 and the normalization constant ci (a path partition function). The above integrals, in which dX is an infinitesimal path element, are taken over all possible paths, X. EBS estimators provide a way of estimating ratios of normalization constants ci∕cj, which will prove useful in estimating free energies and thermodynamic expectations.

To construct these estimators, we first note the importance sampling identity,

$$c_i\,\langle \alpha_{ij}\, q_j\rangle_i = c_j\,\langle \alpha_{ij}\, q_i\rangle_j, \tag{3}$$

where j is another path-ensemble index, αij[X] is an arbitrary functional of X, and all normalization constants are nonzero.

Summing over the index j in Eq. 3 and using the sample mean, $N_i^{-1}\sum_{n=1}^{N_i} F[X_{in}]$, as an estimator for ⟨F⟩i, we obtain a set of K estimating equations, indexed by i=1,…,K,

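$$\sum_{j=1}^{K} \frac{\hat c_i}{N_i}\sum_{n=1}^{N_i} \alpha_{ij}[X_{in}]\, q_j[X_{in}] = \sum_{j=1}^{K} \frac{\hat c_j}{N_j}\sum_{n=1}^{N_j} \alpha_{ij}[X_{jn}]\, q_i[X_{jn}], \tag{4}$$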

whose solutions yield estimates for the normalization constants ci, up to an irrelevant scalar multiple. Each path, Xin, is indexed by the ensemble i from which it is sampled, and the sample number n=1,2,…,Ni. This coupled set of nonlinear equations defines a family of estimators parametrized by the choice of αij[X], all of which are asymptotically consistent, but whose statistical efficiencies will vary.20

With the choice,

$$\alpha_{ij}[X] = \frac{N_j\,\hat c_j^{-1}}{\sum_{k=1}^{K} N_k\,\hat c_k^{-1}\, q_k[X]}, \tag{5}$$

Eq. 4 simplifies to the optimal EBS estimator,

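$$\hat c_i = \sum_{j=1}^{K}\sum_{n=1}^{N_j} \frac{q_i[X_{jn}]}{\sum_{k=1}^{K} N_k\,\hat c_k^{-1}\, q_k[X_{jn}]}. \tag{6}$$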

This choice for αij[X] is optimal in that the asymptotic variance of the ratios is minimal.20, 27 These equations may be solved by any appropriate algorithm, including a number of efficient and stable methods suggested by Shirts and Chodera.27
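As an illustration of how Eq. 6 can be solved, here is a minimal Python/NumPy sketch that uses plain self-consistent (fixed-point) iteration in log space rather than the Newton-type solvers of Ref. 27. It assumes that ln q_k[X_n] can be evaluated for every pooled path n under every ensemble k; the function name `solve_ebs` and its arguments are our own, and the overall scale is fixed by setting ln ĉ_0 = 0.

```python
import numpy as np

def solve_ebs(log_q, n_samples, tol=1e-10, max_iter=10_000):
    """Self-consistent solution of the optimal EBS equations (Eq. 6).

    log_q     : (K, N) array, log q_k[X_n] for every pooled path n under ensemble k
    n_samples : (K,) array of N_k; ensembles with N_k = 0 are allowed
    Returns ln c_k with the gauge ln c_0 = 0 (the overall scale is irrelevant).
    """
    log_q = np.asarray(log_q, dtype=float)
    n_samples = np.asarray(n_samples, dtype=float)
    sampled = n_samples > 0
    log_n = np.log(n_samples[sampled])
    log_c = np.zeros(log_q.shape[0])
    for _ in range(max_iter):
        # log of sum_k N_k c_k^{-1} q_k[X_n] over sampled ensembles, one value per path
        log_mix = np.logaddexp.reduce(
            log_n[:, None] - log_c[sampled][:, None] + log_q[sampled], axis=0)
        # Eq. 6: c_i = sum_n q_i[X_n] / (mixture denominator at X_n)
        new = np.logaddexp.reduce(log_q - log_mix[None, :], axis=1)
        new -= new[0]  # remove the arbitrary multiplicative constant
        if np.max(np.abs(new - log_c)) < tol:
            return new
        log_c = new
    raise RuntimeError("EBS iteration did not converge")
```

Ensembles with N_k = 0 enter only the numerator, so their normalization constants are obtained without perturbing the others; for example, with paths pooled from two sampled ensembles and an unsampled ensemble q_2 = x² q_0, the returned ln ĉ_2 − ln ĉ_0 estimates ln⟨x²⟩_0.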

The asymptotic covariance of Eq. 6 is estimated by

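$$\hat\Theta = \mathbf{M}^{T}\left[\mathbf{I}_N - \mathbf{M}\,\mathbf{N}\,\mathbf{M}^{T}\right]^{+}\mathbf{M}, \tag{7}$$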

where the elements of Θ̂ are the estimated covariances of the logarithms of the estimated normalization constants, $\hat\Theta_{ij} \approx \mathrm{cov}(\ln\hat c_i, \ln\hat c_j)$.26 The superscript (⋯)+ denotes an appropriate generalized inverse, such as the Moore–Penrose pseudoinverse; IN is the N×N identity matrix (where $N = \sum_{k=1}^{K} N_k$ is the total number of samples); N≡diag(N1,N2,…,NK) is the diagonal matrix of sample sizes; and M is the N×K weight matrix with elements,

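$$M_{nk} = \frac{\hat c_k^{-1}\, q_k[X_n]}{\sum_{k'=1}^{K} N_{k'}\,\hat c_{k'}^{-1}\, q_{k'}[X_n]}. \tag{8}$$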

In this matrix, the ensemble from which each sample was drawn is irrelevant, and X is indexed only by n=1,…,N. We note that the sum over each column, $\sum_{n=1}^{N} M_{nk}$, is unity. (Efficient methods for computing $\hat\Theta$ are discussed in Appendix D of Ref. 27.)
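The weight matrix of Eq. 8 can be formed directly from the same inputs used to solve Eq. 6. The sketch below (hypothetical function `weight_matrix`, NumPy assumed) works in log space for numerical stability. Note that the column sums equal one only at a solution of Eq. 6, whereas summing N_k M_nk over the sampled ensembles gives one for every row, whatever values of ĉ_k are supplied.

```python
import numpy as np

def weight_matrix(log_q, n_samples, log_c):
    """N x K weight matrix of Eq. 8 from log q_k[X_n] (K x N) and ln c_k (K,)."""
    log_q = np.asarray(log_q, dtype=float)
    n = np.asarray(n_samples, dtype=float)
    log_c = np.asarray(log_c, dtype=float)
    sampled = n > 0  # unsampled ensembles do not enter the denominator
    log_denom = np.logaddexp.reduce(
        np.log(n[sampled])[:, None] - log_c[sampled][:, None] + log_q[sampled], axis=0)
    # M_nk = c_k^{-1} q_k[X_n] / sum_j N_j c_j^{-1} q_j[X_n], returned as (N, K)
    return np.exp(log_q - log_c[:, None] - log_denom[None, :]).T
```

As a quick check, two ensembles with identical q_k and equal ĉ_k give every entry equal to 1/N, so each column sums to one.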

For arbitrary functions of the logarithms of the normalization constants, $\phi(\ln\hat{\mathbf c})$ and $\psi(\ln\hat{\mathbf c})$, the asymptotic covariance can be estimated from $\hat\Theta$ according to

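$$\widehat{\mathrm{cov}}(\hat\phi,\hat\psi) \approx \sum_{i,j} \frac{\partial\phi}{\partial \ln c_i}\,\hat\Theta_{ij}\,\frac{\partial\psi}{\partial \ln c_j}, \tag{9}$$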

through first-order Taylor series expansion of ϕ and ψ.
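Eqs. 7 and 9 translate almost literally into NumPy. This sketch forms the N×N matrix explicitly and calls `numpy.linalg.pinv`, which is only practical for modest N; the more efficient routes of Appendix D of Ref. 27 are preferable for large data sets. The function names are our own.

```python
import numpy as np

def asymptotic_covariance(M, n_samples):
    """Theta-hat = M^T [I_N - M diag(N_k) M^T]^+ M (Eq. 7)."""
    M = np.asarray(M, dtype=float)
    inner = np.eye(M.shape[0]) - M @ np.diag(np.asarray(n_samples, dtype=float)) @ M.T
    # Moore-Penrose pseudoinverse as the generalized inverse
    return M.T @ np.linalg.pinv(inner) @ M

def propagate(grad_phi, grad_psi, theta):
    """First-order covariance of phi and psi (Eq. 9); gradients are w.r.t. ln c_i."""
    return float(np.asarray(grad_phi, dtype=float) @ theta @ np.asarray(grad_psi, dtype=float))
```

For instance, a unidirectional sample of five paths with F = 1, …, 5 (columns for f and for q_{F_f}, with N_{F_f} = 0) gives Θ̂_{FF} = 10/225, the sample variance of F divided by N⟨F⟩², and Θ̂_{ff} = 0.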

GENERAL PATH-ENSEMBLE AVERAGES

Following previous work,27, 29 we estimate nonequilibrium expectations by defining additional path ensembles with “unnormalized densities,”

$$q_{F_i}[X] \equiv F[X]\, q_i[X]. \tag{10}$$

Using Eqs. 1, 2, 10, we can express nonequilibrium expectations as a ratio of the appropriate normalization constants, ⟨F⟩i=cFi∕ci. Notably, this can be estimated without actually sampling path ensembles biased by some function of F[X] (although it is sometimes possible to do so in computer simulations30, 31 via transition path sampling32, 33). If no paths are drawn from the path ensemble corresponding to qFi[X], then NFi=0 and it is no longer required that qFi[X]>0.20, 27

For each defined path ensemble, the weight matrix M is augmented by one column with elements,

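$$M_{n,F_i} = \frac{\hat c_{F_i}^{-1}\, F[X_n]\, q_i[X_n]}{\sum_{k=1}^{K} N_k\,\hat c_k^{-1}\, q_k[X_n]}. \tag{11}$$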

The estimator for the path-ensemble average, $\widehat{\langle F\rangle}_i = \hat c_{F_i}/\hat c_i$, can be expressed in terms of weight matrix elements,

$$\widehat{\langle F\rangle}_i = \frac{\hat c_{F_i}}{\hat c_i} = \sum_{n=1}^{N} M_{ni}\, F[X_n], \tag{12}$$

and its uncertainty estimated by

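$$\frac{\delta^2 \widehat{\langle F\rangle}_i}{\widehat{\langle F\rangle}_i^{\,2}} = \hat\Theta_{ii} + \hat\Theta_{F_iF_i} - 2\,\hat\Theta_{iF_i}. \tag{13}$$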
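Combining Eqs. 11 to 13, the following sketch appends the column for q_{F_i} to a weight matrix that must already be evaluated at the solution of Eq. 6, and returns the estimate together with its asymptotic variance. It uses ĉ_{F_i} = ⟨F⟩̂_i ĉ_i to rewrite Eq. 11 as M_{n,F_i} = F[X_n] M_{ni}/⟨F⟩̂_i, so it assumes ⟨F⟩̂_i ≠ 0; the function name is hypothetical and the dense pseudoinverse limits it to modest N.

```python
import numpy as np

def path_average(M, n_samples, i, F):
    """Estimate <F>_i (Eq. 12) and its asymptotic variance (Eq. 13).

    M         : N x K weight matrix (Eq. 8) at the solution of Eq. 6
    n_samples : (K,) sample counts N_k
    i         : column index of the ensemble whose average is wanted
    F         : (N,) values F[X_n] for every pooled path
    """
    M = np.asarray(M, dtype=float)
    F = np.asarray(F, dtype=float)
    m_i = M[:, i]
    avg = float(np.sum(F * m_i))                                  # Eq. 12
    m_F = F * m_i / avg                                           # Eq. 11 via c_F = avg * c_i
    M_aug = np.column_stack([M, m_F])                             # one extra column
    n_aug = np.append(np.asarray(n_samples, dtype=float), 0.0)    # N_{F_i} = 0
    inner = np.eye(M.shape[0]) - M_aug @ np.diag(n_aug) @ M_aug.T
    theta = M_aug.T @ np.linalg.pinv(inner) @ M_aug               # Eq. 7
    var = avg**2 * (theta[i, i] + theta[-1, -1] - 2.0 * theta[i, -1])  # Eq. 13
    return avg, var
```

For a purely unidirectional sample this reproduces the sample mean and the variance of the sample mean, in line with Eqs. 14 and 15 below; with F = 1, …, 5 it returns 3 and 0.4.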

EXPERIMENTALLY RELEVANT PATH ENSEMBLES

The above formalism is fully general, and may be applied to any situation where the ratio qi[X]∕qj[X] can be computed. For arbitrary path ensembles, unfortunately, calculating this ratio is only possible in computer simulations unless certain assumptions are made about the dynamics.34 In a few special path ensembles, however, we can use the Crooks fluctuation theorem35, 36 to estimate this ratio, allowing us to apply the EBS estimator to laboratory experiments. We examine these here.

First, consider a forward process, in which a system, initially in equilibrium, is propagated under some time-dependent dynamics for a time τ, which may cause it to be driven out of equilibrium. The time dependence of the evolution law (e.g., Hamiltonian dynamics in a time-dependent potential) is the same for all paths sampled from this ensemble.

For a sample of paths only drawn from this ensemble, the optimal EBS estimator of ⟨F⟩f reduces to the sample mean estimator, which we call the unidirectional path-ensemble average estimator,

$$\widehat{\langle F\rangle}_f = \frac{1}{N_f}\sum_{n=1}^{N_f} F[X_{fn}], \tag{14}$$

and the associated asymptotic variance from Eq. 9 reduces to the variance of the sample mean (see Appendix A)

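$$\delta^2\widehat{\langle F\rangle}_f = \frac{1}{N_f}\left[\widehat{\langle F^2\rangle}_f - \widehat{\langle F\rangle}_f^{\,2}\right]. \tag{15}$$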

The forward process has a unique counterpart known as the reverse process. Here, the system moves via the opposite protocol in thermodynamic state space; after initial configurations are drawn from the final thermodynamic state of the forward path ensemble, they are driven toward the initial state. If the dynamical law satisfies detailed balance when the control parameters are held constant at each fixed time t, the path probabilities in the conjugate forward and reverse path ensembles are related according to the Crooks fluctuation theorem,35, 36

$$\frac{\rho_f[X]}{\rho_r[\tilde X]} = e^{\,w_\tau[X] - \Delta f_\tau}, \tag{16}$$

in which $\tilde X$ is the time reversal, or conjugate twin,37 of X, Δft=−ln(ct∕c0) is the dimensionless free energy difference between thermodynamic states at times 0 and t (with τ being the fixed total trajectory length), and wt[X] is the appropriate dimensionless work. With Hamiltonian dynamics, for example, this work is $w_t[X] = \int_0^t dt'\,\partial H(x_{t'};t')/\partial t'$, with H in units of k_BT. For convenience, we define the total dissipative work as Ω[X]≡wτ[X]−Δfτ.

We will refer to data sets which only include realizations from the forward path ensemble as “unidirectional,” and those with paths from both path ensembles as “bidirectional.” Notably, sampling paths from these conjugate ensembles and calculating the associated work wt[X] are possible in single-molecule pulling experiments as well as computer simulations (cf. Refs. 6, 14). To combine bidirectional data to estimate ⟨F⟩f, we apply the Crooks fluctuation theorem35, 36 to Eq. 6 and divide by $\hat c_f$, leading to

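$$\widehat{\langle F\rangle}_f = \sum_{i\in\{f,r\}}\sum_{n=1}^{N_i}\frac{F[X_{in}]}{N_f + N_r\,e^{-\hat\Omega[X_{in}]}}, \tag{17}$$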

which is the bidirectional path-average estimator of Minh and Adib,28 derived here by a different route that demonstrates its optimality. (The asymptotic variance estimator for this equation is written in closed form in Appendix B.) In these bidirectional expressions, samples drawn from the reverse path ensemble are time reversed to obtain the paths Xrn. The dissipated work estimate, $\hat\Omega[X] = w_\tau[X] - \Delta\hat f_\tau$, requires an estimate of Δfτ. A method for obtaining this estimate is described next.
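Eq. 17 is a weighted sum over the pooled forward and time-reversed reverse paths. A minimal NumPy sketch, assuming that Ω̂ has already been computed from some estimate of Δf_τ; the function name is hypothetical, and the weights 1/(N_f + N_r e^{−Ω̂}) are evaluated with `numpy.logaddexp` to avoid overflow.

```python
import numpy as np

def bidirectional_average(F_f, F_rev, omega_f, omega_rev):
    """Estimate <F>_f (Eq. 17) from forward and time-reversed reverse paths."""
    F = np.concatenate([F_f, F_rev]).astype(float)
    omega = np.concatenate([omega_f, omega_rev]).astype(float)
    n_f, n_r = len(F_f), len(F_rev)
    # log of 1 / (N_f + N_r e^{-Omega}) for every pooled path
    log_weight = -np.logaddexp(np.log(n_f), np.log(n_r) - omega)
    return float(np.sum(F * np.exp(log_weight)))
```

For a time-reversed reverse path, w_τ is the negative of the work recorded in the reverse process, so Ω̂ = −w_r − Δf̂_τ for those samples. Setting F ≡ 1 gives a value close to one when Ω̂ uses an accurate Δf_τ, which is a cheap consistency check.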

FREE ENERGY

Jarzynski’s equality,1, 2

$$\langle e^{-w_t}\rangle_f = e^{-\Delta f_t}, \tag{18}$$

relates nonequilibrium work and free energy differences. To facilitate the use of EBS in Jarzynski’s equality, we define a path ensemble by choosing F[X]=e−wt[X] in Eq. 10, leading to

$$q_{w_t}[X] \equiv e^{-w_t[X]}\, q_f[X]. \tag{19}$$

When only unidirectional data is available, the optimal EBS estimator for Jarzynski’s equality is

$$\Delta\hat f_t = -\ln\left[\frac{1}{N_f}\sum_{n=1}^{N_f} e^{-w_t[X_{fn}]}\right], \tag{20}$$

and its asymptotic variance is straightforwardly given by error propagation.38 Estimators30, 31, 39 and asymptotic variances39, 40 have also been developed for unidirectional importance sampling forms of the equality.

When bidirectional data is available, the same choice of F[X] in Eq. 17 gives the estimator

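$$\Delta\hat f_t = -\ln \sum_{i\in\{f,r\}}\sum_{n=1}^{N_i} \frac{e^{-w_t[X_{in}]}}{N_f + N_r\, e^{-\hat\Omega[X_{in}]}}. \tag{21}$$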

In this equation, choosing t=0 or t=τ leads to an implicit equation for Δf̂τ that is mathematically equivalent to the Bennett acceptance ratio method,3, 15 as previously explained.28, 41 The asymptotic variance of $\Delta\hat f_t$ is calculated by augmenting the matrices M and $\hat\Theta$ and using ϕ=ψ=Δft=−ln(cwt∕cf) in Eq. 9, such that

$$\delta^2\Delta\hat f_t = \hat\Theta_{ff} + \hat\Theta_{w_tw_t} - 2\,\hat\Theta_{fw_t}. \tag{22}$$
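To make the t = 0 case concrete: since w_0 = 0, Eq. 21 reduces to Σ_n 1/(N_f + N_r e^{−(w_τ[X_n] − Δf̂_τ)}) = 1, a single equation whose left side decreases monotonically in Δf̂_τ. The sketch below solves it by bisection (our choice, not necessarily the authors'), then evaluates Eq. 21 at other times and Eq. 20 for unidirectional data. Function names are hypothetical, and the works w_t on the time-reversed reverse paths are assumed to be supplied by the caller.

```python
import numpy as np

def _log_mix_weight(omega, n_f, n_r):
    """log of 1 / (N_f + N_r e^{-Omega}), computed without overflow."""
    return -np.logaddexp(np.log(n_f), np.log(n_r) - omega)

def jarzynski(w_t):
    """Unidirectional estimate of Delta f_t (Eq. 20)."""
    w_t = np.asarray(w_t, dtype=float)
    return -(np.logaddexp.reduce(-w_t) - np.log(w_t.size))

def solve_delta_f_tau(w_f, w_rev, n_iter=200):
    """Solve Eq. 21 at t = 0 for Delta f_tau by bisection.

    w_f   : w_tau on forward paths
    w_rev : w_tau on time-reversed reverse paths (minus the reverse-process work)
    """
    w = np.concatenate([w_f, w_rev]).astype(float)
    n_f, n_r = len(w_f), len(w_rev)

    def excess(df):
        # sum of weights minus one; decreases monotonically as df increases
        return np.exp(np.logaddexp.reduce(_log_mix_weight(w - df, n_f, n_r))) - 1.0

    lo, hi = w.min() - 50.0, w.max() + 50.0  # excess(lo) > 0 > excess(hi)
    for _ in range(n_iter):
        mid = 0.5 * (lo + hi)
        if excess(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def delta_f_t(w_t_f, w_t_rev, omega_f, omega_rev):
    """Bidirectional estimate of Delta f_t (Eq. 21)."""
    w_t = np.concatenate([w_t_f, w_t_rev]).astype(float)
    log_mix = _log_mix_weight(np.concatenate([omega_f, omega_rev]).astype(float),
                              len(w_t_f), len(w_t_rev))
    return -np.logaddexp.reduce(-w_t + log_mix)
```

At the self-consistent Δf̂_τ, evaluating Eq. 21 at t = τ returns the same value, consistent with the statement above that t = 0 and t = τ give equivalent equations.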

POTENTIAL OF MEAN FORCE

Building on Jarzynski’s equality, Hummer and Szabo developed expressions for the PMF,5, 6 the free energy as a function of an order parameter rather than of a thermodynamic state, which may be used to interpret single-molecule pulling experiments. In these experiments, a molecule is mechanically stretched by a force-transducing apparatus, such as a laser optical trap or atomic force microscope tip (cf. Ref. 6). The Hamiltonian governing the time evolution in these experiments, H(x;t)=H0(x)+V(z(x);t), is assumed to contain both a term corresponding to the unperturbed system, H0(x), and a time-dependent (typically harmonic) external bias potential imposed by the apparatus, V(z;t), which acts along a pulling coordinate, z(x). As the coordinate zt≡z(x(t)) is observed at fixed intervals Δt over the course of the experiment, we will henceforth use t=0,1,…,T as an integer time index. We calculate the work as the discrete sum $w_t = \sum_{n=0}^{t-1}\left[V_{n+1}(z_n) - V_n(z_n)\right]$, where Vn(z)≡V(z;nΔt).
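The discrete work sum is easy to get wrong by one time index. A sketch, assuming the recorded coordinates z_0, …, z_T are stored row-wise per path and that the bias is available as a vectorized callable V(z, n) in units of k_BT (both conventions, and the function name, are ours):

```python
import numpy as np

def discrete_work(z, V):
    """w_t = sum_{n=0}^{t-1} [V_{n+1}(z_n) - V_n(z_n)], with w_0 = 0.

    z : (n_paths, T+1) recorded pulling coordinate at t = 0, ..., T
    V : callable V(z_values, n) giving the bias at time index n
    """
    z = np.asarray(z, dtype=float)
    n_paths, n_times = z.shape
    increments = np.empty((n_paths, n_times - 1))
    for n in range(n_times - 1):
        # change in the bias at fixed configuration z_n when the protocol steps n -> n+1
        increments[:, n] = V(z[:, n], n + 1) - V(z[:, n], n)
    return np.concatenate([np.zeros((n_paths, 1)), np.cumsum(increments, axis=1)], axis=1)
```

For a particle held fixed while a harmonic trap moves, the sum telescopes to V_T(z) − V_0(z), which makes a convenient sanity check.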

While the expressions in Sec. 5 provide an estimate of relative free energies of the equilibrium thermodynamic states defined by H(x;t), they are not immediately useful as an estimate for the PMF along z.5, 6, 42 By applying the nonequilibrium estimator for thermodynamic expectations,3, 4 it was shown that the PMF in the absence of an external potential is given by5, 6

$$e^{-g_0(z)} = e^{V(z;t)}\left\langle \delta(z - z_t)\, e^{-w_t}\right\rangle_f, \tag{23}$$

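where the dimensionless PMF, g0(z), is defined in relation to the normalized density $p_0(z) = \int dx\,\delta(z - z(x))\,e^{-H_0(x)} \big/ \int dx\, e^{-H_0(x)}$ as g0(z)=−ln p0(z)−δg. In this equation, δg is a time-independent constant, $e^{-\delta g} = \int dx\, e^{-H(x;0)} \big/ \int dx\, e^{-H_0(x)}$.6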

This theorem [Eq. 23] can be used to develop estimators for the PMF by replacing the delta function with a kernel function of finite width, such as

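$$\delta(z - z_t) \approx \begin{cases} \Delta z^{-1}, & |z - z_t| < \Delta z/2,\\ 0, & \text{otherwise}. \end{cases} \tag{24}$$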

The width Δz must be small so that eV(z;t) does not vary substantially across it.

As this theorem is valid at all times, it is possible to obtain an asymptotically unbiased density estimate from each time slice. It is far more efficient, however, to estimate the PMF using all recorded time slices. While any linear combination of time slices will lead to a valid estimate, certain choices will be more statistically efficient (leading to lower variance) than others. One way to combine time slices is to use the asymptotic covariance matrix in the method of control variates,20 leading to a generalized least-squares optimal estimate of the PMF. Unfortunately, we empirically found this approach to be numerically unstable. A more numerically stable approach, proposed by Hummer and Szabo,5, 6 is based on the weighted histogram analysis method43, 44 and its generalizations,45, 46, 47 leading to

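$$e^{-g_0(z)} = \frac{\sum_{t} \langle \delta(z - z_t)\, e^{-w_t}\rangle_f \big/ \langle e^{-w_t}\rangle_f}{\sum_{t} e^{-V(z;t)} \big/ \langle e^{-w_t}\rangle_f}. \tag{25}$$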

While this weighting scheme is optimal, in a minimal-variance sense, for independent samples from multiple equilibrium distributions, these assumptions do not hold for time slices from nonequilibrium trajectories. However, Oberhofer and Dellago did not observe substantial improvement in PMF estimates when using other time-slice weighting schemes.48

By defining the path ensemble,

$$q_{z_t}[X] \equiv \delta(z - z_t[X])\, e^{-w_t[X]}\, q_f[X], \tag{26}$$

and making use of Jarzynski’s equality [Eq. 18] for $\langle e^{-w_t}\rangle_f = c_{w_t}/c_f$, we can write Hummer and Szabo’s PMF estimator as

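$$e^{-g_0(z)} = e^{\delta g}\, p_0(z) = \frac{\sum_{t=0}^{T} c_{z_t}/c_{w_t}}{\sum_{t=0}^{T} e^{-V(z;t)}\, c_f/c_{w_t}}, \tag{27}$$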

which can be readily analyzed in terms of EBS. While Hummer and Szabo proposed using the unidirectional path average estimator [Eq. 14] to estimate the expectations in Eq. 27, Minh and Adib later applied a bidirectional estimator [Eq. 17], leading to significantly improved statistical properties.28
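Putting Eqs. 24 and 25 together with the unidirectional averages of Eq. 14 gives the original Hummer–Szabo estimator. This NumPy sketch is an illustration under stated assumptions: a box kernel of width Δz, the bias supplied as a callable V(z, t) in units of k_BT, no overflow protection in e^{−w_t}, and a hypothetical function name. It returns g_0(z) only up to the additive constant δg, and grid points that no path visits give an infinite value.

```python
import numpy as np

def hummer_szabo_pmf(z, w, V, grid, dz):
    """Unidirectional Hummer-Szabo estimate of g_0(z) (Eq. 25), up to a constant.

    z, w : (n_paths, T+1) arrays of z_t and accumulated work w_t on forward paths
    V    : callable V(z_values, t) giving the bias at time index t
    grid : points at which g_0 is estimated; dz: box-kernel width (Eq. 24)
    """
    z = np.asarray(z, dtype=float)
    w = np.asarray(w, dtype=float)
    grid = np.asarray(grid, dtype=float)
    num = np.zeros(grid.size)
    den = np.zeros(grid.size)
    for t in range(z.shape[1]):
        boltz = np.exp(-w[:, t])            # e^{-w_t} for each path
        mean_boltz = boltz.mean()           # sample-mean estimate of e^{-Delta f_t}
        # box kernel indicator |z - z_t| < dz/2, shape (n_grid, n_paths)
        inside = np.abs(grid[:, None] - z[:, t][None, :]) < dz / 2.0
        num += (inside * boltz[None, :]).mean(axis=1) / dz / mean_boltz
        den += np.exp(-V(grid, t)) / mean_boltz
    return -np.log(num / den)
```

Replacing the forward-path sample means by the bidirectional estimator of Eq. 17 would give the Minh–Adib variant described in the text.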

The asymptotic variance of these estimators can be determined by choosing ϕ=ψ=p0(z) in Eq. 9. For the bidirectional estimator, the matrices M and $\hat\Theta$ will contain one column each for the f and r path ensembles, and T+1 columns each for the path ensembles associated with $q_{w_t}$ and $q_{z_t}$. The relevant partial derivatives are

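$$\frac{\partial (N/D)}{\partial \gamma_f} = -\frac{N}{D}, \tag{28}$$

$$\frac{\partial (N/D)}{\partial \gamma_{w_t}} = \frac{N\, e^{-V(z;t)}\, c_f/c_{w_t} - D\, c_{z_t}/c_{w_t}}{D^2}, \tag{29}$$

$$\frac{\partial (N/D)}{\partial \gamma_{z_t}} = \frac{c_{z_t}/c_{w_t}}{D}, \tag{30}$$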

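where γi=ln ci, N=∑t(czt∕cwt) is the numerator of Eq. 27, and D=∑te−V(z;t)(cf∕cwt) is its denominator. These lead to an estimate for $\delta^2\hat p_0(z)$; because p0(z) and e−g0(z) differ only by the constant factor eδg, their relative variances coincide. Finally, the asymptotic variance in the PMF is given by the error propagation formula, $\delta^2\hat g_0(z) = \delta^2\hat p_0(z)/\hat p_0(z)^2$.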
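Eqs. 28 to 30 are easy to mistype, so a finite-difference check is worthwhile. The sketch below (hypothetical names) evaluates N/D and its analytic derivatives at one grid point z from the log normalization constants γ_i. Assembled in the column order of Θ̂, with a zero entry for γ_r, the gradient g gives δ²(N/D) ≈ gᵀΘ̂g through Eq. 9.

```python
import numpy as np

def pmf_ratio_and_gradient(gamma_f, gamma_w, gamma_z, v):
    """N/D of Eq. 27 at one grid point z, plus its derivatives (Eqs. 28-30).

    gamma_f : ln c_f
    gamma_w : (T+1,) array of ln c_{w_t}
    gamma_z : (T+1,) array of ln c_{z_t} for this grid point
    v       : (T+1,) array of V(z; t) at this grid point
    """
    gamma_w = np.asarray(gamma_w, dtype=float)
    gamma_z = np.asarray(gamma_z, dtype=float)
    v = np.asarray(v, dtype=float)
    a = np.exp(gamma_z - gamma_w)          # c_{z_t} / c_{w_t}
    b = np.exp(-v + gamma_f - gamma_w)     # e^{-V(z;t)} c_f / c_{w_t}
    num, den = a.sum(), b.sum()            # N and D in the text
    ratio = num / den
    d_f = -ratio                           # Eq. 28
    d_w = (num * b - den * a) / den**2     # Eq. 29, one entry per t
    d_z = a / den                          # Eq. 30, one entry per t
    return ratio, d_f, d_w, d_z
```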

ILLUSTRATIVE EXAMPLE

We demonstrate these results with Brownian dynamics simulations on a one-dimensional potential, U0(z)=(5z³−10z+3)z, which were run as previously described.28 A time-dependent external perturbation, $V(z;t) = \tfrac{k_s}{2}(z - \lambda_t)^2$, with ks=15 is applied, such that the total potential is U(z;t)=U0(z)+V(z;t). After 100 steps of equilibration at the initial trap position λ0, λt is moved linearly over 750 steps from −1.5 to 1.5 in forward processes and from 1.5 to −1.5 in reverse processes. The position at each time step is calculated using $z_t = z_{t-1} - D\,\Delta t\,\partial U(z_{t-1};t)/\partial z + (2D\Delta t)^{1/2} R_t$, where the diffusion coefficient is D=1, the time step is Δt=0.001, and Rt∼N(0,1) is a random number from the standard normal distribution.
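For readers who want to reproduce the setup, here is a NumPy sketch of the forward protocol described above. Details the text does not state are our assumptions and are marked in comments: energies in units of k_BT, the trap centre written as λ_t, the particle started at λ_0 before the 100 equilibration steps, the trap moved before each Brownian step, and work accumulated with the discrete sum given earlier. The exact Δf_τ is obtained by quadrature of the trapped partition function; all function names are hypothetical.

```python
import numpy as np

def u0(z):
    """Unperturbed potential U0(z) = (5z^3 - 10z + 3) z."""
    return (5.0 * z**3 - 10.0 * z + 3.0) * z

def du0(z):
    """Derivative dU0/dz."""
    return 20.0 * z**3 - 20.0 * z + 3.0

def simulate_pulling(n_paths, lam_start=-1.5, lam_end=1.5, ks=15.0, d=1.0, dt=1e-3,
                     n_equil=100, n_pull=750, seed=None):
    """Euler Brownian dynamics; returns trap positions and z_t, w_t of shape (n_paths, n_pull+1)."""
    rng = np.random.default_rng(seed)
    noise = np.sqrt(2.0 * d * dt)

    def force(z, lam):
        # -dU/dz for U(z; t) = U0(z) + (ks/2) (z - lam)^2
        return -(du0(z) + ks * (z - lam))

    z = np.full(n_paths, lam_start, dtype=float)  # assumption: start at the trap centre
    for _ in range(n_equil):                      # equilibrate with the trap held fixed
        z = z + d * dt * force(z, lam_start) + noise * rng.standard_normal(n_paths)

    lams = np.linspace(lam_start, lam_end, n_pull + 1)
    zs = np.empty((n_paths, n_pull + 1))
    ws = np.zeros((n_paths, n_pull + 1))
    zs[:, 0] = z
    for n in range(1, n_pull + 1):
        # assumption: move the trap first; work is the bias change at fixed z
        ws[:, n] = ws[:, n - 1] + 0.5 * ks * ((z - lams[n])**2 - (z - lams[n - 1])**2)
        z = z + d * dt * force(z, lams[n]) + noise * rng.standard_normal(n_paths)
        zs[:, n] = z
    return lams, zs, ws

def exact_delta_f(lam0, lam1, ks=15.0):
    """Delta f = -ln[Z(lam1)/Z(lam0)] by a Riemann sum on a uniform grid."""
    z, dz = np.linspace(-4.0, 4.0, 40001, retstep=True)

    def log_partition(lam):
        e = -(u0(z) + 0.5 * ks * (z - lam)**2)
        m = e.max()
        return m + np.log(np.sum(np.exp(e - m)) * dz)

    return -(log_partition(lam1) - log_partition(lam0))
```

Reverse paths follow by swapping `lam_start` and `lam_end`. Because the pulling is fast, the mean forward work should clearly exceed the exact Δf_τ, as the second law requires on average.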

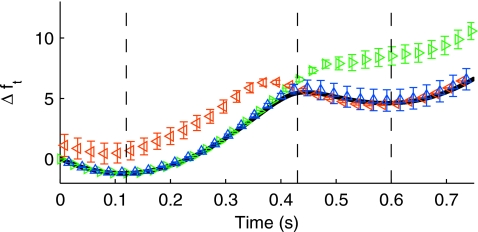

As previously noted,28, 49, 50, 51 unidirectional sampling leads to significant apparent bias in estimates of Δft (Fig. 1). In addition to the bias increasing as the system is driven further from equilibrium, we observe that the estimated variance also increases. Bidirectional sampling, on the other hand, leads to a significant reduction in bias and variance,28 such that the free energy estimate is within error bars of the actual free energy. Because $\Delta\hat f_t$ is the estimated free energy difference relative to the initial state at t = 0, its estimated variance $\delta^2\Delta\hat f_t$ increases with t, becoming equal to the well-known Bennett acceptance ratio asymptotic variance estimate15, 41 when t=τ.

Figure 1.

Comparison of estimators for Δft. This figure is similar to Fig. 1 of Ref. 28, except that error bars are now included and the sample size is halved. The unidirectional estimator [Eq. 20] is applied to 250 forward (rightward triangles) or reverse (leftward triangles, time reversed) sampled paths, and the bidirectional estimator [Eq. 21] to 125 paths in each direction (upward triangles). The exact Δft is shown as a solid line. Error bars (sometimes smaller than the markers) denote one standard deviation of $\Delta\hat f_t$, estimated using the expressions presented here. The vertical dashed lines are at the times considered in Fig. 4.

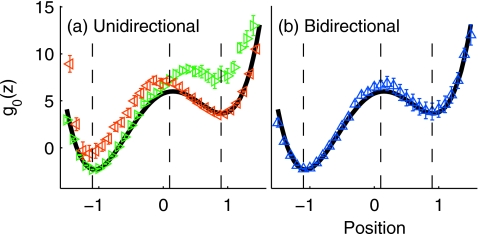

Similar trends are observed with the Hummer–Szabo PMF estimates (Fig. 2). For unidirectional sampling, the finite-sampling bias and the estimated variance increase where the PMF is far from the region sampled by the initial state. With bidirectional sampling, the bias is significantly reduced; the PMF estimate is largely within error bars of the actual PMF.

Figure 2.

Comparison of PMF estimators. This figure is similar to Fig. 2 of Ref. 28, except that error bars are now included and the sample size is halved. In the left panel, the unidirectional Hummer and Szabo estimator is applied to 250 forward (rightward triangles) or 250 reverse (leftward triangles) sampled paths. In the right panel, the bidirectional estimator is applied to 125 sampled paths in each direction (upward triangles). The exact PMF is shown as a solid line in both panels. Error bars (sometimes smaller than the markers) denote one standard deviation of $\hat g_0(z)$, estimated using the expressions presented here. The vertical dashed lines are at the positions considered in Fig. 4.

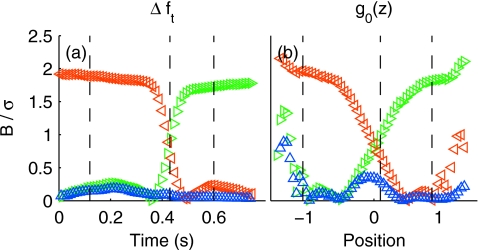

To analyze these trends more quantitatively, we repeated the experiment 1000 times. For both Δft and g0(z), we calculated the bias as the mean deviation of the S estimates from the true value and the standard deviation as the spread of the S estimates about their mean, where S=1000 is the number of replicates. The results from these more extensive simulations support the trends described above (Fig. 3). For unidirectional sampling, the bias in both Δft and g0(z) appears to increase significantly around the barrier crossing. In the bidirectional free energy estimate, however, the bias is small relative to the standard deviation at all times. Notably, in the bidirectional PMF estimate, there is a small spike in the bias near the barrier, potentially due to reduced sampling in that region.

Figure 3.

Ratio of estimator bias to standard deviation. This ratio is calculated for the (a) free energy and (b) PMF, using 1000 independent estimates. Each estimate is obtained, and the type of path sample is indicated, as in Figs. 1 and 2. The vertical dashed lines are at the times/positions considered in Fig. 4.

While in the large sample limit, the bias in the unidirectional estimate is expected to be small compared to the variance,50 our distribution of unidirectional e−Δft estimates is significantly skewed and does not resemble a Gaussian distribution expected by the central limit theorem (data not shown). Hence, the asymptotic limit has not been reached and the large relative bias is caused by insufficient sampling of rare events with low work values that dominate the exponential average.37 Larger sample sizes would be necessary for the distribution of estimates to be normally distributed and for the error to be dominated by the variance (which we estimate here) rather than the bias.

The accuracy of variance estimates may be assessed by comparing predicted and observed confidence intervals. If the estimates are indeed normally distributed about the true value, about 68% of estimates from many independent replicates of the experiment should be within one standard deviation of the true value, 95% within two, and so forth. Figure 4 compares confidence intervals predicted using the described asymptotic variance estimators and the actual fraction of estimates within the interval.
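Both diagnostics used in Figs. 3 and 4 reduce to a few lines. A sketch, assuming the replicate estimates, their predicted standard deviations, and the true value are available as arrays; for a normal distribution the predicted coverage within k standard deviations is erf(k/√2), about 0.68 for k = 1 and 0.95 for k = 2. Function names are ours, the standard deviation uses NumPy's default (divide by S), and the plain frequency here omits the Bayesian error bars of Fig. 4.

```python
import numpy as np
from math import erf, sqrt

def bias_to_std(estimates, truth):
    """Empirical bias divided by empirical standard deviation over replicates (cf. Fig. 3)."""
    estimates = np.asarray(estimates, dtype=float)
    return (estimates.mean() - truth) / estimates.std()

def observed_coverage(estimates, sigmas, truth, k):
    """Fraction of replicates whose estimate lies within k predicted std devs of the truth."""
    estimates = np.asarray(estimates, dtype=float)
    sigmas = np.asarray(sigmas, dtype=float)
    return float(np.mean(np.abs(estimates - truth) <= k * sigmas))

def predicted_coverage(k):
    """Coverage expected if estimates are normal about the truth with the predicted variance."""
    return erf(k / sqrt(2.0))
```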

Figure 4.

Validation of asymptotic variance estimators. Predicted vs observed fraction of 1000 independent estimates that are within an interval of the true value for [(a)–(c)] Δft and [(d)–(f)] g0(z) at the indicated times or positions. Each estimate is obtained, and the type of path sample is indicated, as in Figs. 1 and 2. Error bars on these fractions are 95% confidence intervals calculated using a Bayesian scheme described in Appendix B of Chodera et al. (Ref. 62) except that, for numerical reasons, the confidence interval was estimated from the variance of the beta distribution assuming approximate normality, rather than from the inverse beta cumulative distribution function.

We observe that the accuracy of our asymptotic variance estimate in characterizing the confidence interval largely depends on the presence of bias. In the bidirectional estimate, where there is little bias, the asymptotic variance estimate works very well. For the unidirectional estimates, it works well near the initial state but underestimates the error as the system is driven further away from equilibrium, concurring with the bias trend. In the bidirectional PMF estimate, the asymptotic variance estimate accurately describes the confidence interval except near the barrier, where it slightly underestimates the uncertainty, probably due to the small spike in bias.

In the regime where the bias is much smaller than the standard deviation, the asymptotic variance estimate provides a good estimate of the actual statistical error in the estimate. This also permits us to model the posterior distribution of the quantity being estimated as a multivariate normal distribution whose mean is the estimate and whose covariance is the asymptotic covariance estimate. Doing so provides a route to combining estimates from independent data sets collected from different path ensembles, such as different pulling speeds or equilibrium and nonequilibrium path ensembles, without knowledge of path probability ratios. This is achieved by maximizing the product of these posterior distributions in a manner similar to the Bayesian approach for estimating Δfτ described in Ref. 17.
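If each independent data set yields a normal posterior with mean μ_k and covariance Σ_k, the product of these densities is maximized at the precision-weighted mean, with combined covariance (Σ_k Σ_k⁻¹)⁻¹. A minimal NumPy sketch of that step, with a hypothetical function name and no claim that it matches the procedure of Ref. 17 in detail:

```python
import numpy as np

def combine_gaussian_estimates(means, covariances):
    """Mode of prod_k N(x; mu_k, Sigma_k): precision-weighted mean and its covariance."""
    precisions = [np.linalg.inv(np.atleast_2d(np.asarray(c, dtype=float))) for c in covariances]
    combined_cov = np.linalg.inv(sum(precisions))
    weighted = sum(P @ np.atleast_1d(np.asarray(m, dtype=float))
                   for P, m in zip(precisions, means))
    return combined_cov @ weighted, combined_cov
```

For two scalar estimates, 1.0 and 3.0, with unit variances it returns 2.0 with variance 0.5; an estimate with larger variance is down-weighted accordingly.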

DISCUSSION

In addition to the described experimentally relevant applications, we anticipate the use of our estimator in conjunction with a number of computational techniques. Recent work suggested30, 31, 40, 52, 53 that the convergence of Jarzynski’s equality may be improved by sampling from an alternate path ensemble, biased according to the work. Unfortunately, the optimal bias for this path ensemble includes the free energy itself,53 limiting its practicality. However, an iterative procedure in which progressively improving free energy estimates are used in successive biased path ensembles is feasible, and our estimator provides a way to combine all of the data. Free energy estimates may also be improved by optimizing the switching protocol in thermodynamic state space to minimize the dissipated work.54, 55, 56, 57, 58, 59, 60, 61 When the optimal protocol is known,59, 60, 61 the bidirectional form of our estimator is likely to improve free energy estimates over a unidirectional procedure. In the general case, protocol optimization may require an iterative procedure for which our estimator can also be used to combine the data.

Lastly, while we emphasized the use of EBS in nonequilibrium path-ensemble averages, the formalism described in Secs. 2 and 3 is equally applicable to other path ensembles. Given the explosion in popularity of transition path sampling,32, 33 in which novel path ensembles can be designed and sampled from, our estimator should find use in some of its applications.

ACKNOWLEDGMENTS

We thank Attila Szabo and Zhiqiang Tan for helpful discussions, Christopher Calderon for useful comments on the manuscript, and an anonymous reviewer for suggesting a potential application of the estimator. D.M. thanks Artur Adib for being a supportive postdoctoral adviser. This research was supported by the Intramural Research Program of the NIH, NIDDK. J.D.C. acknowledges support from a distinguished postdoctoral fellowship from the California Institute for Quantitative Biosciences (QB3) at the University of California, Berkeley.

APPENDIX A: CLOSED-FORM EXPRESSION FOR THE ASYMPTOTIC VARIANCE, GIVEN UNIDIRECTIONAL DATA

In this appendix, we show that given unidirectional data, the optimal EBS estimate is the sample mean and its variance simplifies to the variance of a sample mean. First, consider the application of the optimal EBS estimator, Eq. 6, to estimating a nonequilibrium path-ensemble average from a unidirectional data set,

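$$\hat c_{F_f} = \sum_{n=1}^{N_f} \frac{F[X_{fn}]\, q_f[X_{fn}]}{N_f\,\hat c_f^{-1}\, q_f[X_{fn}]}, \tag{A1}$$

$$\hat c_{F_f} = \frac{\hat c_f}{N_f}\sum_{n=1}^{N_f} F[X_{fn}]. \tag{A2}$$

Dividing both sides by $\hat c_f$, we obtain the sample mean estimator,

$$\widehat{\langle F\rangle}_f = \frac{\hat c_{F_f}}{\hat c_f} = \frac{1}{N_f}\sum_{n=1}^{N_f} F[X_{fn}]. \tag{A3}$$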

We shall now simplify the asymptotic variance estimate by closely following the procedure of Shirts and Chodera.27 When M has full column rank, $\hat\Theta$ can be written as (Eq. D7 of Ref. 27)

| (A4) |

where b is an arbitrary multiplicative factor and 1K is a 1×K matrix of ones.

The weight matrix M consists of two columns,

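$$M_{nf} = \frac{1}{N_f}, \tag{A5}$$

$$M_{nF_f} = \frac{F[X_{fn}]}{N_f\,\widehat{\langle F\rangle}_f}, \tag{A6}$$

obtained by applying Eqs. 8 and 11. This leads to

$$\mathbf{M}^T\mathbf{M} = \frac{1}{N_f}\begin{pmatrix} 1 & 1 \\ 1 & \widehat{\langle F^2\rangle}_f \big/ \widehat{\langle F\rangle}_f^{\,2} \end{pmatrix}. \tag{A7}$$

The matrix $\mathbf{M}^T\mathbf{M}$ has the determinant

$$\left|\mathbf{M}^T\mathbf{M}\right| = \frac{\widehat{\langle F^2\rangle}_f - \widehat{\langle F\rangle}_f^{\,2}}{N_f^2\,\widehat{\langle F\rangle}_f^{\,2}}, \tag{A8}$$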

which allows us to write the inverse covariance matrix as

| (A9) |

By applying the same steps as Appendix E of Shirts and Chodera,27 we then obtain the determinant

| (A10) |

where a=a12=a21. We then obtain the asymptotic covariance estimate

| (A11) |

To estimate the variance, we apply Eq. 13, leading to

| (A12) |

| (A13) |

| (A14) |

| (A15) |

| (A16) |

which is the variance of a sample mean estimate.

APPENDIX B: CLOSED-FORM EXPRESSION FOR THE ASYMPTOTIC VARIANCE, GIVEN BIDIRECTIONAL DATA

In this appendix, we obtain a closed-form expression for the asymptotic variance of the optimal EBS estimate for ⟨F⟩f, given bidirectional data. We follow a procedure similar to that of Appendix A. For the bidirectional case, the weight matrix M consists of three columns, M=[mf mr mFf], where mi is a column matrix of weights from Eqs. 8 and 11 corresponding to path ensemble i. The elements of M are

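$$M_{nf} = \frac{\epsilon(L_n)}{N_f}, \tag{B1}$$

$$M_{nr} = \frac{\epsilon(-L_n)}{N_r}, \tag{B2}$$

$$M_{nF_f} = \frac{F[X_n]\,\epsilon(L_n)}{N_f\,\widehat{\langle F\rangle}_f}, \tag{B3}$$

where ϵ is the Fermi function, $\epsilon(L_n) = 1/(1 + e^{-L_n})$, and we define $L_n \equiv \hat\Omega[X_n] + \ln(N_f/N_r)$. This allows us to write MTM as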

| (B4) |

Using the determinant,

| (B5) |

we write the inverse covariance matrix estimator as

| (B6) |

By applying the same steps as Appendix E of Shirts and Chodera,27 we obtain the determinant

| (B7) |

Applying Eq. 13 to $\widehat{\langle F\rangle}_f$ and simplifying, it can be shown that the variance estimate is

| (B8) |

| (B9) |

References

- Jarzynski C., Phys. Rev. Lett. 78, 2690 (1997). 10.1103/PhysRevLett.78.2690 [DOI] [Google Scholar]

- Jarzynski C., Phys. Rev. E 56, 5018 (1997). 10.1103/PhysRevE.56.5018 [DOI] [Google Scholar]

- Crooks G. E., Phys. Rev. E 61, 2361 (2000). 10.1103/PhysRevE.61.2361 [DOI] [Google Scholar]

- Neal R. M., Stat. Comput. 11, 125 (2001). 10.1023/A:1008923215028 [DOI] [Google Scholar]

- Hummer G. and Szabo A., Proc. Natl. Acad. Sci. U.S.A. 98, 3658 (2001). 10.1073/pnas.071034098 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hummer G. and Szabo A., Acc. Chem. Res. 38, 504 (2005). 10.1021/ar040148d [DOI] [PubMed] [Google Scholar]

- Minh D. D. L., Phys. Rev. E 74, 061120 (2006). 10.1103/PhysRevE.74.061120 [DOI] [PubMed] [Google Scholar]

- Minh D. D. L., J. Phys. Chem. B 111, 4137 (2007). 10.1021/jp068656n [DOI] [PubMed] [Google Scholar]

- Junier I., Mossa A., Manosas M., and Ritort F., Phys. Rev. Lett. 102, 070602 (2009). 10.1103/PhysRevLett.102.070602 [DOI] [PubMed] [Google Scholar]

- Lyman E. and Zuckerman D. M., J. Chem. Phys. 127, 065101 (2007). 10.1063/1.2754267 [DOI] [PubMed] [Google Scholar]

- Nummela J., Yassin F., and Andricioaei I., J. Chem. Phys. 128, 024104 (2008). 10.1063/1.2817332 [DOI] [PubMed] [Google Scholar]

- Feng E. H. and Crooks G. E., Phys. Rev. E 79, 012104 (2009). 10.1103/PhysRevE.79.012104 [DOI] [PubMed] [Google Scholar]

- Liphardt J., Dumont S., Smith S. B., I.Tinoco, Jr., and Bustamante C., Science 296, 1832 (2002). 10.1126/science.1071152 [DOI] [PubMed] [Google Scholar]

- Collin D., Ritort F., Jarzynski C., Smith S. B., Tinoco I., and Bustamante C., Nature (London) 437, 231 (2005). 10.1038/nature04061 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bennett C. H., J. Comput. Phys. 22, 245 (1976). 10.1016/0021-9991(76)90078-4 [DOI] [Google Scholar]

- Maragakis P., Spichty M., and Karplus M., Phys. Rev. Lett. 96, 100602 (2006). 10.1103/PhysRevLett.96.100602 [DOI] [PubMed] [Google Scholar]

- Maragakis P., Ritort F., Bustamante C., Karplus M., and Crooks G. E., J. Chem. Phys. 129, 024102 (2008). 10.1063/1.2937892 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Shirts M. R. and Pande V. S., J. Chem. Phys. 122, 144107 (2005). 10.1063/1.1873592 [DOI] [PubMed] [Google Scholar]

- Calderon C. P., Janosi L., and Kosztin I., J. Chem. Phys. 130, 144908 (2009). 10.1063/1.3106225 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tan Z., J. Am. Stat. Assoc. 99, 1027 (2004). 10.1198/016214504000001664 [DOI] [Google Scholar]

- Singhal N. and Pande V. S., J. Chem. Phys. 123, 204909 (2005). 10.1063/1.2116947 [DOI] [PubMed] [Google Scholar]

- Hinrichs N. S. and Pande V. S., J. Chem. Phys. 126, 244101 (2007). 10.1063/1.2740261 [DOI] [PubMed] [Google Scholar]

- Hahn A. M. and Then H., e-print arXiv:0904.0625v2.

- Vardi Y., Ann. Stat. 13, 178 (1985). 10.1214/aos/1176346585 [DOI] [Google Scholar]

- Gill R. D., Vardi Y., and Wellner J. A., Ann. Stat. 16, 1069 (1988). 10.1214/aos/1176350948 [DOI] [Google Scholar]

- Kong A., McCullagh P., Meng X.-L., Nicolae D., and Tan Z., J. R. Stat. Soc. Ser. B (Stat. Methodol.) 65, 585 (2003). 10.1111/1467-9868.00404

- Shirts M. R. and Chodera J. D., J. Chem. Phys. 129, 124105 (2008). 10.1063/1.2978177

- Minh D. D. L. and Adib A. B., Phys. Rev. Lett. 100, 180602 (2008). 10.1103/PhysRevLett.100.180602

- H. Doss makes this suggestion in the conference discussion of Ref. .

- Sun S., J. Chem. Phys. 118, 5769 (2003). 10.1063/1.1555845

- Ytreberg F. M. and Zuckerman D. M., J. Chem. Phys. 120, 10876 (2004). 10.1063/1.1760511

- Pratt L., J. Chem. Phys. 85, 5045 (1986). 10.1063/1.451695

- Dellago C., Bolhuis P. G., Csajka F. S., and Chandler D., J. Chem. Phys. 108, 1964 (1998). 10.1063/1.475562

- Nummela J. and Andricioaei I., Biophys. J. 93, 3373 (2007). 10.1529/biophysj.107.111658

- Crooks G. E., J. Stat. Phys. 90, 1481 (1998). 10.1023/A:1023208217925

- Crooks G. E., Phys. Rev. E 60, 2721 (1999). 10.1103/PhysRevE.60.2721

- Jarzynski C., Phys. Rev. E 73, 046105 (2006). 10.1103/PhysRevE.73.046105

- Lu L. and Woolf T. B., in Free Energy Calculations, edited by Chipot C. and Pohorille A. (Springer, Berlin, 2007), Vol. 86.

- Minh D. D. L., J. Chem. Phys. 130, 204102 (2009). 10.1063/1.3139189

- Oberhofer H., Dellago C., and Geissler P., J. Phys. Chem. B 109, 6902 (2005). 10.1021/jp044556a

- Shirts M. R., Bair E., Hooker G., and Pande V. S., Phys. Rev. Lett. 91, 140601 (2003). 10.1103/PhysRevLett.91.140601

- Minh D. D. L. and McCammon J. A., J. Phys. Chem. B 112, 5892 (2008). 10.1021/jp0733163

- Ferrenberg A. M. and Swendsen R. H., Phys. Rev. Lett. 63, 1195 (1989). 10.1103/PhysRevLett.63.1195

- Kumar S., Bouzida D., Swendsen R. H., Kollman P. A., and Rosenberg J. M., J. Comput. Chem. 13, 1011 (1992). 10.1002/jcc.540130812

- Veach E. and Guibas L. J., Optimally combining sampling techniques for Monte Carlo rendering, SIGGRAPH '95: Proceedings of the 22nd annual conference on computer graphics and interactive techniques (ACM, New York, 1995), pp. 419–428.

- Veach E., Ph.D. dissertation, Stanford University, 1997.

- Souaille M. and Roux B., Comput. Phys. Commun. 135, 40 (2001). 10.1016/S0010-4655(00)00215-0

- Oberhofer H. and Dellago C., J. Comput. Chem. 30, 1726 (2009). 10.1002/jcc.21290

- Zuckerman D. M. and Woolf T. B., Phys. Rev. Lett. 89, 180602 (2002). 10.1103/PhysRevLett.89.180602

- Gore J., Ritort F., and Bustamante C., Proc. Natl. Acad. Sci. U.S.A. 100, 12564 (2003). 10.1073/pnas.1635159100

- Zuckerman D. M. and Woolf T. B., J. Stat. Phys. 114, 1303 (2004). 10.1023/B:JOSS.0000013961.84860.5b

- Atilgan E. and Sun S. X., J. Chem. Phys. 121, 10392 (2004). 10.1063/1.1813434

- Oberhofer H. and Dellago C., Comput. Phys. Commun. 179, 41 (2008). 10.1016/j.cpc.2008.01.017

- Mark A. E., van Gunsteren W. F., and Berendsen H. J. C., J. Chem. Phys. 94, 3808 (1991). 10.1063/1.459753

- Reinhardt W. P. and Hunter J. E., J. Chem. Phys. 97, 1599 (1992). 10.1063/1.463235

- Hunter J. E., Reinhardt W. P., and Davis T. F., J. Chem. Phys. 99, 6856 (1993). 10.1063/1.465830

- Schön J. C., J. Chem. Phys. 105, 10072 (1996). 10.1063/1.472836

- Jarque C. and Tidor B., J. Phys. Chem. B 101, 9402 (1997). 10.1021/jp9716795

- Schmiedl T. and Seifert U., Phys. Rev. Lett. 98, 108301 (2007). 10.1103/PhysRevLett.98.108301

- Then H. and Engel A., Phys. Rev. E 77, 041105 (2008). 10.1103/PhysRevE.77.041105

- Gomez-Marin A., Schmiedl T., and Seifert U., J. Chem. Phys. 129, 024114 (2008). 10.1063/1.2948948

- Chodera J. D., Swope W. C., Pitera J. W., Seok C., and Dill K. A., J. Chem. Theory Comput. 3, 26 (2007). 10.1021/ct0502864