Abstract

In order to understand how emotional state influences the listener’s physiological response to speech, subjects looked at emotion-evoking pictures while 32-channel EEG evoked responses (ERPs) to an unchanging auditory stimulus (“danny”) were collected. The pictures were selected from the International Affective Picture System database. They were rated by participants and differed in valence (positive, negative, neutral), but not in dominance and arousal. Effects of viewing negative emotion pictures were seen as early as 20 msec (p = .006). An analysis of the global field power highlighted a time period of interest (30.4–129.0 msec) where the effects of emotion are likely to be the most robust. At the cortical level, the responses differed significantly depending on the valence ratings the subjects provided for the visual stimuli, which divided them into the high valence intensity group and the low valence intensity group. The high valence intensity group exhibited a clear divergent bivalent effect of emotion (ERPs at Cz during viewing neutral pictures subtracted from ERPs during viewing positive or negative pictures) in the time region of interest (rΦ = .534, p < .01). Moreover, group differences emerged in the pattern of global activation during this time period. Although both groups demonstrated a significant effect of emotion (ANOVA, p = .004 and .006, low valence intensity and high valence intensity, respectively), the high valence intensity group exhibited a much larger effect. Whereas the low valence intensity group exhibited its smaller effect predominantly in frontal areas, the larger effect in the high valence intensity group was found globally, especially in the left temporal areas, with the largest divergent bivalent effects (ANOVA, p < .00001) in high valence intensity subjects around the midline. 
Thus, divergent bivalent effects were observed between 30 and 130 msec, and were dependent on the subject’s subjective state, whereas the effects at 20 msec were evident only for negative emotion, independent of the subject’s behavioral responses. Taken together, it appears that emotion can affect auditory function early in the sensory processing stream.

INTRODUCTION

Emotion, a powerful modulator of almost every human experience, has increasingly been shown to play an active role in sensory processing in multiple modalities (for reviews, see Vuilleumier, 2005; Dolan, 2002). That emotion, defined as subjectively experienced affective states, influences sensory processing is well established in animal experiments (Brandao et al., 2005; Macedo, Cuadra, Molina, & Brandao, 2005; Nobre, Sandner, & Brandao, 2003; Poremba et al., 2003; Marsh, Fuzessery, Grose, & Wenstrup, 2002; Brandao, Coimbra, & Osaki, 2001; Kaas, Hackett, & Tramo, 1999; Erhan, Borod, Tenke, & Bruder, 1998; Kline, Schwartz, Fitzpatrick, & Hendricks, 1993; Ledoux, Sakaguchi, & Reis, 1984; Young & Horner, 1971). In human studies, converging evidence from a variety of methodologies has demonstrated the influence of emotion on sensory processing of visual (Williams et al., 2004; Balconi & Pozzoli, 2003; Batty & Taylor, 2003; Fischer et al., 2003; Holmes, Vuilleumier, & Eimer, 2003; Schupp, Junghöfer, Weike, & Hamm, 2003a, 2003b; Campanella, Quinet, Bruyer, Crommelinck, & Guerit, 2002; Sato, Kochiyama, Yoshikawa, & Matsumura, 2001; Davidson & Slagter, 2000; Pizzagalli, Koenig, Regard, & Lehmann, 1998; Joost, Bach, & Schulte-Monting, 1992), audiovisual (Armony & Dolan, 2001), and auditory stimuli (Alexandrov, Klucharev, & Sams, 2007). The purpose of this study was to investigate the effects of emotion on auditory processing in humans, specifically to characterize when (time after stimulus onset) and where (topographically) the influence takes place.
With respect to the when question, a growing body of literature clearly elucidates the early emotional influence in the auditory modality in animals (Macedo et al., 2005; Nobre et al., 2003; Poremba et al., 2003; Marsh et al., 2002; Brandao et al., 2001; Kaas et al., 1999; Erhan et al., 1998; Kline et al., 1993; Ledoux et al., 1984; Young & Horner, 1971); however, the specific effects of emotion on early auditory processing have not been characterized in humans. For humans, the earliest known influence of emotion on sensory processing is in the visual modality, hundreds of milliseconds after stimulus onset (Müller, Andersen, & Keil, 2008), but the auditory system works on a faster time scale than the visual system. With respect to the where question, laterality effects have been generally observed during emotion (Lee et al., 2004; Canli, Desmond, Zhao, Glover, & Gabrieli, 1998; Jones & Fox, 1992), and the hemisphere involved depends on the valence of emotional content of speech (Pell, 1999; Starkstein, 1994; Lancker & Sidtis, 1992; Scherer, 1986). Here we report evidence that emotional modulation is early and lateralized in a manner consistent with earlier studies (Lee et al., 2004), and interpret our results to suggest that the incoming auditory stream is modulated by a fast pathway from the limbic system.

Emotion has been shown to have a modulatory effect on sensory processing. In the visual system, the influence of emotion on evoked potentials and fMRI has been firmly established in a variety of paradigms (Williams et al., 2004; Balconi & Pozzoli, 2003; Batty & Taylor, 2003; Fischer et al., 2003; Holmes et al., 2003; Schupp et al., 2003a, 2003b; Campanella et al., 2002; Sato et al., 2001; Davidson & Slagter, 2000; Pizzagalli et al., 1998; Joost et al., 1992). Moreover, emotion facilitates visual search by reducing the cost of additional distracting features (Eastwood, Smilek, & Merikle, 2001), and emotional cues improve the detection threshold for low-contrast stimuli (Phelps, Ling, & Carrasco, 2006). In the auditory system, tuning properties of neurons in the primary auditory cortex change to reflect sensory information that is meaningful to an organism (Bao, Chang, Davis, Gobeske, & Merzenich, 2003; Fritz, Shamma, Elhilali, & Klein, 2003; Irvine, Rajan, & Brown, 2001; Weinberger, Hopkins, & Diamond, 1984). Furthermore, in rats, effects of emotion on the afferent auditory stream were measured in the midbrain, specifically the inferior colliculus (Brandao et al., 2001).

An anatomical mechanism by which emotion, typically associated with limbic areas, influences the afferent auditory stream is likely an evolutionarily ancient, fast subcortical pathway rather than the high-resolution, but slower cortical pathway. In the auditory system, anatomical evidence supports the existence of a fast subcortical pathway in addition to the slower pathway to the cortex, like the parallel pathways for the visual system (for a review, see Johnson, 2005). Retrograde trace labeling has indicated direct and widespread projections to the inferior colliculus, from the basal nucleus of the amygdala (Marsh et al., 2002). This is a projection from a part of the limbic system, which is typically involved in emotional processing in the mammalian brain, to a subcortical auditory relay station. Moreover, in rodents, lesion studies indicate that emotionally aversive inputs from the inferior colliculus receive serotoninergic modulatory projections from the basolateral nucleus and the central nucleus of the amygdala (Macedo et al., 2005). Lesions of the auditory midbrain and thalamus have been shown to block autonomic and conditioned emotional responses coupled to acoustic stimuli (Ledoux et al., 1984). Even in primates, it has been shown that there is a distributed set of connections from the auditory periphery to limbic areas (Kaas et al., 1999). In rhesus monkeys, the amygdala is activated with auditory stimulation, but interestingly, the amygdala is not activated if the inferior colliculus is ablated (Poremba et al., 2003).

Functional characterization of this fast subcortical pathway, however, has been diffuse. In auditory evoked potentials (AEPs), there is a direct relation between the neuroanatomical region of the processing stream and the time of the response. Remarkably, auditory responses measured in the inferior colliculus of rats are modulated by fear-inducing stimuli as early as 8 msec poststimulus onset (Brandao et al., 2001). Furthermore, it is known that the inferior colliculus is under tonic GABAergic control, and that a reduction of GABA levels not only induces freezing behavior (a typical fear behavior in rodents) but also enhances AEPs and impairs the startle reaction to a loud sound (Nobre et al., 2003). This tonic control of the inferior colliculus provides a mechanism for the limbic system to modulate the incoming response.

Because emotion exhibits laterality effects in sensory processing in both the auditory and visual modalities, it makes sense for emotional modulation of the afferent auditory processing stream to also be lateralized. In the auditory system, laterality effects have been observed for prosodic cues, the emotional content of speech (Pell, 1999; Starkstein, 1994; Lancker & Sidtis, 1992; Scherer, 1986), specifically in the lateral temporal lobes (Mitchell, Elliot, Barry, Cruttenden, & Woodruff, 2003). In the visual system, there is additional evidence for inherent laterality in emotional processing. Valence-dependent laterality has been established for visual (Lee et al., 2004; Canli et al., 1998) as well as audiovisual stimuli (Jones & Fox, 1992), where negative emotions appear to be processed consistently by the right hemisphere and positive emotions by the left hemisphere. This pattern of laterality was established with fMRI in the visual system by Lee et al. (2004) using pictures from the International Affective Picture System (IAPS), a set of visual stimuli normed for a large number of emotional dimensions, including valence (pleasant, unpleasant, or neutral), intensity, and other affective dimensions. Pictures from this database have previously been used to demonstrate the effects of picture valence and arousal on the AEP, auditory evoked mismatch response (Lang & Öhman, 1988).

Thus, to investigate the effects of emotion on auditory processing in humans, we used the IAPS pictures to elicit emotional states visually while neural responses to an unchanging sound were measured. Laterality effects similar to those already shown in the visual system are observed here. Furthermore, because there is a direct relationship between time of response and neuroanatomical region in AEPs, it is concluded that the emotional state modulates the incoming auditory stream.

METHODS

Subjects

Thirty-three right-handed adults (18 men), 20 to 35 years of age, with normal vision and hearing, were asked to complete a brief questionnaire on caffeine intake, general medication, exercise level, and circadian rhythm. Subjects were required to have no learning impairment or psychiatric disorder and not to be taking medication that influences cortisol levels, including (but not limited to) hydrocortisone or selective serotonin reuptake inhibitors.

Division into Groups

Behavioral

The subjects are asked to rate experiences evoked by the stimuli using standardized 9-point valence and arousal scales. “High valence intensity” subjects (n = 13) are defined as those who rate each picture set at the expected values (negative as 9, positive as 1 or 2, and neutral as 4–6), and “low valence intensity” subjects (n = 20) are those who fail to meet these criteria. Although the visual stimuli were chosen such that the valence ratings of negative and positive stimuli were equivalently intense according to the published norms, the division of subjects into groups depends on the variability of ratings given by this particular set of subjects.
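The grouping rule just described can be sketched as a simple check on each subject's block ratings. This is only an illustration: the function name, the one-rating-per-block data layout, and the example ratings are hypothetical and not taken from the study.

```python
def is_high_valence_intensity(negative, positive, neutral):
    """Return True if a subject's ratings match the expected values.

    Criteria as stated in the text: negative rated 9, positive rated
    1 or 2, and neutral rated 4-6 on the 9-point valence scale.
    """
    return negative == 9 and positive in (1, 2) and 4 <= neutral <= 6


# Hypothetical ratings (negative, positive, neutral) for three subjects.
ratings = {"s01": (9, 1, 5), "s02": (8, 2, 5), "s03": (9, 2, 7)}

groups = {
    subject: "high VI" if is_high_valence_intensity(*r) else "low VI"
    for subject, r in ratings.items()
}
print(groups)
```

A subject who misses any one of the three criteria falls into the low valence intensity group, which is why that group is the larger of the two.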

Physiological

Subjects were alternately divided into “high-shift” (n = 17) and “low-shift” (n = 16) groups on the basis of the root mean square (RMS) of the difference waveform at Cz.
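As a rough sketch of this physiological grouping, the difference waveform and its RMS could be computed as follows. The Results describe the criterion as a minimum threshold for both valences; the reading of that phrase used here (both RMS values must reach the threshold), the threshold value, and the toy waveforms are all assumptions made for illustration.

```python
import math


def rms(samples):
    """Root mean square of a sequence of voltages."""
    return math.sqrt(sum(v * v for v in samples) / len(samples))


def difference_wave(emotion, neutral):
    """Effect-of-emotion waveform: emotional response minus neutral response."""
    return [e - n for e, n in zip(emotion, neutral)]


def shift_group(positive, negative, neutral, threshold):
    """Classify one subject from waveforms recorded at Cz.

    Assumed rule: 'high' only if the RMS of BOTH difference waves
    (positive-neutral and negative-neutral) reaches the threshold.
    """
    rms_pos = rms(difference_wave(positive, neutral))
    rms_neg = rms(difference_wave(negative, neutral))
    return "high" if min(rms_pos, rms_neg) >= threshold else "low"


# Toy waveforms (arbitrary units); the threshold of 0.5 is hypothetical.
neutral = [0.0, 0.0, 0.0, 0.0]
strong = shift_group([1.0] * 4, [-2.0] * 4, neutral, threshold=0.5)
weak = shift_group([0.1] * 4, [-2.0] * 4, neutral, threshold=0.5)
print(strong, weak)
```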

Auditory Stimulus

The acoustic stimulus is an emotionally neutral, natural 457-msec “Danny,” [dæni] spoken by a female speaker (average F0 = 189.8 Hz). This fully voiced acoustic stimulus elicits clear and characteristic evoked responses. The Kraus lab has considerable experience evaluating and analyzing brainstem and cortical responses to speech stimuli (reviewed in Abrams, Nicol, Zecker, & Kraus, 2006; Johnson, Nicol, & Kraus, 2005; Kraus & Nicol, 2005; Russo, Nicol, Zecker, Hayes, & Kraus, 2005; Wible, Nicol, & Kraus, 2005). The stimulus was chosen because it can be articulated without heavy aspiration. The specific recording was chosen because the fundamental frequency (F0) is especially steady within the first syllable. Auditory stimuli are presented at a binaurally comfortable listening level (70 dB peak SPL) through ER-3 ear inserts (Etymotics).

Visual Stimuli

Visual stimuli are digitized color pictures chosen from the IAPS (Lang & Öhman, 1988). The IAPS contains close to 1000 different pictures which have previously been experimentally evaluated and normed on two dimensions: valence and arousal (Ito, Cacioppo, & Lang, 1998). Pictures have been evaluated very similarly by different subject groups (Ito et al., 1998; Davis et al., 1995; Lang et al., 1993) and elicited emotional responses in the viewers. The modification of subjects’ emotions is supported by results demonstrating that autonomic responses, facial muscle activity, and amplitude of the startle reflex correlate with arousal and valence dimensions (Lang et al., 1993; Lang, Bradley, & Cuthbert, 1990). In this study, three emotional categories are used from this database: negative (e.g., mutilations), neutral (e.g., mushrooms), and positive (e.g., pleasant sceneries). Within a category, images (30 each) are selected to evoke the same arousal level.

Stimulus Presentation

Visual stimuli are presented in monovalent (positive, negative, or neutral IAPS pictures) blocks (random order), followed by a 5-min cool-down period to attenuate the emotional effects of the block. Four auditory-alone blocks are inserted before, after, and between emotional blocks. In the auditory-alone condition, the subject is asked to fixate on a white asterisk at the center of a green screen. The auditory stimuli are presented while the subject looks at the screen, with variable ISI (range = 779–863 msec) to a total of 420 presentations per emotional condition and 1260 auditory-alone presentations. Each picture is presented one time only for 20 sec, during which the auditory stimulus is played 14 times. Stimuli are presented with Presentation (NeuroBehavioral Systems), which directly communicates with the AEP recording system to allow stimulus-synchronized recordings. Visual stimuli are projected onto a 97 cm × 122 cm screen using an LCD projector in a soundproof room, with the subject seated in a comfortable chair, 3 m away.
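The presentation counts can be checked with a little arithmetic. The sketch below assumes that the ISI is measured from stimulus offset to the next onset, which the text does not state; under that assumption, 14 presentations fit within each 20-sec picture at either end of the ISI range.

```python
PICTURES_PER_CATEGORY = 30        # images per emotional category
PRESENTATIONS_PER_PICTURE = 14    # sounds played during each picture
PICTURE_DURATION_MS = 20_000      # each picture is shown for 20 sec
STIMULUS_MS = 457                 # duration of the auditory stimulus
ISI_RANGE_MS = (779, 863)         # variable interstimulus interval

# Total auditory presentations per emotional condition.
per_condition = PICTURES_PER_CATEGORY * PRESENTATIONS_PER_PICTURE
print(per_condition)  # 420

# Assumption: ISI runs from stimulus offset to the next onset, so one
# cycle lasts STIMULUS_MS + ISI. Time needed for 14 cycles per picture:
train_ms = [PRESENTATIONS_PER_PICTURE * (STIMULUS_MS + isi) for isi in ISI_RANGE_MS]
print(train_ms)  # [17304, 18480]
```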

Data Collection

EEG

Responses are recorded in continuous mode with a PC-based system running Neuroscan Acquire 4 (Neuroscan; Compumedics) software through a SynAmp2 amplifier (Neuroscan; Compumedics). Responses are collected at a sampling rate of 5000 Hz with 31 tin electrodes affixed to an Electro-Cap International (Eaton, OH) brand cap (impedance < 5 kΩ). Additional electrodes were placed on the earlobes (for reference) and superior and outer canthus of the left eye (eye-blink monitor). Statistical analyses were performed using Matlab and Microsoft Excel.

Perception

The subjects are asked to rate experiences evoked by the stimuli using standardized 9-point valence and arousal scales. Subjects do this at the end of each monovalent block (neutral, positive, negative).

RESULTS

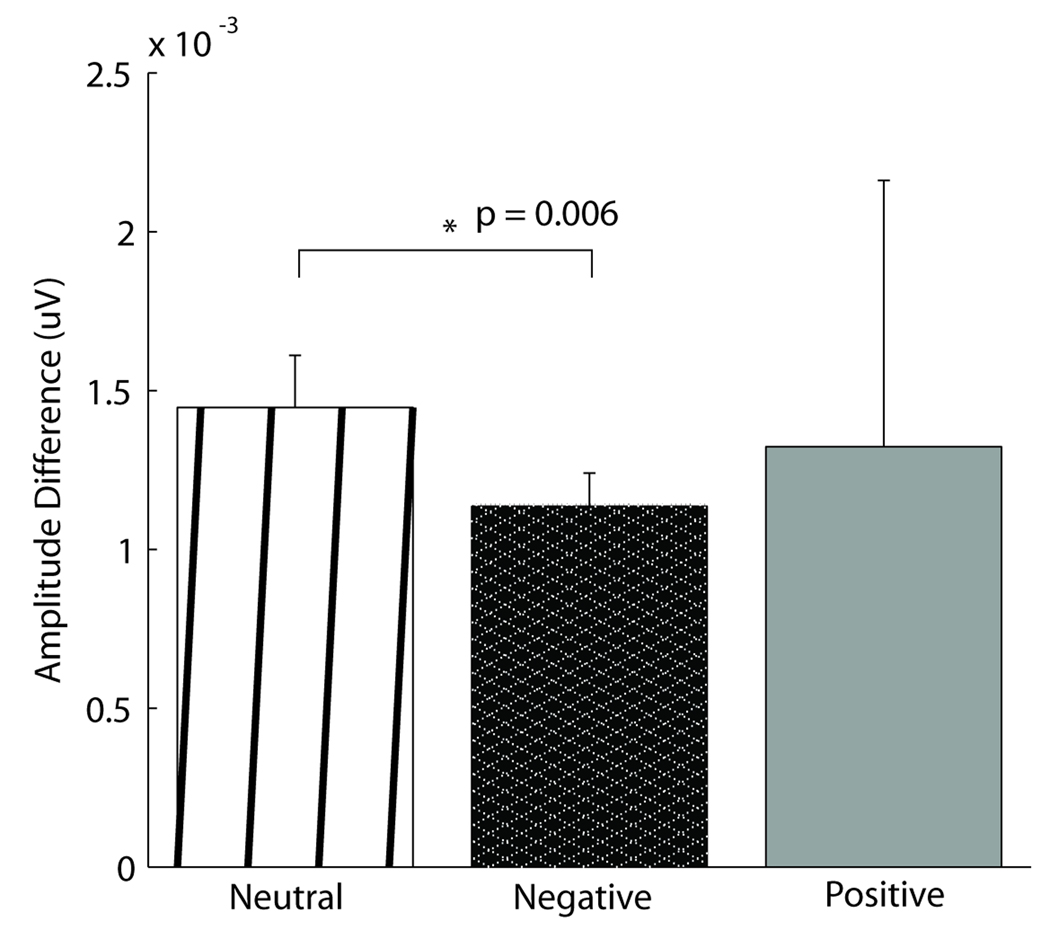

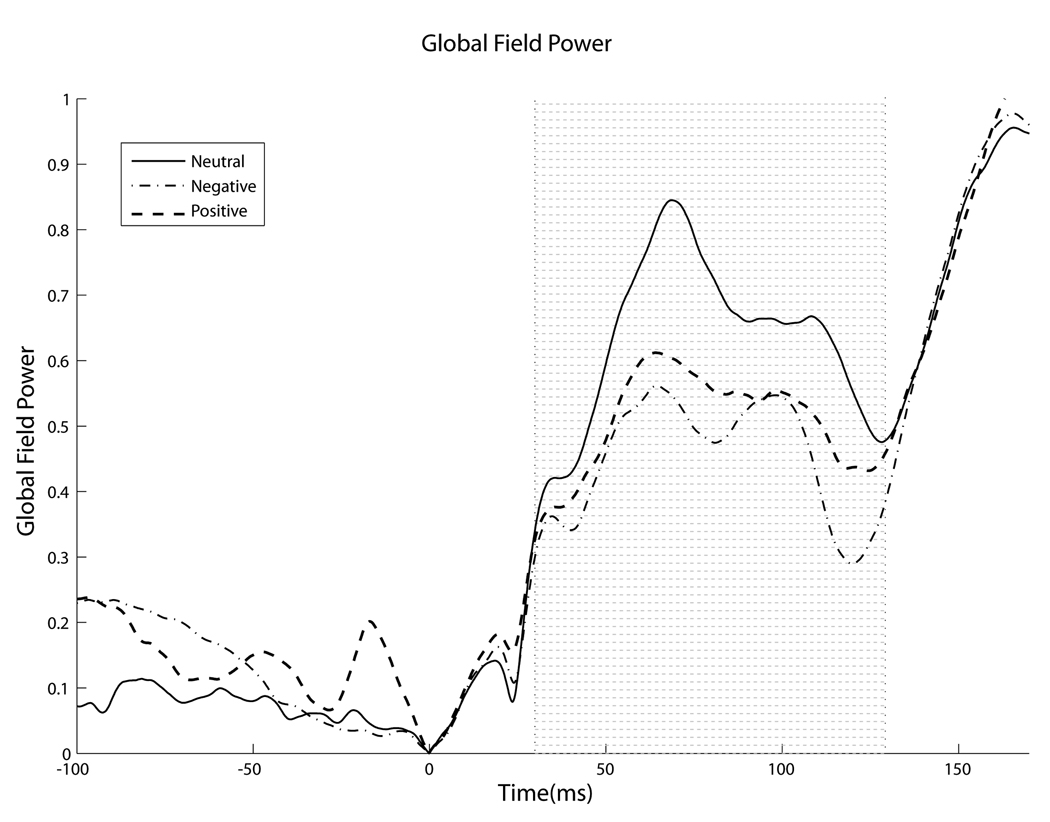

Across all subjects, emotion generated small but noticeable differences in the auditory evoked response as early as 20 msec after stimulus onset, whereas later (30.4–129.0 msec) larger valence-dependent and laterality effects were found to relate significantly to the reported subjective affective state. In the overall subject population, effects of emotion, specifically of negative valence, were seen as early as 20 msec after stimulus onset, in a time period of the middle latency response, implicating a role for the fast pathway. The effect, calculated by the peak-to-trough amplitude of the positive deflection in the 9.4–10.2 msec range and the negative deflection in the 20.0–22.0 msec range (typically called Na), is illustrated in Figure 1. The peak-to-trough amplitude is significantly smaller for subjects while in the induced negative state than in the neutral state. Furthermore, a strong emotional state, regardless of valence, decreased the distribution of activity across the scalp, measured by variation of voltage distribution. Namely, global field power (GFP), computed by taking the spatial standard deviation of the response waveforms across all channels at each point in time, was reduced by a strong emotional state in a specific portion of the response (30.4–129.0 msec) (Figure 2). It is important to note that a similar decrease in GFP between valences does not imply that emotion affects encoding similarly irrespective of valence, merely that the different valences have a similar magnitude of effect on global voltage variation.

Figure 1.

Early effects of negative emotion. Effects of negative emotion were seen as early as 20 msec in a precortical time range. There was an overall effect of condition (ANOVA, p = .05) in the average peak-to-trough amplitude difference between the peak in the 9.4–10.2 msec range and the trough in the 20.0–22.0 msec range (typically called Na), which was significantly larger in the neutral condition than in the negative condition, as indicated by a t test (p = .006). The positive condition did not differ from either neutral or negative.
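A minimal sketch of the peak-to-trough measure follows, assuming the peak is taken as the maximum voltage in the 9.4–10.2 msec window and the trough as the minimum in the 20.0–22.0 msec window. The function name and the synthetic waveform are hypothetical; only the two windows and the 5000-Hz sampling rate come from the text.

```python
def peak_to_trough(times_ms, voltages,
                   peak_window=(9.4, 10.2), trough_window=(20.0, 22.0)):
    """Maximum voltage in the peak window minus minimum in the trough window."""
    peak = max(v for t, v in zip(times_ms, voltages)
               if peak_window[0] <= t <= peak_window[1])
    trough = min(v for t, v in zip(times_ms, voltages)
                 if trough_window[0] <= t <= trough_window[1])
    return peak - trough


# Synthetic waveform at 5000 Hz (one sample every 0.2 msec), for illustration.
times = [i / 5 for i in range(150)]   # 0.0 to 29.8 msec
voltages = [0.0] * len(times)
voltages[49] = 1.0                    # positive deflection at 9.8 msec
voltages[105] = -0.5                  # negative deflection (Na) at 21.0 msec

amplitude = peak_to_trough(times, voltages)
print(amplitude)  # 1.5
```

Computed once per subject and condition, values of this kind are what the ANOVA and t test in the caption compare.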

Figure 2.

Emotional condition decreases global field power. In the early portion of the response (yellow shaded region, 30.4–129.0 msec), emotions decrease global field power of the auditory response, independent of emotional valence (ANOVA, p < .001).
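Global field power as defined in the Results (the spatial standard deviation across all channels at each time point) can be sketched in a few lines. Whether the population or sample standard deviation was used is not stated; the population form is assumed here, and the three-channel input is a toy example.

```python
from statistics import pstdev


def global_field_power(channels):
    """GFP(t): standard deviation across channels at each time sample.

    `channels` is a list of equal-length waveforms, one per electrode.
    The population standard deviation is an assumption.
    """
    return [pstdev(sample) for sample in zip(*channels)]


# Toy data: three channels, two time samples each.
channels = [
    [1.0, 0.0],
    [2.0, 0.0],
    [3.0, 0.0],
]
gfp = global_field_power(channels)
print(gfp)
```

When every channel carries the same voltage at a given sample, as in the second sample above, the GFP at that sample is zero; larger spatial variation in voltage gives larger GFP.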

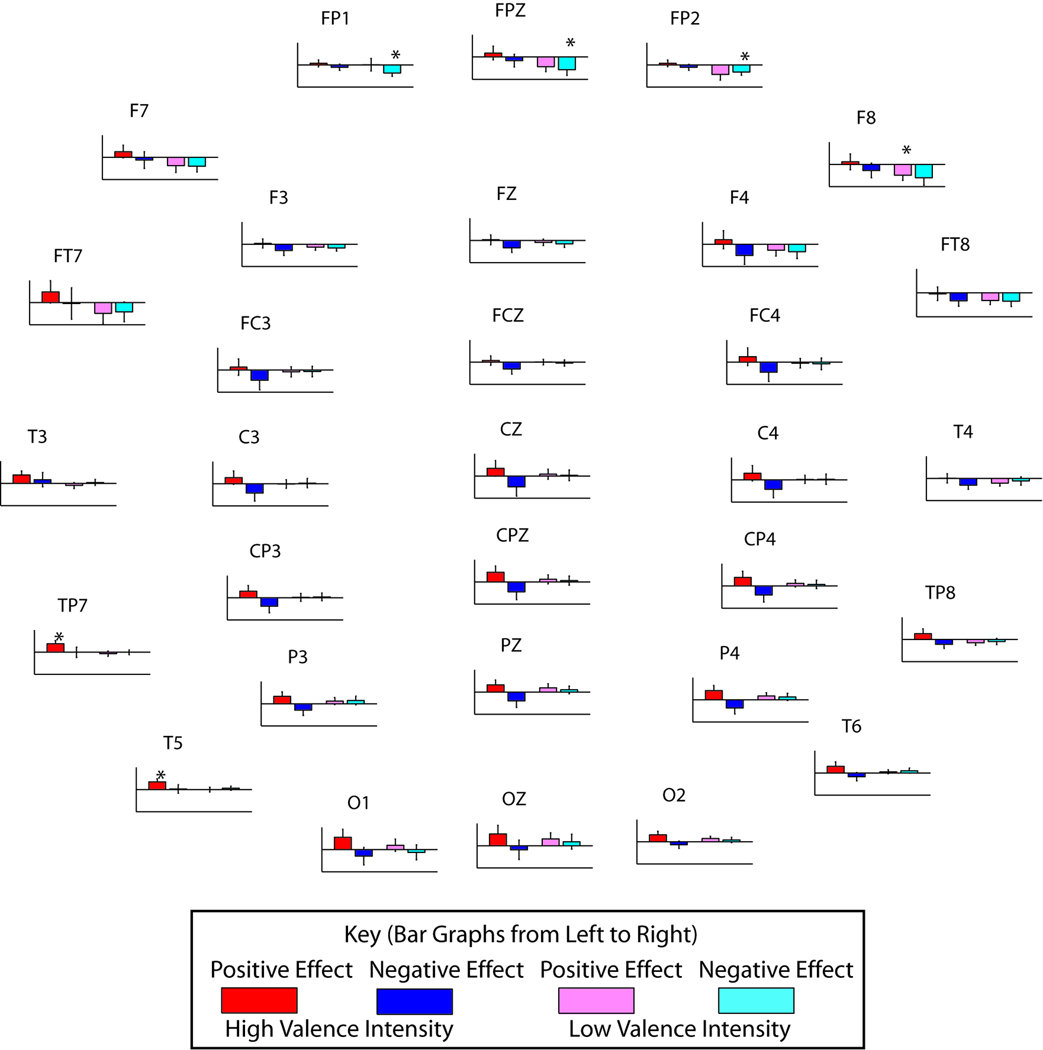

At the individual level, in the time range indicated by GFP, the high valence intensity (VI) group exhibited strong global effects of emotion for both valences, unlike the less affected subjects, who showed large frontal effects for negative emotion only. The global effects of emotion were calculated by subtracting the neutral response waveform from the responses in the positive and negative conditions for each subject for all channels. The resulting difference waveform, the effect of emotion, has the largest amplitude in the time range of interest identified by GFP. When subjects were divided by their emotion ratings into two groups: high and low VI, significant valence-dependent cortical effects in GFP emerged in the early portion of the response for both groups (ANOVA, low VI, p = .004, and high VI, p = .006). The integrated areas under the curve for positive-neutral and negative-neutral difference waves are shown in Figure 3, contrasting the two groups. High VI subjects exhibited a large shift due to emotion, whereas low VI subjects did not. Moreover, in the high VI group, bivalent divergent shifts are easily observable. Globally, positive valence shifted the response waveform in a positive direction, whereas negative valence shifted the response waveform in a negative direction, relative to neutral. Although strong global effects were seen in the high VI group, some regions were affected more strongly than others in these subjects. Whereas the overall strongest effects for the high VI group (red and blue) were generally left temporal in the positive condition (T3, TP7, and T5), the strongest effects for the low VI group (pink and cyan) were frontal for the negative condition (p < .05, post hoc t test). 
In addition to strong positive effects in left temporal electrodes, high VI subjects also exhibited divergent bivalent effects along the midline (ANOVA, p < .00001), which means the positive emotion and negative emotion diverge in their effect on the auditory response.
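The integrated area under a difference wave within the GFP-defined window could be computed as sketched below. Trapezoidal integration is an assumption (the integration method is not stated), and the flat difference waves are synthetic; they simply show that a positive shift gives a positive signed area and a negative shift a negative one.

```python
def signed_area(times_ms, diff_wave, window=(30.4, 129.0)):
    """Trapezoidal integral of a difference wave over the time window.

    The result has units of voltage x msec and keeps its sign, so
    shifts in opposite directions yield areas of opposite sign.
    """
    pts = [(t, v) for t, v in zip(times_ms, diff_wave)
           if window[0] <= t <= window[1]]
    return sum((t1 - t0) * (v0 + v1) / 2
               for (t0, v0), (t1, v1) in zip(pts, pts[1:]))


# Synthetic difference waves sampled every 0.2 msec (5000 Hz).
times = [i / 5 for i in range(700)]            # 0.0 to 139.8 msec
pos_minus_neutral = [1.0] * len(times)         # constant positive shift
neg_minus_neutral = [-1.0] * len(times)        # constant negative shift

area_pos = signed_area(times, pos_minus_neutral)
area_neg = signed_area(times, neg_minus_neutral)
print(round(area_pos, 1), round(area_neg, 1))
```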

Figure 3.

Behavior predicts valence-dependent cortical effects of emotion. Although both the high valence intensity subjects (n = 13, red and blue) and low valence intensity subjects (n = 20, pink and cyan), as categorized by questionnaire responses, showed a significant global effect of negative emotion (ANOVA, p = .004 and .006, low and high valence intensity, respectively), only the high valence intensity subjects exhibited a significant effect of positive emotion (ANOVA, p = .0002). Divergent bivalent effects (ANOVA, p < .00001) are strongest in high valence intensity subjects at the midline. Whereas the overall strongest effects for the high valence intensity group are generally left temporal in the positive condition (T3, TP7, and T5), the strongest effects for the low valence intensity group are frontal for the negative condition. (* indicates p < .05 for results of individual t tests.)

The behavioral responses of the subjects also varied significantly with the root mean square (RMS) of the difference wave, considered a measure of emotional effect size, along the midline (rΦ = .534, p < .01) (Table 1). With respect to divergent bivalent effects, a trend emerged among the subjects. Subjects with strong waveform shifts due to positive emotion also tended to show strong shifts due to negative emotion. The effect was such that it was possible to use the neurophysiological data, specifically the RMS of the effect-of-emotion difference waveform at Cz, to divide the subjects into high-shift (n = 17) and low-shift (n = 16) groups. The neurophysiological grouping (high and low shift) varied directly and significantly (rΦ = .534, p < .01) with the behavioral grouping described previously (high and low VI) (Table 1B). Because the divergent bivalent effects on the midline are particularly pronounced at the central electrode “Cz,” the effect of emotion waveform from “Cz” (i.e., the difference waveform) was used to determine the high-shift and low-shift groups. When the RMS of the difference waveform at “Cz” was used as the grouping criterion (minimum threshold for both valences), subjects in the high-shift group exhibited a significant divergent bivalent effect of emotion, as shown by a binomial test (p = .015), that is, the valence of emotion indicates the direction of shift (Table 1A). Specifically, only the high-shift group (i.e., strong effects of emotion) exhibited the effect of positive emotion increasing amplitude and negative emotion decreasing amplitude, the same divergent bivalent trend seen in Figure 3. Taken together, it is evident that the subjectively reported affective state has a direct bearing on the objective neurophysiological measures.

Table 1.

Neurophysiology Relates to Behavior

A) Neurophysiological Shift Direction

| Shift Group | Positive > Negative | Negative > Positive | Total |
|---|---|---|---|
| Low | 8 | 8 | 16 |
| High | 14 | 3 | 17 |

B) Neurophysiological Shift Amplitude

| Valence Intensity | Low | High | Total |
|---|---|---|---|
| Low | 14 | 6 | 20 |
| High | 2 | 11 | 13 |
| Total | 16 | 17 | 33 |

(A) Neurophysiological categorization (based on RMS of emotion difference waveform) divides the subjects into a high-shift (n = 17) and a low-shift (n = 16) group. The high-shift group demonstrates divergent bivalent effects (p = .015), as indicated by most of the subjects in that group (14 of 17). (B) Moreover, the behavioral grouping (high and low valence intensity) and neurophysiological division (high and low shift) of subjects associate significantly with a phi coefficient of 0.534 (p < .01).
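The phi coefficient in part B can be recomputed directly from the four cell counts of the table. The sketch below uses the standard 2 × 2 formula; the p value is obtained from the equivalent chi-square statistic with one degree of freedom, which is one common approach and is assumed here because the source does not state which test was used.

```python
import math


def phi_coefficient(a, b, c, d):
    """Phi for the 2x2 table [[a, b], [c, d]]."""
    return (a * d - b * c) / math.sqrt((a + b) * (c + d) * (a + c) * (b + d))


# Table 1B counts. Rows: low VI, high VI; columns: low shift, high shift.
a, b, c, d = 14, 6, 2, 11
n = a + b + c + d

phi = phi_coefficient(a, b, c, d)
print(round(phi, 3))  # 0.534

# Assumed test: chi-square with 1 degree of freedom, chi2 = n * phi**2.
# For 1 df the upper-tail probability is erfc(sqrt(chi2 / 2)).
chi2 = n * phi ** 2
p_value = math.erfc(math.sqrt(chi2 / 2))
print(p_value < 0.01)  # True
```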

DISCUSSION

Negative emotion generated a significant effect as early as 20 msec after the stimulus onset in all subjects, in a precortical time period (Figure 1). Although studies in rats have documented effects of emotion on auditory processing as early as 8 msec after the stimulus onset (Brandao et al., 2001), there have been no real attempts to measure the early effects of emotion in humans. This is the earliest known effect of emotion on human sensory processing; previously, emotional influence has been shown hundreds of milliseconds after stimulus onset (Müller et al., 2008). An early effect is interesting because it implies limbic modulation of the afferent processing stream through the fast pathway.

At the cortical level, the subjectively reported affective state has a direct bearing on the size of the divergent bivalent shift. The neurophysiological grouping (high and low shift) varies directly and significantly (rΦ = .534, p < .01) with the behavioral grouping (high and low VI). That the magnitudes of subjective affect and neurophysiological measures are thus related could be indicative of a causal relationship between the two with the observed effects being attributed to limbic projections to cortical regions. It is also possible that they are influenced by a common factor, which is to say that both neurophysiological measures and subjective affect are dependent on some aspect of limbic activity, or that to some extent, they may even be equivalent, such that the shift observed in emotional conditions literally is a measurement of subjective affect.

Despite the relationship between subjective affect and neurophysiological measures at the cortical level, however, the behavioral responses do not relate to neurophysiological responses during the precortical time period. It is possible that the subjectively reported affect is a high-level phenomenon, and the fast pathway functions independent of these higher-level processes. It implies that some level of emotional effects on auditory processing is preconscious, that an emotional state, once it is in existence, modulates the afferent auditory stream at structures below conscious awareness. These early effects do not depend on the subjective state reported by the subject. Naively, this may seem counterintuitive: Emotion affects the processing stream independent of whether the emotion is “felt”? In the visual domain, however, there is a phenomenon called blindsight, where blind spots result from lesions in the visual cortex. Although subjects do not report observing a stimulus in their blind spot, in a forced-choice paradigm, they are able to detect features presented in the blind spot above chance level. Similarly, subjects may experience an emotional state without being consciously aware of it. It is not impossible that such a mechanism is responsible for the effects of emotion during the preconscious processing connoted by the precortical time period.

The size of the divergent bivalent shift also depends on the amplitude of the response at particular locations on the scalp. Despite the lower GFP of both the positive and negative conditions, the shift of the actual waveforms was in different directions. The time region indicated by GFP (30.4–129.0 msec) includes major AEP peaks such as N1, typically observed at 100 msec after stimulus onset, which are not represented equally in all places on the scalp but exhibit the largest amplitude along the midline. The valence-independent decrease in GFP in that time range informs the nature of the dramatic bivalent divergent shift, that positive valence shifted the response waveform in a positive direction, whereas negative valence shifted the response waveform in a negative direction. This can be explained by the dependency of the size of the bivalent divergent shift on the amplitude of the waveform in that particular channel, that is, larger “response components” are shifted to a greater extent. Naturally, that reduces the variation in voltage across the scalp, thereby similarly reducing GFP for both affects, even though the directionality of the shift is different.

Also dependent on recording location are the relative shifts due to each emotional valence, and the laterality effects were found to be consistent with visual system results reported by Lee et al. (2004). Whereas the overall strongest effects for the high VI group were generally left temporal in the positive condition (T3, TP7, and T5), the strongest effects for the low VI group were frontal for the negative condition (p < .05, post hoc t test). The direction of the modulation, that is, positively for the positive condition and negatively for the negative condition, indicates the presence of more positive charges in closer proximity to the scalp in the positive condition and fewer in the negative condition, which can imply a plethora of possibilities. There may be a population code for emotional valence such that more or fewer neurons fire in a valence-dependent manner. There may, instead, be a place code for emotional valence such that positive valence is typically represented closer to the scalp than negative valence. However, the technical limitations of currently existing noninvasive methods usable on human subjects limit the ability to distinguish between such explanations. In future studies, it would also be interesting to determine whether these effects are purely due to emotion and are independent of the visual system. For instance, emotionally salient music selected by the subject can be used to elicit an emotional state in the subject (Blood & Zatorre, 2001). In this manner, it will be possible to show that the effects of an emotional state do not depend on the modality with which it is elicited.

REFERENCES

- Abrams DA, Nicol T, Zecker SG, Kraus N. Auditory brainstem timing predicts cerebral asymmetry for speech. Journal of Neuroscience. 2006;26:11131–11137. doi: 10.1523/JNEUROSCI.2744-06.2006.

- Alexandrov YI, Klucharev V, Sams M. Effect of emotional context in auditory-cortex processing. International Journal of Psychophysiology. 2007;65:261–271. doi: 10.1016/j.ijpsycho.2007.05.004.

- Armony JL, Dolan RJ. Modulation of auditory neural responses by a visual context in human fear conditioning. NeuroReport. 2001;12:3407–3411. doi: 10.1097/00001756-200110290-00051.

- Balconi M, Pozzoli U. Face-selective processing and the effect of pleasant and unpleasant emotional expressions on ERP correlates. International Journal of Psychophysiology. 2003;49:67–74. doi: 10.1016/s0167-8760(03)00081-3.

- Bao SW, Chang EF, Davis JD, Gobeske KT, Merzenich MM. Progressive degradation and subsequent refinement of acoustic representations in the adult auditory cortex. Journal of Neuroscience. 2003;23:10765–10775. doi: 10.1523/JNEUROSCI.23-34-10765.2003.

- Batty M, Taylor MJ. Early processing of the six basic facial emotional expressions. Cognitive Brain Research. 2003;17:613–620. doi: 10.1016/s0926-6410(03)00174-5.

- Blood AJ, Zatorre RJ. Intensely pleasurable responses to music correlate with activity in brain regions implicated in reward and emotion. Proceedings of the National Academy of Sciences, U.S.A. 2001;98:11818. doi: 10.1073/pnas.191355898.

- Brandao ML, Coimbra NC, Osaki MY. Changes in the auditory-evoked potentials induced by fear-evoking stimulations. Physiology & Behavior. 2001;72:365–372. doi: 10.1016/s0031-9384(00)00418-2.

- Brandao ML, Borelli KG, Nobre MJ, Santos JM, Albrechet-Souza L, Oliveira AR, et al. Gabaergic regulation of the neural organization of fear in the midbrain tectum. Neuroscience and Biobehavioral Reviews. 2005;29:1299–1311. doi: 10.1016/j.neubiorev.2005.04.013.

- Campanella S, Quinet P, Bruyer R, Crommelinck M, Guerit JM. Categorical perception of happiness and fear facial expressions: An ERP study. Journal of Cognitive Neuroscience. 2002;14:210–227. doi: 10.1162/089892902317236858.

- Canli T, Desmond JE, Zhao Z, Glover G, Gabrieli JD. Hemispheric asymmetry for emotional stimuli detected with fMRI. NeuroReport. 1998;9:3233–3239. doi: 10.1097/00001756-199810050-00019.

- Davidson RJ, Slagter HA. Probing emotion in the developing brain: Functional neuroimaging in the assessment of the neural substrates of emotion in normal and disordered children and adolescents. Mental Retardation and Developmental Disabilities Research Reviews. 2000;6:166–170. doi: 10.1002/1098-2779(2000)6:3<166::AID-MRDD3>3.0.CO;2-O.

- Davis WJ, Rahman MA, Smith LJ, Burns A, Senecal L, McArthur D, et al. Properties of human affect induced by static color slides (IAPS)—Dimensional, categorical and electromyographic analysis. Biological Psychology. 1995;41:229–253. doi: 10.1016/0301-0511(95)05141-4.

- Dolan RJ. Emotion, cognition, and behavior. Science. 2002;298:1191–1194. doi: 10.1126/science.1076358.

- Eastwood JD, Smilek D, Merikle PM. Differential attentional guidance by unattended faces expressing positive and negative emotion. Perception & Psychophysics. 2001;63:1004–1013. doi: 10.3758/bf03194519.

- Erhan H, Borod JC, Tenke CE, Bruder GE. Identification of emotion in a dichotic listening task: Event-related brain potential and behavioral findings. Brain and Cognition. 1998;37:286–307. doi: 10.1006/brcg.1998.0984.

- Fischer H, Wright CI, Whalen PJ, McInerney SC, Shin LM, Rauch SL. Brain habituation during repeated exposure to fearful and neutral faces: A functional MRI study. Brain Research Bulletin. 2003;59:387–392. doi: 10.1016/s0361-9230(02)00940-1.

- Fritz J, Shamma S, Elhilali M, Klein D. Rapid task-related plasticity of spectrotemporal receptive fields in primary auditory cortex. Nature Neuroscience. 2003;6:1216–1223. doi: 10.1038/nn1141.

- Holmes A, Vuilleumier P, Eimer M. The processing of emotional facial expression is gated by spatial attention: Evidence from event-related brain potentials. Cognitive Brain Research. 2003;16:174–184. doi: 10.1016/s0926-6410(02)00268-9. [DOI] [PubMed] [Google Scholar]

- Irvine DRF, Rajan R, Brown M. Injury- and use-related plasticity in adult auditory cortex. Audiology & Neuro-Otology. 2001;6:192–195. doi: 10.1159/000046831. [DOI] [PubMed] [Google Scholar]

- Ito T, Cacioppo JT, Lang PJ. Eliciting affect using the international affective picture system: Trajectories through evaluative space. Personality and Social Psychology Bulletin. 1998;24:855–879. [Google Scholar]

- Johnson KL, Nicol TG, Kraus N. Brain stem response to speech: A biological marker of auditory processing. Ear and Hearing. 2005;26:424–434. doi: 10.1097/01.aud.0000179687.71662.6e. [DOI] [PubMed] [Google Scholar]

- Johnson MH. Subcortical face processing. Nature Reviews Neuroscience. 2005;6:766–774. doi: 10.1038/nrn1766. [DOI] [PubMed] [Google Scholar]

- Jones NA, Fox NA. Electroencephalogram asymmetry during emotionally evocative films and its relation to positive and negative affectivity. Brain and Cognition. 1992;20:280–299. doi: 10.1016/0278-2626(92)90021-d. [DOI] [PubMed] [Google Scholar]

- Joost W, Bach M, Schulte-Monting J. Influence of mood on visually evoked potentials: A prospective longitudinal study. International Journal of Psychophysiology. 1992;12:147–153. doi: 10.1016/0167-8760(92)90005-v. [DOI] [PubMed] [Google Scholar]

- Kaas JH, Hackett TA, Tramo MJ. Auditory processing in primate cerebral cortex. Current Opinion in Neurobiology. 1999;9:164–170. doi: 10.1016/s0959-4388(99)80022-1. [DOI] [PubMed] [Google Scholar]

- Kline JP, Schwartz GE, Fitzpatrick DF, Hendricks SE. Defensiveness, anxiety and the amplitude/intensity function of auditory-evoked potentials. International Journal of Psychophysiology. 1993;15:7–14. doi: 10.1016/0167-8760(93)90090-c. [DOI] [PubMed] [Google Scholar]

- Kraus N, Nicol T. Brainstem origins for cortical “what” and “where” pathways in the auditory system. Trends in Neurosciences. 2005;4:5. doi: 10.1016/j.tins.2005.02.003. [DOI] [PubMed] [Google Scholar]

- Lancker D, Sidtis J. The identification of affective-prosodic stimuli by left-and right-hemisphere-damaged subjects all errors are not created equal. Journal of Speech, Language and Hearing Research. 1992;35:963–970. doi: 10.1044/jshr.3505.963. [DOI] [PubMed] [Google Scholar]

- Lang P, Öhman DV. The International Affective Picture System. 1988 [photographic slides] [Google Scholar]

- Lang PJ, Bradley MM, Cuthbert BN. Emotion, attention, and the startle reflex. Psychological Review. 1990;97:377–395. [PubMed] [Google Scholar]

- Lang PJ, Greenwald MK, Bradley MM, Hamm AO. Looking at pictures—Affective, facial, visceral, and behavioral reactions. Psychophysiology. 1993;30:261–273. doi: 10.1111/j.1469-8986.1993.tb03352.x. [DOI] [PubMed] [Google Scholar]

- Ledoux JE, Sakaguchi A, Reis DJ. Subcortical efferent projections of the medial geniculate-nucleus mediate emotional responses conditioned to acoustic stimuli. Journal of Neuroscience. 1984;4:683–698. doi: 10.1523/JNEUROSCI.04-03-00683.1984. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lee GP, Meador KJ, Loring DW, Allison JD, Brown WS, Paul LK, et al. Neural substrates of emotion as revealed by functional magnetic resonance imaging. Cognitive and Behavioral Neurology. 2004;17:9–17. doi: 10.1097/00146965-200403000-00002. [DOI] [PubMed] [Google Scholar]

- Macedo CE, Cuadra G, Molina V, Brandao M. Aversive stimulation of the inferior colliculus changes dopamine and serotonin extracellular levels in the frontal cortex: Modulation by the basolateral nucleus of amygdala. Synapse. 2005;55:58–66. doi: 10.1002/syn.20094. [DOI] [PubMed] [Google Scholar]

- Marsh RA, Fuzessery ZM, Grose CD, Wenstrup JJ. Projection to the inferior colliculus from the basal nucleus of the amygdala. Journal of Neuroscience. 2002;22:10449–10460. doi: 10.1523/JNEUROSCI.22-23-10449.2002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mitchell RL, Elliot R, Barry M, Cruttenden A, Woodruff PW. The neural response to emotional prosody, as revealed by functional magnetic resonance imaging. Neuropsychologia. 2003;41:1410–1421. doi: 10.1016/s0028-3932(03)00017-4. [DOI] [PubMed] [Google Scholar]

- Müller MM, Andersen SK, Keil A. Time course of competition for visual processing resources between emotional pictures and foreground task. Cerebral Cortex. 2008:1892–1899. doi: 10.1093/cercor/bhm215. [DOI] [PubMed] [Google Scholar]

- Nobre MJ, Sandner G, Brandao ML. Enhancement of acoustic evoked potentials and impairment of startle reflex induced by reduction of GABAergic control of the neural substrates of aversion in the inferior colliculus. Hearing Research. 2003;184:82–90. doi: 10.1016/s0378-5955(03)00231-4. [DOI] [PubMed] [Google Scholar]

- Pell MD. Fundamental frequency encoding of linguistic and emotional prosody by right hemisphere-damaged speakers. Brain and Language. 1999;69:161–192. doi: 10.1006/brln.1999.2065. [DOI] [PubMed] [Google Scholar]

- Phelps EA, Ling S, Carrasco M. Research article emotion facilitates perception and potentiates the perceptual benefits of attention. Psychological Science. 2006;17:292. doi: 10.1111/j.1467-9280.2006.01701.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pizzagalli D, Koenig T, Regard M, Lehmann D. Faces and emotions: Brain electric field sources during covert emotional processing. Neuropsychologia. 1998;36:323–332. doi: 10.1016/s0028-3932(97)00117-6. [DOI] [PubMed] [Google Scholar]

- Poremba A, Saunders RC, Crane AM, Cook M, Sokoloff L, Mishkin M. Functional mapping of the primate auditory system. Science. 2003;299:568–571. doi: 10.1126/science.1078900. [DOI] [PubMed] [Google Scholar]

- Russo NM, Nicol TG, Zecker SG, Hayes EA, Kraus N. Auditory training improves neural timing in the human brainstem. Behavioural Brain Research. 2005;156:95–103. doi: 10.1016/j.bbr.2004.05.012. [DOI] [PubMed] [Google Scholar]

- Sato W, Kochiyama T, Yoshikawa S, Matsumura M. Emotional expression boosts early visual processing of the face: ERP recording and its decomposition by independent component analysis. NeuroReport. 2001;12:709–714. doi: 10.1097/00001756-200103260-00019. [DOI] [PubMed] [Google Scholar]

- Scherer KR. Vocal affect expression: A review and a model for future research. Psychological Bulletin. 1986;99:143–165. [PubMed] [Google Scholar]

- Schupp HT, Junghöfer M, Weike AI, Hamm AO. Attention and emotion: An ERP analysis of facilitated emotional stimulus processing. NeuroReport. 2003a;14:1107–1110. doi: 10.1097/00001756-200306110-00002. [DOI] [PubMed] [Google Scholar]

- Schupp HT, Junghöfer M, Weike AI, Hamm AO. Emotional facilitation of sensory processing in the visual cortex. Psychological Science. 2003b;14:7–13. doi: 10.1111/1467-9280.01411. [DOI] [PubMed] [Google Scholar]

- Starkstein SE. Neuropsychological and neuroradiologic correlates of emotional prosody comprehension. Neurology. 1994;44:515–522. doi: 10.1212/wnl.44.3_part_1.515. [DOI] [PubMed] [Google Scholar]

- Vuilleumier P. How brains beware: Neural mechanisms of emotional attention. Trends in Cognitive Sciences. 2005;9:585–594. doi: 10.1016/j.tics.2005.10.011. [DOI] [PubMed] [Google Scholar]

- Weinberger NM, Hopkins W, Diamond DM. Physiological plasticity of single neurons in auditory cortex of the cat during acquisition of the pupillary conditioned response: I. Primary field. Behavioral Neuroscience. 1984;98:171–188. doi: 10.1037//0735-7044.98.2.171. [DOI] [PubMed] [Google Scholar]

- Wible B, Nicol T, Kraus N. Correlation between brainstem and cortical auditory processes in normal and language-impaired children. Brain. 2005;128:417–423. doi: 10.1093/brain/awh367. [DOI] [PubMed] [Google Scholar]

- Williams LM, Liddell BJ, Rathjen J, Brown KJ, Gray J, Phillips M, et al. Mapping the time course of nonconscious and conscious perception of fear: An integration of central and peripheral measures. Human Brain Mapping. 2004;21:64–74. doi: 10.1002/hbm.10154. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Young LL, Jr, Horner JS. A comparison of averaged evoked response amplitudes using nonaffective and affective verbal stimuli. Journal of Speech and Hearing Research. 1971;14:295–300. doi: 10.1044/jshr.1402.295. [DOI] [PubMed] [Google Scholar]