Abstract

In recent decades, researchers have proposed a large number of theoretical models of timing. These models make different assumptions concerning how animals learn to time events and how such learning is represented in memory. However, few studies have examined these different assumptions either empirically or conceptually. For knowledge to accumulate, variation in theoretical models must be accompanied by selection of models and model ideas. To that end, we review two timing models, Scalar Expectancy Theory (SET), the dominant model in the field, and the Learning-to-Time (LeT) model, one of the few models dealing explicitly with learning. In the first part of this article, we describe how each model works in prototypical concurrent and retrospective timing tasks, identify their structural similarities, and classify their differences concerning temporal learning and memory. In the second part, we review a series of studies that examined these differences and conclude that both the memory structure postulated by SET and the state dynamics postulated by LeT are probably incorrect. In the third part, we propose a hybrid model that may improve on its parents. The hybrid model accounts for the typical findings in fixed-interval schedules, the peak procedure, mixed fixed-interval schedules, simple and double temporal bisection, and temporal generalization tasks. In the fourth and last part, we identify seven challenges that any timing model must meet.

Keywords: Learning-to-Time (LeT) model, Scalar Expectancy Theory (SET), mathematical models, temporal discrimination, timing

“Stated more generally the problem is how time as a dimension of nature enters into discriminative behavior and hence into human knowledge.”

(B. F. Skinner, 1938, p. 263)

The capacity to adjust behavior to temporal regularities in the environment in the range of seconds to minutes is called interval timing, or timing for short. This capacity is expressed in a variety of ways such as in anticipating an important event once a specific interval of time has elapsed, judging which of two events lasted longer, performing an action for a given duration, or choosing which of two cues signals a shorter delay to a reward. In each case, timing is said to take place because behavior is a function of one or more arbitrary intervals between events or durations of events. To say that an animal or person is timing is not to say simply that its behavior occurs in time, but that the best predictor of its behavior is an interval of time.

After several decades of research, scientists still debate the properties that characterize timing (e.g., Lejeune & Wearden, 2006; Staddon & Cerutti, 2003; Zeiler, 1998; Zeiler & Powell, 1994), the processes and neural mechanisms that underlie it (Buhusi & Meck, 2005; Ivry & Spencer, 2004; Matell & Meck, 2000; Meck, 1996), how the capacity is disrupted by pharmacological agents and disease (e.g., Cevik, 2003; Meck, 1983; McClure, Saulsgiver, & Wynne, 2005), and which quantitative models and theories best describe it (e.g., Lejeune, Richelle, & Wearden, 2006; Staddon & Higa, 1999, 2006). Although much remains to be discovered, psychologists have also made substantial progress in the study of timing during recent decades. First, they have developed a rich set of procedures to study the different expressions of the timing capacity (e.g., Church, 1984, 2004; Gallistel, 1990; Richelle & Lejeune, 1980; Roberts, 1998). Some of these procedures, described in greater detail below, include the fixed-interval schedule and the peak procedure to study concurrent timing (i.e., the timing of ongoing events), and the temporal generalization and temporal bisection procedures to study retrospective timing (i.e., the timing of elapsed events). Second, they have collected a large amount of orderly data on timing and from them derived a few empirical generalizations. One of them, perhaps the most important, is the scalar property: timing is relative to the standard being timed (Church, 2003; Gibbon, 1977, 1991; Lejeune & Wearden, 2006). To illustrate, timing performances R1(t) and R2(t) on intervals 30 s and 90 s long, respectively, are scale transforms of each other: R2(t) is proportional to R1(t/3). Third, they have proposed a significant number of models and theories of timing.
These models come from different theoretical perspectives (behavioral, cognitive, computational, and neurobiological), propose different processes and mechanisms, stress different subsets of research findings, and have different depths of analysis. A nonexhaustive list includes the Scalar Expectancy Theory (Gibbon, 1991; Gibbon, Church, & Meck, 1984), the Behavioral Theory of Timing (Killeen & Fetterman, 1988), the Spectral Model (Grossberg & Schmajuk, 1989), the Diffusion model (Staddon & Higa, 1991), the Multiple Oscillator model (Church & Broadbent, 1990), the Learning-to-Time model (Machado, 1997), the Multiple Time Scales model (Staddon & Higa, 1999), the Packet Theory (Kirkpatrick, 2002) and its descendant, the Modular Theory of Learning (Guilhardi, Yi, & Church, 2007), the Active Time Model (Dragoi, Staddon, Palmer, & Buhusi, 2003), and the list could continue with the neurobiological models. And fourth, they have started the thorny process of comparing and contrasting these models with each other and with data (e.g., Bizo, Chu, Sanabria, & Killeen, 2006; Fetterman & Killeen, 1995; Leak & Gibbon, 1995; Lejeune et al., 2006; Staddon & Higa, 1999; Yi, 2007). If doing experiments (point 2 above) explores the empirical space of timing, and proposing models (point 3 above) explores the theoretical space of timing, then comparing and contrasting models with data coordinates the two spaces in an attempt to design more informative experiments and build more powerful theories.
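The scale-transform statement about R1(t) and R2(t) can be checked numerically. The sketch below is illustrative only: it assumes a hypothetical logistic rate curve for the 30-s interval (midpoint at 0.7 of the interval, arbitrary slope), defines the 90-s curve as R2(t) = R1(t/3) with the proportionality constant set to 1, and evaluates both at the same relative times t/T.

```python
import math


def r1(t):
    """Hypothetical response-rate curve for a 30-s interval (logistic in t/30)."""
    return 1.0 / (1.0 + math.exp(-(t / 30.0 - 0.7) / 0.1))


def r2(t):
    """Scale transform of r1 for a 90-s interval: R2(t) = R1(t/3)."""
    return r1(t / 3.0)


# Sample both curves at the same relative times t/T = 0.0, 0.1, ..., 1.0.
relative_times = [i / 10 for i in range(11)]
curve_30 = [r1(p * 30.0) for p in relative_times]
curve_90 = [r2(p * 90.0) for p in relative_times]

for p, a, b in zip(relative_times, curve_30, curve_90):
    print(f"t/T = {p:.1f}   R1 = {a:.3f}   R2 = {b:.3f}")
```

Any R1 would do; the superimposition in relative time follows from the definition of a scale transform, not from the logistic shape chosen here.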

The present article fits in this last category, for it reviews a series of studies that compared and contrasted the Scalar Expectancy Theory (SET), the leading model in the field of animal and human timing, with the Learning-to-Time (LeT) model, a derivative of Killeen and Fetterman's (1988) behavioral theory of timing. As we shall see, these two models make different assumptions about the processes underlying timing in general and what animals learn in timing tasks in particular. For this reason, examining the two models jointly has proved to be a fruitful exercise: it has led us to identify not only serious problems with each model but also important, previously unknown properties of timing and temporal memory. It has also helped to clarify problems that future research should solve.

Other studies have designed experiments specifically to contrast timing models, but most of them have not addressed issues of learning or explored the models' distinct conceptions of learning. For example, one set of these studies contrasted SET and the behavioral theory of timing on whether the rate of a hypothetical internal clock is influenced by global and local reinforcement rates, and on how that influence might account for certain aspects of timing performance related to the scalar property (Fetterman & Killeen, 1991, 1995; Leak & Gibbon, 1995; Morgan, Killeen, & Fetterman, 1993; see also Bizo & White, 1994a, 1994b, 1995a, 1995b, 1997). By comparison, the issues addressed in the present article have received less attention. They are how animals learn to time, how this learning affects their temporal memories, how temporal memories are accessed and their contents retrieved, and which experimental findings may help researchers choose among distinct conceptualizations of learning to time. But even the domain of learning to time is too broad to be covered in a single article. We further narrow our focus to issues related to memory, the precipitate of learning. We pay special attention to the contents of memory and to the (often tacit) rules by which new memories are formed and accessed and their contents retrieved. We will not discuss other important matters such as the nature of time markers (e.g., Staddon & Higa, 1999) or the timing of multiple signals (Meck & Church, 1984). And, for the most part, we restrict our remarks to timing in animals.

The article is divided into four parts. In the first part, we describe the structure of each model and how they work in two prototypical tasks, one of concurrent timing, the fixed-interval schedule, and the other of retrospective timing, the temporal bisection task¹. Describing how the models work in these simple tasks will reveal their similarities and differences. In the second part, we summarize experiments that exploited some of the differences between the learning assumptions of the two models, and from their results we draw some implications for our understanding of timing. In the third part, we propose a new model of timing that integrates features of SET and LeT and show how the hybrid model overcomes some of the shortcomings of its two parents. In the fourth and final part, we identify some of the challenges that any model of timing must meet and thereby hope to pave the way for a better account of timing.

I. TWO MODELS OF TIMING, SET AND LET

To introduce the two models, we consider the simplest time-based task, the fixed-interval (FI) reinforcement schedule. In an FI T-s schedule, a reinforcer becomes available T s after trial onset. Responses emitted at times t ≤ T are recorded but not reinforced, whereas the first response emitted at t > T earns the reinforcer and, usually, starts a new trial. Of interest is how the animal distributes its responses during the trial. Typically, at the steady state, the animal pauses or responds at a low rate during the first half to two thirds of the trial and then either responds at a constant but significantly higher rate until the end of the trial, yielding the break-and-run pattern (Schneider, 1969), or accelerates until the end of the trial, yielding the FI scallop (Dews, 1978). Averaged across trials, response rate follows a smooth, monotonically increasing, sigmoid curve. Moreover, when the same animal is exposed to different FI schedules, the average rate curves superimpose when plotted with normalized axes (Dews, 1970). Superimposition means that relative response rate at time t into the trial is a function of the ratio t/T. How do SET and LeT explain this performance?
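The FI contingency described above reduces to a simple rule applied to the response times within a trial. A minimal sketch; the function names and the response times are hypothetical:

```python
def fi_reinforced_response(response_times, T):
    """Return the time of the reinforced response in one FI T-s trial.

    Responses at t <= T are recorded but not reinforced; the first response
    emitted at t > T earns the reinforcer. Returns None if no response
    occurred after T.
    """
    for t in sorted(response_times):
        if t > T:
            return t
    return None


def unreinforced_responses(response_times, T):
    """Responses emitted at t <= T: recorded, never reinforced."""
    return [t for t in sorted(response_times) if t <= T]


# Hypothetical response times (s) in one FI 30-s trial.
trial = [3.0, 18.5, 29.9, 30.0, 31.2, 33.0]
print(fi_reinforced_response(trial, 30))   # 31.2
print(unreinforced_responses(trial, 30))   # [3.0, 18.5, 29.9, 30.0]
```

Note that a response at exactly t = T is not reinforced, matching the strict inequality t > T in the schedule's definition.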

SET in FI Schedules

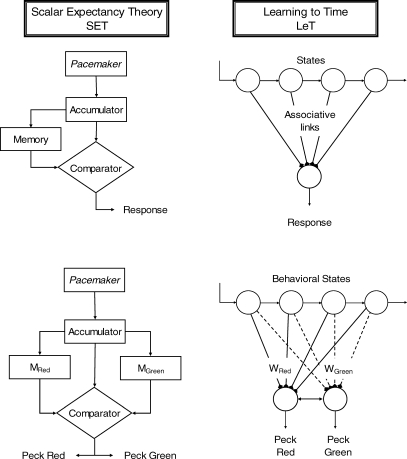

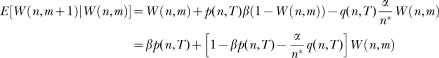

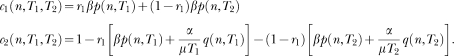

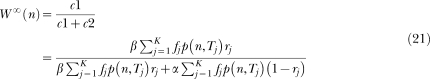

SET is an elegant information-processing model developed by John Gibbon, Russell Church, and their collaborators (for summaries, see Church, 2003; Gallistel, 1990; Gibbon, 1991). In its most basic form, the model postulates an internal clock composed of the three devices displayed in the top left panel of Figure 1: a pacemaker–accumulator unit, a memory, and a comparator. The pacemaker generates pulses at a high and variable rate (λ). The accumulator, which is reset to 0 at the beginning of each trial, adds the pacemaker pulses throughout the trial. When the reinforcer is delivered, the value in the accumulator is multiplied by a random factor (k*) and saved in a long-term memory store. Because the pacemaker rate λ and the memory factor k* are random variables (typically Gaussian), the value in the accumulator at the end of an interval and the value stored in memory also will be variable, even when the timed interval has constant duration. More important, both random variables induce scalar variability in the subject's representation of time, because each acts multiplicatively on the physical duration of the interval².

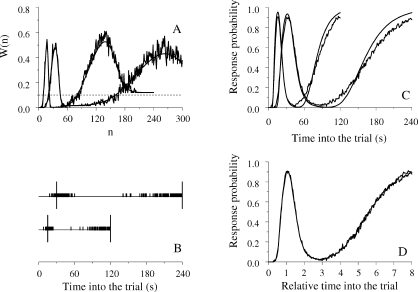

Fig 1.

The left panels show the structure of SET. A pacemaker generates pulses which are added in an accumulator and stored at the end of the to-be-timed interval in one or more long-term memories. To decide when to respond, the animal compares the number currently in the accumulator with samples extracted from the memories. In FI schedules (top left) only one memory is formed; in the bisection procedure (bottom left) two memories are formed. The right panels show the structure of LeT. After a time marker, a set of states (top circles) is activated in series. The states may be coupled to various degrees (associative links) with one or more operant responses (bottom circles). The strength of each response is determined by the dot product between the vectors of state activation and coupling. In FI schedules (top right) only one vector of couplings is formed; in the bisection procedure (bottom right) two vectors are formed.

Because each trial adds one value to the memory store, after a few trials the memory will contain a distribution of values representing the reinforcement times. According to SET, to decide whether or not to respond, the animal extracts a sample from its memory at trial onset and then compares the sample with the current value in the accumulator. The memory value, M, represents the reinforcement time; the accumulator value, xt, represents elapsed time during the trial. When the ratio between the accumulator value and the memory value crosses a threshold, Θ, responding changes from a low (or possibly zero) rate to a high rate. The threshold parameter Θ also is a random variable.

At the steady state, SET predicts on each trial a break-and-run response pattern, represented graphically by a step function. The moment of the break (graphically, the time when the step occurs) is a random variable with mean equal to a constant proportion of the FI and standard deviation proportional to the mean. The latter statement expresses Weber's law in the time domain. Averaging the individual trial step functions yields the session response rate curve with its typical sigmoid shape. SET also predicts that the average rate curves produced by the same animal on different FI schedules superimpose when plotted in relative time.
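The SET account of FI performance can be sketched as a small simulation. The sketch assumes Gaussian pacemaker rate, memory factor k*, and threshold Θ with illustrative means and coefficients of variation (not fitted values), and it reduces each trial to its break point: because the accumulator holds x_t = λt, the ratio x_t/M first exceeds Θ at t = ΘM/λ.

```python
import random
import statistics


def set_fi_break_times(T, n_trials=5000, lam_mean=5.0, lam_cv=0.15,
                       k_cv=0.1, theta_mean=0.7, theta_cv=0.15, seed=1):
    """Simulate the break point of the break-and-run pattern under SET in FI T s.

    All parameter values are illustrative assumptions, not fitted estimates.
    """
    rng = random.Random(seed)

    def pacemaker_rate():
        return rng.gauss(lam_mean, lam_mean * lam_cv)

    # Long-term memory: accumulator count at reinforcement (rate * T),
    # multiplied by the memory factor k*.
    memory = [rng.gauss(1.0, k_cv) * pacemaker_rate() * T
              for _ in range(n_trials)]

    breaks = []
    for _ in range(n_trials):
        m = rng.choice(memory)                 # sample drawn at trial onset
        lam = pacemaker_rate()                 # pacemaker rate on this trial
        theta = rng.gauss(theta_mean, theta_mean * theta_cv)
        # The accumulator holds x_t = lam * t, so x_t / m crosses theta at:
        breaks.append(theta * m / lam)
    return breaks


for T in (30, 90):
    b = set_fi_break_times(T)
    mean, sd = statistics.mean(b), statistics.stdev(b)
    print(f"FI {T} s: mean break / T = {mean / T:.3f}, SD / mean = {sd / mean:.3f}")
```

With the same random seed, every simulated break time for FI 90 s is three times the corresponding one for FI 30 s, because T enters only as a multiplier of the memory contents. The mean break is therefore a constant proportion of T and the SD/mean ratio is unchanged, the Weber-law pattern described above.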

LeT in FI Schedules

Like its ancestor, the behavioral theory of timing (Killeen, 1991; Killeen & Fetterman, 1988), LeT postulates three elements: a series of states, a vector of associative links connecting the states to the operant response, and the operant response itself (see the top right panel of Figure 1; Machado, 1997). The states embody the concepts of elicited, induced, adjunctive, interim, and terminal classes of behavior (Falk, 1977; Staddon, 1977; Staddon & Simmelhag, 1971; Timberlake & Lucas, 1985), and according to LeT they underlie the temporal organization of behavior. At present, we do not know precisely how the states relate to measurable behavior or what their neural basis is; they remain intervening variables (for further discussion of the role of the states in timing and their connection with mediating behaviors, see Fetterman, Killeen, & Hall, 1998; Killeen & Fetterman, 1988; Matthews & Lerer, 1987; Richelle & Lejeune, 1980).

The states are aroused or activated serially. Thus, when the trial begins only the first state is active, but, as time elapses, the activation of each state spreads with rate λ to the next state. Each state (n = 1, 2,…) is coupled with the operant response, and the degree of the coupling, represented by variable W(n), changes in real time, decreasing toward 0 at rate α during extinction and increasing toward 1 at rate β during reinforcement. Thus states that are strongly active when food is unavailable lose their coupling to, and eventually may not support, the operant response, whereas states strongly active when food is available increase their coupling and may therefore sustain the response. Finally, the strength of the operant response is obtained by adding the cueing or discriminative function of all states, that is, their associative links, each multiplied by the degree of activation of the corresponding state. States that are both strongly active and strongly associated with the operant response exert more control over that response than less active or less conditioned states³.
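The response-strength computation described above is a dot product of two vectors, state activations and couplings. To make it concrete we assume the simplest serial cascade consistent with the description: all activation starts in the first state and flows from each state to the next at rate λ, so that the activation of state n (counted from 0 in the code) at time t is e^(−λt)(λt)^n/n!. The coupling values are hypothetical, chosen only to increase with n as they would after FI training.

```python
import math


def state_activation(t, lam, n_states):
    """Activation of states n = 0, 1, ... at time t after the time marker.

    Assumes the serial cascade in which activation flows from each state to
    the next at rate lam; its solution is X(n, t) = exp(-lam*t) (lam*t)**n / n!.
    """
    x = lam * t
    return [math.exp(-x) * x**n / math.factorial(n) for n in range(n_states)]


def response_strength(t, lam, couplings):
    """Strength of the operant response: sum over states of X(n, t) * W(n)."""
    activation = state_activation(t, lam, len(couplings))
    return sum(x * w for x, w in zip(activation, couplings))


# Hypothetical couplings that grow with state number, as after FI training.
W = [n / (n + 5) for n in range(60)]
for t in (1, 5, 10, 20):
    print(t, round(response_strength(t, lam=1.0, couplings=W), 3))
```

At t = 0 all activation sits in the first state; as t grows, activation moves to later, more strongly coupled states, so response strength rises through the trial.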

According to LeT, in the FI schedule the couplings between the early states and the operant response decrease because food is not available when these states are maximally active, but the couplings between the later states and the operant response increase because later states are the most active when food occurs. At the steady state, as successive states become active during the trial, their stronger couplings sustain increasing response rates. To predict superimposition of the rate curves for different FI schedules, LeT further assumes that parameter λ (i.e., how fast the activation spreads across states) and the ratio of the learning parameters, α/β, are both inversely proportional to T. This assumption means that as the FI increases (and overall reinforcement rate decreases), the activation spreads more slowly across the states and extinction becomes relatively less effective than reinforcement, a sort of partial reinforcement extinction effect.
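The scaling assumptions can be checked with a rough simulation of LeT in FI T s. Beyond the Poisson-shaped activation assumed for the cascade of states, this sketch assumes that extinction acts continuously during the trial (dW(n)/dt = −αX(n, t)W(n)), that reinforcement at t = T adds βX(n, T)(1 − W(n)), and that the short gap between T and the reinforced response can be ignored. Setting λ = c/T and α = (αT)/T with β fixed implements the two inverse-proportionality assumptions; c, β, αT, the number of states, and the number of trials are illustrative values.

```python
import math


def activation(x, n_states):
    """Poisson-shaped state activations for x = lam * t (assumed cascade)."""
    return [math.exp(-x) * x**n / math.factorial(n) for n in range(n_states)]


def integrated_activation(x_end, lam, n_states):
    """Integral of X(n, t) over the trial, from t = 0 until lam * t = x_end.

    For the Poisson form this equals P(N >= n + 1) / lam, N ~ Poisson(x_end).
    """
    out, cdf = [], 0.0
    for n in range(n_states):
        cdf += math.exp(-x_end) * x_end**n / math.factorial(n)
        out.append(max(0.0, 1.0 - cdf) / lam)
    return out


def let_fi_couplings(T, c=8.0, beta=0.5, alpha_times_T=2.0,
                     n_states=40, n_trials=500):
    """Couplings W(n) after repeated FI T-s trials (illustrative parameters)."""
    lam = c / T                 # assumption: lam inversely proportional to T
    alpha = alpha_times_T / T   # assumption: alpha/beta inversely proportional to T
    exposure = integrated_activation(lam * T, lam, n_states)
    x_at_food = activation(lam * T, n_states)
    W = [0.5] * n_states
    for _ in range(n_trials):
        # Extinction during the trial: dW(n)/dt = -alpha * X(n, t) * W(n).
        W = [w * math.exp(-alpha * e) for w, e in zip(W, exposure)]
        # Reinforcement at t = T: W(n) += beta * X(n, T) * (1 - W(n)).
        W = [w + beta * x * (1.0 - w) for w, x in zip(W, x_at_food)]
    return lam, W


def relative_rate_curve(T, rel_times):
    """Response strength at relative times t/T after training on FI T s."""
    lam, W = let_fi_couplings(T)
    return [sum(x * w for x, w in zip(activation(lam * p * T, len(W)), W))
            for p in rel_times]


rel = [0.1, 0.25, 0.5, 0.75, 1.0]
print([round(r, 3) for r in relative_rate_curve(30, rel)])
print([round(r, 3) for r in relative_rate_curve(90, rel)])
```

The two printed curves coincide at every relative time, and the rate grows from early to late in the interval. If λ or α were instead held fixed while T changed, the curves would generally no longer superimpose.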

We have described how SET and LeT handle the FI schedule, the prototypical concurrent timing task. Next, we describe how they handle temporal bisection, the prototypical retrospective timing task. Then we will have enough information about each model to identify their similarities and differences.

A temporal bisection task is a conditional discrimination task in which two sample stimuli differing only in duration are mapped to two comparison stimuli. For example, a pigeon sees a center key lit for either 1 s or 4 s and then chooses between two side keys, one red and the other green. Choices of Red are rewarded after 1-s samples and choices of Green are rewarded after 4-s samples. After the pigeon has learned to discriminate the two samples, stimulus generalization is examined by introducing samples with intermediate durations and measuring the subject's preference for one of the keys, say, Red. The psychometric function relating the proportion of Red choices to sample duration t, P(Red|t), has three features (Catania, 1970; Church & Deluty, 1977; Fetterman & Killeen, 1991; Killeen & Fetterman, 1988; Machado, 1997; Morgan, Killeen, & Fetterman, 1993; Platt & Davis, 1983; Stubbs, 1968). First, as t increases, P(Red|t) decreases monotonically and in a sigmoid way from about 1 to about 0. Second, the point of subjective equality, or PSE, is close to the geometric mean of the two training stimuli (i.e., the square root of their product). In the example at hand, P(Red|t) = 0.5 when t = 2 s. Third, individual-subject psychometric functions obtained with samples holding the same ratio, for example, “1 vs. 4” and “4 vs. 16”, generally are scale transforms. This means that if the test durations from the “4 vs. 16” discrimination are divided by 4, bringing them into the same range as the “1 vs. 4” test durations, then the two psychometric functions will superimpose. Superimposition reveals Weber's law for timing in the sense that equal ratios yield equal discriminabilities. How do SET and LeT explain this performance?
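For the example durations, the second and third features reduce to simple arithmetic (a worked illustration, with P denoting the proportion of Red, or "short", choices):

```latex
\begin{aligned}
\mathrm{PSE}_{1\text{ vs. }4}  &= \sqrt{1 \times 4} = 2\ \text{s}
  \qquad (\text{arithmetic mean: } (1+4)/2 = 2.5\ \text{s})\\
\mathrm{PSE}_{4\text{ vs. }16} &= \sqrt{4 \times 16} = 8\ \text{s}
  = 4 \times \mathrm{PSE}_{1\text{ vs. }4}\\
P_{4\text{ vs. }16}(t)         &= P_{1\text{ vs. }4}(t/4)
  \qquad \text{(superimposition after dividing test durations by 4)}
\end{aligned}
```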

SET in the Temporal Bisection Task

The extension of SET to the bisection task requires one additional memory store and a more complex decision rule (see the bottom left panel of Figure 1; Gibbon, 1981, 1991; also Church, 2003; Gallistel, 1990). Specifically, in the “1 vs. 4” bisection task, there will be two memories, one containing the numbers that are in the accumulator when a choice of Red is rewarded and another containing the numbers that are in the accumulator when a choice of Green is rewarded. We identify the two memory stores by MRed and MGreen to stress the fact that they are indexed by the choice alternatives. Because the pacemaker speed λ and the memory factor k* are random variables, the values stored in each memory will vary across trials. At the steady state, each memory will contain a distribution of values whose mean represents the corresponding sample duration, and whose standard deviation represents the uncertainty associated with the sample duration due to the noise inherent in the timing process.

According to SET, after a sample with duration t the pigeon's choice will depend on three numbers: xt, the number of pulses in the accumulator at the end of the sample; XS, a number extracted from MRed and representing the short stimulus; and XL, a number extracted from MGreen and representing the long stimulus. If (xt/XS) < (XL/xt), then the pigeon is more likely to choose the Red or “Short” response, but if (xt/XS) > (XL/xt), then the pigeon is more likely to choose the Green or “Long” response. SET predicts indifference when (xt/XS) = (XL/xt), which is equivalent to xt = √(XS × XL), the geometric mean of the (subjective) training durations. SET also predicts sigmoid-shaped psychometric functions and superimposition of functions obtained with samples holding the same ratio (Gibbon, 1981; Church, 2003).
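The SET bisection rule can be simulated directly. In this sketch, which is not Gibbon's full formulation, the memory contents are k* times the accumulator count at the end of each rewarded sample, with Gaussian pacemaker rate and k* at illustrative values. On each test trial the phrase "more likely" is replaced by a deterministic comparison, and the trial-to-trial noise in xt, XS, and XL then produces a graded psychometric function.

```python
import random


def set_bisection(short, long, test_durations, n_trials=10000, n_memory=1000,
                  lam_mean=5.0, lam_cv=0.15, k_cv=0.1, seed=2):
    """P(Red | t) under SET's bisection rule (illustrative parameters)."""
    rng = random.Random(seed)

    def pacemaker_rate():
        return rng.gauss(lam_mean, lam_mean * lam_cv)

    # Memory stores indexed by the choice keys; entries are k* times the
    # accumulator count at the end of the rewarded sample.
    m_red = [rng.gauss(1.0, k_cv) * pacemaker_rate() * short for _ in range(n_memory)]
    m_green = [rng.gauss(1.0, k_cv) * pacemaker_rate() * long for _ in range(n_memory)]

    p_red = {}
    for t in test_durations:
        red = 0
        for _ in range(n_trials):
            x_t = pacemaker_rate() * t      # accumulator at sample offset
            x_s = rng.choice(m_red)         # sample representing "short"
            x_l = rng.choice(m_green)       # sample representing "long"
            if x_t / x_s < x_l / x_t:       # the rule (xt/XS) < (XL/xt)
                red += 1
        p_red[t] = red / n_trials
    return p_red


print(set_bisection(1, 4, [1, 1.5, 2, 2.5, 3, 4]))
print(set_bisection(4, 16, [4, 6, 8, 10, 12, 16]))
```

The first function falls from near 1 to near 0 with P(Red|2) close to 0.5, the geometric mean. Because the same seed is used, the "4 vs. 16" function at 4t reproduces the "1 vs. 4" function at t, which is the superimposition property.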

LeT in the Temporal Bisection Task

The model's extension to the bisection task requires one extra vector of associative links and a more complex decision rule (see bottom right panel of Figure 1; Machado, 1997). The states become active at sample onset, the time marker, and each state is now coupled with two responses. The strength of the link connecting state n with response r, Wr(n), changes only after the animal chooses. If the choice response is reinforced, the links between the states and that response increase, whereas the links between the states and the other response decrease, always in proportion to each state's activation. Conversely, if the choice response is extinguished, the links between the states and that response decrease, whereas the links between the states and the other response increase. In other words, the model assumes that, in bisection tasks, when the link between a state and one response changes, the link between the same state and the other response also changes, albeit in the opposite direction. Hence, the model's learning rule implements a strong form of response competition⁴.

On each trial, choice depends on which states are most active at the end of the sample and on the strength of the links between those states and the two responses. To illustrate, in a “1 vs. 4” task, after the 1-s sample the initial states are the most active and because of the reinforcement contingencies their link with Red will be strong whereas their link with Green will be weak, hence the preference for Red after short samples. However, after the 4-s samples, later states will be the most active and because of the reinforcement contingencies their link with Red will be weak whereas their link with Green will be strong, hence the preference for Green after the long samples. LeT predicts that preference for Red decreases as sample duration increases from 1 to 4 s. It also predicts (see Machado, 1997) a PSE close to, but slightly greater than, the geometric mean of the training stimuli and, when λ is proportional to the overall reinforcement rate during the trials, that psychometric functions obtained with samples holding the same ratio will superimpose when plotted on a common axis.
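A compact sketch of LeT in the "1 vs. 4" task follows. Its assumptions go beyond the text and should be read as such: Poisson-shaped state activation (the same assumed cascade as in the FI sketches), errorless training in which the correct key is always chosen and reinforced (so only the reinforcement branch of the learning rule is exercised), and a hypothetical ratio choice rule, P(Red|t) = R_Red/(R_Red + R_Green), standing in for the model's actual decision rule. λ, β, and the numbers of states and trials are illustrative.

```python
import math


def activation(x, n_states):
    # Poisson-shaped activation of states 0..n_states-1 for x = lam * t (assumption).
    return [math.exp(-x) * x**n / math.factorial(n) for n in range(n_states)]


def train_let_bisection(short, long, lam=2.0, beta=0.5, n_states=40, n_trials=2000):
    """Link vectors W_red, W_green after errorless training (illustrative)."""
    w_red = [0.5] * n_states
    w_green = [0.5] * n_states
    for trial in range(n_trials):
        sample = short if trial % 2 == 0 else long
        x = activation(lam * sample, n_states)
        # The correct key is chosen and reinforced on every trial (assumption).
        # Its links increase and the other key's links decrease, each change in
        # proportion to the state's activation: strong response competition.
        if sample == short:
            w_red = [w + beta * a * (1.0 - w) for w, a in zip(w_red, x)]
            w_green = [w - beta * a * w for w, a in zip(w_green, x)]
        else:
            w_green = [w + beta * a * (1.0 - w) for w, a in zip(w_green, x)]
            w_red = [w - beta * a * w for w, a in zip(w_red, x)]
    return w_red, w_green


def p_red(t, w_red, w_green, lam=2.0):
    """Choice rule (hypothetical): relative strength of the Red response."""
    x = activation(lam * t, len(w_red))
    r_red = sum(a * w for a, w in zip(x, w_red))
    r_green = sum(a * w for a, w in zip(x, w_green))
    return r_red / (r_red + r_green)


w_red, w_green = train_let_bisection(1.0, 4.0)
for t in (1, 1.5, 2, 2.5, 3, 3.5, 4):
    print(t, round(p_red(t, w_red, w_green), 3))
```

Because every change to one key's links is mirrored by an opposite change to the other key's links, the two link vectors sum to 1 state by state in this sketch; preference for Red is high after short samples and falls steadily as the sample lengthens.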

Similarities and Differences between SET and LeT

To analyze and test models by experiment, we need to understand first their similarities and differences. To that end, it is useful to compare the models' corresponding structures. Table 1 summarizes the information. The pacemaker–accumulator unit in SET corresponds to the serial organization of states in LeT: As the pacemaker emits pulses at rate λ (SET), the activation spreads across states at rate λ (LeT); at the beginning of each trial, as the accumulator is reset (SET), the first state in the series is aroused (LeT); during the trial, as the accumulator adds pulses (SET), successive states along the series become the most active states (LeT). And as the current number in the accumulator represents elapsed time (SET), the currently most active state also represents elapsed time (LeT). Furthermore, the memory store in SET corresponds to the vector of associative links in LeT. Both represent the subject's learning history, one as a distribution of subjective times of reinforcement (SET), the other as a vector of links with different strengths (LeT).

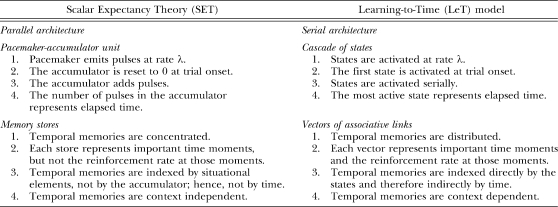

Table 1.

Similarities and differences between SET and LeT.

| Scalar Expectancy Theory (SET) | Learning-to-Time (LeT) model |
| --- | --- |
| Parallel architecture | Serial architecture |
| Pacemaker-accumulator unit | Cascade of states |
| 1. Pacemaker emits pulses at rate λ. | 1. States are activated at rate λ. |
| 2. The accumulator is reset to 0 at trial onset. | 2. The first state is activated at trial onset. |
| 3. The accumulator adds pulses. | 3. States are activated serially. |
| 4. The number of pulses in the accumulator represents elapsed time. | 4. The most active state represents elapsed time. |
| Memory stores | Vectors of associative links |
| 1. Temporal memories are concentrated. | 1. Temporal memories are distributed. |
| 2. Each store represents important time moments, but not the reinforcement rate at those moments. | 2. Each vector represents important time moments and the reinforcement rate at those moments. |
| 3. Temporal memories are indexed by situational elements, not by the accumulator; hence, not by time. | 3. Temporal memories are indexed directly by the states and therefore indirectly by time. |
| 4. Temporal memories are context independent. | 4. Temporal memories are context dependent. |

Despite the structural similarities between the two models, and the fact that they often predict similar outcomes, the models differ in how they conceptualize what animals learn in timing tasks. To exploit these differences empirically and conceptually, we classify them into four types. These types are interrelated and may be seen as different facets of the same underlying contrast, but because each focuses on a slightly different issue related to temporal learning and memory, we present them separately (see Table 1).

Concentrated (SET) vs. distributed (LeT) memory

Perhaps the most obvious difference between the two models is that whereas in SET memory is concentrated in stores or bins (e.g., MRed and MGreen in the bisection task described above), in LeT it is distributed across links. Moreover, in SET the memory bins have no internal structure. Their contents are like numbered balls mixed in an urn, with the numbers representing subjective time moments. Regardless of when the memory is sampled, each ball has the same probability of being selected⁵. In LeT, memories are distributed among links that couple the states to the operant response. The states structure the memory. Metaphorically speaking, memory sampling takes place one link at a time: when the first state is the most active, the first link is sampled and may be expressed in behavior; when the second state is the most active, the second link is sampled, and so on.

Retrieval: time independent (SET) vs. time dependent (LeT)

As a consequence of the previous point, the role of time in memory retrieval also differs. In SET, memory receives numbers from the accumulator, but otherwise the two structures are not related. In particular, accessing the memory and retrieving its contents does not depend on the contents of the accumulator. Because the accumulator represents elapsed time, we conclude that memory access and retrieval are time independent. In contrast, in LeT a behavioral state must be active for its link to be expressed behaviorally. One could say that the most active state (the equivalent of the accumulator content) retrieves the associative link (the equivalent of the memory content). Pursuing the analogy, because the links are sampled by the states, which represent time, one could say that in LeT retrieval is time dependent. This difference epitomizes the parallel and serial architectures of SET and LeT, respectively.
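The contrast between concentrated, time-independent retrieval and distributed, time-dependent retrieval can be put in code. In the sketch below, an illustration rather than either model's full machinery, the SET store is a flat list sampled uniformly (the urn), and its retrieval function has no time argument at all; the LeT retrieval function takes elapsed time t, computes the (assumed Poisson-shaped) state activations, and returns the link of the most active state. The memory counts and coupling values are hypothetical.

```python
import math
import random


def set_retrieve(memory, rng):
    """SET: draw one value from the store. Elapsed time is not an argument,
    so what is retrieved cannot depend on it."""
    return rng.choice(memory)


def let_retrieve(t, lam, links):
    """LeT: the link expressed at time t belongs to the most active state."""
    x = lam * t
    activation = [math.exp(-x) * x**n / math.factorial(n) for n in range(len(links))]
    most_active = max(range(len(links)), key=lambda n: activation[n])
    return most_active, links[most_active]


rng = random.Random(0)
memory = [rng.gauss(150.0, 20.0) for _ in range(100)]   # hypothetical counts
print(set_retrieve(memory, rng))

links = [0.0, 0.1, 0.3, 0.6, 0.9, 0.9, 0.6]              # hypothetical couplings
for t in (0.5, 2.5, 4.5):
    print(t, let_retrieve(t, lam=1.0, links=links))
```

As t grows, different links are expressed (states 0, 2, and 4 here), whereas the SET draw has the same distribution whenever it is made.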

What is represented in memory? Relative (SET) vs. absolute (LeT) local reinforcement rates

In SET, the memory contents in time-based schedules depend only on the moments of reinforcement; if a reinforcer is collected at time t, a count representing t is added to memory; but if no reinforcer is collected at t, memory does not change. Because extinction plays no role in the model, the memory in SET can represent only local relative rates of reinforcement. In contrast, in LeT, the associative vectors represent not only the moments of reinforcement (via which link is strengthened), but also the absolute reinforcement rates at those moments (via how strong each link is). In LeT, the local rate is, in a sense, part of what the animal effectively learns in timing tasks. Another way to see this difference is to realize that for all practical purposes the memory stores in SET are like a relative frequency histogram, or a probability distribution. From it one can determine whether reinforcement is more likely to occur at time t1 or time t2 into the trial, but not how frequent reinforcement is at time t1. In LeT, the strengths of the links are more like an absolute frequency histogram, not a probability distribution, and from it one can determine not only whether reinforcement is more likely to occur at time t1 or time t2, but also how frequent reinforcement is at time t1.
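The relative-versus-absolute distinction can be seen in a two-schedule example. Both hypothetical schedules reinforce only at 10 s into the trial, one on 80% and the other on 40% of trials. For SET, the sketch treats the store as a relative-frequency histogram of reinforcement times. For LeT it uses a deliberately simplified one-link model in which, on each trial, the link gains β(1 − W) if food occurs and loses αW otherwise, and it reports the strength at which the expected change is zero. The α and β values are illustrative.

```python
from collections import Counter


def set_memory(reinforcement_times):
    """SET store as a relative-frequency histogram of reinforcement times.

    Only reinforced trials add entries; unreinforced trials leave it unchanged.
    """
    counts = Counter(reinforcement_times)
    total = sum(counts.values())
    return {t: c / total for t, c in counts.items()}


def let_link_equilibrium(p, alpha, beta):
    """Link strength at which the expected change is zero when the state is
    active at a moment reinforced with probability p on each trial:
    p * beta * (1 - W) = (1 - p) * alpha * W  (hypothetical one-link model)."""
    return p * beta / (p * beta + (1 - p) * alpha)


# Two hypothetical schedules over 100 trials: food at 10 s on 80% vs 40% of trials.
print(set_memory([10.0] * 80), set_memory([10.0] * 40))
print(round(let_link_equilibrium(0.8, alpha=0.1, beta=0.2), 3),
      round(let_link_equilibrium(0.4, alpha=0.1, beta=0.2), 3))
```

Both SET stores are {10.0: 1.0}, so the store cannot tell the two schedules apart, whereas the LeT link settles near 0.889 on the richer schedule and near 0.571 on the leaner one.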

Context independent (SET) vs. context dependent (LeT) memories

This is perhaps the least obvious difference between the two models. To illustrate it, consider the bisection task described above. According to SET the contents of the MRed and MGreen memory stores depend only on the duration of the two samples. The contents of MGreen, for example, depend on the duration of the long sample (4 s) and are not affected by the duration of the short sample (1 s). This means that if the pigeons were trained with a short sample of 2 s, instead of 1 s, the contents of MGreen would remain the same because the 4-s sample did not change. We refer to the assumption that the contents of a memory store depend exclusively on the duration of its associated sample and not on the duration of the alternative sample as “context-independent memories”.

In contrast, in LeT the strengths of the links are context dependent. To understand this point, consider the links between the states and the Green response (see Figure 1, bottom right panel). Their final values will depend on the duration of the long and short samples. Given the model's learning rule, the links with the Green response change not only after 4-s samples, when Green is reinforced and Red extinguished, but also after 1-s samples, when Green is extinguished and Red is reinforced. Hence, if the duration of the short sample changes, the final values of the links connecting the states to the Green response will also change. Because the link vectors in LeT correspond to the memory stores in SET, we conclude that temporal memory is context sensitive in LeT but not in SET.
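Context dependence can be shown by training the same kind of LeT sketch with two different short samples while the long sample stays at 4 s. As in the bisection sketch, the Poisson-shaped activation, errorless training, λ, β, and the trial counts are assumptions made for illustration. Under SET, by contrast, MGreen would be filled only with counts from 4-s samples, so nothing in it could depend on the short sample.

```python
import math


def activation(x, n_states):
    # Poisson-shaped activation of states for x = lam * t (assumed cascade).
    return [math.exp(-x) * x**n / math.factorial(n) for n in range(n_states)]


def green_links(short, long=4.0, lam=2.0, beta=0.5, n_states=40, n_trials=2000):
    """LeT links to Green after errorless training on {S_short, S_long} -> {Red, Green}."""
    w_green = [0.5] * n_states
    for trial in range(n_trials):
        on_short_trial = trial % 2 == 0
        x = activation(lam * (short if on_short_trial else long), n_states)
        if on_short_trial:
            # Red reinforced: Green links weaken in proportion to activation.
            w_green = [w - beta * a * w for w, a in zip(w_green, x)]
        else:
            # Green reinforced: Green links strengthen in proportion to activation.
            w_green = [w + beta * a * (1.0 - w) for w, a in zip(w_green, x)]
    return w_green


context_1 = green_links(short=1.0)   # "1 vs. 4" training
context_2 = green_links(short=2.0)   # "2 vs. 4" training
largest_gap = max(abs(a - b) for a, b in zip(context_1, context_2))
print(f"largest difference in Green links: {largest_gap:.2f}")
```

The Green links differ substantially between the "1 vs. 4" and "2 vs. 4" histories, even though Green was always reinforced after the same 4-s sample.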

Given these differences, researchers would naturally like to know whether they can be tested empirically and, equally important, whether they have theoretical import. We address these issues next.

II. EMPIRICAL TESTS: SET VERSUS LET

The first and larger set of studies described below deals mainly with the issue of context sensitivity. The second set of studies deals with the issue of what is represented in memory. The conceptual analyses that follow them deal with the issue of concentrated versus distributed memories and how temporal memories are formed and accessed.

Is Temporal Memory Context Sensitive? The Double Bisection Studies

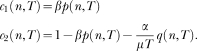

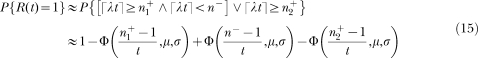

To examine empirically the difference between the two models regarding context sensitivity, we developed the double bisection task (e.g., Machado & Keen, 1999). Its key idea is to vary the context of a sample in two temporal discriminations and see if that variation affects the generalization tests. Figure 2 shows the details. In a matching-to-sample task, a pigeon initially learns to choose a Red key after 1-s samples and a Green key after 4-s samples⁶. This discrimination may be represented by a mapping between the stimulus pair (S1, S4) and the response pair (Red, Green), {S1, S4} → {Red, Green}, where the subscripts identify the sample durations and the arrow means that the first response is rewarded following the first sample and the second response is rewarded following the second sample. The pigeon then learns a second discrimination, to choose a Blue key after 4-s samples and a Yellow key after 16-s samples, {S4, S16} → {Blue, Yellow}. Finally, the two discriminations are integrated in the same session. Half of the trials are the relatively short trials {S1, S4} → {Red, Green}, henceforth referred to as “Short” trials, and half of the trials are the “Long” trials {S4, S16} → {Blue, Yellow}.

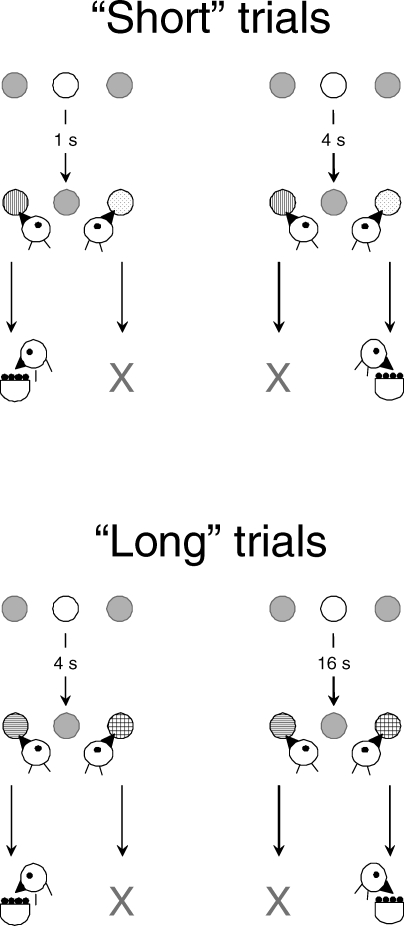

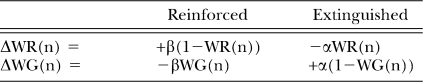

Fig 2.

A double bisection task is a conditional discrimination in which the animal learns two mappings, {S1, S4}→{Red, Green} on “Short” trials, and {S4, S16}→{Blue, Yellow} on “Long” trials. The subscripts indicate the sample duration; the arrow indicates that the first and second responses in each pair are correct following the first and second samples, respectively.

Having learned the two discriminations, what will the pigeon do in generalization tests in which the duration of the sample ranges from 1 to 16 s and the choice keys are Green and Blue? Both keys were associated with the same sample duration, 4 s, but their contexts differed. The context for the Green choices was the 1-s sample associated with Red, whereas the context for the Blue choices was the 16-s sample associated with Yellow. Will a sample be represented differently when it is embedded in different contexts?

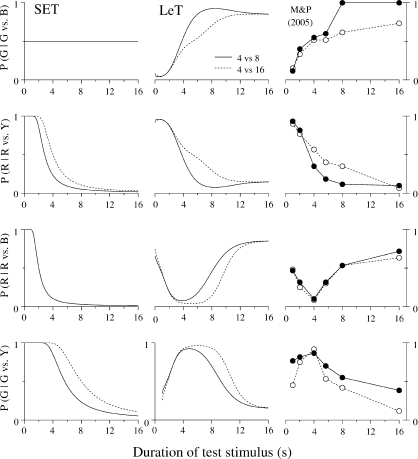

SET is readily extended to the double bisection task. Instead of two, the animal forms four memories, each indexed by a different key (i.e., MRed, MGreen, MBlue and MYellow) and associated with one sample (1 s, 4 s, 4 s, and 16 s, respectively). Because the memories are context independent, the contents of MGreen and MBlue will be statistically identical. That is, the distributions of counts in the two stores will have the same mean and standard deviation. Hence, when the pigeon has a choice between the Green and Blue keys after a sample t-s long, it will compare the number in the accumulator with two samples extracted from identical distributions. The net result will be that preference will not change with sample duration. As the dotted line in the top left panel of Figure 3 shows, the function plotting the preference for Green over Blue, P(G|G vs. B), against t will be a horizontal line.
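To make the symmetry argument concrete, here is a minimal sketch of SET's comparison on a Green/Blue test. It assumes Gaussian scalar noise with a hypothetical coefficient of variation `CV`, and it states the decision rule as "choose the key whose memory sample is closer to the accumulator count on a log (ratio) scale". That is one common way of expressing SET's comparison, used here as an assumption rather than the exact rule of any particular paper. Because the samples from `M_Green` and `M_Blue` come from the same distribution, the estimated preference should hover around 0.5 at every sample duration.

```python
import math
import random

CV = 0.2  # hypothetical scalar coefficient of variation


def noisy(rng, mean):
    """Scalar-noise representation of a duration (kept strictly positive)."""
    return max(rng.gauss(mean, CV * mean), 1e-9)


def p_green_over_blue(t, trials=20000, seed=0):
    """Estimated P(Green | Green vs. Blue) after a t-s sample under SET."""
    rng = random.Random(seed)
    green = 0
    for _ in range(trials):
        x = noisy(rng, t)          # accumulator count after the sample
        m_green = noisy(rng, 4.0)  # sample from M_Green (trained with 4-s samples)
        m_blue = noisy(rng, 4.0)   # sample from M_Blue (also trained with 4-s samples)
        # choose the key whose memory sample is closer to x in ratio terms
        if abs(math.log(x / m_green)) < abs(math.log(x / m_blue)):
            green += 1
    return green / trials


for t in (1, 2, 4, 8, 16):
    print(t, round(p_green_over_blue(t), 3))
```

Whatever the sample duration, the two memory samples are exchangeable, so the psychometric function is flat, as the dotted line in Figure 3 shows.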

Fig 3.

The left panels show the predictions of SET and LeT for the generalization tests. In these tests, the sample ranges from 1 to 16 s and the comparison stimuli are one of the four pairs Green/Blue, Red/Yellow, Red/Blue, and Green/Yellow. The test with Green/Blue (top) is critical because the two keys were associated with the same 4-s sample duration. On these tests, SET predicts no effect of sample duration, whereas LeT predicts stronger preference for Green with longer samples, the context effect. The right panels show the data from five studies: Machado & Keen (1999), Machado & Pata (2005), Oliveira & Machado (2008), Arantes & Machado (2008) and Arantes (2008).

LeT also is readily extended to the double bisection task. Instead of two, there will be four link vectors (WRed, WGreen, WBlue and WYellow) coupling the states with the operant responses. Due to the contingencies of reinforcement and the model's learning rule, these vectors will change during training. Table 2 helps to understand how. We divide the states into three classes, those most active after 1-s samples (“Early”), 4-s samples (“Middle”), and 16-s samples (“Late”). Initially, they are all equally associated with the four responses (i.e., W_r(n) = 0.5 for every response r and state n). Then, during the “Short” trials, the “Early” states will become coupled strongly with Red and weakly with Green; the initial coupling of these states with Blue and Yellow will remain roughly unchanged because, when these states are the most active, rarely is the pigeon given a choice between Blue and Yellow. Hence, as the first column of Table 2 shows, at the end of training WRed(“Early”) ≈ 1, WGreen(“Early”) ≈ 0, and WBlue(“Early”) ≈ WYellow(“Early”) ≈ 0.5. The remaining columns show how the “Middle” and “Late” states become coupled with the responses. At the steady state, the “Early” states will be coupled more with Blue than with Green and therefore, after 1-s samples, the pigeon will prefer Blue to Green. Conversely, the “Late” states will be coupled more with Green than with Blue and therefore, after 16-s samples, the pigeon will prefer Green to Blue. More generally, as the solid line in the top left panel of Figure 3 shows, LeT predicts that preference for Green should increase with sample duration.

Table 2.

Strength of the links (W) between the states and the choice responses.

| Response | “Early” states | “Middle” states | “Late” states |
| --- | --- | --- | --- |
| Red | W → 1 | W → 0 | W ≈ 0.5 |
| Green | W → 0 | W → 1 | W ≈ 0.5 |
| Blue | W ≈ 0.5 | W → 1 | W → 0 |
| Yellow | W ≈ 0.5 | W → 0 | W → 1 |

Note. “Early”, “Middle” and “Late” represent the states most active after 1-, 4-, and 16-s samples, respectively. Initially, all links equal 0.5. The arrows show the effects of training in the double discrimination {S1, S4} → {Red, Green} and {S4, S16} → {Blue, Yellow}.
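The training that produces Table 2 can be sketched with a deliberately coarse version of LeT in which each sample duration fully activates one class of states (“Early”, “Middle”, or “Late”) and no other. That all-or-none activation, the equal rates `beta` and `alpha`, and the number of training blocks are simplifying assumptions; the model itself uses a cascade of serially activated states. On each trial the reinforced key's link moves toward 1 and the extinguished key's link moves toward 0.

```python
STATES = ("Early", "Middle", "Late")
KEYS = ("Red", "Green", "Blue", "Yellow")

# (sample duration, state class it activates, reinforced key, extinguished key)
TRIALS = [
    (1, "Early", "Red", "Green"),     # "Short" trials
    (4, "Middle", "Green", "Red"),
    (4, "Middle", "Blue", "Yellow"),  # "Long" trials
    (16, "Late", "Yellow", "Blue"),
]


def train(blocks=200, beta=0.1, alpha=0.1):
    """Return W[key][state] after `blocks` passes through the four trial types."""
    W = {key: {state: 0.5 for state in STATES} for key in KEYS}  # initial links
    for _ in range(blocks):
        for _sample, state, rewarded, extinguished in TRIALS:
            W[rewarded][state] += beta * (1.0 - W[rewarded][state])  # reinforcement
            W[extinguished][state] -= alpha * W[extinguished][state]  # extinction
    return W


W = train()
for key in KEYS:
    print(key, [round(W[key][state], 2) for state in STATES])
```

Links never trained in a given state class (e.g., Blue and Yellow in the “Early” states) keep their initial value of 0.5, which is exactly why Blue beats Green after 1-s samples.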

Another way to understand LeT's predictions is in terms of approach and avoidance. During the “Short” trials, the pigeon learns to approach Red and avoid Green after 1-s samples, but it learns little if anything regarding Blue and Yellow. Hence, during the tests with 1-s samples and the Blue and Green keys, the pigeon, deprived of the opportunity to choose Red, avoids Green and therefore chooses Blue. By the same token, during the “Long” trials, the pigeon learns to approach Yellow and avoid Blue after 16-s samples, but it learns little if anything regarding Red and Green. Hence, during the tests with 16-s samples and the Blue and Green keys, the pigeon, deprived of the opportunity to choose Yellow, avoids Blue and therefore chooses Green. Preference for Green should increase with the sample duration, the context effect.7

Although the tests with the Green and Blue keys are the most critical to examine the context sensitivity issue, three other tests may be run to further compare and contrast the models. After samples ranging from 1 to 16 s, the pigeon is given a choice between two other keys that have not been paired before, Red and Yellow, Red and Blue, or Green and Yellow. As the dotted lines in the left panels of Figure 3 show, SET predicts that the psychometric functions for the three tests will have the same shape—in fact, it predicts that they will be scale transforms. In contrast, LeT predicts a descending curve when the choice is between Red and Yellow, a U-shaped curve when the choice is between Red and Blue, and an inverted U-shaped curve when the choice is between Green and Yellow. The general trend of these predictions is readily understood by comparing the rows of the two responses in Table 2 (see Machado & Pata, 2005, for quantitative details).
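The comparison of rows in Table 2 can be done mechanically. The sketch below takes the asymptotic values in Table 2 (W → 1 written as 1.0, W → 0 as 0.0) and turns the difference between two keys' links into a choice probability with a logistic function. The logistic rule and its sensitivity `k` are illustrative assumptions, not LeT's published choice rule; only the ordering across the “Early”, “Middle”, and “Late” states matters here.

```python
import math

# Asymptotic link strengths from Table 2
TABLE2 = {
    "Red": {"Early": 1.0, "Middle": 0.0, "Late": 0.5},
    "Green": {"Early": 0.0, "Middle": 1.0, "Late": 0.5},
    "Blue": {"Early": 0.5, "Middle": 1.0, "Late": 0.0},
    "Yellow": {"Early": 0.5, "Middle": 0.0, "Late": 1.0},
}
STATES = ("Early", "Middle", "Late")
PAIRS = [("Green", "Blue"), ("Red", "Yellow"), ("Red", "Blue"), ("Green", "Yellow")]


def preference(first, second, state, k=5.0):
    """P(first over second) as a logistic function of the link difference (assumed rule)."""
    diff = TABLE2[first][state] - TABLE2[second][state]
    return 1.0 / (1.0 + math.exp(-k * diff))


for pair in PAIRS:
    print(pair, [round(preference(*pair, state), 3) for state in STATES])
```

Read left to right, the four rows give an increasing function (Green/Blue), a decreasing one (Red/Yellow), a U shape (Red/Blue), and an inverted U (Green/Yellow).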

The basic finding: The Context Effect

The right panels in Figure 3 show the average results of five studies. Machado and Keen's (1999) study used the basic procedure described above. The other four studies changed the basic procedure as follows: a) Arantes (2008) replaced the simultaneous discrimination task with its successive (or go/no-go) version; b) Arantes and Machado (2008) never integrated the “Short” and “Long” training trials in the same session; c) Oliveira and Machado (2008) used visually different sample stimuli during the “Short” and “Long” trials; and d) Machado and Pata (2005) ran the test trials under nondifferential reinforcement instead of extinction.

Despite marked procedural differences, the results were similar. When the choice was between the Green and Blue keys (top right panel), the keys associated with the same sample duration but in different contexts, the preference for Green increased with sample duration. The result has substantial generality and is consistent with LeT but not with SET. In the test with Red and Yellow, the keys associated with the shortest and longest samples, respectively, preference for Red decreased with sample duration, a result consistent with both models. In the remaining two tests there was more variation across pigeons. In the Red/Blue case, the psychometric function was roughly U-shaped, whereas in the Green/Yellow case it was roughly inverted U-shaped. Again, this pattern of results is qualitatively closer to LeT than to SET.

Quantifying the context effect

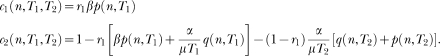

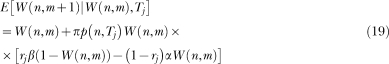

In addition to predicting the context effect, LeT can go one step further and quantify it. Suppose two groups of pigeons learn the double temporal bisection task. The “Short” trials are the same for both groups but the “Long” trials differ. For Group 16 they are {S4, S16} → {Blue, Yellow}, as in the previous experiments. For Group 8 they are {S4, S8} → {Blue, Yellow}. The only difference between the groups is the duration of the longest sample, 16 s or 8 s. Will both groups show the context effect? And if so, will the magnitude of the effect differ between them?

The left and middle panels of Figure 4 show the predictions of each model. For the critical test between Green and Blue, SET again predicts no effect of sample duration. LeT predicts that preference for Green should increase with sample duration in both groups (the context effect), and that it should increase faster in Group 8 than in Group 16. That is, Group 8 should show a stronger effect. The reason is that, according to the model, avoidance of Blue at 8 s will be stronger in Group 8 than in Group 16; hence, at t = 8 s, Group 8 should show the stronger preference for Green over Blue.8
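The Group 8 versus Group 16 comparison can be explored with a small simulation in the spirit of LeT. The following are assumptions made for illustration. The states are activated serially, so that at time t state n has the Poisson activation exp(-λt)(λt)^n/n! with a fixed λ (the full model also lets λ vary across trials to obtain scalar timing). On every training trial the correct key's links are reinforced and the other key's links extinguished, in proportion to that activation, as described earlier for the Green and Red links. Choice follows a ratio rule on activation-weighted link sums. `N_STATES`, `LAMBDA`, `beta`, `alpha`, and `epochs` are hypothetical values.

```python
import math

N_STATES = 60  # hypothetical number of serial states
LAMBDA = 1.0   # hypothetical transition rate of the state cascade (per second)


def activation(t):
    """Poisson activation of states 0..N_STATES-1 at time t > 0."""
    lt = LAMBDA * t
    return [math.exp(n * math.log(lt) - lt - math.lgamma(n + 1)) for n in range(N_STATES)]


def train(long_sample, epochs=2000, beta=0.05, alpha=0.05):
    """Double bisection training; returns the link vector of each key."""
    W = {key: [0.5] * N_STATES for key in ("Red", "Green", "Blue", "Yellow")}
    trials = [(1, "Red", "Green"), (4, "Green", "Red"),                 # "Short" trials
              (4, "Blue", "Yellow"), (long_sample, "Yellow", "Blue")]  # "Long" trials
    acts = {t: activation(t) for t in (1, 4, long_sample)}
    for _ in range(epochs):
        for t, rewarded, extinguished in trials:
            x, w_r, w_e = acts[t], W[rewarded], W[extinguished]
            for n in range(N_STATES):
                w_r[n] += beta * x[n] * (1.0 - w_r[n])  # reinforcement of the correct key
                w_e[n] -= alpha * x[n] * w_e[n]         # extinction of the other key
    return W


def p_green_over_blue(W, t):
    """Ratio rule on the activation-weighted link sums (assumed choice rule)."""
    x = activation(t)
    green = sum(a * w for a, w in zip(x, W["Green"]))
    blue = sum(a * w for a, w in zip(x, W["Blue"]))
    return green / (green + blue)


for group in (8, 16):
    W = train(group)
    print(f"Group {group}:", [round(p_green_over_blue(W, t), 2) for t in (1, 2, 4, 8, 16)])
```

In this sketch the Green links are identical in the two groups because the “Short” trials are identical; the groups differ only in how strongly the Blue links of the states active around 8 s are extinguished, which is the source of the stronger effect in Group 8.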

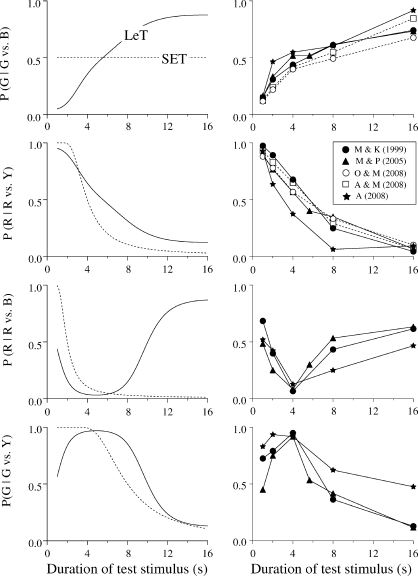

Fig 4.

The left and middle panels show the predictions of SET and LeT, respectively, for the test trials of two groups exposed to a double bisection task. Both groups learned the mapping {S1, S4}→{Red, Green} on “Short” trials, but, on “Long” trials, Group 8 learned the mapping {S4, S8}→{Blue, Yellow}, whereas Group 16 learned the mapping {S4, S16}→{Blue, Yellow}. The right panels show the data from Machado & Pata (2005).

For the remaining tests, both models predict that preference for Red over Yellow will decrease with sample duration faster for Group 8 than for Group 16. Given a choice between Red and Blue, SET predicts the same monotonically decreasing function for the two groups, whereas LeT predicts two distinct U-shaped functions. The function for Group 16 should be wider than the function for Group 8. And given a choice between Green and Yellow, SET predicts that preference for Green should decrease with sample duration, but faster for Group 8 than for Group 16. LeT predicts two inverted U-shaped functions, with the function for Group 16 being wider than the function for Group 8.

The rightmost panels of Figure 4 show the experimental results (Machado & Pata, 2005). The top panel reveals the context effect in both groups—preference for Green over Blue increased with sample duration. It also reveals that preference for Green increased faster for Group 8 than Group 16. These results are consistent with LeT but not SET. The remaining panels show that the shape of LeT's predicted curves was roughly similar to the shape of the obtained curves. The major discrepancy between LeT and the data occurred for Group 16 in the two bottom panels, for in each case the model predicted curves considerably wider than the observed curves. In fact, LeT always predicts narrower curves for Group 8 than for Group 16, a prediction at odds with the data. Concerning SET, the shape of its predicted curves agreed with the data reasonably well when the choice was between Red and Yellow (second row), but in the other cases the shape of SET's curves was at odds with the shape of the obtained curves.

Converging evidence for the context effect

Machado and Arantes (2006) attempted to obtain the context effect in a different way. Their rationale was similar to using the retardation-of-acquisition test to determine whether a stimulus is a conditioned inhibitor (Rescorla, 1969). After a group of pigeons learned the prototypical double bisection task, it was divided into two subgroups, each of which learned a new temporal discrimination involving the 1-s and 16-s samples and the Green and Blue keys. The only difference between the two subgroups was that one learned the mapping {S1, S16} → {Blue, Green} and the other learned the alternative mapping {S1, S16} → {Green, Blue}. At issue was which subgroup would learn the new discrimination faster.

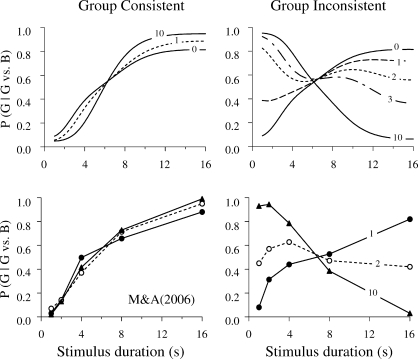

SET predicts equal speeds of acquisition: because memories are context independent, there is no reason for one of the discriminations to be easier than the other. LeT predicts sharply different results for the two groups. According to LeT, learning the double bisection task creates a tendency to prefer Blue to Green after 1-s samples, but Green to Blue after 16-s samples. Therefore, for the group learning {S1, S16} → {Blue, Green} (Group Consistent), the new task will be easy because it is consistent with the tendency induced by the previous training. In contrast, for the group learning {S1, S16} → {Green, Blue} (Group Inconsistent), the new task will be difficult because it is inconsistent with that tendency. According to LeT, then, the acquisition of Group Inconsistent should be retarded compared to the acquisition of Group Consistent.
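The retardation-of-acquisition logic can be sketched with the coarse three-state version of LeT, starting from the asymptotic Table 2 links for the Green and Blue keys in the “Early” (1-s) and “Late” (16-s) states. The ratio choice rule, the learning rates, the number of trials per session, and the 80% criterion are hypothetical choices for illustration. The sketch only shows why one mapping starts at criterion whereas the other must first undo the tendency left by double bisection training.

```python
OTHER = {"Green": "Blue", "Blue": "Green"}


def initial_links():
    """Green and Blue links after double bisection training (Table 2 asymptotes)."""
    return {"Green": {"Early": 0.0, "Late": 0.5},
            "Blue": {"Early": 0.5, "Late": 0.0}}


def p_choice(W, state, key):
    """Ratio rule (assumed): probability of choosing `key` over the other key."""
    total = W[key][state] + W[OTHER[key]][state]
    return 0.5 if total == 0 else W[key][state] / total


def sessions_to_criterion(key_after_1s, key_after_16s, criterion=0.8,
                          trials_per_session=5, beta=0.1, alpha=0.1, max_sessions=100):
    """Sessions of new training needed before both samples are at criterion."""
    W = initial_links()
    schedule = [("Early", key_after_1s), ("Late", key_after_16s)]
    for session in range(max_sessions + 1):
        if all(p_choice(W, state, key) >= criterion for state, key in schedule):
            return session
        for _ in range(trials_per_session):
            for state, key in schedule:
                W[key][state] += beta * (1.0 - W[key][state])         # correct key reinforced
                W[OTHER[key]][state] -= alpha * W[OTHER[key]][state]  # other key extinguished
    return None


consistent = sessions_to_criterion("Blue", "Green")    # {S1, S16} -> {Blue, Green}
inconsistent = sessions_to_criterion("Green", "Blue")  # {S1, S16} -> {Green, Blue}
print("Consistent:", consistent, "Inconsistent:", inconsistent)
```

Group Consistent meets the criterion before any new training, whereas Group Inconsistent starts with its preferences reversed and needs several sessions, the qualitative pattern shown in Figure 5.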

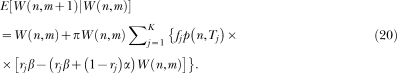

The top panels of Figure 5 show LeT's specific predictions. During the first session with the new discrimination, both groups will behave similarly despite opposite contingencies of reinforcement. Whereas Group Consistent will be close to the steady state from the first session, Group Inconsistent will need a few sessions to reach the steady state. The bottom panels show the results. For Group Consistent, preference for Green increased with sample duration and the psychometric functions did not change appreciably from the first to the last session. For Group Inconsistent, during the first session, preference for Green increased with sample duration despite the opposite contingencies of reinforcement! During the second session, preference did not change systematically with sample duration. By the last session, preference for Green decreased systematically with sample duration in accord with the contingencies of reinforcement. This pattern of results is strongly consistent with LeT but not with SET.

Fig 5.

The top panels show the predictions of LeT for Groups Consistent and Inconsistent. Each curve shows the probability of choosing Green over Blue as a function of sample duration. The number on each curve identifies the session for which the curve applies (e.g., curve 0 = immediately after double bisection training, curve 1 = after one session with the new discrimination training, etc.). The bottom panels show the data from Machado & Arantes (2006). Choices following the 1-s and 16-s samples were reinforced provided they were correct, but choices following the 2-, 4- and 8-s samples were not reinforced. Green was correct following the 16-s samples for Group Consistent and the 1-s samples for Group Inconsistent.

Summary

The studies reviewed above (see also Oliveira & Machado, 2009) exploited one of the differences between the SET and LeT models, the context sensitivity of temporal memories. In the double bisection task, LeT predicted a context effect but SET did not. In all studies, the context effect was obtained: in simultaneous and successive discrimination tasks, directly on test trials and indirectly through its effects on the acquisition of a new discrimination, and with and without local or global cues signaling the forthcoming trial. Examining the two models has revealed a previously unknown property of timing, the context effect: Temporal memories are context dependent.

What is Represented in Memory? The Free-Operant Psychophysical Procedure Studies

The next studies examined another difference between SET and LeT, namely, what is represented in memory. Imagine this hypothetical situation. Two pigeons are exposed to 60-s trials. For pigeon A, one reinforcer is scheduled on each trial; for pigeon B one reinforcer is scheduled every fourth trial on average. For both pigeons, scheduled reinforcers are delivered randomly at 15-s or 45-s since trial onset; never at other times. Hence, pigeons A and B receive food at the same moments within the trial, but the absolute reinforcement rate at those moments is four times higher for pigeon A than pigeon B.

According to SET, the memory contents of pigeons A and B will be identical because memory represents only the moments of reinforcement. The two pigeons will learn that reinforcement occurs at 15 s and 45 s, but because extinction plays no direct role in timing, they will not learn how often reinforcement occurs at those moments. In contrast, according to LeT, the memories of the two pigeons (i.e., the associative links) will differ because memory represents both the moments of reinforcement (through which links change) and the rate of reinforcement at those moments (through how much the links change with reinforcement and extinction). Therefore, the two pigeons will learn not only that reinforcement occurs at 15 s and 45 s, but also how often it occurs at those times.9
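A toy simulation makes the contrast between the two memory contents concrete. It assumes, for illustration only, that the SET store is simply the list of reinforcement times. It also assumes that LeT's relevant memory can be summarized by the link of a single hypothetical “15-s state”. That link is strengthened (rate `beta`) when food arrives at 15 s and weakened (rate `alpha`) on every other trial, when the state is passed without food. Both rates are hypothetical.

```python
import random


def simulate(p_trial, trials=20000, beta=0.2, alpha=0.05, seed=0):
    """Return (share of 15-s entries in the SET store, mean late link of the 15-s state).

    p_trial: probability that a trial carries a reinforcer (1.0 for A, 0.25 for B).
    """
    rng = random.Random(seed)
    store = []       # SET: only the times of reinforcement are saved
    w = 0.5          # LeT: link of the state most active around 15 s
    late_links = []  # link values over the second half of training
    for i in range(trials):
        food_time = rng.choice((15, 45)) if rng.random() < p_trial else None
        if food_time is not None:
            store.append(food_time)
        if food_time == 15:
            w += beta * (1.0 - w)  # reinforced at 15 s
        else:
            w -= alpha * w         # 15-s state passed without food
        if i >= trials // 2:
            late_links.append(w)
    return store.count(15) / len(store), sum(late_links) / len(late_links)


for name, p in (("A", 1.0), ("B", 0.25)):
    share_15, link = simulate(p)
    print(name, round(share_15, 3), round(link, 3))
```

Both stores contain reinforcement times split evenly between 15 s and 45 s, so the SET memories are statistically identical. Because the link update is linear, the expected link settles at qβ/(qβ + (1 − q)α), where q is the probability of food at 15 s on a trial. With these values that is 0.8 for pigeon A (q = 0.5) and about 0.36 for pigeon B (q = 0.125).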

The basic finding

One way to examine this issue empirically is through the free operant psychophysical procedure, FOPP (Bizo & White, 1994a, 1994b, 1995a, 1995b; Killeen, Hall, & Bizo, 1999; Stubbs, 1980). A 50-s trial starts with the illumination of two keylights, L and R. For the first 25 s only L choices are reinforceable; for the last 25 s only R choices are reinforceable. During a baseline condition the reinforcers are scheduled by two independent Variable-Interval (VI) 60-s schedules. The results show that, as time into the trial elapses, the proportion of R pecks increases from 0 to 1 according to a sigmoid function, with indifference around the middle of the trial. This finding is illustrated by the empty squares in the top left panel of Figure 6 (Bizo & White, 1995a). When the experimenters made the L key richer by changing the VI schedules (e.g., VI 40 s for L and VI 120 s for R), the birds switched to the R key later than during the baseline and the psychometric function shifted to the right. Conversely, when the experimenters made the L key poorer (VI 120 s for L and VI 40 s for R), the animals switched to the R key earlier than in the baseline and the psychometric function shifted to the left.

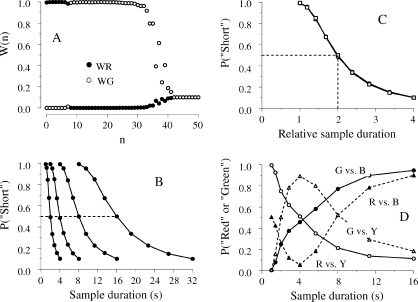

Fig 6.

Psychometric functions obtained with the Free Operant Psychophysical Procedure: pecks to one key, say L, are reinforced (according to one or more VIs) only during the first half of the trial, and pecks to the other key, R, are reinforced (also according to one or more VIs) only during the second half of the trial. In the top panels, when the overall reinforcement rate favored the L key (first one of the two VI schedules), the psychometric function shifted to the right; when it favored the R key (last one of the two VI schedules), it shifted to the left. The middle panels show that when the overall reinforcement rates differ, the psychometric function shifts only if the local reinforcement rates differ around the middle of the trial; the bottom panels show that when the overall reinforcement rates are equal, the functions shift provided the local reinforcement rates differ in the middle of the trial. The data are from Bizo & White (1995a) (top left panel) and Machado & Guilhardi (2000) (remaining panels). The curves show the fit of the LeT model.

Machado and Guilhardi (2000) reproduced Bizo and White's (1995a) experiment, but, for reasons explained below, they divided the 60-s trial into four segments. Pecks to the L key were reinforced only during the first two segments; pecks to the R key were reinforced only during the last two segments. Reinforcers were scheduled by four independent VIs, each operating during one segment. The notation “120–120 / 40–40”, for example, means that L pecks were reinforced according to a VI 120 s during the first segment and another VI 120 s during the second segment, but R pecks were reinforced according to a VI 40 s during the third segment and another VI 40 s during the fourth segment. The results, displayed in the top right panel of Figure 6, show that when the pigeons experienced a threefold difference in reinforcement rate between the L and R keys, the psychometric functions shifted appreciably.

SET has not been applied to the FOPP. However, its usual rules of memory formation would suggest the following account. The animal would form two memory stores, one containing the times of reinforcement for L key pecks and the other the times of reinforcement for R key pecks. Given that reinforcers are set up according to a VI schedule, the reinforced times will be distributed uniformly across the interval and independently of the VI parameters (see Machado & Guilhardi, 2000). Hence, according to SET, the animal's memories will not change with variations in the VI schedules and therefore the psychometric functions should not shift. More generally, the memory contents of SET cannot predict the experimental findings because they are insensitive to changes in reinforcement rate that are not accompanied by changes in the distribution of reinforcement times.
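The claim that reinforced times are distributed uniformly regardless of the VI value can be checked with a short simulation. It assumes that the pigeon pecks L continuously during the first 25 s of the trial and that the VI behaves like a random-interval schedule, so that reinforcers are set up as a Poisson process with mean interval `vi_mean`. Both are simplifications.

```python
import random


def reinforced_times(vi_mean, trials=20000, period=25.0, seed=0):
    """Times (s from trial onset) of reinforced L pecks across many trials.

    Assumes continuous responding and Poisson (random-interval) setups.
    """
    rng = random.Random(seed)
    times = []
    for _ in range(trials):
        t = rng.expovariate(1.0 / vi_mean)  # time of the first setup
        while t < period:
            times.append(t)                 # collected at once (continuous pecking)
            t += rng.expovariate(1.0 / vi_mean)
    return times


for vi in (40, 120):
    ts = reinforced_times(vi)
    print(f"VI {vi} s: {len(ts)} reinforcers, mean time {sum(ts) / len(ts):.2f} s")
```

A VI 40 s yields about three times as many reinforcers as a VI 120 s, but in both cases their mean time is close to 12.5 s, the midpoint of the L period. A store that keeps only reinforcement times therefore cannot tell the two schedules apart.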

For LeT the shifts of the psychometric function depend on the link vectors. As before, divide the states into three classes, the states most active at the beginning (“Early”), around the middle (“Middle”), or at the end (“Late”) of the trial. Given the reinforcement contingencies, the “Early” states will be linked mostly with the L response and therefore the pigeons will prefer the L key at trial onset; the “Late” states will be linked mostly with the R response and therefore the pigeons will prefer the R key at the end of the trial. The “Middle” states will be linked differently across conditions. When the VIs are equal, these states will be linked equally with the two keys and therefore, around the middle of the trial, the pigeon will be indifferent; when the VIs favor the L key, the “Middle” states will be linked more with the L than the R key and therefore, around the middle of the trial, the pigeon continues to prefer the L key and the psychometric function shifts to the right. Conversely, when the VI for the L key is poorer, those states will be linked more with the R key and the psychometric function shifts to the left. The lines in the top panels of Figure 6 show LeT's account (see Machado & Guilhardi, 2000, for a more detailed explanation and mathematical details).
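The logic of this account can be caricatured in a few lines. This is not LeT's actual state dynamics. As an assumption for illustration, the states most active at time t are treated as a Gaussian window with SD proportional to t (hypothetical `CV`), and their links to each key are taken to be proportional to the reinforcement rate that key delivers inside the window. The time at which preference for R reaches 0.5 then marks the location of the psychometric function. The 60-s trial is assumed to consist of four equal 15-s segments, with VI values written in the text's “L–L / R–R” notation.

```python
import math

SEGMENT = 15.0         # assumption: four equal segments in a 60-s trial
CV = 0.2               # hypothetical spread (SD / t) of the states active at time t
N_STEPS = 600          # integration grid over the trial
DT = 60.0 / N_STEPS


def rates(vis, u):
    """Local reinforcement rates (L, R) at time u; vis = VI means of the four segments."""
    seg = int(u // SEGMENT)
    rate = 1.0 / vis[seg]
    return (rate, 0.0) if seg < 2 else (0.0, rate)


def p_right(t, vis):
    """Preference for R of the states active at t (toy stand-in for LeT's links)."""
    left = right = 0.0
    for i in range(N_STEPS):
        u = (i + 0.5) * DT
        weight = math.exp(-0.5 * ((u - t) / (CV * t)) ** 2)
        r_l, r_r = rates(vis, u)
        left += weight * r_l
        right += weight * r_r
    return right / (left + right)


def indifference_point(vis, lo=5.0, hi=55.0, steps=40):
    """Bisection for the time at which P(R) = 0.5 (assumes a single crossing)."""
    for _ in range(steps):
        mid = (lo + hi) / 2
        if p_right(mid, vis) < 0.5:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


for label, vis in (("60–60 / 60–60", (60, 60, 60, 60)),
                   ("40–40 / 120–120", (40, 40, 120, 120)),
                   ("120–120 / 40–40", (120, 120, 40, 40))):
    print(label, round(indifference_point(vis), 1))
```

With equal VIs the indifference point sits at 30 s; making L richer moves it later (a rightward shift), and making R richer moves it earlier (a leftward shift).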

Local rates at time t

LeT makes one finer prediction—the psychometric function will shift only when the differences in reinforcement rate between the two keys occur in the middle of the trial. That is, for the function to shift, it is neither sufficient nor necessary that one key delivers more rewards than the other. Two sets of results support this claim. The first (Machado & Guilhardi, 2000, Experiment 1) addressed the sufficiency condition by comparing the shifts in two groups of pigeons (see middle row in Figure 6). The difference in the overall reinforcement rate between the keys was similar in the two groups, but whereas the left panel group experienced different reinforcement rates around the middle of the trial and similar rates at the extremes of the trial, the right panel group experienced a difference at the extremes but not at the middle of the trial. According to LeT, only the former group should show a shift. As the middle panels show, the results were consistent with LeT. Hence, a difference in overall reinforcement rates between the two keys is not sufficient to move the psychometric function.

The second experiment (Machado & Guilhardi, 2000, Experiment 2) addressed the necessary condition. The L and R keys always delivered the same overall reinforcement rate (see bottom panels). However, for the left panel group the reinforcement rates around the middle of the trial differed, whereas for the right panel group they were equal. LeT predicted a shift in the former group only, and the results were consistent with the predictions. Similar shifts were also obtained with rats (Guilhardi, Macinnis, Church & Machado, 2007). Hence, a difference in overall reinforcement rate between the two keys is not necessary to move the psychometric function.

Summary

The FOPP studies looked into another difference between SET and LeT, the contents of temporal memory. According to LeT, memory represents both the times of reinforcement and the reinforcement frequencies at those times; according to SET, memory represents only the times of reinforcement. However, it does not follow that SET cannot account for the empirical findings obtained with the FOPP. In fact, it is possible that by combining a) a threshold carefully biased by the difference in absolute reinforcement rates with b) memory stores that represent relative reinforcement rates, SET could predict the shifts of the psychometric function. If that proves to be the case, then more informative (and perhaps more complex) experiments will have to be designed to disentangle the two conceptions of what is represented in temporal memory.

Is Temporal Memory Concentrated or Distributed? The Challenge of Mixed-FI Schedules

SET is not a learning model. However, like any other model, to be able to work at all it must make minimal assumptions about learning—for example, that two memories are formed in the simple bisection task {S1, S4}→{Red, Green}. Minimal as they may be, these assumptions may have unanticipated consequences. Continuing with the example, if a theory assumes that an animal forms two memory stores (see, e.g., Gibbon, 1981, 1991; Gibbon et al., 1984; Gallistel, 1990), the theory must be reasonably clear about how the stores are accessed. In SET, this means answering the following question, “At the end of the trial, how does the timing system decide in which memory to save the current number in the accumulator?” The answer is straightforward: “If the reinforcer came from pecking the Red key, the number is saved in one memory store; if it came from pecking the Green key it is saved in another.” More generally, accumulator counts are saved to a particular memory store on the basis of the structural features of the task (e.g., choosing this or that distinctive key and getting a reward). Moreover, because reinforcement of the two types of pecks follows different sample durations, one memory store will come to represent the 1-s interval (MRed), and the other store will come to represent the 4-s interval (MGreen). The theory has no major difficulty accounting for the temporal discrimination.

The basic finding

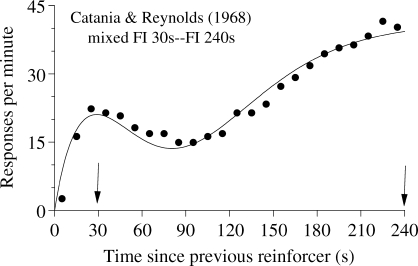

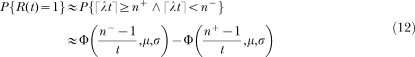

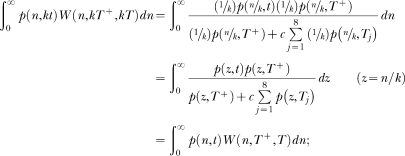

Consider now a simpler task. A pigeon receives food for pecking a key after either 30 s or 240 s have elapsed since trial onset. There is only one key and one feeder in the situation and no cue signals whether the current trial will be short or long. The results of this mixed FI 30s-FI 240s experiment show that during the long trials average response rate increases from the beginning of the trial until approximately 30 s have elapsed, then it decreases, and then it increases again until the end of the trial. Figure 7 shows one example from Catania and Reynolds (1968; see also Ferster & Skinner, 1957, pp. 597–605; Leak & Gibbon, 1995; Whitaker, Lowe & Wearden, 2003, 2008). Leak and Gibbon showed that on most long trials the pigeons paused at the onset of the trial, then pecked until the shorter FI elapsed, paused again, and then pecked again until the end of the trial (break-run-break-run pattern). Early cumulative records from Ferster and Skinner also show, during the longer FIs, a significant pause or deceleration past the time of the shorter FI. As the authors put it, “a well-marked priming exists after the shorter interval, and a falling-off into a curvature appropriate to a longer interval” (p. 597).

Fig 7.

Average data from one pigeon exposed to a mixed FI 30s–FI 240s schedule (points) and the fit of the LeT model (curve). Data from Catania & Reynolds (1968).

This performance could be derived from SET by assuming that the animal stored the counts obtained at 30 s and 240 s in distinct memory stores. As Leak and Gibbon (1995, p. 6) put it, "in SET, there is assumed to be a single clock but an independent memory distribution for each criterion time interval". Then at the beginning of the trial the bird sampled a number from the “short” store, compared that number with the current number in the accumulator, pecked the key when the two numbers were sufficiently close, stopped pecking when they became sufficiently different again, at which time it sampled a number from the “long” store, and then executed the same routine. The account predicts the break-run-break-run within-trial pattern, the two peaks in the average response rate curve, and the fact that the widths of the two peaks show the scalar property (see also Whitaker et al., 2003, 2008).

A logical problem

Unfortunately, the account begs the question because that which was supposed to be explained was assumed in the explanation. In contrast with the bisection task, the reinforcers in the mixed-FI schedules have the same source and no distinct signal cues the two trials. Hence, how does the timing system “direct” the counts to the appropriate memory store? To reply that when the count is small it is directed to one store and when large to another explains nothing, for the reply simply replaces one unexplained discrimination (short vs. long intervals) by another (small vs. large counts).

To be consistent and avoid begging the question, the current version of SET must assume that the animal's memories are indexed (formed, accessed, etc.) by structural features of the situation, by distinctive cues being timed, or by the source of the reinforcers, for example, and not by time itself. A coherent account would proceed by stating that when the reinforcers come from a single source and are not correlated with distinct stimuli, the counts in the accumulator are all lumped into one and the same memory store—the memory is concentrated. Therefore when the reinforcers are obtained at two distinct moments, as in mixed-FI schedules, the distribution of the counts in memory will be a mixture of two distributions, the one induced by the reinforcers delivered at short intervals (30 s) and the other by the reinforcers delivered at long intervals (240 s). The predicted pattern of behavior also will be a mixture across trials of two patterns, the break-and-run pattern associated with an FI 30 s and the break-and-run pattern associated with an FI 240 s. This prediction is incorrect because the observed pattern is break-run-break-run within most trials (Leak & Gibbon, 1995).
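The contrast between distinct and concentrated memories can be illustrated with a toy version of SET's response rule on the long (240-s) trials of a mixed FI 30-s FI 240-s schedule. The following are assumed for illustration. Memory counts are Gaussian with a hypothetical coefficient of variation `CV`, and the clock has no noise. The animal responds whenever the relative discrepancy |t − m|/m between elapsed time t and a memory sample m is below a hypothetical `THRESHOLD`. With two stores consulted in order, the model yields two response runs per long trial. With a single concentrated store, each trial is governed by one sample and can show at most one run.

```python
import random

CV = 0.15         # hypothetical coefficient of variation of memory counts
THRESHOLD = 0.25  # hypothetical threshold on |t - m| / m


def count_runs(responding):
    """Number of separate response runs (off-to-on transitions) in a trial."""
    runs, prev = 0, False
    for r in responding:
        if r and not prev:
            runs += 1
        prev = r
    return runs


def long_trial(rng, stores, duration=240):
    """One long trial; stores are sampled in order, the next one after a run ends."""
    idx = 0
    m = rng.choice(stores[idx])
    responding = []
    for t in range(duration + 1):
        close = abs(t - m) / m < THRESHOLD
        if not close and t > m and idx + 1 < len(stores):
            idx += 1                      # run ended: consult the next store
            m = rng.choice(stores[idx])
            close = abs(t - m) / m < THRESHOLD
        responding.append(close)
    return count_runs(responding)


rng = random.Random(1)
short = [rng.gauss(30, CV * 30) for _ in range(500)]    # counts saved at 30 s
long_ = [rng.gauss(240, CV * 240) for _ in range(500)]  # counts saved at 240 s

two_stores = [long_trial(rng, [short, long_]) for _ in range(1000)]
one_store = [long_trial(rng, [short + long_]) for _ in range(1000)]
print("two stores, share of trials with two runs:", sum(r == 2 for r in two_stores) / 1000)
print("one store, most runs in any trial:", max(one_store))
```

Note that the two-store version produces break-run-break-run only because it is told which store each count belongs to and which store to consult first, which is precisely the question raised above.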

The same problem is present in another study (Mellon, Leak, Fairhurst, & Gibbon, 1995). Pigeons received reinforcers at 16, 32, or 48 s since trial onset, without external signals cueing the FI interval. To explain the data, the authors assumed three distinct memory stores representing the three reinforcement times, but they did not ask how the memories might be formed in the first place—how does the timing system decide where to save a particular accumulator count? In addition, to fit the data, the authors assumed that the three memories were sampled in the correct order (i.e., first the memory for the 16-s interval, second the memory for 32-s interval, and lastly the memory for the 48-s interval), which may be correct, but they did not explain how the system knows which memory is first, second, and third. Surprisingly, to account for changes in response rate across the 48-s intervals, the authors also assumed different absolute response rates at different moments into the trial. That is, a temporal discrimination was assumed when that temporal discrimination was part of the problem to be explained.

LeT does not face the same difficulties because its equivalents of the memory counts (the links) are not concentrated in a memory bin; they remain distinct and are accessed by the states themselves. In the mixed-FI schedule, the most active states around 30 s and 240 s will be linked with the operant response more strongly than the most active states at times t ≪ 30 s and 30 ≪ t ≪ 240 s. Hence, average response rate around the moments of reinforcement will be higher than at other moments, which matches the obtained bimodal response curve (see Figure 7).

However, LeT has two main difficulties in dealing with mixed-FI schedules. First, because the local reinforcement rate is lower at 30 s than at 240 s, LeT always predicts higher peak rates at the long than at the short FI. Second, because the overall reinforcement rate remains the same, LeT predicts greater precision in the timing of the longer than of the shorter FI. Although these predictions occasionally hold, as Figure 7 shows, most data sets from mixed-FIs contradict them (for further analyses see Whitaker et al., 2003, 2008).
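The second prediction follows from LeT's Poisson state dynamics. A minimal sketch, assuming the usual LeT form in which the activation profile across states at time t has the shape of a Poisson distribution with mean λt (in the state index minus one), so that its relative spread is 1/√(λt). The value of λ below is hypothetical; what matters is that it is the same on short and long trials because the overall reinforcement rate does not change.

```python
import math

def let_cv(t, lam):
    """Relative spread (SD / mean) of LeT's Poisson activation profile at time t.

    Assumes the profile over state index minus one is Poisson with mean
    lam * t, whose coefficient of variation is 1 / sqrt(lam * t).
    """
    return 1.0 / math.sqrt(lam * t)

lam = 0.5  # hypothetical rate, identical on 30-s and 240-s trials
for t in (30, 240):
    print(t, round(let_cv(t, lam), 3))  # 30 0.258, then 240 0.091
```

With λ fixed, the relative spread at 240 s is smaller than at 30 s by a factor of √8, which is the violation of the scalar property described in the text.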

Summary

The mixed-FI analysis questions SET's assumption that the representations of time intervals are lumped into a memory bin. In addition, it identifies a logical problem with SET that needs to be solved (see also Machado & Silva, 2007, and Gallistel, 2007). Because LeT generates bimodal response rate distributions without begging the question, it suggests that temporal memories may be distributed and accessed serially.

What is Learned in FI Schedules and the Peak Procedure?

Behavior in time-based schedules has both stochastic and nonlinear properties. For example, in FI schedules subjects typically pause after the reinforcer for a variable amount of time and then respond until the end of the trial. The variable length of the pause illustrates the stochastic property; the abrupt transition from no responding to a high rate of responding illustrates the nonlinear property. Another example comes from the peak procedure (Catania, 1970; Roberts, 1981). Here, FI trials are intermixed with significantly longer trials that end without reinforcement, the empty or peak-interval trials. On these longer trials, subjects pause for a variable interval, typically shorter than the FI, respond for another variable interval, typically until the FI elapses, and then pause again either until the end of the trial or until a new bout of responding begins (break-run-break or break-run-break-run patterns; Church, Meck, & Gibbon, 1994; Kirkpatrick-Steger, Miller, Betti, & Wasserman, 1996; Sanabria & Killeen, 2007).

SET was designed with the stochastic and nonlinear structure of behavior in mind. It accounts for the nonlinear properties by means of a threshold-based decision rule. In FI schedules, the animal starts to respond when the relative discrepancy between the number in the accumulator and a sample extracted from the memory of reinforced times falls below a threshold. In the peak procedure, the same start rule applies, but then the animal stops responding when the same relative discrepancy rises above either the same threshold or another threshold (Gibbon et al., 1984). LeT, on the other hand, was designed to deal with the average performance in time-based schedules; therefore, it accounts neither for the trial-by-trial variability in behavior nor for its nonlinear properties. This is one of LeT's major shortcomings.
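The start and stop rules can be sketched for a single empty (peak-interval) trial as follows. This is an illustration under stated assumptions, not Gibbon et al.'s (1984) full formulation: the accumulator count is taken to equal elapsed seconds (a noiseless pacemaker), the memory sample is Gaussian around T with a hypothetical coefficient of variation, and the two thresholds are fixed rather than resampled on each trial.

```python
import random

def set_peak_trial(rng, T=40.0, trial_len=120, cv=0.2, b_start=0.3, b_stop=0.3):
    """Responses (0/1 per second) on one empty peak-procedure trial.

    Illustrative assumptions: accumulator count a equals elapsed seconds,
    memory sample m ~ Normal(T, cv * T), thresholds fixed across trials.
    """
    m = max(rng.gauss(T, cv * T), 1.0)
    responses = []
    for a in range(trial_len):
        d = (m - a) / m                                  # signed relative discrepancy
        if d >= 0:
            responses.append(1 if d < b_start else 0)    # start rule: close enough to m
        else:
            responses.append(1 if -d < b_stop else 0)    # stop rule: not yet too far past m
    return responses

rng = random.Random(2)
trials = [set_peak_trial(rng) for _ in range(2000)]
mean_rate = [sum(t[a] for t in trials) / len(trials) for a in range(120)]
print(round(mean_rate[0], 2), round(mean_rate[40], 2), round(mean_rate[110], 2))
```

Each simulated trial shows a single break-run-break sequence (responding only while a lies between m(1 − b_start) and m(1 + b_stop)), and averaging across trials gives a rate curve that rises from zero, peaks near T, and falls back.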

The problem

Despite differences of conception and scope, the models share common ground in that both describe what animals learn when exposed to an FI T-s schedule or a corresponding peak procedure (i.e., a procedure comprising FI T s and empty trials). According to both models, the animal learns that food occurs at a particular time since the beginning of the trial. In SET, the average of the counts stored in memory represents the time of food, and their variability represents the uncertainty associated with that time. In LeT, the distribution of associative strength across the links also represents the average and the variability of the time of food. However, neither model adequately accounts for a well-known feature of responding in these two situations. In the peak procedure, a well-trained animal will stop responding shortly after T s elapse, but in an FI schedule a well-trained animal will continue responding for a long interval if the reinforcer is omitted (Ferster & Skinner, 1957; Machado & Cevik, 1998; Monteiro & Machado, 2009). If in both situations the animals learned that food occurs at time T, then why do they pause in the peak procedure but continue to respond in the FI schedule?

Another way of framing the problem is in terms of temporal generalization: If the effects of reinforcement at T s generalize to neighboring times, both before and after T, and if this generalization explains why the animal starts to respond only when it is sufficiently close to T, then why, when food is omitted in the FI, does the animal not stop responding as soon as it is sufficiently far from T? Note that we are not talking about the effects of chronic exposure to reinforcement omission in the FI schedule (e.g., Staddon & Innis, 1969; see also Staddon & Cerutti, 2003), or the effects of prolonged extinction following FI training (e.g., Crystal & Baramidze, 2006; Machado & Cevik, 1998; Monteiro & Machado, 2009), but about the immediate effects of omitting the reinforcer.

In common presentations of SET (e.g., Church, 2003; Gibbon, 1991; Lejeune et al., 2006), only the start rule is invoked to explain performance in FI schedules, but both the start and stop rules are invoked to explain performance in the peak procedure. In both situations the start rule determines when the animal starts responding, but only in the peak procedure does the stop rule determine when the animal stops. Hence, SET “solves” the problem by stating that responding in the FI schedule persists for a long while because the stop rule is not used. Unfortunately, the explanation omits a critical step: What determines when the stop rule is used? In other words, how do the empty trials, the only difference between the FI and the corresponding peak procedure, “activate” the stop rule? The question is pertinent because the empty trials are in every respect similar to other segments without reinforcement that the animal experiences during simple FI schedules. To answer that only empty trials give the animal the opportunity to learn to stop responding past the reinforcement time states an obvious fact, but it does not explain how that fact causes the behavioral difference. We believe this omission is not trivial: by stressing only the moments of reinforcement, which remain the same in the FI schedule and the corresponding peak procedure, SET has no principled way to conceptualize the distinctive role of the empty trials in activating the stop rule.

LeT accounts reasonably well for the average rate curve in the peak procedure: The states most active around the reinforcement time, say, 40 s, will be strongly linked with the operant response, but the earlier and later states will extinguish their couplings with the operant response. This profile of couplings (earlier and later states uncoupled, “middle” states coupled) explains why average response rate increases from trial onset, peaks around 40 s, and then decreases. The problem for LeT is to explain why in the FI schedule response rate remains high past the reinforcement time. Because in the FI schedule the trials never last significantly longer than 40 s, the later states never have a chance to become coupled with the operant response. Hence, when the reinforcer is omitted and these states become the most active, they should not sustain responding for a long interval. The model predicts that response rate will decline shortly past the time of reinforcement. This prediction is incorrect (e.g., Machado & Cevik, 1998; Monteiro & Machado, 2009).

Summary

In an FI T-s schedule and its corresponding peak procedure, the reinforcement moments are the same, namely, about T s from trial onset. Why then do animals trained on an FI schedule continue to respond for a long period of time if the reinforcer is omitted, whereas in the peak procedure they stop responding shortly after the reinforcement time? A principled account of this straightforward and well-known fact still challenges SET and LeT.

III. A HYBRID MODEL

Both models have strengths and weaknesses. SET's strengths are its ability to explain the stochastic, nonlinear structure of responding in concurrent timing tasks and its ability to account for the scalar property. The latter is no small feat given the ubiquity of the scalar property across a wide range of procedures and behavioral measures (but see Lejeune & Wearden, 2006). Its weaknesses seem to be its assumptions concerning memory: concentrated in bins, insensitive to context, one-dimensional, and not accessed by temporal cues. Curiously, LeT's strengths and weaknesses seem to be the opposite. On the positive side, LeT postulates distributed, two-dimensional, and context-sensitive memories accessed serially. On the negative side, LeT has serious difficulties with the scalar property when two or more intervals are timed in mixed-FI schedules while the overall reinforcement rate stays the same: The model predicts a clear violation of the scalar property that is contrary to the data (Machado, 1997; Whitaker et al., 2003, 2008). In addition, LeT simply does not deal with the stochastic, nonlinear structure of behavior (for other limitations see Machado & Cevik, 1998, and Rodríguez-Gironés & Kacelnik, 1999).

We have explored the possibility that a hybrid between SET and LeT could overcome at least some of the weaknesses, while retaining most of the strengths, of each model (see Church, 1997, and Kirkpatrick & Church, 1998, on the virtues of hybridization). The new model preserves the overall learning structure of LeT but replaces its state-activation dynamics with scalar-inducing dynamics equivalent to the pacemaker–accumulator structure of SET. A stochastic interpretation of the state dynamics plus a threshold-based decision rule enables the new model to deal with the stochastic and nonlinear structure of behavior and to generate the scalar property without adjusting its parameters.

In what follows we explain how the new model works. Then we extend it to three concurrent timing tasks (FI schedule, peak procedure, mixed-FI schedules), and two retrospective timing tasks (temporal bisection and temporal generalization). Throughout we will focus mainly on the qualitative aspects of the model, but in the Appendix we present some mathematical analyses and an algorithm to simulate the model.

Model Assumptions

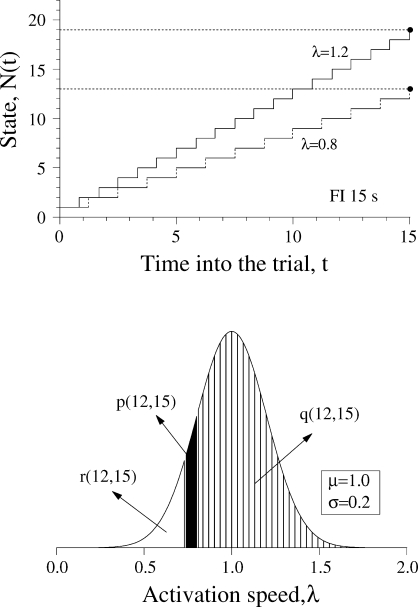

Killeen and Weiss (1987) proposed a general framework to understand pacemaker–accumulator systems, with scalar variance induced by counting errors in the accumulator, Poisson variance induced by random changes in the pacemaker's interpulse intervals, and constant variance induced by motor latencies or delays in starting the counting process, for example. Here, we assume only scalar variance to see how far the model can go with a minimal assumption.
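One common way to write these three sources of variance is as a quadratic in elapsed time t. The coefficients a, b, and c below are our own notation, and we assume that the mean time estimate equals t:

```latex
\sigma^2(t) = \underbrace{a^2 t^2}_{\text{scalar}} + \underbrace{b\,t}_{\text{Poisson}} + \underbrace{c}_{\text{constant}},
\qquad
\mathrm{CV}(t) = \frac{\sigma(t)}{t} = \sqrt{a^2 + \frac{b}{t} + \frac{c}{t^2}}
\;\longrightarrow\; a \quad (t \to \infty).
```

Keeping only the scalar term (b = c = 0) gives CV(t) = a at every t, which is the minimal assumption adopted here.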

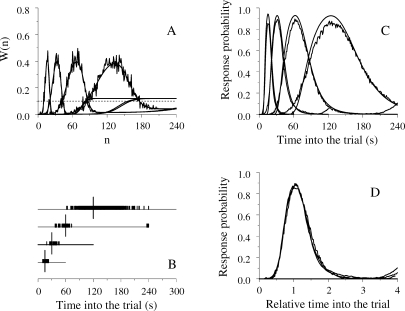

On each trial, a set of states, numbered n = 1, 2, …, is activated serially at a rate of λ states per second. That is, the first state will be active from time 0 to time 1/λ, the second state will be active from time 1/λ to time 2/λ, and so on. The activation of the states is like a wave travelling across them with velocity λ. This velocity is constant within a trial but varies randomly across trials according to a Gaussian distribution with mean μ and standard deviation σ.
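A minimal sketch of the activation wave, using only the rules just stated: with speed λ, the state active at time t is ⌊λt⌋ + 1. Drawing λ afresh on each trial shows that the coefficient of variation of the active state stays close to σ/μ at different times, which is how this dynamics produces scalar variance. The clamp that keeps λ positive is our addition; with σ/μ = 0.2 it almost never matters.

```python
import math
import random
import statistics

def active_state(t, lam):
    """Index of the state active at time t when the wave moves at lam states/s."""
    return math.floor(lam * t) + 1

def sample_states(t, mu=1.0, sigma=0.2, n_trials=20000, seed=0):
    """Active state at time t on many trials, each with its own wave speed."""
    rng = random.Random(seed)
    states = []
    for _ in range(n_trials):
        lam = max(rng.gauss(mu, sigma), 1e-9)  # speed is fixed within a trial
        states.append(active_state(t, lam))
    return states

for t in (10, 40, 160):
    s = sample_states(t)
    cv = statistics.stdev(s) / statistics.mean(s)
    print(t, round(statistics.mean(s), 1), round(cv, 3))
```

The printed coefficients of variation hover near 0.2 at each time; the small departure at short times comes from the discreteness of the states.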

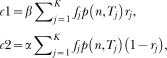

State n has an associative link with the operant response and the strength of the link changes at the end of each trial. Let n* denote the active state when the trial ends. Then the following rules apply:

1. Reinforcement rule. If the trial ends with reinforcement, then n* is a reinforced state and ΔW(n*) = β(1 − W(n*)). If the trial ends without reinforcement, the link of n* changes according to the extinction rule described next.

2. Extinction rule. The strength of the link of every extinguished state (every state active during the trial other than a reinforced n*) decreases by the amount ΔW(n) = −(α/n*)W(n), where n* is the active state at the end of the trial.¹⁰

3. For all states that were not active during the trial, ΔW(n) = 0.

Finally, while state n is active, responses occur at rate A provided the link has strength greater than a threshold θ, that is, W(n) > θ, with 0 < θ < 1. Because we were not interested in absolute response rate, we let A = 1 throughout the study.
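The three learning rules and the response rule can be written as two small functions. This is a sketch of the rules as stated above; the function names and the list representation (w[n − 1] holds W(n)) are our own choices.

```python
def update_links(w, n_star, reinforced, alpha, beta):
    """Apply the end-of-trial rules to the link strengths w, in place.

    w[n - 1] holds W(n) for state n = 1, 2, ...; n_star is the state active
    when the trial ends. States n > n_star were not active and are unchanged.
    """
    # Extinction rule: every state active before n_star loses alpha / n_star of its strength.
    for n in range(1, n_star):
        w[n - 1] -= (alpha / n_star) * w[n - 1]
    if reinforced:
        w[n_star - 1] += beta * (1.0 - w[n_star - 1])     # reinforcement rule
    else:
        w[n_star - 1] -= (alpha / n_star) * w[n_star - 1]  # n_star is extinguished too
    return w

def responds(w, n, theta):
    """Response rule: while state n is active, respond (at rate A = 1) if W(n) > theta."""
    return w[n - 1] > theta

# Worked example: five states at W0 = 0.12, food delivered while state 3 is active.
w = update_links([0.12] * 5, n_star=3, reinforced=True, alpha=1.0, beta=0.2)
print([round(x, 3) for x in w])  # [0.08, 0.08, 0.296, 0.12, 0.12]
```

States 1 and 2 lose one third of their strength (α/n* = 1/3), state 3 moves 20% of the way toward 1, and states 4 and 5, never active, keep W0.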

In words, states become active in succession; if the associative link of the active state is greater than a threshold, the animal responds; the link of the state active at the time of reinforcement increases, whereas the links of all its predecessors decrease. The new model has six free parameters: The state dynamics are governed by the mean, μ, and the standard deviation, σ, of the activation wave; learning is governed by the extinction parameter, α, the reinforcement parameter, β, and the initial value of the associative links, W0; and the decision to respond is governed by the threshold parameter, θ. However, steady-state performance effectively depends on only three parameters: the ratio σ/μ (i.e., the coefficient of variation of the activation wave), the ratio α/β (i.e., the relative effect of extinction), and θ.
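Why α and β matter only through their ratio, and why W0 drops out, can be seen from the expected change of a single link. The symbols r_n and e_n are our notation: r_n is the probability that a trial ends with reinforcement while state n is active, and e_n is the expected extinction weight that state n receives per trial. Because λ is drawn independently on each trial, W(n) before the update is independent of the current trial's outcome, so:

```latex
\Delta W(n) = \beta\,\bigl(1 - W(n)\bigr)\,\mathbf{1}\{\text{reinforced},\ n^* = n\}
            - \frac{\alpha}{n^*}\,W(n)\,\mathbf{1}\{n \text{ extinguished}\}

r_n = \Pr(\text{reinforced and } n^* = n), \qquad
e_n = \mathbb{E}\!\left[\frac{1}{n^*}\,\mathbf{1}\{n \text{ extinguished}\}\right]

\mathbb{E}[\Delta W(n)] = \beta r_n - (\beta r_n + \alpha e_n)\,\mathbb{E}[W(n)] = 0
\;\Longrightarrow\;
\bar W(n) = \frac{\beta r_n}{\beta r_n + \alpha e_n} = \frac{r_n}{r_n + (\alpha/\beta)\,e_n}
```

The stationary mean of each link contains neither W0 nor α and β separately. This calculation covers only the mean; the decision to respond also depends on θ and on how W(n) fluctuates around that mean.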

The new model differs from LeT in three major assumptions. First, whereas in LeT all states are active at t > 0, albeit to different degrees, and their activation is described by a Poisson distribution, in the new model only one state is active at any moment and the state activation is described by a Gaussian distribution (Gibbon, 1992). Second, in the extinction rule, parameter α is replaced by α/n*. The extra complexity brings a major benefit: In LeT, for the scalar property to hold, α had to be inversely proportional to the overall reinforcement rate, that is, the parameter had to be adjusted; the new rule yields the scalar property without adjusting its parameters (see below). Third, the new model can deal with the variability and nonlinearity of within-trial performance in concurrent timing tasks.
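The role of the α/n* rule can be illustrated with a continuous approximation for an FI T-s schedule. As simplifications of our own, take n* ≈ λT, ignore the discreteness of states, and let f be the density of λ. Every FI trial is reinforced, so the extinguished states are those with n < n*. Let r_n be the probability that food arrives while state n is active and e_n = E[(1/n*)·1{n extinguished}]. Setting the expected change of W(n) to zero gives the stationary mean link W̄(n) = βr_n/(βr_n + αe_n), and then:

```latex
r_n(T) \approx \frac{1}{T}\, f\!\left(\frac{n}{T}\right), \qquad
e_n(T) = \mathbb{E}\!\left[\frac{\mathbf{1}\{\lambda T > n\}}{\lambda T}\right]
       = \frac{1}{T}\, g\!\left(\frac{n}{T}\right),
\qquad g(x) = \mathbb{E}\!\left[\lambda^{-1}\,\mathbf{1}\{\lambda > x\}\right]

\bar W(n; T) = \frac{\beta\, r_n(T)}{\beta\, r_n(T) + \alpha\, e_n(T)}
             \approx \frac{\beta f(n/T)}{\beta f(n/T) + \alpha\, g(n/T)}
```

In this approximation the mean profile of links depends on n only through n/T, so the profile for FI kT is the profile for FI T stretched by a factor k, with no change of parameters. If the decrement were α rather than α/n*, e_n(T) would lose its 1/T factor and the profile would change with T unless α were rescaled.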

Concurrent Timing

The next three figures show the model's output in FI schedules, the peak procedure, and mixed-FI schedules. Throughout, the parameters were μ = 1, σ = 0.2, α = 1, β = 0.2, θ = 0.1, and W0(n) = 0.12 for all states. Concerning the last two parameters, what matters is not their specific values but the relation W0(n) > θ, that is, all links have initial strength greater than the threshold, ensuring that initially the animal responds regardless of which state is active. We simulated 10 stat-pigeons, each exposed to 20 sessions of 50 trials each, averaged the data from the last 5 sessions of each stat-pigeon, and then averaged the data across stat-pigeons. The time step equaled Δt = 0.1 s, and the model's output (0 = no response, 1 = response) was collected every second.
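The simulation protocol just described can be sketched in Python for an FI schedule. Parameter values are those listed above. The FI value (T = 40 s) is our hypothetical choice, and the sketch computes the active state directly as ⌊λt⌋ + 1 instead of advancing a clock in steps of Δt = 0.1 s. It illustrates the stated rules and is not the algorithm given in the Appendix.

```python
import math
import random

def simulate_fi(T=40, n_pigeons=10, sessions=20, trials_per_session=50,
                mu=1.0, sigma=0.2, alpha=1.0, beta=0.2, theta=0.1, w0=0.12,
                n_states=400, seed=0):
    """Sketch of the hybrid model on an FI T-s schedule.

    Returns (curve, w): curve[t] is the proportion of trials with a response
    at t = 0, 1, ..., T - 1 s, averaged over the last 5 sessions of each
    stat-pigeon and then across stat-pigeons; w holds the final links of the
    last stat-pigeon. T = 40 s is a hypothetical FI value.
    """
    rng = random.Random(seed)
    curve = [0.0] * T
    for _ in range(n_pigeons):
        w = [w0] * n_states                          # w[n - 1] is W(n)
        totals, recorded = [0] * T, 0
        for session in range(sessions):
            for _ in range(trials_per_session):
                lam = max(rng.gauss(mu, sigma), 1e-9)    # wave speed, fixed within the trial
                if session >= sessions - 5:              # record the last 5 sessions
                    for t in range(T):
                        n = min(math.floor(lam * t) + 1, n_states)
                        totals[t] += 1 if w[n - 1] > theta else 0
                    recorded += 1
                # Every FI trial ends with food, delivered while state n_star is active.
                n_star = min(math.floor(lam * T) + 1, n_states)
                for n in range(1, n_star):               # extinction of predecessors
                    w[n - 1] -= (alpha / n_star) * w[n - 1]
                w[n_star - 1] += beta * (1.0 - w[n_star - 1])  # reinforcement
        for t in range(T):
            curve[t] += totals[t] / recorded / n_pigeons
    return curve, w

curve, w = simulate_fi()
print([round(curve[t], 2) for t in range(0, 40, 5)])
```

Responses at each second are recorded before the end-of-trial update, because they occur before food. In this sketch the links of states that are never active before food (for example, w[200]) are never updated and keep W0 = 0.12 > θ.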

FI schedules