Abstract

This study compared training outcomes obtained by 147 substance abuse counselors who completed 8 self-paced online modules on Cognitive Behavioral Therapy (CBT), and attended a series of four weekly group supervision sessions using web conferencing software. Participants were randomly assigned to two conditions that systematically varied the degree to which they explicitly promoted adherence to the CBT protocol, and the degree of control that they afforded participants over the sequence and relative emphasis of the training curriculum. Outcomes were assessed at baseline and immediately following training. Counselors in both conditions demonstrated similar improvements in CBT knowledge and self-efficacy. Counselors in the low-fidelity condition demonstrated greater improvement on one of three measures of job-related burnout when compared to the high-fidelity condition. The study concludes that it is feasible to implement a technology-based training intervention with a geographically diverse sample of practitioners, that two training conditions applied to these samples of real-world counselors do not produce statistically or clinically significant differences in knowledge or self-efficacy, and that further research is needed to evaluate how a flexible training model may influence clinician behavior and patient outcomes.

Keywords: Web-Based Training, Cognitive Behavioral Therapy, Substance Use Disorder Counselors, Blended Learning

Self-paced, or asynchronous, Web-based Training (WBT) can be an efficacious means of imparting knowledge about Cognitive Behavioral Therapy to practicing substance use disorder (SUD) counselors (LoCastro, Larson, Amodeo, Muroff & Gerstenberger, 2008; Sholomskas et al., 2005; Weingardt, Villafranca, & Levin, 2006). This finding is unsurprising, as the educational literature has long demonstrated that distance learning technology, including WBT, can result in equivalent gains in knowledge relative to traditional face-to-face classroom training workshops (Weingardt, 2004; Russell, 2001).

However, few would argue that didactic training alone, whether technology-based or face-to-face, is sufficient to promote the sustained adoption of a new strategy or technique among practicing mental health treatment providers. Learning an advanced skill, such as incorporating an evidence-based intervention into one's daily clinical practice, understandably requires ongoing feedback, consultation and supervision in addition to an initial workshop (Davis, Thomson, Oxman, & Haynes, 1995; Miller, Sorenson, Selzer & Brigham, 2006; Miller, Yahne, Moyers, Martinez, & Pirritano, 2004).

In addition to being a critical element of successful clinical training, clinical supervision has also been found to serve a protective function in terms of counselors' psychological well-being. Knudsen, Ducharme, and Roman (2008) found that SUD counselors' perceived quality of clinical supervision was associated with reductions in job burnout, emotional exhaustion and intention to quit. This is of particular importance given the very high rate of voluntary turnover that has been found among counselors who treat patients with substance use disorders.

Advances in instructional technology now provide a mechanism to support the provision of supervision to groups of clinicians who are in different geographical locations. Synchronous e-Learning is a term used to describe real-time, instructor-led learning events in which the instructor and all learners are logged in at the same time and communicate directly with each other, but are not physically present at the same location. Web conferencing or virtual classroom platforms such as WebEx and LiveMeeting typically include such features as bidirectional audio and video streaming (so the audience and instructor can see and hear each other), a virtual whiteboard to enable real-time illustrations, online surveys, tests, or polls that can provide rapid feedback, and the ability to share Web sites, PowerPoint slides, software applications and digital video clips with the audience (Weingardt, 2004).

The present study evaluates a combination or “blend” of an asynchronous e-Learning intervention consisting of a self-paced online course in Cognitive-Behavioral Therapy for Substance Use Disorders (www.nidatoolbox.org), with a series of live, online virtual supervision sessions designed to promote clinical application of the material covered in the online course. The integration of multiple methods of information delivery into a single learning system has been referred to as “blended learning” (Cucciare, Weingardt, & Villafranca, 2008). This blending of self-paced modules with live group consultation combines the strengths of the self-paced WBT medium, which lie in the efficient delivery of high quality, standardized didactic training content, with the advantages of the synchronous e-Learning medium, which provides participants with the opportunity to discuss clinical issues during a series of real-time group consultation sessions.

Because both the didactic and live supervision elements of this training program occur entirely online, this approach has the potential to address one of the primary barriers to delivering large-scale training in evidence-based SUD treatment practices; namely, the significant resource constraints and other organizational challenges that characterize the publicly-funded SUD treatment system. SUD treatment programs are notoriously underfunded and organizationally unstable, with very high rates of voluntary turnover among both clinical staff and management (McLellan, Carise, & Kleber, 2003). These factors conspire to make SUD treatment programs particularly difficult organizational environments in which to conduct face-to-face training and other in vivo interventions to promote the adoption of evidence-based practices (Joe, Broome, Simpson & Rowan-Szal, 2007; Simpson, Joe, & Rowan-Szal, 2007).

The present blended, technology-based approach has the potential to deliver high quality training in evidence-based treatment practices directly to front line clinicians, regardless of the organizational environment in which they work. As we will discuss, this “direct to consumer” approach has the potential to allow foundations, government agencies and other entities to develop and deliver training to a geographically dispersed audience of SUD counselors in a cost-effective and scalable fashion. The primary aim of the present study is to evaluate the feasibility of this technology-based blended training intervention in a diverse sample of practicing community SUD counselors. Demonstrating the feasibility of this approach is critical given that community SUD counselors may lack computer proficiency and/or practice in organizations that lack even the most basic computing resources for clinical staff.

A secondary aim of the present study is to evaluate the degree to which flexibility in the design and delivery of technology-based training resources may affect learner outcomes. A variety of theoretical and practical considerations suggest that a flexible training model may improve learner outcomes relative to a more structured curriculum designed to promote adherence to a manual-based training protocol. From the theoretical perspective, research in Instructional Systems Design (ISD) has long demonstrated that training designed to maximize learner control promotes active learner engagement with the instructional content and ultimately improves learner outcomes (White, 2007). From the practical perspective, research has found that organizations that promote flexible, supportive workplace environments and job autonomy may reduce job burnout and voluntary turnover (Knudsen, Johnson, & Roman, 2003).

The present study compared training outcomes obtained by substance abuse counselors who were randomly assigned to one of two conditions that covered identical training content, but systematically varied the amount of control participants had over (a) the order of topics to be covered, (b) the relative emphasis they placed on each topic, and (c) the agenda and focus of supervision sessions. In sum, the present study sought to answer two research questions. First, is it feasible to implement a 100% technology-based, blended learning protocol to train a sample of practicing substance abuse counselors in Cognitive Behavioral Therapy? Second, does providing more flexibility in the process of learning CBT and receiving CBT-related supervision lead to greater improvements in self-reported ratings of counselors' knowledge of CBT, self-efficacy regarding CBT interventions, and job burnout than a more structured training protocol?

Method

Design

A total of 147 practicing SUD counselors were randomly assigned to one of two conditions. Participants in both conditions (a) completed an online training course in Cognitive Behavioral Therapy for Substance Use Disorders (www.nidatoolbox.org) and (b) attended a series of four weekly group supervision meetings using an interactive Web Conferencing platform (www.webex.com). Both groups also completed pre- and post-intervention questionnaires assessing knowledge of the CBT content, provider self-efficacy, and job burnout.

The online CBT course was created by translating the content from the NIDA therapy manual “A Cognitive Behavioral Approach: Treating Cocaine Addiction” (Carroll, 1998) into a series of interactive, media-rich, web-based modules for clinician training. Each of the eight topics in the CBT manual resulted in a stand alone, problem focused module (e.g. “Problem Solving”, “Coping with Craving”, “Refusal Skills”) designed to provide counselors with the knowledge and skills required to successfully implement that set of CBT strategies and/or techniques in their clinical practice. Each module or “tool” employs a variety of vignettes, video role plays, graphics and animated sequences to promote active engagement with the material. The course is currently available at www.nidatoolbox.org, and available for free Continuing Education credit through the Addiction Technology Transfer Centers' searchable database of distance learning courses (www.addictioned.org).

The experimental conditions were differentiated by the degree to which they explicitly promoted adherence to the CBT protocol. In the High Fidelity (HF) condition, participants completed the eight online training modules in the order prescribed in the original treatment manual, two modules per week, for one month. Participants assigned to the Low Fidelity (LF) condition were also given one month to complete all eight training modules. However, participants in the LF condition were encouraged to access the modules in any order, placing greater emphasis on those topics that they thought would be most relevant to their own clinical work. Ongoing weekly web conferencing sessions reflected these different emphases. Group supervision sessions in the HF condition were highly structured, following a curriculum that focused discussion on counselor experiences relevant to the two topics of the week. In contrast, group supervision in the LF condition was relatively unstructured, with a flexible agenda that followed participants' various interests.

Recruitment

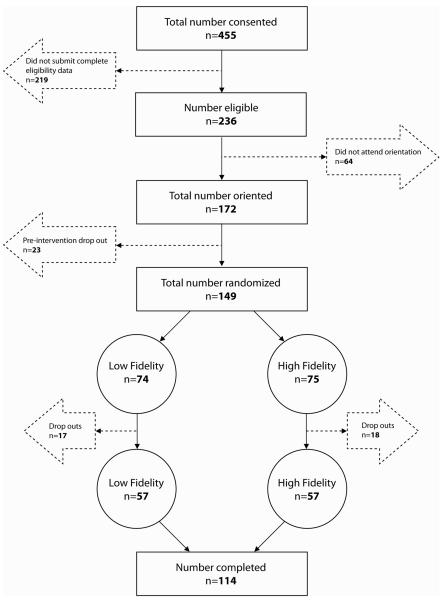

Recruitment fliers were distributed via email lists maintained by the Pacific Southwest Addiction Technology Transfer Center (ATTC) and the Northwest Frontier ATTC. Electronic fliers were also distributed via email lists maintained by colleagues at the Counsellor Certification Board of Oregon, Substance Abuse Services at Columbia University Medical Center, and the Alcohol and Drug Abuse Institute at the University of Washington. The only eligibility criteria were that an individual be currently practicing as a SUD counselor with a full caseload (30 hours per week or greater) and be neither a private practitioner nor a compensated federal employee. Participants faxed pay check stubs to research staff members, who placed follow-up phone calls to verify that these criteria were met. Of the 455 respondents who initially expressed interest in the study, 236 counselors were deemed eligible to participate, 172 participated in an initial orientation session, and 147 were ultimately randomized to experimental conditions (see Figure 1).

Figure 1.

Participant flow (CONSORT diagram)
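The attrition across recruitment stages can be checked with simple arithmetic. The following Python sketch is illustrative only: the counts are those reported in the Recruitment paragraph, while the stage labels and the function name `stage_retention` are hypothetical and not part of the study materials.

```python
# Counts reported in the Recruitment section; labels are illustrative.
STAGES = [
    ("expressed interest", 455),
    ("deemed eligible", 236),
    ("attended orientation", 172),
    ("randomized", 147),
]


def stage_retention(stages):
    """Return (label, count, % of previous stage, % of first stage) per stage."""
    first_count = stages[0][1]
    rows = []
    previous = first_count
    for label, count in stages:
        pct_of_previous = 100.0 * count / previous
        pct_of_first = 100.0 * count / first_count
        rows.append((label, count, pct_of_previous, pct_of_first))
        previous = count
    return rows


for label, count, pct_prev, pct_first in stage_retention(STAGES):
    print(f"{label:22s} n = {count:3d}  "
          f"{pct_prev:5.1f}% of previous stage, {pct_first:5.1f}% of initial pool")
```

Under these counts, roughly half of the initial respondents were eligible and about a third were ultimately randomized.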

Procedures

The investigators relied upon internet technology to conduct every stage of the project, from recruitment and consent, through ongoing training and live group supervision sessions, to follow-up data collection. All study procedures were approved by the Stanford University Institutional Review Board. Project staff used FormSite (http://formsite.com/) to collect initial enrollment information and to obtain informed consent. All enrollment data that participants entered into FormSite was stored on an SSL encrypted server in order to ensure the protection of sensitive personally identifiable information. Participants who failed to answer any required fields were unable to enroll in the study.

Eligible participants were required to attend a 30-minute online orientation session. This orientation was designed to support the participation of counselors who had minimal computer experience. The orientation session provided counselors with an overview of the Learning Management System (LMS) that they would use to access study materials, as well as a general introduction to the WebEx virtual conferencing environment in which the group supervision sessions would be held. The SumTotal LMS (www.sumtotalsystems.com) handled virtually all study logistics, including scheduling group supervision sessions, the administration of the pre and post-test assessments, and providing timely access to the prescribed self-paced CBT training modules. Importantly, the orientation session also guided participants through the process of testing their computer systems to ensure that they could participate in all subsequent sessions and activities.

Participants who completed the orientation session were sent individual e-mails containing an assigned username and password to be used for the duration of the study, and a link providing access to the online pre-test. After completion of the pre-test, subjects were asked to log on to the LMS with their username and password, and choose from among a set of available dates and times to attend a series of four virtual group supervision sessions. There were ultimately 28 groups across the two conditions, 14 in HF and 14 in LF. The number of group participants ranged from 3 to 7 with a mean of 7 participants per group. All groups were completed within a two-month time frame by a single postdoctoral fellow who was trained and closely supervised by the first author (KRW). Counselors who participated in all phases of the trial were eligible for a total of $250 in subject compensation. Payments were allocated as follows: $50 for baseline assessment, $100 for attending at least 3 of the 4 weekly supervision/consultation sessions, and $100 for completing post-training assessment.

High versus Low Fidelity Conditions

The LMS was used to govern access to the self-paced training modules in a manner that was consistent with the condition to which a participant was assigned. Participants in the Low Fidelity (LF) condition were able to access all eight online training modules from the beginning of the protocol. In contrast, participants in the HF condition were only able to access the modules in the order in which they were assigned, consistent with the order in which they appear in the original CBT manual (Carroll, 1998).
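The access rules described above can be expressed as a small gating function. This is a minimal sketch rather than the study's actual LMS configuration: the function name, the week numbering (1 to 4), and the assumption that HF modules from earlier weeks remain available once unlocked are all hypothetical. Only the eight-module total, the two-modules-per-week HF schedule, and the unrestricted LF access come from the text.

```python
TOTAL_MODULES = 8      # eight CBT modules, numbered in manual order
MODULES_PER_WEEK = 2   # HF schedule: two modules per week for one month
TOTAL_WEEKS = 4


def accessible_modules(condition, week):
    """Return the 1-based module numbers a participant may open in a given week.

    Assumption (not stated in the source): in the HF condition, modules
    unlocked in earlier weeks stay available in later weeks.
    """
    if not 1 <= week <= TOTAL_WEEKS:
        raise ValueError("week must be between 1 and 4")
    if condition == "LF":
        # Low Fidelity: all modules available from the start, in any order.
        return list(range(1, TOTAL_MODULES + 1))
    if condition == "HF":
        # High Fidelity: modules released in manual order, two per week.
        unlocked = min(week * MODULES_PER_WEEK, TOTAL_MODULES)
        return list(range(1, unlocked + 1))
    raise ValueError("condition must be 'HF' or 'LF'")


print(accessible_modules("HF", 2))
print(accessible_modules("LF", 1))
```

In this sketch the only difference between conditions is the release schedule; the module content itself is identical, which mirrors the study design.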

HF and LF conditions also differed on a variety of other key dimensions (see Table 1). The HF condition was characterized by a structured format in which the facilitator followed a set agenda and used prepared slides to guide discussion of the material contained in the assigned CBT modules. The explicit goal of the training and supervision sessions in the HF condition was to promote adherence to the CBT protocol, as operationalized in the Yale Adherence & Competence Scale (YACS; Carroll et al., 2000). As such, the facilitator consistently encouraged counselors to (a) adhere to the session structure, (b) maintain an explicit skills training focus, and (c) teach clients to recognize, avoid and cope with triggers (i.e., conduct functional analyses).

Table 1.

Factors that differentiate high and low fidelity training conditions.

| High Fidelity Training Condition | Low Fidelity Training Condition |
|---|---|
| one of the following at least 3 times/session | |

Adherence to session structure was encouraged by referring to the “20/20/20 rule,” which dictates that counselors spend the first 20 minutes of each session setting an agenda, assessing substance use, craving, and high-risk situations since the last session, eliciting patients' concerns, and discussing the practice exercise. In the second 20-minute segment, counselors were encouraged to introduce and discuss the current session topic and relate it to current concerns. The last 20 minutes were to focus on exploring the patient's understanding of and reactions to the topic, assignment of a new practice exercise, and anticipation of potential upcoming high-risk situations.

Participants in the HF condition were encouraged to maintain an explicit skills training focus in their application of CBT techniques by teaching, modeling, rehearsing, and discussing specific coping skills. They were reminded that in-depth discussion of specific coping skills must include an introduction and rationale for the skill, a thorough explanation of it using examples, linking the skill to past client behavior, and assessment of the client's understanding of the skill.

Counselors in the HF condition were also encouraged to teach clients to recognize, avoid and cope with triggers (i.e., to conduct functional analyses of their behavior). Such functional analyses include a thorough discussion of past high-risk situations or triggers, past actions taken by the client to avoid or cope with those situations, and anticipation of future high-risk situations, including the development of realistic, elaborate, detailed plans for how to successfully navigate those situations without resorting to substance use.

In contrast, the LF condition was characterized by a peer-led format, with the agenda for each supervision session arrived at by group consensus, and the facilitator prepared to follow the group discussion wherever it might lead. Participants in the LF condition were not exposed to any of the prepared slides during the group supervision sessions that reinforced the points covered in the CBT training modules, nor were they explicitly encouraged to adhere to the 20/20/20 rule, to maintain a deliberate skills training focus, or to conduct functional analyses with their clients.

Measures

Participants in both HF and LF conditions completed an identical battery of assessment instruments before and after the training intervention. The assessment questionnaire was administered via the online data collection tool, Inquisite Survey Builder (http://www.inquisite.com/). This online survey tool is similar to the web form that was used to collect initial enrollment information, but allows for more pages of questions, branching questions or conditional logic, supports a variety of reporting functions, and uses encryption to ensure the privacy and confidentiality of respondent data.

CBT Knowledge

A seventy-three item multiple choice test was constructed to measure knowledge regarding the content contained in the 8 CBT training modules. Test items for each module were developed to assess the student performance objectives outlined for that module, e.g. “Slowing down the tape” is a technique used for ____ (a) Relaxation, (b) Recognizing automatic thoughts, (c) Recalling negative consequences, or (d) Reviewing notes.

CBT Self-Efficacy

The authors modified the Provider Efficacy Questionnaire (PEQ; Ozer et al., 2004) to assess participants' confidence in their ability to competently deliver CBT treatment to clients. Twenty-six items were developed to assess provider self-efficacy regarding each of the 8 CBT topics or modules. Participants rated each of the items on an 11-point Likert scale, ranging from ‘Not at all confident’ (0) to ‘Extremely confident’ (10) regarding their ability to apply the skills and techniques covered in that module.

Job Burnout

Participants completed the Maslach Burnout Inventory – Human Services Survey (MBI-HSS; Maslach & Jackson, 1996). This widely used, psychometrically valid instrument consists of 22 statements of job-related feelings. Participants responded to each statement by rating whether they ever feel this way about their job, and if so, how often. Response options ranged from “Never” (0) to “Every day” (6). Responses are scored along three subscales: emotional exhaustion, defined as the perception that one's emotional resources have been completely expended; depersonalization, referring to the development of cynical attitudes toward one's clients; and a decreased sense of personal accomplishment.

Results

Participant Characteristics

Table 2 provides demographic data for our sample of substance abuse counselors grouped by training condition. The mean age of the total sample was 47 years (SD = 9.6). The majority of participants were female (62.1%) and Caucasian (65.3%). Our sample was relatively well-educated (67.7% had a bachelor's degree or higher), and the majority of participants identified themselves as being in recovery from a SUD (67.8%). Most participants had some familiarity with CBT prior to enrolling in the study (88.4%). Participants in the two conditions did not differ on any of these demographic variables, nor did they differ on baseline measures of CBT Knowledge, CBT Self-Efficacy, or Job Burnout. However, a significantly higher proportion of women were observed to have dropped out of the study (80% women v. 20% men) when compared to participants who completed the protocol (57% women v. 43% men), χ² (1, N = 147) = 5.950, p = .02. No other statistically significant differences were found between those who completed the protocol and those who dropped out.

Table 2.

Participant Characteristics for both Training Conditions and Total Sample.

| Variables | Low Fidelity (n = 74) | High Fidelity (n = 73) | Total (N = 147) |
|---|---|---|---|
| Age M (SD) | 47(9.2) | 48(10.0) | 47(9.6) |
| Gender n (%)¹ | | | |
| Male | 30(40.5%) | 25(35.2%) | 55(37.9%) |
| Female | 44(59.5%) | 46(64.8%) | 90(62.1%) |
| Race/Ethnicity n (%) | | | |
| African American | 17(23.0%) | 12(16.4%) | 29(19.7%) |
| Asian/Pacific Islander | 0(0%) | 1(1.4%) | 1(.7%) |
| Caucasian | 44(59.5%) | 52(71.2%) | 96(65.3%) |
| Hispanic/Latino | 7(9.5%) | 5(6.8%) | 12(8.2%) |
| Other | 6(8.1%) | 3(4.1%) | 9(6.1%) |
| Education n (%)² | | | |
| High School Diploma/GED | 16(21.6%) | 13(18.1%) | 29(19.9%) |
| Associates Degree | 18(24.3%) | 9(12.5%) | 27(18.5%) |
| Bachelors Degree | 13(17.6%) | 15(20.8%) | 28(19.2%) |
| Post-Graduate Degree | 27(36.5%) | 35(48.6%) | 62(42.5%) |
| SUD Recovery n (%)³ | | | |
| Yes | 47(63.5%) | 52(72.2%) | 99(67.8%) |
| No | 27(36.5%) | 20(27.8%) | 47(32.2%) |
| Familiar with CBT n (%) | | | |
| Yes | 65(87.8%) | 65(89.0%) | 130(88.4%) |
| No | 9(12.2%) | 8(11.0%) | 17(11.6%) |
| Primary Work Setting n (%) | | | |
| Outpatient | 45(60.8%) | 48(65.8%) | 93(63.3%) |
| Controlled | 29(39.2%) | 25(34.2%) | 54(36.7%) |

Note. Participants in the two training conditions did not differ significantly on any of the above pre-training demographic characteristics.

¹ N = 145

² N = 146

³ N = 146

Treatment Completion Rates

Of the 147 community substance abuse counselors assigned to one of the two CBT training conditions, 35 (24%) dropped out of the study before completion of the program. This dropout rate is comparable with the approximately 22% participant dropout rate in similar training studies (Sholomskas et al., 2005). The proportion of dropouts was not significantly different across the two training conditions, χ² (1, N = 147) = .057, p = .811. In addition, dropouts were similar to completers on all demographic variables, including age, t (1, 144) = −.907, p = .37; gender, χ² (1, N = 145) = .13, p = .72; education, χ² (3, N = 146) = 2.80, p = .42; and ethnicity, χ² (4, N = 147) = 1.08, p = .90. They were also similar on pre-training levels of CBT Knowledge, t (1, 145) = −1.69, p = .10; CBT Self-Efficacy, t (1, 145) = .57, p = .57; and the Personal Accomplishment, t (1, 145) = −.18, p = .86, and Depersonalization, t (1, 145) = .58, p = .38, subscales of the MBI-HSS. However, dropouts and completers did differ significantly on the Emotional Exhaustion subscale, t (1, 145) = 2.23, p = .03, with dropouts reporting greater emotional exhaustion (M = 27.20, SD = 10.92) than completers (M = 23.13, SD = 8.91).
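The comparison of dropout proportions across conditions can be illustrated with a Pearson chi-square test on a 2 × 2 table. The sketch below is not the authors' analysis script. It assumes 17 dropouts in the LF condition and 18 in the HF condition, counts that are inferred here from the condition sizes in Table 2 (74 and 73) and the analyzed sample sizes in Table 3 (57 and 55) rather than reported directly. No continuity correction is applied, and the p value uses the identity that holds for one degree of freedom.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square (no continuity correction) for a 2x2 count table.

    Returns (statistic, p_value). For 1 degree of freedom the upper-tail
    probability is erfc(sqrt(statistic / 2)).
    """
    row_totals = [sum(row) for row in table]
    col_totals = [table[0][j] + table[1][j] for j in range(2)]
    n = sum(row_totals)
    statistic = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            statistic += (table[i][j] - expected) ** 2 / expected
    p_value = math.erfc(math.sqrt(statistic / 2.0))
    return statistic, p_value


# Rows: LF, HF. Columns: dropped out, completed.
# The 17/18 split is an inference from Tables 2 and 3, not a reported figure.
counts = [[17, 57], [18, 55]]
statistic, p_value = chi_square_2x2(counts)
print(f"chi-square(1, N = 147) = {statistic:.3f}, p = {p_value:.2f}")
```

With these inferred counts the statistic comes out at about .057 with p near .81, in line with the values reported above.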

Data Analytic Approach

For the primary analysis, several repeated measures analyses of variance (ANOVAs) were conducted to examine the presence of Main (Time) and Interaction (Time × Condition) effects on all three main outcomes (CBT Knowledge, Self-Efficacy, and Provider Burnout) and their respective subscales. We then explored potential differences in the subscales to determine which (if any) differences were observed between groups on specific aspects of these outcomes. Sample size varied in the following analyses due to missing data observed in the outcome measures.

First, we examined changes in total CBT knowledge scores from pre to post training. The Time (pre and post) × Condition (Low or High Fidelity) interaction was not significant, Wilks's F (1, 110) = 2.78, p = .10, ηp² = .02. However, both training conditions improved in CBT Knowledge from pre to post training, as evidenced by the statistically significant Main effect of Time, Wilks's F (1, 110) = 103.53, p < .001, ηp² = .49 (see Table 3). We then examined changes in the eight subscales of the CBT Knowledge test pre and post training. No significant Time × Condition interaction was observed for any of the subscales.

Table 3.

Means and Standard Deviations for the CBT Knowledge, Self-Efficacy, and Burnout Measures

| Measures | Low Fidelity (n = 57) Baseline M (SD) | Low Fidelity (n = 57) Posttraining M (SD) | High Fidelity (n = 55) Baseline M (SD) | High Fidelity (n = 55) Posttraining M (SD) |
|---|---|---|---|---|
| CBT Knowledge (Total) | 57.33(6.04) | 60.81(4.94) | 56.69(7.09) | 61.53(6.61) |
| Coping with Craving | 13.61(1.77) | 14.77(1.45) | 13.58(1.78) | 15.02(1.48) |
| Motivation | 5.77(1.07) | 6.07(.98) | 5.78(1.55) | 5.96(1.10) |
| Refusal Skills | 9.09(.91) | 9.35(.86) | 9.04(1.19) | 9.56(1.00) |
| Decisions | 5.65(1.14) | 6.36(1.11) | 5.56(1.32) | 6.16(1.26) |
| Coping Plan | 5.37(.79) | 5.61(.67) | 5.38(.78) | 5.62(.83) |
| Problem Solving | 6.56(1.05) | 6.94(.87) | 6.33(1.22) | 6.80(1.11) |
| Case Management | 5.72(1.52) | 5.91(1.46) | 5.49(1.40) | 6.22(1.49) |
| HIV | 5.56(1.31) | 5.77(1.23) | 5.53(1.50) | 6.18(1.49) |
| Self-Efficacy (Total) | 226.47(26.60) | 252.46(21.15) | 230.95(29.40) | 253.11(23.49) |
| Coping with Craving | 44.39(5.87) | 49.12(4.45) | 44.80(7.63) | 48.98(5.46) |
| Motivation | 37.77(4.66) | 40.18(3.41) | 37.91(4.48) | 40.27(4.18) |
| Refusal Skills | 35.35(5.69) | 39.65(3.69) | 36.62(5.57) | 40.47(4.03) |
| Decisions | 14.77(4.88) | 19.21(2.46) | 15.98(4.24) | 18.67(3.29) |
| Coping Plan | 19.22(1.99) | 20.26(1.64) | 19.51(2.28) | 20.42(2.05) |
| Problem Solving | 6.18(3.26) | 9.51(1.20) | 6.20(3.14) | 8.89(1.87) |
| Case Management | 33.28(8.22) | 36.02(7.28) | 33.85(8.39) | 36.85(5.82) |
| HIV | 35.51(4.25) | 38.49(3.61) | 36.07(4.94) | 38.55(4.19) |
| Burnout | | | | |
| Emotional Exhaustion | 22.54(8.79) | 20.84(7.38) | 23.72(9.07) | 23.40(10.12) |
| Depersonalization | 8.77(3.21) | 8.67(3.26) | 8.40(3.24) | 9.07(4.26) |
| Personal Accomplishment | 49.30(5.30) | 51.46(4.15) | 49.83(3.89) | 50.13(4.58) |

Next, we tested changes in participants' ratings of CBT self-efficacy from pre to post training. The Time × Condition interaction for CBT Self-Efficacy was not statistically significant, Wilks's F (1, 110) = .68, p = .41, ηp² = .01. However, as was the case with CBT knowledge, both training conditions showed improvement in CBT self-efficacy from pre to post training, Wilks's F (1, 110) = 110.72, p < .001, ηp² = .50. We also examined changes in ratings of self-efficacy associated with each of the eight CBT modules and found no significant Time × Condition interactions.
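For readers who wish to relate the reported effect sizes to the F statistics, partial eta squared can be recovered from F and its degrees of freedom using the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error). The Python sketch below applies that identity to the two self-efficacy tests reported above. The function name is hypothetical, and the calculation is offered as a consistency check under that identity, not as a description of the software the authors used.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    if f_value < 0 or df_effect <= 0 or df_error <= 0:
        raise ValueError("F must be non-negative and degrees of freedom positive")
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Self-efficacy tests reported in the text, each with (1, 110) degrees of freedom.
time_effect = partial_eta_squared(110.72, 1, 110)   # Main effect of Time
interaction = partial_eta_squared(0.68, 1, 110)     # Time x Condition

print(f"Time: {time_effect:.2f}")
print(f"Time x Condition: {interaction:.2f}")
```

Values recomputed this way may differ from published figures in the second decimal place because the F statistics themselves are rounded.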

Finally, we tested for differential changes in participants' perceived degree of workplace burnout on three subscales of the MBI: Emotional Exhaustion, Depersonalization, and Personal Accomplishment. Although the Time × Condition interaction effect was not statistically significant for Emotional Exhaustion, Wilks's F (1, 110) = 1.65, p = .20, ηp² = .03, nor for Depersonalization, Wilks's F (1, 110) = 1.93, p = .17, ηp² = .02, a significant interaction effect was found for Personal Accomplishment, Wilks's F (1, 110) = 4.33, p = .04, ηp² = .04. Participants in the Low Fidelity condition reported a greater reduction in perceived burnout related to Personal Accomplishment. Participants in both groups improved significantly in this domain of burnout from pre to post training, Wilks's F (1, 110) = 7.45, p = .01, ηp² = .06.

Discussion

Although other researchers have evaluated different blends of technology, training and supervision in promoting the adoption of CBT for treating SUDs (LoCastro et al., 2008; Sholomskas et al., 2005), to our knowledge, this is the first study to test the feasibility of implementing this innovative blend of instructional technology in a geographically diverse sample of practicing community substance use counselors. This study has several limitations. First, we did not include a no-training control condition. Both groups of participants received some training in CBT, making it difficult to separate the effect of the protocol from other potential influences (e.g., regression to the mean). A no-training control condition would have helped to elucidate more clearly the impact of our training on important provider outcomes such as job-related burnout. Second, we did not directly examine whether the supervision/consultation sessions consistently differed from one another. For example, the LF supervision/consultation sessions were designed to be more flexible than the HF sessions, but no manipulation check (e.g., coding of audio tapes) was used to ensure that these sessions remained consistent. Thus, it is difficult to determine whether any differential outcomes between the two conditions were due to the experimental manipulation. Third, no direct measure of clinician behavior was used in the study. Such a measure would have shed some light on how much of an impact our training protocols had on the adoption of CBT-based therapy skills in actual clinical practice. Fourth, patient outcomes were not measured in this study. An important next step in this line of research will be to examine whether the level of structure provided in different blended learning interventions has differential impacts on clinician practices and patient outcomes.

Despite these limitations, the present study provides us with a valuable demonstration of the feasibility of a blended training intervention that takes place entirely on the Internet. It is critically important to establish the feasibility of this approach because community-based substance abuse counselors typically practice in organizations that lack computer and information resources (e.g., email) (McLellan et al., 2003), and report a high degree of workplace burnout (Knudsen et al., 2006). These factors conspire to make community SUD treatment providers a particularly challenging audience for a training intervention that relies entirely on Internet technology. The present study provides compelling evidence that given a single 30-minute orientation session, and ongoing technical support, a diverse audience of practicing SUD counselors can successfully engage with both synchronous and asynchronous online training resources, and demonstrate significant improvement on measures of knowledge and self-efficacy as a result.

The results of this feasibility study have important implications for dissemination and implementation of evidence-based treatment practices, because the technology-based training program that was evaluated is entirely scalable. A training intervention is said to be scalable if one can rapidly increase the number of trainees while only marginally increasing the resources required to train those additional individuals. Once the WBT modules have been developed and deployed, the cost of delivering the course content to an additional 10, 20, or even 1,000 counselors is fairly trivial. Furthermore, using web conferencing technology to facilitate group supervision sessions is far more scalable than holding face-to-face supervision groups as it allows a single centrally located clinician to conduct multiple supervision sessions per day with groups composed of trainees from around the country or around the world.

In addition to testing the feasibility of this innovative blended learning intervention, the present study compared learner outcomes across two conditions that varied in the degree to which they explicitly promoted adherence to the manual-based CBT treatment protocol. Participants in both experimental conditions demonstrated equivalent improvements in scores on a test of knowledge about CBT, and similar improvements in their ratings of their self-efficacy in using CBT in their clinical practice. The present results suggest that counselors who participate in relatively more structured training designed to promote adherence to a manual-based treatment may not achieve better training outcomes than counselors who are afforded a greater degree of control over their individual training experience.

This finding has important implications for those who are interested in implementing evidence-based treatments for individuals with SUDs. In the “Stage Model of Psychotherapy Development” (Onken, Blaine, & Battjes, 1997), which has alternately been referred to as the “Psychotherapy Technology Model” (Morgenstern & McKay, 2007) or “Systematic Replication Model” (Hayes, 2002), dissemination research tests the external validity of scientifically proven technologies, identifying subpopulations of responders and non-responders, and the necessary and sufficient conditions for replication of the results obtained in efficacy trials. Clinical training is thus conceptualized as occurring at the end of a long, unidirectional translation pipeline. Its primary purpose is to promote adherence, fidelity and compliance with the treatment protocol among counselors practicing in the field. Adherence is typically measured by listening to audiotapes of clinicians working with clients, and having an independent rater code those interactions according to a standardized system (e.g., Yale Adherence & Competence Scale, YACS; Carroll et al., 2000; Motivational Interviewing Treatment Integrity scale, MITI; Moyers, Martin, Manuel & Miller, 2002). The success of a training intervention is then judged primarily by the degree to which trainees can adhere to the manual-based treatment protocol.

Although this model has tremendous intuitive appeal, Morgenstern & McKay (2007) have recently invited us to question some of its most fundamental assumptions. These authors point out that literally hundreds of methodologically rigorous randomized controlled trials have yet to yield convincing evidence that theory-based active ingredients of so-called ESTs are curative agents, or that the specific factors captured in the manual-based treatment protocols are more important than non-specific ones. Barring such clear evidence, it has become increasingly difficult to justify adherence to these specific factors as the primary goal of clinical training in SUD.

An alternative perspective on the objectives of clinical training reflects the broader, more comprehensive view of evidence-based practice that has been proposed in recent years. The APA Presidential Task Force on Evidence Based Practice (2006) defines Evidence-based Practice in Psychology (EBPP) as “the integration of the best available research evidence with clinical expertise in the context of patient characteristics, culture and preferences”. This model is consistent with the principle of evidence-based medicine endorsed by the Institute of Medicine (2001), which conceptualizes evidence-based practice as a stool resting upon three “legs”: (a) research evidence, (b) clinical expertise of the health professional, and (c) the unique preferences and expectations of the client (APA, 2006; Spring, 2007).

If we expand our view of implementing effective practices beyond the unidirectional transmission of theory-based active ingredients of manual-based treatment protocols (Weingardt & Gifford, 2007), training in evidence-based practices can similarly move beyond a narrow focus on promoting adherence to treatment protocols and embrace the clinical expertise of the counseling professional and the individual challenges faced by the clients that he or she is working with. Such a view is consistent with that expressed by Hayes (2002), who outlined a “practical application model”, wherein dissemination research examines how to improve outcomes in the real world of health care delivery. In Hayes' model, the focus is not on strict adherence to a manual-based treatment protocol, but rather on the identification of “…technologies that clinicians are willing to accept and adopt. If they are too hard to learn, too confusing, too complex, or too boring, then that limits their practical applicability…” (p. 411).

Rather than training counselors to closely follow a prescribed sequence of interventions, an alternative training model would explicitly encourage trainees to use their best clinical judgment to pick and choose among the clinical strategies and techniques, with an eye towards the unique challenges experienced by their individual clients. Building upon the popular metaphor that clinicians learning new strategies and techniques are like craftsmen obtaining new tools, this practical application model has been termed “Toolbox Adoption” (Guydish, Manser, Jessup, & Tajima, 2007). In this model, training provides counselors with new clinical tools which they are then free to select and apply in eclectic fashion. Such a self-directed approach to clinical training is reflective of contemporary evidence-based models of training and performance improvement which uniformly advocate for maximizing “learner control” over training experiences (see Committee on Developments in the Science of Learning, 1999; p. 192).

Of course, the ultimate test of a clinical training program is whether it results in improved outcomes among the patients of clinicians who are trained. More research is clearly needed to determine whether the flexible, blended training model described in the present paper will result in such improved patient outcomes. However, the present study does suggest that such a flexible model can produce improvements in therapist knowledge and self-efficacy equivalent to those of a more traditional training approach. Furthermore, our results suggest that a more flexible training model may also increase feelings of personal accomplishment among counselors relative to clinicians who participate in a more traditional, structured training program. Given the extraordinarily high rates of voluntary turnover among clinicians who treat substance use disorders, this finding alone is encouraging and worthy of further exploration.

Acknowledgments

The work described in this manuscript was supported by the Health Services Research and Development Service, Department of Veterans Affairs, and by a grant from the National Institute on Drug Abuse (R21DAO07993).

Footnotes

The opinions expressed are those of the authors and do not reflect the official positions of the Department of Veterans Affairs nor the National Institute on Drug Abuse.

References

- APA Presidential Task Force on Evidence-Based Practice. Evidence-based practice in psychology. American Psychologist. 2006;61(4):271–285. doi: 10.1037/0003-066X.61.4.271.

- Carroll KM. A Cognitive Behavioral Approach: Treating Cocaine Addiction. US Department of Health and Human Services, National Institutes of Health; Rockville, MD: 1998. (Therapy Manual for Drug Addiction NIH Publication Number 98-4308).

- Carroll KM, Nich C, Sifry R, Frankforter T, Nuro KF, Ball SA, et al. A general system for evaluating therapist adherence and competence in psychotherapy research in the addictions. Drug and Alcohol Dependence. 2000;57:225–238. doi: 10.1016/s0376-8716(99)00049-6.

- Committee on the Developments in the Science of Learning. How people learn: brain, mind, experience, and school. National Academy Press; Washington, DC: 1999.

- Cucciare MA, Weingardt K, Villafranca S. Using blended learning to implement evidence based psychotherapies. Clinical Psychology: Science and Practice. 2008;15:299–307.

- Davis DA, Thomson MA, Oxman AD, Haynes RB. Changing physician performance: A systematic review of the effect of continuing medical education strategies. Journal of the American Medical Association. 1995;274(9):700–705. doi: 10.1001/jama.274.9.700.

- Guydish J, Manser ST, Jessup MA, Tajima B. Adoption of motivational interviewing/motivational enhancement therapy; Presented at the American Psychological Association Annual Meeting; San Francisco, CA. August 18, 2007.

- Hayes SC. Getting to dissemination. Clinical Psychology: Science and Practice. 2002;9(4):410–415.

- Institute of Medicine. Crossing the quality chasm: A new health system for the 21st century. National Academy Press; Washington, D.C.: 2001.

- Joe GW, Broome KM, Simpson DD, Rowan-Szal GA. Counselor perceptions of organizational factors and innovations training experience. Journal of Substance Abuse Treatment. 2007;33(2):171–182. doi: 10.1016/j.jsat.2006.12.027.

- Knudsen HK, Ducharme LJ, Roman PM. Counselor emotional exhaustion and turnover intention in therapeutic communities. Journal of Substance Abuse Treatment. 2006;31:173–180. doi: 10.1016/j.jsat.2006.04.003.

- Knudsen HK, Johnson JA, Roman PM. Retaining counseling staff at substance abuse treatment centers: effects of management practices. Journal of Substance Abuse Treatment. 2003;24:129–135. doi: 10.1016/s0740-5472(02)00357-4.

- LoCastro JS, Larson MJ, Amodeo M, Muroff J, Gerstenberger E. Web-delivered CBT training and dissemination for community based counselors: The Teach-CBT project; Presented at the Research Society on Alcoholism Annual Meeting; Washington DC. June 30, 2008.

- Maslach C, Jackson SE. Maslach Burnout Inventory: Manual. Consulting Psychologists Press; Palo Alto, CA: 1986.

- McLellan AT, Carise D, Kleber HD. Can the national addiction treatment infrastructure support the public's demand for quality care? Journal of Substance Abuse Treatment. 2003;25:117–121.

- Miller WR, Sorenson JL, Selzer JA, Brigham GS. Disseminating evidence-based practices in substance abuse treatment: A review with suggestions. Journal of Substance Abuse Treatment. 2006;31:25–39. doi: 10.1016/j.jsat.2006.03.005.

- Miller WR, Yahne CE, Moyers TB, Martinez J, Pirritano M. A Randomized Trial of Methods to Help Clinicians Learn Motivational Interviewing. Journal of Consulting and Clinical Psychology. 2004;72(6):1050–1062. doi: 10.1037/0022-006X.72.6.1050.

- Morgenstern J, McKay JR. Rethinking the paradigms that inform behavioral treatment research for substance use disorders. Addiction. 2007;102(9):1377–89. doi: 10.1111/j.1360-0443.2007.01882.x.

- Moyers TB, Martin T, Manuel JK, Miller WR. The motivational interviewing treatment integrity (MITI) code: version 2.0. Retrieved May 15, 2008 at http://www.oregon.gov/DHS/mentalhealth/ebp/fidelity/mi.pdf.

- Onken LS, Blaine JD, Battjes RN. Behavioral therapy research: a conceptualization of a process. In: Henggeler SW, Santos AB, editors. Innovative Approaches for Difficult-to-Treat Populations. American Psychiatric Press; Washington, DC: 1996. pp. 477–485.

- Ozer EM, Adams SH, Gardner LR, Mailloux DE, Wibbelsman CJ, Irwin CE Jr. Provider self-efficacy and the screening of adolescents for risky health behaviors. Journal of Adolescent Health. 2004;35(2):101–107. doi: 10.1016/j.jadohealth.2003.09.016.

- Russell T. The No Significant Difference Phenomenon: A Comparative Research Annotated Bibliography on Technology for Distance Education. 5th ed. International Distance Education Certification Center; Montgomery, AL: 2001.

- Sholomskas DE, Syracuse-Siewert G, Rounsaville BJ, Ball SA, Nuro KF, Carroll KM. We don't train in vain: A dissemination trial of three strategies of training clinicians in cognitive behavioral therapy. Journal of Consulting and Clinical Psychology. 2005;73(1):106–115. doi: 10.1037/0022-006X.73.1.106.

- Simpson DD, Joe GW, Rowan-Szal GA. Linking the elements of change: Program and client responses to innovation. Journal of Substance Abuse Treatment. 2007;33(2):201–209. doi: 10.1016/j.jsat.2006.12.022.

- Spring B. Evidence-based practice in clinical psychology: What it is, why it matters, what you need to know. Journal of Clinical Psychology. 2007;63(7):611–631. doi: 10.1002/jclp.20373.

- Weingardt KR. The role of instructional design and technology in the dissemination of empirically supported, manual based therapies. Clinical Psychology: Science & Practice. 2004;11(3):313–331.

- Weingardt KR, Gifford EV, Carroll KM, Rounsaville BJ. Expanding the vision of implementing effective practices. Addiction. 2007;102:864–865. Commentary on: A vision of the next generation of behavioral therapies research in the addictions.

- Weingardt KR, Villafranca SW, Levin C. Technology-based training in cognitive behavioral therapy for substance abuse counselors. Substance Abuse. 2006;27(3):19–25. doi: 10.1300/J465v27n03_04.

- White JC. Learner-centered teacher-student relationships are effective: a meta-analysis. Review of Educational Research. 2007;77(1):113–143.