Abstract

We study nonparametric estimation for current status data with competing risks. Our main interest is in the nonparametric maximum likelihood estimator (MLE), and for comparison we also consider a simpler ‘naive estimator’. Groeneboom, Maathuis and Wellner [8] proved that both types of estimators converge globally and locally at rate n^{1/3}. We use these results to derive the local limiting distributions of the estimators. The limiting distribution of the naive estimator is given by the slopes of the convex minorants of correlated Brownian motion processes with parabolic drifts. The limiting distribution of the MLE involves a new self-induced limiting process. Finally, we present a simulation study showing that the MLE is superior to the naive estimator in terms of mean squared error, both for small sample sizes and asymptotically.

Keywords and phrases: Survival analysis, Current status data, Competing risks, Maximum likelihood, Limiting distribution

1. Introduction

We study nonparametric estimation for current status data with competing risks. The set-up is as follows. We analyze a system that can fail from K competing risks, where K ∈ ℕ is fixed. The random variables of interest are (X, Y), where X ∈ ℝ is the failure time of the system, and Y ∈ {1,…,K} is the corresponding failure cause. We cannot observe (X,Y) directly. Rather, we observe the ‘current status’ of the system at a single random observation time T ∈ ℝ, where T is independent of (X,Y). This means that at time T, we observe whether or not failure occurred, and if and only if failure occurred, we also observe the failure cause Y. Such data arise naturally in cross-sectional studies with several failure causes, and generalizations arise in HIV vaccine clinical trials [see 10].

We study nonparametric estimation of the sub-distribution functions F01,…,F0K, where F0k(s) = P(X ≤ s,Y = k), k = 1,…,K. Various estimators for this purpose were introduced in [10, 12], including the nonparametric maximum likelihood estimator (MLE), which is our primary focus. For comparison we also consider the ‘naive estimator’, an alternative to the MLE discussed in [12]. Characterizations, consistency, and n^{1/3} rates of convergence of these estimators were established in Groeneboom, Maathuis and Wellner [8]. In the current paper we use these results to derive the local limiting distributions of the estimators.

1.1. Notation

The following notation is used throughout. The observed data are denoted by (T, Δ), where T is the observation time and Δ = (Δ1,…,ΔK+1) is an indicator vector defined by Δk = 1{X ≤ T,Y = k} for k = 1,…,K, and ΔK+1 = 1{X > T}. Let (Ti, Δ^i), i = 1,…,n, be n i.i.d. observations of (T, Δ), where Δ^i = (Δ^i_1,…,Δ^i_{K+1}). Note that we use the superscript i as the index of an observation, and not as a power. The order statistics of T1,…,Tn are denoted by T(1),…,T(n). Furthermore, G is the distribution of T, Gn is the empirical distribution of Ti, i = 1,…,n, and ℙn is the empirical distribution of (Ti, Δ^i), i = 1,…,n. For any vector (x1,…,xK) ∈ ℝ^K we define x+ = x1 + ⋯ + xK, so that, for example, Δ+ = Δ1 + ⋯ + ΔK and F0+ = F01 + ⋯ + F0K. For any K-tuple F = (F1,…,FK) of sub-distribution functions, we define FK+1(s) = ∫_{u>s} dF+(u) = F+(∞) – F+(s).

We denote the right-continuous derivative of a function f : ℝ ⟼ ℝ by f′ (if it exists). For any function f : ℝ ⟼ ℝ, we define the convex minorant of f to be the largest convex function that is pointwise bounded by f. For any interval I, D(I) denotes the collection of cadlag functions on I. Finally, we use the following definition for integrals and indicator functions:

Definition 1.1

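Let dA be a Lebesgue-Stieltjes measure, and let W be a Brownian motion process. For t < t0, we define 1_{[t0,t)}(u) = −1_{[t,t0)}(u), ∫_{[t0,t)} f(u) dA(u) = −∫_{[t,t0)} f(u) dA(u), and ∫_{t0}^{t} f(u) dW(u) = −∫_{t}^{t0} f(u) dW(u).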

1.2. Assumptions

We prove the local limiting distributions of the estimators at a fixed point t0, under the following conditions: (a) The observation time T is independent of the variables of interest (X,Y); (b) For each k = 1,…,K, 0 < F0k(t0) < F0k(∞), and F0k and G are continuously differentiable at t0 with positive derivatives f0k(t0) and g(t0); (c) The system cannot fail from two or more causes at the same time. Assumptions (a) and (b) are essential for the development of the theory. Assumption (c) ensures that the failure cause is well-defined. This assumption is always satisfied by defining simultaneous failure from several causes as a new failure cause.

1.3. The estimators

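We first consider the MLE. The MLE F̂n = (F̂n1,…,F̂nK) is defined by ln(F̂n) = max_{F ∈ ℱK} ln(F), where

$$ l_n(F) = \int \Big\{ \sum_{k=1}^{K} \delta_k \log F_k(t) + (1-\delta_+) \log\big(1 - F_+(t)\big) \Big\} \, d\mathbb{P}_n(t,\delta), \tag{1} $$

and ℱK is the collection of K-tuples F = (F1,…,FK) of sub-distribution functions on ℝ with F+ ≤ 1. The naive estimator F̃n = (F̃n1,…,F̃nK) is defined by lnk(F̃nk) = max_{Fk ∈ ℱ} lnk(Fk), for k = 1,…,K, where ℱ is the collection of distribution functions on ℝ, and

$$ l_{nk}(F_k) = \int \big\{ \delta_k \log F_k(t) + (1-\delta_k) \log\big(1 - F_k(t)\big) \big\} \, d\mathbb{P}_n(t,\delta). \tag{2} $$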

Note that F̃nk only uses the kth entry of the Δ-vector, and is simply the MLE for the reduced current status data (T, Δk). Thus, the naive estimator splits the optimization problem into K separate well-known problems. The MLE, on the other hand, estimates F01,…,F0K simultaneously, accounting for the fact that F0+ = F01 + ⋯ + F0K is the overall failure time distribution. This relation is incorporated both in the objective function ln(F) (via the term log(1 – F+)) and in the space ℱK over which ln(F) is maximized (via the constraint F+ ≤ 1).
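The componentwise structure of the naive estimator can be sketched in code. The example below is a minimal sketch, assuming the standard result that the MLE for (univariate) current status data, evaluated at the ordered observation times, is the isotonic regression of the indicators on those times, computed here by the pool-adjacent-violators algorithm. The function names `pava` and `naive_estimator` are illustrative and do not come from the paper, and ties among the observation times are ignored (they occur with probability zero when G is continuous).

```python
import numpy as np

def pava(y):
    """Nondecreasing least-squares fit of y (unit weights), for len(y) >= 1."""
    blocks = []  # each block is [sum of values, number of values]
    for value in y:
        blocks.append([float(value), 1])
        # Pool while the previous block's mean exceeds the last block's mean.
        while (len(blocks) > 1
               and blocks[-2][0] * blocks[-1][1] > blocks[-1][0] * blocks[-2][1]):
            total, count = blocks.pop()
            blocks[-1][0] += total
            blocks[-1][1] += count
    return np.concatenate([np.full(c, s / c) for s, c in blocks])

def naive_estimator(t, delta):
    """Naive estimator at the ordered observation times.

    t     : array of shape (n,), observation times
    delta : array of shape (n, K), delta[i, k-1] = 1{X_i <= T_i, Y_i = k}
    Returns the sorted times and an (n, K) array of fitted values.
    """
    t = np.asarray(t, dtype=float)
    delta = np.asarray(delta, dtype=float)
    order = np.argsort(t, kind="stable")
    # Each column is fitted separately: K independent isotonic regressions.
    fitted = np.column_stack(
        [pava(delta[order, k]) for k in range(delta.shape[1])]
    )
    return t[order], fitted
```

Because each column is fitted on its own, nothing in this computation prevents the fitted values from summing to more than one across k, which is exactly the constraint F+ ≤ 1 that the MLE enforces.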

1.4. Main results

The main results in this paper are the local limiting distributions of the MLE and the naive estimator. The limiting distribution of F̃nk corresponds to the limiting distribution of the MLE for the reduced current status data (T, Δk). Thus, it is given by the slope of the convex minorant of a two-sided Brownian motion process plus parabolic drift [9, Theorem 5.1, page 89], known as Chernoff's distribution. The joint limiting distribution of (F̃n1,…,F̃nK) follows by noting that the K Brownian motion processes have a multinomial covariance structure, since Δ|T ∼ MultK+1(1, (F01(T),…,F0,K+1(T))). The drifted Brownian motion processes and their convex minorants are specified in Definitions 1.2 and 1.5. The limiting distribution of the naive estimator is given in Theorem 1.6, and is simply a K-dimensional version of the limiting distribution for current status data. A formal proof of this result can be found in [14, Section 6.1].

Definition 1.2

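Let W = (W1,…,WK) be a K-tuple of two-sided Brownian motion processes originating from zero, with mean zero and covariances

$$ E\{W_j(t) W_k(s)\} = (|s| \wedge |t|) \, 1\{st > 0\} \, \Sigma_{jk}, \qquad s,t \in \mathbb{R}, \quad j,k \in \{1,\dots,K\}, \tag{3} $$

where Σjk = g(t0)^{−1}{1{j = k}F0k(t0) – F0j(t0)F0k(t0)}. Moreover, V = (V1,…,VK) is a vector of drifted Brownian motions, defined by

$$ V_k(t) = W_k(t) + \tfrac{1}{2} f_{0k}(t_0) \, t^2, \qquad k = 1,\dots,K, \quad t \in \mathbb{R}. \tag{4} $$

Following the convention introduced in Section 1.1, we write W+ = W1 + ⋯ + WK and V+ = V1 + ⋯ + VK. Finally, we use the shorthand notation ak = (F0k(t0))^{−1}, k = 1,…,K + 1.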

Remark 1.3

Note that W is the limit of a rescaled version of Wn = (Wn1,…,WnK), and that V is the limit of a recentered and rescaled version of Vn = (Vn1,…,VnK), where Wnk and Vnk are defined by (17) and (6) of [8]:

| (5) |

Remark 1.4

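We define the correlation between Brownian motions Wj and Wk, j ≠ k, by

$$ r_{jk} = \frac{\Sigma_{jk}}{\sqrt{\Sigma_{jj}\Sigma_{kk}}} = -\sqrt{\frac{F_{0j}(t_0) \, F_{0k}(t_0)}{\{1 - F_{0j}(t_0)\}\{1 - F_{0k}(t_0)\}}}. $$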

Thus, the Brownian motions are negatively correlated, and this negative correlation becomes stronger as t0 increases. In particular, it follows that r12 → −1 as F0+(t0)→ 1, in the case of K = 2 competing risks.
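The covariance structure Σjk of Definition 1.2 and the resulting correlations are easy to evaluate numerically. The following is a minimal sketch; the function names are illustrative, and the input values F01(t0) = 0.21, F02(t0) = 0.58 and g(t0) = 0.37 are the rounded parameters that the paper reports for Example 2.3 at t0 = 1.

```python
import numpy as np

def brownian_covariance(F0, g):
    """Sigma_jk = {1{j=k} F0k - F0j F0k} / g, for F0 = (F01(t0),...,F0K(t0))."""
    F0 = np.asarray(F0, dtype=float)
    return (np.diag(F0) - np.outer(F0, F0)) / g

def correlation(Sigma):
    """Correlation matrix r_jk = Sigma_jk / sqrt(Sigma_jj * Sigma_kk)."""
    d = np.sqrt(np.diag(Sigma))
    return Sigma / np.outer(d, d)

# Rounded values at t0 = 1 for the two-risk example in the paper.
Sigma = brownian_covariance([0.21, 0.58], g=0.37)
r = correlation(Sigma)
print(r[0, 1])  # negative: the two Brownian motions are negatively correlated

# Boundary case F0+(t0) = 1 with K = 2: the correlation equals -1.
r_limit = correlation(brownian_covariance([0.4, 0.6], g=0.37))
print(r_limit[0, 1])
```

Note that g(t0) cancels in the correlation, so only the values F0k(t0) matter for rjk.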

Definition 1.5

Let H̃ = (H̃1,…,H̃K) be the vector of convex minorants of V, i.e., H̃k is the convex minorant of Vk, for k = 1,…,K. Let F̃ = (F̃1,…,F̃K) be the vector of right derivatives of H̃.
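Definition 1.5 can be illustrated for a single component on a finite grid. The sketch below simulates one drifted two-sided Brownian motion and computes its greatest convex minorant with a lower-convex-hull scan, then takes slopes. This is a discretized approximation under stated assumptions: the grid, the seed, and the values `variance_rate=0.45` and `f0=0.12` are illustrative choices (roughly Σ11 and f01(t0) for the paper's two-risk example), the function names are hypothetical, and the cross-correlation between components is not simulated.

```python
import numpy as np

def two_sided_brownian_motion(grid, variance_rate, rng):
    """Two-sided Brownian motion started at 0 on an increasing grid containing 0,
    with Var W(t) = variance_rate * |t|."""
    grid = np.asarray(grid, dtype=float)
    i0 = int(np.argmin(np.abs(grid)))            # index of the grid point 0
    steps = rng.normal(0.0, np.sqrt(variance_rate * np.diff(grid)))
    w = np.zeros(len(grid))
    w[i0 + 1:] = np.cumsum(steps[i0:])           # path to the right of 0
    w[:i0] = np.cumsum(steps[:i0][::-1])[::-1]   # path to the left, built outward
    return w

def convex_minorant(x, y):
    """Greatest convex minorant of the points (x[i], y[i]), x strictly increasing.
    Returns the minorant at x and its slope on each cell [x[i], x[i+1])."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    hull = []  # indices of the vertices of the lower convex hull
    for i in range(len(x)):
        while len(hull) >= 2:
            a, b = hull[-2], hull[-1]
            cross = (x[b] - x[a]) * (y[i] - y[a]) - (y[b] - y[a]) * (x[i] - x[a])
            if cross <= 0:   # vertex b lies on or above the chord from a to i
                hull.pop()
            else:
                break
        hull.append(i)
    minorant = np.interp(x, x[hull], y[hull])
    slopes = np.diff(minorant) / np.diff(x)
    return minorant, slopes

rng = np.random.default_rng(0)
grid = np.linspace(-5.0, 5.0, 2001)
f0 = 0.12
V = two_sided_brownian_motion(grid, variance_rate=0.45, rng=rng) + 0.5 * f0 * grid**2
H_tilde, F_tilde = convex_minorant(grid, V)
```

The array `F_tilde` is a nondecreasing step function; its value on the cell containing t approximates the slope process of the convex minorant at t.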

Theorem 1.6

Under the assumptions of Section 1.2, n^{1/3}{F̃n(t0 + n^{−1/3}t) – F0(t0)} →d F̃(t) in the Skorohod topology on (D(ℝ))^K.

The limiting distribution of the MLE is given by the slopes of a new self-induced process Ĥ = (Ĥ1,…,ĤK), defined in Theorem 1.7. We say that the process Ĥ is ‘self-induced’, since each component Ĥk is defined in terms of the other components through Ĥ+ = Ĥ1 + ⋯ + ĤK. Due to this self-induced nature, existence and uniqueness of Ĥ need to be formally established (Theorem 1.7). The limiting distribution of the MLE is given in Theorem 1.8. These results are proved in the remainder of the paper.

Theorem 1.7

There exists an almost surely unique K-tuple Ĥ = (Ĥ1,…,ĤK) of convex functions with right-continuous derivatives F̂ = (F̂1,…,F̂K), satisfying the following three conditions:

(i) akĤk(t) + aK+1Ĥ+(t) ≤ akVk(t) + aK+1V+(t), for k = 1,…,K, t ∈ ℝ,

(ii) ∫{akĤk(t) + aK+1Ĥ+(t) – akVk(t) – aK+1V+(t)}dF̂k(t) = 0, k = 1,…,K,

(iii) For all M > 0 and k = 1,…,K, there are points τ1k < −M and τ2k > M so that akĤk(t) + aK+1Ĥ+(t) = akVk(t) + aK+1V+(t) for t = τ1k and t = τ2k.

Theorem 1.8

Under the assumptions of Section 1.2, n^{1/3}{F̂n(t0 + n^{−1/3}t) – F0(t0)} →d F̂(t) in the Skorohod topology on (D(ℝ))^K.

Thus, the limiting distributions of the MLE and the naive estimator are given by the slopes of the limiting processes Ĥ and H̃, respectively. In order to compare Ĥ and H̃ we note that the convex minorant H̃k of Vk can be defined as the almost surely unique convex function H̃k with right-continuous derivative F̃k that satisfies (i) H̃k(t) ≤ Vk(t) for all t ∈ ℝ, and (ii) ∫{H̃k(t) – Vk(t)}dF̃k(t) = 0. Comparing this to the definition of Ĥk in Theorem 1.7, we see that the definition of Ĥk contains the extra terms Ĥ+ and V+, which come from the term log(1 – F+(t)) in the log likelihood (1). The presence of Ĥ+ in Theorem 1.7 causes Ĥ to be self-induced. In contrast, the processes H̃k for the naive estimator depend only on Vk, so that H̃ is not self-induced. However, note that the processes H̃1,…,H̃K are correlated, since the Brownian motions W1,…,WK are correlated (see Definition 1.2).

1.5. Outline

This paper is organized as follows. In Section 2 we discuss the new self-induced limiting processes Ĥ and F̂. We give various interpretations of these processes and prove the uniqueness part of Theorem 1.7. Section 3 establishes convergence of the MLE to its limiting distribution (Theorem 1.8). Moreover, in this proof we automatically obtain existence of Ĥ, hence completing the proof of Theorem 1.7. This approach to proving existence of the limiting processes is different from the one followed by [5, 6] for the estimation of convex functions, who establish existence and uniqueness of the limiting process before proving convergence. In Section 4 we compare the estimators in a simulation study, and show that the MLE is superior to the naive estimator in terms of mean squared error, both for small sample sizes and asymptotically. We also discuss computation of the estimators in Section 4. Technical proofs are collected in Section 5.

2. Limiting processes

We now discuss the new self-induced processes Ĥ and F̂ in more detail. In Section 2.1 we give several interpretations of these processes, and illustrate them graphically. In Section 2.2 we prove tightness of {F̂k(t) – f0k(t0)t} and {Ĥk(t) – Vk(t)}, for t ∈ ℝ. These results are used in Section 2.3 to prove almost sure uniqueness of Ĥ and F̂.

2.1. Interpretations of Ĥ and F̂

Let k ∈ {1,…,K}. Theorem 1.7 (i) and the convexity of Ĥk imply that akĤk + aK+1Ĥ+ is a convex function that lies below akVk + aK+1V+. Hence, akĤk + aK+1Ĥ+ is bounded above by the convex minorant of akVk + aK+1V+. This observation leads directly to the following proposition about the points of touch between akĤk + aK+1Ĥ+ and akVk + aK+1V+:

Proposition 2.1

For each k = 1,…,K, we define 𝒩k and 𝒩̂k by

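$$ \mathcal{N}_k = \{\text{points of touch between } a_kV_k + a_{K+1}V_+ \text{ and its convex minorant}\}, \tag{6} $$

$$ \widehat{\mathcal{N}}_k = \{\text{points of touch between } a_kV_k + a_{K+1}V_+ \text{ and } a_k\hat{H}_k + a_{K+1}\hat{H}_+\}. \tag{7} $$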

Then the following properties hold: (i) 𝒩̂k ⊂ 𝒩k, and (ii) At points t ∈ 𝒩̂k, the right and left derivatives of akĤk(t) + aK+1Ĥ+(t) are bounded above and below by the right and left derivatives of the convex minorant of akVk(t) + aK+1V+(t).

Since akVk + aK+1V+ is a Brownian motion process plus parabolic drift, the point process 𝒩k is well-known from [4]. On the other hand, little is known about 𝒩̂k, due to the self-induced nature of this process. However, Proposition 2.1 (i) relates 𝒩̂k to 𝒩k, and this allows us to deduce properties of 𝒩̂k and the associated processes Ĥk and F̂k. In particular, Proposition 2.1 (i) implies that F̂k is piecewise constant, and that Ĥk is piecewise linear (Corollary 2.2). Moreover, Proposition 2.1 (i) is essential for the proof of Proposition 2.16, where it is used to establish expression (30). Proposition 2.1 (ii) is not used in the sequel.

Corollary 2.2

For each k ∈ {1,…,K}, the following properties hold almost surely: (i) 𝒩̂k has no condensation points in a finite interval, and (ii) F̂k is piecewise constant and Ĥk is piecewise linear.

Proof. 𝒩k is a stationary point process which, with probability one, has no condensation points in a finite interval [see 4]. Together with Proposition 2.1 (i), this yields that with probability one, 𝒩̂k has no condensation points in a finite interval. Conditions (i) and (ii) of Theorem 1.7 imply that F̂k can only increase at points t ∈ 𝒩k. Hence, F̂k is piecewise constant and Ĥk is piecewise linear.

Thus, conditions (i) and (ii) of Theorem 1.7 imply that akĤk + aK+1Ĥ+ is a piecewise linear convex function, lying below akVk + aK+1V+, and touching akVk + aK+1V+ whenever F̂k jumps. We illustrate these processes using the following example with K = 2 competing risks:

Example 2.3 Let K = 2, and let T be independent of (X,Y). Let T,Y and X|Y be distributed as follows: G(t) = 1 – exp(−t), P(Y = k) = k/3 and P(X ≤ t|Y = k) = 1 – exp(−kt) for k = 1,2. This yields F0k(t) = (k/3){1 – exp(−kt)} for k = 1,2.

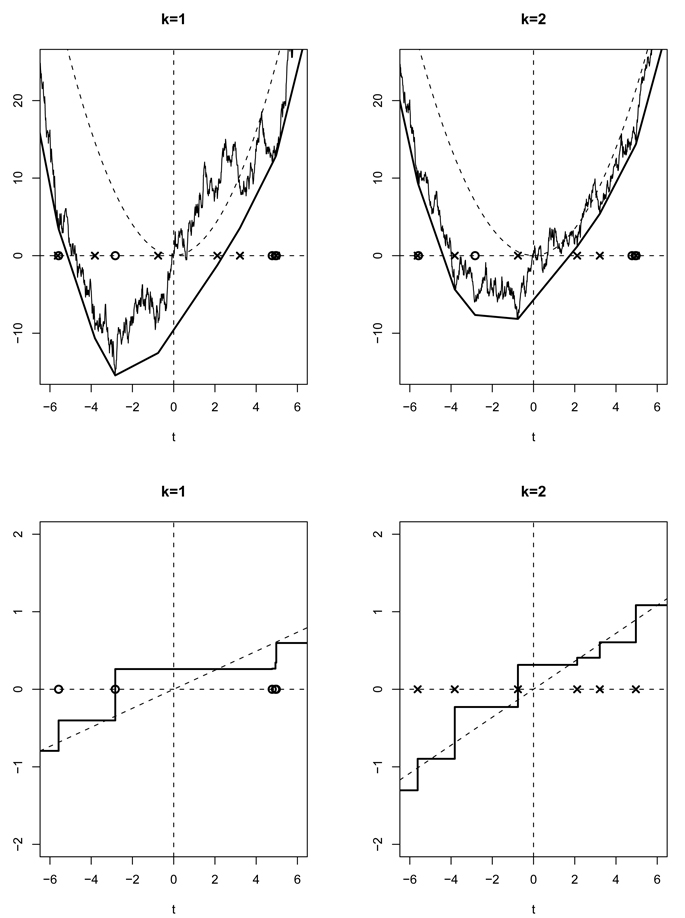

Figure 1 shows the limiting processes akVk + aK+1V+, akĤk + aK+1Ĥ+, and F̂k, for this model with t0 = 1. The relevant parameters at the point t0 = 1 are:

F01(1) = 0.21, F02(1) = 0.58, f01(1) = 0.12, f02(1) = 0.18, g(1) = 0.37.
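These values follow directly from the model in Example 2.3 and can be checked with a few lines of code. In this minimal sketch the helper names are illustrative, and the density f0k(t) = (k²/3) exp(−kt) is obtained by differentiating F0k (it is not written out in the text). The weights ak = 1/F0k(t0) use F0,K+1(t0) = 1 – F0+(t0), which holds here because F0+(∞) = 1.

```python
import math

def F0(k, t):
    """Sub-distribution function F0k(t) = (k/3) {1 - exp(-k t)}, k = 1, 2."""
    return (k / 3.0) * (1.0 - math.exp(-k * t))

def f0(k, t):
    """Derivative of F0k: f0k(t) = (k^2/3) exp(-k t)."""
    return (k * k / 3.0) * math.exp(-k * t)

def g(t):
    """Density of the observation time, G(t) = 1 - exp(-t)."""
    return math.exp(-t)

t0 = 1.0
params = {
    "F01": F0(1, t0), "F02": F0(2, t0),
    "f01": f0(1, t0), "f02": f0(2, t0),
    "g": g(t0),
}
# Weights a_k = 1 / F0k(t0), with F0,3(t0) = 1 - F01(t0) - F02(t0).
a = [1.0 / F0(1, t0), 1.0 / F0(2, t0), 1.0 / (1.0 - F0(1, t0) - F0(2, t0))]

for name, value in params.items():
    print(name, round(value, 2))
```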

FIG 1.

Limiting processes for the model given in Example 2.3 for t0 = 1. The top row shows the processes akVk + aK+1V+ and akĤk + aK+1Ĥ+, around the dashed parabolic drifts akf0k(t0)t^2/2 + aK+1f0+(t0)t^2/2. The bottom row shows the slope processes F̂k, around dashed lines with slope f0k(t0). The circles and crosses indicate jump points of F̂1 and F̂2, respectively. Note that akĤk + aK+1Ĥ+ touches akVk + aK+1V+ whenever F̂k has a jump, for k = 1, 2.

The processes shown in Figure 1 are approximations, obtained by computing the MLE for sample size n = 100,000 (using the algorithm described in Section 4), and then computing the localized processes and (see Definition 3.1 ahead).

Note that F̂1 has a jump around −3. This jump causes a change of slope in akĤk + aK+1Ĥ+ for both components k ∈ {1,2}, but only for k = 1 is there a touch between akĤk + aK+1Ĥ+ and akVk + aK+1V+. Similarly, F̂2 has a jump around −1. Again, this causes a change of slope in akĤk + aK+1Ĥ+ for both components k ∈ {1,2}, but only for k = 2 is there a touch between akĤk + aK+1Ĥ+ and akVk + aK+1V+. The fact that akĤk + aK+1Ĥ+ has changes of slope without touching akVk + aK+1V+ implies that akĤk + aK+1Ĥ+ is not the convex minorant of akVk + aK+1V+.

It is possible to give convex minorant characterizations of Ĥ, but again these characterizations are self-induced. Proposition 2.4 (a) characterizes Ĥk in terms of the sum Ĥ+, and Proposition 2.4 (b) characterizes Ĥk in terms of the remaining components Ĥj, j ≠ k.

Proposition 2.4

Ĥ satisfies the following convex minorant characterizations:

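- (a) For each k = 1,…,K, Ĥk(t) is the convex minorant of

$$ V_k(t) + \frac{a_{K+1}}{a_k}\{V_+(t) - \hat{H}_+(t)\}. \tag{8} $$

- (b) For each k = 1,…,K, Ĥk(t) is the convex minorant of

$$ V_k(t) + \frac{a_{K+1}}{a_k + a_{K+1}}\{V_+^{(-k)}(t) - \hat{H}_+^{(-k)}(t)\}, \tag{9} $$

where V_+^{(−k)} = Σ_{j≠k} Vj and Ĥ_+^{(−k)} = Σ_{j≠k} Ĥj.

Proof. Conditions (i) and (ii) of Theorem 1.7 are equivalent to:

$$ \hat{H}_k(t) \le V_k(t) + \frac{a_{K+1}}{a_k}\{V_+(t) - \hat{H}_+(t)\}, \quad t \in \mathbb{R}, \qquad \int \Big[\hat{H}_k(t) - V_k(t) - \frac{a_{K+1}}{a_k}\{V_+(t) - \hat{H}_+(t)\}\Big] d\hat{F}_k(t) = 0, $$

for k = 1,…,K. This gives characterization (a). Similarly, characterization (b) holds since conditions (i) and (ii) of Theorem 1.7 are equivalent to:

$$ \hat{H}_k(t) \le V_k(t) + \frac{a_{K+1}}{a_k + a_{K+1}}\{V_+^{(-k)}(t) - \hat{H}_+^{(-k)}(t)\}, \quad t \in \mathbb{R}, \qquad \int \Big[\hat{H}_k(t) - V_k(t) - \frac{a_{K+1}}{a_k + a_{K+1}}\{V_+^{(-k)}(t) - \hat{H}_+^{(-k)}(t)\}\Big] d\hat{F}_k(t) = 0, $$

for k = 1,…,K.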

Comparing the MLE and the naive estimator, we see that H̃k is the convex minorant of Vk, and Ĥk is the convex minorant of Vk + (aK+1/ak){V+ – Ĥ+}. These processes are illustrated in Figure 2. The difference between the two estimators lies in the extra term (aK+1/ak){V+ – Ĥ+}, which is shown in the bottom row of Figure 2. Apart from the factor aK+1/ak, this term is the same for all k = 1,…,K. Furthermore, aK+1/ak = F0k(t0)/F0,K+1(t0) is an increasing function of t0, so that the extra term (aK+1/ak){V+ – Ĥ+} is more important for large values of t0. This provides an explanation for the simulation results shown in Figure 3 of Section 4, which indicate that the MLE is superior to the naive estimator in terms of mean squared error, especially for large values of t. Finally, note that (aK+1/ak){V+ – Ĥ+} appears to be nonnegative in Figure 2. In Proposition 2.5 we prove that this is indeed the case. In turn, this result implies that H̃k ≤ Ĥk (Corollary 2.6), as shown in the top row of Figure 2.
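The growth of the factor aK+1/ak = F0k(t0)/F0,K+1(t0) with t0 can be checked for the model of Example 2.3. The sketch below is illustrative only: the function names and the chosen values of t0 are assumptions, and F0,K+1(t0) is computed as 1 – F0+(t0), which is valid for this model because F0+(∞) = 1.

```python
import math

def F0(k, t):
    """Sub-distribution function of Example 2.3: (k/3) {1 - exp(-k t)}."""
    return (k / 3.0) * (1.0 - math.exp(-k * t))

def weight_ratio(k, t0):
    """a_{K+1} / a_k = F0k(t0) / F0,K+1(t0) for K = 2."""
    not_failed = 1.0 - F0(1, t0) - F0(2, t0)   # F0,3(t0) = P(X > t0)
    return F0(k, t0) / not_failed

t0_values = (0.5, 1.0, 2.0, 3.0)
ratios = {k: [weight_ratio(k, t0) for t0 in t0_values] for k in (1, 2)}
for k in (1, 2):
    print(k, [round(value, 3) for value in ratios[k]])
```

The ratio increases because the numerator F0k(t0) is nondecreasing in t0 while the denominator, the probability of no failure by time t0, is nonincreasing.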

FIG 2.

Limiting processes for the model given in Example 2.3 for t0 = 1. The top row shows the processes Vk and their convex minorants H̃k (grey), together with Vk + (aK+1/ak)(V+ – Ĥ+) and their convex minorants Ĥk (black). The dashed lines depict the parabolic drift f0k(t0)t^2/2. The middle row shows the slope processes F̃k (grey) and F̂k (black), which follow the dashed lines with slope f0k(t0). The bottom row shows the ‘correction term’ (aK+1/ak)(V+ – Ĥ+) for the MLE.

FIG 3.

Relative MSEs, computed by dividing the MSE of the MLE by the MSE of the other estimators. All MSEs were computed over 1000 simulations for each sample size, on the grid 0, 0.01, 0.02,…, 3.0.

Proposition 2.5

Ĥ+ (t) ≤ V+(t) for all t ∈ ℝ.

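Proof. Theorem 1.7 (i) can be written as

$$ \hat{H}_k(t) - V_k(t) \le \frac{a_{K+1}}{a_k}\{V_+(t) - \hat{H}_+(t)\}, \qquad k = 1,\dots,K, \quad t \in \mathbb{R}. $$

The statement then follows by summing over k = 1,…,K: this gives {1 + aK+1 F0+(t0)}{Ĥ+(t) – V+(t)} ≤ 0, and the factor 1 + aK+1 F0+(t0) is positive.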

Corollary 2.6

H̃k(t) ≤ Ĥk(t) for all k = 1,…,K and t ∈ ℝ.

Proof. Let k ∈ {1,…,K} and recall that H̃k is the convex minorant of Vk. Since V+ – Ĥ+ ≥ 0 by Proposition 2.5, it follows that H̃k is a convex function below Vk + (aK+1/ak){V+ – Ĥ+}. Hence, it is bounded above by the convex minorant Ĥk of Vk + (aK+1/ak){V+ – Ĥ+}.

Finally, we write the characterization of Theorem 1.7 in a way that is analogous to the characterization of the MLE in Proposition 4.8 of [8]. We do this to make a connection between the finite sample situation and the limiting situation. Using this connection, the proofs for the tightness results in Section 2.2 are similar to the proofs for the local rate of convergence in [8, Section 4.3]. We need the following definition:

Definition 2.7

For k = 1,…,K and t ∈ ℝ, we define

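$$ \bar{F}_{0k}(t) = f_{0k}(t_0) \, t \quad \text{and} \quad S_k(t) = a_kW_k(t) + a_{K+1}W_+(t). \tag{10} $$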

Note that Sk is the limit of a rescaled version of the process Snk = akWnk + aK+1Wn+, defined in (18) of [8].

Proposition 2.8

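For all k = 1,…,K, for each point τk ∈ 𝒩̂k (defined in (7)) and for all s ∈ ℝ we have:

$$ \int_{\tau_k}^{s} \big[ a_k\{\hat{F}_k(u) - \bar{F}_{0k}(u)\} + a_{K+1}\{\hat{F}_+(u) - \bar{F}_{0+}(u)\} \big] \, du \le \int_{\tau_k}^{s} dS_k(u), \tag{11} $$

and equality must hold if s ∈ 𝒩̂k.

Proof. Let k ∈ {1,…,K}. By Theorem 1.7 (i), we have

$$ a_k\hat{H}_k(t) + a_{K+1}\hat{H}_+(t) \le a_kV_k(t) + a_{K+1}V_+(t), \qquad t \in \mathbb{R}, $$

where equality holds at t = τk ∈ 𝒩̂k. Subtracting this expression for t = τk from the expression for t = s, we get:

$$ \int_{\tau_k}^{s} \{a_k\hat{F}_k(u) + a_{K+1}\hat{F}_+(u)\} \, du \le \int_{\tau_k}^{s} \{a_k \, dV_k(u) + a_{K+1} \, dV_+(u)\}. $$

The result then follows by subtracting ∫_{τk}^{s} {akF̄0k(u) + aK+1F̄0+(u)} du from both sides, and using that dVk(u) = F̄0k(u)du + dWk(u) (see (4)).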

2.2. Tightness of Ĥ and F̂

The main results of this section are tightness of {F̂k(t) – F̄0k(t)} (Proposition 2.9) and {Ĥk(t) – Vk(t)} (Corollary 2.15), for t ∈ ℝ. These results are used in Section 2.3 to prove that Ĥ and F̂ are almost surely unique.

Proposition 2.9

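For every ϵ > 0 there is an M > 0 such that

$$ P\big(|\hat{F}_k(t) - \bar{F}_{0k}(t)| \ge M\big) < \epsilon, \qquad k = 1,\dots,K, \quad t \in \mathbb{R}. $$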

Proposition 2.9 is the limit version of Theorem 4.17 of [8], which gave the n^{1/3} local rate of convergence of F̂nk. Hence, analogously to [8, Proof of Theorem 4.17], we first prove a stronger tightness result for the sum process {F̂+(t) – F̄0+(t)}, t ∈ ℝ.

Proposition 2.10

Let β ∈ (0,1) and define

| (12) |

Then for every ϵ > 0 there is an M > 0 such that

Proof. The organization of this proof is similar to the proof of Theorem 4.10 of [8]. Let ϵ > 0. We only prove the result for s = 0, since the proof for s ≠ 0 is equivalent, due to stationarity of the increments of Brownian motion.

It is sufficient to show that we can choose M > 0 such that

In fact, we only prove that there is an M such that

since the proofs for the inequality F̂+(t) ≤ F̄0+(t – Mv(t)) and the interval (−∞,0] are analogous. In turn, it is sufficient to show that there is an m1 > 0 such that

| (13) |

where pjM satisfies as M → ∞. We prove (13) for

| (14) |

where d1 and d2 are positive constants. Using the monotonicity of F̂+, we only need to show that P(AjM) < pjM for all j ∈ ℕ and M > m1, where

| (15) |

We now fix M > 0 and j ∈ ℕ, and define τkj = max{𝒩̂k ∩ (−∞,j + 1]}, for k = 1,…,K. These points are well defined by Theorem 1.7 (iii) and Corollary 2.2 (i). Without loss of generality, we assume that the sub-distribution functions are labeled so that τ1j ≤ ⋯ ≤ τKj. On the event AjM, there is a k ∈ {1,…,K} such that F̂k(j + 1) ≥ F̄0k(sjM). Hence, we can define ℓ ∈ {1,…,K} such that

| (16) |

| (17) |

Recall that F̂ must satisfy (11). Hence, P(AjM) equals

| (18) |

| (19) |

Using the definition of τℓj and the fact that F̂ℓ is monotone nondecreasing and piecewise constant (Corollary 2.2), it follows that on the event AjM we have, F̂ℓ(u) ≥ F̂ℓ(τℓj) = F̂ℓ(j + 1) ≥ F̄0ℓ(sjM), for u ≥ τℓj. Hence, we can bound (18) above by

For m1 sufficiently large, this probability is bounded above by pjM/2 for all M > m1 and j ∈ ℕ, by Lemma 2.11 below. Similarly, (19) is bounded by pjM/2, using Lemma 2.12 below.

Lemmas 2.11 and 2.12 are the key lemmas in the proof of Proposition 2.10. They are the limit versions of Lemmas 4.13 and 4.14 of [8], and their proofs are given in Section 5. The basic idea of Lemma 2.11 is that the positive quadratic drift b(sjM – w)^2 dominates the Brownian motion process Sk and the term C(sjM – w)^{3/2}. Note that the lemma also holds when C(sjM – w)^{3/2} is omitted, since this term is positive for M > 1. In fact, in the proof of Proposition 2.10 we only use the lemma without this term, but we need the term C(sjM – w)^{3/2} in the proof of Proposition 2.9 ahead. The proof of Lemma 2.12 relies on the system of component processes. Since it is very similar to the proof of Lemma 4.14 of [8], we only point out the differences in Section 5.

Lemma 2.11

Let C > 0 and b > 0. Then there exists an m1 > 0 such that for all k = 1,…,K, M > m1 and j ∈ ℕ,

where sjM = j + Mv(j), and Sk(·), v(·) and pjM are defined by (10), (12) and (14), respectively.

Lemma 2.12

Let ℓ be defined by (16) and (17). There is an m1 > 0 such that

where sjM = j + Mv(j), τℓj = max{𝒩̂ℓ ∩ (−∞,j + 1]} and v(·), pjM and AjM are defined by (12), (14) and (15), respectively.

In order to prove tightness of {F̂k(t) – F̄0k(t)}, t ∈ ℝ, we only need Proposition 2.10 to hold for one value of β ∈ (0,1), analogously to [8, Remark 4.12]. We therefore fix β = 1/2, so that Then Proposition 2.10 leads to the following corollary, which is a limit version of Corollary 4.16 of [8]:

Corollary 2.13

For every ϵ > 0 there is a C > 0 such that

This corollary allows us to complete the proof of Proposition 2.9.

Proof of Proposition 2.9

Let ϵ > 0 and let k ∈ {1,…,K}. It is sufficient to show that there is an M > 0 such that P(F̂k(t) ≥ F̄0k(t + M)) < ϵ and P(F̂k(t) ≤ F̄0k(t – M)) < ϵ for all t ∈ ℝ. We only prove the first inequality, since the proof of the second one is analogous. Thus, let t ∈ ℝ and M > 1, and define

Note that τk is well-defined because of Theorem 1.7 (iii) and Corollary 2.2 (i). We want to prove that P(BkM) < ϵ. Recall that F̂ must satisfy (11). Hence,

| (20) |

By Corollary 2.13, we can choose C > 0 such that, with high probability,

| (21) |

uniformly in τk ≤ t, using that u^{3/2} > u for u > 1. Moreover, on the event BkM, we have yielding a positive quadratic drift. The statement now follows by combining these facts with (20), and applying Lemma 2.11.

Proposition 2.9 leads to the following corollary about the distance between the jump points of F̂k. The proof is analogous to the proof of Corollary 4.19 of [8], and is therefore omitted.

Corollary 2.14

For all k = 1,…,K, let τ̂k−(s) and τ̂k+(s) be, respectively, the largest jump point ≤ s and the smallest jump point > s of F̂k. Then for every ϵ > 0 there is a C > 0 such that P(τ̂k+(s) – τ̂k−(s) > C) < ϵ for k = 1,…,K, s ∈ ℝ.

Combining Proposition 2.9 and Corollary 2.14 yields tightness of {Ĥk(t) – Vk(t)}:

Corollary 2.15

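For every ϵ > 0 there is an M > 0 such that

$$ P\big(|\hat{H}_k(t) - V_k(t)| > M\big) < \epsilon, \qquad k = 1,\dots,K, \quad t \in \mathbb{R}. $$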

2.3. Uniqueness of Ĥ and F̂

We now use the tightness results of Section 2.2 to prove the uniqueness part of Theorem 1.7, as given in Proposition 2.16. The existence part of Theorem 1.7 will follow in Section 3.

Proposition 2.16

Let Ĥ and H satisfy the conditions of Theorem 1.7. Then Ĥ ≡ H almost surely.

The proof of Proposition 2.16 relies on the following lemma:

Lemma 2.17

Let Ĥ = (Ĥ1,…,ĤK) and H = (H1,…,HK) satisfy the conditions of Theorem 1.7, and let F̂ = (F̂1,…,F̂K) and F = (F1,…,FK) be the corresponding derivatives. Then

| (22) |

where ψk : ℝ → ℝ is defined by

| (23) |

Proof. We define the following functional:

Then, letting

| (24) |

| (25) |

and using we have

| (26) |

Using integration by parts, we rewrite the last term of the right side of (26) as:

| (27) |

The inequality on the last line follows from: (a) by Theorem 1.7 (ii), and (b) since D̂k(t) ≤ 0 by Theorem 1.7 (i) and Fk is monotone nondecreasing. Combining (26) and (27), and using the same expressions with F and F̂ interchanged, yields

By writing out the right side of this expression, we find that it is equivalent to

| (28) |

This inequality holds for all m ∈ ℕ, and hence we can take lim inf_{m→∞}. The left side of (28) is a monotone sequence in m, so that we can replace lim inf_{m→∞} by lim_{m→∞}. The result then follows from the definitions of ψk, Dk, and D̂k in (23) – (25).

We are now ready to prove Proposition 2.16. The idea of the proof is to show that the right side of (22) is almost surely equal to zero. We prove this in two steps. First, we show that it is of order Op(1), using the tightness results of Proposition 2.9 and Corollary 2.15. Next, we show that the right side is almost surely equal to zero.

Proof of Proposition 2.16

We first show that the right side of (22) is of order Op(1). Let k ∈ {1,…,K}, and note that Proposition 2.9 yields that {Fk(m) – F̄0k(m)} and {F̂k(m) – F̄0k(m)} are of order Op(1), so that also {Fk(m) – F̂k(m)} = Op(1). Similarly, Corollary 2.15 implies that {Hk(m) – Ĥk(m)} = Op(1). Using the same argument for −m, this proves that the right side of (22) is of order Op(1).

We now show that the right side of (22) is almost surely equal to zero. Let k ∈ {1,…,K}. We only consider |Fk(m) – F̂k(m)| |Hk(m) – Ĥk(m)|, since the term |Fk(m) – F̂k(m)| |H+(m) – Ĥ+(m)| and the point –m can be treated analogously. It is sufficient to show that

| (29) |

Let τmk be the last jump point of Fk before m, and let τ̂mk be the last jump point of F̂k before m. We define the following events

Let ϵ1 > 0 and ϵ2 > 0. Since the right side of (22) is of order Op(1), it follows that ∫{Fk(t) – F̂k(t)}2dt = Op(1) for every k ∈ {1,…,K}. This implies that as m → ∞ . Together with the fact that m – {τmk ∨ τ̂mk} = Op(1) (Corollary 2.14), this implies that there is an m1 > 0 such that P(E1m(ϵ1)c) < ϵ1 for all m > m1. Next, recall that the points of jump of Fk and F̂k are contained in the set 𝒩k, defined in Proposition 2.1. Letting τ′mk = max{𝒩k ∩ (–∞, m]}, we have

| (30) |

The distribution of m – τ′mk is independent of m, non-degenerate and continuous [see 4]. Hence, we can choose δ > 0 such that the probabilities in (30) are bounded by ϵ2/2 for all m. Furthermore, by tightness of {Hk(m) – Ĥk(m)}, there is a C > 0 such that P(E3m(C)c) < ϵ2/2 for all m. This implies that P(Em(ϵ1,δ,C)c) < ϵ1 + ϵ2 for m > m1.

Returning to (29), we now have for η > 0:

using the definition of E3m(C) in the last line. The probability in the last line equals zero for ϵ1 small. To see this, note that Fk(m) – F̂k(m) > η/C, m – {τmk ∨ τ̂mk} > δ, and the fact that Fk and F̂k are piecewise constant on [τmk ∨ τ̂mk, m] imply that

$$ \int_{\tau_{mk} \vee \hat{\tau}_{mk}}^{m} \{F_k(t) - \hat{F}_k(t)\}^2 \, dt > \frac{\eta^2 \delta}{C^2}, $$

so that E1m(ϵ1) cannot hold for ϵ1 < η^2δ/C^2.

This proves that the right side of (22) equals zero, almost surely. Together with the right-continuity of Fk and F̂k, this implies that Fk ≡ F̂k almost surely, for k = 1,…,K. Since Fk and F̂k are the right derivatives of Hk and Ĥk, this yields that Hk = Ĥk + ck almost surely. Finally, both Hk and Ĥk satisfy conditions (i) and (ii) of Theorem 1.7 for k = 1,…,K, so that c1 = ⋯ = cK = 0 and H ≡ Ĥ almost surely.

3. Proof of the limiting distribution of the MLE

In this section we prove that the MLE converges to the limiting distribution given in Theorem 1.8. In the process, we also prove the existence part of Theorem 1.7.

First, we recall from [8, Section 2.2] that the naive estimators F̃nk, k = 1,…,K, are unique at t ∈ {T1,…,Tn}, and that the MLEs F̂nk, k = 1,…,K, are unique at t ∈ 𝒯K, where for k = 1,…,K [see 8, Proposition 2.3]. To avoid issues with non-uniqueness, we adopt the convention that F̃nk and F̂nk, k = 1,…,K, are piecewise constant and right-continuous, with jumps only at the points at which they are uniquely defined. This convention does not affect the asymptotic properties of the estimators under the assumptions of Section 1.2. Recalling the definitions of G and Gn given in Section 1.1, we now define the following localized processes:

Definition 3.1

For each k = 1,…,K, we define:

| (31) |

| (32) |

| (33) |

| (34) |

where cnk is the difference between and at the last jump point τnk of before zero, i.e.,

| (35) |

Moreover, we define the vectors and

Note that differs from by a vertical shift, and that We now show that the MLE satisfies the characterization given in Proposition 3.2, which can be viewed as a recentered and rescaled version of the characterization in Proposition 4.8 of [8]. In the proof of Theorem 1.8 we will see that, as n → ∞, this characterization converges to the characterization of the limiting process given in Theorem 1.7.

Proposition 3.2

Let the assumptions of Section 1.2 hold, and let m > 0. Then

where uniformly in t ∈ [–m, m].

Proof. Let m > 0 and let τnk be the last jump point of F̂nk before t0. It follows from the characterization of the MLE in Proposition 4.8 of [8] that

| (36) |

where equality holds if s is a jump point of F̂nk. Using that t0 – τnk = Op(n^{−1/3}) by [8, Corollary 4.19], it follows from [8, Corollary 4.20] that Rnk(τnk, s) = Op(n^{−2/3}), uniformly in s ∈ [t0 – m1n^{−1/3}, t0 + m1n^{−1/3}]. We now add

to both sides of (36). This gives

| (37) |

where equality holds if s is a jump point of F̂nk, and where

Note that ρnk(τnk, s) = op(n−2/3), uniformly in s ∈ [t0 – m1n−1/3,t0 + m1n−1/3], using (29) in [8, Lemma 4.9] and t0 – τnk = Op(n−1/3) by [8, Corollary 4.19]. Hence, the remainder term R′nk in (37) is of the same order as Rnk. Next, consider (37), and write let s = t0 + n−1/3t, and multiply by n2/3/g(t0). This yields

| (38) |

where equality holds if t is a jump point of and where

| (39) |

Note that uniformly in t ∈ [−m1, m1], using again that t0 – τnk = Op(n−1/3). Moreover, note that is left-continuous. We now remove the random variables cnk by solving the following system of equations for H1,…,HK:

The unique solution is

Definition 3.3

We define where with defined by (39), and where and are given in Definition 3.1. We use the notation ·|[−m,m] to denote that processes are restricted to [−m,m]. We now define a space for Ûn|[−m,m]:

Definition 3.4

For any interval I, let D−(I) be the collection of ‘caglad’ functions on I (left-continuous with right limits), and let C(I) denote the collection of continuous functions on I. For m ∈ ℕ, we define the space

endowed with the product topology induced by the uniform topology on I × II × III, and the Skorohod topology on IV.

Proof of Theorem 1.8

Analogously to the work of [6, Proof of Theorem 6.2] on the estimation of convex densities, we first show that Ûn|[−m,m] is tight in E[−m,m] for each m ∈ ℕ. Since by Proposition 3.2, it follows that is tight in (D−[−m,m])K endowed with the uniform topology. Next, note that the subset of D[−m,m] consisting of absolutely bounded nondecreasing functions is compact in the Skorohod topology. Hence, the local rate of convergence of the MLE [see 8, Theorem 4.17] and the monotonicity of k = 1,…,K, yield tightness of in the space (D[−m,m])K endowed with the Skorohod topology. Moreover, since the set of absolutely bounded continuous functions with absolutely bounded derivatives is compact in C[−m,m] endowed with the uniform topology, it follows that is tight in (C[−m,m])K endowed with the uniform topology. Furthermore, is tight in (D[−m,m])K endowed with the uniform topology, since uniformly on compacta. Finally, cn1,…,cnK are tight since each cnk is the difference of quantities that are tight, using that t0 – τnk = Op(n^{−1/3}) by [8, Corollary 4.19]. Hence, also is tight in (C[−m,m])K endowed with the uniform topology. Combining everything, it follows that Ûn|[−m,m] is tight in E[−m,m] for each m ∈ ℕ.

It now follows by a diagonal argument that any subsequence Ûn′ of Ûn has a further subsequence Ûn″ that converges in distribution to a limit

Using a representation theorem (see, e.g., [2], [15, Representation Theorem 13, page 71], or [17, Theorem 1.10.4, page 59]), we can assume that Ûn″ →a.s. U. Hence, F = H′ at continuity points of F, since the derivatives of a sequence of convex functions converge together with the convex functions at points where the limit has a continuous derivative. Proposition 3.2 and the continuous mapping theorem imply that the vector (V,H,F) must satisfy

for all m ∈ ℕ, where we replaced Vk(t–) by Vk(t), since V1,…,VK are continuous.

Letting m → ∞, it follows that H1,…,HK satisfy conditions (i) and (ii) of Theorem 1.7. Furthermore, Theorem 1.7 (iii) is satisfied since t0 – τnk = Op(n^{−1/3}) by [8, Corollary 4.19]. Hence, there exists a K-tuple of processes (H1,…,HK) that satisfies the conditions of Theorem 1.7. This proves the existence part of Theorem 1.7. Moreover, Proposition 2.16 implies that there is only one such K-tuple. Thus, each subsequence converges to the same limit H = (H1,…,HK) = (Ĥ1,…,ĤK) defined in Theorem 1.8. In particular, this implies that in the Skorohod topology on (D(ℝ))K.

4. Simulations

We simulated 1000 data sets of sizes n = 250, 2500 and 25000, from the model given in Example 2.3. For each data set, we computed the MLE and the naive estimator. For computation of the naive estimator, see [1, pages 13–15] and [9, pages 40–41]. Various algorithms for the computation of the MLE are proposed by [10, 11, 12]. However, in order to handle large data sets, we use a different approach. We view the problem as a bivariate censored data problem, and use a method based on sequential quadratic programming and the support reduction algorithm of [7]. Details are discussed in [13, Chapter 5]. As convergence criterion we used satisfaction of the characterization in [8, Corollary 2.8] within a tolerance of 10^{−10}. Both estimators were assumed to be piecewise constant, as discussed in the beginning of Section 3.
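The computation of the naive estimator can be illustrated with a short sketch. It assumes that the naive estimator for cause k is the isotonic (nondecreasing) least-squares regression of the cause-k failure indicators on the ordered observation times, computed by the pool-adjacent-violators algorithm, and that the observation times are distinct. The function names `pava` and `naive_estimator` are hypothetical and are not taken from the cited references.

```python
import numpy as np

def pava(y, w=None):
    """Weighted isotonic (nondecreasing) regression by pool-adjacent-violators."""
    y = np.asarray(y, dtype=float)
    w = np.ones_like(y) if w is None else np.asarray(w, dtype=float)
    blocks = []  # each block: [weighted mean, total weight, number of points]
    for yi, wi in zip(y, w):
        blocks.append([yi, wi, 1])
        # Pool the last two blocks while they violate monotonicity.
        while len(blocks) > 1 and blocks[-2][0] > blocks[-1][0]:
            m2, w2, n2 = blocks.pop()
            m1, w1, n1 = blocks.pop()
            wt = w1 + w2
            blocks.append([(w1 * m1 + w2 * m2) / wt, wt, n1 + n2])
    return np.concatenate([np.full(n, m) for m, _, n in blocks])

def naive_estimator(t, delta):
    """
    t     : (n,) observation times, assumed distinct.
    delta : (n, K) indicators; delta[i, k] = 1 if failure from cause k+1
            was observed at time t[i], and 0 otherwise.
    Returns the sorted times and an (n, K) array of fitted values there.
    """
    t = np.asarray(t, dtype=float)
    delta = np.asarray(delta, dtype=float)
    order = np.argsort(t, kind="stable")
    fitted = np.column_stack(
        [pava(delta[order, k]) for k in range(delta.shape[1])]
    )
    return t[order], fitted

# Small illustration with K = 2 causes and n = 4 observations.
times, fit = naive_estimator([3, 1, 2, 4], [[1, 0], [0, 0], [0, 1], [0, 1]])
```

Because each component is fitted separately, nothing in this sketch prevents the fitted components from summing to more than one at large times; in the small illustration the sum at the last observation time is 1.5.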

It was suggested by [12] that the naive estimator can be improved by suitably modifying it when the sum of its components exceeds one. In order to investigate this idea, we define a ‘scaled naive estimator’ by

for k = 1,…,K, where we take s0 = 3. Note that for t ≤ 3. We also defined a ‘truncated naive estimator’ If F̃n+(T(n)) ≤ 1, then for all k = 1,…,K. Otherwise, we let sn = min{t : F̃n+(t) > 1} and define

for k = 1,…,K. Note that for all t ∈ ℝ.
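As a rough illustration of the scaling idea, the sketch below assumes that every component of the naive estimator is divided by the total F̃n+(s0) whenever that total exceeds one, and is left unchanged otherwise. This form is an assumption made for illustration, and the function name `scale_naive` is hypothetical.

```python
import numpy as np

def scale_naive(fitted, total_at_s0):
    """
    fitted      : (n, K) values of the naive estimator components.
    total_at_s0 : value of the sum of the components at the point s0.
    Assumed rule: divide by total_at_s0 only when it exceeds one.
    """
    fitted = np.asarray(fitted, dtype=float)
    return fitted / max(1.0, float(total_at_s0))

# Components summing to 1.5 at s0 are scaled so that they sum to 1 there.
scaled = scale_naive([[0.25, 0.5], [0.5, 1.0]], total_at_s0=1.5)
```

Under this rule the whole curve is multiplied by a constant smaller than one whenever the constraint is violated at s0, which also shrinks the estimator at small values of t.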

We computed the mean squared error (MSE) of all estimators on a grid with points 0, 0.01, 0.02,…,3.0. Subsequently, we computed relative MSEs by dividing the MSE of the MLE by the MSE of each estimator. The results are shown in Figure 3. Note that the MLE tends to have the best MSE, for all sample sizes and for all values of t. Only for sample size 250 and small values of t, the scaled naive estimator outperforms the other estimators; this anomaly is caused by the fact that this estimator is scaled down so much that it has a very small variance. The difference between the MLE and the naive estimators is most pronounced for large values of t. This was also observed by [12], and they explained this by noting that only the MLE is guaranteed to satisfy the constraint F+(t) ≤ 1 at large values of t. We believe that this constraint is indeed important for small sample sizes, but the theory developed in this paper indicates that it does not play any role asymptotically. Asymptotically, the difference can be explained by the extra term (aK+1/ak){V+ – Ĥ+} in the limiting process of the MLE (see Proposition 2.4), since the factor aK+1/ak = F0k(t)/F0,K+1(t) is increasing in t.
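The MSE comparison described above can be sketched as follows. The array layout (one row per simulated data set, one column per grid point) and the function names `mse_on_grid` and `relative_mse` are assumptions made for illustration; only the grid and the direction of the ratio come from the text.

```python
import numpy as np

# Grid 0, 0.01, 0.02, ..., 3.0 used for evaluating the estimators.
grid = np.linspace(0.0, 3.0, 301)

def mse_on_grid(estimates, truth):
    """
    estimates : (n_rep, n_grid) estimator values, one row per simulated data set.
    truth     : (n_grid,) true sub-distribution function on the grid.
    Returns the pointwise mean squared error over the replications.
    """
    estimates = np.asarray(estimates, dtype=float)
    truth = np.asarray(truth, dtype=float)
    return np.mean((estimates - truth) ** 2, axis=0)

def relative_mse(mse_mle, mse_other):
    """MSE of the MLE divided by the MSE of another estimator.

    Values below one favor the MLE. Grid points where the other estimator
    has zero MSE yield inf or nan rather than raising a warning.
    """
    mse_mle = np.asarray(mse_mle, dtype=float)
    mse_other = np.asarray(mse_other, dtype=float)
    with np.errstate(divide="ignore", invalid="ignore"):
        return mse_mle / mse_other
```

With this convention a relative MSE curve that lies below one over the whole grid indicates that the MLE has the smaller MSE at every grid point.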

Among the naive estimators, the truncated naive estimator behaves better than the naive estimator for sample sizes 250 and 2500, especially for large values of t. However, for sample size 25000 we can barely distinguish the three naive estimators. The latter can be explained by the fact that all versions of the naive estimator are asymptotically equivalent for t ∈ [0, 3], since consistency of the naive estimator ensures that limn→∞ F̃n+(3) ≤ 1 almost surely. On the other hand, the three naive estimators are clearly less efficient than the MLE for sample size 25000. These results support our theoretical finding that the form of the likelihood (and not the constraint F+ ≤ 1) causes the different asymptotic behavior of the MLE and the naive estimator.

Finally, we note that our simulations consider estimation of F0k(t), for t on a grid. Alternatively, one can consider estimation of certain smooth functionals of F0k. The naive estimator was suggested to be asymptotically efficient for this purpose [12], and [14, Chapter 7] proved that the same is true for the MLE. A simulation study that compares the estimators in this setting is presented in [14, Chapter 8.2].

5. Technical proofs

Proof of Lemma 2.11

Let k ∈ {1,…,K} and j ∈ ℕ = {0,1,…}. Note that for M large, we have for all w ≤ j + 1:

Hence, the probability in the statement of Lemma 2.11 is bounded above by

In turn, this probability is bounded above by

(40)

where λkjq = b(sjM – (j – q + 1))^2/2 = b(Mu(j) + q – 1)^2/2.

We write the qth term in (40) as

where bk is the standard deviation of Sk(1) and and Bk(·) is standard Brownian motion. Here we used standard properties of Brownian motion. The second to last inequality is given in, for example, [16, equation 6, page 33], and the last inequality follows from Mills’ ratio [see 3, Equation (10)]. Note that bkjq ≤ d for all j ∈ ℕ, for some d > 0 and all M > 3. It follows that (40) is bounded above by

which in turn is bounded above by d1 exp(−d2(Mu(j))^3), for some constants d1 and d2, using (a + b)^3 ≥ a^3 + b^3 for a, b ≥ 0.
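The two elementary inequalities used in this proof can be written out as follows. The Mills' ratio bound is stated in its standard form for the standard normal distribution function Φ and density φ. The final display assumes that the cubic inequality is applied with a = Mu(j) and b = q − 1, which is one natural reading of the argument rather than a restatement of it; c denotes a generic positive constant.

```latex
% Mills' ratio upper bound: for x > 0,
\[
  1 - \Phi(x) \;\le\; \frac{\varphi(x)}{x},
  \qquad \varphi(x) = \frac{1}{\sqrt{2\pi}}\, e^{-x^2/2}.
\]
% Superadditivity of the cube on [0, \infty): for a, b \ge 0,
\[
  (a+b)^3 = a^3 + 3a^2 b + 3ab^2 + b^3 \;\ge\; a^3 + b^3 .
\]
% Consequence for an exponential tail (assumed a = M u(j), b = q - 1, c > 0):
\[
  \sum_{q \ge 1} \exp\bigl\{-c\,(M u(j) + q - 1)^3\bigr\}
  \;\le\; \exp\bigl\{-c\,(M u(j))^3\bigr\} \sum_{q \ge 1} \exp\bigl\{-c\,(q-1)^3\bigr\},
\]
% and the remaining sum over q is finite, so it can be absorbed into a constant.
```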

Proof of Lemma 2.12

This proof is completely analogous to the proof of Lemma 4.14 of [8], upon replacing F̂nk(u) by F̂k(u), F0k(u) by F̄0k(u), dG(u) by du, Snk(·) by Sk(·), τnkj by τkj, snjM by sjM, and AnjM by AjM. The only difference is that the second term on the right side of [8, equation (69)] vanishes, since this term comes from the remainder term Rnk(s,t), and we do not have such a remainder term in the limiting characterization given in Proposition 3.2.

Footnotes

Supported in part by NSF grant DMS-0203320

Supported in part by NSF grants DMS-0203320 and DMS-0503822 and by NIAID grant 2R01 AI291968-04

AMS 2000 subject classifications: Primary 62N01, 62G20; secondary 62G05

Contributor Information

Piet Groeneboom, Department of Mathematics, Delft University of Technology, Mekelweg 4, 2628 CD Delft, The Netherlands, e-mail: p.groeneboom@its.tudelft.nl.

Marloes H. Maathuis, Department of Statistics, University of Washington, Box 354322, Seattle, WA 98195, USA, e-mail: marloes@stat.washington.edu.

Jon A. Wellner, Department of Statistics, University of Washington, Box 354322, Seattle, WA 98195, USA, e-mail: jaw@stat.washington.edu.

REFERENCES

- 1. Barlow RE, Bartholomew DJ, Bremner JM, Brunk HD. Statistical Inference Under Order Restrictions. The Theory and Application of Isotonic Regression. New York: John Wiley & Sons; 1972.

- 2. Dudley RM. Distances of probability measures and random variables. Ann. Math. Statist. 1968;39:1563–1572.

- 3. Gordon RD. Values of Mills’ ratio of area to bounding ordinate and of the normal probability integral for large values of the argument. Ann. Math. Statist. 1941;12:364–366.

- 4. Groeneboom P. Brownian motion with a parabolic drift and Airy functions. Probability Theory and Related Fields. 1989;81:79–109.

- 5. Groeneboom P, Jongbloed G, Wellner JA. A canonical process for estimation of convex functions: The “invelope” of integrated Brownian motion + t^4. Ann. Statist. 2001;29:1620–1652.

- 6. Groeneboom P, Jongbloed G, Wellner JA. Estimation of a convex function: Characterizations and asymptotic theory. Ann. Statist. 2001;29:1653–1698.

- 7. Groeneboom P, Jongbloed G, Wellner JA. Technical Report 2002–13. The Netherlands: Vrije Universiteit Amsterdam; 2002. The support reduction algorithm for computing nonparametric function estimates in mixture models. Available at arXiv:math/ST/0405511.

- 8. Groeneboom P, Maathuis MH, Wellner JA. Current status data with competing risks: consistency and rates of convergence of the MLE. Ann. Statist. 2007; accepted. doi: 10.1214/009053607000000983.

- 9. Groeneboom P, Wellner JA. Information Bounds and Nonparametric Maximum Likelihood Estimation. Basel: Birkhäuser Verlag; 1992.

- 10. Hudgens MG, Satten GA, Longini IM. Nonparametric maximum likelihood estimation for competing risks survival data subject to interval censoring and truncation. Biometrics. 2001;57:74–80. doi: 10.1111/j.0006-341x.2001.00074.x.

- 11. Jewell NP, Kalbfleisch JD. Maximum likelihood estimation of ordered multinomial parameters. Biostatistics. 2004;5:291–306. doi: 10.1093/biostatistics/5.2.291.

- 12. Jewell NP, Van der Laan MJ, Henneman T. Nonparametric estimation from current status data with competing risks. Biometrika. 2003;90:183–197.

- 13. Maathuis MH. Master's thesis. The Netherlands: Delft University of Technology; 2003. Nonparametric Maximum Likelihood Estimation for Bivariate Censored Data. Available at http://www.stat.washington.edu/marloes/papers.

- 14. Maathuis MH. Ph.D. thesis. University of Washington; 2006. Nonparametric Estimation for Current Status Data with Competing Risks. Available at http://www.stat.washington.edu/marloes/papers.

- 15. Pollard D. Convergence of Stochastic Processes. New York: Springer-Verlag; 1984. Available at http://ameliabedelia.library.yale.edu/dbases/pollard1984.pdf.

- 16. Shorack GR, Wellner JA. Empirical Processes with Applications to Statistics. New York: John Wiley & Sons; 1986.

- 17. Van der Vaart AW, Wellner JA. Weak Convergence and Empirical Processes: With Applications to Statistics. New York: Springer-Verlag; 1996.