Abstract

Background

Self-reported screening behaviors from national surveys often over-estimate screening utilization, and the amount of overestimation may vary by demographic characteristics. We examine self-report bias in mammography screening rates overall, by age, and by race/ethnicity.

Methods

We use mammography registry data (1999–2000) from the Breast Cancer Surveillance Consortium (BCSC) to estimate the validity of self-reported mammography screening collected by two national surveys. First we compare mammography use from 1999–2000 for a geographically-defined population (Vermont) with self-reported rates in the prior two years from the 2000 Vermont Behavioral Risk Factor Surveillance System (BRFSS). We then use a screening dissemination simulation model to assess estimates of mammography screening from the 2000 National Health Interview Survey (NHIS).

Results

Self-report estimates of mammography use in the prior two years from the Vermont BRFSS are 14–27 percentage points higher than actual screening rates across age groups. The differences between NHIS self-reported estimates and modeled screening rates are similar for women aged 40–49 and 50–59 years (27 and 26 percentage points, respectively) and greater than those for women aged 60–69 and 70–79 years (14 percentage points each). Over-reporting is highest among African American women (24.4 percentage points) and lowest among Hispanic women (17.9), with non-Hispanic white women in between (19.3). Values of sensitivity and specificity consistent with our results are similar to those in previous validation studies of mammography.

Conclusion

Over-estimation of self-reported mammography usage from national surveys varies by age and race/ethnicity. A more nuanced approach that accounts for demographic differences is needed when adjusting for over-estimation or assessing disparities between populations.

Keywords: breast neoplasms, data collection, mammography, recall, reproducibility of results

Introduction

National estimates of the use of cancer screening procedures are based primarily on self-reported results from the National Health Interview Survey (NHIS)1 and the Behavioral Risk Factor Surveillance System (BRFSS)2. Self-reported health behavior, such as prior screening mammography use, is well known to be subject to biases such as social response bias and recall bias (1) (2). However, quantifying the effect of over-reporting on population estimates of screening behavior is difficult.

Studies evaluating the validity of self-reported mammography screening have shown varying amounts of over-reporting among samples or defined populations that have typically been limited in size. Validation of self-reported mammography use within the past 2 years against medical records from managed care populations has demonstrated reasonably high agreement (~70%–88%), with high sensitivity (81%–99%) but lower specificity (40%–63%) (3–8). Studies focused on minority, low-income, or diverse populations have shown a wider range of agreement and variation between racial and ethnic groups for mammography use (9–15). These results, along with a recent meta-analysis (2), suggest that national survey data overestimate mammography screening usage and do not capture important racial/ethnic differences in utilization. An unbiased method for evaluating mammography utilization at the population level is needed that will provide more accurate estimates of disparities among racial/ethnic populations.

This study examines the amount of self-report bias in mammography screening rates by age, race, and ethnicity, utilizing population-based longitudinal data on mammography usage collected by the National Cancer Institute’s (NCI) Breast Cancer Surveillance Consortium (BCSC) (16). More specifically, we examine the validity of survey responses for large, geographically defined populations. First, we consider the state of Vermont. The percent of women living in Vermont who received a mammogram in 1999 or 2000, as recorded in the Vermont Breast Cancer Surveillance System (VBCSS) or in the neighboring areas covered by the New Hampshire Mammography Network, is compared with the percent of women in the 2000 Vermont BRFSS who self-reported that they were screened in the previous 2 years. Including mammograms for women who live in Vermont but received a mammogram in New Hampshire allows for a more complete accounting of mammograms received. These data allow us to directly compare self-reported screening rates with actual mammograms recorded in the BCSC database. This analysis updates a similar comparison performed by the Vermont Program for Quality in Health Care for the years 1994–1996, which found a 17 percentage point difference between BRFSS self-reported rates of recent mammography use and an estimate from the Vermont Mammography Registry (a component of the VBCSS) for women 52–64 years of age.3

Since we are interested in understanding national screening rates and Vermont is not representative of the U.S. as a whole, we perform a second analysis based on data from six different geographically defined locations in the BCSC representing approximately 5% of the U.S. population. We use a screening dissemination model to generate simulated results for the U.S. population and compare these results with national cross-sectional estimates from the 2000 NHIS of the percent of women who received a mammogram in the previous two years. We update a model for mammography dissemination and usage in the U.S. population (17), which is based on longitudinal data from the BCSC, to include additional years of BCSC data and to model screening utilization by race and ethnicity. The differences between modeled and self-reported screening rates are put into the context of previously reported validation studies by comparison with reported values of sensitivity (percent of women who did have a mammogram who accurately report having a mammogram in the previous two years) and specificity (percent of women who did not have a mammogram who accurately report not having a mammogram in the previous two years).

Materials and Methods

Study Population and Data

The BCSC is an NCI-supported research initiative that collects population-based longitudinal data on mammography usage and performance in clinical practice through mammography registries that are linked to cancer outcomes4 (16). Each registry and the BCSC statistical coordinating center (SCC) have received IRB approval for either active or passive consenting processes or a waiver of consent to enroll participants, link data, and perform analytic studies. All procedures are Health Insurance Portability and Accountability Act (HIPAA) compliant, and all registries and the SCC have received a Federal Certificate of Confidentiality and other protection for the identities of women, physicians, and facilities who are subjects of this research. The BCSC has collected data on screening mammograms since 1994 in seven geographically defined research sites, and represents approximately 5% of the U.S. population. Data from these sites are transformed to a standard data format and sent to the SCC, which pools the data for analysis. The research data collection sites used here are the Vermont Breast Cancer Surveillance System (VBCSS), New Hampshire Mammography Network (NHMN), Colorado Mammography Project (CMAP), Carolina Mammography Registry (CMR), New Mexico Mammography Project (NMMP), and San Francisco Mammography Registry (SFMR). Group Health Center for Health Studies in Washington, one of the 7 BCSC sites, was not included in this analysis because it consists of an HMO population where the majority of women follow a 2-year screening interval with a formal reminder system.

The pooled data set includes all mammograms performed at participating facilities, whether or not the woman lives in the defined geographical area. It does not include records for mammograms obtained outside the geographically defined areas of the BCSC or in facilities within the defined areas that do not participate in the BCSC. Generally, research sites do not include information from all facilities where mammographic exams are given within a particular geographic area. The exception is the VBCSS, which includes all mammography facilities in the state of Vermont, allowing us to achieve complete ascertainment of mammography utilization.

Mammography exams classified as screening by the radiologist from the BCSC are used either directly or as inputs into a larger model to estimate the number of women who received a mammogram in 1999 or 2000 as a percent of the population. These estimates are compared to the percent of the population who reported having a screening mammogram and the percent who had a mammogram for any reason in the past 2 years for the 2000 calendar year.

The percent of women reporting a mammogram within the past two years and the percent of women reporting a mammogram for any reason from BRFSS and NHIS surveys are calculated using SUDAAN to account for the complex design of these surveys.

BCSC estimates of the percent of women in Vermont that received a screening mammogram in 1999 or 2000

The number of screening mammography exams recorded in the BCSC for women who live in Vermont from the years 1999 and 2000 is combined with population estimates from the 2000 census to estimate age-specific screening rates. We include mammograms that were performed at BCSC facilities in New Hampshire for women whose primary residence was in Vermont based on their home zip code. The probability of a mammogram in the past 2 years for 10-year age groups is calculated as the number of women with at least one screening mammogram in either 1999 or 2000 recorded in the BCSC divided by the census population in that age group. These estimates are compared with the year 2000 BRFSS survey results for mammography use in Vermont.
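The rate calculation described above can be sketched in a few lines. This is a minimal illustration, not the authors' code: the record layout `(woman_id, age, exam_year)`, the function names, and the toy numbers are all assumptions made for the example, and age is taken as a single value per woman at a fixed reference date so that each woman falls in exactly one age group.

```python
from collections import defaultdict

def age_group(age):
    """Map an age in years to a 10-year group label such as '40-49'.

    Returns None for ages outside 40-79, the range analyzed in the text.
    """
    if 40 <= age <= 79:
        low = (age // 10) * 10
        return f"{low}-{low + 9}"
    return None

def screening_rates(exams, census_counts, years=(1999, 2000)):
    """Proportion of women with at least one screening exam in `years`.

    exams: iterable of (woman_id, age, exam_year) tuples (hypothetical layout).
    census_counts: dict mapping age-group label -> census population.
    """
    screened = defaultdict(set)
    for woman_id, age, exam_year in exams:
        group = age_group(age)
        if exam_year in years and group is not None:
            # A set ensures each woman is counted once, however many exams she had.
            screened[group].add(woman_id)
    return {group: len(screened[group]) / population
            for group, population in census_counts.items()}

# Toy data, invented for illustration only.
exams = [("a", 45, 1999), ("a", 45, 2000), ("b", 47, 1998), ("c", 52, 2000)]
census = {"40-49": 4, "50-59": 2}
rates = screening_rates(exams, census)
print(rates)
```

Counting distinct women rather than exams matters here, because a woman screened in both 1999 and 2000 contributes two exam records but should contribute only once to the numerator.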

Modeled estimates of the percent of women in the United States who received a screening mammogram in 1999 or 2000

With the exception of Vermont, where all facilities in the state are included in the BCSC, the BCSC data set does not contain all mammography exams that occurred in a defined geographical area. Therefore, a population denominator is not available to obtain screening rates for the BCSC data set. Instead, we use a modeling approach to estimate the percent of women in the U.S. who received a mammogram in a specific time period from these data. Our approach extends a previous model (17) by allowing for different screening rates by race and ethnicity. The model consists of two separate components that together describe screening patterns for 5-year birth cohorts in the U.S. during the years 1975–2000. The first component describes the age at which a woman receives an initial screening exam, and the second estimates the interval between successive screening exams.

Simulation is used to combine the two components of the model and generate individual screening histories representative of the U.S. population over time. This simulation output is then used to estimate the percent of women in specified age and racial/ethnic groups that had a screening mammogram in the two year period 1999 and 2000. Results from the model are compared with national estimates of mammography use from the 2000 NHIS. A detailed description of the modeling is available upon request.
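To make the two-component structure concrete, the following is a toy sketch of how individual screening histories might be simulated and summarized. It is not the model of reference 17: the never-screened share, the age-at-first-exam range, and the interval distribution are placeholder assumptions invented for this example, whereas the actual model estimates these components from survey and BCSC data by birth cohort and race/ethnicity.

```python
import random

def simulate_history(rng, birth_year, end_year=2000):
    """Return the calendar years in which one simulated woman is screened."""
    # Component 1 (placeholder): age at first screening exam, or never screened.
    if rng.random() < 0.15:                      # assumed never-screened share
        return []
    year = birth_year + rng.randint(40, 60)      # assumed age at first exam
    exam_years = []
    while year <= end_year:
        exam_years.append(year)
        # Component 2 (placeholder): years until the next screening exam.
        year += rng.choice([1, 1, 2, 2, 3, 5])
    return exam_years

def percent_screened(histories, window=(1999, 2000)):
    """Percent of histories with at least one exam inside the window."""
    hits = sum(any(window[0] <= y <= window[1] for y in history)
               for history in histories)
    return 100.0 * hits / len(histories)

rng = random.Random(0)                           # fixed seed for repeatability
histories = [simulate_history(rng, birth_year=1940) for _ in range(10_000)]
estimate = percent_screened(histories)
print(f"Simulated percent screened in 1999-2000: {estimate:.1f}")
```

The design point the sketch is meant to convey is that the two-year screening percentage is not modeled directly; it emerges from counting simulated histories that contain an exam in the window.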

Calculation of Sensitivity and Specificity

Since this is not a validation study, we cannot directly estimate the sensitivity and specificity of the survey respondents. However, it is possible to identify values for sensitivity and specificity that are consistent with our findings, assuming that the modeled results represent the true percent of women who had a mammogram over a two-year period. We can then compare these values with the existing literature on the validity of self-reported mammography use. The relation between these quantities allows us to specify a value for sensitivity and calculate the corresponding value for specificity that reconciles the modeled and observed percentages.
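The equation itself did not survive in this copy of the text. A reasonable reconstruction, offered here as an assumption rather than the authors' exact formula, is the standard misclassification identity: reported = Se × true + (1 − Sp) × (1 − true), where Se and Sp are reporting sensitivity and specificity. Solving for Sp gives the sketch below; the function name and the input values are hypothetical.

```python
def implied_specificity(true_rate, reported_rate, sensitivity):
    """Specificity that reconciles a true and a self-reported screening rate.

    Assumes reported = Se * true + (1 - Sp) * (1 - true), with all rates
    expressed as proportions in [0, 1].
    """
    if not 0.0 <= true_rate < 1.0:
        raise ValueError("true_rate must be in [0, 1)")
    # Share of the whole population that reports a mammogram without having had one.
    false_positive_share = reported_rate - sensitivity * true_rate
    return 1.0 - false_positive_share / (1.0 - true_rate)

# Illustrative inputs only: 50% truly screened, 70% reporting screening,
# and an assumed sensitivity of 0.95.
sp = implied_specificity(true_rate=0.50, reported_rate=0.70, sensitivity=0.95)
print(round(sp, 3))
```

Repeating the calculation over a range of sensitivity values traces out a curve of (sensitivity, specificity) pairs that are all consistent with the same pair of modeled and self-reported percentages.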

Results

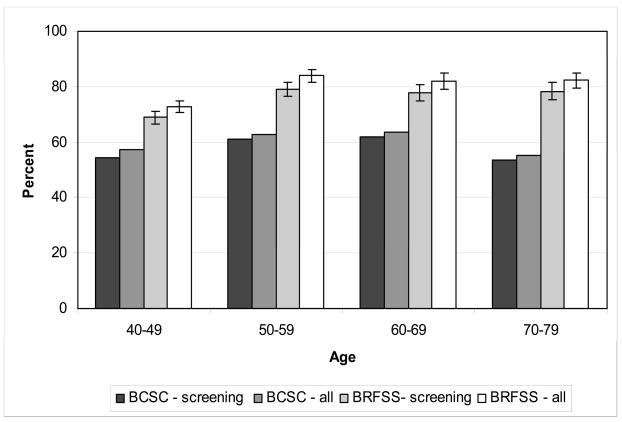

Figure 1 shows the percent of women who reported having a mammogram for any reason and specifically for screening in the past two years from the 2000 Vermont BRFSS and the percent of women who live in Vermont who received a screening mammogram in either 1999 or 2000 based on the BCSC data. When comparing self-report to BCSC data for the state of Vermont, differences of 15, 20, 17, and 27 percentage points are found for screening mammograms for ages 40–49, 50–59, 60–69, and 70–79 years, respectively. When considering mammography for any reason, the differences are similar, with percentage point differences of 16, 21, 28, and 27.

Figure 1.

The percent of women who live in Vermont who had a mammography examination for any reason and specifically for screening from the Breast Cancer Surveillance Consortium and the percent of women who reported having a mammogram for any reason or specifically for screening in the previous 2 years from the 2000 Vermont Behavioral Risk Factor Surveillance System (BRFSS). Single standard error bars are added to the BRFSS estimates.

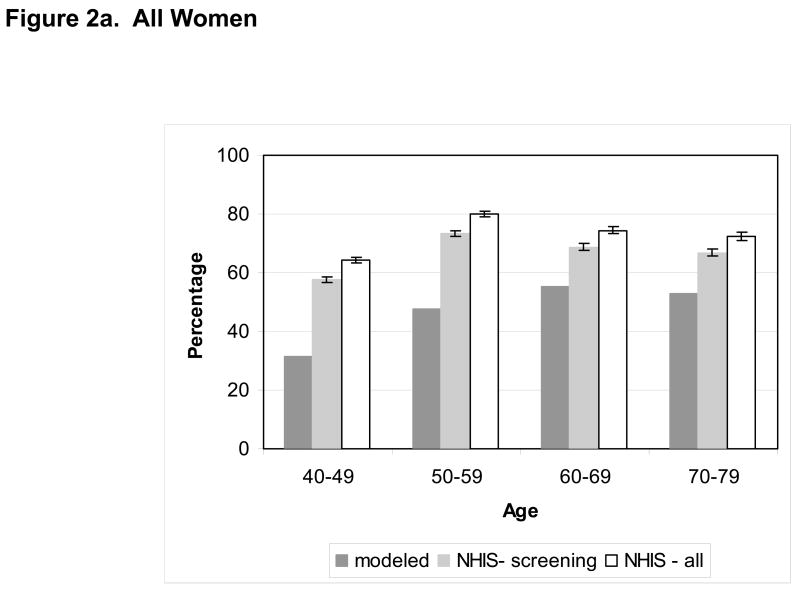

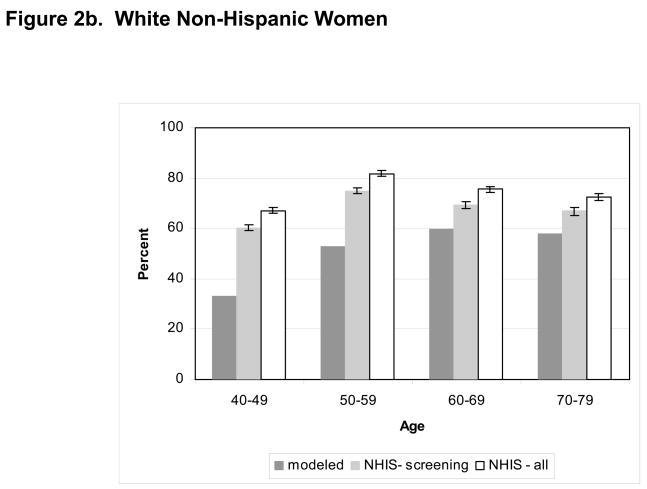

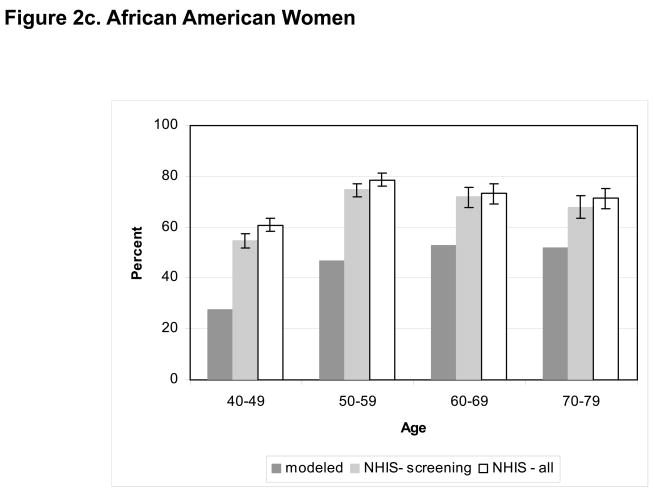

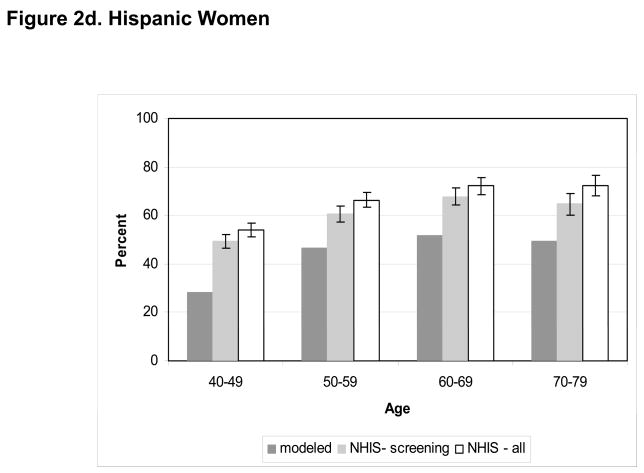

Figures 2a–2d show similar results for the nationally representative 2000 NHIS survey and the percent of women receiving a screening mammogram in either 1999 or 2000 based on the mammography dissemination and usage model. Figure 2a shows all women combined, and Figures 2b–2d give results for non-Hispanic white, African American, and Hispanic women. The difference between screening rates given by the model and those reported in the 2000 NHIS is shown in Table 1. Modeled and self-reported estimates for the percent of women who had a mammogram in the previous two years are highest and over-reporting is lowest for non-Hispanic white women. This result is consistent with the majority of misclassification being attributed to women who were not screened in the defined time period, leading to an underestimation of the disparities between groups. Self-reported screening rates were similar for African Americans and Hispanics. However, a bigger difference is found between modeled and self-reported estimates among African American women, suggesting more over-reporting among African American women. Over-reporting is highest in younger age groups, where the level of recent screening is the lowest.

Figure 2.

The percent of women predicted to have a mammogram in the years 1999 and 2000 based on the mammography dissemination and usage model and the percent of women who reported having a mammogram for any reason or specifically for screening in the previous 2 years from the 2000 National Health Interview Survey (NHIS). Single standard error bars are added to the NHIS estimates. a) All Women b) Non-Hispanic White Women c) Non-Hispanic African American Women d) Hispanic Women.

Table 1.

Difference in percentage points between NHIS 2000 national estimates of mammography screening in the previous two years and modeled rates

| | Age 40–79 years | Age 40–49 years | Age 50–59 years | Age 60–69 years | Age 70–79 years |
|---|---|---|---|---|---|
| All Women | 21.9 | 26.5 | 25.9 | 13.6 | 13.9 |
| Non-Hispanic White | 19.3 | 27.4 | 22.2 | 9.4 | 9.1 |
| African American | 24.4 | 27.3 | 28.0 | 18.8 | 16.3 |
| Hispanic | 17.9 | 21.3 | 14.3 | 16.1 | 15.6 |

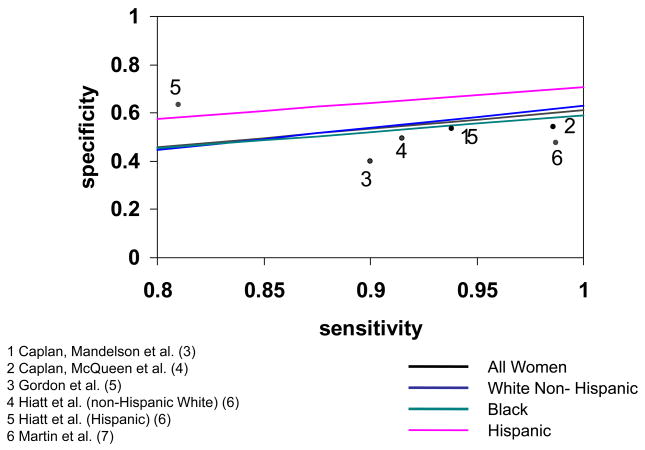

Figure 3 plots the values of sensitivity and specificity consistent with the comparison between modeled results and self-reported NHIS rates by race/ethnicity. Previously published estimates of sensitivity and specificity have been added to the graph to present our results in the context of the current literature on self-report bias related to mammography screening.

Figure 3.

Values of reporting sensitivity and specificity that are consistent with the differences between modeled screening rates in 1999 and 2000 and self-reported mammography screening rates in the previous 2 years from the 2000 National Health Interview Survey, together with previously reported estimates of sensitivity and specificity from the literature.

Discussion

Estimates of screening mammography utilization rates are typically based on self-reported information, because surveys are an efficient method of obtaining information on a large number of individuals. Medical records, such as those obtained in the BCSC, are generally considered to provide more accurate information on mammography usage than self-reported data from state and national surveys; however, studies rarely collect both medical records and self-report, so the two can seldom be compared directly. This study is unusual because it examines the validity of survey response in a geographically defined population, using the Census as the denominator, rather than focusing on a particular group such as members of an HMO. The approach presented provides an estimate of recent screening rates that is likely to be representative of the U.S. population.

The mammography model used is based largely on the BCSC data. Previous work has compared counties included in the BCSC to all counties in the U.S. to gauge how representative the BCSC is of the U.S. (18). A number of county-level variables were similar between the U.S. and counties included in the BCSC, with BCSC counties appearing to have slightly higher income and education levels.

Our results are consistent with previously reported studies. The 17–28 percentage point difference between the observed rates of screening within the prior two years in Vermont and estimates based on self-report from the Vermont BRFSS is consistent with previous work. Values for sensitivity and specificity that would explain the differences between modeled screening rates and national survey estimates are in line with previously reported studies that sought to validate survey-based estimates of the percent of women who were screened in the previous two years. Estimates of screening mammography based on BCSC data are consistently lower than those reported by NHIS or BRFSS (19).

Sensitivity of self-report of health behaviors is consistently high while specificity tends to be lower, resulting in an overestimation of the percent of the population actually adhering to recommended screening behavior. Therefore, misreporting occurs mainly in women who have not had a mammogram in the previous two years, and over-reporting is greatest in the groups that have the lowest screening rates. We found the largest difference between modeled and self-reported screening rates among younger women and African Americans, both of whom had the lowest screening rates.

Lower screening rates among African American women result in more over-reporting in African American women than in non-Hispanic white women, even with similar values of sensitivity and specificity. Systematic underestimation of disparities between these two groups is a result of this pattern of misreporting. This is consistent with previous analyses showing that a lower percent of self-reported mammogram utilization can be validated by medical records for African American women compared to white women. For example, Holt et al. (11) found self-reported mammography use to be similar between white and African American women, but found lower rates of validated mammography among African American women. McPhee et al. (13) also reported a lower validation rate for self-reported mammograms among African American women than among white women.
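A small numerical sketch shows why identical reporting accuracy still produces more over-reporting in the group with the lower true rate. It assumes the standard misclassification identity (reported = Se × true + (1 − Sp) × (1 − true)); the sensitivity, specificity, and true rates below are hypothetical values chosen for illustration and are not estimates from this study.

```python
def reported_rate(true_rate, sensitivity, specificity):
    """Expected self-reported proportion given the true proportion screened."""
    return sensitivity * true_rate + (1.0 - specificity) * (1.0 - true_rate)

def over_reporting(true_rate, sensitivity, specificity):
    """Self-reported minus true proportion (positive means over-reporting)."""
    return reported_rate(true_rate, sensitivity, specificity) - true_rate

SE, SP = 0.95, 0.60                # assumed to be the same in both groups
higher, lower = 0.60, 0.45         # hypothetical true two-year screening rates

true_gap = higher - lower
reported_gap = reported_rate(higher, SE, SP) - reported_rate(lower, SE, SP)

print(f"over-reporting at true rate {higher:.2f}: {over_reporting(higher, SE, SP):.4f}")
print(f"over-reporting at true rate {lower:.2f}: {over_reporting(lower, SE, SP):.4f}")
print(f"true gap {true_gap:.4f} vs reported gap {reported_gap:.4f}")
```

Because false positive reports can only come from unscreened women, the group with more unscreened women accumulates more of them, and the gap between groups shrinks in the self-reported data.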

Our results confirm other findings that Hispanic women may have different sensitivity and specificity values than white and African American women, resulting in less over-reporting. Hiatt et al. (6) reported lower sensitivity and higher specificity for Hispanic as compared to non-Hispanic white women, and Lawrence et al. (20) showed lower sensitivity in Mexican Americans compared to Euro-Americans. Other analyses (15) have shown lower sensitivity and specificity in Puerto Rican women as compared to African American or non-Hispanic white women. In contrast with our results, previous studies have shown a lower validation rate in Hispanic women compared to non-Hispanic white women (6;13;15;20). Hispanic women appear to have different patterns of misreporting mammography usage, and those patterns may vary within subgroups of the Hispanic population. A large percentage of Hispanic women in the BCSC come from New Mexico.

Over-reporting among women who have not received a mammogram also affects trends over time. During time periods when screening rates have increased, the true amount of improvement will be masked, since over-reporting will be decreasing at the same time that screening is increasing. For the first time since mammography rates have been ascertained, there was a reported decrease in the percent of the population reporting recent mammography use between 2000 and 2005 (21). When screening rates decrease, we would expect over-reporting to increase, leading to an underestimation of the actual decrease in screening rates observed in 2005.
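The attenuation of trends can be made explicit under a simplifying assumption that the text does not state: reporting sensitivity (Se) and specificity (Sp) are constant between two survey years. Writing p_t for the true proportion screened and r_t for the self-reported proportion in year t, and assuming the standard misclassification identity, a sketch of the argument is:

$$
\begin{aligned}
r_t &= Se\,p_t + (1 - Sp)\,(1 - p_t) = (Se + Sp - 1)\,p_t + (1 - Sp),\\
r_2 - r_1 &= (Se + Sp - 1)\,(p_2 - p_1).
\end{aligned}
$$

Whenever Se + Sp − 1 lies between 0 and 1, the self-reported change is a shrunken version of the true change, in either direction. With illustrative values Se = 0.95 and Sp = 0.60 (hypothetical, chosen only for the example), the factor is 0.55, so a true change of 10 percentage points would appear as a change of 5.5 points in self-reported rates.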

Women may over-report the use of mammography screening in survey situations for several reasons. The phenomenon of “telescoping” (i.e., remembering that an event occurred more recently than it actually did) can lead to systematic under-reporting of the time since last mammogram and over-reporting of the prevalence of women who adhere to a guideline-based screening interval, such as the past one or two years. The difference between the modeled and self-reported results may be related to the difference between being a “regular” screener and actually receiving the screening exam within the exact two-year cut-off considered. Our model, as well as previous work (22;23), shows that even regular screeners often do not achieve the recommended interval. A woman who sees herself as a regular screener may report an interval of two years even if the interval was slightly longer. Telescoping leads women to underestimate the time since their last mammogram, but it is possible that self-reported rates better represent women who come in regularly for exams even if it is not within the exact two-year time frame.

Since recommendations for annual or biennial breast cancer screening are well publicized, women may feel compelled to give a socially desirable response of having a recent screening mammogram even when untrue. Some women may lack the knowledge necessary to properly answer survey questions about prior mammography screening. Another possibility is that women who choose to answer the survey question have different screening behaviors than non-responders. NHIS 2000 had an overall response rate of 72% and the 2000 Vermont BRFSS had a response rate of 50%. Selection bias could also contribute to the differences observed.

Several data limitations were encountered when developing the mammography dissemination and usage model. The model consists of separate components for the time to first mammography examination and the time between mammography examinations. The time-to-first-exam component is based on self-report data from surveys on whether or not a woman has ever received a mammogram, which is also subject to self-report bias. However, previous work suggests that self-report is more accurate when measuring whether a woman has ever had a mammogram than when measuring whether she had a mammogram within some specified time period (10). Although both the NHIS and BRFSS surveys obtain information on the reason for the most recent mammogram, they do not contain similar information on all mammograms ever received. Therefore, we cannot directly determine whether a woman has ever had a mammogram for the purposes of screening. This may result in underestimating the age at first screening mammography, ultimately leading to an overestimation of the amount of screening in the population.

Under certain circumstances, the repeat mammography component of the model includes self-reported data. At each visit, women were asked when they had their last mammogram. If there was a discrepancy between the date of the last mammogram recorded in the BCSC data and the self-reported date of the last mammogram, the model used the minimum time estimate from the two sources when this discrepancy was greater than 6 months. Self-reported data were included to allow for the possibility that a woman received a mammogram at a facility that was not covered by the BCSC. The inclusion of self-reported data may overestimate the frequency of mammography and result in an underestimation of the bias between self-report and registry data on mammography use.
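The discrepancy rule can be sketched as follows. Several details are assumptions made for this illustration and are not given in the text: "6 months" is approximated as 183 days, the registry-based interval is used when the two sources agree within that tolerance or when no self-report is available, and the function and parameter names are hypothetical.

```python
from datetime import date

SIX_MONTHS_DAYS = 183  # assumed day count for the 6-month threshold

def interval_since_last_exam(visit, registry_last, self_report_last=None):
    """Days since the previous mammogram, as used for the repeat-exam component.

    visit: date of the current exam.
    registry_last: date of the previous exam recorded in the registry.
    self_report_last: date the woman reports for her previous exam, if any.
    """
    registry_days = (visit - registry_last).days
    if self_report_last is None:
        return registry_days
    self_report_days = (visit - self_report_last).days
    if abs(registry_days - self_report_days) > SIX_MONTHS_DAYS:
        # Large discrepancy: take the shorter interval, allowing for an exam
        # at a facility outside the registry.
        return min(registry_days, self_report_days)
    # Assumption: otherwise keep the registry-based interval.
    return registry_days

# Registry shows a 3-year gap, but the woman reports an exam 13 months ago.
days = interval_since_last_exam(
    visit=date(2000, 6, 1),
    registry_last=date(1997, 6, 1),
    self_report_last=date(1999, 5, 1),
)
print(days)
```

Taking the minimum can only shorten intervals, which is why the rule pushes modeled screening frequency upward and the estimated self-report bias downward.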

The modeling contains uncertainty on several levels. The data used to fit model parameters are subject to the limitations described above. Given the data, the parameter estimates have an associated variance. The parameter estimates are then used to simulate outcomes representing the U.S. population. We do not include confidence intervals for the modeled screening rates because it would be difficult to quantify the true variance around these rates. Although bias in the modeled estimate may contribute to the difference reported, the results from the comparison of national estimates are very consistent with the Vermont comparison, which is not subject to the potential modeling bias.

To obtain accurate information on screening behaviors, a consistent system of electronic medical records that links patients’ records from all sources of health care and then de-identifies them for purposes of research is needed. In the absence of such a system, the recommendations described in Newell et al. (1) may help maximize the accuracy of self-report. Systematic errors in self-reported screening rates result in biased estimates of disparities. Sensitivity and specificity estimates can be used to adjust self-report data to better capture differences between groups and trends over time.
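One common way to carry out such an adjustment is to invert the misclassification identity (reported = Se × true + (1 − Sp) × (1 − true)), a correction often referred to as the Rogan-Gladen estimator. The sketch below is an illustration of that general technique, not a procedure described in this paper; the function name and the input values are hypothetical.

```python
def adjusted_rate(reported_rate, sensitivity, specificity):
    """Estimate the true proportion screened from a self-reported proportion.

    Inverts reported = Se * true + (1 - Sp) * (1 - true) and clips the
    result to [0, 1], since sampling error can push it outside that range.
    """
    denominator = sensitivity + specificity - 1.0
    if denominator <= 0.0:
        raise ValueError("sensitivity + specificity must exceed 1")
    estimate = (reported_rate + specificity - 1.0) / denominator
    return min(1.0, max(0.0, estimate))

# Illustrative inputs only: 73% self-reported, assumed Se = 0.95, Sp = 0.60.
adjusted = adjusted_rate(reported_rate=0.73, sensitivity=0.95, specificity=0.60)
print(round(adjusted, 3))
```

Applying group-specific sensitivity and specificity values, where they are available, is what would allow the adjustment to recover disparities that a single overall correction would leave understated.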

Acknowledgments

Data collection for this work was supported by an NCI-funded Breast Cancer Surveillance Consortium co-operative agreement (U01CA63740, U01CA86076, U01CA86082, U01CA63736, U01CA70013, U01CA69976, U01CA63731, U01CA70040). The collection of cancer incidence data used in this study was supported in part by several state public health departments and cancer registries throughout the U.S. For a full description of these sources, please see: http://breastscreening.cancer.gov/work/acknowledgement.html. We thank the BCSC investigators, participating mammography facilities, and radiologists for the data they have provided for this study. A list of the BCSC investigators and procedures for requesting BCSC data for research purposes are provided at: http://breastscreening.cancer.gov/.

Footnotes

1. National Center for Health Statistics, “National Health Interview Survey” http://www.cdc.gov/nchs/nhis.htm accessed 4/13/2009

2. Centers for Disease Control and Prevention, “Behavioral Risk Factor Surveillance System” http://www.cdc.gov/brfss/ accessed 4/13/2009

3. Vermont Program for Quality in Health Care http://www.vpqhc.org/Archived/QualityReports/qr4/quality.pdf accessed 4/13/2009

4. National Cancer Institute, “Breast Cancer Surveillance Consortium” http://breastscreening.cancer.gov/ accessed 4/13/2009

Reference List

1. Newell SA, Girgis A, Sanson-Fisher RW, Savolainen NJ. The accuracy of self-reported health behaviors and risk factors relating to cancer and cardiovascular disease in the general population: A critical review. Am J Prev Med. 1999;17:211–29. doi: 10.1016/s0749-3797(99)00069-0.

2. Rauscher GH, Johnson TP, Cho YI, Walk JA. Accuracy of Self-Reported Cancer-Screening Histories: A Meta-analysis. Cancer Epidemiol Biomarkers Prev. 2008;17:748–57. doi: 10.1158/1055-9965.EPI-07-2629.

3. Caplan LS, Mandelson MT, Anderson LA. Validity of Self-reported Mammography: Examining Recall and Covariates among Older Women in a Health Maintenance Organization. Am J Epidemiol. 2003;157:267–72. doi: 10.1093/aje/kwf202.

4. Caplan LS, McQueen DV, Qualters JR, Leff M, Garrett C, Calonge N. Validity of Women’s Self-Reports of Cancer Screening Test Utilization in a Managed Care Population. Cancer Epidemiol Biomarkers Prev. 2003;12:1182–7.

5. Gordon NP, Hiatt RA, Lampert DI. Concordance of self-reported data and medical record audit for six cancer screening procedures. J Natl Cancer Inst. 1993;85:566–70. doi: 10.1093/jnci/85.7.566.

6. Hiatt RA, Perezstable EJ, Quesenberry C, Sabogal F, Oterosabogal R, Mcphee SJ. Agreement Between Self-Reported Early Cancer Detection Practices and Medical Audits Among Hispanic and Non-Hispanic White Health Plan Members in Northern California. Prev Med. 1995;24:278–85. doi: 10.1006/pmed.1995.1045.

7. Martin LM, Leff M, Calonge N, Garrett C, Nelson DE. Validation of self-reported chronic conditions and health services in a managed care population. Am J Prev Med. 2000;18:215–18. doi: 10.1016/s0749-3797(99)00158-0.

8. King ES, Rimer BK, Trock B, Balshem A, Engstrom P. How valid are mammography self-reports? Am J Public Health. 1990;80:1386–8. doi: 10.2105/ajph.80.11.1386.

9. Champion VL, Menon U, McQuillen DH, Scott C. Validity of self-reported mammography in low-income African-American women. Am J Prev Med. 1998;14:111–7. doi: 10.1016/s0749-3797(97)00021-4.

10. Degnan D, Harris R, Ranney J, Quade D, Earp JA, Gonzalez J. Measuring the use of mammography: two methods compared. Am J Public Health. 1992;82:1386–8. doi: 10.2105/ajph.82.10.1386.

11. Holt K, Franks P, Meldrum S, Fiscella K. Mammography self-report and mammography claims: racial, ethnic, and socioeconomic discrepancies among elderly women. Med Care. 2006;44:513–8. doi: 10.1097/01.mlr.0000215884.81143.da.

12. McGovern PG, Lurie N, Margolis KL, Slater JS. Accuracy of Self-Report of Mammography and Pap Smear in a Low-Income Urban Population. Am J Prev Med. 1998;14:201–8. doi: 10.1016/s0749-3797(97)00076-7.

13. McPhee SJ, Nguyen TT, Shema SJ, et al. Validation of Recall of Breast and Cervical Cancer Screening by Women in an Ethnically Diverse Population. Prev Med. 2002;35:463–73. doi: 10.1006/pmed.2002.1096.

14. Suarez L, Goldman DA, Weiss NS. Validity of Pap smear and mammogram self-reports in a low-income Hispanic population. Am J Prev Med. 1995;11:94–8.

15. Tumiel-Berhalter LM, Finney MF, Jaén CR. Self-report and primary care medical record documentation of mammography and Pap smear utilization among low-income women. J Natl Med Assoc. 2004;96:1632–9.

16. National Cancer Institute. Breast Cancer Surveillance Consortium: Evaluating Screening Performance in Practice. Bethesda, MD: National Cancer Institute, National Institutes of Health, U.S. Department of Health and Human Services; 2004. NIH Publication No. 04-5490.

17. Cronin KA, Yu B, Krapcho M, et al. Modeling the dissemination of mammography in the United States. Cancer Causes Control. 2005;16:701–12. doi: 10.1007/s10552-005-0693-8.

18. Sickles EA, Miglioretti DL, Ballard-Barbash R, et al. Performance Benchmarks for Diagnostic Mammography. Radiology. 2005;235:775–90. doi: 10.1148/radiol.2353040738.

19. Carney PA, Goodrich ME, MacKenzie T, et al. Utilization of screening mammography in New Hampshire. Cancer. 2005;108:1726–32. doi: 10.1002/cncr.21365.

20. Lawrence VA, De Moor C, Glenn ME. Systematic Differences in Validity of Self-Reported Mammography Behavior: A Problem for Intergroup Comparisons? Prev Med. 1999;29:577–80. doi: 10.1006/pmed.1999.0575.

21. Rakowski W, Breen N, Meissner H, et al. Prevalence and correlates of repeat mammography among women aged 55–79 in the Year 2000 National Health Interview Survey. Prev Med. 2004;39:1–10. doi: 10.1016/j.ypmed.2003.12.032.

22. Taplin SH, Mandelson MT, Anderman C, et al. Mammography diffusion and trends in late-stage breast cancer: evaluating outcomes in a population. Cancer Epidemiol Biomarkers Prev. 1997;6:625–31.

23. Clark MA, Rakowski W, Bonacore LB. Repeat Mammography: Prevalence Estimates and Considerations for Assessment. Ann Behav Med. 2003;26:201–11. doi: 10.1207/S15324796ABM2603_05.