Abstract

Humans use facial cues to convey social dominance and submission. Despite the evolutionary importance of this social ability, how the brain recognizes social dominance from the face is unknown. We used event-related brain potentials (ERPs) and functional magnetic resonance imaging (fMRI) to examine the neural mechanisms underlying social dominance perception from facial cues. Participants made gender judgments while viewing aggression-related facial expressions as well as facial postures conveying dominance or submission. ERP evidence indicates that the perception of dominance from aggression-related emotional expressions occurs early in neural processing, while the perception of social dominance from facial postures arises later. Brain imaging results show that activity in the fusiform gyrus, superior temporal gyrus and lingual gyrus is associated with the perception of social dominance from facial postures, and that the magnitude of the neural response in these regions differentiates between perceived dominance and perceived submissiveness.

Keywords: emotion, ERP, facial, fMRI, social dominance, social perception

Social dominance hierarchy is a core principle underlying social relations between social groups (Sidanius & Pratto, 1999) and between individuals within a social group (Fiske, 1992). Across a range of species within the animal kingdom from simple organisms, such as ants and bees, to more complex ones, including chickens, wolves and primates (Wilson, 1975), dominant groups and individuals within the hierarchy often have primary access to precious resources such as food, territory and mates while submissive individuals may expect protection or care from those of higher rank (Fiske, 1992). Given the importance of access to resources for survival, recognizing who is socially dominant within the group is critical to the welfare of the individual as well as to the maintenance of overall group stability and cohesion.

Prior social psychological research suggests that people infer social dominance from two kinds of facial cues: aggression-related emotional expressions and facial postures that vary in eye gaze and vertical head orientation. Facial expressions of anger, a signal of threat or potential aggression, are perceived as highly dominant, while fearful expressions are perceived as highly submissive (Hess, Blairy, & Kleck, 2000; Knutson, 1996). Aggression-related emotional displays are expressed to instigate fights that challenge an existing hierarchical order or to jockey for position during the formation of a new social hierarchy (Ellyson & Dovidio, 1985). Additionally, people may use neutral facial postures to convey their social position (Ellyson & Dovidio, 1985). In particular, facial postures consisting of direct eye gaze and an upward head tilt express dominance, while facial postures with averted eye gaze and a downward head orientation communicate submission (Mignault & Chaudhuri, 2003). These neutral facial postures have been shown to effectively communicate social dominance and submission (Mignault & Chaudhuri, 2003); however, they do not necessarily communicate the potential for aggression to the same extent as anger and fear expressions (Hess et al., 2000). Instead, neutral facial postures of dominance and submission may be more effective signals of social status in a stable social hierarchy, rather than signals to instigate a change in social hierarchy or to jockey for position during the formation of a social hierarchy. Given the prevalence of social hierarchy within and across social groups (Sidanius & Pratto, 1999) as well as the adaptive benefits of accurate social dominance perception, it is plausible that the ability to infer social dominance derives from adaptive mechanisms in the mind and brain specialized for recognizing social dominance cues in others.
However, despite rich understanding of how people infer social dominance and submission from nonverbal cues, little is known about how neural systems facilitate the perception of social dominance from facial cues in humans.

Intracranial event-related potential (ERP) recordings from squirrel monkeys reveal a graded N200 amplitude response to pictures of high, medium and low status monkey and human neutral faces (Pineda, Sebestyen, & Nava, 1994). Social status of the face was determined by independent ratings from human perceivers who first observed separate group interactions of humans and monkeys and then ranked each individual human and monkey’s social status within their respective social group. High-ranking faces elicited the largest N200 amplitude, followed by mid-ranking and then low-ranking faces. In previous work on humans, the N200 has been associated with face processing and visual attention (Allison, Puce, Spencer, & McCarthy, 1999a, 1999b). The work on monkeys suggests that the N200 might also be influenced by social status cues. More recently, a neuropsychological investigation showed that human patients with damage to the ventromedial prefrontal cortex are not impaired in making social dominance judgments from facial cues, despite profound impairments in other kinds of social cognitive tasks (Karafin, Tranel, & Adolphs, 2004). These results suggest that the neural machinery underlying social dominance perception may involve cortical areas associated with face recognition and visual attention but is likely, to a certain extent, to be distinct from the neural bases of other kinds of basic social inferences, such as emotion recognition.

Convergent evidence from neuropsychological, neuroimaging and electrophysiological studies shows that social inferences from emotional expressions recruit a network of brain regions, including the temporal and occipitotemporal cortex, the amygdala, and frontal regions of the brain (Adolphs, 2002, 2003; Phan, Wager, Taylor, & Liberzon, 2002; Sprengelmeyer, Rausch, Eysel, & Przuntek, 1998). Certain regions, such as the medial prefrontal and the superior temporal cortices, are thought to be essential for core computations in emotional processing (Adolphs, 2002; Frith, 2007). The superior temporal gyrus processes dynamic, changeable features of the face that are critical to conveying emotion (e.g. eye and mouth movements) (Allison, Puce, & McCarthy, 2000; Langton, Watt, & Bruce, 2000). This region, including the superior temporal gyrus and temporal pole, is thought to have bidirectional projections to and from the amygdala, which in turn is thought to assess the valence and intensity of emotional stimuli (Adolphs, 2003). Limbic and paralimbic cortical regions, such as the amygdala, hippocampus, insula and orbitofrontal regions, may facilitate emotion recognition from the face by ‘simulating’ the emotional state conveyed in the emotional expression and drawing on prior experiences (Adolphs, 2002; Wild, Erb, & Bartels, 2001). In contrast to social dominance inference, which occurs around 200 ms into the processing stream (Pineda et al., 1994), emotion recognition from the face is a highly rapid process, with modulation of early cortical activity by affect occurring as early as 112 ms post-stimulus (Pizzagalli et al., 2002).

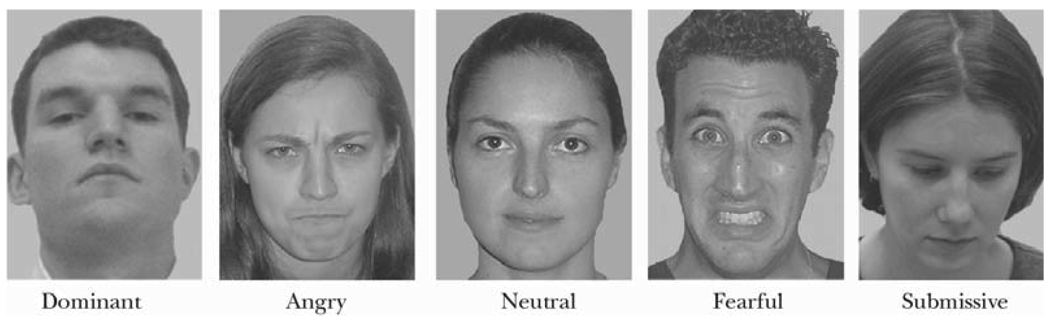

To determine whether perceiving social dominance from an emotional expression and a facial posture recruits similar or distinct neural circuitry, we used electrophysiological and brain imaging techniques to measure brain activity while participants viewed grey-scale pictures from a standardized set of angry, fearful, neutral, dominant and submissive faces in two experiments (Figure 1). Participants viewed blocks of faces alternating with blocks of fixation crosses. While viewing each face, participants made a gender judgment and responded with an appropriate button press. As with previous studies on social perception from the face, we predicted that there would be a neural response to each facial display despite the absence of explicit processing of emotion (Adolphs, 2002; Phan et al., 2002). We then investigated participants’ ability to recognize each type of face with a post-scanning explicit recognition test. In this test, participants saw each face again and rated how angry, fearful, submissive, dominant and approachable the face seemed on 1 to 7 Likert scales.

Figure 1.

Sample stimuli.

Based on the distinct communicative functions of facial expressions of emotion and facial postures of social dominance, we hypothesized that distinct neural systems underlie the perception of social dominance from these two facial cues.

Method

General procedure

All participants were healthy, right-handed Caucasian college males1 recruited from the Boston, MA and Hanover, NH area. Stimuli for both experiments consisted of grey-scale photographs of 20 males and 20 females with either an angry, fearful, dominant, submissive or neutral facial expression, and a fixation cross (see Figure 1). All photographs were standardized for size, luminance and background in Adobe Photoshop. For both experiments, participants viewed each face serially and performed a gender categorization task.

ERP experiment

Fourteen healthy, right-handed male Caucasian college students participated in this study. Each facial image was on the screen for 750 ms (visual angle ~4.3°) and was preceded by a 200 ms baseline period. There was a 2000 ms intertrial interval (ITI). During the ITI and baseline periods, a fixation cross was displayed in the center of the screen. Presentation order of the faces was randomized for each participant, with each picture in the set being displayed eight times. There were four breaks evenly spaced throughout the study so that the participants could rest.

Electroencephalograms (EEGs) were recorded from nine cortical sites (Fz, C3, Cz, C4, Pz, T5, T6, O1, O2) using an electrode cap from Electro-Cap International, Inc. The electrooculogram was recorded using tin electrodes placed on the outer canthi, right supraorbital and right suborbital positions. Cortical impedances did not exceed 5 kΩ. Cortical signals were amplified and filtered using a Biopac Systems EEG100B EEG amplifier. EEG was measured with a tin electrode attached to the tip of the nose as reference (Gratton, Coles, & Donchin, 1983). Cortical signals were passed through an analog high- and low-pass filter at 0.01 Hz and 35 Hz, respectively. Digital sampling for all physiological signals occurred at 250 Hz.

Data were segmented into 1000 ms poststimulus epochs with a 200 ms prestimulus baseline and organized by trial type. Eye blinks and movements were digitally corrected according to established standards (Gratton et al., 1983). EEG data were manually scored to exclude trials with artifact. Trials were then baselined to the 200 ms prestimulus period and averaged for each participant within each stimulus condition. A Principal Components Analysis was performed in order to elucidate specific components. Based on this analysis and visual inspection of grand means and individual subject means, epoch windows were selected for two components: P1, recorded from the O1 and O2 sites, and N200, recorded from the T5 and T6 sites. The window for P1 was 129–135 ms and for N200 was 160–215 ms. The P1 and N200 components were then peak picked within their respective epoch windows. Amplitude and latency for P1 and N200 underwent statistical analysis using SPSS software.
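The peak-picking step described above can be illustrated with a minimal sketch. It assumes a single averaged waveform stored as a list of microvolt values that starts at the beginning of the 200 ms baseline and is sampled at 250 Hz; the function name `pick_peak`, the `polarity` argument and the synthetic waveform are illustrative assumptions, not the authors' analysis code.

```python
def pick_peak(samples_uv, srate_hz, baseline_ms, window_ms, polarity):
    """Return (amplitude_uV, latency_ms) of the most extreme sample in window_ms.

    polarity=+1 picks the most positive sample (e.g. P1),
    polarity=-1 picks the most negative sample (e.g. N200).
    Latencies are expressed relative to stimulus onset.
    """
    step_ms = 1000.0 / srate_hz
    start_ms, end_ms = window_ms
    best = None
    for i, value in enumerate(samples_uv):
        latency = i * step_ms - baseline_ms  # shift so that 0 ms = stimulus onset
        if start_ms <= latency <= end_ms:
            if best is None or polarity * value > polarity * best[0]:
                best = (value, latency)
    if best is None:
        raise ValueError("no samples fall inside the window")
    return best


# Synthetic average (hypothetical values): 200 ms baseline + 1000 ms epoch at 250 Hz.
SRATE_HZ = 250
BASELINE_MS = 200
wave = [0.0] * 300
wave[83] = 8.0   # positive deflection at 132 ms
wave[99] = -3.0  # negative deflection at 196 ms

p1 = pick_peak(wave, SRATE_HZ, BASELINE_MS, (129, 135), polarity=+1)
n200 = pick_peak(wave, SRATE_HZ, BASELINE_MS, (160, 215), polarity=-1)
```

Under these assumptions samples are 4 ms apart, so if the sampling grid is aligned to stimulus onset, the 129–135 ms window contains only the sample at 132 ms.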

Functional magnetic resonance imaging (fMRI) experiment

Seven Caucasian healthy, right-handed male college students participated in this study. A block design was used consisting of eight functional runs. For each functional run, participants viewed facial stimuli in five epochs separated by six epochs of fixation. Each epoch contained photographs of 10 angry, 10 fearful, 10 dominant, 10 submissive, or 10 neutral facial expressions. Order of epoch presentation was counterbalanced across runs for each individual. Each stimulus was shown for 2000 ms with an interstimulus interval of 500 ms.

Participants were scanned using a 1.5 T GE Signa scanner with a custom-made head coil. Foam padding around the head was used to minimize head movement. Twenty-two contiguous coronal slices (5 mm thickness, 1 mm gap) were collected using a gradient-echo echo-planar pulse sequence (TR = 2500 ms, TE = 40 ms, flip angle = 90°, FOV = 24 × 24 cm, acquisition matrix = 64 × 64 voxels). High-resolution T1-weighted images were acquired for all slices that received functional scans. These anatomical images were used for slice selection before functional imaging and to correlate functional activation with anatomical structures.
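A few quantities implied by the design and acquisition parameters can be worked out directly. The sketch below is only arithmetic on the reported values; it assumes that each face is followed by its 500 ms interstimulus interval (so a face epoch lasts ten stimulus-plus-interval cycles) and that the 1 mm gap applies between adjacent slices only. The variable names are illustrative.

```python
# Reported acquisition and design parameters.
FOV_MM = 240.0          # 24 cm field of view
MATRIX = 64             # 64 x 64 acquisition matrix
SLICES = 22
SLICE_THICKNESS_MM = 5
SLICE_GAP_MM = 1
TR_MS = 2500
STIM_MS = 2000
ISI_MS = 500
FACES_PER_EPOCH = 10

# In-plane voxel size: field of view divided by matrix size.
in_plane_mm = FOV_MM / MATRIX

# Extent covered by the slice stack (gaps counted between slices only; assumption).
coverage_mm = SLICES * SLICE_THICKNESS_MM + (SLICES - 1) * SLICE_GAP_MM

# Duration of one face epoch and the number of volumes acquired during it
# (assumes every face is followed by one interstimulus interval).
epoch_ms = FACES_PER_EPOCH * (STIM_MS + ISI_MS)
volumes_per_epoch = epoch_ms / TR_MS

print(in_plane_mm, coverage_mm, epoch_ms, volumes_per_epoch)
```

Under these assumptions each face epoch spans a whole number of volumes, which keeps epoch boundaries aligned with the TR.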

Following scanning, participants were given an explicit behavioral rating test. Participants viewed the previously presented stimuli on a Macintosh laptop computer screen and evaluated how much each face seemed angry, afraid, dominant, submissive and approachable on a Likert scale from 1–7. Participants indicated their responses with an appropriate button press. Mean ratings were computed for each rating type and face type for each participant. These mean ratings were then analyzed using a 5 (ratings: angry, fearful, dominant, submissive, approachable) × 5 (face type: angry, fearful, dominant, submissive, neutral) repeated-measures analysis of variance (ANOVA).

Preprocessing and statistical analysis of anatomical and functional images were conducted using SPM99. Raw functional images were first reconstructed into ANALYZE format and then motion corrected in three dimensions using the six-parameter, rigid-body, least-squares realignment routine. T1-weighted anatomical images were then spatially normalized to the T1 template. Parameters created from this routine were used to normalize each individual participant’s motion-corrected functional images into common stereotaxic space (Talairach & Tournoux, 1988). Normalized images were smoothed using an 8 mm FWHM Gaussian kernel.
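For readers more used to thinking of a Gaussian in terms of its standard deviation, the smoothing kernel width can be converted with the standard relation FWHM = 2·sqrt(2·ln 2)·σ. The short sketch below applies that relation to the reported 8 mm kernel; the function name is illustrative and this is not SPM code.

```python
import math


def fwhm_to_sigma(fwhm_mm):
    """Convert a Gaussian full width at half maximum to its standard deviation."""
    return fwhm_mm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


# Standard deviation, in mm, of the reported 8 mm FWHM kernel.
sigma_mm = fwhm_to_sigma(8.0)
print(round(sigma_mm, 2))
```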

Single participant analysis was performed using a fixed effects general linear model. For each participant, contrast images were derived for relevant contrasts of interest. Group analysis was conducted by entering each individual participant’s contrast images into a random effects general linear model, which allows for population inference. Significant clusters of activation for each contrast of interest are reported in Table 2 at a statistical threshold of p < .005, uncorrected, extent threshold ≥ 5 voxels. Coordinates of activation were converted from the Montreal Neurological Institute (MNI) coordinates output by SPM into Talairach coordinates using the mni2tal algorithm in MATLAB. Talairach coordinates were converted into Brodmann areas using Talairach Daemon software.
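The coordinate conversion can be sketched as a piecewise affine transform. The version below uses the coefficients commonly quoted for Matthew Brett's mni2tal routine, with a different transform above and below the anterior commissure plane (z = 0); these coefficients are stated from memory as an assumption and should be checked against the script actually used. It is written in Python rather than MATLAB purely for illustration.

```python
def mni2tal(x, y, z):
    """Approximate MNI -> Talairach conversion (piecewise affine).

    Coefficients are those commonly quoted for the mni2tal routine;
    treat them as an assumption and verify before relying on them.
    """
    tal_x = 0.9900 * x
    if z >= 0:
        # At or above the AC plane
        tal_y = 0.9688 * y + 0.0460 * z
        tal_z = -0.0485 * y + 0.9189 * z
    else:
        # Below the AC plane
        tal_y = 0.9688 * y + 0.0420 * z
        tal_z = -0.0485 * y + 0.8390 * z
    return (tal_x, tal_y, tal_z)


# Hypothetical MNI coordinate, for illustration only.
print(tuple(round(v, 1) for v in mni2tal(30.0, -60.0, 10.0)))
```

The transform mainly shrinks the MNI brain slightly and applies a small pitch correction, so converted coordinates differ from the MNI input by a few millimetres at most.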

Table 2.

Main brain regions activated for each face type versus neutral*

| Brain region | Talairach x (mm) | Talairach y (mm) | Talairach z (mm) | t-value (at peak) | Cluster size (no. of voxels) |
|---|---|---|---|---|---|
| Dominant versus neutral faces | | | | | |
| Right lingual gyrus | 27 | −54 | 13 | 5.9 | 34 |
| Right superior temporal gyrus | 60 | −51 | 3 | 5.6 | 5 |
| Right insula | 39 | 9 | 15 | 4.9 | 11 |
| Submissive versus neutral faces | | | | | |
| Right cingulate gyrus | 42 | −8 | 23 | 10.3 | 29 |
| Medial occipital gyrus | 39 | −72 | 19 | 8.4 | 37 |
| Right lingual gyrus | 21 | −47 | 2 | 8.0 | 50 |
| Left lingual gyrus | −29 | −47 | 2 | 7.6 | 26 |
| Left inferior parietal lobe | −53 | −22 | 24 | 6.9 | 34 |
| Right medial temporal gyrus | 42 | −63 | 21 | 6.2 | 12 |
| Right superior temporal gyrus | 48 | −51 | 25 | 6.0 | 14 |
| Angry versus neutral faces | | | | | |
| Left postcentral gyrus | −59 | −22 | 34 | 8.6 | 7 |
| Left medial occipital gyrus | −53 | −66 | 11 | 8.2 | 22 |
| Right medial temporal gyrus | 45 | −49 | 5 | 7.5 | 7 |
| Left medial frontal gyrus | −9 | −14 | 59 | 6.3 | 6 |
| Left cingulate gyrus | −18 | −21 | 39 | 6.1 | 15 |
| Fearful versus neutral faces | | | | | |
| Right anterior cingulate gyrus | 6 | 38 | −4 | 8.1 | 5 |
| Left cingulate gyrus | −15 | −42 | 30 | 7.3 | 14 |
| Left insula | −42 | −11 | 11 | 7.1 | 21 |
| Right precentral gyrus | 51 | −9 | 36 | 6.7 | 12 |

*Listed clusters are based on a threshold of p < .005, uncorrected, extent threshold ≥ 5 voxels. All coordinates are in the Talairach and Tournoux system.

Given our a priori interest in examining the relationship between the conscious perception of dominance and submission from two kinds of facial cues and brain activity, regression analyses were conducted in SPM99, using the simple regression algorithm. For each participant, a behavioral rating difference score was computed as mean dominance ratings for dominant faces minus mean dominance ratings for neutral faces. A second behavioral rating difference score was computed as mean submissive ratings for submissive faces minus mean submissive ratings for neutral faces. Each participant’s dominant difference score was then entered into the SPM analysis as a regressor along with that participant’s contrast image of dominant versus neutral faces. Submissive difference scores were entered as a regressor into a separate SPM simple regression analysis along with each participant’s contrast image of submissive versus neutral faces. The resulting contrast images were superimposed over a group mean anatomical image created in SPM99 for visualization purposes. Results of the regression were first inspected at p < .001 and then displayed at p < .01, uncorrected, for better visualization. We did not conduct additional regression analyses using emotion ratings given our central interest in identifying neural regions that were critical to social dominance inference, rather than emotion recognition.
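The logic of the difference-score regression can be sketched for a single voxel. The example below computes per-participant difference scores and fits an ordinary least-squares line relating them to a per-participant contrast value, returning the slope, intercept and R². All numbers are hypothetical and the function names are illustrative; SPM99 performs the equivalent fit at every voxel, which this sketch does not attempt.

```python
from statistics import mean


def difference_scores(target_ratings, neutral_ratings):
    """Per-participant rating for the target face type minus rating for neutral faces."""
    return [t - n for t, n in zip(target_ratings, neutral_ratings)]


def simple_regression(x, y):
    """Ordinary least-squares fit of y on x; returns (slope, intercept, r_squared)."""
    mx, my = mean(x), mean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    r_squared = (sxy * sxy) / (sxx * syy)
    return slope, intercept, r_squared


# Hypothetical data for seven participants (not the study's data).
dominance_for_dominant = [5.5, 4.8, 6.1, 5.0, 5.9, 4.6, 5.2]
dominance_for_neutral = [3.0, 3.4, 3.1, 3.3, 2.9, 3.5, 3.2]
voxel_contrast = [0.42, 0.20, 0.61, 0.31, 0.55, 0.12, 0.35]  # dominant minus neutral signal

scores = difference_scores(dominance_for_dominant, dominance_for_neutral)
slope, intercept, r_squared = simple_regression(scores, voxel_contrast)
```

With only seven participants, a single influential point can drive a fit like this, so individual data points are worth inspecting alongside R².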

Results

ERP study results

Participants made highly accurate gender categorization judgments (M = 91.8%, SE = 1.2 %).2 Participants demonstrated a significant own-gender bias such that they categorized male faces (M = 93.3%, SE = 1.2%), better than female faces (M = 90.3 %, SE = 1.5%) (F(1, 13) = 5.7, p < .03). Gender categorization ability did not significantly differ across type of face (F(4, 52) = 0.54, p = .71). However, participants’ accuracy significantly varied as a function of both gender and type of face (F(4, 52) = 4.81, p < .002). Male participants were significantly less accurate at recognizing gender in female angry faces (M = 83.0%, SE = 5.7%) relative to male angry faces (M = 96.7%, SE = 1.2%) and all other types of female faces. Of all the female faces, male participants recognized the submissive female faces most accurately (M = 93.3%, SE = 1.5%). In contrast, male participants were least accurate at recognizing gender in submissive male faces (M = 91.3%, SE = 0.9%) relative to all other male faces.

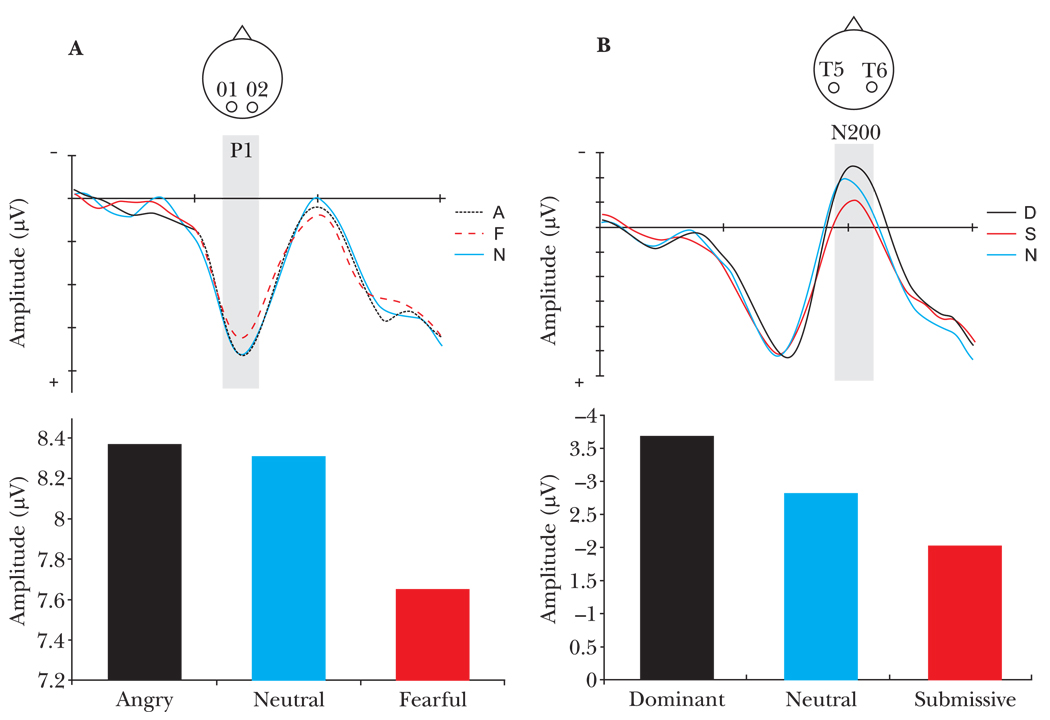

ERPs differed in their temporal sensitivity to the two kinds of facial cues of social dominance. An early ERP, the P1 component, occurring approximately 100 ms after stimulus onset and recorded over occipital regions of the brain, showed early sensitivity to fearful facial expressions, in both amplitude (M = 7.65, SE = 0.89) (F(4, 52) = 2.56, p < .05), and latency (M = 130.92 ms, SE = 2.12) (F(4, 52) = 4.73, p < .002) (see Figure 2a and Table 3). The P1 component has previously been demonstrated to respond preferentially to emotional stimuli and most likely receives contributions from limbic regions (Eimer & Holmes, 2002).

Figure 2.

ERP measures of face processing. (a) P1 response recorded from electrodes O1 and O2 showed modulation of peak amplitude, occurring between 129–135 ms, by type of facial expression of emotion (F(4, 52) = 2.56, p < .05). (b) N200 response recorded from electrodes T5 and T6 displayed modulation of peak amplitude, occurring between 160–240 ms, by type of facial posture (F(4, 52) = 3.06, p < .05).

The N200 component, a later ERP recorded over occipitotemporal regions, displayed modulation in neural response solely to facial postures of social dominance, as shown in Figure 2b and Table 3. Moreover, the direction of N200 amplitude modulation from a neutral face baseline depended on the degree of social dominance conveyed by the facial cue. Dominant facial postures elicited the greatest negative amplitude response (M = −3.66, SE = 0.80) while submissive facial postures evoked the least (M = −2.06, SE = 0.62) (F(4, 52) = 3.06, p < .02).3 The N200 component is typically elicited during face processing (Allison et al., 1999a, 1999b), and most likely originates from neural activity in the fusiform gyrus and adjoining occipitotemporal brain regions (Allison et al., 2000; Kanwisher, McDermott, & Chun, 1997). As shown by Pineda et al. (1994), the N200 is sensitive to social status perception in nonhuman primates. Strikingly, our findings corroborate results of previous ERP recordings from nonhuman primates showing a nearly identical pattern of graded N200 amplitude modulation determined by the amount of social dominance conveyed in the facial cue.

fMRI study results

Results from the behavioral recognition test confirmed that participants explicitly recognized each facial display along the corresponding rating dimension as shown in Table 1 (angry, 5.90 ± 0.52; dominant, 5.30 ± 0.88; neutral, 5.07 ± 0.54; fearful, 6.48 ± 0.36; submissive, 5.71 ± 1.17; F(4, 52) = 63.03, p < 0.0001).4 Angry expressions were rated as highest on the angry dimension; but, as expected based on previous research, they were also rated as moderately dominant. Similarly, fearful expressions were highest on the fearful dimension but they were also rated as moderately submissive, indicating that angry and fearful facial expressions convey not only emotional states, but also social dominance information. In contrast, dominant and submissive facial postures were rated highly only on the dominant and submissive dimensions, respectively, and not on the emotional dimensions.

Table 1.

Behavioral performance on explicit facial judgment ratings performed after the fMRI experiment

| Rating dimension (rows) / Type of face (columns) | Dominant | Anger | Neutral | Fear | Submissive |
|---|---|---|---|---|---|
| Dominant | **5.30** | 4.51 | 3.14 | 1.84 | 1.65 |
| Angry | 3.47 | **5.90** | 2.05 | 2.37 | 1.98 |
| Approachable | 2.63 | 2.13 | **5.07** | 3.21 | 3.88 |
| Fearful | 1.88 | 1.82 | 1.86 | **6.48** | 3.27 |
| Submissive | 1.69 | 1.98 | 3.23 | 4.70 | **5.71** |

Note: Bold numbers indicate which dimension each face type was rated highest on.

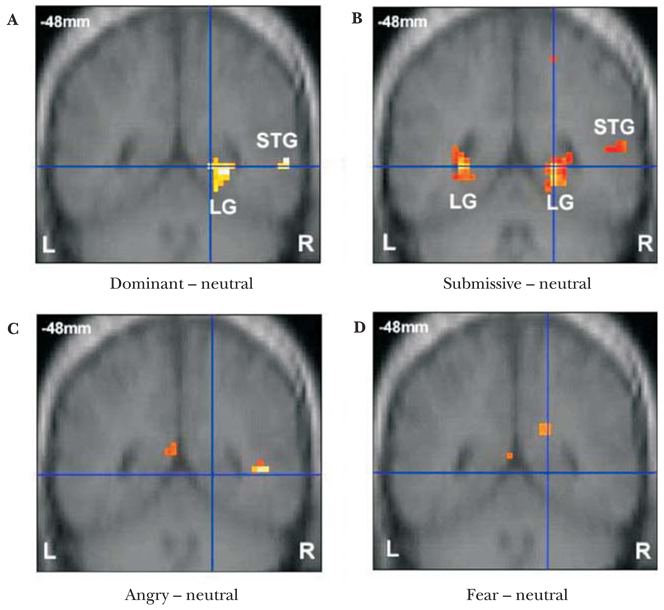

Whole-brain imaging results revealed spatially distinct neural systems recruited during perception of the different kinds of facial cues. Angry emotional expressions activated the left postcentral gyrus, left medial occipital gyrus, right medial temporal gyrus, left medial frontal gyrus and left cingulate gyrus, while fearful emotional expressions recruited the right anterior cingulate gyrus, left cingulate gyrus, left insula and right precentral gyrus (Table 2), regions that have been previously implicated in facial emotion recognition (Blair, 2003; Phan et al., 2002). These brain regions were not activated in response to facial postures of social dominance. In contrast, dominant and submissive facial postures relative to a neutral face baseline activated overlapping regions in the right superior temporal gyrus and right lingual gyrus (Table 2 and Figure 3a and b), areas not recruited during the perception of emotional expressions (Table 2 and Figure 3c and d).

Table 3.

Mean peak amplitude (in µV ± SE) and latency (in ms ± SE) of the P1 and N200 components, averaged across participants and occipital and temporal sites, respectively

| Type of face | P1 amplitude | P1 latency | N200 amplitude | N200 latency |
|---|---|---|---|---|
| Dominant | 8.96 (0.99) | 135.57 (2.07) | −3.67 (0.80)* | 198.36 (3.33) |
| Angry | 8.37 (0.98) | 133.64 (2.51) | −2.91 (0.71) | 197.36 (3.42) |
| Approachable | 8.31 (0.93) | 131.29 (1.90) | −2.81 (0.73) | 193.36 (2.92) |
| Fear | 7.65 (0.89)* | 130.93 (2.12)* | −3.06 (0.80) | 194.50 (4.06) |
| Submissive | 8.84 (0.77) | 129.92 (2.00) | −2.07 (0.62)* | 194.36 (2.99) |

*Significantly different from other facial types (p < .05).

Figure 3.

Group BOLD response in posterior brain regions showing (a) right lingual gyrus and right superior temporal gyrus in response to dominant relative to neutral faces and (b) bilateral lingual gyri and right superior temporal gyrus response to submissive relative to neutral faces, but no lingual or superior temporal gyrus response for (c) angry relative to neutral faces or (d) fear relative to neutral faces.

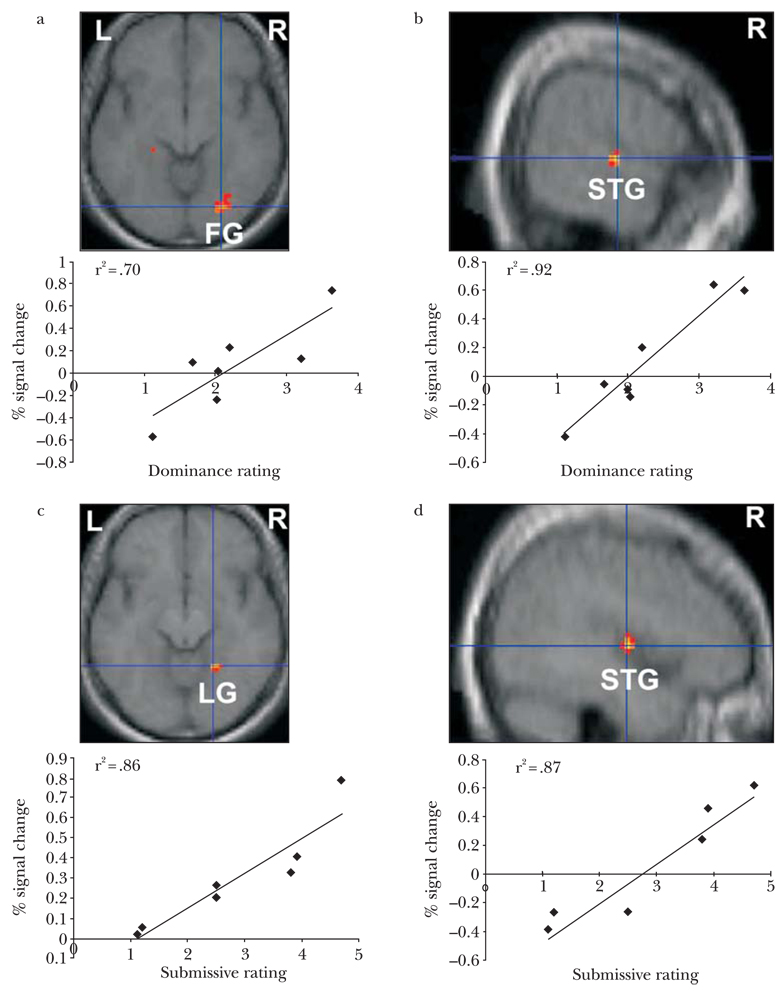

To more directly examine the relationship between recognition of dominance and submission and brain activation, we conducted a whole-brain regression analysis using difference scores of behavioral ratings for dominant versus neutral and submissive versus neutral facial postures and brain activation differences in response to dominant versus neutral and submissive versus neutral facial postures. First, dominance ratings were entered as a covariate in the dominant versus neutral face contrast for the seven participants. Higher dominance ratings for dominant relative to neutral faces were significantly correlated with greater signal change in the right fusiform (peak voxel: X = 27, Y = −78, Z = −9) and right superior temporal gyrus (peak voxel: X = 45, Y = −9, Z = 3) (Figure 4a and b). We conducted a similar regression analysis to investigate the relationship between perceived submission and brain activation using difference scores of submissive ratings for submissive versus neutral faces and differences in brain responses to submissive versus neutral faces. Higher submissive ratings for submissive relative to neutral faces were significantly correlated with greater signal change in the right lingual gyrus (peak voxel: X = 21, Y = −51, Z = −12) and right superior temporal gyrus (peak voxel: X = 60, Y = −15, Z = 3) (Figure 4c and d).

Figure 4.

Correlations between BOLD signal response and ratings for dominant and submissive faces. (a) Positive correlation in right fusiform and right superior temporal gyrus (peak coordinates 27, −78, −9 and 60, −15, 3, R² = .70 and R² = .92, respectively, p < .01) between degree of signal change and perceived dominance; (b) positive correlation in right lingual gyrus and right superior temporal gyrus (peak coordinates 21, −51, −12 and 45, −9, 3, R² = .86 and R² = .86, respectively, p < .01, uncorrected) between degree of signal change and perceived submission.

Discussion

A parsimonious interpretation of the behavioral, electrophysiological and functional neuroimaging results is that social dominance perception occurs from two kinds of facial cues, emotional expressions and facial postures, which recruit temporally and spatially distinct neural responses in the brain. Aggression-related emotional expressions such as fear modulated neural activity as early as 120 ms after stimulus onset while dominant and submissive facial postures affected brain activity later at around 200 ms, which is consistent with prior electrophysiological work on dominance perception in monkeys (Pineda et al., 1994). Fear and anger expressions elicited greater neural activation in frontal and limbic brain areas whereas dominant and submissive facial postures activated overlapping neural regions including the right superior temporal gyrus and right lingual and adjacent fusiform area. Neural responses to facial postures, in particular, show sensitivity to the type and magnitude of the social dominance cue being perceived.

Nonhuman primate single-cell recordings and human brain imaging have previously demonstrated that the superior temporal gyrus, fusiform gyrus and lingual gyrus play a critical role in processing specific features of faces (e.g. eye gaze direction) and in perceiving actual or implied biological motion (Kanwisher et al., 1997; Perrett, Hietanen, Oram, & Benson, 1992; Puce et al., 2003; Servos, Osu, Santi, & Kawato, 2002). In particular, recent neuroimaging work in humans on the neural bases of mutual and averted gaze shows an increased right-lateralized response in the posterior region of the superior temporal gyrus, as well as in the fusiform gyrus, during direct or mutual eye gaze relative to averted eye gaze (George, Driver, & Dolan, 2001; Pelphrey, Viola, & McCarthy, 2004). Our neuroimaging results corroborate these findings of a larger right-lateralized neural response to mutual (e.g. dominant facial posture) relative to averted gaze (e.g. submissive facial posture). Moreover, based on single-cell recording in macaques, cells along the upper bank of the superior temporal gyrus have previously been hypothesized to form a neural region dedicated to the perception and communication of social dominance cues (Allison et al., 2000; Langton et al., 2000). Critically, our results extend previous neuroimaging results by showing that the right superior temporal gyrus and fusiform gyrus not only differentiate mere perceptual aspects of faces such as their form, motion and eye gaze direction, but also underlie more complex social inferences such as the perception of social dominance and submission. Consistent with a prior neuropsychological study of dominance judgments in patients with ventromedial prefrontal cortex damage (Karafin, Tranel, & Adolphs, 2004), we also did not observe greater activation in ventromedial prefrontal cortex during implicit processing of dominant and submissive facial postures.

Our findings regarding when and where the brain processes emotional expressions and facial postures of social dominance may be due to the unique social function of each type of facial cue. Although they convey some social dominance information, facial expressions of fear and anger primarily provide information about a person’s emotional state and motivation to flee from or instigate aggression (Hess et al., 2000). It is likely advantageous to have specialized neural machinery that can quickly detect these kinds of facial cues and prepare the perceiver to respond appropriately to a potential threat. Facial postures of social dominance and submission signal neither direct nor approaching threat, nor acute emotional states (Hess et al., 2000). Rather, they communicate social standing and thus subtly cue a broader array of social information such as access to resources, the likelihood of taking the lead or following others, and even mate potential (Fiske, 1992). Therefore, perceiving social dominance from facial postures may not require as rapid a neural processing stream as aggression-related emotional expressions, but may instead involve its own unique neural machinery. Relatedly, the neural population encoding social dominance may be different from that encoding aggression-related emotional responses because dominance and emotion can vary largely independently. It is possible to be in a dominant or submissive social position with or without expressing aggression, and it is possible to express aggression whether or not one is in a dominant social position. As a general rule, orthogonal classes of information appear to be processed by separate neuronal populations in the brain. Such modularity permits parallel, rapid, and dedicated processing.

There are several limitations of the current studies. One limitation is the inclusion of only male participants. Social dominance recognition and expression differ in interesting ways between genders. Females are more attuned to interpersonal aggression whereas males are more sensitive to overt acts of physical aggression (Eagly & Steffen, 1986). Future research is needed to determine whether or not gender differences in social dominance perception exist at the neural level and, if so, to what extent this is a result of differences in testosterone. Another limitation of the current research is that it only examines social dominance expressed in faces; however, social dominance is also perceived through bodily postures (e.g. expanded vs. constricted posture) and language use (e.g. polite vs. impolite utterances) (Ellyson & Dovidio, 1985; Tiedens, 2001). Future work is needed to determine whether or not the neural responses reported here arise from domain-specific neural machinery evolved solely to recognize social dominance from different types of perceptual cues or are a result of more general-purpose neural systems dedicated to the integration and interpretation of facial cues along multiple perceptual and social dimensions.

Another limitation of the current study is that we examined only the relationship between implicit social dominance inferences and neural responses to facial cues of social dominance and submission. Prior neuroimaging research has demonstrated neural differences in implicit versus explicit processing of emotional expressions (Habel et al., 2007). Future research may examine whether explicit social dominance inferences elicit greater common neural circuitry between emotional expressions and facial postures relative to implicit inferences. Finally, we examined social dominance inferences from only one social group, White Americans. Given the wealth of behavioral research suggesting differences in social dominance between different racial, cultural and socioeconomic status groups, it is important for future work to examine whether group membership modulates neural responses to dominant and submissive facial cues (Sidanius & Pratto, 1999).

In sum, these results provide evidence for spatially and temporally distinct neural populations that perceive social dominance from facial cues and extend previous neurobiological findings from the animal literature to humans. Importantly, the neural correlates associated with the perception of dominance from facial postures (eye gaze and head orientation) seem to be distinct from those related to the perception of dominance from facial emotional expressions, such as fear. Given the evolutionary prevalence and importance of social dominance hierarchy across species and across human social groups, it is plausible that the primate brain has specialized mechanisms for perceiving social dominance. The current research provides initial evidence for this hypothesis and lays a foundation for future social neuroscience research examining the extent to which the human brain selectively processes social dominance cues. By characterizing the neurobiological bases of social dominance inference in humans, we may enrich our understanding of how and why social hierarchy permeates social relations between social groups and between individuals within a social group.

Acknowledgements

We thank Emily Stapleton, Pat Noonan, and Ashli Owen-Smith for assistance with stimuli collection and Heather Gordon, Joe Moran, Gagan Wig, and Tammy Larouche for help with fMRI data collection. This work is supported by an NSF Graduate Fellowship to J.Y.C., NSF grant BCS-0435547 to N.A., and NIH grant RO3 MH0609660-01 to P.U.T.

Biographies

JOAN Y. CHIAO is an assistant professor of psychology at Northwestern University. Her research interests include social, cultural, and affective neuroscience.

REGINALD B. ADAMS, JR. is an assistant professor of psychology at Pennsylvania State University. His research interests include using theory and methods from visual cognition and affective neuroscience to study how we extract social and emotional meaning from nonverbal cues, particularly via the face.

PETER U. TSE is an associate professor of psychology at Dartmouth College. His research interests include using fMRI, DTI, and psychophysics to study the cognitive and neural bases of visual perception and consciousness.

WILLIAM T. LOWENTHAL was a research assistant in the Interpersonal Perception and Communication lab at Harvard University.

JENNIFER A. RICHESON is an associate professor of psychology at Northwestern University. Her research interests include prejudice, stereotyping, and intergroup relations.

NALINI AMBADY is a professor of psychology at Tufts University. Her research interests include how social factors interplay with perception, cognition, and behavior across multiple levels of analysis.

Footnotes

Given the importance of gender of both the perceiver and target on social dominance perception and the heightened sensitivity of males to aggression, we only included male participants in the current studies.

A 2 (gender of face) × 5 (type of face) repeated-measures ANOVA was conducted on accuracy and reaction time results of the gender categorization task. Significant effects were only found for accuracy measures which are reported in text.

Latency of the N200 component was not significantly affected by type of face, F(4, 52) = 1.41, p = .24.

Explicit behavioral ratings were analyzed in a 5 (type of face) × 5 (type of rating) repeated measures ANOVA.

Contributor Information

Joan Y. Chiao, Northwestern University

Reginald B. Adams, Jr., The Pennsylvania State University

Peter U. Tse, Dartmouth College

William T. Lowenthal, Tufts University

Jennifer A. Richeson, Northwestern University

Nalini Ambady, Tufts University

References

- Adolphs R. Neural systems for recognizing emotion. Current Opinion in Neurobiology. 2002;12:169–177. doi: 10.1016/s0959-4388(02)00301-x.

- Adolphs R. Cognitive neuroscience of human social behavior. Nature Reviews Neuroscience. 2003;4:165–178. doi: 10.1038/nrn1056.

- Allison T, Puce A, McCarthy G. Social perception from visual cues: Role of the STS region. Trends in Cognitive Science. 2000;4:267–278. doi: 10.1016/s1364-6613(00)01501-1.

- Allison T, Puce A, Spencer DD, McCarthy G. Electrophysiological studies of human face perception. I: Potentials generated in occipitotemporal cortex to face and non-face stimuli. Cerebral Cortex. 1999a;9:415–430. doi: 10.1093/cercor/9.5.415.

- Allison T, Puce A, Spencer DD, McCarthy G. Electrophysiological studies of human face perception. III: Effects of top-down processing on face-specific potentials. Cerebral Cortex. 1999b;9:445–458. doi: 10.1093/cercor/9.5.445.

- Blair RJR. Facial expressions, their communicatory functions and neuro-cognitive substrates. Proceedings of the Royal Society of London B: Philosophical Transactions: Biological Sciences. 2003;358:561–572. doi: 10.1098/rstb.2002.1220.

- Boehm C. Hierarchy in the forest: The evolution of egalitarian behavior. Cambridge, MA: Harvard University Press; 1999.

- Eagly AH, Steffen VJ. Gender and aggressive behavior: A meta-analytic review of the social psychological literature. Psychological Bulletin. 1986;100:309–330.

- Eimer M, Holmes A. An ERP study on the time course of emotional face processing. Neuroreport. 2002;13(4):1–5. doi: 10.1097/00001756-200203250-00013.

- Ellyson S, Dovidio J. Power, dominance and nonverbal behavior. New York: Springer-Verlag; 1985.

- Fiske A. The four elementary forms of sociality: framework for a unified theory of social relations. Psychological Review. 1992;99:689–723. doi: 10.1037/0033-295x.99.4.689.

- Frith CD. The social brain? Philosophical Transactions of the Royal Society, Series B. 2007;362:671–678. doi: 10.1098/rstb.2006.2003.

- George N, Driver J, Dolan R. Seeing gaze-direction modulates fusiform activity and its coupling with other brain areas during face processing. Neuroimage. 2001;13:1102–1112. doi: 10.1006/nimg.2001.0769.

- Gratton G, Coles MG, Donchin E. A new method for off-line removal of ocular artifact. Electroencephalography and Clinical Neurophysiology. 1983;55:468–484. doi: 10.1016/0013-4694(83)90135-9.

- Habel U, Windischberger C, Derntl B, Robinson S, Kryspin-Exner I, Gur RC, et al. Amygdala activation and facial expressions: Explicit emotion discrimination versus implicit emotion processing. Neuropsychologia. 2007;45:2369–2377. doi: 10.1016/j.neuropsychologia.2007.01.023.

- Hess U, Blairy S, Kleck RE. The influence of facial emotion displays, gender and ethnicity on judgments of dominance and affiliation. Journal of Nonverbal Behavior. 2000;24:265–283.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: A module in extrastriate cortex specialized for face perception. Journal of Neuroscience. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Karafin MS, Tranel D, Adolphs R. Dominance attributions following damage to the ventromedial prefrontal cortex. Journal of Cognitive Neuroscience. 2004;16:1796–1804. doi: 10.1162/0898929042947856.

- Knutson B. Facial expressions of emotion influence interpersonal trait inferences. Journal of Nonverbal Behavior. 1996;20:165–182.

- Langton SR, Watt RJ, Bruce II. Do the eyes have it? Cues to the direction of social attention. Trends in Cognitive Science. 2000;4(2):50–59. doi: 10.1016/s1364-6613(99)01436-9.

- Mignault A, Chaundhuri A. The many faces of a neutral face: Head tilt and perception of dominance and emotion. Journal of Nonverbal Behavior. 2003;27:111–132.

- Pelphrey KA, Viola RJ, McCarthy G. When strangers pass: Processing of mutual and averted social gaze in superior temporal sulcus. Psychological Science. 2004;15:598–603. doi: 10.1111/j.0956-7976.2004.00726.x.

- Perrett DI, Hietanen JK, Oram MW, Benson PJ. Organization and function of cells responsive to faces in the temporal cortex. Proceedings of the Royal Society of London B: Biological Sciences. 1992;335:23–30. doi: 10.1098/rstb.1992.0003.

- Phan KL, Wager T, Taylor SF, Liberzon I. Functional neuroanatomy of emotion: A meta-analysis of emotion activation studies in PET and fMRI. Neuroimage. 2002;16:331–348. doi: 10.1006/nimg.2002.1087.

- Pineda JA, Sebestyen G, Nava C. Face recognition as a function of social attention in non-human primates: An ERP study. Cognitive Brain Research. 1994;2:1–12. doi: 10.1016/0926-6410(94)90015-9.

- Pizzagalli DA, Lehmann D, Hendrick AM, Regard M, Pascual-Margui RD, Davidson RJ. Affective judgments of faces modulate early activity (approximately 160 ms) within the fusiform gyri. Neuroimage. 2002;16:663–677. doi: 10.1006/nimg.2002.1126.

- Pratto F, Sidanius J, Stallworth LM, Malle BF. Social dominance orientation: A personality variable predicting social and political attitudes. Journal of Personality and Social Psychology. 1994;67:741–763.

- Puce A, Syngeniotis A, Thompson JC, Abbott DF, Wheaton KJK, Castiello U. The human temporal lobe integrates facial form and motion: Evidence from fMRI and ERP studies. Neuroimage. 2003;19:861–869. doi: 10.1016/s1053-8119(03)00189-7.

- Servas P, Osu R, Santi A, Kawato M. The neural substrates of biological motion perception: An fMRI study. Cerebral Cortex. 2002;12:772–782. doi: 10.1093/cercor/12.7.772.

- Sidanius J, Pratto F. Social dominance: An intergroup theory of social hierarchy and oppression. New York: Cambridge University Press; 1999.

- Sprengelmeyer R, Rausch M, Eysel UT, Przuntek H. Neural structures associated with recognition of facial expressions of basic emotions. Proceedings of the Royal Society of London B: Biological Science. 1998;265:1927–1931. doi: 10.1098/rspb.1998.0522.

- Talairach J, Tournoux P. Co-planar stereotactic atlas of the human brain. Stuttgart: Thieme; 1988.

- Tiedens LZ. Anger and advancement versus sadness and subjugation: The effects of negative emotion expressions on social status conferral. Journal of Personality and Social Psychology. 2001;80:86–94.

- Wild B, Erb M, Bartels M. Are emotions contagious? Evoked emotionally expressive faces: Quality, quantity, time course and gender differences. Psychiatry Research. 2001;102:109–123. doi: 10.1016/s0165-1781(01)00225-6.

- Wilson EO. Sociobiology. Cambridge, MA: Harvard University Press; 1975.